Abstract

Interoceptive processes emanating from baroreceptor signals support emotional functioning. Previous research suggests a unique link to fear: fearful faces presented in synchrony with systolic baroreceptor firing draw more attention and are rated as more intense than those presented at diastole. This study examines whether this effect is unique to fearful faces or extends to other emotional faces.

Participants (n=71) completed an emotional visual search task (VST) in which fearful, happy, disgust and sad faces were presented during the systolic and diastolic phases of the cardiac cycle. Visual search accuracy, emotion detection accuracy and response latencies were recorded, followed by a subjective intensity task.

A series of interactions between emotion and cardiac phase were observed. Visual search accuracy for happy and disgust faces was greater at systole than diastole; the opposite was found for fearful faces. Fearful and happy faces were perceived as more intense at systole.

Previous research proposed that cardiac signaling has specific effects on attention to, and intensity ratings of, fearful faces. Results from the present tasks suggest these effects are more generalized and raise the possibility that interoceptive signals amplify emotional superiority effects depending on the task employed.

Keywords: Emotion, Interoception, Visual Search Task, Face detection

Introduction:

Interoception is the integration of afferent physiological signals with higher order neural signals to generate adaptive responses, particularly in emotional domains (Craig 2003; Critchley & Garfinkel 2017). Memory for emotional words (Garfinkel et al. 2013), the mapping of physiology onto memory for appetitive picture stimuli (Leganes-Fonteneau et al. 2020), and the integration of subjective and neural responses to emotional picture stimuli (Pollatos et al. 2007) are all mediated via interoceptive mechanisms.

There is strong evidence that cardiovascular mechanisms play a central role in interoception. Cardiac interoception is multidimensional, but particular attention has been paid to phasic cardiac signals triggered by baroreceptors, which can affect emotional and cognitive processing (Critchley & Garfinkel 2017). Arterial baroreceptors are stretch-sensitive mechanoreceptors that are triggered when each systolic cardiac contraction pumps blood out of the heart. The baroreceptors relay information about the timing and strength of each individual heartbeat to the brain, which uses it to coordinate sympathetic and parasympathetic neural responses. Baroreceptor firing therefore provides a structured temporal framework for deconstructing the role of phasic cardiac interoceptive signaling in cognitive and emotional processing. Interoceptive studies use this framework to compare cognitive-emotional responses to stimuli presented in synchrony with the R-wave (the upward deflection of the QRS complex of the electrocardiogram), which marks the end of the diastolic phase, when baroreceptors are silent, against responses to stimuli presented 300ms after the R-wave, during the systolic phase, when baroreceptor firing is estimated to be maximal (Gray et al. 2010).

The presentation of emotional stimuli in synchrony with baroreceptor firing has been examined using a variety of tasks, an approach that allows inferences about the role of interoceptive signals in emotional processing. For example, fearful faces presented during systole, compared to diastole, receive higher subjective intensity ratings (Garfinkel et al. 2014). More objective measures, such as an emotional attentional blink task, also show that fearful faces presented at systole are more easily detected, unlike happy, disgust and neutral faces (Garfinkel et al. 2014). Similar results were found using a spatial cueing task (Azevedo et al. 2018), although in this case only responses to fearful and neutral faces were compared. Taken together, these studies highlight the role of cardiac signals in attentional engagement with salient stimuli and in the automatic processing of cues. They also emphasize the role of interoception in fear processing, and have been used to suggest that baroreceptor signaling selectively enhances the perception of fearful faces because of their relevance as markers of threat (Garfinkel & Critchley 2016), reflecting a distinct neural mechanism that underpins fear processing. This latter point is supported by experiments that also examined responses to disgust and happy faces without finding a significant effect of cardiac phase (Garfinkel et al. 2014; Pfeifer et al. 2017), in line with the hypothesis that different physiological signals support specific emotional processes (Friedman 2010).

The finding that interoceptive signals enhance the perception of fearful faces parallels the so-called threat advantage effect observed in many studies of emotional faces. This effect posits that attentional responses towards aversive or threatening stimuli are stronger than those towards positive or neutral stimuli (e.g. Öhman et al. 2001). However, the threat advantage has proven to be highly task-dependent (Lundqvist et al. 2014). In fact, superiority effects have been observed for happy rather than fearful faces in emotional visual search tasks (e.g. Williams et al. 2005). Visual search tasks are unique in offering insight into the different factors underlying emotional face detection (Calvo & Nummenmaa 2008), such as the perceptual salience and physical features of the faces. Moreover, unlike other attentional paradigms, a visual search task captures attentional processes that are more representative of the naturalistic scanning of faces in a crowd (Calvo & Nummenmaa 2008).

The evidence for the effects of baroreceptor signaling on fear processing is strong but limited in scope. Moreover, definitive evidence that cardiac interoception is specific to fear processing is lacking, and it seems necessary to investigate this topic further using other paradigms. To test whether interoceptive signals can amplify responses to other emotional faces, we adapted a visual search task to study automatic attention allocation towards emotional faces (fear, sad, happy and disgust) presented at different phases of the cardiac cycle. We also administered an emotional intensity task (Garfinkel et al. 2014) seeking to replicate previous results. We expected an interaction between cardiac phase and emotional cue on face detection and subjective intensity ratings. Based on previous literature, increased responses at systole should be found for fearful faces. Given previous findings with this task, the emotional VST might also reveal effects for happy faces, while the other emotional stimuli served as exploratory controls.

Methods:

Participants:

A power analysis based on the results of Garfinkel et al. (2014), ηp2=0.33, indicated that a sample of 45 participants would be sufficient to achieve a power of 0.95. Because these tasks were part of a more extensive protocol, we increased the sample size by 50%.
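Power-analysis software for ANOVA designs (G*Power, for example) typically expects the effect size as Cohen's f rather than ηp2. The sketch below shows only that standard conversion and the 50% inflation of the planned sample. The paper does not state the software, alpha level or correlation among repeated measures used, so the power calculation itself is not reproduced, and the function name is hypothetical.

```python
import math

def eta_p2_to_cohens_f(eta_p2: float) -> float:
    """Convert partial eta squared to Cohen's f: f = sqrt(eta_p2 / (1 - eta_p2))."""
    if not 0.0 <= eta_p2 < 1.0:
        raise ValueError("partial eta squared must lie in [0, 1)")
    return math.sqrt(eta_p2 / (1.0 - eta_p2))

# Effect size taken from Garfinkel et al. (2014), as reported in the text
f = eta_p2_to_cohens_f(0.33)
print(round(f, 3))  # 0.702

# Sample from the power analysis, inflated by 50% for the longer protocol
planned_n = math.ceil(45 * 1.5)
print(planned_n)  # 68
```

The inflated target of 68 is consistent with the 71 participants eventually recruited.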

A sample of 71 young adults (mean age=20.31, SD=2.74, 22 men) was recruited from undergraduate students at Rutgers University, New Jersey (USA). Participants were eligible if they had normal or corrected-to-normal vision and reported no serious health issues, cardiac abnormalities, or mental disorders in the past two years. Participants undergoing pharmacological treatment (except birth control) were excluded to avoid interactions with the experimental measures. This study was approved by the university Institutional Review Board for the Protection of Human Subjects Involved in Research. Participants received compensation of either $7/hour or course credit.

Tasks

Emotional Visual Search Task

This task assessed participants’ ability to detect emotional faces within a visual array (Williams et al. 2005). The visual array consisted of 6 faces arranged in a circle around the center of the screen. Within a given trial, all faces belonged to the same actor, but one face expressed a ‘full-blown’ emotion (fear, sad, disgust or happy), while the other 5 faces were emotionally neutral and served as distractors. In total, participants completed 200 trials (50 per emotion) using faces from 50 different actors (28 females) taken from the Karolinska Directed Emotional Faces database (Lundqvist et al. 1998). All faces were presented in black and white and cropped around the facial oval (Figure 1A).

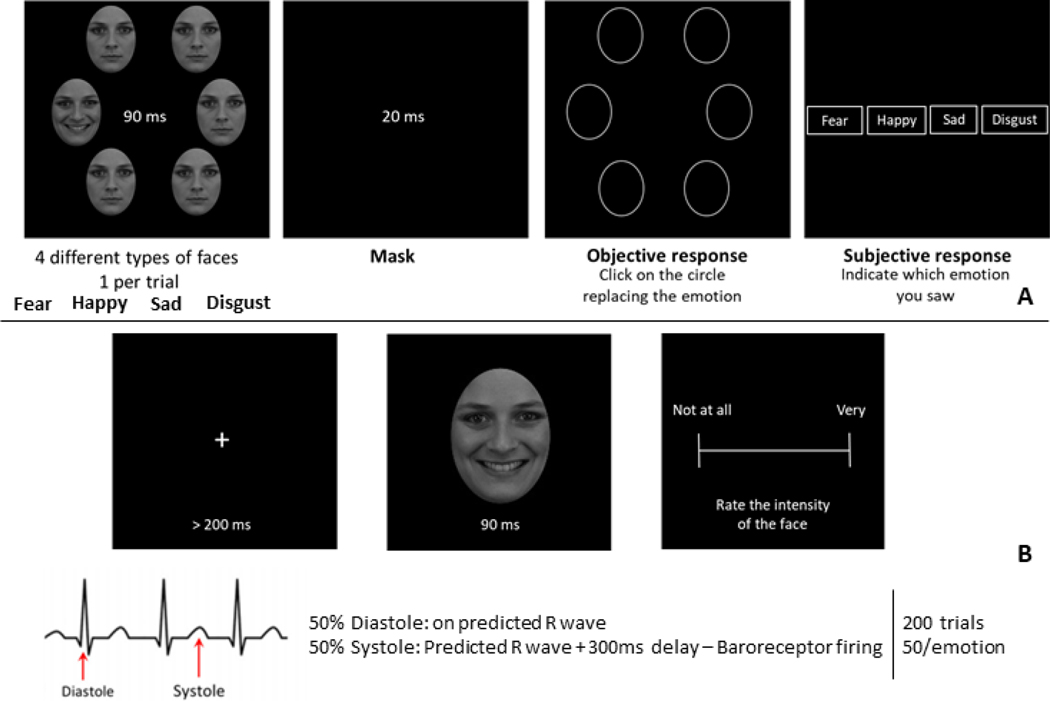

Figure 1. A: Emotional Visual Search Task; B: Emotional Intensity Task.

In the Emotional Visual Search Task (A), participants saw a target emotional face (fear, happy, sad or disgust) surrounded by 5 neutral distractors. In half of the trials, stimulus presentation was synchronized with the diastolic phase of the cardiac cycle, and in the other half with the systolic phase. Participants were asked to indicate the location of the target emotional face (objective response: visual search accuracy) and which emotion they saw on the screen (subjective response: emotion detection accuracy). In the Emotional Intensity Task (B), participants were presented with a single emotional face at systole or diastole and were asked to rate its intensity on a visual analog scale.

Each trial started with the presentation of a red fixation point that remained on screen until the stimulus array appeared. On 50% of the trials, the stimulus array appeared in synchrony with the participant’s R-wave (diastolic trials). On the other 50% of trials, the array appeared 300ms after the R-wave to approximate systole (systolic trials). Trial order was fully randomized across emotion and cardiac synchrony.
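A fully randomized schedule for the 200 visual search trials could be generated as in the sketch below. It assumes, which the text does not state explicitly, that each emotion's 50 trials were split evenly between diastolic and systolic onsets (25 trials per cell). Names such as `build_schedule` are hypothetical, and the assignment of actors to trials is not modelled.

```python
import random
from collections import Counter

EMOTIONS = ("fear", "happy", "sad", "disgust")
DELAY_MS = {"diastole": 0, "systole": 300}  # array onset relative to the R-wave

def build_schedule(trials_per_emotion=50, seed=None):
    """Return a shuffled list of (emotion, phase, delay_ms) tuples, with each
    emotion split evenly between diastolic and systolic trials."""
    if trials_per_emotion % 2:
        raise ValueError("trials_per_emotion must be even to balance phases")
    per_cell = trials_per_emotion // 2
    trials = [(emotion, phase, DELAY_MS[phase])
              for emotion in EMOTIONS
              for phase in DELAY_MS
              for _ in range(per_cell)]
    random.Random(seed).shuffle(trials)  # full randomization of trial order
    return trials

schedule = build_schedule(seed=1)
print(len(schedule))  # 200
print(Counter((e, p) for e, p, _ in schedule)[("fear", "systole")])  # 25
```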

R-wave timing was estimated from a Nonin 8000SM finger pulse oximeter, whose signal was processed by a C# script that relayed the information via UDP to MATLAB for stimulus presentation. R-waves were predicted from the mean of the 4 previous R-R intervals (RRIs). The script accounted for a 250ms pulse transit time, including 25ms of signal-processing delay (Suzuki et al. 2013).
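The timing logic can be sketched as follows. This is an illustrative reconstruction, not the authors' C# script: it assumes that finger-pulse timestamps are converted to R-wave estimates by subtracting the 250ms transit time, and that the next R-wave is predicted as the last R-wave plus the mean of the 4 previous RRIs. All function names are hypothetical.

```python
# Hypothetical sketch of cardiac-timed stimulus scheduling (all times in ms).
PULSE_TRANSIT_MS = 250   # assumed R-wave-to-finger-pulse delay, incl. 25 ms processing
SYSTOLE_DELAY_MS = 300   # systolic onset relative to the R-wave
N_INTERVALS = 4          # number of previous R-R intervals averaged

def pulse_to_rwave(pulse_times_ms):
    """Back-project finger-pulse detections to estimated R-wave times."""
    return [t - PULSE_TRANSIT_MS for t in pulse_times_ms]

def predict_next_rwave(rwave_times_ms, n_intervals=N_INTERVALS):
    """Predict the next R-wave as the last R-wave plus the mean of the previous n RRIs."""
    if len(rwave_times_ms) < n_intervals + 1:
        raise ValueError("need at least n_intervals + 1 beats")
    recent = rwave_times_ms[-(n_intervals + 1):]
    rris = [later - earlier for earlier, later in zip(recent, recent[1:])]
    return rwave_times_ms[-1] + sum(rris) / len(rris)

def stimulus_onset(rwave_times_ms, phase):
    """Diastolic trials start at the predicted R-wave; systolic trials 300 ms later."""
    if phase not in ("diastole", "systole"):
        raise ValueError("phase must be 'diastole' or 'systole'")
    offset = SYSTOLE_DELAY_MS if phase == "systole" else 0
    return predict_next_rwave(rwave_times_ms) + offset

rwaves = pulse_to_rwave([1000, 1760, 2540, 3300, 4060])
print(stimulus_onset(rwaves, "diastole"))  # 4575.0
print(stimulus_onset(rwaves, "systole"))   # 4875.0
```

Because the finger pulse arrives roughly 250ms after the R-wave, under this scheme an R-wave-locked stimulus cannot be triggered by the pulse itself and must be scheduled from the prediction, so any error in RRI prediction carries over directly into stimulus timing.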

The stimulus array appeared for 100ms, followed by a black screen for 20ms. An objective response array, consisting of 6 white ovals at the locations of the previously presented faces, then appeared. Participants were asked to select, as quickly and accurately as possible using a computer mouse, the oval in which the emotional face had appeared. Finally, participants saw a subjective response array consisting of 4 words in a row: Fear, Disgust, Sad, Happy. Participants were instructed to use the mouse to select the word naming the emotion that had been presented. Response arrays remained on screen until a response was detected. The cursor was reset to the center of the screen (fixation point) at the end of each trial.

Pilot studies showed strong individual differences in objective emotion detection depending on the distance between the stimuli and the center of the screen. Excessively high visual search accuracy could produce ceiling effects and obscure any effect of cardiac phase. To control for these differences, participants first completed a practice block, in which the stimuli (the same ones used in the main task) were not timed to cardiac signals, to calibrate accuracy in relation to the location of the faces. The distance between the stimuli and the center of the screen increased from 110px to 155px across 60 trials, in 15px steps every 12 trials (3 trials per emotion). Accuracy was computed for each distance, and the largest distance at which accuracy was closest to, but below, 55% (chance level = 1/6) was selected for the main task. The average selected distance was 135px (SD<15).
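The calibration rule can be read in more than one way. The sketch below assumes it means choosing the distance whose practice accuracy is closest to, without exceeding, 55%, with ties resolved in favor of the larger distance, and falling back to the largest tested distance if every level exceeds the target. The accuracies in the example are invented for illustration, and `select_distance` is a hypothetical name.

```python
def select_distance(accuracy_by_distance, target=0.55):
    """Pick the calibration distance (px) whose practice accuracy is closest to,
    without exceeding, the target; ties go to the larger distance."""
    at_or_below = {d: acc for d, acc in accuracy_by_distance.items() if acc <= target}
    if not at_or_below:
        # every distance is still too easy: use the hardest (largest) one tested
        return max(accuracy_by_distance)
    # highest accuracy not above target is the closest to it; break ties by distance
    return max(at_or_below, key=lambda d: (at_or_below[d], d))

practice = {110: 0.83, 125: 0.67, 140: 0.50, 155: 0.42}  # hypothetical accuracies
print(select_distance(practice))  # 140
```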

Emotional Intensity task:

Participants were presented with one face at a time in the center of the screen for 150ms each. Faces were synchronized with participants’ systole (50% trials) or diastole (50% trials). Participants were asked to rate the intensity of each face using a visual analog scale, from “not at all intense” (0) to “very intense” (100) (Figure 1B). Stimuli and trial schedule (i.e. number of stimuli per condition) were identical to the Emotional VST.

Procedure

Participants were screened for eligibility using an online survey before completing one experimental session in the laboratory. Upon arrival at the laboratory, participants provided written informed consent and completed questionnaires (described in the Appendix). Participants sat comfortably ~60cm in front of a 19” computer screen. ECG sensors (Thought Technology Ltd, Montreal, Quebec) were attached, and participants’ physiological state was measured as part of the larger experimental design during a 5-minute baseline task (usable data for 52 participants; mean heart rate (HR) = 78.80 bpm). Participants also completed two tasks measuring baseline individual differences in interoceptive awareness (data not shown). Finally, participants performed the Emotional VST and the Emotional Intensity task, in that order.

Data extraction and analysis:

All data were extracted and averaged separately by emotion type and cardiac phase (systole vs. diastole). Visual search accuracy was measured from the objective response array as the proportion of correctly identified emotional face locations. Emotion detection accuracy was measured from the subjective response array as the proportion of correctly identified emotion categories. Latencies for objective and subjective responses were computed as reaction times for accurate responses (minimum single-trial latency: 0.305s for objective responses (disgust face) and 0.202s for subjective responses (fearful face)). For the Emotional Intensity task, intensity ratings were recorded on a 0 to 100 scale. Accuracy and intensity scores were arcsine square root transformed.
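A sketch of the per-condition scoring and transformation, assuming trial-level records of the form (emotion, phase, correct) for one participant. The arcsine square root transform is only defined for proportions in [0, 1], so the helper that rescales 0 to 100 intensity ratings by dividing by 100 is an assumption about how intensity scores were prepared; the paper does not say. Function names are hypothetical.

```python
import math
from collections import defaultdict

def arcsine_sqrt(p):
    """Arcsine square root transform for a proportion p in [0, 1]."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    return math.asin(math.sqrt(p))

def accuracy_by_condition(trials):
    """Proportion correct per (emotion, phase) cell, arcsine square root transformed.
    `trials` is an iterable of (emotion, phase, correct) tuples."""
    n_correct = defaultdict(int)
    n_total = defaultdict(int)
    for emotion, phase, correct in trials:
        n_total[(emotion, phase)] += 1
        n_correct[(emotion, phase)] += int(bool(correct))
    return {cell: arcsine_sqrt(n_correct[cell] / n_total[cell]) for cell in n_total}

def intensity_to_proportion(rating):
    """Assumed rescaling of a 0-100 intensity rating to [0, 1] before transforming."""
    return rating / 100.0

demo = [("fear", "systole", True), ("fear", "systole", False),
        ("happy", "diastole", True)]
scores = accuracy_by_condition(demo)
print(round(scores[("fear", "systole")], 4))                  # 0.7854
print(round(arcsine_sqrt(intensity_to_proportion(100)), 4))   # 1.5708
```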

A series of 2-way mixed model ANOVAs examined the interaction between emotion type and cardiac timing on accuracies and latencies for visual search and emotion detection. An equivalent analysis was performed on intensity ratings. Significant interactions were followed by post-hoc paired samples t-tests assessing the effect of cardiac synchrony on each emotion.
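For illustration, a textbook paired-samples t statistic on per-participant condition means could be computed as below, with differences taken as diastole minus systole so that negative values indicate higher scores at systole, matching the sign convention used for visual search accuracy in the Results. This is a hedged sketch, not a reproduction of the reported tests: a paired test on 71 participants has 70 degrees of freedom, whereas the Results report df of 490 and 469, which suggests those contrasts may have been derived from the mixed-model error term. The function name and example values are hypothetical.

```python
import math
from statistics import mean, stdev

def paired_t(systole, diastole):
    """Paired-samples t on (diastole - systole) differences.
    Inputs are per-participant means for one emotion, in matching order.
    Returns (t, df) with df = n - 1."""
    if len(systole) != len(diastole) or len(systole) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [d - s for s, d in zip(systole, diastole)]
    se = stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean difference
    if se == 0:
        raise ValueError("differences have zero variance")
    return mean(diffs) / se, len(diffs) - 1

t, df = paired_t(systole=[0.6, 0.7, 0.8], diastole=[0.5, 0.65, 0.7])
print(round(t, 3), df)  # -5.0 2
```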

An exploratory covariate analysis with questionnaire scores and cardiovascular indices is presented in the Appendix.

Results:

Visual search accuracy:

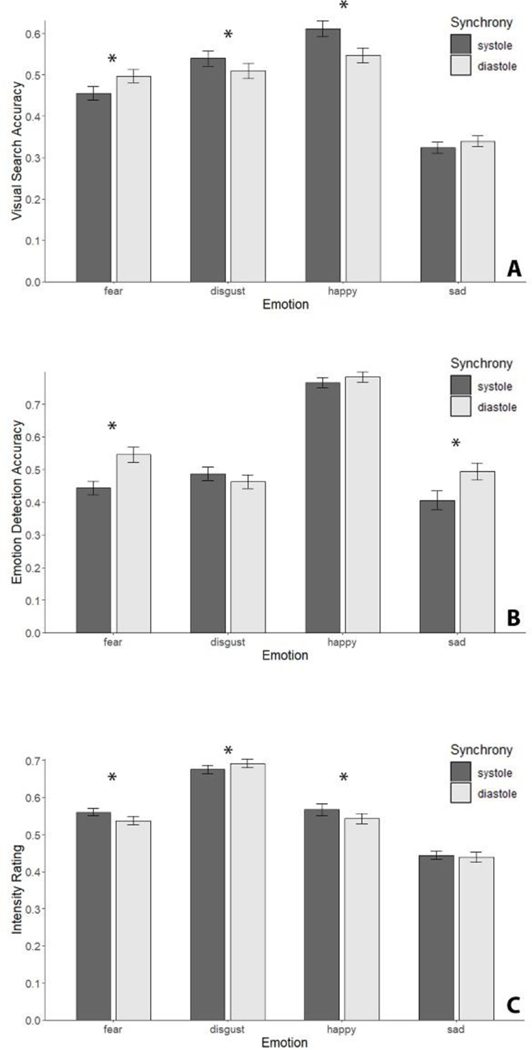

We found a significant main effect of emotion type, F(3,490)=143.750, p<.001, ηp2=.47, and a significant interaction between emotion type and cardiac synchrony, F(3,490)=11.880, p<.001, ηp2=.068, on visual search accuracy scores. There was no significant main effect of cardiac synchrony, F(1,490)=1.270, p=.261. Post-hoc paired samples t-tests compared accuracy scores between systole/diastole for each emotion type. We found that visual search accuracy in systole trials was significantly higher than in diastole trials for disgust, t(490)=−2.010, p=.0453, and happy faces, t(490)=−4.400, p<.001. Conversely, we found that visual search accuracy in diastole trials was significantly higher than in systole trials for fearful faces, t(490)=3.040, p=.0025. There was no significant effect of cardiac phase for sad faces, t(490)=1.000, p=.319, see Figure 2A.

Figure 2. A: Visual search accuracy; B: Emotion detection accuracy; C: Intensity ratings.

Task scores as a function of emotion and cardiac synchrony. Accuracy scores were computed as the mean proportion of correct responses. Error bars represent SE.

The latency analysis identified main effects of synchrony, with shorter latencies at diastole than at systole, F(1,490)=14.00, p<.001, ηp2=.028, and of emotion, F(3,490)=17.63, p<.001, ηp2=.097, but no significant interaction between emotion and synchrony, F(3,490)=1.75, p=.156. See Table A1 in the Appendix for descriptive statistics.

Emotion detection Accuracy:

We found a significant main effect of emotion, F(3,490)=113.290, p<.001, ηp2=.41, and cardiac phase, F(1,490)=10.990, p=.001, ηp2=.022, on emotion detection accuracy. There was also a significant interaction between emotion and cardiac phase, F(3,490)=6.090, p<.001, ηp2=.036. Post-hoc paired samples t-tests compared accuracy scores between systole/diastole for each emotion type. We found that emotion detection accuracy in diastole trials was significantly higher than in systole trials for fear, t(490)=3.670, p<.001, and sad, t(490)=2.790, p=.006, faces. There was no significant effect of cardiac phase for disgust, t(490)=−1.280, p=.201, and happy, t(490)=0.840, p=.4, faces. See Figure 2B.

The analysis of differences in latencies found a main effect of emotion, F(3,490)=32.35, p<.001, ηp2=.17, but no significant main effect of synchrony, F(1,490)=1.36, p=.245 or interaction between emotion and synchrony, F(3,490)=2.01, p=.112. See Table A2 in Appendix for descriptive statistics.

Intensity Ratings:

We found a significant main effect of emotion, F(3,469)=102.000, p<.001, ηp2=.39, and cardiac phase, F(1,469)=7.610, p=.006, ηp2=.016, on intensity ratings. There was also a significant interaction between emotion and cardiac phase, F(3,469)=19.040, p<.001, ηp2=.039.
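The reported ηp2 values can be approximated from each F statistic and its degrees of freedom as ηp2 = F·df_effect / (F·df_effect + df_error). This identity is exact for fixed-effects ANOVA and only an approximation for mixed models, so the sketch below, with a hypothetical function name, is a consistency check rather than the authors' computation.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Approximate partial eta squared from an F statistic and its dfs."""
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and dfs positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)

print(round(partial_eta_squared(143.75, 3, 490), 2))  # 0.47  (visual search, emotion)
print(round(partial_eta_squared(11.88, 3, 490), 3))   # 0.068 (visual search, interaction)
print(round(partial_eta_squared(19.04, 3, 469), 3))   # 0.109 (intensity, interaction)
```

The first two calls reproduce the values reported for visual search accuracy. For the emotion by cardiac phase interaction on intensity ratings, F(3,469)=19.040 gives roughly .11 under this approximation, compared with the reported .039, so one of these two values may be worth checking against the OSF data.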

Post-hoc paired samples t-tests compared intensity between systole/diastole for each emotion type. We found that intensity ratings in systole trials were significantly higher than in diastole trials for fear, t(469)=3.720, p=.0002, and happy, t(469)=3.950, p<.001, faces. Conversely, intensity ratings in diastole trials were significantly higher than in systole trials for disgust faces, t(469)=3.770, p=.0002. There was no significant effect of cardiac phase for sad faces, t(469)=1.010, p=.314. See Figure 2C.

Discussion:

The purpose of this study was to expand previous research on the role of cardiac signals in the detection of emotional faces. By adapting an emotional VST to present emotional faces in synchrony with baroreceptor signals, we sought to provide new insight into the interoceptive correlates of emotion. We included four emotional face sets (happy, fear, sad, disgust) and found that cardiac interoceptive signals contributed to cognitive-emotional processing beyond fear. The results did not uniformly support our hypotheses, but rather suggest that afferent baroreceptor signaling supports emotional processing in ways that depend on the experimental paradigm employed.

Specifically, participants showed poorer visual search and emotion detection accuracy, but higher intensity ratings, for fearful faces presented at systole versus diastole. While the intensity finding replicates Garfinkel et al. (2014), the poorer accuracy does not support the view that interoceptive signals selectively enhance the detection of fearful faces. This discrepancy may arise because previous experimental paradigms relied on tasks in which aversive stimuli show a superiority effect. For example, the emotional attentional blink task used by Garfinkel et al. (2014) has repeatedly been shown to capture enhanced interference by aversive stimuli (Leganes-Fonteneau et al. 2018). Similarly, spatial cueing tasks, such as the one used by Azevedo and colleagues (2018), are more sensitive to threatening stimuli (Cisler & Olatunji 2010).

The emotional VST, on the other hand, typically elicits superiority effects for happy faces and an inhibition of fearful faces (Williams et al. 2005). This is consistent with our finding that participants showed greater visual search accuracy for happy faces presented at systole versus diastole. Emotion detection accuracy for happy faces did not differ between phases, possibly because ceiling effects obscured any systolic amplification. The finding that happy faces are also perceived as more intense at systole extends previous results obtained by Garfinkel et al. (2014), indicating that interoceptive signals do not exclusively amplify the subjective perception of fearful faces. We also found an increase in visual search accuracy for disgust faces presented at systole, although the effect was smaller than for happy faces, again in line with previous emotional VST results showing higher responsiveness to happy and disgust faces than to fearful ones (Calvo & Nummenmaa 2008).

The reduced visual search and emotion detection accuracy we observed for fearful faces at systole can be interpreted in light of Williams et al. (2005), who proposed that fearful faces are less detectable in a VST because they signal imminent threat, directing attention towards the surrounding faces. The same rationale could apply to our results: systolic presentation of fearful faces could have increased their ability to signal threat, directing attention more effectively towards the other faces in the stimulus array and thereby decreasing visual search and emotion detection accuracy. A discussion of the attentional mechanisms supporting superiority effects for one emotion or another is beyond the scope of this brief report, but these effects could be attributed to the perceptual characteristics of the different faces and their salience (Calvo & Nummenmaa 2008; Lundqvist et al. 2015). Beyond the effects on fearful faces and the expected superiority effect for happy faces, we also observed effects of cardiac signaling on disgust faces (greater visual search accuracy at systole) and sad faces (poorer emotion detection accuracy at systole). Moreover, the flexibility of the emotional VST to incorporate different combinations of stimuli as targets or distractors, different instructions, or additional techniques (e.g. eye tracking) could help clarify the interaction between emotional processing and cardiac signaling.

A limitation of this study is that we could not characterize the reliability of the R-wave prediction, although the replication of previous findings (Garfinkel et al. 2014) supports the methodology we used. Future studies should attempt replication using more advanced and precise cardiac timing procedures.

Conclusions

Interoceptive deficits have been proposed as a source of emotional impairment in a variety of psychiatric disorders (e.g. anxiety, autism spectrum disorders, depression; reviewed in Khalsa et al. 2017). Individuals with the most prevalent psychiatric disorders often exhibit critical deficits in processing happy and sad stimuli (Joormann & Gotlib 2007), yet the few existing studies have suggested that cardiac interoceptive signaling is specific to fearful stimuli.

This study suggests that the apparent specificity of interoception for fearful stimuli may be a task-based artifact, as other emotions (happy, disgust) were also amplified by cardiac signals. Although this runs counter to current perspectives on the autonomic specificity of emotion (Friedman 2010), our results are consistent with cardiac outputs acting as an undifferentiated interoceptive signal of general arousal that feeds into evaluative cognitive operations (Cacioppo et al. 2000). More research is needed, but our novel emotional VST may allow a broader assessment of the interoceptive correlates of emotional processing in clinical populations. Future research should aim directly at disentangling the relationship between interoceptive signals and the cognitive and attentional factors driving emotional processing (Galvez-Pol et al. 2020).

Furthermore, given evidence that baroreceptor signaling can be modulated through resonance paced breathing, possibly increasing interoceptive awareness (Leganes-Fonteneau et al. in press), the emotional VST could be used to examine whether resonance breathing tightens the link between interoceptive signals and emotional face detection, as a prospective clinical tool.


Acknowledgments

The data that support the findings of this study are openly available in OSF at https://doi.org/10.17605/OSF.IO/S2EW9.

References:

- Azevedo RT, Badoud D & Tsakiris M (2018) Afferent cardiac signals modulate attentional engagement to low spatial frequency fearful faces. Cortex 104:232–240.

- Cacioppo JT, Berntson GG, Larsen JT, Poehlmann KM & Ito TA (2000) The psychophysiology of emotion. In: Handbook of emotions, 2nd ed. pp. 173–191.

- Calvo MG & Nummenmaa L (2008) Detection of emotional faces: Salient physical features guide effective visual search. J Exp Psychol Gen 137:471–494.

- Cisler JM & Olatunji BO (2010) Components of attentional biases in contamination fear: Evidence for difficulty in disengagement. Behav Res Ther 48:74–78.

- Craig A (2003) Interoception: the sense of the physiological condition of the body. Curr Opin Neurobiol 13:500–505.

- Critchley H & Garfinkel S (2017) Interoception and emotion. Curr Opin Psychol 17:7–14.

- Friedman BH (2010) Feelings and the body: The Jamesian perspective on autonomic specificity of emotion. Biol Psychol 84:383–393.

- Galvez-Pol A, McConnell R & Kilner JM (2020) Active sampling in visual search is coupled to the cardiac cycle. Cognition 196:104149.

- Garfinkel SN, Barrett AB, Minati L, Dolan RJ, Seth AK & Critchley HD (2013) What the heart forgets: Cardiac timing influences memory for words and is modulated by metacognition and interoceptive sensitivity. Psychophysiology 50:505–512.

- Garfinkel SN & Critchley HD (2016) Threat and the Body: How the Heart Supports Fear Processing. Trends Cogn Sci 20:34–46.

- Garfinkel SN, Minati L, Gray MA, Seth AK, Dolan RJ & Critchley HD (2014) Fear from the heart: sensitivity to fear stimuli depends on individual heartbeats. J Neurosci 34:6573–82.

- Gray MA, Minati L, Paoletti G & Critchley HD (2010) Baroreceptor activation attenuates attentional effects on pain-evoked potentials. Pain 151:853–861.

- Joormann J & Gotlib IH (2007) Selective attention to emotional faces following recovery from depression. J Abnorm Psychol 116:80–85.

- Khalsa SS, Adolphs R, Cameron OG, Critchley HD, Davenport PW, Feinstein JS, Feusner JD, Garfinkel SN, Lane RD, Mehling WE, Meuret AE, Nemeroff CB, Oppenheimer S, Petzschner FH, Pollatos O, Rhudy JL, Schramm LP, Simmons WK, Stein MB, Stephan KE, Van den Bergh O, Van Diest I, von Leupoldt A, Paulus MP, Ainley V, Al Zoubi O, Aupperle R, Avery J, Baxter L, Benke C, Berner L, Bodurka J, Breese E, Brown T, Burrows K, Cha Y-H, Clausen A, Deville D, Duncan L, Duquette P, Ekhtiari H, Fine T, Ford B, Garcia Cordero I, Gleghorn D, Guereca Y, Harrison NA, Hassanpour M, Hechler T, Heller A, Hellman N, Herbert B, Jarrahi B, Kerr K, Kirlic N, Klabunde M, Kraynak T, Kriegsman M, Kroll J, Kuplicki R, Lapidus R, Le T, Hagen KL, Mayeli A, Morris A, Naqvi N, Oldroyd K, Pané-Farré C, Phillips R, Poppa T, Potter W, Puhl M, Safron A, Sala M, Savitz J, Saxon H, Schoenhals W, Stanwell-Smith C, Teed A, Terasawa Y, Thompson K, Toups M, Umeda S, Upshaw V, Victor T, Wierenga C, Wohlrab C, Yeh H, Yoris A, Zeidan F, Zotev V & Zucker N (2017) Interoception and Mental Health: A Roadmap. Biol Psychiatry Cogn Neurosci Neuroimaging.

- Leganes-Fonteneau M, Buckman J, Islam S, Pawlak A, Vaschillo B, Vaschillo E & Bates M (in press) The Cardiovascular Mechanisms of Interoceptive Awareness: Effects of Resonance Breathing. Int J Psychophysiol.

- Leganes-Fonteneau M, Scott R & Duka T (2018) Attentional responses to stimuli associated with a reward can occur in the absence of knowledge of their predictive values. Behav Brain Res 341:26–36.

- Leganes-Fonteneau M, Buckman J, Pawlak A, Vaschillo B, Vaschillo E & Bates M (2020) Interoceptive signaling in alcohol cognitive biases: Role of family history and alliesthetic components. Addict Biol.

- Lundqvist D, Bruce N & Öhman A (2015) Finding an emotional face in a crowd: Emotional and perceptual stimulus factors influence visual search efficiency. Cogn Emot 29:621–633.

- Lundqvist D, Flykt A & Öhman A (1998) The Karolinska directed emotional faces (KDEF). CD ROM from Dep Clin Neurosci Psychol Sect Karolinska Institutet:91–630.

- Lundqvist D, Juth P & Öhman A (2014) Using facial emotional stimuli in visual search experiments: The arousal factor explains contradictory results. Cogn Emot 28:1012–1029.

- Öhman A, Lundqvist D & Esteves F (2001) The face in the crowd revisited: A threat advantage with schematic stimuli. J Pers Soc Psychol 80:381–396.

- Pfeifer G, Garfinkel SN, Gould van Praag CD, Sahota K, Betka S & Critchley HD (2017) Feedback from the heart: Emotional learning and memory is controlled by cardiac cycle, interoceptive accuracy and personality. Biol Psychol 126:19–29.

- Pollatos O, Gramann K & Schandry R (2007) Neural systems connecting interoceptive awareness and feelings. Hum Brain Mapp 28:9–18.

- Suzuki K, Garfinkel SN, Critchley HD & Seth AK (2013) Multisensory integration across exteroceptive and interoceptive domains modulates self-experience in the rubber-hand illusion. Neuropsychologia 51:2909–2917.

- Williams MA, Moss SA, Bradshaw JL & Mattingley JB (2005) Look at me, I’m smiling: Visual search for threatening and nonthreatening facial expressions. Vis cogn 12:29–50.
