Abstract

Introduction

The rapid growth in the number of systematic reviews (SRs) presents notable challenges for decision-makers seeking to answer clinical questions. In 1997, Jadad et al created an algorithm to assess discordance in results across SRs on the same question. Our study aims to (1) replicate assessments done in a sample of studies using the Jadad algorithm to determine whether the same SR would have been chosen, (2) evaluate the Jadad algorithm in terms of utility, efficiency and comprehensiveness, and (3) describe how authors address discordance in results across multiple SRs.

Methods and analysis

We will use a database of 1218 overviews (2000–2020), created for a bibliometric study, as the basis of our search for studies assessing discordance (called discordant reviews). The bibliometric study searched MEDLINE (Ovid), Epistemonikos and the Cochrane Database of Systematic Reviews for overviews. We will include any study using Jadad (1997) or another method to assess discordance. The first 30 studies screened at the full-text stage by two independent reviewers and meeting our eligibility criteria will be included. We will replicate the authors’ Jadad assessments, compare our outcomes qualitatively and evaluate the differences between our Jadad assessments of discordance and the authors’ assessments.

Ethics and dissemination

No ethics approval was required as no human subjects were involved. In addition to publishing in an open-access journal, we will disseminate evidence summaries through formal and informal conferences, academic websites, and across social media platforms. This is the first study to comprehensively evaluate and replicate Jadad algorithm assessments of discordance across multiple SRs.

Keywords: public health, statistics & research methods, epidemiology

Strengths and limitations of this study.

This is the first proposed empirical study to use a systematic approach to evaluate authors’ assessment of discordance across systematic reviews and replicate Jadad algorithm assessments.

We believe the greatest strength of the proposed study is the iterative process the authors used to develop decision rules for interpreting and applying each step of the Jadad algorithm.

To mitigate observer bias, reviewers will be blinded to the discordant review authors’ Jadad assessments.

In our search update, we searched only MEDLINE (Ovid), which may have reduced the number of potentially relevant studies found.

Background

Information overload is an increasing problem for health practitioners, researchers and decision-makers. Global research output is growing rapidly, and so is the number of systematic reviews (SRs) published each year.1 Between January and October 2020, 807 SRs on COVID-19 alone were published in PubMed,2 and the number of SRs published grew by 4676% between 1995 and 2017.3 Challenges arising from this growth include identifying high-quality, comprehensive and recent reports on the topic of interest.

‘Overviews of SRs’ (henceforth called overviews) evolved in response to these challenges.4–7 Overviews summarise the results of SRs, and help make sense of potentially conflicting, discrepant, and overlapping results and conclusions of SRs on the same question.8–15 Overviews may also include SRs with concordant results; hence, we have named studies that identify and explain the discordance between conflicting SRs on the same question as ‘discordant reviews’. These discordant reviews are often called SRs of overlapping meta-analyses,13 16 conflicting results of meta-analyses17 18 and discordant meta-analyses.19–21

We define discordance as occurring when SRs with identical or very similar clinical, public health or policy questions report different results for the same outcome. A common method for dealing with multiple SRs with discordant results is to specify methodological criteria to select only one SR (eg, the highest quality and most comprehensive SR).12 However, many other methods have been proposed, including statistical approaches to address discordance in results across SRs.11 12

Jadad et al22 developed a decision tree (ie, an algorithm) to assess discordance in results across a sample of SRs on the same question to aid healthcare providers in making clinical decisions. The Jadad algorithm guides users through a methodological assessment of SR components to identify potential causes of discordance and ultimately choose the best SR from among several. Despite the availability of this tool since 1997, it has not been universally adopted and has been inconsistently applied when used.23–25 The aim of this study is to comprehensively replicate and evaluate the Jadad algorithm for assessing discordance across SRs.

Objectives

Our study objectives are:

Describe how discordant reviews address discordance in results across multiple SRs using content analysis (study 1).

Replicate Jadad assessments from published discordant reviews to identify sources of discordance and to determine if the same SR(s) was chosen as the ‘best available evidence’ (study 2).

Evaluate the Jadad algorithm in terms of utility, efficiency and comprehensiveness (study 2).

Methods

Study design

This is a methods study in the knowledge synthesis field. We followed SR guidance for the study selection and data extraction stages.26

Search and selection of discordant reviews

Database of 1218 overviews

We will use a database of 1218 overviews published between 1 January 2000 and 30 December 2020, created for a bibliometric study,27 as the basis of our search for discordant reviews using Jadad or another method to assess discordance. The bibliometric study used a validated search filter for overviews28 in MEDLINE (Ovid), Epistemonikos and the Cochrane Database of Systematic Reviews (CDSR). In an empirical methods study of the retrieval sensitivity of six databases, the combination of MEDLINE and Epistemonikos retrieved 95.2% of all SRs.29 We assume that this combination would retrieve a comparable proportion of overviews. Epistemonikos contains both published and unpublished reports. We searched the CDSR through the website interface using the filter for Cochrane reviews. Overviews included in the bibliometric study had these characteristics: they (1) synthesised the results of SRs, (2) systematically searched for evidence in a minimum of two databases and (3) conducted a search using a combination of text words and MeSH terms. The included overviews also had to have a methods section in the main body of the paper and to focus on health interventions. To identify studies assessing discordance in the database of 1218 overviews, we will use the EndNote search function and Boolean logic to search for the following terms: overlap*[title/abstract] or discrepan*[title/abstract] or discord*[title/abstract] or concord*[title/abstract] or conflict*[title/abstract] or Jadad[abstract].
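The Boolean query above can be approximated outside EndNote, for example to double-check hits in an exported library. The sketch below is a minimal, hypothetical Python illustration, assuming titles and abstracts are available as plain strings; `term_to_regex` and `matches_query` are our own names, and `*` is treated as right truncation. It does not reproduce EndNote’s own search engine.

```python
import re

# Hypothetical re-implementation of the EndNote query for checking an export.
# Terms ending in '*' are right-truncated (eg, 'discord*' matches 'discordant').
TITLE_ABSTRACT_TERMS = ["overlap*", "discrepan*", "discord*", "concord*", "conflict*"]
ABSTRACT_ONLY_TERMS = ["Jadad"]


def term_to_regex(term: str) -> "re.Pattern[str]":
    """Compile a search term into a case-insensitive regex anchored at a word start."""
    stem = re.escape(term.rstrip("*"))
    tail = r"\w*" if term.endswith("*") else ""
    return re.compile(rf"\b{stem}{tail}\b", re.IGNORECASE)


def matches_query(title: str, abstract: str) -> bool:
    """Boolean OR across all terms: title/abstract terms first, then abstract-only terms."""
    title_and_abstract = f"{title} {abstract}"
    if any(term_to_regex(t).search(title_and_abstract) for t in TITLE_ABSTRACT_TERMS):
        return True
    return any(term_to_regex(t).search(abstract) for t in ABSTRACT_ONLY_TERMS)


print(matches_query("Discordant meta-analyses of statins", ""))      # True
print(matches_query("Jadad scale use in trials", "Statin efficacy"))  # False: Jadad only in title
```

Restricting "Jadad" to the abstract mirrors the `Jadad[abstract]` field in the query, which avoids retrieving trials that merely cite the Jadad quality scale in their title.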

MEDLINE (Ovid) search strategy, January to April 2021

We updated this search in Ovid MEDLINE on 18 April 2021 using the following search string: (‘systematic reviews’.ti,ab. or ‘meta-analyses’.ti,ab.) AND (overlap.ti,ab. or discrepant.ti,ab. or discordant.ti,ab. or difference.ti,ab. or conflicting.ti,ab. or Jadad.ab.).

Screening discordant reviews

Process for screening discordant reviews

Citations identified by our searches will be assigned a random number and screened sequentially. The first 30 discordant reviews screened at the full-text stage and meeting our eligibility criteria will be included.

All authors will pilot the screening form on 20 discordant reviews to ensure high levels of agreement and common definitions of eligibility criteria.

Two authors will independently screen the full texts of discordant reviews. Discrepancies will be resolved by consensus or, when necessary, by arbitration by a third reviewer.
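A minimal sketch of the random-order, sequential screening rule, assuming citations are identifiers and that `is_eligible` stands in for the two-reviewer full-text decision. The function name and the seed value are hypothetical; seeding the random generator makes the screening order reproducible and auditable.

```python
import random
from typing import Callable, Iterable, List


def select_first_eligible(citations: Iterable[str],
                          is_eligible: Callable[[str], bool],
                          n: int = 30,
                          seed: int = 2021) -> List[str]:
    """Put citations in a random (seeded) order, screen them one by one and
    stop once n eligible discordant reviews have been included."""
    ordered = list(citations)
    random.Random(seed).shuffle(ordered)  # equivalent to assigning random numbers and sorting
    included: List[str] = []
    for citation in ordered:
        if is_eligible(citation):  # placeholder for the two-reviewer full-text decision
            included.append(citation)
            if len(included) == n:
                break
    return included


# Hypothetical records: every third record is treated as eligible.
records = [f"rec{i:03d}" for i in range(200)]
sample = select_first_eligible(records, lambda rec: int(rec[3:]) % 3 == 0)
print(len(sample))  # 30
```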

Stage 1 screening criteria

We will first include all reports aiming to assess discordant results across SRs on the same question. Studies assessing discordance can assess (1) discordant results or (2) discordant interpretations of the results and conclusions. Studies examining either (1) or (2) are eligible.30–33

If a study meets stage 1 criteria, it will be included in study 1.

Stage 2 screening criteria

From this sample, we will then screen discordant reviews based on the following inclusion criteria:

Included a minimum of two SRs with a meta-analysis of randomised controlled trials (RCTs), but may have included other study types beyond RCTs.

Used the Jadad algorithm.

If a study meets stage 2 screening criteria, it will move on to stage 3 screening.

Stage 3 screening

After stage 2 screening is complete, we will screen based on the authorship team. When the same ‘core’ authors (first, last and/or corresponding) conducted two or more of the identified discordant reviews, we will include only the most recent of these reviews. Our rationale is that author groups tend to use the same methods to assess discordance (eg, Mascarenhas et al24 and Chalmers et al15).
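The stage 3 rule can be sketched as a greedy pass from the newest to the oldest discordant review. This is a hypothetical illustration: it assumes that any shared core author places two reviews in the same author group, and the class and function names are ours.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class DiscordantReview:
    review_id: str
    year: int
    core_authors: FrozenSet[str]  # first, last and/or corresponding authors


def keep_most_recent_per_author_group(reviews: List[DiscordantReview]) -> List[DiscordantReview]:
    """Walk from newest to oldest; skip a review if any of its core authors
    already appears in a more recent review that was kept."""
    kept: List[DiscordantReview] = []
    represented: set = set()
    for review in sorted(reviews, key=lambda r: r.year, reverse=True):
        if review.core_authors & represented:
            continue  # this author group is already represented by a newer review
        kept.append(review)
        represented |= review.core_authors
    return kept


# Hypothetical reviews: "a" and "b" share a core author.
reviews = [
    DiscordantReview("a", 2018, frozenset({"Smith", "Lee"})),
    DiscordantReview("b", 2020, frozenset({"Smith", "Khan"})),
    DiscordantReview("c", 2019, frozenset({"Ortiz"})),
]
print([r.review_id for r in keep_most_recent_per_author_group(reviews)])  # ['b', 'c']
```

A greedy pass is one reasonable reading; chains of partially overlapping author teams could be grouped differently (eg, by connected components), which the protocol does not specify.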

We will include discordant reviews in any language and of any publication status, published at any time. We will use Google Translate to interpret non-English studies for screening and assessment. The resulting publications will form the set for study 2.

In the case where the authors of this study are also authors of one or several of the included discordant reviews, those authors will not conduct screening, extraction, Jadad assessment or analysis of the discordant review in question.

Full texts of all SRs included in a discordant review will be obtained.

Extraction of the primary intervention and outcome

Identification of the primary outcome from the discordant review

As a first step in assessing discordance, we will identify the primary outcome of each discordant review. The primary outcome will be extracted when it is explicitly defined in the title, abstract, objectives, introduction or methods sections.34 35 If the primary outcome is not found in any of these sections, we will extract the first outcome mentioned in the manuscript.34 35 If the primary outcome cannot be identified by either approach, we will consider it unspecified, exclude the study and replace it with the next discordant review in our database.
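The fallback order for the primary outcome can be written as a short function. This sketch assumes a hypothetical input format in which reviewers record, for each section, the outcome explicitly labelled as primary there; when several sections define one, taking the first section in the listed order is our assumption.

```python
from typing import Dict, List, Optional

# Order in which sections are checked for an explicitly defined primary outcome.
EXPLICIT_SECTIONS = ["title", "abstract", "objectives", "introduction", "methods"]


def identify_primary_outcome(explicit: Dict[str, str],
                             outcomes_in_order: List[str]) -> Optional[str]:
    """Return the primary outcome, or None when the discordant review should be
    excluded and replaced with the next one in the database."""
    for section in EXPLICIT_SECTIONS:
        if explicit.get(section):  # outcome explicitly labelled as primary in this section
            return explicit[section]
    if outcomes_in_order:
        return outcomes_in_order[0]  # fallback: first outcome mentioned in the manuscript
    return None


print(identify_primary_outcome({"methods": "all-cause mortality"}, ["pain"]))  # all-cause mortality
print(identify_primary_outcome({}, ["pain", "function"]))                      # pain
print(identify_primary_outcome({}, []))                                         # None
```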

Identification of the primary intervention from the discordant review

Identification of the primary outcome and intervention is a two-step process. First, we will identify the primary intervention associated with the primary outcome in each discordant review; if this is unclear, we will choose the first intervention highlighted in the title or abstract of the discordant review.35 Second, we will extract the primary outcome and intervention from the included SRs when doing the Jadad assessments.

Identification of included SRs with meta-analysis of RCTs addressing the primary intervention/outcome of the discordant review

Once the primary outcome is identified, we will next identify how many SRs with meta-analysis of RCTs were included in the discordant review that address the primary outcome and intervention. It is this sample of SRs with meta-analysis of RCTs that will be the focus of our Jadad assessments.

Process to identify primary intervention and outcome

Two authors will extract the primary intervention and outcome, and disagreements will be discussed until consensus is reached.

Blinding of results in the included SRs

Observer bias, sometimes called ‘detection bias’ or ‘ascertainment bias’, occurs when outcome assessments are systematically influenced by the assessors’ conscious or unconscious predispositions.36 Blinded outcome assessors are used in trials to avoid such bias. One empirical study found evidence of substantial bias when trials failed to blind outcome assessors,36 whereas another did not.37 In our study, it is important that reviewers are blinded to the results of the discordant review authors’ Jadad assessments, as unblinding might predispose them to unconsciously choose the same SR as the discordant review authors.

We will blind the following components containing the results of the Jadad assessment and the conclusions: abstract, highlights, results of the Jadad assessment and discussion/conclusions section. Blinding will be achieved by deleting these components using the commercial Adobe Acrobat Pro or the free PDFCandy (https://pdfcandy.com). One author will blind the discordant review results and will not be involved in the corresponding Jadad assessment. Assessors will be instructed not to search for or read the included discordant reviews before or during assessment.

Piloting Jadad assessment prior to full assessment

A pilot exercise will be conducted by all assessors before the Jadad assessments to ensure consistent assessments across reviewers. Each reviewer will pilot two Jadad assessments and compare them with those of a second reviewer to identify discrepancies, which will be resolved through discussion. Any necessary revisions to the assessment (sections 3.7 and 3.8) will be noted.

Jadad assessments of discordance across SRs

While the Jadad paper provides an algorithm intended to identify and address discordance between SRs in a discordant review, the manuscript offers limited guidance on how to apply (operationalise) the algorithm. The absence of detailed guidance leaves room for subjective (mis)interpretation and, ultimately, confusion when the algorithm is used. To address this, we interpreted and implemented the algorithm iteratively, step by step. In virtual meetings, we sought consensus on decision rules for each step of the algorithm to ensure consistency in both interpretation and application. Feedback was solicited and the decision rules were adjusted until consensus was achieved. The decision rules then underwent pilot testing, as described in section 3.6, during which further feedback was solicited and adjustments were made.

The Jadad decision tree assesses and compares sources of inconsistency between SRs with meta-analyses, including differences in clinical, public health or policy questions, inclusion and exclusion criteria, extracted data, methodological quality assessments, data combining and statistical analysis methods.

Step A is to examine the multiple SRs matching the discordant review question using a PICO (Population, Intervention, Comparison, Outcome) framework. If the clinical, public health or policy questions are not identical, step B is to choose the SR closest to the decision-makers’ research question, and no further assessment is necessary. If multiple SRs with the same PICO are found, step C should be investigated. As our sample consists of discordant reviews, we will start at step C of the Jadad decision tree.

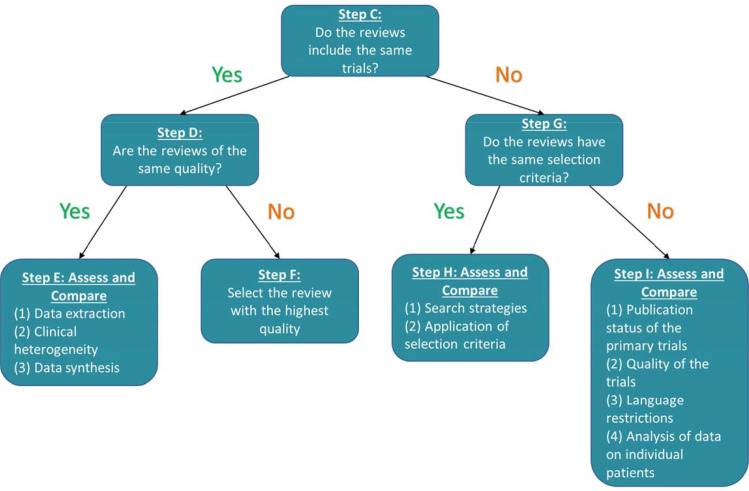

Here, we detail our interpretation of the Jadad algorithm for each step in assessing discordance in a group of SRs with similar PICO elements. Two researchers will independently assess each set of SRs in the included discordant review using the Jadad algorithm, starting with step C (figure 1). Information and data from the discordant review will be used first if reported; when data are not reported, we will consult the full texts of the included SRs. If a discordant review or an included SR does not report a method, we will record it as ‘not reported’, and that SR will not be chosen for that step.

Figure 1.

Jadad (1997) decision tree.

Steps D and G follow from step C. Steps E and F, and steps H and I, are completed depending on the decisions at steps D and G, respectively.
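The branching from step C onwards can be summarised as a small lookup table. This sketch assumes that each yes/no answer (same trials, same quality, same eligibility criteria) has already been judged by the assessors; `route` is a hypothetical helper that only traces the path through the tree.

```python
from typing import Dict, List

# Yes/no branches of the Jadad (1997) decision tree from step C onwards.
BRANCHES: Dict[str, Dict[bool, str]] = {
    "C": {True: "D", False: "G"},  # Do the reviews include the same trials?
    "D": {True: "E", False: "F"},  # Are the reviews of the same quality?
    "G": {True: "H", False: "I"},  # Do the reviews have the same eligibility criteria?
}


def route(same_trials: bool, same_quality: bool = False, same_criteria: bool = False) -> List[str]:
    """Return the steps visited, starting at step C and ending at E, F, H or I."""
    answers = {"C": same_trials, "D": same_quality, "G": same_criteria}
    path = ["C"]
    while path[-1] in BRANCHES:
        step = path[-1]
        path.append(BRANCHES[step][answers[step]])
    return path


print(route(same_trials=True, same_quality=False))   # ['C', 'D', 'F']
print(route(same_trials=False, same_criteria=True))  # ['C', 'G', 'H']
```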

‘Meeting’ a step or substep means that an SR met the criteria of the highest rule in that step’s hierarchy. For example, an SR meets step E3 if it fulfils both criteria A and B, the highest rule in the E3 hierarchy.

Step C: do the reviews include the same trials for the primary intervention and outcome?

We will determine whether the RCTs are similar across SRs either by finding this information in the discordant review or by extracting all RCTs from the included SRs into an Excel matrix, with SRs as columns and trials as rows. The RCTs will be listed in order of publication date (earliest trials at the top). Using this matrix, we will determine whether the SRs include the same or different trials.
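The step C matrix can also be built programmatically from extracted trial lists. This is a minimal sketch with hypothetical helper names and invented example trials; it assumes each SR’s included trials for the primary intervention and outcome are recorded as a set of trial identifiers.

```python
from typing import Dict, List, Set


def trial_matrix(srs: Dict[str, Set[str]], trial_years: Dict[str, int]) -> List[List[str]]:
    """Build the step C matrix: one column per SR, one row per trial (earliest first).
    A cell holds 'X' when the SR includes that trial."""
    sr_names = sorted(srs)
    all_trials = set().union(*srs.values())
    trials = sorted(all_trials, key=lambda t: (trial_years[t], t))
    header = ["trial"] + sr_names
    rows = [[t] + ["X" if t in srs[sr] else "" for sr in sr_names] for t in trials]
    return [header] + rows


def same_trials(srs: Dict[str, Set[str]]) -> bool:
    """Step C answer: True only if every SR includes exactly the same set of trials."""
    trial_sets = list(srs.values())
    return all(s == trial_sets[0] for s in trial_sets)


# Hypothetical example: two SRs and three trials with their publication years.
srs = {"SR1": {"T1", "T2"}, "SR2": {"T2", "T3"}}
years = {"T1": 2001, "T2": 1999, "T3": 2005}
for row in trial_matrix(srs, years):
    print(row)
print(same_trials(srs))  # False, so the assessment moves to step G
```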

Step D: are the reviews of the same quality?

If the SRs contain the same trials, the assessor moves to step D: assess whether the SRs are of the same methodological quality. If the discordant review used AMSTAR (A MeaSurement Tool to Assess the methodological quality of systematic Reviews),38 AMSTAR 239 or ROBIS (Risk Of Bias In Systematic reviews),40 we will extract its risk of bias/quality assessments. AMSTAR38 and the updated AMSTAR 239 are tools to assess methodological quality (ie, quality of conduct and reporting), and ROBIS40 is a tool to assess the risk of bias at the systematic-review level. Review-level biases include selective outcome reporting (eg, reporting only statistically significant outcomes rather than all outcomes) and publication bias (eg, published studies are more likely to report positive results). If the discordant review authors used any other tool or method to assess the risk of bias/quality of the SRs, or did not assess risk of bias/quality at all, we will conduct our own assessment using the ROBIS tool.40

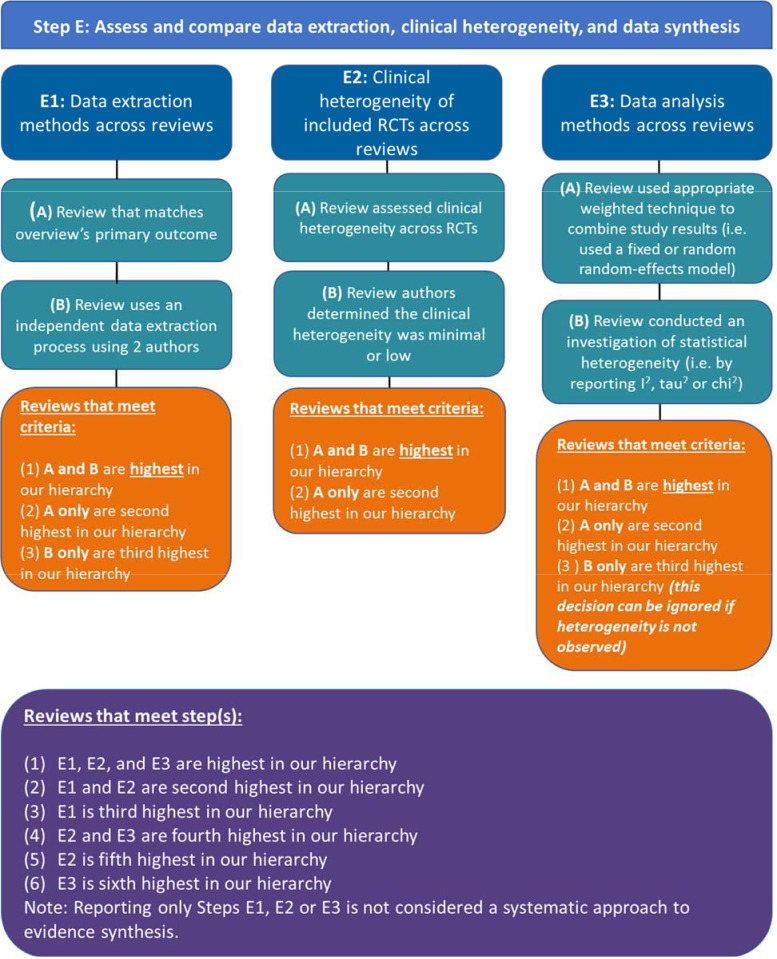

Step E: assess and compare data extraction, clinical heterogeneity and data synthesis

If the SRs are of the same risk of bias/quality, the next step is step E: assess and compare data extraction, clinical heterogeneity and data synthesis across the SRs.

Step E1: assess and compare the data extraction methods across reviews

For this step, Jadad states, ‘If reviews differ [in outcomes reported], the decision-maker should identify the review that takes into account the outcome measures most relevant to the problem that he or she is solving.’ We interpret this step as selecting the SR that (A) matches the discordant review’s primary outcome.

Jadad then writes that SRs in which two reviewers extract data independently are of the highest quality. We therefore decided that SRs that (B) used independent data extraction by two SR authors should be chosen. If a ROBIS assessment is done, criterion B will be mapped to ROBIS item 3.1, ‘Were efforts made to minimise error in data collection?’

Decision rules:

#1. Reviews that meet criteria A and B are highest in our hierarchy.

#2. Reviews that meet criterion A are second highest in our hierarchy.

#3. Reviews that meet criterion B are third highest in our hierarchy.
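The decision rules above rank each SR by the first rule whose criteria it fully meets, and SRs tied at the best rank are all chosen (as in the worked scenarios for step H1). A minimal sketch for step E1, assuming the criteria met by each SR are recorded as a set of letters; `rank` and `choose` are hypothetical helpers, and treating SRs that meet no rule as never chosen is our assumption.

```python
from typing import Dict, FrozenSet, List, Optional

# Step E1: A = matches the discordant review's primary outcome,
#          B = independent data extraction by two SR authors.
E1_RULES: List[FrozenSet[str]] = [frozenset("AB"), frozenset("A"), frozenset("B")]


def rank(met: FrozenSet[str], rules: List[FrozenSet[str]]) -> Optional[int]:
    """1-based position of the first rule whose criteria are all met; None if no rule is met."""
    for position, required in enumerate(rules, start=1):
        if required <= met:
            return position
    return None


def choose(reviews: Dict[str, FrozenSet[str]], rules: List[FrozenSet[str]]) -> List[str]:
    """Return every SR tied at the best rank; SRs that meet no rule are never chosen."""
    ranks = {name: rank(met, rules) for name, met in reviews.items()}
    ranked = [r for r in ranks.values() if r is not None]
    if not ranked:
        return []
    best = min(ranked)
    return sorted(name for name, r in ranks.items() if r == best)


print(choose({"SR1": frozenset("A"), "SR2": frozenset("B"), "SR3": frozenset("A")}, E1_RULES))
# ['SR1', 'SR3']
```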

Step E2: assess and compare clinical heterogeneity of the included RCTs across reviews

Clinical heterogeneity is assessed at the SR level by examining the clinical, public health or policy question pertaining to the primary outcome and the PICO elements of each included RCT to see whether they are sufficiently similar. If the PICO elements across RCTs are similar, clinical heterogeneity is minimal, and SRs can proceed to pool study results in a meta-analysis. If a ROBIS assessment is done, this question is mapped to ROBIS item 4.3, ‘Was the synthesis appropriate given the nature and similarity in the research questions, study designs and outcomes across included studies?’

If an SR states that (A) its authors assessed clinical (eg, PICO) heterogeneity across RCTs (in the methods or results sections), it will be chosen at this step. An example of an SR reporting a clinical heterogeneity assessment: ‘If we found 3 or more studies with similar study populations, treatment interventions and outcome assessments, we conducted quantitative analyses (Gaynes 2014)’. If authors reported and described clinical heterogeneity in the manuscript, SRs whose authors (B) judged clinical heterogeneity to be minimal or low, with a rationale, will be chosen at this step.

Decision rules:

#1. Reviews that meet criteria A and B are highest in our hierarchy.

#2. Reviews that meet criterion A are second highest in our hierarchy.

Step E3: assess and compare data analysis methods across reviews

Jadad et al are deliberately vague when describing how to judge whether a meta-analysis was appropriately conducted. We interpreted this step as choosing reviews that conducted (A) an appropriate weighted technique to combine study results (ie, a fixed-effect or random-effects model) and (B) an investigation of statistical heterogeneity (ie, by reporting I², τ² or χ²) (figure 2).

Decision rules, whether heterogeneity is present or absent in the meta-analysis:

#1. Reviews that meet criteria A and B are highest in our hierarchy.

#2. Reviews that meet criterion A only are second highest in our hierarchy.

#3. Reviews that meet criterion B only are third highest in our hierarchy (this rule can be ignored if heterogeneity is not observed).
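Rule #3 of step E3 is conditional on whether heterogeneity was observed. A minimal sketch, assuming our reading that an SR meeting criterion B only is simply left unranked when no heterogeneity is observed; `e3_rules` and `rank` are hypothetical helpers.

```python
from typing import FrozenSet, List, Optional


def e3_rules(heterogeneity_observed: bool) -> List[FrozenSet[str]]:
    """Step E3: A = weighted pooling (fixed-effect or random-effects model),
    B = statistical heterogeneity investigated (I², τ² or χ²).
    Rule #3 (B only) is dropped when no heterogeneity is observed."""
    rules = [frozenset("AB"), frozenset("A")]
    if heterogeneity_observed:
        rules.append(frozenset("B"))
    return rules


def rank(met: FrozenSet[str], rules: List[FrozenSet[str]]) -> Optional[int]:
    """1-based position of the first rule whose criteria are all met; None if none are."""
    for position, required in enumerate(rules, start=1):
        if required <= met:
            return position
    return None


print(rank(frozenset("B"), e3_rules(heterogeneity_observed=True)))   # 3
print(rank(frozenset("B"), e3_rules(heterogeneity_observed=False)))  # None
```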

Figure 2.

Our approach to operationalising step E of the Jadad algorithm.

Decision rules for step E

#1. Reviews that meet steps E1, E2 and E3 are highest in our hierarchy.

#2. Reviews that meet steps E1 and E2 are second highest in our hierarchy.

#3. Reviews that meet step E1 are third highest in our hierarchy.

#4. Reviews that meet steps E2 and E3 are fourth highest in our hierarchy.

#5. Reviews that meet step E2 are fifth highest in our hierarchy.

#6. Reviews that meet step E3 are sixth highest in our hierarchy.

Note: Reporting only one of steps E1, E2 or E3 is not considered a systematic approach to evidence synthesis.
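The step E rules combine the substeps each SR meets, where meeting a substep means meeting its highest rule. A minimal sketch, assuming substeps are recorded as strings per SR; the rules do not list the combination E1 and E3 without E2, so placing it by the first rule it satisfies (rule #3) is our assumption, and the function names are hypothetical.

```python
from typing import Dict, FrozenSet, List, Optional

# Step E hierarchy over the substeps each SR meets (rules #1 to #6).
STEP_E_RULES: List[FrozenSet[str]] = [
    frozenset({"E1", "E2", "E3"}),
    frozenset({"E1", "E2"}),
    frozenset({"E1"}),
    frozenset({"E2", "E3"}),
    frozenset({"E2"}),
    frozenset({"E3"}),
]


def rank_step_e(met: FrozenSet[str]) -> Optional[int]:
    """Position of the first rule whose substeps are all met; None if no substep is met."""
    for position, required in enumerate(STEP_E_RULES, start=1):
        if required <= met:
            return position
    return None


def choose_step_e(reviews: Dict[str, FrozenSet[str]]) -> List[str]:
    """Return all SRs tied at the best step E rank."""
    ranks = {name: rank_step_e(met) for name, met in reviews.items()}
    best = min((r for r in ranks.values() if r is not None), default=None)
    return sorted(name for name, r in ranks.items() if best is not None and r == best)


reviews = {
    "SR1": frozenset({"E1", "E3"}),  # no rule lists E1 + E3, so it falls to rule #3 (E1)
    "SR2": frozenset({"E2", "E3"}),  # rule #4
    "SR3": frozenset({"E3"}),        # rule #6
}
print(choose_step_e(reviews))  # ['SR1']
```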

Step F: select the review with the lowest risk of bias, or the highest quality

From the risk of bias/quality assessment conducted in step D, we will choose the SR with the lowest risk of bias judgement or the highest quality rating. ROBIS contains a final phase in which reviewers summarise the concerns identified in each domain and describe whether the conclusions were supported by the evidence. Based on these judgements, the SR receives a final rating of high, low or unclear risk of bias. For our Jadad assessment, we will use a binary rating of either high or low risk of bias; any SR assessed as at ‘unclear’ risk of bias will be deemed at high risk. When using the risk of bias/quality assessments of SRs from the included discordant reviews, we will use the authors’ ratings. If uncertainty exists, we will reassess the included SRs using ROBIS.
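A minimal sketch of the step F selection using ROBIS overall judgements only; how AMSTAR or AMSTAR 2 ratings would be compared is not specified here, so they are left out. The function names are hypothetical, and treating all SRs as tied when none is at low risk of bias is our assumption.

```python
from typing import Dict, List


def binary_robis(rating: str) -> str:
    """Collapse a ROBIS overall judgement to 'low' or 'high'; 'unclear' counts as high."""
    rating = rating.strip().lower()
    if rating not in {"low", "high", "unclear"}:
        raise ValueError(f"unexpected ROBIS rating: {rating!r}")
    return "low" if rating == "low" else "high"


def choose_step_f(ratings: Dict[str, str]) -> List[str]:
    """Return the SRs at low risk of bias; if none are, all SRs are tied at high risk."""
    binary = {name: binary_robis(r) for name, r in ratings.items()}
    low = sorted(name for name, r in binary.items() if r == "low")
    return low if low else sorted(binary)


print(choose_step_f({"SR1": "high", "SR2": "low", "SR3": "unclear"}))  # ['SR2']
```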

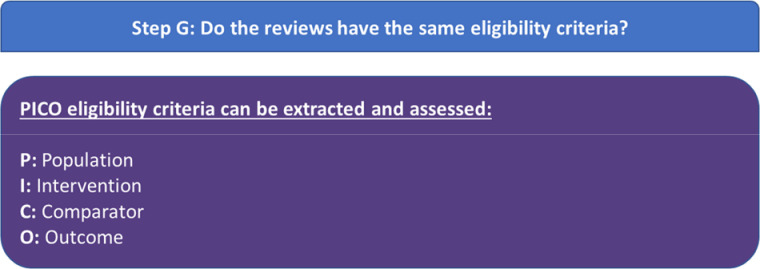

Step G: do the reviews have the same eligibility criteria?

If the SRs do not include the same trials, decision-makers are directed to step G: assess whether the SRs have the same eligibility criteria (figure 3). The discordant review may contain text in a methods section, or a table of characteristics of included SRs, from which the PICO eligibility criteria can be extracted and assessed. If not, the PICO eligibility criteria will be extracted from the included SRs by two authors independently and then compared to resolve any discrepancies.

Figure 3.

Our approach to operationalising step G of the Jadad algorithm.

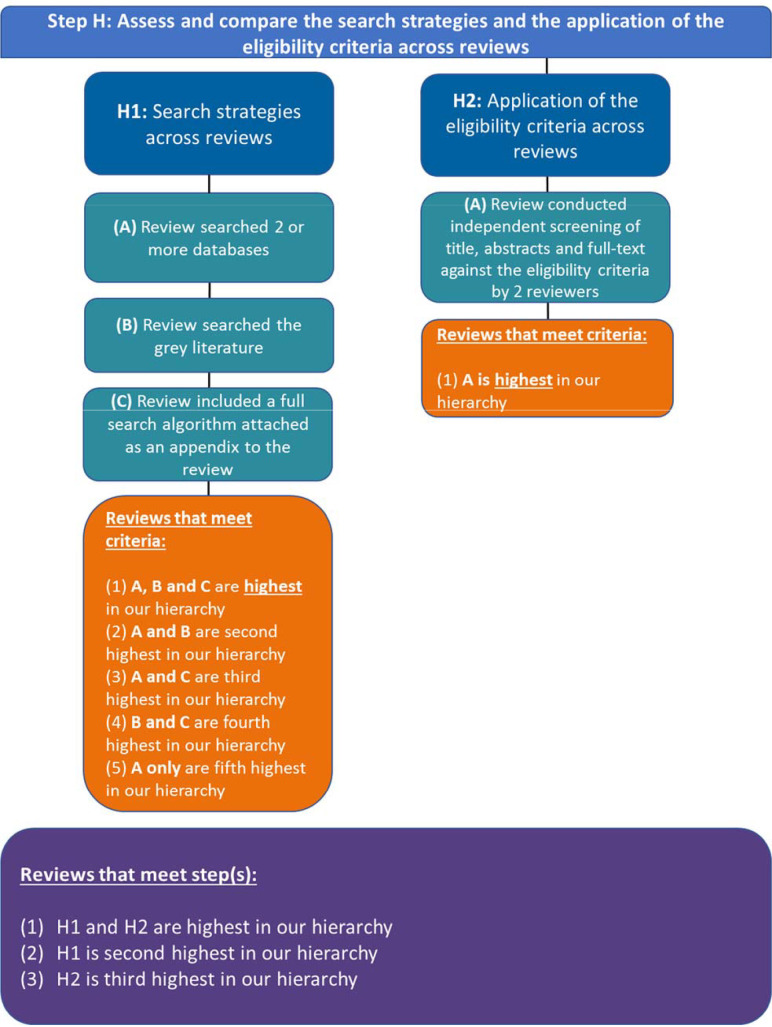

Step H: assess and compare the search strategies and the application of the eligibility criteria across reviews

If the SRs contain the same eligibility criteria, then step H is to assess and compare the search strategies and the application of the eligibility criteria across SRs (figure 4).

Figure 4.

Our approach to operationalising step H of the Jadad algorithm.

Step H1: assess and compare the search strategies across reviews

In this step, Jadad et al’s recommendations are vague, although they make reference to comprehensive search strategies as being less biased. We interpret this step as authors explicitly describing their search strategy such that it can be replicated. To meet this interpretation, our criteria are that SRs: (A) search 2 or more databases, (B) search the grey literature and (C) include a full search algorithm (may be attached as an appendix or included in the manuscript).

Decision rules:

#1. Reviews that meet criteria A, B and C are highest in our hierarchy.

#2. Reviews that meet criteria A and B are second highest in our hierarchy.

#3. Reviews that meet criteria A and C are third highest in our hierarchy.

#4. Reviews that meet criteria B and C are fourth highest in our hierarchy (unlikely scenario).

#5. Reviews that meet criterion A only are fifth highest in our hierarchy.

Scenarios for step H1.

Three SRs are identified for our Jadad assessment.

Criteria to choose an SR at step H1: (A) 2 or more databases searched; (B) grey literature searched; (C) full search strategy in an appendix.

Scenario 1

Review 1: A and B, but not C (decision rule #2).

Review 2: A and B but not C (decision rule #2).

Review 3: A and C, but not B (decision rule #3).

Conclusion: No SR meets all of our criteria; which do we choose? Based on our decision rules, we choose both reviews 1 and 2.

Scenario 2

Review 1: A, but neither B nor C (decision rule #5).

Review 2: A and B, but not C (decision rule #2).

Review 3: neither A, B, nor C (does not report the search methods).

Conclusion: No SR meets all of our criteria; which do we choose? Based on our decision rules, we choose review 2.
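The two step H1 scenarios can be reproduced mechanically: each SR takes the rank of the first rule whose criteria it fully meets, and all SRs tied at the best rank are chosen. A minimal sketch with hypothetical helper names; the criteria sets are taken directly from the scenarios above.

```python
from typing import Dict, FrozenSet, List, Optional

# Step H1: A = 2+ databases, B = grey literature searched, C = full search strategy reported.
H1_RULES: List[FrozenSet[str]] = [frozenset("ABC"), frozenset("AB"), frozenset("AC"),
                                  frozenset("BC"), frozenset("A")]


def rank(met: FrozenSet[str]) -> Optional[int]:
    """1-based position of the first H1 rule whose criteria are all met; None if none are."""
    for position, required in enumerate(H1_RULES, start=1):
        if required <= met:
            return position
    return None


def choose(reviews: Dict[str, FrozenSet[str]]) -> List[str]:
    """Return all SRs tied at the best H1 rank; SRs meeting no rule are not chosen."""
    ranks = {name: rank(met) for name, met in reviews.items()}
    best = min((r for r in ranks.values() if r is not None), default=None)
    return sorted(name for name, r in ranks.items() if best is not None and r == best)


scenario_1 = {"Review 1": frozenset("AB"), "Review 2": frozenset("AB"), "Review 3": frozenset("AC")}
scenario_2 = {"Review 1": frozenset("A"), "Review 2": frozenset("AB"), "Review 3": frozenset()}
print(choose(scenario_1))  # ['Review 1', 'Review 2']
print(choose(scenario_2))  # ['Review 2']
```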

Step H2: assess and compare the application of the eligibility criteria across reviews

In this substep, Jadad indicates that we should choose the SR with the most explicit and reproducible inclusion criteria, which is ambiguous. Jadad states, ‘SRs with the same selection criteria may include different trials because of differences in the application of the criteria, which are due to random or systematic error. Decision-makers should regard as more rigorous those SRs with explicit, reproducible inclusion criteria. Such criteria are likely to reduce bias in the selection of studies’.22 It was unclear whether this meant clearly reproducible PICO eligibility criteria (which would repeat step G), consistent application of the eligibility criteria by SRs (ie, comparing the eligibility criteria with the PICO of the included RCTs to check that they indeed met the criteria), or (A) independent screening of titles, abstracts and full texts against the eligibility criteria by two reviewers. We selected the last interpretation when choosing from the included SRs in a discordant review.

Decision rules:

#1. Reviews that meet criterion A are highest in our hierarchy.

Decision rules for step H

#1. Reviews that meet steps H1 and H2 are highest in our hierarchy.

#2. Reviews that meet step H1 are second highest in our hierarchy.

#3. Reviews that meet step H2 are third highest in our hierarchy.

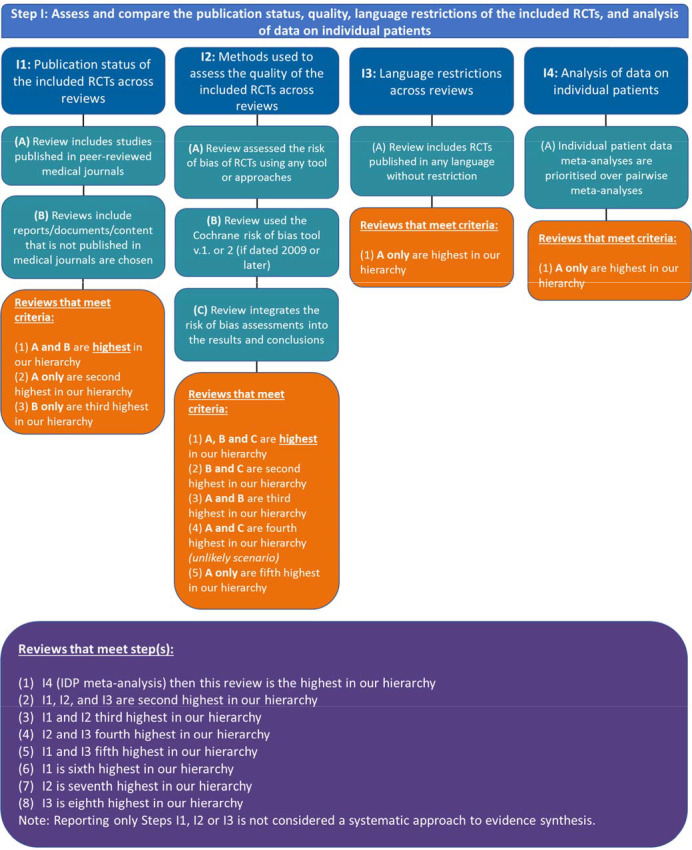

Step I: assess and compare the publication status, quality, language restrictions of the included RCTs, and analysis of data on individual patients

If the SRs do not have the same eligibility criteria, the next step, step I, is to assess and compare the publication status, quality, language restrictions of the included RCTs, and analysis of data on individual patients across the SRs (figure 5). This step maps to ROBIS item 1.5, namely, ‘Were any restrictions in eligibility criteria based on appropriate information sources (eg, publication status or format, language, availability of data)?’40

Figure 5.

Our approach to operationalising step I of the Jadad algorithm. IPD, individual patient data; RCTs, randomised controlled trials.

Step I1: assess and compare the publication status of the included RCTs across reviews

In the absence of clear guidance, we interpret this step as ‘choose the SR that searches for and includes both published and unpublished data (grey literature).’ Published studies are defined as any study or data published in a peer-reviewed medical journal. Unpublished data are defined as any information that is difficult to locate and is obtained from non-peer-reviewed sources such as websites (eg, WHO website, CADTH), clinical trial registries (eg, ClinicalTrials.gov), thesis and dissertation databases, and other unpublished data registries (eg, LILACS). At this step, we will choose SRs that search for (A) studies published in peer-reviewed medical journals and (B) reports, documents or other content not published in medical journals.

Decision rules:

#1. Reviews that meet criteria A and B are highest in our hierarchy.

#2. Reviews that meet criterion A are second highest in our hierarchy.

#3. Reviews that meet criterion B are third highest in our hierarchy.

Note: Reporting only A or B is not considered a systematic search.

Step I2: assess and compare the methods used to assess the quality of the included RCTs across reviews

In this step, the Jadad paper recommends assessing the appropriateness of the methods used to assess the quality of the included RCTs across SRs. This item maps to ROBIS item 3.4, ‘Was the risk of bias/quality of RCTs formally assessed using appropriate criteria?’ We interpret this item as whether the SR authors used the Cochrane risk of bias tool (V.1 or V.2). We consider all other RCT quality assessment tools inappropriate because they are out of date and omit important biases (eg, Agency for Healthcare Research and Quality 201241 omits allocation concealment). However, the Cochrane risk of bias tool was only published in October 2011. We therefore applied a decision rule: for SRs dated 2012 and later (allowing one year for awareness of the tool to reach researchers), the Cochrane risk of bias tool is considered the gold standard. For SRs dated 2011 or earlier, we considered the Jadad et al42 and Schulz et al43 scales to be the most common scales used between 1995 and 2011. Other tools will be considered on a case-by-case basis.

As a decision hierarchy, to meet the minimum criteria for this step, an SR will have (A) assessed the risk of bias of RCTs using any tool or approach and (B) used the Cochrane risk of bias tool V.1 or V.2 (if dated 2012 or later). If several SRs meet these two criteria, the SR that (C) integrates the risk of bias assessments into the results or discussion section (ie, discusses the risk of bias in terms of high and low risk of bias studies, or reports a subgroup or sensitivity analysis) will be chosen.

Decision rules:

#1. Reviews that meet criteria A, B and C are highest in our hierarchy.

#2. Reviews that meet criteria B and C are second highest in our hierarchy.

#3. Reviews that meet criteria A and B are third highest in our hierarchy.

#4. Reviews that meet criteria A and C are fourth highest in our hierarchy (unlikely scenario).

#5. Reviews that meet criterion A only are fifth highest in our hierarchy.

Scenarios for step I2.

Three SRs are identified for our Jadad assessment.

Scenario 1

Review 1: A and B but not C (decision rule #3).

Review 2: A and B but not C (decision rule #3).

Review 3: A and C, but not B (decision rule #4).

Conclusion: No SR meets all of our criteria; which do we choose? Based on our decision rules, we choose both reviews 1 and 2.

Scenario 2

Review 1: A, but neither B nor C (decision rule #5).

Review 2: A and B, but not C (decision rule #3).

Review 3: neither A, B, nor C (does not report a risk of bias assessment).

Conclusion: No SR meets all of our criteria; which do we choose? Based on our decision rules, we choose review 2.
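Criterion B in step I2 depends on the SR’s publication date. The sketch below is a hypothetical illustration: the cutoff year (2012) is taken from the step I2 decision rule, the tool labels are invented strings, and because the scenarios do not say which tools were used, the inputs are made up to reproduce the stated criteria. Accepting the Jadad and Schulz scales as meeting B for earlier SRs is our reading of the rule.

```python
from typing import Dict, FrozenSet, List, Optional

CUTOFF_YEAR = 2012  # assumption: SRs from this year on are expected to use the Cochrane tool
COCHRANE_TOOLS = {"Cochrane RoB 1", "Cochrane RoB 2"}
EARLY_SCALES = {"Jadad", "Schulz"}  # accepted for SRs dated before the cutoff

# Step I2 rules #1 to #5.
I2_RULES: List[FrozenSet[str]] = [frozenset("ABC"), frozenset("BC"), frozenset("AB"),
                                  frozenset("AC"), frozenset("A")]


def criteria_met(year: int, tool: Optional[str], integrated: bool) -> FrozenSet[str]:
    """A = any risk of bias tool used; B = tool appropriate for the SR's date;
    C = risk of bias integrated into the results or discussion."""
    met = set()
    if tool is not None:
        met.add("A")
        if tool in COCHRANE_TOOLS or (year < CUTOFF_YEAR and tool in EARLY_SCALES):
            met.add("B")
    if integrated:
        met.add("C")
    return frozenset(met)


def choose(reviews: Dict[str, FrozenSet[str]]) -> List[str]:
    """Return all SRs tied at the best I2 rank; SRs meeting no rule are not chosen."""
    def rank(met: FrozenSet[str]) -> Optional[int]:
        for position, required in enumerate(I2_RULES, start=1):
            if required <= met:
                return position
        return None

    ranks = {name: rank(met) for name, met in reviews.items()}
    best = min((r for r in ranks.values() if r is not None), default=None)
    return sorted(name for name, r in ranks.items() if best is not None and r == best)


scenario_1 = {
    "Review 1": criteria_met(2015, "Cochrane RoB 1", integrated=False),  # A and B
    "Review 2": criteria_met(2010, "Jadad", integrated=False),           # A and B (pre-cutoff)
    "Review 3": criteria_met(2016, "Jadad", integrated=True),            # A and C
}
scenario_2 = {
    "Review 1": criteria_met(2018, "other tool", integrated=False),      # A only
    "Review 2": criteria_met(2019, "Cochrane RoB 2", integrated=False),  # A and B
    "Review 3": criteria_met(2017, None, integrated=False),              # none
}
print(choose(scenario_1))  # ['Review 1', 'Review 2']
print(choose(scenario_2))  # ['Review 2']
```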

Step I3: assess and compare any language restrictions across reviews

In this step, Jadad indicates that SRs with (A) no language restrictions in eligibility criteria should be prioritised and chosen over those that only include English-language RCTs. This step maps to ROBIS item 1.5, namely, ‘Were any restrictions in eligibility criteria based on sources of information appropriate (eg, publication status or format, language, availability of data)?’

Decision rule:

#1. Reviews that meet criterion A are highest in our hierarchy.

Step I4: Choose the analysis of data on individual patients

If (A) an individual patient data meta-analysis was identified in the discordant review, Jadad et al recommend this SR be chosen over SRs with pairwise meta-analysis.

Decision rule:

#1. Reviews that meet criterion A are highest in our hierarchy.

Decision rules for step I

#1. If there is an IPD meta-analysis (step I4), then this SR is highest in our hierarchy.

#2. Reviews that meet steps I1, I2 and I3 are second highest in our hierarchy.

#3. Reviews that meet steps I1 and I2 are third highest in our hierarchy.

#4. Reviews that meet steps I2 and I3 are fourth highest in our hierarchy.

#5. Reviews that meet steps I1 and I3 are fifth highest in our hierarchy.

#6. Reviews that meet step I1 are sixth highest in our hierarchy.

#7. Reviews that meet step I2 are seventh highest in our hierarchy.

#8. Reviews that meet step I3 are eighth highest in our hierarchy.

Note: Reporting only steps I1, I2 or I3 is not considered a systematic approach to evidence synthesis.
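The overall step I hierarchy can be sketched the same way. In this illustration (our assumption, not part of the published algorithm), each SR is described by the set of steps it meets, with "I4" standing for the presence of an IPD meta-analysis, and the SR takes the number of the first rule in the list of decision rules for step I that it fully satisfies. The review labels in the usage example are hypothetical.

```python
from typing import Dict, List, Optional, Set

# Overall step I decision rules in priority order (rule #1 = highest).
# "I4" marks an individual patient data (IPD) meta-analysis.
STEP_I_RULES: List[Set[str]] = [
    {"I4"},              # rule #1
    {"I1", "I2", "I3"},  # rule #2
    {"I1", "I2"},        # rule #3
    {"I2", "I3"},        # rule #4
    {"I1", "I3"},        # rule #5
    {"I1"},              # rule #6
    {"I2"},              # rule #7
    {"I3"},              # rule #8
]


def step_i_rank(steps_met: Set[str]) -> Optional[int]:
    """Return the first rule number whose steps are all met, or None."""
    for rule_number, required in enumerate(STEP_I_RULES, start=1):
        if required <= steps_met:  # every step named by the rule is met
            return rule_number
    return None


# Hypothetical SRs and the steps each one meets.
reviews: Dict[str, Set[str]] = {"SR A": {"I1", "I3"}, "SR B": {"I2", "I4"}, "SR C": {"I1"}}
ranks = {name: step_i_rank(steps) for name, steps in reviews.items()}
print(ranks)  # {'SR A': 5, 'SR B': 1, 'SR C': 6}
```

The SR with the lowest rule number would be chosen; an SR meeting none of the steps receives no rank.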

Study outcomes

Evaluation of whether the discordant review authors:

Used the Jadad decision tree to assess discordance.

Examined and recorded reasons for discordance (ie, authors did not use Jadad).

Used other approaches to deal with discordance (specify).

Presented discordance in tables and figures.

Results from our discordance assessment and discordant review authors’ assessments

Utility: Is the Jadad decision tree easy to use? (see section 2.9.3)

Efficiency: How much time does it take to do one Jadad assessment?

Comprehensiveness: Is the Jadad algorithm comprehensive? Is it missing methods that might explain discordance (eg, publication recency)?

Jadad cohort: Frequency of disagreement or agreement across Jadad assessments between (A) discordant review authors’ assessment and (B) our assessment (ie, choosing the same SR).

Non-Jadad cohort: Frequency of agreement or disagreement between (A) discordant review authors’ assessment and (B) our assessment (ie, choosing the same SR).

Comparison of discordant review authors’ stated sources of discordance with our identified sources of discordance.

‘Ease of use’ outcome measure

Each assessor will rate the ease of use of every Jadad assessment, colour-coding each step green, yellow or red according to how easy or difficult it was to complete. Ratings follow this rubric:

Green: the step can be accomplished easily by the reviewer, owing to low cognitive load or because it uses a recognised method or approach.

Yellow: the step requires a notable degree of cognitive load from the reviewer but can generally be accomplished with some effort.

Red: the step is difficult for the reviewer, owing to significant cognitive load or confusion; some reviewers would likely fail or abandon the task at this point.

The lower the score, the easier the step is to complete.
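One way to tabulate the ease-of-use ratings is sketched below. It assumes numeric scores of 1 (green), 2 (yellow) and 3 (red), consistent with lower scores indicating easier steps, and summarises each step across assessors by its median score, the number of red ratings and the hardest rating given. The class, function and ratings are hypothetical illustrations, not the protocol's specified analysis.

```python
from enum import IntEnum
from statistics import median
from typing import Dict, List, Union


class EaseOfUse(IntEnum):
    """Rubric colours; the scores 1-3 are assumed (lower = easier)."""
    GREEN = 1   # easy: low cognitive load or a recognised method
    YELLOW = 2  # notable cognitive load, but achievable with some effort
    RED = 3     # difficult: reviewers may fail or abandon the task


def summarise_step(ratings: List[EaseOfUse]) -> Dict[str, Union[float, int, str]]:
    """Summarise one Jadad step across assessors; ratings must be non-empty."""
    return {
        "median_score": median([int(r) for r in ratings]),
        "n_red": sum(1 for r in ratings if r is EaseOfUse.RED),
        "hardest": max(ratings).name,  # highest score = most difficult rating
    }


# Hypothetical ratings from three assessors for step I2 and two for step I4.
ratings_by_step = {
    "I2": [EaseOfUse.GREEN, EaseOfUse.YELLOW, EaseOfUse.RED],
    "I4": [EaseOfUse.GREEN, EaseOfUse.GREEN],
}
for step, ratings in ratings_by_step.items():
    print(step, summarise_step(ratings))
```

Using an `IntEnum` keeps the colour labels readable while still allowing numeric summaries such as medians.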

Data extraction

Discordant review level extraction

The outcomes from section 3.8 will be extracted, along with the following information from the discordant review:

Study characteristics (lead author’s name, publication year).

Clinical, public health or policy question (objectives, health condition of treatment, PICO eligibility criteria: participant, intervention/comparison and primary outcome).

Methods (how the discordant review authors assessed discordance among the SRs (Jadad or other approach), how they operationalised Jadad, the steps at which they identified discordance, number of included SRs, type of SR (eg, individual patient data meta-analysis, SR with narrative summary, SR with meta-analysis of RCTs), type of analysis (narrative summary or meta-analysis), risk of bias/quality assessment tool (eg, AMSTAR, AMSTAR 2 or ROBIS), risk of bias judgements, and whether the risk of bias/quality assessment was integrated into the synthesis).

Results (results of discordance assessment, effect size and CIs, number of total participants in treatment and control groups, number of total events in treatment and control groups), direction of study results (favourable or not favourable).

Conclusions (discordance between results and conclusions, defined as disagreement in direction (results, not favourable; conclusions, favourable); authors’ interpretation of results (quotes from the abstract and discussion section about the primary outcome result and conclusion)).

SR level extraction

The outcomes from section 3.8 will be extracted, along with the following information from the included SRs:

Study characteristics (lead author’s name, publication year).

Clinical, public health or policy question (objectives, health condition of treatment, PICO eligibility criteria: participant, intervention/comparison and primary outcome; language restrictions and restrictions on publication status in eligibility criteria; citation of previous SRs/meta-analyses in background or discussion).

Search methodology (the name and number of databases searched, grey literature search details, the search period, language restrictions, restrictions on publication status, and whether the full search strategy was included in an appendix).

Methods (number and first author/year of included RCTs; effect metric (OR, RR, MD) and CIs; whether SR authors assessed clinical (PICO) heterogeneity across RCTs (in the methods or results sections); analysis method (an appropriate weighting technique to combine study results (ie, a fixed-effect or random-effects model), investigation of statistical heterogeneity (ie, by reporting I², τ² or χ²) and, if heterogeneity was present, whether the authors investigated its causes (ie, by reporting subgroup, sensitivity or meta-regression analyses)); risk of bias/quality assessment (eg, Cochrane risk of bias tool V.1 or V.2), risk of bias/quality judgement for each RCT, and whether the RCT quality/risk of bias assessment was integrated into the synthesis; and whether two reviewers independently screened studies, extracted data and assessed risk of bias, with a process for resolving discrepancies).

Results (effect size and CI, number of total participants in treatment and control groups, number of total events in treatment and control groups), direction of study results (favourable or not favourable).

Conclusions (discordance between results and conclusions, defined as disagreement in direction (results, not favourable; conclusions, favourable); authors’ interpretation of results (quotes from the abstract and discussion section about the primary outcome result and conclusion)).

Two authors will independently extract data from the full texts and, in the case of discrepant decisions, will discuss them until consensus is reached.
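Several of the SR-level items above concern statistical heterogeneity (I², τ² and the χ² test, ie, Cochran's Q). As a reference for extractors, the sketch below computes these quantities from per-RCT effect estimates (eg, log odds ratios) and their variances using the standard inverse-variance fixed-effect model, Cochran's Q, I² = (Q − df)/Q truncated at zero, and the DerSimonian–Laird estimator of τ². The protocol does not state that SR results will be recomputed; this is an illustration only, and the function name and inputs are hypothetical.

```python
import math
from typing import Dict, Sequence


def heterogeneity(effects: Sequence[float], variances: Sequence[float]) -> Dict[str, float]:
    """Fixed- and random-effects pooling with Cochran's Q, I^2 and DerSimonian-Laird tau^2."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies and one variance per study")
    w = [1.0 / v for v in variances]                      # inverse-variance weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q (chi^2 statistic)
    df = len(effects) - 1
    i2 = 100.0 * max(0.0, (q - df) / q) if q > 0 else 0.0  # % of variability beyond chance
    c = sum_w - sum(wi * wi for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)                         # DerSimonian-Laird between-study variance
    w_re = [1.0 / (v + tau2) for v in variances]          # random-effects weights
    random_effect = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return {
        "fixed": fixed,
        "random": random_effect,
        "se_random": math.sqrt(1.0 / sum(w_re)),
        "Q": q,
        "df": df,
        "I2": i2,
        "tau2": tau2,
    }


# Hypothetical log odds ratios and variances from three RCTs.
print(heterogeneity([0.1, 0.5, 0.9], [0.04, 0.04, 0.04]))
```

For these three hypothetical studies, Q = 8 on 2 df, so I² = 75% and τ² = 0.12; an SR reporting an I² inconsistent with its own Q and df would be worth flagging during extraction.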

Data analysis

We will assess and compare our outcomes (A) narratively for qualitative data, (B) using frequencies and percentages for categorical data and (C) using medians and IQRs for continuous data. Results will be organised by study outcome and presented in tables and figures. We will discuss differences in the assessment of discordance between discordant reviews that used Jadad and those that did not.
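The planned descriptive analysis can be sketched as follows. Agreement is counted per cohort when the discordant review authors and our replication chose the same SR(s); sets are compared so that ties, such as choosing both reviews in scenario 1, are handled. Continuous outcomes such as time per Jadad assessment are summarised by the median and IQR, here using the inclusive quartile method of Python's `statistics.quantiles` (our choice; other quartile definitions give slightly different values). All field names, cohort labels and numbers are hypothetical.

```python
from collections import defaultdict
from statistics import median, quantiles
from typing import Dict, FrozenSet, List, NamedTuple, Tuple


class Assessment(NamedTuple):
    """One discordant review in our sample (field names are hypothetical)."""
    cohort: str                     # "Jadad" or "non-Jadad"
    authors_choice: FrozenSet[str]  # SR(s) chosen by the discordant review authors
    our_choice: FrozenSet[str]      # SR(s) chosen in our replication


def agreement_by_cohort(rows: List[Assessment]) -> Dict[str, Tuple[int, int, float]]:
    """Return (n agreeing, n total, % agreeing) per cohort; agreement = same set of SRs chosen."""
    counts: Dict[str, List[int]] = defaultdict(lambda: [0, 0])
    for row in rows:
        counts[row.cohort][1] += 1
        if row.authors_choice == row.our_choice:
            counts[row.cohort][0] += 1
    return {cohort: (agree, total, 100.0 * agree / total) for cohort, (agree, total) in counts.items()}


def median_iqr(values: List[float]) -> Tuple[float, float, float]:
    """Return (median, Q1, Q3) using inclusive quartiles; needs at least two values."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return median(values), q1, q3


rows = [
    Assessment("Jadad", frozenset({"SR1"}), frozenset({"SR1"})),
    Assessment("Jadad", frozenset({"SR2"}), frozenset({"SR1", "SR2"})),
    Assessment("non-Jadad", frozenset({"SR3"}), frozenset({"SR3"})),
]
print(agreement_by_cohort(rows))          # {'Jadad': (1, 2, 50.0), 'non-Jadad': (1, 1, 100.0)}
print(median_iqr([10, 20, 30, 40, 50]))  # (30, 20.0, 40.0)
```

Treating a choice of several SRs as a set means partial overlap (as in the second hypothetical row) counts as disagreement; a more lenient rule could be substituted if the team prefers.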

Patient and public involvement

Patients and the public were not involved in the design of our research protocol.

Ethics, dissemination, strengths and limitations

Ethics

No ethics approval was required as no human subjects were involved.

Dissemination

We will disseminate evidence summaries through academic and social media platforms and websites (eg, Twitter, ResearchGate). We will publish in an open-access journal and present at various formal and informal venues, such as the Therapeutics Initiative Methods Speaker Series, and at academic conferences, such as the Guidelines International Network conference, the Cochrane Colloquium and Public Health.

Strengths and limitations

We aim to use a systematic approach to evaluate authors’ assessments of discordance across SRs in discordant reviews and to replicate Jadad algorithm assessments from a sample of discordant reviews. We suspect that one reason for the inconsistent use of the Jadad algorithm in the existing literature may be the limited guidance on implementing the algorithm in the original Jadad manuscript. We believe the greatest strength of the proposed study is that we used an iterative process among authors to develop decision rules for interpreting and applying each step of the Jadad algorithm. Once this study is completed and disseminated, other discordant review authors will have more detailed guidance on how to apply the Jadad algorithm when addressing discordance. Furthermore, our study adopted a systematic and transparent approach to address the objectives outlined in our protocol, using SR guidance.26 A comprehensive search strategy, including a search of the grey literature, was employed with no restrictions on language or publication status to identify relevant studies and minimise publication bias. To minimise error, screening, extraction and assessments will be completed by two independent reviewers and subsequently compared. Any discrepancies will be resolved by consensus and, if necessary, with the involvement of a third reviewer. To mitigate observer bias, reviewers were blinded to the discordant reviews’ Jadad assessments.

Despite these strengths, this study has limitations. In our search update, we searched only MEDLINE (Ovid), which may have limited the number of potentially relevant studies found. However, since the aim of our methods study is to replicate Jadad algorithm assessments, we do not think that updating our search would make a difference to the robustness of the results. Our search strategy could have been more complete had we added terms such as ‘inconsistencies’ or ‘concerns’ and their truncated variations. Moreover, the searches were neither designed with guidance from a trained medical/health librarian nor peer reviewed. However, we did not aim to retrieve all discordant reviews in the literature, as we could feasibly replicate only 30 such studies.

In addition, by focusing only on discordant reviews of health interventions, we may have overlooked relevant discordant reviews in other fields that assessed discordance and/or used the Jadad algorithm. Judgements made with assessment tools (ie, risk of bias tools or the Jadad decision tree) involve some subjectivity, which may introduce variability into the results. To address this limitation, reviewers will complete pilot screening and pilot assessments, which will be compared to ensure a consistent understanding of the screening criteria, the definitions of extracted items and the steps in the Jadad assessment.

Some steps in the Jadad algorithm are vaguely described (eg, step I2), making them difficult to interpret. For example, step I2 evaluates the methods used to assess the quality of the included RCTs across SRs. Some authors could interpret this as assessing whether SR authors used the Cochrane risk of bias tool to assess the quality of RCTs. However, the Cochrane risk of bias tool was only published in 2008. To minimise incorrect interpretation of this step, we solicited feedback on its interpretation from all authors at the protocol stage, prior to piloting. After discussion, we applied a decision rule that, for SRs dated 2009 and earlier, the Jadad et al42 and Schulz et al43 scales, the most common scales used between 1995 and 2009, would be accepted, with other tools considered on a case-by-case basis. We followed the same process for all steps. Additionally, during piloting, we will amend our interpretation of, and instructions for, operationalising the Jadad algorithm to ensure consistent application.

Supplementary Material

Footnotes

Twitter: @carole_lunny, @PierreThabet, @serawhitelaw

Contributors: CL conceived the study; CL, NF, ACT, SW, KK, AS, PS, DWWZ, LP, RA, JT, YC, BK, JHJZ, ER, HN, ST, DP, PT, SK and SST contributed to the design of the study; CL drafted the manuscript; CL, NF, ACT, SW, KK, AS, PS, DWWZ, LP, RA, JT, YC, BK, JHJZ, ER, HN, ST, DP, PT, SK and SST edited the manuscript and read and approved the final manuscript.

Funding: ACT currently holds a tier 2 Canada Research Chair in Knowledge Synthesis and an Ontario Ministry of Research and Innovation Early Researcher Award. CL is funded by a CIHR project grant for her postdoctoral research.

Competing interests: None declared.

Patient and public involvement: Patients and/or the public were not involved in the design, or conduct, or reporting, or dissemination plans of this research.

Provenance and peer review: Not commissioned; externally peer reviewed.

Ethics statements

Patient consent for publication

Not applicable.

References

- 1. Bastian H, Glasziou P, Chalmers I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med 2010;7:e1000326. 10.1371/journal.pmed.1000326

- 2. Taito S, Kataoka Y, Ariie T, et al. Assessment of the publication trends of COVID-19 systematic reviews and randomized controlled trials. Annals of Clinical Epidemiology 2021;3:56–8. 10.37737/ace.3.2_56

- 3. Niforatos JD, Weaver M, Johansen ME. Assessment of publication trends of systematic reviews and randomized clinical trials, 1995 to 2017. JAMA Intern Med 2019;179:1593–4. 10.1001/jamainternmed.2019.3013

- 4. Becker LA, Oxman AD. Chapter 22: Overviews of reviews. In: Higgins JPT, Green S, eds. Cochrane Handbook for Systematic Reviews of Interventions. Hoboken, NJ: John Wiley & Sons, 2008: 607–31.

- 5. Pieper D, Antoine S-L, Mathes T, et al. Systematic review finds overlapping reviews were not mentioned in every other overview. J Clin Epidemiol 2014;67:368–75. 10.1016/j.jclinepi.2013.11.007

- 6. Pieper D, Mathes T, Eikermann M. Impact of choice of quality appraisal tool for systematic reviews in overviews. J Evid Based Med 2014;7:72–8. 10.1111/jebm.12097

- 7. Smith V, Devane D, Begley CM, et al. Methodology in conducting a systematic review of systematic reviews of healthcare interventions. BMC Med Res Methodol 2011;11:15. 10.1186/1471-2288-11-15

- 8. Caird J, Sutcliffe K, Kwan I, et al. Mediating policy-relevant evidence at speed: are systematic reviews of systematic reviews a useful approach? Evid Policy 2015;11:81–97. 10.1332/174426514X13988609036850

- 9. Hunt H, Pollock A, Campbell P, et al. An introduction to overviews of reviews: planning a relevant research question and objective for an overview. Syst Rev 2018;7:39. 10.1186/s13643-018-0695-8

- 10. Ioannidis JPA. The mass production of redundant, misleading, and conflicted systematic reviews and meta-analyses. Milbank Q 2016;94:485–514. 10.1111/1468-0009.12210

- 11. Lunny C, Brennan SE, McDonald S, et al. Toward a comprehensive evidence map of overview of systematic review methods: paper 1-purpose, eligibility, search and data extraction. Syst Rev 2017;6:231. 10.1186/s13643-017-0617-1

- 12. Lunny C, Brennan SE, McDonald S, et al. Toward a comprehensive evidence map of overview of systematic review methods: paper 2-risk of bias assessment; synthesis, presentation and summary of the findings; and assessment of the certainty of the evidence. Syst Rev 2018;7:159. 10.1186/s13643-018-0784-8

- 13. Bolland MJ, Grey A. A case study of discordant overlapping meta-analyses: vitamin D supplements and fracture. PLoS One 2014;9:e115934. 10.1371/journal.pone.0115934

- 14. Campbell J, Bellamy N, Gee T. Differences between systematic reviews/meta-analyses of hyaluronic acid/hyaluronan/hylan in osteoarthritis of the knee. Osteoarthritis Cartilage 2007;15:1424–36. 10.1016/j.joca.2007.01.022

- 15. Chalmers PN, Mascarenhas R, Leroux T, et al. Do arthroscopic and open stabilization techniques restore equivalent stability to the shoulder in the setting of anterior glenohumeral instability? A systematic review of overlapping meta-analyses. Arthroscopy 2015;31:355–63. 10.1016/j.arthro.2014.07.008

- 16. Riva N, Puljak L, Moja L, et al. Multiple overlapping systematic reviews facilitate the origin of disputes: the case of thrombolytic therapy for pulmonary embolism. J Clin Epidemiol 2018;97:1–13. 10.1016/j.jclinepi.2017.11.012

- 17. Vavken P, Dorotka R. A systematic review of conflicting meta-analyses in orthopaedic surgery. Clin Orthop Relat Res 2009;467:2723–35. 10.1007/s11999-009-0765-2

- 18. Peters WD, Panchbhavi VK. Primary arthrodesis versus open reduction and internal fixation outcomes for Lisfranc injuries: an analysis of conflicting meta-analysis results. Foot & Ankle Orthopaedics 2020;5:2473011420S00383. 10.1177/2473011420S00383

- 19. Susantitaphong P, Jaber BL. Understanding discordant meta-analyses of convective dialytic therapies for chronic kidney failure. Am J Kidney Dis 2014;63:888–91. 10.1053/j.ajkd.2014.03.005

- 20. Teehan GS, Liangos O, Lau J. Dialysis membrane and modality in acute renal failure: understanding discordant meta-analyses. In: Seminars in Dialysis. Wiley Online Library, 2003: 356–60.

- 21. Druyts E, Thorlund K, Humphreys S, et al. Interpreting discordant indirect and multiple treatment comparison meta-analyses: an evaluation of direct acting antivirals for chronic hepatitis C infection. Clin Epidemiol 2013;5:173–83. 10.2147/CLEP.S44273

- 22. Jadad AR, Cook DJ, Browman GP. A guide to interpreting discordant systematic reviews. CMAJ 1997;156:1411–6.

- 23. Li Q, Wang C, Huo Y, et al. Minimally invasive versus open surgery for acute Achilles tendon rupture: a systematic review of overlapping meta-analyses. J Orthop Surg Res 2016;11:65. 10.1186/s13018-016-0401-2 [Retracted]

- 24. Mascarenhas R, Chalmers PN, Sayegh ET, et al. Is double-row rotator cuff repair clinically superior to single-row rotator cuff repair: a systematic review of overlapping meta-analyses. Arthroscopy 2014;30:1156–65. 10.1016/j.arthro.2014.03.015

- 25. Zhao J-G, Wang J, Long L. Surgical versus conservative treatments for displaced midshaft clavicular fractures: a systematic review of overlapping meta-analyses. Medicine 2015;94:e1057. 10.1097/MD.0000000000001057

- 26. Higgins JP. Cochrane Handbook for Systematic Reviews of Interventions version 5.0.1. The Cochrane Collaboration, 2008. Available: http://www.cochrane-handbook.org

- 27. Lunny C, Neelakant T, Chen A, et al. Bibliometric study of 'overviews of systematic reviews' of health interventions: evaluation of prevalence, citation and journal impact factor. Res Synth Methods 2022;13:109–20. 10.1002/jrsm.1530

- 28. Lunny C, McKenzie JE, McDonald S. Retrieval of overviews of systematic reviews in MEDLINE was improved by the development of an objectively derived and validated search strategy. J Clin Epidemiol 2016;74:107–18. 10.1016/j.jclinepi.2015.12.002

- 29. Goossen K, Hess S, Lunny C, et al. Database combinations to retrieve systematic reviews in overviews of reviews: a methodological study. BMC Med Res Methodol 2020;20:138. 10.1186/s12874-020-00983-3

- 30. Fabiano GA, Schatz NK, Aloe AM, et al. A systematic review of meta-analyses of psychosocial treatment for attention-deficit/hyperactivity disorder. Clin Child Fam Psychol Rev 2015;18:77–97. 10.1007/s10567-015-0178-6

- 31. Finnane A, Janda M, Hayes SC. Review of the evidence of lymphedema treatment effect. Am J Phys Med Rehabil 2015;94:483–98. 10.1097/PHM.0000000000000246

- 32. Lau R, Stevenson F, Ong BN, et al. Achieving change in primary care--effectiveness of strategies for improving implementation of complex interventions: systematic review of reviews. BMJ Open 2015;5:e009993. 10.1136/bmjopen-2015-009993

- 33. López NJ, Uribe S, Martinez B. Effect of periodontal treatment on preterm birth rate: a systematic review of meta-analyses. Periodontol 2000 2015;67:87–130. 10.1111/prd.12073

- 34. Moher D, Dulberg CS, Wells GA. Statistical power, sample size, and their reporting in randomized controlled trials. JAMA 1994;272:122–4. 10.1001/jama.1994.03520020048013

- 35. Tricco AC, Tetzlaff J, Pham B, et al. Non-Cochrane vs. Cochrane reviews were twice as likely to have positive conclusion statements: cross-sectional study. J Clin Epidemiol 2009;62:380–6. 10.1016/j.jclinepi.2008.08.008

- 36. Hróbjartsson A, Thomsen ASS, Emanuelsson F, et al. Observer bias in randomized clinical trials with measurement scale outcomes: a systematic review of trials with both blinded and nonblinded assessors. CMAJ 2013;185:E201–11. 10.1503/cmaj.120744

- 37. Morissette K, Tricco AC, Horsley T, et al. Blinded versus unblinded assessments of risk of bias in studies included in a systematic review. Cochrane Database Syst Rev 2011;9:MR000025. 10.1002/14651858.MR000025.pub2

- 38. Shea BJ, Grimshaw JM, Wells GA, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol 2007;7:1–7. 10.1186/1471-2288-7-10

- 39. Shea BJ, Reeves BC, Wells G, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ 2017;358:j4008. 10.1136/bmj.j4008

- 40. Whiting PSJ, Churchill R. Introduction to ROBIS, a new tool to assess the risk of bias in a systematic review. In: 23rd Cochrane Colloquium: 2015. Vienna, Austria: John Wiley & Sons, 2015.

- 41. Viswanathan M, Patnode CD, Berkman ND. Assessing the risk of bias in systematic reviews of health care interventions. Methods guide for effectiveness and comparative effectiveness reviews 2017.

- 42. Jadad AR, Moher D, Klassen TP. Guides for reading and interpreting systematic reviews: II. How did the authors find the studies and assess their quality? Arch Pediatr Adolesc Med 1998;152:812–7. 10.1001/archpedi.152.8.812

- 43. Schulz KF, Chalmers I, Hayes RJ, et al. Empirical evidence of bias. Dimensions of methodological quality associated with estimates of treatment effects in controlled trials. JAMA 1995;273:408–12. 10.1001/jama.273.5.408
