Abstract

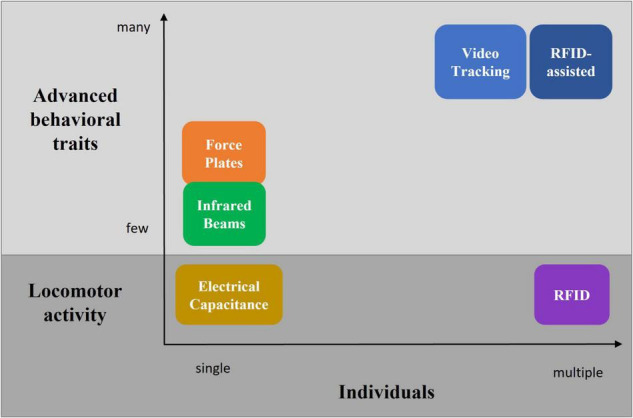

Automation and technological advances have led to a growing number of methods and systems to monitor and measure locomotor activity and more specific behaviors of a wide variety of animal species under various environmental conditions in laboratory settings. In rodents, most of these systems require the animals to be temporarily removed from their home-cage into a separate observation environment. This requires manual handling, which causes distress to the animal and may alter behavioral responses. An automated high-throughput approach can overcome this problem. This review therefore describes existing automated methods and technologies that enable the measurement of locomotor activity and behavioral aspects of rodents in their most meaningful and stress-free laboratory environment: the home-cage. In line with Directive 2010/63/EU and the 3R principles (replacement, reduction, refinement), this review furthermore assesses their suitability and potential for group-housed conditions as a refinement strategy, highlighting their current technological and practical limitations. It covers electrical capacitance technology and radio-frequency identification (RFID), which focus mainly on voluntary locomotor activity in single and multiple rodents, respectively. Infrared beams and force plates extend detection beyond locomotor activity toward basic behavioral traits, but reach their full potential only in individually housed rodents. Despite the great promise of these approaches for behavioral pattern recognition, more sophisticated methods, such as (RFID-assisted) video tracking technology, need to be applied to enable the automated analysis of advanced behavioral aspects of individual animals in social housing conditions.

Keywords: locomotor activity, behavior, home-cage, rodents, 3Rs, phenotyping, animal tracking

Introduction

Historically, animal behavior was monitored, assessed, and quantified manually in real time by an experienced human observer (Altmann, 1974). This process is very time- and labor-intensive, prevents large-scale and high-throughput studies, is mostly restricted to daytime scoring, and is subjective and thus prone to observer bias (Levitis et al., 2009). This drove the development of alternative, automated methods to make (behavioral) phenotyping more rapid, objective, and consistent within and across laboratories, with the aim of increasing the reproducibility and replicability of research outcomes (Kafkafi et al., 2018). Automation will also help to standardize experiments, which are affected by heterogeneity between laboratories (Crabbe et al., 1999; Crawley, 1999; Muller et al., 2016) and personnel (Kennard et al., 2021). Automation is especially relevant in experiments with socially housed animals, which display complex and rich behavioral profiles that are challenging for the human eye to follow. One well-established way to increase standardization and reduce animal handling (and the bias it introduces) is to study animals in their “living room”: the home-cage. So far, the introduction of automation and of computational ethology has led to an enormous number of different methods to study behavioral and physiological traits in various animals and experimental set-ups (Anderson and Perona, 2014; Dell et al., 2014; Voikar and Gaburro, 2020). This review focuses on rodents and aims to give an overview of current technologies and methods that enable researchers to automatically study rodents’ locomotor activity and behavioral traits, highlighting their individual strengths and limitations. It covers electrical capacitance, radio-frequency identification (RFID), infrared (IR) beams, force plates, and (RFID-assisted) video tracking technology.
Since Directive 2010/63/EU recommends housing social animals in social conditions during experimentation for animal welfare reasons, this review furthermore evaluates the suitability and limitations of the described technologies for studying socially housed rodents, either in their home-cage or in a social arena. For the purpose of this review, any cage environment in which multiple (at least two) rodents can be housed under minimally stressful conditions for a long duration (several weeks to months) with appropriate bedding and nesting material as well as access to food and water is considered a home-cage or social arena. Finally, this review provides insights into current developments in multiple-animal tracking as well as possible future directions in the field.

Measuring Voluntary Locomotor Activity

Electrical Capacitance

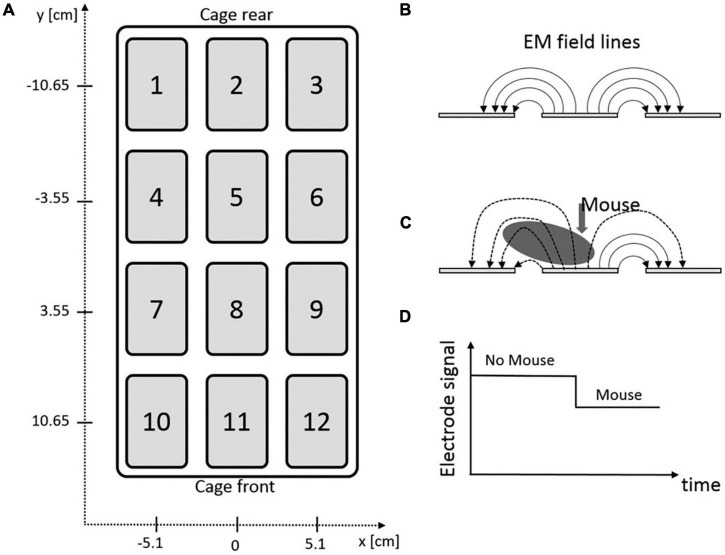

An animal’s activity can be measured using electrical capacitance technology. This technology comprises several electrodes embedded in an electronic sensing board (Figure 1), which is installed underneath the home-cage. The animal’s presence changes the electromagnetic field emitted by these electrodes, so its exact position (with a spatial resolution of 1 mm) and trajectory can be identified from the capacitance variation [with a temporal resolution of 4 hertz (Hz)]. The sensing board sends its raw data to associated software and computer infrastructure, which additionally enables the researcher to analyze distance traveled, average speed, position distribution, and activity density of the animal. When benchmarked against video-recording technology, these activity metrics show comparable results (Iannello, 2019). The board was developed as part of the Digital Ventilated Cage (DVC) monitoring system (Tecniplast, Buguggiate, Italy), allowing fully automated, 24/7, non-invasive, real-time activity monitoring and traceability of individually housed mice. It requires only modest computational power and produces a small data footprint per unit. It is highly scalable, allowing arbitrary numbers of home-cages to be monitored simultaneously. DVC-derived datasets can subsequently be used for a deeper analysis of several activity metrics in individually housed mice (Shenk et al., 2020). However, the system does not support the analysis of ethologically relevant behavioral patterns (grooming, rearing, climbing, etc.), which makes it less suitable for phenotyping and behavioral studies. It is also currently designed for mice only. Although multiple animals can be housed in one home-cage to monitor group activity (Pernold et al., 2019), the full potential of the technology relies on individually housed conditions. Consequently, the system is currently unable to study social interaction and behavior.
Since it was originally developed as a component of the DVC system, it cannot be integrated into automated monitoring systems of other vendors. In conclusion, the sensor plate is a useful module within the DVC system that aims to improve animal health monitoring and facility management. It allows monitoring of overall activity, but its limited behavioral pattern recognition makes it less suitable for more sophisticated phenotyping and behavioral studies, especially in group-housed settings.
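
To illustrate how metrics such as distance traveled, average speed, and position distribution can be derived from the position estimates described above, the following minimal Python sketch assumes a trace of (x, y) positions in millimetres sampled at 4 Hz. The jitter threshold, cage dimensions, grid size, and function names are hypothetical and do not reflect Tecniplast's actual software.

```python
import math
from typing import Dict, List, Sequence, Tuple

Position = Tuple[float, float]  # (x, y) in millimetres

SAMPLE_RATE_HZ = 4.0  # temporal resolution reported for the DVC sensor board
MIN_STEP_MM = 1.0     # hypothetical jitter threshold, equal to the 1 mm spatial resolution


def activity_metrics(trace: Sequence[Position],
                     sample_rate_hz: float = SAMPLE_RATE_HZ,
                     min_step_mm: float = MIN_STEP_MM) -> Dict[str, float]:
    """Distance traveled, recording duration and average speed of one animal."""
    distance = 0.0
    for (x0, y0), (x1, y1) in zip(trace, trace[1:]):
        step = math.hypot(x1 - x0, y1 - y0)
        if step >= min_step_mm:  # ignore steps below the spatial resolution
            distance += step
    duration_s = max(len(trace) - 1, 0) / sample_rate_hz
    speed = distance / duration_s if duration_s > 0 else 0.0
    return {"distance_mm": distance, "duration_s": duration_s, "avg_speed_mm_s": speed}


def occupancy(trace: Sequence[Position], cage_w_mm: float, cage_d_mm: float,
              cells: int = 4) -> List[List[int]]:
    """Position distribution: number of samples falling into each grid cell."""
    grid = [[0] * cells for _ in range(cells)]
    for x, y in trace:
        row = min(int(y / cage_d_mm * cells), cells - 1)
        col = min(int(x / cage_w_mm * cells), cells - 1)
        grid[row][col] += 1
    return grid


if __name__ == "__main__":
    trace = [(0.0, 0.0), (3.0, 4.0), (3.0, 4.5), (3.0, 8.5)]
    print(activity_metrics(trace))
    print(occupancy([(10.0, 10.0), (390.0, 190.0)], cage_w_mm=400.0, cage_d_mm=200.0, cells=2))
```

The per-step threshold is deliberately simple: it makes the role of spatial resolution explicit, but it would undercount very slow, sub-millimetre drifts, which a production system would handle differently.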

FIGURE 1.

DVC sensor plate of Tecniplast. (A) Graphical illustration of electrical board containing 12 electrodes. (B) Side-view of three adjacent electrodes and the corresponding electromagnetic (EM) field lines. (C) Effect of animal’s presence on the EM field lines. (D) Impact of animal’s presence on electrode signal output (Iannello, 2019).

Radio-Frequency Identification

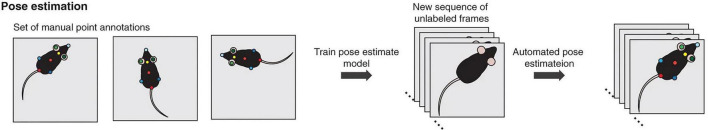

Locomotor activity can also be measured using radio-frequency identification (RFID) technology. RFID uses radio waves to wirelessly identify and track specific tags, which can be attached to or inserted into objects and animals. The technology consists of four elements: tags, readers, antennas, and a computer network for data handling. In contrast to active tags, passive RFID tags do not require an internal power supply (battery), since they are powered by the radio waves emitted by the antennas. This reduces the overall size of the tag, which makes passive tags more suitable for implantation in small laboratory animals. The RFID tag is activated once it is within range of an RFID antenna and then sends its unique ID code to the RFID reader. Depending on the type of tag, additional information (strain, age, etc.) can be stored and conveyed. Some tags also allow physiological characteristics (e.g., peripheral body temperature) to be measured (Unified Information Devices Inc., Lake Villa, United States) (Winn et al., 2021). Once tags are implanted, individual animals can be identified and tracked within the home-cage or any other experimental unit. The benefits of RFID technology inspired researchers to develop the IntelliCage (TSE Systems, Berlin, Germany), which enables the study of complex behaviors in socially interacting mice and rats living in a stress-free cage environment without human interference (Lipp et al., 2005; Kiryk et al., 2020). The IntelliCage is equipped with four operant conditioning corners and allows several longitudinal (social) behavioral and cognitive test batteries to be run in a meaningful, social living environment. Furthermore, it can serve as a core component of a new automated multi-dimensional phenotyping paradigm: the PhenoWorld (TSE Systems).
The PhenoWorld supports behavioral, cognitive, metabolic, and physiological measurements in an ethologically meaningful multi-component living environment that stimulates rodents to display their species-specific natural social behavior (Castelhano-Carlos et al., 2014, 2017). Others have applied a similar approach to study groups of rodents in a semi-naturalistic environment by installing RFID antennas at strategically relevant locations within multiple living quarters (Lewejohann et al., 2009; Howerton et al., 2012; Puścian et al., 2016; Linnenbrink and von Merten, 2017; Habedank et al., 2021). However, since the RFID antennas in those (multi-)cage environments are usually placed at strategically interesting locations rather than distributed evenly, only gross trajectories and gross activity of individual animals can be measured. To circumvent this and obtain a more accurate picture of the activity and trajectories of individual rodents, a non-commercial passive ultra-high-frequency (UHF) RFID system suitable for standard home-cage applications in rodents was developed (Catarinucci et al., 2014). In vivo validation against IR beams (see “Infrared Beams”) and a well-established video-tracking system showed a strong correlation for positional data and total activity (Catarinucci et al., 2014; Macrì et al., 2015). This design has been adopted by industry, resulting in commercially available systems consisting of an RFID antenna matrix underneath the home-cage, in which each antenna emits a confined electromagnetic field (Figure 2). In general, RFID technology enables long-term, 24/7, real-time identification, tracking, and general activity measurement of a large number of animals of various species within a given experimental area (Dudek et al., 2015; Frahm et al., 2018).
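
An antenna matrix reports which antenna read which tag and when, and a position estimate then follows from the location of that antenna. The minimal Python sketch below assumes a square-pitch grid of antennas indexed row by row and a simple (time, tag, antenna) read format; both are hypothetical and are not the data format of any specific vendor.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

Position = Tuple[float, float]


@dataclass(frozen=True)
class Read:
    t: float      # timestamp of the read in seconds
    tag: str      # unique ID transmitted by the implanted passive tag
    antenna: int  # index of the antenna in the matrix that registered the tag


def antenna_centre(index: int, cols: int, pitch_mm: float) -> Position:
    """Centre of antenna `index` in a row-major grid with `cols` columns."""
    row, col = divmod(index, cols)
    return ((col + 0.5) * pitch_mm, (row + 0.5) * pitch_mm)


def trajectories(reads: List[Read], cols: int,
                 pitch_mm: float) -> Dict[str, List[Tuple[float, Position]]]:
    """Per-tag list of (time, antenna centre), keeping only changes of antenna."""
    tracks: Dict[str, List[Tuple[float, Position]]] = defaultdict(list)
    for read in sorted(reads, key=lambda rd: rd.t):
        pos = antenna_centre(read.antenna, cols, pitch_mm)
        track = tracks[read.tag]
        # Repeated reads at the same antenna add no new positional information.
        if not track or track[-1][1] != pos:
            track.append((read.t, pos))
    return dict(tracks)


def distance_mm(track: List[Tuple[float, Position]]) -> float:
    """Centre-to-centre path length; movement finer than the pitch is invisible."""
    return sum(math.dist(a[1], b[1]) for a, b in zip(track, track[1:]))


if __name__ == "__main__":
    reads = [Read(0.0, "A", 0), Read(1.0, "A", 0), Read(2.0, "A", 1), Read(3.0, "A", 5)]
    track = trajectories(reads, cols=4, pitch_mm=50.0)["A"]
    print(track, distance_mm(track))
```

Because every position is quantised to an antenna centre, the spatial resolution of such a sketch equals the antenna pitch, so a denser matrix of smaller antennas yields finer trajectories.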
The major advantage of RFID is the reliable long-term preservation of identity and traceability of multiple animals in relatively complex social housing conditions (Lewejohann et al., 2009; Howerton et al., 2012; Catarinucci et al., 2014; Puścian et al., 2016; Castelhano-Carlos et al., 2017). It can be fully automated to monitor animals’ locomotor activity without human interference, requires little data storage, and can easily be integrated with automated home-cage monitoring systems (Catarinucci et al., 2014; Frahm et al., 2018). Since RFID is a detection and tracking technology, its major limitation in the field of animal behavior is its inability to provide detailed information on behavioral traits (grooming, rearing, climbing, etc.). This restricts its application to animal identification and tracking, which still offers the opportunity to analyze some aspects of social behavior (Puścian et al., 2016; Torquet et al., 2018). Combining RFID with video tracking technology greatly extends the possibilities for studying social behavior in more detail (see “RFID-Assisted Video Tracking”). Another important technological limitation is the difficulty RFID antennas have in detecting multiple tags, and therefore animals, simultaneously. When multiple animals are within the reading range of the same RFID antenna, their simultaneous signal transmissions can interfere with each other, often leading to missed readings and thus data loss. By nature, RFID technology involves a trade-off between sensitivity (reading range) and accuracy (spatial resolution), since both depend on the dimensions of each RFID antenna, which usually match the size of the animal in question. The smaller the RFID antennas, the more of them can be integrated into the antenna matrix underneath the home-cage, which increases the positioning and tracking accuracy of the system and reduces the possibility of signal interference between different RFID tags.
On the other hand, small RFID antennas have a shorter reading range, which may result in temporary detection loss during vertical movements (e.g., rearing and climbing). This sensitivity-accuracy trade-off needs to be considered when choosing an appropriate RFID hardware set-up and a suitable location for the RFID tag within the animal’s body. Furthermore, RFID tagging is minimally invasive: inserting the tag requires anesthesia. Implanted tags also carry minor risks of affecting the animal’s health during long-term application (Albrecht, 2014) or of losing their functionality.
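
The collision problem can be made concrete with a simple probabilistic model. The sketch below assumes, for illustration only, a framed slotted ALOHA anti-collision scheme of the kind used by many UHF RFID readers: in each read frame, every tag in range replies in one of `slots` randomly chosen time slots, and a reply is decoded only if no other tag picked the same slot. Real readers and commercial home-cage systems may use different protocols and parameters, and the function names are hypothetical.

```python
import random


def p_single_read(n_tags: int, slots: int) -> float:
    """Probability that a given tag is alone in its slot, i.e. is read in this frame."""
    if n_tags < 1 or slots < 1:
        raise ValueError("n_tags and slots must be positive")
    # Each of the other n_tags - 1 tags must avoid this tag's slot.
    return (1 - 1 / slots) ** (n_tags - 1)


def simulate_read_rate(n_tags: int, slots: int, frames: int = 10_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same per-tag read probability."""
    rng = random.Random(seed)
    successes = 0
    for _ in range(frames):
        picks = [rng.randrange(slots) for _ in range(n_tags)]
        successes += sum(1 for s in picks if picks.count(s) == 1)
    return successes / (frames * n_tags)


if __name__ == "__main__":
    for n in (1, 2, 3, 4):
        print(n, "animals over one antenna:", round(p_single_read(n, slots=4), 3))
```

Under this model, the per-frame read probability falls as more animals share one antenna's field (0.75 for two tags and 0.5625 for three with four slots), and with a single slot any two simultaneous replies collide. This is one way to picture why smaller antennas, each covering fewer animals, reduce missed readings.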

FIGURE 2.

The TraffiCage (TSE Systems) uses an RFID matrix underneath the home-cage to track multiple animals.

In summary, RFID technology is a great way to identify and track individual animals, and therefore to measure their locomotor activity, in a social context. Because it cannot measure behavioral traits, it needs to be combined with another technology, for example video tracking.

While there are many companies which provide RFID components, only a few offer an all-in-one and stand-alone apparatus for rodent home-cage application enabling individual voluntary locomotor activity measurements in group-housed settings (Table 1).

TABLE 1.

Commercially available all-in-one RFID systems for home-cage application.

| Company | Product name | Strengths | Limitations | Website | References |
| --- | --- | --- | --- | --- | --- |
| PhenoSys | MultimouseMonitor | Multiple animals, small footprint¹ | Limited spatial accuracy, 2 standard sizes available | https://www.phenosys.com/products/multi-mouse-monitor/ | Frahm et al., 2018; Alonso et al., 2020 |
| TSE Systems | IntelliCage | Multiple animals (16 mice or 8 rats), small footprint¹, HCMS containing 4 operant conditioning corners, wide range of cognitive and behavioral tests | Fixed size/dimension, only gross trajectories of individuals | https://www.tse-systems.com/service/intellicage/ | Voikar et al., 2018; Kiryk et al., 2020 |
| TSE Systems | TraffiCage | Multiple animals, several sizes available, small footprint¹ | Limited spatial accuracy | https://www.tse-systems.com/service/trafficage/ | Dudek et al., 2015; Kotańska et al., 2019 |
| Unified Information Devices | Mouse Matrix | Multiple animals, customizable size/dimension, small footprint¹, body temperature measurement available | Mice only, not validated yet due to recent market launch | https://www.uidevices.com/home-cage-monitoring/ | – |

¹Footprint refers to how much space the system occupies in addition to the home-cage.

Small: marginal impact on overall space; large: significant increase in footprint relative to the home-cage.

Measuring Behavioral Traits

Infrared Beams

One of the oldest and simplest ways to continuously monitor voluntary locomotor activity of rodents in the home-cage is through infrared (IR) beam breaks. This approach uses specially designed frames that surround the home-cage (Figure 3) and emit an array of IR beams invisible to the rodents. Beam interruptions, or breaks, caused by the animal’s movement are registered in the horizontal plane (x- and y-axis), allowing locomotor activity to be reliably detected with high spatial and temporal resolution. Adding a frame covering the vertical plane (z-axis) furthermore enables the detection and analysis of basic behavioral patterns, such as rearing and climbing. The obtained spatial and temporal information can further be used to analyze a variety of other behavioral events, such as feeding and drinking (Goulding et al., 2008). Nowadays, associated software packages can process the raw data to extract a more comprehensive picture of the animal’s behavior, including, but not limited to, distance traveled, position distribution, zone entries, and trajectory within a given time period. Beam frames are easy to apply and non-invasive, and the z-frames can be positioned freely depending on the rodent species and research question. Since the technology is independent of lighting conditions, 24/7 analysis is possible. Numerous home-cages can be monitored simultaneously by the software infrastructure, which generates a clear and compact set of raw data without the need for extensive data processing. In general, beam frames can also easily be implemented in automated home-cage monitoring systems (HCMS) to combine behavioral with physiological and metabolic studies (e.g., CLAMS, Columbus Instruments International; Promethion, Sable Systems International; PhenoMaster, TSE Systems).
However, a sophisticated behavioral analysis based solely on beam interruptions is difficult because of the limited ability to recognize the rich repertoire of behavioral features that rodents display. Furthermore, to exploit the full potential of these beam frames, animals need to be housed individually; otherwise, only average group activity can be measured, and activity levels are underestimated because other animals in the same cage block the beams. This limits the use of beam frames in studies of social interaction and behavior. Potential occlusion of the beams by nesting material, cage enrichment, or bedding is another common constraint.
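
To make the beam-break principle concrete, the following minimal Python sketch converts a sequence of beam states into a position trace, a distance estimate, and a rearing count. It assumes a hypothetical sample format (indices of interrupted x- and y-beams plus a single flag for the z-frame) and a hypothetical 10 mm beam spacing; commercial software uses its own, typically more refined, processing.

```python
import math
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional, Sequence, Tuple

BEAM_SPACING_MM = 10.0  # hypothetical distance between adjacent beams


@dataclass(frozen=True)
class Sample:
    x_broken: FrozenSet[int]  # indices of interrupted beams along the x-axis
    y_broken: FrozenSet[int]  # indices of interrupted beams along the y-axis
    z_broken: bool            # True if any beam of the elevated z-frame is interrupted


def beam_centre(broken: FrozenSet[int], spacing: float) -> Optional[float]:
    """Mean coordinate of the interrupted beams, or None if no beam is broken."""
    if not broken:
        return None
    return sum(broken) / len(broken) * spacing


def summarise(samples: Sequence[Sample], spacing: float = BEAM_SPACING_MM) -> Dict[str, float]:
    path: List[Tuple[float, float]] = []
    rearings = 0
    previous_z = False
    for s in samples:
        x, y = beam_centre(s.x_broken, spacing), beam_centre(s.y_broken, spacing)
        if x is not None and y is not None:
            path.append((x, y))
        if s.z_broken and not previous_z:  # count onsets of z-beam interruption
            rearings += 1
        previous_z = s.z_broken
    distance, moves = 0.0, 0
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        step = math.hypot(x1 - x0, y1 - y0)
        if step >= spacing:  # require a shift of at least one beam spacing
            distance += step
            moves += 1
    return {"distance_mm": distance, "ambulatory_moves": moves, "rearings": rearings}
```

If two animals break beams at the same time, `beam_centre` averages their beams and places a single "animal" between them, which illustrates why beam frames reach their full potential only with individual housing.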

FIGURE 3.

Large infrared beam frame ActiMot3 (TSE Systems) to measure locomotor activity in rodents.

There are several commercially available IR-beam break systems suitable for home-cage application, which mainly differ in accuracy and size (Table 2).

TABLE 2.

Frequently applied commercially available IR beam frames for home-cage application.

| Company | Product name | Strengths | Limitations | Website | References |
| --- | --- | --- | --- | --- | --- |
| AfaSci | SmartCage (basic platform module) | Small footprint¹, extendable with modular add-ons | Animal observation only from top view, resolution unknown | https://www.afasci.com/index.php/instruments/smartcage | Khroyan et al., 2012 |
| Columbus Instruments | Animal Activity Meter: Opto-M4 | High flexibility to re-arrange sensors, in-house HCMS component | Large footprint¹, lower spatial resolution (3.12 mm for mice, 6.4 mm for rats), medium temporal resolution (160 Hz) | https://www.colinst.com/products/animal-activity-meter-opto-m | Dorigatti et al., 2021 |
| Kinder Scientific | SmartFrame | Extendable with additional modules, fits several different home-cages | Large footprint¹, no HCMS component, resolution unknown | http://kinderscientific.com/products/motor-activity-2/cage-rack-2/ | Ingram et al., 2013 |
| Omnitech | Custom Home Cage Frame | Interchangeable size for mice/rats, small footprint¹, max. 60 cages per PC | Max. 2 sensor axes per cage, resolution unknown, limited scientific validation | https://omnitech-usa.com/product/home-cage/ | Reitz et al., 2021 |
| Sable Systems | BXYZ Beam Break Activity Monitor | In-house HCMS component, high spatial (2.5 mm) and temporal (450 Hz) resolution, small footprint¹ | – | https://www.sablesys.com/products/promethion-core-line/promethion-core-cages-and-monitoring/ | Woodie et al., 2020 |
| San Diego Instruments | Photobeam Activity System | Small footprint¹ | One size, no HCMS component, low spatial resolution (1.2 cm) | https://sandiegoinstruments.com/product/pas-homecage/ | Liu et al., 2021 |
| TSE Systems | ActiMot3 | In-house HCMS component, 3 standard sizes (incl. interchangeable for mice/rats), extra high spatial resolution (1.25 mm), small footprint¹ | Medium temporal resolution (100 Hz) | https://www.tse-systems.com/service/actimot3-locomotor-activity/ | Neess et al., 2021 |

¹Footprint refers to how much space the system occupies in addition to the home-cage.

Small: marginal impact on overall space; large: significant increase in footprint relative to the home-cage.

Force Plates

Rodent (mouse, rat) behavior can also be recognized automatically by converting mechanical force into electrical signals. Specially designed force plates rest underneath the home-cage and are equipped with sensors that translate the force of the animal’s movements into electrical signals (Schlingmann et al., 1998; Van de Weerd et al., 2001). Because each behavioral trait generates a characteristic electrical signature, several behavioral attributes can be classified from these signals. Like IR technology, force plates generally enable the quantification of basic behavioral patterns such as resting, rearing, climbing, and general locomotion. They also identify the exact position (X, Y) of the animal with high spatiotemporal resolution, providing detailed tracking information such as trajectories, distance traveled, velocity, and position distribution. Probably the most sophisticated force plate system is the Laboratory Animal Behavior Observation Registration and Analysis System (LABORAS; Metris b.v., Hoofddorp, Netherlands), a specially designed triangular measurement platform (Figure 4). For acute locomotor activity, it showed results similar to those of IR beam technology (Lynch et al., 2011). Importantly, since the platform recognizes muscle contractions of different body parts (jaws, head, paws, limbs, etc.), more subtle behavioral patterns can also be analyzed (grooming, scratching, seizures, freezing, head shakes, startle response, etc.), which makes this system superior to IR beam break technology for behavioral profiling. Still, a fully sophisticated behavioral analysis is difficult because the electrical signatures of different behavioral patterns can be discriminated only to a limited extent.
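
One standard way to derive position from load sensors is the centre of pressure: the animal's location is the force-weighted average of the sensor positions. The minimal Python sketch below assumes a hypothetical triangular plate resting on three load cells at its corners, with readings already converted to force units and a known tare (empty-cage) load per sensor. It is a generic illustration, not the algorithm used by LABORAS or any other commercial system, and it does not attempt behavior classification from the signal.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical corner positions (mm) of three load cells under a triangular plate.
SENSORS: Tuple[Point, Point, Point] = ((0.0, 0.0), (400.0, 0.0), (200.0, 346.0))


def centre_of_pressure(forces: Sequence[float], tare: Sequence[float],
                       sensors: Sequence[Point] = SENSORS) -> Tuple[float, float, float]:
    """Return (x, y, net_load): where the load above tare acts, and its size."""
    if not (len(forces) == len(tare) == len(sensors)):
        raise ValueError("one force and one tare value per sensor expected")
    loads = [f - t for f, t in zip(forces, tare)]  # remove cage, bedding, food
    total = sum(loads)
    if total <= 0:
        raise ValueError("no net load above tare")
    x = sum(load * sx for load, (sx, _) in zip(loads, sensors)) / total
    y = sum(load * sy for load, (_, sy) in zip(loads, sensors)) / total
    return x, y, total


if __name__ == "__main__":
    # Animal standing on the edge between the first two sensors.
    print(centre_of_pressure([15.0, 15.0, 5.0], tare=[5.0, 5.0, 5.0]))
```

With two animals on the plate, the same formula returns a single point weighted between them, which mirrors the difficulty of attributing force signals to individual conspecifics.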

FIGURE 4.

The measurement platform LABORAS turns the animal’s movements into electrical signals to analyze an extended set of rodent behavioral patterns (Metris b.v.).

Like IR beam, electrical capacitance, and RFID technology, force plates can be run fully automated (without human interference or handling), are easily applicable long-term in mice and rats, are non-invasive and independent of lighting conditions, and require relatively little handling time. The hardware and software infrastructure enables real-time analysis of multiple platforms at the same time, each generating a small set of raw data. Force plates rely on single housing to measure the full set of behavioral patterns, which limits their use in social housing conditions. Interestingly, since some social behaviors, such as mating or fighting, also generate unique electrical signals, force plates could in theory enable the study of some basic social behavior in pairs of rodents. However, force plates cannot discriminate between conspecifics, so these social traits cannot be attributed to individual animals.

While IR beam technology and force plates share many commonalities (similar behavioral parameters) and limitations (most importantly single housing), the LABORAS system identifies a broader range of behavioral patterns and can be used for both mice and rats, which makes it more versatile. However, unlike IR frames with an additional z-axis frame, it tracks the animal accurately only in a two-dimensional plane, and it occupies a larger floor space (footprint) because of the wider and more complex construction of the platform.

Currently, only a few commercial systems are available that are suitable for home-cage application or already incorporated into an HCMS. These systems mainly differ in the range of behavioral patterns they recognize (Table 3).

TABLE 3.

Available force plates for home-cage applications.

| Company | Product name | Strengths | Limitations | Website | References |
| --- | --- | --- | --- | --- | --- |
| AfaSci | SmartCage (Vibration sensor module) | Small footprint¹, extendable with modular add-ons | No stand-alone module, few behavioral parameters (more when combined with IR module) | https://www.afasci.com/index.php/instruments/smartcage | Khroyan et al., 2012; Xie et al., 2012 |
| Bioseb | Activmeter | Small footprint¹, combination with HCMS, rearing/climbing detection optional (mice), numerous behavioral parameters | Few behavioral parameters | https://www.bioseb.com/en/activity-motor-control-coordination/898-activmeter.html | Morgan et al., 2018 |
| Metris | LABORAS | Rich behavioral pattern recognition | Large footprint¹ | https://www.metris.nl/laboras/laboras.htm | Quinn et al., 2003; Castagne et al., 2012 |
| Sable Systems | ADX- Activity Detector | Small footprint¹, combination with in-house HCMS | Limited behavioral parameters (focus on total activity) | https://www.sablesys.com/products/classic-line/adx-classic-activity-detector/ | Fiedler and Careau, 2021 |
| – | Home Cage Activity Counter | Adaptable and modifiable do-it-yourself (DIY) system | Limited behavioral parameters (focus on total activity), large footprint¹ | – | Ganea et al., 2007 |

¹Footprint refers to how much space the system occupies in addition to the home-cage.

Small: marginal impact on overall space; large: significant increase in footprint relative to the home-cage.

Others have integrated piezoelectric sensors, which are able to detect micromovements, into force plates (Flores et al., 2007). These sensors are generally used to distinguish between sleep and wake phases, serve as an alternative to invasive techniques such as electroencephalography and electromyography, and are frequently applied in sleep research (Signal Solutions LLC, Lexington, United States). Piezoelectric sensor technology has opened new opportunities in behavioral phenotyping and has thus gained popularity in animal research. Internal movements such as individual heartbeats or breathing cycles are hard to detect with other phenotyping techniques (including IR beams or video recording), so piezoelectric sensor plates can contribute to establishing a more sophisticated rodent ethogram (Carreño-Muñoz et al., 2022).
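
One way to picture how a piezoelectric plate can separate sleep from wake is breathing regularity: during sleep, the signal is dominated by regular respiratory movements, whereas wake activity produces irregular signals. The minimal Python/NumPy sketch below scores regularity as the highest normalized autocorrelation at lags corresponding to an assumed 1 to 5 Hz breathing band. The band, threshold, and function names are hypothetical and do not reproduce the decision statistic of any commercial system.

```python
import numpy as np

BREATHING_BAND_HZ = (1.0, 5.0)  # assumed range of breathing rates during sleep
SLEEP_THRESHOLD = 0.5           # hypothetical decision threshold


def breathing_regularity(signal, fs: float, band=BREATHING_BAND_HZ) -> float:
    """Highest normalized autocorrelation within the lag range of the band."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()
    energy = float(np.dot(x, x))
    if energy == 0.0:
        return 0.0
    # "full" mode returns lags -(N-1)..(N-1); keep lags >= 0, normalized to lag 0.
    acf = np.correlate(x, x, mode="full")[len(x) - 1:] / energy
    min_lag = int(fs / band[1])  # shortest breathing period, in samples
    max_lag = int(fs / band[0])  # longest breathing period, in samples
    if max_lag >= len(x):
        raise ValueError("epoch too short for the chosen breathing band")
    return float(acf[min_lag:max_lag + 1].max())


def classify_epoch(signal, fs: float) -> str:
    """Label an epoch by thresholding its breathing regularity score."""
    return "sleep-like" if breathing_regularity(signal, fs) >= SLEEP_THRESHOLD else "wake-like"


if __name__ == "__main__":
    fs = 100.0
    t = np.arange(0, 10, 1 / fs)
    print(classify_epoch(np.sin(2 * np.pi * 3.0 * t), fs))
    print(classify_epoch(np.random.default_rng(0).normal(size=t.size), fs))
```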

Video Tracking

Advances in computational and imaging performance and efficiency have led to new image-based video tracking systems in animal ecology [reviewed by Dell et al. (2014)], which replace the human observer with a computer to monitor and assess animal behavior. Conventionally, these systems consist of hardware and software that carry out a three-step process (Dell et al., 2014). First, the hardware (one or multiple cameras) digitally records the animals in a given environment and produces a consecutive sequence of images. Second, the software uses computer vision algorithms to separate the individual animal from the static background in each image (usually by background subtraction) and propagates its position, and thus its trajectory, across the whole image sequence. In group-housed settings, the software must additionally distinguish each individual from its conspecifics (usually by creating a pixel blob for each individual) (Giancardo et al., 2013). Individual differences in natural appearance (color, fur pattern, size, contour) help the software discriminate between individual animals and maintain their identities throughout the video (Hong et al., 2015). Third, the software classifies and quantifies behavioral events based on pre-defined mathematical criteria established by human experts (Giancardo et al., 2013). In general, video tracking systems provide long-term, non-invasive, real-time tracking of single and multiple animals (Jhuang et al., 2010; Giancardo et al., 2013). Combining optical with IR video maintains tracking around the clock (24/7). Video tracking systems enable the analysis of a wide set of behavioral traits with high spatiotemporal resolution and perform well with individually housed rodents (Jhuang et al., 2010).
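
The second step of this process, background subtraction followed by grouping foreground pixels into blobs, can be sketched in a few lines. The minimal Python/NumPy example below assumes grayscale frames as 2-D arrays, estimates the static background as the per-pixel median over several frames, thresholds the difference, and groups foreground pixels into 4-connected blobs whose centroids serve as animal positions. The threshold, minimum blob size, and function names are hypothetical; published tracking systems use considerably more robust methods.

```python
from collections import deque
from typing import List, Sequence, Tuple

import numpy as np


def median_background(frames: Sequence[np.ndarray]) -> np.ndarray:
    """Per-pixel median; animals vanish as long as each pixel mostly shows background."""
    return np.median(np.stack(frames), axis=0)


def foreground_mask(frame: np.ndarray, background: np.ndarray,
                    threshold: float = 30.0) -> np.ndarray:
    return np.abs(frame.astype(float) - background) > threshold


def blob_centroids(mask: np.ndarray, min_pixels: int = 5) -> List[Tuple[float, float]]:
    """(x, y) centroid of each 4-connected foreground region, in raster-scan order."""
    h, w = mask.shape
    seen = np.zeros_like(mask, dtype=bool)
    centroids = []
    for r in range(h):
        for c in range(w):
            if not mask[r, c] or seen[r, c]:
                continue
            seen[r, c] = True
            queue, pixels = deque([(r, c)]), []
            while queue:  # breadth-first flood fill of one blob
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_pixels:  # drop small noise specks
                ys, xs = zip(*pixels)
                centroids.append((float(np.mean(xs)), float(np.mean(ys))))
    return centroids
```

If two animals touch, their pixels merge into a single blob and one centroid is returned for both, which is the occlusion problem that makes identity maintenance difficult in group-housed settings.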
However, two conditions affect the performance and results of such video tracking systems: the complexity of the cage environment and the number of individuals in it, since both cause animals to be (temporarily) occluded from the camera. Such occlusion events or animal crossings challenge the software algorithm to preserve the correct identity once an animal is in sight again or has separated from its conspecific (Yamanaka and Takeuchi, 2018). This commonly leads to misidentification and/or loss of track, which often propagates throughout the remaining sequence unless appropriate measures, e.g., automatic or manual correction, are taken (de Chaumont et al., 2012; Giancardo et al., 2013; Yamanaka and Takeuchi, 2018). Marking the animals’ fur with (fluorescent) hair dye or bleach addresses this specific problem (Ohayon et al., 2013; Shemesh et al., 2013) but introduces other drawbacks: applying artificial markers is time-consuming (markers need re-application after some time), requires an invasive procedure (bleaching is done in unconscious animals), and might affect the animals’ (social) behavior (Lacey et al., 2007; Dennis et al., 2008). Therefore, different marker-less approaches have been developed to robustly identify and track multiple individuals, such as 3D imaging via multiple camera views (Matsumoto et al., 2013) or the use of differences in body shape/contour (Giancardo et al., 2013), size and color (Noldus et al., 2001; Ohayon et al., 2013; Hong et al., 2015), heat signature (Giancardo et al., 2013), or individual “fingerprints” (Perez-Escudero et al., 2014). Still, accurate and robust maintenance of individual identities within a group remains a major challenge for automated video tracking systems, especially when inbred mice are used, which are (almost) indistinguishable by size, color, fur pattern, and likely body shape/contour.
The increasing interest in tracking multiple animals while correctly preserving their identities in group-housed conditions has led to a very large number of different video tracking systems and algorithms being available or under development for a wide variety of species and experimental environments [freely available animal tracking software was recently reviewed by Panadeiro et al. (2021)]. Several video tracking systems have already been successfully applied to socially housed rodents, either in an observation cage or an open-field arena, usually without access to water, food, and shelter. Examples are idTracker (Perez-Escudero et al., 2014), idtracker.ai (Romero-Ferrero et al., 2019), ToxTrac/ToxId (Rodriguez et al., 2017, 2018), MiceProfiler (de Chaumont et al., 2012), Multi-Animal Tracker (Itskovits et al., 2017), and 3DTracker (Matsumoto et al., 2013, 2017). In principle, these systems are suitable for home-cage or social arena applications but require further scientific validation.
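
Frame-to-frame identity maintenance is often framed as an assignment problem: each known animal is matched to one new detection so that the total displacement is minimal. The minimal Python sketch below solves this by brute force over permutations, which is only practical for small groups; it assumes every animal is detected in every frame, and the function name is hypothetical. For larger groups, the same cost matrix can be solved with the Hungarian algorithm (e.g., SciPy's `scipy.optimize.linear_sum_assignment`).

```python
import math
from itertools import permutations
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]


def assign_identities(tracks: Dict[str, Point], detections: Sequence[Point]) -> Dict[str, int]:
    """Map each track ID to the index of the detection that minimizes total displacement."""
    ids = list(tracks)
    if len(detections) != len(ids):
        raise ValueError("this sketch assumes every animal is detected in every frame")
    best_perm, best_cost = None, math.inf
    for perm in permutations(range(len(detections))):
        cost = sum(math.dist(tracks[i], detections[j]) for i, j in zip(ids, perm))
        if cost < best_cost:
            best_perm, best_cost = perm, cost
    return dict(zip(ids, best_perm))


if __name__ == "__main__":
    last_positions = {"A": (0.0, 0.0), "B": (10.0, 0.0)}
    print(assign_identities(last_positions, [(9.0, 0.0), (1.0, 0.0)]))
```

When two animals actually cross paths between frames, the minimal-displacement match can be wrong, and the resulting swap then propagates to all later frames, which is the failure mode described above.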

Currently, only a few systems are available or described that are specifically designed for, or already validated in, rodents socially housed in the home-cage or in a social arena as defined by this review (Table 4). Each of them has the strength of analyzing a wide variety of behavioral traits at the individual level within a social context, but each also has limitations, including, but not limited to, misidentification or loss of identity through animal crossings or visual obstruction (which requires human intervention), low spatial accuracy, limited scientific validation data, accommodating only pairs or small groups, requiring artificial markers, or limited data on (social) behavioral parameters.

TABLE 4.

Overview of image-based video-tracking systems to track and analyze behavioral events in multiple rodents simultaneously in a single home-cage/arena.

| Company/Institution/University | Name | Type¹ | Availability | Limitations | Behavioral data output² | Max. animals³ | Identification of unmarked animals | Website | References |
|---|---|---|---|---|---|---|---|---|---|
| California Institute of Technology | Motr | Algorithm | Open source | Invasive, manual correction required, limited behavioral pattern recognition | Social | 6 | No (bleaching) | https://motr.janelia.org/ | Ohayon et al., 2013 |
| California Institute of Technology | – | All-in-one | DIY | Different fur color required | Social | 2 | Yes (different fur color required) | – | Hong et al., 2015 |
| CleverSys | GroupHousedScan | Software⁴ | Commercial | Not validated yet | Individual + social | –/4 | – | http://cleversysinc.com/CleverSysInc/csi_products/grouphousedscan/ | – |
| Hiroshima University | UMATracker | Software | Open source | Manual correction required after identity swap | Social | 4 | Yes | https://ymnk13.github.io/UMATracker/ | Yamanaka and Takeuchi, 2018 |
| Loligo Systems | LoliTrack 5 | Software | Commercial | Not validated in rodents yet | Individual + limited social | – | Yes | https://loligosystems.com/lolitrack-version-5-video-tracking-and-behavior-analysis-software | – |
| National Institutes of Health | SCORHE | All-in-one | Open source (software) | Individual identities not maintained, mice only | Individual (grouped) | 25 | Yes | https://spis.cit.nih.gov/node/30 | Salem et al., 2015 |
| Noldus | EthoVision/PhenoTyper | All-in-one | Commercial | Marking required | Individual + social | 5/16 | No (color marking) | https://www.noldus.com/ethovision-xt/; https://www.noldus.com/phenotyper | Noldus et al., 2001; de Visser et al., 2006 |
| University of California, San Diego | Smart Vivarium | Algorithm | DIY | Poor identity maintenance after occlusion | Individual | 35 | Yes | http://smartvivarium.calit2.net/ | Branson and Belongie, 2005 |
| Weizmann Institute of Science | – | All-in-one | DIY | Color marking | Social | 4/>10 | No (color marking) | – | Shemesh et al., 2013 |

¹All-in-one: a single apparatus comprising the complete hardware and software infrastructure, with no need to purchase additional equipment.

–Software: software package with a graphical user interface.

–Algorithm: source code that can be implemented in software solutions or that requires external programming and analysis platforms, such as MATLAB or Icy.

²Individual: measurement of individual behavioral traits.

–Social: measurement of social interaction.

³Maximum number of rodents that has been scientifically validated in the cited reference(s) or officially communicated by the developer of the system.

⁴Associated hardware equipment available from the same vendor.

The full power of the system (tracking + behavioral phenotyping) requires solitary housing.

Artificial intelligence has become very prominent in many areas of computer vision and has enabled such systems to learn from existing data (Rousseau et al., 2000). Nowadays, most computer vision systems incorporate at least some elements of artificial intelligence, from animal detection to the automated analysis of behavioral traits. In general, machine learning approaches are applied in a supervised fashion, meaning that human experts first label training video sequences by assigning them to specific behavioral categories, from which the system then learns. Human input is therefore required to define robust criteria for identifying specific behavioral attributes.
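
The supervised workflow described above (human experts label examples, the system learns from them, and the trained model then labels new data) can be sketched in a few lines. This is a deliberately minimal illustration, not the method of any system cited here. It assumes that each video frame has already been reduced to two hypothetical features (locomotion speed in cm/s and nose height above the floor in cm), and it uses a simple nearest-centroid classifier in place of the more powerful classifiers used in practice, such as random forests or neural networks.

```python
from statistics import fmean

Features = tuple[float, ...]


def train_nearest_centroid(examples: list[tuple[Features, str]]) -> dict[str, Features]:
    """Learn one mean feature vector (centroid) per human-assigned behavior label."""
    by_label: dict[str, list[Features]] = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in by_label.items()
    }


def predict(centroids: dict[str, Features], features: Features) -> str:
    """Assign the label whose centroid is closest (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda label: sum((c - f) ** 2 for c, f in zip(centroids[label], features)),
    )


# Hypothetical expert annotations: (speed_cm_s, nose_height_cm) -> behavior
annotated = [
    ((0.1, 1.0), "resting"), ((0.3, 1.2), "resting"),
    ((8.0, 1.5), "walking"), ((10.0, 1.4), "walking"),
    ((0.5, 7.0), "rearing"), ((1.0, 8.0), "rearing"),
]
model = train_nearest_centroid(annotated)
print(predict(model, (9.0, 1.3)))  # walking
print(predict(model, (0.7, 7.5)))  # rearing
```

Whatever the classifier, its output can only be as good as the human labels it was trained on, which is why annotation effort and consistency matter so much in supervised approaches.
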

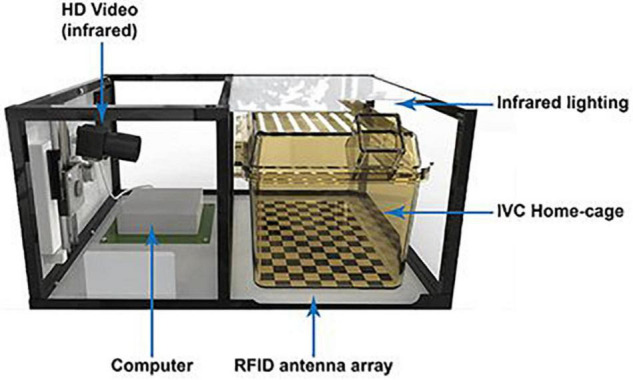

A promising new approach within machine learning is inspired by human pose estimation through joint localization (Toshev and Szegedy, 2014): tracking joints or other body parts makes it possible to measure different postures. This approach, based on tracking multiple body parts, is rapidly finding its way into laboratory settings, and posture estimation algorithms have been developed that tailor the “human approach” to laboratory animals. For example, DeepLabCut (Mathis et al., 2018; Nath et al., 2019) and LEAP Estimates Animal Pose (LEAP) (Pereira et al., 2019) are based on algorithms previously applied in humans (Insafutdinov et al., 2016). In general, these algorithms use a three-step process: first, specific body parts (joints or key points) of interest are manually labeled on selected video images; second, the pose estimation model is trained to recognize the corresponding body parts; third, the trained model is applied to the full video sequence to automatically predict body part locations and thus estimate pose (Figure 5).
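
Once the third step has produced body-part coordinates for every frame, posture measures are derived from them geometrically. The minimal sketch below assumes that each predicted key point is available as an `(x, y, likelihood)` tuple. Pose estimation tools typically report a confidence value alongside the coordinates, but the exact output format differs between tools. From a nose and a tail-base key point, the sketch computes two illustrative posture metrics: body length and heading angle. The function name `posture_features` and the 0.9 confidence threshold are hypothetical choices.

```python
import math

Keypoint = tuple[float, float, float]  # (x, y, likelihood) in image coordinates


def posture_features(nose: Keypoint, tail_base: Keypoint, min_likelihood: float = 0.9):
    """Derive body length and heading from two predicted key points.

    Returns None when either key point is uncertain, e.g. because the body part
    was occluded and the model's confidence dropped below the threshold.
    """
    if nose[2] < min_likelihood or tail_base[2] < min_likelihood:
        return None
    dx = nose[0] - tail_base[0]
    dy = nose[1] - tail_base[1]
    return {
        "body_length": math.hypot(dx, dy),  # same units as the coordinates (pixels)
        # Angle of the tail-to-nose vector in degrees (0-360) from the +x axis.
        # Image y usually grows downward, so the rotation sense is mirrored.
        "heading_deg": math.degrees(math.atan2(dy, dx)) % 360,
    }


features = posture_features((13.0, 14.0, 0.98), (10.0, 10.0, 0.95))
print(features)  # body_length 5.0, heading about 53.1 degrees
```

Richer postures (for example, hunched versus stretched) follow the same pattern, combining more key points into distances and angles.
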

FIGURE 5.

Machine learning approach of pose estimation (von Ziegler et al., 2021).

In contrast to conventional tracking approaches, which often focus on the centroid of each animal only, these new algorithms track multiple body parts. Their main advantage is therefore the analysis of a tremendous variety of behavioral patterns, postures, and orientations in various animals after only a limited training period. They require no visual marking of the animal, are non-invasive, freely available, and open source, and thus give researchers the freedom to adapt the algorithms to their particular needs. Furthermore, because the raw data set is retained once the experiment (or video) is completed, it can be re-analyzed post hoc to address specific scientific questions. The speed-accuracy trade-off commonly experienced in machine learning has recently been addressed (Graving et al., 2019). A drawback may be that specific postures (based on user-defined body parts) need to be predefined before the algorithm is applied, which may introduce laboratory- or investigator-specific variation. Also, training the algorithm requires manual annotation, which is labor-intensive even for a small set of video images. The need to train the algorithm on individual animals often prevents real-time analysis; interestingly, recent developments in the field provide real-time approaches based on already trained networks (Kane et al., 2020). LEAP requires the fewest training images and, like DeepLabCut, has recently been extended to group-housed conditions in order to identify individual animals in a social group, resulting in Social LEAP Estimates Animal Pose (SLEAP) (Pereira et al., 2020; Lauer et al., 2021). However, analysis of multiple animals is prone to visual occlusion and can be very laborious (every individual must be annotated in every training image), especially when complex postures are to be analyzed.
Nevertheless, DeepLabCut has been shown to outperform commercially available systems regarding animal tracking and is able to compete with human scoring of relevant behavioral patterns (Sturman et al., 2020).
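
Statements that an algorithm competes with human scoring are usually backed by frame-by-frame agreement statistics. As one common, illustrative choice (not necessarily the measure used by Sturman et al., 2020), Cohen's kappa corrects the raw percentage of agreeing frames for the agreement expected by chance. The minimal sketch below computes it for two equally long label sequences, for example human annotations and algorithm predictions. The behavior labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Chance-corrected agreement between two frame-by-frame label sequences."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if both raters labeled frames independently at their own rates.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / n**2
    if expected == 1.0:
        return 1.0  # convention: both raters used the same single label throughout
    return (observed - expected) / (1 - expected)


human = ["groom", "groom", "rear", "rear", "walk", "walk", "walk", "groom"]
model = ["groom", "groom", "rear", "walk", "walk", "walk", "walk", "groom"]
kappa = cohens_kappa(human, model)
print(round(kappa, 3))  # 0.805
```

In this example the raters agree on 7 of 8 frames (87.5%), but because part of that agreement is expected by chance, kappa is lower (about 0.80).
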

In summary, such algorithms represent a new generation of video tracking systems within the rapidly evolving field of behavioral animal research. Current algorithm development focusses on pose estimation and body part classification of unmarked animals, enabling the analysis of various predefined body postures and behavioral patterns. On the other hand, the open-source, publicly accessible software and algorithms need to be combined with (commercially available) hardware infrastructure to conduct video tracking. In addition, manual labeling of predefined images is still required for each experiment, which can introduce subjectivity and can be laborious, especially in social experiments.

RFID-Assisted Video Tracking

The biggest challenge of applying video tracking technology is maintaining the correct identity, and thus the position and direction, of multiple interacting animals. To address this problem, the strengths of RFID technology (consistent identification of an almost unlimited number of animals, even in diverse and complex living environments) have been combined with those of video tracking (high-spatiotemporal-resolution analysis of complex (social) behavioral events), resulting in a synergistic hybrid tracking technology (Weissbrod et al., 2013; Dandan and Lin, 2016).

The Home Cage Analyser (Actual Analytics Ltd., Edinburgh, United Kingdom) (Figure 6; Bains et al., 2016; Redfern et al., 2017) and the RFID-Assisted SocialScan (CleverSys Inc., Reston, United States) (Peleh et al., 2019) are commercially available systems that integrate RFID tracking with 2D IR video capture. Because the RFID readings are synchronized with the video tracking, possible identity swaps are corrected automatically by the software without human intervention. The Live Mouse Tracker is similar to these systems but uses a depth-sensing camera for three-dimensional activity and behavior monitoring of multiple mice in a social arena (de Chaumont et al., 2019). Its main advantage is a very rich repertoire of 35 recognizable behavioral patterns, again without the need for human intervention. The analysis ranges from simple locomotor activity of individual mice to more sophisticated social behavior among multiple (n = 4) conspecifics. Furthermore, it is a comprehensive, do-it-yourself, end-to-end solution based on open-source frameworks. At present, the Live Mouse Tracker sets a new standard in multi-animal phenotyping: it is easy for an ordinary researcher to apply and, importantly, enables the analysis of a considerable set of behavioral patterns supported by machine learning, although currently in mice only.
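
The principle behind correcting identities with RFID can be illustrated with a minimal, hypothetical sketch. Each RFID read (tag, antenna position, time) is attributed to the anonymous video track that is closest to that antenna at about the same moment, and each track takes the tag that received the most votes. The data layout, the function name `label_tracks`, and the thresholds `max_dt` and `max_dist` are assumptions for illustration. This is not the algorithm of any of the systems named above.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class RfidRead:
    time_s: float                    # timestamp, synchronized with the video clock
    tag: str                         # transponder ID implanted in the animal
    antenna_xy: tuple[float, float]  # antenna position in video coordinates


def label_tracks(tracks, reads, max_dt=0.5, max_dist=5.0):
    """Give each anonymous video track the RFID tag it co-occurs with most often.

    tracks: {track_id: [(time_s, x, y), ...]} with non-empty sample lists.
    """
    votes = {}
    for read in reads:
        best_track, best_dist = None, max_dist
        for track_id, samples in tracks.items():
            # Position of this track at the video sample nearest in time to the read.
            t, x, y = min(samples, key=lambda s: abs(s[0] - read.time_s))
            if abs(t - read.time_s) > max_dt:
                continue  # no video position close enough in time
            dist = math.dist((x, y), read.antenna_xy)
            if dist <= best_dist:
                best_track, best_dist = track_id, dist
        if best_track is not None:
            votes.setdefault(best_track, Counter())[read.tag] += 1
    return {track_id: c.most_common(1)[0][0] for track_id, c in votes.items()}


tracks = {
    "track_1": [(0.0, 10.0, 10.0), (1.0, 12.0, 10.0)],
    "track_2": [(0.0, 40.0, 40.0), (1.0, 41.0, 40.0)],
}
reads = [
    RfidRead(0.1, "mouse_A", (11.0, 10.0)),
    RfidRead(1.0, "mouse_B", (40.0, 41.0)),
    RfidRead(1.1, "mouse_A", (12.0, 11.0)),
]
print(label_tracks(tracks, reads))  # {'track_1': 'mouse_A', 'track_2': 'mouse_B'}
```

In practice, a video track would be split at every crossing or occlusion and each segment relabeled in this way. A swap would then be corrected at the next antenna read instead of propagating through the rest of the recording.
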

FIGURE 6.

The Home Cage Analyser (Actual Analytics) combines RFID technology with video recording to study behavioral traits in socially interacting rodents (Bains et al., 2016).

In conclusion, RFID-assisted video tracking systems combine the strengths of video tracking and RFID technology to create a synergistic effect. These systems overcome major limitations of the previously listed technologies, making it possible to continuously track many individual rodents and to monitor and quantify individual as well as social behavioral traits in a complex environment. Since these systems have only recently become available on the market, further developments in hardware and especially in software (machine learning) will likely enhance the performance and broaden the applications of these hybrid tracking systems (Table 5).

TABLE 5.

Available RFID-assisted video tracking technology.

| Company/Institute | Product name | Environment | Characteristics | Website | References |
|---|---|---|---|---|---|
| Actual Analytics | Home Cage Analyser | Home-cage | Side-view camera (prone to occlusion), all-in-one apparatus, mice and rats, individual and social parameters, relatively small footprint¹ | https://www.actualanalytics.com/products | Bains et al., 2016; Redfern et al., 2017; Hobson et al., 2020 |
| Clever Sys Inc. | RFID-Assisted SocialScan | Social arena | Top-view camera, all-in-one apparatus, mice and rats, rodents need to be distinguishable by color/size, focus on social behavior parameters, small footprint¹ | http://cleversysinc.com/CleverSysInc/rfid-assisted-socialscan/ | Peleh et al., 2019; Peleh et al., 2020 |
| Institut Pasteur | Live Mouse Tracker | Social arena | Top-view camera, DIY, mice only, individual and rich social behavioral parameters, small footprint¹, end user can add new behavioral parameters of interest | https://livemousetracker.org/ | Ey et al., 2018; de Chaumont et al., 2019 |

¹Footprint refers to how much space the system occupies in addition to the home-cage/social arena.

Small: marginal impact on overall space; large: significant increase of footprint relative to the cage environment.

Summary

A fair number of systems are available for behavioral phenotyping of rodents living in home-cages or social arenas (Figure 7 and Table 6). They range from systems targeting voluntary locomotor activity to more advanced methods that extend the analysis of the behavioral repertoire beyond basic locomotor activity metrics. These methods often involve a trade-off between group housing and extended behavioral pattern recognition. A well-established and prominent method of behavior analysis is video tracking, especially in combination with recent advances in machine learning. Unfortunately, only a minority of such systems have so far been validated in a social context and in meaningful, heterogeneous environments, such as a home-cage containing appropriate refinement material. Such environments challenge the performance of dedicated tracking and behavioral phenotyping systems. The latest developments in multi-animal pose estimation hold great promise for further enhancing the performance of video tracking systems. Importantly, a common technical limitation of video tracking systems is the preservation of correct identities, which is compromised by animal crossings or camera occlusion. Correcting the resulting errors requires human intervention and thus prohibits large-scale, high-throughput studies. Combining the strengths of video tracking and RFID technology opens the door to a much more complex analysis of locomotor activity and behavioral traits of socially interacting animals, while at the same time addressing the identity-preservation challenge. Therefore, such RFID-assisted video tracking solutions seem to be the most comprehensive systems currently available and hold great promise for further development.

FIGURE 7.

Overview of different technologies to measure locomotor activity and advanced behavioral traits in individually and group-housed rodents.

TABLE 6.

Overview of strengths and limitations of current technology used to measure rodents’ locomotor activity and more advanced behavioral aspects in the home-cage.

| Technology | Housing | Advanced behavioral traits¹ | Strengths | Limitations |
|---|---|---|---|---|
| Electrical capacitance | Individual² | No | High spatial accuracy, small (data) footprint | Single housing, no behavioral parameters (only locomotor activity), no stand-alone method, mice only |
| RFID | Individual/Group | No | Social housing, reliable animal identification and tracking even with high animal density, small (data) footprint | Low spatial resolution, no behavioral parameters (only locomotor activity), possible data loss due to animal interferences |
| Infrared beam | Individual² | Few | High spatiotemporal accuracy, small (data) footprint | Single housing, few behavioral parameters |
| Force plate | Individual² | Few | Small (data) footprint | Single housing, few behavioral parameters |
| Video tracking | Individual/Group | Many | Social housing, rich (social) behavioral pattern recognition | Frequent identity swaps require corrections, high processing power, large data footprint |
| RFID-assisted video tracking | Individual/Group | Many | Social housing, rich (social) behavioral pattern recognition, large animal density | High processing power, large (data) footprint |

¹Behavioral traits that go beyond basic locomotor activity metrics.

²Group housing possible, but would limit the full scope of the technology.

Future Perspective

Recent developments in computer vision have resulted in several freely available, open-source software and algorithm solutions that can be shared within the scientific community for user-defined application and further development. The European Commission encourages the use and development of open-source software to foster innovation by sharing knowledge and expertise (European Commission, 2020). Open-source code also provides insight into how data are processed, so that the consequences of changes can be better understood. Efforts to improve animal tracking are further driven by current trends in machine learning, which is rapidly gaining ground in animal research (von Ziegler et al., 2021). This development will likely continue to increase the supply of new software solutions freely available to the research community (Nilsson et al., 2020; Hsu and Yttri, 2021), providing alternatives to costly commercial tracking software packages. Importantly, machine learning algorithms have already been shown to outperform commercially available systems in animal tracking, highlighting their promise for future applications (Sturman et al., 2020).

Most of these machine learning algorithms still require individually housed animals (Wiltschko et al., 2015; Geuther et al., 2019; Pennington et al., 2019). DeepLabCut and SLEAP are forerunners in handling more complex, multi-animal situations, inspiring others to follow (Mathis et al., 2018; Pereira et al., 2020). Although they rely on human annotations for training, machine learning algorithms have drastically reduced the amount of human labeling, and the time it takes, compared with manual scoring (either in real time or from recorded video) (Jhuang et al., 2010; van Dam et al., 2013). Once trained by an individual expert or a group of experts, the algorithm replicates the human input on any new data set. This reduces inter-observer variability within and across laboratories, which contributes to objectivity and consistency and thus to the reproducibility and replicability of scientific data (Levitis et al., 2009). At present, human annotations set the benchmark for such automated systems to recognize, classify, and thus quantify specific behavioral events. To overcome the human factor and push machine learning in a new direction, there have been recent ambitious efforts to optimize unsupervised machine learning methods (Todd et al., 2017). Such algorithms classify behavior in an unsupervised manner, which makes the annotation of example images by a human expert unnecessary. One promising new algorithm of this kind is AlphaTracker, which classifies individual as well as social behavioral motifs of identical, unmarked mice with high accuracy and aims to overcome the common identity-preservation challenge in socially housed animals (Chen et al., 2020).

The wide application of these types of algorithms might revolutionize our current understanding of (rodent) behavior, since very subtle and unexpected behavioral events (“syllables”) that are unrecognizable to the human eye can be analyzed and studied in more detail (Wiltschko et al., 2015; Markowitz et al., 2018). Such potential new behavioral traits need to be classified in a way that reaches consensus within the behavioral research community, supporting the interpretation as well as the reproducibility and replicability of research data. Interestingly, unsupervised algorithms tailored to combine behavioral analysis with electrophysiological recordings are currently under development; their properties are fine-tuned to meet the specific requirements (i.e., high temporal resolution) of analyzing electrophysiological characteristics during behavioral studies (Hsu and Yttri, 2021).
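
The unsupervised idea, grouping behavior without any human labels, can be illustrated with the simplest clustering method, k-means (Lloyd's algorithm). This is a swapped-in, minimal sketch and not the method of AlphaTracker or B-SOiD (B-SOiD, for instance, combines dimensionality reduction with density-based clustering). The two per-frame features (speed and nose height) are hypothetical. The resulting clusters are only numbered: a human still has to inspect them and decide what behavior each one represents, which is where community consensus on new behavioral traits comes in.

```python
import math

Point = tuple[float, ...]


def kmeans(points: list[Point], k: int, iterations: int = 50):
    """Group unlabeled feature vectors into k clusters (Lloyd's algorithm).

    Uses deterministic farthest-point initialization so results are reproducible.
    Returns (labels, centers); labels[i] is the cluster index of points[i].
    """
    centers = [points[0]]
    while len(centers) < k:
        # Next center: the point farthest from all centers chosen so far.
        centers.append(max(points, key=lambda p: min(math.dist(p, c) for c in centers)))
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest center.
        labels = [min(range(k), key=lambda i: math.dist(p, centers[i])) for p in points]
        # Update step: move each center to the mean of its members.
        new_centers = []
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            new_centers.append(
                tuple(sum(col) / len(members) for col in zip(*members)) if members else centers[i]
            )
        if new_centers == centers:
            break  # converged: assignments no longer change the centers
        centers = new_centers
    return labels, centers


# Hypothetical per-frame features (speed_cm_s, nose_height_cm), no labels given.
frames = [(0.1, 1.0), (0.2, 1.1), (0.0, 0.9), (9.0, 1.5), (9.5, 1.4), (8.8, 1.6)]
labels, centers = kmeans(frames, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The algorithm separates slow and fast frames on its own, but naming cluster 0 "resting" and cluster 1 "walking" remains an interpretive step for the researcher.
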

One drawback of the general use of open-source machine learning technology is that the user needs at least some basic, if not substantial, computational expertise. This can be a high entry barrier for research laboratories wishing to implement and further develop such methods, especially for non-behavioral research groups aiming for interdisciplinary breakthroughs. In addition, the associated video equipment is often difficult to incorporate into HCMS. Some developers have already addressed these issues, which need to be taken into account to make an innovative technology user-friendly and thus widely applicable in practice (Mathis et al., 2018; Singh et al., 2019; Nilsson et al., 2020). Commercially available all-in-one solutions come with a higher financial burden but are generally more user-friendly and thus lower this entry barrier significantly. They also include customer support to help laboratories conduct their research in a technologically sound way. Ultimately, each user will decide whether to rely on more expensive but sophisticated and technically mature all-in-one solutions, or to move toward more flexible open-source software and hardware applications that demand more computational resources and expertise.

Author Contributions

CK wrote the manuscript. TB, JH, DV, JK, and ES revised and edited the manuscript. All authors approved the submitted version.

Conflict of Interest

CK, TB, and DV were employed by TSE Systems GmbH. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Funding

CK was an ESR-fellow of the INSPIRE European Training Network. INSPIRE received funding from the EU Horizon 2020 Research and Innovation program, under the Marie Skłodowska-Curie GA 858070.

References

- Albrecht K. (2014). “Microchip-induced tumors in laboratory rodents and dogs: A review of the literature 1990–2006,” in Uberveillance and the Social Implications of Microchip Implants: Emerging Technologies, eds Michael M., Michael K. (Hershey, PA: IGI Global), 337–349.

- Alonso L., Peeva P., Ramos-Prats A., Alenina N., Winter Y., Rivalan M. (2020). Inter-individual and inter-strain differences in cognitive and social abilities of Dark Agouti and Wistar Han rats. Behav. Brain Res. 377:112188. 10.1016/j.bbr.2019.112188

- Altmann J. (1974). Observational study of behavior: sampling methods. Behaviour 49 227–267. 10.1163/156853974x00534 [DOI] [PubMed] [Google Scholar]

- Anderson D. J., Perona P. (2014). Toward a science of computational ethology. Neuron 84 18–31. 10.1016/j.neuron.2014.09.005 [DOI] [PubMed] [Google Scholar]

- Bains R. S., Cater H. L., Sillito R. R., Chartsias A., Sneddon D., Concas D., et al. (2016). Analysis of individual mouse activity in group housed animals of different inbred strains using a novel automated home cage analysis system. Front. Behav. Neurosci. 10:106. 10.3389/fnbeh.2016.00106 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Branson K., Belongie S. (2005). “Tracking multiple mouse contours (without too many samples),” in Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05) (San Diego, CA: IEEE; ), 1039–1046. 10.1109/CVPR.2005.349 [DOI] [Google Scholar]

- Carreño-Muñoz M. I., Medrano M. C., Ferreira Gomes Da Silva A., Gestreau C., Menuet C., Leinekugel T., et al. (2022). Detecting fine and elaborate movements with piezo sensors provides non-invasive access to overlooked behavioral components. Neuropsychopharmacology 47 933–943. 10.1038/s41386-021-01217-w [DOI] [PMC free article] [PubMed] [Google Scholar]

- Castagne V., Wolinsky T., Quinn L., Virley D. (2012). Differential behavioral profiling of stimulant substances in the rat using the LABORAS system. Pharmacol. Biochem. Behav. 101 553–563. 10.1016/j.pbb.2012.03.001 [DOI] [PubMed] [Google Scholar]

- Castelhano-Carlos M., Costa P. S., Russig H., Sousa N. (2014). PhenoWorld: a new paradigm to screen rodent behavior. Transl. Psychiatry 4:e399. 10.1038/tp.2014.40 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Castelhano-Carlos M. J., Baumans V., Sousa N. (2017). PhenoWorld: addressing animal welfare in a new paradigm to house and assess rat behaviour. Lab. Anim. 51 36–43. 10.1177/0023677216638642 [DOI] [PubMed] [Google Scholar]

- Catarinucci L., Colella R., Mainetti L., Patrono L., Pieretti S., Secco A., et al. (2014). An animal tracking system for behavior analysis using radio frequency identification. Lab. Anim. 43 321–327. 10.1038/laban.547 [DOI] [PubMed] [Google Scholar]

- Chen Z., Zhang R., Zhang Y. E., Zhou H., Fang H.-S., Rock R. R., et al. (2020). AlphaTracker: a multi-animal tracking and behavioral analysis tool. bioRxiv 10.1101/2020.12.04.405159 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Crabbe J. C., Wahlsten D., Dudek B. C. (1999). Genetics of mouse behavior: interactions with laboratory environment. Science 284 1670–1672. 10.1126/science.284.5420.1670 [DOI] [PubMed] [Google Scholar]

- Crawley J. N. (1999). Behavioral phenotyping of transgenic and knockout mice: experimental design and evaluation of general health, sensory functions, motor abilities, and specific behavioral tests. Brain Res. 835 18–26. 10.1016/S0006-8993(98)01258-X [DOI] [PubMed] [Google Scholar]

- Dandan S., Lin X. (2016). “A Hybrid Video and RFID Tracking System for Multiple Mice in Lab Environment,” in 3rd International Conference on Information Science and Control Engineering (ICISCE) (Beijing: IEEE; ), 1198–1202. 10.1109/ICISCE.2016.257 [DOI] [Google Scholar]

- de Chaumont F., Coura R. D., Serreau P., Cressant A., Chabout J., Granon S., et al. (2012). Computerized video analysis of social interactions in mice. Nat. Methods 9 410–417. 10.1038/nmeth.1924 [DOI] [PubMed] [Google Scholar]

- de Chaumont F., Ey E., Torquet N., Lagache T., Dallongeville S., Imbert A., et al. (2019). Real-time analysis of the behaviour of groups of mice via a depth-sensing camera and machine learning. Nat. Biomed. Eng. 3 930–942. 10.1038/s41551-019-0396-1 [DOI] [PubMed] [Google Scholar]

- de Visser L., van den Bos R., Kuurman W. W., Kas M. J., Spruijt B. M. (2006). Novel approach to the behavioural characterization of inbred mice: automated home cage observations. Genes. Brain. Behav. 5 458–466. 10.1111/j.1601-183X.2005.00181.x [DOI] [PubMed] [Google Scholar]

- Dell A. I., Bender J. A., Branson K., Couzin I. D., de Polavieja G. G., Noldus L. P., et al. (2014). Automated image-based tracking and its application in ecology. Trends. Ecol. Evol. 29 417–428. 10.1016/j.tree.2014.05.004 [DOI] [PubMed] [Google Scholar]

- Dennis R. L., Newberry R. C., Cheng H. W., Estevez I. (2008). Appearance matters: artificial marking alters aggression and stress. Poult. Sci. 87 1939–1946. 10.3382/ps.2007-00311 [DOI] [PubMed] [Google Scholar]

- Dorigatti J. D., Thyne K. M., Ginsburg B. C., Salmon A. B. (2021). Beta-guanidinopropionic acid has age-specific effects on markers of health and function in mice. GeroScience 43 1–15. 10.1007/s11357-021-00372-8 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dudek M., Knutelska J., Bednarski M., Nowiński L., Zygmunt M., Mordyl B., et al. (2015). A comparison of the anorectic effect and safety of the alpha2-adrenoceptor ligands guanfacine and yohimbine in rats with diet-induced obesity. PLoS One 10:e0141327. 10.1371/journal.pone.0141327 [DOI] [PMC free article] [PubMed] [Google Scholar]

- European Commission (2020). Open Source Software Strategy 2020 – 2023: Think Open. Available: https://ec.europa.eu/info/departments/informatics/open-source-software-strategy_en#opensourcesoftwarestrategy [accessed January 21 2021]. [Google Scholar]

- Ey E., Torquet N., de Chaumont F., Levi-Strauss J., Ferhat A. T., Le Sourd A. M., et al. (2018). Shank2 Mutant Mice Display Hyperactivity Insensitive to Methylphenidate and Reduced Flexibility in Social Motivation, but Normal Social Recognition. Front. Mol. Neurosci. 11:365. 10.3389/fnmol.2018.00365 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fiedler A., Careau V. (2021). Individual (Co)variation in Resting and Maximal Metabolic Rates in Wild Mice. Physiol. Biochem. Zool. 94 338–352. 10.1086/716042 [DOI] [PubMed] [Google Scholar]

- Flores A. E., Flores J. E., Deshpande H., Picazo J. A., Xie X. S., Franken P., et al. (2007). Pattern recognition of sleep in rodents using piezoelectric signals generated by gross body movements. IEEE Trans. Biomed. Eng. 54 225–233. 10.1109/TBME.2006.886938 [DOI] [PubMed] [Google Scholar]

- Frahm S., Melis V., Horsley D., Rickard J. E., Riedel G., Fadda P., et al. (2018). Alpha-Synuclein transgenic mice, h-α-SynL62, display α-Syn aggregation and a dopaminergic phenotype reminiscent of Parkinson’s disease. Behav. Brain Res. 339 153–168. 10.1016/j.bbr.2017.11.025 [DOI] [PubMed] [Google Scholar]

- Ganea K., Liebl C., Sterlemann V., Muller M. B., Schmidt M. V. (2007). Pharmacological validation of a novel home cage activity counter in mice. J. Neurosci. Methods 162 180–186. 10.1016/j.jneumeth.2007.01.008 [DOI] [PubMed] [Google Scholar]

- Geuther B. Q., Deats S. P., Fox K. J., Murray S. A., Braun R. E., White J. K., et al. (2019). Robust mouse tracking in complex environments using neural networks. Commun. Biol. 2 1–11. 10.1038/s42003-019-0362-1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Giancardo L., Sona D., Huang H., Sannino S., Manago F., Scheggia D., et al. (2013). Automatic visual tracking and social behaviour analysis with multiple mice. PLoS One 8:e74557. 10.1371/journal.pone.0074557 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goulding E. H., Schenk A. K., Juneja P., MacKay A. W., Wade J. M., Tecott L. H. (2008). A robust automated system elucidates mouse home cage behavioral structure. Proc. Natl. Acad. Sci. U. S. A. 105 20575–20582. 10.1073/pnas.0809053106 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Graving J. M., Chae D., Naik H., Li L., Koger B., Costelloe B. R., et al. (2019). DeepPoseKit, a software toolkit for fast and robust animal pose estimation using deep learning. Elife 8:e47994. 10.7554/eLife.47994 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Habedank A., Urmersbach B., Kahnau P., Lewejohann L. (2021). O mouse, where art thou? The Mouse Position Surveillance System (MoPSS)—an RFID-based tracking system. Behav. Res. Methods 2021 1–14. 10.3758/s13428-021-01593-7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hobson L., Bains R. S., Greenaway S., Wells S., Nolan P. M. (2020). Phenotyping in Mice Using Continuous Home Cage Monitoring and Ultrasonic Vocalization Recordings. Curr. Protoc. Mouse Biol. 10:e80. 10.1002/cpmo.80 [DOI] [PubMed] [Google Scholar]

- Hong W., Kennedy A., Burgos-Artizzu X. P., Zelikowsky M., Navonne S. G., Perona P., et al. (2015). Automated measurement of mouse social behaviors using depth sensing, video tracking, and machine learning. Proc. Natl. Acad. Sci. U.S.A 112 E5351–E5360. 10.1073/pnas.1515982112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Howerton C. L., Garner J. P., Mench J. A. (2012). A system utilizing radio frequency identification (RFID) technology to monitor individual rodent behavior in complex social settings. J. Neurosci. Methods. 209 74–78. 10.1016/j.jneumeth.2012.06.001 [DOI] [PubMed] [Google Scholar]

- Hsu A. I., Yttri E. A. (2021). B-SOiD, an open-source unsupervised algorithm for identification and fast prediction of behaviors. Nat. Commun. 12 1–13. 10.1038/s41467-021-25420-x [DOI] [PMC free article] [PubMed] [Google Scholar]

- Iannello F. (2019). Non-intrusive high throughput automated data collection from the home cage. Heliyon 5:e01454. 10.1016/j.heliyon.2019.e01454 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ingram W. M., Goodrich L. M., Robey E. A., Eisen M. B. (2013). Mice infected with low-virulence strains of Toxoplasma gondii lose their innate aversion to cat urine, even after extensive parasite clearance. PLoS One 8:e75246. 10.1371/journal.pone.0075246 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Insafutdinov E., Pishchulin L., Andres B., Andriluka M., Schiele B. (2016). “Deepercut: A deeper, stronger, and faster multi-person pose estimation model,” in European Conference on Computer Vision (Amsterdam: Springer Nature; ), 34–50. 10.1007/978-3-319-46466-4_3 [DOI] [Google Scholar]

- Itskovits E., Levine A., Cohen E., Zaslaver A. (2017). A multi-animal tracker for studying complex behaviors. BMC Biol. 15:29. 10.1186/s12915-017-0363-9

- Jhuang H., Garrote E., Mutch J., Yu X., Khilnani V., Poggio T., et al. (2010). Automated home-cage behavioural phenotyping of mice. Nat. Commun. 1:68. 10.1038/ncomms1064

- Kafkafi N., Agassi J., Chesler E. J., Crabbe J. C., Crusio W. E., Eilam D., et al. (2018). Reproducibility and replicability of rodent phenotyping in preclinical studies. Neurosci. Biobehav. Rev. 87 218–232. 10.1016/j.neubiorev.2018.01.003

- Kane G. A., Lopes G., Saunders J. L., Mathis A., Mathis M. W. (2020). Real-time, low-latency closed-loop feedback using markerless posture tracking. Elife 9:e61909. 10.7554/eLife.61909

- Kennard M., Nandi M., Chapple S., King A. (2021). The glucose tolerance test in mice: sex, drugs and protocol. Authorea 2021:61067774. 10.22541/au.162515866.61067774/v1

- Khroyan T. V., Zhang J., Yang L., Zou B., Xie J., Pascual C., et al. (2012). Rodent motor and neuropsychological behaviour measured in home cages using the integrated modular platform SmartCage™. Clin. Exp. Pharmacol. Physiol. 39 614–622. 10.1111/j.1440-1681.2012.05719.x

- Kiryk A., Janusz A., Zglinicki B., Turkes E., Knapska E., Konopka W., et al. (2020). IntelliCage as a tool for measuring mouse behavior–20 years perspective. Behav. Brain Res. 388:112620. 10.1016/j.bbr.2020.112620

- Kotańska M., Mika K., Reguła K., Szczepańska K., Szafarz M., Bednarski M., et al. (2019). KSK19–Novel histamine H3 receptor ligand reduces body weight in diet induced obese mice. Biochem. Pharmacol. 168 193–203. 10.1016/j.bcp.2019.07.006

- Lacey J. C., Beynon R. J., Hurst J. L. (2007). The importance of exposure to other male scents in determining competitive behaviour among inbred male mice. Appl. Anim. Behav. Sci. 104 130–142. 10.1016/j.applanim.2006.04.026

- Lauer J., Zhou M., Ye S., Menegas W., Nath T., Rahman M. M., et al. (2021). Multi-animal pose estimation and tracking with DeepLabCut. bioRxiv 10.1101/2021.04.30.442096

- Levitis D. A., Lidicker W. Z., Jr., Freund G. (2009). Behavioural biologists do not agree on what constitutes behaviour. Anim. Behav. 78 103–110. 10.1016/j.anbehav.2009.03.018

- Lewejohann L., Hoppmann A. M., Kegel P., Kritzler M., Krüger A., Sachser N. (2009). Behavioral phenotyping of a murine model of Alzheimer’s disease in a seminaturalistic environment using RFID tracking. Behav. Res. Methods 41 850–856. 10.3758/BRM.41.3.850

- Linnenbrink M., von Merten S. (2017). No speed dating please! Patterns of social preference in male and female house mice. Front. Zool. 14 1–14. 10.1186/s12983-017-0224-y

- Lipp H.-P., Galsworthy M. J., Zinn P. O., Würbel H. (2005). “Automated behavioral analysis of mice using INTELLICAGE: Inter-laboratory comparisons and validation with exploratory behavior and spatial learning,” in Proceedings of Measuring Behavior (Wageningen: Noldus Information Technology), 66–69.

- Liu J., Hester K., Pope C. (2021). Dose- and time-related effects of acute diisopropylfluorophosphate intoxication on forced swim behavior and sucrose preference in rats. Neurotoxicology 82 82–88. 10.1016/j.neuro.2020.11.007

- Lynch J. J., III, Castagné V., Moser P. C., Mittelstadt S. W. (2011). Comparison of methods for the assessment of locomotor activity in rodent safety pharmacology studies. J. Pharmacol. Toxicol. Methods 64 74–80. 10.1016/j.vascn.2011.03.003

- Macrì S., Mainetti L., Patrono L., Pieretti S., Secco A., Sergi I. (2015). “A tracking system for laboratory mice to support medical researchers in behavioral analysis,” in 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Milan: IEEE), 4946–4949. 10.1109/EMBC.2015.7319501

- Markowitz J. E., Gillis W. F., Beron C. C., Neufeld S. Q., Robertson K., Bhagat N. D., et al. (2018). The Striatum Organizes 3D Behavior via Moment-to-Moment Action Selection. Cell 174 44–58. 10.1016/j.cell.2018.04.019

- Mathis A., Mamidanna P., Cury K. M., Abe T., Murthy V. N., Mathis M. W., et al. (2018). DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci. 21 1281–1289. 10.1038/s41593-018-0209-y

- Matsumoto J., Nishimaru H., Ono T., Nishijo H. (2017). “3D-video-based computerized behavioral analysis for in vivo neuropharmacology and neurophysiology in rodents,” in In Vivo Neuropharmacology and Neurophysiology, ed. Philippu A. (New York, NY: Humana Press), 89–105. 10.1007/978-1-4939-6490-1_5

- Matsumoto J., Urakawa S., Takamura Y., Malcher-Lopes R., Hori E., Tomaz C., et al. (2013). A 3D-video-based computerized analysis of social and sexual interactions in rats. PLoS One 8:e78460. 10.1371/journal.pone.0078460

- Morgan J. E., Prola A., Mariot V., Pini V., Meng J., Hourde C., et al. (2018). Necroptosis mediates myofibre death in dystrophin-deficient mice. Nat. Commun. 9 1–10. 10.1038/s41467-018-06057-9

- Muller V. M., Zietek T., Rohm F., Fiamoncini J., Lagkouvardos I., Haller D., et al. (2016). Gut barrier impairment by high-fat diet in mice depends on housing conditions. Mol. Nutr. Food Res. 60 897–908. 10.1002/mnfr.201500775

- Nath T., Mathis A., Chen A. C., Patel A., Bethge M., Mathis M. W. (2019). Using DeepLabCut for 3D markerless pose estimation across species and behaviors. Nat. Protoc. 14 2152–2176. 10.1038/s41596-019-0176-0

- Neess D., Kruse V., Marcher A.-B., Wæde M. R., Vistisen J., Møller P. M., et al. (2021). Epidermal Acyl-CoA-binding protein is indispensable for systemic energy homeostasis. Mol. Metab. 44:101144. 10.1016/j.molmet.2020.101144

- Nilsson S. R., Goodwin N. L., Choong J. J., Hwang S., Wright H. R., Norville Z. C., et al. (2020). Simple Behavioral Analysis (SimBA)–an open source toolkit for computer classification of complex social behaviors in experimental animals. bioRxiv 10.1101/2020.04.19.049452

- Noldus L. P., Spink A. J., Tegelenbosch R. A. (2001). EthoVision: a versatile video tracking system for automation of behavioral experiments. Behav. Res. Methods Instrum. Comput. 33 398–414. 10.3758/bf03195394

- Ohayon S., Avni O., Taylor A. L., Perona P., Roian Egnor S. E. (2013). Automated multi-day tracking of marked mice for the analysis of social behaviour. J. Neurosci. Methods 219 10–19. 10.1016/j.jneumeth.2013.05.013

- Panadeiro V., Rodriguez A., Henry J., Wlodkowic D., Andersson M. (2021). A review of 28 free animal-tracking software applications: current features and limitations. Lab. Anim. 50 246–254. 10.1038/s41684-021-00811-1

- Peleh T., Bai X., Kas M. J. H., Hengerer B. (2019). RFID-supported video tracking for automated analysis of social behaviour in groups of mice. J. Neurosci. Methods 325:108323. 10.1016/j.jneumeth.2019.108323

- Peleh T., Ike K. G., Frentz I., Buwalda B., de Boer S. F., Hengerer B., et al. (2020). Cross-site reproducibility of social deficits in group-housed BTBR mice using automated longitudinal behavioural monitoring. Neuroscience 445 95–108. 10.1016/j.neuroscience.2020.04.045

- Pennington Z. T., Dong Z., Feng Y., Vetere L. M., Page-Harley L., Shuman T., et al. (2019). ezTrack: An open-source video analysis pipeline for the investigation of animal behavior. Sci. Rep. 9:19979. 10.1038/s41598-019-56408-9

- Pereira T. D., Aldarondo D. E., Willmore L., Kislin M., Wang S. S.-H., Murthy M., et al. (2019). Fast animal pose estimation using deep neural networks. Nat. Methods 16 117–125. 10.1038/s41592-018-0234-5

- Pereira T. D., Tabris N., Li J., Ravindranath S., Papadoyannis E. S., Wang Z. Y., et al. (2020). SLEAP: Multi-animal pose tracking. bioRxiv 10.1101/2020.08.31.276246

- Perez-Escudero A., Vicente-Page J., Hinz R. C., Arganda S., de Polavieja G. G. (2014). idTracker: tracking individuals in a group by automatic identification of unmarked animals. Nat. Methods 11 743–748. 10.1038/nmeth.2994

- Pernold K., Iannello F., Low B. E., Rigamonti M., Rosati G., Scavizzi F., et al. (2019). Towards large scale automated cage monitoring–Diurnal rhythm and impact of interventions on in-cage activity of C57BL/6J mice recorded 24/7 with a non-disrupting capacitive-based technique. PLoS One 14:e0211063. 10.1371/journal.pone.0211063

- Puścian A., Łęski S., Kasprowicz G., Winiarski M., Borowska J., Nikolaev T., et al. (2016). Eco-HAB as a fully automated and ecologically relevant assessment of social impairments in mouse models of autism. Elife 5:e19532. 10.7554/eLife.19532

- Quinn L. P., Stean T. O., Trail B., Duxon M. S., Stratton S. C., Billinton A., et al. (2003). LABORAS™: initial pharmacological validation of a system allowing continuous monitoring of laboratory rodent behaviour. J. Neurosci. Methods 130 83–92. 10.1016/S0165-0270(03)00227-9

- Redfern W. S., Tse K., Grant C., Keerie A., Simpson D. J., Pedersen J. C., et al. (2017). Automated recording of home cage activity and temperature of individual rats housed in social groups: The Rodent Big Brother project. PLoS One 12:e0181068. 10.1371/journal.pone.0181068

- Reitz S. L., Wasilczuk A. Z., Beh G. H., Proekt A., Kelz M. B. (2021). Activation of Preoptic Tachykinin 1 Neurons Promotes Wakefulness over Sleep and Volatile Anesthetic-Induced Unconsciousness. Curr. Biol. 31 394–405.e4. 10.1016/j.cub.2020.10.050

- Rodriguez A., Zhang H., Klaminder J., Brodin T., Andersson M. (2017). ToxId: an efficient algorithm to solve occlusions when tracking multiple animals. Sci. Rep. 7 1–8. 10.1038/srep42201

- Rodriguez A., Zhang H., Klaminder J., Brodin T., Andersson P. L., Andersson M. (2018). ToxTrac: a fast and robust software for tracking organisms. Methods Ecol. Evol. 9 460–464. 10.1111/2041-210X.12874

- Romero-Ferrero F., Bergomi M. G., Hinz R. C., Heras F. J., de Polavieja G. G. (2019). idtracker.ai: tracking all individuals in small or large collectives of unmarked animals. Nat. Methods 16 179–182. 10.1038/s41592-018-0295-5

- Rousseau J. B., Van Lochem P. B., Gispen W. H., Spruijt B. M. (2000). Classification of rat behavior with an image-processing method and a neural network. Behav. Res. Methods Instrum. Comput. 32 63–71. 10.3758/bf03200789

- Salem G. H., Dennis J. U., Krynitsky J., Garmendia-Cedillos M., Swaroop K., Malley J. D., et al. (2015). SCORHE: a novel and practical approach to video monitoring of laboratory mice housed in vivarium cage racks. Behav. Res. Methods 47 235–250. 10.3758/s13428-014-0451-5

- Schlingmann F., Van de Weerd H., Baumans V., Remie R., Van Zutphen L. (1998). A balance device for the analysis of behavioural patterns of the mouse. Anim. Welf. 7 177–188.

- Shemesh Y., Sztainberg Y., Forkosh O., Shlapobersky T., Chen A., Schneidman E. (2013). High-order social interactions in groups of mice. Elife 2:e00759. 10.7554/eLife.00759

- Shenk J., Lohkamp K. J., Wiesmann M., Kiliaan A. J. (2020). Automated Analysis of Stroke Mouse Trajectory Data With Traja. Front. Neurosci. 14:518. 10.3389/fnins.2020.00518

- Singh S., Bermudez-Contreras E., Nazari M., Sutherland R. J., Mohajerani M. H. (2019). Low-cost solution for rodent home-cage behaviour monitoring. PLoS One 14:e0220751. 10.1371/journal.pone.0220751

- Sturman O., von Ziegler L., Schläppi C., Akyol F., Privitera M., Slominski D., et al. (2020). Deep learning-based behavioral analysis reaches human accuracy and is capable of outperforming commercial solutions. Neuropsychopharmacology 45 1942–1952. 10.1038/s41386-020-0776-y

- Todd J. G., Kain J. S., de Bivort B. L. (2017). Systematic exploration of unsupervised methods for mapping behavior. Phys. Biol. 14:015002. 10.1088/1478-3975/14/1/015002

- Torquet N., Marti F., Campart C., Tolu S., Nguyen C., Oberto V., et al. (2018). Social interactions impact on the dopaminergic system and drive individuality. Nat. Commun. 9 1–11. 10.1038/s41467-018-05526-5

- Toshev A., Szegedy C. (2014). “DeepPose: Human pose estimation via deep neural networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Columbus, OH: IEEE), 1653–1660. 10.1109/CVPR.2014.214

- van Dam E. A., van der Harst J. E., ter Braak C. J., Tegelenbosch R. A., Spruijt B. M., Noldus L. P. (2013). An automated system for the recognition of various specific rat behaviours. J. Neurosci. Methods 218 214–224. 10.1016/j.jneumeth.2013.05.012