Abstract

A brain tumor is an abnormal growth of cells, and its early detection is critical for clinical practice and patient survival. Brain tumors arise in a variety of shapes, sizes, and features, with variable treatment options. Manual detection of tumors is difficult, time-consuming, and error-prone, so computerized diagnostic systems for accurate brain tumor detection are needed. In this research, deep features are extracted from the inceptionv3 model, and the score vector obtained from its softmax layer is supplied to a variational quantum classifier to discriminate between glioma, meningioma, no tumor, and pituitary tumor. The classified tumor images are then passed to the proposed Seg-network, which segments the actual infected region to analyze the tumor severity level. The method has been evaluated on three datasets: Kaggle, 2020-BRATS, and locally collected images. The model achieved detection scores above 90%, demonstrating the effectiveness of the proposed model.

1. Introduction

In both adults and children, brain tumors are among the major causes of mortality [1]. According to the American Brain Tumor Association (ABTA), about 612,000 people in the West are diagnosed with a brain tumor [2–4]. A tumor, also known as a neoplasm, is an irregular tissue mass that forms when cells grow abnormally [5]. Brain tumors are categorized in two ways: by location (primary or metastatic) and by growth type (benign or malignant) [6]. Magnetic resonance imaging (MRI) can discern very fine features inside the body and is commonly used when managing brain lesions and other tumors. MRI reveals anatomical details and helps locate anomalies; it is better than computed tomography at detecting changes in tissues or structures, and it can also measure the size of a tumor [7]. Because manual brain tumor detection is a difficult task, using computer vision techniques to automate brain tumor segmentation and classification is critical [8]. Clinical approaches allow relevant data to be extracted and images to be studied thoroughly, whereas computational approaches help decipher the nuances of medical images [9]. Precise assessment of tumor morphology is vital for better treatment [10–12]. Despite substantial work in this area, physicians still rely on manual tumor assessment, and communication between researchers and physicians remains limited [13]. Many automatic classification strategies have been proposed recently; they can be divided into feature-learning and evaluation stages [14–18]. Recent deep learning techniques, particularly convolutional neural networks (CNNs), have proven accurate and are frequently employed in medical image analysis.
However, compared with traditional approaches, they have disadvantages: they require large training datasets, have high time complexity, are less effective when only a limited dataset is available, and require powerful GPUs, all of which raise user costs [19, 20]. Selecting the right deep learning tools is also difficult because it requires an understanding of many parameters, training methods, and network topologies [21].

1.1. Motivation

Although a great deal of research has gone into detecting brain tumors, limitations remain because of the complicated pattern of lesion locations. Detecting small lesion regions is particularly challenging because a small lesion can look like healthy tissue. Furthermore, extracting and selecting informative features is difficult, and poorly chosen features directly reduce classification accuracy. Convolutional neural networks help extract informative features, but these models are computationally expensive. A lightweight model for brain tumor analysis is therefore still needed [22–24].

Therefore, to overcome these limitations, this research presents a method for segmenting and classifying brain lesions more accurately. The salient contributions of the proposed model are as follows:

First, a score vector is generated by the pretrained inceptionv3 model and passed to a six-layer quantum model, trained with tuned parameters such as the number of epochs, batch size, and optimizer solver, to predict the brain tumor class: glioma, no tumor, pituitary tumor, or meningioma.

Second, an improved Seg-Network is developed and trained with selected tuned parameters on ground-truth segmentation masks, so that it segments the tumor region more precisely.

The remainder of this article is organized as follows: related work is reviewed in Section 2, the steps of the proposed methodology are defined in Section 3, the results and discussion are presented in Section 4, and the conclusion is given in Section 5.

2. Related Work

Extensive research has been conducted on brain tumor detection, and some of the most recent findings are reviewed in this section. A fuzzy rough set with statistical features has been used for medical image analysis [25]. Possibilistic fuzzy c-means with texture features has been used to detect breast anomalies [26–28]. In another approach, contrast enhancement is applied to improve image contrast, edges are identified using fuzzy logic with a dual complex wavelet transform, and meningioma/nonmeningioma classification is performed with a U-network [29]. A fine-tuned ResNet-18 model has been used for deep feature extraction on 138 subjects with Alzheimer's disease, providing 99.9% classification accuracy [30]. Image fusion plays a vital role in the diagnostic process. MR and CT slices have been fused with a sparse convolutional decomposition model, in which contrast stretching and a gradient spatial method are used for edge identification, texture-cartoon decomposition is employed to create a dictionary, and improved sparse convolutional coding with decision maps produces the final fused slice; results on six benchmark datasets show improved performance [31]. A capsule neural network has been designed for brain tumor classification, with an 86.56% correct prediction rate [32]. Pretrained models such as inceptionv3, ResNet-50, and VGG-16 have been used for tumor classification, with competitive results achieved by ResNet-50 [33]. A hybrid model merging VGG-Net, ResNet, and LSTM models has been developed for tumor cell classification; it provides 71% accuracy with AlexNet and ResNet and 84% with the VGG16-LSTM model [34]. Singular value decomposition has been employed for tumor classification, providing 90% sensitivity (SE), 98% specificity, and 96.66% accuracy [35]. A DWT-based model has been proposed for tumor classification, achieving 93.94% accuracy [36].
Histogram equalization has been employed to enhance image quality; informative features are then selected by PCA and passed to a feed-forward network for normal/abnormal MRI classification, with a 90% accuracy rate [37]. An SVM model has been employed for tumor classification, achieving 82% sensitivity and 81.48% specificity. The combination of DWT, PCA, and SVM has been applied for tumor classification, achieving an 80% correct prediction rate with 84% SE and 92% specificity [38]. Three machine learning (ML) models have been employed for tumor classification, achieving 88% accuracy [39]. A modified CNN, the capsule network (CapsNet), has been used for tumor classification; it takes advantage of the spatial relationship between the tumor and its neighboring tissues [40]. Another study used a deep belief network (DBN) to distinguish healthy controls from patients with schizophrenia, using 83 and 143 subjects, respectively, from the Radiopaedia database [40]. The DBN gave 73.6% accuracy, compared with 68.1% for SVM [41]. A method has been suggested for classifying all types of brain tumors [42, 43]. Likewise, a new framework with six feature-extraction layers has been created for classifying brain cancers [44, 45]. A multiclass CNN model has been developed for tumor classification, and a generative adversarial model has been used to create synthetic images [46]. The suggested model has six layers and was combined with a variety of data augmentation techniques; it attained accuracies of 93.01% and 95.6% on specified and random splits, respectively [47]. Several additional architectures have recently been proposed to generalize CNNs, particularly for medical image classification [48].

Different authors [49, 50] have chosen a graph CNN (GCNN) for tumor classification. Despite the variety of proposed approaches for classifying brain tumors, these methodologies have a number of drawbacks, which are discussed in [51, 52]. Many tumor classification techniques rely on manually specified tumor regions, which prevents them from being fully automated [53]. Algorithms based on CNNs and their derivatives have not delivered a significant speed boost, so performance evaluations based on indicators other than precision become increasingly important. Furthermore, CNN models perform poorly on small datasets [54–56]. To overcome these limitations, this work proposes a lightweight quantum neural model for brain tumor classification.

3. Proposed Methodology

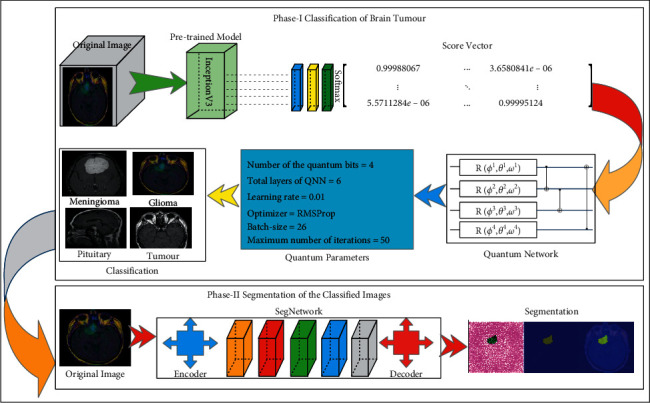

The suggested strategy is divided into three stages: feature extraction, classification, and segmentation. First, features are extracted using the pretrained inceptionv3 model, and the resulting score vector is passed to the quantum learning mechanism for tumor classification. In the segmentation stage, the Seg-Network is used to segment the actual tumor lesions. A schematic diagram of the proposed method is shown in Figure 1.

Figure 1.

The proposed model for brain tumor detection.

Figure 1 shows the processes of the proposed model, which include extracting features using a pretrained inceptionv3 model and obtaining a score vector from the softmax layer. The six-layer quantum network is trained with the score vector based on selected hyperparameters for tumor classification in phase-I. Furthermore, in phase-II, tumor slices are segmented using the proposed semantic segmentation model.

3.1. Features Extraction of the Pretrained Model

The features are extracted from the inceptionv3 model, which consists of 315 layers, including 94 convolutional, 94 ReLU, 94 batch-normalization, 4 max-pooling, 9 average-pooling, 1 global-pooling, and 15 depth-concatenation layers, together with fully connected, softmax, and output classification layers. Classification is performed by the softmax layer based on the class probabilities. In this layer, ϕ(input_i) denotes the probability of the ith class, and the predicted class is the one with the maximum probability p, where N denotes the total number of classes:

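$$\phi(\text{input}_i) = \frac{e^{\text{input}_i}}{\sum_{j=1}^{N} e^{\text{input}_j}}, \qquad p = \max_{i \in \{1,\dots,N\}} \phi(\text{input}_i) \tag{1}$$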

The score vectors have been obtained from the softmax and supplied as input to the quantum model for classification.
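The softmax step in Eq. (1) can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: the logits are hypothetical, and the class order simply follows the 0–3 labels used for the confusion matrix in Figure 3. The full score vector `scores`, not only the winning probability `p`, is what would be handed on to the quantum classifier.

```python
import math

# Class order follows the 0-3 labels used for the Kaggle confusion matrix (Figure 3).
CLASSES = ["no tumor", "meningioma", "pituitary tumor", "glioma"]


def softmax(logits):
    """Eq. (1): phi(input_i) = exp(input_i) / sum_j exp(input_j), j = 1..N."""
    shift = max(logits)  # subtracting the largest logit avoids overflow in exp
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits):
    """Return the score vector, the maximum probability p, and the predicted class."""
    scores = softmax(logits)
    p = max(scores)
    return scores, p, CLASSES[scores.index(p)]


# Hypothetical logits for one MRI slice (illustrative values, not from the paper).
scores, p, label = predict([0.5, 2.0, -1.0, 0.1])
print("score vector:", [round(s, 3) for s in scores])
print("p =", round(p, 3), "->", label)
```

Subtracting the largest logit does not change the result of Eq. (1), because the common factor cancels between numerator and denominator; it only keeps `math.exp` in a safe range.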

3.1.1. Classification of Brain Tumours Based on the Score Using the Quantum Model

The growing demand for processing power is another issue: computationally costly applications include simulating large quantum systems, such as molecules, and solving large linear systems. This is a major motivation for quantum computing, a computational approach that uses the properties of quantum particles to process data. For certain problems, quantum computers can offer an exponential speedup. Although quantum computers have advanced quickly in recent years, theoretical and practical difficulties still stand in the way of a large-scale computing device. Because of noise, current quantum computers face severe constraints, such as a limited number of qubits and gate operations. Variational quantum algorithms (VQAs) [57] have emerged as one of the most promising approaches to working within these constraints. They have been applied in a variety of disciplines, including object recognition, as quantum machine learning algorithms. This study presents a brain tumor classification methodology based on the VQA-based data reuploading classifier (DRC).

Like conventional computers, quantum computers use gate operations to control and modify the state of a qubit. Quantum gates are described mathematically by unitary matrices, and the transition from one quantum state to another via quantum gates is called unitary evolution. The physical realization of these gates depends on the hardware implementation of the qubit, and each qubit technology has its own method for generating quantum gates. Quantum gates may act on one, two, or more qubits. A quantum algorithm is run as a quantum circuit, that is, an array of quantum gates acting on one or more qubits, and the state produced after a gate acts on a qubit is again a qubit state. For example, applying the Hadamard gate (H) to a qubit in the state |0〉 produces the following superposition state:

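$$H|0\rangle = \frac{1}{\sqrt{2}}\begin{pmatrix}1 & 1\\ 1 & -1\end{pmatrix}\begin{pmatrix}1\\ 0\end{pmatrix} = \frac{|0\rangle + |1\rangle}{\sqrt{2}} \tag{2}$$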

In the schematic symbol of the CNOT gate, the control qubit is shown by a black dot, and the target qubit is shown by a circled plus (⊕). If the control qubit is in the |1〉 state, the CNOT gate flips the target qubit from |0〉 to |1〉 and vice versa; if the control qubit is in the |0〉 state, the target is left unchanged. Parametric quantum gates act according to parameters set on the gates. The RX, RY, and RZ gates are parametric, with the following matrix forms:

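$$R_X(\phi) = \begin{pmatrix}\cos\frac{\phi}{2} & -i\sin\frac{\phi}{2}\\ -i\sin\frac{\phi}{2} & \cos\frac{\phi}{2}\end{pmatrix}, \qquad R_Y(\phi) = \begin{pmatrix}\cos\frac{\phi}{2} & -\sin\frac{\phi}{2}\\ \sin\frac{\phi}{2} & \cos\frac{\phi}{2}\end{pmatrix} \tag{3}$$

and, with e denoting Euler's number and i the imaginary unit,

$$R_Z(\phi) = \begin{pmatrix}e^{-i\phi/2} & 0\\ 0 & e^{i\phi/2}\end{pmatrix} \tag{4}$$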

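The R_X(ϕ), R_Y(ϕ), and R_Z(ϕ) gates rotate the qubit state vector about the x-, y-, and z-axes of the Bloch sphere, respectively. By combining these parametric gates, the qubit vector can be rotated about all three axes with independently chosen angles. Consider the general rotation gate R(ϕ, θ, ω), whose matrix depends on three parameters:

$$R(\phi,\theta,\omega) = R_Z(\omega)\,R_Y(\theta)\,R_Z(\phi) = \begin{pmatrix} e^{-i(\phi+\omega)/2}\cos\frac{\theta}{2} & -e^{i(\phi-\omega)/2}\sin\frac{\theta}{2}\\ e^{-i(\phi-\omega)/2}\sin\frac{\theta}{2} & e^{i(\phi+\omega)/2}\cos\frac{\theta}{2}\end{pmatrix} \tag{5}$$

Here, the angles ϕ, θ, and ω are the trainable weights of the gate.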
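As a sanity check on the rotation gates and the general R gate, the short plain-Python sketch below builds R_Y and R_Z as 2 × 2 complex matrices, multiplies them in the order R_Z(ω)R_Y(θ)R_Z(ϕ), and compares the product with the closed-form matrix. The angle values are hypothetical; any real angles should give agreement up to floating-point rounding. This is an illustrative check, not code from the paper.

```python
import cmath
import math


def ry(theta):
    """R_Y(theta): rotation about the y-axis."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]


def rz(phi):
    """R_Z(phi): rotation about the z-axis."""
    return [[cmath.exp(-0.5j * phi), 0], [0, cmath.exp(0.5j * phi)]]


def matmul(a, b):
    """Product of two 2 x 2 complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def rot_product(phi, theta, omega):
    """R(phi, theta, omega) = R_Z(omega) R_Y(theta) R_Z(phi); R_Z(phi) acts on the qubit first."""
    return matmul(rz(omega), matmul(ry(theta), rz(phi)))


def rot_closed_form(phi, theta, omega):
    """Closed-form matrix of the general rotation gate R(phi, theta, omega)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [
        [cmath.exp(-0.5j * (phi + omega)) * c, -cmath.exp(0.5j * (phi - omega)) * s],
        [cmath.exp(-0.5j * (phi - omega)) * s, cmath.exp(0.5j * (phi + omega)) * c],
    ]


# Hypothetical trainable weights (phi, theta, omega) of a single R gate.
weights = (0.3, 1.1, -0.7)
a, b = rot_product(*weights), rot_closed_form(*weights)
max_err = max(abs(a[i][j] - b[i][j]) for i in range(2) for j in range(2))
print(f"max |product - closed form| = {max_err:.1e}")
```

Because every factor is unitary, the product is unitary as well, which is what allows the trained gate to be used as a valid step of unitary evolution.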

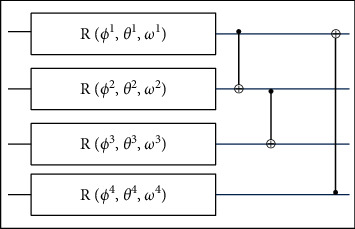

The R gate rotates a qubit about the z-axis, then the y-axis, and finally the z-axis again in the Bloch-sphere representation. The softmax layer of the inceptionv3 model generates the score vector, which is then given to the variational quantum model. The proposed model is trained with a four-qubit structure, which is illustrated schematically in Figure 2.

Figure 2.

Structure of four-qubit method.

This method employs four qubits, each acted on by an R gate, together with a succession of CNOT gates. Since each R gate has three parameters, this version of the ansatz has 12 parameters in total. As in an artificial neural network (ANN), the parameters are tuned so that the circuit's unitary evolution produces the intended outputs. The model is trained with the parameters listed in Table 1.
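To make the four-qubit ansatz concrete, the following plain-Python state-vector sketch applies, in each of six layers, a data-encoding rotation and one trainable R gate (three angles) per qubit, followed by a chain of CNOT gates, and then reads out ⟨Z⟩ on each qubit. Several details are assumptions rather than details taken from the paper: the score vector is re-uploaded as R_Y(π·s_q) on qubit q before every trainable gate (in the spirit of a data re-uploading classifier), the CNOTs form a ring 0→1→2→3→0, and the predicted class is the qubit with the largest ⟨Z⟩. If each of the six layers carries its own 12 parameters, the circuit has 6 × 12 = 72 trainable angles.

```python
import cmath
import math
import random

N_QUBITS = 4  # Table 1
N_LAYERS = 6  # Table 1


def ry(theta):
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]


def rot(phi, theta, omega):
    """Closed-form R(phi, theta, omega) = R_Z(omega) R_Y(theta) R_Z(phi)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [
        [cmath.exp(-0.5j * (phi + omega)) * c, -cmath.exp(0.5j * (phi - omega)) * s],
        [cmath.exp(-0.5j * (phi - omega)) * s, cmath.exp(0.5j * (phi + omega)) * c],
    ]


def mask(q):
    """Bit mask of qubit q in a basis-state index (qubit 0 is the most significant bit)."""
    return 1 << (N_QUBITS - 1 - q)


def apply_1q(state, gate, q):
    """Apply a 2 x 2 gate to qubit q of the state vector."""
    new, bit = state[:], mask(q)
    for idx in range(len(state)):
        if not idx & bit:  # visit each (|..0..>, |..1..>) amplitude pair once
            a0, a1 = state[idx], state[idx | bit]
            new[idx] = gate[0][0] * a0 + gate[0][1] * a1
            new[idx | bit] = gate[1][0] * a0 + gate[1][1] * a1
    return new


def apply_cnot(state, control, target):
    """Flip the target qubit on every basis state whose control qubit is |1>."""
    new, cbit, tbit = state[:], mask(control), mask(target)
    for idx in range(len(state)):
        if idx & cbit:
            new[idx] = state[idx ^ tbit]
    return new


def z_expectation(state, q):
    """<Z_q> = P(qubit q = 0) - P(qubit q = 1)."""
    return sum(abs(a) ** 2 * (-1 if idx & mask(q) else 1) for idx, a in enumerate(state))


def circuit(scores, weights):
    """weights[l][q] = (phi, theta, omega) of the R gate on qubit q in layer l."""
    state = [0j] * (2 ** N_QUBITS)
    state[0] = 1 + 0j  # start in |0000>
    for layer in weights:
        for q in range(N_QUBITS):
            state = apply_1q(state, ry(math.pi * scores[q]), q)  # assumed data re-uploading
            state = apply_1q(state, rot(*layer[q]), q)           # trainable R gate
        for q in range(N_QUBITS):
            state = apply_cnot(state, q, (q + 1) % N_QUBITS)     # assumed CNOT ring
    return [z_expectation(state, q) for q in range(N_QUBITS)]


random.seed(0)
weights = [[tuple(random.uniform(0, 2 * math.pi) for _ in range(3)) for _ in range(N_QUBITS)]
           for _ in range(N_LAYERS)]
n_params = sum(len(gate) for layer in weights for gate in layer)
outputs = circuit([0.16, 0.70, 0.04, 0.10], weights)  # hypothetical softmax score vector
print("trainable parameters:", n_params)
print("<Z> per qubit:", [round(z, 3) for z in outputs])
print("predicted class index:", outputs.index(max(outputs)))
```

In a full training loop, the 72 angles would be updated by the optimizer from a loss on these outputs; the sketch only shows the forward pass.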

Table 1.

Learning parameters of the QNN model.

| Parameter | Value |
|---|---|
| Number of quantum bits (qubits) | 4 |
| Total layers of the quantum neural network (QNN) | 6 |
| Learning rate | 0.01 |
| Optimizer | RMSProp |
| Batch size | 26 |
| Maximum number of iterations | 50 |

Table 1 lists the QNN learning parameters: four qubits, six QNN layers, a learning rate of 0.01 with the RMSProp optimizer, and a batch size of 26, trained for 50 epochs.
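Table 1 fixes RMSProp with a learning rate of 0.01. For readers unfamiliar with the solver, the sketch below shows the standard RMSProp update on a toy quadratic loss in plain Python. The decay factor 0.9 and ε = 1e-8 are assumed defaults (the paper does not report them), and the toy loss merely stands in for the QNN's classification loss, with one update per step rather than per mini-batch of 26.

```python
import math

LEARNING_RATE = 0.01  # Table 1
EPOCHS = 50           # Table 1 (maximum number of iterations)
DECAY = 0.9           # assumed squared-gradient decay factor (not reported in the paper)
EPS = 1e-8            # assumed numerical-stability constant


def rmsprop_step(params, grads, cache):
    """One RMSProp update.

    cache_k <- DECAY * cache_k + (1 - DECAY) * g_k**2
    param_k <- param_k - LEARNING_RATE * g_k / (sqrt(cache_k) + EPS)
    """
    cache = [DECAY * v + (1 - DECAY) * g * g for v, g in zip(cache, grads)]
    params = [p - LEARNING_RATE * g / (math.sqrt(v) + EPS) for p, g, v in zip(params, grads, cache)]
    return params, cache


def toy_loss(params):
    """Stand-in loss sum(x_k^2); its gradient is 2 * x_k."""
    return sum(x * x for x in params)


params = [1.0, -2.0]  # hypothetical initial weights
cache = [0.0] * len(params)
start = toy_loss(params)
for _ in range(EPOCHS):
    grads = [2 * x for x in params]
    params, cache = rmsprop_step(params, grads, cache)
print(f"loss: {start:.3f} -> {toy_loss(params):.3f}")
```

Dividing by the running root-mean-square of the gradient gives each parameter its own effective step size, which is why RMSProp tends to be less sensitive than plain gradient descent to the scale of individual gradients.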

3.2. Proposed Seg-Network

In this study, an improved Seg-Network model is proposed that consists of 59 layers, including 16 convolutional, 16 ReLU, 16 batch-normalization, and 4 max-pooling layers and a softmax layer. SegNet is made up of two subnetworks: an encoder and a decoder. The depth of the network determines how many times the input is downsampled by the encoder and upsampled by the decoder. The encoder downsamples the resolution of the input image by a factor of 2^D, where D denotes the encoder depth, and the decoder upsamples its output by the same factor of 2^D. The number of output channels of each SegNet encoder stage is given as a positive integer or vector, and the SegNet layers set the decoder's output channel counts to match the encoder. The bias terms of the convolutional layers are set to zero. The segmentation model's learning parameters are provided in Table 2.
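The effect of the encoder depth D on spatial resolution can be checked with a few lines of arithmetic. The sketch below assumes that each encoder stage halves the height and width (2 × 2 max pooling with stride 2, as in SegNet), so a 240 × 240 input with D = 4 (Table 2) is reduced by 2^4 = 16 to 15 × 15 before the decoder restores it. The function name `encoder_sizes` is illustrative and not part of any toolbox.

```python
def encoder_sizes(input_size, depth):
    """Spatial size (H, W) after each encoder stage, assuming every stage halves H and W."""
    sizes = [input_size]
    for _ in range(depth):
        h, w = sizes[-1]
        if h % 2 or w % 2:
            raise ValueError(f"{h}x{w} cannot be halved exactly; use an input divisible by 2**depth")
        sizes.append((h // 2, w // 2))
    return sizes


DEPTH = 4  # encoder depth from Table 2
encoder = encoder_sizes((240, 240), DEPTH)
decoder = list(reversed(encoder))  # the decoder upsamples back through the same sizes
print("encoder:", encoder)
print("decoder:", decoder)
print("total downsampling factor:", 240 // encoder[-1][0])  # equals 2**DEPTH
```

The divisibility check reflects a practical constraint of this design: the input height and width must be multiples of 2^D for the decoder output to match the input size exactly.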

Table 2.

Initial segmentation learning parameters.

| Parameter | Value |
|---|---|
| Input size | 240 × 240 (2020-BRATS); 240 × 240 (TCGA); 240 × 240 (local data) |
| Optimizer | SGDM |
| Number of classes | 2 |
| Encoder depth | 4 |
| Learning rate | 1e−3 |
| Training epochs | 100 |

4. Results and Discussion

The proposed method was tested on three distinct datasets in this study: The Cancer Genome Atlas (TCGA), BRATS 2020, and locally gathered images.

The TCGA data contain 101 cases with precontrast, postcontrast, and FLAIR sequences. In these data, the postcontrast sequence is missing in nine cases and the precontrast sequence in six cases [58, 59]. The binary masks of this dataset are single-channel. The suggested technique is also tested on images privately collected from a local hospital. The ground-truth masks for these MRI images were manually generated by professional radiologists; the collection includes 800 tumor/nontumor slices of 20 patients with dimensions of 320 × 320 × 600, where 320 × 320 is the image size and 600 is the number of slices per patient.

The 2020-BRATS Challenge dataset incorporates MRI data from 259 patients, with 76 low-grade glioma (LGG) and 76 high-grade glioma (HGG) cases [60–62]. Every patient has 155 MRI slices, giving a volume of 240 × 240 × 155.

The multiclass brain tumor classification dataset was downloaded from the Kaggle website. It contains four classes (glioma, meningioma, no tumor, and pituitary tumor) and is divided into two folders, one for training and one for testing. The training folder contains 826 glioma, 822 meningioma, 395 no tumor, and 827 pituitary tumor slices. The testing slices of glioma, meningioma, no tumor, and pituitary tumor are 100, 115, and 74, respectively [63].

The proposed method was implemented in MATLAB R2021a on the Windows 10 operating system. Its performance was evaluated in two experiments: the first assessed the classification model, and the second assessed the effectiveness of the segmentation approach.

4.1. Experiment#1: Classification of Brain Tumours

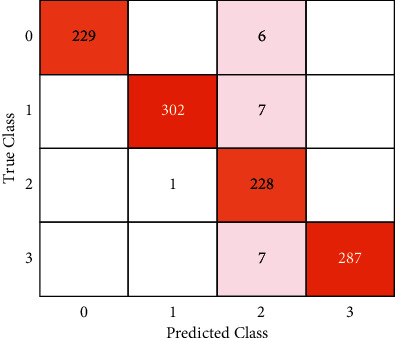

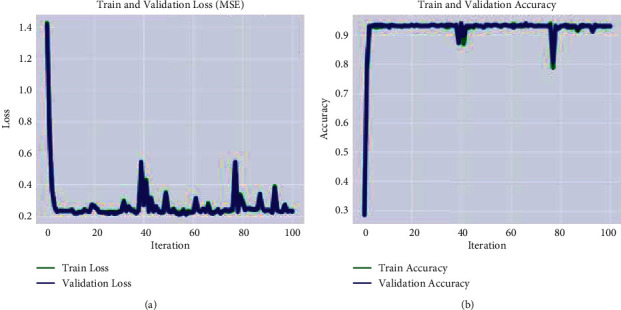

In this experiment, the classification data are split into two parts in a 70:30 ratio. The computed classification results on the benchmark datasets are given in Table 3, and the classification outcomes are also plotted as confusion matrices in Figure 3.
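A 70:30 split can be drawn per class so that each class keeps the same proportion in both parts. The paper does not say whether its split was stratified or how it was seeded, so the following plain-Python sketch is one possible implementation under those assumptions. The label counts reuse the Kaggle training-folder figures quoted earlier purely as an example, and `stratified_split` is a hypothetical helper.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_frac=0.7, seed=0):
    """Return (train_idx, test_idx), splitting each class separately in a train_frac ratio."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, test = [], []
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        cut = round(train_frac * len(idxs))
        train += idxs[:cut]
        test += idxs[cut:]
    return train, test


# Example labels built from the Kaggle training-folder counts.
counts = {"glioma": 826, "meningioma": 822, "no tumor": 395, "pituitary tumor": 827}
labels = [name for name, n in counts.items() for _ in range(n)]
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

Splitting per class matters here because the no tumor class is roughly half the size of the others; a purely random split could leave it under-represented in the test part.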

Table 3.

Multiclass classification results on the Kaggle dataset.

| Class | Acc. | Precision | Recall | F1 score |
|---|---|---|---|---|
| No tumor | 99.44% | 0.97 | 1.0 | 0.99 |
| Meningioma | 99.25% | 0.98 | 1.0 | 0.99 |
| Pituitary | 98.03% | 1.0 | 0.92 | 0.96 |
| Glioma | 99.34% | 0.98 | 1.0 | 0.99 |

Figure 3.

Confusion matrix.

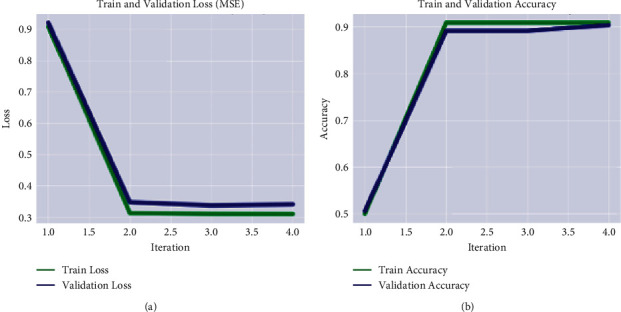

Figure 3 shows the correct and incorrect predictions for each class, where labels 0, 1, 2, and 3 denote no tumor, meningioma, pituitary tumor, and glioma, respectively. The training and validation loss and accuracy curves are plotted in Figure 4.

Figure 4.

Graphical representation of the model training on the Kaggle dataset. (a) MSE. (b) accuracy.

Table 3 shows the computed recognition accuracy: 99.44% for no tumor, 99.25% for meningioma, 98.03% for pituitary tumor, and 99.34% for glioma. The low- versus high-grade glioma (LGG/HGG) classification results on BRATS-2020 are given in Table 4, and the corresponding training and validation loss and accuracy curves are shown in Figure 5.
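Per-class accuracy, precision, recall, and F1 score, as reported in Tables 3–5, can all be derived from a confusion matrix by treating each class one-vs-rest. The paper does not spell out its formulas, so the sketch below assumes this common convention; the 4 × 4 matrix is hypothetical and uses the label order of Figure 3. One-vs-rest accuracy also counts true negatives, which is why a class's accuracy can be higher than its recall.

```python
def per_class_metrics(cm):
    """One-vs-rest metrics from a square confusion matrix cm[true][predicted]."""
    n, total = len(cm), sum(map(sum, cm))
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # class k predicted as another class
        fp = sum(cm[i][k] for i in range(n)) - tp  # other classes predicted as k
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        metrics.append({"accuracy": (tp + tn) / total, "precision": precision,
                        "recall": recall, "f1": f1})
    return metrics


LABELS = ["no tumor", "meningioma", "pituitary tumor", "glioma"]  # labels 0-3 in Figure 3
# Hypothetical confusion matrix (rows: true class, columns: predicted class).
cm = [
    [50, 0, 0, 0],
    [0, 48, 1, 1],
    [1, 2, 45, 2],
    [0, 0, 0, 50],
]
for name, m in zip(LABELS, per_class_metrics(cm)):
    print(f"{name:16s} acc={m['accuracy']:.4f} P={m['precision']:.2f} "
          f"R={m['recall']:.2f} F1={m['f1']:.2f}")
```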

Table 4.

HGG/LGG classification results on the 2020-BRATS Challenge dataset.

| Class | Acc. | Precision | Recall | F1 score |
|---|---|---|---|---|
| HGG | 90.91% | 0.87 | 0.94 | 0.91 |
| LGG | 90.91% | 0.95 | 0.88 | 0.91 |

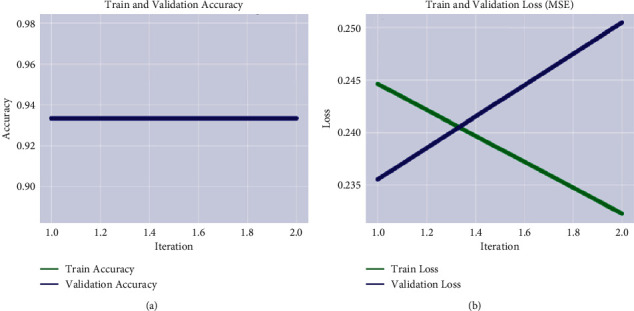

Figure 5.

Graphical representation of model training on the 2020-BRATS Challenge dataset. (a) MSE. (b) accuracy.

Table 4 shows the classification outcomes on the BRATS-2020 Challenge dataset, where the proposed method achieved 90.91% accuracy. The classification results on locally collected images from POF Hospital are shown in Table 5, and the training and validation progress is plotted in Figure 6.

Table 5.

Normal/abnormal classification results on locally collected images.

| Class | Acc. | Precision | Recall | F1 score |
|---|---|---|---|---|
| Tumor | 93.33% | 0.93 | 0.93 | 0.93 |
| Nontumor | 93.33% | 0.93 | 0.93 | 0.93 |

Figure 6.

Graphical representation of model training on locally collected images. (a) MSE. (b) accuracy.

Table 5 shows that the classification accuracy on locally collected images is 93.33%. The proposed classification results are compared with existing works in Table 6.

Table 6.

Comparison of the proposed approach to existing methodologies.

A binary grading classifier used for HGG/LGG classification on the BRATS-2020 dataset provides an accuracy of 84.1% [64]. Features extracted from an RNN model have been used to classify tumor types such as pituitary tumor [65] and meningioma, with an accuracy of 98.8% [66]. A deep network developed for brain tumor classification on the Kaggle dataset provides an accuracy of 97.87% [67]. A CNN model trained with tuned hyperparameters on the Kaggle dataset gives an accuracy of 96.0% [68]. GRU, LSTM, RNN, and CNN models have been used as base learners with a min-max fuzzy classifier, providing an accuracy of 95.24% [69].

The proposed approach, based on convolutional and quantum neural networks, provides an accuracy of 98.2% on the Kaggle dataset and 99.7% on the BRATS-2020 Challenge dataset.

In the future, this work might be extended to volumetric analysis of brain tumors, which would help radiologists in the diagnostic process.

4.2. Experiment#2: Segmentation of the Brain Tumor

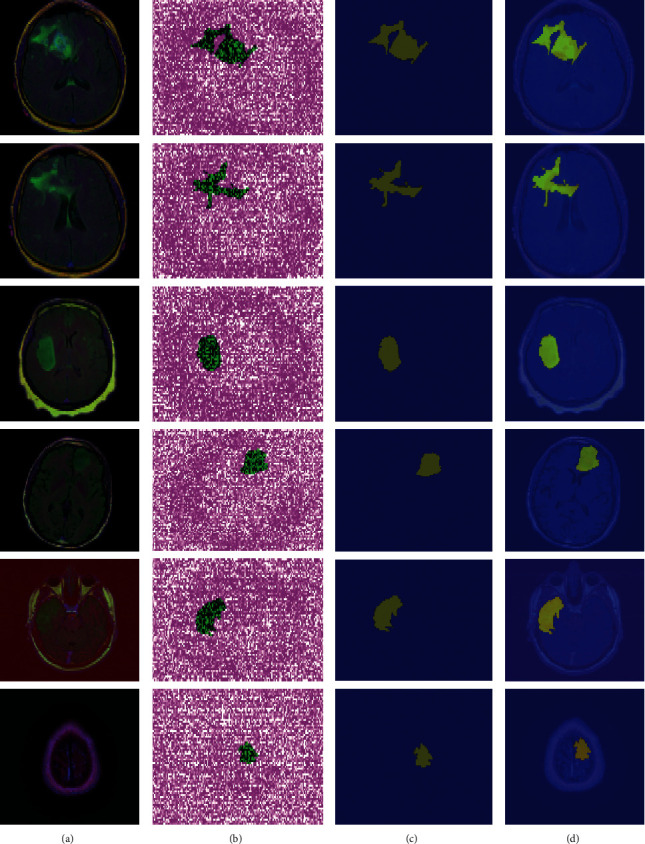

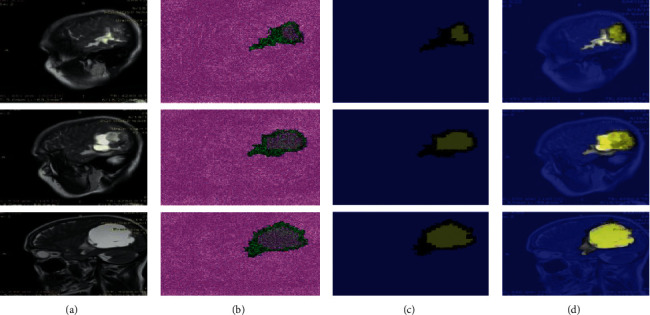

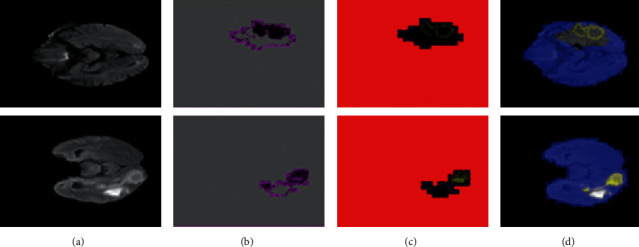

In this experiment, Table 7 shows the results of testing the suggested segmentation algorithm on benchmark datasets. Figures 7–9 illustrate the results of the proposed segmentation approach.

Table 7.

Proposed tumour segmentation results.

| Data | Global acc. | Mean acc. | Mean IoU | Weighted IoU | Mean BF score |
|---|---|---|---|---|---|
| Kaggle | 0.98201 | 0.93235 | 0.94402 | 0.97397 | 0.98358 |
| Local collected | 0.99975 | 0.99975 | 0.99988 | 0.99975 | 0.99783 |
| BRATS-2020 | 0.99781 | 0.85378 | 0.93876 | 0.99757 | 0.83913 |

Figure 7.

Proposed method segmentation outcomes on the Kaggle dataset (a). Original MRI slices (b). Three-dimensional segmentation (c). The segmented region is mapped on input slices (d).

Figure 8.

Proposed method segmentation outcomes on local collected images (a). Original MRI slices (b). Three-dimensional segmentation (c). The segmented region is mapped on input slices (d).

Figure 9.

Proposed method segmentation outcomes on Challenge BRATS-2020 (a). Original MRI slices (b). Three-dimensional segmentation (c). The segmented region is mapped on input slices (d).

Table 7 shows the segmentation outcomes: the proposed approach achieved a global accuracy of 0.982 on the Kaggle dataset, 0.997 on the BRATS-2020 Challenge dataset, and 0.999 on images collected from local hospitals. The obtained segmentation results are compared with recent techniques in Table 8.
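The first four columns of Table 7 can be computed from a pixel-level confusion matrix. The sketch below uses the standard definitions (global accuracy = correctly labeled pixels / all pixels; mean accuracy = mean per-class recall; IoU_k = TP/(TP + FP + FN); weighted IoU = IoU averaged with weights proportional to each class's ground-truth pixel count), which are assumed, not confirmed, to match the MATLAB evaluation used in the paper; averaging per image instead of over the whole dataset can give different numbers. The boundary F1 (BF) score requires contour matching and is omitted. The pixel counts are hypothetical.

```python
def segmentation_metrics(cm):
    """Dataset-level metrics from a pixel confusion matrix cm[true][predicted]."""
    n = len(cm)
    total = sum(map(sum, cm))
    true_counts = [sum(row) for row in cm]  # ground-truth pixels per class (TP + FN)
    pred_counts = [sum(cm[i][k] for i in range(n)) for k in range(n)]  # TP + FP
    tp = [cm[k][k] for k in range(n)]
    class_acc = [tp[k] / true_counts[k] for k in range(n)]
    iou = [tp[k] / (true_counts[k] + pred_counts[k] - tp[k]) for k in range(n)]  # TP/(TP+FP+FN)
    return {
        "global_accuracy": sum(tp) / total,
        "mean_accuracy": sum(class_acc) / n,
        "mean_iou": sum(iou) / n,
        "weighted_iou": sum(true_counts[k] * iou[k] for k in range(n)) / total,
    }


# Hypothetical pixel counts for the two classes of Table 2 (background, tumor).
cm = [
    [55_000, 300],
    [200, 2_100],
]
for name, value in segmentation_metrics(cm).items():
    print(f"{name}: {value:.5f}")
```

Because the background class dominates MRI slices, global accuracy and weighted IoU are pulled toward the background score, while mean accuracy and mean IoU give the small tumor class equal weight.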

Table 8.

Comparison of the simulated improved Seg-network results to the existing methods.

The simulation results of the proposed method have been compared with those of eight recent existing works (Table 8). Noise is removed from MRI slices with an anisotropic filter, and tumor/healthy slices are classified using SVM, which provides 96.04% prediction scores [70]. A nonlocal means filter is used for noise reduction, and tumor pixels are segmented by region growing; tumor/normal slices are then classified by a neural network classifier on the Kaggle dataset with 97.3% prediction scores [78]. Morphological operations with a Gaussian filter are applied to MRI slices to segment brain tumors on the Kaggle dataset, giving 93% prediction scores [72]. A fine-tuned transfer learning network is employed for tumor classification on Kaggle data, providing 92.67% prediction scores [73]. Deep features are extracted from a pretrained ResNet50 in which the last five layers are replaced with eight new layers, giving 97.2% prediction scores [74]. The ME-Net classification model consists of multiple encoder and decoder layers for classifying tumor slices and provides prediction scores of 70.20% [75]. A generic three-dimensional network designed for classifying MRI slices provides 88.90% prediction scores on the BRATS-2020 dataset [76]. The skull is removed from MRI slices by region-based approaches, adaptive FTE clustering is used to segment tumor pixels, robust discrete wavelet, HOG, and intensity features are extracted from each MRI slice, and healthy/unhealthy images are classified using DENN, which provides prediction scores of 97% [79].

The experimental analysis shows that the proposed approach performed better than the compared methods. In the future, this work will be extended to classify tumor substructures, such as the whole tumor, enhancing tumor, and nonenhancing tumor, to analyze tumor severity.

5. Conclusion

Because of the complicated nature of the lesion area, detecting malignancy grades is a difficult process. This study presents an improved approach for classifying and segmenting brain tumors at an early stage, with the aim of improving patient survival rates. Features are extracted using the inceptionv3 model, and the score vector obtained from its softmax layer is passed to the variational quantum classifier for brain tumor classification. The classification method is evaluated on two publicly available datasets and one local dataset. On the Kaggle dataset, it achieved accuracies of 99.44% on no tumor, 99.25% on meningioma, 98.03% on pituitary tumor, and 99.34% on glioma. In tumor/nontumor classification, the proposed method achieved 93.33% accuracy on locally collected images. On the 2020-BRATS Challenge, it attained an accuracy of 90.91% on HGG and LGG slices. After classification, a modified Seg-Network is introduced to analyze the total infected region of the tumor; it provides global accuracies of 0.982 on the Kaggle dataset, 0.999 on privately collected images, and 0.997 on the 2020-BRATS Challenge. The experimental study shows that the proposed model outperformed the most recent published work in this field. In the future, this research may help improve the capacity of the health sector in general, and of local hospitals in particular, for early diagnosis and thus higher survival rates.

Acknowledgments

This research was supported by the Korea Institute for Advancement of Technology (KIAT) grant funded by the Korea Government (MOTIE) (P0012724, The Competency Development Program for Industry Specialist) and Soonchunhyang University Research Fund.

Data Availability

The multiclass brain tumor classification dataset was downloaded from the Kaggle website. It contains four classes: glioma, meningioma, no tumor, and pituitary tumor (Navoneel Chakrabarty, Swati Kanchan, https://github.com/sartajbhuvaji/brain-tumor-classification-dataset, accessed on 6 June 2021). The 2020-BRATS Challenge dataset incorporates MRI data from 259 patients, with 76 cases of low and high glioma grades. Every patient has 155 MRI slices with a dimension of 240 × 240 × 155 (B. H. Menze, A. Jakab, S. Bauer, J. Kalpathy-Cramer, K. Farahani, J. Kirby, et al., “The multimodal brain tumor image segmentation benchmark (BRATS),” IEEE Transactions on Medical Imaging, vol. 34, pp. 1993–2024, 2014; S. Bakas, H. Akbari, A. Sotiras, M. Bilello, M. Rozycki, J. S. Kirby, et al., “Advancing the cancer genome atlas glioma MRI collections with expert segmentation labels and radiomic features,” Scientific Data, vol. 4, p. 170117, 2017; M. Kistler, S. Bonaretti, M. Pfahrer, R. Niklaus, and P. Büchler, “The virtual skeleton database: an open access repository for biomedical research and collaboration,” Journal of Medical Internet Research, vol. 15, p. e245, 2013).

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- 1.Hanif F., Muzaffar K., Perveen K., Malhi S. M., Simjee ShU. Glioblastoma multiforme: a review of its epidemiology and pathogenesis through clinical presentation and treatment. Asian Pacific Journal of Cancer Prevention: Asian Pacific Journal of Cancer Prevention . 2017;18(1):3–9. doi: 10.22034/APJCP.2017.18.1.3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Howland R. H. Managing common side effects of SSRIs. Journal of Psychosocial Nursing and Mental Health Services . 2007;45(2):15–18. doi: 10.3928/02793695-20070201-04. [DOI] [PubMed] [Google Scholar]

- 3.Munoz M., Garau M., Musetti C., Alonso R., Barrios E. The national cancer registry of urgency: a model for sustainable cancer registration in Latin-AMERICA: 718. Asia-Pacific Journal of Clinical Oncology . 2014;10 [Google Scholar]

- 4.Cabezas M., Oliver A., Lladó X., Freixenet J., Bach Cuadra M. A review of atlas-based segmentation for magnetic resonance brain images. Computer Methods and Programs in Biomedicine . 2011;104(3):e158–e177. doi: 10.1016/j.cmpb.2011.07.015. [DOI] [PubMed] [Google Scholar]

- 5.Clark W. H., Jr, Elder D. E., Guerry D., Epstein M. N., Greene M. H., Van Horn M. A study of tumor progression: the precursor lesions of superficial spreading and nodular melanoma. Human Pathology . 1984;15(12):1147–1165. doi: 10.1016/s0046-8177(84)80310-x. [DOI] [PubMed] [Google Scholar]

- 6.Gault J., Sarin H., Awadallah N. A., Shenkar R., Awad I. A. Pathobiology of human cerebrovascular malformations: basic mechanisms and clinical relevance. Neurosurgery . 2004;55(1):1–7. [PubMed] [Google Scholar]

- 7.Roth C. G., Marzio D. H.-D., Guglielmo F. F. Contributions of magnetic resonance imaging to gastroenterological practice: MRIs for GIs. Digestive Diseases and Sciences . 2018;63(5):1102–1122. doi: 10.1007/s10620-018-4991-x. [DOI] [PubMed] [Google Scholar]

- 8.Malathi M., Sinthia P. Brain tumour segmentation using convolutional neural network with tensor flow. Asian Pacific Journal of Cancer Prevention . 2019;20(7):2095–2101. doi: 10.31557/apjcp.2019.20.7.2095. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Bi W. L., Hosny A., Schabath M. B., et al. Artificial intelligence in cancer imaging: clinical challenges and applications. Ca - a Cancer Journal for Clinicians . 2019;69(2):127–157. doi: 10.3322/caac.21552. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Jayachandran A., Dhanasekaran R. Severity analysis of brain tumor in MRI images using modified multi-texton structure descriptor and kernel-SVM. Arabian Journal for Science and Engineering. 2014;39(10):7073–7086. doi: 10.1007/s13369-014-1334-x.

- 11. Torrents-Barrena J., Piella G., Masoller N., et al. Segmentation and classification in MRI and US fetal imaging: recent trends and future prospects. Medical Image Analysis. 2019;51:61–88. doi: 10.1016/j.media.2018.10.003.

- 12. Zoccatelli G., Alessandrini F., Beltramello A., Talacchi A. Advanced magnetic resonance imaging techniques in brain tumours surgical planning. Journal of Biomedical Science and Engineering. 2013;6(3):403–417. doi: 10.4236/jbise.2013.63a051.

- 13. Verbeek J., Spelten E., Kammeijer M., Sprangers M. Return to work of cancer survivors: a prospective cohort study into the quality of rehabilitation by occupational physicians. Occupational and Environmental Medicine. 2003;60(5):352–357. doi: 10.1136/oem.60.5.352.

- 14. Hussain L., Saeed S., Awan I. A., Idris A., Nadeem M. S. A., Chaudhry Q.-u.-A. Detecting brain tumor using machines learning techniques based on different features extracting strategies. Current Medical Imaging (formerly Current Medical Imaging Reviews). 2019;15(6):595–606. doi: 10.2174/1573405614666180718123533.

- 15. Amin J., Sharif M., Yasmin M., Fernandes S. L. A distinctive approach in brain tumor detection and classification using MRI. Pattern Recognition Letters. 2020;139:118–127. doi: 10.1016/j.patrec.2017.10.036.

- 16. Amin J., Sharif M., Yasmin M., Fernandes S. L. Big data analysis for brain tumor detection: deep convolutional neural networks. Future Generation Computer Systems. 2018;87:290–297. doi: 10.1016/j.future.2018.04.065.

- 17. Saba T., Sameh Mohamed A., El-Affendi M., Amin J., Sharif M. Brain tumor detection using fusion of hand crafted and deep learning features. Cognitive Systems Research. 2020;59:221–230. doi: 10.1016/j.cogsys.2019.09.007.

- 18. Amin J., Sharif M., Gul N., Yasmin M., Shad S. A. Brain tumor classification based on DWT fusion of MRI sequences using convolutional neural network. Pattern Recognition Letters. 2020;129:115–122. doi: 10.1016/j.patrec.2019.11.016.

- 19. Amin J., et al. Brain tumor detection by using stacked autoencoders in deep learning. Journal of Medical Systems. 2020;44(2):1–12. doi: 10.1007/s10916-019-1483-2.

- 20. Amin J., Sharif M., Yasmin M., Saba T., Raza M. Use of machine intelligence to conduct analysis of human brain data for detection of abnormalities in its cognitive functions. Multimedia Tools and Applications. 2020;79(15):10955–10973. doi: 10.1007/s11042-019-7324-y.

- 21. Darwish A., Hassanien A. E., Das S. A survey of swarm and evolutionary computing approaches for deep learning. Artificial Intelligence Review. 2020;53(3):1767–1812. doi: 10.1007/s10462-019-09719-2.

- 22. Sarvamangala D. R., Kulkarni R. V. Convolutional neural networks in medical image understanding: a survey. Evolutionary Intelligence. 2021;15(1):1–22. doi: 10.1007/s12065-020-00540-3.

- 23. Chahal P. K., Pandey S., Goel S. A survey on brain tumor detection techniques for MR images. Multimedia Tools and Applications. 2020;79(29-30):21771–21814. doi: 10.1007/s11042-020-08898-3.

- 24. Shanthakumar P., Thirumurugan P., Lavanya D. N. A survey on soft computing based brain tumor detection techniques using MR images. Journal of Seybold Report. ISSN 1533-9211.

- 25. Chowdhary C. L., Acharjya D. P. A hybrid scheme for breast cancer detection using intuitionistic fuzzy rough set technique. International Journal of Healthcare Information Systems and Informatics. 2016;11(2):38–61. doi: 10.4018/ijhisi.2016040103.

- 26. Chowdhary C. L., Acharjya D. Breast cancer detection using intuitionistic fuzzy histogram hyperbolization and possibilitic fuzzy C-mean clustering algorithms with texture feature based classification on mammography images. Proceedings of the International Conference on Advances in Information Communication Technology & Computing; August 2016; New York, NY, United States. pp. 1–6.

- 27. Acharjya D. P., Chowdhary C. L. Breast cancer detection using hybrid computational intelligence techniques. Advances in Healthcare Information Systems and Administration. 2018:251–280. doi: 10.4018/978-1-5225-5460-8.ch011.

- 28. Chowdhary C. L., Mittal M., Kumaresan P., Pattanaik P. A., Marszalek Z. An efficient segmentation and classification system in medical images using intuitionist possibilistic fuzzy C-mean clustering and fuzzy SVM algorithm. Sensors. 2020;20(14):p. 3903. doi: 10.3390/s20143903.

- 29. Maqsood S., Damasevicius R., Shah F. M. An efficient approach for the detection of brain tumor using fuzzy logic and U-NET CNN classification. Proceedings of the International Conference on Computational Science and Its Applications, 2021; September 2021; Berlin/Heidelberg, Germany. Springer; pp. 105–118.

- 30. Odusami M., Maskeliūnas R., Damaševičius R., Krilavičius T. Analysis of features of Alzheimer’s disease: detection of early stage from functional brain changes in magnetic resonance images using a finetuned ResNet18 network. Diagnostics. 2021;11(6):p. 1071. doi: 10.3390/diagnostics11061071.

- 31. Muzammil S. R., Maqsood S., Haider S., Damaševičius R. CSID: a novel multimodal image fusion algorithm for enhanced clinical diagnosis. Diagnostics. 2020;10(11):p. 904. doi: 10.3390/diagnostics10110904.

- 32. Afshar P., Mohammadi A., Plataniotis K. N. Brain tumor type classification via capsule networks. Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP); October 2018; Athens, Greece. IEEE; pp. 3129–3133.

- 33. Chelghoum R., Ikhlef A., Hameurlaine A., Jacquir S. Transfer learning using convolutional neural network architectures for brain tumor classification from MRI images. Proceedings of the IFIP International Conference on Artificial Intelligence Applications and Innovations; October 2018; Berlin/Heidelberg, Germany. Springer; pp. 189–200.

- 34. Shahzadi I., Tang T. B., Meriadeau F., Quyyum A. CNN-LSTM: cascaded framework for brain tumour classification. Proceedings of the 2018 IEEE-EMBS Conference on Biomedical Engineering and Sciences (IECBES); December 2018; Sarawak, Malaysia. IEEE; pp. 633–637.

- 35. El Abbadi N. K., Kadhim N. E. Brain tumor classification based on singular value decomposition. Brain. 2016;5(8). doi: 10.17148/ijarcce.2016.58116.

- 36. Mohsen H., El-Dahshan E., El-Horbaty E., Salem A. Brain tumor type classification based on support vector machine in magnetic resonance images. Annals of “Dunarea De Jos” University of Galati, Mathematics, Physics, Theoretical Mechanics, Fascicle II, Year IX (XL) 2017. M.Sc. Thesis.

- 37. El-Dahshan E. S. A., Bassiouni M. M. Computational intelligence techniques for human brain MRI classification. International Journal of Imaging Systems and Technology. 2018;28(2):132–148.

- 38. Ismael M. R. Hybrid Model-Statistical Features and Deep Neural Network for Brain Tumor Classification in MRI Images. Dissertations. 3291. 2018.

- 39. Rehman Z. U., Zia M. S., Bojja G. R., Yaqub M., Jinchao F., Arshid K. Texture based localization of a brain tumor from MR-images by using a machine learning approach. Medical Hypotheses. 2020;141:p. 109705. doi: 10.1016/j.mehy.2020.109705.

- 40. Paul J. S., Plassard A. J., Landman B. A., Fabbri D. Deep learning for brain tumor classification. Medical Imaging 2017: Biomedical Applications in Molecular, Structural, and Functional Imaging. Vol. 10137. International Society for Optics and Photonics; 2017. p. 1013710.

- 41. Pinaya W. H., Gadelha A., Doyle O. M., et al. Using deep belief network modelling to characterize differences in brain morphometry in schizophrenia. Scientific Reports. 2016;6(1):38897–38899. doi: 10.1038/srep38897.

- 42. Zhou Y., Li Z., Chen C., Gao M., Xu J. Holistic brain tumor screening and classification based on DenseNet and recurrent neural network. Proceedings of the International MICCAI Brainlesion Workshop; September 2018; Granada, Spain. Springer; pp. 208–217.

- 43. Gautam S. Attempting of brain tumor classification on densenet and recurrent neural network framents. Multidisciplinary International Journal. 5.

- 44. Sasank V. V. S., Venkateswarlu S. Brain tumor classification using modified kernel based softplus extreme learning machine. Multimedia Tools and Applications. 2021;80(9):13513–13534. doi: 10.1007/s11042-020-10423-5.

- 45. Abiwinanda N., Hanif M., Hesaputra S. T., Handayani A., Mengko T. R. Brain tumor classification using convolutional neural network. IFMBE Proceedings. Vol. 2019. Springer; 2018. pp. 183–189.

- 46. Divya S., Suresh L. P., John A. A deep transfer learning framework for multi class brain tumor classification using MRI. Proceedings of the 2020 2nd International Conference on Advances in Computing, Communication Control and Networking (ICACCCN); December 2020; Greater Noida, India. IEEE; pp. 283–290.

- 47. Ayadi W., Elhamzi W., Charfi I., Atri M. Deep CNN for brain tumor classification. Neural Processing Letters. 2021;53(1):671–700. doi: 10.1007/s11063-020-10398-2.

- 48. Amin J., Sharif M., Raza M., Saba T., Sial R., Shad S. A. Brain tumor detection: a long short-term memory (LSTM)-based learning model. Neural Computing & Applications. 2020;32(20):15965–15973. doi: 10.1007/s00521-019-04650-7.

- 49. Sultan H. H., Salem N. M., Al-Atabany W. Multi-classification of brain tumor images using deep neural network. IEEE Access. 2019;7:69215–69225. doi: 10.1109/access.2019.2919122.

- 50. Liu D., Liu Y., Dong L. G-ResNet: improved ResNet for brain tumor classification. Proceedings of the International Conference on Neural Information Processing, 2019; December 2019; Granada, Spain. Springer; pp. 535–545.

- 51. Mohan G., Subashini M. M. MRI based medical image analysis: survey on brain tumor grade classification. Biomedical Signal Processing and Control. 2018;39:139–161. doi: 10.1016/j.bspc.2017.07.007.

- 52. Mazurowski M. A., Buda M., Saha A., Bashir M. R. Deep learning in radiology: an overview of the concepts and a survey of the state of the art with focus on MRI. Journal of Magnetic Resonance Imaging. 2019;49(4):939–954. doi: 10.1002/jmri.26534.

- 53. Deepak S., Ameer P. M. Brain tumor classification using deep CNN features via transfer learning. Computers in Biology and Medicine. 2019;111:p. 103345. doi: 10.1016/j.compbiomed.2019.103345.

- 54. Amin J., Sharif M., Anjum M. A., Raza M., Bukhari S. A. C. Convolutional neural network with batch normalization for glioma and stroke lesion detection using MRI. Cognitive Systems Research. 2020;59:304–311. doi: 10.1016/j.cogsys.2019.10.002.

- 55. Amin J., Sharif M., Raza M., Saba T., Rehman A. Brain tumor classification: feature fusion. Proceedings of the 2019 International Conference on Computer and Information Sciences (ICCIS); April 2019; Sakaka, Saudi Arabia. IEEE; pp. 1–6.

- 56. Sharif M. I., Li J. P., Amin J., Sharif A. An improved framework for brain tumor analysis using MRI based on YOLOv2 and convolutional neural network. Complex & Intelligent Systems. 2021;7:2023–2036.

- 57. Wurtz J., Love P. Classically optimal variational quantum algorithms. 2021. arXiv preprint arXiv:2103.17065v1.

- 58. Buda M., Saha A., Mazurowski M. A. Association of genomic subtypes of lower-grade gliomas with shape features automatically extracted by a deep learning algorithm. Computers in Biology and Medicine. 2019;109:218–225. doi: 10.1016/j.compbiomed.2019.05.002.

- 59. Mazurowski M. A., Clark K., Czarnek N. M., Shamsesfandabadi P., Peters K. B., Saha A. Radiogenomics of lower-grade glioma: algorithmically-assessed tumor shape is associated with tumor genomic subtypes and patient outcomes in a multi-institutional study with The Cancer Genome Atlas data. Journal of Neuro-Oncology. 2017;133(1):27–35. doi: 10.1007/s11060-017-2420-1.

- 60. Menze B. H., Jakab A., Bauer S., et al. The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Transactions on Medical Imaging. 2014;34(10):1993–2024. doi: 10.1109/TMI.2014.2377694.

- 61. Bakas S., Akbari H., Sotiras A., et al. Advancing the cancer genome atlas glioma MRI collections with expert segmentation labels and radiomic features. Scientific Data. 2017;4(1):p. 170117. doi: 10.1038/sdata.2017.117.

- 62. Kistler M., Bonaretti S., Pfahrer M., Niklaus R., Büchler P. The virtual skeleton database: an open access repository for biomedical research and collaboration. Journal of Medical Internet Research. 2013;15(11):p. e245. doi: 10.2196/jmir.2930.

- 63. Chakrabarty N., Kanchan S. 2021. https://github.com/sartajbhuvaji/brain-tumor-classification-dataset.

- 64. Dequidt P., Bourdon P., Tremblais B., et al. Exploring radiologic criteria for glioma grade classification on the BraTS dataset. IRBM. 2021;42(6):407–414.

- 65. Islam M. K., Ali M. S., Miah M. S., Rahman M. M., Alam M. S., Hossain M. A. Brain tumor detection in MR image using superpixels, principal component analysis and template based K-means clustering algorithm. Machine Learning with Applications. 2021;5:p. 100044. doi: 10.1016/j.mlwa.2021.100044.

- 66. Kesav N., Jibukumar M. Efficient and low complex architecture for detection and classification of brain tumor using RCNN with two channel CNN. Journal of King Saud University-Computer and Information Sciences. 2021.

- 67. More R. B., Bhisikar S. A. Brain tumor detection using deep neural network. Techno-Societal 2020. Springer; 2021. pp. 85–94.

- 68. Minarno A. E., Mandiri M. H. C., Munarko Y., Hariyady H. Convolutional neural network with hyperparameter tuning for brain tumor classification. Kinetik: Game Technology, Information System, Computer Network, Computing, Electronics, and Control. 2021. doi: 10.22219/kinetik.v6i2.1219.

- 69. Das A., Mohapatra S. K., Mohanty M. N. Design of deep ensemble classifier with fuzzy decision method for biomedical image classification. Applied Soft Computing. 2022;115:p. 108178. doi: 10.1016/j.asoc.2021.108178.

- 70. Dash S., Das R. K., Guha S., Bhagat S. N., Behera G. K. An interactive machine learning approach for brain tumor MRI segmentation. Advances in Intelligent Computing and Communication. Lecture Notes in Networks and Systems. Springer; 2021. pp. 391–400.

- 71. Rani K. V., Jawhar S. J., Palanikumar S. Nanoscale imaging technique for accurate identification of brain tumor contour using NBDS method. Journal of Ambient Intelligence and Humanized Computing. 2020;12(7):7667–7684. doi: 10.1007/s12652-020-02485-y.

- 72. Samreen A., Taha A., Reddy Y., Sathish P. Brain Tumor Detection by Using Convolution Neural Network. Berlin/Heidelberg, Germany: Springer; 2020.

- 73. Alanazi M. F., Ali M. U., Hussain S. J., et al. Brain tumor/mass classification framework using magnetic-resonance-imaging-based isolated and developed transfer deep-learning model. Sensors. 2022;22(1):p. 372. doi: 10.3390/s22010372.

- 74. Çinar A., Yildirim M. Detection of tumors on brain MRI images using the hybrid convolutional neural network architecture. Medical Hypotheses. 2020;139:p. 109684. doi: 10.1016/j.mehy.2020.109684.

- 75. Zhang W., Yang G., Huang H., et al. ME-Net: multi-encoder net framework for brain tumor segmentation. International Journal of Imaging Systems and Technology. 2021;31(4):1834–1848.

- 76. Fidon L., Ourselin S., Vercauteren T. Generalized Wasserstein Dice score, distributionally robust deep learning, and Ranger for brain tumor segmentation: BraTS 2020 challenge. 2020. arXiv preprint arXiv:2011.01614.

- 77. Rao C. S., Karunakara K. Efficient detection and classification of brain tumor using kernel based SVM for MRI. Multimedia Tools and Applications. 2022;81(5):7393–7417. doi: 10.1007/s11042-021-11821-z.

- 78. Vijila Rani K., Joseph Jawhar S., Palanikumar S. Nanoscale imaging technique for accurate identification of brain tumor contour using NBDS method. Journal of Ambient Intelligence and Humanized Computing. 2021;12(7):7667–7684. doi: 10.1007/s12652-020-02485-y.

- 79. Sankaran K. S., Thangapandian M., Vasudevan N. Brain tumor grade identification using deep Elman neural network with adaptive fuzzy clustering-based segmentation approach. Multimedia Tools and Applications. 2021;80:25139–25169. doi: 10.1007/s11042-021-10873-5.

Data Availability Statement

The multiclass brain tumor classification dataset was downloaded from the Kaggle website. It contains four classes: glioma, meningioma, no tumor, and pituitary tumor (Navoneel Chakrabarty, Swati Kanchan, https://github.com/sartajbhuvaji/brain-tumor-classification-dataset, accessed on 6 June 2021). The 2020-BRATS Challenge dataset includes MRI data from 259 patients, with 76 cases of low- and high-grade glioma. Each patient has 155 MRI slices of 240 × 240 pixels, giving a volume of 240 × 240 × 155 (B. H. Menze, A. Jakab, S. Bauer, J. Kalpathy-Cramer, K. Farahani, J. Kirby, et al., “The multimodal brain tumor image segmentation benchmark (BRATS),” IEEE Transactions on Medical Imaging, vol. 34, pp. 1993–2024, 2014; S. Bakas, H. Akbari, A. Sotiras, M. Bilello, M. Rozycki, J. S. Kirby, et al., “Advancing the cancer genome atlas glioma MRI collections with expert segmentation labels and radiomic features,” Scientific Data, vol. 4, p. 170117, 2017; M. Kistler, S. Bonaretti, M. Pfahrer, R. Niklaus, and P. Büchler, “The virtual skeleton database: an open access repository for biomedical research and collaboration,” Journal of Medical Internet Research, vol. 15, p. e245, 2013).
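The stated volume layout can be made concrete with a short sketch: one 240 × 240 × 155 BRATS volume splits into 155 two-dimensional slices of 240 × 240, the slice-level form that 2-D image models operate on. This is an illustration under assumptions, not the authors' preprocessing code: it assumes the volume has already been loaded into a NumPy array with the slice axis last (BraTS volumes are distributed as NIfTI files, which nibabel can read with `nibabel.load(path).get_fdata()`), and the helper name `axial_slices` is hypothetical.

```python
from typing import List

import numpy as np

# Assumed layout of one BRATS MRI volume: (height, width, slices).
# 240 x 240 x 155 comes from the Data Availability Statement; the
# slice-last axis order is an assumption about how the volume was loaded.
VOLUME_SHAPE = (240, 240, 155)


def axial_slices(volume: np.ndarray) -> List[np.ndarray]:
    """Split a 3-D volume of shape (H, W, S) into S 2-D slices of shape (H, W).

    Hypothetical helper for illustration; not part of the original paper.
    """
    if volume.ndim != 3:
        raise ValueError(f"expected a 3-D volume, got shape {volume.shape}")
    # Index along the last (slice) axis to obtain each axial slice.
    return [volume[:, :, k] for k in range(volume.shape[2])]


if __name__ == "__main__":
    # Synthetic all-zero stand-in for one loaded patient volume.
    volume = np.zeros(VOLUME_SHAPE, dtype=np.float32)
    slices = axial_slices(volume)
    print(len(slices), slices[0].shape)  # 155 (240, 240)
```

Returning views (plain indexing) rather than copies keeps memory use flat; a real pipeline would typically also normalize intensities and skip slices without brain tissue, which this sketch deliberately omits.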