Abstract

Impacted third molars are a common problem at all ages and can cause tooth decay, root resorption, and pain. This study aimed to develop a computer-assisted detection system based on deep convolutional neural networks for detecting impacted third molars using different architectures, and to evaluate the potential usefulness and accuracy of the proposed solutions on panoramic radiographs. A total of 440 panoramic radiographs from 300 patients were randomly divided. As a two-stage technique, Faster RCNN was used with ResNet50, AlexNet, and VGG16 as backbones; as a one-stage technique, YOLOv3 was used. Faster RCNN yielded a mAP@0.5 of 0.91 with the ResNet50 backbone, while VGG16 and AlexNet showed slightly lower performance: 0.87 and 0.86, respectively. The other detector, YOLOv3, provided the highest detection efficacy with a mAP@0.5 of 0.96. Its recall and precision were 0.93 and 0.88, respectively, which supported its high performance. Considering the findings from the different architectures, the proposed one-stage detector YOLOv3 showed excellent performance for impacted mandibular third molar detection on panoramic radiographs. These promising results suggest that diagnostic tools based on state-of-the-art deep learning models can be reliable and robust aids for clinical decision-making.

Keywords: impacted, tooth, detection, deep learning, panoramic radiograph, machine learning, dentistry

1. Introduction

Dental clinics frequently use different types of radiography with distinct properties. They visualize different regions of interest for diagnosis and further treatment planning [1]. Panoramic radiography is one of the most common imaging techniques in dentistry; it scans a wide area with a significantly lower radiation dose [2]. It enables experts to diagnose a variety of anomalies, conditions, and lesions [1,2,3]. However, complex anatomical structures, pathologies, and imaging distortions can make detecting a case or interpreting a critical condition difficult. Computer-assisted diagnostic systems can help clinicians in decision-making [4]. Recently, artificial intelligence-based approaches have helped overcome the limitations of traditional methods: instead of relying on hand-crafted features, they learn features directly from raw data and automatically identify the optimal representations [5].

Artificial intelligence (AI) refers to systems and devices designed to address real-life problems as human beings do, by mimicking natural human intelligence and behavior [6,7,8]. Machine learning (ML) is a subset of AI and consists of algorithms that learn from large sets of data, enabling computers to learn how to solve a problem by performing a specific task [9,10]. These algorithms improve at the task as they are exposed to more data [11]. Deep learning (DL) is a subset of ML and consists of algorithms inspired by the structural and functional properties of the human brain, called artificial neural networks [12,13]. They train themselves to perform specific tasks. Using larger neural networks and training them with more data scales up performance on real-life tasks such as classification, object detection, segmentation, and object recognition [14,15,16]. Convolutional neural networks (CNNs) are neural networks that include convolution operations in at least one of their layers.

In recent years, DL models have been intensively applied to many fields, including healthcare, which covers a wide range of applications related to medical diagnosis. There has been a growing interest in artificial intelligence-based systems in dentistry. The use of deep learning in dentistry, including orthodontics, periodontology, endodontics, dental radiology, and forensic medicine, has shown promising results in classification, segmentation, and detection tasks [17,18]. Applications are based on teeth, oral structures, pathologies, cephalometric landmarks, bone loss, periodontal inflammation, and root morphology [19,20,21,22,23,24,25,26,27]. Compared with the number of published studies, relatively few initiatives in dental care have developed software and digital dental approaches into diagnostic tools [28]. Some of them are CranioCatch, Digital Smile Design (DSD), 3Shape software (3Shape Design Studio and 3Shape Implant Studio), Exocad, and Bellus 3D.

The object detection task refers to determining the coordinates of a specific object in the input data, while classification refers to automatically assigning objects to pre-determined categories. When artificial intelligence-based computer-aided systems are deployed in the field, they can:

- support busy clinicians and physicians in avoiding misdiagnosis;

- help populations with a shortage of radiologists or screening modalities;

- help radiologists manage their workloads in large hospitals;

- create reports about pathologic or anatomical conditions in panoramic radiographs, which saves time;

- support the education of observers and new graduates in clinics.

Partially or entirely impacted third molars are the most common developmental conditions affecting humans and require surgical intervention [29,30,31]. Tooth position, adjacent tooth, alveolar bone, and surrounding mucosal soft tissue usually cause failed eruption [32]. They may cause pain, tooth decay, swelling, and root resorption for various reasons, while they might asymptomatically indicate other pathologies, like caries, periodontal diseases, cysts, or tumors, around the second or third molar [32,33,34]. Removal of the third molar for severe cases alleviates symptoms and helps the patients’ oral health [32,35,36]. It is one of the most common surgical procedures performed in secondary care in the UK [37].

This work aimed to develop a decision support system that will help dentists. It presents a state-of-the-art artificial intelligence-based detection solution, using deep learning algorithms with multiple convolutional neural networks, for detecting impacted mandibular third molars in panoramic radiographs. Two different detectors were used, namely Faster RCNN and YOLOv3. While YOLOv3 is a single-stage technique, Faster RCNN is a two-stage method that requires a backbone to complete the detection process. Three different backbones, ResNet50, AlexNet, and VGG16, were combined with Faster RCNN. The detection performance was evaluated by mean average precision (mAP), recall, and precision. Classification accuracy was also calculated for each model.

Related Works

This section reviews studies directly related to the detection of third molars on panoramic radiographs.

Faure et al. (2021) proposed an approach to automatically diagnose impacted teeth with 530 panoramic radiographs. They implemented only one model using Faster-RCNN with ResNet101, identifying impacted teeth with performances between 51.7% and 88.9% [38]. Kuwada et al. (2020) used deep learning models on panoramic radiographs to detect and classify the presence of impacted supernumerary teeth in the anterior maxillary area [4]. They reported that DetectNet showed the highest accuracy value of 0.96. Zhang et al. (2018) predicted postoperative facial swelling following impacted mandibular third molar extraction using 15 patient-related factors [39]. Orhan et al. (2021) performed a segmentation task to detect third molar teeth using Cone-Beam Computed Tomography [40]. One hundred twelve teeth were used, and a precision value of 0.77 was reported. Basaran et al. (2021) developed a diagnostic charting system for ten dental conditions, including impacted teeth [41]. Faster R-CNN Inception v2 was implemented using 1084 panoramic radiographs. The precision value for the impacted tooth was 0.779.

Other studies performed detection tasks that aimed to automatically recognize and number teeth [19,20,21,42]. Panoramic, periapical, and dental bitewing radiographs were used. Their results were presented for all teeth together rather than as a separate analysis for impacted third molars. Moreover, previous works did not categorize teeth by their angulation relative to adjacent teeth.

2. Materials and Methods

The Ethical Review Board of Ankara Yildirim Beyazit University approved this study (approval number 2021-69). It was performed following the ethical standards of the Helsinki Declaration.

Panoramic radiographs of 300 patients older than 18 with at least one impacted tooth in the third-molar region were randomly selected from the image database for the period between January 2018 and January 2020. Panoramic radiographs (PRs) with completely or incompletely impacted teeth with complete root formation from patients older than 18 years were included, while PRs with artifacts, movement- and position-based distortions, or incomplete root formation were excluded.

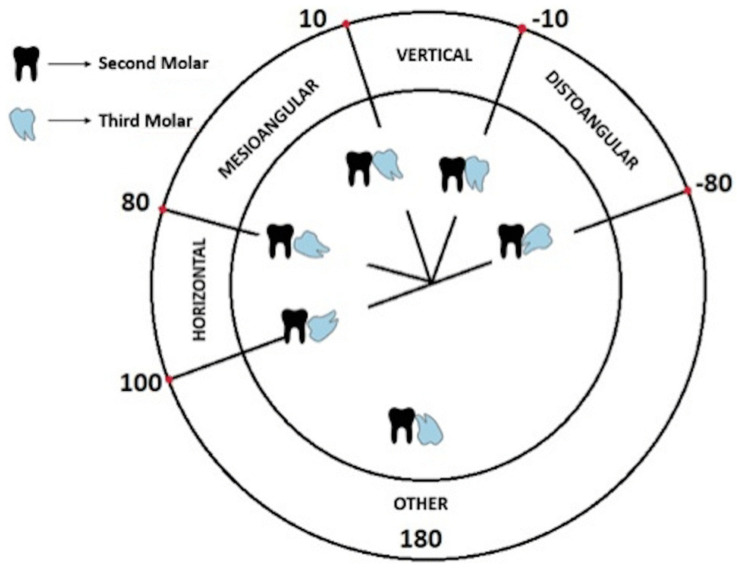

The Winter classification approach was used to categorize mandibular third molars into mesioangular and horizontal positions on both sides [43,44]. The classification is based on the angle between the long axes of the third molar and the second molar. The mesioangular position corresponds to an angle from 11° to 79°, and the horizontal position to an angle from 80° to 100°, as shown schematically in Figure 1.

Figure 1.

Winter’s mandibular third molar teeth classification scheme [34].
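The angle ranges given above can be turned into a small lookup, sketched here in Python. This is only an illustration of the classification rule, not the annotation procedure used in the study (labels were assigned by a radiologist). The function names are hypothetical, and treating angles between 79° and 80° as mesioangular is an assumption made so that non-integer angles are covered.

```python
from typing import Optional

# Class labels used in this study: (position, side) -> label.
CLASS_LABELS = {
    ("mesioangular", "left"): "t1",
    ("horizontal", "left"): "t4",
    ("mesioangular", "right"): "t5",
    ("horizontal", "right"): "t8",
}


def winter_position(angle_deg: float) -> Optional[str]:
    """Map the angle between the long axes of the third and second molar
    to one of the two positions analyzed in this work."""
    if 11 <= angle_deg < 80:   # 11 to 79 degrees (gap up to 80 is an assumption)
        return "mesioangular"
    if 80 <= angle_deg <= 100:  # 80 to 100 degrees
        return "horizontal"
    return None  # other Winter positions are outside the scope of this work


def class_label(angle_deg: float, side: str) -> Optional[str]:
    """Combine position and side ('left' or 'right') into t1, t4, t5, or t8."""
    position = winter_position(angle_deg)
    if position is None:
        return None
    return CLASS_LABELS.get((position, side))


print(class_label(45, "left"))   # mesioangular left
print(class_label(90, "right"))  # horizontal right
```

Angles outside both ranges return `None`, which reflects that only mesioangular and horizontal impactions were analyzed.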

The original files were in DCM format with a resolution of 2943 × 1435. They were first converted to PNG using MATLAB and then resized to 640 × 640 before being passed to the models. The PyTorch library was used to develop the models. The dataset was analyzed and labeled by an oral and maxillofacial radiologist with more than five years of experience in the field using labelImg [45]. Rectangular bounding boxes enclosing the crown and root of the tooth of interest were used. Experiments were performed using k-fold cross-validation (k = 5) instead of a single standard split, which ensures that the models are tested on every image, including potentially difficult cases. The best performance in each fold was recorded.
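To make the 5-fold protocol concrete, the following is a minimal sketch of how 440 image indices could be divided so that every image is tested exactly once. The shuffling seed and the image-level split are assumptions; the text does not state whether radiographs from the same patient were kept in the same fold.

```python
import random
from typing import List, Tuple


def k_fold_indices(n_items: int, k: int = 5, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return k (train, test) index pairs; each item is in a test set exactly once."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # seed is a hypothetical choice
    folds = [indices[i::k] for i in range(k)]  # k nearly equal parts
    splits = []
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits


splits = k_fold_indices(440, k=5)
for fold_number, (train, test) in enumerate(splits, start=1):
    print(fold_number, len(train), len(test))
```

With 440 radiographs and k = 5, each fold holds 88 test images and 352 training images.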

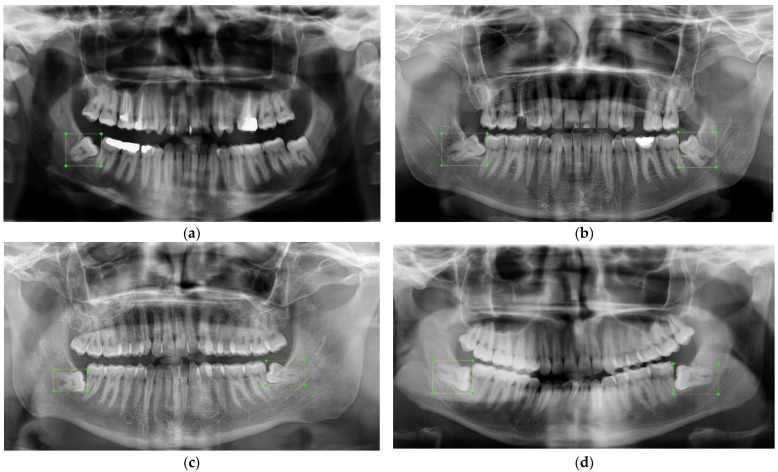

Previous studies on the prevalence of impacted third molars showed that they were twice as likely to occur in the mandible as in the maxilla [46,47,48,49,50,51,52,53,54]. Almost half of the impactions were mesioangular. There was no statistically significant difference between the right and left sides. In light of these findings and the available dataset, mandibular third molars in four classes were chosen for analysis. The four classes, t1, t4, t5, and t8, denote mesioangular left, horizontal left, mesioangular right, and horizontal right impacted teeth, respectively. Class distributions were balanced. The total number of impacted third molars was 588 from 440 panoramic radiographs. To keep the dataset balanced for the experiments, the numbers of teeth in classes t1, t4, t5, and t8 were set to 155, 134, 169, and 130, respectively, in the design phase of the work. Figure 2 shows four panoramic radiographs with impacted teeth used as inputs for the proposed detection solution. Figure 2a has only one mesioangular tooth on the left, class t1. Figure 2b has two mesioangular teeth, one on each side, classes t1 and t5. Figure 2c,d each have two horizontal teeth, one on each side, classes t4 and t8.

Figure 2.

Examples of panoramic radiographs with bounding boxes. (a) One impacted tooth: mesioangular left; (b) two impacted teeth: mesioangular left and right; (c,d) horizontal left and right.

State-of-the-art object detectors are categorized into two classes, namely two-stage methods and one-stage methods [55,56]. Two-stage detectors have proposal-driven mechanisms: candidate object locations (bounding boxes) are proposed first, and then each candidate location is assigned to a class using a convolutional neural network [57]. In contrast, one-stage detectors, which have the advantage of being simpler, make use of anchor boxes to localize and restrict the region and shape of an object to be detected in the image; in other words, they find bounding boxes in a single step without using region proposals [58,59,60].

The AI-based model development phase included two detectors, namely Faster RCNN and YOLOv3 [57,58]. YOLOv3 performs detection in a single stage, whereas Faster RCNN is a two-stage technique that needs a backbone as a feature extractor. Therefore, ResNet50, AlexNet, and VGG16 were each used, one at a time, as the backbone of Faster RCNN.

AlexNet consists of five convolutional and three fully connected layers. It uses Rectified Linear Units (ReLU) as the activation function, and supports training on multiple GPUs and overlapping pooling, making the process faster [61]. VGG16 is a convolutional neural network model with 13 convolutional, five pooling, and three fully connected layers. The large kernel filters used in AlexNet are replaced with 3 × 3 filters in the VGG16 architecture for better performance with ease of implementation [62]. After AlexNet and VGG16, architectures became deeper; however, greater depth can make the back-propagated gradient extremely small, resulting in saturated or decreased performance. Residual Networks (ResNet), of which ResNet50 is the 50-layer variant, address this issue with identity shortcut connections that skip one or more layers and perform identity mappings [63]. The ResNet family has a depth of up to 152 layers and reduces the number of parameters needed for a deep network.

YOLO (You Only Look Once) is an object detector that uses features learned by a deep convolutional neural network to detect objects [58]. The YOLOv3 architecture is a fully convolutional network with 106 layers. It predicts bounding boxes at three scales by downsampling the input image at different layers and extracting features from them. Feature extraction is performed by Darknet-53, a powerful and efficient backbone. Up-sampled layers help preserve fine features, which makes the network better at detecting small objects. Class predictions for each bounding box are made using independent logistic classifiers with a cross-entropy loss instead of softmax. The network architecture of the model is shown in Figure 3.

Figure 3.

YOLOv3 network architecture that predicts at three scales [64].

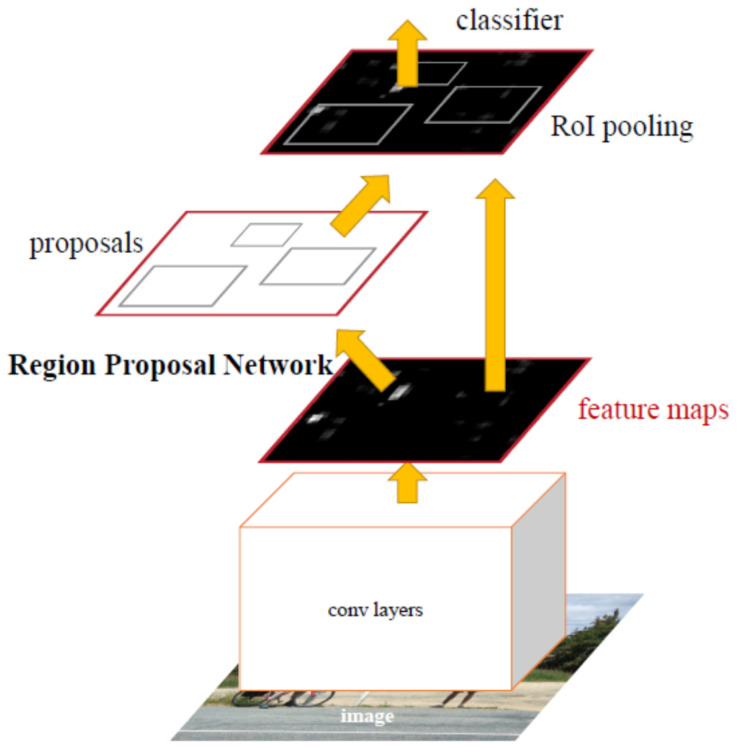
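The difference between softmax and the independent logistic class predictions described for YOLOv3 can be illustrated with a few lines of Python. This is a toy sketch on hypothetical raw scores (logits) for the four classes, not code from the trained model: softmax forces the class scores to sum to one, whereas logistic (sigmoid) outputs score each class independently and are trained with a binary cross-entropy loss.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Mutually exclusive class probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid_scores(logits: List[float]) -> List[float]:
    """Independent per-class scores, each in (0, 1)."""
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]


def binary_cross_entropy(probs: List[float], targets: List[int]) -> float:
    """Mean binary cross-entropy over classes (targets are 0 or 1)."""
    eps = 1e-12  # avoids log(0)
    losses = [
        -(t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps))
        for p, t in zip(probs, targets)
    ]
    return sum(losses) / len(losses)


logits = [2.0, -1.0, 0.5, -3.0]  # hypothetical scores for t1, t4, t5, t8
print(softmax(logits))
print(sigmoid_scores(logits))
print(binary_cross_entropy(sigmoid_scores(logits), [1, 0, 0, 0]))
```

Because the sigmoid scores are not normalized against each other, more than one class can receive a high score for the same box.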

Faster R-CNN uses a Region Proposal Network (RPN), a fully convolutional network that simultaneously predicts object bounds and objectness scores, to create potential bounding boxes instead of using Selective Search as the region proposal technique, and afterward runs a classifier on these proposed boxes. Classification is followed by a post-processing phase that refines the bounding boxes, eliminates duplicate detections, and rescores the boxes [65]. Figure 4 summarizes how it proceeds from beginning to end.

Figure 4.

Faster R-CNN system for object detection with RPN [57].
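Duplicate detections of the same object are commonly removed with greedy non-maximum suppression (NMS). The sketch below assumes that the pairwise overlaps (IoU values) between boxes have already been computed and that a threshold of 0.5 is used; both the function name and the threshold are illustrative assumptions rather than settings reported in this work.

```python
from typing import List, Sequence


def non_max_suppression(
    scores: Sequence[float],
    pairwise_iou: Sequence[Sequence[float]],
    iou_threshold: float = 0.5,  # illustrative value
) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap a kept
    box by more than the threshold, and repeat with the remaining boxes."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in order:
        if all(pairwise_iou[i][j] <= iou_threshold for j in kept):
            kept.append(i)
    return kept


# Three hypothetical boxes: boxes 0 and 1 overlap heavily, box 2 is elsewhere.
scores = [0.9, 0.8, 0.7]
pairwise_iou = [
    [1.00, 0.68, 0.00],
    [0.68, 1.00, 0.00],
    [0.00, 0.00, 1.00],
]
print(non_max_suppression(scores, pairwise_iou))
```

In this example, box 1 is suppressed because it overlaps the higher-scoring box 0, while box 2 is kept.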

The Adam optimizer was used with a learning rate of 0.0001. The models were run on Windows OS with an NVIDIA GeForce RTX 3080 graphics processing unit. Object detection models output the bounding box and class of each object in the input images. The detection performance is evaluated by mean average precision (mAP), recall, and precision metrics. The mAP is also used in the Pattern Analysis, Statistical modeling and Computational Learning (PASCAL) Visual Object Classes (VOC) Challenge [66]. It can briefly be described step by step as follows.

Intersection over Union (IoU) measures how accurately a bounding box is predicted. It is calculated as the ratio of the area of overlap between the predicted bounding box and the ground truth to the area of their union. It takes values between 0 and 1, indicating no overlap and exact overlap, respectively, as shown in Equation (1) [6,9,13].

$$\mathrm{IoU} = \frac{\text{Area of overlap}}{\text{Area of union}} \tag{1}$$
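As a worked illustration of the IoU ratio, the following sketch computes it for two axis-aligned boxes. The `(x_min, y_min, x_max, y_max)` box format is an assumption made for the example; annotation tools and detectors may store boxes differently.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed format


def iou(pred: Box, truth: Box) -> float:
    """Intersection over Union of a predicted box and a ground truth box."""
    # Corners of the overlapping rectangle (if any).
    ix_min, iy_min = max(pred[0], truth[0]), max(pred[1], truth[1])
    ix_max, iy_max = min(pred[2], truth[2]), min(pred[3], truth[3])
    intersection = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_pred = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_truth = (truth[2] - truth[0]) * (truth[3] - truth[1])
    union = area_pred + area_truth - intersection
    return intersection / union if union > 0 else 0.0


print(iou((0, 0, 10, 10), (0, 0, 10, 10)))    # identical boxes
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))    # half-overlapping boxes
print(iou((0, 0, 10, 10), (20, 20, 30, 30)))  # disjoint boxes
```

Two boxes of equal size shifted by half their width share one third of their union, so their IoU falls below the commonly used 0.5 threshold.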

Precision refers to how exactly the model identifies relevant objects, while recall measures the model’s ability to propose correct detections among all ground truths; they are given in Equations (2) and (3) [6,9,13]. When comparing two models, the model with higher precision and recall values is considered to perform better.

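$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{3}$$

where $TP$, $FP$, and $FN$ denote the numbers of true positives, false positives, and false negatives, respectively.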
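Precision and recall follow directly from the counts of true positives, false positives, and false negatives. The short sketch below uses hypothetical counts for illustration only; they are not results from this study.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of detections that are correct."""
    return tp / (tp + fp) if (tp + fp) > 0 else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of ground truth objects that were detected."""
    return tp / (tp + fn) if (tp + fn) > 0 else 0.0


# Hypothetical counts: 8 correct detections, 2 false alarms, 1 missed tooth.
tp, fp, fn = 8, 2, 1
print(precision(tp, fp))
print(recall(tp, fn))
```

A detector can trade one metric for the other by changing its confidence threshold, which is why both are reported together.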

Average Precision represents the area under the precision-recall curve that is evaluated at an IoU threshold. It is defined in Equation (4) [6,9,13].

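$$\mathrm{AP} = \int_{0}^{1} p(r)\, dr \tag{4}$$

where $p(r)$ denotes precision as a function of recall $r$.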

The notation AP@threshold indicates that AP is calculated at a given IoU threshold. For most models, the threshold is set to 0.5, denoted AP@0.5. AP is calculated for each class in the data, resulting in n different AP values for n classes. Averaging these values gives the mean Average Precision (mAP) over the n classes, as in Equation (5) [6,9,13].

$$\mathrm{mAP} = \frac{1}{n} \sum_{i=1}^{n} \mathrm{AP}_i \tag{5}$$
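The two steps, computing AP as the area under the precision-recall curve and averaging AP over classes, can be sketched as follows. The text does not state which interpolation of the curve was used; this example assumes the all-point interpolation used in later editions of the PASCAL VOC challenge, and the function names are hypothetical.

```python
from typing import Dict, List, Sequence


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], n_ground_truth: int) -> float:
    """Area under the precision-recall curve for one class (all-point interpolation)."""
    if n_ground_truth == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp_cum, fp_cum = 0, 0
    recalls: List[float] = []
    precisions: List[float] = []
    for i in order:  # walk through detections from most to least confident
        if is_tp[i]:
            tp_cum += 1
        else:
            fp_cum += 1
        recalls.append(tp_cum / n_ground_truth)
        precisions.append(tp_cum / (tp_cum + fp_cum))
    # Make precision non-increasing from right to left (precision envelope).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """Mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Toy example: three detections, two ground truth objects.
print(average_precision([0.9, 0.8, 0.7], [True, False, True], n_ground_truth=2))

# Per-class AP@0.5 values reported for YOLOv3 in Table 3.
print(mean_average_precision({"t1": 0.96, "t4": 0.98, "t5": 0.984, "t8": 0.995}))
```

The second call simply averages the four per-class values from Table 3; the fold-averaged mAP in Table 1 is computed over all five folds and is therefore a different quantity.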

Accuracy is a metric used to evaluate classification performance. It refers to the percentage of the correct predictions for the test dataset, as shown in Equation (6). It describes how the model performs for all classes [6,9,13].

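$$\mathrm{Accuracy} = \frac{\text{Number of correct predictions}}{\text{Total number of predictions}} \tag{6}$$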

Object detection algorithms make predictions with a bounding box and a class label. For each object, the predicted bounding box and ground truth are measured by intersection over union (IoU) [67]. If the IoU value of the prediction is bigger than the IoU threshold, the object is classified as true positive (TP). Precision and Recall are calculated based on the measured IoU and IoU threshold. Average precision (AP) is the area under the Precision-Recall curve. The mean average precision (mAP) is calculated by considering the mean AP over all classes [68].
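The true positive rule described above can be sketched as a small matching routine. It assumes the IoU between every detection and every ground truth box of the same class is already available, and it adds one convention that the text does not spell out: following PASCAL VOC practice, a ground truth box can be matched only once, so a second detection of the same tooth counts as a false positive.

```python
from typing import List, Sequence


def label_detections(
    scores: Sequence[float],
    iou_matrix: Sequence[Sequence[float]],  # iou_matrix[d][g]: detection d vs ground truth g
    iou_threshold: float = 0.5,
) -> List[bool]:
    """Mark each detection as true positive (True) or false positive (False)."""
    order = sorted(range(len(scores)), key=lambda d: scores[d], reverse=True)
    matched = set()  # ground truth boxes that already have a detection
    is_tp = [False] * len(scores)
    for d in order:
        row = iou_matrix[d]
        if not row:  # no ground truth of this class in the image
            continue
        best_gt = max(range(len(row)), key=lambda g: row[g])
        if row[best_gt] > iou_threshold and best_gt not in matched:
            is_tp[d] = True
            matched.add(best_gt)
    return is_tp


# Three hypothetical detections and two ground truth boxes.
scores = [0.9, 0.8, 0.7]
iou_matrix = [
    [0.8, 0.1],  # good match with ground truth 0
    [0.7, 0.0],  # duplicate of ground truth 0
    [0.2, 0.6],  # good match with ground truth 1
]
labels = label_detections(scores, iou_matrix)
false_negatives = len(iou_matrix[0]) - sum(labels)
print(labels, false_negatives)
```

The resulting true positive flags, together with the confidence scores, are the inputs needed to build the precision-recall curve.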

While mAP@0.5 refers to the mAP when the IoU threshold is 0.5, mAP@0.5–0.95 means the average mAP over different IoU thresholds from 0.5 to 0.95 [69]. Many algorithms, including Faster RCNN and YOLO, use mAP to evaluate model performance [57,58].
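Averaging over thresholds can be sketched as below. The step of 0.05 between thresholds follows the common COCO-style convention and is an assumption here, since the text only gives the range; `map_at` stands for any hypothetical function that returns the mAP at a given IoU threshold.

```python
from typing import Callable, List


def iou_thresholds(start: float = 0.5, stop: float = 0.95, step: float = 0.05) -> List[float]:
    """IoU thresholds 0.50, 0.55, ..., 0.95 (step size is an assumed convention)."""
    n = int(round((stop - start) / step)) + 1
    return [round(start + i * step, 2) for i in range(n)]


def map_over_thresholds(map_at: Callable[[float], float]) -> float:
    """Average the mAP obtained at each IoU threshold."""
    thresholds = iou_thresholds()
    return sum(map_at(t) for t in thresholds) / len(thresholds)


print(iou_thresholds())
# Hypothetical detector whose mAP falls linearly as the threshold gets stricter.
print(map_over_thresholds(lambda t: 1.0 - t))
```

Because stricter thresholds demand tighter boxes, mAP@0.5:0.95 is typically lower than mAP@0.5 for the same model, as seen in Table 1.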

3. Results

Findings were categorized into two groups by structural design: one-stage and two-stage detectors. The detection performances of the four architectures are presented based on mAP at IoU thresholds of 0.5 and 0.5–0.95, and are given in Table 1.

Table 1.

Detection performances of two detectors, one with three different backbones.

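| Technique | Detector | Fold | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|
| One-stage | YOLOv3 | 1 | 0.941 | 0.751 |
| One-stage | YOLOv3 | 2 | 0.979 | 0.783 |
| One-stage | YOLOv3 | 3 | 0.936 | 0.746 |
| One-stage | YOLOv3 | 4 | 0.981 | 0.761 |
| One-stage | YOLOv3 | 5 | 0.98 | 0.771 |
| One-stage | YOLOv3 | Avg | 0.96 | 0.76 |
| Two-stage | Faster RCNN–ResNet50 | 1 | 0.912 | 0.628 |
| Two-stage | Faster RCNN–ResNet50 | 2 | 0.904 | 0.673 |
| Two-stage | Faster RCNN–ResNet50 | 3 | 0.86 | 0.646 |
| Two-stage | Faster RCNN–ResNet50 | 4 | 0.944 | 0.71 |
| Two-stage | Faster RCNN–ResNet50 | 5 | 0.953 | 0.713 |
| Two-stage | Faster RCNN–ResNet50 | Avg | 0.91 | 0.71 |
| Two-stage | Faster RCNN–AlexNet | 1 | 0.814 | 0.433 |
| Two-stage | Faster RCNN–AlexNet | 2 | 0.878 | 0.518 |
| Two-stage | Faster RCNN–AlexNet | 3 | 0.773 | 0.47 |
| Two-stage | Faster RCNN–AlexNet | 4 | 0.916 | 0.52 |
| Two-stage | Faster RCNN–AlexNet | 5 | 0.923 | 0.513 |
| Two-stage | Faster RCNN–AlexNet | Avg | 0.86 | 0.49 |
| Two-stage | Faster RCNN–VGG16 | 1 | 0.838 | 0.464 |
| Two-stage | Faster RCNN–VGG16 | 2 | 0.89 | 0.486 |
| Two-stage | Faster RCNN–VGG16 | 3 | 0.802 | 0.423 |
| Two-stage | Faster RCNN–VGG16 | 4 | 0.898 | 0.484 |
| Two-stage | Faster RCNN–VGG16 | 5 | 0.937 | 0.583 |
| Two-stage | Faster RCNN–VGG16 | Avg | 0.87 | 0.49 |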

As a two-stage technique, Faster RCNN was used as a detector with three different backbones: ResNet50, AlexNet, and VGG16. They produced mAP@0.5 values of 0.91, 0.86, and 0.87 and mAP@0.5:0.95 values of 0.71, 0.49, and 0.49, respectively. ResNet50 produced the highest mAP among the backbones, while the other two gave slightly lower values. YOLOv3, on the other hand, provided the highest values of all, with a mAP@0.5 of 0.96 and a mAP@0.5:0.95 of 0.76. Its precision and recall were 0.88 and 0.93, respectively. Training and validation losses for YOLOv3 are given in Figure 5; they are from the single fold with the best performance.

Figure 5.

Change of performance metrics and losses for YOLOv3.

YOLOv3 outperformed ResNet50 (p = 0.042), AlexNet (p = 0.011), and VGG16 (p = 0.015). No significant difference was found between ResNet50 and AlexNet (p = 0.158) or between ResNet50 and VGG16 (p = 0.193). Accuracy was calculated for each model and used for statistical analysis; it is presented in Table 2.

Table 2.

Classification accuracies.

| Fold | YOLOv3 | Faster RCNN–ResNet50 | Faster RCNN–AlexNet | Faster RCNN–VGG16 |
|---|---|---|---|---|
| 1 | 0.824 | 0.814 | 0.636 | 0.674 |
| 2 | 0.86 | 0.727 | 0.68 | 0.693 |
| 3 | 0.834 | 0.713 | 0.529 | 0.653 |
| 4 | 0.897 | 0.856 | 0.76 | 0.736 |
| 5 | 0.891 | 0.854 | 0.81 | 0.792 |
| Avg | 0.86 | 0.79 | 0.68 | 0.7 |

YOLOv3 performed better than AlexNet (p = 0.016) and VGG16 (p = 0.001) in classification accuracy, but there was no statistically significant difference between the classification accuracies of YOLOv3 and ResNet50 (p = 0.079). Among the backbones used in Faster RCNN, no statistically significant difference was found between ResNet50 and AlexNet (p = 0.085) or between ResNet50 and VGG16 (p = 0.068).

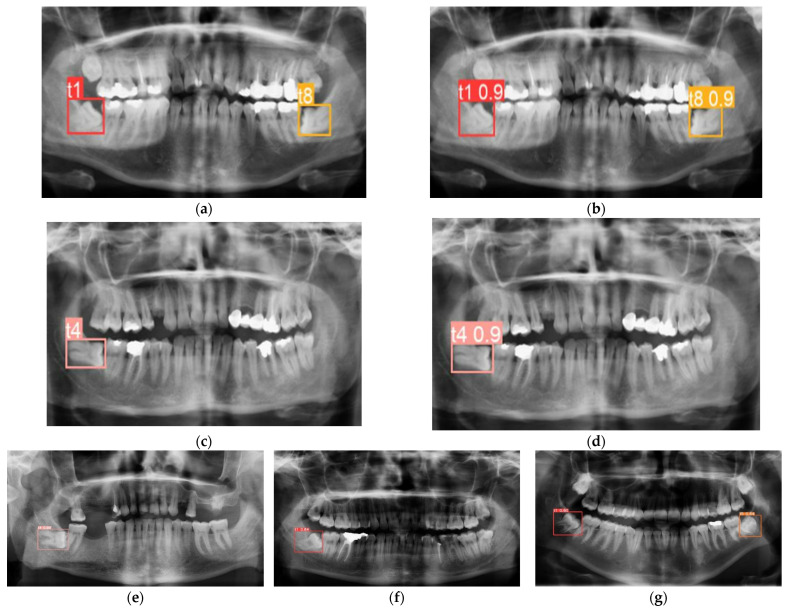

Findings are also shown on panoramic radiographs with annotations and predictions together. Figure 6 demonstrates how accurately the teeth were detected. Figure 6a,c show ground truth annotations for the impacted teeth, and Figure 6b,d show the corresponding predictions from the proposed solution using YOLOv3. The teeth were detected and assigned to the proper classes. Figure 6e–g show randomly selected detection results from ResNet50, AlexNet, and VGG16.

Figure 6.

Detection result samples from YOLOv3 (a–d): ground truths (a,c) and predictions (b,d); and from Faster RCNN with ResNet50 (e), AlexNet (f), and VGG16 (g).

The detection performance for each class was also investigated. Classes t1, t4, t5, and t8 showed AP@0.5 values of 0.96, 0.98, 0.984, and 0.995 and AP@0.5:0.95 values of 0.774, 0.775, 0.793, and 0.791, respectively. Table 3 shows the performance metrics of each class for the solution with YOLOv3.

Table 3.

Inter-class detection performances for the solution with YOLOv3.

| Class | AP@0.5 | AP@0.5:0.95 | Precision | Recall |
|---|---|---|---|---|
| t1 (mesioangular left) | 0.96 | 0.774 | 0.849 | 0.95 |
| t4 (horizontal left) | 0.98 | 0.775 | 0.96 | 0.833 |
| t5 (mesioangular right) | 0.984 | 0.793 | 0.908 | 0.987 |
| t8 (horizontal right) | 0.995 | 0.791 | 0.88 | 1 |

The mAP at an IoU threshold of 0.5 is a widely accepted detection metric for many real-life detection applications [13,57,58,66]. YOLOv3 showed promising performance with the highest accuracy, indicating that it was a successful detector of impacted third molars. It was superior to Faster RCNN with ResNet50, AlexNet, and VGG16 in terms of mAP and classification accuracy.

4. Discussion

A significant increase in the number of studies on artificial intelligence-based decision support systems has been seen in dentistry as well as in other fields of healthcare. In dentistry, an automated analysis and interpretation system in which radiographs are automatically analyzed to find defects is a fundamental goal. Previous studies used images from different imaging modalities, such as panoramic, periapical, and bitewing radiographs, for detection, segmentation, and classification purposes. Common topics included tooth detection, tooth numbering, and many other conditions such as caries, lesions, anatomical structures, and cysts [17,18,19,20,21,22,23,24,25,26,27,46,47,48,49,50,51,52,53,54,70]. In contrast, previous studies on mandibular third molar detection alone were rare and explored the performance of only one model architecture.

Lee et al. (2018) suggested a deep learning model to diagnose and predict periodontally compromised teeth. Their dataset consisted of 1740 periapical radiographic images, and the model reached a diagnostic accuracy of 76.7% for molar teeth. They concluded that the CNN algorithm was helpful for diagnosing periodontally compromised teeth and expected improved systems to perform better in time [71]. They compared the agreement between the expert observer and the AI application. The present work achieved higher accuracy with YOLOv3 and Faster RCNN–ResNet50. Other metrics were not reported.

Faure et al. (2021) proposed a method to automatically diagnose impacted teeth using Faster-RCNN with ResNet101, identifying impacted teeth with performances between 51.7% and 88.9% [38]. Angulation was not investigated; only one class for impacted third molars was used. The present work provides a more comprehensive analysis by using two detectors, three backbones, and a higher number of classes.

Basaran et al. (2021) suggested a model for the diagnostic charting of ten dental conditions, including impacted teeth, in panoramic radiography. Their model was based on Faster R-CNN Inception v2 and used 1084 radiographs with 796 impacted teeth. They reported that sensitivity and precision for impacted teeth were 0.96 and 0.77 [41]. In comparison, the present work achieved higher precision, but mAP was not reported in their study.

Tobel et al. (2017) used CNNs to develop an automated technique to monitor the development stages of the lower third molar on panoramic radiographs. They classified their growth into ten classes. They concluded that the performance was similar to staging by human observers but needed to be optimized for age estimation [72].

Vinayahalingam et al. (2019) implemented CNNs to detect and segment the inferior alveolar nerve and lower third molars on panoramic radiographs. The mean Dice coefficient for the third molar was 0.947 ± 0.033 [26]. In contrast to traditional simple architectures, deep CNNs succeed in edge detection thanks to multiple convolutional and hidden layers that provide hierarchical feature representations [73].

Kuwada et al. (2020) performed detection and classification for impacted supernumerary teeth in the anterior maxillary area [4]. This region was completely different from the region of third molars. Zhang et al. (2018) used 15 patient-related factors to predict postoperative facial swelling following impacted mandibular third molars extraction [39]. They used angulation of the third molar with respect to the second molar as a parameter but did not perform detection. Orhan et al. (2021) performed segmentation to detect third molar teeth with a precision value of 0.77 [40]. They used Cone-Beam Computed Tomography images and compared agreement between the human observer and AI application. The proposed work used panoramic images with higher precision in addition to evaluation metrics for detection.

Considering previous works, this work demonstrated an immediate and comprehensive solution for automated detection of mandibular third molar teeth using two types of detection techniques for the first time. This work also included two different third molar impaction classes, whereas previous works had only one class for all third molars, which limited direct comparison. Moreover, many previous works performed classification and segmentation tasks for different purposes, while this work focused only on the mandibular third molar detection problem. Although mAP is a standard evaluation metric for detection tasks in the computer vision field, it was not reported in previous works. In brief, it was not always possible to compare the works directly because of incompatibilities between (i) the type of radiography used, (ii) the evaluation metrics used, and (iii) the purposes.

The multi-label classification used in YOLOv3 performs better for datasets with overlapping labels than softmax, which assumes that each bounding box has exactly one class, an assumption that does not always hold in real-life applications. Additional techniques such as advanced image pre-processing, data augmentation, and more data can improve the proposed solution’s results and make it more robust.

This work has two limitations. First, the amount of data is limited. More panoramic radiographs will be collected and annotated to perform deep learning for more robust, reliable results. Second, mandibular third molars were used in this work due to their wider prevalence. Later, maxillary third molars will be analyzed as more data are collected.

5. Conclusions

The proposed solution aims to help dentists in their decision-making process. It is shown that four different models are successful in detecting third molars. The use of machine learning in dentistry has significant potential in diagnosis with high accuracy and precision. Diagnostic tools based on state-of-the-art deep learning models are reliable and robust auxiliary techniques for clinical decision-making, resulting in more efficient treatment planning for patients and clinician health management. In time, AI-based devices can be used as a standard tool in clinical practice and play a crucial role in providing diagnostic recommendations.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board (or Ethics Committee) of Ankara Yildirim Beyazit University (protocol code 2021-69 and date of approval 16 February 2021).

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting this study’s findings are not available and accessible due to ethical issues, patients’ and institutions’ data protection policies.

Conflicts of Interest

The author declares no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Zadrożny Ł., Regulski P., Brus-Sawczuk K., Czajkowska M., Parkanyi L., Ganz S., Mijiritsky E. Artificial Intelligence Application in Assessment of Panoramic Radiographs. Diagnostics. 2020;12:224. doi: 10.3390/diagnostics12010224.

- 2. Prados-Privado M., Villalón J.G., Martínez-Martínez C.H., Ivorra C. Dental Images Recognition Technology and Applications: A Literature Review. Appl. Sci. 2020;10:2856. doi: 10.3390/app10082856.

- 3. Perschbacher S. Interpretation of panoramic radiographs. Aust. Dent. J. 2012;57:40–45. doi: 10.1111/j.1834-7819.2011.01655.x.

- 4. Kuwada C., Ariji Y., Fukuda M., Kise Y., Fujita H., Katsumata A., Ariji E. Deep learning systems for detecting and classifying the presence of impacted supernumerary teeth in the maxillary incisor region on panoramic radiographs. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2020;130:464–469. doi: 10.1016/j.oooo.2020.04.813.

- 5. Panetta K., Rajendran R., Ramesh A., Rao S.P., Agaian S. Tufts Dental Database: A Multimodal Panoramic X-ray Dataset for Benchmarking Diagnostic Systems. IEEE J. Biomed. Health Inform. 2021 doi: 10.1109/JBHI.2021.3117575.

- 6. Hassoun M.H. Fundamentals of Artificial Neural Networks. MIT Press; London, UK: 1995.

- 7. Yegnanarayana B. Artificial Neural Networks. PHI Learning Pvt. Ltd.; Delhi, India: 2009.

- 8. Krogh A. What are artificial neural networks? Nat. Biotechnol. 2008;26:195–197. doi: 10.1038/nbt1386.

- 9. Bishop C.M., Nasrabadi N.M. Pattern Recognition and Machine Learning. Volume 4. Springer; New York, NY, USA: 2006. p. 768.

- 10. Jordan M.I., Mitchell T.M. Machine learning: Trends, perspectives, and prospects. Science. 2015;349:255–260. doi: 10.1126/science.aaa8415.

- 11. Wang S., Summers R.M. Machine learning and radiology. Med. Image Anal. 2012;16:933–951. doi: 10.1016/j.media.2012.02.005.

- 12. Skansi S. Introduction to Deep Learning: From Logical Calculus to Artificial Intelligence. Springer; Berlin/Heidelberg, Germany: 2018.

- 13. Goodfellow I., Bengio Y., Courville A. Deep Learning. MIT Press; London, UK: 2016.

- 14. Shin H.C., Roth H.R., Gao M., Lu L., Xu Z., Nogues I., Summers R.M. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imag. 2016;35:1285–1298. doi: 10.1109/TMI.2016.2528162.

- 15. Liu L., Ouyang W., Wang X., Fieguth P., Chen J., Liu X., Pietikäinen M. Deep learning for generic object detection: A survey. Int. J. Comput. Vis. 2020;128:261–318. doi: 10.1007/s11263-019-01247-4.

- 16. Khan A., Sohail A., Zahoora U., Qureshi A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020;53:5455–5516. doi: 10.1007/s10462-020-09825-6.

- 17. Schwendicke F., Golla T., Dreher M., Krois J. Convolutional neural networks for dental image diagnostics: A scoping review. J. Dent. 2019;91:103226. doi: 10.1016/j.jdent.2019.103226.

- 18. Leite A.F., Gerven A.V., Willems H., Beznik T., Lahoud P., Gaêta-Araujo H., Vranckx M., Jacobs R. Artificial intelligence driven novel tool for tooth detection and segmentation on panoramic radiographs. Clin. Oral Investig. 2021;25:2257–2267. doi: 10.1007/s00784-020-03544-6.

- 19. Parvez M.F., Kota M., Syoji K. Optimization technique combined with deep learning method for teeth recognition in dental panoramic radiographs. Sci. Rep. 2020;10:19261. doi: 10.1038/s41598-020-75887-9.

- 20. Tuzoff D.V., Tuzova L.N., Bornstein M.M., Krasnov A.S., Kharchenko M.A., Nikolenko S.I., Sveshnikov M.M., Bednenko G.B. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac. Radiol. 2019;48:20180051. doi: 10.1259/dmfr.20180051.

- 21. Chen H., Zhang K., Lyu P., Li H., Zhang L., Wu J., Lee C.H. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci. Rep. 2019;9:1–11. doi: 10.1038/s41598-019-40414-y.

- 22. Kim C., Kim D., Jeong H., Yoon S.J., Youm S. Automatic tooth detection and numbering using a combination of a CNN and heuristic algorithm. Appl. Sci. 2020;10:5624. doi: 10.3390/app10165624.

- 23. Kuwana R., Ariji Y., Fukuda M., Kise Y., Nozawa M., Kuwada C., Muramatsu C., Katsumata A., Fujita H., Ariji E. Performance of deep learning object detection technology in the detection and diagnosis of maxillary sinus lesions on panoramic radiographs. Dentomaxillofac. Radiol. 2021;50:20200171. doi: 10.1259/dmfr.20200171.

- 24.Chang H.J., Lee S.J., Yong T.H., Shin N.Y., Jang B.G., Kim J.E., Huh K.H., Lee S.S., Heo M.S., Choi S.C., et al. Deep learning hybrid method to automatically diagnose periodontal bone loss and stage periodontitis. Sci. Rep. 2020;10:7531. doi: 10.1038/s41598-020-64509-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Zheng Z., Yan H., Setzer F.C., Shi K.J., Mupparapu M., Li J. Anatomically constrained deep learning for automating dental CBCT segmentation and lesion detection. IEEE Trans. Autom. Sci. Eng. 2020;18:603–614. doi: 10.1109/TASE.2020.3025871. [DOI] [Google Scholar]

- 26.Vinayahalingam S., Xi T., Bergé S., Maal T., de Jong G. Automated detection of third molars and mandibular nerve by deep learning. Sci. Rep. 2019;9:9007. doi: 10.1038/s41598-019-45487-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Hiraiwa T., Ariji Y., Fukuda M., Kise Y., Nakata K., Katsumata A., Fujita H., Ariji E. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac. Radiol. 2019;48:20180218. doi: 10.1259/dmfr.20180218. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Carrillo-Perez F., Pecho O.E., Morales J.C., Paravina R.D., Della Bona A., Ghinea R., Herrera L.J. Applications of artificial intelligence in dentistry: A comprehensive review. J. Esthet. Restor. Dent. 2022;34:259–280. doi: 10.1111/jerd.12844. [DOI] [PubMed] [Google Scholar]

- 29.World Health Organization. International Statistical Classification of Diseases and Related Health Problems. Volume 2. WHO; Geneva, Switzerland: 2011. 10th Revision. [Google Scholar]

- 30.Rantanen A. The age of eruption of the third molar teeth. A clinical study based on Finnish university students. Acta Odontol. Scand. 1967;25:1–86. [Google Scholar]

- 31.Hugoson A., Kugelberg C. The prevalence of third molars in a Swedish population. An epidemiological study. Commun. Dent. Health. 1988;5:121–138. [PubMed] [Google Scholar]

- 32.Royal College of Surgeons Faculty of Dental Surgery. Parameters of Care for Patients Undergoing Mandibular Third Molar Surgery. 2020. (accessed on 23 April 2021). Available online: https://www.rcseng.ac.uk/-/media/files/rcs/fds/guidelines/3rd-molar-guidelines--april-2021.pdf.

- 33.Doğan N., Orhan K., Günaydin Y., Köymen R., Ökçu K., Üçok Ö. Unerupted mandibular third molars: Symptoms, associated pathologies, and indications for removal in a Turkish population. Quintessence Int. 2007;38:e497–e505. [PubMed] [Google Scholar]

- 34.Gümrükçü Z., Balaban E., Karabağ M. Is there a relationship between third-molar impaction types and the dimensional/angular measurement values of posterior mandible according to Pell and Gregory/Winter Classification? Oral Radiol. 2021;37:29–35. doi: 10.1007/s11282-019-00420-2. [DOI] [PubMed] [Google Scholar]

- 35.McGrath C., Comfort M.B., Lo E.C., Luo Y. Can third molar surgery improve quality of life? A 6-month cohort study. J. Oral. Maxillofac. Surg. 2003;61:759–763. doi: 10.1016/S0278-2391(03)00150-2. [DOI] [PubMed] [Google Scholar]

- 36.Savin J., Ogden G. Third molar surgery—A preliminary report on aspects affecting quality of life in the early postoperative period. Br. J. Oral Maxillofac. Surg. 1997;35:246–253. doi: 10.1016/S0266-4356(97)90042-5. [DOI] [PubMed] [Google Scholar]

- 37.McArdle L., Renton T. The effects of NICE guidelines on the management of third molar teeth. Br. Dent. J. 2012;213:E8. doi: 10.1038/sj.bdj.2012.780. [DOI] [PubMed] [Google Scholar]

- 38.Faure J., Engelbrecht A. International Work-Conference on Artificial Neural Networks. Springer; Cham, Switzerland: 2021. Impacted Tooth Detection in Panoramic Radiographs; pp. 525–536. [Google Scholar]

- 39.Zhang W., Li J., Li Z.B., Li Z. Predicting postoperative facial swelling following impacted mandibular third molars extraction by using artificial neural networks evaluation. Sci. Rep. 2018;8:12281. doi: 10.1038/s41598-018-29934-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Orhan K., Bilgir E., Bayrakdar I.S., Ezhov M., Gusarev M., Shumilov E. Evaluation of artificial intelligence for detecting impacted third molars on cone-beam computed tomography scans. J. Stomatol. Oral Maxillofac. Surg. 2021;122:333–337. doi: 10.1016/j.jormas.2020.12.006. [DOI] [PubMed] [Google Scholar]

- 41.Başaran M., Çelik Ö., Bayrakdar I.S., Bilgir E., Orhan K., Odabaş A., Jagtap R. Diagnostic charting of panoramic radiography using deep-learning artificial intelligence system. Oral Radiol. 2021:1–7. doi: 10.1007/s11282-021-00572-0. [DOI] [PubMed] [Google Scholar]

- 42.Yasa Y., Çelik Ö., Bayrakdar I.S., Pekince A., Orhan K., Akarsu S., Atasoy S., Bilgir E., Odabaş A., Aslan A.F. An artificial intelligence proposal to automatic teeth detection and numbering in dental bite-wing radiographs. Acta Odontol. Scand. 2020;11:275–281. doi: 10.1080/00016357.2020.1840624. [DOI] [PubMed] [Google Scholar]

- 43.Winter G. Impacted Mandibular Third Molars. American Medical Book, Co.; St Louis, MO, USA: 1926. [Google Scholar]

- 44.Pell G.J. Impacted mandibular third molars: Classification and modified techniques for removal. Dent Digest. 1933;39:330–338. [Google Scholar]

- 45.Tzutalin. LabelImg. Git Code. 2015. (accessed on 5 October 2015). Available online: https://github.com/tzutalin/labelImg.

- 46.Carter K., Worthington S. Predictors of third molar impaction: A systematic review and meta-analysis. J. Dent. Res. 2016;95:267–276. doi: 10.1177/0022034515615857. [DOI] [PubMed] [Google Scholar]

- 47.Jaroń A., Trybek G. The pattern of mandibular third molar impaction and assessment of surgery difficulty: A Retrospective study of radiographs in east Baltic population. Int. J. Environ. Res. Public Health. 2021;18:6016. doi: 10.3390/ijerph18116016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Zaman M.U., Almutairi N.S., Abdulrahman Alnashwan M., Albogami S.M., Alkhammash N.M., Alam M.K. Pattern of Mandibular Third Molar Impaction in Nonsyndromic 17760 Patients: A Retrospective Study among Saudi Population in Central Region, Saudi Arabia. BioMed Res. Int. 2021;2021:1880750. doi: 10.1155/2021/1880750. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Demirel O., Akbulut A. Evaluation of the relationship between gonial angle and impacted mandibular third molar teeth. Anat. Sci. Int. 2020;95:134–142. doi: 10.1007/s12565-019-00507-0. [DOI] [PubMed] [Google Scholar]

- 50.Hashemipour M.A., Tahmasbi-Arashlow M., Fahimi-Hanzaei F. Incidence of impacted mandibular and maxillary third molars: A radiographic study in a Southeast Iran population. Med. Oral Patol. Oral Cir. Bucal. 2013;18:e140. doi: 10.4317/medoral.18028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Eshghpour M., Nezadi A., Moradi A., Shamsabadi R.M., Rezaer N.M., Nejat A. Pattern of mandibular third molar impaction: A cross-sectional study in northeast of Iran. Niger. J. Clin. Pract. 2014;17:673–677. doi: 10.4103/1119-3077.144376. [DOI] [PubMed] [Google Scholar]

- 52.Goyal S., Verma P., Raj S.S. Radiographic evaluation of the status of third molars in Sriganganagar population—A digital panoramic study. Malays. J. Med. Sci. 2016;23:103. doi: 10.21315/mjms2016.23.6.11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Enabulele J.E., Obuekwe O.N. Prevalence of caries and cervical resorption on adjacent second molar associated with impacted third molar. J. Oral Maxillofac. Surg. Med. Pathol. 2017;29:301–305. doi: 10.1016/j.ajoms.2017.01.002. [DOI] [Google Scholar]

- 54.Passi D., Singh G., Dutta S., Srivastava D., Chandra L., Mishra S., Dubey M. Study of pattern and prevalence of mandibular impacted third molar among Delhi-National Capital Region population with newer proposed classification of mandibular impacted third molar: A retrospective study. Nat. J. Maxillofac. Surg. 2019;10:59. doi: 10.4103/njms.NJMS_70_17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Jiao L., Zhang F., Liu F., Yang S., Li L., Feng Z., Qu R. A survey of deep learning-based object detection. IEEE Access. 2019;7:128837–128868. doi: 10.1109/ACCESS.2019.2939201. [DOI] [Google Scholar]

- 56.He X., Zhao K., Chu X. AutoML: A survey of the state-of-the-art. Knowl. Based Syst. 2021;212:106622. doi: 10.1016/j.knosys.2020.106622. [DOI] [Google Scholar]

- 57.Ren S., He K., Girshick R., Sun J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015;28:91–99. doi: 10.1109/TPAMI.2016.2577031. [DOI] [PubMed] [Google Scholar]

- 58.Redmon J., Farhadi A. YOLOv3: An incremental improvement. arXiv. 2018. arXiv:1804.02767. [Google Scholar]

- 59.Liu W., Anguelov D., Erhan D., Szegedy C., Reed S., Fu C.Y., Berg A.C. SSD: Single Shot MultiBox Detector. In: Leibe B., Matas J., Sebe N., Welling M., editors. Computer Vision–ECCV 2016, ECCV 2016 Lecture Notes in Computer Science. Volume 9905. Springer; Cham, Switzerland: 2016. [DOI] [Google Scholar]

- 60.Lin T.Y., Goyal P., Girshick R., He K., Dollár P. Focal loss for dense object detection; Proceedings of the IEEE International Conference on Computer Vision; Venice, Italy. 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- 61.Krizhevsky A., Sutskever I., Hinton G.E. Imagenet classification with deep convolutional neural networks; Proceedings of the 25th International Conference on Neural Information Processing Systems-Volume 1 (NIPS’12); Red Hook, NY, USA. 3–6 December 2012; p. 25. [Google Scholar]

- 62.Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv. 2014. arXiv:1409.1556. [Google Scholar]

- 63.He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778. [Google Scholar]

- 64.Mao Q.C., Sun H.M., Liu Y.B., Jia R.S. Mini-YOLOv3: Real-time object detector for embedded applications. IEEE Access. 2019;7:133529–133538. doi: 10.1109/ACCESS.2019.2941547. [DOI] [Google Scholar]

- 65.Girshick R., Donahue J., Darrell T., Malik J. Rich feature hierarchies for accurate object detection and semantic segmentation; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Columbus, OH, USA. 24–27 June 2014; pp. 580–587. [Google Scholar]

- 66.Everingham M., Van Gool L., Williams C.K.I., Winn J., Zisserman A. The Pascal visual object classes (VOC) challenge. Int. J. Comput. Vis. 2009;88:303–338. doi: 10.1007/s11263-009-0275-4. [DOI] [Google Scholar]

- 67.Bell S., Zitnick C.L., Bala K., Girshick R. Inside-outside net: Detecting objects in context with skip pooling and recurrent neural networks; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 2874–2883. [Google Scholar]

- 68.Huang J., Rathod V., Sun C., Zhu M., Korattikara A., Fathi A., Murphy K. Speed/accuracy trade-offs for modern convolutional object detectors; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 7310–7311. [Google Scholar]

- 69.Van Etten A. Satellite imagery multiscale rapid detection with windowed networks; Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV); Waikoloa Village, HI, USA. 7–11 January 2019; pp. 735–743. [Google Scholar]

- 70.Ekert T., Krois J., Meinhold L., Elhennawy K., Emara R., Golla T., Schwendicke F. Deep learning for the radiographic detection of apical lesions. J. Endod. 2019;45:917–922. doi: 10.1016/j.joen.2019.03.016. [DOI] [PubMed] [Google Scholar]

- 71.Lee J.H., Kim D.H., Jeong S.N., Choi S.H. Diagnosis and prediction of periodontally compromised teeth using a deep learning-based convolutional neural network algorithm. J. Periodontal Implant. Sci. 2018;48:114–123. doi: 10.5051/jpis.2018.48.2.114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.De Tobel J., Radesh P., Vandermeulen D., Thevissen P.W. An automated technique to stage lower third molar development on panoramic radiographs for age estimation: A pilot study. J. Forensic Odontol. Stomatol. 2017;35:42. [PMC free article] [PubMed] [Google Scholar]

- 73.Wang R. Advances in Neural Networks–ISNN 2016, Proceedings of the 13th International Symposium on Neural Networks, ISNN 2016, St. Petersburg, Russia, 6–8 July 2016. Springer International Publishing; Cham, Switzerland: 2016. Edge detection using convolutional neural network; pp. 12–20. [Google Scholar]

Data Availability Statement

The data supporting this study’s findings are not publicly available owing to ethical restrictions and to patient and institutional data protection policies.