Abstract

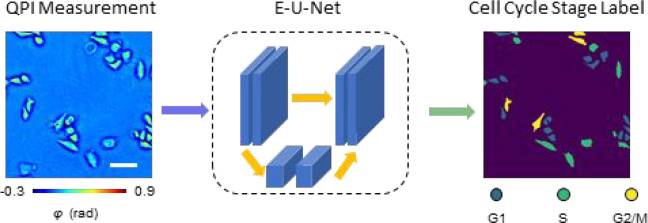

Traditional methods for cell cycle stage classification rely heavily on fluorescence microscopy to monitor nuclear dynamics. These methods inevitably face the typical phototoxicity and photobleaching limitations of fluorescence imaging. Here, we present a cell cycle detection workflow using the principle of phase imaging with computational specificity (PICS). The proposed method uses neural networks to extract cell cycle-dependent features directly from quantitative phase imaging (QPI) measurements. Our results indicate that this approach classifies live cells into the G1, S, and G2/M stages with F-1 scores over 0.75 for G1 and S and 0.6 for G2/M. We also demonstrate that the proposed method can be applied to study single-cell dynamics within the cell cycle as well as cell population distribution across different stages of the cell cycle. We envision that the proposed method can become a nondestructive tool to analyze cell cycle progression in fields ranging from cell biology to biopharma applications.

Keywords: deep learning, quantitative phase imaging, cell cycle, phase imaging with computational specificity

The cell cycle1 is an orchestrated process that leads to genetic replication and cellular division. This precise, periodic progression is crucial to a variety of processes, such as cell differentiation, organogenesis, senescence, and disease. Significantly, DNA damage can lead to cell cycle alteration and serious afflictions, including cancer.2 Conversely, understanding the cell cycle progression as part of the cellular response to DNA damage has emerged as an active field in cancer biology.3

Morphologically, the cell cycle can be divided into interphase and mitosis. The interphase1 can further be divided into three stages: G1, S, and G2. Since the cells are preparing for DNA synthesis and mitosis during G1 and G2 respectively, these two stages are also referred to as the “gaps” of the cell cycle.4 During the S stage, the cells are synthesizing DNA, with the chromosome count increasing from 2N to 4N.

Traditional approaches for distinguishing different stages within the cell cycle rely on fluorescence microscopy5 to monitor the activity of proteins that are involved in DNA replication and repair, e.g., proliferating cell nuclear antigen (PCNA).6 A variety of signal processing techniques, including support vector machine (SVM),7 intensity histogram and intensity surface curvature,8 level-set segmentation,9 and k-nearest neighbor,10 have been applied to fluorescence intensity images to perform classification. In recent years, with the rapid development of parallel-computing capability11 and deep learning algorithms,12 convolutional neural networks have also been applied to fluorescence images of single cells for cell cycle tracking.13,14 Since all these methods are based on fluorescence microscopy, they inevitably face the associated limitations, including photobleaching, chemical toxicity and phototoxicity, weak fluorescent signals that require long exposures, as well as nonspecific binding. These constraints limit the applicability of fluorescence imaging to studying live cell cultures over large temporal scales.15

Quantitative phase imaging (QPI)16 is a family of label-free imaging methods that has gained significant interest in recent years due to its applicability to both basic and clinical science.17 Since the QPI methods utilize the optical path length as intrinsic contrast, the imaging is noninvasive and, thus, allows for monitoring live samples over several days without concerns of degraded viability.17 As the refractive index is linearly proportional to the cell density,18 independent of the composition, QPI methods can be used to measure the nonaqueous content (dry mass) of the cellular culture.19 In the past two decades, QPI has also been implemented as a label-free tomography approach for measuring 3D cells and tissues.20−27 These QPI measurements directly yield biophysical parameters of interest in studying neuronal activity,28 quantifying subcellular contents,29 as well as monitoring cell growth along the cell cycle.30−32 Recently, with the parallel advancement in deep learning, convolutional neural networks were applied to QPI data as universal function approximators33 for various applications.34 It has been shown that deep learning can help computationally substitute chemical stains for cells35 and tissues,36 extract biomarkers of interest,37 enhance imaging quality,38 as well as solve inverse problems.39

In this article, we present a new methodology for cell cycle detection that utilizes the principle of phase imaging with computational specificity (PICS).37,40 Our approach combines spatial light interference microscopy (SLIM),41 a highly sensitive QPI method, with the recently developed deep learning network architecture E-U-Net.42 We demonstrate on live HeLa cell cultures that the proposed method classifies cell cycle stages solely using SLIM images as input. The signals from the fluorescent ubiquitination-based cell cycle indicator (FUCCI)43 were only used to generate ground truth annotations during the deep learning training stage. Unlike previous methods that perform single-cell classification based on bright-field and dark-field images from flow cytometry44 or phase images from ptychography,45 our method can classify all adherent cells in the field of view and perform longitudinal studies over many cell cycles. Evaluated on a test set consisting of 408 unseen SLIM images (over 10 000 cells), our method achieves F-1 scores over 0.75 for both the G1 and S stages. For the G2/M stage, we obtained a lower score of 0.6, likely due to the round cells going out of focus in the M stage. Using the classification output of our method, we created binary maps that were applied back to the QPI (input) images to measure single cell area, dry mass, and dry mass density for large cell populations in the three cell cycle stages. Because our SLIM imaging is nondestructive, all individual cells can be monitored over many cell cycles without loss of viability. We envision that, after proper network retraining, our proposed method can be extended to other QPI imaging modalities and different cell lines, even those of different morphology, for high-throughput and nondestructive cell cycle analysis, thus eliminating the need for cell synchronization.

Results

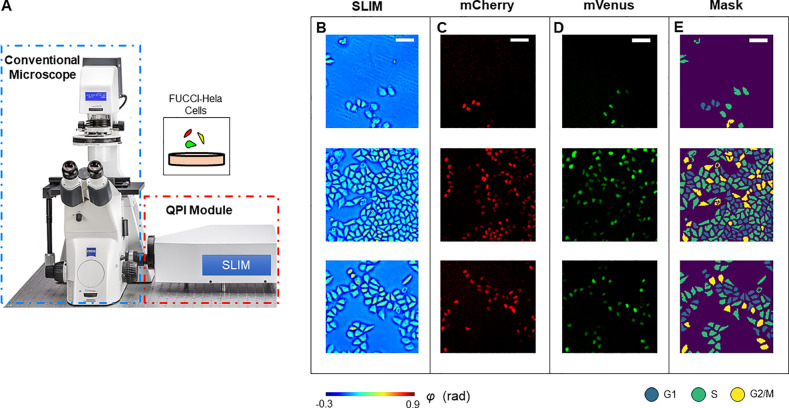

The experiment setup is illustrated in Figure 1. We utilized spatial light interference microscopy (SLIM)41 to acquire the quantitative phase map of live HeLa cells prepared in six-well plates. By adding a QPI module to an existing phase contrast microscope, SLIM modulates the phase delay between the incident field and the scattered field, and an optical path length map is then extracted from four intensity images via phase-shifting interferometry.16 Due to the common-path design of the optical system, we were able to acquire both the SLIM signals and epi-fluorescence signals of the same field of view (FOV) using a shared camera. Figure 1B shows the quantitative phase map of live HeLa cell cultures using SLIM.

Figure 1.

Schematic of the imaging system. (A) The SLIM module was connected to the side port of an existing phase contrast microscope. This setup allows us to take colocalized SLIM images and fluorescence images by switching between transmission and reflection illumination. (B) Measurements of HeLa cells. (C) mCherry fluorescence signals. (D) mVenus fluorescence signals. (E) Cell cycle stage masks generated by using adaptive thresholding to combine information from all three channels. Scale bar is 100 μm.

To obtain an accurate classification between the three stages within one cell cycle interphase (G1, S, and G2), we used HeLa cells that were encoded with fluorescent ubiquitination-based cell cycle indicator (FUCCI).43 FUCCI employs mCherry, an hCdt1-based probe, and mVenus, an hGem-based probe, to monitor proteins associated with the interphase. FUCCI transfected cells produce a sharp triple color-distinct separation of G1, S, and G2/M. Figure 1C and 1D demonstrate the acquired mCherry signal and mVenus signal, respectively. We combined the information from all three channels via adaptive thresholding to generate a cell cycle stage mask (Figure 1E). The procedure of sample preparation and mask generation is presented in detail in the Materials and Methods section and Figure S1.

Deep Learning

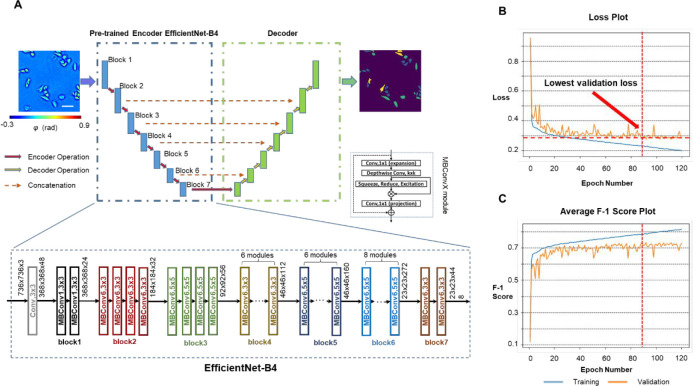

With the SLIM images as input and the FUCCI cell masks as ground truth, we formulated the cell cycle detection problem as a semantic segmentation task and trained a deep neural network to infer each pixel’s category as one of the “G1”, “S”, “G2/M”, or background labels. Inspired by the high accuracy reported in previous works,42 we used the E-U-Net (Figure 2A) as our network architecture. The E-U-Net architecture upgraded the classic U-Net46 by swapping its original encoder layers with a pretrained EfficientNet.47 Since the EfficientNet was already trained on the massive ImageNet data set, it provided more sophisticated initial weights than the randomly initialized layers from the scratch U-Net as in previous approaches.37 This transfer learning strategy enables the model to utilize “knowledge” of feature extraction learned from the ImageNet data set, achieving faster convergence and better performance.42 Since EfficientNet was designed using a compound scaling coefficient, it is still relatively small in size. Our trained network used EfficientNet-B4 as the encoder and contained 25 million trainable parameters in total.

Figure 2.

PICS training procedure. (A) We used a network architecture called the E-U-Net that replaces the encoder part of a standard U-Net with the pretrained EfficientNet-B4. Within the encoder path, the input images were downsampled 5 times through 7 blocks of encoder operations. Each encoder operation consists of multiple MBConvX modules that consist of convolutional layers, squeeze and excitation, and residual connections. The decoder path consists of concatenation, convolution and upsampling operations. (B) The model loss values on the training data set and the validation data set after each epoch. We picked the model checkpoint with the lowest validation loss as our final model and used it for all analysis. (C) The model’s average F-1 score on the training data set and the validation data set after each epoch.

We trained our E-U-Net with 2046 pairs of SLIM images and ground truth masks for 120 epochs. The network was optimized by an Adam optimizer48 against the sum of the DICE loss49 and the categorical focal loss.50 After each epoch, we computed the model’s loss and overall F1-score on both the training set and the validation set, which consists of 408 different image pairs (Figure 2B,C). The weights of parameters that make the model achieve the lowest validation loss were selected and used for all verification and analysis. The training procedure is described in the Materials and Methods.

PICS Performance

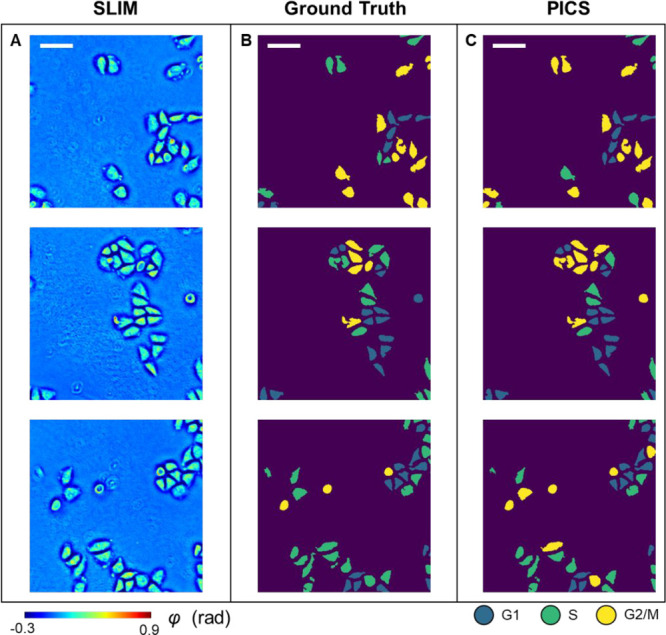

After training the model, we evaluated its performance on 408 unseen SLIM images from the test data set. The test data set was selected from wells that are different from the ones used for network training and validation during the experiment. Figure 3A shows randomly selected images from the test data set. Figure 3B and 3C show the corresponding ground truth cell cycle masks and the PICS cell cycle masks, respectively. It can be seen that the trained model was able to identify the cell body accurately.

Figure 3.

PICS results on the test data set. (A) SLIM images of HeLa cells from the test data set. (B) Ground truth cell cycle phase masks. (C) PICS-generated cell cycle phase masks. Scale bar is 100 μm.

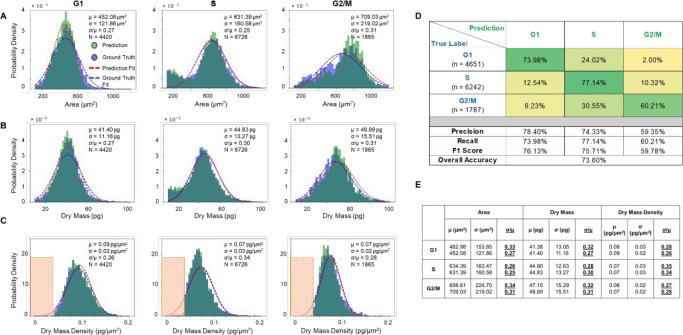

We reported the raw performance of our PICS method in Figure S2, with pixel-wise precision, recall, and F-1 score for each class. However, we noticed that these metrics did not reflect the performance in terms of the number of cells. Thus, we performed a postprocessing step on the inferred masks to enforce particle-wise consistency, as detailed in the Materials and Methods and Figure S3. After this postprocessing step, we evaluated the model’s performance on the cellular level and produced the cell count-based results shown in Figure 4. Figure 4A shows the histogram of cell body area for cells in different stages, derived from both the ground truth masks and the prediction masks. Figure 4B and 4C show similar histograms of cellular dry mass and dry mass density, respectively. The histograms indicated that there is a close overlap between the quantities derived from the ground truth masks and the prediction masks. The cell-wise precision, recall, and F-1 score for all three stages are shown in Figure 4D. Each entry is normalized with respect to the ground truth number of cells in that stage. Our deep learning model achieved over 0.75 F-1 scores for both the G1 stage and the S stage, and a 0.6 F-1 score for the G2/M stage. The lower performance for the G2/M stage is likely due to the round cells going out of focus during mitosis. To better compare the performance of our PICS method with the previously reported works, we produced two more confusion matrices (Figure S4) by merging labels to quantify the accuracy of our method in classifying cells into [“G1/S”, “G2/M”] and [“G1”, “S/G2/M”]. For all the classification formulations, we also computed the overall accuracy. Compared to the overall accuracy of 0.91 from a method13 that used convolutional neural networks on fluorescence image pairs to classify single cells into “G1/S” or “G2”, our method achieved a comparable overall accuracy of 0.89 (Figure S4A). Compared to the F-1 scores of 0.94 and 0.88 for “G1” and “S/G2”, respectively, from a method14 that used convolutional neural networks on fluorescence images, our method achieved a lower F-1 score for “G1” and a comparable F-1 score for “S/G2/M” (Figure S4B). Compared to the method44 that classifies single-cell images from flow cytometry, our method achieved a lower F-1 score for “G1” and “G2/M” and a higher F-1 score for “S”.
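As an illustration of how cell-wise metrics and merged-label confusion matrices of this kind can be computed, the following is a minimal NumPy sketch. The function names and the cell counts are hypothetical and chosen only for illustration; they are not the values reported in Figure 4D or Figure S4.

```python
import numpy as np

def per_class_metrics(cm):
    """Precision, recall, F-1 per class and overall accuracy.

    cm[i, j] = number of cells with ground truth class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    precision = tp / cm.sum(axis=0)   # column sums = predicted counts
    recall = tp / cm.sum(axis=1)      # row sums = ground truth counts
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy

def merge_classes(cm, groups):
    """Collapse a confusion matrix so each group of class indices becomes one class."""
    cm = np.asarray(cm, dtype=float)
    merged = np.zeros((len(groups), len(groups)))
    for a, rows in enumerate(groups):
        for b, cols in enumerate(groups):
            merged[a, b] = cm[np.ix_(rows, cols)].sum()
    return merged

# Made-up counts for illustration only (class order: G1, S, G2/M).
cm = np.array([[80, 15, 5],
               [10, 120, 20],
               [5, 15, 30]])
precision, recall, f1, accuracy = per_class_metrics(cm)

# Merge G1 and S into a single "G1/S" class, keeping "G2/M" separate.
cm_g1s_vs_g2m = merge_classes(cm, [[0, 1], [2]])
```

Dividing each row of `cm` by its sum gives the normalization with respect to the ground truth number of cells in each stage.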

Figure 4.

PICS performance on the test data set. (A–C) Histograms of cell area, dry mass and dry mass density for cells in G1, S, and G2/M, generated by the ground truth mask (in blue) and by PICS (in green). A Gaussian distribution (in blue) was fitted to the ground truth data and another Gaussian distribution (in red) was fitted to the PICS results. (D) Confusion matrix for PICS inference on the test data set. (E) Mean, standard deviation and their ratio (underlined for visibility) of cell area, dry mass and dry mass density obtained from the fitted Gaussian distributions. The top number is the fitted parameter on the ground truth population, while the bottom number is fitted on the PICS prediction population.

We calculated the means and standard deviations of the best fit Gaussian for the area, dry mass, and dry mass density distributions for populations of cells in each of the three stages: G1 (N = 4430 cells), S (N = 6726 cells), and G2/M (N = 1865 cells). The standard deviation divided by the mean, σ/μ, is a measure of the distribution spread. These values are indicated in each panel of Figures 4A–C and summarized in Figure 4E (the top parameter was from the ground truth population and the bottom parameter was from the PICS prediction population). We note that the G1 phase is associated with distributions that are most similar to a Gaussian. It is interesting that the S-phase exhibits a bimodal distribution in both area and dry mass, indicating the presence of a subpopulation of smaller cells at the end of G1 phase. However, the dry mass density even for this bimodal population becomes monomodal, suggesting that the dry mass density is a more uniformly distributed parameter, independent of cell size and weight. Similarly, the G2/M area and dry mass distributions are skewed toward the origin, while the dry mass density appears to have a minimum value of ∼0.0375 pg/μm² (within the orange rectangles). Interestingly, early studies of fibroblast spreading also found that there is a minimum value for the dry mass density that cells seem to exhibit.51

PICS Application

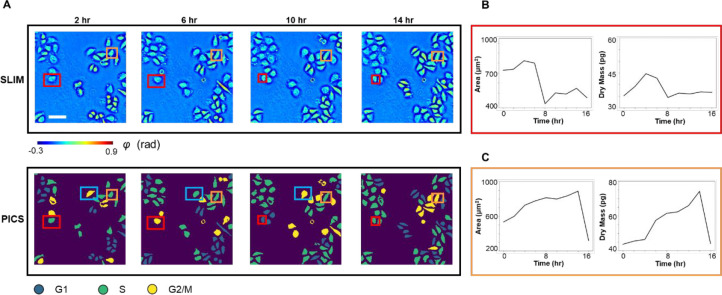

The PICS method can be applied to track the cell cycle transition of single cells, nondestructively. Figure 5A shows the time-lapse SLIM measurements and PICS inference of HeLa cells. The time increment was roughly 2 h between two measurements and the images at t = 2, 6, 10, and 14 h are displayed in Figure 5A. Our deep learning model had not seen any of these SLIM images during training. The comparison between the SLIM images and the PICS inference showed that the deep learning model produced accurate cell body masks and assigned plausible cell cycle stages. We showed in Figure 5B,C the results of manually tracking two cells in this field of view across 16 h and using the PICS cell cycle masks to compute their cellular area and dry mass. Figure 5B demonstrates the cellular area and dry mass change for the cell marked by the red rectangle. We observed an abrupt drop in both the area and dry mass around t = 8 h, at which point the mother cell divides into two daughter cells. The PICS cell cycle mask also captured this mitosis event as it progressed from the “G2/M” label to the “G1” label. We observed a similar drop in Figure 5C after 14 h due to mitosis of the cell marked by the orange rectangle. Figure 5C also shows that the cell continues growing before t = 14 h and the PICS cell cycle mask progressed from the “S” label to the “G2/M” label correspondingly. Note that this long-term imaging is only possible due to the nondestructive imaging allowed by SLIM. It is possible that the PICS inference will produce inaccurate stage labels for some frames. For instance, PICS inferred the label “G2/M” for the cell marked by the blue rectangle at t = 2 and 10 h, but inferred the label “S” for the same cell at t = 6 h. Such inconsistencies can be corrected manually by using the time-lapse progression of the measurement as well as the cell morphology measurements from the SLIM image.

Figure 5.

PICS on time lapse of FUCCI-HeLa cells. (A) SLIM images and PICS inference of cells measured at 2, 6, 10, and 14 h. The time interval between imaging is roughly 2 h. We manually tracked two cells (marked in red and orange). (B) Cell area and dry mass change of the cell in the red rectangle, across 16 h. These values were obtained via PICS inferred masks. We can observe an abrupt drop in cell dry mass and area as the cell divides after around 8 h. (C) Cell area and dry mass change of the cell in orange rectangle, across 16 h. We can observe that the cell continues growing in the first 14 h as it goes through G1, S, and G2 phase. It divides between hour 14 and hour 16, with an abrupt drop in its dry mass and cell area. Scale bar is 100 μm.

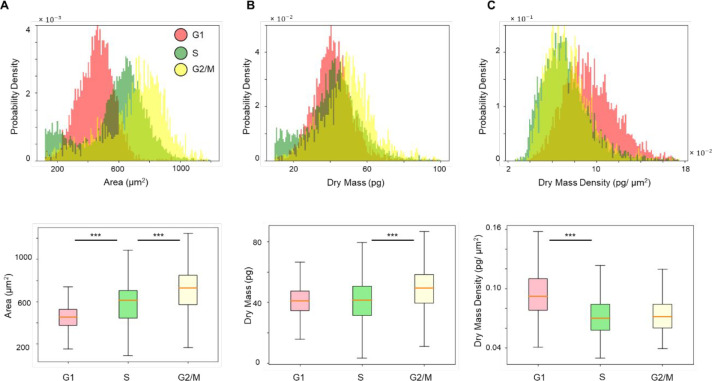

We also demonstrated that the PICS method can be used to study the statistical distribution of cells across different stages within the interphase. The PICS inferred cell area distribution across G1, S, and G2/M is plotted in Figure 6A, whereby a clear shift between cellular area in these stages can be observed. We performed Welch’s t test on these three groups of data points. To avoid the impact on p-value due to the large sample size, we randomly sampled 20% of all data points from each group and performed the t test on these subsets instead. After sampling, we have 884 cells in G1, 1345 cells in S, and 373 cells in G2/M. The p-values are less than 10⁻³, indicating statistical significance. The same analysis was performed on the cell dry mass and cell dry mass density, as shown in Figure 6B,C. We observed a clear distinction between cell dry mass in S and G2/M as well as between cell dry mass density in G1 and S. These results agree with the general expectation that cells are metabolically active and grow during G1 and G2. During S, the cells remain metabolically inactive and replicate their DNA. Since the DNA dry mass only accounts for a very small fraction of the total cell dry mass,32 the distinction between G1 cell dry mass and S cell dry mass is less obvious than the distinction between S cell dry mass and G2/M cell dry mass. We also noted that our observation on the cell dry mass density distribution agrees with previous findings.31
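The subsampled Welch’s t test described above can be sketched as follows. This is a minimal illustration using `scipy.stats.ttest_ind` with `equal_var=False`; the helper name `subsampled_welch`, the random seeds, and the synthetic cell areas are assumptions made for the example and do not reproduce the measured data.

```python
import numpy as np
from scipy import stats

def subsampled_welch(a, b, fraction=0.2, seed=0):
    """Welch's t test on a random subset (without replacement) of each group."""
    rng = np.random.default_rng(seed)
    a = np.asarray(a)
    b = np.asarray(b)
    a_sub = rng.choice(a, size=max(2, round(fraction * a.size)), replace=False)
    b_sub = rng.choice(b, size=max(2, round(fraction * b.size)), replace=False)
    # equal_var=False selects Welch's test (no equal-variance assumption).
    t_stat, p_value = stats.ttest_ind(a_sub, b_sub, equal_var=False)
    return t_stat, p_value, a_sub.size, b_sub.size

# Synthetic cell areas (arbitrary units), for illustration only.
rng = np.random.default_rng(1)
area_g1 = rng.normal(1000.0, 200.0, size=4430)
area_s = rng.normal(1300.0, 250.0, size=6726)

t_stat, p_value, n_g1, n_s = subsampled_welch(area_g1, area_s)
```

Welch’s variant is the natural choice here because the groups differ in both size and spread.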

Figure 6.

Statistical analysis from PICS inference on the test data set. (A) Histogram and box plot of cell area. The p-value returned from Welch’s t test indicated statistical significance. (B) Histogram and box plot of cell dry mass. The p-value returned from Welch’s t test indicated statistical significance. (C) Histogram and box plot of cell dry mass density. The p-value returned from Welch’s t test indicated statistical significance comparing cells in G1 and S. The box plot and Welch’s t test are computed on 20% of all data points in G1, S, and G2/M, randomly sampled. The sample size is 884 for G1, 1345 for S, and 373 for G2/M. Outliers are omitted from the box plot. (***p < 0.001).

Discussion

We proposed a PICS-based cell cycle stage classification workflow for fast, label-free cell cycle analysis on adherent cell cultures and demonstrated it on the HeLa cell line. Our new method utilizes trained deep neural networks to infer an accurate cell cycle mask from a single SLIM image. The method can be applied to study single-cell growth within the cell cycle as well as to compare the cellular parameter distributions between cells in different cell cycle phases.

Compared to many existing methods of cell cycle detection,7−10,13,14,44,45,52 our method yielded comparable accuracy for at least one stage in the cell cycle interphase. The errors in our PICS inference can be corrected when the time-lapse progression and QPI measurements of cell morphology are taken into consideration. Due to the difference in the underlying imaging modality and data analysis techniques, we believe that our method has three main advantages. First, our method uses a SLIM module, which can be installed as an add-on component to a conventional phase contrast microscope. The user experience remains the same as using a commercial microscope. Significantly, due to the seamless integration with the fluorescence channel on the same field of view, the instrument can collect the ground truth data very easily, while the annotation is automatically performed via thresholding, rather than manually. Second, our method does not rely on fluorescence signals as input. On the contrary, our method is built upon the capability of neural networks to extract label-free cell cycle markers from the quantitative phase map. Thus, the method can be applied to live cell samples over long periods of time without concerns of photobleaching or degraded cell viability due to chemical toxicity, opening up new opportunities for longitudinal investigations. Third, our approach can be applied to large sample sizes consisting of entire fields of view and hundreds of cells. Since we formulated the task as semantic segmentation and trained our model on a data set containing images with various cell counts, our method worked with FOVs containing up to hundreds of cells. Also, since the U-Net46 style neural network is fully convolutional, our trained model can be applied to images of arbitrary size. Consequently, the method can directly extend to other cell data sets or experiments with different cell confluences, as long as the magnification and numerical aperture stay the same. Since the imaging is nondestructive, we can image large cell populations over many cell cycles and study cell cycle phase-specific parameters at the single cell scale. As an illustration of this capability, we measured distributions of cell area, dry mass and dry mass density for populations of thousands of cells in various stages of the cell cycle. We found that the dry mass density distribution drops abruptly under a certain value for all cells, which indicates that live cells require a minimum dry mass density.

During the development of our method, we followed standard protocols in the community,53 such as preparing a diverse enough training data set, properly splitting the training, validation and test data set, and closely monitoring the model loss convergence to ensure that our model can generalize. Our previous studies showed that, with high-quality ground truth data, the deep learning-based methods applied to quantitative phase images are generalizable to predict cell viability54 and nuclear cytoplasmic ratio37 on multiple cell lines. Thus, although we only demonstrated our method on HeLa cells due to the limited availability of cell lines engineered with FUCCI(CA)2, we believe PICS-based instruments are well-suited for extending our method to different cell lines and imaging conditions with minimal effort to perform extra training. Our typical training takes approximately 20 h, while the inference is performed within 65 ms per frame.37 Thus, we envision that our proposed workflow is a valuable alternative to the existing methods for cell cycle stage classification and eliminates the need for cell synchronization.

Materials and Methods

FUCCI Cell and HeLa Cell Preparation

HeLa/FUCCI(CA)2 cells43 were acquired from the RIKEN cell bank and kept frozen in a liquid nitrogen tank. Prior to the experiments, we thawed the cells and cultured them in T75 flasks in Dulbecco’s Modified Eagle Medium (DMEM with low glucose) containing 10% fetal bovine serum (FBS), incubated at 37 °C with 5% CO₂. When the cells reached 70% confluency, the flask was washed with phosphate-buffered saline (PBS) and trypsinized with 4 mL of 0.25% (w/v) Trypsin EDTA for 4 min. When the cells started to detach, they were suspended in 4 mL of DMEM and passaged onto a glass-bottom six-well plate. HeLa cells were then imaged after 2 days of growth.

SLIM Imaging

The SLIM system architecture is shown in Figure 1A. We attached a SLIM module (CellVista SLIM Pro; Phi Optics) to the output port of a phase contrast microscope. Inside the SLIM module, the spatial light modulator matched to the back focal plane of the objective controlled the phase delay between the incident field and the scattered field. We recorded four intensity images at phase shifts of 0, π/2, π, and 3π/2 and reconstructed the quantitative phase map of the sample. We measured both the SLIM signal and the fluorescence signal with a 10×/0.3 NA objective. The camera we used was an Andor Zyla with a pixel size of 6.5 μm. The exposure times for the SLIM channel and the fluorescence channel were set to 25 and 500 ms, respectively. The scanning of the multiwell plate was performed automatically via control software developed in-house.37,55 For each well, we scanned an area of 7.5 × 7.5 mm², which took approximately 16 min for the SLIM and the fluorescence channels. The data set we used in this study was collected over 20 h, with an interval of approximately 30 min between consecutive rounds of scanning.
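To make the four-frame acquisition concrete, the sketch below shows the generic four-step phase-shifting estimator under the assumed intensity model I_k = A + B cos(Δφ + kπ/2) for k = 0, 1, 2, 3. It recovers only the phase difference Δφ between the two interfering fields; the complete SLIM reconstruction involves further steps that are not described in this section and are not shown here. The function name is hypothetical.

```python
import numpy as np

def four_step_phase(i0, i1, i2, i3):
    """Phase difference from frames at shifts 0, pi/2, pi, 3*pi/2.

    Assumes I_k = A + B*cos(dphi + k*pi/2), so that
    I_0 - I_2 = 2*B*cos(dphi) and I_3 - I_1 = 2*B*sin(dphi).
    """
    return np.arctan2(i3 - i1, i0 - i2)

# Synthetic check: build four frames from a known phase and recover it.
true_dphi = np.linspace(-3.0, 3.0, 7)
frames = [2.0 + 1.0 * np.cos(true_dphi + k * np.pi / 2) for k in range(4)]
recovered = four_step_phase(*frames)
```

Using `arctan2` rather than a plain arctangent keeps the result in the full (−π, π] range and cancels both the background A and the modulation depth B.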

Cellular Dry Mass Computation

We recovered the dry mass as

$$ m(x,y) = \frac{\lambda}{2\pi\gamma}\,\phi(x,y) \tag{1} $$

using the same procedure outlined in previous works.18,19 λ = 550 nm is the central wavelength; γ = 0.2 mL/g is the specific refraction increment, corresponding to the average of reported values;18,56 and ϕ(x,y) is the measured phase. Eq 1 provides the dry mass density at each pixel, and we integrated over the region of interest to get the cellular dry mass.
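A minimal sketch of this computation is given below, assuming eq 1 takes the standard form m(x,y) = λϕ(x,y)/(2πγ). The unit conversion uses 1 mL/g = 1 μm³/pg. The sample-plane pixel size of 0.65 μm is an assumption (the 6.5 μm camera pixel divided by the 10× magnification, ignoring any additional relay magnification), and the function names are hypothetical.

```python
import numpy as np

WAVELENGTH_UM = 0.55        # central wavelength, 550 nm
GAMMA_UM3_PER_PG = 0.2      # 0.2 mL/g expressed in um^3/pg
PIXEL_SIZE_UM = 0.65        # ASSUMED sample-plane pixel size (6.5 um / 10x)

def dry_mass_density(phase_rad):
    """Dry mass density in pg/um^2 from a phase map in radians."""
    return WAVELENGTH_UM / (2 * np.pi * GAMMA_UM3_PER_PG) * phase_rad

def cell_metrics(phase_rad, cell_mask, pixel_size_um=PIXEL_SIZE_UM):
    """Area (um^2), dry mass (pg), and mean dry mass density (pg/um^2) of one cell."""
    pixel_area = pixel_size_um ** 2
    area = cell_mask.sum() * pixel_area
    # Integrate the density over the region of interest.
    mass = dry_mass_density(phase_rad)[cell_mask].sum() * pixel_area
    return area, mass, mass / area

# Toy example: a 10 x 10 pixel "cell" with a uniform phase of 1 rad.
phase = np.zeros((20, 20))
phase[5:15, 5:15] = 1.0
mask = phase > 0
area_um2, mass_pg, density_pg_um2 = cell_metrics(phase, mask)
```

With these constants, 1 rad of phase corresponds to roughly 0.44 pg/μm² of dry mass density.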

Ground Truth Cell Cycle Mask Generation

To prepare the ground truth cell cycle masks for training the deep learning models, we combined information from the SLIM channel and the fluorescence channels (Figure S1A) by applying adaptive thresholding (Figure S1B). All the code was implemented in Python, using the scikit-image library. We first applied the adaptive thresholding algorithm on the SLIM images to generate accurate cell body masks. Then we applied the algorithm on the mCherry fluorescence images and mVenus fluorescence images to get the nuclei masks that indicate the presence of the fluorescence signals. To ensure the quality of the generated masks, we first applied the adaptive thresholding algorithm on a small subset of images with a range of possible window sizes. Then we manually inspected the quality of the generated masks and selected the best window size to apply to the entire data set. After getting these three masks (cell body mask, mCherry FL mask, and mVenus FL mask), we took the intersection among them. Following the FUCCI color readout detailed in ref (43), the presence of the mCherry signal alone indicates that the cell is in the G1 stage and the presence of the mVenus signal alone indicates that the cell is in the S stage. The overlap of both signals indicates that the cell is in the G2 or M stage. Since the cell mask is always larger than the nuclei mask, we filled in the entire cell area with the corresponding label. To do so, we performed connected component analysis on the cell body mask, counted the number of pixels marked by each fluorescence signal in each cell body, and took the majority label. We handled cells with no fluorescence signal by automatically labeling them as S because both fluorescence channels yield low-intensity signals only at the start of the S phase.43 Before using the masks for analysis, we also performed traditional computer vision operations, e.g., hole filling, on the generated masks to ensure the accuracy of the computed dry mass and cell area (Figure S1C).
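The label-assignment rule described above can be sketched as follows. This is an illustrative reading of the text, not the authors' code: it assumes the thresholding and connected component analysis have already produced an integer cell body label image (for example with scikit-image) and two boolean nuclear masks. The function name, the integer stage codes, and the tie-breaking behavior are assumptions.

```python
import numpy as np

G1, S, G2M = 1, 2, 3  # hypothetical integer codes; 0 is background

def label_cells(cell_labels, mcherry_mask, mvenus_mask):
    """Assign one stage per cell body by majority vote over its pixels.

    cell_labels: int image, 0 = background, k > 0 = k-th cell body.
    mcherry_mask, mvenus_mask: boolean images of thresholded fluorescence.
    """
    mcherry_mask = np.asarray(mcherry_mask, dtype=bool)
    mvenus_mask = np.asarray(mvenus_mask, dtype=bool)

    # Per-pixel FUCCI readout: mCherry alone -> G1, mVenus alone -> S, both -> G2/M.
    pixel_stage = np.zeros(cell_labels.shape, dtype=int)
    pixel_stage[mcherry_mask & ~mvenus_mask] = G1
    pixel_stage[~mcherry_mask & mvenus_mask] = S
    pixel_stage[mcherry_mask & mvenus_mask] = G2M

    stage_mask = np.zeros_like(pixel_stage)
    for cell_id in np.unique(cell_labels):
        if cell_id == 0:
            continue
        body = cell_labels == cell_id
        votes = np.bincount(pixel_stage[body], minlength=4)[1:]
        # No fluorescence at all -> S; ties resolve to the lower code (assumption).
        stage = S if votes.sum() == 0 else int(np.argmax(votes)) + 1
        stage_mask[body] = stage  # fill the whole cell body with the label
    return stage_mask

# Toy example with three cell bodies.
cells = np.zeros((6, 12), dtype=int)
cells[1:5, 0:4], cells[1:5, 4:8], cells[1:5, 8:12] = 1, 2, 3
mcherry = np.zeros_like(cells, dtype=bool)
mvenus = np.zeros_like(cells, dtype=bool)
mcherry[2:4, 1:3] = True                         # cell 1: mCherry only
mcherry[2:4, 5:7] = mvenus[2:4, 5:7] = True      # cell 2: both signals
stage_mask = label_cells(cells, mcherry, mvenus)  # cell 3: no signal
```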

Deep Learning Model Development

We used the E-U-Net architecture42 to develop the deep learning model that can assign a cell cycle phase label to each pixel. The E-U-Net upgraded the classic U-Net46 architecture by swapping its encoder component with a pretrained EfficientNet.47 Compared to previously reported transfer-learning strategies, e.g., utilizing a pretrained ResNet57 for the encoder part, we believe the E-U-Net architecture is superior since the pretrained EfficientNet attains higher performance on the benchmark data set while remaining compact due to the compound scaling strategy.47

The EfficientNet backbone we ended up using for this project was EfficientNet-B4 (Figure 2A). The entire E-U-Net-B4 model contains around 25 million trainable parameters, which is fewer than the stock U-Net46 and other variations.58 We trained the network with 2046 image pairs in the training data set and 408 image pairs in the validation data set. Each image contains 736 × 736 pixels. The model was optimized using an Adam optimizer48 with default parameters against the sum of the DICE loss49 and the categorical focal loss.50 The DICE loss was designed to maximize the dice coefficient D (eq 2) between the ground truth label ($g_i$) and prediction label ($p_i$) at each pixel. It has been shown in previous works that DICE loss can help tackle class imbalance in the data set.59 Besides DICE loss, we also utilized the categorical focal loss FL($p_t$) (eq 3). The categorical focal loss extended the cross entropy loss by adding a modulating factor $(1 - p_t)^{\gamma}$. It helped the model focus more on wrong inferences by preventing easily classified pixels from dominating the gradient. We tuned the ratio between these two loss values and launched multiple training sessions. In the end, we found that the model trained against an equally weighted DICE loss and categorical focal loss gave the best results.

$$ D = \frac{2\sum_{i}^{N} p_i g_i}{\sum_{i}^{N} p_i^{2} + \sum_{i}^{N} g_i^{2}} \tag{2} $$

$$ \mathrm{FL}(p_t) = -(1 - p_t)^{\gamma}\,\log(p_t) \tag{3} $$
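For illustration, an equally weighted sum of a DICE loss and a categorical focal loss can be sketched in NumPy as follows. Several details are assumptions rather than statements about the trained model: the dice coefficient is written with squared terms in the denominator, the focusing parameter is set to γ = 2, no class weighting is applied, and the function names are hypothetical.

```python
import numpy as np

def dice_loss(g, p, eps=1e-7):
    """1 - D, with D = 2*sum(p*g) / (sum(p^2) + sum(g^2)) (assumed form)."""
    intersection = np.sum(g * p)
    denominator = np.sum(g ** 2) + np.sum(p ** 2)
    return 1.0 - 2.0 * intersection / (denominator + eps)

def categorical_focal_loss(g, p, gamma=2.0, eps=1e-7):
    """Cross entropy scaled by the modulating factor (1 - p_t)^gamma."""
    p = np.clip(p, eps, 1.0 - eps)
    per_pixel = -np.sum(g * (1.0 - p) ** gamma * np.log(p), axis=-1)
    return per_pixel.mean()

def combined_loss(g, p):
    """Equally weighted sum of the two losses."""
    return dice_loss(g, p) + categorical_focal_loss(g, p)

# Toy example: 4 pixels, 4 classes (background, G1, S, G2/M), one-hot ground truth.
g = np.eye(4)
p_good = np.full((4, 4), 0.01)
p_good[np.eye(4, dtype=bool)] = 0.97    # confident and correct
p_bad = np.full((4, 4), 0.25)           # uninformative prediction

loss_good = combined_loss(g, p_good)
loss_bad = combined_loss(g, p_bad)
```

In the toy example, the confident, correct prediction contributes almost nothing to the focal term, which is the down-weighting of easily classified pixels described in the text.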

The model was trained for 120 epochs, taking over 18 h on an NVIDIA V100 GPU. For learning rate scheduling, we followed previous works60 and implemented learning rate warm-up and cosine learning rate decay. During the first five epochs of training, the learning rate increased linearly from 0 to 4 × 10⁻³. After that, we decreased the learning rate at each epoch following the cosine function. On the basis of our experiments, we ended up relaxing the learning rate decay such that the learning rate in the final epoch was half of the initial learning rate instead of zero.60 We plotted the model’s loss value on both the training data set and the validation data set after each epoch (Figure 2B) and picked the model checkpoint with the lowest validation loss as our final model to avoid overfitting. All the deep learning code was implemented using Python 3.8 and TensorFlow 2.3.
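The warm-up and relaxed cosine decay can be written as a small per-epoch function. The sketch below is one possible reading of the description: it assumes that the learning rate is updated once per epoch, that the peak value of 4 × 10⁻³ is reached at the first epoch after warm-up, and that the "initial learning rate" refers to this peak. The function and parameter names are hypothetical.

```python
import math

def learning_rate(epoch, total_epochs=120, warmup_epochs=5,
                  peak_lr=4e-3, final_fraction=0.5):
    """Linear warm-up followed by cosine decay to final_fraction * peak_lr."""
    if epoch < warmup_epochs:
        # Linear ramp from 0 toward the peak during warm-up.
        return peak_lr * epoch / warmup_epochs
    # progress runs from 0 (end of warm-up) to 1 (final epoch).
    progress = (epoch - warmup_epochs) / (total_epochs - 1 - warmup_epochs)
    floor = final_fraction * peak_lr
    return floor + (peak_lr - floor) * 0.5 * (1.0 + math.cos(math.pi * progress))

schedule = [learning_rate(epoch) for epoch in range(120)]
```

In TensorFlow, a function of this kind could be passed to `tf.keras.callbacks.LearningRateScheduler`, which calls it with the zero-based epoch index.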

Postprocessing

We evaluated the performance of our trained E-U-Net on an unseen test data set and reported the precision, recall, and F-1 score for each category: G1, S, G2/M, and background (Figure S2). The pixel-wise confusion matrix indicated that our model achieved high performance in segmenting the cell bodies from the background. However, because this pixel-wise evaluation overlooks the biologically relevant quantity, i.e., the number of cells in each cell cycle stage, we performed an extra postprocessing step to evaluate performance at the cellular level.
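For reference, per-class precision, recall, and F-1 score can be derived from a confusion matrix as in the following sketch. The function name and the convention that rows index the true class and columns the predicted class are assumptions made for illustration.

```python
def per_class_metrics(confusion, class_names):
    """Compute (precision, recall, F-1) per class.

    confusion[t][p] is the number of samples (pixels or cells) whose
    true class is t and whose predicted class is p.
    """
    n = len(class_names)
    metrics = {}
    for k, name in enumerate(class_names):
        tp = confusion[k][k]
        fp = sum(confusion[t][k] for t in range(n)) - tp  # predicted k, truly other
        fn = sum(confusion[k]) - tp                       # truly k, predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[name] = (precision, recall, f1)
    return metrics
```

The same function applies unchanged to pixel counts and to cell counts; only the way the confusion matrix is filled differs.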

We first performed connected-component analysis on the raw model predictions. Within each connected component, we applied a simple voting strategy in which the majority label takes over the entire cell. Figure S3A,B illustrates this process. We believe enforcing particle-wise consistency is justified in this case because a single cell cannot be in two cell cycle stages at the same time and because our model is highly accurate in segmenting cell bodies, with over 0.96 precision and recall (Figure S2). We then computed the precision, recall, and F-1 score for each category at the cellular level. For each particle in the ground truth, we used its centroid (or the median coordinates if the centroid fell outside the cell body) to determine whether the predicted label matched the ground truth. The cell-wise metrics are reported in Figure 4B.
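The voting step can be sketched in plain Python as follows. Several details are assumptions rather than facts from this work: label 0 denotes background and positive integers denote cell cycle stages, foreground pixels are grouped with 8-connectivity, and ties are broken in favor of the label encountered first. The centroid-based matching against the ground truth is not covered here.

```python
from collections import Counter, deque


def majority_vote_per_cell(mask):
    """Relabel every connected foreground component with its majority label.

    mask is a 2D list of ints (0 = background, >0 = predicted stage).
    Returns a new 2D list; the input is left unchanged.
    """
    rows, cols = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] == 0 or seen[r][c]:
                continue
            # Breadth-first search collects one 8-connected component.
            queue = deque([(r, c)])
            seen[r][c] = True
            component = []
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and not seen[ny][nx] and mask[ny][nx] != 0):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            # The most frequent label takes over the whole component.
            winner = Counter(mask[y][x] for y, x in component).most_common(1)[0][0]
            for y, x in component:
                out[y][x] = winner
    return out
```

Note that touching cells merge into one component under this scheme, so the sketch relies on the segmentation separating neighboring cell bodies.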

Before using the postprocessed prediction masks to compute the area and dry mass of each cell, we also performed hole-filling, as we did for the ground truth masks, to ensure that the values are accurate (Figure S3C).
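One common way to fill holes in a binary cell mask is to flood-fill the background from the image border and treat every background pixel that is never reached as a hole. The sketch below follows that approach under stated assumptions: the mask is binary (1 = cell, 0 = background), the background is traversed with 4-connectivity, and the function name is hypothetical. It is not necessarily the routine used in this work.

```python
from collections import deque


def fill_holes(binary):
    """Return a copy of a binary mask with enclosed background set to 1."""
    rows, cols = len(binary), len(binary[0])
    outside = [[False] * cols for _ in range(rows)]
    queue = deque()
    # Seed the flood fill with every background pixel on the image border.
    for r in range(rows):
        for c in range(cols):
            on_border = r in (0, rows - 1) or c in (0, cols - 1)
            if on_border and binary[r][c] == 0:
                outside[r][c] = True
                queue.append((r, c))
    # Grow the outside region through 4-connected background pixels.
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if (0 <= ny < rows and 0 <= nx < cols
                    and not outside[ny][nx] and binary[ny][nx] == 0):
                outside[ny][nx] = True
                queue.append((ny, nx))
    # Everything not reachable from the border is cell body or a filled hole.
    return [[0 if outside[r][c] else 1 for c in range(cols)] for r in range(rows)]


def area_in_pixels(binary):
    """Cell area as a pixel count."""
    return sum(map(sum, binary))
```

Unfilled holes would otherwise lower both the pixel area and any quantity integrated over the mask, such as dry mass.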

Acknowledgments

Parts of this work were reproduced for a thesis. All data and code required to reproduce the results can be obtained from the corresponding author upon reasonable request.

Supporting Information Available

The Supporting Information is available free of charge at https://pubs.acs.org/doi/10.1021/acsphotonics.1c01779.

Figure S1: Ground truth mask generation workflow; Figure S2: PICS performance evaluated at a pixel level; Figure S3: Postprocessing workflow; Figure S4: Confusion matrix after merging two labels together (PDF)

Author Contributions

¶ Y.R.H., S.H., and M.E.K. contributed equally to this work. Y.H. and M.E.K. performed imaging. S.H., Y.H., and N.S. performed the deep learning development. Y.L. prepared cell cultures. Y.H. and C.H. performed analysis. Y.H., S.H., Y.L., C.H., and G.P. wrote the manuscript with inputs from all authors. M.A.A. supervised the deep learning. G.P. supervised the project.

This work is sponsored in part by the National Science Foundation (0939511, 1353368) and the National Institutes of Health (R01CA238191, R01GM129709). C.H. is supported by the National Institutes of Health (T32EB019944). M.E.K. is supported by a fellowship from the Miniature Brain Machinery Program at UIUC (NSF NRT-UtB, 1735252).

The authors declare the following competing financial interest(s): G.P. has financial interest in Phi Optics, a company developing quantitative phase imaging technology for materials and life science applications.

References

- Schafer K. A. The cell cycle: a review. Vet Pathol 1998, 35 (6), 461–78. DOI: 10.1177/030098589803500601.

- Kastan M. B.; Bartek J. Cell-cycle checkpoints and cancer. Nature 2004, 432 (7015), 316–23. DOI: 10.1038/nature03097.

- Malumbres M.; Barbacid M. Cell cycle, CDKs and cancer: a changing paradigm. Nat. Rev. Cancer 2009, 9 (3), 153–66. DOI: 10.1038/nrc2602.

- Lodish H.; Berk A.; Kaiser C. A.; Krieger M.; Scott M. P.; Bretscher A.; Ploegh H.; Matsudaira P. Molecular Cell Biology; Macmillan, 2008.

- Lichtman J. W.; Conchello J. A. Fluorescence microscopy. Nat. Methods 2005, 2 (12), 910–9. DOI: 10.1038/nmeth817.

- Maga G.; Hubscher U. Proliferating cell nuclear antigen (PCNA): a dancer with many partners. J. Cell Sci. 2003, 116 (15), 3051–3060. DOI: 10.1242/jcs.00653.

- Wang M.; Zhou X.; Li F.; Huckins J.; King R. W.; Wong S. T. Novel cell segmentation and online SVM for cell cycle phase identification in automated microscopy. Bioinformatics 2008, 24 (1), 94–101. DOI: 10.1093/bioinformatics/btm530.

- Jaeger S.; Palaniappan K.; Casas-Delucchi C. S.; Cardoso M. C. Classification of cell cycle phases in 3D confocal microscopy using PCNA and chromocenter features. In Proceedings of the Seventh Indian Conference on Computer Vision, Graphics and Image Processing; 2010; pp 412–418.

- Padfield D.; Rittscher J.; Thomas N.; Roysam B. Spatio-temporal cell cycle phase analysis using level sets and fast marching methods. Medical Image Analysis 2009, 13 (1), 143–55. DOI: 10.1016/j.media.2008.06.018.

- Du T. H.; Puah W. C.; Wasser M. Cell cycle phase classification in 3D in vivo microscopy of Drosophila embryogenesis. BMC Bioinf. 2011, 12, 1–9. DOI: 10.1186/1471-2105-12-S13-S18.

- Sanders J.; Kandrot E. CUDA by Example: An Introduction to General-Purpose GPU Programming; Addison-Wesley Professional, 2010.

- Goodfellow I.; Bengio Y.; Courville A. Deep Learning; MIT Press: Cambridge, 2016; Vol. 1.

- Nagao Y.; Sakamoto M.; Chinen T.; Okada Y.; Takao D. Robust classification of cell cycle phase and biological feature extraction by image-based deep learning. Mol. Biol. Cell 2020, 31 (13), 1346–1354. DOI: 10.1091/mbc.E20-03-0187.

- Narotamo H.; Fernandes M. S.; Sanches J. M.; Silveira M. Interphase Cell Cycle Staging using Deep Learning. In 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC); IEEE, 2020; pp 1432–1435.

- Jensen E. C. Use of fluorescent probes: their effect on cell biology and limitations. Anat Rec (Hoboken) 2012, 295 (12), 2031–6. DOI: 10.1002/ar.22602.

- Popescu G. Quantitative Phase Imaging of Cells and Tissues; McGraw Hill Professional, 2011.

- Park Y.; Depeursinge C.; Popescu G. Quantitative phase imaging in biomedicine. Nat. Photonics 2018, 12 (10), 578–589. DOI: 10.1038/s41566-018-0253-x.

- Barer R. Interference microscopy and mass determination. Nature 1952, 169 (4296), 366–7. DOI: 10.1038/169366b0.

- Popescu G.; Park Y.; Lue N.; Best-Popescu C.; Deflores L.; Dasari R. R.; Feld M. S.; Badizadegan K. Optical imaging of cell mass and growth dynamics. Am. J. Physiol Cell Physiol 2008, 295 (2), C538–44. DOI: 10.1152/ajpcell.00121.2008.

- Charriere F.; Marian A.; Montfort F.; Kuehn J.; Colomb T.; Cuche E.; Marquet P.; Depeursinge C. Cell refractive index tomography by digital holographic microscopy. Opt. Lett. 2006, 31 (2), 178–80. DOI: 10.1364/OL.31.000178.

- Choi W.; Fang-Yen C.; Badizadegan K.; Oh S.; Lue N.; Dasari R. R.; Feld M. S. Tomographic phase microscopy. Nat. Methods 2007, 4 (9), 717–9. DOI: 10.1038/nmeth1078.

- Kim K.; Yoon H.; Diez-Silva M.; Dao M.; Dasari R. R.; Park Y. High-resolution three-dimensional imaging of red blood cells parasitized by Plasmodium falciparum and in situ hemozoin crystals using optical diffraction tomography. J. Biomed. Opt. 2014, 19 (1), 011005. DOI: 10.1117/1.JBO.19.1.011005.

- Kim T.; Zhou R. J.; Mir M.; Babacan S. D.; Carney P. S.; Goddard L. L.; Popescu G. White-light diffraction tomography of unlabelled live cells. Nat. Photonics 2014, 8 (3), 256–263. DOI: 10.1038/nphoton.2013.350.

- Nguyen T. H.; Kandel M. E.; Rubessa M.; Wheeler M. B.; Popescu G. Gradient light interference microscopy for 3D imaging of unlabeled specimens. Nat. Commun. 2017, 8 (1), 210. DOI: 10.1038/s41467-017-00190-7.

- Merola F.; Memmolo P.; Miccio L.; Savoia R.; Mugnano M.; Fontana A.; D'Ippolito G.; Sardo A.; Iolascon A.; Gambale A.; Ferraro P. Tomographic flow cytometry by digital holography. Light Sci. Appl. 2017, 6 (4), e16241. DOI: 10.1038/lsa.2016.241.

- Kandel M. E.; Hu C.; Naseri Kouzehgarani G.; Min E.; Sullivan K. M.; Kong H.; Li J. M.; Robson D. N.; Gillette M. U.; Best-Popescu C.; Popescu G. Epi-illumination gradient light interference microscopy for imaging opaque structures. Nat. Commun. 2019, 10 (1), 4691. DOI: 10.1038/s41467-019-12634-3.

- Hu C.; Field J. J.; Kelkar V.; Chiang B.; Wernsing K.; Toussaint K. C.; Bartels R. A.; Popescu G. Harmonic optical tomography of nonlinear structures. Nat. Photonics 2020, 14 (9), 564–569. DOI: 10.1038/s41566-020-0638-5.

- Marquet P.; Depeursinge C.; Magistretti P. J. Review of quantitative phase-digital holographic microscopy: promising novel imaging technique to resolve neuronal network activity and identify cellular biomarkers of psychiatric disorders. Neurophotonics 2014, 1 (2), 020901. DOI: 10.1117/1.NPh.1.2.020901.

- Kim K.; Lee S.; Yoon J.; Heo J.; Choi C.; Park Y. Three-dimensional label-free imaging and quantification of lipid droplets in live hepatocytes. Sci. Rep. 2016, 6 (1), 36815. DOI: 10.1038/srep36815.

- Mir M.; Wang Z.; Shen Z.; Bednarz M.; Bashir R.; Golding I.; Prasanth S. G.; Popescu G. Optical measurement of cycle-dependent cell growth. Proc. Natl. Acad. Sci. U. S. A. 2011, 108 (32), 13124–9. DOI: 10.1073/pnas.1100506108.

- Girshovitz P.; Shaked N. T. Generalized cell morphological parameters based on interferometric phase microscopy and their application to cell life cycle characterization. Biomedical Optics Express 2012, 3 (8), 1757–73. DOI: 10.1364/BOE.3.001757.

- Bista R. K.; Uttam S.; Wang P.; Staton K.; Choi S.; Bakkenist C. J.; Hartman D. J.; Brand R. E.; Liu Y. Quantification of nanoscale nuclear refractive index changes during the cell cycle. Journal of Biomedical Optics 2011, 16 (7), 070503. DOI: 10.1117/1.3597723.

- Hornik K.; Stinchcombe M.; White H. Multilayer Feedforward Networks Are Universal Approximators. Neural Networks 1989, 2 (5), 359–366. DOI: 10.1016/0893-6080(89)90020-8.

- Jo Y.; Cho H.; Lee S. Y.; Choi G.; Kim G.; Min H.-s.; Park Y. Quantitative phase imaging and artificial intelligence: a review. IEEE J. Sel. Top. Quantum Electron. 2019, 25 (1), 1–14. DOI: 10.1109/JSTQE.2018.2859234.

- Ounkomol C.; Seshamani S.; Maleckar M. M.; Collman F.; Johnson G. R. Label-free prediction of three-dimensional fluorescence images from transmitted-light microscopy. Nat. Methods 2018, 15 (11), 917–920. DOI: 10.1038/s41592-018-0111-2.

- Rivenson Y.; Liu T.; Wei Z.; Zhang Y.; de Haan K.; Ozcan A. PhaseStain: the digital staining of label-free quantitative phase microscopy images using deep learning. Light Sci. Appl. 2019, 8 (1), 23. DOI: 10.1038/s41377-019-0129-y.

- Kandel M. E.; He Y. R.; Lee Y. J.; Chen T. H.; Sullivan K. M.; Aydin O.; Saif M. T. A.; Kong H.; Sobh N.; Popescu G. Phase imaging with computational specificity (PICS) for measuring dry mass changes in sub-cellular compartments. Nat. Commun. 2020, 11 (1), 6256. DOI: 10.1038/s41467-020-20062-x.

- Pinkard H.; Phillips Z.; Babakhani A.; Fletcher D. A.; Waller L. Deep learning for single-shot autofocus microscopy. Optica 2019, 6 (6), 794–797. DOI: 10.1364/OPTICA.6.000794.

- Liu T.; de Haan K.; Rivenson Y.; Wei Z.; Zeng X.; Zhang Y.; Ozcan A. Deep learning-based super-resolution in coherent imaging systems. Sci. Rep. 2019, 9 (1), 3926. DOI: 10.1038/s41598-019-40554-1.

- He Y. Phase Imaging with Computational Specificity for Cell Biology Applications; University of Illinois at Urbana–Champaign, 2021.

- Wang Z.; Millet L.; Mir M.; Ding H.; Unarunotai S.; Rogers J.; Gillette M. U.; Popescu G. Spatial light interference microscopy (SLIM). Opt. Express 2011, 19 (2), 1016–26. DOI: 10.1364/OE.19.001016.

- Baheti B.; Innani S.; Gajre S.; Talbar S. Eff-unet: A novel architecture for semantic segmentation in unstructured environment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops; 2020; pp 358–359.

- Sakaue-Sawano A.; Yo M.; Komatsu N.; Hiratsuka T.; Kogure T.; Hoshida T.; Goshima N.; Matsuda M.; Miyoshi H.; Miyawaki A. Genetically Encoded Tools for Optical Dissection of the Mammalian Cell Cycle. Mol. Cell 2017, 68 (3), 626–640. DOI: 10.1016/j.molcel.2017.10.001.

- Eulenberg P.; Kohler N.; Blasi T.; Filby A.; Carpenter A. E.; Rees P.; Theis F. J.; Wolf F. A. Reconstructing cell cycle and disease progression using deep learning. Nat. Commun. 2017, 8 (1), 463. DOI: 10.1038/s41467-017-00623-3.

- Henser-Brownhill T.; Ju R. J.; Haass N. K.; Stehbens S. J.; Ballestrem C.; Cootes T. F. Estimation of Cell Cycle States of Human Melanoma Cells with Quantitative Phase Imaging and Deep Learning. In 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI); IEEE, 2020; pp 1617–1621.

- Ronneberger O.; Fischer P.; Brox T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer, 2015; pp 234–241.

- Tan M.; Le Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In International Conference on Machine Learning; PMLR, 2019; pp 6105–6114.

- Kingma D. P.; Ba J. Adam: A method for stochastic optimization. arXiv, December 22, 2014, arXiv:1412.6980 (accessed May 21, 2021).

- Milletari F.; Navab N.; Ahmadi S.-A. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In 2016 Fourth International Conference on 3D Vision (3DV); IEEE, 2016; pp 565–571.

- Lin T.-Y.; Goyal P.; Girshick R.; He K.; Dollár P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision; 2017; pp 2980–2988.

- Dunn G. A.; Zicha D. Dynamics Of Fibroblast Spreading. J. Cell Sci. 1995, 108, 1239–1249. DOI: 10.1242/jcs.108.3.1239.

- Ersoy I.; Bunyak F.; Chagin V.; Cardoso M. C.; Palaniappan K. Segmentation and classification of cell cycle phases in fluorescence imaging. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer, 2009; pp 617–624.

- Barbastathis G.; Ozcan A.; Situ G. On the use of deep learning for computational imaging. Optica 2019, 6 (8), 921–943. DOI: 10.1364/OPTICA.6.000921.

- Hu C.; He S.; Lee Y. J.; He Y.; Kong E. M.; Li H.; Anastasio M. A.; Popescu G. Live-dead assay on unlabeled cells using phase imaging with computational specificity. Nat. Commun. 2022, DOI: 10.1038/s41467-022-28214-x.

- Kandel M. E.; Sridharan S.; Liang J.; Luo Z.; Han K.; Macias V.; Shah A.; Patel R.; Tangella K.; Kajdacsy-Balla A.; Guzman G.; Popescu G. Label-free tissue scanner for colorectal cancer screening. J. Biomed. Opt. 2017, 22 (6), 066016. DOI: 10.1117/1.JBO.22.6.066016.

- Zhao H.; Brown P. H.; Schuck P. On the distribution of protein refractive index increments. Biophys. J. 2011, 100 (9), 2309–17. DOI: 10.1016/j.bpj.2011.03.004.

- Chaurasia A.; Culurciello E. Linknet: Exploiting encoder representations for efficient semantic segmentation. In 2017 IEEE Visual Communications and Image Processing (VCIP); IEEE, 2017; pp 1–4.

- Badrinarayanan V.; Kendall A.; Cipolla R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence 2017, 39 (12), 2481–2495. DOI: 10.1109/TPAMI.2016.2644615.

- Sudre C. H.; Li W.; Vercauteren T.; Ourselin S.; Cardoso M. J. Generalised dice overlap as a deep learning loss function for highly unbalanced segmentations. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer, 2017; pp 240–248.

- He T.; Zhang Z.; Zhang H.; Zhang Z.; Xie J.; Li M. Bag of tricks for image classification with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2019; pp 558–567.
