Abstract

Recently, the COVID-19 pandemic has had a major impact on the day-to-day lives of people all over the globe, and it demands various kinds of screening tests to detect the coronavirus. In this context, deep learning (DL) models combined with radiological images are useful for accurate detection and classification. DL models have many hyperparameters, and identifying the optimal configuration in such a high-dimensional space is not trivial. Since setting the hyperparameters requires expertise and extensive trial and error, metaheuristic algorithms can be employed. With this motivation, this paper presents an automated glowworm swarm optimization (GSO) with an inception-based deep convolutional neural network (IDCNN) for COVID-19 diagnosis and classification, called the GSO-IDCNN model. The presented model uses a Gaussian smoothing filter (GSF) to remove the noise in the radiological images. Next, an IDCNN-based feature extractor built on the Inception v4 model is utilized. To further enhance the performance of the IDCNN technique, its hyperparameters are optimally tuned using the GSO algorithm. Lastly, an adaptive neuro-fuzzy classifier (ANFC) is used to classify the presence of COVID-19. The novelty of this work lies in combining the GSO algorithm with the ANFC model for COVID-19 diagnosis. For experimental validation, a series of simulations was performed on benchmark radiological imaging databases to highlight the superior outcome of the GSO-IDCNN technique. The experimental values show that the GSO-IDCNN methodology achieved a maximal sensitivity of 0.9422, specificity of 0.9466, precision of 0.9494, accuracy of 0.9429, and F1-score of 0.9394.

Keywords: deep learning, inception networks, COVID-19, classification, GSO algorithm, radiological images

1. Introduction

The 2019 novel coronavirus disease, named COVID-19, has become a major threat to human health across the globe. Earlier works reported that the Severe Acute Respiratory Syndrome Coronavirus (SARS-CoV) was transmitted to humans from civet cats, and that the Middle East Respiratory Syndrome Coronavirus (MERS-CoV) was transmitted from Arabian camels to humans. It is believed that COVID-19 originated in bats and spread to humans. The virus readily infects the respiratory system and is rapidly transmitted to other people. It causes mild symptoms in about 82% of patients, while the remaining cases progress to a critical stage [1]. Overall, about 95% of patients survived, while the remaining 5% progressed to an advanced stage. It has also been observed that COVID-19 has affected more men than women, and that children aged 0–6 are at risk of infection.

Since March 2020, several publicly accessible X-ray images of COVID-19-infected persons have become available. They offer a means of analyzing medical images and identifying possible patterns that may lead to the automatic identification and classification of the disease. At present, the impact of this virus is driving research efforts, because limited access to COVID-19 diagnosis has caused stress globally. Owing to the inadequate availability of COVID-19 rapid test kits, it has become essential to rely on other diagnostic techniques. Since the coronavirus damages the epithelial cells of the respiratory system, doctors use X-rays to examine the patient's lungs [2]. As hospitals commonly have X-ray imaging equipment, COVID-19 can be tested using X-rays without specific test kits. Radiological imaging techniques have therefore become essential for detecting and classifying COVID-19. Although COVID-19 shows a characteristic distribution in the images, it shares features with other viral lung infections. Because the coronavirus continues to spread quickly, different kinds of examinations are being performed.

Deep learning (DL) is an effective method for healthcare diagnostics. DL is a branch of machine learning (ML) that focuses mainly on automated feature extraction and classification [3,4]. Both ML and DL models are well established for mining, examining, and identifying the patterns present in images. Improving medical decision making and computer-aided diagnosis (CAD) has proven non-trivial, even as useful data are increasingly produced [5]. DL models for imaging, typically deep CNNs (DCNNs), automatically extract features through convolutional operations, and their layers operate on nonlinear data. Each layer transforms the data into a higher and more abstract level of representation. The term DL usually refers to deep networks that, in contrast to standard ML techniques, exploit big data [6].

This paper presents an automated glowworm swarm optimization (GSO) with an inception-based deep convolutional neural network (IDCNN) for COVID-19 diagnosis and classification, called the GSO-IDCNN model. The presented model utilizes a Gaussian smoothing filter (GSF) to remove the noise in the radiological images. Next, an IDCNN-based feature extractor that employs the Inception v4 method is utilized. To further boost the performance of the IDCNN model, its hyperparameters are optimally tuned using the GSO algorithm. Finally, an adaptive neuro-fuzzy classifier (ANFC) is used to classify the presence of COVID-19. For experimental validation, a series of simulations was performed on benchmark radiological imaging databases to highlight the superior outcome of the GSO-IDCNN model. In short, the contributions of the paper are as follows:

To develop a new GSO-IDCNN model for COVID-19 detection and classification;

To apply a GSF model to remove the noise that exists in the radiological images;

To tune the hyperparameters of an Inception v4-based feature extractor for radiological images using the GSO algorithm;

To employ an ANFC to assign proper class labels to the images;

To validate the performance of the GSO-IDCNN model on a benchmark dataset.

2. Related Works

ML algorithms, a branch of artificial intelligence (AI), are commonly employed in healthcare applications for feature extraction and image analysis. A classification model has been developed to compute the dissimilarity among a collection of Regions of Interest (ROIs) [7,8], and the features are then classified by a normal vector-oriented classifier. Another computed tomography (CT)-based classification model, developed in [9], incorporates classical features such as grayscale values, shapes, textures, and symmetry features, and uses a radial basis function neural network (RBFNN) to classify the features extracted from the images. A comparative study of Jeffries–Matusita (J–M) distance and Karhunen–Loève transformation-based feature extraction techniques was presented in [10].

In [11], a multi-class image classifier based on the average grayscale values of images is proposed. A novel automatic classification technique is developed in [12] to classify breast tissue using morphological features. However, its performance decreases when the same process is applied to a different dataset. Consequently, handcrafted techniques have given way to CNNs and automated feature extraction methods.

Ozyurt et al. [13] presented a hybrid technique, a fused perceptual hash-based CNN model, to decrease the diagnosis time for liver CT images while maintaining overall performance. Xu et al. [14] applied a DTL technique to address the class imbalance problem in medical imaging. Lakshmanaprabu et al. [15] analyzed lung CT images using an optimal DNN and linear discriminant analysis (LDA). In [16], the original CT images were transformed by rescaling them into low-attenuation and high-attenuation pattern ranges; the images were then resampled and classified using a CNN. A DL-based automatic segmentation of the lungs and the affected regions in chest CT images is performed in [17]. Wang et al. [18] exploited the radiographic changes of COVID-19 in CT images to design a DL model that extracts COVID-19 graphical features, providing a clinical diagnosis ahead of pathogenic testing and thereby helping to prevent the disease from becoming deadly. In [19], data mining (DM) techniques are applied to classify SARS and pneumonia using X-rays.

Although numerous techniques exist to diagnose COVID-19, there is still a need to analyze COVID-19 using chest X-ray images. X-ray machines are used to scan the body for damage such as fractures, bone dislocations, lung disease, pneumonia, and tumors. Compared with CT, X-ray scanning is easier, quicker, cheaper, and less harmful. Since the advanced stage of COVID-19 leads to serious illness, an efficient CAD model for COVID-19 diagnosis is essential. At the same time, most earlier works have concentrated on binary classification. Therefore, in this study, a multi-class classification process is designed for COVID-19 diagnosis.

3. The Proposed GSO-IDCNN Model

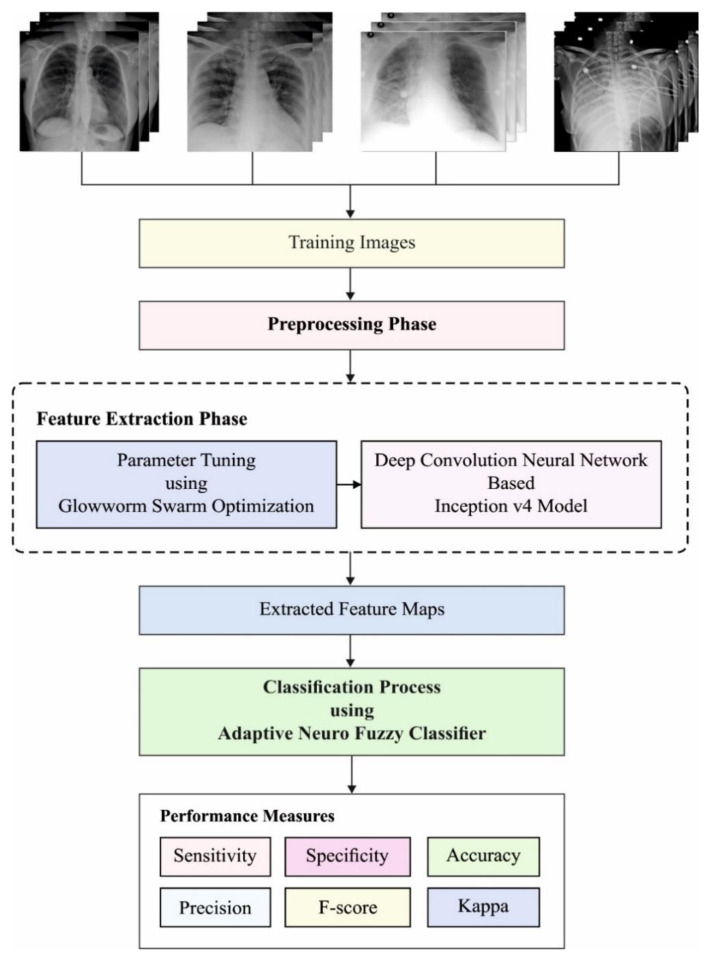

Figure 1 shows the workflow of the GSO-IDCNN technique. First, the noise in the radiological images is removed by the GSF technique. Then, features are extracted using the IDCNN model, whose parameters are tuned by the GSO technique. Finally, the ANFC model performs the classification and assigns the appropriate class labels.

Figure 1.

Working process of the GSO-IDCNN model.

3.1. GSF-Based Preprocessing

The 2D GSF is commonly employed to smooth images and remove noise. However, it requires considerable computation time, which makes an efficient design of the filter attractive.

The Gaussian operators are convolution operators, and Gaussian smoothing is achieved by convolution. The 1D Gaussian operator is given by:

$$G(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{x^{2}}{2\sigma^{2}}\right) \tag{1}$$

An optimal smoothing filter for images should be localized in both the spatial and frequency domains, thereby satisfying the uncertainty relation given in [20]:

$$\Delta x \, \Delta \omega \geq \frac{1}{2} \tag{2}$$

The 2D Gaussian operator (circularly symmetric) can be represented by:

$$G(x, y) = \frac{1}{2\pi\sigma^{2}} \exp\left(-\frac{x^{2}+y^{2}}{2\sigma^{2}}\right) \tag{3}$$

where $\sigma$ designates the standard deviation (SD) of the Gaussian function; the larger $\sigma$ is, the stronger the smoothing effect. $(x, y)$ designates the Cartesian coordinates of a point in the image, ranging over the filter window.

The filtering consists of additions and multiplications between the image and the kernel. An image can be defined as a matrix with values of 0–255. The kernel is a normalized square matrix whose values lie in the range of zero to one, and it can be represented with a specific bit count.
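As a minimal sketch of this filtering step (assuming a NumPy environment; the helper names `gaussian_kernel` and `gaussian_smooth`, the kernel size of 5, and σ = 1.0 are illustrative choices, not values reported for GSO-IDCNN), the sampled kernel of Equation (3) can be normalized to sum to one and slid over the image, with edge pixels replicated at the borders:

```python
import numpy as np

def gaussian_kernel(size: int, sigma: float) -> np.ndarray:
    """Sample the 2D Gaussian of Eq. (3) on a size x size grid and normalize it."""
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a center pixel")
    half = size // 2
    coords = np.arange(-half, half + 1, dtype=float)
    xx, yy = np.meshgrid(coords, coords)
    kernel = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    # Normalizing makes the weights sum to 1 (this also absorbs 1/(2*pi*sigma^2)).
    return kernel / kernel.sum()

def gaussian_smooth(image: np.ndarray, size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Smooth a grayscale image (values 0-255) with a normalized Gaussian kernel."""
    kernel = gaussian_kernel(size, sigma)
    half = size // 2
    padded = np.pad(image.astype(float), half, mode="edge")  # replicate border pixels
    rows, cols = image.shape
    out = np.zeros((rows, cols), dtype=float)
    # Each output pixel is a sum of products between kernel weights and the window;
    # the kernel is symmetric, so this correlation equals a convolution.
    for dy in range(size):
        for dx in range(size):
            out += kernel[dy, dx] * padded[dy:dy + rows, dx:dx + cols]
    return np.clip(out, 0.0, 255.0)

if __name__ == "__main__":
    noisy = np.random.default_rng(0).uniform(0, 255, size=(32, 32))
    print(noisy.std(), gaussian_smooth(noisy, size=5, sigma=1.0).std())
```

Increasing `sigma` widens the kernel and strengthens the smoothing, matching the remark on σ above; a production pipeline would more likely call an optimized routine (for example, from OpenCV) than this explicit loop.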

The MSE is the cumulative squared error between the reconstructed and original images, given by:

$$\mathrm{MSE} = \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left[f(i,j) - \hat{f}(i,j)\right]^{2} \tag{4}$$

where $M \times N$ indicates the image size, $f$ denotes the original image, and $\hat{f}$ denotes the restored image. The PSNR is the peak signal-to-noise ratio, defined as the ratio between the maximum possible power of the pixel values and the power of the distorting noise. It reflects the quality of the image and is given by:

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{255 \times 255}{\mathrm{MSE}}\right) \tag{5}$$

where 255 × 255 is the square of the maximum pixel value in the image, and the MSE is computed between the input and saved images of size $M \times N$. Because convolution relies mainly on multiplication, and existing logarithmic multipliers are insufficiently accurate, an effective logarithmic multiplier is needed to improve the accuracy of the Gaussian filter.
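Equations (4) and (5) can be sketched directly, assuming 8-bit images so that the peak pixel value is 255; the helper names `mse` and `psnr` are illustrative:

```python
import numpy as np

def mse(original, restored) -> float:
    """Eq. (4): mean of squared pixel differences over the M x N image."""
    original = np.asarray(original, dtype=float)
    restored = np.asarray(restored, dtype=float)
    return float(np.mean((original - restored) ** 2))

def psnr(original, restored, max_value: float = 255.0) -> float:
    """Eq. (5): PSNR in decibels; identical images give infinity."""
    err = mse(original, restored)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / err))

if __name__ == "__main__":
    a = np.zeros((4, 4))
    print(mse(a, a + 1), psnr(a, a + 1))  # every pixel off by one gray level
```

When every pixel differs by one gray level, the MSE is 1 and the PSNR is 20·log10(255) ≈ 48.13 dB.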

3.2. IDCNN-Based Feature Extraction Model

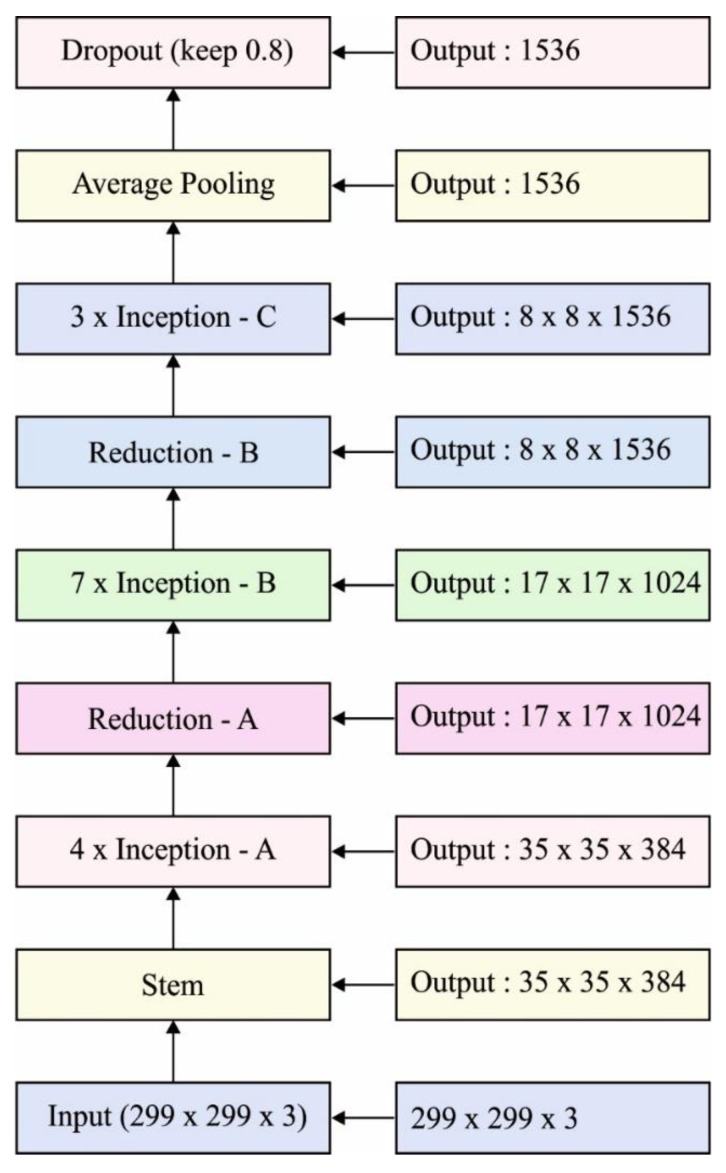

In this section, features are extracted from the preprocessed images using the IDCNN, which is based on the Inception v4 model [21]. Earlier Inception versions were trained in a partitioned manner, where each replica was split into several subnetworks so that the whole model could fit in memory. However, the Inception architecture is highly tunable, meaning that many changes to the number of filters in different layers do not affect the quality of the fully trained network. To optimize training speed, the layer sizes were tuned to balance the computation among the subnetworks. Figure 2 illustrates the network schema of Inception v4. By contrast, with TensorFlow, recent Inception models are trained without partitioning the replicas.

Figure 2.

Network schema of Inception v4.

For the residual versions of the Inception network, cheaper Inception blocks than those of the original Inception are used. Each Inception block is followed by a filter-expansion layer (a 1 × 1 convolution without activation), which scales up the dimensionality of the filter bank before the residual summation to match the input depth. Another difference between the residual and non-residual Inception variants is that batch normalization (BN) is applied on top of the conventional layers but not on top of the residual summations. Extensive use of BN might be expected to be beneficial, but BN layers with large activation sizes consume a disproportionate amount of memory in TensorFlow. Omitting BN on top of these summations therefore allows the overall number of Inception blocks to be increased.

When the number of filters exceeds 1000, the residual variants begin to exhibit instabilities and the network “dies” early in training, meaning that the last layer before average pooling produces only zeros after a number of iterations. Scaling down the residuals before adding them to the previous layer activation stabilizes training. Typically, scaling factors in the range 0.1–0.3 are used to scale the residuals before they are added to the accumulated layer activations.
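The residual scaling described above can be illustrated with a small NumPy sketch. It is a stand-in rather than the Inception-ResNet implementation: the `branch` callable, the 1 × 1 filter-expansion weights, and the ReLU applied after the summation are assumptions made for illustration, and 0.2 is simply one value from the 0.1–0.3 range mentioned in the text.

```python
import numpy as np

def filter_expansion(features: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """1x1 convolution without activation: a per-pixel linear map over channels.

    features: (H, W, C_branch); weights: (C_branch, C_in) -> result (H, W, C_in).
    """
    return features @ weights

def scaled_residual_block(x: np.ndarray, branch, weights: np.ndarray,
                          scale: float = 0.2) -> np.ndarray:
    """Add a down-scaled residual to the input activations, then apply ReLU."""
    residual = filter_expansion(branch(x), weights)
    if residual.shape != x.shape:
        raise ValueError("expanded residual must match the input depth")
    # Scaling the residual before the summation is what stabilizes very wide variants.
    return np.maximum(x + scale * residual, 0.0)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.random((4, 4, 8))                 # toy non-negative activations
    w = rng.standard_normal((4, 8))           # hypothetical expansion weights
    branch = lambda t: 2.0 * t[..., :4]       # hypothetical 4-channel branch
    print(scaled_residual_block(x, branch, w, scale=0.2).shape)
```

With `scale=0`, the block reduces to passing the (non-negative) input through unchanged, which shows how smaller factors keep each block close to an identity mapping early in training.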

3.3. GSO-Based Hyperparameter Optimization Model

To optimize the hyperparameters of the IDCNN using the GSO technique, a population of glowworms is initialized and randomly distributed across the solution space. The intensity of the light each glowworm emits is linked to the amount of luciferin it carries, and each glowworm moves within a dynamic decision range $r_d^i$ bounded by a spherical sensor range $r_s$ ($0 < r_d^i \le r_s$). Initially, all glowworms carry the same amount of luciferin, $\ell_0$. Based on the luciferin values, each glowworm selects a neighbor with probability $p_{ij}$ and moves toward it within its decision range $r_d^i$, where the position of glowworm $i$ is denoted by $x_i$ [22].

The luciferin update depends on the function value at each glowworm's position. During the luciferin update, the rule is defined as:

$$\ell_i(t+1) = (1-\rho)\,\ell_i(t) + \gamma\, J\big(x_i(t+1)\big) \tag{6}$$

where $\ell_i(t)$ denotes the luciferin level associated with glowworm $i$ at time $t$, $\rho$ refers to the luciferin decay constant ($0 < \rho < 1$), $\gamma$ represents the luciferin enhancement constant, and $J(x_i(t+1))$ signifies the value of the objective function at agent $i$'s location at time $t+1$.

In the GSO technique, glowworms are attracted to neighbors that glow brighter. Consequently, during the movement phase, each glowworm uses a probabilistic mechanism to move toward a neighbor with higher luciferin intensity. For each glowworm $i$, the probability of moving toward a neighboring glowworm $j$ is given by:

$$p_{ij}(t) = \frac{\ell_j(t) - \ell_i(t)}{\sum_{k \in N_i(t)} \left[\ell_k(t) - \ell_i(t)\right]} \tag{7}$$

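where $j \in N_i(t)$, $N_i(t) = \{ j : d_{ij}(t) < r_d^i(t);\ \ell_i(t) < \ell_j(t) \}$ denotes the set of neighbors of glowworm $i$ at time $t$, $d_{ij}(t)$ indicates the Euclidean distance between glowworms $i$ and $j$ at time $t$, and $r_d^i(t)$ denotes the variable neighborhood range associated with glowworm $i$ at time $t$. The variable $r_d^i$ is bounded by a radial sensor range $r_s$ ($0 < r_d^i \le r_s$). The glowworm then moves toward the selected neighbor $j$ as follows:

$$x_i(t+1) = x_i(t) + s\left(\frac{x_j(t) - x_i(t)}{\lVert x_j(t) - x_i(t) \rVert}\right) \tag{8}$$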

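where $s > 0$ refers to the step size and $\lVert \cdot \rVert$ denotes the Euclidean norm operator. Moreover, $x_i(t) \in \mathbb{R}^m$ denotes the location of glowworm $i$ at time $t$ in the $m$-dimensional real space $\mathbb{R}^m$. Let $r_0$ be the initial neighborhood range of every glowworm (i.e., $r_d^i(0) = r_0$ for all $i$); the neighborhood range is then updated as:

$$r_d^i(t+1) = \min\left\{ r_s,\ \max\left\{0,\ r_d^i(t) + \beta\left(n_t - |N_i(t)|\right)\right\}\right\} \tag{9}$$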

where $\beta$ is a constant, and $n_t$ is a parameter used to control the number of neighbors (the neighborhood degree).
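To make the update rules of Equations (6)–(9) concrete, the following is a minimal GSO sketch that maximizes a generic objective over a box. In the GSO-IDCNN setting the objective would score an IDCNN hyperparameter vector; here it is replaced by a toy function. The default values (ρ = 0.4, γ = 0.6, s = 0.03, ℓ0 = 5, β = 0.08, n_t = 5), the synchronous update of all glowworms, and the function name `gso_maximize` are illustrative assumptions, not settings reported by the authors.

```python
import numpy as np

def gso_maximize(objective, low, high, n_worms=40, n_iter=300, rho=0.4, gamma=0.6,
                 step=0.03, l0=5.0, r_s=3.0, r0=3.0, beta=0.08, n_t=5, seed=0):
    """Glowworm swarm optimization sketch; returns the best point seen and its value."""
    rng = np.random.default_rng(seed)
    low, high = np.asarray(low, dtype=float), np.asarray(high, dtype=float)
    x = rng.uniform(low, high, size=(n_worms, low.size))  # random initial positions
    luciferin = np.full(n_worms, l0)                      # identical initial luciferin
    r_d = np.full(n_worms, r0)                            # initial decision ranges
    best_x, best_val = x[0].copy(), -np.inf
    for _ in range(n_iter):
        values = np.array([objective(p) for p in x])
        luciferin = (1.0 - rho) * luciferin + gamma * values  # Eq. (6)
        k = int(np.argmax(values))
        if values[k] > best_val:                              # track best point so far
            best_x, best_val = x[k].copy(), float(values[k])
        dist = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
        new_x = x.copy()
        for i in range(n_worms):
            # N_i(t): brighter glowworms inside glowworm i's decision range
            nbrs = np.flatnonzero((dist[i] < r_d[i]) & (luciferin > luciferin[i]))
            if nbrs.size > 0:
                gain = luciferin[nbrs] - luciferin[i]
                j = rng.choice(nbrs, p=gain / gain.sum())     # Eq. (7)
                direction = x[j] - x[i]
                norm = np.linalg.norm(direction)
                if norm > 0:
                    new_x[i] = x[i] + step * direction / norm  # Eq. (8)
            # Eq. (9): shrink or grow the range toward n_t neighbors
            r_d[i] = min(r_s, max(0.0, r_d[i] + beta * (n_t - nbrs.size)))
        x = new_x  # all glowworms move simultaneously
    return best_x, best_val

if __name__ == "__main__":
    peak = lambda p: float(np.exp(-np.sum(p ** 2)))  # toy objective, maximum at origin
    print(gso_maximize(peak, [-3.0, -3.0], [3.0, 3.0]))
```

Because each glowworm only follows brighter neighbors within an adaptive range, the swarm can split into subgroups around different peaks; this is why GSO is often described as suited to multimodal search, although the toy objective above has a single peak.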

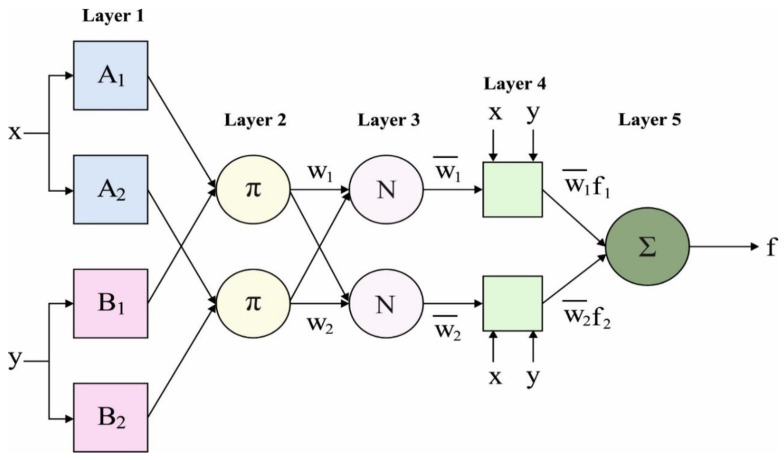

3.4. ANFC-Based Classification Model

An ANFIS-based classifier (the ANFC) is employed to determine the class labels of the input radiological images. For simplicity, consider a network with two inputs, $u$ and $v$, and one output, $f$. The ANFIS is a Sugeno-type fuzzy system. For this ANFIS structure, two fuzzy if-then rules based on the first-order Sugeno model are considered:

Rule 1: if $u$ is $A_1$ and $v$ is $B_1$, then $f_1 = p_1 u + q_1 v + r_1$;

Rule 2: if $u$ is $A_2$ and $v$ is $B_2$, then $f_2 = p_2 u + q_2 v + r_2$;

where $u$ and $v$ are the inputs, $A_i$ and $B_i$ are the fuzzy sets, $f_i$ are the outputs of the fuzzy model, and $p_i$, $q_i$, and $r_i$ are the design parameters determined during training. The ANFIS structure implementing these two rules is shown in Figure 3 [23], where a circle denotes a fixed node and a square denotes an adaptive node. As shown in the figure, the ANFIS structure has five layers.

Figure 3.

ANFC structure.

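Layer 1: Every node in layer 1 is an adaptive node. The outputs of layer 1 are the fuzzy membership grades of the inputs, given by:

$$O_{1,i} = \mu_{A_i}(u), \quad i = 1, 2 \tag{10}$$

$$O_{1,i} = \mu_{B_{i-2}}(v), \quad i = 3, 4 \tag{11}$$

where $u$ and $v$ are the inputs to node $i$, $A_i$ (or $B_{i-2}$) is the linguistic label, and $\mu_{A_i}$ and $\mu_{B_{i-2}}$ can adopt any fuzzy membership function (MF). In general, $\mu_{A_i}(u)$ is chosen as:

$$\mu_{A_i}(u) = \frac{1}{1 + \left|\dfrac{u - c_i}{a_i}\right|^{2b_i}} \tag{12}$$

where $a_i$, $b_i$, and $c_i$ are the parameters of the bell-shaped MF.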

Layer 2: Every node in this layer is labeled $\Pi$, reflecting that it acts as a simple multiplier. The outputs of this layer are:

$$O_{2,i} = w_i = \mu_{A_i}(u)\,\mu_{B_i}(v), \quad i = 1, 2 \tag{13}$$

Layer 3: This layer has fixed nodes that compute the ratio of each rule's firing strength to the sum of all firing strengths:

$$O_{3,i} = \bar{w}_i = \frac{w_i}{w_1 + w_2}, \quad i = 1, 2 \tag{14}$$

Layer 4: The nodes in this layer are adaptive nodes. The outputs of this layer are calculated as:

$$O_{4,i} = \bar{w}_i f_i = \bar{w}_i\,(p_i u + q_i v + r_i) \tag{15}$$

where $\bar{w}_i$ is the normalized firing strength from layer 3.

Layer 5: A single node sums all incoming signals. Therefore, the overall output of the model is:

$$O_{5} = \sum_{i} \bar{w}_i f_i = \frac{\sum_i w_i f_i}{\sum_i w_i} \tag{16}$$

There are two adaptive layers in this ANFIS model, namely the first and fourth layers. The first layer has three modifiable parameters $\{a_i, b_i, c_i\}$ associated with the input MFs; these are known as premise parameters. The fourth layer also has three modifiable parameters $\{p_i, q_i, r_i\}$ relating to the first-order polynomial; these are known as consequent parameters [24].
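The five layers above can be traced in a short forward-pass sketch for the two-input, two-rule case. The function names and the parameter layout (one `(a, b, c)` tuple per MF and one `(p, q, r)` tuple per rule) are hypothetical, and the sketch assumes generalized bell MFs (Equation (12)) for every input:

```python
import numpy as np

def bell_mf(x, a, b, c):
    """Generalized bell membership function of Eq. (12)."""
    return 1.0 / (1.0 + np.abs((x - c) / a) ** (2 * b))

def anfis_forward(u, v, premise, consequent):
    """Two-input first-order Sugeno ANFIS.

    premise: {"A": [(a, b, c), ...], "B": [(a, b, c), ...]}, one MF per rule and input.
    consequent: [(p, q, r), ...], one linear rule output per rule.
    """
    mu_a = np.array([bell_mf(u, *prm) for prm in premise["A"]])  # layer 1, Eq. (10)
    mu_b = np.array([bell_mf(v, *prm) for prm in premise["B"]])  # layer 1, Eq. (11)
    w = mu_a * mu_b                                              # layer 2, Eq. (13)
    w_bar = w / w.sum()                                          # layer 3, Eq. (14)
    f = np.array([p * u + q * v + r for p, q, r in consequent])  # rule outputs f_i
    return float(np.sum(w_bar * f))                              # layers 4-5, Eqs. (15)-(16)

if __name__ == "__main__":
    premise = {"A": [(1.0, 2.0, -1.0), (1.0, 2.0, 1.0)],
               "B": [(1.0, 2.0, -1.0), (1.0, 2.0, 1.0)]}
    consequent = [(1.0, 2.0, 0.5), (-1.0, 0.5, 2.0)]
    print(anfis_forward(0.3, -0.4, premise, consequent))
```

Because layer 3 normalizes the firing strengths, the output is always a convex combination of the rule outputs $f_1$ and $f_2$.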

3.5. Learning Algorithm of ANFIS

The learning algorithm for this model tunes all modifiable parameters, namely $\{a_i, b_i, c_i\}$ and $\{p_i, q_i, r_i\}$, so that the ANFIS output matches the training data. When the premise parameters $a_i$, $b_i$, and $c_i$ of the MFs are fixed, the output of the ANFIS model is expressed as:

$$f = \frac{w_1}{w_1 + w_2} f_1 + \frac{w_2}{w_1 + w_2} f_2 \tag{17}$$

By substituting Equation (14) and the fuzzy if-then rules into Equation (17), it becomes:

$$f = \bar{w}_1 (p_1 u + q_1 v + r_1) + \bar{w}_2 (p_2 u + q_2 v + r_2) \tag{18}$$

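where $\bar{w}_1$ and $\bar{w}_2$ are calculated by Equation (14). After rearrangement, the output is:

$$f = (\bar{w}_1 u)\,p_1 + (\bar{w}_1 v)\,q_1 + \bar{w}_1 r_1 + (\bar{w}_2 u)\,p_2 + (\bar{w}_2 v)\,q_2 + \bar{w}_2 r_2 \tag{19}$$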

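which is a linear combination of the modifiable consequent parameters $p_1$, $q_1$, $r_1$, $p_2$, $q_2$, and $r_2$. These parameters are updated in the forward pass of the learning algorithm using the least-squares method. Let $\boldsymbol{\theta}$ be the unknown vector comprising these six parameters. Over the training samples, Equation (19) can then be written in matrix form, where $\mathbf{y}$ holds the target outputs and each row of $\mathbf{A}$ holds the corresponding regressors $(\bar{w}_1 u, \bar{w}_1 v, \bar{w}_1, \bar{w}_2 u, \bar{w}_2 v, \bar{w}_2)$:

$$\mathbf{y} = \mathbf{A}\,\boldsymbol{\theta}, \quad \boldsymbol{\theta} = [p_1, q_1, r_1, p_2, q_2, r_2]^{T} \tag{20}$$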

When $\mathbf{A}$ is an invertible matrix, then:

$$\boldsymbol{\theta} = \mathbf{A}^{-1}\mathbf{y} \tag{21}$$

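Otherwise, a pseudo-inverse is used to solve for $\boldsymbol{\theta}$ as follows:

$$\boldsymbol{\theta}^{*} = \left(\mathbf{A}^{T}\mathbf{A}\right)^{-1}\mathbf{A}^{T}\mathbf{y} \tag{22}$$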

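During the backward pass, the error signals are propagated backward, and the premise parameters are updated by gradient descent:

$$\alpha_{\text{new}} = \alpha_{\text{old}} - \eta\,\frac{\partial E}{\partial \alpha} \tag{23}$$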

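where $E$ is the MSE, $\alpha$ denotes any premise parameter, and $\eta$ is the learning rate. The chain rule is applied to calculate the partial derivative used to update the MF parameters:

$$\frac{\partial E}{\partial \alpha} = \frac{\partial E}{\partial f}\cdot\frac{\partial f}{\partial w_i}\cdot\frac{\partial w_i}{\partial \mu_{A_i}}\cdot\frac{\partial \mu_{A_i}}{\partial \alpha} \tag{24}$$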

By computing all the partial derivatives in the above expression, the premise parameters are updated using Equation (23).
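The hybrid learning scheme of Equations (20)–(23) can be sketched as below for the two-rule model. Two substitutions are worth flagging: the consequent parameters are solved with NumPy's pseudo-inverse (`np.linalg.pinv`), and the premise gradient is approximated by central finite differences rather than by the analytic chain rule of Equation (24). The array layout and function names are hypothetical.

```python
import numpy as np

def bell_mf(x, a, b, c):
    """Generalized bell MF of Eq. (12)."""
    return 1.0 / (1.0 + np.abs((x - c) / a) ** (2 * b))

def design_matrix(u, v, premise):
    """Rows of A in Eq. (20); premise[i, k] holds (a, b, c) for rule i and input k."""
    w = np.stack([bell_mf(u, *premise[i, 0]) * bell_mf(v, *premise[i, 1])
                  for i in range(2)], axis=1)
    wb = w / w.sum(axis=1, keepdims=True)               # Eq. (14), shape (N, 2)
    return np.column_stack([wb[:, 0] * u, wb[:, 0] * v, wb[:, 0],
                            wb[:, 1] * u, wb[:, 1] * v, wb[:, 1]])  # Eq. (19)

def forward_pass_lse(u, v, y, premise):
    """Least-squares consequent parameters theta = [p1, q1, r1, p2, q2, r2]."""
    return np.linalg.pinv(design_matrix(u, v, premise)) @ y  # Eq. (22)

def mse_loss(u, v, y, premise, theta):
    return float(np.mean((y - design_matrix(u, v, premise) @ theta) ** 2))

def backward_pass(u, v, y, premise, theta, lr=0.005, h=1e-6):
    """One gradient-descent step on the premise parameters, Eq. (23)."""
    grad = np.zeros_like(premise)
    for idx in np.ndindex(premise.shape):
        plus, minus = premise.copy(), premise.copy()
        plus[idx] += h
        minus[idx] -= h
        # Central difference stands in for the analytic derivative of Eq. (24).
        grad[idx] = (mse_loss(u, v, y, plus, theta)
                     - mse_loss(u, v, y, minus, theta)) / (2 * h)
    return premise - lr * grad

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    u, v = rng.uniform(-2, 2, 200), rng.uniform(-2, 2, 200)
    premise = np.array([[[1.0, 2.0, -1.0], [1.0, 2.0, -1.0]],
                        [[1.0, 2.0, 1.0], [1.0, 2.0, 1.0]]])
    y = design_matrix(u, v, premise) @ np.array([1.0, -2.0, 0.5, -1.0, 3.0, 2.0])
    theta = forward_pass_lse(u, v, y, premise)
    print(theta, mse_loss(u, v, y, premise, theta))
```

Alternating `forward_pass_lse` and `backward_pass` gives the usual hybrid ANFIS loop; an analytic gradient would be faster than the finite-difference stand-in used here.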

4. Experimental Validation

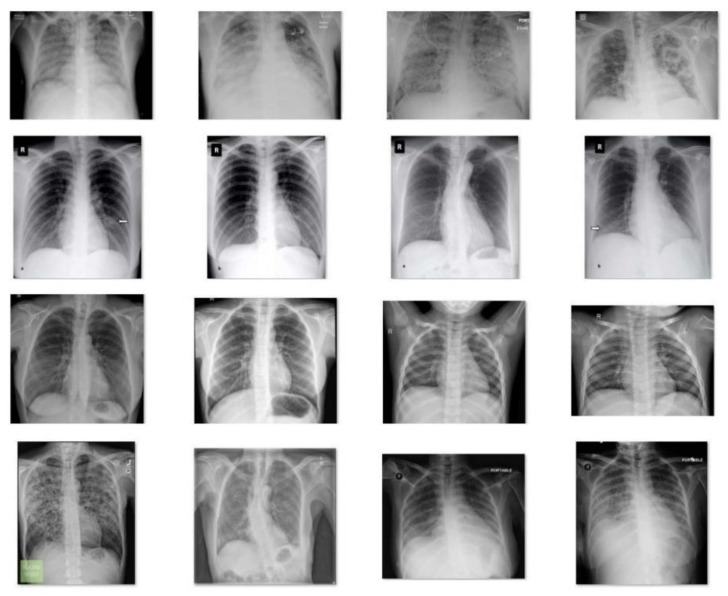

To assess the classification performance of the GSO-IDCNN method, an extensive experimental validation was carried out on a chest X-ray dataset [25]. It comprises 220 COVID-19 images, 27 normal images, 15 Pneumocystis images, and 11 SARS images. Figure 4 shows sample images. The presented method was executed on an Intel i5 8th-generation PC with 16 GB RAM, an MSI L370 Apro, and an Nvidia 1050 Ti 4 GB. The experiments were implemented in Python 3.6.5 together with Pillow, pandas, scikit-learn, TensorFlow, Keras, OpenCV, seaborn, Matplotlib, and pycm. The parameter settings were: batch size 128, learning rate 0.001, 500 epochs, and momentum 0.2.

Figure 4.

Sample Images.

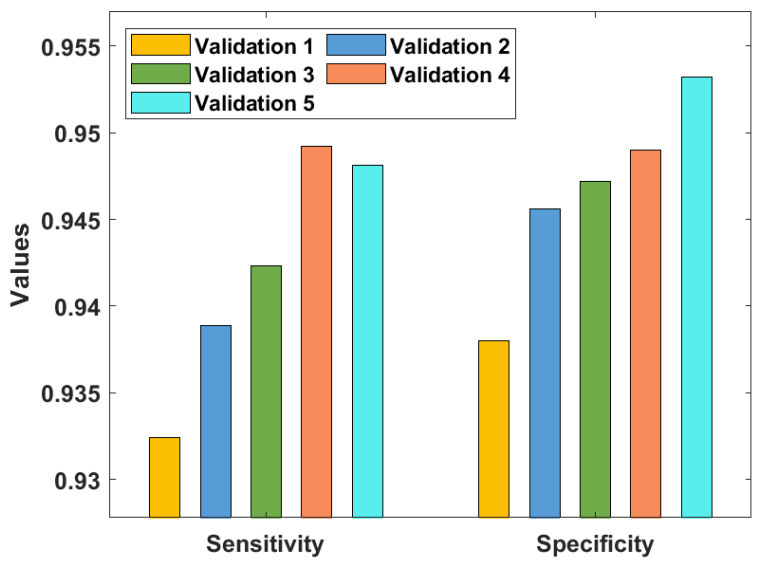

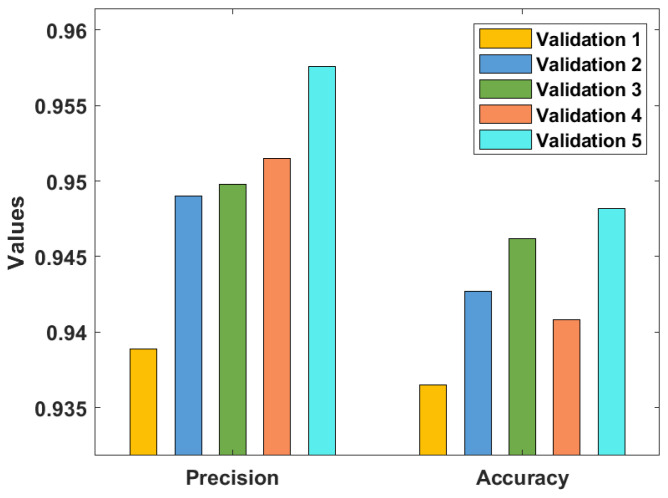

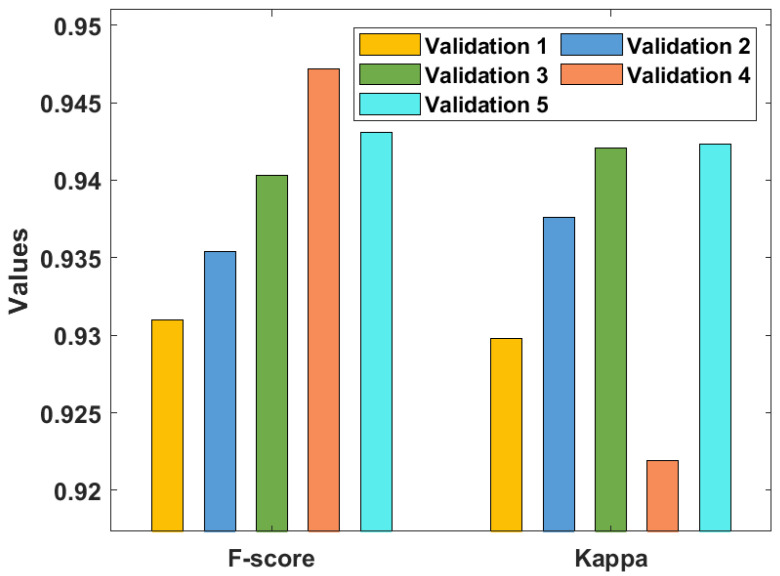

Table 1 and Figures 5–7 present the classification results of the GSO-IDCNN model under five validation runs. The GSO-IDCNN model obtained effective diagnostic outcomes in every run. For validation 1, the GSO-IDCNN approach reached sensitivity, specificity, precision, accuracy, F1-score, and kappa values of 0.9324, 0.9380, 0.9389, 0.9365, 0.9310, and 0.9298, respectively.

Table 1.

Result analysis of the presented GSO-IDCNN technique with respect to distinct measures.

| No. of Validation | Sensitivity | Specificity | Precision | Accuracy | F1-score | Kappa |
|---|---|---|---|---|---|---|
| Validation 1 | 0.9324 | 0.9380 | 0.9389 | 0.9365 | 0.9310 | 0.9298 |
| Validation 2 | 0.9389 | 0.9456 | 0.9490 | 0.9427 | 0.9354 | 0.9376 |
| Validation 3 | 0.9423 | 0.9472 | 0.9498 | 0.9462 | 0.9403 | 0.9421 |
| Validation 4 | 0.9492 | 0.9490 | 0.9515 | 0.9408 | 0.9472 | 0.9219 |
| Validation 5 | 0.9481 | 0.9532 | 0.9576 | 0.9482 | 0.9431 | 0.9423 |
| Average | 0.9422 | 0.9466 | 0.9494 | 0.9429 | 0.9394 | 0.9347 |

Figure 5.

Result analysis of the GSO-IDCNN approach with respect to sensitivity and specificity.

Figure 6.

Result analysis of the GSO-IDCNN technique with respect to precision and accuracy.

Figure 7.

Result analysis of the GSO-IDCNN technique with respect to F1-score and kappa.

In validation 2, the GSO-IDCNN method attained sensitivity, specificity, precision, accuracy, F1-score, and kappa values of 0.9389, 0.9456, 0.9490, 0.9427, 0.9354, and 0.9376, respectively. In validation 3, the GSO-IDCNN approach obtained sensitivity, specificity, precision, accuracy, F1-score, and kappa values of 0.9423, 0.9472, 0.9498, 0.9462, 0.9403, and 0.9421, respectively. In validation 4, the GSO-IDCNN model obtained sensitivity, specificity, precision, accuracy, F1-score, and kappa values of 0.9492, 0.9490, 0.9515, 0.9408, 0.9472, and 0.9219, respectively. Finally, in validation 5, the GSO-IDCNN method achieved sensitivity, specificity, precision, accuracy, F1-score, and kappa values of 0.9481, 0.9532, 0.9576, 0.9482, 0.9431, and 0.9423, respectively.
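The six measures reported above can be derived from a confusion matrix. The sketch below assumes one-vs-rest counts with macro averaging over classes, which the text does not specify, and uses Cohen's formula for kappa. It also presumes that every class is both present and predicted at least once, since otherwise some ratios divide by zero; the function name is hypothetical.

```python
import numpy as np

def classification_metrics(cm) -> dict:
    """Macro-averaged measures from cm[i, j] = count of true class i predicted as j."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp           # samples of each class that were missed
    fp = cm.sum(axis=0) - tp           # samples wrongly assigned to each class
    tn = total - tp - fn - fp
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = tp.sum() / total
    # Cohen's kappa: observed agreement corrected for agreement expected by chance.
    p_e = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / total ** 2
    kappa = (accuracy - p_e) / (1.0 - p_e)
    return {"sensitivity": float(sensitivity.mean()),
            "specificity": float(specificity.mean()),
            "precision": float(precision.mean()),
            "accuracy": float(accuracy),
            "f1_score": float(f1.mean()),
            "kappa": float(kappa)}

if __name__ == "__main__":
    print(classification_metrics([[8, 2], [1, 9]]))
```

For the two-class example, accuracy is 17/20 = 0.85 and the chance agreement is 0.5, so kappa is (0.85 − 0.5)/0.5 = 0.7. Libraries such as scikit-learn or pycm (the latter listed among the tools above) offer equivalent computations with explicit averaging options.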

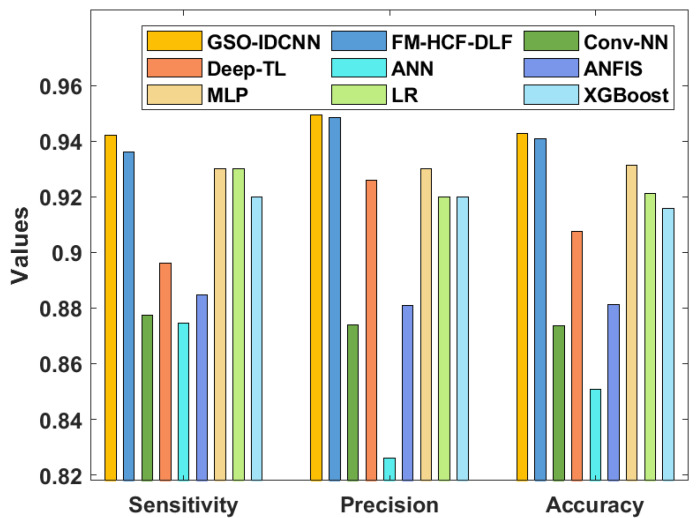

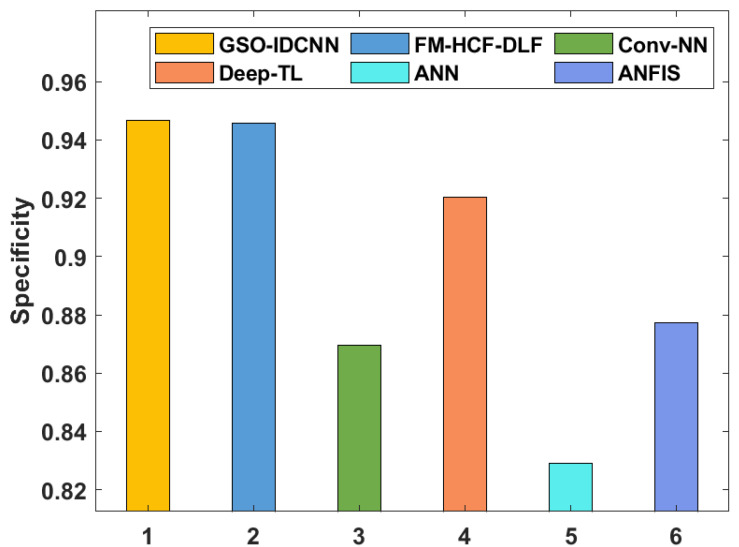

Table 2 and Figures 8 and 9 present a detailed comparison of the GSO-IDCNN technique with existing methods across distinct measures [26]. In terms of sensitivity, the ANN approach obtained the lowest value of 0.8745. The Conv-NN system achieved a slightly higher value of 0.8773, whereas the ANFIS and Deep-TL models reached closer values of 0.8848 and 0.8961, respectively. The XGBoost algorithm obtained a reasonable value of 0.92, and the MLP and LR approaches both reached 0.93. Although the FM-HCF-DLF methodology offered a higher value of 0.9361, the presented GSO-IDCNN technique achieved the maximum sensitivity of 0.9422.

Table 2.

Comparative studies of the existing models with the presented GSO-IDCNN models.

| Methods | Sensitivity | Specificity | Precision | Accuracy | F1-score |
|---|---|---|---|---|---|
| GSO-IDCNN | 0.9422 | 0.9466 | 0.9494 | 0.9429 | 0.9394 |
| FM-HCF-DLF | 0.9361 | 0.9456 | 0.9485 | 0.9408 | 0.9320 |
| Conv-NN | 0.8773 | 0.8697 | 0.8741 | 0.8736 | - |
| Deep-TL | 0.8961 | 0.9203 | 0.9259 | 0.9075 | - |
| ANN | 0.8745 | 0.8291 | 0.8259 | 0.8509 | - |
| ANFIS | 0.8848 | 0.8774 | 0.8808 | 0.8811 | - |
| MLP | 0.9300 | - | 0.9300 | 0.9313 | 0.9300 |
| LR | 0.9300 | - | 0.9200 | 0.9212 | 0.9200 |
| XGBoost | 0.9200 | - | 0.9200 | 0.9157 | 0.9200 |

Figure 8.

Comparative analysis of the GSO-IDCNN technique with different measures.

Figure 9.

Comparative analysis of the GSO-IDCNN technique with respect to .

In terms of specificity, the ANN model obtained the lowest value of 0.8291. The Conv-NN system achieved a slightly higher value of 0.8697, whereas the ANFIS model reached a moderate value of 0.8774. The Deep-TL approach showed a reasonable value of 0.9203, and the FM-HCF-DLF model reached a considerably higher value of 0.9456. However, the proposed GSO-IDCNN system achieved the highest specificity of 0.9466.

In terms of precision, the ANN methodology obtained the lowest value of 0.8259. The Conv-NN model achieved a slightly higher value of 0.8741, whereas the ANFIS approach reached a moderate value of 0.8808. The LR and XGBoost models both obtained 0.9200, the Deep-TL approach reached 0.9259, and the MLP model reached 0.9300. Although the FM-HCF-DLF methodology offered a higher value of 0.9485, the proposed GSO-IDCNN model achieved the highest precision of 0.9494.

In terms of accuracy, the ANN method obtained the lowest value of 0.8509. The Conv-NN model achieved a slightly higher accuracy of 0.8736, whereas the ANFIS and Deep-TL systems reached 0.8811 and 0.9075, respectively. The XGBoost approach obtained a reasonable accuracy of 0.9157, and the LR and MLP methodologies reached 0.9212 and 0.9313, respectively. Although the FM-HCF-DLF model offered a near-optimal accuracy of 0.9408, the proposed GSO-IDCNN technique reached a higher accuracy of 0.9429.

Finally, in terms of F1-score, the LR and XGBoost methods obtained the lowest value of 0.9200, and the MLP system reached a slightly higher value of 0.9300. The FM-HCF-DLF model reached an F1-score of 0.9320. However, the proposed GSO-IDCNN methodology achieved the highest F1-score of 0.9394.

The experimental validation indicates that the GSO-IDCNN technique delivers better diagnostic performance than the related approaches, with a sensitivity of 0.9422, specificity of 0.9466, precision of 0.9494, accuracy of 0.9429, and F1-score of 0.9394. This improvement is attributed to the GSO-based hyperparameter tuning of the IDCNN combined with the ANFC classifier.

5. Conclusions

This paper has presented a GSO-IDCNN approach for COVID-19 diagnosis and classification. First, the noise in the radiological images is removed by the GSF technique. Then, features are extracted using the IDCNN model, whose parameters are tuned by the GSO technique. Finally, the ANFC model performs the classification and assigns the appropriate class labels. To validate the performance of the GSO-IDCNN method, extensive simulations were carried out on benchmark radiological imaging databases. The experimental values show that the GSO-IDCNN approach achieved a maximal sensitivity of 0.9422, specificity of 0.9466, precision of 0.9494, accuracy of 0.9429, and F1-score of 0.9394. In the future, COVID-19 diagnostic performance could be improved by utilizing advanced end-to-end deep learning architectures.

Author Contributions

Conceptualization, A.M.H.; Data curation, A.A.A.; Formal analysis, A.A.A.; Investigation, J.S.A.; Methodology, I.A.; Project administration, M.M.E.; Resources, M.I.E.; Software, A.M.; Validation, I.Y.; Writing–original draft, I.A.; Writing–review & editing, A.M. All authors have read and agreed to the published version of the manuscript.

Funding

The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work under grant number (RGP 2/158/43). Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2022R191), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. The authors would like to thank the Deanship of Scientific Research at Umm Al-Qura University for supporting this work by Grant Code: 22UQU4340237DSR05. The authors would like to thank Prince Sultan University for its support in paying the Article Processing Charges.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article as no datasets were generated in the study.

Conflicts of Interest

The authors declare that they have no conflict of interest to report regarding the present study.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Stoecklin S.B., Rolland P., Silue Y., Mailles A., Campese C., Simondon A., Mechain M., Meurice L., Nguyen M., Bassi C., et al. First cases of coronavirus disease 2019 (COVID-19) in France: Surveillance, investigations, and control measures. Eurosurveillance. 2020;25:2000094. doi: 10.2807/1560-7917.ES.2020.25.6.2000094. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Pustokhin D.A., Pustokhina I.V., Dinh P.N., Phan S.V., Nguyen G.N., Joshi G.P. An effective deep residual network based class attention layer with bidirectional LSTM for diagnosis and classification of COVID-19. J. Appl. Stat. 2020:1–18. doi: 10.1080/02664763.2020.1849057. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Shorten C., Khoshgoftaar T.M., Furht B. Deep Learning applications for COVID-19. J. Big Data. 2021;8:18. doi: 10.1186/s40537-020-00392-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Alzubaidi L., Zhang J., Humaidi A.J., Al-Dujaili A., Duan Y., Al-Shamma O., Santamaría J., Fadhel M.A., Al-Amidie M., Farhan L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data. 2021;8:53. doi: 10.1186/s40537-021-00444-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Ismael A.M., Şengür A. Deep learning approaches for COVID-19 detection based on chest X-ray images. Expert Syst. Appl. 2021;164:114054. doi: 10.1016/j.eswa.2020.114054. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Alzubi J.A., Kumar A., Alzubi O.A., Manikandan R. Efficient Approaches for Prediction of Brain Tumor using Machine Learning Techniques. Indian J. Public Health Res. Dev. 2019;10:267–272. doi: 10.5958/0976-5506.2019.00298.5. [DOI] [Google Scholar]

- 7.Alafif T., Tehame A.M., Bajaba S., Barnawi A., Zia S. Machine and deep learning towards COVID-19 diagnosis and treatment: Survey, challenges, and future directions. Int. J. Environ. Res. Public Health. 2021;18:1117. doi: 10.3390/ijerph18031117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Nazir S., Alzubi O.A., Kaleem K., Hamdoun H. Image subset communication for resource-constrained applications in wireless sensor networks. Turk. J. Electr. Eng. Comput. Sci. 2020;28:2686–2701. doi: 10.3906/elk-2002-169. [DOI] [Google Scholar]

- 9.Azizi S., Mustafa B., Ryan F., Beaver Z., Freyberg J., Deaton J., Loh A., Karthikesalingam A., Kornblith S., Chen T., et al. Big self-supervised models advance medical image classification; Proceedings of the IEEE/CVF International Conference on Computer Vision; Montreal, QC, Canada. 10–17 October 2021; pp. 3478–3488. [Google Scholar]

- 10.Alzubaidi L., Al-Amidie M., Al-Asadi A., Humaidi A.J., Al-Shamma O., Fadhel M.A., Zhang J., Santamaría J., Duan Y. Novel transfer learning approach for medical imaging with limited labeled data. Cancers. 2021;13:1590. doi: 10.3390/cancers13071590. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Albrecht A., Hein E., Steinhöfel K., Taupitz M., Wong C.K. Bounded-depth threshold circuits for computer-assisted CT image classification. Artif. Intell. Med. 2002;24:179–192. doi: 10.1016/S0933-3657(01)00101-4.

- 12. Yang X., Sechopoulos I., Fei B. Automatic tissue classification for high-resolution breast CT images based on bilateral filtering; Proceedings of the Medical Imaging 2011: Image Processing, International Society for Optics and Photonics; Lake Buena Vista, FL, USA. 12–17 February 2011; p. 79623H.

- 13. Ozyurt F., Tuncer T., Avci E., Koc M., Serhatlioglu I. A novel liver image classification method using perceptual hash-based convolutional neural network. Arab. J. Sci. Eng. 2019;44:3173–3182. doi: 10.1007/s13369-018-3454-1.

- 14. Xu G., Cao H., Udupa J.K., Yue C., Dong Y., Cao L., Torigian D.A. A novel exponential loss function for pathological lymph node image classification; Proceedings of the MIPPR 2019: Parallel Processing of Images and Optimization Techniques; and Medical Imaging, International Society for Optics and Photonics; Wuhan, China. 2–3 November 2020; p. 114310A.

- 15. Lakshmanaprabu S.K., Mohanty S.N., Shankar K., Arunkumar N., Ramirez G. Optimal deep learning model for classification of lung cancer on CT images. Future Gener. Comput. Syst. 2019;92:374–382.

- 16. Gao M., Bagci U., Lu L., Wu A., Buty M., Shin H.C., Roth H., Papadakis G.Z., Depeursinge A., Summers R.M., et al. Holistic classification of CT attenuation patterns for interstitial lung diseases via deep convolutional neural networks. Comp. Methods Biomech. Biomed. Eng. Imag. Vis. 2018;6:1–6. doi: 10.1080/21681163.2015.1124249.

- 17. Shan F., Gao Y., Wang J., Shi W., Shi N., Han M., Xue Z., Shi Y. Lung infection quantification of COVID-19 in CT images with deep learning. arXiv. 2020:1–19. arXiv:2003.04655.

- 18. Wang S., Kang B., Ma J., Zeng X., Xiao M., Guo J., Cai M., Yang J., Li Y., Meng X., et al. A deep learning algorithm using CT images to screen for Corona Virus Disease (COVID-19). medRxiv. 2020:1–26. doi: 10.1007/s00330-021-07715-1.

- 19. Xie X., Li X., Wan S., Gong Y. Mining X-ray images of SARS patients. In: Williams G.J., Simoff S.J., editors. Data Mining: Theory, Methodology, Techniques, and Applications. Springer; Berlin/Heidelberg, Germany: 2006. pp. 282–294.

- 20. Nandan D., Kanungo J., Mahajan A. An error-efficient Gaussian filter for image processing by using the expanded operand decomposition logarithm multiplication. J. Ambient Intell. Humaniz. Comput. 2018. doi: 10.1007/s12652-018-0933-x.

- 21. Sikkandar M.Y., Alrasheadi B.A., Prakash N.B., Hemalakshmi G.R., Mohanarathinam A., Shankar K. Deep learning based an automated skin lesion segmentation and intelligent classification model. J. Ambient Intell. Humaniz. Comput. 2021;12:3245–3255. doi: 10.1007/s12652-020-02537-3.

- 22. Zainal N., Zain A.M., Radzi N.H.M., Udin A. Glowworm swarm optimization (GSO) algorithm for optimization problems: A state-of-the-art review. In: Applied Mechanics and Materials. Volume 421. Trans Tech Publications Ltd.; Bäch, Switzerland: 2013. pp. 507–511.

- 23. Al-Hmouz A., Shen J., Al-Hmouz R., Yan J. Modeling and simulation of an adaptive neuro-fuzzy inference system (ANFIS) for mobile learning. IEEE Trans. Learn. Technol. 2012;5:226–237. doi: 10.1109/TLT.2011.36.

- 24. Hosseini M.S., Zekri M. Review of medical image classification using the adaptive neuro-fuzzy inference system. J. Med. Signals Sens. 2012;2:49. doi: 10.4103/2228-7477.108171.

- 25. Available online: https://github.com/ieee8023/covid-chestxray-dataset (accessed on 18 February 2021).

- 26. Shankar K., Perumal E. A novel hand-crafted with deep learning features based fusion model for COVID-19 diagnosis and classification using chest X-ray images. Complex Intell. Syst. 2020;7:1277–1293. doi: 10.1007/s40747-020-00216-6.

Data Availability Statement

Data sharing is not applicable to this article as no datasets were generated in the study.