Abstract

Breast cancer is the leading cause of cancer death among women globally. In clinical practice, pathologists visually scan enormous gigapixel microscopic tissue slide images, which is a tedious and challenging task. In breast cancer diagnosis, micro-metastases and especially isolated tumor cells are extremely difficult to detect and are easily overlooked, because such tiny metastatic foci can be missed during visual examination. However, the detection of isolated tumor cells, which could serve as a viable prognostic marker for T1N0M0 breast cancer patients, has been poorly explored in the literature. To address these issues, we present a deep learning-based framework for efficient and robust lymph node metastasis segmentation in routinely used histopathological hematoxylin–eosin-stained (H–E) whole-slide images (WSIs) within minutes. A quantitative evaluation is conducted using 188 WSIs, comprising 94 pairs of H–E-stained WSIs and immunohistochemical CK(AE1/AE3)-stained WSIs; the latter are used to produce a reliable and objective reference standard. The quantitative results demonstrate that the proposed method achieves 89.6% precision, 83.8% recall, 84.4% F1-score, and 74.9% mIoU, and that it performs significantly better (p < 0.001) in precision, recall, F1-score, and mIoU than eight deep learning approaches: two recently published models (v3_DCNN and Xception-65), U-Net, SegNet, FCN, and three variants of Deeplabv3+ with different backbones (MobileNet, ResNet, and Xception). Importantly, the proposed system is shown to be capable of identifying tiny metastatic foci in challenging cases that are likely to be misdiagnosed on visual inspection, whereas the baseline approaches tend to fail to detect such foci.
In terms of computational time, the proposed method takes 2.4 min to process a WSI using four NVIDIA GeForce GTX 1080Ti GPU cards and 9.6 min using a single NVIDIA GeForce GTX 1080Ti GPU card, which is notably faster than the baseline methods (4 times faster than U-Net and SegNet, 5 times faster than FCN, 2 times faster than the three variants of Deeplabv3+, 1.4 times faster than v3_DCNN, and 41 times faster than Xception-65).

Keywords: breast cancer segmentation, hierarchical deep learning framework, histopathological images, lymph node metastases, whole-slide image analysis

1. Introduction

Breast cancer is the leading cause of cancer death among women globally [1], and according to the American Cancer Society, 42,690 people in the United States were expected to die of breast cancer in 2020 [2]. The prognosis of a breast cancer patient is determined by the extent of metastasis, i.e., the spread of cancer from the site where it originated to other parts of the body [3]. Metastases usually occur when cancer cells break away from the main tumor and enter the bloodstream or the lymphatic system. The TNM staging criteria are commonly adopted to classify the extent of cancer [4]: T refers to the size of the primary tumor (T-stage); N describes whether the cancer has spread to regional lymph nodes (N-stage); and M describes whether the cancer has spread to distant parts of the body (M-stage) [5]. By size, lymph node metastases are divided into three categories: macro-metastases (greater than 2.0 mm), micro-metastases (greater than 0.2 mm but no greater than 2.0 mm), and isolated tumor cells (ITCs, no greater than 0.2 mm) [6].
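The size thresholds above amount to a simple decision rule, sketched below. The helper `classify_metastasis` is hypothetical and assumes its input is the largest dimension of a single metastatic focus in millimeters; other staging criteria are not modeled.

```python
def classify_metastasis(size_mm: float) -> str:
    """Map the size of a metastatic focus (mm) to the category used in the text."""
    if size_mm <= 0:
        raise ValueError("size must be positive")
    if size_mm > 2.0:
        return "macro-metastasis"            # greater than 2 mm
    if size_mm > 0.2:
        return "micro-metastasis"            # greater than 0.2 mm, no greater than 2.0 mm
    return "isolated tumor cells (ITC)"      # no greater than 0.2 mm


for size in (3.5, 1.0, 0.15):
    print(size, classify_metastasis(size))
```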

The status of a tumor is commonly determined by examining routine histopathological H–E slides, but in many cases, additional costly immunohistochemical (IHC) staining is required to resolve unclear diagnoses on H–E slides [7]. However, the manual detection of cancer in a glass slide under a microscope is a time-consuming and challenging task [8]. Converting glass slides into whole-slide images (WSIs) now makes it possible to examine pathological images with computer-based algorithms. Dihge [9] predicted lymph node metastases in breast cancer using gene expression data, mixed features, and clinicopathological models to identify patients with a low risk of metastases and thereby spare them a sentinel lymph node biopsy (SLNB). Shinden [10] proposed γ-glutamyl hydroxymethyl rhodamine green as a new fluorescent probe for diagnosing lymph node metastases in breast cancer, achieving a sensitivity of 97%, a specificity of 79%, and a negative predictive value of 99%, which demonstrated its usefulness for cancer diagnosis. However, the methods of Dihge [9] and Shinden [10] require additional data, such as gene expression data, mixed features, and expensive biomarkers, which makes them less practical for routine clinical use than approaches based on economical histopathological slides. In this work, we propose a method that uses routine H–E slides for lymph node metastasis segmentation.

WSIs are extremely large: a glass slide scanned at 20× magnification produces an image several gigapixels in size, and around 470 WSIs contain nearly as many pixels as the entire ImageNet dataset. Confronted with the enormous amount of information in such slides, even experienced pathologists may miss features and make mistakes. As a result, reliable cancer diagnoses require peer review and consensus, which can be costly for hospitals and small cancer centers with a shortage of trained pathologists. To improve performance and overcome these weaknesses, various studies have applied deep learning [11,12]. Deep learning can learn high-level features directly from raw images, and deep learning algorithms are being used to diagnose, classify, and segment cancer. For example, Yu et al. [13] used regularized machine learning methods to select the top features and to distinguish shorter-term survivors from longer-term survivors, and Coudray et al. [14] used Inception v3 to classify lung tissue slides into lung squamous cell carcinoma (LUSC), lung adenocarcinoma (LUAD), or normal lung tissue.

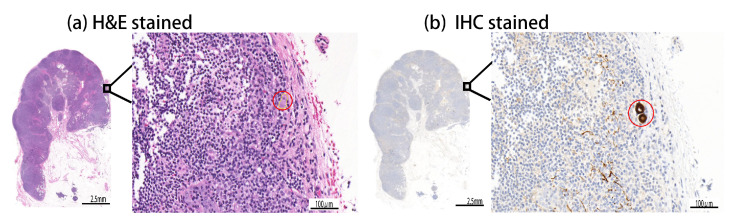

In breast cancer diagnosis, micro-metastases and ITCs are extremely difficult for medical experts to examine on H–E samples and are highly likely to be overlooked because of their tiny size relative to the massive dimensions of WSIs. As shown in Figure 1a, ITCs are very difficult for a human to find on a routinely used H–E whole-slide image. Therefore, in addition to H–E staining, an additional, expensive data-preparation and examination process based on IHC staining for cytokeratin is required to identify ITCs in lymph nodes, as shown in Figure 1b, where ITCs are clearly visible as brown spots. The goal of this research is to develop an automated method for the fast, efficient, and accurate segmentation of breast cancer in routinely used H–E WSIs. In the evaluation, the proposed method is shown to identify even tiny metastatic foci in challenging samples with ITCs or micro-metastases. To avoid human bias, IHC-stained slides are used to produce a reliable and objective reference standard in this study, and we have collected 188 WSIs, comprising 94 pairs of H–E and IHC slides. The H–E slides are split into training and testing sets for training and evaluation. For the segmentation of breast cancer in routinely used H–E WSIs, we compare the performance of the proposed method with eight popular or recently published deep learning methods: SegNet [15], U-Net [16], FCN [17], three variants of Deeplabv3+ [18] with different backbones (MobileNet [19], ResNet [20], and Xception [21]), and two recently published models, i.e., v3_DCNN [22] and Xception-65 [23].

Figure 1.

Challenges in finding tiny metastatic foci in gigapixel H–E WSIs. Segmenting tiny micro-metastases (denoted by red circles in (a) an H–E slide and (b) the associated IHC slide) is like finding a needle in a haystack. (a) Even in the high-magnification H–E image, ITCs are difficult for humans to locate. (b) In the high-magnification IHC image, metastases are visible as brown spots.

The main contributions of this paper can be summarized as follows:

We present an efficient and robust deep learning model for the segmentation of breast cancer in H–E-stained WSIs. The experimental results show that the proposed method significantly outperforms the baseline approaches for this task (p < 0.001);

Our framework is shown to be capable of detecting tiny metastatic foci, such as micro-metastases and ITCs, which are extremely difficult to find by visual inspection of H–E-stained WSIs, whereas the baseline approaches tend to fail to detect them;

By leveraging an efficient tile-based data structure and a modified fully convolutional neural network, the proposed method analyzes gigapixel WSIs notably faster than the baseline approaches, taking 2.4 min to complete a whole-slide analysis using four NVIDIA GeForce GTX 1080Ti GPU cards and 9.6 min using a single NVIDIA GeForce GTX 1080Ti GPU card.

This paper is organized as follows. Section 2 presents the related works; Section 3 describes the details of the materials and methods used; Section 4 presents the results, including a comparison with the baseline approaches; Section 5 provides discussions and presents the significance of the work; Section 6 draws the conclusion and presents the future research directions.

2. Related Works

In recent years, owing to substantial advances in computing power and slide-scanning techniques, an increasing number of researchers have evaluated their algorithms on WSI datasets. Bejnordi et al. [24] proposed a multiscale superpixel method to detect ductal carcinoma in situ (DCIS) in WSIs. Huang et al. [25] proposed a convolutional network with multi-magnification input images to automatically detect hepatocellular carcinoma (HCC). Celik et al. [26] used pre-trained ResNet-50 and DenseNet-161 models for the automated detection of invasive ductal carcinoma. Gecer et al. [27] proposed deep convolutional networks (DCNNs) for the detection and classification of breast cancer in WSIs. First, a saliency detector performs multi-scale localization of relevant regions of interest (ROIs) in a WSI. Next, a convolutional network classifies image patches into five diagnostic categories (non-proliferative changes, proliferative changes, atypical ductal hyperplasia, ductal carcinoma in situ, and invasive carcinoma). Finally, slide-level categorization and pixel-wise labeling are performed by fusing the classification and saliency maps. Lin et al. [28] proposed a framework for fast, dense scanning to detect metastatic breast cancer in WSIs. However, Lin's [28] method does not address ITCs, which are extremely difficult for medical experts to examine on H–E samples and are highly likely to be overlooked because of their tiny size relative to the massive dimensions of WSIs.

In 2016 and 2017, the Camelyon16 [29] and Camelyon17 challenges [3,29,30] were held, aiming to evaluate new and existing algorithms for the automated detection and classification of metastases in H–E-stained WSIs of lymph node sections. In Camelyon16, 400 WSIs and the associated annotations were provided, where 270 slides were for training and 130 slides for testing; in Camelyon17, 1399 WSIs and the associated annotations were provided, where 899 slides were for training and 500 slides for testing. Wang [31] implemented an ensemble of two GoogLeNets for patch-based metastasis detection and won Camelyon16. Firstly, they divided WSIs into patches with pixels and then trained an ensemble of two GoogLeNet classification models to detect cancer regions.

For the fast and precise pixel-based segmentation of breast cancer, Guo et al. [22] introduced a v3_DCNN framework in 2019, which combines an Inception-v3 classification model for the selection of tumor regions and a DCNN segmentation model for refined segmentation. Guo [22] used three different patch sizes to train models, including , , and . They named the three DCNN models after the varied sizes of training patches: DCNN-321, DCNN-768, and DCNN-1280, respectively. Priego et al. [23] proposed a patch-based deep convolutional neural network (DCNN), together with an encoder-decoder with a separable atrous convolution architecture for the segmentation of breast cancer in H–E-stained WSIs.

3. Materials and Methods

In this section, we describe the datasets and the proposed deep learning-based framework with a modified fully convolutional network, which is trained using transfer learning, boosting learning, boosted data augmentation, and focus sampling techniques to boost its performance for the segmentation of breast cancer on H–E-stained WSIs. This section is divided into five subsections: in Section 3.1, the datasets are described; in Section 3.2, the transfer learning, boosting learning, boosted data augmentation, and focus sampling techniques of the proposed method are described; in Section 3.3, the proposed deep learning-based framework is described; in Section 3.4, the modified fully convolutional network is described; in Section 3.5, the implementation details of the modified fully convolutional network and the baseline approaches are described.

3.1. The Dataset

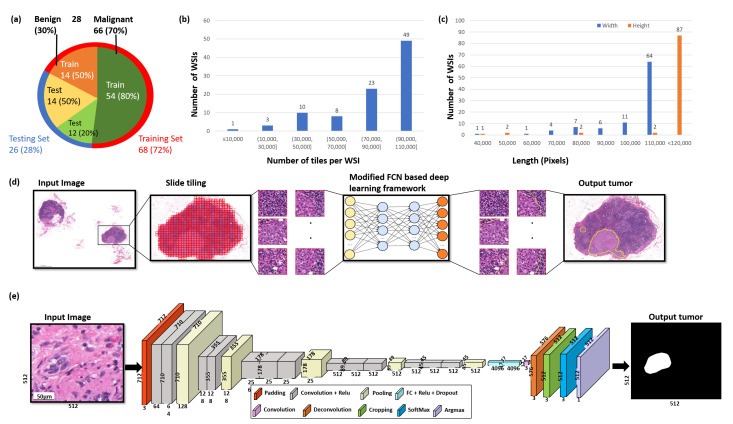

The dataset was obtained directly from the National Taiwan University Hospital with ethical approval (NTUH-REC 201810082RINB) from Research Ethics Committee B of the National Taiwan University Hospital on 8 March 2019, and contains 188 H–E- and IHC CK(AE1/AE3)-stained lymph node slides. Of the 188 WSIs, 94 slides are H–E-stained and the other 94 are IHC CK(AE1/AE3)-stained. The dimensions of the slides are, on average, pixels, with a physical size of mm. All breast cancer tissue slides with lymphatic metastases were scanned using a 3DHISTECH Pannoramic (3DHISTECH Kft., Budapest, Hungary) scanner at objective magnification. All annotations were made by two expert pathologists with the guidance of IHC biomarkers. The dataset was split into two separate subsets for training and testing: 68 slides for the training set (), of which 54 are malignant and 14 are benign, and 26 slides for the testing set (), of which 12 are malignant and 14 are benign (Figure 2a), ensuring that the models are never trained and tested on the same sample. For training the AI models, around 0.02% of the malignant tissue samples and 0.01% of the benign tissue samples were used in the training set. Detailed information about the distribution of the WSIs is shown in Figure 2b,c. For the quantitative evaluation, the IHC slides were used to produce a reliable reference standard.

Figure 2.

The overview of the data and the proposed deep learning framework presented in this study. (a) Distribution of data between malignant and benign samples and for training and testing data, respectively. (b) The number of tiles delivered per WSI. (c) The width and height distributions of the WSIs are shown in blue and orange, respectively. (d) The proposed deep learning framework for the segmentation of breast cancer. Firstly, Otsu’s method is used to threshold the slide image to efficiently discard all background noise. Secondly, each WSI is formatted into a tile-based data structure. Thirdly, the tiles are then analyzed by a deep convolutional neural network to produce the breast cancer metastasis segmentation results. (e) Illustration of the proposed modified FCN architecture.

3.2. Transfer Learning, Boosting Learning, Boosted Data Augmentation, and Focusing Sampling

3.2.1. Transfer Learning

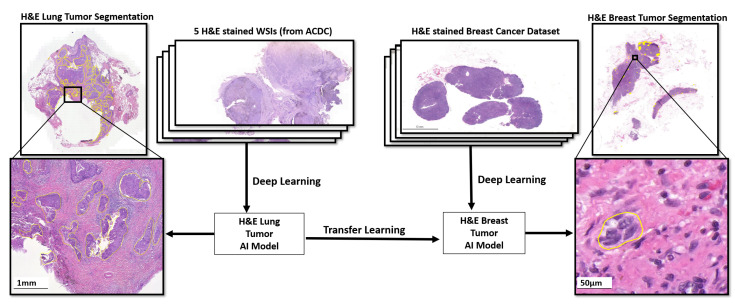

Transfer learning is a machine learning technique in which a model trained on one task is repurposed, with some modifications, for a second, related task [32]. For instance, a model pre-trained on ImageNet, which contains thousands of object classes, can serve as the starting point for task-specific learning in cancer detection. Transfer learning is particularly useful for tasks in which there are not enough training samples to train a model from scratch, such as medical image segmentation for rare or emerging diseases [32]. This is especially the case for models based on deep neural networks, which have a large number of parameters to train. With transfer learning, the model parameters start from already-good initial weights that need only small adjustments to be adapted to the second task. Transfer learning has frequently been used in biomedical image analysis, for example, in mitochondria segmentation [33], organelle segmentation [34], and breast cancer classification [35]. In this study, as shown in Figure 3, we use the pre-trained weights of a lung cancer segmentation model as the initial weights for training the proposed model to segment tumors in the H–E-stained breast dataset. The lung model was trained on five randomly selected WSIs from the H–E-stained lung dataset of the Automatic Cancer Detection and Classification in Whole-Slide Lung Histopathology (ACDC) challenge, held with the IEEE International Symposium on Biomedical Imaging (ISBI) in 2019 [36]. We assumed that this pre-trained network would be able to recognize tumor tissue morphology; this pre-trained model therefore serves as the backbone architecture for transferring knowledge to find breast tumor tissue. However, to train a model with such a small dataset, the following boosting learning strategy is designed.
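A minimal sketch of the weight-transfer idea, assuming model weights are stored as a dictionary mapping layer names to NumPy arrays (a hypothetical representation; deep learning frameworks have their own checkpoint formats). Layers whose name and shape match the pre-trained lung model are copied, and the remaining layers keep their fresh initialization. The layer names and the helper `init_from_pretrained` are illustrative, not the authors' code.

```python
import numpy as np


def init_from_pretrained(target: dict, pretrained: dict) -> list:
    """Copy pre-trained arrays into `target` wherever the layer name and shape match.

    Returns the names of the initialized layers; all other layers keep their
    original (for example, random) initialization.
    """
    copied = []
    for name, weights in list(target.items()):
        source = pretrained.get(name)
        if source is not None and source.shape == weights.shape:
            target[name] = source.copy()
            copied.append(name)
    return copied


rng = np.random.default_rng(0)
# Hypothetical layer names and shapes, for illustration only.
lung_model = {"conv1": rng.normal(size=(64, 3, 3, 3)), "score": rng.normal(size=(2, 64, 1, 1))}
breast_model = {
    "conv1": np.zeros((64, 3, 3, 3)),
    "score": np.zeros((2, 64, 1, 1)),
    "extra": np.zeros(4),
}
print(init_from_pretrained(breast_model, lung_model))  # ['conv1', 'score']
```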

Figure 3.

The H–E-stained breast cancer segmentation model is trained through transfer learning from a lung tumor AI model trained on five H–E WSIs of the IEEE ACDC challenge dataset. The pre-trained weights of the H–E lung tumor AI model are used as the initial weights for training the proposed model for the segmentation of the H–E-stained breast dataset.

3.2.2. Boosting Learning

Given a training set , where represents the instance data and represents the label, a learner , and the base deep learning model C, the proposed boosting learning produces the final AI model by the following steps. Firstly, create a new set with instance weight , where represents a tile; . Each instance weight is initialized with an IoU-based attention weighting function for further training.

| (1) |

where ; .

Then, iteratively for , build a base model . The sample weights are continuously modified and formulated by increasing the attention weights of false positives and false negatives of .

| (2) |
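The sketch below illustrates IoU-based sample reweighting in the spirit of Equations (1) and (2), under explicitly hypothetical choices: the initial weight of a tile is 1 − IoU plus a small ε, so poorly segmented tiles receive more attention, and in each round every tile that still contains false positive or false negative pixels has its weight multiplied by (1 + β) before renormalization. These formulas are placeholders, not the authors' attention weighting function, and in the real loop the predictions would come from the base model retrained in each round.

```python
import numpy as np


def tile_iou(pred: np.ndarray, label: np.ndarray) -> float:
    """Binary IoU between a predicted and a reference tumor mask of one tile."""
    inter = np.logical_and(pred, label).sum()
    union = np.logical_or(pred, label).sum()
    return 1.0 if union == 0 else float(inter / union)


def init_weights(preds, labels, eps=1e-3):
    # Hypothetical attention weighting: poorly segmented tiles (low IoU) get larger weights.
    w = np.array([1.0 - tile_iou(p, y) + eps for p, y in zip(preds, labels)])
    return w / w.sum()


def update_weights(w, preds, labels, beta=1.0):
    # Boost every tile whose current prediction still contains FP or FN pixels.
    has_error = np.array([np.any(p != y) for p, y in zip(preds, labels)])
    w = w * np.where(has_error, 1.0 + beta, 1.0)
    return w / w.sum()


label_a = np.zeros((4, 4), dtype=bool)
label_a[1:3, 1:3] = True
pred_a = label_a.copy()                      # perfectly segmented tile
label_b = label_a.copy()
pred_b = np.zeros((4, 4), dtype=bool)        # missed tumor, i.e. false negatives
preds, labels = [pred_a, pred_b], [label_a, label_b]

w0 = init_weights(preds, labels)             # initial weights favor the missed tile
w1 = update_weights(w0, preds, labels)       # one boosting round increases that preference
print(w0.round(4), w1.round(4))
```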

3.2.3. Boosted Data Augmentation

Next, we devised a boosted data augmentation based on the sample attention weights and produced new data . Data augmentation was applied to enlarge the training set with synthetically modified data by rotating the images in 5° increments and five times in 90° increments, mirroring them along the horizontal and vertical axes, and randomly adjusting the contrast (±20%), the saturation (±20%), and the brightness (±12.5%).
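A minimal NumPy sketch of the geometric and color transforms, under stated assumptions: the 5° rotations are omitted because they need interpolation (for example, `scipy.ndimage.rotate`); contrast, saturation, and brightness are applied as multiplicative factors drawn uniformly within the stated ranges; and `copies_per_tile` is a hypothetical way of letting the sample attention weights decide how many augmented copies a tile receives.

```python
import numpy as np


def augment(tile: np.ndarray, rng: np.random.Generator) -> list:
    """Geometric and color variants of an RGB tile with values in [0, 1]."""
    geometric = []
    for k in range(4):                                   # 0, 90, 180 and 270 degree rotations
        rotated = np.rot90(tile, k, axes=(0, 1))
        geometric += [rotated, rotated[:, ::-1]]         # plus a horizontal mirror of each
    # A vertical mirror equals a horizontal mirror of the 180-degree rotation, so these
    # 8 variants already cover mirroring along both axes.
    out = []
    for img in geometric:
        contrast = 1.0 + rng.uniform(-0.20, 0.20)
        saturation = 1.0 + rng.uniform(-0.20, 0.20)
        brightness = 1.0 + rng.uniform(-0.125, 0.125)
        mean = img.mean()
        img = (img - mean) * contrast + mean             # stretch around the tile mean
        gray = img.mean(axis=2, keepdims=True)
        img = gray + (img - gray) * saturation           # blend with the per-pixel gray value
        img = img * brightness                           # multiplicative brightness change
        out.append(np.clip(img, 0.0, 1.0))
    return out


def copies_per_tile(weights, max_copies=12):
    # Hypothetical "boosting": tiles with larger attention weights get more augmented copies.
    w = np.asarray(weights, dtype=float)
    return np.maximum(1, np.round(max_copies * w / w.max())).astype(int)


rng = np.random.default_rng(0)
variants = augment(rng.random((64, 64, 3)), rng)
print(len(variants), copies_per_tile([0.1, 0.9]))
```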

3.2.4. Focusing Sampling

When the training data are only partially labeled, many unlabeled tissues of interest are wrongly treated as background or content of no interest; this severely confuses AI learners during supervised learning and deteriorates the performance of the resulting AI models. To deal with this issue, we added an IoU-based focusing sampling mechanism for computing the gradients effectively. Unlabeled cells are no longer used as negative samples that confuse learning; instead, they are treated as ignored samples. This not only helps the learning become more focused but also shortens the training time. Moreover, we increase the learning effort for false positive (FP) and false negative (FN) predictions and add variations of the FPs and FNs so that the AI learns better, addresses its weaknesses, and produces improved models.
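One common way to realize "ignored samples" is an ignore label that is excluded from the loss. The sketch below assumes a hypothetical ignore value of 255 for unlabeled tissue; it shows only the masking of the cross-entropy, not the IoU-based selection of which pixels to ignore.

```python
import numpy as np

IGNORE = 255  # hypothetical label value for unlabeled tissue that must not count as background


def masked_cross_entropy(probs: np.ndarray, labels: np.ndarray, ignore_index: int = IGNORE) -> float:
    """Mean pixel-wise cross-entropy that skips pixels marked as ignored.

    probs: (H, W, C) softmax probabilities; labels: (H, W) integer class map.
    """
    valid = labels != ignore_index
    if not np.any(valid):
        return 0.0                                   # nothing to learn from in this tile
    picked = probs[valid, labels[valid]]             # probability of the reference class per valid pixel
    return float(-np.log(np.clip(picked, 1e-12, 1.0)).mean())


probs = np.array([[[0.5, 0.5], [0.01, 0.99]]])       # second pixel looks like tumor but is unlabeled
print(masked_cross_entropy(probs, np.array([[0, IGNORE]])))  # ignored pixel adds no penalty
print(masked_cross_entropy(probs, np.array([[0, 0]])))       # same pixel wrongly treated as background
```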

3.3. Whole-Slide Image Processing

Figure 2d shows the deep learning-based approach for the segmentation of metastatic breast cancer from WSIs. First, Otsu's method is used to threshold the WSI and exclude the background, substantially reducing the amount of processing per slide. Then, each WSI is formulated as a tile-based data structure to handle gigapixel data efficiently, where u represents a tile unit, and i and j represent the row and column indices of a tile, respectively.
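A self-contained sketch of these two preprocessing steps, assuming the slide (in practice, a downsampled thumbnail of it) is available as an 8-bit grayscale NumPy array. Otsu's threshold is computed from the histogram by maximizing the between-class variance, pixels at or below the threshold are treated as tissue (H–E tissue is darker than the bright glass), and only tiles u(i, j) with at least a hypothetical 5% tissue fraction are kept.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Otsu's threshold for an 8-bit grayscale image (maximizes between-class variance)."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                          # probability of class 0 for each threshold t
    mu = np.cumsum(prob * np.arange(256))            # cumulative mean of class 0
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    return int(np.argmax(np.nan_to_num(sigma_b)))    # undefined thresholds count as 0


def tissue_tiles(gray: np.ndarray, tile: int = 512, min_tissue: float = 0.05) -> list:
    """Return the (i, j) indices of tiles u(i, j) that contain enough tissue."""
    tissue = gray <= otsu_threshold(gray)            # tissue is darker than the background
    rows = -(-gray.shape[0] // tile)                 # ceiling division: partial edge tiles count
    cols = -(-gray.shape[1] // tile)
    keep = []
    for i in range(rows):
        for j in range(cols):
            patch = tissue[i * tile:(i + 1) * tile, j * tile:(j + 1) * tile]
            if patch.mean() >= min_tissue:
                keep.append((i, j))
    return keep


gray = np.full((1024, 1024), 230, dtype=np.uint8)    # bright background
gray[:512, :512] = 80                                # one dark block of "tissue"
print(otsu_threshold(gray), tissue_tiles(gray))
```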

Next, a deep learning model is built using a modified fully convolutional neural network for fast WSI analysis, which is described in detail in the next section. The tiles are processed by the proposed deep convolutional neural network C to obtain the probabilities for cancer cells, as shown in Equation (3). Then, the pixel-based segmentation result of tumor cells is produced based on the tumor cells’ probabilities .

| (3) |

| (4) |

The tile size and alpha are input parameters and are empirically set as 512 × 512 and 0.5, respectively.
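The per-tile inference and thresholding can be sketched as follows, assuming `model` is any callable that returns a tumor probability map with the same height and width as its input tile. Pixels with probability at or above alpha are labeled tumor; whether the comparison is inclusive is an assumption, and `toy_model` is a hypothetical stand-in for the trained network.

```python
import numpy as np


def segment_slide(rgb: np.ndarray, tiles, model, tile: int = 512, alpha: float = 0.5) -> np.ndarray:
    """Run `model` on each kept tile u(i, j) and threshold its tumor probabilities at alpha."""
    mask = np.zeros(rgb.shape[:2], dtype=bool)
    for i, j in tiles:
        y0, x0 = i * tile, j * tile
        u = rgb[y0:y0 + tile, x0:x0 + tile]          # edge tiles may be smaller than tile x tile
        prob = model(u)                              # (h, w) tumor probability map for this tile
        mask[y0:y0 + u.shape[0], x0:x0 + u.shape[1]] = prob >= alpha
    return mask


def toy_model(u: np.ndarray) -> np.ndarray:
    # Hypothetical stand-in for the trained network: darker pixels get higher "tumor" scores.
    return 1.0 - u.mean(axis=2) / 255.0


rgb = np.full((8, 8, 3), 255, dtype=np.uint8)
rgb[:4, :4] = 0                                      # one dark 4 x 4 region
mask = segment_slide(rgb, [(0, 0), (0, 1)], toy_model, tile=4)
print(mask.sum())                                    # 16
```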

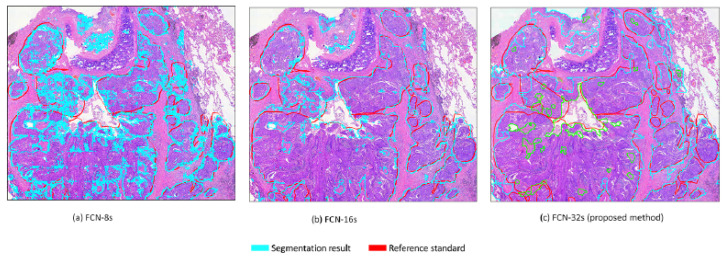

3.4. The Proposed Modified Fully Convolutional Network

Fully convolutional networks (FCNs) are widely used in pathology, for example, for counting cells in neuropathology [37] and microscopy images [38], and for segmenting nuclei in histopathology images [39]. Our FCN architecture modifies the original FCN framework of Shelhamer et al. [17] in two ways. First, to address insufficient GPU memory during training, we use a shallower network of five layers rather than the seven layers of the conventional FCN [17]. Second, because segmentation requires a prediction for every pixel, the feature maps must be upsampled to the original size; we therefore use single-stream 32s upsampling, which avoids excessively fragmented segmentation results, as shown in Figure 4, and reduces the computational time for training and inference. The resulting modified FCN serves as the base deep learning model, decreasing GPU memory consumption, improving segmentation results, and reducing computational time. The modified FCN architecture has a padding layer, five convolutional blocks, a rectified linear unit (ReLU) activation function after every convolutional layer, five max-pooling layers, a deconvolutional layer, a softmax layer, and two dropout layers. It begins with a padding layer that increases the input size from 512 × 512 × 3 to 712 × 712 × 3. The padding layer is followed by five convolutional blocks applied sequentially. The first two convolutional blocks consist of two convolutional layers each, and the remaining three blocks consist of three convolutional layers each; all convolutional layers have a filter size of 3 × 3 and a stride of 1, and each is followed by a ReLU layer. Each convolutional block is followed by a max-pooling layer with a filter size of 2 × 2 and a stride of 2. After the last convolutional block and max-pooling layer, a deconvolutional layer with a filter size of 64 × 64 and a stride of 32 is applied to obtain the upsampled feature maps. Cropping is performed after the deconvolutional layer to restore the feature maps to the size of the input image. Softmax is then used to obtain the class probabilities, and finally, an argmax function is applied to the class probabilities to produce the pixel-based class map. The detailed architecture of the modified FCN is shown in Figure 2e.
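The spatial sizes quoted above can be checked with a little arithmetic. The sketch assumes 3 × 3 convolutions with "same" padding (the convolution padding is not stated), so only the five 2 × 2, stride-2 max-pooling layers shrink the map; the 64 × 64, stride-32 transposed convolution then produces (n - 1) × 32 + 64 pixels per side, which is cropped back to the 512 × 512 tile.

```python
def fcn32s_sizes(in_size=512, pad_to=712, n_pools=5, k=64, s=32, ceil_mode=False):
    """Trace the spatial size through the modified FCN (assumes size-preserving 3x3 convs)."""
    sizes = {"input": in_size, "padded": pad_to}
    x = pad_to
    for p in range(1, n_pools + 1):
        x = -(-x // 2) if ceil_mode else x // 2      # 2x2 max pooling with stride 2
        sizes[f"pool{p}"] = x
    sizes["deconv"] = (x - 1) * s + k                # transposed convolution without padding
    if sizes["deconv"] < in_size:
        raise ValueError("upsampled map is too small to crop back to the input size")
    sizes["cropped"] = in_size                       # crop to the input tile size
    return sizes


print(fcn32s_sizes())                  # pool5 -> 22, deconv -> 736
print(fcn32s_sizes(ceil_mode=True))    # pool5 -> 23, deconv -> 768
```

With floor-mode pooling the map shrinks to 22 × 22 and is upsampled to 736 × 736; with ceil-mode pooling it is 23 × 23 and 768 × 768. Either way, the upsampled map is large enough to crop back to 512 × 512.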

Figure 4.

Comparison of three upsampling strategies. The results of (a) FCN-8s and (b) FCN-16s are excessively fragmented compared with those of (c) FCN-32s, which are the closest to the reference standard.

3.5. Implementation Details

To train the proposed method, the model is initialized using the VGG16 model and optimized with stochastic gradient descent (SGD), using the cross-entropy function as the loss function. The proposed method is trained with a learning rate of , a dropout ratio of 0.5, and a weight decay of 0.0005. The baseline approaches U-Net [16], SegNet [15], and FCN [17] are implemented using the Keras implementation of image segmentation models by Gupta et al. [40], initialized with a pre-trained VGG16 model, and optimized with Adadelta, using the cross-entropy function as the loss function; they are trained with a learning rate of 0.0001, a dropout ratio of 0.2, and a weight decay of 0.0002. For the DeepLabv3+ [18] baselines with three different backbones, MobileNet [19], ResNet [20], and Xception [21], the networks are optimized using SGD with the cross-entropy loss and trained with a learning rate of 0.007, a dropout ratio of 0.2, and a weight decay of 0.00005.
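For reference, the weight decay reported above acts as an L2 penalty added to the gradient in each SGD update, as in, for example, the `weight_decay` argument of PyTorch's `torch.optim.SGD`. The sketch shows a single plain update without momentum (momentum is not specified here), using the DeepLabv3+ settings as example values.

```python
import numpy as np


def sgd_step(w: np.ndarray, grad: np.ndarray, lr: float, weight_decay: float) -> np.ndarray:
    """One plain SGD update with L2 weight decay: w <- w - lr * (grad + weight_decay * w)."""
    return w - lr * (grad + weight_decay * w)


w = np.array([1.0, -2.0])
grad = np.array([0.5, 0.0])
# Example values: the DeepLabv3+ learning rate (0.007) and weight decay (0.00005) quoted above.
print(sgd_step(w, grad, lr=0.007, weight_decay=5e-5))
```

Even with a zero gradient, as for the second weight, the decay term shrinks the weight slightly toward zero.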

4. Results

In this section, the evaluation metrics and the quantitative evaluation results with statistical analysis are described. This section is divided into three subsections: in Section 4.1, we describe the evaluation metrics; in Section 4.2, we present the quantitative evaluation results of the proposed method and compare the performance with the baseline approaches; in Section 4.3, we present the hardware specifications and run-time analysis of the proposed method, and compare the performance with the baseline approaches.

4.1. Evaluation Metrics

Four criteria are adopted for the quantitative evaluation of segmentation performance: mean intersection over union (mIoU), F1-score, precision, and recall.

The intersection over union (IoU) is computed on normal and tumor slides and is formulated according to Equation (5):

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN} \tag{5}$$

where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.

mIoU is the mean IoU over all classes in the dataset:

$$\mathrm{mIoU} = \frac{1}{q+1} \sum_{b=0}^{q} \mathrm{IoU}_b \tag{6}$$

where q + 1 is the total number of classes and IoU_b is the intersection over union of class b.

Precision, recall, and F1-score are computed as follows:

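$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{7}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{8}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{9}$$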
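The metrics in Equations (5) to (9) can be computed from binary masks as below. This is a sketch: `binary_scores` and `mean_iou` are illustrative helpers, and the conventions for empty denominators (0 for precision, recall, and F1; 1 for IoU when both masks are empty) are assumptions rather than choices stated in the text.

```python
import numpy as np


def binary_scores(pred: np.ndarray, ref: np.ndarray) -> dict:
    """Pixel-wise precision, recall, F1-score, and IoU for one class (boolean masks)."""
    tp = np.sum(pred & ref)
    fp = np.sum(pred & ~ref)
    fn = np.sum(~pred & ref)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 1.0  # both masks empty: perfect agreement
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


def mean_iou(pred_labels: np.ndarray, ref_labels: np.ndarray, num_classes: int = 2) -> float:
    """Average the per-class IoU over the q + 1 classes (here background and tumor)."""
    ious = [binary_scores(pred_labels == b, ref_labels == b)["iou"] for b in range(num_classes)]
    return float(np.mean(ious))


ref = np.array([[True, True], [False, False]])
pred = np.array([[True, False], [True, False]])
print(binary_scores(pred, ref), mean_iou(pred.astype(int), ref.astype(int)))
```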

4.2. Quantitative Evaluation with Statistical Analysis

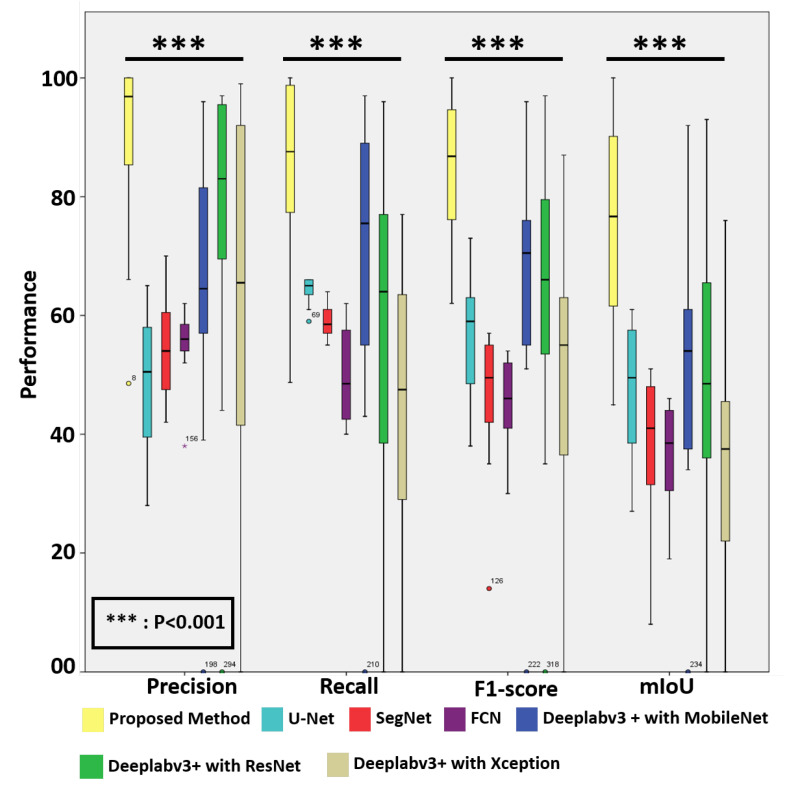

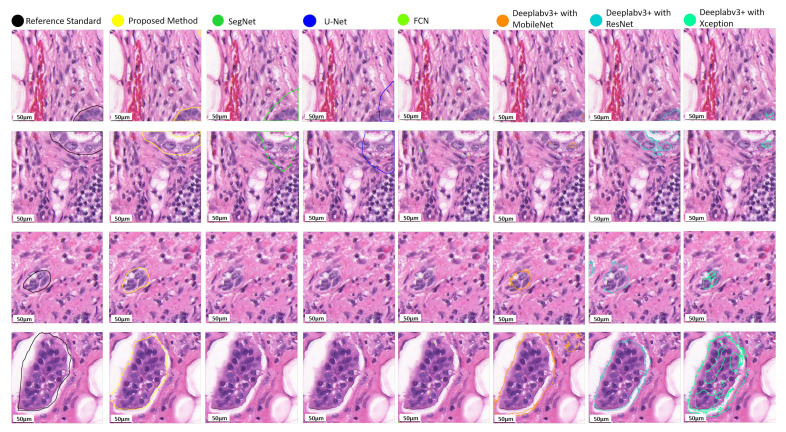

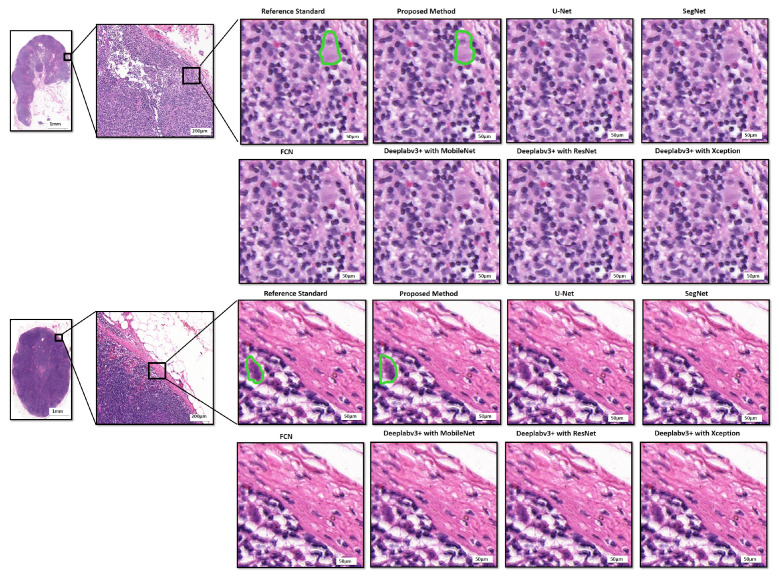

For quantitative assessment, we compared the proposed approach with eight deep learning models for breast cancer segmentation in routinely used histopathological H–E WSIs, as presented in Table 1: two recently published models, i.e., v3_DCNN [22] and Xception-65 [23], as well as U-Net [16], SegNet [15], FCN [17], and three variants of Deeplabv3+ [18] with different backbones (MobileNet [19], ResNet [20], and Xception [21]). As can be observed, the proposed approach outperforms the baseline techniques in the segmentation of breast cancer in histopathological images, with 83.8% recall, 89.6% precision, 84.4% F1-score, and 74.9% mIoU. In addition, the box plots of the quantitative results for breast cancer segmentation in Figure 5 show that the proposed technique consistently outperforms the baseline approaches. To further assess these differences, we analyzed the quantitative scores with Fisher's least significant difference (LSD) procedure in SPSS (Table 2). Based on the LSD test, the proposed approach significantly outperforms the baseline approaches in terms of precision, recall, F1-score, and mIoU (p < 0.001). Figure 6 presents a visual comparison of the segmentation results of the proposed method and the baseline approaches for breast cancer in H–E slides. The typical segmentation results generated by the proposed method are consistent with the reference standard produced by expert pathologists, whereas the baseline approaches fail to segment the metastatic lesions completely.
Figure 7 compares the proposed approach and the baseline approaches for the segmentation of tiny metastatic foci, such as micro-metastases and ITCs, in challenging cases with isolated tumor cells, demonstrating that the proposed approach can effectively segment tiny metastatic foci, whereas the baseline approaches tend to fail to detect them.
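Fisher's LSD procedure compares group means using the pooled within-group variance (mean squared error) from a one-way ANOVA, without a multiplicity correction. A minimal NumPy sketch of the confidence-interval part follows; the critical value `t_crit` is passed in (it could be obtained from `scipy.stats.t.ppf(0.975, df)` with df = N - k), and the toy scores are hypothetical, not the study's data.

```python
import numpy as np


def lsd_pairwise(groups: dict, ref: str, t_crit: float) -> dict:
    """Fisher's LSD comparisons of group `ref` against every other group.

    groups maps a method name to its per-slide scores; t_crit is the two-sided
    critical t value for df = N - k degrees of freedom. Returns, per method,
    (mean difference, standard error, lower bound, upper bound).
    """
    data = {name: np.asarray(v, dtype=float) for name, v in groups.items()}
    n_total = sum(len(v) for v in data.values())
    df_within = n_total - len(data)
    ss_within = sum(((v - v.mean()) ** 2).sum() for v in data.values())
    mse = ss_within / df_within                      # pooled within-group variance
    results = {}
    for name, v in data.items():
        if name == ref:
            continue
        diff = data[ref].mean() - v.mean()
        se = np.sqrt(mse * (1.0 / len(data[ref]) + 1.0 / len(v)))
        results[name] = (diff, se, diff - t_crit * se, diff + t_crit * se)
    return results


# Hypothetical toy scores; a difference is significant at the 5% level if the interval excludes 0.
scores = {"proposed": [0.90, 0.85, 0.80], "baseline": [0.60, 0.55, 0.50]}
print(lsd_pairwise(scores, ref="proposed", t_crit=2.776))
```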

Table 1.

The quantitative evaluation of the proposed method and the baseline approaches in the segmentation of metastases on H–E WSIs.

| Metric | Method | Mean | Std. Deviation | Std. Error | 95% C.I. Lower Bound | 95% C.I. Upper Bound |
|---|---|---|---|---|---|---|
| Precision | Proposed method | 0.892 | 0.163 | 0.047 | 0.787 | 0.995 |
| | U-Net [16] | 0.486 | 0.116 | 0.033 | 0.411 | 0.559 |
| | SegNet [15] | 0.548 | 0.091 | 0.026 | 0.489 | 0.605 |
| | FCN [17] | 0.552 | 0.062 | 0.018 | 0.512 | 0.590 |
| | Deeplabv3+ [18] with MobileNet [19] | 0.643 | 0.262 | 0.075 | 0.476 | 0.809 |
| | Deeplabv3+ [18] with ResNet [20] | 0.613 | 0.354 | 0.102 | 0.388 | 0.838 |
| | Deeplabv3+ [18] with Xception [21] | 0.753 | 0.286 | 0.082 | 0.571 | 0.935 |
| Recall | Proposed method | 0.837 | 0.169 | 0.049 | 0.729 | 0.945 |
| | U-Net [16] | 0.643 | 0.022 | 0.006 | 0.628 | 0.656 |
| | SegNet [15] | 0.588 | 0.028 | 0.008 | 0.570 | 0.606 |
| | FCN [17] | 0.500 | 0.082 | 0.023 | 0.448 | 0.551 |
| | Deeplabv3+ [18] with MobileNet [19] | 0.682 | 0.277 | 0.080 | 0.506 | 0.858 |
| | Deeplabv3+ [18] with ResNet [20] | 0.440 | 0.261 | 0.075 | 0.274 | 0.606 |
| | Deeplabv3+ [18] with Xception [21] | 0.584 | 0.290 | 0.083 | 0.399 | 0.768 |
| F1-score | Proposed method | 0.844 | 0.127 | 0.036 | 0.763 | 0.925 |
| | U-Net [16] | 0.564 | 0.095 | 0.027 | 0.503 | 0.624 |
| | SegNet [15] | 0.562 | 0.124 | 0.036 | 0.383 | 0.581 |
| | FCN [17] | 0.510 | 0.078 | 0.022 | 0.400 | 0.531 |
| | Deeplabv3+ [18] with MobileNet [19] | 0.640 | 0.241 | 0.069 | 0.487 | 0.794 |
| | Deeplabv3+ [18] with ResNet [20] | 0.480 | 0.262 | 0.075 | 0.313 | 0.646 |
| | Deeplabv3+ [18] with Xception [21] | 0.621 | 0.259 | 0.047 | 0.456 | 0.786 |
| mIoU | Proposed method | 0.749 | 0.188 | 0.054 | 0.629 | 0.868 |
| | U-Net [16] | 0.473 | 0.114 | 0.331 | 0.400 | 0.546 |
| | SegNet [15] | 0.380 | 0.129 | 0.037 | 0.298 | 0.462 |
| | FCN [17] | 0.363 | 0.086 | 0.025 | 0.308 | 0.418 |
| | Deeplabv3+ [18] with MobileNet [19] | 0.504 | 0.229 | 0.066 | 0.358 | 0.650 |
| | Deeplabv3+ [18] with ResNet [20] | 0.344 | 0.213 | 0.031 | 0.208 | 0.480 |
| | Deeplabv3+ [18] with Xception [21] | 0.487 | 0.251 | 0.072 | 0.327 | 0.647 |
| | v3_DCNN-1280 * [22] | 0.685 | - | - | - | - |
| | Xception-65 * [23] | 0.645 | - | - | - | - |
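Under the usual definitions, the Std. Error and 95% C.I. columns of Table 1 follow from the mean and standard deviation. Assuming n = 12 slides per method (inferred here from the ratio of standard deviation to standard error, for example 0.188/0.054 ≈ 3.48 ≈ √12, and not stated in the table), the proposed method's mIoU row can be checked as follows; the small difference in the lower bound comes from rounding.

$$
\begin{aligned}
\mathrm{SE} &= \frac{s}{\sqrt{n}}, \qquad \mathrm{CI}_{95\%} = \bar{x} \pm t_{0.975,\,n-1}\,\mathrm{SE} \\
\bar{x} = 0.749,\; s = 0.188,\; n = 12 &: \quad \mathrm{SE} = \frac{0.188}{\sqrt{12}} \approx 0.054, \qquad t_{0.975,\,11} \approx 2.201 \\
\mathrm{CI}_{95\%} &\approx 0.749 \pm 2.201 \times 0.054 = 0.749 \pm 0.119 = [0.630,\ 0.868]
\end{aligned}
$$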

Figure 5.

Box plots of the quantitative evaluation results for metastasis segmentation, where outliers (points beyond the whiskers, defined relative to the interquartile range) are marked with a dot. The results of the LSD tests (p < 0.001) show that the proposed method significantly outperforms the baseline approaches.

Table 2.

Multiple comparisons for segmentation of metastases on H–E WSIs: LSD test.

| Dependent Variable | (I) Method | (J) Method | Mean Difference (I–J) | Std. Error | Sig. | 95% C.I. Lower Bound | 95% C.I. Upper Bound |
|---|---|---|---|---|---|---|---|
| Precision | Proposed method | U-Net [16] | 0.405 * | 0.088 | <0.001 | 0.311 | 0.499 |
| | | SegNet [15] | 0.344 * | 0.088 | <0.001 | 0.250 | 0.438 |
| | | FCN [17] | 0.339 * | 0.088 | <0.001 | 0.245 | 0.434 |
| | | Deeplabv3+ [18] with MobileNet [19] | 0.248 * | 0.088 | <0.001 | 0.163 | 0.516 |
| | | Deeplabv3+ [18] with ResNet [20] | 0.248 * | 0.088 | <0.001 | 0.072 | 0.424 |
| | | Deeplabv3+ [18] with Xception [21] | 0.278 * | 0.088 | <0.001 | 0.102 | 0.454 |
| Recall | Proposed method | U-Net [16] | 0.195 * | 0.079 | <0.001 | 0.116 | 0.273 |
| | | SegNet [15] | 0.249 * | 0.079 | <0.001 | 0.170 | 0.328 |
| | | FCN [17] | 0.337 * | 0.079 | <0.001 | 0.258 | 0.416 |
| | | Deeplabv3+ [18] with MobileNet [19] | 0.155 * | 0.079 | <0.001 | 0.350 | 0.313 |
| | | Deeplabv3+ [18] with ResNet [20] | 0.397 * | 0.079 | <0.001 | 0.239 | 0.556 |
| | | Deeplabv3+ [18] with Xception [21] | 0.253 * | 0.079 | <0.001 | 0.094 | 0.411 |
| F1-score | Proposed method | U-Net [16] | 0.280 * | 0.075 | <0.001 | 0.190 | 0.369 |
| | | SegNet [15] | 0.382 * | 0.075 | <0.001 | 0.292 | 0.471 |
| | | FCN [17] | 0.339 * | 0.075 | <0.001 | 0.304 | 0.482 |
| | | Deeplabv3+ [18] with MobileNet [19] | 0.203 * | 0.075 | <0.001 | 0.524 | 0.354 |
| | | Deeplabv3+ [18] with ResNet [20] | 0.364 * | 0.075 | <0.001 | 0.213 | 0.515 |
| | | Deeplabv3+ [18] with Xception [21] | 0.222 * | 0.075 | <0.001 | 0.234 | 0.235 |
| mIoU | Proposed method | U-Net [16] | 0.275 * | 0.055 | <0.001 | 0.165 | 0.386 |
| | | SegNet [15] | 0.369 * | 0.055 | <0.001 | 0.258 | 0.480 |
| | | FCN [17] | 0.385 * | 0.055 | <0.001 | 0.275 | 0.496 |
| | | Deeplabv3+ [18] with MobileNet [19] | 0.245 * | 0.074 | <0.001 | 0.096 | 0.393 |
| | | Deeplabv3+ [18] with ResNet [20] | 0.405 * | 0.074 | <0.001 | 0.256 | 0.553 |
| | | Deeplabv3+ [18] with Xception [21] | 0.261 * | 0.074 | <0.001 | 0.113 | 0.410 |

* The proposed method is significantly better than the baseline approaches using the LSD test (p < 0.001).
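Table 2 uses Fisher's least significant difference (LSD) test. As a hedged sketch of that procedure, not the authors' statistical software: after a one-way ANOVA over all methods, each pair is compared with a t statistic whose standard error uses the pooled within-group mean square error (MSE) and the ANOVA error degrees of freedom, and the 95% C.I. is the mean difference ± t_crit × SE. To stay within the Python standard library, the t distribution is evaluated here by Simpson's-rule integration and bisection, a swapped-in numerical method (`scipy.stats.t` would be the usual choice in practice). The function names and the toy data are hypothetical.

```python
import math
import statistics


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def t_cdf(t, df, steps=2000):
    """CDF by Simpson's rule on [0, |t|], using the symmetry of the density."""
    a = abs(t)
    h = a / steps  # steps must be even for Simpson's rule
    s = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        s += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = s * h / 3
    return 0.5 + area if t >= 0 else 0.5 - area


def t_ppf(p, df):
    """Quantile by bisection; assumes p >= 0.5 and a quantile below 100."""
    lo, hi = 0.0, 100.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def fisher_lsd(groups, i, j, alpha=0.05):
    """Fisher's LSD comparison of groups[i] against groups[j].

    Returns (mean difference, standard error, two-sided p-value, (lower, upper)).
    """
    means = [statistics.mean(g) for g in groups]
    df_err = sum(len(g) for g in groups) - len(groups)  # N - k
    sse = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    mse = sse / df_err  # pooled within-group variance from the ANOVA
    diff = means[i] - means[j]
    se = math.sqrt(mse * (1 / len(groups[i]) + 1 / len(groups[j])))
    p_value = 2 * (1 - t_cdf(abs(diff) / se, df_err))
    t_crit = t_ppf(1 - alpha / 2, df_err)
    return diff, se, p_value, (diff - t_crit * se, diff + t_crit * se)


# Hypothetical per-slide scores for three methods (not data from this study).
groups = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(fisher_lsd(groups, 1, 0))
```

Because every pairwise interval is centered on its mean difference with half-width t_crit × SE, the lower bound of a 95% C.I. computed this way is always smaller than the mean difference, and the upper bound larger.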

Figure 6.

Qualitative evaluation of the metastasis segmentation results by the proposed method and the baseline approaches for breast cancer segmentation in histopathological images.

Figure 7.

Comparison of the proposed method and the baseline approaches for the segmentation of tiny metastatic foci in challenging cases with isolated tumor cells. The proposed method effectively segments the tiny metastatic foci, whereas the baseline approaches tend to miss them.

4.3. Run Time Analysis

Because WSIs are massive, the computation time per WSI is critical for actual clinical use. We therefore analyzed the overall AI inference time for processing a WSI. Table 3 compares the hardware and computing efficiency of the proposed approach with those of eight deep learning approaches, namely U-Net, SegNet, FCN, three variants of Deeplabv3+ with MobileNet, ResNet, and Xception backbones, and two recently published models (v3_DCNN and Xception-65), and shows that the proposed method is notably faster than all of these baselines. For v3_DCNN and Xception-65, we used the run times reported by Guo et al. [22] and Priego-Torres et al. [23], respectively.

As shown in Table 3, the proposed method takes 2.4 min to analyze a WSI using four NVIDIA GeForce GTX 1080 Ti GPU cards and 9.6 min using a single card. By comparison, U-Net takes 44 min, SegNet 43 min, FCN 48 min, and Deeplabv3+ with the MobileNet, ResNet, and Xception backbones 17.2, 18.2, and 17.8 min, respectively. The patch-based Xception-65 model requires 398.2 min, estimated by multiplying the reported time of 0.23 s per patch by the total number of patches in a WSI, and the best v3_DCNN model takes 13.8 min, approximated by multiplying the reported time per pixel by the total number of pixels. For the same slide dimensions and running on a single GPU, the proposed method is about 4 times faster than U-Net and SegNet, 5 times faster than FCN, 2 times faster than the three Deeplabv3+ variants, 1.4 times faster than v3_DCNN, and 41 times faster than Xception-65, even though Xception-65 ran on more expensive hardware (four Tesla V100 GPUs). Altogether, these results show that the proposed method can reliably detect breast cancer metastases in H–E WSIs and process a WSI in 2.4 min, which is fast enough for actual clinical use.
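The speedup factors quoted in this section are consistent with dividing each baseline's time in Table 3 by the single-GPU time of 9.6 min (for example, 48 / 9.6 = 5.0 for FCN), with the text reporting rounded values. The short sketch below recomputes them under that assumption; the dictionary simply transcribes Table 3, and `speedup` is a hypothetical helper.

```python
# Inference time per WSI in minutes, transcribed from Table 3.
BASELINE_MINUTES = {
    "U-Net": 44.0,
    "SegNet": 43.0,
    "FCN": 48.0,
    "Deeplabv3+ with MobileNet": 17.2,
    "Deeplabv3+ with ResNet": 18.2,
    "Deeplabv3+ with Xception": 17.8,
    "v3_DCNN-1280": 13.8,
    "Xception-65 (estimated)": 398.2,
}
PROPOSED_1_GPU = 9.6
PROPOSED_4_GPUS = 2.4


def speedup(baseline_minutes, proposed_minutes=PROPOSED_1_GPU):
    """How many times faster the proposed method is than a baseline."""
    return baseline_minutes / proposed_minutes


for name, minutes in BASELINE_MINUTES.items():
    print(f"{name:27s} {speedup(minutes):5.1f}x")

# One GPU versus four GPUs for the proposed method.
print(f"multi-GPU reduction: {PROPOSED_1_GPU / PROPOSED_4_GPUS:.1f}x")
```

The 9.6 / 2.4 = 4.0 reduction matches the fourfold increase in GPUs, although Table 3 shows that the two configurations also differ in CPU and RAM.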

Table 3.

Comparison of hardware and computing efficiency.

| Method | CPU | RAM | GPU | Inference Time per WSI (min) |
|---|---|---|---|---|
| Proposed Method (with 4 GPUs) | Intel Xeon Gold 6134 CPU @ 3.20 GHz × 16 | 128 GB | 4 × GeForce GTX 1080 Ti | 2.4 |
| Proposed Method (with 1 GPU) | Intel Xeon CPU E5-2650 v2 @ 2.60 GHz × 16 | 32 GB | 1 × GeForce GTX 1080 Ti | 9.6 |
| U-Net [16] | Intel Xeon CPU E5-2650 v2 @ 2.60 GHz × 16 | 32 GB | 1 × GeForce GTX 1080 Ti | 44 |
| SegNet [15] | Intel Xeon CPU E5-2650 v2 @ 2.60 GHz × 16 | 32 GB | 1 × GeForce GTX 1080 Ti | 43 |
| FCN [17] | Intel Xeon CPU E5-2650 v2 @ 2.60 GHz × 16 | 32 GB | 1 × GeForce GTX 1080 Ti | 48 |
| Deeplabv3+ [18] with MobileNet [19] | Intel Xeon CPU E5-2650 v2 @ 2.60 GHz × 16 | 32 GB | 1 × GeForce GTX 1080 Ti | 17.2 |
| Deeplabv3+ [18] with ResNet [20] | Intel Xeon CPU E5-2650 v2 @ 2.60 GHz × 16 | 32 GB | 1 × GeForce GTX 1080 Ti | 18.2 |
| Deeplabv3+ [18] with Xception [21] | Intel Xeon CPU E5-2650 v2 @ 2.60 GHz × 16 | 32 GB | 1 × GeForce GTX 1080 Ti | 17.8 |
| v3_DCNN-1280 [22] | - | - | 1 × GeForce GTX 1080 Ti | 13.8 |
| Xception-65 [23] | Intel Xeon CPU E5-2698 v4 @ 2.2 GHz | 256 GB | 4 × Tesla V100 Tensor Core | 398.2 * |

* Estimated by multiplying the reported time of 0.23 s per patch by the total number of patches in a WSI.
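The asterisked Xception-65 entry in Table 3 is an estimate: the reported per-patch time of 0.23 s multiplied by the number of patches in a WSI. The sketch below shows that arithmetic and its inverse (398.2 min at 0.23 s per patch implies roughly 1.04 × 10^5 patches). The helper names are hypothetical, and the 512-pixel non-overlapping tiling applied to the 113,501 × 228,816 pixel slide mentioned in the Discussion is an illustrative assumption, not the patch size or stride used in [23].

```python
import math


def tile_grid_count(width, height, tile, stride=None):
    """Number of tile positions covering a width x height slide.

    Tiles start every `stride` pixels (default: non-overlapping), and the
    last row/column may extend past the slide border.
    """
    stride = stride or tile
    cols = math.ceil(max(width - tile, 0) / stride) + 1
    rows = math.ceil(max(height - tile, 0) / stride) + 1
    return cols * rows


def estimated_minutes(n_patches, seconds_per_patch):
    """Per-WSI time estimated as (number of patches) x (time per patch)."""
    return n_patches * seconds_per_patch / 60


def implied_patches(total_minutes, seconds_per_patch):
    """Inverse of estimated_minutes: patches implied by a total time."""
    return total_minutes * 60 / seconds_per_patch


print(round(implied_patches(398.2, 0.23)))       # patches implied by Table 3
n = tile_grid_count(113_501, 228_816, tile=512)  # hypothetical 512-px tiling
print(n, round(estimated_minutes(n, 0.23), 1))
```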

5. Discussion and Significance of the Work

5.1. Discussion

Histopathological H–E analysis of tissue biopsies plays a key role in diagnosing cancer and planning treatment [28]. Manual pathological diagnosis is an extremely challenging, laborious, and time-consuming task. As cancer incidence increases, the number of pathologists cannot keep up with the growing demand for diagnosis. In histopathological diagnosis, pathologists must comprehensively evaluate all of the information on a large number of biopsy slides every day. More significantly, the risk of misdiagnosis is considerable in difficult cases such as ITCs and micro-metastases. Digital pathology combined with artificial intelligence for computer-aided diagnosis (CAD) is therefore a promising solution to this problem, because it can help make diagnoses more accurate, shorten examination times, and reduce both pathologists’ efforts and examination costs.

Before the CAMELYON16 challenge, few studies had applied deep learning to gigapixel WSIs; most solutions analyzed pre-selected 500 × 500 pixel regions hand-picked by experienced pathologists. Computational cost is the key hurdle in applying computational approaches to the diagnosis of gigapixel WSIs, which is why many existing algorithms are not well suited to clinical applications: a complete, thorough, and highly accurate automated inspection of a WSI may require considerable additional time and computing resources. In this paper, we describe a fast and efficient approach for segmenting small metastases in WSIs that not only achieves state-of-the-art performance but also overcomes this primary computational cost constraint. The proposed approach can complete the analysis of a large WSI of 113,501 × 228,816 pixels in 2.4 min using four NVIDIA GeForce GTX 1080 Ti GPU cards, and in 9.6 min using a single card. More importantly, the proposed method is capable of recognizing ITCs (the smallest kind of metastasis), which even professional pathologists can easily misinterpret because of their small size (see Figure 7). The experimental results show that the proposed method achieves 89.6% precision, 83.8% recall, 84.4% F1-score, and 74.9% mIoU. Furthermore, the LSD test shows that the proposed method significantly outperforms U-Net, SegNet, FCN, and three variants of Deeplabv3+ (p < 0.001), and the proposed method also outperforms two recently published models, v3_DCNN and Xception-65.
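To put the quoted run times in perspective, the slide size and processing times stated in this paragraph imply the following average throughput. This is simple arithmetic that counts every pixel of the slide, including background; `throughput_mpx_per_s` is a hypothetical helper.

```python
def throughput_mpx_per_s(width, height, minutes):
    """Average number of slide pixels processed per second, in megapixels."""
    return width * height / (minutes * 60) / 1e6


WIDTH, HEIGHT = 113_501, 228_816  # WSI size quoted in the Discussion
print(f"slide size: {WIDTH * HEIGHT / 1e9:.1f} gigapixels")
for setup, minutes in [("4 x GTX 1080 Ti", 2.4), ("1 x GTX 1080 Ti", 9.6)]:
    rate = throughput_mpx_per_s(WIDTH, HEIGHT, minutes)
    print(f"{setup}: {rate:.1f} megapixels/s")
```

That is, a slide of roughly 26 gigapixels is processed at about 180 megapixels per second on four GPUs and about 45 megapixels per second on one.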

5.2. Significance of the Work

The quantitative and qualitative results show that the proposed method could be a highly valuable tool for assisting pathologists in segmenting metastases in lymph node WSIs of breast cancer patients. Such segmentations could be critical for delivering suitable, personalized targeted therapy to breast cancer patients, broadening the scope and effectiveness of precision medicine, which aims to build a multiplex strategy of patient-specific therapy. More importantly, the proposed method is capable of detecting ITCs (the smallest kind of metastasis), which could serve as a viable marker for determining the prognosis of T1N0M0 breast cancer patients. Furthermore, the run-time analysis shows that the proposed method also overcomes the major speed bottleneck in applying computational approaches to the diagnosis of gigapixel WSIs.

6. Conclusions and Future Directions

In this paper, we present a deep learning-based system for the automated segmentation of breast cancer metastases in routinely used histopathological H–E WSIs. We evaluated the proposed framework using 188 WSIs, comprising 94 H–E-stained and 94 IHC CK(AE1/AE3)-stained WSIs, which were used to create a reliable and objective reference standard. The quantitative results demonstrate that the proposed method achieves 89.6% precision, 83.8% recall, 84.4% F1-score, and 74.9% mIoU for the segmentation of breast cancer in histopathological images, and that it outperforms eight baseline approaches: U-Net, SegNet, FCN, three variants of Deeplabv3+ with different backbones, and two recently published methods (v3_DCNN and Xception-65). The improvements over U-Net, SegNet, FCN, and the three Deeplabv3+ variants are statistically significant (LSD test, p < 0.001). Furthermore, the proposed method can identify tiny metastatic foci that have a high probability of being misdiagnosed on visual inspection, whereas the baseline approaches tend to miss these foci in cases with micro-metastases or ITCs. The run-time analysis shows that the proposed framework can segment lymph node metastases in a short processing time using a low-cost GPU. With high segmentation accuracy and low computational time, the proposed architecture could help pathologists diagnose and grade tumors more effectively by increasing diagnostic accuracy, reducing their workload, and speeding up the diagnostic process. In the future, we anticipate that the system will be used in clinical practice to assist pathologists with the parts of their assessments that are well suited to automatic analysis, and that the approach will be extended to other types of cancer. Another direction for future work is weakly supervised deep learning for WSI analysis, which can be trained with a limited amount of labeled data.

Author Contributions

C.-W.W. conceived the idea of this work. C.-W.W. designed the methodology and the software of this work. M.-A.K. and Y.-C.L. carried out the validation of the methodology of this work. M.-A.K. performed the formal analysis of this work. Y.-C.L. performed the investigation. H.-C.L. and Y.-M.J. participated in annotation or curation of the dataset. C.-W.W. and M.-A.K. prepared and wrote the manuscript. C.-W.W. reviewed and revised the manuscript. M.-A.K. prepared the visualization of the manuscript. C.-W.W. supervised this work. C.-W.W. administered this work and also acquired funding for this work. All authors have read and agreed to the published version of the manuscript.

Funding

This study is supported by the Ministry of Science and Technology of Taiwan, under grants (MOST 108-2221-E-011-070 and 109-2221-E-011-018-MY3).

Institutional Review Board Statement

The dataset used in this study, which contains 188 H–E- and IHC CK(AE1/AE3)-stained lymph node slides, was obtained from the National Taiwan University Hospital with ethical approval (NTUH-REC 201810082RINB) from Research Ethics Committee B of the National Taiwan University Hospital on 8 March 2019. Of the 188 WSIs, 94 are H–E-stained and the other 94 are IHC CK(AE1/AE3)-stained.

Informed Consent Statement

Patient consent was formally waived by the approving review board, and the data were de-identified and used for a retrospective study without impacting patient care.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Anderson B.O., Cazap E., El Saghir N.S., Yip C.H., Khaled H.M., Otero I.V., Adebamowo C.A., Badwe R.A., Harford J.B. Optimisation of breast cancer management in low-resource and middle-resource countries: Executive summary of the Breast Health Global Initiative consensus, 2010. Lancet Oncol. 2011;12:387–398. doi: 10.1016/S1470-2045(11)70031-6.

- 2. Siegel R.L., Miller K.D., Jemal A. Cancer statistics, 2020. CA Cancer J. Clin. 2020;70:7–30. doi: 10.3322/caac.21590.

- 3. Bandi P., Geessink O., Manson Q., van Dijk M., Balkenhol M., Hermsen M., Bejnordi B.E., Lee B., Paeng K., Zhong A., et al. From detection of individual metastases to classification of lymph node status at the patient level: The CAMELYON17 challenge. IEEE Trans. Med. Imaging. 2018;38:550–560. doi: 10.1109/TMI.2018.2867350.

- 4. Amin M.B., Greene F.L., Edge S.B., Compton C.C., Gershenwald J.E., Brookland R.K., Meyer L., Gress D.M., Byrd D.R., Winchester D.P. The eighth edition AJCC cancer staging manual: Continuing to build a bridge from a population-based to a more “personalized” approach to cancer staging. CA Cancer J. Clin. 2017;67:93–99. doi: 10.3322/caac.21388.

- 5. Edge S.B., Compton C.C. The American Joint Committee on Cancer: The 7th edition of the AJCC cancer staging manual and the future of TNM. Ann. Surg. Oncol. 2010;17:1471–1474. doi: 10.1245/s10434-010-0985-4.

- 6. Apple S.K. Sentinel lymph node in breast cancer: Review article from a pathologist’s point of view. J. Pathol. Transl. Med. 2016;50:83. doi: 10.4132/jptm.2015.11.23.

- 7. Lee H.S., Kim M.A., Yang H.K., Lee B.L., Kim W.H. Prognostic implication of isolated tumor cells and micrometastases in regional lymph nodes of gastric cancer. World J. Gastroenterol. WJG. 2005;11:5920. doi: 10.3748/wjg.v11.i38.5920.

- 8. Qaiser T., Tsang Y.-W., Taniyama D., Sakamoto N., Nakane K., Epstein D., Rajpoot N. Fast and accurate tumor segmentation of histology images using persistent homology and deep convolutional features. Med. Image Anal. 2019;55:1–14. doi: 10.1016/j.media.2019.03.014.

- 9. Dihge L., Vallon-Christersson J., Hegardt C., Saal L.H., Hakkinen J., Larsson C., Ehinger A., Loman N., Malmberg M., Bendahl P.-O., et al. Prediction of Lymph Node Metastasis in Breast Cancer by Gene Expression and Clinicopathological Models: Development and Validation within a Population-Based Cohort. Clin. Cancer Res. 2019;25:6368–6381. doi: 10.1158/1078-0432.CCR-19-0075.

- 10. Shinden Y., Ueo H., Tobo T., Gamachi A., Utou M., Komatsu H., Nambara S., Saito T., Ueda M., Hirata H., et al. Rapid diagnosis of lymph node metastasis in breast cancer using a new fluorescent method with γ-glutamyl hydroxymethyl rhodamine green. Sci. Rep. 2016;6:1–7. doi: 10.1038/srep27525.

- 11. Bengio Y., Courville A., Vincent P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013;35:1798–1828. doi: 10.1109/TPAMI.2013.50.

- 12. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 13. Yu K.H., Zhang C., Berry G.J., Altman R.B., Ré C., Rubin D.L., Snyder M. Predicting non-small cell lung cancer prognosis by fully automated microscopic pathology image features. Nat. Commun. 2016;7:12474. doi: 10.1038/ncomms12474.

- 14. Coudray N., Ocampo P.S., Sakellaropoulos T., Narula N., Snuderl M., Fenyö D., Moreira A.L., Razavian N., Tsirigos A. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. Nat. Med. 2018;24:1559–1567. doi: 10.1038/s41591-018-0177-5.

- 15. Badrinarayanan V., Kendall A., Cipolla R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39:2481–2495. doi: 10.1109/TPAMI.2016.2644615.

- 16. Falk T., Mai D., Bensch R., Çiçek Ö., Abdulkadir A., Marrakchi Y., Böhm A., Deubner J., Jäckel Z., Seiwald K., et al. U-Net: Deep learning for cell counting, detection, and morphometry. Nat. Methods. 2019;16:67–70. doi: 10.1038/s41592-018-0261-2.

- 17. Shelhamer E., Long J., Darrell T. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39:640–651. doi: 10.1109/TPAMI.2016.2572683.

- 18. Chen L.C., Zhu Y., Papandreou G., Schroff F., Adam H. Encoder-decoder with atrous separable convolution for semantic image segmentation; Proceedings of the European Conference on Computer Vision (ECCV); Munich, Germany. 8–14 September 2018; pp. 801–818.

- 19. Howard A.G., Zhu M., Chen B., Kalenichenko D., Wang W., Weyand T., Andreetto M., Adam H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv. 2017:1704.04861.

- 20. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 21. Chollet F. Xception: Deep learning with depthwise separable convolutions; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 1251–1258.

- 22. Guo Z., Liu H., Ni H., Wang X., Su M., Guo W., Wang K., Jiang T., Qian Y. A fast and refined cancer regions segmentation framework in whole-slide breast pathological images. Sci. Rep. 2019;9:1–10. doi: 10.1038/s41598-018-37492-9.

- 23. Priego-Torres B.M., Sanchez-Morillo D., Fernandez-Granero M.A., Garcia-Rojo M. Automatic segmentation of whole-slide H&E stained breast histopathology images using a deep convolutional neural network architecture. Expert Syst. Appl. 2020;151:113387.

- 24. Bejnordi B.E., Balkenhol M., Litjens G., Holland R., Bult P., Karssemeijer N., van der Laak J.A.W.M. Automated detection of DCIS in whole-slide H&E stained breast histopathology images. IEEE Trans. Med. Imaging. 2016;35:2141–2150. doi: 10.1109/TMI.2016.2550620.

- 25. Huang W.C., Chung P.-C., Tsai H.-W., Chow N.-H., Juang Y.-Z., Tsai H.-H., Lin S.-H., Wang C.-H. Automatic HCC detection using convolutional network with multi-magnification input images; Proceedings of the 2019 IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS); Hsinchu, Taiwan. 18–20 March 2019; pp. 194–198.

- 26. Celik Y., Talo M., Yildirim O., Karabatak M., Acharya U.R. Automated invasive ductal carcinoma detection based using deep transfer learning with whole-slide images. Pattern Recognit. Lett. 2020;133:232–239. doi: 10.1016/j.patrec.2020.03.011.

- 27. Gecer B., Aksoy S., Mercan E., Shapiro L.G., Weaver D.L., Elmore J.G. Detection and classification of cancer in whole slide breast histopathology images using deep convolutional networks. Pattern Recognit. 2018;84:345–356. doi: 10.1016/j.patcog.2018.07.022.

- 28. Lin H., Chen H., Graham S., Dou Q., Rajpoot N., Heng P.A. Fast ScanNet: Fast and dense analysis of multi-gigapixel whole-slide images for cancer metastasis detection. IEEE Trans. Med. Imaging. 2019;38:1948–1958. doi: 10.1109/TMI.2019.2891305.

- 29. Bejnordi B.E., Veta M., van Diest P.L., van Ginneken B., Karssemeijer N., Litjens G., van der Laak J.A.W.M. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA. 2017;318:2199–2210. doi: 10.1001/jama.2017.14585.

- 30. Litjens G., Bandi P., Bejnordi B.E., Geessink O., Balkenhol M., Bult P., Halilovic A., Hermsen M., van de Loo R., Vogels R., et al. 1399 H&E-stained sentinel lymph node sections of breast cancer patients: The CAMELYON dataset. GigaScience. 2018;7:giy065. doi: 10.1093/gigascience/giy065.

- 31. Wang D., Khosla A., Gargeya R., Irshad H., Beck A.H. Deep learning for identifying metastatic breast cancer. arXiv. 2016:1606.05718.

- 32. Alzubaidi L., Al-Amidie M., Al-Asadi A., Humaidi A.J., Al-Shamma O., Fadhel M.A., Zhang J., Santamaría J., Duan Y. Novel transfer learning approach for medical imaging with limited labeled data. Cancers. 2021;13:1590. doi: 10.3390/cancers13071590.

- 33. Becker C., Christoudias C.M., Fua P. Domain adaptation for microscopy imaging. IEEE Trans. Med. Imaging. 2014;34:1125–1139. doi: 10.1109/TMI.2014.2376872.

- 34. Bermúdez-Chacón R., Becker C., Salzmann M., Fua P. Scalable unsupervised domain adaptation for electron microscopy; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Athens, Greece. 17–21 October 2016; Cham, Switzerland: Springer; 2016. pp. 326–334.

- 35. Spanhol F.A., Oliveira L.S., Cavalin P.R., Petitjean C., Heutte L. Deep features for breast cancer histopathological image classification; Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC); Banff, AB, Canada. 5–8 October 2017; Piscataway, NJ, USA: IEEE; 2017. pp. 1868–1873.

- 36. Li Z., Zhang J., Tan T., Teng X., Sun X., Zhao H., Liu L., Xiao Y., Lee B., Li Y., et al. Deep Learning Methods for Lung Cancer Segmentation in Whole-slide Histopathology Images-the ACDC@LungHP Challenge 2019. IEEE J. Biomed. Health Inform. 2020;25:429–440. doi: 10.1109/JBHI.2020.3039741.

- 37. Signaevsky M., Prastawa M., Farrell K., Tabish N., Baldwin E., Han N., Iida M.A., Koll J., Bryce C., Purohit D., et al. Artificial intelligence in neuropathology: Deep learning-based assessment of tauopathy. Lab. Investig. 2019;99:1019. doi: 10.1038/s41374-019-0202-4.

- 38. Zhu R., Sui D., Qin H., Hao A. An extended type cell detection and counting method based on FCN; Proceedings of the 2017 IEEE 17th International Conference on Bioinformatics and Bioengineering (BIBE); Washington, DC, USA. 23–25 October 2017; Piscataway, NJ, USA: IEEE; 2017. pp. 51–56.

- 39. Naylor P., Laé M., Reyal F., Walter T. Segmentation of nuclei in histopathology images by deep regression of the distance map. IEEE Trans. Med. Imaging. 2018;38:448–459. doi: 10.1109/TMI.2018.2865709.

- 40. Gupta D., Jhunjhunu wala R., Juston M., Jaled M.C. Image Segmentation Keras: Implementation of Segnet, FCN, UNet, PSPNet and Other Models in Keras. (accessed on 15 October 2020). Available online: https://github.com/divamgupta/image-segmentation-keras.
