Abstract

Introduction:

Patient outcome prediction models are underutilized in clinical practice due to a lack of integration with real-time patient data. The electronic health record (EHR) can apply machine learning (ML) to generate predictive models. Although an EHR-based ML model has been developed to predict clinical deterioration, it has yet to be validated for use in trauma. We hypothesized that the Epic Deterioration Index (EDI) would predict mortality and unplanned ICU admission in trauma patients.

Methods:

We performed a retrospective analysis of a trauma registry to identify patients admitted to a Level 1 trauma center for more than 24 hours from October 2019 to July 2020. We evaluated the performance of the EDI, which is constructed from more than 125 objective patient measures within the EHR, in predicting mortality and unplanned ICU admission. Because age is a major component of the EDI, we also performed a 5-to-1 age-matched analysis. We examined the area under the receiver operating characteristic curve (AUC) and benchmarked it against the Injury Severity Score (ISS) and New Injury Severity Score (NISS).

Results:

The study cohort consisted of 1325 patients with a mean age of 52.5 years; 91% were admitted after blunt injury. The in-hospital mortality rate was 2% and the unplanned ICU admission rate was 2.6%. In predicting mortality, the max EDI within 24 hours of admission had an AUC of 0.98, compared with 0.89 for ISS and 0.91 for NISS. For unplanned ICU admission, the EDI slope over the 24-hour interval ending 4 hours before ICU admission had modest performance, with an AUC of 0.66.

Conclusion:

The EDI appears to perform strongly in predicting in-hospital mortality, comparable to or better than ISS and NISS. In addition, it can predict unplanned ICU admission, albeit with modest performance. This study helps validate the use of this real-time, EHR-integrated ML-based tool and suggests that the EDI should be incorporated into the daily care of trauma patients.

Level of Evidence:

Retrospective cohort analysis, IV

Keywords: Machine Learning, Electronic Health Record, Trauma, Mortality, Unplanned ICU Admission

Introduction

Many published models predict mortality and unplanned intensive care unit (ICU) admission for trauma patients, with similar performance [1]. However, many are not used in day-to-day clinical practice, in part because they are separate from the electronic health record (EHR) [2]. Many models require entering multiple data points into a third-party web application, while others can only be used retrospectively [3–8]. Both are significant barriers to evaluating risk factors for clinical deterioration in day-to-day clinical practice [2]. A recent EHR-integrated prediction model, the Rothman Index, has been validated for predicting readmission and ICU mortality in surgical patients, but it is not widely implemented and has not been validated in a trauma-specific population [9–11].

Recently, Epic Systems (Verona, WI) developed a novel machine learning (ML) model embedded within the EHR, the Epic Deterioration Index (EDI), which has been widely implemented across the country [12]. This model incorporates a large number of physiological parameters in real time, as well as nursing assessments such as the Glasgow Coma Scale (GCS), to generate a composite deterioration risk score. It has been promoted as a clinical decision support tool that can help hospitals effectively triage patients at risk for clinical deterioration [12, 13]. However, despite its wide adoption in hospitals using the Epic EHR, external validation of this model has been sparse [14]. Only one single-center validation of the EDI has been published to date; its authors concluded that the EDI had moderate performance in predicting ICU admission and mortality for patients with COVID-19 [15]. There are no external validation studies of the EDI in trauma patients.

Given that the EDI shares many parameters used by other trauma prediction models, we hypothesized that EDI could predict mortality and unplanned ICU admission in trauma patients with equal or better performance than existing prediction models. Validation of the EDI in the trauma population could pave the way for incorporating real-time EHR-integrated prediction models in trauma centers across the country.

Methods

Study Cohort

All adult patients (18 years or older) admitted to a single Level 1 trauma center between October 2019 and July 2020 with a hospital length of stay greater than 24 hours were identified in the trauma registry and included in this retrospective analysis. Patients with no EDI values prior to discharge were excluded. The Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD) guidelines were followed for standard reporting of this study. The completed checklist is available as Supplemental Digital Content (SDC) 1. This study was approved by the Institutional Review Board of the University of California San Diego Human Research Protection Program, and a waiver of informed consent was granted.

Study Variables

Patient demographics, EDI scores, trauma mechanism, admission and ICU transfer times, level of care on admission, injury severity score (ISS), and unplanned ICU admission status were collected. All patient variables and outcomes were collected by trained trauma registry abstractors who were not part of this study.

The EDI is a proprietary machine learning model originally developed to predict the risk of in-hospital deterioration and mortality. It was developed using data from over 100,000 patient encounters at 3 healthcare organizations from 2012 to 2016 [14]. Targeted outcomes for the model included mortality and escalation of care. The final model takes over 125 patient variables in the EHR and generates a composite risk score from 0 to 100, with 100 representing the highest risk of deterioration or mortality in the next 24 hours. The score is calculated every 20 minutes, starting at admission. The implemented EDI is integrated directly into the transactional, user-facing database layer of the EHR. As such, it has direct access to all patient data points in real time with minimal delay, which allows it to be computed from the most recently entered data and updated every 20 minutes or less, depending on the health system's technical infrastructure. Results are immediately available to clinical teams and are displayed prominently in each patient's chart, along with the EDI trend over the previous hours. Data extracted for calculation of the EDI include, but are not limited to, age, systolic blood pressure, heart rate, respiratory rate, oxygen saturation, oxygen requirement, cardiac rhythm, blood pH, sodium, potassium, blood urea nitrogen, white blood cell count, hematocrit, platelet count, and neurologic assessments including the Glasgow Coma Scale. The full list of model variables, as well as the cohort selection, hyperparameters, and internal model validation, is proprietary and has not been previously published.

Statistical Analysis

We evaluated the performance of the EDI by calculating the area under the receiver operating characteristic curve (AUC). Because the EDI is measured continuously over time, our analysis of mortality prediction used the maximum EDI (max EDI) within 6, 12, 18, and 24 hours of admission, as well as the EDI slope over the first 24 hours. This approach is based on prior work with the EDI in COVID-19 patients, which demonstrated that the max EDI over a period of time had the best predictive performance [15]. Furthermore, the max EDI has the added benefit of condensing the scores into a single number that can be used clinically with little additional interpretation or calculation.
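As a minimal sketch of how the windowed max-EDI features described above could be derived, the Python below assumes each patient's EDI history is available as (hours since admission, score) pairs and that a window includes readings from admission through the window end, inclusive. The data layout and the function name `max_edi_within` are hypothetical; they are not part of Epic's product or the authors' code.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical layout: each reading is (hours_since_admission, edi_score).
Reading = Tuple[float, float]


def max_edi_within(readings: Sequence[Reading], window_h: float) -> Optional[float]:
    """Return the highest EDI recorded from admission (t = 0) through window_h hours.

    Returns None when no reading falls inside the window, so patients without
    early EDI values can be handled explicitly rather than silently scored.
    """
    in_window = [score for t, score in readings if 0.0 <= t <= window_h]
    return max(in_window) if in_window else None


# Illustrative (made-up) readings; real EDI values refresh about every 20 minutes.
readings = [(0.3, 42.0), (4.0, 55.0), (9.5, 61.0), (16.0, 58.0), (23.0, 83.0), (30.0, 90.0)]
features = {h: max_edi_within(readings, h) for h in (6, 12, 18, 24)}
print(features)  # {6: 55.0, 12: 61.0, 18: 61.0, 24: 83.0}
```

Because the max is non-decreasing as the window lengthens, longer windows can only capture the same or a higher peak, which is one reason the 24-hour max may carry more signal than the 6-hour max.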

For the unplanned ICU admission analysis, we measured the EDI change over time (the slope of the linear regression line) and the max EDI in a 24-hour interval ending 4 hours before the unplanned ICU admission. We adopted this approach because a deterioration prediction tool is most useful when it gives clinicians enough lead time to act on its findings. For patients without an unplanned ICU admission, the max EDI and EDI slope over the course of the hospitalization were used. EDI values recorded during any ICU stay were excluded, because scores in the ICU are generally higher, reflecting patients' overall illness severity.
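The windowed slope described above can be sketched as an ordinary least-squares fit of EDI against time, restricted to the 24-hour window that ends 4 hours before ICU admission. This is a hedged illustration under assumed inputs (the same hypothetical (hours, score) layout); the helper names `edi_slope` and `pre_icu_window` are illustrative, and the exclusion of ICU-time readings and the whole-hospitalization window for non-ICU patients are not implemented here. The final line rescales the per-hour slope to a change per 4 hours, the unit used in the threshold analysis reported in the Results.

```python
from typing import Optional, Sequence, Tuple

Reading = Tuple[float, float]  # (hours_since_admission, edi_score); hypothetical layout


def edi_slope(readings: Sequence[Reading], start_h: float, end_h: float) -> Optional[float]:
    """Ordinary least-squares slope of EDI vs. time (EDI points per hour).

    Only readings with start_h <= t <= end_h are used. Returns None when fewer
    than two distinct time points are available, since no line can be fit.
    """
    pts = [(t, s) for t, s in readings if start_h <= t <= end_h]
    if len(pts) < 2:
        return None
    n = len(pts)
    mean_t = sum(t for t, _ in pts) / n
    mean_s = sum(s for _, s in pts) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in pts)
    if sxx == 0:
        return None
    sxy = sum((t - mean_t) * (s - mean_s) for t, s in pts)
    return sxy / sxx


def pre_icu_window(icu_admit_h: float, window_h: float = 24.0, lead_h: float = 4.0) -> Tuple[float, float]:
    """Window of length window_h that ends lead_h hours before ICU admission."""
    end = icu_admit_h - lead_h
    return end - window_h, end


# Made-up patient whose EDI rises 0.5 points/hour; unplanned ICU transfer at hour 40.
readings = [(float(t), 40.0 + 0.5 * t) for t in range(0, 41, 2)]
start, end = pre_icu_window(40.0)
slope_per_h = edi_slope(readings, start, end)
slope_per_4h = 4 * slope_per_h  # rescaled to EDI change per 4 hours
print(start, end, slope_per_h, slope_per_4h)  # 12.0 36.0 0.5 2.0
```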

We performed a 5-to-1 age-matched analysis for both the unplanned ICU admission and mortality analyses, because age is a major contributor to the EDI calculation. We also benchmarked the EDI against the ISS and New ISS (NISS), which are validated predictors of mortality in trauma patients [16–18].
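The matching procedure itself (calipers, replacement, case ordering, software) is not specified above, so the following is only a sketch of one common option: greedy nearest-age matching of each case to five controls without replacement. The function `match_on_age`, the youngest-first case ordering, and the tie-breaking by control ID are all assumptions made for illustration.

```python
from typing import Dict, List


def match_on_age(cases: Dict[str, float], controls: Dict[str, float], ratio: int = 5) -> Dict[str, List[str]]:
    """Greedy nearest-age matching of each case to `ratio` controls, without replacement.

    Cases are processed from youngest to oldest; ties in age distance are broken
    by control ID so the result is deterministic. This is an assumed procedure.
    """
    available = dict(controls)  # copy so the caller's dict is not modified
    matches: Dict[str, List[str]] = {}
    for case_id, case_age in sorted(cases.items(), key=lambda kv: kv[1]):
        ranked = sorted(available, key=lambda cid: (abs(available[cid] - case_age), cid))
        chosen = ranked[:ratio]
        for cid in chosen:
            del available[cid]  # each control is used at most once
        matches[case_id] = chosen
    return matches


# Made-up ages: two cases (e.g., deaths) and twelve potential controls.
cases = {"A": 70.0, "B": 30.0}
controls = {"c1": 68.0, "c2": 71.0, "c3": 72.0, "c4": 69.0, "c5": 75.0, "c6": 65.0,
            "c7": 29.0, "c8": 31.0, "c9": 33.0, "c10": 28.0, "c11": 35.0, "c12": 50.0}
matches = match_on_age(cases, controls)
print(matches)  # {'B': ['c7', 'c8', 'c10', 'c9', 'c11'], 'A': ['c2', 'c4', 'c1', 'c3', 'c5']}
```

Greedy matching is simple but order-dependent; an optimal-matching approach would minimize total age distance across all cases at once.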

All analyses were conducted in R v4.0.5. Performance-threshold curves and ROC curves, with AUC 95% confidence intervals (CI) calculated from 1000 bootstrap replicates, were generated using code modified from Singh et al., which is publicly available at https://github.com/ml4lhs/edi_validation [15].
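The analysis above was run in R with code adapted from Singh et al.; that code is not reproduced here. As an independent, hedged illustration of the general technique in Python, the sketch below computes a rank-based (Mann-Whitney) AUC and a percentile bootstrap CI from 1000 resamples of patients. The percentile method, the skipping of single-class resamples, and the function names `auc` and `bootstrap_auc_ci` are assumptions, not details confirmed by the study.

```python
import random
from typing import List, Sequence, Tuple


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Rank-based (Mann-Whitney) AUC; tied scores receive their average rank."""
    pairs = sorted(zip(scores, labels), key=lambda p: p[0])
    n = len(pairs)
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pairs[j + 1][0] == pairs[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank for the tie block
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    n_pos = sum(1 for _, y in pairs if y == 1)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC needs at least one positive and one negative case")
    pos_rank_sum = sum(r for r, (_, y) in zip(ranks, pairs) if y == 1)
    return (pos_rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def bootstrap_auc_ci(scores: Sequence[float], labels: Sequence[int], n_boot: int = 1000,
                     alpha: float = 0.05, seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap CI: resample patients with replacement n_boot times."""
    rng = random.Random(seed)
    n = len(scores)
    stats: List[float] = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if 0 < sum(ys) < n:  # skip resamples that contain only one outcome class
            stats.append(auc([scores[i] for i in idx], ys))
    stats.sort()
    lower = stats[int(n_boot * alpha / 2)]
    upper = stats[int(n_boot * (1 - alpha / 2)) - 1]
    return lower, upper


# Made-up example: outcome 1 = death, scores = max EDI.
print(auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```

With a rare outcome (2% mortality), many patients contribute only negatives, so the bootstrap interval is driven largely by the small number of deaths in each resample.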

Results

We identified 1325 patients who met the inclusion criteria. The mean age was 52.5 years and 69% of patients were male. A majority of patients (91%) were admitted after blunt injury. Overall, in-hospital mortality was 2% and the unplanned ICU admission rate was 2.6%. Patients had a mean ISS of 10.7 and a mean NISS of 14.5. The mean maximum EDI in the first 24 hours of admission was 50.4. Table 1 summarizes the demographics of our cohort.

Table 1 – Patient Demographics

| Characteristic | Value |
|---|---|
| Total Number of Patients | 1325 |
| Age (years, mean ± SD) | 52.5 ± 21.8 |
| % Male | 69.1% |
| % Hispanic | 42.9% |
| Race | |
| White | 43.6% |
| African American | 7.3% |
| Asian | 3.0% |
| Other | 46.1% |
| % Blunt Injury | 90.9% |
| In-hospital Mortality | 2.0% |
| Unplanned ICU Admission Rate | 2.6% |
| Length of Stay (days, mean ± SD) | 5.5 ± 7.8 |
| First 24-hour Max EDI (mean ± SD) | 50.4 ± 17.4 |
| ISS (mean ± SD) | 10.7 ± 8.3 |
| NISS (mean ± SD) | 14.5 ± 12.2 |

SD – Standard Deviation, EDI – Epic Deterioration Index, ISS – Injury Severity Score, NISS – New Injury Severity Score

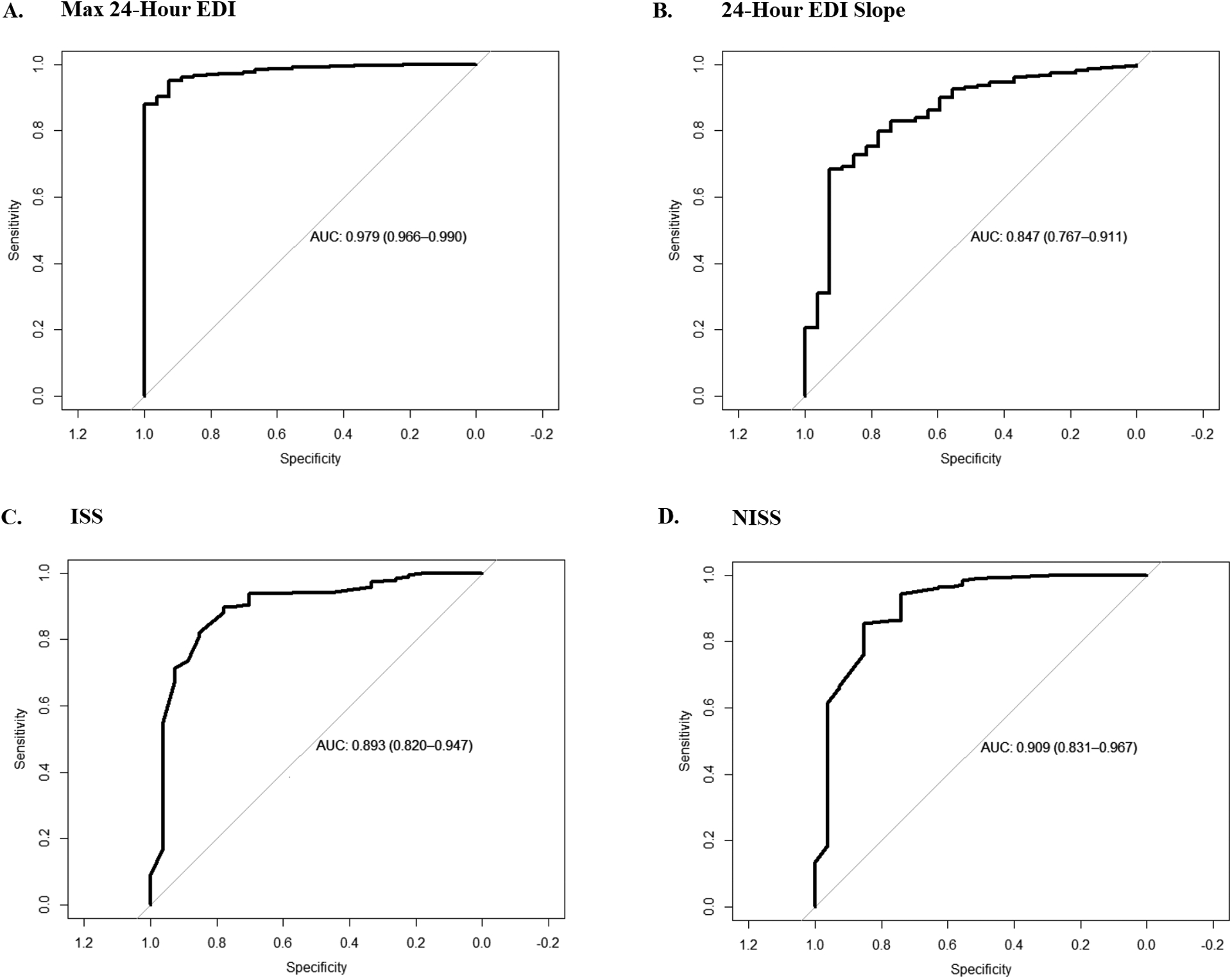

For prediction of in-hospital mortality, the maximum EDI within 6 hours of admission had an AUC of 0.91 (0.85–0.86, 95% CI). Performance improved incrementally as the EDI interval was lengthened, with the maximum EDI within 24 hours of admission showing an AUC of 0.98 (0.97–0.99, 95% CI). The EDI slope over the first 24 hours also demonstrated moderate performance in mortality prediction, with an AUC of 0.85 (0.77–0.91, 95% CI), although this was lower than that of the other predictors. By comparison, the ISS had an AUC of 0.89 (0.81–0.95, 95% CI) and the NISS had an AUC of 0.91 (0.84–0.97, 95% CI). Figure 1 compares the performance of representative models. The results did not change significantly in the 5-to-1 age-matched cohort. Table 2 summarizes the performance of each model in mortality prediction in the overall and age-matched cohorts.

Figure 1.

Comparison of predictors of mortality. A. Max Epic Deterioration Index (EDI) in the first 24 hours of admission versus mortality. B. Slope of the EDI over the first 24 hours of admission versus mortality. C. Injury Severity Score (ISS) versus mortality. D. New Injury Severity Score (NISS) versus mortality.

Table 2 – Comparison of Model Performance for Mortality

| Model | AUC (95% CI) | Age-Matched AUC (95% CI) |
|---|---|---|
| ISS | 0.89 (0.81–0.95) | 0.89 (0.79–0.94) |
| NISS | 0.91 (0.84–0.97) | 0.91 (0.83–0.97) |
| 24-Hour EDI Slope | 0.85 (0.77–0.91) | 0.82 (0.70–0.89) |
| Max EDI | | |
| 6 Hours | 0.91 (0.85–0.86) | 0.90 (0.85–0.95) |
| 12 Hours | 0.96 (0.93–0.98) | 0.96 (0.93–0.99) |
| 18 Hours | 0.98 (0.961–0.99) | 0.97 (0.05–0.99) |
| 24 Hours | 0.98 (0.97–0.99) | 0.97 (0.95–0.99) |

AUC – Area Under the Curve, CI – Confidence Interval, ISS – Injury Severity Score, NISS – New Injury Severity Score, EDI – Epic Deterioration Index

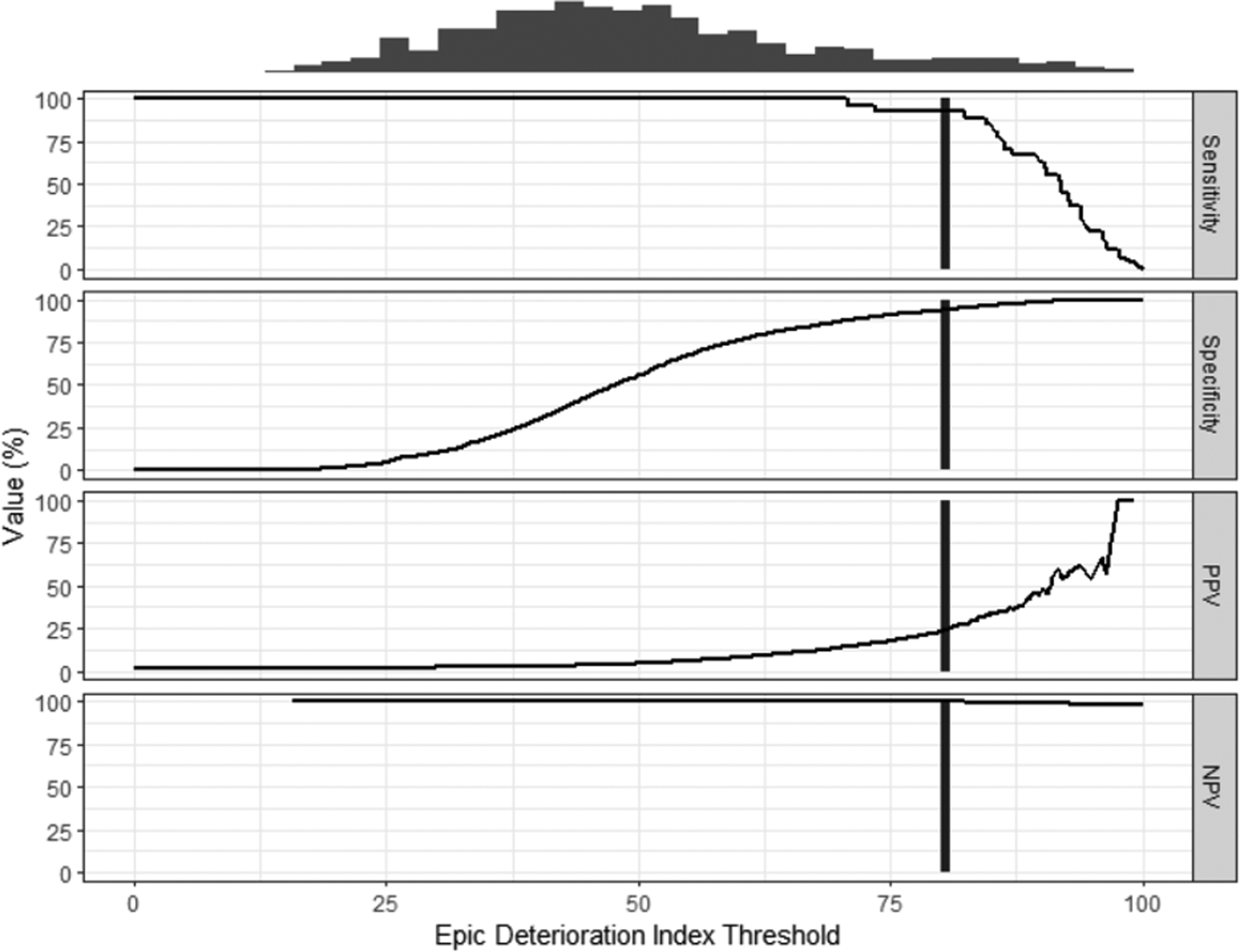

Because the EDI is intended to be used with an alert threshold but no threshold value is predefined, we constructed a threshold-performance graph to examine candidate thresholds, as shown in Figure 2, using the max 24-hour EDI as the predictor. Overall, the score maintained high sensitivity and specificity across a range of thresholds up to an EDI of 80, above which sensitivity decreased with minimal gain in specificity. At an EDI threshold of 80, the sensitivity was 0.93 and the specificity was 0.94, with a positive predictive value (PPV) of 0.23 and a negative predictive value (NPV) of 0.99. Similarly, in the age-matched cohort, an EDI threshold of 80 yielded a sensitivity of 0.93, specificity of 0.90, PPV of 0.66, and NPV of 0.98 (graph not shown).

Figure 2.

Performance threshold plot of max EDI in 24 hours versus mortality. The black vertical line represents the EDI threshold of 80, at which sensitivity starts decreasing with minimal gain in specificity. Sensitivity = 0.93, Specificity = 0.94, Positive Predictive Value (PPV) = 0.23, Negative Predictive Value (NPV) = 0.99
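The threshold metrics reported above, and the jump in PPV from 0.23 to 0.66 between the full and age-matched cohorts, can be illustrated with a short sketch. It assumes a score at or above the threshold counts as a positive call (the study does not state the rule), and `threshold_metrics` and `ppv_from_prevalence` are hypothetical helpers. The second function applies Bayes' rule: with sensitivity and specificity fixed, PPV rises with outcome prevalence. Plugging in the rounded reported values with a prevalence of 0.02 (the cohort mortality rate) and 1/6 (assuming every death was matched to exactly five survivors) gives about 0.24 and 0.65, close to but not identical with the reported 0.23 and 0.66.

```python
from typing import Dict, Sequence


def threshold_metrics(scores: Sequence[float], labels: Sequence[int], threshold: float) -> Dict[str, float]:
    """Sensitivity, specificity, PPV, and NPV when score >= threshold is called positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    tn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 0)

    def ratio(num: int, den: int) -> float:
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


def ppv_from_prevalence(sens: float, spec: float, prevalence: float) -> float:
    """Bayes' rule: PPV depends on prevalence even when sensitivity and specificity are fixed."""
    true_pos = sens * prevalence
    false_pos = (1 - spec) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Rounded values reported at an EDI threshold of 80.
print(round(ppv_from_prevalence(0.93, 0.94, 0.02), 2))   # 0.24 (full cohort)
print(round(ppv_from_prevalence(0.93, 0.90, 1 / 6), 2))  # 0.65 (assumed 5:1 matched cohort)
```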

In the analysis of unplanned ICU admission, the best-performing predictor was the EDI slope over the 24-hour interval ending 4 hours before ICU admission, with an AUC of 0.66 (0.53–0.78, 95% CI). In contrast, the max EDI over the same interval was not an effective predictor (AUC = 0.52). The performance of these predictors did not change substantially when the time window was shortened from 24 to 12 hours or in the age-matched cohort. These results are detailed in Table 3.

Table 3 – Comparison of Model Performance for Unplanned ICU Admission

| Model | AUC (95% CI) | Age-Matched AUC (95% CI) |
|---|---|---|
| EDI Slope† | | |
| 12-Hour Interval | 0.62 (0.46–0.76) | 0.67 (0.50–0.80) |
| 24-Hour Interval | 0.66 (0.53–0.78) | 0.69 (0.55–0.83) |
| Max EDI† | | |
| 12-Hour Interval | 0.51 (0.42–0.60) | 0.43 (0.33–0.55) |
| 24-Hour Interval | 0.52 (0.43–0.61) | 0.45 (0.34–0.56) |

† The EDI is calculated over the 12-hour or 24-hour interval ending 4 hours before ICU admission. AUC – Area Under the Curve, CI – Confidence Interval, EDI – Epic Deterioration Index

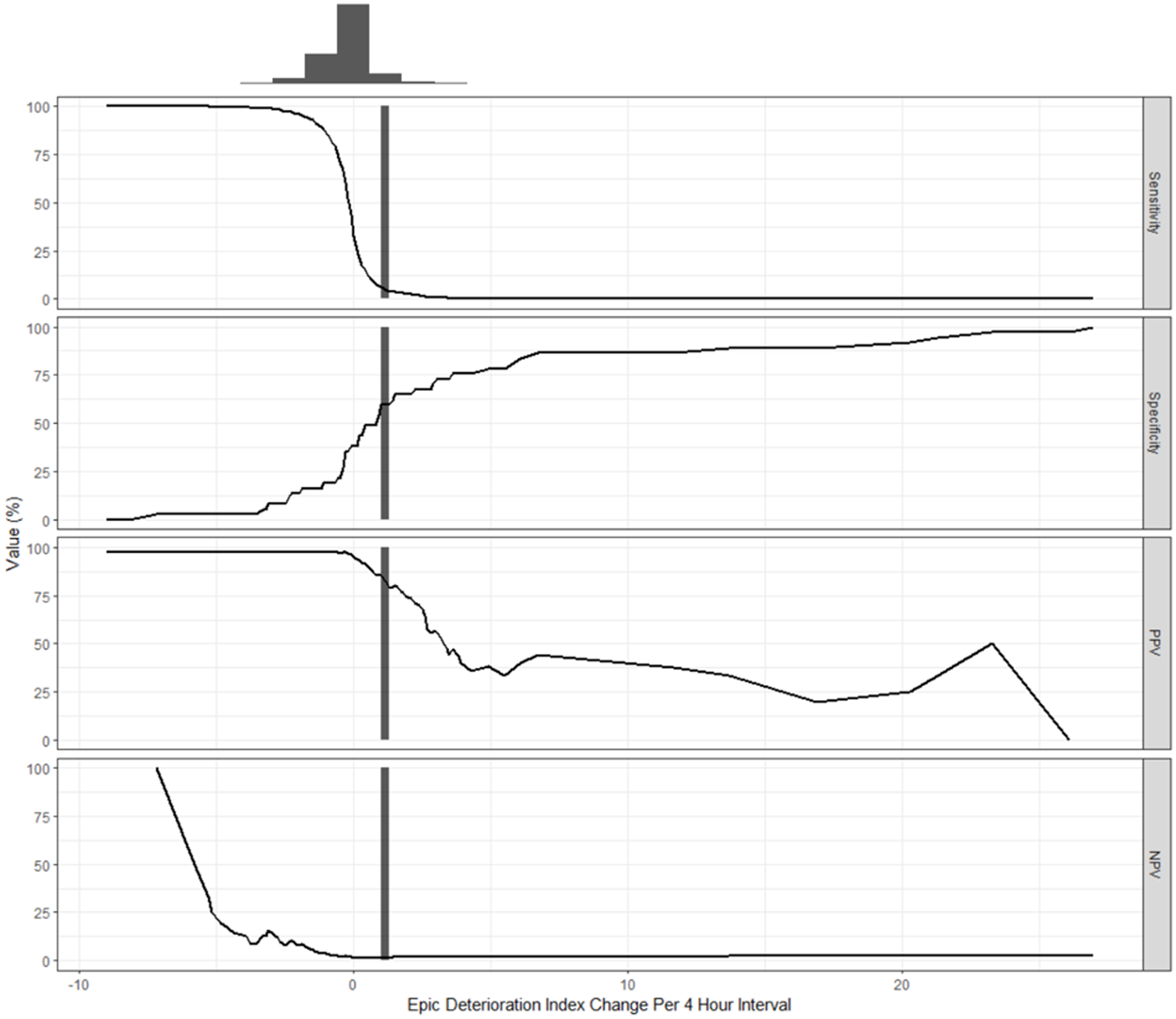

We plotted the performance-threshold graph of the EDI slope in the 24-hour time interval, shown in Figure 3, with the slope rescaled as a change in EDI every 4 hours to produce an interpretable and clinically useful value. Using an EDI increase of 1 over 4 hours as the threshold, the sensitivity is 0.06, specificity is 0.60, PPV is 0.86, and NPV is 0.02.

Figure 3.

Performance threshold plot of EDI slope in 24 hours versus unplanned ICU admission. The black vertical line represents an increase of 1 EDI point over 4 hours. At this point, Sensitivity = 0.06, Specificity = 0.60, Positive Predictive Value (PPV) = 0.86, Negative Predictive Value (NPV) = 0.02

Discussion

We report the first published validation of the EDI, a real-time EHR-embedded machine learning model, as a predictor of mortality and unplanned ICU admission in trauma patients. The max EDI in the first 24 hours predicted in-hospital mortality with an AUC of 0.98, outperforming the previously validated ISS and NISS. The slope of the EDI over the first 24 hours of admission also predicted mortality, but less well than the other indicators. By comparison, a separate external validation study in COVID-19 patients reported an AUC of only 0.79 for the EDI in predicting a composite outcome of mortality, ICU admission, and mechanical ventilation [15]. Furthermore, our performance-threshold analysis showed that a max EDI threshold of 80 within the first 24 hours of admission strongly predicted in-hospital mortality, with sensitivity and specificity greater than 90%. Thus, by integrating a large quantity of complex data points into a single summary score that is updated in real time and readily available in the EHR, the EDI can help teams rapidly identify high-acuity patients early in their hospital course. In addition, the EDI can inform early prognosis discussions with patients and family members based on the predicted mortality risk.

In contrast, the EDI's ability to predict unplanned ICU admission was markedly lower. The EDI slope over the 24-hour interval before unplanned ICU admission had an AUC of 0.66, indicating moderate performance, while the max EDI was poorly predictive, with an AUC of 0.52. A threshold of an EDI increase of 1 over 4 hours yielded a PPV of 0.86, but sensitivity and specificity were low, at 0.06 and 0.60, respectively. These results are not surprising, as ICU admission criteria include many soft criteria and situations that may not be reflected by gross physiological derangement as documented in the chart. In addition, policies, staffing resources, and provider preference all factor into this complex decision, which extends beyond the numbers alone. The EDI's performance is also on par with that of previously described machine learning models for predicting unplanned ICU admission in surgical patients, which demonstrated AUCs of 0.70 [9, 19]. Overall, this suggests that the EDI can help alert clinicians to a potential unplanned ICU admission.

The EHR-based prediction system holds two key advantages over traditional models: timely updates of input parameters and access to a larger pool of data points. Previous models were often developed with smaller data sets than what is available in the EHR. This limits the scope of parameters included in model development and thus could affect model performance. We hypothesize that this may partly explain why the EDI outperforms both the ISS and NISS in mortality prediction. Furthermore, while a smaller number of input parameters makes manual entry more feasible in a clinical setting, repeatedly updating these data points is impractical and prone to error. In contrast, the EHR continuously updates these data points and provides real-time updates to the model's prediction score.

For trauma patients, real-time prediction of mortality is highly sought after, as it can inform both the patient's active treatment plan and prognosis discussions with patients and families. Furthermore, prediction of unplanned ICU admission is vital for early intervention as well as resource utilization planning, the latter of which has become all the more critical in light of the COVID-19 pandemic. Currently, most trauma morbidity and mortality tools are housed separately from the EHR or included in EHRs with a small market share [1, 11, 13]. Both serve as barriers to widespread adoption of these tools in day-to-day clinical operations. Thus, the EDI represents a potential paradigm shift in the clinical use of machine learning-based prediction models in trauma patients, because it integrates an expansive amount of real-time patient information in the EHR to generate a risk score automatically and is already widely adopted across the country.

The EDI is the latest in a growing number of ML-based tools for predicting trauma outcomes. These methods can incorporate more complex, multidimensional data points, which would be difficult for traditional regression-based models to handle, to predict outcomes at the individual and system levels [20, 21]. At the trauma-system level, these predictions can assist in determining staffing needs and resource triage based on nontraditional variables such as temperature and day of the week [22]. At the patient level, ML-derived tools can quickly synthesize large amounts of complex clinical data into a meaningful, objective interpretation for clinicians. With the ever-increasing amount of data measured in the hospital, especially for critically ill patients, EHR-based ML can help clinicians synthesize this information and manage its volume and complexity, as humans on average use six or fewer data points to make decisions [23]. Furthermore, these ML tools can be used to predict relevant outcomes such as hospital length of stay, coagulopathy after trauma, and sepsis [20, 24, 25]. The EDI represents the next step in the clinical adoption of these ML algorithms because it removes the barrier of manually entering parameters into a separate application, as is often required. Because it has access to a vast set of real-time patient data, the EDI also has potential clinical uses beyond mortality and unplanned ICU admission prediction.

Our results, however, should be interpreted with caution, as our study has limitations. First, for both mortality and unplanned ICU admission prediction, each threshold examined had low sensitivity, specificity, or positive predictive value. For mortality prediction, the EDI threshold of 80, while having high sensitivity and specificity, yielded a PPV of 23%. This is a concern because our cohort had a low overall mortality rate, which lowers the PPV and could skew the AUC [26]. However, in our age-matched cohort, in which cases and controls were more balanced, the AUC was maintained and the PPV increased to 66%. This suggests that the performance we demonstrated is not due solely to the low case count. Second, we matched only on age, which could limit the generalizability of our results. On the other hand, matching on too many variables risks over-matching, which would also affect performance and generalizability. Likewise, because we cannot fully characterize the EDI model or the weighting of specific variables within it, we cannot determine which data points drive the relationship between the EDI and mortality in this study. We do know that the EDI extracts a robust set of variables from the EHR, including demographic information, bedside monitoring, laboratory values, and nursing assessments. More granular details about the EDI calculation could help refine this model in the future and potentially support a disease-specific EDI that incorporates data specific to trauma patient outcomes. Due to the proprietary nature of the EDI, we do not know the exact characteristics of the patient cohorts used to calibrate it. In addition, details about the types of hospitals included in model development and calibration, including whether these hospitals were Level 1 trauma centers, are not available.
Another limitation of our study is the lack of comparison with other computerized outcome prediction models available within the EHR. The counterpart to the EDI is the Rothman Index; although previous studies have shown that it is correlated with trauma mortality, no AUC is available for comparison, and Rothman Index data are not available for our cohort for benchmarking [27]. Another similar score is the Acute Physiology and Chronic Health Evaluation (APACHE), which was developed for general ICU mortality prediction. Past studies have shown AUCs of 0.76 to 0.87 for vascular trauma and trauma ICU patients, and no study has demonstrated performance above an AUC of 0.9 in trauma patients, or close to the EDI's AUC of 0.98 [28, 29]. Furthermore, APACHE was developed exclusively for ICU patients, whereas the EDI was developed for all hospitalized patients and thus has broader applicability to trauma patients, since not all require initial ICU admission. Nevertheless, future studies comparing these computerized scores would help guide model selection for clinical and research use. Next, our study was conducted at a single Level 1 trauma center, which may lack the diversity, and thus the generalizability, of a prospective multi-center study. Furthermore, ICU admission policies differ between institutions, which would affect the EDI's performance in predicting unplanned ICU admission. Lastly, there may be other EDI-derived predictors, or other outcomes the EDI could usefully predict, that we did not examine in this study. A future multi-center prospective trial aimed at further validating the EDI would help address these issues.

Here, we validated the EDI for predicting mortality and unplanned ICU admission in trauma patients and demonstrated that the EDI performs on par with or better than existing validated models. Our results suggest that the EDI should be incorporated into the everyday care of trauma patients as a clinical decision support tool. Future studies should aim to identify real-time, EHR-based data that can inform the clinical care of trauma patients.

Supplementary Material

SDC 1: TRIPOD Checklist. This document contains the completed checklist for the Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD), with relevant comments on specific items.

Acknowledgements

The study authors would like to acknowledge Paulina Paul, MS and Alan Smith, PhD for their assistance in obtaining the necessary data for this study.

Funding

ZM is supported by the National Library of Medicine Training Grant, NIH grant T15LM011271.

Footnotes

Conflict of interest/Disclosure

The authors have no conflicts of interest and no funding source to declare.

This study was presented as a poster at the 80th Annual Meeting of AAST and Clinical Congress of Acute Care Surgery, held in Atlanta, Georgia, September 28–October 2, 2021.

Disclosures: The authors declare no conflicts of interest.

References

1. de Munter L, Polinder S, Lansink KW, Cnossen MC, Steyerberg EW, de Jongh MA: Mortality prediction models in the general trauma population: A systematic review. Injury. 2017; 48(2):221–229.

2. Sharma V, Ali I, van der Veer S, Martin G, Ainsworth J, Augustine T: Adoption of clinical risk prediction tools is limited by a lack of integration with electronic health records. BMJ Health Care Inform. 2021; 28(1).

3. Baker SP, O’Neill B, Haddon W, Jr., Long WB: The injury severity score: a method for describing patients with multiple injuries and evaluating emergency care. J Trauma. 1974; 14(3):187–196.

4. Gorczyca MT, Toscano NC, Cheng JD: The trauma severity model: An ensemble machine learning approach to risk prediction. Comput Biol Med. 2019; 108:9–19.

5. Haider A, Con J, Prabhakaran K, Anderson P, Policastro A, Feeney J, Latifi R: Developing a Simple Clinical Score for Predicting Mortality and Need for ICU in Trauma Patients. Am Surg. 2019; 85(7):733–737.

6. Kuhls DA, Malone DL, McCarter RJ, Napolitano LM: Predictors of mortality in adult trauma patients: the physiologic trauma score is equivalent to the Trauma and Injury Severity Score. J Am Coll Surg. 2002; 194(6):695–704.

7. Lam SW, Lingsma HF, van Beeck EF, Leenen LP: Validation of a base deficit-based trauma prediction model and comparison with TRISS and ASCOT. Eur J Trauma Emerg Surg. 2016; 42(5):627–633.

8. Kipnis P, Turk BJ, Wulf DA, LaGuardia JC, Liu V, Churpek MM, Romero-Brufau S, Escobar GJ: Development and validation of an electronic medical record-based alert score for detection of inpatient deterioration outside the ICU. J Biomed Inform. 2016; 64:10–19.

9. Martin LA, Kilpatrick JA, Al-Dulaimi R, Mone MC, Tonna JE, Barton RG, Brooke BS: Predicting ICU readmission among surgical ICU patients: Development and validation of a clinical nomogram. Surgery. 2019; 165(2):373–380.

10. Piper GL, Kaplan LJ, Maung AA, Lui FY, Barre K, Davis KA: Using the Rothman index to predict early unplanned surgical intensive care unit readmissions. J Trauma Acute Care Surg. 2014; 77(1):78–82.

11. Wengerter BC, Pei KY, Asuzu D, Davis KA: Rothman Index variability predicts clinical deterioration and rapid response activation. Am J Surg. 2018; 215(1):37–41.

12. Artificial Intelligence Triggers Fast, Lifesaving Care for COVID-19 Patients. Accessed 08-12-2021. [https://www.epic.com/epic/post/artificial-intelligence-epic-triggers-fast-lifesaving-care-covid-19-patients]

13. Ochsner Health Adopts New AI Technology to Save Lives in Real-time. Ochsner Health; 2018. Accessed 08-01-2021. [https://news.ochsner.org/news-releases/ochsner-health-system-adopts-new-ai-technology-to-save-lives-in-real-time]

14. Ross C: Hospitals are using AI to predict the decline of Covid-19 patients — before knowing it works. STAT; 2020. Accessed 10-05-2021. [https://www.statnews.com/2020/04/24/coronavirus-hospitals-use-ai-to-predict-patient-decline-before-knowing-it-works/]

15. Singh K, Valley TS, Tang S, Li BY, Kamran F, Sjoding MW, Wiens J, Otles E, Donnelly JP, Wei MY, et al.: Evaluating a Widely Implemented Proprietary Deterioration Index Model among Hospitalized Patients with COVID-19. Ann Am Thorac Soc. 2021; 18(7):1129–1137.

16. Lavoie A, Moore L, LeSage N, Liberman M, Sampalis JS: The Injury Severity Score or the New Injury Severity Score for predicting intensive care unit admission and hospital length of stay? Injury. 2005; 36(4):477–483.

17. Meredith JW, Evans G, Kilgo PD, MacKenzie E, Osler T, McGwin G, Cohn S, Esposito T, Gennarelli T, Hawkins M, et al.: A comparison of the abilities of nine scoring algorithms in predicting mortality. J Trauma. 2002; 53(4):621–628; discussion 628–629.

18. Lavoie A, Moore L, LeSage N, Liberman M, Sampalis JS: The New Injury Severity Score: a more accurate predictor of in-hospital mortality than the Injury Severity Score. J Trauma. 2004; 56(6):1312–1320.

19. Desautels T, Das R, Calvert J, Trivedi M, Summers C, Wales DJ, Ercole A: Prediction of early unplanned intensive care unit readmission in a UK tertiary care hospital: a cross-sectional machine learning approach. BMJ Open. 2017; 7(9):e017199.

20. Liu NT, Salinas J: Machine Learning for Predicting Outcomes in Trauma. Shock. 2017; 48(5):504–510.

21. Stonko DP, Dennis BM, Betzold RD, Peetz AB, Gunter OL, Guillamondegui OD: Artificial intelligence can predict daily trauma volume and average acuity. J Trauma Acute Care Surg. 2018; 85(2):393–397.

22. Stonko DP, Guillamondegui OD, Fischer PE, Dennis BM: Artificial intelligence in trauma systems. Surgery. 2021; 169(6):1295–1299.

23. Sanchez-Pinto LN, Luo Y, Churpek MM: Big Data and Data Science in Critical Care. Chest. 2018; 154(5):1239–1248.

24. Feng YN, Xu ZH, Liu JT, Sun XL, Wang DQ, Yu Y: Intelligent prediction of RBC demand in trauma patients using decision tree methods. Mil Med Res. 2021; 8(1):33.

25. Li K, Wu H, Pan F, Chen L, Feng C, Liu Y, Hui H, Cai X, Che H, Ma Y, et al.: A Machine Learning-Based Model to Predict Acute Traumatic Coagulopathy in Trauma Patients Upon Emergency Hospitalization. Clin Appl Thromb Hemost. 2020; 26:1076029619897827.

26. Romero-Brufau S, Huddleston JM, Escobar GJ, Liebow M: Why the C-statistic is not informative to evaluate early warning scores and what metrics to use. Crit Care. 2015; 19:285.

27. Alarhayem AQ, Muir MT, Jenkins DJ, Pruitt BA, Eastridge BJ, Purohit MP, Cestero RF: Application of electronic medical record-derived analytics in critical care: Rothman Index predicts mortality and readmissions in surgical intensive care unit patients. J Trauma Acute Care Surg. 2019; 86(4):635–641.

28. Loh SA, Rockman CB, Chung C, Maldonado TS, Adelman MA, Cayne NS, Pachter HL, Mussa FF: Existing trauma and critical care scoring systems underestimate mortality among vascular trauma patients. J Vasc Surg. 2011; 53(2):359–366.

29. Magee F, Wilson A, Bailey M, Pilcher D, Gabbe B, Bellomo R: Comparison of Intensive Care and Trauma-specific Scoring Systems in Critically Ill Patients. Injury. 2021; 52(9):2543–2550.
