Abstract

Introduction

Executive dysfunctions constitute a significant public health problem: their high impact on everyday life makes it a priority to identify early strategies for evaluating and rehabilitating these disorders in a real-life context. The limited ecological validity of traditional neuropsychological tests and the difficulties of administering tests or training in real-life scenarios have paved the way for Virtual Reality (VR)-based tools to evaluate and rehabilitate Executive Functions (EFs) in real-life contexts.

Objective

This work aims to conduct a systematic review to provide a detailed description of the VR-based tools currently developed for the evaluation and rehabilitation of EFs.

Methods

We systematically searched for original manuscripts regarding VR tools and EFs by looking for titles and abstracts in the PubMed, Scopus, PsycInfo, and Web of Science databases up to November 2021 that contained the following keywords “Virtual Reality” AND “Executive function*.”

Results and Conclusion

We analyzed 301 articles, of which 100 were included. Our work shows that the available VR-based tools appear to be promising solutions for the ecological assessment and treatment of EFs in healthy subjects and several clinical populations.

Keywords: executive functions, Virtual Reality, psychometric assessment, rehabilitation, virtual environments

Introduction

“Executive function” (EF) is a complex construct, described by Chan and colleagues as “an umbrella term comprising a wide range of cognitive processes and behavioral competencies which include verbal reasoning, problem-solving, planning, sequencing, the ability to sustain attention, resistance to interference, utilization of feedback, multitasking, cognitive flexibility, and the ability to deal with the novelty” (Chan et al., 2008). Specifically, these higher-order cognitive abilities and behavioral skills are responsible for controlling and regulating actions (e.g., starting and stopping activities or monitoring) (Burgess and Simons, 2005; Chan et al., 2008) and performing complex or non-routine tasks (e.g., performing two tasks simultaneously) (Godefroy, 2003; Alvarez and Emory, 2006; Alderman, 2013). Several studies have shown the critical role of executive functioning in performing various activities of daily living (ADL) (Fortin et al., 2003), especially the instrumental activities of daily living (IADL), such as preparing meals, managing money, shopping, doing housework, and using a telephone (Chevignard et al., 2000; Fortin et al., 2003; Vaughan and Giovanello, 2010). Due to this central role in everyday functioning, executive impairment, known as “Dysexecutive Syndrome” (Robertson et al., 1997; Snyder et al., 2015), has a relevant impact on personal independence, ability to work, educational success, social relationships, and cognitive and psychological development (Green, 1996; Goel et al., 1997; Green et al., 2000), with consequences for a person's quality of life and feelings of personal wellbeing (Gitlin et al., 2001). In recent years, the cognitive neuroscience of EFs has developed rapidly, driven by technological progress, and has highlighted the crucial role of the frontal lobe in supporting the executive processes involved in many real-life situations (Burgess et al., 2006).
Dysexecutive Syndrome appears to be associated with aging of the prefrontal cortex in the healthy elderly population (Raz, 2000; Burke and Barnes, 2006), but it is also typical of neurological or psychiatric patients with frontal lobe damage, for example after traumatic brain injury (TBI) and stroke (Baddeley and Wilson, 1988; Nys et al., 2007), or with specific pathologies such as Parkinson's disease (PD) (Aarsland et al., 2005; Kudlicka et al., 2011) and Multiple Sclerosis (MS) (Nebel et al., 2007). However, EF impairments can also be linked to other cerebral areas because frontal regions are connected with cortical and subcortical areas, such as the amygdala, cerebellum, and basal ganglia (Tekin and Cummings, 2002).

Since executive impairments have adverse effects on the performance of activities of daily living (Fortin et al., 2003; Vaughan and Giovanello, 2010), identifying early strategies for the evaluation and rehabilitation of EFs is critical to minimize the effects of these impairments and improve everyday functioning (Levine et al., 2007). However, the assessment and rehabilitation of EFs represent a challenge due not only to the complexity and heterogeneity of the construct (Stuss and Alexander, 2000) but also to methodological difficulties (Goldstein, 1996; Chaytor and Schmitter-Edgecombe, 2003; Barker et al., 2004; Crawford and Henry, 2005; Godefroy et al., 2010; Kudlicka et al., 2011; Serino et al., 2014).

As regards evaluation, EFs are traditionally assessed with laboratory tasks or theory-based paper-and-pencil neuropsychological tests, such as the Modified Wisconsin Card Sorting Test (WCST) (Nelson, 1976) or the Trail Making Test (TMT) (Reitan, 1992a), which guarantee standardized procedures and scores. Over the years, an increasing number of tests have been developed to assess different patient populations (Chan et al., 2008). The assessment protocol may include a single task for the evaluation of a single cognitive process, for example, the Tower of London (ToL) for problem-solving abilities (Allamanno et al., 1987), or test batteries that assess executive functioning as a whole, such as the Frontal Assessment Battery (FAB) (Dubois et al., 2000; Appollonio et al., 2005). However, several authors have pointed out many limitations and disadvantages of traditional neuropsychological evaluation (Schultheis and Rizzo, 2001; Parsons and Rizzo, 2008). Firstly, traditional paper-and-pencil tests may present reliability problems (Rizzo et al., 2001), since test results can be negatively affected by differences in administration (e.g., examiners, test environment, quality of the stimuli, or scoring errors). Therefore, validated computerized versions of traditional neuropsychological tests were developed, offering the advantage of delivering stimuli systematically and monitoring speed and accuracy with precision. However, even these versions do not detect how cognitive functioning can change in stressful everyday situations (Armstrong et al., 2013). Secondly, several studies have revealed that many patients with Dysexecutive Syndrome achieve normal scores on traditional neuropsychological tests while complaining of substantial difficulties in daily life activities (Shallice and Burgess, 1991). This problem may result from a lack of ecological validity of the tests for EFs (Chan et al., 2008).
A test can be defined as ecological if (1) the task corresponds, in form and content, to a situation outside the laboratory (representativeness of the task), and (2) a poor performance on the test is predictive of problems in the real world (generalizability of the results) (Kvavilashvili and Ellis, 2004). Traditional paper-and-pencil tests require simple responses to a single event, while complex everyday tasks may require a more complex set of responses (Chan et al., 2008). In other words, the situation in which patients perform the tests (usually the clinic) differs from most of the conditions encountered outside it; that is, the tests show little “representativeness”. Therefore, traditional assessment does not appear able to reliably predict the complexity of executive functioning in real-life settings (Shallice and Burgess, 1991; Goldstein, 1996; Klinger et al., 2004; Burgess et al., 2006; Chaytor et al., 2006; Chan et al., 2008). Nevertheless, an ecological assessment is crucial to understand how cognitive deficits (above all, EF deficits) affect daily functioning (Manchester et al., 2004; Burgess et al., 2006). In other words, it makes it possible to evaluate whether patients can effectively manage and orient cognitive resources within the complexity of the external world (Crawford, 1998; Rand et al., 2009). Since EFs play a key role in everyday life (Shallice and Burgess, 1991) and independent functioning, clinical EF tests need to have ecological validity. In this framework, Burgess and colleagues proposed neuropsychological assessments based on models derived from directly observable daily behaviors (Burgess et al., 2006). This “function-led” approach differs from the emphasis on abstract cognitive “constructs” by paying attention to the role of EFs within the complexity of the “functional” behaviors found in real-life situations.
This innovative approach could lead to tasks better suited to clinical concerns because of the transparency offered by greater “representativeness” and “generalizability.” In conclusion, an ecological assessment allows a deeper comprehension of the neuropsychological profile of the patient and future personalized treatment (Pedroli et al., 2016). To overcome this ecological issue, clinicians and researchers have worked to develop tests able to evaluate the different components of executive functioning in real-life scenarios (Chaytor and Schmitter-Edgecombe, 2003; Jurado and Rosselli, 2007), such as the Multiple Errands Test (MET) (Shallice and Burgess, 1991; Alderman et al., 2003) and the Behavioral Assessment of the Dysexecutive Syndrome (BADS) (Wilson et al., 1997). Specifically, the MET is a functional test requiring simple tasks (e.g., buying six items) in a real supermarket, whereas the BADS is a laboratory-based battery that includes ecological tasks (e.g., temporal judgement, rule shift cards, action program, key search) and a dysexecutive questionnaire that investigates several domains, such as personality, motivation, behavioral, and cognitive changes. The assessment of EFs in real-life settings provides a more accurate estimate of the patient's deficits than assessment under laboratory conditions (Rand et al., 2009), but it has further limitations, such as long administration times, high economic costs, organizational difficulties (e.g., requests for authorisations from local companies), poor controllability of experimental conditions, and limited applicability to patients with significant behavioral, psychiatric and motor difficulties (Bailey et al., 2010).

The ecological limitations of traditional neuropsychological tests and the difficulties of administering tests in real-life scenarios have paved the way for technological tools such as Virtual Reality (VR) to assess EFs in real-life contexts (Bohil et al., 2011). VR is a human-computer interface system that makes it possible to design and create realistic spatial and temporal scenarios, situations or objects that, by reproducing conditions of daily life, could allow an ecologically valid evaluation of EFs (Lombard and Ditton, 1997; Campbell et al., 2009; Bohil et al., 2011; Parsons et al., 2011; Parsons, 2015). Therefore, VR could facilitate the assessment and rehabilitation of possible impairments in individuals with executive dysfunction (Tarnanas et al., 2013), allowing clinicians to observe their patients in real time in an everyday setting. Virtual Environments (VEs) enable patients to interact dynamically with computer-simulated objects and 3D settings (Pratt et al., 1995; Climent et al., 2010) and can reproduce complex emotional and cognitive experiences (such as planning and organizing practical actions or shifting attention) resembling everyday life situations (Castelnuovo et al., 2003) in ecologically valid and controlled environments. Overall, VR could make it possible to evaluate everyday difficulties due to executive dysfunctions and to train these impairments, working directly on impaired ADL and IADL (Zhang et al., 2003; Klinger et al., 2006).

In addition to allowing an ecological assessment, VR-based tools appear highly flexible and simultaneously guarantee a controlled and precise presentation of a large variety of stimuli (Armstrong et al., 2013) and the collection of the full range of users' responses, which can be objectively measured (Rizzo et al., 2001; Parsons et al., 2011; Parsons, 2015). Therefore, VR could complement traditional neuropsychological assessment procedures and improve their reliability and psychometric validity (Riva, 1997, 2004; Rizzo et al., 2001). Moreover, VR can recognize and monitor facial expressions and body movements: gestures can be captured and translated into actions (e.g., grasping, scrolling the virtual environment, dropping objects, blowing and moving elements) that allow direct manipulation of virtual objects through natural behavior (Parsons et al., 2011).

In a rehabilitative context, VR shows other valuable advantages and appears to be a promising tool for training ADL skills (Zhang et al., 2003; Klinger et al., 2006). Firstly, it allows individualized treatment according to patients' skills and needs (Lo Priore et al., 2002; Rand et al., 2009): real-time data acquisition and performance analysis (Parsons et al., 2011; Parsons, 2015) make it possible to customize the scenarios in real time, focusing on the patient's characteristics and demands (Castelnuovo et al., 2003; Rand et al., 2009). Moreover, VR-based tools allow compensation for sensory deprivation and motor impairments through multisensory stimulation and feedback (Kizony, 2011; Zell et al., 2013; Nir-Hadad et al., 2017). Indeed, VR allows stimuli and instructions to be administered through different modalities (visual, auditory, tactile), which can be adapted to possible sensory deficits of the patients (Parsons and Rizzo, 2008). Another strength of VEs is the possibility of presenting scenarios with features not available in the real world (Kizony, 2011; Zell et al., 2013; Nir-Hadad et al., 2017), such as cueing stimuli that help patients apply compensatory strategies and improve functional behavior day by day (Rizzo et al., 2001). Furthermore, VR enables patients to perform exercises at a distance, in the comfort and safety of their homes (Dores et al., 2012). This is relevant because it makes it possible to overcome two crucial clinical issues: long waiting lists of health services and difficulties in moving patients between their homes and health services. Moreover, rehabilitation with VR appears cheaper than traditional rehabilitation since, for example, it can recreate complex everyday scenarios (e.g., the presence of several people at the same time) without the need to leave the rehabilitation setting.
Finally, VR allows task complexity to be increased gradually while maintaining experimental control over stimulus delivery and individualizing treatment in a standardized manner (Rand et al., 2009). Overall, several studies agree that VR is a promising tool to improve rehabilitation since it provides meaningful, versatile and individualized tasks that can enhance patients' motivation, enjoyment and engagement during training (Hayre et al., 2020), overcoming the poor compliance of patients with cognitive dysfunctions with traditional rehabilitation programs, which are usually repetitive and not stimulating (Castelnuovo et al., 2003; Rand et al., 2009). Interestingly, several studies have shown that the realism and engagement of VR tools could help transfer learning to the real world (Klinger et al., 2004; Rizzo and Kim, 2005; Carelli et al., 2008).

In light of these promising premises, this review aims to provide a detailed description of the VR tools currently developed for the evaluation and rehabilitation of EFs.

Methods

We conducted this systematic review in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines and flow diagram (Liberati et al., 2009).

Information Sources and Study Selection

The literature was searched in the electronic databases PubMed, Web of Science, Scopus and PsycInfo from inception to November 2021. The bibliographies of the identified articles were screened, and a manual search of relevant journals for additional references was conducted. A further search of Google Scholar and of the bibliographies of previous reviews was also carried out. We used the keywords “Virtual Reality” AND “Executive function*” (the asterisk is a truncation operator, so the search also retrieved variants of the word, such as “functions” and “functioning”). Two reviewers (FB; CP) independently conducted the data extraction.
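As a rough illustration of how the truncated search term behaves, the following sketch applies an equivalent title/abstract filter to a local record. It is only an approximation under stated assumptions: the function name `matches_query` and the regular expressions are hypothetical, and the review itself relied on the databases' own query engines, whose exact matching rules may differ.

```python
import re

# Hypothetical local approximation of the query
# "Virtual Reality" AND "Executive function*" over title/abstract text.
# The trailing asterisk acts as a truncation wildcard, so it should match
# "function", "functions", "functioning", and so on.
VR_PATTERN = re.compile(r"\bvirtual reality\b", re.IGNORECASE)
EF_PATTERN = re.compile(r"\bexecutive function\w*", re.IGNORECASE)


def matches_query(title: str, abstract: str) -> bool:
    """Return True if both search terms occur in the title or the abstract."""
    text = f"{title} {abstract}"
    return bool(VR_PATTERN.search(text)) and bool(EF_PATTERN.search(text))
```

A record would pass this filter only when both terms appear, which mirrors the AND operator in the search string.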

Eligibility Criteria

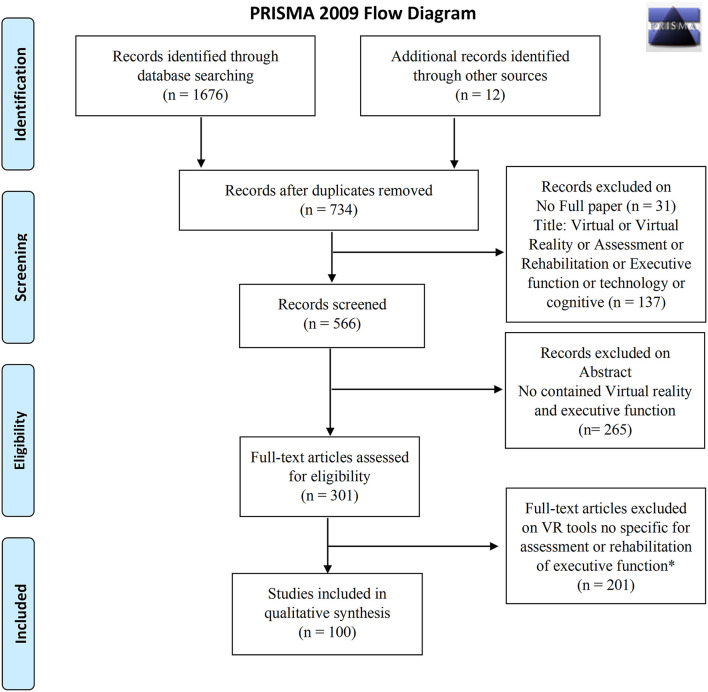

Studies were included if they described Virtual Reality-based tools developed specifically for the assessment or rehabilitation of EFs. Exclusion criteria were the absence of a full paper (i.e., books, book chapters, qualitative studies, letters, comments, dissemination, published abstracts without text) and non-English language. The selection of studies was first based on screening the title and abstract, followed by reading the full text of the remaining reports (Figure 1).

Figure 1.

PRISMA 2009 flow diagram.

Virtual Reality Tool

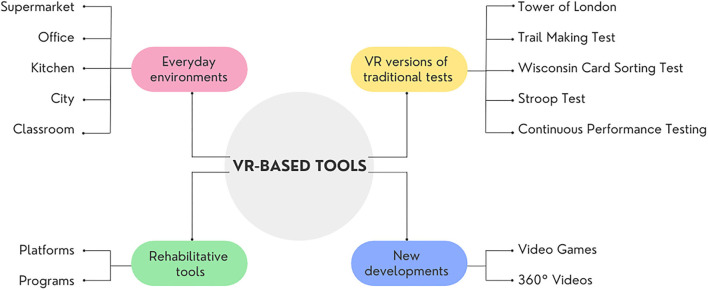

This review aims to provide a detailed description of the main tools that exploit VR for the assessment and rehabilitation of EFs. The description of the tools has been organized into paragraphs based on the VEs used (e.g., supermarket, kitchen). Further paragraphs offer an overview of the main platforms and programs used to evaluate and rehabilitate EFs and of the VR versions of traditional paper-and-pencil tests. Finally, two sections describe the development of games and 360° videos as innovative and feasible solutions for the assessment and rehabilitation of EFs (Figure 2).

Figure 2.

Overview of available VR-based tools.

For each VR-based instrument reviewed, we have provided a complete description of the tool and, if available, information about usability, construct validity, discriminant validity and test-retest reliability (for a summary, see Table 1).

Table 1.

Summary of available psychometric properties of VR-based assessment tools.

| Tool | Usability | Construct validity/convergent validity | Discriminant validity/efficacy in discriminating between populations | Test-retest reliability |
|---|---|---|---|---|
| Everyday environments: | | | | |
| Virtual Errands Test | YES | YES | HC vs. PD/Stroke; HC vs. OCD/Schizophrenia | NO |
| Virtual Environment Grocery Store | NO | YES | NO | NO |
| Adapted Four-Item Shopping Task | YES | YES | HC vs. Stroke | NO |
| Virtual Action Planning - Supermarket | NO | YES | HC vs. MCI/Stroke/Schizophrenia | NO |
| Virtual Supermarket Shopping Task | NO | NO | NO | NO |
| Jansari Assessment of Executive Functions | NO | YES | HC vs. ABI | NO |
| Jansari Assessment of Executive Functions for Children | NO | NO | NO | NO |
| Assessim Office | NO | YES | HC vs. TBI/MS | NO |
| EcoKitchen | NO | NO | HC vs. HD manifest/premanifest | NO |
| VR-cooking task | NO | YES | HC vs. Alcohol Use Disorder | NO |
| Kitchen and cooking | NO (acceptability) | NO | NO | NO |
| Multitasking in the City Test | NO (acceptability) | YES | HC vs. ABI | NO |
| Virtual Library Environment | NO | YES | HC vs. TBI | NO |
| Edinburgh Virtual Errands Test | NO | YES | NO | NO |
| Virtual Reality Day-Out Task | NO | NO | HC vs. MCI vs. AD | NO |
| Virtual Classroom | NO | YES | HC vs. children with ABI/NF1/ADHD | NO |
| Virtual versions of traditional paper and pencil tests: | | | | |
| Tower of London | NO | YES | NO | NO |
| Virtual Reality Color Trails Test | NO | YES | NO | YES |
| Look for a Match | NO | YES | NO | NO |
| Virtual Reality Stroop Task | NO | YES | NO | NO |
| The Virtual Classroom Stroop Task | NO | YES | NO | NO |
| The Virtual Apartment Stroop Task | NO | YES | NO | NO |
| Virtual Classroom Bimodal Stroop | NO | YES | HC vs. Autism Disorders | NO |
| Virtual Reality Continuous Performance Testing | NO | YES | HC vs. ADHD | NO |
| Advanced Virtual Reality Tool for the Assessment of Attention | NO | NO | NO | NO |
| Nesplora Aquarium | YES | YES | HC vs. ADHD low vs. elevated Mood Disorders | NO |
| New developments: | | | | |
| Virtual Reality Video Game | NO | YES | NO | NO |
| Virtual Reality avatar interaction platform | NO (engagement) | NO | Military personnel with/without TBI | NO |
| Picture Interpretation Test 360° | NO | YES | HC vs. PD/MS | NO |
| EXecutive-functions Innovative Tool 360° | YES | YES | NO | NO |

HC, Healthy Controls; PD, Parkinson's Disease; MS, Multiple Sclerosis; TBI, Traumatic Brain Injury; ADHD, Attention-Deficit/Hyperactivity Disorder; ABI, Acquired Brain Injury; OCD, Obsessive-Compulsive Disorder; MCI, Mild Cognitive Impairment; AD, Alzheimer's Disease; HD, Huntington's Disease; NF1, Neurofibromatosis type 1.

Virtual Supermarket Environment

In the literature, many studies have focused on developing virtual shopping environments that simulate a real supermarket to evaluate and treat EFs (Nir-Hadad et al., 2017). Shopping has been selected because it is a typical IADL, essential for everyday life. Indeed, this activity includes tasks and actions that require the use of EFs, such as comprehending a store's layout, forming a strategy to locate products of different types and costs, differentiating between products, and keeping track of the products acquired. Specifically, most studies have focused on developing and testing the virtual version of the Multiple Errands Test (VMET).

Virtual Multiple Errands Test

The VMET is a complex shopping task in which participants must carry out different tasks in compliance with various rules. Two virtual scenarios of the VMET have been created: the IREX V-Mall supermarket (Rand et al., 2005, 2009) and the NeuroVR supermarket (Raspelli et al., 2009; Riva et al., 2009).

Rand and colleagues developed the first version of the VMET (Rand et al., 2009), set in the V-Mall (Rand et al., 2005), a virtual supermarket implemented on GestureTek's Interactive Rehabilitation and Exercise System (IREX), a video-capture VR system. Participants can interact with the VE through arrows and natural arm movements (e.g., touching products with both hands). The V-Mall simulates a real supermarket with different stores and aisles: each aisle contains a maximum of 60 products arranged on the shelves and divided into different categories (e.g., bakery products, cleaning items). The products are reproductions of photographs of real items, taken with a digital camera and rendered using 3D graphic software. The therapist can select and arrange products: the number, type, and position of objects on the shelves can vary in each aisle. The authors added some common features, such as background music and typical special sales announcements, to improve the sense of immersion. In their work, Rand and colleagues provided initial support for the ecological validity of the VMET as an assessment tool of EFs. The preliminary results showed that the VMET is sensitive to brain injury because it was able to differentiate between healthy control subjects and post-stroke patients (Rand et al., 2009).

Raspelli et al. developed another VR-based MET using the NeuroVR software platform (Raspelli et al., 2009, 2010; Riva et al., 2009; Wiederhold et al., 2010). With this platform, Raspelli and colleagues created a new scenario for assessing EFs (Raspelli et al., 2009). The original procedure of the MET (Shallice and Burgess, 1991) was adapted to the virtual scenario of the supermarket (Pedroli et al., 2013). In general, the VMET consists of a Blender-based application that allows the assessment of different aspects of EFs through active exploration of a virtual supermarket, where participants must select and buy various products arranged on shelves following a predefined list, obtain some information, and respect different rules. Specifically, the VMET measures a subject's ability to formulate, store, and check all the goals and subgoals needed to respond to environmental demands in ecological situations and to complete specified tasks. In this way, multiple EFs are stimulated, from the ability to plan a sequence of actions to problem-solving and cognitive and behavioural flexibility (Cipresso et al., 2013b). Within the virtual supermarket, the products are grouped into main categories, such as drinks, fruit and vegetables, breakfast foods, hygiene products, frozen foods, and products for gardens and pets. Moreover, signs indicating the product categories have been placed in the upper part of each section to help the subjects in their exploration (Raspelli et al., 2009; Cipresso et al., 2014). The VMET is composed of four main tasks: 1) buying six different products (e.g., one product on sale); 2) asking the examiner for information about one product to be purchased; 3) writing the shopping list of the products bought 5 min after the beginning of the test; 4) answering some questions at the end of the virtual session (e.g., What is the closing time of the supermarket? How many shelves sell fruit? How many departments are there in the supermarket?) (Cipresso et al., 2013a, 2014). To complete the tasks, participants must follow eight rules: (1) performing all the proposed tasks; (2) performing the tasks in any order; (3) not going to a place if it is not part of a task; (4) not going to the same passage twice; (5) not acquiring more than two products for each category; (6) completing the exercise in the shortest possible time; (7) not talking to the experimenter if it is not part of a task; and (8) going to the “shopping cart” and making a list of all their products 5 min after the beginning of the task (Raspelli et al., 2009).

Before starting the actual task, the participants complete an initial training phase in a smaller supermarket to practice using the joypad (Pedroli et al., 2013) and to understand how to move in the environment (Pedroli et al., 2016). In this phase, the subjects explore the VE freely for a few minutes or until they learn to use the joypad. After training, the examiner shows the new virtual supermarket, describing its different sections and providing a shopping list, a map of the supermarket, information about the supermarket (i.e., opening and closing times, products on sale), a pen, a wristwatch and the instruction sheet. Moreover, the examiner reads and explains all the instructions to the subject to guarantee complete understanding (Cipresso et al., 2014). After that, the participant can freely navigate the virtual supermarket using the joypad (moving with the “up-down” arrows of the joystick) and collect products (by pushing a button on the right side of the joypad) (Raspelli et al., 2012; Cipresso et al., 2013b; Pedroli et al., 2016). During the task, the examiner cannot speak to the subject or answer questions; the examiner can only take notes on the participant's behaviours in the VE (Pedroli et al., 2016) and record the execution time, stopping the timer when the subject says “I finished” (Raspelli et al., 2009; Cipresso et al., 2013a). In order to better understand the subject's performance, five different scores must be recorded: total errors (task failures), inefficiencies, strategies, rule breaks, and interpretation failures (Shallice and Burgess, 1991; Raspelli et al., 2012). Specifically: 1) errors or task failures: a task is not correctly completed. The total score ranges from 11 (the participant completes all tasks correctly, as indicated by the test) to 33 (the participant completes all tasks incorrectly). 2) inefficiencies: the participant could have used a more effective strategy to complete the task (e.g., not grouping similar tasks when possible).
The total score ranges from 8 (many inefficiencies) to 32 (none). 3) strategies: to analyse the ability to use strategies, 13 behaviours that facilitate carrying out the tasks are evaluated (e.g., accurate planning before starting a specific subtask). The total score ranges from 13 (good strategies) to 52 (no strategies). 4) rule breaks: the total score ranges from 8 (many rule breaks) to 32 (no rule breaks). Notably, each inefficiency, strategy, and rule break is scored from 1 to 4 (1 = always; 2 = more than once; 3 = once; 4 = never). 5) interpretation failures: the requirements of a particular task are misunderstood (e.g., the subject thinks that the subtasks must be performed in the order of presentation on the information sheet). Each interpretation failure is scored from 1 to 2 (1 = yes; 2 = no); therefore, the total score ranges from 3 (a large number of interpretation failures) to 6 (no interpretation failures) (Raspelli et al., 2009).
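The arithmetic behind these score ranges can be sketched as a simple sum of item ratings. The following is a minimal illustration, not the authors' scoring software: the names `SCALES`, `score_range` and `total_score` are hypothetical, the item counts are inferred from the reported ranges, and the per-item range of 1 to 3 for task errors is an assumption derived from the reported total of 11 to 33 (the text does not state it).

```python
from typing import Dict, Sequence, Tuple

# scale name -> (number of items, lowest rating, highest rating)
# Item counts follow the ranges reported in the text.
# ASSUMPTION: task errors are rated 1-3 per task (inferred from 11-33).
SCALES: Dict[str, Tuple[int, int, int]] = {
    "errors": (11, 1, 3),
    "inefficiencies": (8, 1, 4),
    "strategies": (13, 1, 4),
    "rule_breaks": (8, 1, 4),
    "interpretation_failures": (3, 1, 2),
}


def score_range(scale: str) -> Tuple[int, int]:
    """Return the minimum and maximum total score of a scale."""
    n_items, low, high = SCALES[scale]
    return n_items * low, n_items * high


def total_score(scale: str, ratings: Sequence[int]) -> int:
    """Validate the item ratings of a scale and return their sum."""
    n_items, low, high = SCALES[scale]
    if len(ratings) != n_items:
        raise ValueError(f"{scale} expects {n_items} ratings, got {len(ratings)}")
    if any(r < low or r > high for r in ratings):
        raise ValueError(f"{scale} ratings must lie between {low} and {high}")
    return sum(ratings)
```

For example, a participant who never breaks any of the eight rules would be rated 4 on each item and obtain the maximum rule-break score of 32.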

Furthermore, for every subtask, other variables (partial task failures) can be analysed: 1) sustained attention (not being distracted by other stimuli); 2) maintaining the correct sequence of the task; 3) searching for the item in the correct area; 4) maintaining the task objective to completion; 5) dividing attention between components of the task and components of other VMET tasks; 6) correct organisation of the materials throughout the task; 7) self-corrections; 8) absence of perseverations. Each item is scored 1 (yes) or 2 (no), so the total score ranges from 8 (no errors) to 16 (many errors) (Raspelli et al., 2009, 2012; Pedroli et al., 2019). The VMET has demonstrated good inter-rater reliability, with an intraclass correlation coefficient (ICC) of 0.88 (Cipresso et al., 2013b), and good usability (i.e., the test can be used with patients who are not familiar with computerized tests) (Pedroli et al., 2013). To evaluate the reliability of the VMET, the researchers conducted two experiments, both of which showed good reliability: in the first, two independent researchers scored 11 videos of 11 healthy subjects performing the VMET; in the second, seven researchers scored two videos of two healthy subjects performing the VMET. Moreover, to analyse the usability of the VMET, Pedroli and colleagues administered the System Usability Scale (SUS; Brooke, 1996) to a sample of 21 healthy participants and 3 patients with PD. Results showed good usability of the VMET for healthy subjects and indicated that an adequate training phase before the test is crucial for applying the virtual protocol to PD patients (Pedroli et al., 2013).
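The reviewed studies do not report how the SUS responses were processed, so the sketch below only illustrates the standard SUS scoring rule (Brooke, 1996), assuming the usual 10 items rated from 1 to 5. The function name `sus_score` is hypothetical.

```python
from typing import Sequence


def sus_score(ratings: Sequence[int]) -> float:
    """Compute a System Usability Scale score (0-100) from 10 item ratings.

    Standard rule: odd-numbered items contribute (rating - 1),
    even-numbered items contribute (5 - rating); the sum is scaled by 2.5.
    """
    if len(ratings) != 10:
        raise ValueError("SUS requires exactly 10 item ratings")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("SUS ratings must lie between 1 and 5")
    total = 0
    for item_number, rating in enumerate(ratings, start=1):
        if item_number % 2 == 1:
            total += rating - 1  # positively worded item
        else:
            total += 5 - rating  # negatively worded item
    return total * 2.5
```

Higher values indicate better perceived usability; neutral answers on every item yield the midpoint of the scale.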

Finally, the VMET appeared sensitive in assessing several components of EFs in neurological and psychiatric populations (Wiederhold et al., 2010; Raspelli et al., 2012; Cipresso et al., 2013a; Pedroli et al., 2019), offering an accurate evaluation of deficits that are hard to detect with traditional tests (Cipresso et al., 2014). As regards neurological conditions, studies have focused on the feasibility of the VMET as an assessment tool of EFs in PD and post-stroke patients, showing promising results in terms of convergent validity (good correlation between VMET scores and traditional paper-and-pencil tests, such as the ToL, FAB and TMT) and efficacy in distinguishing between healthy controls and pathological groups (Raspelli et al., 2009; Albani et al., 2010). Building on this research, Cipresso and co-workers further examined the validity of the VMET in PD patients with normal cognition (Cipresso et al., 2014), showing significant differences between PD patients and control subjects in VMET scores, particularly in cognitive flexibility, but not in traditional tests. This study offers preliminary evidence that a more ecologically valid evaluation of EFs is more likely to detect subtle executive deficits early in PD patients (Cipresso et al., 2014). Regarding psychiatric populations, La Paglia and colleagues conducted three studies evaluating the feasibility of the VMET as an assessment tool of EFs in patients with Obsessive-Compulsive Disorder (OCD) and schizophrenia. Results showed good convergent validity of the VMET and its ability to distinguish between healthy controls and both OCD and schizophrenia populations (in planning, mental flexibility and attention) (La Paglia et al., 2014). Recently, Pedroli and colleagues successfully proposed a computational approach based on classification learning algorithms to discriminate OCD patients from a control group (Pedroli et al., 2019).
This promising result opens a new scenario for future assessment protocols based on VR and computational techniques that could reduce time and effort for both patients and clinicians, allowing more personalized and efficient rehabilitative treatment.

Overall, VMET makes it possible to assess some subcomponents of executive functions in ecologically valid settings, giving an analysis of patients' deficits as accurate as that offered by traditional tests. Further studies will have to analyze the temporal stability of VMET (test-retest reliability) and its criterion validity.

Virtual Environment Grocery Store

The Virtual Environment Grocery Store (VEGS) is another task built on MET (Law et al., 2006). The VEGS is a 3D virtual grocery store environment developed to assess executive abilities (Parsons et al., 2008). VEGS was developed using the NeuroVR platform to offer an immersive VR version of the MET, in which participants interact with avatars and objects to perform various shopping tasks (Parsons and McMahan, 2017; Parsons et al., 2017). The different shopping commissions must be completed in a VE according to some rules, in low and high distraction conditions (Shallice and Burgess, 1991; Parsons et al., 2008).

The VEGS places the subject in an immersive modality in which the VE is displayed on a desktop monitor. The subjects interact with the VE using the keyboard arrows and a mouse. In the VEGS, participants navigate the store and perform various tasks, such as navigating to the pharmacy and dropping off a prescription with a virtual pharmacist. Here the participant receives a number and must listen for that number, ignoring other numbers and announcements while shopping. The participants must also buy the products on the shopping list. When they hear their number over the public-address system, they must return to the pharmacist to pick up their prescription (event-based prospective memory). Additional tasks include: 1) navigating the virtual grocery store following specific routes through the aisles; 2) finding and selecting the ingredients necessary for the preparation of simple meals (e.g., making peanut butter); 3) ignoring products that are not on the shopping list; 4) selecting products so as not to spend more than the expected amount; 5) performing a prospective memory task when a specific individual is met (Parsons et al., 2008). The difficulty of the tasks also increases through the addition of distractions: 1) a growing number of items to store; 2) added background music; 3) increasingly loud ambient noise (e.g., announcements of commercial promotions, human laughter, coughing, falling goods, crying children and ringtones of cell phones); 4) added virtual human avatars that walk in the environment or are lined up at the check-out desk and in the pharmacy. Other avatars speak in small groups or on virtual phones (Parsons et al., 2017). After the VEGS, the participant performs delayed free and cued recall of the VEGS shopping items.

A preliminary study conducted on healthy university students showed no correlation between the VEGS and the D-KEFS Color-Word Interference test, a traditional neuropsychological test of executive functioning. However, a second study demonstrated that the addition of environmental distractors to the VEGS may be useful in situations where the neuropsychologist is interested in examining both memory and inhibitory control in a distracting environment.

Within the line of research on virtual environments that simulate a supermarket, some researchers have focused on adapting the Four-Item Shopping Task, an assessment of the IADL of shopping.

Adapted Four-Item Shopping Task

Some studies have validated two versions of the Adapted Four-Item Shopping Task, in which participants have to perform the shopping task in a virtual shopping environment (Kizony et al., 2017; Nir-Hadad et al., 2017). The first one was based on the original task (Rand et al., 2007), where the participant must acquire four different products that appear on a shopping list and are located in two different aisles on both the top and middle shelves. In the other version, the subject must buy four additional products that appeared on a shopping list from at least two different stores (Kizony et al., 2017). While shopping, the subjects need to consider the product brand (some brands are more expensive than others) and acquire all four items without exceeding the specified budget.

The Adapted Four-Item Shopping Task of Nir-Hadad and colleagues was performed in Virtual Interactive Shopper (VIS), a SeeMe supported virtual mall shopping environment (Hadad et al., 2012). SeeMe is a camera tracking VR system installed on any portable computer and displayed on any standard TV monitor. Currently, the virtual mall shopping environment includes three different stores: a supermarket, a toy store, and a hardware store. The types (i.e., products from a specific country), quantities, and position of the products in each store can be easily regulated. The participant navigates within and between the shopping aisles by “touching” directional arrows and selects the desired items by “hovering” over photos of the products. When a product is touched, its name is voiced. After the selection, the product's image is placed in a virtual shopping cart. The shopping list and the contents of the cart (i.e. the products already acquired) can be viewed at any time by “touching” the menu icon. Products bought by mistake can be removed from the cart. After completing the task, a detailed report of the shopping activity is generated, including information about products selected (what and when), if the products purchased by mistake were returned, the total cost of the acquired items and distance traversed shopping. In particular, the last variable, “distance traversed,” refers to the distance moved by participants while they were making their purchases in the virtual supermarket.

The Adapted Four-Item Shopping Task of Kizony and colleagues was performed in another shopping mall: the EnvironSim Virtual Shopping Mall (Kizony et al., 2003, 2017). The Center One mall, a real shopping mall in Jerusalem, was simulated using EnvironSim software, which allows any shopping mall design to be personalized, including the number and type of stores and the products that can be purchased in each store. The environment is presented from a first-person point of view, so the subjects can observe it as if they were in the real world. To navigate right, left, forward and backwards, the participants use the keyboard keys; the mouse is used to interact with the simulation program's menu items, shopping list and shopping cart. Distance travelled, trajectory, visited stores, products acquired, and budget management are recorded and used to calculate variables, and the route taken by the shopper can also be replayed. In each shop, the images, names and prices of the products are displayed on the screen. The shopping list, with the category names of the products to buy and the amount of money given to the subject, is located on the left. On the opposite side is the shopping cart with the purchased items and their prices. The participant can return a product by selecting the trash image above the object.

In both versions of the Adapted Four-Item Shopping Task, the outcome measures included: 1) the time to acquire/select the first item, 2) the total time to buy the four items, 3) the number of errors (missing items, extra items, items purchased by mistake), 4) the discrepancy between the amount of money that participants could spend and the amount they actually spent, 5) the distance travelled while shopping, and 6) the cognitive strategies used during shopping.
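To make the quantitative outcome measures concrete, the following Python sketch derives the first five of them from a session log. The log format (`Purchase` records plus a list of (x, y) positions), the function name `shopping_outcomes`, and the choice to take the first and last purchase times as the two timing measures are all assumptions made for illustration; neither VIS nor EnvironSim is documented here as exposing data in this form. The sixth measure, cognitive strategies, is observational and is not computed.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Purchase:
    time_s: float  # seconds since the task started (assumed log field)
    item: str
    price: float


def shopping_outcomes(purchases, shopping_list, budget, path):
    """Summarise one session; `path` is a list of (x, y) positions."""
    wanted = set(shopping_list)
    bought = [p.item for p in purchases]
    times = [p.time_s for p in purchases]
    # Distance travelled: sum of straight-line steps between logged positions.
    distance = sum(
        hypot(x2 - x1, y2 - y1) for (x1, y1), (x2, y2) in zip(path, path[1:])
    )
    return {
        "time_to_first_item_s": min(times, default=None),
        "total_time_s": max(times, default=None),
        "missing_items": sorted(wanted - set(bought)),
        "extra_items": sorted(item for item in bought if item not in wanted),
        "budget_discrepancy": budget - sum(p.price for p in purchases),
        "distance": distance,
    }
```

A positive `budget_discrepancy` in this sketch means the participant spent less than the allotted amount, and a negative value means the budget was exceeded.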

The validation studies of the Adapted Four-Item Shopping Task, involving healthy control subjects and post-stroke patients, have provided good convergent validity and efficacy results. Specifically, Nir-Hadad and colleagues have shown this VR-based tool's ability to differentiate healthy and pathological groups in performing executive tasks (with the clinical groups obtaining lower performance). Moreover, both research teams showed correlations between performance in the Four-Item Shopping Task in the VE and clinical assessments of EFs (i.e., TMT, BADS and Executive Function Performance Test) for healthy and pathological samples, indicative of good convergent validity. In addition, Kizony and colleagues showed that their version of the test could evaluate age-related decline in EFs, in terms of inhibition and processing speed, in healthy older adults compared to young adults (Kizony et al., 2017). Interestingly, both healthy groups gave positive reports regarding their VE experience, but the older adults reported a lower level of usability.

Virtual Action Planning - Supermarket

Another user-friendly VR-based tool designed to evaluate and train the ability to plan and perform a shopping task (Klinger et al., 2004) is the Virtual Action Planning - Supermarket (VAP-S) (Klinger et al., 2006). The original VAP-S was adapted by Klinger for use by an Israeli population; the names of the aisles and grocery items and all the task elements were translated to Hebrew (Josman et al., 2006). The VAP-S simulates a fully textured, medium-size supermarket with multiple aisles displaying most of the products that can be found in a real supermarket (e.g., drinks, canned food, fruit, salted and sweet food, cleaning equipment, clothes and flowers). The virtual supermarket includes four cashier check-out counters, a reception point and a shopping cart. It also contains refrigerators for milk and dairy products, freezers, and specific stalls for fruits, vegetables, meat, fish, and bread. Moreover, some obstacles (e.g., packs of bottles) were placed to hinder the shopper's progress along the aisles. In addition, static virtual humans, such as a fishmonger, a butcher, check-out cashiers and some customers, populate the supermarket (Josman et al., 2006, 2009). Before starting the task, participants perform a training task similar to the test to familiarize themselves with the VE and the tools. During the training, some instructions are provided on the screen, and the examiner explains other general information about the task and the use of the VAP-S. The individual must sit (or stand) in front of a laptop monitor and interact with the VE using a mouse and computer keyboard (Aubin et al., 2018). The participants enter the supermarket behind the cart, as if they were pushing it, and navigate freely by pressing the keyboard arrows. They experience the VE from a first-person perspective without any intermediating avatar. The participants must acquire seven products from a list of products, then proceed to the cashier's desk and pay for them.
Twelve correct actions (e.g., selecting the exact product) are required to complete the task correctly. The list of products is displayed on the right-hand side of the screen. The participant can select items by pressing the left mouse button. If the item chosen belongs to the list, it is automatically transferred to the cart. Otherwise, the product does not move, and a mistake is recorded. At the cashier check-out counter, the participant must place the items on the conveyor belt by pressing the left mouse button with the cursor pointing to the belt. They may also return an item placed on the conveyor belt to the cart. The participant can pay and proceed to the supermarket exit by clicking on the purse icon. The task is completed when the participant leaves the supermarket with the cart (Aubin et al., 2018). The VAP-S records various outcome measures (positions, times, actions) while the participant explores the VE and executes the task. Eight variables are calculated from the recorded data: 1) total distance traversed in meters, 2) whole task time in seconds, 3) number of items acquired, 4) number of correct actions (e.g., selecting the exact product), 5) number of incorrect actions, 6) number and combined duration of pauses, 7) time to pay (i.e., the time between when the cost is displayed on the screen and when the participant clicks on the purse icon). Participants can make several kinds of errors: 1) choosing the wrong items or the same item twice; 2) selecting a check-out counter without any cashier; 3) leaving the supermarket without purchasing anything or without paying; or 4) staying in the supermarket after the purchase (Josman et al., 2008, 2009; Cogné et al., 2018).
The eight outcomes can be conceptualized in terms of executive functioning into two categories: 1) “task completion” measured by the number of purchased products and correct actions; 2) “efficiency” that is competency in performance or ability to complete work with minimum expenditure of time and effort, measured by time, distance, and incorrect actions (Josman et al., 2009). To summarize, the main EF components are measured by looking at the participants' planning abilities within the VAP-S and their organization in time and space (Werner et al., 2009).
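As an illustration of how the "efficiency" measures could be derived from recorded positions and times, the sketch below computes total distance, task time, and the number and combined duration of pauses from a timestamped trajectory. The sample format `(t_seconds, x_m, y_m)`, the function name `trajectory_measures`, and in particular the definition of a pause (a run of consecutive samples at the same position lasting at least `min_pause_s`) are assumptions for this example, not the VAP-S implementation.

```python
from math import hypot


def trajectory_measures(samples, min_pause_s=1.0):
    """samples: list of (t_seconds, x_m, y_m) tuples ordered by time."""
    distance = 0.0
    pauses = []       # durations of stationary runs, in seconds
    run_start = None  # time at which the current stationary run began
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        step = hypot(x1 - x0, y1 - y0)
        distance += step
        if step == 0.0:
            if run_start is None:
                run_start = t0
        elif run_start is not None:
            # Movement resumed: the stationary run ended at t0.
            pauses.append(t0 - run_start)
            run_start = None
    if run_start is not None:  # the session ended while stationary
        pauses.append(samples[-1][0] - run_start)
    # Assumed threshold: very short stops are not counted as pauses.
    pauses = [p for p in pauses if p >= min_pause_s]
    return {
        "distance_m": distance,
        "task_time_s": samples[-1][0] - samples[0][0] if samples else 0.0,
        "n_pauses": len(pauses),
        "pause_time_s": sum(pauses),
    }
```

The pause threshold is the main design choice here: a lower `min_pause_s` counts brief hesitations as pauses, whereas a higher value keeps only prolonged stops.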

As a VR platform, the VAP-S appeared to be a valid and reliable method to assess executive impairments in neurological (i.e., post-stroke and mild cognitive impairment) and psychiatric (i.e., schizophrenia) populations, as shown by several studies (Klinger et al., 2006; Josman et al., 2008; Werner et al., 2009). Josman and colleagues demonstrated that the VAP-S correctly categorized more than 70% of the participants according to their group and initial diagnosis (post-stroke, mild cognitive impairment and schizophrenia). Moreover, several studies have shown the feasibility of the VAP-S as a VR-based tool able to discriminate between controls and these pathological groups, with patients showing lower performance in different EFs, such as planning, problem-solving and rule compliance (Werner et al., 2009; Josman et al., 2014). Interestingly, all studies supported a promising convergent validity of the tool, based on the correlation between the BADS profile score and VAP-S outcome measures (such as trajectory duration, covered distance and time of stops).

Virtual Supermarket Shopping Task

Plechata et al. developed the Virtual Supermarket Shopping Task (VSST), a novel solution for assessing and rehabilitating memory and EFs. The VSST consists of a simulated shopping activity set in a small supermarket (29 × 50 m) in which products (e.g., fruits, vegetables) are placed as in a real store. The entire task was developed using Unity3D software (Plechata et al., 2017). In the VE, the items are visually recognisable, and their names are displayed to avoid any confusion (e.g., shampoo vs. deodorant). The VSST was administered on a 17” laptop, and participants performed the task using a mouse and keyboard. After the exploration phase (maximum of 240 s), in which participants could also familiarize themselves with the system, they had to perform two consecutive phases: acquisition and recall. During the Acquisition phase, the encoding material (grocery or ordinary supermarket items) was presented to the subject in a shopping list for a specific time (5 s for each item). Then, participants waited through a delay interval (3 min) without the shopping list and without the possibility of moving in the VE. After the 3 min, the subjects performed the Testing phase, in which they had to find and pick up the memorised objects in the virtual supermarket. Participants were instructed to solve the task as fast (short trial time) and as effectively (low trial distance) as possible. Interestingly, the examiners could tailor the session to suit the participant's needs by increasing the difficulty level (with 3, 5, 7, 9, or 11 items as encoding material). Outcome measures were the number of correctly collected items, trial time and trial distance. Errors were of two types: Intrusion (picking up a wrong object) and Omission (missing some of the objects from the list). Finally, the authors created two shopping list variants (A and B) for each difficulty level to allow repeated assessment in clinical practice (Plechata et al., 2017).
Recently, the authors conducted a validation study showing the construct validity of the VSST as a memory task, while further studies are necessary to examine its validity as an executive function task (trial times and travelled distances showed moderate correlations with the TMT) (Plechata et al., 2021).
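The two error types of the VSST map naturally onto set differences, as the following minimal Python sketch shows. The function name `vsst_errors` and the representation of the shopping list and collected objects as lists of item names are assumptions for illustration; the original Unity3D implementation is not described at this level of detail.

```python
def vsst_errors(shopping_list, collected):
    """Classify one trial: correct items, intrusions and omissions."""
    wanted, picked = set(shopping_list), set(collected)
    return {
        "correct": sorted(wanted & picked),
        "intrusions": sorted(picked - wanted),  # picked up but not on the list
        "omissions": sorted(wanted - picked),   # on the list but not picked up
    }
```

For a list of apple, bread and shampoo, a participant who collects an apple and a deodorant would have one correct item, one intrusion (deodorant) and two omissions (bread and shampoo).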

VMall

The virtual environment VMall was developed by Rand et al. in 2005 to provide a suitable setting for the rehabilitation of stroke patients, in which they had to perform a shopping task (Rand et al., 2005). The authors evaluated the usability of VMall with post-stroke individuals, showing that it has great potential for rehabilitation because it provides an interesting, challenging and motivating task without side effects. Moreover, participants stated that they would be willing to use it again. Interestingly, several patients bought items not on the list because they needed them at home or because the items were on sale; thus, the task appeared relevant and realistic to participants, who felt a high level of presence (Rand et al., 2005). The advantage of VR therapy set in VMall over conventional occupational therapy in improving complex everyday activities was also demonstrated by Jacoby and colleagues in TBI patients (Jacoby et al., 2013). All participants received ten 45-min treatment sessions, three to four times per week. All therapy interventions followed the cognitive retraining approach, which treats deficits in executive functioning through 1) planning tasks; 2) task performance (performing a task according to the plan); 3) time management; 4) monitoring performance; 5) meta-cognitive strategies. In the experimental group, all tasks were performed in the virtual supermarket, and task complexity was adapted to the needs, abilities and progress of each participant. The results showed that 10 of 12 participants improved their performance after therapy. The findings suggested that the improvement in executive functioning was greater in the experimental group than in the control group.
Moreover, the study showed that the participants were able to transfer rehabilitation results from the VR treatment to functioning in the real world, both in similar activities (shopping in the supermarket) and in the performance of additional IADL activities (e.g., cooking). This is possible because the VR shopping simulation tasks were more similar to daily activities than those used during conventional therapy. Finally, the authors suggested that the differences between groups may be related to the patients' enjoyment during the intervention, which influenced levels of motivation and compliance during the rehabilitation process (Jacoby et al., 2013).

NeuroVR Supermarket

Carelli et al. proposed a VR-based tool to treat attention shifting and action planning through tasks that mirrored daily life tasks set in a virtual supermarket developed using NeuroVR software (Carelli et al., 2008). Healthy control subjects underwent a 75-min assessment and training session in which they had to explore the VE, collect some items of a shopping list and listen to any audio announcements that would change the sequence or number of items collected. This treatment involved a hierarchical series of tasks: from a single task condition (level 1) to multiple successive tasks. Outcome measures involved execution times, errors, planning route (trajectories and efficacy) and level of complexity to identify the maximum one that healthy people were able to carry out according to their age range. Results showed the feasibility of the virtual supermarket and attention-shifting paradigm for use with older control subjects. The initial results indicated that the temporal and accuracy outcome measures allowed monitoring differences in these subjects' abilities. Specifically, the execution times appeared to be related to the ability to interact with a computer device like the joypad (as expected). Moreover, the trajectories and efficacy of planning the route can be considered relevant outcome measures. The hierarchical series of tasks allowed clinicians to determine the extent to which adding a contextual and functional executive task interferes with the performance of a simple virtual shopping task by individuals with cognitive impairment. However, the results demonstrate a need to give more practice to ensure that the participants learnt the initial simple task. These promising results paved the way for a subsequent randomised clinical trial and rehabilitative program that addresses additional components of executive functioning (Carelli et al., 2008).

Virtual Office Environment

Jansari et al. implemented a new instrument, Jansari Agnew Akesson Murphy task - JAAM (Jansari et al., 2004), that uses office environments as scenarios (Jansari et al., 2014). The authors decided to use this context because most of their patients attempted work placement in the office environment. In subsequent studies, the authors named this VR assessment tool with a different acronym “JEF”: Jansari Assessment of Executive Functions; however, the instrument has remained unchanged between the various studies (Jansari et al., 2013).

Jansari Assessment of Executive Functions

JAAM reproduces the MET, set in an office environment, to assess eight aspects of executive functioning: planning, prioritisation, selective-thinking, creative-thinking, adaptive-thinking, action-based prospective memory (PM), event-based PM and time-based PM (Jansari et al., 2004). The authors introduced another executive aspect in the JEF version: multitasking (Jansari et al., 2014). The choice of an office environment made it possible to create a complex task in which participants must complete several tasks in parallel; thus, subjects have to plan and organize their actions to achieve the goals. In this way, the overall assessment is less linear and, therefore, less likely to mask or mediate the difficulties that participants may experience in the workplace. The environment consists of a small office-like room linked by a corridor to a larger room appropriate for holding a meeting of 20 people; these reproduce an office and corridor at the University of East London. In these rooms, the authors inserted the items needed for the tasks, additional relevant but unused items (e.g., staplers and extra desks) (Jansari et al., 2014) and the different sounds necessary to replicate the fire alarm and memo announcements (Soar et al., 2016). In the task, participants must play the role of an office assistant with the primary goal of organizing a meeting later that day and preparing an appropriate room for that meeting. The subjects receive a list of tasks that need to be completed for the office manager, called the “Manager's Tasks for Completion,” such as setting up tables and chairs or turning on the coffee machine when the first person arrives for the meeting. They are also informed that, during the assessment, they will receive many memos (virtual and hard copy) that require them to perform additional tasks or amend a current task. The responsibility of planning for overall task completion is given to the participant, with no clues as to possible solutions or courses of action.
The task is presented in a desktop VR environment on a laptop, and the participants can navigate around the environment using the arrow keys on a standard computer keyboard and collect objects by clicking on them with the computer mouse.

To ensure that performance on the new assessment was not affected by a lack of experience with using computers and moving around a VE, participants performed a familiarization phase in a similar VE. After training, each participant is taken to the small office and informed of the role that they must play to complete the task (Jansari et al., 2014). As noted previously, the JEF evaluates nine aspects of executive functioning using realistic subtasks (two for each construct) that could be found in an average office environment. The authors designed all tasks with ambiguous and multiple solutions, as in real-life situations (Jansari et al., 2014; Denmark et al., 2019). For clarity, a definition of each construct is given here, along with some task examples. In the planning scale, subjects must order events/objects logically rather than by their perceived importance. For the prioritization scale, subjects must order events according to their perceived importance. In the selective-thinking scale, subjects must choose between several alternatives by drawing on acquired knowledge. For example: decide which mail company should send each post item based on each company's specialty. In the creative-thinking scale, the user must look for solutions to problems using unobvious and unspecified methods. For example: find a way to cover graffiti written on a whiteboard in indelible ink. For the adaptive-thinking scale, participants must still achieve their goals in the face of changing conditions. For example, the overhead projector needed in the meeting is broken and needs to be replaced. In the multitasking scale, subjects must perform several tasks simultaneously. Finally, to evaluate the three constructs of prospective memory, the subjects must remember to execute a task in three different conditions: at a specific future time point (TPM), when prompted by an external stimulus/event (EPM) or when prompted by a stimulus related to an action the individual is already engaged in (APM).
For example: turn on the overhead projector 10 min before the scheduled start of the meeting (TPM), note down the times of fire alarms tested before the meeting starts (EPM), make a note of any equipment that breaks or malfunctions during the day (APM).

In total, JAAM/JEF participants have ~40 min to complete the list of tasks in time for the beginning of the meeting. The start and meeting times are written down, and participants have a digital clock to monitor the time (Denmark et al., 2019). The only aspects of the test that require physical interaction outside the VE involve filling out specific lists (e.g., the initial to-do list). The examiner observes the entire assessment and completes a standardized evaluation sheet while the participants perform the activity (Denmark et al., 2019). All subtasks of each construct are scored on a 3-point scale (0, 1, 2), reflecting the participant's efficiency in completing a task. The scores for the subtasks of each construct are then summed, and a total percentage score is calculated for each construct. Moreover, a full performance percentage score is calculated for the JAAM by adding the raw scores for each construct, dividing by the maximum possible score and multiplying by 100 (Montgomery et al., 2010, 2011). The JAAM appeared to be a promising solution for evaluating executive impairments in ecstasy-polydrug users (Montgomery et al., 2010) and under acute alcohol intoxication (Montgomery et al., 2011). Subsequently, JEF appeared to be a good and valid ecological tool for evaluating executive dysfunctions in acquired brain injury (ABI) and other conditions (e.g., mood disorders) (Jansari et al., 2013, 2014; Denmark et al., 2019). For example, JEF was able to detect deficits in EFs (e.g., planning and adaptive thinking) in patients with ABI, despite normal BADS performance. Moreover, the traditional neuropsychological tests (e.g., Digit span, TMT) showed no differences between groups, except for TMT-A. Overall, these promising results confirmed the potential clinical utility of the JEF in patients with frontal lesions, emphasizing the need for ecological assessment in detecting EF impairments.
Recently, Hørlyck and colleagues successfully investigated JEF's validity as an innovative VR-based test for evaluating daily life executive function impairments in patients with mood disorders (Hørlyck et al., 2021). Patients showed impairments in executive functioning compared to the control group in performing JEF. Moreover, JEF scores predicted performance on neuropsychological tests (e.g., TMT, Fluency tests, letter-number sequencing, digit span), indicating that it could be used as an index of EFs.
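The JAAM/JEF scoring arithmetic described above (subtasks scored 0, 1 or 2; a percentage per construct; an overall percentage obtained by dividing the summed raw scores by the maximum possible score and multiplying by 100) can be sketched in Python as follows. The function name `jef_percentages` and the dictionary layout are illustrative assumptions, not part of the published scoring materials.

```python
def jef_percentages(scores: dict[str, list[int]]) -> tuple[dict[str, float], float]:
    """Return (percentage per construct, overall percentage).

    `scores` maps each construct name to its subtask scores (0, 1 or 2).
    """
    if not scores:
        raise ValueError("no constructs supplied")
    for construct, subtasks in scores.items():
        if not subtasks or any(s not in (0, 1, 2) for s in subtasks):
            raise ValueError(f"invalid subtask scores for {construct!r}")
    # Each subtask contributes at most 2 points.
    per_construct = {
        construct: 100 * sum(subtasks) / (2 * len(subtasks))
        for construct, subtasks in scores.items()
    }
    raw_total = sum(sum(subtasks) for subtasks in scores.values())
    max_total = 2 * sum(len(subtasks) for subtasks in scores.values())
    return per_construct, 100 * raw_total / max_total
```

With two subtasks per construct, as in the JEF, each construct has a maximum raw score of 4, so a participant scoring 2 and 1 on the two planning subtasks obtains 75% for planning.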

Given the promising results, Jansari and colleagues developed a parallel version of JEF for children, the Jansari assessment of Executive Functions for Children (JEF-C) (Jansari et al., 2012; Gilboa et al., 2019).

Jansari Assessment of Executive Functions for Children

JEF-C involves a birthday party and is designed to assess children between 8 and 18 years of age. In this task, the examiner tells the participants that it is their birthday and they must organize their party. The party takes place in a virtual home with three rooms: kitchen, living room and DVD/games room. In this VE, a front door, which participants can open and a back garden with a gate leading to the neighbor's yard are introduced. The participant can move freely around the three rooms, hallway and garden using the computer mouse, and they must perform all required tasks within these areas. Like the adult JEF, there are eight constructs in JEF-C, each of which has an operational definition. For each of these constructs, the authors created realistic tasks that could happen at a child's birthday party to evaluate them as ecologically as possible. For example: in PL, the subjects must rearrange the list of tasks that must be carried out in 3 phases of the party (preparation, development, end); in PR, they must arrange five cleaning tasks for the end of the party. In ST, they must choose which food gives to guests based on their preferences or allergies; in CT, they must find a way to cover the spider drawn with permanent ink on a blackboard (because a guest is afraid of spiders), and in AT, they must find an alternative seating when one chair breaks. The authors designed the tasks with the same characteristics as the adult version: they have ambiguous and multiple solutions. Although most of the tasks are completed in the VE using a standard laptop, for simplicity, some tasks (i.e., selection and planning tasks) are executed in the 'real world' on hard copy. Before starting the evaluation, subjects must move around the house and collect 13 objects for practicing with the environment. Then, they received by examiner an instruction sheet and a biographical sheet of the guests (e.g., food preferences and allergies). 
Moreover, the participant receives a letter from the parents indicating what they must do: at the end of the reading, the examiner asks the subject to create his “Activity List” card in paper format. The evaluation's real start begins with the beginning of the VR program, as soon as the participant finishes reading the parents' letter. The assessment takes between 30 and 35 min to complete. However, the participant decides when their birthday party finishes; thus, some participants can take longer. Like the adult version, all tasks are assigned on a 3-point scale for success (0-2). To assess inter-rater reliability, two raters simultaneously and independently scored the performance of nine healthy children while performing JEF-C. Data showed very high inter-rater reliability with correlation coefficients between r = 0.96 (p < 0.001) and 1.0 (p < 0.001) for the eight constructs separately and for the overall average JEF-C score (r = 0.999, p < 0.001) (Jansari et al., 2014). In 2019, Gilboa and colleagues tested the feasibility and validity of JEF-C, as innovative ecologically valid assessment tool for children and adolescents (aged 10–18 years) with ABI (Gilboa et al., 2019). JEF-C showed the presence of severe executive dysfunction in most patients with ABI. Specifically, patients performed significantly worse on most of the JEF-C subscales and total scores, with 41.4% patients classified as having severe executive dysfunction (Gilboa et al., 2019). Recently, the same authors developed an adapted Hebrew version, JEF-C (H) and assessed reliability and validity in the Israeli context, involving typically developing Israeli children and adolescents (aged 11–18 years) (Orkin Simon et al., 2020). Overall, results showed the potential clinical utility of JEF-C (H) as a VR-based tool for an ecologically valid evaluation of executive functioning in Israeli children and adolescents. 
Specifically, the data indicated that JEF-C (H) showed interesting psychometric properties (e.g., acceptable internal consistency) for measuring the EF performance of a young Israeli sample (Orkin Simon et al., 2020).
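
The inter-rater reliability check described above can be illustrated with a minimal sketch. It assumes that the reported coefficients are Pearson correlations and that a construct score is the sum of its 0-2 task ratings; neither detail is specified here, and the ratings below are invented for illustration, not data from Jansari et al. (2014). The function names are hypothetical.

```python
from math import sqrt


def construct_score(task_ratings):
    """Sum the 0-2 ratings of the tasks in one construct.

    The aggregation rule (a plain sum) is an assumption of this sketch.
    """
    if any(r not in (0, 1, 2) for r in task_ratings):
        raise ValueError("each task must be rated 0, 1 or 2")
    return sum(task_ratings)


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


# Invented ratings: nine children, two tasks in one construct, two raters.
ratings_a = [(2, 2), (1, 2), (0, 1), (2, 1), (1, 1), (0, 0), (2, 2), (1, 0), (2, 1)]
ratings_b = [(2, 2), (1, 2), (0, 1), (2, 1), (1, 1), (0, 0), (2, 2), (1, 1), (2, 1)]
rater_a = [construct_score(r) for r in ratings_a]
rater_b = [construct_score(r) for r in ratings_b]

print(f"inter-rater r = {pearson_r(rater_a, rater_b):.3f}")
```

In this toy data set the two raters disagree on a single task for one child, so the coefficient stays close to 1, which is the pattern the reported values (r between 0.96 and 1.0) describe.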

Assessim Office

Another VR office task, known as Assessim Office (AO), was implemented by Krch et al. to evaluate performance on realistic tasks of selective and divided attention, complex problem solving, working memory and prospective memory (Krch et al., 2013). Participants are seated at least 50 cm away from the computer screen and are immersed in the virtual office environment (rendered in the Unity game engine), in which they must navigate and complete virtual tasks using both mouse buttons. The combination of many tasks of different priorities (e.g., rule-based decision task, reaction time task) is designed to simulate scenarios similar to the real world. In the virtual office, the participants are seated at a virtual desk equipped with several office objects, such as a computer monitor, a keyboard and a file folder. Moreover, the VE includes other desks, two printers, many everyday office objects (e.g., ring binders, lamps, computers, drawer units), a conference room with a projector screen and two big windows. In this task, the subjects must complete several working tasks during a typical workday that lasts ~15 min. Before beginning the task, the examiners show participants the location of crucial objects and what tasks they will perform during their workday. Then, the subject can familiarise themselves with the environment and, as necessary, receive the task instructions again. Participants must carry out five working tasks: 1) respond to emails, 2) decide whether to accept or reject real estate offers based on specific criteria, 3) print the real estate offers that meet specific criteria, 4) retrieve printed offers from the printer and deliver them to a file box located on the participant's desk and 5) ensure that the conference room projector light remains on at all times. 
Each task reflects specific EF skills and processes: respectively, selective attention, complex problem solving with a working memory component (2nd and 3rd tasks), prospective memory and divided attention. In addition to evaluating the task behaviors, off-task behaviors are assessed for the presence of inattentiveness and perseverative behaviors. To facilitate the administration of AO tasks, the authors created an instruction manual that includes many questions frequently asked by participants, with standardized responses and hints for common confusions (e.g., if the participant is lost in the virtual office, the initial cue is “Are you looking for something?”) (Krch et al., 2013). The validation study showed that AO could be a promising solution for evaluating executive impairments in patients with MS and TBI compared to healthy controls (Krch et al., 2013). The findings suggested a significant difference between patients with MS and the control group on all executive tasks of AO, except for the printing decision task (working memory). Moreover, AO successfully distinguished TBI subjects from controls on specific aspects of EFs: selective and divided attention, problem-solving, and prospective memory. Finally, results showed good convergent validity, given the relationship between performance on AO tasks and standardized neuropsychological tests (WAIS-III subtests: Letter Number Sequencing and Digit Span; Delis-Kaplan Executive Function System, D-KEFS). Interestingly, a qualitative feasibility assessment revealed that patients could tolerate involvement in a VE, with only minimal difficulty moving around the VE with the mouse.

Virtual Kitchen Environments

Other researchers have investigated the potential of virtual kitchens to assess and train patients with dysexecutive syndrome (Cao et al., 2009; Klinger et al., 2009; Júlio et al., 2016; Chicchi Giglioli et al., 2019). The use of virtual kitchens rests on two premises: 1) cooking is a good example of a real-world task that relies heavily on executive functioning (Tanguay et al., 2014); 2) many assessment and rehabilitation studies of clinical populations have successfully used kitchen settings to address functional and executive impairments (Baum and Edwards, 1993; Zhang et al., 2003; Craik and Bialystok, 2006; Giovannetti et al., 2008; Allain et al., 2014; Ruse et al., 2014).

VR-Cooking Task

The VR-cooking task is a protocol that uses a virtual kitchen, developed using Unity software, as a VE for the ecological assessment of EFs (Chicchi Giglioli et al., 2019). Subjects performed the virtual cooking activity wearing a head-mounted device (HTC VIVE) and using two hand-held controllers to move their hands inside the environment.

Before starting the cooking activity, participants had to familiarize themselves with the technology by performing an action similar to the virtual cooking activity, to learn the main body movements and hand interactions useful to complete the training. The task begins when they press the “start” button. The virtual cooking task consists of four levels of difficulty that involve three different abilities: attention, planning, and shifting. Each level's main aim is to cook a series of foods within a predetermined time without 1) burning (i.e., food is not removed from the pan, or the subject switches off the burner after the predefined cooking time) or 2) cooling (i.e., food remains in the pan after it has been cooked and the burner has been switched off, or food is removed from the pan during cooking). Before each level, the users see instructions about what activities they must perform, how much time each food requires, and a reminder to cook foods without burning or cooling them. When the food is cooked, the participant must remove it from the pan, turn off the cooker, and place it on the plate. Participants can move to the following level as soon as they have cooked all the foods of the previous level. Each successive level is more demanding. In the first level, subjects have to cook three foods on one cooker in 2 min; in the second level, they have to cook five foods on two cookers in 3 min; in the third level, they should perform a dual-task: (a) five foods should be cooked on two cookers in 4 min; (b) during the cooking, users should add the right ingredients to the foods. In the last level, another dual-task is proposed: (a) participants should cook five foods on two cookers in 5 min and (b) they should set the table. The virtual system records the time taken to complete the activity at every level, along with total times, burning times and cooling times.
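
The level structure and the burning and cooling rules described above can be summarized in a short sketch. It is only an illustration under stated assumptions: the names `Level`, `LEVELS` and `classify_food` are hypothetical, the `tolerance_s` parameter is not part of the protocol, and times are assumed to be measured in seconds from the moment a food is placed in the pan. It does not reproduce the scoring logic of the original software.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Level:
    foods: int                            # number of foods to cook
    cookers: int                          # number of cookers available
    minutes: int                          # time limit for the level
    secondary_task: Optional[str] = None  # dual-task component, if any


# Level parameters as described in the text.
LEVELS = {
    1: Level(foods=3, cookers=1, minutes=2),
    2: Level(foods=5, cookers=2, minutes=3),
    3: Level(foods=5, cookers=2, minutes=4, secondary_task="add the right ingredients"),
    4: Level(foods=5, cookers=2, minutes=5, secondary_task="set the table"),
}


def classify_food(required_s, burner_off_s, removed_s, tolerance_s=0.0):
    """Return the set of errors ("burning", "cooling") for a single food.

    required_s   -- predefined cooking time
    burner_off_s -- when the burner was switched off
    removed_s    -- when the food was removed from the pan
    tolerance_s  -- hypothetical grace period (assumption, not in the source)
    """
    errors = set()
    # The food is heated until it leaves the pan or the burner goes off.
    heating_end = min(burner_off_s, removed_s)
    if heating_end > required_s + tolerance_s:
        errors.add("burning")
    # Cooling: removed before it was cooked, or left in the pan with the burner off.
    if removed_s < required_s - tolerance_s or removed_s > burner_off_s + tolerance_s:
        errors.add("cooling")
    return errors


print(classify_food(required_s=60, burner_off_s=90, removed_s=85))
print(classify_food(required_s=60, burner_off_s=60, removed_s=80))
```

Representing each food as three timestamps keeps the two error types independent, so a single food can in principle be flagged for both.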

Chicchi Giglioli and co-workers conducted a preliminary study that evaluated healthy subjects' performance on this ecological task, showing high usability, feasibility, and sense of presence (Chicchi Giglioli et al., 2019). Recently, the same authors have presented a preliminary study to test this task as an alternative to the traditional, standardised neuropsychological tests (e.g., Dot-probe task, Go/No-go test, Stroop test, TMT and ToL) for assessing EF impairments in patients affected by Alcohol Use Disorder (AUD) (Chicchi Giglioli et al., 2021). Patients with AUD showed lower functioning, with more errors and higher latency times than healthy controls. Moreover, a moderate-to-high relationship appeared between standardized neuropsychological tests and the VR-cooking task. Finally, stronger relationships were found in the AUD group than in control subjects for the questionnaires evaluating attention control, impulsiveness, and cognitive flexibility, mainly related to planning and cognitive shifting abilities (Chicchi Giglioli et al., 2021). Overall, this study provides initial evidence that a more ecologically valid assessment can be a useful tool to detect cognitive impairments in patients affected by AUD.

Therapeutic Virtual Kitchen

Klinger et al. designed the Therapeutic Virtual Kitchen (TVK) as an assessment and rehabilitation instrument for patients with brain injury (Klinger et al., 2009). TVK allows the therapist to adapt virtual kitchen tasks (ecological tasks) to patient abilities, modulating the difficulty (Cao et al., 2010). The authors designed the TVK in collaboration with Kerpape Rehabilitation Center; for this reason, the TVK is graphically very similar to the Kerpape Center kitchen and is based on the habits and needs of Kerpape therapists. Moreover, the authors conducted a preliminary study (2009) involving graduate students or laboratory staff members and therapists who worked with brain injury patients (Cao et al., 2009, 2010; Klinger et al., 2009). This study made it possible to assess various experimental conditions within the TVK and to understand how to improve the system. Results showed the need to add new components, such as a final virtual evaluation scale based on the traditional scale used in Kerpape Rehabilitation Center. The virtual activity is displayed on the screen of any computer; participants can use the mouse to interact with several 3D objects required in the preparation of meals and can navigate through the environment using the keyboard. To increase the sensation of immersion in the virtual kitchen, the authors introduced real sounds triggered by interaction with 3D objects. Visual cues on the mouse cursor help the subject understand interaction opportunities: for example, the cursor changes when an item is “pickable” or according to the user action (execute, pour, activate, connect). In this VE, participants are involved in virtual IADL (vIADL), such as preparing a coffee. The tasks were designed to meet some fundamental issues related to the primary tasks, the graduation of the task, and the modalities of interaction (Klinger et al., 2009). 
The TVK software offers two types of activities: “primary” task (PT) and “complex” task (CT). PT has been designed to ensure the participant's familiarisation with the system and tools and to administer simple tasks that can be proposed before involving the patient in the CT (i.e., Coffee task). To perform a PT, the participants must complete a limited number of actions. An example of PT is “take a glass and put it on the table.” CT involves vIADL, namely tasks which require both planning and space-time organisation. Specifically, the CT consists of the preparation of a coffee. The TVK offers the therapist many possibilities to identify the task, adapt it to the participant's skills, achieve the therapeutic goals (evaluation or rehabilitation), and modulate the difficulty. Each task can be modulated by manipulating 1) the number of cups to prepare (from one to six); 2) the time constraint (time organization and stress induction); and 3) the initial location of the required items (easy: all the items are ready in the right place; medium: every item is on the table; hard: all items must be retrieved from the closets or drawers). Because items can be in various locations, the authors worked on the “take and put” action. To transport an object from one place to another, they proposed different solutions: 1) use of an inventory, like in video games; 2) use of “Drag and drop,” like on a PC desktop; and 3) sticking the item to the mouse cursor after its selection. The number of steps depends on item location and the nature of the coffee (easy: 12 steps; medium: 14 steps; and difficult: 16 steps). Moreover, based on the activity and the patient's ability, the therapist can help the participant through visual or auditory signals by pressing the keyboard keys (F1: a voice, F2: a message on the screen, F3: a red arrow to indicate the object with which to interact). The TVK can record, in a virtual assessment grid, ten errors: six action errors (AE) and four behavior errors (BE). 
The AEs consist of action omissions, actions not completed, perseveration, sequence errors, action additions and control errors. These errors are automatically interpreted and recorded by the system in the virtual assessment grid. The BEs are error recognition, difficulty in decision making, dependence (i.e., patient needs instructions to recall), and use of the therapist's help. Before these errors are recorded in the virtual grid, the therapist must interpret them and press a keyboard key (Cao et al., 2009; Klinger et al., 2009).
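
The configuration options and the assessment grid described above can be modelled with a small data structure. The sketch below is an assumption-laden illustration, not the TVK implementation: the class names `CoffeeTaskConfig` and `AssessmentGrid`, the field names, and the use of seconds for the time constraint are all hypothetical; only the parameter ranges, step counts, help keys and error categories come from the text.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional

# Values taken from the description of the coffee task.
STEPS_BY_DIFFICULTY = {"easy": 12, "medium": 14, "hard": 16}
HELP_KEYS = {"F1": "voice", "F2": "message on the screen", "F3": "red arrow on the object"}
ACTION_ERRORS = (
    "action omission", "action not completed", "perseveration",
    "sequence error", "action addition", "control error",
)
BEHAVIOR_ERRORS = (
    "error recognition", "difficulty in decision making",
    "dependence", "use of therapist's help",
)


@dataclass
class CoffeeTaskConfig:
    cups: int = 1                         # one to six cups
    difficulty: str = "easy"              # initial location of the items
    time_limit_s: Optional[float] = None  # optional time constraint (unit assumed)

    def __post_init__(self):
        if not 1 <= self.cups <= 6:
            raise ValueError("cups must be between 1 and 6")
        if self.difficulty not in STEPS_BY_DIFFICULTY:
            raise ValueError("difficulty must be easy, medium or hard")

    @property
    def expected_steps(self):
        return STEPS_BY_DIFFICULTY[self.difficulty]


@dataclass
class AssessmentGrid:
    counts: Counter = field(default_factory=Counter)

    def record_action_error(self, kind):
        """Action errors are logged automatically by the system."""
        if kind not in ACTION_ERRORS:
            raise ValueError(f"unknown action error: {kind}")
        self.counts[kind] += 1

    def record_behavior_error(self, kind):
        """Behavior errors are logged after the therapist's key press."""
        if kind not in BEHAVIOR_ERRORS:
            raise ValueError(f"unknown behavior error: {kind}")
        self.counts[kind] += 1

    def total(self):
        return sum(self.counts.values())


config = CoffeeTaskConfig(cups=2, difficulty="hard", time_limit_s=300)
grid = AssessmentGrid()
grid.record_action_error("sequence error")
grid.record_behavior_error("dependence")
print(config.expected_steps, grid.total())
```

Keeping the two recording paths separate mirrors the distinction drawn in the text between errors interpreted by the system and errors interpreted by the therapist.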

In the following year, the authors explored the feasibility of TVK with healthy subjects and seven patients with brain injury (Cao et al., 2010). All control group participants succeeded in completing primary and complex tasks. Among the patients, six succeeded in completing the PT and five in completing the CT. Results showed that: 1) setting the configuration parameters (time constraint, number of cups of coffee to prepare, positions of objects) is convenient for the therapists; 2) all tasks are understandable and engaging for both groups; 3) virtual interaction is moderately challenging for people without computer game experience; 4) the help cues are comprehensible for patients without expertise. The study also revealed a discrepancy in the recording of participants' errors: error counts differed between the virtual and real assessment grids (e.g., 61 vs. 40) because of differences in interpretation (e.g., after the patient's correction, therapists still count an error, whereas the TVK does not record an “action omission”) (Cao et al., 2010).

EcoKitchen