Scientific Abstract

Commercially available wearable biosensors have the potential to enhance psychophysiology research and digital health technologies for autism by enabling stress or arousal monitoring in naturalistic settings. However, such monitors may not be comfortable for children with autism due to sensory sensitivities. To determine the feasibility of wearable technology in children with autism aged 8–12 years, we first selected six consumer-grade wireless cardiovascular monitors and tested them during rest and movement conditions in 23 typically developing adults. Subsequently, the best-performing monitors (based on data quality robustness statistics), Polar and Mio Fuse, were evaluated in 32 children with autism and 23 typically developing children during a two-hour session that included rest and mild stress-inducing tasks. Cardiovascular data were recorded simultaneously across monitors using custom software, and children completed the Comfort Rating Scales. Although the Polar monitor was less comfortable for children with autism than for typically developing children, absolute scores demonstrated that, on average, all children found each monitor comfortable. For most children, data from the Mio Fuse (96–100%) and Polar (83–96%) passed the data-robustness quality thresholds. Moreover, in the stress relative to the rest condition, heart rate increased for the Polar, F(1,39)=135.70, p<.001, ηp²=.78, and the Mio Fuse, F(1,47)=71.98, p<.001, ηp²=.61, and heart rate variability decreased for the Polar, F(1,39)=13.41, p=.001, ηp²=.26, and the Mio Fuse, F(1,47)=8.89, p=.005, ηp²=.16. This feasibility study suggests that select consumer-grade wearable cardiovascular monitors can be used with children with autism and may be a promising means of tracking physiological stress or arousal responses in community settings.

Keywords: Feasibility Studies, Cardiovascular System, Heart Rate, Physiological Stress, Physiologic Monitoring, Wearable Electronic Devices, Autism Spectrum Disorder

Lay Summary

Commercially available heart rate trackers have the potential to advance stress research with individuals with autism. Due to sensory sensitivities common in autism, their comfort wearing such trackers is vital to gathering robust and valid data. After assessing six trackers with typically developing adults, we tested the best trackers (based on data quality) in typically developing children and children with autism and found that two of them met criteria for comfort, robustness, and validity.

Historically, cardiovascular monitoring has involved expensive, stationary, and invasive equipment confined to a research or hospital laboratory. The advent of consumer-grade ambulatory monitors has lifted these constraints (Blasco et al., 2016; Goodwin et al., 2008; Henriksen et al., 2018), making community-based psychophysiology research more feasible in naturalistic settings, such as homes and schools, and at scale, with sample sizes previously unobtainable in terms of both the number of research participants and the quantity of data per participant. Although a growing number of studies have tested and verified the robustness of commercially available ambulatory cardiovascular monitors (i.e., the probability that a device will perform its intended function; Baron et al., 2018; Evenson et al., 2015; Henriksen et al., 2018; Mukhopadhyay, 2015; Shin et al., 2019; Walker et al., 2016), only a few have examined robustness together with accuracy (i.e., agreement with a research- or hospital-grade device) and validity (i.e., whether the monitors show the expected changes in heart rate/heart rate variability under conditions where such changes should occur, such as during exercise; Abt et al., 2018; Brosschot & Thayer, 2003; Jo et al., 2016; Muaremi et al., 2013; Parak et al., 2015; Shcherbina et al., 2017; Wang et al., 2016; Xie et al., 2018).
Furthermore, most extant studies examine robustness in the context of physical exertion (Abt et al., 2018; Cadmus-Bertram et al., 2017; Jo et al., 2016; Lu et al., 2009a; Parak et al., 2015; Shcherbina et al., 2017; Stahl et al., 2016; Wajciechowski et al., 1991; Wang et al., 2016; Xie et al., 2018), while relatively limited research focuses on the validity of these monitors for measuring physiological correlates of psychosocial stress (Brosschot & Thayer, 2003; Muaremi et al., 2013; Verkuil et al., 2016), including heart rate (HR) and heart rate variability (HRV; e.g., Lazarus et al., 1963; Li et al., 2009; Thayer et al., 2012).

Moreover, few studies examine whether commercially available wireless cardiovascular monitors are suitable for children and child clinical populations, including children with autism spectrum disorder (ASD), a condition characterized by social communication impairments and behavioral rigidity (American Psychiatric Association [APA], 2013). Work is emerging in this area, with initial studies demonstrating preliminary feasibility of wearable device use in small samples (e.g., N = 5–12) of individuals with ASD (Billeci et al., 2016; Di Palma et al., 2017; Fioriello et al., 2020; Goodwin et al., 2006; Groden et al., 2005). Studies with larger samples are needed to evaluate suitability for children with ASD who experience sensory sensitivities (Ben-Sasson et al., 2009). Ambulatory cardiovascular response tracking has great potential for better understanding and supporting children with ASD who experience high levels of stress and anxiety (Ambler et al., 2015; Khor et al., 2014; Mazefsky, 2015; Picard, 2009; Van Steensel et al., 2011), especially those who have difficulty communicating their internally experienced distress (Nuske et al., 2018; Nuske, Vivanti, & Dissanayake, 2013; Picard, 2009) and those who engage in challenging behaviors (Goodwin et al., 2019; Nuske et al., 2019). Wearable physiological monitoring may also help in understanding and supporting comorbid conditions such as sleep and gastrointestinal disturbances (Ferguson et al., 2017; Tessier et al., 2018). However, systematic testing of wearable physiological recording monitors is needed before the field can move toward real-world applications with confidence (Koumpouros & Kafazis, 2019).
Although there is basic agreement on the need for certain types of analyses, such as measures of data loss (see Kleckner et al., 2021), consensus on a methodology for establishing this technology’s viability for scientific use lacks specificity, for example, regarding which quality metrics and thresholds these monitors should be evaluated against.

In the current work, we carry out two studies to determine the suitability of commercially available ambulatory cardiovascular monitors for children with ASD. The goal of Study 1 is to provide an easy-to-follow framework for examining the robustness and validity of these monitors (see Methods for details) and to test this framework formally in a typically developing adult sample before testing it with typically developing children and children with ASD. The goal of Study 2 is to test whether the most robust consumer-grade monitors from Study 1 can be worn comfortably by children with and without ASD, and whether the resulting data meet criteria for robustness and for validity in measuring psychosocial stress in these children.

Study 1 Methods

Participants

Twenty-four typically developing adults (M age = 24.12 years, SD = 6.33; 7 males) participated in the study through the Center for Autism Research, Children’s Hospital of Philadelphia. Exclusion criteria were a diagnosis of ASD or a history of cardiovascular disorder, seizures, or stroke. One participant had tachycardia as a child; however, this was corrected by 18 years of age with no recurrent issues in the past 14 years, so this participant was retained in analyses. No participants had known current asymptomatic arrhythmias, and our data processing did not identify any asymptomatic arrhythmias in recruited participants. Five participants took medications that can affect cardiovascular responsivity (four took antidepressants and one took Nuvigil, a wakefulness-promoting medication). These participants were nonetheless included in analyses because the study focused on data transmission and artifacts rather than absolute levels of cardiovascular responsivity.

HR and HRV Measurement

Commercially available ambulatory cardiovascular monitors typically employ one of two non-invasive approaches to measuring cardiac activity: electrocardiography (ECG, also known as EKG) and photoplethysmography (PPG). ECG uses electrodes placed on the chest to record the voltage changes produced by the electrical activity that drives cardiac muscle contraction, resulting in a readily identifiable spiked waveform called the QRS complex (its most salient component, the R wave, corresponds to depolarization of the ventricular walls; Becker, 2006). PPG measures cardiac activity optically, by detecting the pulsatile changes in blood volume that follow each heartbeat, typically at some distance from the heart itself. See Figure 1.

Figure 1.

Electrocardiography (ECG) and photoplethysmography (PPG) data showing the time between heartbeats: the R-R interval and the interbeat interval (IBI), respectively.

Numerous consumer-grade wireless sensors can measure HR (in beats per minute; BPM) and HRV (variability in the time interval between heartbeats) using either ECG or PPG; ECG sensors often take the form of chest straps, whereas PPG sensors are typically built into wristbands. A full consideration of the pros and cons of ECG versus PPG is outside the scope of this article (see Lu et al., 2009b); however, we note some salient points about the two approaches. Both ECG and PPG require identifying signal peaks (corresponding to heartbeats) and determining the rate (BPM) and timing of those peaks. In general, the ECG signal carries more distinct, high-frequency information (i.e., the QRS complex), which allows more accurate peak detection than PPG (Heathers, 2013). PPG has a comparative advantage over ECG in that it accommodates a greater variety of bodily measurement sites (wrist, toe, earlobe, etc.; Ismail et al., 2021). Critical innovations of both ambulatory ECG and PPG monitors are that they typically embed heartbeat detection algorithms on the device itself, require relatively little power to run (although this is less true for PPG), and can transmit data via wireless protocols (e.g., Bluetooth). Sending data to any platform other than the manufacturer’s accompanying software usually requires a software development kit. For this study, we recorded data transmitted over the Bluetooth/ANT+ protocols using custom-built software that we developed for Study 1.
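To make the peak-identification step concrete, the sketch below finds beats in a sampled trace by keeping local maxima above an amplitude threshold and merging peaks that fall within a refractory window, then converts peak positions to interbeat intervals and mean BPM. It is a toy illustration under our own assumptions (the function names, the 0.25 s refractory period, and the synthetic trace are hypothetical); it is not the proprietary detection algorithm of any monitor in this study. A sharp, spike-like R wave suits this kind of thresholding, which is one reason ECG peaks are easier to localize than the smoother PPG pulse.

```python
def detect_peaks(signal, fs_hz, threshold, refractory_s=0.25):
    """Return sample indices of local maxima that exceed `threshold`.

    Peaks closer together than `refractory_s` seconds are treated as one beat,
    keeping the taller of the two. This is a toy detector for illustration only.
    """
    min_gap = int(refractory_s * fs_hz)
    peaks = []
    for i in range(1, len(signal) - 1):
        is_local_max = signal[i - 1] < signal[i] >= signal[i + 1]
        if not (is_local_max and signal[i] > threshold):
            continue
        if peaks and i - peaks[-1] < min_gap:
            if signal[i] > signal[peaks[-1]]:
                peaks[-1] = i  # replace the earlier, smaller peak
        else:
            peaks.append(i)
    return peaks


def peaks_to_ibis_ms(peaks, fs_hz):
    """Convert successive peak indices into interbeat intervals in milliseconds."""
    return [(b - a) * 1000.0 / fs_hz for a, b in zip(peaks, peaks[1:])]


def mean_bpm(ibis_ms):
    """Mean heart rate in beats per minute from interbeat intervals (ms)."""
    return 60000.0 / (sum(ibis_ms) / len(ibis_ms))


# Synthetic 100 Hz trace with one sharp "R wave" every 80 samples (800 ms).
fs = 100
trace = [1.0 if i % 80 == 10 else 0.0 for i in range(300)]
peaks = detect_peaks(trace, fs, threshold=0.5)
ibis = peaks_to_ibis_ms(peaks, fs)
print(peaks, ibis, mean_bpm(ibis))  # [10, 90, 170, 250] [800.0, 800.0, 800.0] 75.0
```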

Selected Ambulatory Cardiovascular Monitors.

We chose six wearable cardiovascular monitors that measure both HR and HRV. A key feature of these devices is their use of Bluetooth or ANT+ transmission protocols and open data standards that allow bi-directional communication and data transfer. Our device search focused on relatively low-cost monitors (i.e., < $200) for scalability and accessibility in community settings; prices ranged from $57 to $130 at the time of assessment. Several commonly used monitors did not meet these criteria, either because they did not give access to data in real time (e.g., Apple Watch, FitBit) or because they were above our targeted price point (e.g., Empatica E4, Shimmer). One monitor (Jabra Pulse headphones) met both criteria but was excluded from the study because many participants reported that it was uncomfortable to wear. Three of the monitors were chest straps that recorded ECG (Polar H7, Garmin HRM, and Wahoo TICKR), and three were wristbands that recorded PPG (Mio Fuse, PulseOn, and Garmin Vívosmart HR). Chest-strap and wristband body placements (e.g., left vs. right wrist, upper vs. lower chest) were counterbalanced across participants (four orders) to avoid bias associated with monitor placement. Each wearable monitor relies on proprietary hardware, software, and algorithms to detect heartbeats; because we did not have access to this information, we could not evaluate the accuracy of these algorithms directly. Participants wore all selected monitors simultaneously, and the resulting data were recorded with custom software that allowed a single computer to record all data streams (see Recording Software for Cardiovascular Monitors section). No monitor manufacturers were involved in the study’s conceptualization, design, implementation, analyses, or interpretation.

Recording Software for Cardiovascular Monitors.

We developed a custom desktop application that collects HR and HRV data from several wearable monitors simultaneously (Kushleyeva, unpublished). The application supports real-time data collection from any monitor that exposes the Bluetooth Heart Rate Service (Bluetooth SIG. Heart Rate Service Specification, v1.0, https://www.bluetooth.com/specifications/gatt), which allowed us to work with a wide range of manufacturers and monitor models. It supports both logging and visual monitoring of the data, as well as real-time annotation of observed behavior synchronized with the physiological data for later analysis.
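Under the Heart Rate Service, each reading arrives as a Heart Rate Measurement characteristic (UUID 0x2A37) whose first byte is a flags field describing which optional fields follow. The sketch below decodes that payload following the published field layout; the function name and the returned dictionary are hypothetical, it is not the authors’ unpublished application, and it omits the Bluetooth connection and notification handling a real recorder needs. The RR-interval fields are what make HRV recording possible through this standard service.

```python
import struct


def parse_heart_rate_measurement(payload: bytes) -> dict:
    """Decode a Bluetooth Heart Rate Measurement value (characteristic 0x2A37).

    Multi-byte fields are little-endian; RR intervals are sent in 1/1024 s units.
    """
    flags = payload[0]
    offset = 1
    if flags & 0x01:  # bit 0 set: heart rate is a UINT16
        (bpm,) = struct.unpack_from("<H", payload, offset)
        offset += 2
    else:  # bit 0 clear: heart rate is a UINT8
        bpm = payload[offset]
        offset += 1
    # Bits 1-2: sensor contact status (bit 2 = feature supported, bit 1 = contact detected).
    contact = bool(flags & 0x02) if flags & 0x04 else None
    energy_kj = None
    if flags & 0x08:  # bit 3: Energy Expended field (UINT16, kilojoules) present
        (energy_kj,) = struct.unpack_from("<H", payload, offset)
        offset += 2
    rr_ms = []
    if flags & 0x10:  # bit 4: one or more RR-Interval fields present
        while offset + 2 <= len(payload):
            (raw,) = struct.unpack_from("<H", payload, offset)
            rr_ms.append(raw * 1000.0 / 1024.0)  # convert 1/1024 s units to ms
            offset += 2
    return {"bpm": bpm, "contact": contact, "energy_kj": energy_kj, "rr_ms": rr_ms}


# Flags 0x16: UINT8 heart rate, contact supported and detected, RR intervals present.
print(parse_heart_rate_measurement(bytes([0x16, 72, 0x00, 0x04, 0x00, 0x03])))
# {'bpm': 72, 'contact': True, 'energy_kj': None, 'rr_ms': [1000.0, 750.0]}
```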

Benchmark Wired ECG and Associated Recording Software.

To compare the selected ambulatory monitors against a gold-standard benchmark, we collected ECG data using the Biopac MP-150 System (Biopac Systems, Inc.) with three wired, gelled electrodes in standard chest placement. The protocol and electrodes were tailored for resting-state recording; the electrodes were not of the type required for robust ECG measurement during movement (e.g., exercise). ECG data were recorded with the Biopac software, AcqKnowledge.

Tasks

Resting-State Task.

Resting-state HR and HRV data were recorded for seven minutes while participants watched a relaxing video, Inscapes (Vanderwal et al., 2015), which was originally designed for resting-state functional MRI research.

Treadmill Task.

HR and HRV data were recorded for two minutes while participants walked on a treadmill at 2 km/h (1.24 mph). This speed represents a casual walking speed for adults.

Procedure

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional or national research committee and with the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards. Informed consent was obtained from all participants at the beginning of the session. Participants then completed a short demographic questionnaire and were instructed verbally on how to put on each monitor, using a reference card showing the body position of each monitor (four versions for the four placement orders described above). For comparability with the Biopac data, we applied ECG electrode gel to the ECG chest straps we tested. The rest and treadmill conditions were administered in a fixed order: all participants watched the rest video at the start of the session and completed the treadmill task at the end. The entire Study 1 session lasted approximately one hour.

Data Analyses

Sampling Fidelity and Spike Rate comprised our data-quality robustness statistics for the wearable monitors and the benchmark standard.

Sampling Fidelity.

During a given recording session, all interbeat intervals (milliseconds between heartbeats) were considered reliably identified if their sum equaled the overall recording time (within a margin of error of one interbeat interval), yielding a Sampling Fidelity of 1.0; Sampling Fidelity is thus the ratio of summed interbeat intervals to recording time. A value greater than 1.0 suggests that the monitor oversampled, classifying artifacts as heartbeats; a value less than 1.0 suggests that it undersampled, failing to identify some heartbeats. The current study adopted 0.9 as the lower bound and 1.1 as the upper bound of optimal Sampling Fidelity. We examined Sampling Fidelity during the rest and treadmill tasks for each wireless wearable monitor.
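As a minimal sketch of this metric, assuming interbeat intervals in milliseconds and a known recording duration in seconds, Sampling Fidelity is a single ratio followed by a range check. The function names are hypothetical, and treating the 0.9 and 1.1 bounds as inclusive is our assumption. The example imagines a 7-minute rest recording in which 100 intervals never reach the recorder (e.g., transmission dropouts).

```python
def sampling_fidelity(ibis_ms, recording_s):
    """Ratio of summed interbeat intervals (ms) to total recording time."""
    return sum(ibis_ms) / (recording_s * 1000.0)


def fidelity_passes(fidelity, lower=0.9, upper=1.1):
    """True if Sampling Fidelity lies in the 0.9-1.1 window (bounds assumed inclusive)."""
    return lower <= fidelity <= upper


# A 7-minute (420 s) rest recording at a steady 800 ms per beat.
complete = [800.0] * 525  # every interval captured: 525 x 0.8 s = 420 s
missing = [800.0] * 425   # 100 intervals lost in transmission
print(sampling_fidelity(complete, 420), fidelity_passes(sampling_fidelity(complete, 420)))  # 1.0 True
print(round(sampling_fidelity(missing, 420), 3), fidelity_passes(sampling_fidelity(missing, 420)))  # 0.81 False
```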

Spike Rate.

When a monitor misidentifies an artifact as a heartbeat, it often returns a physiologically improbable interbeat interval that constitutes an outlier (e.g., an interbeat interval of 800 msec followed by one of 1300 msec, followed again by one of 800 msec). Such outliers can be identified easily using a threshold criterion. For this study, we used a somewhat conservative standard for determining a “spike” in the data: an interbeat interval that deviates from the prior one by 200 msec or more. We operationalized Spike Rate as the number of spikes identified per minute of recording and applied a threshold of < 5 spikes per minute to identify optimal data. We examined Spike Rate during the rest and treadmill tasks for each wireless wearable monitor. Data from all six wearable monitors are reported for both the Rest and Treadmill conditions, but wired ECG (Biopac) data are reported for the Rest condition only, as the Biopac configuration was not designed for fitness/movement applications.
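The spike rule can be sketched in a few lines, assuming interbeat intervals in milliseconds and the recording duration in seconds; the function names are hypothetical. Note that under this rule the single spurious interval in the example above registers as two spikes, one for the jump up and one for the jump back down.

```python
def count_spikes(ibis_ms, jump_ms=200.0):
    """Count interbeat intervals that differ from the preceding one by >= jump_ms."""
    return sum(1 for prev, cur in zip(ibis_ms, ibis_ms[1:]) if abs(cur - prev) >= jump_ms)


def spike_rate(ibis_ms, recording_s, jump_ms=200.0):
    """Spikes per minute of recording."""
    return count_spikes(ibis_ms, jump_ms) / (recording_s / 60.0)


def spike_rate_passes(rate, max_per_min=5.0):
    """True if the Spike Rate is below the < 5 spikes/minute threshold."""
    return rate < max_per_min


# The example from the text: one spurious 1300 ms interval between two 800 ms ones.
print(count_spikes([800, 1300, 800]))  # 2
```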

Study 1 Results

Table 1 reports the percentage of participants whose data from each monitor passed our quality thresholds, separately for the Rest and Treadmill conditions. Among the six wearable monitors, only three (Polar H7, Mio Fuse, and PulseOn) were within our a priori Sampling Fidelity and Spike Rate ranges for more than 80% of participants in both the Rest and Treadmill conditions. These three monitors were therefore tested in Study 2.

Table 1.

Robustness Statistics for Wearable HR/HRV Monitors: Percentage of Participants Whose Data Passed Quality Thresholds

| Monitor | Rest: Sampling Fidelity Pass Rate | Rest: Spike Rate Pass Rate | Treadmill: Sampling Fidelity Pass Rate | Treadmill: Spike Rate Pass Rate |
|---|---|---|---|---|
| Biopac | 100% | 100% | N/A** | N/A** |
| Garmin HRM chest-strap | 13% | 13% | 4.3% | 4.3% |
| Polar H7 chest-strap* | 87.5% | 87.5% | 100.0% | 100.0% |
| Wahoo Tickr chest-strap | 73.9% | 73.9% | 95.8% | 95.8% |
| Garmin Vívosmart HR wristband | 45.0% | 45.0% | 5.0% | 5.0% |
| Mio Fuse wristband* | 87.5% | 87.5% | 95.8% | 95.8% |
| PulseOn wristband* | 82.6% | 82.6% | 87.0% | 87.0% |

Note. * Monitors that passed the quality thresholds. ** Monitor not intended for use during exercise.
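The Study 1 selection rule can be expressed as a filter over Table 1. Because the Sampling Fidelity and Spike Rate pass rates are identical within each row, the sketch stores one value per condition; the Biopac benchmark is omitted because it was the reference rather than a candidate. The dictionary and function names are hypothetical, and the rule (more than 80% of participants passing in every condition) is taken from the text above.

```python
# Pass rates (%) from Table 1; Sampling Fidelity and Spike Rate rates are equal per monitor.
TABLE_1 = {
    "Garmin HRM chest-strap": {"rest": 13.0, "treadmill": 4.3},
    "Polar H7 chest-strap": {"rest": 87.5, "treadmill": 100.0},
    "Wahoo Tickr chest-strap": {"rest": 73.9, "treadmill": 95.8},
    "Garmin Vívosmart HR wristband": {"rest": 45.0, "treadmill": 5.0},
    "Mio Fuse wristband": {"rest": 87.5, "treadmill": 95.8},
    "PulseOn wristband": {"rest": 82.6, "treadmill": 87.0},
}


def selected_monitors(table, min_pass_pct=80.0):
    """Monitors whose pass rate exceeds min_pass_pct in every condition."""
    return [name for name, rates in table.items()
            if all(rate > min_pass_pct for rate in rates.values())]


print(selected_monitors(TABLE_1))
# ['Polar H7 chest-strap', 'Mio Fuse wristband', 'PulseOn wristband']
```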

Study 2 Methods

Participants

Fifty-five children aged 8–12 years participated in Study 2: 32 with ASD and 23 without ASD. The primary inclusion criterion for the ASD group was a diagnosis of ASD confirmed by a research-reliable clinician using the Autism Diagnostic Observation Schedule, Second Edition (ADOS-2; Lord et al., 2012), a semi-structured assessment of clinically significant ASD symptoms. Exclusion criteria were a history of cardiovascular disorder, seizures, or pediatric stroke. Groups were matched on age and gender (see Table 2). IQ was assessed using the abbreviated scales of the Stanford-Binet Intelligence Test (Roid, 2003). Following the recommendation of Dykens and Lense (2011), groups were not matched on IQ, which allowed us to study a more representative sample of children with ASD, including 20% with estimated IQ < 70, 16% with estimated IQ 70–84, and 62% with estimated IQ ≥ 85. As is common in children with ASD due to frequent comorbidities, 14 children with ASD took medications that affect or may affect cardiovascular responsivity (seven took ADHD medications, three took antidepressants, four took antipsychotics, and three took melatonin for sleep-cycle management; some children took more than one medication). Two children without ASD took ADHD medications. Because these medications could have affected the stress response data, results were analyzed with and without participants on medication.

Table 2.

Child Characteristics of the ASD and Non-ASD Groups

| Variable | ASD (n = 32) | Non-ASD (n = 23) | Comparison Statistics |
|---|---|---|---|
| Age (years), M (SD) | 9.68 (1.469) | 9.61 (1.234) | t(53) = −.18, p = .86 |
| Gender, n (%): Male | 25 (77.4%) | 17 (73.9%) | χ²(1, N = 55) = .09, p = .77 |
| Gender, n (%): Female | 7 (22.6%) | 6 (26.1%) | |
| IQ, M (SD) | 89.03 (22.05) | 102.48 (13.03) | t(50.84) = 2.8, p = .007 |
| ADOS-CS*, M (SD) | 5.87 (2.73) | 1.61 (1.16) | t(42.24) = −7.7, p < .001 |
| SCARED**, M (SD) | 15.09 (10.38) | 9.38 (8.21) | t(53) = −2.12, p = .04 |

Note. * Autism Diagnostic Observation Schedule, Comparison Score. ** Screen for Child Anxiety Related Disorders, Total Score.

HR and HRV Measurement

Wearable Cardiovascular Monitors.

The three consumer-grade, commercially available wearable cardiovascular monitors that passed quality thresholds in Study 1 (Polar H7 ECG chest-strap, Mio Fuse PPG wristband, and PulseOn PPG wristband) were used to record HR and HRV during Study 2. Wristband placement (left vs. right wrist) was counterbalanced, and participants wore all selected monitors simultaneously.

Recording Software for Cardiovascular Monitors.

HR and HRV data were recorded via Bluetooth with our custom-built software (same as Study 1), allowing for simultaneous recording across the multiple monitors and live event marking (i.e., task start and stop).

Analysis Software for HR and HRV.

For the Robustness metrics (Sampling Fidelity [i.e., missing data] and Spike Rate [i.e., artifacts]; see Study 1, Data Analyses section), raw data were analyzed directly from the .csv data files. For the Validity metric (stress vs. rest task; see Study 2, Data Analyses section), cardiovascular data collected by the wearable monitors were imported into Kubios heart rate variability software (Tarvainen et al., 2014), and a conservative artifact threshold was applied: all interbeat intervals differing from the local average by more than 0.15 seconds were treated as artifacts and replaced with values interpolated using a cubic spline. Mean HR, max HR, and the root mean square of successive differences (RMSSD) were then calculated and compared across monitors. RMSSD is a time-domain measure of HRV, calculated as the square root of the mean of the squared differences between successive interbeat intervals. It is the recommended time-domain measure because of its superior statistical properties (Task Force of the European Society of Cardiology, 1996).
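The correction and summary steps above can be approximated as follows. This is a sketch under stated assumptions, not Kubios itself: the “local average” is taken here to be the median of a 5-beat window (a hypothetical choice; Kubios’s exact filter may differ), the natural cubic spline is fitted over beat index rather than time, mean HR is computed as 60000 divided by the mean interbeat interval, and max HR is taken from the single shortest interval, whereas packages may smooth or average differently. RMSSD follows the definition given in the text.

```python
from statistics import median


def natural_cubic_spline(xs, ys):
    """Return f(x) for the natural cubic spline through (xs, ys); xs strictly increasing."""
    n = len(xs) - 1
    h = [xs[i + 1] - xs[i] for i in range(n)]
    m = [0.0] * (n + 1)  # second derivatives; natural ends: m[0] = m[n] = 0
    if n >= 2:
        # Tridiagonal system for m[1..n-1], solved with the Thomas algorithm.
        sub = [h[i - 1] for i in range(1, n)]
        diag = [2.0 * (h[i - 1] + h[i]) for i in range(1, n)]
        sup = [h[i] for i in range(1, n)]
        rhs = [6.0 * ((ys[i + 1] - ys[i]) / h[i] - (ys[i] - ys[i - 1]) / h[i - 1])
               for i in range(1, n)]
        for k in range(1, n - 1):  # forward elimination
            w = sub[k] / diag[k - 1]
            diag[k] -= w * sup[k - 1]
            rhs[k] -= w * rhs[k - 1]
        m[n - 1] = rhs[-1] / diag[-1]
        for k in range(n - 3, -1, -1):  # back substitution
            m[k + 1] = (rhs[k] - sup[k] * m[k + 2]) / diag[k]

    def f(x):
        i = 0
        while i < n - 1 and x > xs[i + 1]:  # find the segment containing x
            i += 1
        a, b = xs[i + 1] - x, x - xs[i]
        return ((m[i] * a ** 3 + m[i + 1] * b ** 3) / (6.0 * h[i])
                + (ys[i] / h[i] - m[i] * h[i] / 6.0) * a
                + (ys[i + 1] / h[i] - m[i + 1] * h[i] / 6.0) * b)

    return f


def correct_artifacts(ibis_ms, threshold_ms=150.0, window=5):
    """Replace IBIs deviating > threshold_ms from a local median with spline values."""
    half = window // 2
    flagged = [abs(ibi - median(ibis_ms[max(0, i - half): i + half + 1])) > threshold_ms
               for i, ibi in enumerate(ibis_ms)]
    keep = [i for i, bad in enumerate(flagged) if not bad]
    spline = natural_cubic_spline(keep, [float(ibis_ms[i]) for i in keep])
    return [spline(i) if bad else float(ibi)
            for i, (ibi, bad) in enumerate(zip(ibis_ms, flagged))]


def hr_metrics(ibis_ms):
    """Mean HR and max HR (bpm) and RMSSD (ms) from artifact-corrected IBIs."""
    diffs = [b - a for a, b in zip(ibis_ms, ibis_ms[1:])]
    return {
        "mean_hr": 60000.0 / (sum(ibis_ms) / len(ibis_ms)),
        "max_hr": 60000.0 / min(ibis_ms),
        # RMSSD = sqrt(mean((IBI[i+1] - IBI[i])**2))
        "rmssd": (sum(d * d for d in diffs) / len(diffs)) ** 0.5,
    }


raw = [800, 810, 820, 1300, 840, 850, 860]  # one spurious 1300 ms interval
clean = correct_artifacts(raw)
print(clean)  # [800.0, 810.0, 820.0, 830.0, 840.0, 850.0, 860.0]
print(hr_metrics(clean))
```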

Questionnaires

Monitor Comfortability.

The Comfort Rating Scales (Knight & Baber, 2005) were used to assess the comfort of the selected wearable monitors across eight dimensions: (1) Emotion (I feel tense or on edge because I am wearing the device); (2) Attachment (I can feel the device on my body); (3) Harm (the device is causing me some harm); (4) Perceived change (I feel strange wearing the device); (5) Movement (the device affects the way I move); (6) Anxiety (I do not feel secure wearing the device); (7) Sensory sensitivity (the device feels annoying on my skin); and (8) Attention on sensation (I cannot stop thinking about the feeling of the device on my skin). The last two dimensions were added because they were hypothesized to be relevant to children with ASD, given reports of sensory sensitivities (Ben-Sasson et al., 2009). Each dimension was rated on a scale from 0 (low agreement) to 20 (high agreement). Because the measure was created for adults, we made four adaptations to make it more suitable for children. First, we administered the questions in an interview format rather than having participants read the items themselves; if children did not understand a dimension, they were given a lay description (e.g., for Harm: “How much did the monitor hurt you?”). Second, we showed a picture of the scale with qualifiers at set intervals: 0 = completely wrong, 5 = a little bit wrong, 10 = not right or wrong, 15 = a little bit right, 20 = completely right. Third, we placed a picture of each monitor next to the corresponding questions so that it was clear which device we were asking about and children did not need to remember each monitor’s name. Fourth, we told children that, if they preferred, they could indicate how much they agreed with each statement by pointing to their answer on the scale rather than saying it aloud. Twenty-eight of the children with ASD (87.5%) and all of the typically developing children completed the Comfort Rating Scales.

Tasks

Resting-State Task.

Children’s resting baseline HR and HRV were recorded while they watched the same relaxing video as in Study 1 (Inscapes; Vanderwal et al., 2015).

Psychosocial Stressor Task.

Children completed a task that required them to regulate low-level stress: the transparent box task from the Middle Childhood version of the Laboratory Temperament Assessment Battery (Lab-TAB; Goldsmith et al., 2012). In this task, the child is given a transparent box filled with attractive toys but cannot obtain them because they have been given the wrong set of keys; the researcher then leaves the room for four minutes. Afterward, the child is given the correct keys and time to play with the toys in the box. Lab-TAB tasks have been validated as effective in inducing moderate to strong negative emotions in children (r = .25–.76; Goldsmith et al., 2012; Macari et al., 2020; Northrup et al., 2020).

Procedure

Testing was completed at the Center for Autism Research, Children’s Hospital of Philadelphia. Parents gave informed consent after going through a picture book of the tasks with the researchers. Children also gave assent where possible, given cognitive and language delays in some participants. Parents were instructed on how to put the physiological recording monitors on their children, and the researchers confirmed correct application. Tasks were completed in a set order to standardize emotional carry-over between tasks. Experimental sessions lasted approximately two hours per participant and were video recorded to allow coding of children’s behavioral responses.

Data Analyses

Data were first screened for skewness, kurtosis, and outliers. As expected, many HR and HRV variables were non-normal, so they were log-transformed for inferential analyses. During the transparent box task, 8 children (4 with ASD, 4 typically developing) spent 25% or more of the task out of their seat (M = 61.48%, SD = 28.15%, range = 25.21–100%); however, because only one of these children had data below the quality thresholds, only that child’s data were excluded from the robustness and validity analyses. Because medications could have affected physiological response data, results were analyzed with and without participants on medication.

Perceived Monitor Comfort.

Perceived comfort was assessed in two ways. First, we recorded how many children put the monitors on and kept them on throughout the two-hour testing period, setting a threshold of 80% of each group per monitor. Monitors that met this threshold were further tested for comfort, robustness, and validity. Second, to compare children’s comfort ratings between groups, independent-samples t-tests were performed on each Comfort Rating Scales dimension.

Robustness.

As in Study 1, we used two metrics of monitor robustness: Sampling Fidelity and Spike Rate. We also examined whether children’s movement (out-of-seat behavior) affected monitor robustness.

Validity.

To explore the validity of the monitors in measuring physiological responses, 2 (group: ASD, non-ASD) × 2 (task: rest, stress) repeated measures ANOVAs, with task as the within-subjects factor, were conducted for each monitor, stressor task, and HR/HRV metric (mean BPM, max BPM, and RMSSD). We used partial eta squared (ηp²) as the measure of effect size. Because the groups differed in cognitive ability, analyses were first conducted as ANCOVAs covarying cognitive ability (Stanford-Binet ABIQ); the covariate was subsequently removed because it was not significant in any model (p > .22).
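For a single effect, partial eta squared can be recovered from a reported F statistic, because F = (SS_effect/df_effect)/(SS_error/df_error) implies ηp² = F·df_effect/(F·df_effect + df_error). The sketch below (function name hypothetical) applies this to the Polar H7 task effects in Table 4, taking the parenthesized df there as the error degrees of freedom with 1 numerator df for the two-level task factor; the results match the tabled effect sizes to two decimals.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Task main effects for the Polar H7 (Table 4): F with 1 and 39 degrees of freedom.
for label, f in [("mean BPM", 27.34), ("peak BPM", 135.70), ("RMSSD", 13.41)]:
    print(label, round(partial_eta_squared(f, 1, 39), 2))
# mean BPM 0.41
# peak BPM 0.78
# RMSSD 0.26
```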

Study 2 Results

Perceived Monitor Comfort

As shown in Table 3, two of the three monitors, the Polar H7 and the Mio Fuse, met the threshold of 80% of each child group wearing the monitor throughout the entire two-hour session; accordingly, the PulseOn was not tested further for comfort, robustness, or validity.

Table 3.

Percentage of Children Who Put On and Kept On Each Monitor for the Entire Two-Hour Session

| Measure | Group | Polar H7 Chest-strap | Mio Fuse Wristband | PulseOn Wristband |
|---|---|---|---|---|
| Put monitor on | ASD | 87.9% | 90.9% | 87.9% |
| Put monitor on | Non-ASD | 95.7% | 95.7% | 95.7% |
| Kept monitor on | ASD | 84.8% | 84.8% | 72.7% |
| Kept monitor on | Non-ASD | 95.7% | 95.7% | 95.7% |

The Comfort Rating Scales data are shown in Figure 2. Although the Polar H7 chest-strap was less comfortable for children with ASD than for children without ASD on the Emotion and Movement dimensions, absolute scores show that, on average, all children rated both monitors in the comfortable range.

Figure 2.

Comfort Rating Scales scores across eight comfort dimensions, between the groups. Panel A shows data from the Polar H7 chest-strap, Panel B shows data from the Mio Fuse wristband. 20 = Completely uncomfortable, 10 = Not comfortable or uncomfortable, 0 = Completely comfortable. Error bars = SE.

Robustness

Sampling Fidelity.

The percentage of participants whose data met the Sampling Fidelity criterion (0.9–1.1) during the rest task was 96% for the Mio Fuse and 83% for the Polar H7. During the stress task, it was 97% for the Mio Fuse and 96% for the Polar H7.

Spike Rate.

The percentage of participants whose data met the Spike Rate criterion (< 5 spikes per minute) during the rest task was 100% for the Mio Fuse and 89% for the Polar H7. During the stress task, it was 100% for the Mio Fuse and 87% for the Polar H7.

We reviewed the task videos of participants whose robustness data fell below the quality thresholds to examine whether there were comfort, application, or other observable reasons for the low-quality data; the reviewed segments included 7 children during rest (5 ASD, 2 typically developing [TD]) and 11 children during the stress tasks (7 ASD, 4 TD). An evident cause of the low-quality data was identified in all but 7 (39%) of these 18 cases. Children were either adjusting or pushing on the monitor during the task (N = 6, 33%), the chest-strap monitor fell to the child’s waist (N = 2, 11%), or the child made vigorous movements, including overhead arm movements (N = 2, 11%) and spinning back and forth in their chair during the task (N = 1, 6%).

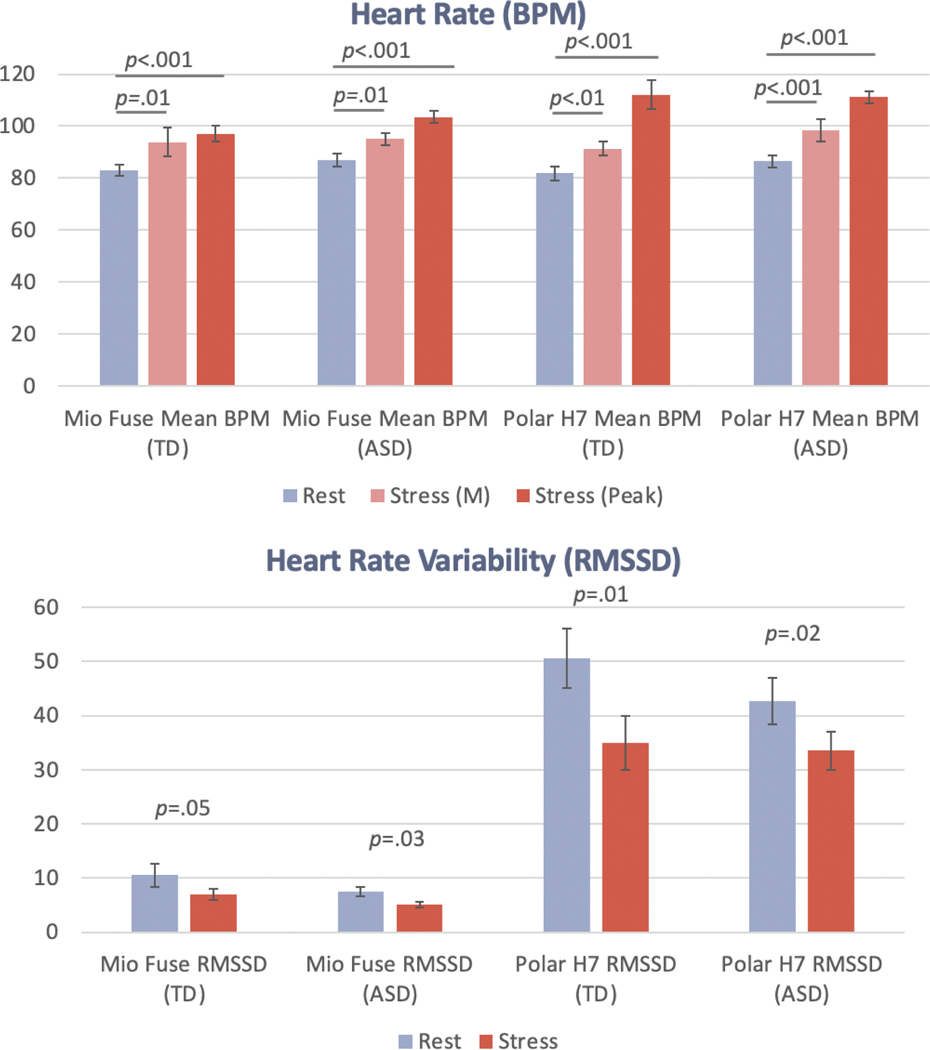

Validity

There was a main effect of task (rest vs. stress) for both monitors and all HR and HRV metrics: HR was higher and HRV was lower during the stress task than during the rest task. See means in Figure 3 and effect sizes in Table 4. Because medications could have affected the physiological response data, results were analyzed with and without participants on medication. The pattern of results was identical in both analyses; thus, all participants were retained (see Supplementary Table 1).

Figure 3.

Heart rate (M and Peak beats per minute) and heart rate variability (RMSSD) in stress vs. rest tasks, across the monitors and groups. Error bars = SE.

Table 4.

Rest versus Stress Task Main Effect Size Statistics Across Monitors and Heart Rate/Heart Rate Variability Metrics

| Monitor | BPM (M→M) F (df) | BPM (M→M) η² | BPM (M→Peak) F (df) | BPM (M→Peak) η² | RMSSD F (df) | RMSSD η² |
|---|---|---|---|---|---|---|
| Mio Fuse | 15.97 (47) | 0.25*** | 71.98 (47) | 0.61*** | 8.89 (47) | 0.16** |
| Polar H7 | 27.34 (39) | 0.41*** | 135.70 (39) | 0.78*** | 13.41 (39) | 0.26** |

Note. Effect size = η² (.01 = small, .06 = medium, >.14 = large effect). Ms and SEs are displayed in Figure 3.

Significance: *** p < .001, ** p < .01, * p < .05.
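As a consistency check on Table 4, the sketch below recomputes each effect size from its F statistic. It assumes, as the abstract's ηp² notation suggests, that the printed values are partial eta squared for a task effect with one numerator df (rest vs. stress) and that the parenthesized df is the error df; under those assumptions ηp² = (F × df_effect) / (F × df_effect + df_error).

```python
"""Recompute the Table 4 effect sizes from the reported F statistics.

ASSUMPTIONS: each printed value is partial eta squared for a task effect
with one numerator df (rest vs. stress), and the parenthesized df in
Table 4 is the error (denominator) df.
"""


def partial_eta_squared(f_value: float, df_error: float, df_effect: float = 1.0) -> float:
    """Partial eta squared from an F ratio: F*df_effect / (F*df_effect + df_error)."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# (monitor, metric) -> (F, error df, effect size as printed in Table 4)
TABLE_4 = {
    ("Mio Fuse", "BPM (M->M)"): (15.97, 47, 0.25),
    ("Mio Fuse", "BPM (M->Peak)"): (71.98, 47, 0.61),
    ("Mio Fuse", "RMSSD"): (8.89, 47, 0.16),
    ("Polar H7", "BPM (M->M)"): (27.34, 39, 0.41),
    ("Polar H7", "BPM (M->Peak)"): (135.70, 39, 0.78),
    ("Polar H7", "RMSSD"): (13.41, 39, 0.26),
}


def check_table(table=TABLE_4):
    """Return the recomputed (unrounded) effect size for every table cell."""
    return {key: partial_eta_squared(f, df) for key, (f, df, _) in table.items()}


if __name__ == "__main__":
    recomputed = check_table()
    for (monitor, metric), (_, _, printed) in TABLE_4.items():
        value = recomputed[(monitor, metric)]
        print(f"{monitor:<9} {metric:<14} recomputed={value:.3f} printed={printed:.2f}")
```

Under these assumptions, all six recomputed values fall within 0.01 of the printed ones; the Mio Fuse M→Peak value comes out at about 0.605, so the printed 0.61 sits at the rounding boundary.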

General Discussion

This research aimed to investigate the comfort, robustness, and validity of commercially available ambulatory cardiovascular monitors for measuring physiological stress in children with ASD, after first checking the monitors' robustness in typically developing adults. We found that two of the three monitors tested with children in Study 2 were comfortable for children with ASD, were robust in measuring HR, and were sensitive to psychosocial stress: they showed the predicted increase in HR and decrease in HRV during stress tasks relative to a resting task.

The finding that, on average, children with and without ASD rated the ambulatory monitors in the comfortable range suggests that, despite common sensory sensitivities in children with ASD (Ben-Sasson et al., 2009), the children who kept the monitors on were able to tolerate them, at least for two hours. These data are consistent with the limited evidence available on how comfortable individuals with ASD find wearable bio-trackers. Nazneen et al. (2010) reported that neither parents nor their children with ASD objected to body sensors, although one parent was initially reluctant because their child experiences sensory sensitivities. Parents in that study did, however, offer suggestions regarding the feasibility of ambulatory cardiovascular monitors for children with ASD: monitors ought to be waterproof, have sturdy straps, be themed to match children’s special interests, and be discreet to avoid negative attention from peers (Nazneen et al., 2010).

This study offers a preliminary framework for basic quality assessment of wearable cardiovascular monitors to facilitate their use in larger-scale ambulatory psychophysiology research. Of the six affordable, commercially available cardiovascular monitors initially selected, we identified three that showed potential for large-scale research: one ECG chest-strap model and two PPG wristbands. Because the approach requires only about one hour of total recording time (in Study 1, with typically developing adults), open-source recording software, and a relatively small amount of analysis code, individual laboratories could readily replicate it to test new heart rate devices entering the market before making the more considerable investment required for larger-scale studies.

To further guide future research and device selection, studies assessing multiple tasks in representative samples are needed. Such work could help establish a statistical metric that investigators could use to guide device selection and to judge sensitivity and validity for particular people or tasks. Because both the Polar H7 and the Mio Fuse passed the comfort, robustness, and validity checks in this study, a follow-up question is how to choose between them for a given child on the autism spectrum. The devices differ in type (one is a chest strap, the other a wristband), and some children with ASD had strong preferences for one over the other for multiple reasons, including peers noticing the device (in both a positive and a negative light) and idiosyncratic sensory or body-placement preferences. Therefore, we suggest an individualized approach in which children are presented with both options, given the opportunity to try both, and then select the device they prefer before physiological tracking begins.

Given that these monitors are inexpensive and readily available, such research-reliable wearables could collect data at scales previously unobtainable (in both the number of research participants and the quantity of data per participant) and in contexts more relevant to real-world behavior and cognition than the laboratory, such as homes, schools, and other community-based locations. This is not to suggest that community tracking of physiological stress or arousal could replace lab-based recording entirely; the lab typically offers more experimental control than real-life settings. Nevertheless, identifying research-reliable, commercially available ambulatory cardiovascular monitors would allow the field of psychophysiology to measure cognitive and affective mechanisms that play out on widely varying time scales, in conjunction with affective experience sampling methods (delivered remotely via smartphone, for example; Intille et al., 2007). The successful use of remote psychophysiology data collection could fundamentally alter how we consider affective information processes (cf. Wilhelm & Grossman, 2010). For example, by allowing recording over both short and very long durations (hours and days), it could help refine often arbitrary distinctions between “state” and “trait” components of affect (Allen & Potkay, 1981). It could also allow real-time prediction of affect and behavior (Goodwin et al., 2019; Nuske et al., 2019).

There are several promising applications of wearable physiology in intervention, assessment, and community engagement research involving individuals with ASD. For example, the recently designed KeepCalm app tracks the physiological stress or arousal of children with ASD, recorded with consumer-grade wearable cardiovascular monitors, and integrates it with behavioral data to give educational teams real-time clinical decision support for emotion regulation and challenging behavior at school (Nuske et al., 2020). Another application is predicting challenging behaviors in youth with ASD in a specialized inpatient psychiatry unit (Goodwin et al., 2019). Wearable physiology currently used with children with ASD in hospital or research settings could be made more accessible with low-cost consumer-grade wearable cardiovascular monitors, for example to complement behavioral assessment during socio-cognitive tasks (Di Palma et al., 2017) or of anxiety symptoms, particularly for individuals with limited verbal repertoires (Van Laarhoven et al., 2021), or for community-based monitoring of health status combined with GPS location (Ahmed et al., 2017). Moreover, empirical support for biosensor feedback-based interventions for individuals with anxiety is growing (Alneyadi et al., 2021), with some work also done in ASD (Friedrich et al., 2014). Consumer-grade wearable cardiovascular monitors could make these interventions more accessible outside clinic settings, from the comfort of the home.

The results also support the approach of testing new consumer-grade wearables as they enter the market, which is essential because the market is in constant flux, with new wearables released faster than typical research timelines. The metrics used in both studies, Sampling Fidelity and Spike Rate, can therefore be applied to new heart rate trackers to assess their fit for future research projects (for additional testing resources, see Kleckner et al., 2021).
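Sampling Fidelity and Spike Rate are defined earlier in the paper rather than in this section, so the sketch below is only an illustration of how such metrics can be computed from a monitor's HR stream with a small amount of code. It assumes (hypothetically) that Sampling Fidelity is the share of expected samples received in a task window at an assumed 1 Hz reporting rate, and that Spike Rate is the share of successive HR samples that jump by more than an assumed 20 bpm. The pass thresholds (0.9 and 0.05) are likewise placeholders, not the criteria used in these studies. A `percent_passing` helper summarizes pass rates across participants, as in the rest- and stress-task percentages reported above.

```python
"""Illustrative robustness metrics for a consumer heart-rate stream.

ASSUMPTIONS (hypothetical, not the study's exact definitions):
- the monitor is expected to report HR once per second (expected_hz=1.0);
- a "spike" is a change of more than jump_bpm between successive samples;
- pass thresholds min_fidelity and max_spike_rate are placeholders.
"""
from dataclasses import dataclass
from typing import Sequence


@dataclass
class RobustnessReport:
    sampling_fidelity: float  # received / expected samples, capped at 1.0
    spike_rate: float         # share of successive-sample jumps above threshold
    passes: bool              # True if both placeholder thresholds are met


def sampling_fidelity(timestamps_s: Sequence[float], window_s: float,
                      expected_hz: float = 1.0) -> float:
    """Share of expected samples received within [0, window_s)."""
    expected = window_s * expected_hz
    if expected <= 0:
        raise ValueError("window_s and expected_hz must be positive")
    received = sum(1 for t in timestamps_s if 0 <= t < window_s)
    return min(received / expected, 1.0)


def spike_rate(hr_bpm: Sequence[float], jump_bpm: float = 20.0) -> float:
    """Share of successive HR differences whose magnitude exceeds jump_bpm."""
    if len(hr_bpm) < 2:
        return 0.0
    jumps = [abs(b - a) > jump_bpm for a, b in zip(hr_bpm, hr_bpm[1:])]
    return sum(jumps) / len(jumps)


def assess(timestamps_s: Sequence[float], hr_bpm: Sequence[float], window_s: float,
           min_fidelity: float = 0.9, max_spike_rate: float = 0.05) -> RobustnessReport:
    """Score one participant's task window against placeholder thresholds."""
    fid = sampling_fidelity(timestamps_s, window_s)
    spk = spike_rate(hr_bpm)
    return RobustnessReport(fid, spk, fid >= min_fidelity and spk <= max_spike_rate)


def percent_passing(reports: Sequence[RobustnessReport]) -> float:
    """Percentage of participants whose data pass both checks."""
    if not reports:
        return 0.0
    return 100.0 * sum(r.passes for r in reports) / len(reports)


if __name__ == "__main__":
    # Simulated 60-s window: 57 of 60 samples arrive, with one artifactual 140-bpm spike.
    timestamps = list(range(57))
    hr = [80.0] * 57
    hr[30] = 140.0
    report = assess(timestamps, hr, window_s=60)
    print(report)
```

In the simulated window, fidelity is 57/60 = 0.95, and the single spike produces two large jumps (up and back down) out of 56 successive differences, a spike rate of about 0.036, so the window passes the placeholder thresholds. Scoring jumps between samples rather than flagging individual samples is a design choice of this sketch.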

Limitations

These studies are not without limitations. First, the two studies focused on the robustness and validity of ambulatory cardiovascular monitors for measuring physical or physiological stress or arousal in children with ASD and were not designed to record accelerometry simultaneously. However, because sessions were video recorded, we were able to cross-check robustness problems against child movement, and child movement (adjusting or pushing on the monitor, overhead upper-arm movements, and spinning back and forth in the chair during the task) accounted for most of the cases with an identifiable cause. Future research should record cardiovascular responses and accelerometry simultaneously to examine the impact of user movement on robustness metrics. Second, because multiple cardiovascular monitors were recorded simultaneously, we cannot rule out that the low robustness of some devices was due to Bluetooth/ANT+ interference across devices. Third, although we screened for aspects of participants’ medical history related to cardiovascular functioning, we did not measure body mass index, whether participants were hyper- or hypotensive, or their anxiety and depression symptoms. Future studies benchmarking wearable cardiovascular monitors should measure these factors, especially when examining absolute levels of cardiovascular responses. Fourth, our central question concerned the feasibility of commercially available wearable cardiovascular monitors for monitoring physiological stress or arousal; because of the commercial nature of these devices, we did not have access to the proprietary algorithms that pre-processed the heart rate and heart rate variability data, and therefore could not independently evaluate how that pre-processing affected accuracy and robustness. Fifth, given the age range of the study (8–12 years), we cannot comment on the applicability of wearable cardiovascular monitors for physiological measurement in younger children. Likewise, few children with ASD in the second study required very substantial support. Although some recent work has explored wearable physiology in such children (e.g., children with challenging behavior; Goodwin et al., 2019; Nuske et al., 2019), further work is needed, as combining physiological with behavioral monitoring may be particularly beneficial for these individuals. Finally, although the sample of children with ASD is larger than in previous studies in the area, it is still relatively small and not representative; replication and extension are therefore warranted.

Conclusions

The findings from these two feasibility studies suggest that select consumer-grade wearable cardiovascular monitors are robust and may be used to measure or track physiological stress or arousal in children with ASD. These results may inform methods for assessing the robustness of new remote physiology recording monitors as they enter the market, thereby opening new avenues for applying remote physiology recording in children with ASD.

Supplementary Material

Acknowledgments

First and foremost, the authors would like to thank the families who took part in this study. We would also like to acknowledge the hard work and dedication of all the students who worked on monitor and task set-up, participant recruitment, event marking, data entry, and physiological data and behavioral coding aspects of the project (in alphabetical order): Zabryna Atkinson-Diaz, Anushua Bhattacharya, Elizabeth Daniels, Amanda Dennis, Avantika Diwadkar, Emma Finkel, Ilona Jileaeva, Ada Li, Devin Murphy, Meredith Pinheiro, William Schmeling, Hungtzu Tai, and Jessica Tan.

Funding: McMorris Family Foundation, Foerderer Grants for Excellence – Children’s Hospital of Philadelphia Research Institute, and National Institute of Mental Health (K01MH120509).

Footnotes

Conflict of Interest

The authors have no conflict of interest to declare.

References

- Abt G, Bray J, & Benson AC (2018). The validity and inter-device variability of the Apple Watch™ for measuring maximal heart rate. Journal of Sports Sciences, 36(13), 1447–1452.

- Ahmed IU, Hassan N, & Rashid H. (2017). Solar powered smart wearable health monitoring and tracking device based on GPS and GSM technology for children with autism. 2017 4th International Conference on Advances in Electrical Engineering (ICAEE), 111–116.

- Allen BP, & Potkay CR (1981). On the arbitrary distinction between states and traits. Journal of Personality and Social Psychology, 41(5), 916–928. 10.1037/0022-3514.41.5.916

- Alneyadi M, Drissi N, Almeqbaali M, & Ouhbi S. (2021). Biofeedback-Based Connected Mental Health Interventions for Anxiety: Systematic Literature Review. JMIR MHealth and UHealth, 9(4), e26038.

- Ambler PG, Eidels A, & Gregory C. (2015). Anxiety and aggression in adolescents with autism spectrum disorders attending mainstream schools. Research in Autism Spectrum Disorders, 18, 97–109.

- American Psychiatric Association [APA]. (2013). DSM-5: Diagnostic and Statistical Manual of Mental Disorders. American Psychiatric Publishing, Inc.

- Baron KG, Duffecy J, Berendsen MA, Cheung Mason I, Lattie EG, & Manalo NC (2018). Feeling validated yet? A scoping review of the use of consumer-targeted wearable and mobile technology to measure and improve sleep. Sleep Medicine Reviews, 40, 151–159. 10.1016/j.smrv.2017.12.002

- Becker DE (2006). Fundamentals of Electrocardiography Interpretation. Anesthesia Progress, 53(2), 53–64. 10.2344/0003-3006(2006)53[53:FOEI]2.0.CO;2

- Ben-Sasson A, Hen L, Fluss R, Cermak SA, Engel-Yeger B, & Gal E. (2009). A meta-analysis of sensory modulation symptoms in individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 39(1), 1–11.

- Billeci L, Tonacci A, Tartarisco G, Narzisi A, Di Palma S, Corda D, Baldus G, Cruciani F, Anzalone SM, Calderoni S, Pioggia G, Muratori F, & Group MS (2016). An Integrated Approach for the Monitoring of Brain and Autonomic Response of Children with Autism Spectrum Disorders during Treatment by Wearable Technologies. Frontiers in Neuroscience, 10. 10.3389/fnins.2016.00276

- Blasco J, Chen TM, Tapiador J, & Peris-Lopez P. (2016). A survey of wearable biometric recognition systems. ACM Computing Surveys (CSUR), 49(3), 43.

- Brosschot JF, & Thayer JF (2003). Heart rate response is longer after negative emotions than after positive emotions. International Journal of Psychophysiology, 50(3), 181–187.

- Cadmus-Bertram L, Gangnon R, Wirkus EJ, Thraen-Borowski KM, & Gorzelitz-Liebhauser J. (2017). The Accuracy of Heart Rate Monitoring by Some Wrist-Worn Activity Trackers. Annals of Internal Medicine, 166(8), 610–612. 10.7326/L16-0353

- Di Palma S, Tonacci A, Narzisi A, Domenici C, Pioggia G, Muratori F, Billeci L, & Group MS (2017). Monitoring of autonomic response to sociocognitive tasks during treatment in children with Autism Spectrum Disorders by wearable technologies: A feasibility study. Computers in Biology and Medicine, 85, 143–152.

- Dykens EM, & Lense M. (2011). Intellectual disabilities and autism spectrum disorder: A cautionary note. In Amaral D, Dawson G, & Geschwind DH (Eds.), Autism spectrum disorders. Oxford University Press.

- Evenson KR, Goto MM, & Furberg RD (2015). Systematic review of the validity and reliability of consumer-wearable activity trackers. International Journal of Behavioral Nutrition and Physical Activity, 12(1), 159. 10.1186/s12966-015-0314-1

- Ferguson BJ, Marler S, Altstein LL, Lee EB, Akers J, Sohl K, McLaughlin A, Hartnett K, Kille B, & Mazurek M. (2017). Psychophysiological associations with gastrointestinal symptomatology in autism spectrum disorder. Autism Research, 10(2), 276–288.

- Fioriello F, Maugeri A, D’Alvia L, Pittella E, Piuzzi E, Rizzuto E, Del Prete Z, Manti F, & Sogos C. (2020). A wearable heart rate measurement device for children with autism spectrum disorder. Scientific Reports, 10(1), 1–7.

- Friedrich EV, Suttie N, Sivanathan A, Lim T, Louchart S, & Pineda JA (2014). Brain–computer interface game applications for combined neurofeedback and biofeedback treatment for children on the autism spectrum. Frontiers in Neuroengineering, 7, 21.

- Goldsmith HH, Reilly J, Lemery KS, Longley S, & Prescott A. (2012). The Laboratory Temperament Assessment Battery. 124.

- Goodwin MS, Groden J, Velicer WF, Lipsitt LP, Baron MG, Hofmann SG, & Groden G. (2006). Cardiovascular arousal in individuals with autism. Focus on Autism and Other Developmental Disabilities, 21(2), 100–123.

- Goodwin MS, Mazefsky CA, Ioannidis S, Erdogmus D, & Siegel M. (2019). Predicting aggression to others in youth with autism using a wearable biosensor. Autism Research: Official Journal of the International Society for Autism Research, 12(8), 1286–1296. 10.1002/aur.2151

- Goodwin MS, Velicer WF, & Intille SS (2008). Telemetric monitoring in the behavior sciences. Behavior Research Methods, 40(1), 328–341. 10.3758/brm.40.1.328

- Groden J, Goodwin MS, Baron MG, Groden G, Velicer WF, Lipsitt LP, Hofmann SG, & Plummer B. (2005). Assessing Cardiovascular Responses to Stressors in Individuals With Autism Spectrum Disorders. Focus on Autism and Other Developmental Disabilities, 20(4), 244–252. 10.1177/10883576050200040601

- Heathers JA (2013). Smartphone-enabled pulse rate variability: An alternative methodology for the collection of heart rate variability in psychophysiological research. International Journal of Psychophysiology, 89(3), 297–304.

- Henriksen A, Mikalsen MH, Woldaregay AZ, Muzny M, Hartvigsen G, Hopstock LA, & Grimsgaard S. (2018). Using fitness trackers and smartwatches to measure physical activity in research: Analysis of consumer wrist-worn wearables. Journal of Medical Internet Research, 20(3), e110.

- Intille SS, Stone AA, & Shiffman S. (2007). Technological innovations enabling automatic, context-sensitive ecological momentary assessment. The Science of Real-Time Data Capture: Self-Reports in Health Research, 308–337.

- Ismail S, Akram U, & Siddiqi I. (2021). Heart rate tracking in photoplethysmography signals affected by motion artifacts: A review. EURASIP Journal on Advances in Signal Processing, 2021(1), 1–27.

- Jo E, Lewis K, Directo D, Kim MJ, & Dolezal BA (2016). Validation of biofeedback wearables for photoplethysmographic heart rate tracking. Journal of Sports Science & Medicine, 15(3), 540.

- Khor AS, Gray KM, Reid SC, & Melvin GA (2014). Feasibility and validity of ecological momentary assessment in adolescents with high-functioning autism and Asperger’s disorder. Journal of Adolescence, 37(1), 37–46.

- Kleckner IR, Feldman MJ, Goodwin MS, & Quigley KS (2021). Framework for selecting and benchmarking mobile devices in psychophysiological research. Behavior Research Methods, 53(2), 518–535. 10.3758/s13428-020-01438-9

- Knight JF, & Baber C. (2005). A tool to assess the comfort of wearable computers. Human Factors, 47(1), 77–91.

- Koumpouros Y, & Kafazis T. (2019). Wearables and mobile technologies in Autism Spectrum Disorder interventions: A systematic literature review. Research in Autism Spectrum Disorders, 66, 101405.

- Lazarus RS, Speisman JC, & Mordkoff AM (1963). The relationship between autonomic indicators of psychological stress: Heart rate and skin conductance. Psychosomatic Medicine, 25(1), 19–30.

- Li Z, Snieder H, Su S, Ding X, Thayer JF, Treiber FA, & Wang X. (2009). A longitudinal study in youth of heart rate variability at rest and in response to stress. International Journal of Psychophysiology : Official Journal of the International Organization of Psychophysiology, 73(3), 212–217. 10.1016/j.ijpsycho.2009.03.002

- Lu G, Yang F, Taylor JA, & Stein JF (2009a). A comparison of photoplethysmography and ECG recording to analyse heart rate variability in healthy subjects. Journal of Medical Engineering & Technology, 33(8), 634–641. 10.3109/03091900903150998

- Lu G, Yang F, Taylor JA, & Stein JF (2009b). A comparison of photoplethysmography and ECG recording to analyse heart rate variability in healthy subjects. Journal of Medical Engineering & Technology, 33(8), 634–641. 10.3109/03091900903150998

- Macari SL, Vernetti A, & Chawarska K. (2020). Attend Less, Fear More: Elevated Distress to Social Threat in Toddlers With Autism Spectrum Disorder. Autism Research.

- Mazefsky CA (2015). Emotion regulation and emotional distress in autism spectrum disorder: Foundations and considerations for future research. Springer.

- Muaremi A, Arnrich B, & Tröster G. (2013). Towards measuring stress with smartphones and wearable devices during workday and sleep. BioNanoScience, 3(2), 172–183.

- Mukhopadhyay SC (2015). Wearable Sensors for Human Activity Monitoring: A Review. IEEE Sensors Journal, 15(3), 1321–1330. 10.1109/JSEN.2014.2370945

- Nazneen F, Boujarwah FA, Sadler S, Mogus A, Abowd GD, & Arriaga RI (2010). Understanding the challenges and opportunities for richer descriptions of stereotypical behaviors of children with ASD: A concept exploration and validation. Proceedings of the 12th International ACM SIGACCESS Conference on Computers and Accessibility, 67–74.

- Northrup JB, Goodwin M, Montrenes J, Vezzoli J, Golt J, Peura CB, Siegel M, & Mazefsky C. (2020). Observed emotional reactivity in response to frustration tasks in psychiatrically hospitalized youth with autism spectrum disorder. Autism, 24(4), 968–982.

- Nuske HJ, Finkel E, Hedley D, Parma V, Tomczuk L, Pellecchia M, Herrington J, Marcus SC, Mandell DS, & Dissanayake C. (2019). Heart rate increase predicts challenging behavior episodes in preschoolers with autism. Stress, 1–9.

- Nuske HJ, Hedley D, Tomczuk L, Finkel E, Thomson P, & Dissanayake C. (2018). Emotional Coherence Difficulties in Preschoolers with Autism: Evidence from Internal Physiological and External Communicative Discoordination.

- Nuske HJ, Pennington J, Goodwin MS, Sultanik E, & Mandell DS (2020). A m-Health Platform for Teachers of Children with Autism to Support Emotion Regulation. INSAR 2020 Virtual Meeting. https://insar.confex.com/insar/2020/meetingapp.cgi/Paper/35440

- Nuske HJ, Vivanti G, & Dissanayake C. (2013). Are emotion impairments unique to, universal, or specific in autism spectrum disorder? A comprehensive review. Cognition & Emotion, 27(6), 1042–1061. 10.1080/02699931.2012.762900

- Parak J, Tarniceriu A, Renevey P, Bertschi M, Delgado-Gonzalo R, & Korhonen I. (2015). Evaluation of the beat-to-beat detection accuracy of PulseOn wearable optical heart rate monitor. 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 8099–8102. 10.1109/EMBC.2015.7320273

- Picard RW (2009). Future affective technology for autism and emotion communication. Philosophical Transactions of the Royal Society B: Biological Sciences, 364(1535), 3575–3584. 10.1098/rstb.2009.0143

- Shcherbina A, Mattsson CM, Waggott D, Salisbury H, Christle JW, Hastie T, Wheeler MT, & Ashley EA (2017). Accuracy in wrist-worn, sensor-based measurements of heart rate and energy expenditure in a diverse cohort. Journal of Personalized Medicine, 7(2), 3.

- Shin G, Jarrahi MH, Fei Y, Karami A, Gafinowitz N, Byun A, & Lu X. (2019). Wearable activity trackers, accuracy, adoption, acceptance and health impact: A systematic literature review. Journal of Biomedical Informatics, 93, 103153. 10.1016/j.jbi.2019.103153

- Stahl SE, An H-S, Dinkel DM, Noble JM, & Lee J-M (2016). How accurate are the wrist-based heart rate monitors during walking and running activities? Are they accurate enough? BMJ Open Sport & Exercise Medicine, 2(1), e000106.

- Tarvainen MP, Niskanen J-P, Lipponen JA, Ranta-aho PO, & Karjalainen PA (2014). Kubios HRV – Heart rate variability analysis software. Computer Methods and Programs in Biomedicine, 113(1), 210–220. 10.1016/j.cmpb.2013.07.024

- Tessier M-P, Pennestri M-H, & Godbout R. (2018). Heart rate variability of typically developing and autistic children and adults before, during and after sleep. International Journal of Psychophysiology, 134, 15–21.

- Thayer JF, Ahs F, Fredrikson M, Sollers JJ, & Wager TD (2012). A meta-analysis of heart rate variability and neuroimaging studies: Implications for heart rate variability as a marker of stress and health. Neuroscience & Biobehavioral Reviews, 36(2), 747–756.

- Van Laarhoven TR, Johnson JW, Andzik NR, Fernandes L, Ackerman M, Wheeler M, Melody K, Cornell V, Ward G, & Kerfoot H. (2021). Using Wearable Biosensor Technology in Behavioral Assessment for Individuals with Autism Spectrum Disorders and Intellectual Disabilities Who Experience Anxiety. Advances in Neurodevelopmental Disorders, 5(2), 156–169.

- Van Steensel FJ, Bögels SM, & Perrin S. (2011). Anxiety disorders in children and adolescents with autistic spectrum disorders: A meta-analysis. Clinical Child and Family Psychology Review, 14(3), 302.

- Vanderwal T, Kelly C, Eilbott J, Mayes LC, & Castellanos FX (2015). Inscapes: A movie paradigm to improve compliance in functional magnetic resonance imaging. NeuroImage, 122, 222–232.

- Verkuil B, Brosschot JF, Tollenaar MS, Lane RD, & Thayer JF (2016). Prolonged non-metabolic heart rate variability reduction as a physiological marker of psychological stress in daily life. Annals of Behavioral Medicine, 50(5), 704–714.

- Wajciechowski J, Gayle R, Andrews R, & Dintiman G. (1991). The accuracy of radio telemetry heart rate monitor during exercise. Clinical Kinesiology, 45(1), 9–12.

- Walker RK, Hickey AM, & Freedson PS (2016). Advantages and Limitations of Wearable Activity Trackers: Considerations for Patients and Clinicians. Clinical Journal of Oncology Nursing, 20(6), 606–610. 10.1188/16.cjon.606-610

- Wang R, Blackburn G, Desai M, Phelan D, Gillinov L, Houghtaling P, & Gillinov M. (2016). Accuracy of Wrist-Worn Heart Rate Monitors. JAMA Cardiology. http://jamanetwork.com/journals/jamacardiology/fullarticle/2566167

- Wilhelm FH, & Grossman P. (2010). Emotions beyond the laboratory: Theoretical fundaments, study design, and analytic strategies for advanced ambulatory assessment. Biological Psychology, 84(3), 552–569. 10.1016/j.biopsycho.2010.01.017

- Xie J, Wen D, Liang L, Jia Y, Gao L, & Lei J. (2018). Evaluating the Validity of Current Mainstream Wearable Devices in Fitness Tracking Under Various Physical Activities: Comparative Study. JMIR MHealth and UHealth, 6(4), e94. 10.2196/mhealth.9754
