Abstract

Background

Artificial intelligence (AI) is increasingly used to support bedside clinical decisions, but its output must be presented in usable ways within clinical workflow. Graphical user interfaces (GUIs) are the front-facing displays that communicate AI output, but clinicians are not routinely invited to participate in their design, which limits the potential of AI solutions.

Purpose

To inform the early, user-engaged design of a GUI prototype that displays AI predictions of future cardiorespiratory insufficiency (CRI) by exploring clinicians’ methods for identifying at-risk patients, their previous experience implementing new technologies into clinical workflow, and their perspectives on GUI screen changes.

Methods

We conducted a qualitative focus group study to elicit iterative design feedback from clinical end-users on an early GUI prototype display. Five online focus group sessions were held, each moderated by an expert focus group methodologist. Iterative design changes were made sequentially, and the updated GUI display was presented to the next group of participants.

Results

Twenty-three clinicians were recruited (14 nurses, 4 nurse practitioners, 5 physicians; median participant age ~35 years; 60% female; median clinical experience 8 years). Five themes emerged from thematic content analysis: trend evolution, context (risk evolution relative to vital signs and interventions), evaluation/interpretation/explanation (subtheme: continuity of evaluation), clinician intuition support, and clinical operations utility. Based on these themes, GUI display changes were made, for example, color and scale adjustments, integration of clinical information, and threshold personalization.

Conclusions

Early user-engaged design was useful in adjusting GUI presentation of AI output. Next steps involve clinical testing and further design modification of the AI output to optimally facilitate clinician surveillance and decisions. Clinicians should be involved early and often in clinical decision support design to optimize efficacy of AI tools.

Keywords: modeling, graphical user interface, focus groups

1. INTRODUCTION

Hospitalized patients requiring continuous physiologic monitoring are vulnerable and depend on technology to manage complex health problems. Clinicians are confronted with live-streaming data that require skillful cognitive integration to inform impressions of patient status. AI is increasingly used in intelligent decision support systems (IDSS). Such systems continuously process complex data to predict escalating risk sensitively and specifically, and to alert clinicians to future deterioration, including possible causes and supportive interventions.[1, 2] However, AI output must be communicated in ways that are acceptable to clinicians, easily interpretable, and supportive of clinical thinking and decisions. One way to display AI output in support of clinical care is with a front-facing GUI. Such displays are critically important in the design and deployment of IDSS that align with clinical workflow and are acceptable to end-users.[3, 4] To ensure usability and acceptability, involving clinical end-users in early-stage GUI design, even before testing and trials, is recommended. One way to incorporate clinician participation is to use focus group methods to elicit design input for staged GUI development (user-engaged iterative design).

Focus group studies are widely used to explore the intricate details of clinician perceptions and beliefs about clinical workflow.[5, 6] These methods provide a platform to share ideas, expand perceptions, and arrive at conclusions that may be entirely unforeseen by technical designers. Our research team has been developing AI to predict future CRI in monitored hospitalized patients. As we develop an early GUI prototype to communicate such information to clinicians, we conducted a qualitative study to inform the early user-engaged iterative design of the prototype by exploring clinician methods for identifying patients at risk for CRI, experience with implementing new technology into clinical workflow, and utilization challenges anticipated for a new IDSS. Findings informed iterative changes to the GUI prototype, which will next be used in CRI IDSS clinician simulation, comparison, laboratory, and field testing.

1.1. Instability Model Development

We previously described the development of AI algorithms that generate a dynamically evolving instability (CRI) risk score from continuously streaming vital sign (VS) monitoring data in step-down unit patients.[7–10] This risk score is intended to alert clinicians to patient deterioration before it occurs (proactive rather than reactive).

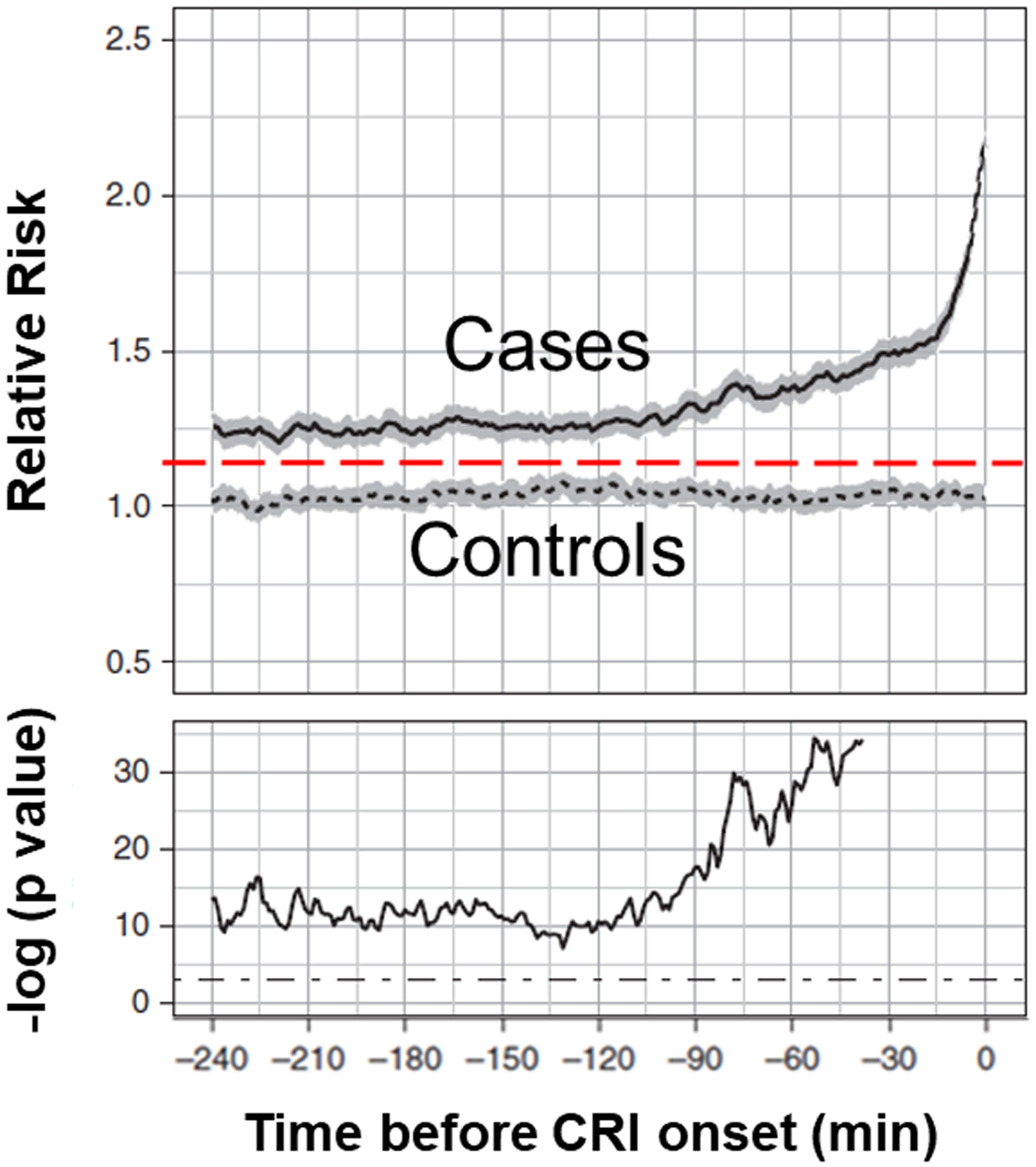

We curated a database of step-down unit patients with continuous VS monitoring data for CRI detection. CRI was defined as VS excursion (heart rate, respiratory rate, oxygen saturation [pulse oximetry], and blood pressure) beyond predetermined stability thresholds. Computed multi-domain features of the VS time series informed the CRI risk model.[11, 12] Patient risk trends were compared between cases (CRI event) and controls (no CRI event) during the 4 hours after step-down unit admission and the 4 hours before the CRI event. Random forest modeling with non-random splits distinguished cases from controls.[13] Results demonstrated that the relative risk for CRI (scale from 1 to 10) increased significantly ~2 hours before the CRI threshold was crossed (Figure 1).[7] This modeling work is ongoing; although random forest modeling was used to generate the current CRI risk score, other AI algorithms, including deep learning-based IDSS techniques, are actively being explored.
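The case/control labeling step can be sketched as follows. This is a minimal illustration, not the study's pipeline: the values in `THRESHOLDS` are assumed placeholders (the predetermined stability thresholds are not listed here), any single out-of-range reading is treated as an excursion (the actual definition may add duration or persistence rules), and the names `VitalSample`, `cri_onset`, and `pre_event_window` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Assumed placeholder stability limits (low, high); NOT the study's thresholds.
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "hr": (40.0, 140.0),    # heart rate, beats/min
    "rr": (8.0, 36.0),      # respiratory rate, breaths/min
    "spo2": (85.0, 100.0),  # pulse oximetry, %
    "sbp": (80.0, 200.0),   # systolic blood pressure, mmHg
}


@dataclass
class VitalSample:
    t_min: float                                  # minutes since step-down admission
    values: Dict[str, float] = field(default_factory=dict)


def is_excursion(sample: VitalSample,
                 thresholds: Dict[str, Tuple[float, float]] = THRESHOLDS) -> bool:
    """True if any available vital sign lies outside its (low, high) band."""
    for name, (low, high) in thresholds.items():
        value = sample.values.get(name)
        if value is not None and not (low <= value <= high):
            return True
    return False


def cri_onset(samples: List[VitalSample]) -> Optional[float]:
    """Time of the first excursion in a time-ordered series; None marks a control."""
    for s in samples:
        if is_excursion(s):
            return s.t_min
    return None


def pre_event_window(samples: List[VitalSample], onset: float,
                     hours: float = 4.0) -> List[VitalSample]:
    """Samples from the `hours` immediately preceding CRI onset."""
    start = onset - hours * 60.0
    return [s for s in samples if start <= s.t_min < onset]
```

In the study, features computed from such windows fed a random forest model; that modeling step is not reproduced here.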

Figure 1: Relative Cardiorespiratory Insufficiency Risk Sub-trajectories.

Relative cardiorespiratory insufficiency (CRI) risk sub-trajectories aggregated separately for case (solid lines) and control (dotted lines) patients during the 4 hours immediately before CRI onset; gray areas represent 95% confidence intervals. Time index 0 corresponds to the time of CRI. The bottom plot shows the P value series, on a logarithmic scale, computed from two-sample t tests at each time point. The 5% significance cutoff is shown with dot–dash straight lines.

Reprinted with permission from Chen L, Olufunmilayo O, Clermont G, Hravnak M, Pinsky MR, Dubrawski AW. Dynamic and personalized risk forecast in step-down units: Implications for monitoring paradigms. Annals of the American Thoracic Society. 2017 Mar;14(3):384–391. doi: 10.1513/AnnalsATS.201611-905OC. PMID: 28033032
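The per-time-point comparison described in the Figure 1 caption can be sketched with the Python standard library. This assumes the classical pooled-variance (Student) form of the two-sample t test and case and control trajectories already aligned on a common time grid; the function names are hypothetical. Only the t statistic and its degrees of freedom are computed here; the P value would come from the t distribution with those degrees of freedom (for example, SciPy's `scipy.stats.ttest_ind` returns both).

```python
import math
from statistics import mean, variance
from typing import List, Sequence, Tuple


def pooled_t(cases: Sequence[float], controls: Sequence[float]) -> Tuple[float, int]:
    """Two-sample t statistic with pooled variance, plus degrees of freedom."""
    n1, n2 = len(cases), len(controls)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    df = n1 + n2 - 2
    # Pooled variance weights each group's sample variance by its own df.
    sp2 = ((n1 - 1) * variance(cases) + (n2 - 1) * variance(controls)) / df
    se = math.sqrt(sp2 * (1.0 / n1 + 1.0 / n2))
    if se == 0:
        raise ValueError("both groups have zero variance")
    return (mean(cases) - mean(controls)) / se, df


def t_series(case_traj: List[List[float]],
             control_traj: List[List[float]]) -> List[Tuple[float, int]]:
    """Apply pooled_t at every time index shared by all trajectories."""
    n_points = len(case_traj[0])
    return [
        pooled_t([p[k] for p in case_traj], [p[k] for p in control_traj])
        for k in range(n_points)
    ]
```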

The “back end” CRI relative risk score was next considered for clinical interpretation as represented by the “front end” static GUI prototype.

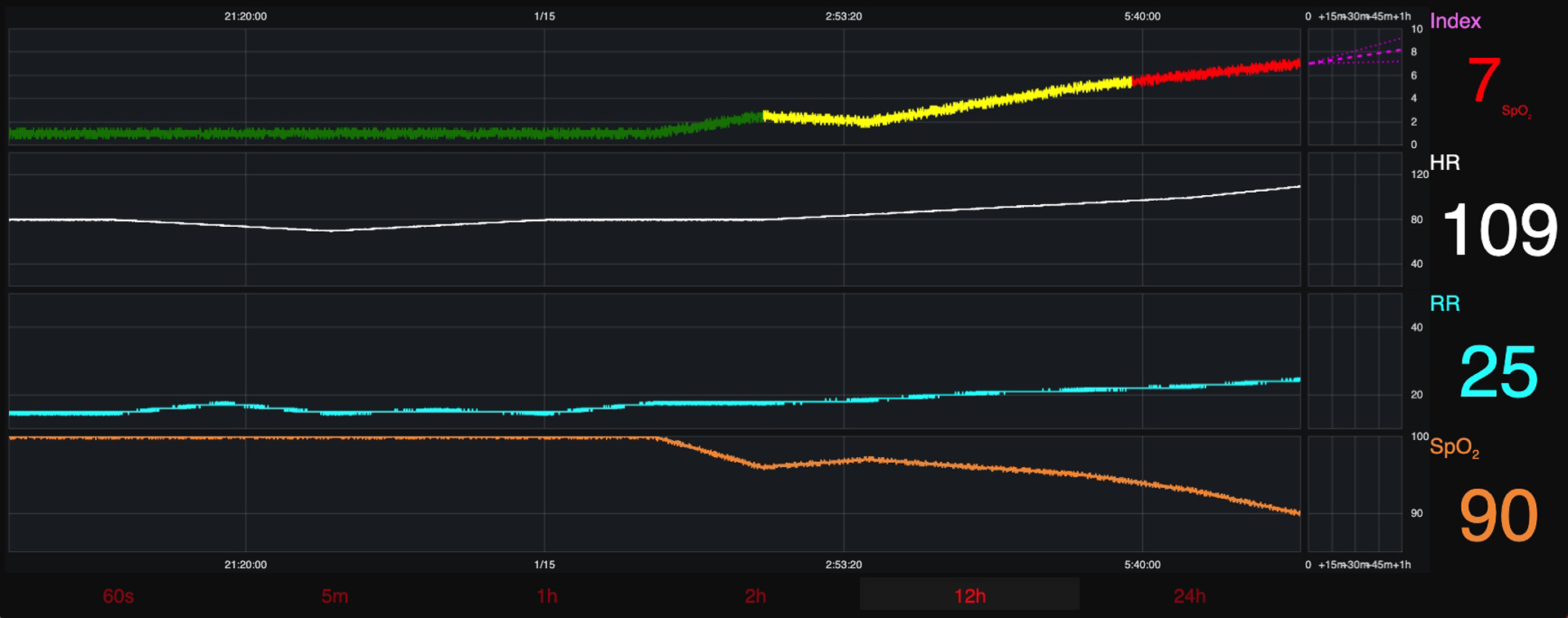

1.2. Static Graphical User Interface Prototype

In parallel with ongoing model development, we began preparing a GUI prototype. Before the focus group study was deployed, a static GUI display (version 1) (Figure 2) was curated from the insights of clinical and technical research team members, who hypothesized the information clinicians would need on the GUI to form an impression of patient status before making a therapeutic decision about CRI risk. The risk score displayed in Figure 2 represents an individual’s relative risk for CRI and is updated and plotted every 20 seconds. This static graphic was the starting point for focus group discussions.

Figure 2: Graphical User Interface (Version 1).

Graphical User Interface (Version 1)- presented to round 1 participants.

2. MATERIALS AND METHODS

2.1. Study Design

With Institutional Review Board approval, we conducted a qualitative focus group study to further develop the static GUI prototype design (version 1). Multiple online focus groups sought feedback from clinicians on the type and presentation of information they felt would support their recognition of patient CRI risk.

2.2. Study Participants

Eligible participants were licensed clinicians, either registered nurses (RNs) or providers, defined as clinicians with prescribing authority (nurse practitioners [NPs] and physicians [MDs]), who had experience caring for continuously monitored hospitalized patients at risk for CRI. They also needed a computer with internet access and a web camera to access the Zoom videoconferencing platform (https://zoom.us).

2.3. Participant Recruitment

Purposive recruitment was accomplished via scripted emails sent to listservs and targeted contact groups (nurses and providers, graduate nursing students from our School of Nursing, and nursing education/research groups). Snowball sampling was also used, and participants were encouraged to invite eligible colleagues. Participants could take part in up to two rounds of focus groups.

2.4. Data Collection

Focus groups were organized into peer-only groups (nurses only or providers only) and hybrid groups (both nurses and providers). This strategy enabled the researchers to examine whether peer-only results differed from hybrid group results. Each focus group was facilitated by an expert focus group methodologist (MT), using a semi-structured moderator guide (Appendix A), with a notetaker (SH or TP). Audio-only recordings were collected to validate transcription accuracy, and no identifying participant information was collected. Audio recordings were deleted after the final thematic analysis. Participants were assigned a pseudonym screen name in Zoom (e.g., Participant RN1-1-1). Each focus group lasted ~60 minutes, and participants were asked to complete a 3-minute anonymous demographic survey capturing information such as professional role and years of clinical experience.

During the first round of focus groups, the static GUI prototype was displayed and feedback was requested from the participants. Open-ended questions were used and included: 1.) How do you currently figure out which patients will experience CRI? 2.) What do you think about the GUI screen (initial reaction)? 3.) What would you change on the GUI screen to make it more informative/usable? 4.) What would keep you from taking advantage of this technology (barriers)? 5.) What would make you feel more comfortable with using this technology? 6.) How does this GUI screen make you feel about CRI? 7.) How do you see this GUI supporting your future, informed decision making? After the first round, participant requests for GUI design changes were presented to the computer scientist (AW), who made the modifications before the next round.

2.5. Data Analysis

Analysis used the electronic transcripts of the audio recordings and the handwritten notes. The transcripts were coded, reviewed, and analyzed to identify relevant emerging themes. Focus groups were repeated until thematic saturation and optimal technical changes were achieved. Thematic analysis consisted of assigning codes to each line of transcript to identify recurring themes and specific technical changes for the GUI. Coding reliability was cross-checked by the focus group methodologist, who reviewed transcripts originally coded by two separate researchers to ensure consistency and inter-rater reliability. Consensus coding was used to adjudicate disagreements and to add codes when necessary. Codes were then grouped into relevant themes that applied across all focus group discussions.

3. RESULTS

Five virtual focus groups were conducted, and 23 participants were recruited (14 RN, 4 NP, 5 MD). The median participant age was ~35 years, 60% were female, and the median clinical experience was 8 years (Table 1). Focus groups occurred over 4 months; Table 2 shows the participant distribution over the study period. Five themes emerged from thematic analysis: 1.) trend evolution, 2.) context (risk evolution relative to VS and interventions), 3.) evaluation/interpretation/explanation (subtheme: continuity of evaluation), 4.) clinician intuition support, and 5.) clinical operations utility.

Table 1: Participant Characteristics (n = 22) *.

Focus group participant characteristics across all groups.

| Variable | N (%) (n = 22) |
|---|---|
| Age | |
| 25–30 years | 4 (18%) |
| 31–40 years | 11 (50%) |
| 41–50 years | 6 (27%) |
| 51–60 years | 1 (4%) |
| Gender | |
| Female | 13 (59%) |
| Male | 9 (41%) |
| Professional Background | |
| Registered Nurse | 14 (61%) |
| Physician | 5 (22%) |
| Nurse Practitioner | 4 (17%) |
| Years of Experience | |
| 1–5 years | 8 (36%) |
| 6–10 years | 7 (32%) |
| 11–15 years | 5 (23%) |
| 16–20 years | 1 (4%) |
| >20 years | 1 (4%) |

* There were 23 participants, but only 22 completed the demographic survey; professional background is reported for all 23 participants.

Table 2: Focus Group Set-up.

Registered Nurse (RN), Nurse Practitioner (NP), Physician (MD), Graphical User Interface (GUI)

| | Group 1 (Nurse Only) | Group 2 (Provider Only) | Group 3 (Hybrid) |
|---|---|---|---|
| Round 1 | | | |
| Time spent in between round 1 and round 2 was used for incorporating GUI feedback. Recommended changes from round 1 were applied so that the most current GUI version was presented in round 2. | | | |
| Round 2 | | | |

Total of 24 participant sessions (23 unique participants; 1 MD participated twice).

The table displays the distribution of participants for all focus groups, separated by 2 rounds.

Each round was constructed based on participant availability.

3.1. Theme 1- Trend evolution.

Participants reflected on the real-world assessment routines they use to gather information about their patients’ CRI status. Change-of-shift report was noted as the most critical method of gaining insight into patient baselines, prior trajectories, and forecasts of impending instability, followed by their own detailed electronic health record (EHR) review. Participants from both roles described using disconnected components of the EHR to “go through the chart” and build their initial impression of patient stability. This method was also described as time-consuming, cumbersome, and lacking standardization from person to person, meaning that different clinicians develop their impressions using different fields throughout the chart. Additional tools used to accumulate data trends included physical assessment findings, waveforms, and diagnostic tests (used by all roles, but more so by providers).

3.1.1. Specific GUI iterative design changes.

To develop an impression of evolving trends over time, participants requested placement of multiple disparate data elements on a single, simultaneous timeline, addition of a zoom feature (screen enlargement), and selectable near-to-far trend views (from 1-hour views to extended 36- and 48-hour views of VS and risk score). Table 3 outlines analysis codes, illustrative quotes, and GUI design changes for each theme.
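One way to support the requested 1-, 36-, and 48-hour views is to slice every series (each VS and the risk score) with the same trailing window, so all panels stay on one shared timeline. The sketch below assumes time stamps in seconds, sorted ascending; `VIEW_HOURS` and `trailing_view` are hypothetical names, not part of the prototype.

```python
from bisect import bisect_left, bisect_right
from typing import List, Sequence, Tuple

# Hypothetical view presets (hours) matching the near-to-far trend views.
VIEW_HOURS = {"1h": 1, "36h": 36, "48h": 48}


def trailing_view(times_s: Sequence[float], values: Sequence[float],
                  now_s: float, hours: float) -> Tuple[List[float], List[float]]:
    """Return the (times, values) recorded in the last `hours` up to `now_s`.

    `times_s` must be sorted ascending; binary search keeps this fast even
    for 48 hours of 20-second samples.
    """
    lo = bisect_left(times_s, now_s - hours * 3600)
    hi = bisect_right(times_s, now_s)
    return list(times_s[lo:hi]), list(values[lo:hi])
```

Applying the same `now_s` and `hours` to every VS series and to the risk score keeps the panels aligned when the user switches views or zooms.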

Table 3: Illustrative Quotes and Technical Graphical User Interface Design Changes.

Vital Sign (VS), Graphical User Interface (GUI), Fraction of inspired oxygen (FiO2), Chronic Obstructive Pulmonary Disease (COPD), Medication Administration Record (MAR).

| Theme | Code | Illustrative Quotes | Technical Graphical User Interface Design Changes |
|---|---|---|---|
| 1. Trend evolution | CRI_RISK BASELINE_RISK PHYS_EXAM PROX-REMO HEMOD_HIGHTECH HEMOD_LOWTECH INTERVAL OTHER_RISK REPORT | Participant MD1-3-2: “Respiratory wise, mostly it’s just visualization of work of breathing, FiO2 that’s being required with a pulse ox equivalent, and mostly looking at actual muscles of the chest wall moving…Then cardiovascular…warm touch perfusion extremities and then most of my patients will have a bedside ultrasound to give me a good visualization of…cardiac function.” | Add: |
| | | Participant RN2-1-1: “…I’ll be honest it’s not popular with everybody, but bedside report can tell you a lot of things if you’re looking at the patient. It’s not just about the what’s on the monitor and…in a computer, it’s also looking at the patient as well, so tie all this together. Helps me…okay this patient is unstable. Keep a close eye on him, prioritize your patients, based on their acuity of issues.” | |
| | | Participant RN1-1-2: “…I mean, this would be really great as like an adjunct to the nurse doing their nursing assessment and…trending your patients, critical thinking, having something like this, especially for our newer nurses.” | |
| 2. Context | RISK_INDEX BARRIERS BENEFITS PRACTICE | Participant MD1-3-2: “…A concurrent FiO2 or…level of oxygen being applied to the patient would be helpful or…having some kind of…arrow on the graph where it says…6-liters, and then you give it 10-minutes, and all of a sudden you see 8-liters, then…15-liters, non-rebreather…It kind of shows you… that this is a respiratory failure…instead of something else. And…with an integration like another very…advanced thing is, I’d really like to see at least some drugs or the MAR…pop-up in this timeline.” | Add: |
| | | Participant MD2-3-1: “We always under appreciate how much we harm people with medicine, and to have all the medications lined up versus what their vital signs are doing would really…show some of those risks.” | |
| | | Participant MD2-2-1: “…One thing I really, really like about this…is that obviously these machine learning algorithms are simply a function of the input data and how often do we have pulse ox that are either erroneous or fall off? So, what I love about this is that if you see a change in the index, you can very quickly look to see if it’s actually clinically valid. For example, if the index goes sky high, saturations 55%, but at the same time you don’t have a confirmed tachycardia or tachypnea, it makes you sort of think maybe that was an erroneous value, so I love that it’s just not a black box number, but we actually have the data and the trends that we can refer back to.” | |
| | | Participant MD2-2-1: “…I would just love to know which clinical features are really driving that index…It says saturation there, for example, but I think it’d be really great to know which actual feature is driving the change…” | |
| | | Participant RN1-1-2: “…There’s always going to be those people that perhaps are already overwhelmed with the technology…So, they may not be as receptive to this because this is another task. Another thing that the nurses have to check at the bedside when they’re already doing a million things, but I think overall, the majority of people, as long as you didn’t ask them to do too much with it, would be happy to have another data point to look at.” | |
| | | Participant RN2-1-4: “I start to hesitate whenever it adds additional steps for bedside staff. I get concerned for compliance and then does that impact the usefulness of the tool? There is a tendency to say oh I’ve got to do this? Well, I’m already doing X, Y and Z, so I’ll keep doing what I’m doing.” | |
| | | Participant RN1-1-1: “…For example, somebody with COPD…They can bounce…between 88%…94%, but you have somebody else, like me, where you go down to 88% and we’re going to be struggling…Somebody that lives at certain ranges…does this account for any kind of inputs from us, and can we tailor it to certain patients?” | |
| 3. GUI evaluation / interpretation / explanation | CHANGES CONFIDEN CRITIC_THINK ALARM_FATIG WEIGHT ED LIKE | Participant RN1-1-4: “They may feel like…this doesn’t look that good, but I’m going to wait until it gets a little bit worse before I feel confident to call the physician. So, I think having this very tangible number that sort of justifies the phone call might make them feel confident to escalate earlier.” | |
| | | Participant RN1-1-1: “I’m going to be a pessimist on this. I think that new nurses need to learn how to critically think and if something like this is available to them, they’re not going to learn how to critically think…” | |
| | | Participant RN1-1-2: “…. I do think there’s a lot of code shaming for people that when they call for help, you know, why did you call this and I think it gains confidence there. But it doesn’t take away the human aspect of having to physically assess your patient, having to look at them…You can’t go off from just numbers for a patient, you need to go and look at them.” | |
| | | Participant MD2-2-1: “I guess one thing that I worry about, is that this as it is right now, I think puts us at risk of pretty significant alarm fatigue. And I think that could be alleviated by understanding what the y-axis…or even what the numbers mean in terms of score would be a little bit helpful.” | |
| 3a. Continuity of evaluation | PRIORITIZE HANDOFF | Participant MD1-3-2: “[Electronic Health Record] is just awful at allowing me to trend vital signs and takes five minutes to load in three days’ worth of stuff. So, you know, that alone would be extremely beneficial, just to have an easier way to view.” | There were no specific changes for this theme. |
| | | Participant RN2-3-4: “The physician pulled up a graph on the computer where it…trended the vital signs over a three-day period of time and then it was like blatantly obvious what was going on with the patient… It was really cool to see that…I think when you…take care of a patient for 12-hours and then pass to the next person, some of these very obvious trends can…get lost…as you pass the patient back and forth…” | |
| 4. Clinical intuition support | GUT_FEELING | Participant RN2-1-4: “…You know it’s kind of that gut feeling and we’ve looked at the trends…and think well, that may not be significant, kind of justify it in our heads…” | There were no specific changes for this theme. |
| | | Participant RN2-1-1: “This can be helpful in a neuro environment or cardiac environment like [participant RN2-1-4] said. There are times, where you bet, you’ll think something’s wrong. But you don’t have enough data to back it up, it’s like okay let’s continue to monitor the patient, and then two to four hours later it’s worse. Well now, you…have data to back it up.” | |
| | | Participant CRNP2-3-1: “The only thing that I would add is that, with all of that stuff that is objective there’s also just a gut feeling that you use a lot.” | |
| 5. Clinical operations utility | EVIDENCE STAFF | Participant RN2-1-2: “…I’m a big fan of the monitors in the hallway…near the patient rooms, and I worry if it’s…in the nurse’s station that I’ll never see it. I don’t get to go in there very often on a busy day, and of course we have people who can come alert us, but it is more helpful if we can see it ourselves.” | |
| | | Participant RN2-1-1: “…I know that clinicians and unit directors employ the use of dashboards so they may be able to look at this from a dashboard point of view…I know the charge nurses, clinicians, and unit managers really rely on the dashboards so that would be a place that it would need to be as well…” | |
| | | Participant MD2-2-1: “…When I’ve talked to some of the dinosaur attendings, particularly surgeons, they always worry about…replacing clinician gestalt…I think if there’s always that ability to clinically validate the scores that are generated, I think that would really, really help with buy in…” | |
| | | Participant RN2-1-4: “I have interest in this from a staffing and assignment perspective…this would be a helpful tool…I know that unit staffing doesn’t always mirror actual patient need as much as we would like it to, but this could give very real data to show a population on a certain unit, their patients are always running in the eights and nines. Maybe that gives leadership some data to say we need more or we need X, Y, or Z because our patients, not only do we feel they have a higher acuity we can prove it. And then, also for assignment making you know, do we, you know give nurses higher acuity patients and less of them, do we dispense…the number of patients on the floor with high acuity and mix those with low…But it does give us very real numbers to kind of make some of those decisions that otherwise you know we do on the day to day.” | |
| | | Participant MD2-3-1: “When it’s implemented, I really wouldn’t put any real faith into it right away. You know roll it out slowly, with some good kind of education and take some time to catch on. But I think you know all around the country people have been trying to make these early warning systems and they’ve all been fraught with different problems…making them clinically difficult to implement…” | |

Most GUI changes can be seen by comparing Figure 2 (version 1) with Figures 3a and 3b. Some changes are interactive and cannot be appreciated in a static figure; these were applied to the final GUI prototype in the interactive development software and are denoted as (**).

Table 3 presents participant quotes pooled across both rounds; the quotes were analyzed to identify the qualitatively reported themes.

3.2. Theme 2- Context (risk evolution relative to VS and interventions).

Participants discussed the value of being able to view the evolution of CRI risk within the context of VS changes. They highlighted that viewing the VS and risk score at concurrent time points is more informative. For example, when the risk score trended upward, they could see in the same view that SpO2 was trending down and respiratory rate was trending up. Participants also wanted to see risk evolution within the context of interventions. They wanted to know when an intervention (sedation, narcotics, oxygen therapy, vasoactive medications, or volume expanders) was given, to establish whether external factors were causing or mitigating the risk. Although additional information was requested, they emphasized the need for automation and were strongly opposed to adding to their existing workload. They noted that past risk score systems had failed to be adopted because they required additional documentation specific to risk computation rather than to routine clinical care. Participants also discussed benefits, barriers, and clinical practice implications that may be relevant for implementing the IDSS. Notably, they highlighted the importance of CRI risk “personalization”: each patient has their own set of baseline VS, and it is essential that the AI algorithm evolve risk from that personalized baseline (e.g., account for baseline tachypnea in a patient with chronic obstructive pulmonary disease).
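The personalization participants described can be illustrated by centering an alert band on a patient’s own baseline instead of a single population range. This is a hypothetical sketch, not the study’s algorithm: the baseline is assumed to be the median of readings from a period judged stable, and the `allowance` and absolute `hard_limits` values are placeholders chosen for illustration.

```python
from statistics import median
from typing import Sequence, Tuple

Band = Tuple[float, float]


def personalized_band(baseline: Sequence[float], default: Band,
                      allowance: float, hard_limits: Band) -> Band:
    """Alert band of +/- `allowance` around the patient's baseline median,
    clipped so it never extends beyond the absolute `hard_limits`.
    Falls back to the population `default` when no baseline exists."""
    if not baseline:
        return default
    b = median(baseline)
    return max(hard_limits[0], b - allowance), min(hard_limits[1], b + allowance)


def in_band(value: float, band: Band) -> bool:
    """True if a reading lies within the (low, high) band, inclusive."""
    return band[0] <= value <= band[1]
```

With a 4-point allowance and limits of 85–100%, a patient with COPD whose SpO2 baseline is about 90% gets a band of 86–94%, so a reading of 88% is expected, whereas the same reading falls outside the 93–100% band of a patient whose baseline is 97%.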

3.2.1. Specific GUI iterative design changes.

Participants recommended automatically adding interventions from the EHR (medications, respiratory therapies, and laboratory values) to the display, shown simultaneously and on the same time continuum as the risk score, to provide contextual explanation for risk score changes. Figure 3a displays GUI design changes made to include medical interventions. Participants also requested an information tab describing the AI algorithm features used to predict CRI risk. They felt it was important to know the underpinnings of risk score development and were reluctant to trust black-box output.
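Placing interventions on the same time continuum amounts to aligning timestamped EHR events with the risk score series. A minimal sketch, assuming both are available with comparable time stamps (how the prototype actually retrieves EHR data is not described here); `Event` and `annotate_events` are hypothetical names, and each event is paired with the most recent risk score at or before it.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Event:
    t_s: float   # time stamp in seconds on the risk score clock
    label: str   # e.g. "O2 6 L/min" (illustrative)


def annotate_events(risk_times: Sequence[float], risk_scores: Sequence[float],
                    events: List[Event]) -> List[Tuple[float, str, Optional[float]]]:
    """Pair each event, in time order, with the latest risk score at or
    before it; events earlier than the first risk sample get None."""
    out = []
    for ev in sorted(events, key=lambda e: e.t_s):
        i = bisect_right(risk_times, ev.t_s) - 1
        out.append((ev.t_s, ev.label, risk_scores[i] if i >= 0 else None))
    return out
```

A GUI could then draw each labeled marker on the risk score panel, for example the stepwise oxygen escalation (6 L, then 8 L, then a 15 L non-rebreather) described by one participant in Table 3.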

Figure 3a: Changed Graphical User Interface (Version 2).

Changed Graphical User Interface (Version 2)- includes requested changes from round 1. This version was presented to round 2 participants.

3.3. Theme 3- GUI Evaluation/interpretation/explanation.

Participants discussed information arrangement, color, fonts, size, and axis scales, and requested changes to improve readability, interpretation, and evaluation. Participants also highlighted the importance of boosting clinician confidence in decisions to escalate their concerns, and discussed anticipated staff education needs (alarm management and critical thinking skills). Boosting confidence has major clinical implications, as participants admitted to sometimes delaying escalation while they gathered more information to confirm that their patient was in fact doing poorly. There was a rich discussion about the validity of the clinical data (real versus artifact) driving the risk score. Participants also wanted to understand the “delta” (change over time) of risk evolution, which is important for differentiating risk that changes rapidly from risk that evolves slowly.
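The “delta” participants asked about can be expressed as the change in risk score per hour over a trailing window. The sketch below is illustrative only: the lookback window and the `rapid_per_hour` cutoff separating rapid from slow change are assumed values, not thresholds from the study, and the function names are hypothetical.

```python
from bisect import bisect_left
from typing import Optional, Sequence


def risk_delta(times_s: Sequence[float], scores: Sequence[float],
               now_idx: int, lookback_s: float) -> Optional[float]:
    """Risk score change per hour between the earliest sample inside the
    trailing window and the sample at `now_idx` (times strictly increasing).
    Returns None when the window holds no earlier sample."""
    j = bisect_left(times_s, times_s[now_idx] - lookback_s)
    if j >= now_idx:
        return None
    elapsed = times_s[now_idx] - times_s[j]
    return (scores[now_idx] - scores[j]) * 3600 / elapsed


def classify_delta(rate_per_hour: float, rapid_per_hour: float = 4.0) -> str:
    """Label the trend; the cutoff is an assumed placeholder."""
    if rate_per_hour >= rapid_per_hour:
        return "rapid rise"
    if rate_per_hour > 0:
        return "slow rise"
    return "stable or falling"
```

With these placeholder settings, a rise from 4 to 8 over the last 30 minutes reads as a rapid rise (8 points per hour), while the same endpoint viewed over a 2-hour window averages 3 points per hour.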

3.3.1. Specific GUI iterative design changes.

Participants requested enlargement of the risk score trajectory column, color brightness modifications, y-axis standardization, and the addition of blood pressure and temperature visualizations to the list of VS that influence the risk score trajectory. See Figure 3b for the final GUI screen design.

Figure 3b: Final Graphical User Interface (Version 3).

Final Graphical User Interface (Version 3)- includes requested changes from round 1 and 2 focus groups.

3.3.1.1. Subtheme 3a- Continuity of evaluation.

Nurses discussed the limitations of shift work and the nature of handoff. Some nursing shifts can be as short as 4 hours, limiting the time available to collate a patient’s historical data, provide nursing care, and then hand the patient off to an oncoming nurse. Although this theme overlaps to some degree with trend evolution, it is distinct because nurses were discussing ways to prevent trends from being “lost” during patient handoff. Participants were hopeful that the GUI would support thorough handoff by improving continuity of evaluation and thereby inform their impressions of patient risk.

3.4. Theme 4- Clinical intuition support.

Participants commented on how important their “gut feelings,” or clinical intuition, were when assessing patient instability risk. The risk score trajectory was noted as an objective measure that could help support their intuition. They felt that what is frequently referred to as clinical intuition is actually the processing of, and the drawing of connections between, subtly evolving yet disparate pieces of information. They viewed the risk score trajectory as an additional criterion to support the subtle evolutions that shape their impression of patient status. Having an objective value representing such subtleties could help to confirm progression toward a CRI state.

3.5. Theme 5- Clinical operations utility.

Although not directly prompted, discussion among both roles, but more so among nurses, turned toward how the evolving risk score trajectory could assist nurses in making patient assignments (e.g., risk score trajectory used as a measure of acuity), inform synergistic throughput decisions (e.g., support for transfer to a higher level of care), and support management of resource allocation (e.g., nursing care skill set, surveillance level, and supportive technology). These topics reflect administrative uses of risk information. Participants also focused on implementation details, such as the most suitable location for the GUI in each nursing unit and whether multiple locations should be considered.

3.5.1. Specific GUI iterative design changes.

Requests included adding patient tabs across the top of the GUI screen to toggle between patients, and incorporating add/remove patient functionality to customize nursing assignment or role (charge nurse versus bedside nurse).

4. DISCUSSION

We demonstrated that clinical end-users readily engage and provide feedback on an AI-derived IDSS. Such engagement resulted in fundamental changes to the display and functionality of the baseline static GUI initially developed by the researchers, underscoring the need to involve end-users in the early development of AI-based IDSS tools.

Analysis identified five emerging themes: trend evolution, context, evaluation/interpretation/explanation (subtheme: continuity of evaluation), clinical intuition support, and clinical operations utility. Early-stage qualitative work that engages research teams with clinical experts is a critical but often overlooked component of health care-focused AI research.[14–18] Studies demonstrate that AI models identify predictive patterns in heterogeneous patient data.[8, 19–22] However, the impact of AI-based technologies on patient outcomes remains under-realized because of implementation challenges in the clinical setting.[14, 23, 24] A scoping review found that clinician involvement in every stage of IDSS design is currently lacking, and our study helps to fill this gap.[25]

Previous research has applied user-engaged methods to iteratively design IDSS that mediate between humans and AI.[16] When clinician input is elicited late in the process or not at all, technologies are used in unforeseen ways once introduced into the clinical environment.[16, 18] We intentionally sought clinician input early in GUI prototype development, before further clinical testing of the device. Other research also supports the importance of early involvement and idea sharing before any dynamic prototypes are designed.[26]

Other researchers have confirmed the value of clinician input for GUI design. Keim-Malpass et al. demonstrated the importance of this step when their team observed little impact on patient outcomes after clinically implementing their well-validated continuous predictive analytics monitoring tool (CoMET®).[18] They found that CoMET® output was underutilized and highlighted the need to address system design and implementation challenges before meaningful use and clinical action could occur.[18] Similar to our findings, their focus groups noted that understanding the science behind the tool (context), trusting the data output (context), integrating risk findings with the existing EHR (context and trend evolution), and optimizing clinical pathways (clinical operations utility) are critical considerations for successful adoption and implementation of predictive models in a complex clinical milieu.[18]

Work by Barda et al. also confirmed several of our thematic findings. They proposed a framework for the user-centered design of GUI displays and used focus groups to elicit clinician feedback on it. Their framework focused on four factors: the clinicians using the system, the environment or context of system use, user need for explanations associated with the system, and information representation by a GUI.[16, 27] Our results confirm the importance of these factors. Although they used a hypothetical model rather than a validated model like ours, their focus groups’ feedback aligned with our finding that the value clinicians place on context cannot be overemphasized. Clinicians value visually assessing how heavily a factor contributes to a patient’s risk and seeing information presented within the context of interventions from the EHR. They also seek the ability to quantify change in risk from a patient-specific baseline and to zoom in to explore trends and details more deeply. Finally, the value of displays that minimize clinician workload and cognitive burden was a theme common to both studies.

Interestingly, we found two themes not mentioned in the studies above. First, our participants noted the value of a GUI that supports their clinical intuition by providing objective confirmation of their imprecise “sense” of changing patient status. This could foster greater confidence in acting on their impression of status change to escalate care or apply interventions. Second, participants across groups consistently shared comments related to the theme of clinical operations utility. They saw the evolving risk information as going beyond informing impressions and decisions for a single patient, with utility in other aspects of clinical administration such as making assignments, assessing shift-based patient acuity, and making triage decisions.

These findings are important for refining our early GUI prototype before further evaluation in the dynamic clinical setting. However, much work remains to determine how IDSS information can be used to shift clinician thinking from reactive to proactive, enhance communication among interprofessional team members, link risk scores to appropriate clinical interventions, and include only information that adds value rather than cognitive burden. Ongoing post-implementation evaluation of the impact that early-warning risk prediction systems have on patient outcomes and clinical processes will be needed.[1, 28] Clinicians will require timely feedback about the sensitivity and specificity of the risk scores that they are being asked to use in daily practice. Methods of evaluation should be transparent and interpretable so that clinicians can have confidence in the accuracy of the risk score as close to real time as possible. Limitations of this work include conducting the focus groups virtually, which limits appreciation of body language and facial expressions. We also recruited from only one health care system, which limits generalizability.
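In its simplest form, the sensitivity and specificity feedback discussed in the preceding paragraph amounts to comparing alerts with observed outcomes. The Python sketch below is a hypothetical illustration only, not the evaluation method used or planned in this study. It assumes a single fixed alert threshold (a patient is flagged when the risk score meets or exceeds it) and one binary outcome per patient indicating whether instability occurred; the function name, threshold rule, and example data are our own assumptions.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(
    risk_scores: Sequence[float],
    became_unstable: Sequence[bool],
    threshold: float,
) -> Tuple[float, float]:
    """Sensitivity and specificity of a risk score at one alert threshold.

    Hypothetical rule (an assumption, not the study's method): a patient is
    flagged when risk_score >= threshold.
    """
    if len(risk_scores) != len(became_unstable):
        raise ValueError("risk_scores and became_unstable must have equal length")

    tp = fn = tn = fp = 0
    for score, unstable in zip(risk_scores, became_unstable):
        flagged = score >= threshold
        if unstable and flagged:
            tp += 1  # true positive: flagged and became unstable
        elif unstable:
            fn += 1  # false negative: became unstable but was not flagged
        elif flagged:
            fp += 1  # false positive: flagged but remained stable
        else:
            tn += 1  # true negative: not flagged and remained stable

    # Sensitivity = TP / (TP + FN); undefined (NaN) if no patient became unstable
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    # Specificity = TN / (TN + FP); undefined (NaN) if every patient became unstable
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Hypothetical example: six patients, alert threshold 0.5
print(sensitivity_specificity([0.9, 0.7, 0.2, 0.4, 0.8, 0.1],
                              [True, True, False, False, False, False], 0.5))
# → (1.0, 0.75)
```

Recomputing these two values over a rolling window of recent patients is one way such feedback could be kept close to real time; personalized thresholds would require repeating the calculation for each threshold setting.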

In summary, we found that engaging clinicians early in the iterative design of a front-facing GUI for an IDSS is helpful in adapting such tools to encourage greater clinical usage. Research seeking to develop technologies that target clinicians as end-users should embed user-engaged iterative design from the beginning of a study and throughout its entirety to ensure value, ease of use, and successful clinical uptake.

SUMMARY TABLE

| What is already known on this topic? | What did this study add to our knowledge? |
|---|---|
| 1. Artificial intelligence and predictive analytic methods are being used widely in health care. | 1. Health care professionals are more than willing to collaborate with researchers who design the technologies that these professionals will likely be asked to use in the future. |
| 2. Artificial intelligence outputs are often not interpretable. | 2. Health care professionals are most familiar with clinical workflow practices; if researchers do not investigate these practices, poor uptake will result. |
| 3. Health care professionals are not involved early and frequently enough to influence the scope and purpose of front-facing graphical user interfaces that stand between artificial intelligence outputs and the end-user (nurses and physicians). | 3. Clinical workflow is dynamic and fluid; only those who work in these complex environments can provide the contributions needed to inform innovative technology designs. Without expert clinician input, technologies will not be used as intended, if at all. |

ACKNOWLEDGEMENT

We would like to thank the clinician participants of this research study for their time and commitment to achieving better patient outcomes. Their contributions were invaluable. We would also like to thank Dr. Elisabeth George PhD, RN for her assistance with recruitment.

FINANCIAL SUPPORT

This work was supported by grants from the National Institutes of Health (grant numbers R01NR 013912, T32NR008857, 1F31NR019725-01A1).

Abbreviations:

- AI: Artificial Intelligence
- CRI: Cardiorespiratory Insufficiency
- EHR: Electronic Health Record
- GUI: Graphical User Interface
- IDSS: Intelligent Decision Support System
- NP: Nurse Practitioner
- MD: Physician
- RN: Registered Nurse
- VS: Vital Sign

APPENDIX A: Focus Group Semi-Structured Moderator Guide

Graphical User Interface (GUI), Cardiorespiratory Insufficiency (CRI)

Semi-structured moderator guide used by the expert focus group methodologist to facilitate discussions during the study.

| Welcome! My name is [facilitator], I will be facilitating today’s discussion. I am assisted by [name of researcher], in case I get stuck on any of the technical aspects of the project we are showing you, and [name of researcher], who will be my notetaker and tech support. They will not be participating in the discussion. |

| Thank you so much for being here. You have been invited to participate in this focus group because you are a licensed clinician (nurse, physician, physician assistant, or nurse practitioner) who has past or present experience caring for hospitalized patients at risk of cardiorespiratory insufficiency (CRI). |

| Today we will explore your experiences with identifying patients at risk for CRI and with using new technology in clinical workflow practices. We will also be getting your feedback about a graphical user interface (GUI) prototype. This GUI may help clinicians better recognize patients approaching CRI, and it provides a user interface for our machine learning models that predict future instability risk. |

| While we have some things in common, we also have differences – where we come from, the experiences we have had. That should not prevent you all from participating today. I want to hear from everyone. I am not here to judge you, and there is no right or wrong answer to any of the questions I will be asking. I will respect you and I know that you will be respectful of each other, even if you have a different reaction/opinion, or disagree. |

| We’ll be talking for about an hour, and one of my jobs is to make sure we stick to that timeline. Our discussion will be recorded using the Zoom recording features; before we proceed with analysis, the video portion of the recording will be deleted. And once the recording is transcribed, it will be destroyed so your answers cannot be connected with you. |

| The transcripts will be deidentified and stored in a secure location before, during, and after the final analysis. The results of this discussion will be published for research purposes. By remaining in the room, you give your consent to be recorded and to have your comments included in any reports or manuscripts that come out of this work. Without attribution, of course. |

| Any questions about that? Feel free to get up and stretch if you want, drink your water, … Okay – Let’s begin. I’d like to start by having you tell us your name and where you work. Thanks! Now we are going to show you what a future GUI might look like. [name of researcher], please start screen sharing. |

| What you are looking at comes from continuous monitoring data collected from the patient cardiorespiratory monitor. |

| Data include vital signs (heart rate, respiratory rate, blood pressure, and pulse oximetry) that can be viewed at 60-second, 5-minute, and 1-, 2-, 12-, and 24-hour intervals. |

| Calculations applied to the vital sign data help the machine learning model predict which patients will become unstable in the future and which will not. The score that results from these calculations can help clinicians determine whether a patient needs to be evaluated more closely and whether nursing or medical intervention is warranted. |

| Screen Present: |

| Without the GUI Screen Present: |

Footnotes

ETHICAL STANDARDS

This study was approved by the University of Pittsburgh Institutional Review Board.

DECLARATIONS OF INTEREST

None.

REFERENCES

- [1]. Keim-Malpass J, Moorman LP, Nursing and precision predictive analytics monitoring in the acute and intensive care setting: An emerging role for responding to COVID-19 and beyond, Int J Nurs Stud Adv, 3 (2021) 100019.

- [2]. Pinsky MR, Clermont G, Hravnak M, Predicting cardiorespiratory instability, Crit Care, 20 (2016) 70.

- [3]. Kashfi H, Applying a user centered design methodology in a clinical context, Stud Health Technol Inform, 160 (2010) 927–931.

- [4]. Wright MC, Borbolla D, Waller RG, et al., Critical care information display approaches and design frameworks: A systematic review and meta-analysis, J Biomed Inform X, 3 (2019).

- [5]. Bristowe K, Siassakos D, Hambly H, et al., Teamwork for clinical emergencies: interprofessional focus group analysis and triangulation with simulation, Qual Health Res, 22 (2012) 1383–1394.

- [6]. Burke RV, Demeter NE, Goodhue CJ, et al., Qualitative assessment of simulation-based training for pediatric trauma resuscitation, Surgery, 161 (2017) 1357–1366.

- [7]. Chen L, Ogundele O, Clermont G, et al., Dynamic and Personalized Risk Forecast in Step-Down Units. Implications for Monitoring Paradigms, Ann Am Thorac Soc, 14 (2017) 384–391.

- [8]. Chen L, Dubrawski A, Clermont G, et al., Modelling Risk of Cardio-Respiratory Instability as a Heterogeneous Process, AMIA Annu Symp Proc, 2015 (2015) 1841–1850.

- [9]. Chen L, Dubrawski A, Hravnak M, et al., 41: Forecasting cardio-respiratory instability in monitored patients: a machine learning approach, Crit Care Med, 42 (2014) A1378–A1379.

- [10]. Devita MA, Bellomo R, Hillman K, et al., Findings of the first consensus conference on medical emergency teams, Crit Care Med, 34 (2006) 2463–2478.

- [11]. Lake DE, Richman JS, Griffin MP, et al., Sample entropy analysis of neonatal heart rate variability, Am J Physiol Regul Integr Comp Physiol, 283 (2002) R789–797.

- [12]. Pincus SM, Approximate entropy as a measure of system complexity, Proc Natl Acad Sci U S A, 88 (1991) 2297–2301.

- [13]. Breiman L, Random Forests, Machine Learning, 45 (2001) 5–32.

- [14]. Kelly CJ, Karthikesalingam A, Suleyman M, et al., Key challenges for delivering clinical impact with artificial intelligence, BMC Med, 17 (2019) 1–9.

- [15]. Ghassemi M, Naumann T, Schulam P, et al., A review of challenges and opportunities in machine learning for health, AMIA Summits on Translational Science Proceedings, (2020) 191.

- [16]. Barda AJ, Horvat CM, Hochheiser H, A qualitative research framework for the design of user-centered displays of explanations for machine learning model predictions in healthcare, BMC Med Inform Decis Mak, 20 (2020) 257.

- [17]. Shah NH, Milstein A, Bagley SC, Making machine learning models clinically useful, JAMA, 322 (2019) 1351–1352.

- [18]. Keim-Malpass J, Kitzmiller RR, Skeeles-Worley A, et al., Advancing continuous predictive analytics monitoring: Moving from implementation to clinical action in a learning health system, Crit Care Nurs Clin North Am, 30 (2018) 273–287.

- [19]. Churpek MM, Yuen TC, Winslow C, et al., Multicenter development and validation of a risk stratification tool for ward patients, Am J Respir Crit Care Med, 190 (2014) 649–655.

- [20]. Moss TJ, Lake DE, Calland JF, et al., Signatures of Subacute Potentially Catastrophic Illness in the ICU: Model Development and Validation, Crit Care Med, 44 (2016) 1639–1648.

- [21]. Lake DE, Fairchild KD, Moorman JR, Complex signals bioinformatics: evaluation of heart rate characteristics monitoring as a novel risk marker for neonatal sepsis, J Clin Monit Comput, 28 (2014) 329–339.

- [22]. Al-Zaiti S, Besomi L, Bouzid Z, et al., Machine learning-based prediction of acute coronary syndrome using only the pre-hospital 12-lead electrocardiogram, Nat Commun, 11 (2020) 3966.

- [23]. Holzinger A, Explainable AI and multi-modal causability in medicine, i-com, 19 (2020) 171–179.

- [24]. Sanchez-Pinto LN, Luo Y, Churpek MM, Big data and data science in critical care, Chest, 154 (2018) 1239–1248.

- [25]. Schwartz JM, Moy AJ, Rossetti SC, et al., Clinician involvement in research on machine learning-based predictive clinical decision support for the hospital setting: A scoping review, J Am Med Inform Assoc, 28 (2021) 653–663.

- [26]. Neubeck L, Coorey G, Peiris D, et al., Development of an integrated e-health tool for people with, or at high risk of, cardiovascular disease: The Consumer Navigation of Electronic Cardiovascular Tools (CONNECT) web application, Int J Med Inform, 96 (2016) 24–37.

- [27]. Churpek MM, Yuen TC, Winslow C, et al., Multicenter comparison of machine learning methods and conventional regression for predicting clinical deterioration on the wards, Crit Care Med, 44 (2016) 368.

- [28]. Scully CG, Daluwatte C, Evaluating performance of early warning indices to predict physiological instabilities, J Biomed Inform, 75 (2017) 14–21.