ABSTRACT

Appointment no-shows have a negative impact on patient health and have caused substantial loss in resources and revenue for health care systems. Intervention strategies to reduce no-show rates can be more effective if targeted to the subpopulations of patients with higher risk of not showing to their appointments. We use electronic health records (EHR) from a large medical center to predict no-show patients based on demographic and health care features. We apply sparse Bayesian modeling approaches based on Lasso and automatic relevance determination to predict and identify the most relevant risk factors of no-show patients at a provider level.

KEYWORDS: Appointment no-shows, electronic health data, Bayesian Lasso, automatic relevance determination, sparse Bayesian modeling

1. Introduction

Patients who do not show to their scheduled appointment or cancel the appointment late are commonly referred to in the literature as appointment no-shows. A missed appointment can negatively impact a patient's health and, in severe cases, even increase the risk of premature death. McQueenie et al. [13] studied the effect of no-shows on all-cause mortality in patients with long-term mental and physical health conditions over a 3-year period across Scotland. They found that patients with long-term mental conditions have a higher risk of mortality if they miss two or more appointments. Other implications of no-shows range from lost revenue due to wasted resources at the moment of the appointment to future additional costs associated with the increased risk of emergency visits or hospital admissions that could have been prevented with proper follow-up during the missed appointment. For example, Kheirkhah et al. [11] reported a no-show proportion of 18.8% across 10 clinics between the years 2006 and 2008 at a medical center that serves over 76,000 veterans in Texas, with an estimated 14.58 million marginal cost of no-shows in 2008. Other studies of the costs of no-show patients and their implications for the health care system are presented in [9,14].

Due to the negative effects associated with missed or cancelled appointments, intervention plans to reduce the proportion of no-shows are currently a priority for health care providers and administrators. Some of the intervention practices consist of setting up reminder calls for the patients during the three days prior to the appointment, allowing immediate re-scheduling of the appointment, and reducing waiting times between the scheduling and the actual appointment dates. Recent efforts have also focused on models designed to optimize the number of overbooked patients in order to minimize loss [6]. In this work, we are interested in prediction models that can be used to identify specific risk factors and patient subpopulations that have a higher risk of not showing to their appointments. This information can be used to aim intervention strategies at the fraction of incoming patients with the highest no-show proneness. Notably, Ellis et al. [7] and Williamson et al. [22] have made an effort to link patients' risk of missing appointments with patient-level and practice-level factors in the UK. Williamson et al. [22] used a retrospective cohort design, and Ellis et al. [7] used a negative binomial model for calculating no-show risks together with a cohort analysis based on patient and practice characteristics. Our paper differs significantly from previous studies in both data structure and methodology. Our data contain summaries of previous appointments for a specific patient, such as the proportion of missed appointments in the last three months and the number of times appointments were rescheduled. Using this information and other patient characteristics (demographic features, health conditions, etc.) we aim to predict no-show appointments. Our study identifies possible predictors for high-risk appointment no-shows from a large sparse dataset, and we employ Bayesian statistical learning methods for this prediction task.
Here, we are able to identify the factors that significantly affect the risk of no-show appointments among hundreds of potential factors to then take specific actions such as targeted reminder phone-calls and optimal overbooking in order to reduce the risk.

Furthermore, electronic health records (EHR) have become a powerful tool to preserve longitudinal, real-time, patient-centered records that make health information available instantly. Following the work of Ding et al. [4], we use EHR data to build a prediction model for the risk of a patient not showing to an appointment, conditional on observed individual variables that include demographic and health care features. As is common in large health care systems, the data consist of a variety of specialties, clinics and providers serving different patient populations. Ding et al. [4] present penalized logistic models for no-show prediction across different specialties and clinics, and highlight the superior performance of clinic-specific models over more general specialty-level models. Here, we propose to explore models at a provider level using two approaches for penalized logistic regression within a Bayesian framework.

2. EHR data

Electronic health records collected from a large academic medical center in North Carolina consist of over 2 million patients between 18 and 89 years of age who had an appointment at an outpatient specialty clinic between 2014 and 2016. A total of 14 specialties (Cardiology, Neurology, Gastroenterology, Ophthalmology, Dermatology, Endocrinology, Orthopedics, Otolaryngology, Plastic Surgery, Pulmonary and Allergy, Rheumatology, Urogynecology and Urology) are included in the data. Many specialties have multiple clinics serving different volumes of patients from distinct populations (age, gender), for a total of 61 clinics that can be either attached to or detached from the primary hospital. For the purpose of this analysis we removed 8 small clinics that did not have enough data to train the models, leaving a total of 53 clinics and 475 providers.

Patients were required to have made the appointment at least three days before the appointment date, and received call reminders via an automated phone system. The definition of ‘late cancellation’ differed among clinics depending on rescheduling difficulty. In this application, we defined a late cancellation as cancelling on the day of the appointment. Appointments that were cancelled before the day of the appointment were not considered no-shows in this analysis. The outcome of interest is then an observed binary variable representing whether a patient did not show to their scheduled appointment or cancelled on the day of the appointment. In this particular EHR dataset, the average no-show rate across specialties is approximately 18%. Figure 1 displays how the no-show rate varies across different clinics and specialties. The highest no-show rate among clinics is 42% for a clinic in the Pulmonary and Allergy specialty and the lowest is 6% for a clinic in Orthopedics. The highest no-show rate among specialties is also present in the Pulmonary and Allergy specialty (32%), and the lowest rate in the Urogynecology specialty (13%).
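The outcome definition above can be written as a small labelling rule. The following is a minimal sketch only; the function and argument names are hypothetical and do not correspond to fields in the EHR data, and it assumes that appointments cancelled before the appointment day are kept and labelled 0.

```python
from datetime import date
from typing import Optional


def no_show_label(arrived: bool, cancelled_on: Optional[date],
                  appointment_date: date) -> int:
    """Return 1 for a no-show (missed, or cancelled on the appointment day), else 0.

    All argument names are illustrative assumptions, not fields from the source data.
    """
    if arrived:
        return 0
    if cancelled_on is None:
        # Never cancelled and never arrived: a missed appointment.
        return 1
    # Late cancellation is defined here as cancelling on the appointment day itself.
    return 1 if cancelled_on == appointment_date else 0
```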

Figure 1.

No-show rates among different clinics (left) and specialties (right). Highest no-show rate of 42% and lowest 6% among clinics. Highest rate is 32% among specialties and the lowest is 13%.

The data also contain information about patient demographics (age, gender, employment status), health care plan, engagement with the health system (active in the online patient portal MyChart, response to 7 day phone call), appointment type (how long the appointment was held for, the location of the appointment), and patient response to appointment confirmation, among others, for a total of 67 covariates (102 when using dummy variables for categorical features). There is little clinical information available except for substance abuse and psychiatric diagnosis in the past two years. The available patient information is used to model the no-show probability of individual patients and to identify high-risk patients at whom intervention measures can be directed in order to reduce no-show rates.

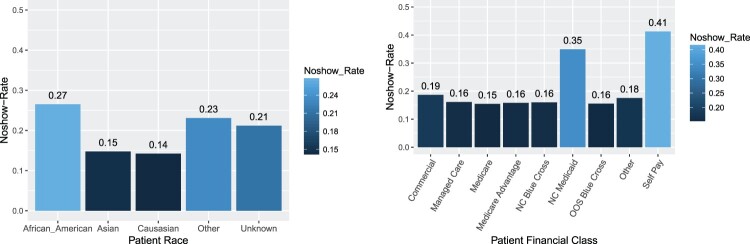

Figure 2 displays the no-show rates among groups for two features: race (demographic information) and medical financial class (health care information). We observe that the highest no-show rates are present among African American patients, and in the self-pay category of financial class that corresponds to uninsured patients that are usually responsible for full payment of the medical care they receive. We want to point out here that in scenarios where predictive policing is of interest it is of utmost importance to avoid perpetuating discriminatory practices against certain subpopulations. In this particular application, attributes such as race and socio-economic status may be predictive of no-shows, but implementation of preventive measures based on these attributes should be carried out with care. For example, direct application of the finding that African Americans are less likely to show up for their appointments, could lead to discriminatory practices like overbooking appointments for all African American patients. The ethical implications of automated machine learning approaches and other relevant issues associated with causality and fairness are discussed in depth in [12].

Figure 2.

No-show rates by patient race and financial class. African American patients and patients in the Self Pay category have higher no-show rates.

In this particular data set, and possibly in other EHR data sets coming from large medical institutions, there is a natural specialty-clinic-provider hierarchy. The 53 clinics considered in this data are served by 475 individual medical providers (doctors) that handle between 1005 and 21,560 patients each, with an average of 4404 patients overall. The provider with the largest number of patients is observed in the Ophthalmology specialty, and a few providers serve in more than one clinic at a time. We see that the average no-show rate among providers varies widely between 1.6% and 97% with an average rate of 17%. In this work, we choose to build separate risk models at a provider level to take into account the possible heterogeneity among the population of patients. The multiple risk models also allow us to perform a more exhaustive identification of the different underlying risk factors of no-shows by provider, considering that they differ among clinic and specialty combinations [4].

3. Methods

We employ a logistic regression model for each provider where the response is the binary variable indicating whether a patient is a no-show or not, and the predictor variables are all the demographic and health care attributes available in the EHR data. This model choice allows us to predict the probability of a patient being a no-show, and estimate the change in risk associated with a particular factor level or value increase of a predictor variable.

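Let $y_i \in \{0, 1\}$ denote the no-show status of the $i$th patient for a specific provider. The proposed model assumes independent Bernoulli distributions on the outcome as follows:

$$y_i \mid \pi_i \sim \text{Bernoulli}(\pi_i), \quad i = 1, \dots, n, \tag{1}$$

where $n$ is the number of patients for the provider and $\pi_i$ is the probability for patient $i$ of being a no-show:

$$\pi_i = \frac{\exp(x_i^\top \beta)}{1 + \exp(x_i^\top \beta)}, \tag{2}$$

for $x_i$ a $p$-dimensional vector of individual attributes, and $\beta$ the respective vector of regression coefficients associated with $x_i$. Hence, the likelihood function is given by

$$L(\beta) = \prod_{i=1}^{n} \pi_i^{y_i} (1 - \pi_i)^{1 - y_i}. \tag{3}$$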
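As an illustration of the Bernoulli-logit likelihood, the following minimal sketch evaluates the no-show probability and the log-likelihood for a coefficient vector. The function names are hypothetical, and plain Python lists stand in for the attribute vectors and the coefficient vector; this is not the authors' implementation.

```python
import math


def linear_predictor(x, beta):
    """Inner product of one patient's attribute vector and the coefficients."""
    return sum(xj * bj for xj, bj in zip(x, beta))


def no_show_probability(x, beta):
    """Logistic transform of the linear predictor, written to avoid overflow."""
    eta = linear_predictor(x, beta)
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    e = math.exp(eta)
    return e / (1.0 + e)


def log_likelihood(X, y, beta):
    """Bernoulli log-likelihood: sum_i [ y_i * eta_i - log(1 + exp(eta_i)) ]."""
    total = 0.0
    for x_i, y_i in zip(X, y):
        eta = linear_predictor(x_i, beta)
        # Stable evaluation of log(1 + exp(eta)).
        log1pexp = max(eta, 0.0) + math.log1p(math.exp(-abs(eta)))
        total += y_i * eta - log1pexp
    return total
```

With all coefficients equal to zero every patient has probability 0.5, so the log-likelihood reduces to the number of patients times log(0.5), which is a convenient sanity check.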

In a relatively high-dimensional application like the one presented here, it is important to consider the issue of variable selection in the model. The number of features present in the EHR data is relatively large (p = 102) and we are interested in identifying the relevant risk factors of no-shows among providers. While building separate models is straightforward, performing variable selection among a large number of models can be challenging. Here, we rely on sparse Bayesian approaches to identify the more meaningful no-show predictors by provider in an automatic fashion. In the following section we discuss two well known machine learning methods for sparse learning that also belong in the class of hierarchical shrinkage priors for regression models.

3.1. Lasso penalized logistic regression

Among the most popular approaches for variable selection in regression models are those based on Lasso (least absolute shrinkage and selection operator) introduced by Tibshirani [18]. In Lasso regression, a penalty is imposed on the model coefficients to induce sparsity such that the less relevant variable coefficients are shrunk to zero, thus performing automatic variable selection. Furthermore, prediction accuracy can also be improved by shrinking the model coefficients. More specifically, the estimates for the model parameters in traditional Lasso are obtained by solving:

$$\hat{\beta} = \arg\max_{\beta} \left\{ \ell(\beta) - \lambda \sum_{j=1}^{p} |\beta_j| \right\}, \tag{4}$$

where $\ell(\beta)$ is the log-likelihood based on Equation (3) and the second term corresponds to the Lasso penalty. The parameter $\lambda$ is the penalty parameter that controls the shrinkage level of the coefficients towards zero, and therefore has a direct impact on the quality of the estimates and predictions generated by the model.

The penalty parameter λ can be selected through cross-validation by training the model on a fraction of the observed data (training set) for each provider, and performing predictions on the remaining portion (testing set) for a grid of values of λ. For evaluation of prediction performance, these predictions can be compared against the respective observed no-show indicator, then the number of false and true positives is computed, and a receiver operating characteristic (ROC) curve is constructed. Finally, the optimal penalty parameter can be chosen as the value of λ in the grid that provides the highest area under the curve (AUC) in the testing dataset. The main drawback is that selection of the optimal tuning parameter through cross-validation can be computationally expensive in general [19], and in particular in this application where we fit independent models for each provider and the range of values of λ can vary among them.
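The grid search described above can be sketched as follows. This is only an illustration: `auc` uses the rank (Mann-Whitney) formulation of the area under the ROC curve, and `fit_predict` is a hypothetical callable that, for a given λ, trains a Lasso logistic model on the training set and returns predicted no-show probabilities for the test set.

```python
def auc(labels, scores):
    """Area under the ROC curve: P(score of a no-show > score of a show), ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def select_lambda(grid, fit_predict, test_labels):
    """Return the penalty value in `grid` with the highest test-set AUC.

    `fit_predict(lam)` is an assumed user-supplied function that fits the
    penalized model with penalty `lam` and returns test-set scores.
    """
    return max(grid, key=lambda lam: auc(test_labels, fit_predict(lam)))
```

The pairwise comparison in `auc` is quadratic in the test-set size; it is kept for clarity, and a sort-based version would be preferable for providers with many patients. Repeating this search for every provider is what makes cross-validation expensive in this setting.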

Here, we adopt the Bayesian counterpart of Lasso regression presented in [15], where the penalty parameter can be estimated directly by assuming a prior distribution on λ. The Bayesian Lasso was introduced by noting that the Lasso penalty in Equation (4) is equivalent to assuming independent Laplace prior distributions on the regression coefficients. Park and Casella [15] provide a hierarchical model and Gibbs sampler in the linear regression context by representing the Laplace distribution as a scale mixture of normals. In particular, a conditionally conjugate Gamma prior is assumed for the penalty parameter, and thus posterior samples of λ can be obtained in a straightforward fashion from a Gamma distribution with updated parameters. This is a very appealing feature for this application, but a full Bayesian approach also comes with its own set of limitations. The main disadvantage is that inferences obtained through a Bayesian framework lack direct variable selection. While the traditional maximum a posteriori approach in Equation (4) produces estimates of the coefficients that are exactly zero, the Bayesian counterpart of Park and Casella [15] does not. However, variable selection in this setting can be guided by the credible intervals of the model coefficients. We discuss in more detail how to identify relevant risk predictors using credible intervals in the context of the no-shows application in Section 4.
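The two facts used in this paragraph can be written out explicitly. The display below is a sketch in notation we introduce for illustration: $\tau_j$ denotes a latent prior variance for $\beta_j$, and $r$ and $\delta$ denote the shape and rate of a Gamma prior placed on $\lambda^2$, as in Park and Casella's linear-model formulation (adapted here to a model without an error variance). With independent Laplace priors, the log posterior matches the penalized objective up to a constant:

$$p(\beta \mid \lambda) = \prod_{j=1}^{p} \frac{\lambda}{2} e^{-\lambda |\beta_j|} \quad \Longrightarrow \quad \log p(\beta \mid y, \lambda) = \ell(\beta) - \lambda \sum_{j=1}^{p} |\beta_j| + \text{const}.$$

The scale mixture representation of each Laplace density is

$$\frac{\lambda}{2} e^{-\lambda |\beta_j|} = \int_0^\infty \frac{1}{\sqrt{2 \pi \tau_j}} e^{-\beta_j^2 / (2 \tau_j)} \; \frac{\lambda^2}{2} e^{-\lambda^2 \tau_j / 2} \, d\tau_j,$$

so that $\beta_j \mid \tau_j \sim \text{N}(0, \tau_j)$ with $\tau_j$ exponentially distributed with rate $\lambda^2 / 2$. Under the assumed prior $\lambda^2 \sim \text{Gamma}(r, \delta)$, the conditional update is again a Gamma distribution:

$$\lambda^2 \mid \tau_1, \dots, \tau_p \sim \text{Gamma}\left(p + r, \; \delta + \tfrac{1}{2} \sum_{j=1}^{p} \tau_j \right).$$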

In order to perform Bayesian inference with a binomial likelihood, we exploit the data augmentation method based on Polya-Gamma latent variables proposed by Polson et al. [16]. Using this approach, the binomial likelihood can be represented as a mixture of normals with Polya-Gamma mixing distribution. This approach allows for a full conjugate hierarchical representation of the Bayesian lasso model and posterior inference through relatively simple Markov chain Monte Carlo (MCMC) algorithms. Specific theoretical guarantees for Gibbs sampling of Bayesian logistic regression with Polya-Gamma latent variables are provided in [3]. Details of the full conditional distributions of the Gibbs sampling algorithm for the Lasso penalized logistic regression setting are presented in the appendix.
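For reference, the identity behind this data augmentation, as given by Polson et al. [16], and the resulting conditional distributions can be sketched as follows. The notation is introduced here for illustration: $\omega_i$ are the latent Polya-Gamma variables, $\Omega = \text{diag}(\omega_1, \dots, \omega_n)$, $\kappa_i = y_i - 1/2$, $X$ is the matrix with rows $x_i^\top$, and $D_\tau$ is an assumed diagonal prior covariance for $\beta$ (for example, the latent variances of the Lasso scale mixture). The full conditionals actually used by the authors are those in their appendix.

$$\frac{(e^{\psi})^{a}}{(1 + e^{\psi})^{b}} = 2^{-b} e^{\kappa \psi} \int_0^\infty e^{-\omega \psi^2 / 2} \, p(\omega) \, d\omega, \qquad \kappa = a - \frac{b}{2}, \quad \omega \sim \text{PG}(b, 0).$$

For a Bernoulli outcome, $a = y_i$, $b = 1$ and $\psi_i = x_i^\top \beta$, which gives a two-block Gibbs update:

$$\omega_i \mid \beta \sim \text{PG}(1, x_i^\top \beta), \qquad \beta \mid \omega, y \sim \text{N}(m, V), \quad V = \left( X^\top \Omega X + D_\tau^{-1} \right)^{-1}, \quad m = V X^\top \kappa.$$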

3.2. Logistic regression with automatic relevance determination

Even though the Bayesian Lasso has been widely used in many applications, there are other common alternatives for prior distributions that induce sparsity on model parameters. For example, the sparse Bayesian learning approach for regression introduced by Tipping [20,21] is considered a type of automatic relevance determination (ARD) that is also an effective tool for trimming large numbers of irrelevant features by inducing sparse solutions. Bayesian Lasso and ARD both accomplish variable selection in a linear regression setting by assigning a shrinkage prior on coefficients where posteriors for irrelevant variables would converge to zero. The key difference lies in the choice of the prior distribution for the regression coefficients. Instead of assigning a Laplace prior as in the Lasso case, the prior used for the implementation of ARD is a member of the scale mixtures of normals and therefore inherits its appealing conjugacy properties.

In particular, independent Normal prior distributions are assigned to each $\beta_j$,

$$\beta_j \mid \alpha_j \sim \text{N}(0, \alpha_j^{-1}), \quad j = 1, \dots, p, \tag{5}$$

where $\alpha = (\alpha_1, \dots, \alpha_p)$ is a hyperparameter vector of precisions that controls how far from zero the coefficients can be. The precision hyperparameters are assigned Gamma prior distributions,

$$\alpha_j \sim \text{Gamma}(a, b), \tag{6}$$

where $a$ and $b$ represent the shape and rate parameters, respectively. This hierarchical prior specification simply leads to Normal-Inverse Gamma prior distributions for each regression coefficient. Here, low precision means the prior is uninformative while high precision means $\beta_j$ is constrained to be close to zero, and thus the corresponding feature is very likely irrelevant. This prior choice will automatically determine the relevant predictors for no-show patients in a similar fashion to Lasso sparse regression.
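To make the hierarchy concrete, the following minimal sketch draws coefficients from the ARD prior: each precision is drawn from a Gamma distribution with a given shape and rate, and each coefficient from a zero-mean Normal with that precision. The function name and the hyperparameter values in the usage note are illustrative assumptions, not the settings used in the paper.

```python
import random


def sample_ard_prior(p, a, b, rng):
    """Draw p (precision, coefficient) pairs from the hierarchical ARD prior.

    precision_j ~ Gamma(shape=a, rate=b)
    coefficient_j | precision_j ~ Normal(0, variance = 1 / precision_j)
    """
    # random.gammavariate takes shape and SCALE, so the rate b becomes scale 1/b.
    precisions = [rng.gammavariate(a, 1.0 / b) for _ in range(p)]
    coefficients = [rng.gauss(0.0, (1.0 / prec) ** 0.5) for prec in precisions]
    return precisions, coefficients
```

For example, `sample_ard_prior(102, a=2.0, b=1.0, rng=random.Random(0))` draws one coefficient per dummy-coded covariate under hypothetical hyperparameters. Draws with a large precision sit close to zero, which is the mechanism that switches off irrelevant features.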

Note that in this framework we assign an individual hyperprior to each regression coefficient, so the number of parameters that need to be estimated has doubled. A full Bayesian treatment of inference for this hierarchical model imposes a high computational cost in big (large n) and high-dimensional (large p) data and can quickly become intractable. To reduce this cost, recent efforts have focused on approximate inference based on variational Bayes techniques [1,10]. The main goal of variational Bayes is to approximate the posterior distribution (for example, the combination of a logistic likelihood with a Normal-Inverse Gamma prior) such that the inference problem is transformed into an optimization problem via the introduction of variational parameters. While it saves computational cost, a variational approach may be inaccurate in recovering the true posterior distribution, and inference results can be affected. A thorough description of such variational treatment and implementation for logistic regression with ARD is presented in [5]. In this particular context, the variational Bayes approach produces accurate results and represents an efficient alternative to full Bayesian inference and prediction with sparse models, which can be challenging in big EHR data applications. In contrast to the full Bayesian Lasso, the estimates of the regression coefficients under this approach can be exactly zero, therefore performing direct variable selection. Here, we utilize the sprsmdl package available in R to obtain predictions with the ARD logistic model for the no-show data [17]. In Section 4 we present prediction results by provider using both the Lasso and ARD priors.

4. No-show prediction and risk factors

In order to train the models and evaluate prediction performance at the provider level we take 80% of the patients as the training set and the remaining patients as the test set. The test set consists of the last 20% of patients for each provider, ordered by appointment date. This data partition leaves us with enough data to train the models properly for all 475 providers, noting that the provider with the lowest number of patients in this dataset serves 1005 patients. The no-show rates among providers for the training sets take values between 1.3% and 98%. To obtain inference using the Bayesian Lasso we use a Gamma prior for the penalty parameter with fixed parameters for all providers (see Appendix), and run 5000 MCMC iterations after a burn-in period of 1000 iterations.
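The date-ordered partition can be sketched as below. The record layout (a list of dictionaries with an `appointment_date` key) is a hypothetical stand-in for one provider's data, not the structure of the EHR extract.

```python
from datetime import date


def temporal_split(records, train_fraction=0.8):
    """Split one provider's records so the most recent appointments form the test set."""
    ordered = sorted(records, key=lambda r: r["appointment_date"])
    cut = int(len(ordered) * train_fraction)
    # Earliest appointments train the model; the latest ones are held out.
    return ordered[:cut], ordered[cut:]
```

Splitting by date rather than at random means the model is always evaluated on appointments that occur after those it was trained on, which mirrors how it would be used in a scheduling system.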

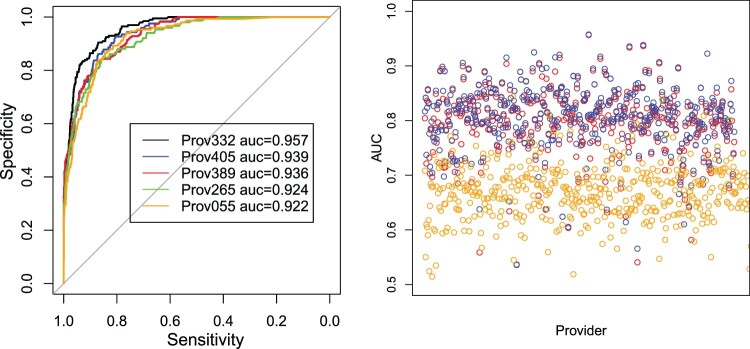

We also compare our two methods (Bayesian Lasso and Automatic Relevance Determination) with the traditional logistic regression. The AUCs of the three models are displayed in Figure 3. Both of our methods significantly outperform the traditional logistic regression in terms of prediction for the majority of providers. By assigning shrinkage priors on the model parameters, we are able to find the most relevant predictors for no-shows among all 102 predictors and thus provide more accurate individual predictions.

Figure 3.

ROC curves for five providers with highest AUC values using ARD predictions (left), and AUC values for provider level prediction performance comparison of Bayesian Lasso (blue), ARD (red) and basic logistic regression (orange).

In the case of ARD, we specified fixed values of the shape and rate hyperparameters for the Gamma prior of the precision parameters. In this particular application, the results are robust to the choice of hyperparameters for the models due to the fairly large amount of patient records available for each provider. Figure 3 displays receiver operating characteristic (ROC) curves associated with the predictions on the test sets based on ARD for the five providers with the highest AUC values (left), and the AUC values for all providers using both the Lasso (blue) and ARD (red) priors. We observe that both approaches render similar prediction performance, with the Lasso method performing just slightly better overall. Both models provide similar fair-to-good prediction performance for the majority of providers. The posterior mean values obtained for the penalty parameter λ of the Bayesian Lasso vary between approximately 0.182 and 20.3 among providers.

We also grouped the providers by clinics and specialties to explore differences in prediction performance among them. From Figure 4, we see that the average AUC among providers grouped by clinics is between 0.70 and 0.92. We also observe that the prediction performance varies among providers in different specialties. For example, the highest median AUC values are observed in the Urology and Urogynecology specialties with relatively low variability among providers, while the Cardiology, Otolaryngology, and Pulmonology specialties display high variability as well as the highest proportion of providers with poor prediction performance, with AUC values below 0.7. We observed that the poor prediction performance for some of the providers is mostly due to relatively small training and testing sample sizes.

Figure 4.

Mean AUC of providers grouped into clinics (left), and box plots of AUC values for providers grouped by specialty for Bayesian Lasso method.

In terms of identifying the relevant predictors of no-shows, both Lasso and ARD produce identical results. The average number of relevant features among providers is approximately 13 with a maximum of 34 predictors. We see that the relevant predictors vary significantly among providers (and specialties) such that each of the 102 covariates is relevant for at least one of the providers. Table 1 displays a list of 10 selected predictors whose regression coefficients are positive and therefore considered as indicators of a higher risk of no-shows. The predictors in Table 1 are displayed in decreasing order according to the proportion of providers for which the specific predictor coefficient was relevant (i.e. different from zero) using the ARD estimates. From these results we observe that some predictors are fairly consistent among providers. For example, if an appointment was rescheduled or the number of days between the scheduling and the appointment date is too large the patient was more likely to be a no-show. Furthermore, patients that pay their own medical expenses (Self Pay), African American patients, patients with history of not showing to appointments in the last three months, and patients that indicated they wanted to cancel or did not confirm the appointment via the automated phone system were also more likely to not show for at least 15% of providers. We also see that when a collection agency needs to be involved due to past unpaid bills of a patient, the relative risk of no-show increases for 46% of providers approximately.

Table 1. Ten most frequent relevant predictors across all providers indicating increased risk of no-show in patients.

| Rank | Predictor | Relevance % |
|---|---|---|
| 1 | Rescheduled appointment | 97.1 |
| 2 | Days until appointment | 82.7 |
| 3 | Self Pay patient (financial class) | 64.5 |
| 4 | African American patient | 52.9 |
| 5 | Collection agency involved | 46.4 |
| 6 | No-show appointments in past 3 months | 22.6 |
| 7 | Number of times appointment changed | 21.6 |
| 8 | NC Medicaid patient (financial class) | 19.1 |
| 9 | Wants to cancel (phone reminder status) | 16.8 |
| 10 | Answered but not confirmed (phone reminder status) | 15.8 |
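The relevance percentages in Table 1 are proportions of providers for which a predictor's estimated coefficient is different from zero. A minimal sketch of that calculation follows; the nested-dictionary layout and the provider and predictor names in the test data are hypothetical, and a predictor that is absent for a provider is treated here as not relevant.

```python
def relevance_percent(coefs_by_provider, predictor):
    """Percentage of providers whose estimated coefficient for `predictor` is nonzero.

    `coefs_by_provider` maps a provider id to a {predictor name: estimate} dict.
    """
    n_providers = len(coefs_by_provider)
    if n_providers == 0:
        raise ValueError("no providers supplied")
    n_relevant = sum(
        1 for coefs in coefs_by_provider.values()
        if coefs.get(predictor, 0.0) != 0.0
    )
    return 100.0 * n_relevant / n_providers
```

An exact comparison with zero is appropriate only when the estimates can be exactly zero, as with the ARD estimates; for posterior means from the Bayesian Lasso, relevance would instead be decided from credible intervals.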

Note that these results represent the most frequent relevant predictors of no-shows but not necessarily the strongest. The strongest predictors vary widely across providers; however, we see that appointment rescheduling and the Self Pay category of financial class are among the two strongest predictors for approximately 90% of the providers in this data set. Table 2 lists the top five predictors that characterize lower risk patients among providers, i.e. predictors with negative regression coefficients. The top two predictors are the same for most of the providers, namely, patients that confirmed their appointments via the automated phone system and patients that actively participated in the online portal (MyChart). We also see that older and retired patients have a lower risk of no-show for a fair number of providers. Distinctive predictors are also observed among specialties; for example, having a copay is a strong negative predictor for at least 85% of providers in Urology, Urogynecology and Pulmonology but not in the other specialties.

Table 2. Five most frequent relevant predictors across all providers indicating lower risk of no-show in patients.

| Rank | Predictor | Relevance % |
|---|---|---|
| 1 | Confirmed appointment (phone reminder status) | 81.7 |
| 2 | Active in MyChart | 72.2 |
| 3 | Age on appointment date | 55.4 |
| 4 | Copay due | 47.3 |
| 5 | Retired (employment status) | 17.2 |

4.1. Results for individual providers

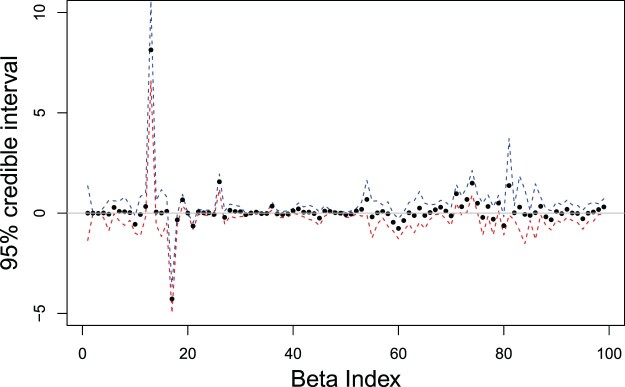

In this section, we discuss in more detail the interpretation of the strongest predictors of no-shows based on results from the Bayesian Lasso approach for a specific provider. The chosen provider has the best prediction accuracy in our data set with an AUC of 0.957, and serves in the Urology specialty. Figure 5 displays the posterior means and 95% credible intervals of the regression coefficients associated with all 99 predictors included for this particular provider (some factor categories were not present). The credible intervals tell us that the true value of the regression coefficient is in the respective interval with 95% probability. Thus we used them to establish the relevant predictors by identifying the regression coefficients for which the credible interval does not contain zero. We found 21 relevant predictors in this case.
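The interval-based selection rule can be sketched as follows. This is an illustrative implementation using simple empirical quantiles of the MCMC draws; the function names and the dictionary of posterior samples are assumptions, not the authors' code.

```python
import math


def credible_interval(samples, level=0.95):
    """Equal-tailed credible interval from posterior draws via order statistics."""
    ordered = sorted(samples)
    n = len(ordered)
    tail = (1.0 - level) / 2.0
    lower = ordered[int(math.floor(tail * (n - 1)))]
    upper = ordered[int(math.ceil((1.0 - tail) * (n - 1)))]
    return lower, upper


def select_relevant(draws_by_predictor, level=0.95):
    """Names of predictors whose credible interval does not contain zero."""
    relevant = []
    for name, samples in draws_by_predictor.items():
        lower, upper = credible_interval(samples, level)
        if lower > 0.0 or upper < 0.0:
            relevant.append(name)
    return relevant
```

Rounding the lower index down and the upper index up makes the interval slightly conservative, so a coefficient is flagged as relevant only when the draws are clearly on one side of zero.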

Figure 5.

Posterior mean estimates (dots) and 95% credible intervals, upper limit (blue) and lower limit (red), of regression coefficients for Urology provider using Bayesian Lasso method. The horizontal line at zero is used to guide variable selection.

Tables 3 and 4 show the names and respective posterior means and standard deviations of the coefficients for the 14 strongest predictors, both positive and negative, in decreasing order (in absolute value). We use the estimated odds ratios (OR) to determine and compare the magnitude of the risk factors for no-shows. The odds ratio is obtained by exponentiating the estimated regression coefficient associated with a predictor. Similar to what we observed in the results for all providers, the strongest no-show predictors in this case include appointment rescheduling, previous no-shows in the last three months and being in the Self Pay category. More specifically, holding other predictors fixed, the estimated odds that a patient with previous no-show records in the last three months does not show to the most recent scheduled appointment are 4.75 times (OR $\approx e^{1.56}$) the corresponding odds for a patient with no history of no-shows in the last three months. Furthermore, the estimated odds ratio of no-show is 4.44 for Self Pay patients, 2.64 for NC Blue Cross patients and 1.97 for Medicare Advantage patients compared to patients in the Medicare financial class (baseline category). Another strong positive predictor is past due bills that require a collection agency to be involved, with an estimated odds ratio of 1.93 (OR $\approx e^{0.66}$). Finally, the odds of no-show for a patient that answered but did not confirm the appointment through the automated phone system are 1.65 times the odds of no-show for a patient that was not reached (baseline category).

Table 3. Top seven positive predictors indicating increased risk of no-show for urology provider.

| Rank | Predictor | Posterior mean | SD |
|---|---|---|---|
| 1 | Rescheduled appointment | 8.14 | 1.05 |
| 2 | No-show appointments in past 3 months | 1.56 | 0.20 |
| 3 | Self Pay patient (financial class) | 1.49 | 0.31 |
| 4 | NC Blue Cross patient (financial class) | 0.97 | 0.23 |
| 5 | Medicare Advantage patient (financial class) | 0.68 | 0.33 |
| 6 | Collection agency involved | 0.66 | 0.15 |
| 7 | Answered but not confirmed (phone reminder status) | 0.50 | 0.20 |

Table 4. Top seven negative predictors indicating lower risk of no-show for urology provider.

| Rank | Predictor | Posterior mean | SD |
|---|---|---|---|
| 1 | Copay due | −4.28 | 0.35 |
| 2 | Disabled (employment status) | −0.76 | 0.25 |
| 3 | Previous appointments in past 3 months | −0.64 | 0.08 |
| 4 | Confirmed (phone reminder status) | −0.62 | 0.23 |
| 5 | Overbooked appointment | −0.56 | 0.22 |
| 6 | Retired (employment status) | −0.46 | 0.22 |
| 7 | Active in MyChart | −0.34 | 0.14 |

On the other hand, for patients who have a copay the estimated odds of no-show decrease by roughly a factor of 70 for this particular provider in the Urology specialty. Demographically, patients who are disabled or retired have decreased odds of no-show, with odds ratios of 0.47 and 0.63 respectively, compared to patients with an unknown employment status. Other lower risk factors for no-show patients include having previous appointments in the specific specialty in the last three months (OR $\approx 0.53$), confirmation of the appointment via the automated phone system (OR $\approx 0.54$), and being active in the MyChart online portal (OR $\approx 0.71$). For this individual provider we also see that the odds ratio of no-show for a patient with an overbooked appointment is approximately 0.57.
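The odds ratios quoted for this provider follow from exponentiating the posterior means in Tables 3 and 4. The short sketch below reproduces that arithmetic for a few entries; the dictionary simply copies rounded table values, so results can differ from the reported figures in the last digit (for example, 1.56 gives about 4.76 rather than 4.75).

```python
import math

# Posterior means copied from Tables 3 and 4 (rounded to two decimals).
posterior_means = {
    "No-show appointments in past 3 months": 1.56,
    "Self Pay patient (financial class)": 1.49,
    "Collection agency involved": 0.66,
    "Copay due": -4.28,
    "Disabled (employment status)": -0.76,
    "Retired (employment status)": -0.46,
}


def odds_ratio(coefficient):
    """Odds ratio for a one-unit increase (or factor level) of a predictor."""
    return math.exp(coefficient)


odds_ratios = {name: odds_ratio(b) for name, b in posterior_means.items()}
# For a negative coefficient, 1 / OR is the factor by which the odds decrease.
copay_reduction_factor = 1.0 / odds_ratios["Copay due"]
```

An odds ratio below one, such as 0.47 for disabled patients, means the odds of a no-show are multiplied by 0.47 relative to the baseline category, not reduced by 0.47.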

5. Discussion

We presented simple risk prediction models for no-shows that can be applied to EHR data from large health care systems with multi-provider clinics and specialties. We discussed two Bayesian methods for adaptive shrinkage that induce sparsity in the model coefficients and allow us to identify relevant no-show risk factors. This information can be used for no-show detection and development of general intervention strategies, or to optimize booking arrangements for individual providers. Maintaining and updating multiple risk models with more incoming data can be challenging. Even though we train separate models at the provider level, models at the clinic level can also be implemented to borrow strength among providers, facilitate model maintenance and deployment, and possibly gain prediction accuracy as discussed in [4].

For big and high-dimensional data, a full Bayesian implementation of penalized regression models can be challenging and computationally restrictive. However, variational approaches present an efficient alternative for model implementation within a Bayesian framework, with relatively little loss of accuracy. Other Bayesian methods based on spike-and-slab priors [8] provide a more principled approach to variable selection. In contrast to penalized regression, this approach includes natural measures of uncertainty, such as posterior inclusion probabilities of the individual predictors, and formal model selection using Bayes factors. We refer readers and practitioners to the work of Carbonetto et al. [2] and the varbvs package in R, which implements the spike-and-slab prior for Bayesian variable selection in large-scale regression based on variational approximation methods.

Penalized regression approaches such as the ones presented in this paper can be readily adapted to hospital scheduling systems. Given a patient's basic information, the models can predict that patient's probability of not showing to a scheduled appointment. Preventive measures can then be taken to reduce no-show rates, such as increasing the number of reminder calls, or scheduling additional patients depending on the predicted no-show probabilities of that day's patients, similar to the overbooking scheme used by airline companies. However, intervention strategies should be planned with care to avoid the implications of a model that effectively discriminates against certain subpopulations. The work of Kusner et al. [12] introduces the concept of counterfactual fairness, which requires that the distribution over possible predictions for an individual remain unchanged had that individual's protected attributes, such as race and socioeconomic status, been different in a causal sense. This approach could be explored in future work to overcome discrimination patterns in the decision-making process, since simply removing the protected attributes from the analysis is not a satisfactory solution.
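As a minimal sketch of how predicted probabilities might feed an overbooking decision, the example below sums the no-show probabilities for one day's schedule and rounds the expected number of no-shows down, subject to a cap. The rule, the cap, and the function names are hypothetical and are not the scheduling scheme of this paper or of [6]; a real policy would also weigh waiting times and fairness concerns.

```python
import math
from typing import Sequence


def expected_no_shows(probabilities: Sequence[float]) -> float:
    """Expected number of no-shows for a day, given per-patient no-show probabilities."""
    return sum(probabilities)


def suggested_overbook_slots(probabilities: Sequence[float], cap: int = 2) -> int:
    """Hypothetical rule: floor of the expected no-shows, never more than `cap` extra slots."""
    return min(cap, math.floor(expected_no_shows(probabilities)))


# Illustrative (made-up) predicted probabilities for five scheduled patients.
day_schedule = [0.05, 0.30, 0.45, 0.10, 0.25]
print(suggested_overbook_slots(day_schedule))
```

Rounding down and capping the number of extra slots is a deliberately conservative choice: it limits the chance that more patients arrive than the provider can see.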

Appendix.

Using the fact that the Laplace prior can be expressed as a scale mixture of normals with exponential mixing density [15], the hierarchical representation of the Bayesian Lasso model is the following:
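In the standard construction of Park and Casella [15], adapted to a logistic likelihood, this hierarchy can be sketched as below. The notation is an assumption made for illustration and may differ from the symbols used elsewhere in the paper: $y_i$ is the no-show indicator, $x_i$ the predictor vector, $\beta_j$ the coefficients, $\tau_j^2$ the latent prior variances, and $\lambda$ the Lasso penalty parameter. The Bernoulli case shown is the binomial likelihood with one trial per appointment.

```latex
\begin{align*}
y_i \mid \beta &\sim \mathrm{Bernoulli}\!\left(\frac{1}{1 + e^{-x_i^\top \beta}}\right), & i &= 1, \dots, n, \\
\beta_j \mid \tau_j^2 &\sim \mathrm{N}\!\left(0, \tau_j^2\right), & j &= 1, \dots, p, \\
\tau_j^2 \mid \lambda &\sim \mathrm{Exp}\!\left(\lambda^2 / 2\right), & j &= 1, \dots, p.
\end{align*}
```

Integrating out $\tau_j^2$ recovers the Laplace prior $p(\beta_j \mid \lambda) = \tfrac{\lambda}{2} e^{-\lambda |\beta_j|}$.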

The binomial likelihood can also be represented as a mixture of normals with a Polya-Gamma mixing distribution, using the latent variable data augmentation approach of Polson et al. [16]. A Polya-Gamma random variable, written $\omega \sim \mathrm{PG}(b, c)$ in the notation of [16], has density function given by
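For reference, the Polya-Gamma density as stated by Polson et al. [16] can be written as follows, where $b > 0$ and $c \in \mathbb{R}$ are the two parameters and $\omega > 0$. The symbols follow [16] and are assumed here rather than taken from this paper.

```latex
\begin{align*}
p(\omega \mid b, c) &= \cosh^{b}\!\left(\frac{c}{2}\right) \exp\!\left(-\frac{c^{2}\omega}{2}\right) p(\omega \mid b, 0), \\
p(\omega \mid b, 0) &= \frac{2^{b-1}}{\Gamma(b)} \sum_{n=0}^{\infty} (-1)^{n}\,
  \frac{\Gamma(n + b)}{\Gamma(n + 1)}\,
  \frac{2n + b}{\sqrt{2\pi\omega^{3}}}\,
  \exp\!\left(-\frac{(2n + b)^{2}}{8\omega}\right).
\end{align*}
```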

Given the conjugate hierarchical representation of the model for Lasso penalized logistic regression, the full conditionals for Gibbs sampling are given in closed form as follows:

where represents the Inverse Gaussian distribution, and

for and where .
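For orientation, the closed-form Gibbs updates for Lasso penalized logistic regression under Polya-Gamma augmentation take the following standard form, combining the results of [15] and [16]. This is a sketch in assumed notation, not necessarily the symbols of this paper: $X$ is the $n \times p$ design matrix with rows $x_i^\top$, $\Omega = \mathrm{diag}(\omega_1, \dots, \omega_n)$ collects the Polya-Gamma latent variables, $D_\tau = \mathrm{diag}(\tau_1^2, \dots, \tau_p^2)$, $\kappa_i = y_i - 1/2$ for a Bernoulli response, $\lambda$ is treated as fixed, and the Inverse Gaussian distribution is parameterized by its mean and shape.

```latex
\begin{align*}
\omega_i \mid \beta &\sim \mathrm{PG}\!\left(1, x_i^\top \beta\right), & i &= 1, \dots, n, \\
\beta \mid \omega, \tau^2, y &\sim \mathrm{N}\!\left(m, V\right), &
  V &= \left(X^\top \Omega X + D_\tau^{-1}\right)^{-1}, \quad m = V X^\top \kappa, \\
\frac{1}{\tau_j^2} \,\Big|\, \beta_j, \lambda &\sim \mathrm{InvGaussian}\!\left(\frac{\lambda}{|\beta_j|}, \lambda^2\right), & j &= 1, \dots, p.
\end{align*}
```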

Funding Statement

Lin, Betancourt, Goldstein, and Steorts were supported by a seed grant from the Duke Center for Integrative Health at Duke University, Durham, North Carolina.

Disclosure statement

No potential conflict of interest was reported by the authors.

ORCID

Qiaohui Lin http://orcid.org/0000-0003-2792-0176

References

- 1. Bishop C.M., Pattern Recognition and Machine Learning, Springer, 2006.

- 2. Carbonetto P., Zhou X., and Stephens M., varbvs: Fast Variable Selection for Large-scale Regression, arXiv preprint arXiv:1709.06597 (2017).

- 3. Choi H.M. and Hobert J.P., The Polya-Gamma Gibbs sampler for Bayesian logistic regression is uniformly ergodic, Electron. J. Stat. 7 (2013), pp. 2054–2064. doi: 10.1214/13-EJS837

- 4. Ding X., Gellad Z.F., Mather C., Barth P., Poon E.G., Newman M., and Goldstein B.A., Designing risk prediction models for ambulatory no-shows across different specialties and clinics, J. Am. Med. Inform. Assoc. 25 (2018), pp. 924–930. doi: 10.1093/jamia/ocy002

- 5. Drugowitsch J., Variational Bayesian inference for linear and logistic regression, arXiv preprint arXiv:1310.5438v3 (2017).

- 6. El-Sharo M., Zheng B., Yoon S.W., and Khasawneh M.T., An overbooking scheduling model for outpatient appointments in a multi-provider clinic, Oper. Res. Health Care 6 (2015), pp. 1–10. doi: 10.1016/j.orhc.2015.05.004

- 7. Ellis D.A., McQueenie R., McConnachie A., Wilson P., and Williamson A.E., Demographic and practice factors predicting repeated non-attendance in primary care: A national retrospective cohort analysis, The Lancet Public Health 2 (2017), pp. e551–e559. doi: 10.1016/S2468-2667(17)30217-7

- 8. George E.I. and McCulloch R.E., Variable selection via Gibbs sampling, J. Amer. Statist. Assoc. 88 (1993), pp. 881–889. doi: 10.1080/01621459.1993.10476353

- 9. Hwang A.S., Atlas S.J., Cronin P., Ashburner J.M., Shah S.J., He W., and Hong C.S., Appointment ‘no-shows’ are an independent predictor of subsequent quality of care and resource utilization outcomes, 2015. doi: 10.1007/s11606-015-3252-3

- 10. Jaakkola T.S. and Jordan M.I., Bayesian parameter estimation via variational methods, Stat. Comput. 10 (2000), pp. 25–37. doi: 10.1023/A:1008932416310

- 11. Kheirkhah P., Feng Q., Travis L.M., Tavakoli-Tabasi S., and Sharafkhaneh A., Prevalence, predictors and economic consequences of no-shows, BMC Health Serv. Res. 16 (2016), pp. 13. doi: 10.1186/s12913-015-1243-z

- 12. Kusner M., Loftus J., Russell C., and Silva R., Counterfactual fairness, in 31st Conference on Neural Information Processing Systems (NIPS), 2017.

- 13. McQueenie R., Ellis D.A., Wilson P., McConnachie A., and Williamson A.E., Morbidity, mortality and missed appointments in healthcare: A national retrospective data linkage study, BMC Med. 17 (2019), pp. 2. doi: 10.1186/s12916-018-1234-0

- 14. Nuti L.A., Lawley M., Turkcan A., Tian Z., Zhang L., Chang K., and Willis D.R., No-shows to primary care appointments: Subsequent acute care utilization among diabetic patients, 2012. doi: 10.1186/1472-6963-12-304

- 15. Park T. and Casella G., The Bayesian lasso, J. Amer. Statist. Assoc. 103 (2008), pp. 681–686. doi: 10.1198/016214508000000337

- 16. Polson N.G., Scott J.G., and Windle J., Bayesian inference for logistic models using Polya-Gamma latent variables, J. Amer. Statist. Assoc. 108 (2013), pp. 1339–1349. doi: 10.1080/01621459.2013.829001

- 17. Saito H., sprsmdl: Sparse modeling toolkit, R package, 2015.

- 18. Tibshirani R., Regression shrinkage and selection via the lasso, J. R. Stat. Soc. Ser. B (Methodological) 58 (1996), pp. 267–288.

- 19. Tibshirani R., Saunders M., Rosset S., Zhu J., and Knight K., Sparsity and smoothness via the fused lasso, J. R. Stat. Soc. Ser. B 67 (2005), pp. 91–108. doi: 10.1111/j.1467-9868.2005.00490.x

- 20. Tipping M.E., The relevance vector machine, Adv. Neural Inform. Process. Syst. 12 (2000), pp. 652–658.

- 21. Tipping M.E., Sparse Bayesian learning and the relevance vector machine, J. Mach. Learn. Res. 1 (2001), pp. 211–244.

- 22. Williamson A.E., Ellis D.A., Wilson P., McQueenie R., and McConnachie A., Understanding repeated non-attendance in health services: A pilot analysis of administrative data and full study protocol for a national retrospective cohort, BMJ Open 7 (2017), pp. e014120. doi: 10.1136/bmjopen-2016-014120