Abstract

In this application note, we propose and examine the performance of a Bayesian approach for a homoscedastic nonlinear regression (NLR) model with errors following two-piece scale mixtures of normal (TP-SMN) distributions. The TP-SMN family is large, covering both symmetric/asymmetric and light/heavy-tailed distributions, and provides an alternative to another well-known family of distributions, the scale mixtures of skew-normal distributions. The proposed family and Bayesian approach provide considerable flexibility and advantages for NLR modelling in different practical settings. We examine the performance of the approach using simulated and real data.

KEYWORDS: Gibbs sampling, MCMC method, nonlinear regression model, scale mixtures of normal family, two-piece distributions

1. Introduction

Non-linear regression (NLR) models are commonly used to model data in a range of applications, including engineering, biology, geology and finance, to name just a few. However, they commonly assume normally distributed errors, which limits their ability to model the asymmetric and/or outlying data that are relatively common in practice.

Various extensions of non-linear regression models beyond normal errors, including asymmetric error distributions, have recently been proposed for different fields and phenomena (see, e.g. [9,33,8,4,34,35,24,29]). These models involve distributional classes that may be symmetric or asymmetric and light- or heavy-tailed. Montenegro et al. [24], Cancho et al. [5] and Labra et al. [14] are notable and highly cited works that introduced, and showed the advantages of, NLR models with errors from the flexible scale mixtures of skew-normal (SMSN) family, called SMSN-NLR models. The SMSN family [3] is a flexible class of distributions that extends the well-known symmetric scale mixtures of normal (SMN) family [2] to allow for skewness, and covers light/heavy-tailed and symmetric/asymmetric distributions such as the skew-normal (SN), skew-t (ST), skew-slash (SSL) and skew contaminated-normal (SCN) distributions. Additionally, this family has been widely used in many statistical models (see [17,19,23,36,11] and references therein).

Another skewed extension of the SMN family, based on two-piece distributions with different scales (constructed from symmetric distributions [1]), was introduced by Maleki and Mahmoudi [20] and called the two-piece scale mixtures of normal (TP-SMN) family. The TP-SMN family is an analogue of, and a competitor to, the well-known SMSN family, and contains light/heavy-tailed and symmetric/asymmetric members, including the two-piece normal (TP-N), two-piece t (TP-T), two-piece slash (TP-SL) and two-piece contaminated-normal (TP-CN) distributions. Maleki et al. [15] and Hoseinzadeh et al. [12] proposed and examined the TP-SMN family in the context of NLR models (TP-SMN-NLR), using an EM-type algorithm to obtain maximum-likelihood estimates of the parameters. However, for non-linear regression models, such approaches often face computational difficulties in high dimensions and are often unsuitable for noisy data unless they include some form of regularization on the parameter space.

In this paper, we extend the previous work to a Bayesian approach for TP-SMN-NLR models, which aims to provide more flexibility and advantages than previous approaches in different settings. In this extension, we derive a hierarchical representation of the model (different from the one in [15]) that is computationally attractive and facilitates the use of Markov chain Monte Carlo (MCMC) methods, via a Gibbs sampling scheme, to obtain Bayes estimates.

The rest of this paper is organized as follows. In Section 2, some important properties of the TP-SMN family are reviewed and a stochastic representation suitable for the Bayesian approach is proposed. In Section 3, the TP-SMN-NLR models are introduced and their hierarchical representation is outlined. In Section 4, the MCMC approach based on Gibbs sampling is described, including the choice of prior distributions and model selection criteria. In Section 5, the performance of the approach is examined using simulation studies and two challenging real datasets from the NLR literature. Final conclusions are given in Section 6.

2. Some properties of the TP-SMN family

In this section, we present some properties of the TP-SMN distributions which have been used to implement the Bayesian analysis of the non-linear regression model (some of these properties have also been outlined in [15]).

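The well-known SMN family introduced by Andrews and Mallows [2], denoted by $\mathrm{SMN}(\mu,\sigma^2,\boldsymbol\nu)$, is the basis of the asymmetric TP-SMN family [20]; its probability density function (pdf) and stochastic representation are, respectively,

$$f_{\mathrm{SMN}}(y;\mu,\sigma^2,\boldsymbol\nu)=\int_0^\infty \phi\left(y;\mu,u^{-1}\sigma^2\right)\,dH(u;\boldsymbol\nu), \tag{1}$$

$$Y=\mu+U^{-1/2}X,\qquad X\sim N(0,\sigma^2), \tag{2}$$

where $\phi(\cdot;\mu,\sigma^2)$ represents the density of the $N(\mu,\sigma^2)$ distribution, $H(\cdot;\boldsymbol\nu)$ is the cumulative distribution function (cdf) of the scale mixing random variable $U$, which can be indexed by a scalar or vector of parameters $\boldsymbol\nu$, and $U$ is independent of $X$.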
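As a numerical illustration of (1), the sketch below evaluates the SMN density by integrating the normal density against the mixing distribution, assuming the Student-t choice $U\sim\mathrm{Gamma}(\nu/2,\nu/2)$ (shape, rate), and compares the result with the closed-form Student-t density. The function names and the plain midpoint rule on a truncated range are illustrative choices, adequate for moderate $\nu$; they are not part of the original methodology.

```python
import math


def normal_pdf(y, mu, var):
    """Density of N(mu, var) evaluated at y."""
    return math.exp(-0.5 * (y - mu) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def gamma_pdf(u, shape, rate):
    """Density of Gamma(shape, rate) evaluated at u > 0."""
    return math.exp(shape * math.log(rate) + (shape - 1.0) * math.log(u)
                    - rate * u - math.lgamma(shape))


def smn_pdf_t(y, mu, sigma2, nu, upper=40.0, steps=20000):
    """Evaluate (1) with U ~ Gamma(nu/2, rate nu/2) by a midpoint rule on (0, upper)."""
    h = upper / steps
    total = 0.0
    for k in range(steps):
        u = (k + 0.5) * h
        total += normal_pdf(y, mu, sigma2 / u) * gamma_pdf(u, nu / 2.0, nu / 2.0)
    return total * h


def student_t_pdf(y, mu, sigma2, nu):
    """Closed-form location-scale Student-t density, for comparison."""
    z2 = (y - mu) ** 2 / sigma2
    log_c = (math.lgamma((nu + 1.0) / 2.0) - math.lgamma(nu / 2.0)
             - 0.5 * math.log(nu * math.pi * sigma2))
    return math.exp(log_c - (nu + 1.0) / 2.0 * math.log1p(z2 / nu))


if __name__ == "__main__":
    for y in (-2.0, 0.0, 1.5):
        print(y, smn_pdf_t(y, 0.0, 1.0, 4.0), student_t_pdf(y, 0.0, 1.0, 4.0))
```

The two columns printed by the usage example should agree to several decimal places, confirming that the gamma scale mixture in (1) reproduces the Student-t density.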

The TP-SMN family is a rich family of distributions that covers the asymmetric light-tailed TP-N distribution (also called the epsilon-skew-normal [26,18]), the asymmetric heavy-tailed TP-T, TP-SL and TP-CN distributions, and their symmetric counterparts. In terms of the density, for $y\in\mathbb{R}$, this family can be represented as

| (3) |

where is the slant parameter, is given by (1), and it is denoted by TP-SMN with

for which , and and is the scale mixing random variable in (2).

There are a range of different TP-SMN member distributions which are possible from (3) using different distributions for the scale mixing random variable in (2), as follows:

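Two-Piece Normal (TP-N): $U=1$ with probability one,

Two-Piece t (TP-T): $U\sim\mathrm{Gamma}(\nu/2,\nu/2)$ (shape, rate), i.e. $h(u;\nu)=\frac{(\nu/2)^{\nu/2}}{\Gamma(\nu/2)}u^{\nu/2-1}e^{-\nu u/2}$, $u>0$,

Two-Piece Slash (TP-SL): $U\sim\mathrm{Beta}(\nu,1)$, i.e. $h(u;\nu)=\nu u^{\nu-1}$, $0<u<1$,

Two-Piece Contaminated Normal (TP-CN): $U\in\{\rho,1\}$, i.e. $h(u;\boldsymbol\nu)=\nu\,\mathbb{I}(u=\rho)+(1-\nu)\,\mathbb{I}(u=1)$ with $\boldsymbol\nu=(\nu,\rho)$ and $0<\nu,\rho<1$, where $h$ denotes the pdf (pmf for TP-CN) of $U$.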
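Using the stochastic representation (2), each member above can be simulated by first drawing $U$ and then setting $Y=\mu+U^{-1/2}X$ with $X\sim N(0,\sigma^2)$. The following sketch does this for the symmetric SMN members, assuming the standard mixing distributions listed above (for the contaminated normal, the mixing parameter is passed as the pair (contamination probability, $\rho$)); the function names are hypothetical. Two-piece variates additionally require the side allocation of (4), which is not reproduced here.

```python
import random


def draw_u(member, nu, rng):
    """Draw the scale mixing variable U for one SMN member."""
    if member == "N":
        return 1.0
    if member == "T":
        # U ~ Gamma(nu/2, rate nu/2); random.gammavariate takes (shape, scale).
        return rng.gammavariate(nu / 2.0, 2.0 / nu)
    if member == "SL":
        # U ~ Beta(nu, 1)
        return rng.betavariate(nu, 1.0)
    if member == "CN":
        # nu = (contamination probability, rho): U = rho w.p. prob, else U = 1
        prob, rho = nu
        return rho if rng.random() < prob else 1.0
    raise ValueError(f"unknown SMN member: {member}")


def draw_smn(member, mu, sigma2, nu, n, seed=0):
    """Draw n variates from Y = mu + U^(-1/2) X with X ~ N(0, sigma2), as in (2)."""
    rng = random.Random(seed)
    sd = sigma2 ** 0.5
    out = []
    for _ in range(n):
        u = draw_u(member, nu, rng)
        out.append(mu + rng.gauss(0.0, sd) / u ** 0.5)
    return out


if __name__ == "__main__":
    ys = draw_smn("T", 0.0, 1.0, 5.0, 100000, seed=1)
    # The second moment should be close to nu / (nu - 2) = 5/3 for nu = 5.
    print(sum(y * y for y in ys) / len(ys))
```

As a quick sanity check, the variance of $Y$ is $\sigma^2E(U^{-1})$, which equals $\sigma^2\nu/(\nu-2)$ for the t member and $\sigma^2\nu/(\nu-1)$ for the slash member.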

For more details on stochastic representations, statistical inference and applications of two-piece distributions, especially the TP-SMN family, see Arellano-Valle et al. [1], Maleki and Mahmoudi [20], Hoseinzadeh et al. [13,7], Moravveji et al. [25], Maleki et al. [15,16], Maleki et al. [21,22] and Ghasami et al. [10].

By introducing an auxiliary (latent) variable Z that indicates the component of the mixture (3), the TP-SMN random variable has the following stochastic representation

| (4) |

where and denotes the truncated SMN-distribution on the interval , and has a probability mass function (pmf),

| (5) |

Note that this distribution for Z differs from the distribution used in Maleki et al. [15] to obtain ML estimates.

3. TP-SMN nonlinear regression

In this section, we introduce the TP-SMN nonlinear regression (TP-SMN-NLR) model and outline the hierarchical representation which will be needed for the Bayesian approach in Section 4.

3.1. TP-SMN-NLR models

The TP-SMN non-linear regression model is defined by

$$y_i=\eta(\boldsymbol\beta,\mathbf{x}_i)+\varepsilon_i,\qquad i=1,\dots,n, \tag{6}$$

where is a vector of nonlinear regression parameters, is a response variable, is an injective and twice continuously differentiable function with respect to the parameter vector , is a vector of fixed explanatory variables for subject and with the random errors TP-SMN , where and . Following properties of the TP-SMN family, we have and

| (7) |

with vector of parameters . Note that and has the pdf given by

| (8) |

where is the TP-SMN density given in (3).

From Equations (6)–(7) and the stochastic representation of the TP-SMN family in (4), it follows that the proposed TP-SMN-NLR model can be written in a convenient hierarchical form as

| (9) |

for and , where and denotes the truncated normal distribution on the interval . Note that the hierarchical representation (9) differs from the one in Maleki et al. [15] and facilitates a Bayesian approach in which posteriors are obtained by Gibbs sampling.
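Drawing from normal distributions truncated to an interval, as in (9), is a recurring step in the Gibbs sampler. A minimal inverse-CDF sketch using only Python's standard library (`statistics.NormalDist`) is given below; the function name `rtruncnorm` and the clamping of the uniform draw are illustrative choices. For intervals lying far in a tail, a dedicated sampler (for example, exponential rejection sampling) is numerically safer than the plain inverse CDF.

```python
import math
import random
from statistics import NormalDist


def rtruncnorm(mu, sd, lower=-math.inf, upper=math.inf, rng=random):
    """Draw one value from N(mu, sd^2) truncated to (lower, upper) by inverse CDF."""
    std = NormalDist()
    a = std.cdf((lower - mu) / sd) if lower > -math.inf else 0.0
    b = std.cdf((upper - mu) / sd) if upper < math.inf else 1.0
    p = a + (b - a) * rng.random()
    # inv_cdf is only defined on the open interval (0, 1).
    p = min(max(p, 1e-12), 1.0 - 1e-12)
    return mu + sd * std.inv_cdf(p)


if __name__ == "__main__":
    rng = random.Random(0)
    # A normal truncated at its own location gives a half-normal on (mu, inf).
    print([round(rtruncnorm(1.0, 2.0, lower=1.0, rng=rng), 3) for _ in range(5)])
```

When the truncation point equals the location, as in the usage example, the draw is half-normal with mean $\mu+\sigma\sqrt{2/\pi}$.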

To define the complete log-likelihood for use in Gibbs sampling, let be the complete data in the form of the and , be the missing parts of the data and for which , be the i.i.d. observed part of the data from TP-SMN-NLR models with vector of parameters . Considering the hierarchical representation (9), the completed (augmented) likelihood function is given by

| (10) |

where .

4. Bayesian approach of TP-SMN-NLR models

In this section, we develop a Bayesian approach for the TP-SMN-NLR models defined by (6)–(7). Some standard model selection criteria are also presented.

4.1. Prior distributions

To complete the Bayesian specification of the TP-SMN-NLR models, we need to consider prior distributions for all the unknown parameters , and . We assign conjugate but weakly informative prior distributions to the parameters, because we assume that no prior information from historical data or previous experiments exists. Also, to guarantee proper posteriors, proper priors with known hyper-parameters are adopted. The following prior distributions are therefore specified:

i.e. normal prior distributions for the elements of the regression parameter vector. Also, because of conjugacy, we consider the following priors for the scale and shape parameters:

The prior distribution assigned to the mixing parameter varies with the particular TP-SMN distribution as follows:

TP-T distribution: with mean before truncation. The truncation to the interval $(2,\infty)$ was chosen to ensure a finite error variance.

TP-SL distribution: with small positive values and ( ).

TP-CN distribution: non-informative, independent prior distributions are adopted for each component of .

The values assigned to the hyper-parameters in our methodology guarantee the propriety of the posterior distributions and are chosen to give weakly informative prior distributions. Note that more informative prior distributions could be used in the case of noisy data, particularly for the parameters in the error term. In high-dimensional applications there is also interest in regularization of the regression coefficients, for which more informative priors could be used. However, different types of prior distributions and an examination of their performance are outside the scope of this paper.

4.2. Posterior distributions

Assuming a joint prior distribution for given by , the joint posterior distribution of with unobserved variables is where is given in (10). Since this posterior distribution is not analytically tractable, MCMC methods such as the Gibbs sampler are used to draw samples from the full conditional distributions given by

where is the number of positive latent allocation variables , and .

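Note that the full conditional posterior of the non-linear regression coefficient vector $\boldsymbol\beta$ cannot be sampled from directly, so a Metropolis–Hastings step within the Gibbs iterations is used [6]. To generate a sample of $\boldsymbol\beta$ at the $k$th iteration of the chain, we draw a candidate $\boldsymbol\beta^{*}$ from $N(\boldsymbol\beta^{(k-1)},\boldsymbol\Sigma_{\beta})$ and a random number $u$ from $U(0,1)$, then set the new value $\boldsymbol\beta^{(k)}$ to be either $\boldsymbol\beta^{*}$ or $\boldsymbol\beta^{(k-1)}$ depending on whether

$$u\le\min\left\{1,\ \frac{\pi(\boldsymbol\beta^{*}\mid\cdot)}{\pi(\boldsymbol\beta^{(k-1)}\mid\cdot)}\right\},$$

where $\pi(\boldsymbol\beta\mid\cdot)$ denotes the full conditional density of $\boldsymbol\beta$.

The matrix $\boldsymbol\Sigma_{\beta}$ is a proposal covariance matrix that can be adapted as the chain progresses, or tuned after generating an initial MCMC chain, to achieve efficient convergence.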
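The Metropolis–Hastings update for the regression coefficients can be sketched as a random-walk step with a normal proposal centred at the current value. In the sketch, the log full conditional is passed in as a function; in the TP-SMN-NLR model it would be the logarithm of the augmented likelihood (10) times the prior of the regression coefficients, with all other quantities held at their current values. To keep the example self-contained, a toy bivariate normal target (`toy_log_target`) is used instead, and the proposal covariance is supplied through its lower-triangular Cholesky factor. All names are hypothetical.

```python
import math
import random


def rw_metropolis_step(beta, log_target, chol, rng):
    """One random-walk Metropolis update with proposal N(beta, L L^T).

    beta: current value (list of floats); chol: lower-triangular factor L.
    Returns the new value and whether the proposal was accepted."""
    p = len(beta)
    z = [rng.gauss(0.0, 1.0) for _ in range(p)]
    proposal = [beta[i] + sum(chol[i][j] * z[j] for j in range(i + 1))
                for i in range(p)]
    log_ratio = log_target(proposal) - log_target(beta)
    # u ~ U(0, 1]; accepting when log(u) < log ratio gives acceptance prob min(1, ratio).
    if math.log(1.0 - rng.random()) < log_ratio:
        return proposal, True
    return beta, False


def run_chain(log_target, init, chol, n_iter, burn_in, seed=0):
    """Run the sampler, keeping draws after burn_in; returns (draws, acceptance rate)."""
    rng = random.Random(seed)
    beta, draws, n_acc = list(init), [], 0
    for k in range(n_iter):
        beta, accepted = rw_metropolis_step(beta, log_target, chol, rng)
        n_acc += accepted
        if k >= burn_in:
            draws.append(beta)
    return draws, n_acc / n_iter


def toy_log_target(b):
    """Toy stand-in for log pi(beta | ...): independent N(10, 1) and N(0.2, 0.1^2)."""
    return -0.5 * ((b[0] - 10.0) ** 2 + ((b[1] - 0.2) / 0.1) ** 2)


if __name__ == "__main__":
    draws, rate = run_chain(toy_log_target, [9.0, 0.0], [[1.0, 0.0], [0.0, 0.1]],
                            n_iter=20000, burn_in=5000, seed=1)
    print(rate, sum(d[0] for d in draws) / len(draws))
```

Because the proposal is symmetric, the proposal densities cancel in the acceptance ratio, which is why only the target appears in `log_ratio`.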

To complete the MCMC sampling scheme, we need the full conditional distributions of the latent allocation variables and the latent scale variables for each observation, and of the additional mixing parameter, which depends on the specific member of the TP-SMN family. To do this, we have that

where and .

Then, by defining , we obtain for that:

- TP-T-NLR:

(11)

Note that (11) does not have a closed form, but a Metropolis-Hastings algorithm or rejection sampling steps can be embedded in the MCMC scheme to obtain draws for .

- TP-SL-NLR:

- TP-CN-NLR:

where , and

| (12) |

Note that a Metropolis-Hastings algorithm can be embedded in the MCMC scheme to obtain draws for in (12), which is described in Rosa et al. [30].
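For the latent scale variables, the updates take familiar SMN forms once each residual is standardized by the scale of the piece it falls in. The sketch below assumes that, given its allocation, $y_i$ follows a normal distribution with variance $\sigma_{(z)}^2/U_i$ truncated to a half-line that starts at the location $\eta(\boldsymbol\beta,\mathbf{x}_i)$, where $\sigma_{(z)}$ is a hypothetical name for the scale of that piece. Because the truncation point is the location, the truncation constant does not involve $U_i$, so with $d_i=(y_i-\eta_i)^2/\sigma_{(z)}^2$ the TP-T update is $U_i\mid\cdot\sim\mathrm{Gamma}((\nu+1)/2,(\nu+d_i)/2)$ (shape, rate) and the TP-CN update is a two-point draw on $\{\rho,1\}$. Only these two cases are shown; the TP-SL case needs a gamma distribution truncated to $(0,1)$.

```python
import math
import random


def standardized_sq_residual(y, eta, piece_scale):
    """d_i = (y_i - eta_i)^2 / sigma_(z)^2, using the scale of the piece y_i falls in."""
    return (y - eta) ** 2 / piece_scale ** 2


def draw_u_t(d, nu, rng):
    """TP-T case: U | ... ~ Gamma((nu + 1)/2, rate (nu + d)/2)."""
    shape = (nu + 1.0) / 2.0
    rate = (nu + d) / 2.0
    return rng.gammavariate(shape, 1.0 / rate)  # gammavariate takes a scale


def prob_u_rho_cn(d, prob, rho):
    """TP-CN case: P(U = rho | ...) against U = 1, on the log scale (assumes 0 < rho < 1)."""
    log_w_rho = math.log(prob) + 0.5 * math.log(rho) - 0.5 * rho * d
    log_w_one = math.log(1.0 - prob) - 0.5 * d
    return 1.0 / (1.0 + math.exp(log_w_one - log_w_rho))


def draw_u_cn(d, prob, rho, rng):
    """TP-CN case: draw U from {rho, 1}."""
    return rho if rng.random() < prob_u_rho_cn(d, prob, rho) else 1.0


if __name__ == "__main__":
    rng = random.Random(0)
    d = standardized_sq_residual(3.0, 1.0, 2.0)
    print(d, draw_u_t(d, 4.0, rng), prob_u_rho_cn(d, 0.1, 0.2))
```

Large standardized residuals push the TP-T draws of $U_i$ towards zero and the TP-CN draws towards the inflated-variance state $\rho$, which is how these models downweight outlying observations.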

4.3. Model selection criteria

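Let $\{\boldsymbol\theta^{(1)},\dots,\boldsymbol\theta^{(M)}\}$ be a sample of size $M$ from the posterior $\pi(\boldsymbol\theta\mid\mathbf{y})$ after a suitable burn-in period for the chain. A commonly used model selection criterion is the deviance information criterion (DIC). The posterior mean of the deviance $D(\boldsymbol\theta)=-2\sum_{i=1}^{n}\log f(y_i\mid\boldsymbol\theta)$ can be estimated by $\bar D=\frac{1}{M}\sum_{l=1}^{M}D(\boldsymbol\theta^{(l)})$, where $f(y_i\mid\boldsymbol\theta)$ is the density of $y_i$ under the model in (9), and, using the estimates from the MCMC chain, the DIC can be estimated by $\widehat{\mathrm{DIC}}=\bar D+\hat\rho_D$, where $\hat\rho_D=\bar D-D(\tilde{\boldsymbol\theta})$ and $\tilde{\boldsymbol\theta}=\frac{1}{M}\sum_{l=1}^{M}\boldsymbol\theta^{(l)}$. Other model selection criteria based on the posterior mean of the deviance, which penalize the number of parameters in the model, can also be used, such as the expected Akaike information criterion (EAIC) and the expected Bayesian information criterion (EBIC). These criteria can be estimated by $\widehat{\mathrm{EAIC}}=\bar D+2\vartheta$ and $\widehat{\mathrm{EBIC}}=\bar D+\vartheta\log n$, respectively, where $\vartheta$ is the number of unknown parameters of the TP-SMN-NLR model and $n$ is the sample size. For all three criteria, smaller values indicate a better-fitting model.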
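The three criteria are simple functions of the deviance draws: with $\bar D$ the mean deviance over the post-burn-in draws and $D(\tilde{\boldsymbol\theta})$ the deviance at the posterior mean, DIC $=2\bar D-D(\tilde{\boldsymbol\theta})$, EAIC $=\bar D+2\vartheta$ and EBIC $=\bar D+\vartheta\log n$. The sketch below computes them from precomputed deviances; how the log-densities are obtained for a particular TP-SMN-NLR model is left to the caller, and the function names are hypothetical.

```python
import math


def deviance(log_densities):
    """D(theta) = -2 * sum_i log f(y_i | theta), given the per-observation log-densities."""
    return -2.0 * sum(log_densities)


def model_selection_criteria(deviance_draws, deviance_at_mean, n_params, n_obs):
    """Estimate DIC, EAIC and EBIC from MCMC output.

    deviance_draws: D(theta^(l)) for the M post-burn-in draws;
    deviance_at_mean: D evaluated at the posterior mean of theta."""
    d_bar = sum(deviance_draws) / len(deviance_draws)  # posterior mean deviance
    rho_d = d_bar - deviance_at_mean                    # effective number of parameters
    return {
        "DIC": d_bar + rho_d,
        "EAIC": d_bar + 2 * n_params,
        "EBIC": d_bar + n_params * math.log(n_obs),
    }


if __name__ == "__main__":
    print(model_selection_criteria([10.0, 12.0, 14.0], 11.0, n_params=5, n_obs=100))
```

Since all three criteria share the term $\bar D$, they differ only in how strongly they penalize model complexity; EBIC penalizes more heavily than EAIC whenever $\log n>2$.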

5. Numerical studies

The general performance of TP-SMN-NLR models has been demonstrated in Maleki et al. [15] using simulations and some well-known real datasets, so in this section simulation studies and real examples are presented to evaluate the performance of the Bayesian estimates of the proposed TP-SMN-NLR models. Results are compared with those of the well-known scale mixtures of skew-normal non-linear regression (SMSN-NLR) models.

For the simulation study, we evaluate the performance of the Bayesian estimates based on the posterior mean for various TP-SMN-NLR models in three situations: weakly, moderately and strongly skewed errors. All simulations use the following weakly informative prior distributions: , and , with for the TP-T-NLR model, for the TP-SL-NLR model, and independent of for the TP-CN-NLR model. For each generated data set, the Gibbs sampler is run for 45,000 iterations with a burn-in of 15,000 iterations (to eliminate the effect of the initial values and to avoid correlation problems), and the chains are monitored for convergence. Initial values for the NLR parameters are obtained by least squares (LS), and initial values for the TP-SMN distribution parameters by the method of moments (MM) of Maleki and Mahmoudi [20] applied to the LS residuals. The algorithms are implemented in R [31] version 3.6.1 on a Core i7 760 2.8 GHz processor.

5.1. Simulations

In this part, we simulate data from a nonlinear regression model based on the logistic function, given as

| (13) |

with , , , , where for the TP-T and TP-SL cases and for the TP-CN case, each with 400 Monte Carlo generated data sets. The covariate values are generated from a standard univariate normal distribution and held fixed throughout the simulations.

We conduct two experiments: Experiment 1 examines parameter recovery, and Experiment 2 examines the performance of model selection criteria. In Experiment 1, we consider different scenarios ( ) to verify that the proposed estimation method recovers the true parameter values accurately. In Experiment 2, we compare the ability of some classic model selection criteria to select the appropriate model among different candidate models, including the SMSN family.

5.1.1. Experiment 1

For the first simulation study, we consider three values of the slant parameter (corresponding, respectively, to strong, moderate and weak skewness) and several sample sizes. For each of these settings, we compute for every model parameter $\theta_i$ the arithmetic average of the 100 Bayesian estimates (replications),

$$\mathrm{MC\text{-}Mean}(\hat\theta_i)=\frac{1}{100}\sum_{j=1}^{100}\hat\theta_i^{(j)},$$

and the empirical mean squared error,

$$\mathrm{MC\text{-}MSE}(\hat\theta_i)=\frac{1}{100}\sum_{j=1}^{100}\left(\hat\theta_i^{(j)}-\theta_i\right)^2,$$

where MC indicates the arithmetic average of the respective criterion and $\hat\theta_i^{(j)}$ is the estimate of $\theta_i$ (the posterior mean) obtained from the $j$th sample. The results for the different fitted TP-SMN-NLR models are shown in Tables 1–3 and indicate good performance of the proposed Bayesian estimates across the various sample sizes. In particular, the parameter estimates are all relatively close to the true parameter values, with reasonable accuracy across the Monte Carlo datasets.
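A minimal sketch of these Monte Carlo summaries is given below: each replicate's estimate is the posterior mean of its post-burn-in draws, and MC-Mean and MC-MSE are then plain averages over replicates, i.e. $\frac{1}{R}\sum_j\hat\theta^{(j)}$ and $\frac{1}{R}\sum_j(\hat\theta^{(j)}-\theta)^2$ for $R$ replicates. The function names are hypothetical, and the MCMC output is assumed to be available as plain lists of draws.

```python
def posterior_mean(chain, burn_in):
    """Posterior mean of a scalar parameter from one chain, discarding the burn-in."""
    kept = chain[burn_in:]
    return sum(kept) / len(kept)


def mc_summary(estimates, true_value):
    """MC-Mean and MC-MSE of one parameter over R Monte Carlo replications."""
    r = len(estimates)
    mc_mean = sum(estimates) / r
    mc_mse = sum((e - true_value) ** 2 for e in estimates) / r
    return mc_mean, mc_mse


if __name__ == "__main__":
    print(mc_summary([9.5, 10.5, 10.0], true_value=10.0))
```

With the settings above, each replicate would contribute `posterior_mean(chain, 15000)` from a chain of 45,000 iterations.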

Table 1.

The arithmetic average of the Bayesian estimates (MC-Mean) and empirical mean squared error (MC-MSE) for the logistic TP-SMN-NLR models with strong skewness.

| Par. | TP-N-NLR MC-Mean | TP-N-NLR MC-MSE | TP-T-NLR MC-Mean | TP-T-NLR MC-MSE | TP-SL-NLR MC-Mean | TP-SL-NLR MC-MSE | TP-CN-NLR MC-Mean | TP-CN-NLR MC-MSE |
|---|---|---|---|---|---|---|---|---|
|  | 10.311 | 0.769 | 9.746 | 0.874 | 9.642 | 0.911 | 9.597 | 0.964 |
|  | 1.262 | 0.665 | 1.271 | 0.623 | 1.232 | 0.644 | 1.228 | 0.677 |
|  | 0.227 | 0.110 | 0.181 | 0.103 | 0.222 | 0.125 | 0.213 | 0.123 |
|  | 1.298 | 0.311 | 1.302 | 0.312 | 1.278 | 0.315 | 1.307 | 0.345 |
|  | 0.039 | 0.028 | 0.032 | 0.075 | 0.035 | 0.033 | 0.034 | 0.027 |
|  | – | – | 6.643 | 0.594 | 5.203 | 0.583 | 0.410 | 0.232 |
|  | – | – | – | – | – | – | 0.602 | 0.311 |
|  | 10.265 | 0.713 | 10.338 | 0.862 | 9.569 | 0.869 | 10.358 | 0.883 |
|  | 1.238 | 0.627 | 1.235 | 0.618 | 1.223 | 0.621 | 1.218 | 0.637 |
|  | 0.178 | 0.102 | 0.215 | 0.098 | 0.184 | 0.119 | 0.211 | 0.127 |
|  | 1.223 | 0.270 | 1.247 | 0.291 | 1.254 | 0.288 | 1.269 | 0.258 |
|  | 0.056 | 0.016 | 0.040 | 0.023 | 0.061 | 0.020 | 0.062 | 0.029 |
|  | – | – | 5.711 | 0.507 | 6.305 | 0.497 | 0.550 | 0.221 |
|  | – | – | – | – | – | – | 0.567 | 0.247 |
|  | 9.781 | 0.492 | 10.265 | 0.487 | 10.198 | 0.413 | 10.222 | 0.413 |
|  | 1.118 | 0.517 | 1.133 | 0.504 | 1.124 | 0.534 | 1.129 | 0.540 |
|  | 0.208 | 0.088 | 0.193 | 0.079 | 0.210 | 0.092 | 0.207 | 0.090 |
|  | 1.127 | 0.242 | 1.120 | 0.246 | 1.131 | 0.251 | 1.121 | 0.228 |
|  | 0.052 | 0.009 | 0.053 | 0.010 | 0.054 | 0.011 | 0.055 | 0.013 |
|  | – | – | 6.051 | 0.445 | 6.080 | 0.431 | 0.529 | 0.170 |
|  | – | – | – | – | – | – | 0.520 | 0.146 |
|  | 10.118 | 0.401 | 10.132 | 0.424 | 10.157 | 0.401 | 9.879 | 0.397 |
|  | 1.087 | 0.496 | 1.092 | 0.500 | 1.102 | 0.497 | 1.100 | 0.489 |
|  | 0.194 | 0.069 | 0.206 | 0.077 | 0.195 | 0.085 | 0.203 | 0.081 |
|  | 1.118 | 0.229 | 1.101 | 0.211 | 1.110 | 0.207 | 1.104 | 0.201 |
|  | 0.049 | 0.010 | 0.052 | 0.008 | 0.053 | 0.009 | 0.051 | 0.011 |
|  | – | – | 5.881 | 0.421 | 6.072 | 0.449 | 0.487 | 0.156 |
|  | – | – | – | – | – | – | 0.513 | 0.142 |

Table 2.

The arithmetic average of the Bayesian estimates (MC-Mean) and empirical mean squared error (MC-MSE) for the logistic TP-SMN-NLR models with moderate skewness.

| Par. | TP-N-NLR MC-Mean | TP-N-NLR MC-MSE | TP-T-NLR MC-Mean | TP-T-NLR MC-MSE | TP-SL-NLR MC-Mean | TP-SL-NLR MC-MSE | TP-CN-NLR MC-Mean | TP-CN-NLR MC-MSE |
|---|---|---|---|---|---|---|---|---|
|  | 10.298 | 0.752 | 10.312 | 0.913 | 9.512 | 0.904 | 10.300 | 0.872 |
|  | 1.274 | 0.692 | 1.269 | 0.652 | 1.301 | 0.684 | 1.251 | 0.690 |
|  | 0.171 | 0.118 | 0.219 | 0.112 | 0.220 | 0.125 | 0.218 | 0.131 |
|  | 1.312 | 0.341 | 1.287 | 0.322 | 0.731 | 0.307 | 1.296 | 0.312 |
|  | 0.234 | 0.072 | 0.263 | 0.068 | 0.261 | 0.061 | 0.235 | 0.061 |
|  | – | – | 5.842 | 0.499 | 6.181 | 0.511 | 0.527 | 0.312 |
|  | – | – | – | – | – | – | 0.532 | 0.299 |
|  | 10.253 | 0.720 | 9.732 | 0.807 | 10.398 | 0.766 | 10.247 | 0.754 |
|  | 1.248 | 0.646 | 1.243 | 0.642 | 1.250 | 0.651 | 1.244 | 0.660 |
|  | 0.217 | 0.108 | 0.182 | 0.106 | 0.216 | 0.111 | 0.218 | 0.120 |
|  | 0.793 | 0.293 | 1.230 | 0.291 | 1.262 | 0.275 | 1.239 | 0.281 |
|  | 0.260 | 0.062 | 0.264 | 0.058 | 0.262 | 0.059 | 0.260 | 0.051 |
|  | – | – | 6.147 | 0.461 | 5.888 | 0.498 | 0.471 | 0.300 |
|  | – | – | – | – | – | – | 0.525 | 0.321 |
|  | 9.781 | 0.501 | 10.212 | 0.489 | 10.263 | 0.465 | 10.220 | 0.492 |
|  | 1.156 | 0.476 | 1.174 | 0.511 | 1.143 | 0.532 | 1.169 | 0.498 |
|  | 0.208 | 0.091 | 0.209 | 0.086 | 0.191 | 0.090 | 0.189 | 0.095 |
|  | 1.118 | 0.234 | 1.125 | 0.217 | 1.108 | 0.205 | 0.897 | 0.199 |
|  | 0.256 | 0.049 | 0.255 | 0.051 | 0.234 | 0.060 | 0.232 | 0.055 |
|  | – | – | 5.893 | 0.406 | 6.077 | 0.422 | 0.483 | 0.247 |
|  | – | – | – | – | – | – | 0.513 | 0.253 |
|  | 10.142 | 0.417 | 10.122 | 0.421 | 10.178 | 0.431 | 9.854 | 0.418 |
|  | 1.137 | 0.440 | 1.129 | 0.452 | 1.130 | 0.456 | 1.119 | 0.437 |
|  | 0.193 | 0.087 | 0.192 | 0.084 | 0.206 | 0.088 | 0.201 | 0.091 |
|  | 1.107 | 0.226 | 0.928 | 0.213 | 1.089 | 0.198 | 1.084 | 0.190 |
|  | 0.248 | 0.047 | 0.253 | 0.046 | 0.256 | 0.050 | 0.253 | 0.055 |
|  | – | – | 6.043 | 0.387 | 6.059 | 0.391 | 0.491 | 0.239 |
|  | – | – | – | – | – | – | 0.506 | 0.248 |

Table 3.

The arithmetic average of the Bayesian estimates (MC-Mean) and empirical mean squared error (MC-MSE) for the logistic TP-SMN-NLR models with weak skewness.

| Par. | TP-N-NLR MC-Mean | TP-N-NLR MC-MSE | TP-T-NLR MC-Mean | TP-T-NLR MC-MSE | TP-SL-NLR MC-Mean | TP-SL-NLR MC-MSE | TP-CN-NLR MC-Mean | TP-CN-NLR MC-MSE |
|---|---|---|---|---|---|---|---|---|
|  | 9.497 | 0.797 | 10.334 | 0.927 | 9.496 | 0.909 | 10.421 | 0.915 |
|  | 1.286 | 0.712 | 1.274 | 0.735 | 1.292 | 0.737 | 1.282 | 0.747 |
|  | 0.224 | 0.123 | 0.223 | 0.119 | 0.179 | 0.120 | 0.181 | 0.126 |
|  | 0.674 | 0.350 | 1.311 | 0.347 | 1.303 | 0.356 | 0.694 | 0.338 |
|  | 0.433 | 0.071 | 0.468 | 0.069 | 0.475 | 0.071 | 0.474 | 0.079 |
|  | – | – | 6.223 | 0.521 | 6.308 | 0.530 | 0.537 | 0.351 |
|  | – | – | – | – | – | – | 0.466 | 0.347 |
|  | 10.404 | 0.701 | 10.323 | 0.727 | 9.611 | 0.720 | 10.412 | 0.711 |
|  | 1.251 | 0.676 | 1.255 | 0.685 | 1.241 | 0.655 | 1.227 | 0.690 |
|  | 0.178 | 0.119 | 0.217 | 0.120 | 0.218 | 0.117 | 0.219 | 0.122 |
|  | 1.239 | 0.287 | 0.680 | 0.292 | 1.265 | 0.301 | 1.264 | 0.287 |
|  | 0.438 | 0.069 | 0.467 | 0.066 | 0.465 | 0.071 | 0.435 | 0.073 |
|  | – | – | 5.798 | 0.518 | 6.288 | 0.512 | 0.531 | 0.342 |
|  | – | – | – | – | – | – | 0.540 | 0.356 |
|  | 10.358 | 0.677 | 9.680 | 0.700 | 10.338 | 0.692 | 10.361 | 0.685 |
|  | 1.174 | 0.501 | 1.191 | 0.524 | 1.165 | 0.513 | 1.160 | 0.560 |
|  | 0.211 | 0.100 | 0.189 | 0.096 | 0.209 | 0.099 | 0.210 | 0.097 |
|  | 1.131 | 0.227 | 0.880 | 0.225 | 1.134 | 0.219 | 1.116 | 0.217 |
|  | 0.461 | 0.057 | 0.442 | 0.049 | 0.458 | 0.042 | 0.456 | 0.041 |
|  | – | – | 6.137 | 0.474 | 6.103 | 0.460 | 0.482 | 0.280 |
|  | – | – | – | – | – | – | 0.523 | 0.293 |
|  | 10.201 | 0.523 | 10.280 | 0.603 | 10.256 | 0.598 | 10.240 | 0.549 |
|  | 1.156 | 0.478 | 1.161 | 0.463 | 1.155 | 0.440 | 1.129 | 0.479 |
|  | 0.208 | 0.081 | 0.209 | 0.086 | 0.195 | 0.080 | 0.207 | 0.099 |
|  | 0.934 | 0.213 | 0.926 | 0.217 | 1.109 | 0.211 | 1.100 | 0.211 |
|  | 0.445 | 0.054 | 0.453 | 0.046 | 0.458 | 0.042 | 0.453 | 0.045 |
|  | – | – | 5.870 | 0.449 | 6.089 | 0.437 | 0.518 | 0.265 |
|  | – | – | – | – | – | – | 0.488 | 0.259 |

5.1.2. Experiment 2

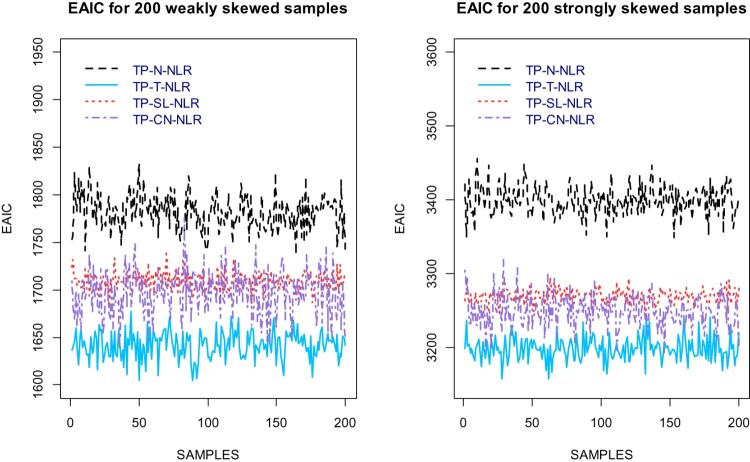

The second simulation study uses the NLR model (13) with two samples of size 200, in which the errors form an i.i.d. skew-t sequence with zero mean and with either weak skewness (heavy-tailed, weakly skewed) or strong skewness (heavy-tailed, strongly skewed). The Bayesian model comparison criterion EAIC for the fitted TP-SMN-NLR models is presented in Figure 1. As expected, the asymmetric heavy-tailed TP-SMN-NLR members (TP-T-NLR, TP-SL-NLR and TP-CN-NLR), especially TP-T-NLR, fit better than the light-tailed TP-N-NLR member.

Figure 1.

EAIC criterion of fitted TP-SMN-NLR models for weakly (Left) and strongly (Right) skewed ST-NLR samples.

5.2. Real datasets

In this section, we examine the performance of the Bayesian fitted TP-SMN-NLR models compared with the Bayesian fitted SMSN-NLR models examined in Cancho et al. [5], using two real datasets. The Bayesian model selection criteria show that, for both datasets, the TP-T-NLR model is the most suitable among the TP-SMN-NLR models and also performs better than its well-known SMSN-NLR counterparts.

5.2.1. One dimensional predictor data

The first dataset is the result of a NIST study involving circular interference transmittance. This dataset, called ‘Eckerle4’, has $n=35$ observations, with response variable $y$ (transmittance) and predictor variable $x$ (wavelength). It is available at https://www.itl.nist.gov/div898/strd/nls/data/LINKS/DATA/Eckerle4.dat and in the ‘NISTnls’ R package, and was considered in [32] (NIST, 197?). In the present methodology, we assume the following NLR model, as given by NIST:

$$y_i=\frac{\beta_1}{\beta_2}\exp\left\{-\frac{1}{2}\left(\frac{x_i-\beta_3}{\beta_2}\right)^2\right\}+\varepsilon_i,\qquad i=1,\dots,35.$$
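As an illustration, the Eckerle4 mean function $\eta(\boldsymbol\beta,x)=(\beta_1/\beta_2)\exp\{-\tfrac12((x-\beta_3)/\beta_2)^2\}$ and its gradient with respect to $\boldsymbol\beta$ (useful, for example, when computing least-squares initial values) can be coded as below; the gradient is derived by hand and checked against central finite differences. The parameter values used in the usage example are the TP-T estimates reported in Table 4, chosen purely for illustration, and the function names are hypothetical.

```python
import math


def eckerle4_mean(beta, x):
    """eta(beta, x) = (b1 / b2) * exp(-0.5 * ((x - b3) / b2) ** 2)."""
    b1, b2, b3 = beta
    return (b1 / b2) * math.exp(-0.5 * ((x - b3) / b2) ** 2)


def eckerle4_grad(beta, x):
    """Analytic gradient of eta with respect to (b1, b2, b3)."""
    b1, b2, b3 = beta
    eta = eckerle4_mean(beta, x)
    r = x - b3
    return [
        eta / b1,                                # d eta / d b1
        eta * (-1.0 / b2 + r ** 2 / b2 ** 3),    # d eta / d b2
        eta * r / b2 ** 2,                       # d eta / d b3
    ]


if __name__ == "__main__":
    beta_tpt = [1.5412, 4.1661, 451.5040]  # TP-T estimates from Table 4
    for x in (440.0, 451.5, 460.0):
        print(x, eckerle4_mean(beta_tpt, x), eckerle4_grad(beta_tpt, x))
```

The mean function is a Gaussian-shaped peak centred at $\beta_3$ with height $\beta_1/\beta_2$ and width $\beta_2$, which is why $\beta_3$ is estimated very precisely (around 451.5) in all fitted models.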

The results of the various Bayesian fitted TP-SMN-NLR models and corresponding counterparts in the SMSN-NLR models are provided in Tables 4 and 5.

Table 4.

Bayesian estimates of the TP-SMN-NLR and SMSN-NLR model parameters for the ‘Eckerle4’ dataset.

| Par. | TP-N | SN | TP-T | ST | TP-SL | SSL | TP-CN | SCN |
|---|---|---|---|---|---|---|---|---|
|  | 1.5535 | 1.5673 | 1.5412 | 1.5419 | 1.5452 | 1.5361 | 1.5548 | 1.5523 |
|  | 4.0761 | 4.0947 | 4.1661 | 4.1076 | 4.1245 | 4.1481 | 4.0729 | 4.0884 |
|  | 451.5638 | 451.5763 | 451.5040 | 451.5020 | 451.5254 | 451.5032 | 451.5090 | 451.5031 |
|  | 1.2e–02 | 1.0e–04 | 54e-04 | 4.2e–06 | 7.6e–03 | 8.6e–06 | 8.2e–04 | 5.7e–07 |
|  | 0.3994 | –2.6411 | 0.4726 | 0.0492 | 0.5302 | –0.6222 | 0.5211 | –0.1486 |
|  | – | – | 1.2354 | 0.9371 | 1.2077 | 0.9032 | (0.6,0.02) | (0.6,0.01) |

Table 5.

Bayesian model selection criteria for the TP-SMN-NLR and SMSN-NLR models fitted to the ‘Eckerle4’ dataset.

| Criteria | TP-N | SN | TP-T | ST | TP-SL | SSL | TP-CN | SCN |
|---|---|---|---|---|---|---|---|---|
| EAIC | –247.21 | –246.07 | **–252.15** | –251.10 | –250.72 | –249.51 | –251.14 | –250.23 |
| EBIC | –239.34 | –238.25 | **–242.82** | –241.12 | –241.41 | –240.19 | –240.19 | –239.33 |
| DIC | –251.42 | –250.11 | **–256.11** | –255.21 | –254.50 | –254.32 | –255.31 | –254.26 |

Note: The best (smallest) values are indicated in bold.

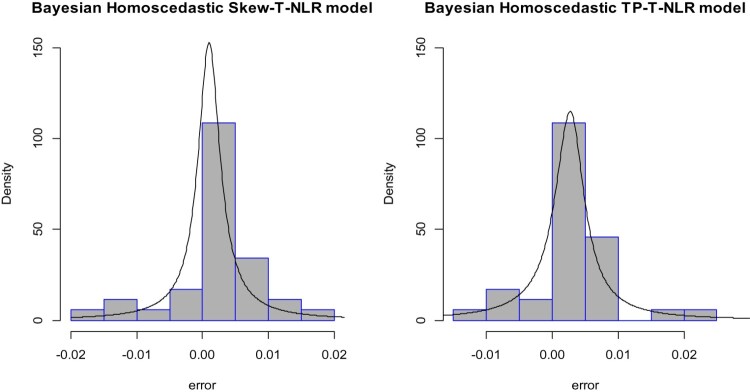

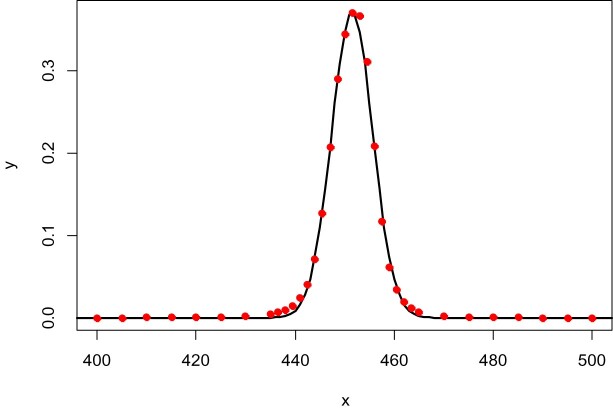

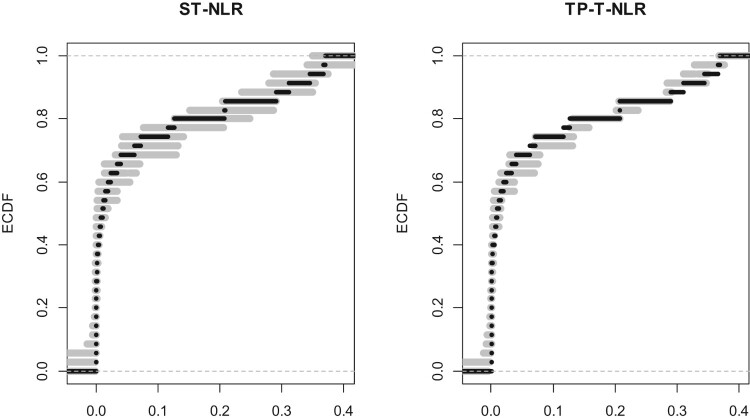

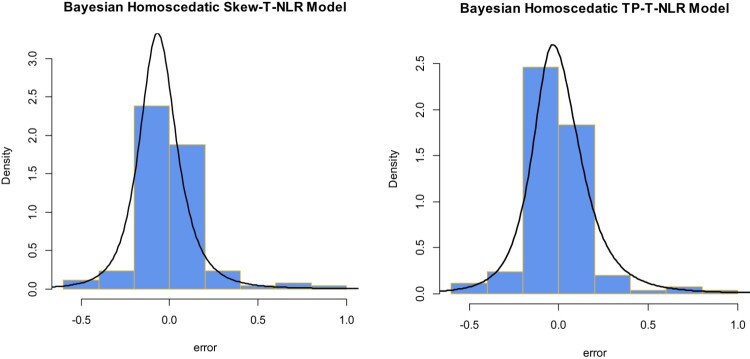

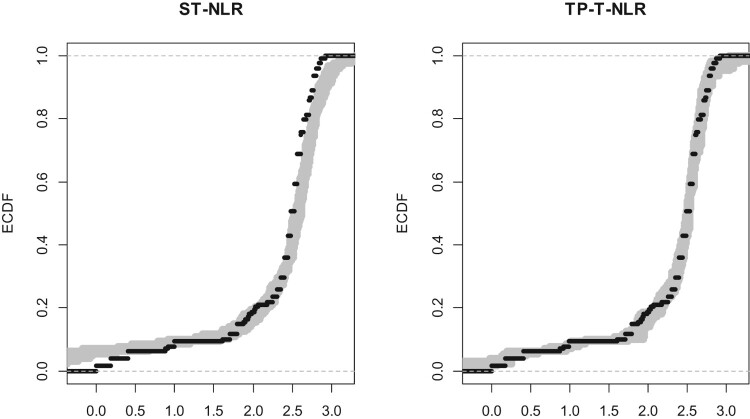

In this example, the TP-T-NLR model provides the best fit, and each two-piece model outperforms its SMSN counterpart on all three criteria. To check the fitted models, we consider Figures 2–4. The histograms of the residuals of the TP-T-NLR and skew-t NLR (ST-NLR) models are provided in Figure 2. Following the methodology of O’Hagan et al. [28], Figure 3 superimposes the ECDF of the original ‘Eckerle4’ response on the ECDFs of 500 data sets simulated from the fitted ST-NLR and TP-T-NLR models. Figures 2 and 3 indicate a marginally better fit of the TP-T-NLR model than of the ST-NLR model. The fitted TP-T-NLR model superimposed on the ‘Eckerle4’ data is provided in Figure 4.
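The ECDF check can be sketched as follows: compute the ECDF of the observed response on a grid, compute the ECDFs of replicated data sets, and see where the observed curve lies relative to the pointwise envelope of the replicates. In the paper the 500 replicates are simulated from the fitted ST-NLR and TP-T-NLR models; in the sketch they are simply supplied by the caller. The summary `share_inside` (the fraction of grid points at which the observed ECDF falls inside the envelope) is a hypothetical addition for illustration, not a criterion used in the paper.

```python
from bisect import bisect_right


def ecdf(sample, grid):
    """Empirical CDF of `sample` evaluated at each point of `grid`."""
    s = sorted(sample)
    n = len(s)
    return [bisect_right(s, t) / n for t in grid]


def ecdf_envelope(simulated_sets, grid):
    """Pointwise minimum and maximum of the ECDFs of replicated data sets."""
    curves = [ecdf(sim, grid) for sim in simulated_sets]
    lower = [min(c[k] for c in curves) for k in range(len(grid))]
    upper = [max(c[k] for c in curves) for k in range(len(grid))]
    return lower, upper


def share_inside(observed, simulated_sets, grid):
    """Fraction of grid points where the observed ECDF lies inside the envelope."""
    obs = ecdf(observed, grid)
    lo, hi = ecdf_envelope(simulated_sets, grid)
    return sum(l <= o <= h for o, l, h in zip(obs, lo, hi)) / len(grid)


if __name__ == "__main__":
    sims = [[1.0, 2.0, 3.0], [1.5, 2.5, 3.5], [0.5, 2.0, 4.0]]
    print(share_inside([1.2, 2.2, 3.2], sims, grid=[1.0, 2.0, 3.0, 4.0]))
```

An observed ECDF that stays within the band of replicated ECDFs, as reported for the TP-T-NLR fits, suggests the fitted model reproduces the marginal distribution of the response reasonably well.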

Figure 2.

Bayesian estimated densities of the ST (left) and TP-T (right) models fitted to the residuals of the corresponding NLR models for the ‘Eckerle4’ dataset.

Figure 3.

The black line represents the ECDF of the original ‘Eckerle4’ response; the gray lines represent the ECDFs of each of the 500 data sets simulated from the fitted ST (left) and TP-T (right) models.

Figure 4.

Bayesian fitted TP-T-NLR model and observed values versus the predictor variable for the ‘Eckerle4’ dataset.

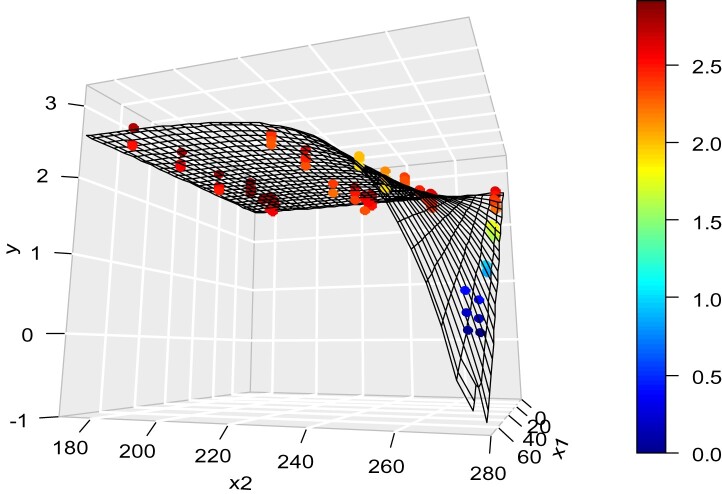

5.2.2. Two dimensional predictor data

As in the previous example, we compare the Bayesian fitted TP-SMN-NLR models with the Bayesian fitted SMSN-NLR models examined in Cancho et al. [5], here for a dataset with two predictors. The Bayesian model selection criteria again show that the TP-T-NLR model is the most suitable of the TP-SMN-NLR models for these data, and that it also performs better than its SMSN-NLR counterparts.

The ‘Nelson’ dataset has $n=128$ observations, with one response variable ($y$: dielectric breakdown strength in kilovolts) and two predictor variables ($x_1$: time in weeks; $x_2$: temperature in degrees Celsius). These data come from a study analysing performance degradation data from accelerated tests, published in Nelson [27] and also considered in Maleki et al. [15]. In the present methodology, we assume the same NLR model as in Nelson [27],

$$\log(y_i)=\beta_1-\beta_2\,x_{i1}\exp(-\beta_3\,x_{i2})+\varepsilon_i,\qquad i=1,\dots,128.$$
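The Nelson model, $\log(y_i)=\beta_1-\beta_2x_{i1}\exp(-\beta_3x_{i2})+\varepsilon_i$, is specified on the log scale, so residuals are formed on that scale as well. A brief sketch is given below; the parameter values in the usage example are the TP-T estimates from Table 6, used only for illustration, and the function names are hypothetical.

```python
import math


def nelson_log_mean(beta, x1, x2):
    """Mean of log(y): b1 - b2 * x1 * exp(-b3 * x2), with x1 time and x2 temperature."""
    b1, b2, b3 = beta
    return b1 - b2 * x1 * math.exp(-b3 * x2)


def log_residuals(beta, y, x1, x2):
    """Residuals log(y_i) - eta(beta, x_i) on the log scale."""
    return [math.log(yi) - nelson_log_mean(beta, a, b) for yi, a, b in zip(y, x1, x2)]


if __name__ == "__main__":
    beta_tpt = [2.63, 2.30e-09, -6.22e-02]  # TP-T estimates from Table 6
    for weeks in (0.0, 8.0, 16.0):
        print(weeks, nelson_log_mean(beta_tpt, weeks, 250.0))
```

With a negative $\beta_3$, the factor $\exp(-\beta_3x_{i2})$ grows with temperature, so the predicted log breakdown strength decreases faster over time at higher temperatures.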

The results of the various Bayesian fitted TP-SMN-NLR models and corresponding counterparts in the SMSN-NLR models are provided in Tables 6 and 7.

Table 6.

Bayesian estimates of the TP-SMN-NLR and SMSN-NLR model parameters for the ‘Nelson’ dataset.

| Par. | TP-N | SN | TP-T | ST | TP-SL | SSL | TP-CN | SCN |
|---|---|---|---|---|---|---|---|---|
|  | 2.53e+00 | 2.61e+00 | 2.63e+00 | 2.60e+00 | 2.59e+00 | 2.62e+00 | 2.61e+00 | 2.62e+00 |
|  | 9.72e–09 | 9.06e–09 | 2.30e–09 | 2.29e–09 | 4.52e–09 | 4.51e–09 | 4.35e–09 | 2.55e–08 |
|  | –5.53e–02 | –5.61e–02 | –6.22e–02 | –6.23e–02 | –5.91e–02 | –5.87e–02 | –5.93e–02 | –5.21e–02 |
|  | 0.3511 | 0.2401 | 0.2103 | 0.1204 | 0.2402 | 0.2110 | 0.1702 | 0.1320 |
|  | 0.4201 | –1.3101 | 0.5870 | 0.2394 | 0.5210 | 0.2532 | 0.6571 | –0.3521 |
|  | – | – | 2.8504 | 2.8222 | 2.2331 | 2.1109 | (0.3,0.1) | (0.3,0.1) |

Table 7.

Bayesian model selection criteria for the TP-SMN-NLR and SMSN-NLR models fitted to the ‘Nelson’ dataset.

| Criteria | TP-N | SN | TP-T | ST | TP-SL | SSL | TP-CN | SCN |
|---|---|---|---|---|---|---|---|---|
| EAIC | –78.143 | –78.432 | **–95.211** | –93.209 | –86.132 | –82.567 | –78.980 | –85.590 |
| EBIC | –63.814 | –64.345 | **–78.147** | –76.432 | –69.244 | –65.413 | –59.734 | –65.576 |
| DIC | –80.621 | –80.879 | **–97.720** | –95.689 | –88.593 | –85.010 | –81.402 | –88.023 |

Note: The best (smallest) values are indicated in bold.

In this example, the TP-T-NLR model again provides the best fit. The TP-T and TP-SL models outperform their SMSN counterparts (ST and SSL), whereas the SN and SCN models outperform the TP-N and TP-CN models. As in the previous example, to check the best-fitting models, the histograms of the residuals of the TP-T-NLR and ST-NLR models are provided in Figure 5, and Figure 6 superimposes the ECDF of the original ‘Nelson’ response on the ECDFs of 500 data sets simulated from the fitted ST-NLR and TP-T-NLR models. Figures 5 and 6 indicate a marginally better fit of the TP-T-NLR model than of the ST-NLR model. The fitted surface of the TP-T-NLR model superimposed on the ‘Nelson’ data is provided in Figure 7.

Figure 5.

Bayesian estimated densities of the ST (left) and TP-T (right) models fitted to the residuals of the corresponding NLR models for the ‘Nelson’ dataset.

Figure 6.

The black line represents the ECDF of the original response ‘Nelson' dataset; the gray lines represent the ECDFs of each of the 500 data sets simulated from the optimal model under the ST (left) and the TP-T (right) models.

Figure 7.

Bayesian fitted surface of the TP-T-NLR model and observed values versus the two predictor variables for the ‘Nelson’ dataset.

6. Conclusion

In this paper, we have extended previous work on NLR models by proposing a Bayesian approach to fitting these models with TP-SMN error distributions. This family of distributions provides considerable flexibility in modelling asymmetric and/or outlying data in the context of NLR models and encompasses a number of well-known distributions. We proposed a stochastic and hierarchical representation of TP-SMN random variables that makes Bayesian estimation of the non-linear regression parameters computationally convenient. Using simulated and real datasets, we showed that the Bayesian estimates of the TP-SMN-NLR models perform well compared with the well-known SMSN-NLR models. Future research could take a number of directions, but we expect some of it to build on the advantages of the Bayesian approach in this context, including the handling of high-dimensional and/or noisy data, which can be particular problems in regression settings in practice.

Acknowledgements

We would like to express our sincere appreciation to the associate editor and reviewer(s) for their valuable and constructive suggestions during the planning and development of this research work.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- 1. Arellano-Valle R.B., Gómez H., and Quintana F.A., Statistical inference for a general class of asymmetric distributions. J. Stat. Plan. Inference 128 (2005), pp. 427–443.

- 2. Andrews D.F. and Mallows C.L., Scale mixtures of normal distributions. J. R. Stat. Soc. Ser. B 36 (1974), pp. 99–102.

- 3. Branco M.D. and Dey D.K., A general class of multivariate skew-elliptical distributions. J. Multivar. Anal. 79 (2001), pp. 99–113.

- 4. Cancho V.G., Lachos V.H., and Ortega E.M.M., A nonlinear regression model with skew-normal errors. Stat. Pap. 51 (2009), pp. 547–558.

- 5. Cancho V.G., Dey D.K., Lachos V.H., and Andrade M.G., Bayesian nonlinear regression models with scale mixtures of skew-normal distributions: estimation and case influence diagnostics. Comput. Stat. Data Anal. 55 (2011), pp. 588–602.

- 6. Chib S. and Greenberg E., Understanding the Metropolis–Hastings algorithm. Am. Stat. 49 (1995), pp. 327–335.

- 7. Contreras-Reyes J.E., Maleki M., and Cortés D.D., Skew-reflected-Gompertz information quantifiers with application to sea surface temperature records. Mathematics 7 (2019), p. 403. doi: 10.3390/math7050403.

- 8. Cordeiro G.M., Cysneiros A.H.M.A., and Cysneiros F.J.A., Corrected maximum likelihood estimators in heteroscedastic symmetric nonlinear models. J. Stat. Comput. Simul. (2009). doi: 10.1080/00949650802706420.

- 9. Cysneiros F.J.A. and Vanegas L.H., Residuals and their statistical properties in symmetrical nonlinear models. Stat. Probab. Lett. 78 (2008), pp. 3269–3273.

- 10. Ghasami S., Maleki M., and Khodadadi Z., Leptokurtic and platykurtic class of robust symmetrical and asymmetrical time series models. J. Comput. Appl. Math. (2020), 112806. doi: 10.1016/j.cam.2020.112806.

- 11. Hajrajabi A. and Maleki M., Nonlinear semiparametric autoregressive model with finite mixtures of scale mixtures of skew normal innovations. J. Appl. Stat. 46 (2019), pp. 2010–2029.

- 12. Hoseinzadeh A., Maleki M., and Khodadadi Z., Heteroscedastic nonlinear regression models using asymmetric and heavy tailed two-piece distributions. AStA Adv. Stat. Anal. (2020). doi: 10.1007/s10182-020-00384-3.

- 13.Hoseinzadeh A., Maleki M., Khodadadi Z., and Contreras-Reyes J.E., The skew-Reflected-Gompertz distribution for analyzing symmetric and asymmetric data. J. Comput. Appl. Math. 349 (2019), pp. 132–141. [Google Scholar]

- 14.Labra F.V., Garay A.M., Lachos V.H., and Ortega E.M.M., Estimation and diagnostics for heteroscedastic nonlinear regression models based on scale mixtures of skew-normal distributions. J. Stat. Plan. Inference. 142 (2012), pp. 2149–2165. [Google Scholar]

- 15.Maleki M., Barkhordar Z., Khodadadi Z., and Wraith D., A robust class of homoscedastic nonlinear regression models. J. Stat. Comput. Simul. 89 (2019c), pp. 2765–2781. [Google Scholar]

- 16.Maleki M., Contreras-Reyes J.E., and Mahmoudi M.R., Robust mixture modeling based on two-piece scale mixtures of normal family. Axioms 8 (2019d), pp. 38). doi: 10.3390/axioms8020038. [DOI] [Google Scholar]

- 17.Maleki M. and Nematollahi A.R., Autoregressive models with mixture of scale mixtures of Gaussian innovations. Iran. J. Sci. Technol., Trans. A: Sci. 41 (2017a), pp. 1099–1107. [Google Scholar]

- 18.Maleki M. and Nematollahi A.R., Bayesian approach to epsilon-skew-normal family. Commun. Stat. Theory Methods 46 (2017b), pp. 7546–7561. [Google Scholar]

- 19.Maleki M., Wraith D., Mahmoudi M.R., and Contreras-Reyes J.E., Asymmetric heavy-tailed vector auto-regressive processes with application to financial data. J. Stat. Comput. Simul. 90 (2019a), pp. 324–340. [Google Scholar]

- 20.Maleki M. and Mahmoudi M.R., Two-Piece Location-scale distributions based on scale mixtures of normal family. Commun. Stat. Theory Methods 46 (2017), pp. 12356–12369. [Google Scholar]

- 21.Maleki M., Mahmoudi M.R., Heydari M.H., and Pho K.H., Modeling and forecasting the spread and death rate of coronavirus (COVID-19) in the world using time series models. Chaos, Solitons Fractals 110151 (2020a), doi: 10.1016/j.chaos.2020.110151. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Maleki M., Mahmoudi M.R., Wraith D., and Pho K.H., Time series modelling to forecast the confirmed and recovered cases of COVID-19. Travel Med. Infect. Dis. 101742 (2020b), doi: 10.1016/j.tmaid.2020.101742. [DOI] [PubMed] [Google Scholar]

- 23.Maleki M., Wraith D., and Arellano-Valle R.B., A flexible class of parametric distributions for Bayesian linear mixed models. TEST 28 (2019b), pp. 543–564. [Google Scholar]

- 24.Montenegro L.C., Lachos V., and Bolfarine H., Local influence analysis of skew-normal linear mixed models. Commun. Stat. Theory Methods 38 (2009), pp. 484–496. [Google Scholar]

- 25.Moravveji B., Khodadadi Z., and Maleki M., A Bayesian analysis of Two-piece distributions based on the scale mixtures of normal family. Iran. J. Sci. Technol., Trans. A: Sci. 43 (2019), pp. 991–1001. [Google Scholar]

- 26.Mudholkar G.S. and Hutson A.D., The epsilon-skew-normal distribution for analyzing near-normal data. J. Stat. Plan. Inference. 83 (2000), pp. 291–309. [Google Scholar]

- 27.Nelson W., Analysis of performance-degradation data. IEEE Trans. Reliab. 2 (1981), pp. 149–155. [Google Scholar]

- 28.O’Hagan A., Murphy T.B., Gormley I.C., McNicholas P.D., and Karlis D., Clustering with the multivariate normal inverse Gaussian distribution. Comput. Stat. Data Anal. 93 (2016), pp. 18–30. [Google Scholar]

- 29.Pan J.J., Mahmoudi M.R., Baleanu D., and Maleki M., On Comparing and Classifying several independent linear and Non-linear regression models with symmetric errors. Symmetry. (Basel) 11 (2019), pp. 820). doi: 10.3390/sym11060820. [DOI] [Google Scholar]

- 30.Rosa G.J.M., Padovani C.R., and Gianola D., Robust linear mixed models with normal/independent distributions and Bayesian MCMC implementation. Biom. J. 45 (2003), pp. 573–590. [Google Scholar]

- 31.R Core Team . R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. Available at https://www.R-project.org/.

- 32.Thurber R., Semiconductor Electron Mobility Modeling, NIST, unpublished, 1979.

- 33.Vanegas L.H. and Cysneiros F.J.A., Assesment of diagnostic procedures in symmetrical nonlinear regression models. Comput. Stat. Data Anal. 54 (2010), pp. 1002–1016. [Google Scholar]

- 34.Xie F.C., Lin J.G., and Wei B.C., Diagnostics for skew-normal nonlinear regression models with ar(1) errors. Comput. Stat. Data. Anal. 53 (2009a), pp. 4403–4416. [Google Scholar]

- 35.Xie F.C., Wei B.C., and Lin J.G., Homogeneity diagnostics for skew-normal nonlinear regression models. Stat. Probab. Lett. 79 (2009b), pp. 821–827. [Google Scholar]

- 36.Zarrin P., Maleki M., Khodadadi Z., and Arellano-Valle R.B., Time series process based on the unrestricted skew normal process. J. Stat. Comput. Simul. 89 (2018), pp. 38–51. [Google Scholar]