ABSTRACT

In many real-world applications of monitoring multivariate spatio-temporal data that are non-stationary over time, one is often interested in detecting hot-spots with spatial sparsity and temporal consistency, instead of detecting system-wise changes as in the traditional statistical process control (SPC) literature. In this paper, we propose an efficient method to detect hot-spots through tensor decomposition, and our method has three steps. First, we fit the observed data to a Smooth Sparse Decomposition Tensor (SSD-Tensor) model that serves as a dimension reduction and de-noising technique: it is an additive model decomposing the original data into a smooth but non-stationary global mean, sparse local anomalies, and random noise. Next, we estimate the model parameters in a penalized framework that includes the Least Absolute Shrinkage and Selection Operator (LASSO) penalty and the fused LASSO penalty. An efficient recursive optimization algorithm is developed based on the Fast Iterative Shrinkage Thresholding Algorithm (FISTA). Finally, we apply a Cumulative Sum (CUSUM) control chart to monitor the model residuals after removing the global means, which helps to detect when and where hot-spots occur. To demonstrate the usefulness of our proposed SSD-Tensor method, we compare it with several other methods, including scan statistics and LASSO-based, PCA-based, and $T^2$-based control charts, in extensive numerical simulation studies and on a real crime rate dataset.

Keywords: Tensor decomposition, spatio-temporal, hot-spot detection, quick detection, CUSUM

1. Introduction

In many real-world applications such as biosurveillance, epidemiology, and sociology, multiple data sources are often measured at many spatial locations repeatedly over time, say, daily, monthly, or annually. Such data are commonly referred to as multivariate spatio-temporal data. When such data are non-stationary over time, rather than detecting global or system-wise changes as in the traditional statistical process control (SPC) or sequential change-point detection literature, we are more interested in detecting hot-spots with spatial sparsity and temporal consistency. Here, we define hot-spots as anomalies that can occur in the temporal and spatial domains of multivariate spatio-temporal data.

The primary objective of this paper is to develop an efficient method for hot-spot detection and localization in multivariate spatio-temporal data. From the viewpoint of monitoring non-stationary multivariate spatio-temporal data, there are two kinds of changes: one is a change in the global-level trend (e.g. first-order changes), and the other is a local-level hot-spot (e.g. second-order changes). Here we focus on detecting the latter and assume that local-level hot-spots have the following two properties: (1) spatial sparsity, i.e. the local changes are sparse in the spatial domain; and (2) temporal consistency, i.e. the local changes last for a reasonable period of time.

Little research has been done on hot-spot detection for multivariate spatio-temporal data, although there are two major related research areas: spatio-temporal cluster detection and change-point detection. In the first area, the best-known representative is the scan statistics-based method, which was first developed in the 1960s in [17] and later extended by [11] to detect anomalous clusters in spatio-temporal data. The main idea of scan statistics is to detect abnormal clusters by maximizing a log-likelihood ratio (more mathematical details are provided in Appendix 3). It is worth noting that the scan statistics-based method is parametric, in that it assumes a parametric family for the data distribution. For instance, [26] assumes the negative binomial distribution, and [11,12,18,19] investigate the Poisson distribution. A limitation of scan statistics is that they assume the background is independent and identically distributed (i.i.d.) or follows a rather simple probability distribution, which might not be suitable for non-stationary spatio-temporal data.

The second category of existing research is change-point detection for spatio-temporal data. Below we review two approaches that are related to our context: LASSO-based methods and dimension-reduction-based methods. LASSO has been demonstrated to be an effective method for variable selection under sparsity in high-dimensional data since its development in [27], and thus it is natural to apply it to detect sparse changes in high-dimensional data; see [24,32,34,36–38]. Although the sparse changes targeted by LASSO resemble hot-spots, our extensive simulation studies will demonstrate that the LASSO-based control chart is unable to separate local hot-spots from the non-stationary global trend mean in spatio-temporal data.

For dimension-reduction-based change-point detection, principal component analysis (PCA) or other dimension-reduction methods are often used to extract features from high-dimensional data. More specifically, [14] reduces the dimensionality of spatio-temporal data by constructing $T^2$ and Q charts separately. [22] combines multivariate functional PCA with change-point models to detect hot-spots. For other dimension reduction methods, see [1,5,9,16,21,33]. The drawbacks of PCA and other dimension-reduction-based methods are that they are restricted to the change-point detection problem and fail to account for the spatial sparsity and temporal consistency of hot-spots.

Our proposed method essentially applies the LASSO-based and dimension-reduction-based methods for SPC or change-point detection to a model based on tensors, i.e. multi-dimensional arrays. It is worth noting that multivariate spatio-temporal data can often be represented as a 3-dimensional tensor of the form ‘Spatial dimension × Temporal dimension × Attributes dimension’. Therefore, we propose to use a tensor to represent the original data and consider an additive model that decomposes this tensor into three components: (1) a smooth but non-stationary global trend mean, (2) sparse local hot-spots, and (3) residuals. We term our proposed decomposition model the Smooth Sparse Decomposition-Tensor (SSD-Tensor). When fitting the raw data to the SSD-Tensor model, we add two penalty functions: the first is a LASSO-type penalty to encourage the spatial sparsity of hot-spots, and the second is the fused LASSO penalty (see [28]) to encourage the temporal consistency of hot-spots. This allows us not only to detect when a hot-spot happens over the temporal domain (i.e. the hot-spot detection problem) but also to localize where and for which types/attributes the hot-spots occur (i.e. the hot-spot localization problem).

It is useful to highlight the novelty of our proposed method compared with existing research on spatio-temporal data. First, our proposed SSD-Tensor method can detect hot-spots when the global trend of the spatio-temporal data is dynamic (i.e. non-stationary or non-i.i.d.). That is, our method is robust to the global trend, in the sense that it can detect hot-spots with positive or negative mean shifts on top of the global trend of the raw data, whether that trend is increasing or decreasing. In comparison, existing SPC or change-point detection methods often assume that the background is i.i.d. and focus on detecting anomalies against a static, i.i.d. background. Second, we should clarify that the primary goal of our proposed method is not prediction or model fitting; instead, we focus on hot-spot detection and localization in dynamic spatio-temporal data. Of course, a good fit or estimate of the global trend is useful for detecting hot-spots accurately. Finally, while our paper focuses only on a 3-dimensional tensor arising from our motivating application in crime rates, our proposed hot-spot method can easily be extended to any $d$-dimensional tensor, as we can simply add the corresponding dimensions and bases in the tensor analysis. The capability of extending to high-dimensional tensor data is one of the main advantages of our proposed SSD-Tensor method.

The remainder of this paper is organized as follows. Section 2 introduces and visualizes the crime rate dataset, which will be used as our motivating example. Section 3 presents our proposed SSD-Tensor model and discusses how to estimate the model parameters from data. Section 4 describes how to use our proposed SSD-Tensor model to detect and localize hot-spots. Section 5 compares our proposed method with several benchmark methods and demonstrates its usefulness through extensive simulations. Section 6 presents the application of our proposed method to a real crime rate dataset.

2. Motivating example and background

This section gives a detailed description of the crime rate dataset, which is available from the US Department of Justice Federal Bureau of Investigation (see https://www.ucrdatatool.gov/Search/Crime/State/StateCrime.cfm). The crime rates are recorded annually from 1965 to 2014 for 51 states in the United States. In each year and for each state, three types of crime rates are reported: (1) Murder and non-negligent manslaughter; (2) Legacy rape; and (3) Revised rape. Table 1 shows the head of the dataset; each value in the table is the crime rate per 100,000 population in each state.

Table 1.

Head of the crime rate dataset from 1965 to 2014 for 51 states in the United States annually. The dataset is publicly available from https://www.ucrdatatool.gov/Search/Crime/State/StateCrime.cfm.

| Year | State | Murder and non-negligent manslaughter | Legacy rape | Revised rape |
|---|---|---|---|---|
| 1965 | Alabama | 11.4 | 10.6 | 28.7 |
| 1965 | Alaska | 6.3 | 17.8 | 39.9 |
| 1965 | Arkansas | 5.9 | 10.4 | 23.7 |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| 1965 | Wyoming | 2.9 | 11.5 | 17.9 |
| 1966 | Alabama | 10.9 | 9.7 | 32.0 |
| 1966 | Alaska | 12.9 | 19.5 | 36.0 |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |

Notes: The three recorded types of crime rates are murder and non-negligent manslaughter, legacy rape, and revised rape. Each value in the table is the crime rate per 100,000 population of each state, and the states are listed in alphabetical order.

It is worth noting that the crime rate dataset has three dimensions: (1) the temporal dimension (i.e. years), (2) the spatial dimension (i.e. states) and (3) the attribute/category dimension (i.e. three different types of crime rates). For a visual representation, we plot several figures that show the characteristics of each dimension.

To begin with, we illustrate the temporal dimension (i.e. years) by plotting the time series of the sum of all states' crime rates on a logarithmic scale in Figure 1(a). The x-axis is the year, ranging from 1965 to 2014, and the y-axis is the sum of all states' crime rates on a logarithmic scale. We acknowledge that this sum differs from the actual annual crime rate of the United States, which would need to account for the different population sizes of each state in different years. Here, we refer to it as the annual crime rate of the United States for simplicity, since our purpose is only to demonstrate the overall temporal trends. Figure 1(a) suggests that the crime rates increase in the first 10 years (1965–1975), then remain stationary during 1975–1995, and finally decrease during 1995–2014. Furthermore, it is interesting to point out the two peaks around 1980 and 1992, since we are interested in finding out whether they are caused by global trends or local hot-spots.

Figure 1.

(a) Time series of annual crime rates in the United States from 1965 to 2014; (b) bar plot of the three cumulative rates from 1965 to 2014. In panel (a), the x-axis is the year, ranging from 1965 to 2014, and the y-axis is the annual crime rate of the United States on a logarithmic scale. Because the crime rate is the number of crime cases per 100,000 population and the annual rate sums over states, it is reasonable for the annual crime rate to exceed 100 in some years. In panel (b), different bars represent different types of crime rates, and the height of each bar represents the cumulative crime rate from 1965 to 2014 in the United States on a logarithmic scale.

Next, we show the characteristics of the crime rate dataset along the attribute/category dimension (i.e. the three types of crime rates) in Figure 1(b), where different bars represent different types of crime rates, and the height of each bar represents the cumulative crime rate from 1965 to 2014 in the United States. It can be seen that the three crime rates occur with similar overall frequencies. This can make it challenging to detect hot-spots if we analyze the three-dimensional data as a whole.
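The two summaries plotted in Figure 1 amount to sums over different tensor axes. The following is a minimal sketch, assuming the data are stored as an array of shape (states, crime types, years) as described later in this section, and assuming that the Figure 1(a) series sums over both states and crime types; the function name `figure1_summaries` is hypothetical.

```python
import numpy as np

def figure1_summaries(Y):
    """Y: array of shape (n_states, n_types, n_years) holding rates per 100,000.

    Assumption: the Figure 1(a) series sums over states AND crime types.
    Returns the log of the yearly totals and the log of the per-type totals.
    """
    yearly_total = Y.sum(axis=(0, 1))   # one value per year (Figure 1(a))
    type_total = Y.sum(axis=(0, 2))     # one cumulative value per crime type (Figure 1(b))
    return np.log(yearly_total), np.log(type_total)
```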

Finally, we illustrate the crime rate data in the spatial dimension (i.e. states) in Figure 2, where each map shows the spatial distribution of the crime rates in one of six different years. The six selected years start from 1965 at ten-year intervals; the only exception is the sixth map, which uses the 2014 data, as the 2015 data were not yet available as of August 2020. A state with a very dark color in Figure 2 has very high crime rates. The spatial plots in Figure 2 show that the spatial patterns of crime rates are generally very smooth.

Figure 2.

Each map shows the spatial distribution of the crime rates in one of six different years: (a) 1965, (b) 1975, (c) 1985, (d) 1995, (e) 2005, (f) 2014. A darker color represents a higher crime rate. The spatial patterns of crime rates are generally very smooth.

From Figures 1 and 2, there seems to be a brief increasing trend during 1984–1995, but it is difficult to conclude whether this is due to the global trend or to local hot-spots without a refined analysis. Note that a global trend might be caused by US federal government policies or by worldwide economic or political situations that are beyond the control of any individual state or government branch. In contrast, issues arising from local hot-spots might be addressed by borrowing other states' successful strategies or policies.

Below we provide the technical background on tensors through the crime rate dataset. Note that we can store our dataset as a tensor of order three, denoted by $\mathcal{Y}\in\mathbb{R}^{n_1\times n_2\times T}$, where an element $y_{i,j,t}$ represents the $j$th crime rate of state $i$ in year $t$, with $i = 1,\ldots,n_1$ for the $n_1 = 51$ states, $j = 1, 2, 3$ for the three types of crime rate, and $t = 1,\ldots,T$ for the $T = 50$ years from 1965 to 2014.
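Converting the long-format table (one row per year and state, as in Table 1) into the order-three tensor can be sketched as below. The function `records_to_tensor` and its argument layout are hypothetical; states and years are mapped to 0-based indices $i$ and $t$ in the order given, and any cell without a record is left as NaN.

```python
import numpy as np

def records_to_tensor(records, states, years, n_types=3):
    """Build Y[i, j, t] = rate of type j in state i and year t.

    records: iterable of (year, state, rate_1, ..., rate_n_types) tuples.
    """
    state_idx = {s: i for i, s in enumerate(states)}
    year_idx = {y: t for t, y in enumerate(years)}
    Y = np.full((len(states), n_types, len(years)), np.nan)  # NaN marks missing cells
    for year, state, *rates in records:
        Y[state_idx[state], :, year_idx[year]] = rates
    return Y

# Usage with the first rows of Table 1
rows = [(1965, "Alabama", 11.4, 10.6, 28.7), (1966, "Alabama", 10.9, 9.7, 32.0),
        (1965, "Alaska", 6.3, 17.8, 39.9), (1966, "Alaska", 12.9, 19.5, 36.0)]
Y = records_to_tensor(rows, states=["Alabama", "Alaska"], years=[1965, 1966])
print(Y.shape)  # (2, 3, 2)
```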

We are now ready to introduce some basic tensor notation and algebra that are useful in this paper. Throughout the paper, scalars are denoted by lowercase letters (e.g. $\theta$), vectors by lowercase boldface letters (e.g. $\boldsymbol{\theta}$), matrices by uppercase boldface letters (e.g. $\boldsymbol{\Theta}$), and tensors by curlicue letters (e.g. $\vartheta$). For example, a tensor of order $N$ is represented by $\vartheta\in\mathbb{R}^{I_1\times I_2\times\cdots\times I_N}$, where $I_n$ represents the mode-$n$ dimension of $\vartheta$ for $n = 1,\ldots,N$.

Next, we introduce the notion of a slice, which is a two-dimensional section of a tensor obtained by fixing all but two indices. Taking the tensor $\vartheta\in\mathbb{R}^{I_1\times I_2\times I_3}$ as an example, Figure 3 visualizes its horizontal, lateral, and frontal slices, which are denoted by $\vartheta_{i::}$, $\vartheta_{:j:}$, and $\vartheta_{::k}$, respectively.

Figure 3.

Slices of a three-dimensional tensor. (a) The original tensor $\vartheta$; (b) horizontal slices $\vartheta_{i::}$; (c) lateral slices $\vartheta_{:j:}$; (d) frontal slices $\vartheta_{::k}$.
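In array terms, each kind of slice fixes one index and keeps the other two. The toy tensor below is purely illustrative; NumPy indices are 0-based.

```python
import numpy as np

X = np.arange(2 * 3 * 4).reshape(2, 3, 4)   # toy tensor with I1=2, I2=3, I3=4

horizontal = [X[i, :, :] for i in range(X.shape[0])]  # fix i: each slice is I2 x I3
lateral = [X[:, j, :] for j in range(X.shape[1])]     # fix j: each slice is I1 x I3
frontal = [X[:, :, k] for k in range(X.shape[2])]     # fix k: each slice is I1 x I2

print(horizontal[0].shape, lateral[0].shape, frontal[0].shape)  # (3, 4) (2, 4) (2, 3)
```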

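Moreover, we introduce the mode-$n$ product between a tensor and a matrix. For a given tensor of order $N$, i.e. $\vartheta\in\mathbb{R}^{I_1\times\cdots\times I_N}$, and a given matrix $\mathbf{U}\in\mathbb{R}^{J\times I_n}$, the mode-$n$ product between $\vartheta$ and $\mathbf{U}$, denoted by $\vartheta\times_n\mathbf{U}$, is a new tensor of dimension $I_1\times\cdots\times I_{n-1}\times J\times I_{n+1}\times\cdots\times I_N$, whose $(i_1,\ldots,i_{n-1},j,i_{n+1},\ldots,i_N)$th entry can be computed as

$$(\vartheta\times_n\mathbf{U})_{i_1\cdots i_{n-1}\,j\,i_{n+1}\cdots i_N} = \sum_{i_n=1}^{I_n}\vartheta_{i_1 i_2\cdots i_N}\,u_{j i_n}.$$

Here we use the notation $u_{j i_n}$ to refer to the $(j,i_n)$th entry of matrix $\mathbf{U}$ and $\vartheta_{i_1 i_2\cdots i_N}$ to refer to the $(i_1,i_2,\ldots,i_N)$th entry of tensor $\vartheta$.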
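The sum above contracts mode $n$ of the tensor with the second index of $\mathbf{U}$. A minimal NumPy sketch follows; the helper name `mode_n_product` is hypothetical, and the mode index is 0-based in code whereas the text counts modes from 1.

```python
import numpy as np

def mode_n_product(T, U, n):
    """Compute T x_n U for an array T and a matrix U of shape (J, T.shape[n]).

    n is 0-based here, whereas the text counts modes from 1.
    """
    Tn = np.moveaxis(T, n, 0)                 # bring mode n to the front: (I_n, ...)
    out = np.tensordot(U, Tn, axes=(1, 0))    # sum over i_n: (J, ...)
    return np.moveaxis(out, 0, n)             # put the new mode back in position n

rng = np.random.default_rng(0)
T = rng.standard_normal((2, 3, 4))
U = rng.standard_normal((5, 3))
print(mode_n_product(T, U, 1).shape)  # (2, 5, 4)
```

The `einsum` expression `np.einsum("ijk,lj->ilk", T, U)` computes the same product for mode 2 (0-based index 1) and is a convenient cross-check.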

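Finally, we discuss the Tucker decomposition, which is a useful technique in tensor algebra. Its main idea is to decompose a tensor into a core tensor multiplied by a matrix along each dimension:

$$\vartheta = \mathcal{S}\times_1\mathbf{U}^{(1)}\times_2\mathbf{U}^{(2)}\times_3\cdots\times_N\mathbf{U}^{(N)},$$

where $\mathcal{S}\in\mathbb{R}^{P_1\times\cdots\times P_N}$ is the core tensor and $\mathbf{U}^{(n)}\in\mathbb{R}^{I_n\times P_n}$ is an orthogonal matrix for $n = 1,\ldots,N$. The above equation can be equivalently represented by a Kronecker product, i.e.

$$\mathrm{vec}(\vartheta) = \big(\mathbf{U}^{(N)}\otimes\mathbf{U}^{(N-1)}\otimes\cdots\otimes\mathbf{U}^{(1)}\big)\,\mathrm{vec}(\mathcal{S}),$$

where $\mathrm{vec}(\cdot)$ is the vectorization operator, which stacks the entries with the first index varying fastest. Here the Kronecker product ⊗ is defined as follows: suppose $\mathbf{A}\in\mathbb{R}^{m\times n}$ and $\mathbf{B}\in\mathbb{R}^{p\times q}$ are matrices; the Kronecker product of these matrices is the $mp\times nq$ block matrix defined by

$$\mathbf{A}\otimes\mathbf{B} = \begin{pmatrix} a_{11}\mathbf{B} & \cdots & a_{1n}\mathbf{B}\\ \vdots & \ddots & \vdots\\ a_{m1}\mathbf{B} & \cdots & a_{mn}\mathbf{B}\end{pmatrix}.$$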

The Kronecker product has been shown to have excellent computational efficiency for tensor data, see [10].
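The equivalence between the mode-product form and the Kronecker form of the Tucker decomposition can be checked numerically. This sketch uses random (non-orthogonal) factor matrices, since the identity holds for any matrices, and assumes column-major vectorization (`order="F"`, first index varying fastest), which is the ordering under which the factors appear as $\mathbf{U}^{(N)}\otimes\cdots\otimes\mathbf{U}^{(1)}$. The helper names are hypothetical.

```python
import numpy as np

def mode_n_product(T, U, n):
    # T x_n U with a 0-based mode index n
    return np.moveaxis(np.tensordot(U, np.moveaxis(T, n, 0), axes=(1, 0)), 0, n)

def tucker(core, mats):
    """core x_1 mats[0] x_2 mats[1] x_3 ... (modes counted from 1 in the text)."""
    out = core
    for n, U in enumerate(mats):
        out = mode_n_product(out, U, n)
    return out

def vec(T):
    return T.reshape(-1, order="F")   # column-major: first index varies fastest

rng = np.random.default_rng(1)
S = rng.standard_normal((2, 3, 2))
U1, U2, U3 = (rng.standard_normal(s) for s in [(4, 2), (5, 3), (3, 2)])
lhs = vec(tucker(S, [U1, U2, U3]))
rhs = np.kron(U3, np.kron(U2, U1)) @ vec(S)
print(np.allclose(lhs, rhs))  # True
```

Forming the full Kronecker matrix is only for checking; the mode-product form never builds it, which is where the computational savings come from.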

3. Our proposed SSD-tensor model

This section presents our proposed SSD-Tensor model and its parameter estimation; the discussion of hot-spot detection and localization is postponed to the next section. The advantage of using tensors is twofold: they characterize the complicated ‘within-dimension’ and ‘between-dimension’ correlations, and they simplify the computations. The latter is similar to the matrix context, where computing the inverse of a large matrix is difficult in general, but computing the inverse of a large block diagonal matrix is straightforward through the inverses of its diagonal sub-matrices.

To better present our main ideas, we split this section into three subsections. Section 3.1 presents the mathematical formulation of our proposed SSD-Tensor model, and Section 3.2 develops the optimization algorithm for estimating the model parameters when fitting the observed data. Since the choice of basis in the tensor decomposition plays an important role in representing spatial or temporal patterns, we devote Section 3.3 to discussing the choice of basis in our context.

3.1. Our proposed model

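In this section, we present the mathematical formulation of our proposed SSD-Tensor model. Here we focus on our motivating data, the three-dimensional tensor $\mathcal{Y}\in\mathbb{R}^{n_1\times n_2\times T}$, where the $(i,j,t)$th entry $y_{i,j,t}$ is the $j$th crime rate in the $i$th state in year $t$, with $i = 1,\ldots,n_1$, $j = 1,\ldots,n_2$, and $t = 1,\ldots,T$.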

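At a high level, our proposed SSD-Tensor model decomposes the raw data $y_{i,j,t}$ into three components: the smooth global trend mean $\mu_{i,j,t}$, local hot-spots $h_{i,j,t}$, and residuals $e_{i,j,t}$. Mathematically, it is an additive model of the form

$$y_{i,j,t} = \mu_{i,j,t} + h_{i,j,t} + e_{i,j,t},$$

where the residuals $e_{i,j,t}$ are i.i.d. with $e_{i,j,t}\sim N(0,\sigma^2)$. Under the tensor notation, we denote by $\mathcal{M}$, $\mathcal{H}$, and $\mathcal{E}$ the corresponding tensors of dimension $n_1\times n_2\times T$. Then our proposed model can be rewritten as $\mathcal{Y} = \mathcal{M} + \mathcal{H} + \mathcal{E}$.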

It remains to discuss the two main components of our model in more detail. For the global trend mean $\mathcal{M}$, our main idea is to adopt a basis decomposition framework that allows us to address the complicated within-dimension and between-dimension correlations. To be more specific, we propose to decompose the global trend mean tensor as

$$\mathcal{M} = \vartheta_m\times_1\mathbf{B}_{m,1}\times_2\mathbf{B}_{m,2}\times_3\mathbf{B}_{m,3},$$

where $\vartheta_m$ is an unknown tensor parameter to be estimated, and the matrices $\mathbf{B}_{m,1}$, $\mathbf{B}_{m,2}$, and $\mathbf{B}_{m,3}$ are pre-specified bases describing the within-state, within-rate, and within-year correlations in $\mathcal{M}$, respectively. The choices of these basis matrices are very important in practice, and we discuss them in more detail in Section 3.3. In our tensor decomposition, the operator $\times_n$ is the mode-$n$ product reviewed in the previous section, where $n = 1, 2, 3$. This mode-$n$ product is used to model the between-dimension correlations in $\mathcal{M}$.

Since some readers might not be familiar with basis decomposition for tensors, let us provide a little more background. Loosely speaking, basis decomposition for tensors is an extension of matrix decomposition, and it is similar to the singular value decomposition (SVD) in the sense of representing a matrix or tensor as the product of several specialized matrices or tensors. The main difference is that the bases are known (pre-specified) in the basis decomposition, whereas the SVD estimates its singular vectors from the data. Figure 4 illustrates the relationship between SVD and basis decomposition.

Figure 4.

The relationship between SVD and basis decomposition. Panel (a) shows the SVD of a matrix, and panel (b) shows the basis decomposition of a tensor.

Next, for the local hot-spot tensor $\mathcal{H}$, we follow a basis decomposition similar to that of $\mathcal{M}$:

$$\mathcal{H} = \vartheta_h\times_1\mathbf{B}_{h,1}\times_2\mathbf{B}_{h,2}\times_3\mathbf{B}_{h,3},$$

where $\vartheta_h$ is the unknown tensor to be estimated, and the matrices $\mathbf{B}_{h,1}$, $\mathbf{B}_{h,2}$, and $\mathbf{B}_{h,3}$ are pre-specified bases describing the within-state, within-rate, and within-year correlations in $\mathcal{H}$, respectively. The selection of these bases is discussed in detail in Section 3.3. The operator $\times_n$ is the mode-$n$ product (see its definition in Section 2), which models the between-dimension correlations in $\mathcal{H}$.

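In summary, our proposed SSD-Tensor model can be written in the following tensor format:

$$\mathcal{Y} = \vartheta_m\times_1\mathbf{B}_{m,1}\times_2\mathbf{B}_{m,2}\times_3\mathbf{B}_{m,3} + \vartheta_h\times_1\mathbf{B}_{h,1}\times_2\mathbf{B}_{h,2}\times_3\mathbf{B}_{h,3} + \mathcal{E}. \qquad (1)$$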

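This tensor representation allows us to develop computationally efficient methods for estimation and prediction; the reasons why the tensor form is more computationally efficient are given in Section 3.2 and Appendix 2. Using the Kronecker-product form of the Tucker decomposition from Section 2, the above model can be written equivalently as

$$\mathbf{y} = \mathbf{B}_m\boldsymbol{\theta}_m + \mathbf{B}_h\boldsymbol{\theta}_h + \mathbf{e}, \qquad (2)$$

where $\mathbf{y} = \mathrm{vec}(\mathcal{Y})$, $\boldsymbol{\theta}_m = \mathrm{vec}(\vartheta_m)$, $\boldsymbol{\theta}_h = \mathrm{vec}(\vartheta_h)$, and $\mathbf{e} = \mathrm{vec}(\mathcal{E})$ are vectors, and $\mathbf{B}_m = \mathbf{B}_{m,3}\otimes\mathbf{B}_{m,2}\otimes\mathbf{B}_{m,1}$ and $\mathbf{B}_h = \mathbf{B}_{h,3}\otimes\mathbf{B}_{h,2}\otimes\mathbf{B}_{h,1}$. The residual vector $\mathbf{e}$ is assumed to be Gaussian white noise, i.e. $\mathbf{e}\sim N(\mathbf{0},\sigma^2\mathbf{I})$.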

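In our proposed SSD-Tensor model in (2), it is crucial to estimate the global mean parameter $\boldsymbol{\theta}_m$ and the local hot-spot parameter $\boldsymbol{\theta}_h$ when fitting the observed data. We propose to estimate them within a penalized likelihood framework. To be more concrete, we add two penalties to our parameter estimation. The first is the LASSO penalty on $\boldsymbol{\theta}_h$ to encourage the sparsity of hot-spots: $R_1(\boldsymbol{\theta}_h) = \lambda_1\|\boldsymbol{\theta}_h\|_1$. The second is the fused LASSO penalty [28] on $\boldsymbol{\theta}_h$ to encourage the temporal consistency of hot-spots: $R_2(\boldsymbol{\theta}_h) = \lambda_2\sum_{i,j}\sum_{t\ge 2}|\theta_{h,i,j,t} - \theta_{h,i,j,t-1}|$, where $\theta_{h,i,j,t}$ denotes the $(i,j,t)$th entry of $\vartheta_h$.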

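Thus, by combining these two penalties, we propose to estimate the parameters $(\boldsymbol{\theta}_m,\boldsymbol{\theta}_h)$ via the following optimization problem:

$$(\hat{\boldsymbol{\theta}}_m,\hat{\boldsymbol{\theta}}_h) = \arg\min_{\boldsymbol{\theta}_m,\boldsymbol{\theta}_h}\ \|\mathbf{y} - \mathbf{B}_m\boldsymbol{\theta}_m - \mathbf{B}_h\boldsymbol{\theta}_h\|_2^2 + R_1(\boldsymbol{\theta}_h) + R_2(\boldsymbol{\theta}_h). \qquad (3)$$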

We will discuss how to efficiently solve this optimization problem in the next section.
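The two penalties can be evaluated directly on the core tensor, and the fused LASSO term can equivalently be written as $\lambda_2\|\mathbf{D}\boldsymbol{\theta}_h\|_1$ for a temporal first-difference matrix $\mathbf{D}$. The sketch below assumes column-major vectorization (first index varying fastest) and a core of shape $n_1\times n_2\times T$ (as with identity hot-spot bases); under these assumptions one possible choice is $\mathbf{D} = \mathbf{D}_T\otimes\mathbf{I}_{n_1 n_2}$, with $\mathbf{D}_T$ the $(T-1)\times T$ first-difference matrix. The explicit $\mathbf{D}$ in Appendix 1 may be arranged differently, and the function names are hypothetical.

```python
import numpy as np

def temporal_difference_matrix(n1, n2, T):
    """D with D @ vec(theta) stacking theta[i, j, t] - theta[i, j, t-1] (column-major vec)."""
    D_T = np.diff(np.eye(T), axis=0)            # (T-1) x T: rows are e_{t+1} - e_t
    return np.kron(D_T, np.eye(n1 * n2))        # acts on the time index of vec(theta)

def penalties(theta, lam1, lam2):
    """Return (R1, R2) for a core tensor theta of shape (n1, n2, T)."""
    n1, n2, T = theta.shape
    v = theta.reshape(-1, order="F")            # column-major vec, first index fastest
    D = temporal_difference_matrix(n1, n2, T)
    R1 = lam1 * np.abs(v).sum()                 # LASSO term
    R2 = lam2 * np.abs(D @ v).sum()             # fused LASSO term via D
    return float(R1), float(R2)

theta = np.arange(30.0).reshape(3, 2, 5)
print(penalties(theta, 0.5, 2.0))  # (217.5, 48.0)
```

Writing $R_2$ through $\mathbf{D}$ is what turns the estimation of $\boldsymbol{\theta}_h$ into a generalized LASSO problem.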

3.2. Optimization algorithm for estimation

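In this section, we develop an efficient computational algorithm for solving the optimization problem in (3). To emphasize the tuning parameters $\lambda_1$ and $\lambda_2$ in the penalty terms in (3), we rewrite $\boldsymbol{\theta}_m$ and $\boldsymbol{\theta}_h$ as $\boldsymbol{\theta}_m(\boldsymbol{\lambda})$ and $\boldsymbol{\theta}_h(\boldsymbol{\lambda})$, respectively, where $\boldsymbol{\lambda} = (\lambda_1,\lambda_2)$.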

Our proposed optimization algorithm for (3) contains two main steps. The first is to estimate $\boldsymbol{\theta}_m$ for a given $\boldsymbol{\theta}_h$. The second is to estimate $\boldsymbol{\theta}_h$ using the Fast Iterative Shrinkage Thresholding Algorithm (FISTA) of [2], which iteratively updates the estimator. When implementing FISTA in our context, at each iteration we face an optimization problem that involves both the LASSO and fused LASSO penalty parameters $\lambda_1$ and $\lambda_2$. To make the computation feasible, we apply a useful proposition that relates the optimal solution for $\lambda_1 = 0$ to the optimal solution for a general $\lambda_1$.

Let us first discuss the estimation of $\boldsymbol{\theta}_m$ for a given $\boldsymbol{\theta}_h$. It turns out that there is a closed-form solution, as shown in the following proposition.

Proposition 3.1

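In the optimization problem in (3), for a given $\boldsymbol{\theta}_h$, the optimal solution for $\boldsymbol{\theta}_m$ is given by

$$\hat{\boldsymbol{\theta}}_m = (\mathbf{B}_m^\top\mathbf{B}_m)^{-1}\mathbf{B}_m^\top(\mathbf{y} - \mathbf{B}_h\boldsymbol{\theta}_h). \qquad (4)$$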

The proof of Proposition 3.1 follows the standard least-squares argument in linear regression and is thus omitted. Moreover, since $\mathbf{B}_m$ can have a huge dimension when the data dimension $n_1 n_2 T$ is large, it might be computationally expensive to compute the inverse of the matrix $\mathbf{B}_m^\top\mathbf{B}_m$. Using tensor algebra, we can greatly simplify the computations; see Appendix 2 for the details.
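The Kronecker structure makes (4) cheap: by the mixed-product rule, $(\mathbf{B}_{m,3}\otimes\mathbf{B}_{m,2}\otimes\mathbf{B}_{m,1})^\top(\mathbf{B}_{m,3}\otimes\mathbf{B}_{m,2}\otimes\mathbf{B}_{m,1}) = (\mathbf{B}_{m,3}^\top\mathbf{B}_{m,3})\otimes(\mathbf{B}_{m,2}^\top\mathbf{B}_{m,2})\otimes(\mathbf{B}_{m,1}^\top\mathbf{B}_{m,1})$, so the inverse factorizes mode by mode and (4) reduces to three small solves applied through mode-$n$ products. The following is a minimal sketch, not the implementation of Appendix 2, assuming each basis has full column rank and that `Z` holds the tensor form of $\mathbf{y} - \mathbf{B}_h\boldsymbol{\theta}_h$; function names are hypothetical.

```python
import numpy as np

def mode_n_product(T, U, n):
    # T x_n U with a 0-based mode index n
    return np.moveaxis(np.tensordot(U, np.moveaxis(T, n, 0), axes=(1, 0)), 0, n)

def theta_m_closed_form(Z, B1, B2, B3):
    """Core solving min ||vec(Z) - (B3 kron B2 kron B1) vec(core)||^2, one mode at a time."""
    core = Z
    for n, B in enumerate((B1, B2, B3)):
        P = np.linalg.solve(B.T @ B, B.T)       # (B^T B)^{-1} B^T for this mode only
        core = mode_n_product(core, P, n)
    return core

rng = np.random.default_rng(2)
B1, B2, B3 = (rng.standard_normal(s) for s in [(6, 3), (3, 2), (7, 4)])
Z = rng.standard_normal((6, 3, 7))
core = theta_m_closed_form(Z, B1, B2, B3)
Bm = np.kron(B3, np.kron(B2, B1))               # full 126 x 24 design, for checking only
direct = np.linalg.lstsq(Bm, Z.reshape(-1, order="F"), rcond=None)[0]
print(np.allclose(core.reshape(-1, order="F"), direct))  # True
```

The per-mode solves involve only $p_n\times p_n$ systems, whereas the direct route factors a matrix whose size is the product of all mode dimensions.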

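Next, we discuss the estimation of $\boldsymbol{\theta}_h$, which is highly non-trivial. By Proposition 3.1, the original optimization problem in (3) becomes

$$\hat{\boldsymbol{\theta}}_h = \arg\min_{\boldsymbol{\theta}_h}\ \|\mathbf{y}^* - \mathbf{X}\boldsymbol{\theta}_h\|_2^2 + \lambda_1\|\boldsymbol{\theta}_h\|_1 + \lambda_2\|\mathbf{D}\boldsymbol{\theta}_h\|_1, \qquad (5)$$

where $\mathbf{y}^* = (\mathbf{I} - \mathbf{H}_m)\mathbf{y}$ and $\mathbf{X} = (\mathbf{I} - \mathbf{H}_m)\mathbf{B}_h$, with $\mathbf{H}_m = \mathbf{B}_m(\mathbf{B}_m^\top\mathbf{B}_m)^{-1}\mathbf{B}_m^\top$ as the projection matrix. Here the matrix $\mathbf{D}$ is defined so that $\lambda_2\|\mathbf{D}\boldsymbol{\theta}_h\|_1$ is equivalent to $R_2(\boldsymbol{\theta}_h)$ (see Appendix 1 for the explicit definition of $\mathbf{D}$).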

It suffices to solve the new optimization problem in (5). Note that (5) is a generalized LASSO problem, for which many optimization methods are available in the literature. For instance, [29] solves a generalized LASSO problem by transforming it into a standard LASSO problem, but unfortunately, this is computationally heavy. [30] uses the alternating direction method of multipliers (ADMM) to solve a generalized LASSO problem, but its convergence rate is $O(1/k)$, as shown in [8], where $k$ denotes the number of iterations. Another popular method is the iterative shrinkage thresholding algorithm (ISTA) proposed by [4], which also has a convergence rate of $O(1/k)$. Later, the authors of [2] proposed a faster version of ISTA, called FISTA, and showed that it has a convergence rate of $O(1/k^2)$. Thus, in this paper, we choose the FISTA algorithm of [2] as the primary tool to solve (5) because of its fast convergence rate.

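There is a technical challenge in applying the FISTA algorithm to (5). Write $f(\boldsymbol{\theta}_h) = \|\mathbf{y}^* - \mathbf{X}\boldsymbol{\theta}_h\|_2^2$ for the smooth part of the objective. In the FISTA algorithm, each iteration is based on the proximal mapping of the penalty,

$$\pi_{\lambda_1,\lambda_2}(\mathbf{v}) = \arg\min_{\boldsymbol{\theta}}\ \tfrac{1}{2}\|\boldsymbol{\theta} - \mathbf{v}\|_2^2 + \lambda_1\|\boldsymbol{\theta}\|_1 + \lambda_2\|\mathbf{D}\boldsymbol{\theta}\|_1.$$

To be more concrete, the updating rule from the $i$th FISTA iteration to the $(i+1)$th FISTA iteration is given by

$$\boldsymbol{\theta}_h^{(i+1)} = \pi_{\lambda_1/L_f,\,\lambda_2/L_f}\Big(\boldsymbol{\beta}^{(i)} - \tfrac{1}{L_f}\nabla f\big(\boldsymbol{\beta}^{(i)}\big)\Big),$$

where $\boldsymbol{\beta}^{(i)}$ is the auxiliary variable, i.e.

$$\boldsymbol{\beta}^{(i+1)} = \boldsymbol{\theta}_h^{(i+1)} + \frac{s_i - 1}{s_{i+1}}\big(\boldsymbol{\theta}_h^{(i+1)} - \boldsymbol{\theta}_h^{(i)}\big),$$

with $s_1 = 1$ and $s_{i+1} = \big(1 + \sqrt{1 + 4s_i^2}\big)/2$, and $1/L_f$ is the step size, where $L_f$ is fixed as the maximal eigenvalue of the matrix $2\mathbf{X}^\top\mathbf{X}$ (see Appendix 4 for more details).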
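The FISTA iteration can be sketched generically, with the proximal step passed in as a function. This is a minimal sketch, assuming the smooth part $\|\mathbf{y}^* - \mathbf{X}\boldsymbol{\theta}\|_2^2$ and a user-supplied `prox(v, step)` returning the minimizer of $\frac{1}{2}\|\boldsymbol{\theta} - \mathbf{v}\|_2^2 + \text{step}\cdot g(\boldsymbol{\theta})$ for the penalty $g$; a fixed iteration count stands in for a convergence check. The usage example plugs in the pure LASSO case ($\lambda_2 = 0$), whose proximal mapping is element-wise soft-thresholding; for the full SSD-Tensor penalty one would instead supply a proximal mapping of $\lambda_1\|\cdot\|_1 + \lambda_2\|\mathbf{D}\,\cdot\|_1$. Names are hypothetical.

```python
import numpy as np

def fista(X, y, prox, n_iter=500):
    """Minimize ||y - X b||_2^2 + g(b), given prox(v, step) = argmin 0.5||b - v||^2 + step * g(b)."""
    L_f = 2.0 * np.linalg.eigvalsh(X.T @ X).max()   # Lipschitz constant of the gradient
    b_prev = np.zeros(X.shape[1])
    beta = b_prev.copy()                             # auxiliary (extrapolated) point
    s = 1.0
    for _ in range(n_iter):
        grad = 2.0 * X.T @ (X @ beta - y)
        b = prox(beta - grad / L_f, 1.0 / L_f)       # proximal gradient step
        s_next = (1.0 + np.sqrt(1.0 + 4.0 * s * s)) / 2.0
        beta = b + ((s - 1.0) / s_next) * (b - b_prev)
        b_prev, s = b, s_next
    return b_prev

def lasso_prox(lam):
    # prox of step * lam * ||b||_1 is soft-thresholding at step * lam
    return lambda v, step: np.sign(v) * np.maximum(np.abs(v) - step * lam, 0.0)

y = np.array([3.0, -1.0, 0.2, -4.0])
print(np.allclose(fista(np.eye(4), y, lasso_prox(1.0)), [2.5, -0.5, 0.0, -3.5]))  # True
```

With $\mathbf{X} = \mathbf{I}$, the minimizer of $\|\mathbf{y} - \mathbf{b}\|_2^2 + \lambda_1\|\mathbf{b}\|_1$ is soft-thresholding of $\mathbf{y}$ at $\lambda_1/2$, which the check reproduces.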

The challenge in applying FISTA is that $\pi_{\lambda_1,\lambda_2}(\mathbf{v})$ is difficult to compute directly, as it involves two penalties with parameters $\lambda_1$ and $\lambda_2$. To overcome this challenge, we propose to combine a theoretical result in [13] with the augmented ADMM algorithm in [35]. Specifically, [13] shows that there is a closed-form relationship between $\pi_{\lambda_1,\lambda_2}(\mathbf{v})$ and $\pi_{0,\lambda_2}(\mathbf{v})$. Thus we can easily compute $\pi_{\lambda_1,\lambda_2}(\mathbf{v})$ once we know how to compute $\pi_{0,\lambda_2}(\mathbf{v})$. The latter can be computed by the augmented ADMM algorithm in [35], which is an extension of the regular ADMM method.

Proposition 3.2 summarizes how, in the $i$th FISTA iteration, to derive $\boldsymbol{\theta}_h^{(i+1)}$ from $\boldsymbol{\beta}^{(i)}$; iterating this step yields the estimate of $\boldsymbol{\theta}_h$ in (5):

Proposition 3.2

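Assume that

there is a diagonal matrix $\boldsymbol{\Lambda}$ satisfying $\boldsymbol{\Lambda}\succeq\mathbf{D}^\top\mathbf{D}$, i.e. $\boldsymbol{\Lambda} - \mathbf{D}^\top\mathbf{D}$ is a positive semidefinite matrix;

there is a scalar $\rho > 0$, which is a positive penalty parameter.

Let $\mathbf{v}$ be a given vector (in the $i$th FISTA iteration, $\mathbf{v} = \boldsymbol{\beta}^{(i)} - \nabla f(\boldsymbol{\beta}^{(i)})/L_f$). The updating procedure of the augmented ADMM algorithm from the $k$th to the $(k+1)$th augmented ADMM iteration is

| (6) |

Suppose the above updating procedure runs for $K$ iterations, where $K$ is the number of augmented ADMM iterations. Then the $K$th iterate approximates $\pi_{0,\lambda_2}(\mathbf{v})$ well as $K\to\infty$, and $\pi_{\lambda_1,\lambda_2}(\mathbf{v})$ can be computed as

$$\pi_{\lambda_1,\lambda_2}(\mathbf{v}) = \mathrm{sign}\big(\pi_{0,\lambda_2}(\mathbf{v})\big)\odot\max\big\{|\pi_{0,\lambda_2}(\mathbf{v})| - \lambda_1,\ 0\big\}, \qquad (7)$$

where ⊙ denotes element-wise multiplication of two vectors, and the sign, absolute value, and maximum are also taken element-wise. For example, for two vectors $\mathbf{a},\mathbf{b}\in\mathbb{R}^n$, the $i$th entry of $\mathbf{a}\odot\mathbf{b}$ is $a_i b_i$.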

The proof of Proposition 3.2 is omitted, as the update rule in (6) is an application of the augmented ADMM algorithm of [35], and equation (7) follows directly from Theorem 1 of [13].
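Equation (7) can be illustrated with a minimal sketch. As a labeled substitution, the fused-only mapping $\pi_{0,\lambda_2}(\mathbf{v})$ is computed here with standard scaled-form ADMM (splitting $\mathbf{z} = \mathbf{D}\mathbf{x}$), not with the augmented ADMM of [35], and a fixed number of iterations stands in for a stopping rule; (7) is then applied as element-wise soft-thresholding at $\lambda_1$. Function names and the default `rho` are hypothetical choices.

```python
import numpy as np

def soft_threshold(v, tau):
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def prox_fused_only(v, D, lam2, rho=1.0, n_iter=2000):
    """argmin_x 0.5||x - v||^2 + lam2 ||D x||_1 via standard scaled-form ADMM."""
    x = v.copy()
    z = D @ x
    u = np.zeros_like(z)
    A = np.eye(len(v)) + rho * D.T @ D             # fixed system matrix of the x-update
    for _ in range(n_iter):
        x = np.linalg.solve(A, v + rho * D.T @ (z - u))
        z = soft_threshold(D @ x + u, lam2 / rho)   # l1 prox on the split variable
        u = u + D @ x - z                           # scaled dual update
    return x

def prox_lasso_fused(v, D, lam1, lam2, **admm_kwargs):
    """Eq. (7): soft-threshold the fused-only solution at lam1."""
    return soft_threshold(prox_fused_only(v, D, lam2, **admm_kwargs), lam1)

v = np.array([0.0, 0.0, 10.0, 10.0])
D = np.diff(np.eye(4), axis=0)                      # first differences of a length-4 series
print(prox_lasso_fused(v, D, lam1=1.0, lam2=1.0))
```

For this toy input, the KKT conditions give the exact fused-only solution $(0.5, 0.5, 9.5, 9.5)$ and, after (7), $(0, 0, 8.5, 8.5)$; the ADMM output should match these up to the iteration tolerance.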

Combining the above two propositions together, our optimization algorithm to solve the optimization problem in (3) can be summarized as the pseudocode in Algorithm 1.

3.3. Selection of bases in our context

This section discusses how to choose the bases $\mathbf{B}_{m,1}$, $\mathbf{B}_{m,2}$, $\mathbf{B}_{m,3}$, $\mathbf{B}_{h,1}$, $\mathbf{B}_{h,2}$, and $\mathbf{B}_{h,3}$ in our context. In general, these bases can be Gaussian kernels, cosine kernels, etc., depending on the nature or characteristics of the data. When one has little or no prior knowledge of the data structure, a simple choice of basis is the identity matrix.

Let us now discuss our choices of these bases in our simulation studies and in the case study of the crime rate dataset. For the global trend mean, we need to select $\mathbf{B}_{m,1}$, $\mathbf{B}_{m,2}$, and $\mathbf{B}_{m,3}$, where $\mathbf{B}_{m,1}$ is the basis in the state dimension, $\mathbf{B}_{m,2}$ is the basis in the crime rate dimension, and $\mathbf{B}_{m,3}$ is the basis in the temporal dimension of the global trend. By Figure 2, the data are spatially smooth in the state dimension, and thus we propose to choose $\mathbf{B}_{m,1}$ as a Gaussian kernel matrix whose $(i,j)$th element is a Gaussian kernel function of $d$ with bandwidth $c$, where $d$ is the distance between the centers of the $i$th and $j$th states. Here the bandwidth constant $c$ is chosen by Silverman's rule of thumb [25], based on the estimated variance $\hat{\sigma}^2$ of $d$. Meanwhile, we set $\mathbf{B}_{m,2}$ and $\mathbf{B}_{m,3}$ to be identity matrices, since we have little prior knowledge of the crime rate and temporal dimensions.

For the selection of the hot-spot bases, i.e. $\mathbf{B}_{h,1}$, $\mathbf{B}_{h,2}$, and $\mathbf{B}_{h,3}$, we propose to set all of them as identity matrices, since there is no prior knowledge of the hot-spots. Note that while the identity matrix seems to ignore the temporal consistency of the hot-spots, our optimization problem includes the fused LASSO penalty, which is designed to capture this temporal consistency.
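A Gaussian-kernel basis for the state dimension can be sketched as below. The kernel form and the exact use of Silverman's rule are assumptions in this sketch: it uses the kernel $\exp\{-d^2/(2c^2)\}$ and the normal-reference rule $c = 1.06\,\hat{\sigma}\,n^{-1/5}$, with $\hat{\sigma}$ taken as the sample standard deviation of the off-diagonal pairwise distances. The state-center coordinates are hypothetical inputs (for example, projected centroids), and the identity bases for the other modes are simply `np.eye`.

```python
import numpy as np

def gaussian_kernel_basis(centers):
    """centers: (n, 2) array of state-center coordinates (hypothetical input)."""
    n = centers.shape[0]
    diff = centers[:, None, :] - centers[None, :, :]
    d = np.sqrt((diff ** 2).sum(axis=-1))              # pairwise distances, shape (n, n)
    sigma_hat = d[~np.eye(n, dtype=bool)].std(ddof=1)  # assumed: spread of pairwise distances
    c = 1.06 * sigma_hat * n ** (-1 / 5)               # Silverman's normal-reference rule
    return np.exp(-d ** 2 / (2 * c ** 2))              # assumed Gaussian kernel form

centers = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [3.0, 3.0]])
B_m1 = gaussian_kernel_basis(centers)   # state-dimension basis for the global mean
B_m2, B_m3 = np.eye(3), np.eye(50)      # identity bases for the crime-type and year modes
print(B_m1.shape)  # (4, 4)
```

Nearby states receive weights close to 1 and distant states weights close to 0, which is how the kernel encodes spatial smoothness of the global mean.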

4. Detection and localization of hot-spots

In this section, we discuss the detection and localization of hot-spots. For ease of presentation, we first discuss the detection of hot-spots, i.e. detecting when a hot-spot occurs, in Section 4.1. Then, in Section 4.2, we consider the localization of hot-spots, i.e. determining which states and which crime types are involved in the detected hot-spots.

4.1. Detecting when hot-spots occur

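To detect when hot-spots occur, we develop a control chart based on the following hypothesis testing problem:

$$H_0:\ \mathbf{r}_t(\boldsymbol{\lambda})\sim N(\mathbf{0},\sigma^2\mathbf{I})\quad\text{versus}\quad H_1:\ \mathbf{r}_t(\boldsymbol{\lambda})\sim N\big(\delta\,\hat{\mathbf{h}}_t(\boldsymbol{\lambda}),\sigma^2\mathbf{I}\big)\ \text{for some}\ \delta > 0, \qquad (8)$$

where

$$\mathbf{r}_t(\boldsymbol{\lambda}) = \mathbf{y}_t - \hat{\boldsymbol{\mu}}_t(\boldsymbol{\lambda})$$

is the residual in the $t$th year after removing the global trend mean under the penalty parameters $\boldsymbol{\lambda} = (\lambda_1,\lambda_2)$, $\mathbf{y}_t = \mathrm{vec}(\mathcal{Y}_{::t})$ collects the observations in the $t$th year, and the vectors $\hat{\boldsymbol{\mu}}_t(\boldsymbol{\lambda})$ and $\hat{\mathbf{h}}_t(\boldsymbol{\lambda})$ are the estimated global trend mean and local hot-spots in the $t$th year. Here, we add $(\boldsymbol{\lambda})$ to emphasize that $\hat{\boldsymbol{\mu}}_t(\boldsymbol{\lambda})$ and $\hat{\mathbf{h}}_t(\boldsymbol{\lambda})$ are the global trend mean and local hot-spot estimates under the penalty parameter $\boldsymbol{\lambda}$, respectively.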

The motivation for the above hypothesis test is as follows. When there are no hot-spots, the residual $\mathbf{r}_t(\boldsymbol{\lambda})$ consists only of model noise. However, when hot-spots exist, the residual includes both hot-spots and noise. By including the hot-spot information $\hat{\mathbf{h}}_t(\boldsymbol{\lambda})$ in the alternative hypothesis, we provide a direction in the alternative hypothesis space, which allows one to construct a more powerful test; see [36].

Next, we construct the likelihood ratio test for the above hypothesis testing problem. By [7], the test statistic for monitoring an upward shift, denoted by $\tilde{R}_t^+(\boldsymbol{\lambda})$, is computed from the residual $\mathbf{r}_t(\boldsymbol{\lambda})$ along the direction $\hat{\mathbf{h}}_t^+(\boldsymbol{\lambda})$, where $\hat{\mathbf{h}}_t^+(\boldsymbol{\lambda})$ keeps only the positive entries of $\hat{\mathbf{h}}_t(\boldsymbol{\lambda})$ and sets the other entries to zero, because our objective is to detect positive hot-spots. The superscript ‘+’ emphasizes that we aim at detecting upward shifts. In other words, we focus on hot-spots with increasing means, partly because increasing crime rates are generally more harmful to society and communities. If one is also interested in detecting decreasing mean shifts, one could modify the statistic into a two-sided test.
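For illustration only, the sketch below assumes one common form of a directional statistic, namely the projection of the residual onto the unit vector along the positive part of the estimated hot-spot, $(\hat{\mathbf{h}}_t^+)^\top\mathbf{r}_t/\|\hat{\mathbf{h}}_t^+\|_2$; this specific formula is an assumption, not a transcription of [7]. Returning 0 when $\hat{\mathbf{h}}_t^+$ is all zeros is likewise a hypothetical convention, and the function name is hypothetical.

```python
import numpy as np

def upward_statistic(r_t, h_t):
    """Assumed form: projection of r_t onto the direction of the positive part of h_t."""
    h_plus = np.maximum(h_t, 0.0)            # keep positive entries, zero the rest
    norm = np.linalg.norm(h_plus)
    if norm == 0.0:
        return 0.0                           # no upward hot-spot direction was estimated
    return float(h_plus @ r_t / norm)

print(upward_statistic(np.array([1.0, 2.0, 3.0]), np.array([0.0, -1.0, 2.0])))  # 3.0
```

Under $H_0$ with a fixed direction, this projection has mean 0 and variance $\sigma^2$, which is what makes the standardization across candidate penalty parameters meaningful.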

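It remains to discuss how to choose $\boldsymbol{\lambda}$ suitably in our test. Following [36], we calculate a series of statistics $\tilde{R}_t^+(\boldsymbol{\lambda})$ for different combinations of $\boldsymbol{\lambda} = (\lambda_1,\lambda_2)$ in a candidate set $\Gamma$ and then select the combination with the largest power. The final test statistic, denoted by $\tilde{R}_t^+$, is computed as

$$\tilde{R}_t^+ = \max_{\boldsymbol{\lambda}\in\Gamma}\frac{\tilde{R}_t^+(\boldsymbol{\lambda}) - E\big(\tilde{R}_t^+(\boldsymbol{\lambda})\big)}{\sqrt{\mathrm{Var}\big(\tilde{R}_t^+(\boldsymbol{\lambda})\big)}}, \qquad (9)$$

where $E(\tilde{R}_t^+(\boldsymbol{\lambda}))$ and $\mathrm{Var}(\tilde{R}_t^+(\boldsymbol{\lambda}))$ are, respectively, the mean and variance of $\tilde{R}_t^+(\boldsymbol{\lambda})$ under $H_0$ (e.g. estimated from phase-I in-control samples). Here $\boldsymbol{\lambda}^*$ denotes the penalty parameter that attains the maximum in (9).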
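Equation (9) can be sketched as follows, estimating the null mean and standard deviation of each candidate's statistic from phase-I in-control samples, as the text suggests. The array layout (one column per candidate penalty parameter) and the function name are hypothetical.

```python
import numpy as np

def combined_statistic(R_phase1, R_t):
    """R_phase1: (n_phase1, n_lambda) in-control statistics; R_t: (n_lambda,) current statistics.

    Returns the maximal standardized statistic and the index of the maximizing lambda.
    """
    mean = R_phase1.mean(axis=0)             # estimate of E(R(lambda)) under H0
    sd = R_phase1.std(axis=0, ddof=1)        # estimate of sqrt(Var(R(lambda))) under H0
    z = (R_t - mean) / sd
    k = int(np.argmax(z))
    return float(z[k]), k

R_phase1 = np.array([[0.0, 0.0], [2.0, 4.0]])
value, k = combined_statistic(R_phase1, np.array([3.0, 4.0]))
print(k)  # 0
```

Standardizing before taking the maximum puts statistics computed under different penalty parameters on a common scale.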

With the test statistic available, we detect when hot-spots occur using the widely used Cumulative Sum (CUSUM) control chart; see [15,20]. At each time $t$, we recursively compute the CUSUM statistic as

$$W_t^+ = \max\big\{0,\ W_{t-1}^+ + \tilde{R}_t^+ - d\big\}, \qquad (10)$$

with the initial value $W_0^+ = 0$, where $d > 0$ is a constant that can be chosen according to the magnitude of the shift we want to detect. We then declare that a hot-spot might have occurred whenever $W_t^+ > L$ for some pre-specified control limit $L$.
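The recursion in (10) and the alarm rule can be sketched in a few lines. The function name is hypothetical, and the returned alarm index is 0-based (index 3 is the fourth time point).

```python
def cusum_first_alarm(stats, d, L):
    """Run W_t = max(0, W_{t-1} + R_t - d) from W_0 = 0; return (first alarm index or None, path)."""
    W = 0.0
    path = []
    alarm = None
    for t, r in enumerate(stats):
        W = max(0.0, W + r - d)      # CUSUM update, reset at zero
        path.append(W)
        if alarm is None and W > L:  # first time the control limit is exceeded
            alarm = t
    return alarm, path

alarm, path = cusum_first_alarm([0.0, 0.0, 2.0, 2.0, 2.0], d=0.5, L=2.0)
print(alarm, path)  # 3 [0.0, 0.0, 1.5, 3.0, 4.5]
```

The reset at zero keeps the statistic from drifting downward during in-control periods, so it reacts quickly once a sustained upward shift begins.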

Note that the CUSUM statistic leads to the optimal control chart for detecting a mean shift from $0$ to $2d$ in normally distributed data with unit variance; see [15]. When the data are not normally distributed, the optimality properties might not hold, but the CUSUM chart can still be reasonable. Also, it is important to choose the control limit $L$ suitably; a detailed discussion is presented in Section 5 for our simulation studies and in Section 6 for our case study.

4.2. Localizing where hot-spots occur and which crime types are involved

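In this section, we discuss how to localize the hot-spots if the CUSUM control chart in (10) raises an alarm at year $\tau$. In other words, we want to determine where, and for which crime rates, the hot-spots occur. To do so, we propose to use the matrix $\hat{\mathbf{H}}_\tau$, which is the hot-spot estimate in the $\tau$th year. If the $(i,j)$th entry of $\hat{\mathbf{H}}_\tau$ is non-zero, then we declare that there is a hot-spot for the $j$th crime rate type in the $i$th state in the $\tau$th year.

The mathematical procedure to derive $\hat{\mathbf{H}}_\tau$ is as follows. First, $\hat{\mathcal{H}}$ is the tensor form of $\mathbf{B}_h\hat{\boldsymbol{\theta}}_h(\boldsymbol{\lambda}^*)$, where $\hat{\boldsymbol{\theta}}_h(\boldsymbol{\lambda}^*)$ is the minimizer in (3) with the penalty parameter set to $\boldsymbol{\lambda}^*$. Second, $\hat{\mathbf{H}}_\tau = \hat{\mathcal{H}}_{::\tau}$ is the frontal slice of $\hat{\mathcal{H}}$ along the temporal dimension at year $\tau$.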

As one reviewer pointed out, this approach might lead to a relatively high false positive rate (FPR), since some non-zero entries might not be statistically significant. Two possible ways to improve it are (1) to conduct a significance test, or (2) to set a pre-specified threshold and keep only the positive entries that exceed it. How best to improve this step is an interesting topic for future research. Here we focus on the main idea of using tensor decomposition for hot-spot detection, and adopt the simple approach for hot-spot localization.
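The fold-slice-flag procedure above, together with the optional threshold of improvement (2), can be sketched as follows. This is a minimal illustration, assuming the hot-spot estimate is a vector ordered as a (states x crime types x years) tensor vectorized in column-major (Fortran) order, the convention of Kolda and Bader [10]; the actual ordering used in (2) should be matched. Function names are hypothetical.

```python
import numpy as np


def localize_hotspots(h_vec, shape, year_index, threshold=None):
    """Fold a vectorized hot-spot estimate into a (states x types x years)
    tensor and return the (state, type) indices flagged in one year.

    threshold=None applies the rule in the text (any non-zero entry);
    a numeric threshold keeps only positive entries larger than it.
    """
    tensor = np.reshape(np.asarray(h_vec, dtype=float), shape, order="F")
    frontal = tensor[:, :, year_index]  # states x types slice for the alarm year
    if threshold is None:
        mask = frontal != 0
    else:
        mask = frontal > threshold
    return [tuple(int(k) for k in idx) for idx in np.argwhere(mask)]
```

For instance, if only entries (0, 0) and (1, 2) of a year's slice are non-zero, with values 0.05 and 0.5, the default rule flags both, while `threshold=0.1` keeps only (1, 2).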

5. Simulation study

In this section, we report the numerical simulation results of our proposed method and its comparison with several benchmark methods from the literature. Section 5.1 describes the data generation mechanism for our simulation studies, and Section 5.2 presents the benchmark methods used for comparison. The performance of hot-spot detection and localization is reported in Section 5.3, and the fit of the global trend mean is evaluated in Section 5.4.

5.1. Data generation

In our simulation, we detect the hot-spots using the complete data. To shed light on the case study, we match the tensor dimensions of the crime rate dataset in our simulation. We generate the data tensor through its frontal slices, one for each time t, as follows:

| (11) |

where the subscript i denotes the ith entry of a vector. The last term on the right-hand side of the above equation is the ith entry of the white noise vector, whose entries are independent and follow a normal distribution with mean zero and variance σ². Note that while we generate the data sequentially over time t, our proposed SSD-Tensor method is actually an off-line method that analyzes the complete tensor.

The first term on the right-hand side of the above equation is the global mean, where the subscript again denotes the ith entry. It is built from a fixed B-spline basis matrix of degree three with fifty knots evenly spaced on an interval, and a constant coefficient vector that controls the trend of the global mean. Note that the B-spline basis is used only in the generative model to simulate data; it is not used in our proposed methodology. We set the coefficient vector in two different ways, which gives two scenarios:
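A cubic B-spline basis matrix of the kind described above can be evaluated with the Cox-de Boor recursion. The interval, the exact knot placement and the evaluation grid are not recoverable here, so this sketch assumes fifty evenly spaced knots on a hypothetical interval [0, 1], clamped by repeating each endpoint three extra times; it is a generic construction, not necessarily the exact basis used in the simulation. Function names are hypothetical.

```python
def bspline_basis_row(x, knots, degree):
    """Evaluate all B-spline basis functions at x via the Cox-de Boor recursion."""
    n_intervals = len(knots) - 1
    # Degree-0 basis: indicator of the knot interval containing x.
    basis = [1.0 if knots[j] <= x < knots[j + 1] else 0.0 for j in range(n_intervals)]
    if x == knots[-1]:  # close the last non-empty interval at the right endpoint
        for j in reversed(range(n_intervals)):
            if knots[j] < knots[j + 1]:
                basis[j] = 1.0
                break
    for p in range(1, degree + 1):  # raise the degree one step at a time
        nxt = []
        for j in range(len(knots) - p - 1):
            left = right = 0.0
            if knots[j + p] > knots[j]:
                left = (x - knots[j]) / (knots[j + p] - knots[j]) * basis[j]
            if knots[j + p + 1] > knots[j + 1]:
                right = (knots[j + p + 1] - x) / (knots[j + p + 1] - knots[j + 1]) * basis[j + 1]
            nxt.append(left + right)
        basis = nxt
    return basis


def cubic_bspline_matrix(points, n_knots=50, a=0.0, b=1.0, degree=3):
    """Rows: evaluation points; columns: clamped B-spline basis functions."""
    step = (b - a) / (n_knots - 1)
    inner = [a + k * step for k in range(n_knots)]
    inner[-1] = b  # avoid floating-point drift at the right end
    knots = [a] * degree + inner + [b] * degree  # clamped knot vector
    return [bspline_basis_row(x, knots, degree) for x in points]
```

Evaluated on, say, fifty equally spaced points in [0, 1], this gives a 50 x 52 matrix whose rows each sum to one (the partition-of-unity property of clamped B-splines). Multiplying it by a coefficient vector yields a smooth curve whose shape is controlled by the coefficients, which is the role of the constant vector in the global-mean term.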

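Scenario 1: The global trend mean is stationary, i.e., the coefficient vector is chosen so that the global mean does not change over time.

Scenario 2: The global trend mean is decreasing over time, i.e., the coefficient vector is chosen so that the global mean decreases over time.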

The second term on the right-hand side of equation (11) is the local hot-spot, expressed through indicator functions, where the indicator of a set A takes the value 1 for all elements of A and 0 otherwise. The first indicator function specifies that the hot-spots occur only after the change time τ, which ensures that the simulated hot-spots are temporally consistent. The second indicator function specifies that only the entries whose location index belongs to the hot-spot index set are assigned as local hot-spots, which ensures that the simulated hot-spots are sparse. Here we fix the change time and the hot-spot index set in advance; the hot-spots account for only a small fraction of all entries, so they are sparse. The parameter δ denotes the change magnitude. In our simulation studies, two change magnitudes are considered: a small shift and a large shift. Following existing research [see, 6,34], we fix the variance σ² of the white noise. The white noise standard deviation might seem small, but we emphasize that the value of σ itself is not crucial here; the signal-to-noise ratio (SNR) is more fundamental.
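The hot-spot term and the noise term of (11) can be simulated as below. This is a minimal sketch under stated assumptions: the B-spline global mean is passed in as a precomputed array `mean[t][i]` (its exact coefficients are not recoverable here), time is indexed from 0 with the change time `tau` on the same scale, and the noise is N(0, σ²). Function and argument names are hypothetical.

```python
import random


def simulate_hotspot_data(mean, tau, hot_set, delta, sigma, seed=None):
    """Add a sparse, temporally consistent hot-spot and Gaussian white noise
    to a given global-mean array mean[t][i] (t = 0..T-1, i = 0..p-1)."""
    rng = random.Random(seed)
    data = []
    for t, mean_t in enumerate(mean):
        row = []
        for i, m in enumerate(mean_t):
            # Both indicators: after the change time AND inside the hot-spot set.
            hot = delta if (t >= tau and i in hot_set) else 0.0
            row.append(m + hot + rng.gauss(0.0, sigma))
        data.append(row)
    return data
```

Setting `sigma=0` makes the structure visible: with a zero mean over four time points and three entries, `tau=2`, `hot_set={1}` and `delta=0.5`, the first two rows are all zeros and the last two are `[0.0, 0.5, 0.0]`.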

Here are the implementation details of our proposed SSD-Tensor method. We use the same bases as in Section 3.3, and pre-specified values for the penalty parameters. Since our proposed method is an off-line method that uses the complete data, our definition of the out-of-control average run length (ARL1) differs slightly from the standard SPC literature. There, the ARL1 of a procedure with stopping time N is defined as the expected detection delay when the true change occurs at a given time, whereas in our paper we adopt a definition that better reflects real-world applications. Specifically, in each Monte Carlo run, we simulate a complete data tensor over a fixed number of years with a fixed change time. We then consider only those runs in which the control chart raises an alarm at a time T at or after the change time (an alarm with T < 200 is counted as a false alarm), and define the detection delay, ARL1, as T − τ, where τ is the change time, averaged over these runs.
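The averaging of detection delays over Monte Carlo runs can be sketched as follows. Alarms before the change time are counted as false alarms and excluded, as described above. How runs that never raise an alarm are treated is not stated in the text; this sketch assigns them the maximal delay `horizon - tau`, which is an assumed convention. Function and argument names are hypothetical.

```python
def detection_delay(alarm_times, tau, horizon):
    """Average detection delay (ARL1) over Monte Carlo runs.

    alarm_times[r] is the first alarm time T of run r, or None if the chart
    never signals within the simulated horizon. Alarms with T < tau are
    counted as false alarms and excluded. Runs without any alarm are assigned
    the maximal delay horizon - tau (an assumed convention).
    Returns (ARL1, number_of_false_alarms).
    """
    delays, false_alarms = [], 0
    for T in alarm_times:
        if T is None:
            delays.append(horizon - tau)
        elif T < tau:
            false_alarms += 1
        else:
            delays.append(T - tau)
    arl1 = sum(delays) / len(delays) if delays else float("nan")
    return arl1, false_alarms
```

For example, with `tau=10`, alarm times `[11, 13, 5]` give delays 1 and 3 plus one false alarm, so the function returns `(2.0, 1)`.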

5.2. Benchmark methods

In this section, we describe the benchmark methods that will be compared with our method and how we implement them.

The first benchmark method is the scan statistics method in [19], which is a Bayesian extension of Kulldorff's scan statistic. We choose [19] because it has high power to detect clusters and a fast runtime. In our paper, we use the R function scan_bayes_negbin() from the package scanstatistics. This function requires the population size. For a fair comparison, we do not provide additional data to scan-stat, and simply assume that the population is 100,000 for all states and all years. Because scan_bayes_negbin() can handle only one type of crime rate at a time, we apply it to the three crime rates separately and set the probability of an outbreak to a fixed value. Because the scan-statistics-based method does not provide an explicit calculation of the average run length under either the in-control or the out-of-control status, we can only use the probability of an outbreak to set the control limit so as to achieve an in-control performance similar to that of the other benchmarks.

The second benchmark method is the LASSO-based method in [36]. The main idea of [36] is to integrate the LASSO into the multivariate Exponentially Weighted Moving Average (EWMA) charting scheme. Under the assumption that the hot-spots are sparse, the LASSO model is applied to the EWMA statistics. If the Mahalanobis distance between the expected response (the LASSO estimator) and the observed values is larger than a pre-specified control limit, temporal hot-spots are detected, and the non-zero entries of the LASSO estimator are declared as spatial hot-spots. For the control limits and the penalty parameters of the LASSO-based method, we use the same criterion as for our proposed SSD-Tensor method.

The third benchmark is the dimension-reduction method of [5], which uses PCA to extract a set of uncorrelated new features that are linear combinations of the original variables. Note that [5] cannot localize the spatial hot-spots; it can only detect the temporal change-point when the PCA-projected Mahalanobis distance exceeds a pre-specified control limit, which we set using the same criterion as for our proposed SSD-Tensor method. In both our simulations and case study, we select three principal components, since they explain a sufficiently large cumulative percentage of variance (CPV).

Finally, the fourth benchmark is the traditional T2 control chart [23], with the control limit set using the same criterion as for our proposed SSD-Tensor method. Since the T2 control chart is well established, we skip its detailed description; more details can be found in [23].

5.3. Performance on hot-spot detection and localization

In this section, we compare our proposed SSD-Tensor method with four benchmark methods, focusing on the performance of hot-spot detection and localization. The four benchmark methods are the scan-statistics method proposed by [19] (denoted as ‘scan-stat’), the LASSO-based control chart proposed by [36] (denoted as ‘ZQ-LASSO’), the PCA-based control chart proposed by [5] (denoted as ‘PCA’), and the traditional Hotelling T2 control chart [23] (denoted as ‘T2’). All simulation results below are based on 1000 Monte Carlo replications.

Let us first compare the performance of hot-spot detection over the time domain, i.e., when the hot-spots occur. The criterion we use to measure detection performance is the detection delay ARL1. Because ARL1 measures the delay after the change occurs, the smaller the ARL1, the better the detection performance. The results can be found in Tables 2 and 3. Our proposed SSD-Tensor method has a small ARL1 in all scenarios, whether the global trend is stable or decreasing. This illustrates that SSD-Tensor can raise an alarm rapidly after the hot-spots occur, regardless of whether the global trend is stable. This good behavior is due to its ability to capture both the temporal consistency and the spatial sparsity of the hot-spots. For the scan-statistics method, it is hard to estimate ARL1 because the method focuses on hot-spot localization rather than sequential change-point detection, so we do not report its ARL1. ZQ-LASSO successfully detects the hot-spots when the global trend is stable, but fails to do so when the global trend is decreasing. The latter is not surprising because ZQ-LASSO is unable to separate the global trend from the local hot-spots. PCA and T2 both fail to detect the hot-spots in all scenarios within the entire simulated period, as their ARL1 equals 50. The reason for these unsatisfactory results is that PCA and T2 are designed as multivariate hypothesis tests on a global mean change, which cannot account for the non-stationary global mean trend or the sparsity of the hot-spots.

Table 2.

Scenario 1 (stable global trend mean): Comparison of hot-spot detection and localization under small and large hot-spots with four criteria: precision, recall, F-measure and ARL1.

| Methods | Precision (large shift) | Recall (large shift) | F-measure (large shift) | ARL1 (large shift) | Precision (small shift) | Recall (small shift) | F-measure (small shift) | ARL1 (small shift) |
|---|---|---|---|---|---|---|---|---|
| SSD-Tensor | 0.3210 (0.0345) | 0.9898 (0.0893) | 0.6554 (0.0599) | 1.2680 (0.3321) | 0.3217 (0.0362) | 0.9890 (0.0946) | 0.6553 (0.0634) | 2.0970 (0.8979) |
| Scan-stat | 0.3109 (0.0020) | 0.4664 (0.0030) | 0.3887 (0.0025) | – | 0.2242 (0.0301) | 0.3364 (0.0451) | 0.2803 (0.0376) | – |
| ZQ-LASSO | 0.1961 (0.0000) | 1.0000 (0.0000) | 0.5980 (0.0000) | 1.0000 (0.0000) | 0.1961 (0.0000) | 1.0000 (0.0000) | 0.5980 (0.0000) | 1.9750 (2.1291) |
| PCA | – | – | – | 50.0000 (0.0000) | – | – | – | 50.0000 (0.0000) |
| T2 | – | – | – | 50.0000 (0.0000) | – | – | – | 50.0000 (0.0000) |

Table 3.

Scenario 2 (decreasing global trend mean): Comparison of hot-spot detection and localization under small and large hot-spots with four criteria: precision, recall, F-measure and ARL1.

| Methods | Precision (large shift) | Recall (large shift) | F-measure (large shift) | ARL1 (large shift) | Precision (small shift) | Recall (small shift) | F-measure (small shift) | ARL1 (small shift) |
|---|---|---|---|---|---|---|---|---|
| SSD-Tensor | 0.3242 (0.0184) | 0.9978 (0.0085) | 0.6610 (0.0105) | 1.3490 (0.4762) | 0.3245 (0.0188) | 0.9978 (0.0085) | 0.6612 (0.0108) | 3.4120 (0.8217) |
| Scan-stat | 0.3111 (0.0007) | 0.4666 (0.0011) | 0.3889 (0.0009) | – | 0.2567 (0.0307) | 0.3851 (0.0460) | 0.3209 (0.0383) | – |
| ZQ-LASSO | 0.0000 (0.0000) | 0.0000 (0.0000) | 0.0000 (0.0000) | 50.0000 (0.0000) | 0.0000 (0.0000) | 0.0000 (0.0000) | 0.0000 (0.0000) | 50.0000 (0.0000) |
| PCA | – | – | – | 50.0000 (0.0000) | – | – | – | 50.0000 (0.0000) |
| T2 | – | – | – | 50.0000 (0.0000) | – | – | – | 50.0000 (0.0000) |

We visualize the hot-spot detection results in Figure 5, which shows how the detection delay ARL1 changes as δ increases from 0.1 to 0.5 in steps of 0.1. Because scan-stat does not report ARL1, and PCA and T2 fail to detect hot-spots in all scenarios, we plot only the ARL1 of SSD-Tensor and ZQ-LASSO. The plot shows that SSD-Tensor and ZQ-LASSO have similar detection delays when the global trend is stable. However, SSD-Tensor has much smaller detection delays than ZQ-LASSO when the global trend mean is decreasing, particularly when the magnitude of the hot-spot is small. Also, the detection delays of both methods decrease as the magnitude of the hot-spot increases, which is consistent with the intuition that larger changes are easier to detect.

Figure 5.

ARL1 under different magnitudes δ of the hot-spot. (a) ARL1 under a stable global trend, (b) ARL1 under a decreasing global trend.

Next, let us compare the hot-spot localization performance of these methods, i.e., where the hot-spots occur. To evaluate localization performance, we compute the following three criteria: (1) precision, defined as the proportion of detected hot-spots that are true hot-spots; (2) recall, defined as the proportion of true hot-spots that are correctly identified; and (3) F-measure, a single criterion that combines precision and recall by calculating their harmonic mean. Moreover, we also compare the true positive rate (TPR), true negative rate (TNR), false positive rate (FPR), and false negative rate (FNR). The localization performance in terms of precision, recall, and F-measure can be found in Tables 2 and 3, and in terms of TPR, TNR, FPR, and FNR in Tables 4 and 5. The localization performance of our proposed SSD-Tensor method is satisfactory whether the global trend is stable or decreasing. For instance, under a decreasing global trend and a large shift, our method has a precision of 0.3242 and a recall of 0.9978, which outperforms both scan-stat and ZQ-LASSO. The only weakness of SSD-Tensor is its relatively high FPR, which is consistent with our expectation, since we do not conduct a significance test on the non-zero entries of the estimated hot-spot matrix. Scan-stat has a precision very similar to that of SSD-Tensor (both are around 0.31–0.32 under a large shift), but its recall is much lower. This is because scan-stat tends to detect clustered hot-spots, which results in detecting fewer hot-spots and missing some true hot-spots. This might also explain why scan-stat has a low TPR but a high FNR. It is worth noting that the precision, recall, and F-measure might be overestimated for scan-stat: since scan-stat does not report alarm times, we record all Monte Carlo runs, even those that are false alarms. ZQ-LASSO has a precision of 0.1961 and a recall of 1.0000 when the global trend is stable, but its FPR is 1.0000.
This is because ZQ-LASSO does not assess the significance of the non-zero entries and declares all non-zero entries as hot-spots. When the global trend is decreasing, ZQ-LASSO fails to detect hot-spots, so we report its localization performance as 0. This unsatisfactory performance is due to the inability of ZQ-LASSO to separate the global trend from the local hot-spots, which matters particularly in Scenario 2 (decreasing global trend mean). PCA and T2 cannot localize hot-spots, and thus we do not report their precision, recall, F-measure, TPR, TNR, FPR, or FNR.
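The localization criteria above can all be computed from the set of true hot-spot entries and the set of detected entries. The sketch below is a minimal illustration with hypothetical function and argument names. The text defines the F-measure as the harmonic mean of precision and recall; the F-measure values in Tables 2 and 3, however, equal the arithmetic mean of the reported precision and recall (e.g., (0.3210 + 0.9898)/2 = 0.6554, whereas the harmonic mean would be about 0.48), so the sketch returns both.

```python
def localization_metrics(true_set, detected_set, universe_size):
    """Precision, recall, F-measures, TPR, TNR, FPR and FNR for hot-spot sets.

    true_set and detected_set contain entry indices (e.g., (state, type) pairs);
    universe_size is the total number of entries that could be flagged.
    """
    tp = len(true_set & detected_set)
    fp = len(detected_set - true_set)
    fn = len(true_set - detected_set)
    tn = universe_size - tp - fp - fn

    def ratio(num, den):
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)  # recall is the same quantity as TPR
    return {
        "precision": precision,
        "recall": recall,
        "f_harmonic": ratio(2 * precision * recall, precision + recall),
        "f_arithmetic": (precision + recall) / 2,
        "TPR": recall,
        "TNR": ratio(tn, tn + fp),
        "FPR": ratio(fp, fp + tn),
        "FNR": ratio(fn, fn + tp),
    }
```

For example, with 20 entries, true hot-spots `{0, 1, 2, 3}` and detections `{0, 1, 2, 4, 5, 6}`, precision is 0.5, recall 0.75, the harmonic F-measure 0.6, the arithmetic one 0.625, and FPR 3/16.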

Table 4.

Scenario 1 (stable global trend mean): Comparison of hot-spot localization under small and large hot-spots with four criteria: TPR, TNR, FPR, FNR.

| Methods | TPR (large shift) | TNR (large shift) | FPR (large shift) | FNR (large shift) | TPR (small shift) | TNR (small shift) | FPR (small shift) | FNR (small shift) |
|---|---|---|---|---|---|---|---|---|
| SSD-Tensor | 0.9898 (0.0893) | 0.4848 (0.0616) | 0.5072 (0.0630) | 0.0022 (0.0085) | 0.9890 (0.0946) | 0.4865 (0.0635) | 0.5045 (0.0648) | 0.0020 (0.0080) |
| Scan-stat | 0.4664 (0.0030) | 0.7479 (0.0007) | 0.2521 (0.0007) | 0.5336 (0.0003) | 0.3364 (0.0451) | 0.7162 (0.0110) | 0.2838 (0.0110) | 0.6636 (0.0451) |
| ZQ-LASSO | 1.0000 (0.0000) | 0.0000 (0.0000) | 1.0000 (0.0000) | 0.0000 (0.0000) | 1.0000 (0.0000) | 0.0000 (0.0000) | 1.0000 (0.0000) | 0.0000 (0.0000) |

Table 5.

Scenario 2 (decreasing global trend mean): Comparison of hot-spot localization under small and large hot-spots with four criteria: TPR, TNR, FPR, FNR.

| Methods | TPR (large shift) | TNR (large shift) | FPR (large shift) | FNR (large shift) | TPR (small shift) | TNR (small shift) | FPR (small shift) | FNR (small shift) |
|---|---|---|---|---|---|---|---|---|
| SSD-Tensor | 0.9978 (0.0085) | 0.4903 (0.0422) | 0.5097 (0.0422) | 0.0022 (0.0085) | 0.9978 (0.0085) | 0.4909 (0.0431) | 0.5091 (0.0431) | 0.0022 (0.0085) |
| Scan-stat | 0.4666 (0.0011) | 0.7480 (0.0003) | 0.2520 (0.0003) | 0.5334 (0.0011) | 0.3851 (0.0460) | 0.7281 (0.0112) | 0.2719 (0.0112) | 0.6149 (0.0460) |
| ZQ-LASSO | 0.0000 (0.0000) | 0.0000 (0.0000) | 0.0000 (0.0000) | 0.0000 (0.0000) | 0.0000 (0.0000) | 0.0000 (0.0000) | 0.0000 (0.0000) | 0.0000 (0.0000) |

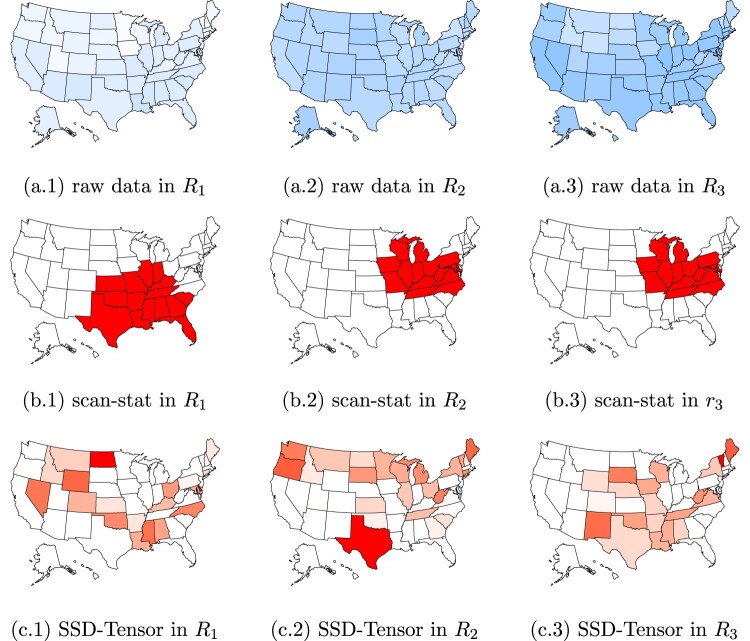

Moreover, we also visualize the hot-spot localization results in Figure 6. In the first row, the blue states are the true hot-spots (true positives), whereas the white states are the normal states (true negatives). In the second row, the red states are the hot-spots detected by scan-stat (true positives plus false positives). In the third row, the red states are the hot-spots detected by our proposed SSD-Tensor method. The shade indicates how likely a state is to be a hot-spot: the darker the red, the more likely. From Figure 6, we can see that scan-stat tends to detect clustered hot-spots, whereas there is no clear pattern in the hot-spots detected by SSD-Tensor. We acknowledge that while our proposed method detects most of the true hot-spots (the blue states), it also produces some false alarms. Such false alarms are to be expected in hot-spot localization, which is related to multiple hypothesis testing in high-dimensional data.

Figure 6.

Hot-spot detection performance of SSD-Tensor and scan-stat in Scenario 2 (decreasing global trend mean) with large hot-spots. In the first row, the blue states are the true hot-spots (true positives), whereas the white states are the normal states (true negatives). In the second row, the red states are the hot-spots detected by scan-stat (true positives plus false positives). In the third row, the red states are the hot-spots detected by our proposed SSD-Tensor method. The shade indicates how likely a state is to be a hot-spot: the darker the red, the more likely. In these figures, the three columns represent the first, second, and third categories, respectively. To match the dimensions of our motivating dataset, we use three categories corresponding to the three types of crime rates in the crime rate dataset, namely the legacy rape rate, murder and non-negligent manslaughter, and the revised rape rate.

5.4. Background fit

In this section, we illustrate that our proposed SSD-Tensor method provides a reasonably good estimate of the global trend mean. To do this, we report the Square Root of the Mean Squared Error (SMSE) of the fitted global trend mean in Table 6. We show only our proposed method, since the other baseline methods (scan-stat, ZQ-LASSO, PCA, and T2) cannot model the global trend mean. Table 6 shows that SSD-Tensor fits the background well, especially in Scenario 2 (decreasing global trend).
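The SMSE criterion is the square root of the average squared difference between the fitted and true global mean. A minimal sketch, assuming both are flattened in the same order (the function name is hypothetical):

```python
import math


def smse(fitted, truth):
    """Square root of the mean squared error between fitted and true values.

    Both arguments are flat sequences, e.g. a fitted global-mean tensor and the
    true simulated global mean, each flattened in the same order.
    """
    if len(fitted) != len(truth) or not fitted:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((f - g) ** 2 for f, g in zip(fitted, truth)) / len(fitted)
    return math.sqrt(mse)
```

For instance, `smse([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 6.0])` returns 1.0, since the only error is 2 in one of four entries. In a simulation study this value would be computed per Monte Carlo replication and then summarized across replications.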

Table 6.

SMSE in two scenarios under different magnitudes δ of the hot-spot.

| Methods | |||||

|---|---|---|---|---|---|

| Scenario 1 (stationary global trend mean) | |||||

| SSD-Tensor | 0.0075 | 0.0076 | 0.0075 | 0.0077 | 0.0077 |

| (7.1331e ) | (3.4049e ) | (8.0032e ) | (1.0799e ) | (6.9222e ) | |

| Scenario 2 (decreasing global trend mean) | |||||

| SSD-Tensor | 0.0039 | 0.0039 | 0.0039 | 0.0039 | 0.0039 |

| (1.2357e ) | (1.2647e ) | (1.1054e ) | (1.1845e ) | (1.2619e ) | |

6. Case study

In this section, we apply our proposed SSD-Tensor method to detect and localize hot-spots in the crime rate dataset described in Section 2 and compare its performance with other benchmarks.

First, we compare the detection delay of the hot-spot. For all the methods, we set the control limits to satisfy a constraint on the average run length to false alarm, via Monte Carlo simulation under the assumption that the data from the first 20 years are in control. The parameter settings and basis selection are the same as in Section 5. For our proposed SSD-Tensor method, we build a CUSUM control chart using the test statistic in Section 4, shown in Figure 7(a). From this figure, we can see that SSD-Tensor detects hot-spots in 2012. For comparison, we also apply scan-stat [see, 19], ZQ-LASSO [see, 36], PCA [see, 5] and T2 [see, 23] to the crime rate dataset and summarize their hot-spot detection results in Table 7. Note that each value in Table 7 is the first year in which the corresponding method raises an alarm. Our proposed SSD-Tensor method raises a hot-spot alarm in 2012, while the other benchmarks fail to detect any hot-spots (we do not report a hot-spot year for scan-stat, as it does not report when hot-spots occur). Although the true hot-spots of a real dataset such as the crime rate data are unknown, our numerical simulation experience suggests that 2012 is likely a hot-spot year.

Figure 7.

(a) Hot-spot detection by SSD-Tensor. Since the CUSUM statistics of 2012, 2013, and 2014 exceed the threshold (red dashed line), we declare 2012 to be the first change-point. (b) Fitted global trend mean and observed annual data. Each blue circle is an observed annual data point on the logarithmic scale, and the blue line is the fitted mean curve.

Table 7.

Detection of change-point year in crime rate dataset.

| Methods | SSD-Tensor | scan-stat | ZQ-LASSO | PCA | T2 |
|---|---|---|---|---|---|
| Year when an alarm is raised | 2012 | – | None | None | None |

Notes: ‘Year when an alarm is raised’ is the first year in which the CUSUM statistic defined in equation (10) exceeds the control limit L, where L is chosen to satisfy the average run length to false alarm constraint via Monte Carlo simulation under the assumption that the data from the first 20 years are in control.
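The control limits above are calibrated by Monte Carlo simulation. The following is a minimal sketch of one way to do this for a CUSUM chart, assuming (hypothetically) that the in-control monitoring statistics are i.i.d. standard normal; for the case study, one would instead draw from the in-control behavior of the first 20 years, for example by resampling. The target in-control ARL, the reference value `d`, and all function names are hypothetical. Because the same simulated paths are reused for every candidate limit, the estimated ARL is non-decreasing in the limit, so bisection applies.

```python
import random


def simulate_cusum_paths(n_rep, max_len, d, seed=0):
    """In-control CUSUM paths W_t = max(0, W_{t-1} + Z_t - d) with W_0 = 0,
    assuming i.i.d. standard normal monitoring statistics Z_t."""
    rng = random.Random(seed)
    paths = []
    for _ in range(n_rep):
        w, path = 0.0, []
        for _ in range(max_len):
            w = max(0.0, w + rng.gauss(0.0, 1.0) - d)
            path.append(w)
        paths.append(path)
    return paths


def run_lengths(limit, paths):
    """First time (1-based) each path exceeds limit, capped at the path length."""
    lengths = []
    for path in paths:
        rl = len(path)
        for t, w in enumerate(path, start=1):
            if w > limit:
                rl = t
                break
        lengths.append(rl)
    return lengths


def calibrate_limit(target_arl0, d, n_rep=500, max_len=2000, seed=0, tol=1e-3):
    """Bisection for a control limit whose Monte Carlo ARL0 reaches target_arl0.

    Returns (limit, estimated ARL0 at that limit).
    """
    if target_arl0 >= max_len:
        raise ValueError("max_len must exceed the target ARL0")
    paths = simulate_cusum_paths(n_rep, max_len, d, seed)  # common random numbers

    def arl0(limit):
        runs = run_lengths(limit, paths)
        return sum(runs) / len(runs)

    lo, hi = 0.0, 1.0
    while arl0(hi) < target_arl0:  # enlarge the bracket until the target is reached
        lo, hi = hi, 2.0 * hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if arl0(mid) >= target_arl0:
            hi = mid
        else:
            lo = mid
    return hi, arl0(hi)
```

The cap `max_len` bounds each simulated run, so it should be well above the target; otherwise the estimated ARL0 is biased downward.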

Next, after detecting the hot-spots, we further localize them by determining which states and which types of crime rates may lead to their occurrence. Because the baseline methods PCA and T2 can only detect when changes happen, and ZQ-LASSO fails to detect any changes, we show only the hot-spot localization by SSD-Tensor and scan-stat, visualized in Figure 8. The first row (a.1)–(a.3) of this figure shows the raw crime rates in 2012, where the darker the blue, the higher the crime rate. All three types of crime rates share a very smooth background, so it is difficult to identify the hot-spots directly from the raw data. The second row (b.1)–(b.3) and the third row (c.1)–(c.3) show the hot-spots (TP + FP) detected by scan-stat [see, 19] and SSD-Tensor, respectively. Scan-stat tends to detect clustered hot-spots, while there is no obvious pattern in the hot-spots detected by SSD-Tensor. Thus, compared with scan-stat, our proposed SSD-Tensor method seems to detect sparse hot-spots, which might be useful in practice for identifying where sudden increases in crime happen.

Figure 8.

Localization of hot-spots. (a.1)–(a.3): the raw data of the three crime rates in 2012. (b.1)–(b.3): the hot-spots detected by scan-stat. (c.1)–(c.3): the hot-spots detected by SSD-Tensor. Red states are hot-spot states, and the deeper the color, the larger the hot-spot magnitude. In each row, panels 1, 2, and 3 correspond to the legacy rape rate, murder and non-negligent manslaughter, and the revised rape rate, respectively.

Finally, we present the fitted global trend mean curve in Figure 7(b), where the blue line is the fitted mean curve and the blue circles are the observed annual data points on the logarithmic scale. Note that the observed data in 2012 are slightly higher than the fitted mean curve, which may explain the hot-spot detection in 2012. Overall, our proposed method fits the global trend well, which helps remove its effect.

7. Conclusion

In this paper, we propose the SSD-Tensor method for hot-spot detection and localization in multivariate spatio-temporal data, an important problem in many real-world applications. The main idea is to represent the multi-dimensional data as a tensor and then decompose the tensor into the global trend mean, local hot-spots, and residuals. The estimation of model parameters and hot-spots is formulated as an optimization problem that minimizes the residual sum of squares with both LASSO and fused LASSO penalties, which control the sparsity and the temporal consistency of the hot-spots, respectively. Moreover, we develop an efficient FISTA-based algorithm to solve this optimization problem for parameter estimation. Finally, we compare our proposed SSD-Tensor method with other benchmarks through Monte Carlo simulations and a case study of the crime rate dataset.

There are many opportunities to improve the algorithms and methodologies. First, it would be interesting to investigate the confounding between the global trend and local hot-spots. In our paper, we assume that they are additive, but an increasing global trend might yield more hot-spots. Second, a significance test for the non-zero entries of the estimated hot-spot matrix is a promising direction, as it could reduce the false positive rate (FPR) in localizing the hot-spots. Third, it would be useful to extend our method to missing or incomplete data. We expect it to be straightforward to combine our method with imputation when data are missing (completely) at random, but it remains an open problem when data are not missing at random. Fourth, in this paper we fix the tensor basis; it would be useful to investigate robustness to different tensor bases, or better yet, to adopt a data-driven method to learn the bases from the data. Fifth, a promising research direction is to combine our proposed SSD-Tensor method with the spatially adaptive trend filtering method in [31]. Finally, it would be interesting to derive theoretical properties of our proposed method, including sufficient or necessary conditions for the hot-spot estimates to have desirable properties.

Acknowledgments

The authors are grateful to the Associate Editor and two anonymous reviewers for their constructive comments that greatly improved the quality and presentation of this article.

Appendices.

Appendix 1. Construction of matrix

The main idea to construct matrix is to make

Here are more details. The matrix can be constructed as:

where matrix are the identity matrix. And matrix is defined as

where is the th entry in matrix .
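The equations of this appendix did not survive extraction. As an illustration only, the sketch below shows one common construction of a first-order temporal difference operator of the kind a fused LASSO penalty on temporal consistency uses: a (T-1) x T difference matrix combined with an identity matrix through a Kronecker product. It assumes the vector stacks one block per time point (time as the slowest-varying index); it is not necessarily the exact matrix of Appendix 1, and the function name is hypothetical.

```python
import numpy as np


def temporal_difference_matrix(n_time, n_other):
    """First-order differences along time for a vector that stacks n_time
    blocks of length n_other (time is the slowest-varying index).

    Row block k of the result computes x_{k+1} - x_k for every non-temporal entry.
    """
    d_time = np.zeros((n_time - 1, n_time))
    idx = np.arange(n_time - 1)
    d_time[idx, idx] = -1.0
    d_time[idx, idx + 1] = 1.0
    return np.kron(d_time, np.eye(n_other))
```

Applied to a vector that is constant over time, this operator returns zeros, and applied to a step change it returns a single non-zero block at the change, which is why an L1 penalty on its output favors temporally consistent (piecewise-constant) hot-spots.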

Appendix 2. Proof of fast calculation of via tensor algebra

Proof.

Appendix 3. Review of all benchmarks

For the scan-stat-based method, we select [19] as the representative. The goal of [19] is to find regions (collections of location indices) that have a high posterior probability of being an anomalous cluster. To this end, [19] compares the null hypothesis of no anomalous cluster with a set of alternatives, each representing a cluster in some region S. The anomalous cluster is declared to be the one with the highest posterior probability, i.e.

where the dataset consists of the crime counts at each location index. Each count is assumed to follow a Poisson distribution whose mean is the (known) population of that index multiplied by the (unknown) underlying crime rate q. To calculate the posterior probability, a hierarchical Bayesian model is used in which q is drawn from a Gamma distribution. For the selection of the prior parameters of the Gamma distribution, please see [19]. For each crime rate type j, the above procedure is repeated to find anomalous regions for all types of rates.

For LASSO-based methods, we select [36] as the representative. At a given time t, [36] builds a multivariate Exponentially Weighted Moving Average (EWMA) statistic from the data, where α is a weighting parameter in (0, 1]. For each penalty value, a LASSO estimator is derived from the penalized likelihood function, where the covariance matrix is that of the normal distribution followed by the data. A temporal change-point is detected when

where the control limit is chosen to achieve a given in-control average run length. The spatial hot-spots are localized at the non-zero entries of the LASSO estimator.
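The two building blocks of the LASSO-based benchmark, the EWMA recursion and the LASSO estimate, can be sketched as follows. This is a simplified illustration, not the full method of [36]: it assumes standardized observations with identity covariance, in which case minimizing 0.5 * ||u - mu||^2 + lam * ||mu||_1 reduces to coordinate-wise soft-thresholding, and it assumes a zero starting value for the EWMA. [36] works with a general covariance matrix. Function names are hypothetical.

```python
def ewma(observations, alpha, start=None):
    """Multivariate EWMA: U_t = alpha * y_t + (1 - alpha) * U_{t-1}.

    observations is a sequence of equal-length vectors y_1, y_2, ...; the
    starting vector U_0 defaults to zeros (an assumed in-control mean of zero).
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    u = list(start) if start is not None else [0.0] * len(observations[0])
    history = []
    for y in observations:
        u = [alpha * yi + (1.0 - alpha) * ui for yi, ui in zip(y, u)]
        history.append(u)
    return history


def soft_threshold(u, lam):
    """Minimizer of 0.5 * ||u - mu||^2 + lam * ||mu||_1, i.e. the LASSO
    estimate when the covariance matrix is the identity."""
    return [(abs(x) - lam if abs(x) > lam else 0.0) * (1.0 if x >= 0 else -1.0) for x in u]


def flagged_entries(mu_hat):
    """Indices of the non-zero entries, declared as spatial hot-spots."""
    return [i for i, m in enumerate(mu_hat) if m != 0.0]
```

For example, `ewma([[1.0, 0.0], [1.0, 2.0]], 0.5)` gives `[[0.5, 0.0], [0.75, 1.0]]`, and soft-thresholding `[0.75, 1.0, -2.0, 0.1]` at 0.5 gives `[0.25, 0.5, -1.5, 0.0]`, so entries 0, 1 and 2 would be flagged.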

For the dimension-reduction methods, we select [5] as the representative. The main tool of this method is PCA, which defines a linear mapping from the original variables of the dataset to a set of uncorrelated variables. [5] cannot localize the spatial hot-spots; it can only detect the temporal change-point when

where the control limit is chosen to achieve a given in-control average run length. The loading matrix is derived from the eigendecomposition of the covariance matrix of the data: its columns are the loading vectors, and the accompanying diagonal matrix contains the eigenvalues in descending order. The reduced loading matrix contains only the first k columns of the full loading matrix, where k is the number of components selected. The selection criterion is the cumulative percentage of variance (CPV), and in our case, we select k = 3. Also, we select the traditional T2 control chart [23] as a benchmark. Because the T2 control chart is a well-established method, we omit its detailed description in this paper; details can be found in [23].
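The PCA-based statistic and the classical Hotelling T2 statistic can be sketched as below. This is a minimal illustration, assuming phase-I in-control data are available to estimate the mean and covariance; the CPV threshold is a hypothetical input (the text states only that k = 3 components were retained), and function names are hypothetical. With all components retained, the PCA statistic coincides with Hotelling's T2.

```python
import numpy as np


def fit_pca_monitor(phase1, cpv=None, k=None):
    """Estimate the in-control mean, covariance and leading principal components.

    Either k (number of components) or cpv (target cumulative percentage of
    variance, as a fraction in (0, 1]) must be given.
    """
    x = np.asarray(phase1, dtype=float)
    mean = x.mean(axis=0)
    cov = np.cov(x, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]  # reorder to descending
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    if k is None:
        if cpv is None:
            raise ValueError("give either k or cpv")
        share = np.cumsum(eigvals) / eigvals.sum()
        k = int(np.searchsorted(share, cpv)) + 1  # smallest k whose CPV reaches cpv
        k = min(k, len(eigvals))
    return mean, eigvecs[:, :k], eigvals[:k], cov


def pca_t2(y, mean, loadings, eigvals):
    """T2 on the first k principal-component scores."""
    scores = loadings.T @ (np.asarray(y, dtype=float) - mean)
    return float(np.sum(scores ** 2 / eigvals))


def hotelling_t2(y, mean, cov):
    """Classical Hotelling T2 (Mahalanobis distance to the in-control mean)."""
    diff = np.asarray(y, dtype=float) - mean
    return float(diff @ np.linalg.solve(cov, diff))
```

Either statistic is then compared with a control limit chosen, as described above, to achieve a given in-control average run length.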

Appendix 4. Choice of in Algorithm 1

For the choice of this constant, the theoretical value can be set as the Lipschitz constant of the gradient of the smooth part of the objective. In practice, researchers often use the maximal eigenvalue of the Hessian matrix of the smooth part as this fixed constant [see, 2], i.e., the maximal eigenvalue of the matrix defined in Section 3.2. Please note that this value is fixed throughout our algorithm and guarantees its convergence.
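The maximal eigenvalue mentioned above can be computed with power iteration, a standard method swapped in here for illustration (the text does not say how the eigenvalue is obtained). The sketch assumes the Hessian is available as a symmetric positive semidefinite matrix given as a list of rows; the matrix of Section 3.2 is not reproduced here, and the function name is hypothetical. FISTA then uses the reciprocal of this value as its step size.

```python
import math
import random


def max_eigenvalue(matrix, n_iter=500, tol=1e-10, seed=0):
    """Power iteration for the largest eigenvalue of a symmetric PSD matrix."""
    n = len(matrix)
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(n)]  # positive random starting vector
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    lam = 0.0
    for _ in range(n_iter):
        w = [sum(row[j] * v[j] for j in range(n)) for row in matrix]  # w = A v
        new_lam = sum(a * b for a, b in zip(v, w))  # Rayleigh quotient (v is unit norm)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return 0.0
        v = [x / norm for x in w]
        if abs(new_lam - lam) <= tol * max(1.0, abs(new_lam)):
            return new_lam
        lam = new_lam
    return lam
```

For example, for the diagonal matrix `[[2.0, 0.0], [0.0, 5.0]]` the result is 5 up to the tolerance, so the corresponding step size would be 1/5. For large problems one would apply the matrix implicitly rather than forming it, since power iteration only needs matrix-vector products.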

For choice of , a simple choice to ensure would be , where is the identity matrix and with denoting the operator norm of matrix [see, 35]. The definition of matrix can be found in Appendix 1 in our paper. Please note that is fixed throughout our algorithm and guarantees the convergence of the algorithm.

For the choice of ρ, it has been proved that any choice of ρ leads to convergence of the algorithm, but a good choice of ρ can yield faster convergence [see, 35]. In practice, a good strategy is to start with an initial value of ρ and update it at each iteration. The updating rule is:

where the explicit forms of the quantities involved can be found in (6). The two residual norms measure dual and primal feasibility. In practice, the tolerance can be set according to the desired precision. The two balancing parameters are set to 2 and 10, as suggested by [3]. More details about the setting of ρ can be found in Section 2.3 of [35] and in [3].
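The updating rule referred to above is not reproduced in this text. The sketch below shows the residual-balancing scheme described in [3], under the assumption that the values 10 and 2 play the roles of the residual-ratio threshold mu and the multiplicative factor tau: increase ρ when the primal residual dominates, decrease it when the dual residual dominates, and otherwise keep it. The function name is hypothetical.

```python
def update_rho(rho, primal_res_norm, dual_res_norm, mu=10.0, tau=2.0):
    """Residual-balancing update of the ADMM penalty parameter rho."""
    if primal_res_norm > mu * dual_res_norm:
        return rho * tau  # primal residual too large: penalize constraint violation more
    if dual_res_norm > mu * primal_res_norm:
        return rho / tau  # dual residual too large: relax the penalty
    return rho
```

For example, `update_rho(1.0, 100.0, 1.0)` returns 2.0 and `update_rho(1.0, 1.0, 100.0)` returns 0.5. If the scaled form of ADMM is used, the scaled dual variable must be rescaled by the ratio of the old to the new ρ whenever ρ changes, as noted in [3].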

Funding Statement

H. Yan's research was supported in part by NSF grant [grant number DMS-1830363], S. E. Holte's research was supported in part by NSF grant DMS [grant number DMS-1830372], and University of Washington / Fred Hutch Center for AIDS Research, an NIH-funded program under award number [AI027757]. Y. Zhao and Y. Mei's research was supported in part by NSF [grant numbers DMS-1830344 and DMS-2015405], and in part by the National Center for Advancing Translational Sciences of the National Institutes of Health under Award Number UL1TR000454.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- 1. Bakshi B.R., Multiscale PCA with application to multivariate statistical process monitoring, AIChE J. 44 (1998), pp. 1596–1610.

- 2. Beck A. and Teboulle M., A fast iterative shrinkage-thresholding algorithm for linear inverse problems, SIAM J. Imaging Sci. 2 (2009), pp. 183–202.

- 3. Boyd S., Parikh N., and Chu E., Distributed optimization and statistical learning via the alternating direction method of multipliers, Now Publishers Inc, 2011.

- 4. Daubechies I., Defrise M., and De Mol C., An iterative thresholding algorithm for linear inverse problems with a sparsity constraint, Commun. Pure Appl. Math. 57 (2004), pp. 1413–1457.

- 5. De Ketelaere B., Hubert M., and Schmitt E., Overview of PCA-based statistical process-monitoring methods for time-dependent, high-dimensional data, J. Qual. Technol. 47 (2015), pp. 318–335.

- 6. Fang X., Paynabar K., and Gebraeel N., Image-based prognostics using penalized tensor regression, Technometrics 61 (2019), pp. 369–384.

- 7. Hawkins D.M., Regression adjustment for variables in multivariate quality control, J. Qual. Technol. 25 (1993), pp. 170–182.

- 8. He B. and Yuan X., On non-ergodic convergence rate of Douglas–Rachford alternating direction method of multipliers, Numer. Math. 130 (2015), pp. 567–577.

- 9. Hu K. and Yuan J., Batch process monitoring with tensor factorization, J. Process Control 19 (2009), pp. 288–296.

- 10. Kolda T.G. and Bader B.W., Tensor decompositions and applications, SIAM Rev. 51 (2009), pp. 455–500.

- 11. Kulldorff M., A spatial scan statistic, Commun. Stat. Theory Methods 26 (1997), pp. 1481–1496.

- 12. Kulldorff M., Prospective time periodic geographical disease surveillance using a scan statistic, J. R. Stat. Soc. Ser. A 164 (2001), pp. 61–72.

- 13. Liu J., Yuan L., and Ye J., An efficient algorithm for a class of fused lasso problems, Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, ACM, 2010, pp. 323–332.

- 14. Liu R.Y., Control charts for multivariate processes, J. Am. Stat. Assoc. 90 (1995), pp. 1380–1387.

- 15. Lorden G., Procedures for reacting to a change in distribution, Ann. Math. Stat. 42 (1971), pp. 1897–1908.

- 16. Louwerse D. and Smilde A., Multivariate statistical process control of batch processes based on three-way models, Chem. Eng. Sci. 55 (2000), pp. 1225–1235.

- 17. Naus J.I., Clustering of Random Points in Line and Plane, Harvard University Press, 1963.

- 18. Neill D.B., Moore A.W., Sabhnani M., and Daniel K., Detection of emerging space-time clusters, Proceedings of the 11th ACM SIGKDD International Conference on Knowledge Discovery in Data Mining, 2005, pp. 218–227.

- 19. Neill D.B., Moore A.W., and Cooper G.F., A Bayesian spatial scan statistic, in Advances in Neural Information Processing Systems, 2006, pp. 1003–1010.

- 20. Page E.S., Continuous inspection schemes, Biometrika 41 (1954), pp. 100–115.

- 21. Paynabar K., Jin J., and Pacella M., Monitoring and diagnosis of multichannel nonlinear profile variations using uncorrelated multilinear principal component analysis, IIE Trans. 45 (2013), pp. 1235–1247.

- 22. Paynabar K., Zou C., and Qiu P., A change-point approach for Phase-I analysis in multivariate profile monitoring and diagnosis, Technometrics 58 (2016), pp. 191–204.

- 23. Qiu P., Introduction to Statistical Process Control, Chapman and Hall/CRC, 2013.

- 24. Šaltytė Benth J. and Šaltytė L., Spatial-temporal model for wind speed in Lithuania, J. Appl. Stat. 38 (2011), pp. 1151–1168.

- 25. Silverman B.W., Density Estimation for Statistics and Data Analysis, Vol. 26, CRC Press, 1986.

- 26. Tango T., Takahashi K., and Kohriyama K., A space–time scan statistic for detecting emerging outbreaks, Biometrics 67 (2011), pp. 106–115.

- 27. Tibshirani R., Regression shrinkage and selection via the lasso, J. R. Stat. Soc. Ser. B 58 (1996), pp. 267–288.

- 28. Tibshirani R., Saunders M., Rosset S., Zhu J., and Knight K., Sparsity and smoothness via the fused lasso, J. R. Stat. Soc. Ser. B 67 (2005), pp. 91–108.

- 29. Tibshirani R.J. and Taylor J., The solution path of the generalized lasso, Ann. Stat. 39 (2011), pp. 1335–1371.

- 30. Wahlberg B., Boyd S., Annergren M., and Wang Y., An ADMM algorithm for a class of total variation regularized estimation problems, IFAC Proc. 45 (2012), pp. 83–88.

- 31. Wang Y.X., Sharpnack J., Smola A.J., and Tibshirani R.J., Trend filtering on graphs, J. Mach. Learn. Res. 17 (2016), pp. 3651–3691.

- 32. Yan H., Grasso M., Paynabar K., and Colosimo B.M., Real-time detection of clustered events in video-imaging data with applications to additive manufacturing, IISE Trans. (In Review). Available at http://arxiv.org/abs/2004.10977.

- 33. Yan H., Paynabar K., and Shi J., Image-based process monitoring using low-rank tensor decomposition, IEEE Trans. Autom. Sci. Eng. 12 (2014), pp. 216–227.

- 34. Yan H., Paynabar K., and Shi J., Real-time monitoring of high-dimensional functional data streams via spatio-temporal smooth sparse decomposition, Technometrics 60 (2018), pp. 181–197.

- 35. Zhu Y., An augmented ADMM algorithm with application to the generalized lasso problem, J. Comput. Graph. Stat. 26 (2017), pp. 195–204.

- 36. Zou C. and Qiu P., Multivariate statistical process control using LASSO, J. Am. Stat. Assoc. 104 (2009), pp. 1586–1596.

- 37. Zou C., Tsung F., and Wang Z., Monitoring profiles based on nonparametric regression methods, Technometrics 50 (2008), pp. 512–526.

- 38. Zou C., Ning X., and Tsung F., LASSO-based multivariate linear profile monitoring, Ann. Oper. Res. 192 (2012), pp. 3–19.