Abstract

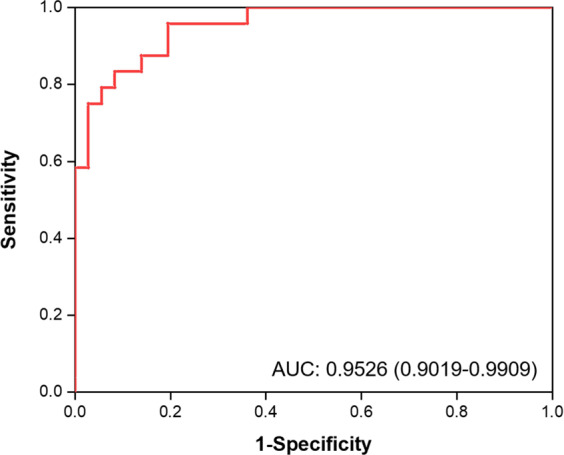

Lung cancer is one of the leading causes of cancer-related death worldwide. Cytology plays an important role in the initial evaluation and diagnosis of patients with lung cancer. However, because cytological interpretation is subjective and diagnostic expertise varies between regions, liquid-based cytological diagnosis shows low consistency, which leads to a certain proportion of misdiagnoses and missed diagnoses. In this study, we developed a weakly supervised deep learning method based on a deep convolutional neural network (DCNN) to classify benign and malignant cells in lung cytological images. A total of 404 effusion cytology specimens from Shanghai Pulmonary Hospital were investigated, of which 266, 78 and 60 cases were used as the training, validation and test sets, respectively. The proposed method was evaluated on the 60 whole-slide images (WSIs) of lung cancer pleural effusion specimens in the test set. In classifying malignant versus benign (or normal) lesions, the method achieved an accuracy of 91.67%, a sensitivity of 87.50% and a specificity of 94.44%. The area under the receiver operating characteristic (ROC) curve (AUC) was 0.9526 (95% confidence interval (CI): 0.9019–0.9909). In comparison, the average accuracies of the senior and junior cytopathologists were 98.34% and 83.34%, respectively. The proposed deep learning method may in future assist pathologists with different levels of experience in diagnosing cancer cells on cytological pleural effusion images.

Subject terms: Cancer imaging, Translational research

Introduction

Lung cancer is one of the leading causes of cancer-related death worldwide1. With improvements in computed tomography screening, small lesions can now be detected, allowing more patients to be diagnosed and cured at an early stage. However, many patients are still diagnosed at a late stage, when the optimal window for surgical treatment has already passed2,3. How to treat this group of patients precisely and improve their disease-free and overall survival is key to the management of advanced lung cancer4.

Patients with advanced lung cancer are often in poor physical condition and can hardly tolerate invasive examinations, because their large tumor burden makes bleeding and other complications likely during the procedure. It is therefore of great benefit to patients to diagnose, classify (e.g. to distinguish between small cell and non-small cell carcinomas) and stage tumors through minimally invasive approaches, which remains an active research direction in precision therapy5,6. Although histological biopsy and the liquid-based cytological test (LCT) are both minimally invasive, LCT is even less invasive, since specimens can be obtained from sputum, pleural effusion, endobronchial ultrasound-guided transbronchial needle aspiration, bronchoscopic brushing, bronchoalveolar lavage fluid, etc.7,8

Pleural effusion commonly occurs in patients with advanced lung cancer, especially lung adenocarcinoma, for whom LCT is often the first-line diagnostic test to determine tumor stage and to obtain a large number of tumor cells for further molecular examination9–11. Although many patients with advanced lung cancer are diagnosed cytologically from pleural effusion aspirates in our daily practice, differentiating cancer cells from reactive mesothelial cells remains a challenging problem in pleural effusion cytology12. Cell blocks and immunohistochemistry (IHC) are not feasible for every cytological specimen because of the limited amount of material, so cytology slides are often the only sample available for diagnosis. In these cases, cytological morphology is decisive. However, subjective assessment of cytological morphology may result in low interobserver consistency, especially in ambiguous cases, even among senior pathologists13. Improving the discrimination between tumor cells and other cells can support clinical decision making and greatly reduce both physical and economic burdens14–16.

Artificial intelligence (AI) has been widely used in modern medicine17,18 and can help pathologists make more accurate diagnoses19–22. Using deep convolutional neural networks (DCNNs), AI can provide a systematic way to evaluate cells and reach a final result23–26. We focus on applications of AI to cytology because cytology not only plays an important role in pathology but also has the potential to resolve many clinical problems27. Conventional predictive AI models used in decision support systems for medical image analysis rely on detailed annotations and manually engineered feature extraction, which are time-consuming and require the advanced skills of cytopathologists or other experts28,29. Weakly supervised deep learning avoids this need for large-scale annotation: it requires no region- or cell-level annotation, only a label for each sample, to train and validate the model30–32.

In this study, we developed a weakly supervised deep learning method (the “Aitrox AI model”) to classify benign and malignant cases from lung cytological images at the whole-slide image (WSI) level. To investigate its diagnostic performance, the Aitrox AI model was compared with junior cytopathologists (resident pathologists who had studied thoracic cytology for less than 1 year) and senior cytopathologists (attending pathologists who had independently issued cytological reports for more than 3 years), using the patients’ pathological reports as the gold standard.

Materials and methods

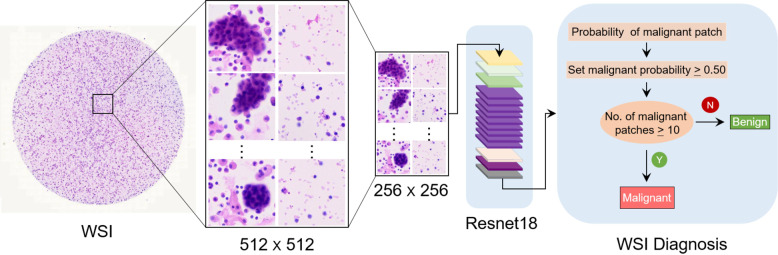

This study was approved by the Institutional Ethics Committee of Shanghai Pulmonary Hospital (approval no. K19-127Y). The cell classification method proposed in this study is shown in Fig. 1. Patches were cropped from cytological WSIs, and the Aitrox AI model was then used to classify those patches as benign or malignant.

Fig. 1. Overview of the proposed deep learning framework presented in this study.

WSIs of lung cancer pleural effusion specimens were cropped into small patches, which were classified as benign or malignant by a ResNet18 deep convolutional neural network. WSI, whole-slide image.

Materials

In this study, we retrospectively reviewed benign and pulmonary adenocarcinoma pleural effusion cases diagnosed by interventional fine-needle aspiration at Shanghai Pulmonary Hospital between March 2018 and January 2020. For patients with multiple slides, only the single slide of highest quality was manually selected for downstream analysis. The final dataset consisted of 234 benign and 170 malignant slides; 28% were diagnosed on cytological morphology alone, whereas 72% were diagnosed on cytological morphology combined with a matched cell block and IHC. Clinical follow-up data were not included for these cases. All slides were checked by a senior cytopathologist (XFX) and stored in the online digital slide viewer Microscope Image Information System (Shanghai Aitrox Technology Corporation Limited, Shanghai, China). Cytological specimens were prepared by the LCT method and stained with hematoxylin and eosin (H&E). LCT prepares cytological slides by exploiting differences between human cell types (larger cells settle onto the glass more rapidly during sedimentation). In pleural effusion cytology, tumor cells are generally larger than normal cells, which makes them easily recognizable by pathologists. Specimens were prepared according to the manufacturer’s protocol (CytoRich Non-gyn, BD), except that slides were stained with H&E, as in our daily practice.

Liquid-based cytology greatly facilitates the digitization of pathology because mucus and red blood cells (which are often present in traditional smears) are removed during sample preparation, and the remaining cells are deposited on the slide as a thin layer. These flat, high-quality slides greatly improve the efficiency of WSI scanning33. The WSIs of the 234 benign and 170 malignant slides were acquired with a digital slide scanner using a 40× objective at a resolution of 0.25 µm/pixel and saved in SVS format according to the manufacturer’s protocol (EasyScan 6, Motic Inc., Xiamen, China). Six slides were excluded during quality control because they could not be scanned adequately (e.g. out-of-focus blur).

Data distribution

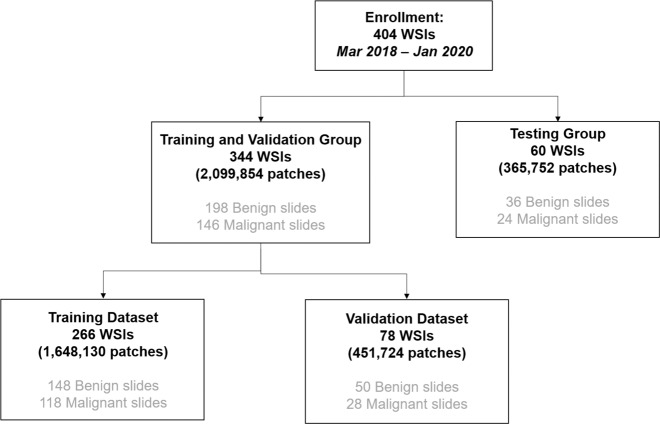

A CONSORT diagram of data enrollment and allocation is presented in Fig. 2. Taking the distribution of malignant and benign cases into account, the 404 WSIs were randomly allocated into training, validation and test sets, which were used to train the models, select the best model and evaluate its performance, respectively34,35. Because WSIs are too large to be input directly into a neural network, all WSIs were cropped into small patches. The training set comprised 266 slides (1,648,130 patches), the validation set 78 slides (451,724 patches) and the test set 60 slides (365,752 patches). Thus, 344 slides were used for model construction: the training set to fit the AI model and the validation set to optimize its accuracy.

Fig. 2. CONSORT diagram of the data enrolled from Shanghai Pulmonary Hospital between March 2018 and January 2020.

Enrolled 404 WSIs were randomly allocated into training (266 WSIs), validation (78 WSIs) and testing (60 WSIs) datasets. WSI, whole slide image.
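The allocation can be illustrated with a short Python sketch. The text says only that slides were allocated randomly with regard to the malignancy distribution; the sketch assumes a class-aware random split that draws exactly the per-split malignant/benign slide counts listed in Table 1. The slide IDs, function name and seed are hypothetical.

```python
import random

# Per-split slide counts by class, taken from Table 1.
SPLIT_COUNTS = {
    "train": {"malignant": 118, "benign": 148},
    "val": {"malignant": 28, "benign": 50},
    "test": {"malignant": 24, "benign": 36},
}


def class_aware_split(slide_labels, split_counts, seed=0):
    """Randomly assign slides to splits with a fixed number of slides per class.

    slide_labels: dict mapping a (hypothetical) slide ID to "malignant" or "benign".
    Returns a dict mapping each split name to a list of slide IDs.
    """
    rng = random.Random(seed)
    # Group slide IDs by class, then shuffle each group independently.
    by_class = {}
    for slide_id, label in sorted(slide_labels.items()):
        by_class.setdefault(label, []).append(slide_id)
    for ids in by_class.values():
        rng.shuffle(ids)

    splits = {name: [] for name in split_counts}
    for label, ids in by_class.items():
        start = 0
        for name, counts in split_counts.items():
            n = counts.get(label, 0)
            splits[name].extend(ids[start:start + n])
            start += n
        if start != len(ids):
            raise ValueError(f"counts for {label!r} do not sum to {len(ids)}")
    return splits


# Hypothetical IDs for the 170 malignant and 234 benign slides.
labels = {f"M{i:03d}": "malignant" for i in range(170)}
labels.update({f"B{i:03d}": "benign" for i in range(234)})
splits = class_aware_split(labels, SPLIT_COUNTS, seed=42)
print({name: len(ids) for name, ids in splits.items()})
# {'train': 266, 'val': 78, 'test': 60}
```

Shuffling within each class before slicing keeps the split random while guaranteeing the class composition of every set.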

Image preparation

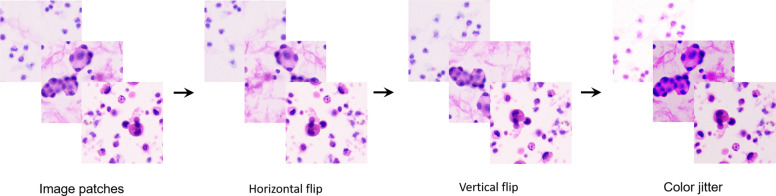

To construct the image dataset for the DCNN, non-overlapping patches of 512 × 512 pixels were first cropped from the original WSIs and then resized to 256 × 256 pixels as model input. The pathologists reviewed the WSIs; the number of patches per slide depended on the size of the sample on the slide, with an average of 6104. Before data augmentation, 1,648,130 (754,622 malignant/893,508 benign) and 451,724 (178,392 malignant/273,332 benign) patch images were obtained for the training and validation sets, respectively. As shown in Fig. 3, the patch images contained various kinds of cells, and data augmentation (random horizontal flipping, random vertical flipping and color jitter) was used to generate more diverse images and improve the generalizability of the model. After augmentation, there were on average 24,417 patches per slide. In total, 6,592,520 (3,018,488 malignant/3,574,032 benign) and 1,806,896 (713,568 malignant/1,093,328 benign) augmented patch images were prepared for training and validation, respectively.
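A minimal Python/NumPy sketch of the patch generation step is shown below. It assumes that partial tiles at the slide borders are discarded, that no background filtering is applied, and that the 512 → 256 resizing is done by 2 × 2 mean pooling; none of these details is reported, and the helper names are hypothetical. At the stated scan resolution of 0.25 µm/pixel, each 512-pixel patch covers a 128 µm × 128 µm field.

```python
import numpy as np

TILE = 512           # patch size cropped from the WSI, in pixels (from the text)
INPUT_SIZE = 256     # network input size, in pixels (from the text)
UM_PER_PIXEL = 0.25  # scan resolution at 40x (from the text)


def tile_origins(width, height, tile=TILE):
    """Top-left (x, y) corners of non-overlapping tile x tile patches.

    Assumption: partial tiles at the right and bottom borders are dropped.
    """
    return [(x, y)
            for y in range(0, height - tile + 1, tile)
            for x in range(0, width - tile + 1, tile)]


def downsample_2x(patch):
    """Resize an (H, W, C) uint8 patch to (H/2, W/2, C) by 2 x 2 mean pooling (assumed method)."""
    h, w, c = patch.shape
    blocks = patch.reshape(h // 2, 2, w // 2, 2, c).astype(np.float32)
    return np.round(blocks.mean(axis=(1, 3))).astype(np.uint8)


print(TILE * UM_PER_PIXEL)  # 128.0 (micrometres per patch side)

# Hypothetical 2048 x 1536-pixel region: 4 x 3 = 12 patches.
origins = tile_origins(2048, 1536)
print(len(origins))  # 12

region = np.random.default_rng(0).integers(0, 256, size=(1536, 2048, 3), dtype=np.uint8)
x, y = origins[0]
patch = downsample_2x(region[y:y + TILE, x:x + TILE])
assert patch.shape == (INPUT_SIZE, INPUT_SIZE, 3)
```

Mean pooling is used here only because the factor is exactly two; any standard interpolation (e.g. bilinear or area resampling) would serve the same purpose.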

Fig. 3. Generation and augmentation of patch images.

Image patches were augmented by horizontal flip, vertical flip and color jitter.
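Every augmented count reported above is exactly four times the corresponding pre-augmentation count (for example, 4 × 1,648,130 = 6,592,520), which is consistent with each patch contributing four images. The NumPy sketch below assumes, hypothetically, that these four are the original patch plus one horizontally flipped, one vertically flipped and one color-jittered copy, with brightness and contrast jittered within ±10%; the paper does not report the exact scheme or ranges, and the function names are illustrative.

```python
import numpy as np

# Hypothetical jitter ranges (+/-10%); the study does not report them.
MAX_BRIGHTNESS = 0.1
MAX_CONTRAST = 0.1


def color_jitter(patch, rng):
    """Randomly scale brightness, then contrast around the mean, of a uint8 RGB patch."""
    img = patch.astype(np.float32)
    img *= 1.0 + rng.uniform(-MAX_BRIGHTNESS, MAX_BRIGHTNESS)  # brightness
    mean = img.mean()
    img = (img - mean) * (1.0 + rng.uniform(-MAX_CONTRAST, MAX_CONTRAST)) + mean  # contrast
    return np.clip(np.round(img), 0, 255).astype(np.uint8)


def augment(patch, rng):
    """Return four images per patch (assumed scheme): original, two flips, color jitter."""
    return [
        patch,
        patch[:, ::-1],  # horizontal flip: mirror left-right (width axis)
        patch[::-1, :],  # vertical flip: mirror top-bottom (height axis)
        color_jitter(patch, rng),
    ]


# Each reported augmented count is exactly four times its pre-augmentation count.
REPORTED = [(1_648_130, 6_592_520), (754_622, 3_018_488), (893_508, 3_574_032),
            (451_724, 1_806_896), (178_392, 713_568), (273_332, 1_093_328)]
assert all(after == 4 * before for before, after in REPORTED)

rng = np.random.default_rng(0)
patch = rng.integers(0, 256, size=(256, 256, 3), dtype=np.uint8)
variants = augment(patch, rng)
print(len(variants))  # 4
```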

DCNN model training

The weakly supervised WSI classification model relies on multiple instance learning (MIL). The slide-level diagnosis assigns the same weak label to all patches within a given WSI: if a slide is malignant, at least one of its patches contains malignant cells; if a slide is benign, all of its patches are benign and free of malignant cells31. The ResNet18 architecture proposed by He et al.36 was used to construct the classification models in this study. Model training followed a two-stage approach. In the first stage, a classifier was trained with patch images and their corresponding weak labels, where the weak label of a patch was defined as the label of the WSI containing it. All patches in the training set were then scored by this classifier, whose weights were initialized from pretraining on the ImageNet dataset37. Within each slide, the patches were sorted by predicted malignant probability from high to low, and the ten patches with the highest probabilities were selected for the training set, each inheriting the weak label of its WSI. In the traditional MIL method, n (the number of highest-probability patches selected) is usually set to 1. However, since WSIs of malignant pleural effusion usually contain more than 10 malignant cells, n was set to 10 in this study to obtain more training data, which aids convergence and helps prevent overfitting. In addition, to balance malignant and benign samples, ten additional patches were randomly selected from each negative WSI and added to the training set. In the second stage, the classifier was updated with the training data obtained in the first stage.
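The top-k selection step of this MIL scheme can be sketched in Python as follows. It takes the first-stage malignant probabilities of the patches in each slide, keeps the k = 10 highest-scoring patches with the slide's weak label, and adds k random patches from each benign slide. Drawing the random benign patches only from outside the top k is an assumption, as are the data layout and the function name.

```python
import random


def select_training_patches(slides, k=10, seed=0):
    """Build the second-stage training set from first-stage patch scores.

    slides: list of dicts with keys
        "label":  1 for a malignant slide, 0 for a benign slide (the weak label)
        "scores": list of (patch_id, malignant_probability) from the stage-1 classifier
    Returns a list of (patch_id, weak_label) pairs.
    """
    rng = random.Random(seed)
    selected = []
    for slide in slides:
        ranked = sorted(slide["scores"], key=lambda item: item[1], reverse=True)
        # Top-k patches by predicted malignant probability inherit the slide's label.
        selected.extend((pid, slide["label"]) for pid, _ in ranked[:k])
        if slide["label"] == 0:
            # Benign slides contribute k extra random patches to balance the classes
            # (assumption: drawn from the patches outside the top k).
            rest = ranked[k:]
            extra = rng.sample(rest, min(k, len(rest)))
            selected.extend((pid, 0) for pid, _ in extra)
    return selected


# Toy example: one malignant and one benign slide with 50 scored patches each.
slides = [
    {"label": 1, "scores": [(f"m{i}", i / 100) for i in range(50)]},
    {"label": 0, "scores": [(f"b{i}", i / 200) for i in range(50)]},
]
train_set = select_training_patches(slides, k=10)
print(len(train_set))  # 30
```

For benign slides the top-k patches are the hardest negatives (the ones the classifier finds most suspicious), so selecting them alongside random negatives exposes the model to both difficult and typical benign patterns.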

Results

This study aimed to distinguish malignant from benign samples. Table 1 shows the baseline characteristics of the patients in the three datasets. The training and test sets were balanced in most characteristics: the p-values between the training and test sets for age and sex were 0.1307 and 0.3837, respectively, both greater than 0.05, indicating no significant difference in distribution between the two sets.

Table 1.

Baseline characteristics of the patients in training, validation and testing groups.

| Characteristics | Total (n = 404) | Training (n = 266) | Validation (n = 78) | Test (n = 60) | P value |
|---|---|---|---|---|---|
| Age, median (range), yr | 66 (21–97) | 65 (21–69) | 65 (21–91) | 65 (22–97) | 0.1307 |
| Male, No. (%) | 265 (65.59%) | 174 (65.41%) | 54 (69.23%) | 37 (61.67%) | 0.3837 |
| Histopathologic & clinical final diagnosis | | | | | |
| Malignant lesions | 170 (42.08%) | 118 (44.36%) | 28 (35.90%) | 24 (40.00%) | |
| Benign lesions | 234 (57.92%) | 148 (55.64%) | 50 (64.10%) | 36 (60.00%) | |

The model had an area under the receiver operating characteristic curve (AUC) of 0.9526 with a 95% confidence interval (CI) of 0.9019–0.9909 (Fig. 4). If a patch has a malignancy probability greater than 0.50, it is considered a malignant patch. If a specific WSI has more than 10 malignant patches, it is classified as a malignant slide (Fig. 1).
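The slide-level decision rule stated above translates directly into code. The function name and return format are hypothetical; note that both comparisons are strict, so a patch scoring exactly 0.50 is not counted as malignant and a slide with exactly 10 malignant patches is classified as benign.

```python
def classify_slide(patch_probs, patch_threshold=0.5, min_malignant_patches=10):
    """Aggregate patch probabilities into a slide label using the rule in the text.

    A patch is malignant if its probability is greater than patch_threshold;
    the slide is malignant if it has more than min_malignant_patches such patches.
    Returns (slide_label, number_of_malignant_patches).
    """
    n_malignant = sum(p > patch_threshold for p in patch_probs)
    label = "malignant" if n_malignant > min_malignant_patches else "benign"
    return label, n_malignant


print(classify_slide([0.9] * 11 + [0.2] * 100))  # ('malignant', 11)
print(classify_slide([0.9] * 10 + [0.5] * 5))    # ('benign', 10)
```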

Fig. 4. Receiver operating characteristic (ROC) curve of the AI model, with an AUC of 0.9526 (95% CI: 0.9019–0.9909).

AUC, area under the receiver operating characteristic curve.
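The paper does not state which slide-level score was used to draw the ROC curve or how the 95% CI was obtained. As one common option, the sketch below computes the AUC through its Mann-Whitney interpretation (the probability that a randomly chosen malignant slide scores higher than a randomly chosen benign one) and a percentile bootstrap CI over slides. It assumes one continuous malignancy score per slide; DeLong's method would be an alternative for the CI. Function names are hypothetical.

```python
import random


def auc(labels, scores):
    """ROC AUC as P(score of a random positive > score of a random negative); ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one malignant and one benign slide")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_auc_ci(labels, scores, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the AUC, resampling slides with replacement."""
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        resampled = [labels[i] for i in idx]
        if 0 < sum(resampled) < n:  # AUC is undefined if a resample has only one class
            stats.append(auc(resampled, [scores[i] for i in idx]))
    stats.sort()
    return stats[int(alpha / 2 * n_boot)], stats[int((1 - alpha / 2) * n_boot) - 1]


print(auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```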

The slide-level classifier was tested on 60 slides and achieved 91.67% accuracy, 87.50% sensitivity and 94.44% specificity, with an AUC of 0.9526 (95% CI: 0.9019–0.9909). Its performance was compared with that of two senior and two junior cytopathologists, who reviewed the test set at the WSI level and gave a binary result (malignant or benign) for each WSI. All outputs were evaluated against the gold standard (the pathological report). The accuracies of the two senior cytopathologists (P1 and P2; P2 was an expert cytopathologist) and the two junior cytopathologists (P3 and P4) were 96.67%, 100.00%, 81.67% and 85.00%, respectively.
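With 24 malignant and 36 benign test slides, the reported figures fix the AI model's confusion matrix: 87.50% sensitivity corresponds to 21 of 24 malignant slides detected and 94.44% specificity to 34 of 36 benign slides correctly identified, i.e. 3 false negatives and 2 false positives. The short Python check below recomputes the three metrics from these counts; the function name is hypothetical.

```python
def binary_metrics(tp, fn, tn, fp):
    """Accuracy, sensitivity and specificity (%) from confusion-matrix counts."""
    return {
        "accuracy": 100 * (tp + tn) / (tp + fn + tn + fp),
        "sensitivity": 100 * tp / (tp + fn),  # malignant slides correctly detected
        "specificity": 100 * tn / (tn + fp),  # benign slides correctly identified
    }


metrics = binary_metrics(tp=21, fn=3, tn=34, fp=2)
print({name: round(value, 2) for name, value in metrics.items()})
# {'accuracy': 91.67, 'sensitivity': 87.5, 'specificity': 94.44}
```

The same arithmetic gives the cytopathologists' accuracies: 58, 60, 49 and 51 correct slides out of 60 correspond to 96.67%, 100.00%, 81.67% and 85.00%.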

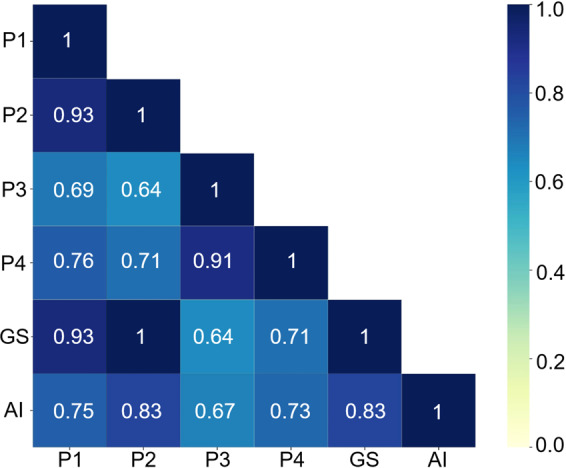

Correlations of the senior cytopathologists, junior cytopathologists and Aitrox AI model with the gold standard were analyzed using Kendall’s τ. The diagnoses by the senior pathologists (P1 and P2, Fig. 5) correlated most strongly with the gold standard, with τ-values of 0.93 and 1.00. The diagnoses by the junior pathologists (P3 and P4, Fig. 5) showed the lowest correlation with the gold standard, with τ-values of 0.64 and 0.71. The predictions of the AI model were strongly correlated with the gold standard, with a τ-value of 0.83. The AI predictions also correlated with the diagnoses of the senior and junior cytopathologists, with τ-values ranging from 0.67 to 0.83. On the test set, the performance of the Aitrox AI model lay between that of the junior and senior cytopathologists (Fig. 5).

Fig. 5. Heatmap of Kendall’s τ correlation coefficients among the gold standard, two senior cytopathologists, two junior cytopathologists and the DCNN model.

GS, Gold standard; P1 and P2, two senior pathologists; P3 and P4, two junior pathologists; AI, the Aitrox AI model based on a DCNN.
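The paper does not specify which variant of Kendall's τ was used. The sketch below assumes τ-b, which corrects for the many ties that arise with binary (malignant/benign) diagnoses; for two binary variables τ-b reduces to the phi coefficient of the 2 × 2 table. Rebuilding the gold-standard and AI labels from the confusion matrix implied by the reported sensitivity and specificity (21 true positives, 3 false negatives, 2 false positives, 34 true negatives) gives τ-b ≈ 0.83, in line with the reported value. The function name is hypothetical.

```python
import math
from itertools import combinations


def kendall_tau_b(x, y):
    """Kendall's tau-b by direct pair counting (O(n^2)), with the tie correction."""
    concordant = discordant = ties_x_only = ties_y_only = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # tied in both variables: ignored
        if dx == 0:
            ties_x_only += 1
        elif dy == 0:
            ties_y_only += 1
        elif (dx > 0) == (dy > 0):
            concordant += 1
        else:
            discordant += 1
    denom = math.sqrt((concordant + discordant + ties_x_only)
                      * (concordant + discordant + ties_y_only))
    return (concordant - discordant) / denom


# Gold standard vs AI on the 60 test slides (1 = malignant), rebuilt from the
# implied confusion matrix: 21 TP, 3 FN, 2 FP, 34 TN. Slide order is irrelevant.
gold = [1] * 21 + [1] * 3 + [0] * 2 + [0] * 34
ai = [1] * 21 + [0] * 3 + [1] * 2 + [0] * 34
print(round(kendall_tau_b(gold, ai), 2))  # 0.83
```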

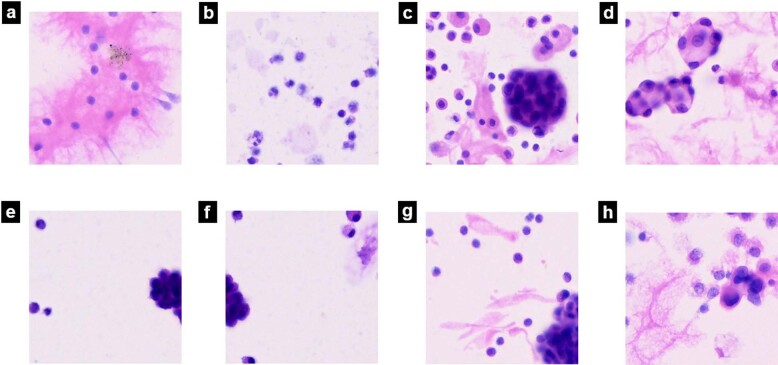

Figure 6 shows example patches from malignant and benign WSIs. Fig. 6a–d show correct classifications and Fig. 6e–h show misclassifications by our DCNN.

Fig. 6. Examples of AI performance in pleural effusion cytology diagnosis.

(a, b) Benign cells correctly diagnosed by the AI; (c, d) malignant cells correctly diagnosed by the AI; (e, f) benign mesothelial cells misdiagnosed by the AI as malignant; (g, h) malignant cells misdiagnosed by the AI as benign.

Discussion

To the best of our knowledge, this is the first study, and the one with the largest dataset, to apply weakly supervised AI to the evaluation of cytological pleural effusion images and to compare AI predictions with cytopathologists’ diagnoses at the WSI level. Previous studies used small amounts of data at the patch or single-cell level. For example, Win et al. carried out their experiments on 125 and 124 cytological pleural effusion images38,39; Teramoto et al. collected cytological specimens from 46 patients for their study of lung cytological images40; and Tosun et al. enrolled only 34 patients in their study of mesothelial cells in effusion cytology specimens12. Compared with these studies, our investigation used a larger dataset of 404 patients, which provides broader coverage and may support better generalization.

The main purpose of this investigation was to evaluate whether diagnoses made by the weakly supervised Aitrox AI model could reach clinical grade at the WSI level. To our knowledge, this is the first time a weakly supervised deep learning method has been used to train a model that distinguishes malignant cells in effusion cytology specimens. To this end, a deep learning method based on the ResNet18 architecture and the MIL approach was developed. Senior cytopathologists, junior cytopathologists and the Aitrox AI model each diagnosed the same test set of 60 WSIs. Kendall’s correlation coefficients showed that the Aitrox AI model predictions were strongly correlated with the diagnoses of all cytopathologists (τ from 0.67 to 0.83) and with the gold standard (τ = 0.83)41. The diagnoses of the senior cytopathologists showed the highest correlation with the gold standard (0.93 and 1.00), whereas those of the junior cytopathologists showed the lowest (0.64 and 0.71); the AI model fell between the two, indicating that the Aitrox AI model has the potential to make clinical-grade decisions during initial diagnosis.

Of the 60 WSIs in the test set, 5 were misclassified by our AI model (3 false negatives and 2 false positives). The AI model performed well on images with straightforward cell morphology and obvious features (Fig. 6a–d). However, clusters of proliferating mesothelial cells (Fig. 6e, f) and poorly cohesive tumor cells (Fig. 6g, h) may lead to misclassification by our DCNN. Notably, cases such as those in Fig. 6e and f are also difficult for pathologists in real clinical practice, and further evaluation of cell blocks with IHC by experienced pathologists is necessary.

Cytological diagnosis plays an important role in the rapid diagnosis of lung cancer. Because cytological diagnosis is highly subjective and experience-dependent, training cytopathologists takes a long time. The severe shortage of cytopathologists and the low accuracy of junior cytopathologists both limit the application of cytological diagnosis in China. Our results show that the AI model achieves an accuracy approaching that of senior cytopathologists, which may help compensate for the shortage of experienced cytopathologists if the model is deployed across hospitals and cities.

In the present study, we explored the performance of a DCNN model in classifying cancer cells on cytological pleural effusion images at the WSI level. The AI model achieved a diagnostic accuracy of 91.67%, higher than that of the two junior cytopathologists (81.67% and 85.00%). These results indicate that AI may assist pathologists in diagnosing cancer cells on cytological pleural effusion images in the future.

This study has some limitations. Our data were collected from a single medical center (Shanghai Pulmonary Hospital), and only lung adenocarcinoma patients were included. Although lung adenocarcinoma is the most common subtype of lung cancer, future studies would benefit from including other lung cancer types from multiple national and international centers. Another potential limitation is the exclusion of atypical or suspicious diagnoses. A possible direction for future work is a DCNN-based method that not only classifies lesions as malignant or benign but also outputs a malignancy probability for each WSI, to better assist pathologists in diagnosing atypical or suspicious cases.

Author contributions

W.C.Y. conceived and designed the study; X.F.X. wrote the manuscript; Yue Yu and Q.Y.Y. implemented the algorithm; Chi-Cheng Fu performed the analysis and interpretation of data and the statistical analysis; Q.F. provided technical support; L.P.Z., L.K.H. and W.C.Y. developed the methodology and wrote, reviewed and revised the paper. All authors read and approved the final paper.

Funding

This work was supported by projects from The Shanghai Municipal Health Commission (20194Y0228), Shanghai Hospital Development Center Foundation (SHDC2020CR3047B), Clinical Research Foundation of Shanghai Pulmonary Hospital (fk1937), Shanghai Science and Technology Commission (18411962900).

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Competing interests

The authors have no conflicts of interest to declare. On behalf of my co-authors, I declare that the work described is original research that has not been published previously and is not under consideration for publication elsewhere, in whole or in part. All the listed authors have approved the enclosed manuscript.

Ethics approval

This study was approved by the Ethics Committee of Shanghai Pulmonary Hospital (approval no. K19-127Y).

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Jiang T, et al. The changing diagnostic pathway for lung cancer patients in Shanghai, China. Eur. J. Cancer. 2017;84:168–172. doi: 10.1016/j.ejca.2017.07.036.

- 2. Shlomi D, Ben-Avi R, Balmor GR, Onn A, Peled N. Screening for lung cancer: time for large-scale screening by chest computed tomography. Eur. Respir. J. 2014;44:217–238. doi: 10.1183/09031936.00164513.

- 3. Bhandari S, Pham D, Pinkston C, Oechsli M, Kloecker G. Timing of treatment in small-cell lung cancer. Med. Oncol. 2019;36:47. doi: 10.1007/s12032-019-1271-3.

- 4. Sato T, et al. Prognostic understanding at diagnosis and associated factors in patients with advanced lung cancer and their caregivers. Oncologist. 2018;23:1218–1229. doi: 10.1634/theoncologist.2017-0329.

- 5. Sermanet P, et al. OverFeat: integrated recognition, localization and detection using convolutional networks. arXiv:1312.6229. 2014.

- 6. Zeiler MD, Fergus R. Visualizing and understanding convolutional networks. ECCV. 2014:818–833.

- 7. Cicek T, et al. Adequacy of EBUS-TBNA specimen for mutation analysis of lung cancer. Clin. Respir. J. 2019;13:92–97. doi: 10.1111/crj.12985.

- 8. Sundling KE, Cibas ES. Ancillary studies in pleural, pericardial, and peritoneal effusion cytology. Cancer Cytopathol. 2018;126:590–598. doi: 10.1002/cncy.22021.

- 9. Baburaj G, et al. Liquid biopsy approaches for pleural effusion in lung cancer patients. Mol. Biol. Rep. 2020;47:8179–8187. doi: 10.1007/s11033-020-05869-7.

- 10. Akamatsu H, et al. Multiplexed molecular profiling of lung cancer using pleural effusion. J. Thorac. Oncol. 2014;9:1048–1052. doi: 10.1097/JTO.0000000000000203.

- 11. Ruan X, et al. Multiplexed molecular profiling of lung cancer with malignant pleural effusion using next generation sequencing in Chinese patients. Oncol. Lett. 2020;19:3495–3505. doi: 10.3892/ol.2020.11446.

- 12. Tosun AB, Yergiyev O, Kolouri S, Silverman JF, Rohde GK. Detection of malignant mesothelioma using nuclear structure of mesothelial cells in effusion cytology specimens. Cytometry A. 2015;87:326–333. doi: 10.1002/cyto.a.22602.

- 13. Sakr L, et al. Cytology-based treatment decision in primary lung cancer: is it accurate enough? Lung Cancer. 2012;75:293–299. doi: 10.1016/j.lungcan.2011.09.001.

- 14. Edwards SL, et al. Preoperative histological classification of primary lung cancer: accuracy of diagnosis and use of the non-small cell category. J. Clin. Pathol. 2000;53:537–540. doi: 10.1136/jcp.53.7.537.

- 15. Matsuda M, et al. Bronchial brushing and bronchial biopsy: comparison of diagnostic accuracy and cell typing reliability in lung cancer. Thorax. 1986;41:475–478. doi: 10.1136/thx.41.6.475.

- 16. DiBonito L, et al. Cytological typing of primary lung cancer: study of 100 cases with autopsy confirmation. Diagn. Cytopathol. 1991;7:7–10. doi: 10.1002/dc.2840070104.

- 17. Krizhevsky A, Sutskever I, Hinton G. ImageNet classification with deep convolutional neural networks. Commun. ACM. 2017;60:84–90. doi: 10.1145/3065386.

- 18. LeCun Y, et al. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989;1:541–551. doi: 10.1162/neco.1989.1.4.541.

- 19. Niazi MKK, Parwani AV, Gurcan MN. Digital pathology and artificial intelligence. Lancet Oncol. 2019;20:e253–e261. doi: 10.1016/S1470-2045(19)30154-8.

- 20. Miller DD, Brown EW. Artificial intelligence in medical practice: the question to the answer? Am. J. Med. 2018;131:129–133. doi: 10.1016/j.amjmed.2017.10.035.

- 21. Pallua JD, Brunner A, Zelger B, Schirmer M, Haybaeck J. The future of pathology is digital. Pathol. Res. Pract. 2020;216:153040. doi: 10.1016/j.prp.2020.153040.

- 22. Bera K, Schalper KA, Rimm DL, Velcheti V, Madabhushi A. Artificial intelligence in digital pathology: new tools for diagnosis and precision oncology. Nat. Rev. Clin. Oncol. 2019;16:703–715. doi: 10.1038/s41571-019-0252-y.

- 23. Antonio VAA, et al. Classification of lung adenocarcinoma transcriptome subtypes from pathological images using deep convolutional networks. Int. J. Comput. Assist. Radiol. Surg. 2018;13:1905–1913. doi: 10.1007/s11548-018-1835-2.

- 24. Teramoto A, et al. Deep learning approach to classification of lung cytological images: two-step training using actual and synthesized images by progressive growing of generative adversarial networks. PLoS One. 2020;15:e0229951. doi: 10.1371/journal.pone.0229951.

- 25. Wei J, et al. Pathologist-level classification of histologic patterns on resected lung adenocarcinoma slides with deep neural networks. Sci. Rep. 2019;9:3358. doi: 10.1038/s41598-019-40041-7.

- 26. Kriegeskorte N, Golan T. Neural network models and deep learning. Curr. Biol. 2019;29:R231–R236. doi: 10.1016/j.cub.2019.02.034.

- 27. Lepus CM, Vivero M. Updates in effusion cytology. Surg. Pathol. Clin. 2018;11:523–544. doi: 10.1016/j.path.2018.05.003.

- 28. Humphries MP, et al. Automated tumour recognition and digital pathology scoring unravels new role for PD-L1 in predicting good outcome in ER-/HER2+ breast cancer. J. Oncol. 2018;2018:2937012. doi: 10.1155/2018/2937012.

- 29. Sha L, et al. Multi-field-of-view deep learning model predicts non-small cell lung cancer Programmed Death-Ligand 1 status from whole-slide Hematoxylin and Eosin images. J. Pathol. Inform. 2019;10:24. doi: 10.4103/jpi.jpi_24_19.

- 30. Kanavati F, et al. Weakly-supervised learning for lung carcinoma classification using deep learning. Sci. Rep. 2020;10:9297. doi: 10.1038/s41598-020-66333-x.

- 31. Campanella G, et al. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nat. Med. 2019;25:1301–1309. doi: 10.1038/s41591-019-0508-1.

- 32. Girum KB, Crehange G, Hussain R, Lalande A. Fast interactive medical image segmentation with weakly supervised deep learning method. Int. J. Comput. Assist. Radiol. Surg. 2020;15:1437–1444. doi: 10.1007/s11548-020-02223-x.

- 33. Van Es SL, et al. Constant quest for quality: digital cytopathology. J. Pathol. Inform. 2018;9:13. doi: 10.4103/jpi.jpi_6_18.

- 34. Coudray N, et al. Classification and mutation prediction from non-small cell lung cancer histopathology images using deep learning. Nat. Med. 2018;24:1559–1567. doi: 10.1038/s41591-018-0177-5.

- 35. AbdulJabbar K, et al. Geospatial immune variability illuminates differential evolution of lung adenocarcinoma. Nat. Med. 2020;26:1054–1062. doi: 10.1038/s41591-020-0900-x.

- 36. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. CVPR. 2016:770–778.

- 37. Deng J, et al. ImageNet: a large-scale hierarchical image database. CVPR. 2009:248–255.

- 38. Win KY, et al. Computer aided diagnosis system for detection of cancer cells on cytological pleural effusion images. Biomed. Res. Int. 2018;2018:6456724. doi: 10.1155/2018/6456724.

- 39. Win K, Choomchuay S, Hamamoto K, Raveesunthornkiat M. Detection and classification of overlapping cell nuclei in cytology effusion images using a double-strategy random forest. Appl. Sci. 2018;8:1608. doi: 10.3390/app8091608.

- 40. Teramoto A, et al. Automated classification of benign and malignant cells from lung cytological images using deep convolutional neural network. Inform. Med. Unlocked. 2019;16:100205. doi: 10.1016/j.imu.2019.100205.

- 41. Akoglu H. User’s guide to correlation coefficients. Turk. J. Emerg. Med. 2018;18:91–93. doi: 10.1016/j.tjem.2018.08.001.
