Abstract

Background

Digital mental health apps are rapidly becoming a common source of accessible support across the world, but their effectiveness is often limited by low perceived helpfulness and poor engagement.

Objective

This study’s primary objective was to analyze feedback content to understand users’ experiences of engaging with a digital mental health app. As a secondary objective, an exploratory analysis characterized the types of mental health app users.

Methods

This study used a user-led approach to understanding the factors behind engagement and helpfulness in digital mental health by analyzing user feedback (n=7929) posted on the Google Play Store over a 1-year period about Wysa, a mental health app. Keywords in the feedback were analyzed to categorize and evaluate the reported user experience within the core domains of acceptability, usability, usefulness, and integration. The study also identified key strengths and deficits of the app and explored salient characteristics of the types of users who benefit from accessible digital mental health support.

Results

The analysis of user feedback found the app to be overwhelmingly positively reviewed (6700/7929, 84.50% 5-star ratings). Themes of engaging exercises, an interactive interface, and the conversational ability of the artificial intelligence (AI) indicated the app’s acceptability, while nonjudgmentality and ease of conversation highlighted its usability. The app’s usefulness was reflected in themes such as improvement in mental health, convenient access, and cognitive restructuring exercises. Themes of privacy and confidentiality underscored the value users placed on the app’s integration features. Further analysis revealed 4 predominant types of individuals who shared feedback on the store.

Conclusions

Users reported that the digital mental health app offered the therapeutic elements of a comfortable, safe, and supportive environment. Digital mental health apps may expand mental health access to those unable to access traditional forms of mental health support and treatment.

Keywords: digital mental health, artificial intelligence, user reviews, cognitive behavioral therapy, CBT

Introduction

The World Health Organization estimates that 450 million people worldwide have a mental disorder and reports a mental health gap of 1:10,000 worldwide [1]. Another report identified financial constraints and a lack of available services as structural barriers to treatment [2]. Despite considerable progress in access to resources, the gap in mental health access, especially in industrialized countries, does not appear to have narrowed [3,4]. Psychological and structural barriers to mental health care, such as availability, convenience, stigma, and a preference for self-care, persist and underscore the need for more accessible mental health resources [5]. Digital mental health tools, such as apps and chatbots, offer anonymity and convenience and can serve as important alternatives to bridge the access gap [6]. The increasing availability and usability of mobile devices may create new opportunities to overcome existing barriers and the limited reach of traditional clinical service delivery, and to provide customized, patient-centered interventions. Similarly, smartphones and other mobile technologies may be able to reach more users and deliver reliable and effective services regardless of location [7,8].

To bridge the mental health access gap, it is crucial to understand user experiences of, and attitudes toward, digital mental health apps. In the context of digital mental health, the Technology Acceptance Model posits that the perceived ease of use and perceived usefulness of a technology positively influence user engagement, which interventions require in order to be effective [9]. For use by both patients and providers, Chan et al [10] proposed criteria for assessing mental health apps in 4 key domains: usefulness, usability, integration, and infrastructure. In addition, the acceptability of a mobile app has been defined as its perceived value, usefulness, and desirability [11]. Because user engagement is often suboptimal, users’ attitudes toward a digital technology can provide important insight into their engagement [9].

To further understand user engagement with artificial intelligence (AI)–guided digital mental health apps, this study aimed to identify user needs for impactful engagement with a digital mental health app (Wysa) by examining user reviews. As a proxy for users’ attitudes toward a digital mental health app, user reviews are typically voluntary, unsolicited, and openly available on a public forum, and they may therefore provide helpful evaluations of, and insights into, users’ experiences and engagement. A previous qualitative analysis of user reviews of mental health apps identified themes of design improvements, user expectations, unmet needs, and utility [12]. User reviews have been regarded as a comprehensive evaluation of an app from the user’s own perspective, providing rich insights into the user experience [13,14]. In addition to understanding needs for engagement, this study aimed to explore the perceived value, usability, and desirability of the app as a digital mental health tool [15,16].

Methods

App Background

Wysa is an AI-enabled mental health app that delivers evidence-based cognitive behavioral therapy (CBT) techniques through its conversational interface (chatbot). The app was designed by a team based in India, the United Kingdom, and the United States. It is designed to provide a therapeutic virtual space for user-led conversations through AI-guided listening and support, access to self-care tools and techniques (eg, CBT-based tools), and one-on-one human support. The app has demonstrated efficacy in building mental resilience and promoting mental well-being through a text-based conversational interface [17]. During the 1-year period considered, the app had an overall user rating of 4.8/5 on the Google Play Store and had been downloaded by more than 2 million people. The app is also available on the Apple App Store, with a similar rating of 4.9/5 but a smaller sample of qualitative reviews. Studies have reported that Wysa offers the most evidence-based treatment techniques among popular smartphone apps [18], with conversations targeting specific problems and goals [19]. The app has been described as anonymous [20] and safe [21], and it rates highly on measures of app quality [22].

Study Design

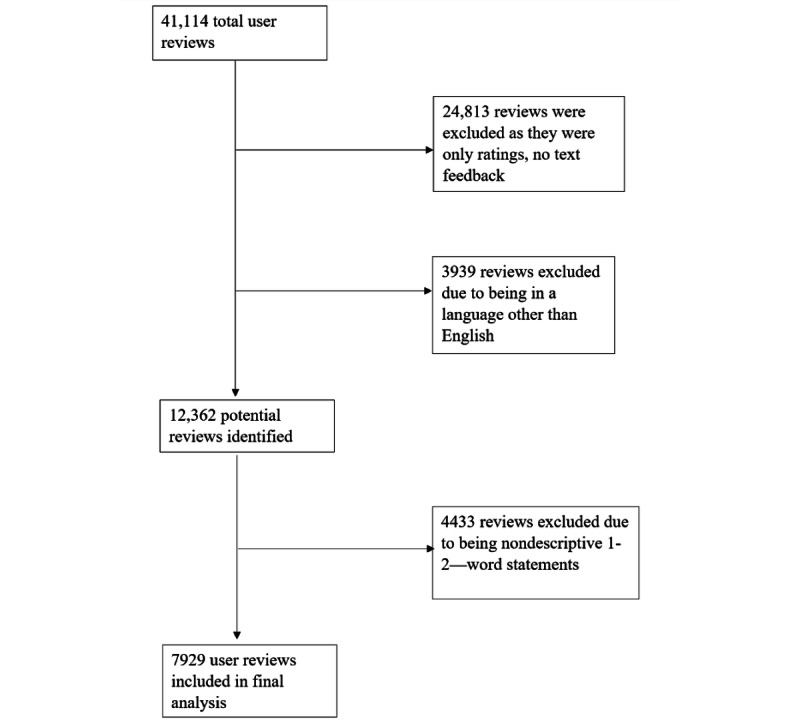

For direct user feedback, the authors examined reviews posted on the Google Play Store between October 2020 and October 2021, a period in which 41,114 user reviews were received. A 1-year duration and Google Play reviews were chosen to ensure a sufficiently large sample. For the analysis of descriptive feedback (n=7929), the authors coded the reasons users gave for their ratings. Feedback in languages other than English, blank reviews, and reviews consisting of 1- or 2-word nondescriptive statements (eg, “Really nice!”, “Awesome”, “Not interested”) were excluded (Figure 1).

Figure 1.

Diagram of the inclusion and exclusion criteria for user review analysis.
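The exclusion criteria described above can be expressed as a simple filter. The following Python sketch is illustrative only: it assumes a hypothetical `Review` record with `text`, `rating`, and `language` fields, where the language tag has already been assigned upstream. The study does not describe its tooling, and a word-count threshold is only an approximation of the reviewers’ judgment of whether a statement was “nondescriptive.”

```python
from dataclasses import dataclass


@dataclass
class Review:
    # Hypothetical record layout; the study's actual data export format is not described.
    text: str
    rating: int      # star rating, 1-5
    language: str    # assumed ISO 639-1 code (eg, "en"), tagged before filtering


def is_descriptive(review: Review, min_words: int = 3) -> bool:
    """Apply the three exclusion rules: blank, non-English, and 1- or 2-word reviews."""
    text = review.text.strip()
    if not text:                  # blank review
        return False
    if review.language != "en":   # feedback in a language other than English
        return False
    # Word count is a rough proxy for "nondescriptive"; the study used human judgment.
    return len(text.split()) >= min_words


reviews = [
    Review("Really nice!", 5, "en"),
    Review("", 4, "en"),
    Review("Muy buena aplicación para la ansiedad", 5, "es"),
    Review("The interactive experience helped more than journaling", 5, "en"),
]
included = [r for r in reviews if is_descriptive(r)]
print(len(included))  # 1
```

A purely mechanical rule like this would keep a 3-word nondescriptive review such as “Not interested really,” which is one reason manual screening remains necessary.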

The study’s primary objective was to analyze feedback content to understand users’ experiences of engaging with a digital mental health app. As a secondary objective, the types of individuals providing feedback were also explored.

Analysis

Using a consolidated framework created by Chan et al [10], which was based on guidelines suggested by the Healthcare Information and Management Systems Society (HIMSS) and the US Federal Government for evaluating digital health apps, the written reviews were grouped into the domains of the framework and further analyzed for specific themes within each domain [15]. To understand Wysa’s current capacity to help and engage users, the thematic analysis examined the domains of (1) acceptability (eg, satisfaction, match with expected capabilities, likelihood to recommend, and level of interactivity), (2) usability (eg, ease and enjoyment of use and cultural and demographic accessibility), (3) usefulness (eg, validity, reliability, effectiveness, and time required to obtain a benefit), and (4) integration (eg, security, privacy, data integration, and safety) [10,15].

Each user review was evaluated and categorized into one or more of these nonmutually exclusive domains. The acceptability domain included statements about likelihood to recommend the app, frequency of use, impact of use, and reasons for use. The usefulness domain included mentions of what the app was used for, specific uses (including tools and techniques), and time of use. The usability domain included mentions of ease of use, convenience, and interface features. The integration domain consisted primarily of reviews discussing data privacy, security, and anonymity.
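Because each review could fall into several domains at once, a keyword-based tagging step can be sketched as follows. This is a minimal illustration, not the authors’ coding procedure: the `DOMAIN_KEYWORDS` lists and the `tag_domains` and `domain_counts` helpers are hypothetical, and the matching is naive case-insensitive substring search, whereas the study’s categorization involved reviewer judgment.

```python
from collections import Counter

# Hypothetical keyword lists, for illustration only; they are NOT the study's codebook.
DOMAIN_KEYWORDS: dict[str, tuple[str, ...]] = {
    "acceptability": ("recommend", "interactive", "every day", "engaging"),
    "usability": ("easy", "simple", "interface", "convenient", "judg"),
    "usefulness": ("anxiety", "stress", "sleep", "helped", "reframe"),
    "integration": ("privacy", "private", "anonymous", "secure", "data"),
}


def tag_domains(text: str) -> set[str]:
    """Return every domain whose keywords appear in the review.

    Domains are nonmutually exclusive, so one review can map to several.
    Substring matching means "judg" matches both "judgment" and "nonjudgmental".
    """
    lowered = text.lower()
    return {
        domain
        for domain, keywords in DOMAIN_KEYWORDS.items()
        if any(kw in lowered for kw in keywords)
    }


def domain_counts(texts: list[str]) -> Counter:
    """Count reviews per domain; a review tagged with k domains adds k counts."""
    counts: Counter = Counter()
    for text in texts:
        counts.update(tag_domains(text))
    return counts


reviews = [
    "I definitely recommend it to someone who wants privacy",
    "It helped my anxiety and it is so easy to use",
    "Awesome",
]
print(sorted(tag_domains(reviews[0])))  # ['acceptability', 'integration']
print(domain_counts(reviews))
```

Because the domains are nonmutually exclusive, per-domain counts can sum to more than the number of reviews: in this example, 2 tagged reviews yield 4 domain counts.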

The coding also allowed us to identify key characteristics of users who were able to receive mental health support because of the app’s increased accessibility.

Results

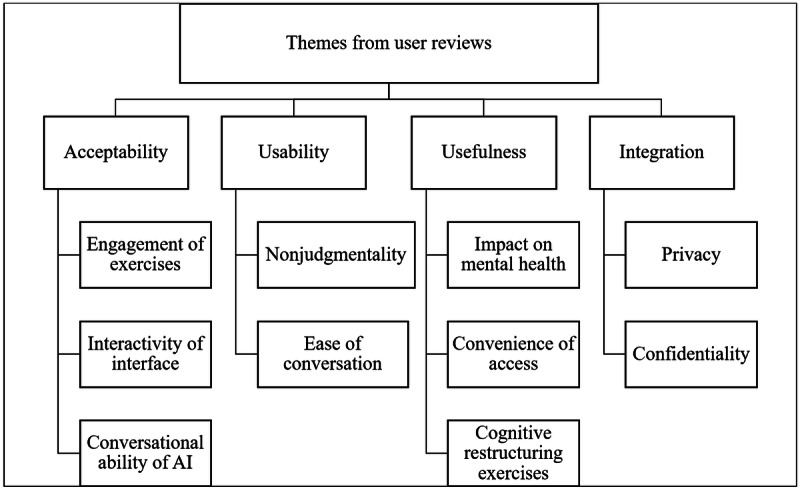

The reviews analyzed for this study were largely positive: 6700 reviews (6700/7929, 84.50%) gave the app a 5-star rating, and 2676 reviews (2676/7929, 33.75%) explicitly described the app as “helpful” or stated that it “helped.” Of the 7929 reviews, 251 (3.17%) had a rating below 3 stars and were classified as negative reviews. The themes identified under the evaluation criteria are summarized in Figure 2.

Figure 2.

Key themes from the reviews analyzed within the study. AI: artificial intelligence.
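As a quick arithmetic check of the proportions reported above, the following minimal Python sketch recomputes each n/N percentage, rounded to two decimal places as in the text. The helper name `pct` is hypothetical.

```python
def pct(n: int, total: int) -> str:
    """Format a count as 'n/N, xx.xx%', rounding the percentage to two decimals."""
    return f"{n}/{total}, {100 * n / total:.2f}%"


TOTAL = 7929  # descriptive reviews retained after exclusions

print(pct(6700, TOTAL))  # 6700/7929, 84.50%  (5-star ratings)
print(pct(2676, TOTAL))  # 2676/7929, 33.75%  ("helpful" or "helped")
print(pct(251, TOTAL))   # 251/7929, 3.17%    (below 3 stars)
```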

Acceptability

The acceptability of the app was reflected in themes of engaging exercises, an interactive interface, and the conversational ability of the AI. Users rated the app positively on acceptability when they found it interactive and conversational, and they valued receiving appropriate responses to their conversations within the tools and techniques. For instance, one user compared it with other self-care options: “The interactive experience helped more than the journaling exercises I've done in the past.” Several users described the variety of exercises, such as guided meditations, venting spaces, positive thinking exercises, and cognitive restructuring, as important to their engagement. Another user commented: “It has such great features such as journaling and helping with anxiety, stress and sleep problems.” User reviews also described the exercises as “educational,” “calming,” “relaxing,” and “functional.”

One user said, “...Initially it felt silly to talk to an AI but it's extremely well made, tailored for therapy.” According to users, the “warm, friendly, and encouraging” AI helped them recreate the experience of confiding in a friend without the intimidation of speaking with a real person. For instance, one user mentioned, “It's really nice and I feel like I've been heard when others won't listen, even if I am only talking to an AI,” and another said it “made me feel loved and heard during a crisis.” Users also described talking to the AI as a “fun” experience, perhaps because of elements that keep it light and accessible, such as jokes, games, GIFs, and other interactive elements.

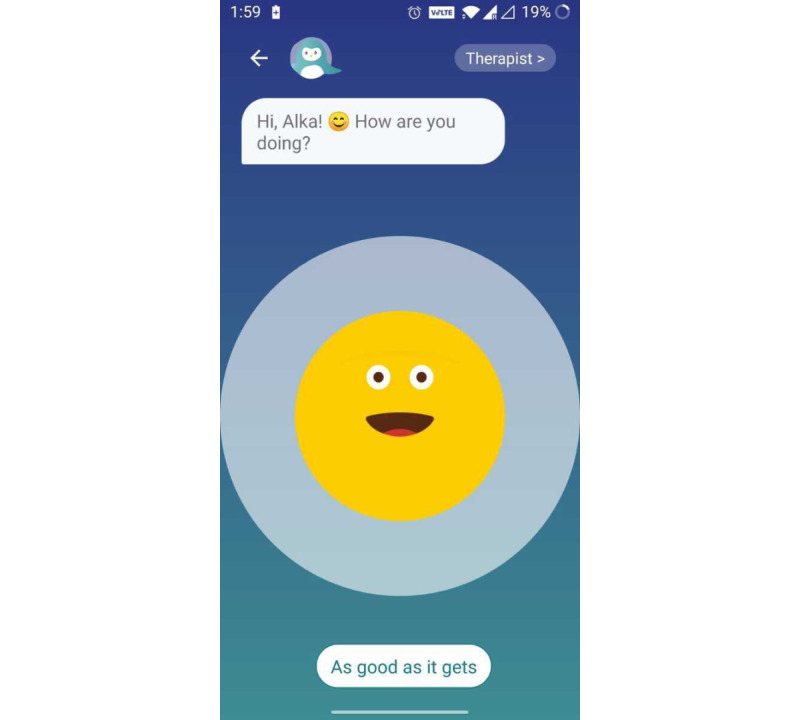

Users reported the app’s interactivity as central to keeping them engaged: “The app made me laugh with its silly jokes and play.” They also described the interface as “easy” and “instinctive,” a central element of a positive experience with the app (Figure 3). Users were comfortable using Wysa for numerous aspects of their well-being (Figure 4).

Figure 3.

Example of interactive interface.

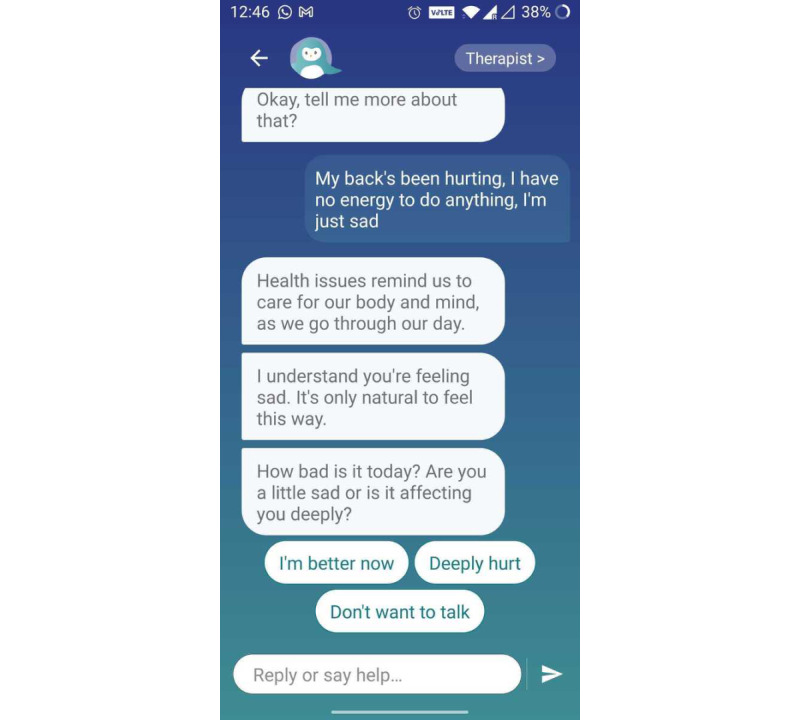

Figure 4.

Example of empathetic conversation.

Users also reported that they were likely to recommend Wysa to others for help with sleep, stress management, and anxiety, as well as to “just talk to someone.” One user said, “it listens to you and helps relieve stress and also has a lot of coping mechanisms. I definitely recommend.” Some users described being able to share with, and rely on, the app for “regular” support, which further contributed to its acceptability. One user exemplified this by stating:

Different people may find this app useful in different ways and it doesn't pressure you to do stuff if you aren't ready for it (no energy or not the right type). It's great even just as a sounding board, a place to organize your thoughts or make a to-do list, or a bit of a tiny friend in your pocket that's not judgmental and won't be tired of you.

However, some users did not find the app helpful for their specific concerns and suggested expanding it to address them. For instance, one user said, “Interesting concept, but it needs to learn to deal with more illnesses.”

Usability

The usability of the app was reflected in the emerging themes of nonjudgmentality and ease of conversation. Users rated this domain positively, describing the app as a “safe” and “nonjudgmental” environment that is easily accessible. This feature was identified from user reviews such as the following:

It's nice to talk to someone completely objectively. Even in therapy you feel guilty if all you do is go on and on, as is human conditioning, but being able to talk it out with Wysa is great. No judgment. Don't have to feel weird about anything.

Reviews indicated that conversing with an AI removed the pressure of performing in front of a therapist, which may allow users to express themselves more freely. Users described the AI’s “cute and approachable penguin” persona as helpful in cultivating a nonthreatening environment: “I love it, it's just amazing, knowing that I can talk about my inner problems to a penguin without judgment … I love that.” In total, 201 users described the “no-judgment space” as a core component in making them feel safe and comfortable.

Users also described the experience of using the app as follows: “...It feels like I'm talking to a real person ... Such a friendly interface.” Users appeared willing to adapt their expectations to continue benefiting from the app, with one user saying, “a little clunky at first but once you learn how to manage it it's very helpful.”

The most common complaint in negative reviews concerned repetition and a lack of comprehension by the chatbot, which made some users feel misunderstood and, at times, want to leave the app. For others who wanted to be better understood, language limitations were a barrier. These users requested versions of the app in their native languages, including Italian, Spanish, French, and others, with one user saying, “The application is great, but it lacks the addition of other languages … in order to facilitate its use by all layers of society.”

Usefulness

The app’s usefulness was reflected in themes such as impact on mental health, convenience of access, and cognitive restructuring exercises. User feedback described the app as providing a safe and open space to challenge one’s thoughts and feelings. Its usefulness in this regard is captured in users’ accounts of how it helped them with mental health concerns. One user described their experience:

I have been struggling with depression since I was a child, and was terrified of reaching out for help. Finally a few weeks ago I hit rock bottom worse than ever before. I was really scared for a while. I was seeking some form of comfort or communication but didn't want to go to anyone, not to mention money is tight. This app really helped me when I needed it most. Who knew an AI penguin would cause me to sing again?

Providing a “safe” and “anonymous” place to process one's thoughts and emotions was identified by 107 users as highly impactful.

Regarding specific clinical uses, users reported positive effects on anxiety (n=805), stress (n=480), and depressed mood (n=400). In addition, 324 users reported using the app for posttraumatic stress disorder (PTSD) symptoms, fear, and sleep issues. Users identified numerous techniques and spaces offered by the app as especially helpful, such as physical activity exercises, sleep stories, meditations, cognitive restructuring, and reframing exercises. Users also commented on the app’s affordability as a way to bridge gaps in mental health access: “This app really helped me especially since I don't have access to any other useful form of therapy.”

The app seemed least useful when the chatbot felt limiting or could not fully understand the user. Some users who struggled with the app stated, “Sometimes it's frustrating that an AI can't understand you that well,” and said that when it could not understand their dilemma, it felt “empty and generic.”

Integration

The integration of the app was illustrated through the themes of privacy and confidentiality.

The app did not require users to register and thus did not ask for personal details, such as demographic data. Its anonymous and confidential nature was a key reason for positive ratings in the integration domain. Many users reported being satisfied with the app’s privacy practices and finding it “easy to trust.” One user wrote:

I feel really good knowing that I can talk to something completely private. I was feeling really down and I was pleasantly surprised. It was so simple yet so effective. I most definitely recommend it to someone who wants privacy and a healthy listening ear.

Characteristics of Users

The thematic analysis also revealed the types of users who reviewed the app on the Google Play Store, who may be representative of users who access digital mental health support such as Wysa. These users were grouped by salient aspects of their expressed needs and concerns.

We identified 4 key groups: (1) those who self-reported having clinical issues, (2) those who reported being unable or unwilling to open up to a real person, (3) those who are financially conscious, and (4) those who are unable to access mental health professionals. Use of the app for support with self-reported diagnoses and symptoms of depression, anxiety, panic disorders, and PTSD was mentioned by 1856 individuals, who primarily used the app’s CBT techniques and meditations as a form of self-care. The second group comprised individuals who feel uncomfortable talking to people in their lives or who lack a reliable support system with whom to share their thoughts; they reported that the AI-driven app reduced the guilt and burden of opening up to a real person. Users in the third group found the free availability of the app beneficial in reducing the financial anxiety associated with seeking mental health support. Finally, numerous users (n=594) reported using the app at times when they could not access therapists, including when experiencing more severe symptoms of depression and anxiety late at night.

Discussion

Principal Findings

This study is one of the largest to examine users’ perceptions of a digital mental health app. It assessed the acceptability, usability, usefulness, and integration of a digital mental health app by analyzing publicly available user feedback and reviews. This approach is unique for several reasons. First, it uses feedback that was not solicited by the developers or promoters and was posted in a public forum, reducing the social desirability bias that can affect researcher-administered evaluations. Second, the large sample size allowed a deeper exploration of user experiences than previous studies have undertaken. This approach helped to identify the types of users of mental health apps and the strengths and weaknesses of digital mental health tools, allowing us to better understand gaps in the services provided.

The most important findings of this study are the factors that contribute to higher engagement with, and acceptability of, a digital mental health app. Users most consistently cited the “active and available listening” element as key to fostering acceptability of the digital mental health experience. The app further cultivated these therapeutic elements through an AI-based chatbot with a friendly penguin interface. In addition, the perceived nonjudgmentality and friendliness of this interface appeared to contribute to high usability and ongoing engagement with the app.

Understanding the user experience is important for ensuring meaningful usage and clinical utility [17]. Users strongly valued the anonymity and confidentiality of the app, which are valuable strengths in any therapeutic relationship [23]. Therapeutic bonds are fostered through trust, acceptance, empathy, and genuineness and contribute to the effectiveness of an intervention [23]; in a digital environment, such bonds can be created through humanlike dialogue with a conversational agent [24].

The large majority of positive reviews is consistent with previous evidence of the acceptability and effectiveness of Wysa as a digital mental health tool [25]. Digital mental health apps can provide important benefits, especially in supporting individuals with subclinical psychiatric symptoms [26]. The findings of this study highlight how digital mental health apps may improve the accessibility and affordability of mental health support. The user characteristics identified help outline who may access and benefit from mental health apps; for example, individuals with social anxiety about speaking face to face may find therapeutic value in an AI-enabled tool. In addition, mental health apps may serve as augmenting or transitional tools when traditional mental health services are limited, such as after office hours, in rural settings, or between appointments and referrals.

Limitations

Limitations of the study include the data source: only reviews on the Google Play Store were analyzed, and Apple App Store data were not considered. Furthermore, each user review captures a single point in time, so changes in feedback over time could not be assessed. No demographic information was available beyond the fact that the reviews were written in English. Users’ clinical scores were not available; such scores would have allowed a more direct understanding of the experience of clinical populations with the app. The study is also limited by a lack of information on the duration of app use and the rate of attrition among users due to app issues or other reasons.

Conclusions

This study used a user-led approach to understanding the factors behind engagement and helpfulness in digital mental health. User feedback was analyzed across the domains of acceptability, usability, usefulness, and integration, and the app was found to be overwhelmingly positively reviewed. A key finding was the comfortable and safe environment created by the nonjudgmental digital mental health tool, which provides users with clinical and subclinical support. Further analysis revealed 4 predominant types of individuals who appear to engage with digital mental health support and who may be infrequent users of face-to-face mental health services. Digital mental health apps can provide a valuable service to those unable to access mental health support. Future directions for digital mental health include improving the technology to cater to a wider variety of users and increasing its capacity to contribute to clinical utility.

Abbreviations

- AI: artificial intelligence
- CBT: cognitive behavioral therapy
- HIMSS: Healthcare Information and Management Systems Society
- PTSD: posttraumatic stress disorder

Footnotes

Conflicts of Interest: TM and CS are employees of Wysa and hold equity in Wysa Inc. AJA declares no conflicts of interest related to this study.

References

1. Depression and other common mental disorders: global health estimates. World Health Organization. 2017. [2022-03-30]. https://apps.who.int/iris/handle/10665/254610

2. Rowan K, McAlpine DD, Blewett LA. Access and cost barriers to mental health care, by insurance status, 1999-2010. Health Aff (Millwood). 2013 Oct;32(10):1723–30. doi: 10.1377/hlthaff.2013.0133. http://europepmc.org/abstract/MED/24101061

3. Demyttenaere K, Bruffaerts R, Posada-Villa J, Gasquet I, Kovess V, Lepine JP, Angermeyer MC, Bernert S, de Girolamo G, Morosini P, Polidori G, Kikkawa T, Kawakami N, Ono Y, Takeshima T, Uda H, Karam EG, Fayyad JA, Karam AN, Mneimneh ZN, Medina-Mora ME, Borges G, Lara C, de Graaf R, Ormel J, Gureje O, Shen Y, Huang Y, Zhang M, Alonso J, Haro JM, Vilagut G, Bromet EJ, Gluzman S, Webb C, Kessler RC, Merikangas KR, Anthony JC, Von Korff MR, Wang PS, Brugha TS, Aguilar-Gaxiola S, Lee S, Heeringa S, Pennell B, Zaslavsky AM, Ustun TB, Chatterji S, WHO World Mental Health Survey Consortium. Prevalence, severity, and unmet need for treatment of mental disorders in the World Health Organization World Mental Health Surveys. JAMA. 2004 Jun 02;291(21):2581–90. doi: 10.1001/jama.291.21.2581.

4. WHO MiNDbank: More Inclusiveness Needed in Disability and Development. World Health Organization. [2022-03-30]. https://www.who.int/mental_health/mindbank/flyer_EN.pdf

5. Jorm AF, Patten SB, Brugha TS, Mojtabai R. Has increased provision of treatment reduced the prevalence of common mental disorders? Review of the evidence from four countries. World Psychiatry. 2017 Mar 26;16(1):90–99. doi: 10.1002/wps.20388.

6. Mohr DC, Riper H, Schueller SM. A solution-focused research approach to achieve an implementable revolution in digital mental health. JAMA Psychiatry. 2018 Feb 01;75(2):113–114. doi: 10.1001/jamapsychiatry.2017.3838.

7. East ML, Havard BC. Mental health mobile apps: from infusion to diffusion in the mental health social system. JMIR Ment Health. 2015 Mar 31;2(1):e10. doi: 10.2196/mental.3954. https://mental.jmir.org/2015/1/e10/

8. Torous J, Luo J, Chan SR. Mental health apps: What to tell patients. Current Psychiatry. 2018;17(3):21–25. https://cdn.mdedge.com/files/s3fs-public/Document/February-2018/CP01703021.PDF

9. Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly. 1989 Sep;13(3):319. doi: 10.2307/249008.

10. Chan S, Torous J, Hinton L, Yellowlees P. Towards a framework for evaluating mobile mental health apps. Telemed J E Health. 2015 Dec;21(12):1038–41. doi: 10.1089/tmj.2015.0002.

11. Improving the User Experience. US General Services Administration. [2022-03-30]. https://www.usability.gov/

12. Nicholas J, Fogarty AS, Boydell K, Christensen H. The reviews are in: a qualitative content analysis of consumer perspectives on apps for bipolar disorder. J Med Internet Res. 2017 Apr 07;19(4):e105. doi: 10.2196/jmir.7273. https://www.jmir.org/2017/4/e105/

13. Alqahtani F, Orji R. Insights from user reviews to improve mental health apps. Health Informatics J. 2020 Sep 10;26(3):2042–2066. doi: 10.1177/1460458219896492. https://journals.sagepub.com/doi/10.1177/1460458219896492?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

14. Pagano D, Maalej W. User feedback in the appstore: An empirical study. 21st IEEE International Requirements Engineering Conference (RE); July 15-19, 2013; Rio de Janeiro, Brazil. pp. 24–34.

15. Gowarty MA, Longacre MR, Vilardaga R, Kung NJ, Gaughan-Maher AE, Brunette MF. Usability and acceptability of two smartphone apps for smoking cessation among young adults with serious mental illness: mixed methods study. JMIR Ment Health. 2021 Jul 07;8(7):e26873. doi: 10.2196/26873. https://mental.jmir.org/2021/7/e26873/

16. Marshall WL, Serran GA. The role of the therapist in offender treatment. Psychology, Crime & Law. 2006 Aug 22;10(3):309–320. doi: 10.1080/10683160410001662799.

17. Torous J, Andersson G, Bertagnoli A, Christensen H, Cuijpers P, Firth J, Haim A, Hsin H, Hollis C, Lewis S, Mohr DC, Pratap A, Roux S, Sherrill J, Arean PA. Towards a consensus around standards for smartphone apps and digital mental health. World Psychiatry. 2019 Mar;18(1):97–98. doi: 10.1002/wps.20592.

18. Wasil AR, Venturo-Conerly KE, Shingleton RM, Weisz JR. A review of popular smartphone apps for depression and anxiety: Assessing the inclusion of evidence-based content. Behav Res Ther. 2019 Dec;123:103498. doi: 10.1016/j.brat.2019.103498.

19. Wasil AR, Palermo EH, Lorenzo-Luaces L, DeRubeis RJ. Is there an app for that? A review of popular apps for depression, anxiety, and well-being. Cognitive and Behavioral Practice. 2021 Oct. doi: 10.1016/j.cbpra.2021.07.001.

20. Kretzschmar K, Tyroll H, Pavarini G, Manzini A, Singh I, NeurOx Young People’s Advisory Group. Can your phone be your therapist? Young people's ethical perspectives on the use of fully automated conversational agents (chatbots) in mental health support. Biomed Inform Insights. 2019 Mar 05;11:1178222619829083. doi: 10.1177/1178222619829083. https://journals.sagepub.com/doi/10.1177/1178222619829083?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

21. Meadows R, Hine C, Suddaby E. Conversational agents and the making of mental health recovery. Digit Health. 2020 Nov 20;6:2055207620966170. doi: 10.1177/2055207620966170. https://journals.sagepub.com/doi/10.1177/2055207620966170?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

22. Myers A, Chesebrough L, Hu R, Turchioe MR, Pathak J, Creber RM. Evaluating commercially available mobile apps for depression self-management. AMIA Annu Symp Proc. 2020;2020:906–914. http://europepmc.org/abstract/MED/33936466

23. Nienhuis JB, Owen J, Valentine JC, Winkeljohn Black S, Halford TC, Parazak SE, Budge S, Hilsenroth M. Therapeutic alliance, empathy, and genuineness in individual adult psychotherapy: A meta-analytic review. Psychother Res. 2018 Jul 07;28(4):593–605. doi: 10.1080/10503307.2016.1204023.

24. Følstad A, Brandtzaeg PB. Users' experiences with chatbots: findings from a questionnaire study. Qual User Exp. 2020 Apr 11;5(1):1. doi: 10.1007/s41233-020-00033-2.

25. Inkster B, Sarda S, Subramanian V. An empathy-driven, conversational artificial intelligence agent (Wysa) for digital mental well-being: Real-world data evaluation mixed-methods study. JMIR Mhealth Uhealth. 2018 Nov 23;6(11):e12106. doi: 10.2196/12106. https://mhealth.jmir.org/2018/11/e12106/

26. Mohr DC, Burns MN, Schueller SM, Clarke G, Klinkman M. Behavioral intervention technologies: evidence review and recommendations for future research in mental health. Gen Hosp Psychiatry. 2013;35(4):332–8. doi: 10.1016/j.genhosppsych.2013.03.008. https://linkinghub.elsevier.com/retrieve/pii/S0163-8343(13)00069-8