Abstract

Shadow detection and removal play an important role in computer vision and pattern recognition. Shadows cause loss of and interference with the information of moving objects, which degrades the performance of subsequent computer vision tasks such as moving object detection and image segmentation. In this paper, each image is regarded as a small sample, and a method based on material matching of intelligent computing between image regions is proposed to detect and remove image shadows. In shadow detection, the proposed method can be used directly without training and ensures the consistency of similar regions to a certain extent. In shadow removal, the proposed method minimizes the influence of the removal operation on other features in the shadow region. Experiments on the benchmark dataset demonstrate that the proposed approach achieves promising performance, with an improvement of more than 6% in comparison with several advanced shadow detection methods.

1. Introduction

Although a shadow is itself a kind of image information, it often interferes with object recognition, image segmentation, and other image processing tasks. Therefore, shadow detection and removal have received wide attention. The difficulty of shadow detection lies in the complexity of the background and lighting in the image, as well as the interference of dark objects. The difficulty of shadow removal is to ensure that only the shadow is removed without changing other image features in the shadow region.

Inspired by the relevant articles on shadow detection and removal through region matching and other methods [1, 2], this paper proposes a shadow detection and removal method based on interarea material matching without training. This method can accurately detect the shadow region and minimize the impact of shadow removal on other features in the shadow region.

The contributions of this paper are as follows. (1) In shadow detection, based on the mutual restriction between image region features and matching region, this method can detect directly without training. (2) In shadow removal, the shadow region is restored according to the region matching. The calculation time is very short. Other features in the shadow region can be kept unchanged to a great extent, and only the shadow is restored.

The remainder of this paper is organized as follows. Section 2 introduces the related works of our proposed approach. In Section 3, we present the processes of shadow detection and removal. The experiments and experimental results' analysis are described in Section 4, and finally, this paper is briefly summarized in Section 5.

2. Related Works

At present, the commonly used shadow detection and removal methods are divided into three directions: the method based on a physical model, the method based on basic image features, and the method based on machine learning. At the same time, there are many ways to combine these directions.

2.1. Shadow Detection

2.1.1. Shadow Feature

At present, shadow detection based on image features is widely studied, mainly to extract some changing and invariant features in shadow. Zhu et al. [3] proposed a variety of features that change and remain unchanged under the shadow in the gray image, including the features of distinguishing dark objects from shadow regions. Lalonde et al. [4] emphasized the local features of the image and determined the shadow boundary quickly by comparing the features of adjacent regions. Chung et al. [5] proposed a single feature threshold method for directly distinguishing shadow regions. Dong et al. [6] proposed the characteristics of brightness and change rate. Some color-related features were mentioned in [1, 7] to distinguish shadow regions.

2.1.2. Machine Learning Method

Many works combine hand-crafted features with machine learning algorithms and train classifiers to distinguish shadow regions in a data-driven manner [1, 3, 4, 8]. For models that classify each pixel separately, most works use CRF, MRF, and similar models to maintain regional consistency [3, 9–11]. With the development of deep learning in recent years, deep learning models have also been applied to shadow detection [9, 10, 12]. Deep learning models make the original feature engineering unnecessary, but they usually require a large amount of data and a long training time.

2.2. Shadow Removal

Shadow removal can be carried out in two settings: on top of automatic image shadow detection, or with user assistance that supplies some image information. Earlier user-assisted methods required users to explicitly input complex information such as the shadow contour [13–15], whereas some recent user-assisted methods need only a small amount of input to obtain good results [16–18].

2.2.1. Shadow Removal Algorithm

At present, many shadow removal algorithms are based on shadow matting [1, 9], that is, to decompose the image into the form of some parameters acting on the original unshaded image, and then remove the shadow by calculating these parameters. On the basis of shadow detection, shadow boundary and image reorganization with shadow can be used to remove shadow [19–21]. Vicente and Samaras [2] proposed a method to restore the shadow by using the adjacent region of the shadow region, but this method required the shadow region to have the same material as its adjacent region. At the same time, some recent machine learning algorithms and deep learning methods also have good performance in shadow removal [9, 12, 22].

3. The Proposed Method

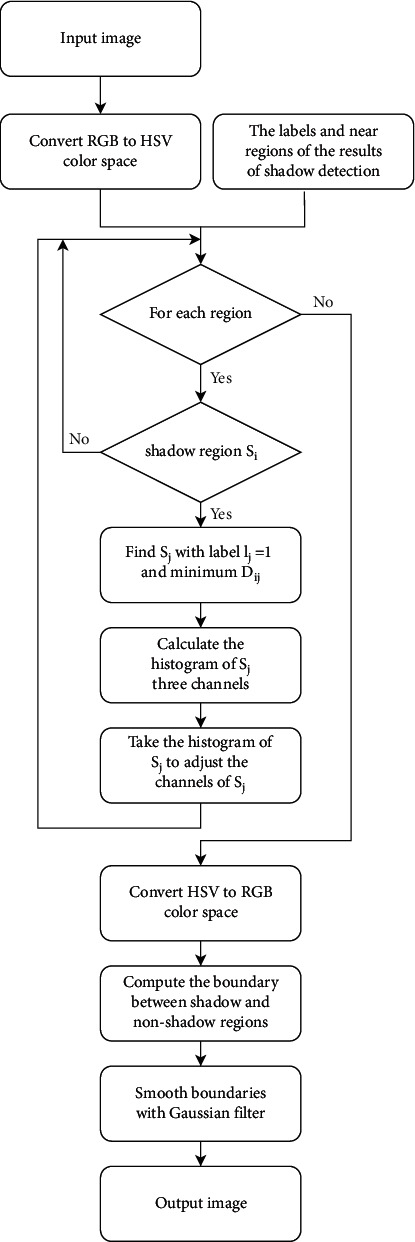

The proposed method is based on image region matching and image optical features. First, the Meanshift [23] algorithm is used to segment the image into n regions. Each region is denoted as Si, and its center is Ci. Then, each region is matched with the region whose material is closest to its own (see Section 3.1). Shadow detection (see Section 3.2) and shadow removal (see Section 3.3) are realized according to this region matching. The overall pipeline is shown in Figure 1.

Figure 1.

Pipeline of the proposed method.

3.1. Region Matching Calculation

Since the color, saturation, and other features will change in the shadow region and the target is looking for the region with the most similar materials, the features used in region matching are the two shadow-invariant features mentioned in reference [3]: gradient feature and texture feature.

3.1.1. Gradient Feature

The gradient change of the image region is hardly affected by shadow. The gradient similarity between regions with similar materials is stronger. In order to extract this feature, a histogram is calculated using the gradient value of each region in the image, and the similarity between regions is measured through the Euclidean distance of the histograms between two regions.
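The gradient feature described above can be sketched in Python as follows. This is only a minimal illustration under stated assumptions, not the authors' implementation: the grayscale image is assumed to be scaled to [0, 1], each region is given as a boolean mask, and the function names, the bin count, and the clipping value `max_grad` are all hypothetical choices.

```python
import numpy as np

def gradient_histogram(gray, mask, bins=16, max_grad=1.0):
    """Normalized histogram of gradient magnitudes inside one region.

    gray: 2-D float array scaled to [0, 1] (assumption).
    mask: boolean array of the same shape selecting the region's pixels.
    """
    gy, gx = np.gradient(gray.astype(float))
    magnitude = np.hypot(gx, gy)
    # Clip so that every pixel falls inside the histogram range.
    values = np.clip(magnitude[mask], 0.0, max_grad)
    hist, _ = np.histogram(values, bins=bins, range=(0.0, max_grad))
    return hist / max(hist.sum(), 1)

def histogram_distance(h1, h2):
    """Euclidean distance between two histograms (smaller means more similar)."""
    return float(np.linalg.norm(np.asarray(h1) - np.asarray(h2)))
```

Normalizing each histogram by its pixel count is a design choice of this sketch; it keeps regions of different sizes comparable.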

3.1.2. Texture Feature

Image surface texture features are almost independent of shadows. Specifically, the method in reference [24] is used to extract texture features. Similarly, the similarity between regions is measured by calculating the Euclidean distance of the histograms of two regions.

3.1.3. Distance between Regions

In order to ensure the local consistency, the distance between the central points of the two regions is added as the factor to judge the regional similarity. The calculation of similarity between regions is shown in the following equation:

| (1) |

where Dgradienti,j, Dtexturei,j, and Ddistancei,j, respectively, represent gradient feature similarity, texture feature similarity between regions i, j, and distance between center points of regions i, j.
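To illustrate how a combined distance can be used to pick the closest region for each region, the following Python sketch may help. The exact form of equation (1) is not reproduced here, so the unweighted sum used below is an assumption made purely for illustration, as are the function name and the expectation that the three terms have been scaled to comparable ranges.

```python
import numpy as np

def nearest_regions(d_gradient, d_texture, d_distance):
    """Return, for every region i, the index of its most similar region.

    Each argument is an (n, n) matrix of pairwise terms between regions.
    ASSUMPTION: the terms are combined by an unweighted sum; the paper's
    equation (1) may combine or weight them differently.
    """
    d = (np.asarray(d_gradient, dtype=float)
         + np.asarray(d_texture, dtype=float)
         + np.asarray(d_distance, dtype=float))
    # A region must not be matched with itself.
    np.fill_diagonal(d, np.inf)
    return d.argmin(axis=1), d
```

Ties are resolved in favor of the lowest region index, which is simply the behavior of `argmin`.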

3.2. Shadow Detection

In shadow detection, on the one hand, the shadow is judged by the shadow features; on the other hand, the shadow is judged by the mutual restriction between regions.

3.2.1. Feature Selection

In color images, color tone is a powerful descriptor that simplifies and dominates feature identification and extraction in visual pattern recognition applications. Shadow detection and removal require separate chrominance and luminance information. Since the image represented by the RGB color space is a mixture of chrominance and luminance information into the three components of R, G, and B, we need some other color models to handle shadow. There are five invariant color models, HSI model, HSV model, HCV model, YIQ model, and YCbCr model. All of the models consist of independent chromaticity and luminance. We select three common color space models to detect and remove shadows.

(1) Y-channel information in YCbCr color space: convert the original image from RGB color space to YCbCr color space. According to the method mentioned in reference [25], when the value of a pixel on the Y-channel in YCbCr space is less than 60% of the average value of the Y-channel of the whole image, it can be directly considered that the pixel is in shadow. In the algorithm introduced in Section 3.2.2, when the average value of the Y-channel of a region is less than 60% of the average value of the Y-channel of the whole image, this region is considered to be in shadow. Take the average value over the region Si and record the feature as Yi.

(2) HSI color space information: convert the original image from RGB space to HSI space and then normalize the values on the H and I channels to the [0, 1] interval to obtain He and Ie. According to the method in reference [5], the value in equation (2) is extracted for each pixel as another feature of the image. Take the average value over the region Si and record the feature as Ri.

| (2) |

where R(x, y), He(x, y), and Ie(x, y) represent the pixel values at position (x, y) in image R, image He, and image Ie, respectively.

(3) HSV color space information: convert the original image from RGB space to HSV color space. We utilize the chrominance channel H as the other feature. Take the average value over the region Si and record the feature as Hi.
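The Y-channel rule in item (1) can be sketched in Python as below. This is a hedged illustration rather than the authors' code: it assumes full-range ITU-R BT.601 luma weights without the offset that some YCbCr conversions add, and it assumes the segmentation is available as an integer label map with values 0 to n−1. The function names are hypothetical.

```python
import numpy as np

def luma_y(rgb):
    """Y channel from an (H, W, 3) RGB array.

    ASSUMPTION: full-range BT.601 weights with no offset; a studio-range
    conversion would shift the values and therefore the 60% threshold.
    """
    rgb = np.asarray(rgb, dtype=float)
    return 0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]

def region_means(feature, labels, n_regions):
    """Mean of a per-pixel feature over each region (labels are 0..n-1)."""
    flat = labels.ravel()
    sums = np.bincount(flat, weights=feature.ravel(), minlength=n_regions)
    counts = np.bincount(flat, minlength=n_regions)
    return sums / np.maximum(counts, 1)

def dark_regions(rgb, labels, n_regions, ratio=0.6):
    """Flag regions whose mean Y is below ratio times the image mean Y."""
    y = luma_y(rgb)
    y_region = region_means(y, labels, n_regions)
    return y_region < ratio * y.mean()
```

The same `region_means` helper could be reused to average the R and H features over each region.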

3.2.2. The Proposed Algorithm

Figure 2 shows the flowchart of the shadow detection approach.

Figure 2.

The flowchart of the shadow detection.

The specific implementation steps of the proposed shadow detection method are as follows.

Variable Preparation.

(1) Segment the image with the Meanshift algorithm to obtain n regions; each region is denoted as Si, and the center of each region is Ci.

(2) Compute the disparity between Si and Sj and get the corresponding region with the highest similarity for a given region which is denoted as Neari. At the same time, record the information labeli of whether the region i is in shadow and initialize labeli to 255. The similarity of regions i and j is computed as equation (1).

(3) For all Ri, 1 ≤ i ≤ n, use the K-means algorithm to calculate two centers, Cshadow and Clit, which represent the feature centers of the shadow regions and the lit regions, respectively. Assuming that R follows a normal distribution, the standard deviations Stdshadow and Stdlit corresponding to Cshadow and Clit are calculated. Then, for every Ri, the values Fshadow and Flit corresponding to Cshadow and Clit are computed.

(4) For each region Si, Refusei represents whether Si is forbidden to be a shadow region because of other regions, and Refusei is initialized to 0.
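The clustering in item (3) can be illustrated with a small Python sketch. Several points here are assumptions, not statements from the paper: the text does not define Fshadow and Flit, so they are modeled as Gaussian densities around each center; which of the two clusters corresponds to shadow depends on the definition of R and is left to the caller; and the function names are hypothetical.

```python
import numpy as np

def two_means_1d(values, iters=50):
    """K-means with k=2 on a 1-D array (Lloyd's algorithm).

    Returns (centers, stds, assignment); centers start at the min and max.
    """
    values = np.asarray(values, dtype=float)
    centers = np.array([values.min(), values.max()])
    assign = np.zeros(values.shape, dtype=int)
    for _ in range(iters):
        assign = np.abs(values[:, None] - centers[None, :]).argmin(axis=1)
        new_centers = np.array([
            values[assign == k].mean() if np.any(assign == k) else centers[k]
            for k in range(2)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    stds = np.array([
        values[assign == k].std() if np.any(assign == k) else 0.0
        for k in range(2)
    ])
    return centers, stds, assign

def gaussian_score(values, center, std, eps=1e-6):
    """ASSUMPTION: F is modeled as the normal density around a center."""
    std = max(float(std), eps)
    z = (np.asarray(values, dtype=float) - center) / std
    return np.exp(-0.5 * z ** 2) / (std * np.sqrt(2.0 * np.pi))
```

With this sketch, a region's score for each cluster would be obtained by calling `gaussian_score` once with each (center, std) pair.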

Steps.

(1) Extract features Yi and Ri and prepare relevant variables.

(2) If Yi < 60%∗mean(Yimage), labeli=shadow.

(3) Select the region Si with the greatest Fshadow and Refusei=0, and set labeli=shadow.

(4) Denote the nearest region Neari of Si as Sj. Check whether Si and Sj are brightness opposite regions by comparing Ri and Rj, and if so, then judge Refusej=1.

(5) Iteratively execute steps (3) and (4) until no update.

(6) For the region Si of labeli=shadow, if the brightness of Si is similar to Sj and Refusej=0 by comparing Yi, Yj, Ri, Rj, set labelj=0.

3.3. Shadow Removal

Shadow removal is mainly carried out in HSV color space. The overall idea is to find the corresponding region Sj for the shadow region Si to meet labelj=1 and minimize Di,j. Therefore, Sj is the most similar non-shaded region of Si. Consider using Sj to adjust the brightness of Si, remove the shadow on the Si through the histogram matching algorithm, and minimize the impact of the operation on other features. The flowchart of shadow removal is shown in Figure 3.

Figure 3.

The flowchart of the shadow removal.

3.3.1. Histogram Matching

Let Featurei denote the feature of region Si, and let HistT be a given template histogram. The purpose of histogram matching is to shift the overall distribution of Featurei so that it conforms to the template T while changing the relative distribution of the values within Featurei as little as possible. The specific steps are as follows.

(1) Calculate the histogram Histi of Featurei with the same number of bins as HistT.

(2) Calculate the cumulative histograms Acci and AccT for Histi and HistT, respectively.

(3) For each bin p in Acci, find the bin q in AccT whose cumulative value differs least from that of p.

(4) Move each bin p to the position q.
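The histogram matching steps can be sketched in Python as follows. This is a minimal illustration that assumes feature values normalized to [0, 1] and interprets the bin lookup as the usual nearest-cumulative-value rule of histogram matching; the function name, the bin count, and the choice of mapping each pixel to the center of its target bin are assumptions of this sketch.

```python
import numpy as np

def match_histogram(feature, template, bins=256, value_range=(0.0, 1.0)):
    """Map the values of `feature` so their distribution follows `template`.

    feature, template: arrays of values inside value_range (assumption).
    Returns an array shaped like `feature` holding the remapped values.
    """
    feature = np.asarray(feature, dtype=float)
    template = np.asarray(template, dtype=float)
    # Histograms with a shared binning, then cumulative histograms.
    hist_i, edges = np.histogram(feature, bins=bins, range=value_range)
    hist_t, _ = np.histogram(template, bins=bins, range=value_range)
    acc_i = np.cumsum(hist_i) / max(hist_i.sum(), 1)
    acc_t = np.cumsum(hist_t) / max(hist_t.sum(), 1)
    # For each source bin p, the template bin q with the closest cumulative value.
    q_of_p = np.abs(acc_i[:, None] - acc_t[None, :]).argmin(axis=1)
    # Move every pixel from its bin p to the center of bin q.
    centers = 0.5 * (edges[:-1] + edges[1:])
    p = np.clip(np.digitize(feature, edges[1:-1]), 0, bins - 1)
    return centers[q_of_p[p]]
```

Because the bin mapping is monotone, the ordering of pixel values inside the region is preserved, which is what keeps texture and other relative features largely intact.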

3.3.2. The Proposed Algorithm

The specific implementation steps of the proposed shadow removal method are as follows.

(1) Calculate the shadow detection result label and convert the image to HSV space at the same time.

(2) Repeat steps (3)–(5) for each shadow region Si.

(3) For region Si, find Sj with labelj=1 and minimum Di,j, and use Sj to relight Si.

(4) In the HSV color space, compute the histograms HistH,j, HistS,j, and HistV,j of Sj in the three channels H, S, and V, respectively.

(5) Take HistH,j, HistS,j, and HistV,j as the templates of histogram matching and adjust the three features of Si so that the feature distribution is close to the template.

(6) Convert the image to RGB space.

(7) Calculate the intersection boundary of all shadow regions and non-shaded regions in the image and then smooth all boundaries using a Gaussian filter.

4. Experiments

Experiments are conducted on the shadow dataset SBU [26]. SBU dataset is a large shadow detection dataset, which contains 4727 images, and each image has a ground truth based on pixel markers. In order to quantitatively evaluate the effectiveness of the proposed method and comparative methods, the commonly used indexes such as recall, specificity, and balanced error rate (BER) are used as the evaluation indexes, which are defined as follows:

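$$\text{Recall} = \frac{TP}{TP + FN}, \qquad \text{Specificity} = \frac{TN}{TN + FP}, \qquad \text{BER} = 1 - \frac{1}{2}\left(\text{Recall} + \text{Specificity}\right) \tag{3}$$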

where TP is the number of shadow pixels correctly classified as shadow, FN is the number of shadow pixels incorrectly classified as non-shaded, TN is the number of non-shaded pixels correctly classified, and FP is the number of non-shaded pixels incorrectly classified as shadow. The higher the recall, the more shadow pixels are found. The higher the specificity, the more non-shaded pixels are correctly identified. Recall and specificity typically trade off against each other, so the overall performance of the algorithm is measured by BER. The smaller the BER, the better the shadow detection performance.
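As an illustration of these evaluation indexes, the following Python sketch computes them from a predicted mask and a ground-truth mask. It uses the standard definitions of recall, specificity, and balanced error rate; the function name and the convention that `True` marks a shadow pixel are assumptions of the sketch.

```python
import numpy as np

def shadow_metrics(pred, truth):
    """Recall, specificity, and BER for boolean shadow masks.

    pred, truth: arrays of the same shape; True marks a shadow pixel.
    Both classes are assumed to be present in `truth`.
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)      # shadow correctly detected
    fn = np.sum(~pred & truth)     # shadow missed
    tn = np.sum(~pred & ~truth)    # non-shaded correctly kept
    fp = np.sum(pred & ~truth)     # non-shaded marked as shadow
    recall = tp / (tp + fn)
    specificity = tn / (tn + fp)
    ber = 1.0 - 0.5 * (recall + specificity)
    return float(recall), float(specificity), float(ber)
```

As a consistency check, inserting the recall and specificity reported for the proposed method in Table 1 (0.8460 and 0.9361) into the BER expression gives 1 − 0.5 × 1.7821 ≈ 0.109, which agrees with the reported BER.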

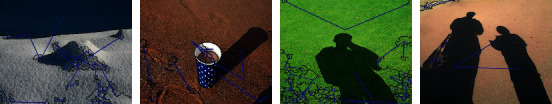

4.1. Region Matching

Region matching is shown in Figure 4. The blue line in the figure connects the center of each pair of matching regions. It can be seen that when the image segmentation is more accurate, the nearest region matching is also more accurate.

Figure 4.

Most similar region matching.

4.2. Shadow Detection

The parameter setting of shadow detection is mainly in steps (4) and (6) of Section 3.2.2.

In step (4), when equation (4) is satisfied between Si and Sj, it is considered that the label properties of Ri and Rj are opposite, and set Refusej=1.

| (4) |

where Ri and Rj are the shadow detection features of the regions Si and Sj. The values of Ri and Rj can be computed by equation (2). Cshadow is the feature center of the shadow regions, and Stdshadow is the standard deviation corresponding to Cshadow. Step (3) of the variable preparation in Section 3.2.2 describes the details.

In step (6), when equation (5) is satisfied between Si and Sj, it is considered that the label properties of Si and Sj are the same, and set labelj=0.

| (5) |

where Hi, Yi, Ri, Hj, Yj, and Rj are the shadow detection features of regions Si and Sj, respectively.

3 and 2.5 are empirical constants. Tuning of the parameters is outside the scope of the current work.

The performance of the proposed shadow detection method is evaluated by comparing it with two commonly used methods: Unary-Pairwise [27] and Stacked-CNN [26]. The Unary-Pairwise method is one of the best statistical methods to detect shadows from a single image. The Stacked-CNN method is a shadow detection method based on the deep learning framework using shadow prior map.

4.2.1. Qualitative Analysis

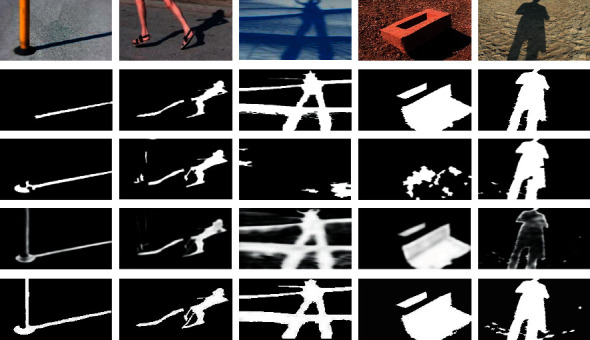

Figure 5 shows the shadow detection results of some images in the SBU dataset.

Figure 5.

Qualitative comparison of the proposed method with other methods. Rows from top to bottom: input images, ground truths, results of the Unary-Pairwise method, results of the Stacked-CNN method, and results of the proposed method.

It can be seen from the third row of Figure 5 that the Unary-Pairwise method has good shadow detection results in some images, but almost no shadow is detected in columns 3 and 4. The fourth row of Figure 5 shows the shadow detection results of the Stacked-CNN method, which detects all the correct shadows, but some of its results are visually blurred.

As can be seen from the last row of Figure 5, the proposed method makes relatively small errors in image shadow detection, and the detection is basically correct. The consistency of shadow regions is ensured by region matching. It should be noted that in the image in column 1, the black base of the pole is detected as a shadow because the color features of the black base are similar to those of the shadow. Objects with color features similar to shadow reduce the precision of shadow detection.

4.2.2. Quantitative Analysis

Table 1 shows the results of quantitative analysis on the SBU dataset. The proposed method achieves the highest specificity and the second-highest recall. Because recall and specificity trade off against each other, the BER metric is used to assess the overall shadow detection performance, and the proposed method achieves the lowest BER.

Table 1.

Evaluation of shadow detection methods on SBU dataset.

| Method | Recall | Specificity | BER |
|---|---|---|---|
| Unary-Pairwise | 0.5636 | 0.9357 | 0.2504 |
| Stacked-CNN | **0.8609** | 0.9059 | 0.1166 |
| Our method | 0.8460 | **0.9361** | **0.1090** |

The bold entries indicate the best result in a given column.

The Stacked-CNN method detects most shadow pixels in the dataset, but it identifies non-shaded pixels less reliably: some non-shaded pixels are wrongly classified as shadow pixels, resulting in blurred shadow detection images, which is consistent with the qualitative analysis. The Unary-Pairwise method has the lowest recall of shadow pixels on this large-scale shadow detection dataset because its loose constraints cause false pairing between regions. Although the recall of the proposed method is lower than that of the Stacked-CNN method (0.8460 vs. 0.8609), its specificity is higher (0.9361 vs. 0.9059). In terms of BER, the proposed method improves on Stacked-CNN by more than 6% in relative terms (0.1090 vs. 0.1166).

Table 2 shows the execution time of the testing phase for each method. Our method does not need training, and at 17.40 s per image it is about 3 times faster than the statistical method (51.56 s per image) and about 8 times faster than the deep learning method (141.56 s per image).

Table 2.

Execution time of shadow detection methods on the SBU dataset.

| Method | Testing (hours) | Testing (sec/image) | Training (hours) |
|---|---|---|---|
| Unary-Pairwise | 9.13 | 51.56 | ∼ |
| Stacked-CNN | 25.08 | 141.56 | 9.4 |
| Our method | **3.08** | **17.40** | ∼ |

The bold entries indicate the best result in a given column.

4.3. Shadow Removal

The parameters of shadow removal are set in step (7) of Section 3.3.2, in which Gaussian blur is applied to the points whose distance from the edge pixel is less than or equal to 2, and the parameters of Gaussian blur are set to hsize=15, σ=15.
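The boundary smoothing described above can be sketched in Python as follows. This is an illustrative approximation, not the authors' Matlab code: the kernel mimics a normalized Gaussian with hsize=15 and σ=15, the distance of 2 from an edge pixel is interpreted as a Chebyshev (square-neighborhood) distance, and the image border is handled by edge replication. All three choices, and the function names, are assumptions. The kernel size is assumed to be odd.

```python
import numpy as np

def gaussian_kernel(hsize=15, sigma=15.0):
    """Normalized hsize x hsize Gaussian kernel."""
    ax = np.arange(hsize) - (hsize - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()

def blur(channel, kernel):
    """Filter one channel with an odd-sized kernel, replicating the border."""
    r = kernel.shape[0] // 2
    h, w = channel.shape
    padded = np.pad(channel.astype(float), r, mode="edge")
    out = np.zeros((h, w), dtype=float)
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def boundary_band(shadow_mask, radius=2):
    """Pixels within `radius` (Chebyshev) of the shadow/non-shadow boundary."""
    m = np.asarray(shadow_mask, dtype=bool)
    horiz = m[:, 1:] != m[:, :-1]
    vert = m[1:, :] != m[:-1, :]
    edge = np.zeros_like(m)
    edge[:, 1:] |= horiz
    edge[:, :-1] |= horiz
    edge[1:, :] |= vert
    edge[:-1, :] |= vert
    h, w = m.shape
    padded = np.pad(edge, radius, mode="constant")
    band = np.zeros_like(m)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            band |= padded[dy:dy + h, dx:dx + w]
    return band

def smooth_boundaries(channel, shadow_mask, hsize=15, sigma=15.0, radius=2):
    """Replace only the pixels near the shadow boundary by their blurred value."""
    blurred = blur(channel, gaussian_kernel(hsize, sigma))
    band = boundary_band(shadow_mask, radius)
    return np.where(band, blurred, channel.astype(float))
```

For a color image, `smooth_boundaries` would be applied to each channel separately with the same shadow mask.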

The second row of Figure 6 shows the shadow removal process for some images in the SBU dataset, in which the red lines connect each shadow region Si to its matched non-shaded region Sj. Sj is then used to relight Si through the algorithm in Section 3.3.2.

Figure 6.

Qualitative analysis results of the proposed shadow removal method on the SBU dataset. Rows from top to bottom: input images, shadow removal matching regions, and shadow removal images.

The third row of Figure 6 shows the shadow removal results. It can be seen that the regions restored through region matching keep their original texture features, while their brightness changes. Before smoothing, the restored image has an obvious boundary around the original shadow region, but this edge effect is significantly weakened after Gaussian smoothing.

Through experiments, it is found that not all the shadows of the image can be removed correctly. Two failed shadow removal scenarios are shown in Figure 7. In the first image, the shadow occupies most of the image, and the shadow is evenly distributed in the image, which will lead to the uniform distribution of image feature histogram, and the non-shaded region is not enough to remove the shadow region. In the second image, the shadows of multiple objects are complex, and the fire hydrant is almost completely in shadow, resulting in the wrong shadow removal matching, so the shadows cannot be removed correctly.

Figure 7.

Failed images for shadow removal on the dataset.

In this work, the experiments are run on a PC with a 3.6 GHz CPU and 8 GB of RAM in the Matlab 2018b environment under Windows 10.

5. Conclusion

Experiments show that the shadow detection and removal algorithm based on region matching is effective. In the aspect of shadow detection, the consistency of images can be guaranteed to a certain extent through the mutual restriction of regions with the same material; in the aspect of shadow removal, the method of brightening the shadow by the region with similar material can minimize the impact of shadow removal on the non-shaded features in the shadow region.

The limitation of the proposed method is that it is difficult to ensure the accuracy of region matching for complex images. As a result, a wrong match can introduce wrong constraints in shadow detection or restore a region to the wrong material in shadow removal. In addition, the algorithm relies on Meanshift image segmentation, which struggles to segment complex images accurately.

Further improvement mainly lies in the calculation of the material matching region. First, with more datasets, the matching region could be obtained by training classifiers or by other learning-based methods. Second, more shadow-invariant features could be added to improve the accuracy of region matching in complex images.

Data Availability

The data used to support the findings of this study are included within the article.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

References

- 1. Guo R., Dai Q., Hoiem D. Paired regions for shadow detection and removal. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2013;35(12):2956–2967. doi: 10.1109/TPAMI.2012.214.

- 2. Vicente T. F. Y., Samaras D. Single image shadow removal via neighbor-based region relighting. Proceedings of the European Conference on Computer Vision; September, 2014; Munich, Germany. pp. 309–320.

- 3. Zhu J., Samuel K. G., Masood S. Z., Tappen M. F. Learning to recognize shadows in monochromatic natural images. Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition; June, 2010; San Francisco, CA, USA. pp. 223–230.

- 4. Lalonde J.-F., Efros A. A., Narasimhan S. G. Detecting ground shadows in outdoor consumer photographs. Proceedings of the European Conference on Computer Vision; September, 2010; Heraklion, Crete, Greece. pp. 322–335.

- 5. Chung K.-L., Lin Y.-R., Huang Y.-H. Efficient shadow detection of color aerial images based on successive thresholding scheme. IEEE Transactions on Geoscience and Remote Sensing. 2008;47(2):671–682.

- 6. Dong Q., Liu Y., Zhao Q., Yang H. Detecting soft shadows in a single outdoor image: from local edge-based models to global constraints. Computers & Graphics. 2014;38:310–319. doi: 10.1016/j.cag.2013.11.005.

- 7. Panagopoulos A., Wang C., Samaras D., Paragios N. Estimating shadows with the bright channel cue. European Conference on Computer Vision. Berlin, Heidelberg: Springer; 2010. pp. 1–12.

- 8. Vicente T. F. Y., Hoai M., Samaras D. Leave-one-out kernel optimization for shadow detection and removal. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2018;40(3):682–695. doi: 10.1109/TPAMI.2017.2691703.

- 9. Khan S. H., Bennamoun M., Sohel F., Togneri R. Automatic shadow detection and removal from a single image. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2015;38(3):431–446. doi: 10.1109/TPAMI.2015.2462355.

- 10. Khan S. H., Bennamoun M., Sohel F., Togneri R. Automatic feature learning for robust shadow detection. Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition; June, 2014; Columbus, OH, USA. IEEE; pp. 1939–1946.

- 11. Vicente T. F. Y., Yu C.-P., Samaras D. Single image shadow detection using multiple cues in a supermodular MRF. Proceedings of the BMVC; January, 2013; Aberystwyth, UK.

- 12. Qu L., Tian J., He S., Tang Y., Lau R. W. Deshadownet: a multi-context embedding deep network for shadow removal. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; July, 2017; Honolulu, HI, USA. pp. 4067–4075.

- 13. Arbel E., Hel-Or H. Shadow removal using intensity surfaces and texture anchor points. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2010;33(6):1202–1216. doi: 10.1109/TPAMI.2010.157.

- 14. Liu F., Gleicher M. Texture-consistent shadow removal. European Conference on Computer Vision. Berlin, Heidelberg: Springer; 2008. pp. 437–450.

- 15. Su Y.-F., Chen H. H. A three-stage approach to shadow field estimation from partial boundary information. IEEE Transactions on Image Processing. 2010;19(10):2749–2760. doi: 10.1109/tip.2010.2050626.

- 16. Shor Y., Lischinski D. The shadow meets the mask: pyramid-based shadow removal. Computer Graphics Forum. 2008;27(2):577–586.

- 17. Gong H., Cosker D. Interactive shadow removal and ground truth for variable scene categories. Proceedings of the BMVC; September, 2014; Nottingham. pp. 1–11.

- 18. Yu X., Li G., Ying Z., Guo X. A new shadow removal method using color-lines. Proceedings of the Computer Analysis of Images and Patterns; July, 2017; Seville, Spain. pp. 307–319.

- 19. Finlayson G. D., Fredembach C. Fast re-integration of shadow free images. Color and Imaging Conference. 2004;2004(1):117–122.

- 20. Fredembach C., Finlayson G. Hamiltonian path-based shadow removal. Proceedings of the 16th British Machine Vision Conference (BMVC); September, 2005; Oxford, UK. pp. 502–511.

- 21. Finlayson G. D., Hordley S. D., Drew M. S. Removing shadows from images. European Conference on Computer Vision. Berlin, Heidelberg: Springer; 2002. pp. 823–836.

- 22. Fu L. Auto-exposure fusion for single-image shadow removal. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; June, 2021; Nashville, TN, USA. pp. 10571–10580.

- 23. Comaniciu D., Meer P. Mean shift: a robust approach toward feature space analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2002;24(5):603–619. doi: 10.1109/34.1000236.

- 24. Martin D. R., Fowlkes C. C., Malik J. Learning to detect natural image boundaries using local brightness, color, and texture cues. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2004;26(5):530–549. doi: 10.1109/tpami.2004.1273918.

- 25. Blajovici C., Kiss P. J., Bonus Z., Varga L. Shadow detection and removal from a single image. 19th Summer School on Image Processing. Szeged, Hungary: Springer; 2011. pp. 7–16.

- 26. Vicente T. F. Y., Hou L., Yu C.-P., Hoai M., Samaras D. Large-scale training of shadow detectors with noisily-annotated shadow examples. European Conference on Computer Vision. Berlin, Heidelberg: Springer; 2016. pp. 816–832.

- 27. Guo R., Dai Q., Hoiem D. Single-image shadow detection and removal using paired regions. Proceedings of the CVPR 2011; June, 2011; Colorado Springs, CO, USA. IEEE; pp. 2033–2040.
