Abstract

Online and virtual teaching–learning has been a panacea that most educational institutions adopted out of the dire need created by COVID-19. We provide a comprehensive bibliometric study of 9523 publications on virtual laboratories in higher education covering the years 1991 to 2021. Influential bibliometrics such as publications and citations, productive countries, contributing institutions, funders, journals, authors, and bibliographic couplings were studied using the Scientific Procedures and Rationales for Systematic Literature Reviews (SPAR-4-SLR) protocol. A new metric to complement citations, Field Weighted Citation Impact, was introduced; it accounts for differences in research behavior across disciplines. Findings show that 72% of the research work was published between 2011 and 2021, most likely due to digitalization, with the highest number of publications in 2020–2021 highlighting the impact of the pandemic. Top contributing institutions were from the developed economies of Spain, Germany, and the United States. The citation impact of publications with international co-authors is the highest, highlighting the importance of co-authoring papers across countries. For the first time, Altmetrics were studied in the context of virtual labs, though a very low correlation was observed between citations and the Altmetric Attention Score. Still, the overall percentage of publications with attention showed linear growth. Our work also highlights that virtual laboratories could play a significant role in achieving the United Nations Sustainable Development Goals, specifically SDG4-Quality Education, which largely remains under-addressed.

Keywords: Virtual laboratories, Higher education, Bibliometrics, Citation analysis, Altmetrics, SDG

Introduction

Experimentation in laboratories is vital in science, engineering, and technology education. Traditionally, experiments are done in hands-on labs, which involve physical presence, procurement of equipment, and human resources to maintain them (Gomes & Bogosyan, 2009). Progressive technological developments and the ubiquity of the internet transformed lab experimentation in science, technology, and engineering, aiding distance learning or e-learning through virtual reality, virtual worlds, dynamics-based virtual systems, and virtual laboratories. Virtual experiments delivered with computer technology add value to physical experiments by allowing students to explore scientific phenomena; link observable and unobservable phenomena; point out salient information; enable learners to conduct multiple experiments in a short amount of time; and provide online, adaptive guidance (de Jong et al., 2013). Virtual laboratories direct students’ attention to variables and the interaction of the variables that produce the outcomes (Toth, 2016). As such, this technology has the potential to deliver a first-person experience that closely approximates that of a teaching laboratory (Vrellis et al., 2016). Virtual laboratories allow students to put theory into practice in appropriate experiments at a given level of advancement in a discipline or with specific topics within a course of study (Ural, 2016). Compared to traditional hands-on laboratories, they can offer reduced cost, greater accessibility, time savings, safe environments, and flexibility for self-regulated learning (Ali and Ullah, 2020; Alkhaldi et al., 2016).

Virtual laboratories can be considered an alternative to hands-on laboratories and can be regarded as equally effective (Kapici et al., 2019). An extensive pedagogical study developed two instruments to assess conceptual understanding and perceived platform effectiveness, and applied them to both a physical laboratory and a remotely triggerable Universal Testing Machine (RT-UTM). It showed that, when the RT-UTM platform was integrated into mainstream learning, remote users conducted experiments three times more frequently, completed assignments in 30% less time, and had over 200% improvement in scores (Achuthan et al., 2020). Virtual laboratories are generally accepted as a viable alternative to traditional labs for imparting practical skills to students and professionals, and they positively affect students’ learning processes (Stegman, 2021). As an innovation, virtual laboratories promote a resilient, inclusive, and sustainable approach to supplementing knowledge and training resources and to addressing common limitations of laboratory skill training (Achuthan et al., 2020).

Virtual laboratories are based on the concept of remote access to a simulated resource system. Researchers operate an experimentation interface on a virtual system through the internet; the simulated system is accessible by several users simultaneously (Heradio et al., 2016). There are several benefits of virtual labs in higher education (Tawfik et al., 2012). First, they are available anytime, anywhere. Second, they offer multi-tasking and observability, allowing several researchers to work concurrently on an investigation. Third, they provide safety compared to physical labs because users are not exposed to hazardous experimental setups. Finally, they offer flexibility: the ability to change experimental configurations and study their impact with little or no downtime. Virtual labs played a crucial role in the higher education sector during the COVID-19 pandemic. Using Google Analytics, Raman et al. (2021a, 2021b, 2021c) found that users increasingly adopted online labs during the pandemic as a new learning pedagogy for performing lab experiments, as indicated by parameters such as the number of users, the number of unique pages viewed per session, time spent viewing content, and bounce rate.

The literature contains a wide range of studies on virtual and remote labs in education (Heradio et al., 2016; Tzafestas et al., 2006; Balamuralithara & Woods, 2009; Grosseck et al., 2019; Chandrashekhar et al., 2020; Sweileh, 2020; Meschede, 2020; Ray & Srivastava, 2020; Raman et al., 2021b), technology-enhanced learning (Shen & Ho, 2020), e-learning (Hung, 2012), medical education (Nedungadi & Raman, 2016), and virtual reality in higher education (Shaista et al., 2021). Virtual labs have been studied as an alternative to in-person labs to determine whether students learn from the virtual lab experience (Davenport et al., 2018; Enneking et al., 2019; Miller et al., 2018). These studies highlighted that combined physical and virtual labs would frequently be needed, and that systematic blending would be required to suit specific learning objectives and learners (Achuthan et al., 2017).

Our study addresses gaps in the existing body of scientific literature on virtual laboratories in higher education in multiple ways. Earlier studies focused on science mapping and performance analysis, exploring current trends and critical issues in virtual laboratories. The three crucial gaps addressed in our work are as follows. Firstly, we study the intellectual structure of virtual laboratories over 30 years, giving comprehensive coverage of this important research topic. Secondly, we introduce new metrics, namely Field Weighted Citation Impact (Colledge & Verlinde, 2014) and alternative metrics (Priem et al., 2010) based on social media platforms, that have not been studied before in the context of virtual laboratories. Finally, we look at how virtual laboratories research contributes to the United Nations Sustainable Development Goals and at the recent impact of COVID-19. Virtual laboratories had been in use for years before the pandemic started. Still, user adoption of virtual laboratories increased during the pandemic-imposed lockdowns, and learners were less instructor-dependent (Radhamani et al., 2021). Virtual laboratories have been used as a complementary learning resource to in-person laboratories for both teachers and students since the early 2000s (Vasiliadou, 2020). The National Mission on Education through Information and Communication Technology (NMEICT), an initiative of the Ministry of Education, Government of India, launched in 2009, is an excellent example of the adoption of virtual laboratories pre-pandemic. India's Ministry of Human Resources Development initiative has simulation-based virtual labs in various science and engineering disciplines based on multiple university syllabi. Another example of adoption is the EXPERES project in Morocco from 2016 to 2018, in which Moroccan universities developed virtual laboratories and implemented them in the 12 science faculties in Morocco. The study further observed that virtual laboratories support adopting the proposed learning environment in laboratory educational procedures as an alternative to physical laboratories (El Kharki et al., 2021).

The analysis resulted in the key findings: SDG remains under-addressed, and countries worldwide should focus on virtual labs in higher education to achieve SDG, especially SDG4-Quality education. Virtual laboratories contribute the most to achieving SDG 4, and other SDGs are addressed in a mere 7% of all publications.

To our knowledge, this work is the first in the literature to do so. Secondly, while prior work on virtual laboratories has shown their successful usage as a supplement to physical laboratories, the COVID pandemic significantly reversed this impetus for virtual laboratory usage. This reversal and its impact on various disciplines are vital to monitor, as they add significant value to the future design and usage of virtual laboratories. Thirdly, while existing studies have carried out performance analysis using citation analysis, our work presents a more comprehensive overview using Altmetrics and citation impact, which have not been explored before.

In this work, we conduct a systematic literature review of 9523 articles related to virtual laboratories in higher education published between 1991 and 2021. Our methodology consists of bibliometric and Altmetrics analysis (Raman et al., 2021b, 2022) and content analysis (Radhamani et al., 2021; Tibaná-Herrera et al., 2018).

Specifically, our study explores the following research questions (RQs) regarding virtual laboratories in higher education.

RQ1: What are the bibliometric trends in publications, citations, and Altmetrics?

RQ2: Which are the top contributing institutions?

RQ3: Who are the prolific authors and their networks?

RQ4: Which are the most productive countries in terms of publications?

RQ5: Which are the influential publications based on citations and Altmetrics?

RQ6: Which are the top citing journals and their networks?

RQ7: What are the intellectual structure's major research themes, topics, and keyphrases?

RQ8: How is research on virtual laboratories contributing to UN SDG?

RQ9: What has been the impact of COVID-19 on the adoption of virtual laboratories?

This study analyses research on virtual laboratories over three decades and across different regions of the world, and examines how this research contributes to the UN SDGs and how it was affected by COVID-19. Hence this article caters to an international readership. Most bibliometric studies in the area of virtual laboratories cover the period up to 2015 and follow conventional science mapping and performance analysis methods exploring current trends. Through these research questions, we not only measure the impact of research using Altmetrics, which captures social media attention, but also assess its contribution to the UN SDGs. This makes the study unique and essential to researchers. The impact on virtual laboratories of a pandemic like COVID-19, a once-in-a-century phenomenon, is also explored, increasing the study's significance for a wider international readership.

Bibliometric analysis

The field of bibliometrics studies publication and citation patterns using quantitative techniques. Bibliometrics can be either descriptive, such as looking at how many articles an organization has published, or evaluative, such as using citation analysis to examine how those articles influenced subsequent research by others. According to Narin and Hamilton (1996) and Noyons et al. (1999), bibliometrics can be characterized by the type of analysis done, i.e., performance analysis and science mapping analysis. Typical performance analysis utilizes cumulative publications, citations, their ratio, yearly trends, and journal quality as indicated by SCImago rank and impact factor. In this work, we have considered the h-index (Hirsch, 2005), a popular metric used in bibliometric research (Gaviria-Marin et al., 2019). The h-index is the largest number ‘h’ such that ‘h’ documents have each received at least ‘h’ citations.

We have introduced two new indicators as part of the performance analysis in our study. The first is Field Weighted Citation Impact (FWCI), an article-level metric from SciVal. FWCI takes the form of a simple ratio: actual citations to a given publication divided by the expected rate for publications of similar age, subject, and type. It accounts for differences in research behavior across disciplines. The second indicator is Altmetrics, which attempts to capture research impact through non-traditional means like social media (Priem et al., 2012a). The Altmetric Attention Score (AAS) is a weighted count of all the online attention found for a publication. This includes mentions in public policy documents and references in Wikipedia, mainstream news, social networks, Twitter, blogs, and more (Williams, 2016). Most comments on the benefits of Altmetrics relate to their potential for measuring the broader impact of research, that is, beyond science (Priem et al., 2011, 2012b; Weller et al., 2011).
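The FWCI ratio described above can be written compactly. The symbols $c_i$ and $e_i$ are notation assumed here for illustration and are not taken from SciVal documentation:

$$
\mathrm{FWCI}_i = \frac{c_i}{e_i}
$$

where $c_i$ is the number of citations received by publication $i$ and $e_i$ is the expected number of citations for publications of similar age, subject, and type. As a purely hypothetical example, a publication with 12 citations whose comparison set is expected to receive 8 citations would have an FWCI of $12/8 = 1.5$, that is, 50% more citations than expected.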

The second type of bibliometric study is science mapping analysis, which evaluates a research field's cognitive and social structure (Small, 1999). Visualization of Similarities (VOS) viewer is a software tool specifically designed for constructing and visualizing bibliometric networks (van Eck & Waltman, 2010) and has been widely used in science mapping studies (Butt et al., 2020; Farooq et al., 2021; Khan et al., 2020; Saleem et al., 2021). Such science mapping illustrates the structural and dynamic aspects of scientific research (Cobo et al., 2011). With VOSviewer, patterns of influence in co-citations have been illustrated. Co-citation occurs when two documents receive a citation from the same third document (Small, 1973). An author co-citation analysis (ACA) allows us to understand how authors, as topic experts, connect ideas between published articles (Chen et al., 2010). Bibliographic coupling, which like co-citation is a similarity measure based on citation analysis, is also used in our study (Kessler, 1963).
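The difference between the two similarity measures can be made concrete with a small sketch. The toy reference lists below are hypothetical, and the code is only an illustration of the definitions; it is not how VOSviewer computes its networks.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy data: each citing document maps to the set of documents it references.
references = {
    "P1": {"A", "B", "C"},
    "P2": {"A", "B"},
    "P3": {"B", "C"},
}

def cocitation_counts(references):
    """Co-citation: count how often two cited documents share a citing document."""
    counts = Counter()
    for cited in references.values():
        for pair in combinations(sorted(cited), 2):
            counts[pair] += 1
    return counts

def coupling_strength(references):
    """Bibliographic coupling: count references shared by two citing documents."""
    strength = Counter()
    for first, second in combinations(sorted(references), 2):
        shared = len(references[first] & references[second])
        if shared:
            strength[(first, second)] = shared
    return strength

cocitations = cocitation_counts(references)
couplings = coupling_strength(references)
```

Co-citation links the cited documents (here A, B, C), whereas bibliographic coupling links the citing documents (here P1, P2, P3), so the two measures describe the same citation data from opposite directions.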

The Dimensions tool from Digital Science, which is widely used in bibliometrics studies, was used for retrieving the bibliographic data of Virtual Laboratories (Bode et al., 2018; Herzog et al., 2020; Martín-Martín et al., 2021).

SPAR-4-SLR protocol

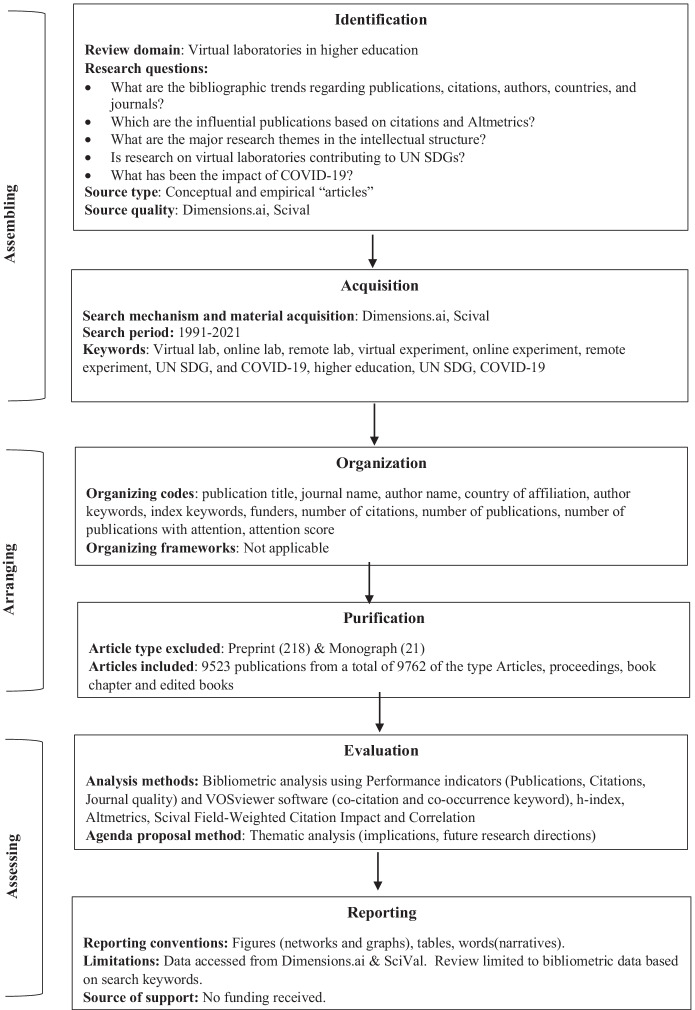

For our study, we adopted the 3-stage Scientific Procedures and Rationales for Systematic Literature Reviews (SPAR-4-SLR) protocol developed by Paul et al. (2021), as shown in Fig. 1. The three stages are assembling, arranging, and assessing.

Fig. 1.

The research design follows the SPAR-4-SLR protocol

Assembling

Assembling involves the identification and acquisition of publications for review. The bibliographic data of publications were collected from the Dimensions database, which is used in several bibliometric studies (Bornmann, 2018; Bornmann & Marx, 2018; Singh et al., 2021). The search period was from 1991 to 2021, and the following keywords were used: virtual lab*, online lab*, remote lab*, virtual experiment*, online experiment*, remote experiment*, UN SDG*, COVID-19*, and higher education. A total of 9762 publications were returned.

Arranging

The next stage is arranging, which involves the organization and purification of the publications. Publications were organized using the publication title, journal title, author name, country of affiliation, author and index keywords, funders, number of citations, number of publications, h-index, FWCI, and attention score. In terms of purification, only the following publication types were included: articles, proceedings, book chapters, and edited books; publications of type preprint and monograph were removed. After purification, 9523 publications were included for analysis.
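The purification step amounts to filtering records by publication type; with the counts reported above, it removed 9762 − 9523 = 239 records. A minimal sketch of such a filter follows. The record fields and type labels are hypothetical and are not the Dimensions export schema.

```python
# Hypothetical type labels for illustration; they are not the Dimensions schema.
INCLUDED_TYPES = {"article", "proceeding", "chapter", "edited_book"}

def purify(records):
    """Keep only records whose publication type is on the inclusion list."""
    return [record for record in records if record["type"] in INCLUDED_TYPES]

# Toy records standing in for an exported result set.
records = [
    {"title": "Remote lab study", "type": "article"},
    {"title": "Early draft", "type": "preprint"},
    {"title": "Conference paper", "type": "proceeding"},
    {"title": "Long-form book", "type": "monograph"},
]

kept = purify(records)
removed = len(records) - len(kept)
```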

Assessing

The final stage is assessing, which involves evaluation and reporting. SPAR-4-SLR protocol provides valuable suggestions that can help scholars justify the logic (rationale, reason) behind review decisions (Paul et al., 2021). The bibliometric review has been done in this article using bibliographic modeling and topic modeling, e.g., co-citation analysis, bibliographic coupling, and keyword co-occurrence analysis, to ensure rigor. The agenda proposal method has been used to identify the gaps based on a review of existing literature and suggest future research directions. No ethics clearance was required since the study is predicated on secondary data that can be accessed by anyone who has access to Dimensions. The reporting conventions of the review include figures, tables, and words, whereas the limitations and sources of support are acknowledged toward the end of this article.

List of Indicators

TP: Total number of publications in the Dimensions database

TC: Number of times a publication has been cited by other publications in the Dimensions database. The values per year are the citations received in each year.

TC/TP: Ratio of Total Citations/Total Publications

h-index: maximum value of h such that the given journal has published at least h papers that have each been cited at least h times.

TC/Year: Total Citations received in a year

SJR: SCImago Journal Rank. According to this Rank, prestige is transferred between journals based on their citation links.

Impact Factor: The number of citations received in a given year by a journal’s publications from the previous two years, divided by the number of “citable” publications in those two years.

TPA: Total publications with Altmetrics Attention Score. It is a weighted count of all the online attention. The values per year represent the years in which the publications were published.

TPA (%): Percentage of publications with Altmetrics Attention Score

FWCI: Field Weighted Citation Impact—the ratio of actual citations to a given publication divided by the expected rate for publications of similar age, subject, and type.
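Several of the indicators listed above are simple to compute from raw counts. The following is a minimal sketch under the definitions given in this list; the function names are illustrative and do not correspond to any tool used in the study.

```python
def h_index(citations):
    """Largest h such that at least h items have h or more citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h

def citations_per_publication(total_citations, total_publications):
    """TC/TP: ratio of total citations to total publications."""
    if total_publications == 0:
        return 0.0
    return total_citations / total_publications

def tpa_percent(publications_with_attention, total_publications):
    """TPA (%): share of publications that have an Altmetric Attention Score."""
    if total_publications == 0:
        return 0.0
    return 100.0 * publications_with_attention / total_publications
```

For instance, a set of five publications cited 10, 8, 5, 4, and 3 times has an h-index of 4, because four of them have at least four citations each but not all five have at least five.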

Results

Trends of publications, citations, and Altmetrics

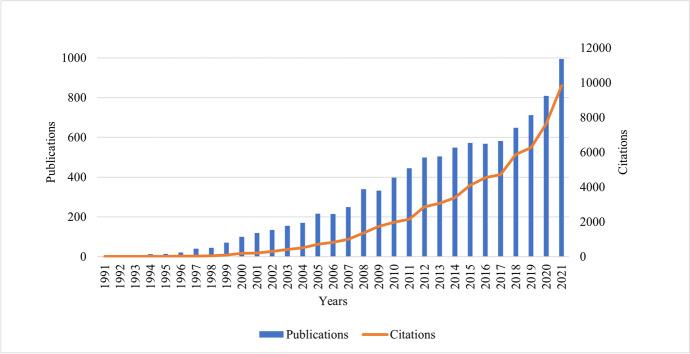

The bibliometric trends for 9523 publications and their citations for the period 1991 to 2021 are shown in Fig. 2. Increased focus on the digitalization of education led to the design, development, and usage of virtual laboratories in higher education from 2011 to 2021 (T3). During T3, there were 6883 publications, accounting for more than 72% of the total publications. Specifically, the last two years had the highest number of yearly publications due to the massive adoption of new online and virtual learning models induced by COVID-19. Correspondingly, the research influence of virtual laboratories as measured by citations also showed a significant increase in T3, accounting for 85% of the total citations received from 1991 to 2021.

Fig. 2.

Year-wise trends of publications and citations

Table 1 shows the temporal evolution of virtual laboratories’ performance across three time periods. The average TP showed a quantum jump from 31 in T1 to 626 in T3. The average TC also increased exponentially from 45 in T1 to 4952 in T3. The average TC/TP also has grown more than three times from T1 to T3.

Table 1.

Comparison of key performance indicators across T1, T2, T3

| Performance Indicators | 1991–2000 (T1) | 2001–2010 (T2) | 2011–2021 (T3) |

|---|---|---|---|

| TP | 31 | 233 | 626 |

| TC | 45 | 908 | 4952 |

| TC/TP | 2.4 | 3.5 | 7.6 |

In Table 2, we observe how different forms of collaborations have contributed to citation impact (FWCI) of the publications. An FWCI of greater than 1.00 indicates that the publications have been cited more than expected based on the world average for similar publications. Though institutional collaboration contributed the most publications (51.1%), the citation impact from international collaborations is the highest, highlighting the importance of co-authoring papers with different countries.

Table 2.

Impact of collaboration on citation impact

| Type of Collaboration | % Share | FWCI |

|---|---|---|

| International | 12.2% | 1.68 |

| National | 20.4% | 0.94 |

| Institutional | 51.1% | 0.72 |

| Single authorship (no collaboration) | 16.3% | 0.61 |

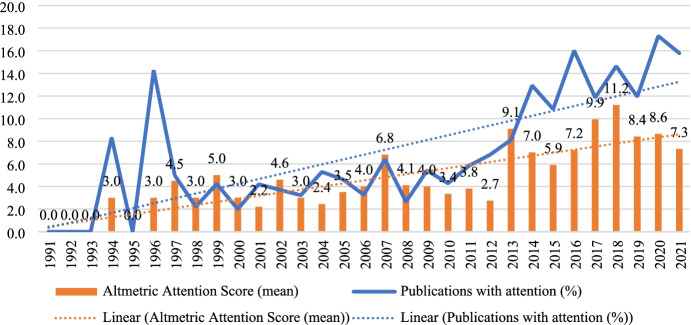

Finally, we looked at the trends in the percentage of publications with attention and in the Altmetric Attention Score (AAS) between 1991 and 2021 (Fig. 3). The mean value of AAS shows a linear upward trend, with the highest value in 2018. Similarly, the percentage of publications with attention shows linear growth, possibly indicating that authors are actively promoting their research work on social media platforms.

Fig. 3.

Year-wise trends of Attention Score (AAS) and Percentage of Publications with Attention

Top contributing institutions

It is essential to recognize the top contributing institutions researching virtual laboratories in higher education so that their work may be tracked to stay updated with developments in the field. To address RQ2, bibliometric citation analysis was used to identify the top institutions. Table 3 shows the leading institutions and their publications and citations. The results show these top institutions belong to the following countries: Germany (4), Spain (3), United States of America (2), Portugal (2), and Slovakia, Austria, Netherlands, Switzerland, Brazil, Indonesia, and Romania (1 each). The findings show that European countries lead the research on virtual labs in higher education. Institutions from Asia and the developing world are sparsely represented, with only Indonesia and Brazil appearing in the list.

Table 3.

Top contributing institutions based on publications and citations

| Name | Country | TP | TC | TC/TP | TPA (%) |

|---|---|---|---|---|---|

| National University of Distance Education (UNED) | Spain | 177 | 2293 | 12.95 | 7.3 |

| University of Deusto | Spain | 125 | 1221 | 9.77 | 6.4 |

| Slovak University of Technology in Bratislava (STU) | Slovakia | 65 | 392 | 6.03 | - |

| Carinthia University of Applied Sciences (CUAS) | Austria | 60 | 305 | 5.08 | 3.3 |

| University of Amsterdam (UvA) | Netherlands | 58 | 1201 | 20.71 | 17.2 |

| Swiss Federal Institute of Technology in Lausanne (EPFL) | Switzerland | 54 | 769 | 14.24 | 14.8 |

| Massachusetts Institute of Technology (MIT) | United States | 50 | 1403 | 28.06 | 12 |

| Universidade Federal de Santa Catarina (UFSC) | Brazil | 48 | 137 | 2.85 | 8.3 |

| Polytechnic Institute of Porto | Portugal | 46 | 361 | 7.85 | 6.5 |

| TU Dortmund University | Germany | 46 | 224 | 4.87 | 6.5 |

| Ilmenau University of Technology | Germany | 46 | 226 | 4.91 | 4.3 |

| Transylvania University of Brașov (UTBv) | Romania | 45 | 146 | 3.24 | 2.2 |

| Oregon State University (OSU) | United States | 44 | 1123 | 25.52 | 18.1 |

| University of Stuttgart | Germany | 44 | 369 | 8.39 | 4.5 |

| University of Porto | Portugal | 44 | 365 | 8.3 | - |

| RWTH Aachen University (RWTH) | Germany | 43 | 269 | 6.26 | 2.3 |

| Indonesia University of Education (UPI) | Indonesia | 42 | 69 | 1.64 | - |

| Technical University of Madrid (UPM) | Spain | 40 | 346 | 8.65 | 10 |

Among the top contributing institutions, the National University of Distance Education (UNED) has the most publications (TP:177) and citations (TC:2293), followed by the University of Deusto (TP:125, TC:1221). Both universities are in Spain. Interestingly, though the Massachusetts Institute of Technology, United States, has fewer publications (TP:50), it has a higher number of citations (TC:1403) than the University of Deusto (TC:1221), which has the second-highest number of publications (TP:125). Though Germany has the largest number of top contributing institutions (4), the number of publications (TP) from these institutions is more or less the same.

UNED has the highest TP (TP:177) and TC (TC:2293), but the highest TC/TP is for publications from the Massachusetts Institute of Technology (MIT) (TC/TP:28.06). A different story is revealed when we analyze the top contributing institutions based on TPA (%). TPA (%) is the highest for publications from Oregon State University (OSU) (TP:44, TC:1123, TPA:18.1%). The TPA (%) is also high for publications from the Netherlands and Switzerland. The United States and the United Kingdom are the countries with the most publications with AAS in Dimensions. The Netherlands and Switzerland feature among the top countries with a higher number of publications in Dimensions and AAS (Orduna-Malea & López-Cózar, 2019).
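The point that the leading institution depends on the chosen indicator can be checked directly against Table 3. The sketch below copies five rows from the table and ranks them four ways; the helper name `top_by` is illustrative only.

```python
# (name, TP, TC, TPA %) copied from five rows of Table 3.
institutions = [
    ("UNED", 177, 2293, 7.3),
    ("University of Deusto", 125, 1221, 6.4),
    ("University of Amsterdam", 58, 1201, 17.2),
    ("MIT", 50, 1403, 12.0),
    ("Oregon State University", 44, 1123, 18.1),
]

def top_by(rows, key):
    """Return the name of the row that maximizes the given indicator."""
    return max(rows, key=key)[0]

most_publications = top_by(institutions, lambda row: row[1])
most_citations = top_by(institutions, lambda row: row[2])
highest_tc_per_tp = top_by(institutions, lambda row: row[2] / row[1])
highest_tpa = top_by(institutions, lambda row: row[3])
```

On these rows, UNED leads on both TP and TC, MIT leads on TC/TP, and Oregon State University leads on TPA (%), which matches the description above.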

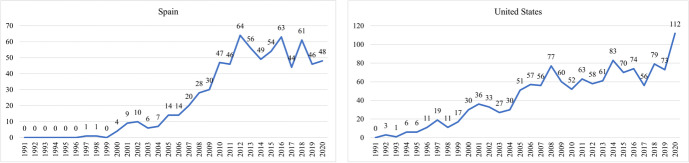

On further analysis of the growth of publications, the number of publications from the USA was higher than that of other countries during the initial years, i.e., the early 1990s. However, the steeper growth in publications between 2001 and 2021 was attributable to a larger contribution by European authors. Spain emerged as the country with the highest number of publications. Considering the growth in citations, the average citation count for Spain was 53.1 over the last 10-year period, while that for the USA was 72.9 over the same period, indicating a larger research impact of publications from the USA, as shown in Fig. 4.

Fig. 4.

Citation over the years—Spain and USA

Prolific authors based on publications, citations and Altmetrics

The recognition of prolific authors is an accepted practice in bibliometrics (Marín et al., 2018). The prolific authors in virtual labs were ranked based on publications (TP), citations (TC), and percentage of publications with attention (TPA) (Table 4).

Table 4.

Top authors based on publications, citations, and Altmetrics

| Based on TP | | | | Based on TC | | | | Based on TPA (%) | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Author | TP | TC | TC/TP | Author | TC | TP | TC/TP | Author | TPA (%) | TP | TC |

| Javier Garcia-Zubia | 115 | 1300 | 11.3 | Sebastian Dormido | 1769 | 84 | 21.05 | Glen E P Ropella | 54 | 13 | 45 |

| Manuel Alonso Castro | 110 | 977 | 8.8 | Javier Garcia-Zubia | 1300 | 115 | 11.3 | Ton De Jong | 47 | 15 | 940 |

| Pablo Orduña | 90 | 956 | 10.6 | Manuel Alonso Castro | 977 | 110 | 8.8 | Johan P M Hoefnagels | 42 | 12 | 99 |

| Gustavo Ribeiro Costa Alves | 89 | 840 | 9.4 | Pablo Orduña | 956 | 90 | 10.6 | Willian Rochadel | 36 | 14 | 51 |

| Sebastian Dormido | 84 | 1769 | 21.05 | Ton De Jong | 940 | 15 | 62.6 | Marc G D Geers | 31 | 13 | 67 |

| Elio Sancristobal | 55 | 719 | 13.07 | Gustavo Ribeiro Costa Alves | 840 | 89 | 9.4 | Fernando Torres | 21 | 14 | 432 |

| Krishnashree Achuthan | 54 | 447 | 8.2 | Luis De La Torre | 763 | 45 | 16.9 | Nijin Nizar | 21 | 14 | 102 |

| Karsten Henke | 53 | 262 | 4.9 | Denis Gillet | 723 | 49 | 14.7 | Krishnashree Achuthan | 20 | 54 | 447 |

| Gabriel Diaz | 49 | 723 | 14.7 | Elio Sancristobal | 719 | 55 | 13.07 | Francisco Andrés Candelas | 20 | 15 | 406 |

| Denis Gillet | 49 | 533 | 10.8 | Jeffrey J Mcdonnell | 654 | 10 | 65.4 | Dhanush Kumar | 20 | 15 | 122 |

| Ignacio Angulo | 49 | 351 | 7.1 | José Ignacio Sánchez Sánchez | 641 | 34 | 18.8 | David B Lowe | 18 | 17 | 319 |

| Luis Rodriguez-Gil | 48 | 393 | 8.1 | Francisco Esquembre | 628 | 28 | 22.4 | Carlos Alberto Jara | 17 | 18 | 444 |

| Heinz-Dietrich Wuttke | 46 | 232 | 5.04 | Ingvar Gustavsson | 612 | 29 | 21.1 | Milo D Koretsky | 17 | 24 | 165 |

| Luis De La Torre | 45 | 763 | 16.9 | Hamideh Afsarmanesh | 612 | 14 | | Shyam Diwakar | 16 | 38 | 389 |

| André Vaz Da Silva Fidalgo | 41 | 278 | 6.7 | Luis Manuel Camarinha-Matos | 548 | 8 | | Serafí N Alonso | 15 | 13 | 107 |

The top authors publishing on virtual laboratories are Javier Garcia-Zubia (TP:115) and Manuel Alonso Castro (TP:110). Javier Garcia-Zubia's most-cited research papers are “VISIR: Experiences and challenges” (TC:35), published in the International Journal of Online Engineering in 2021, and “State of art, initiatives and new challenges for virtual and remote labs” (TC:26), published in the Proceedings of the 12th IEEE International Conference on Advanced Learning Technologies, ICALT 2012. Manuel Alonso Castro's most-cited research papers are “Integration view of web labs and learning management systems” (TC:54), published at the 2010 IEEE Education Engineering Conference, EDUCON 2010, and “State of art, initiatives and new challenges for virtual and remote labs” (TC:26), published in the Proceedings of the 12th IEEE International Conference on Advanced Learning Technologies, ICALT 2012.

Until a decade ago, the quality of publications was recognized mostly by way of citations, but this trend has changed with the popularity of social networking platforms such as Facebook, Twitter, LinkedIn, and Mendeley. The penetration of ICT has given rise to a new area called Altmetrics. Priem and Hemminger (2010) first coined the term “Altmetrics” and subsequently published a manifesto (Priem et al., 2010). Bornmann (2014) proposed Altmetrics as an alternative to and an extension of traditional bibliometric indicators (such as the Journal Impact Factor or h-index). Altmetrics make it possible to study the impact of a paper just a few days or weeks after it has appeared, and the techniques include both “intrinsic” measures linked to the author’s scientific community and “extrinsic” measures from the broader context outside the research community (Poplašen & Grgić, 2017). When we look at TC/TP to measure research impact, Sebastian Dormido has the highest TC/TP (21.05) among the authors ranked by publications, but Glen E P Ropella has the highest share of publications with attention (TPA: 54%). This indicates that more citations for an author do not necessarily mean higher attention.

Most productive countries based on publications, citations, and Altmetrics

To address RQ4, we ranked the most productive countries based on publications and citations (Table 5). Our analysis shows that the top five countries which have published on the topic are the United States of America (TP:1406), China (TP:763), Spain (TP:749), Germany (TP:572), and Italy (TP:351). The total citations are highest for the United States (TC:19658) followed by Spain (TC: 8216), Germany (TC: 5822), and the UK (TC: 5231). These top 4 countries account for more than 61% of the total citations.

Table 5.

Most productive countries based on publications, citations, and Altmetrics

| Name (ranked by TP) | TP | TC | TPA (%) | Name (ranked by TC) | TC | TP | TPA (%) | Name (ranked by TPA) | TP | TC | TPA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| United States | 1532 | 19,659 | 21.0 | United States | 19,659 | 1532 | 21.0 | New Zealand | 20 | 183 | 50.0 |
| China | 823 | 3143 | 5.2 | Spain | 8216 | 782 | 10.1 | Israel | 27 | 455 | 48.2 |
| Spain | 782 | 8216 | 10.1 | Germany | 5822 | 632 | 13.8 | Denmark | 51 | 539 | 39.2 |
| Germany | 632 | 5822 | 13.8 | UK | 5231 | 336 | 28.6 | Cyprus | 19 | 589 | 31.6 |
| Italy | 380 | 3694 | 10.5 | Australia | 4157 | 297 | 24.9 | UK | 336 | 5231 | 28.6 |
| UK | 336 | 5231 | 28.6 | Italy | 3694 | 380 | 10.5 | Finland | 46 | 647 | 28.3 |
| India | 329 | 1349 | 8.5 | Netherlands | 3320 | 195 | 20.5 | Qatar | 23 | 118 | 26.1 |
| Australia | 297 | 4157 | 24.9 | China | 3143 | 823 | 5.2 | Australia | 297 | 4157 | 24.9 |
| Indonesia | 291 | 578 | 1.4 | France | 2884 | 281 | 13.5 | United States | 1532 | 19,659 | 21.0 |
| France | 281 | 2884 | 13.5 | Switzerland | 2780 | 144 | 20.8 | Switzerland | 144 | 2780 | 20.8 |
| Portugal | 281 | 2541 | 6.1 | Portugal | 2541 | 281 | 6.1 | Canada | 189 | 1976 | 20.6 |
| Russia | 242 | 553 | 3.3 | Canada | 1976 | 189 | 20.6 | Netherlands | 195 | 3320 | 20.5 |
| Japan | 233 | 1671 | 9.0 | Japan | 1671 | 233 | 9.0 | Sweden | 109 | 1288 | 18.4 |
| Brazil | 226 | 1084 | 9.7 | Austria | 1461 | 136 | 11.0 | Belgium | 98 | 1061 | 17.4 |
| Netherlands | 195 | 3320 | 20.5 | India | 1349 | 329 | 8.5 | South Africa | 35 | 116 | 17.1 |
| Canada | 189 | 1976 | 20.6 | Sweden | 1288 | 109 | 18.4 | Greece | 136 | 968 | 16.9 |
| Romania | 165 | 555 | 3.0 | Brazil | 1084 | 226 | 9.7 | Croatia | 25 | 222 | 16.0 |
| Switzerland | 144 | 2780 | 20.8 | South Korea | 1072 | 95 | 15.8 | South Korea | 95 | 1072 | 15.8 |
| Slovakia | 143 | 728 | 2.8 | Belgium | 1061 | 98 | 17.4 | Malaysia | 54 | 355 | 14.8 |

Alternative metrics, called Altmetrics, have been proposed to obtain, evaluate, and characterize scientific information about the most productive countries through data from social media and related platforms such as Twitter, Facebook, Google+, blogs, Mendeley Readers, CiteULike, Reddit, and Wikipedia, among others (Priem et al., 2012a). The percentage of Total Publications with Attention (TPA) reveals a different picture. New Zealand (TP: 20, TC: 183) and Israel (TP: 27, TC: 455) have far fewer publications and citations than the leading countries, yet they have the highest TPA percentages (New Zealand TPA: 50.0%, Israel TPA: 48.2%). This may reflect high visibility on social media platforms rather than scholarly relevance (Trueger et al., 2015; Veeranjaneyulu, 2018).
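
The indicators compared above (TP, TC, TC/TP, and TPA) can be derived from per-publication records. The following is a minimal sketch rather than the procedure used in this study: the `Publication` record, its field names, and the toy data are hypothetical, and it assumes that a publication counts as having "attention" when its Altmetric Attention Score is greater than zero.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Publication:
    """Hypothetical record; field names are illustrative, not from the study."""
    country: str
    citations: int
    aas: Optional[float]  # Altmetric Attention Score, None when no score exists


def country_indicators(pubs):
    """Return {country: (TP, TC, TC/TP, TPA %)}.

    Assumption: a publication has 'attention' when its AAS is greater than zero.
    """
    grouped = {}
    for pub in pubs:
        grouped.setdefault(pub.country, []).append(pub)

    result = {}
    for country, items in grouped.items():
        tp = len(items)                                   # total publications
        tc = sum(pub.citations for pub in items)          # total citations
        with_attention = sum(
            1 for pub in items if pub.aas is not None and pub.aas > 0
        )
        result[country] = (tp, tc, tc / tp, 100.0 * with_attention / tp)
    return result


# Toy data (made up): country A is cited more, country B is mentioned more.
toy = [
    Publication("A", 40, None), Publication("A", 25, 0),
    Publication("A", 10, 3.0), Publication("A", 5, None),
    Publication("B", 4, 12.0), Publication("B", 2, 1.5),
]
indicators = country_indicators(toy)
for name, (tp, tc, tc_per_tp, tpa) in indicators.items():
    print(f"{name}: TP={tp} TC={tc} TC/TP={tc_per_tp:.1f} TPA={tpa:.0f}%")
```

In the toy data, the country with the higher TC/TP has the lower TPA, which mirrors the pattern reported for New Zealand and Israel in Table 5.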

Influential publications based on citations

Table 6 shows influential publications ranked by citations. Several of the top-cited publications are literature reviews or bibliometric reviews on the topic of virtual laboratories.

Table 6.

Influential publications according to citations and their key findings

| Author/Year | Journal | TC | Research questions | Key findings |
|---|---|---|---|---|
| Ma and Nickerson (2006) | ACM Computing Surveys | 517 | A literature review of hands-on, simulated, and remote laboratories and their role in science education | (i) detailed review of virtual labs in education, (ii) hands-on, simulated, and remote labs are essential for education, (iii) technology is commonly used in all types of labs |
| de Jong et al. (2013) | Science | 372 | To discuss the importance of physical and virtual labs in higher education | (i) discussed past literature on the utilization of physical and digital labs in student education, (ii) recommend that virtual labs are essential for the development of higher education |
| Gomes and Bogosyan (2009) | IEEE Transactions on Industrial Electronics | 307 | The role of remote laboratories in engineering student education | (i) overview of the role of remote laboratories in engineering, (ii) examples of remote laboratories are presented |
| Potkonjak et al. (2016) | Computers & Education | 294 | A literature review of virtual labs in science, technology, and engineering | (i) overview of the past literature on the topic, (ii) presented future research directions and discussed the essentials of virtual labs in higher education |
| Harward et al. (2008) | Proceedings of the IEEE | 291 | An overview of the MIT iLab project, which developed a software toolkit for virtual labs through the internet | (i) overview of iLab and their developed software kits for utilizing the |
| Aktan et al. (1996) | IEEE Transactions on Education | 229 | The discussion and analysis of distance learning applied to virtual laboratories in the engineering discipline | (i) overview of the application of distance learning tools through the internet to experiments of engineering students, (ii) detailed overview of past literature |
| Weiler & McDonnell (2004) | Journal of Hydrology | 220 | To conceptualize the process of hillslope hydrology through virtual labs | (i) presents an overview of virtual labs and experimentation in conceptualizing hillslope hydrology |
| Heradio et al. (2016) | Computers & Education | 205 | A bibliometric review of virtual and remote labs | (i) improve the discussion about virtual lab literature through bibliometric analysis |

TC indicates Total Citations

For example, Ma and Nickerson (2006) present a detailed literature review of hands-on, simulated, and remote laboratories and their role in science education. They summarize, compare, and provide insights about the literature related to the three types of laboratories. They concluded that the boundaries among hands-on, simulated, and remote laboratories are blurred, in the sense that most laboratories are mediated by computers, and that the psychology of presence may be as important as technology. de Jong et al. (2013) reviewed the literature to contrast the value of physical and virtual investigations and to offer recommendations for combining the two to strengthen science learning. Gomes and Bogosyan (2009) present a literature review and discuss the role of virtual labs in student education. They also report the latest trends related to remote laboratories. In another study, Heradio et al. (2016) conducted an in-depth bibliometric analysis of virtual and remote laboratories, but their study covers the period only until 2015.

The development process of virtual labs is explained and discussed by several authors (Ko et al., 2001a, 2001b; Nedungadi et al., 2018; Quesnel et al., 2009; Tetko et al., 2005). The utilization of digital technologies in higher education has increased the demand for virtual labs for science and engineering students (Achuthan et al., 2020; Jara et al., 2011; Martín-Gutiérrez et al., 2017; Restivo et al., 2009; Sangeeth et al., 2015). Virtual laboratories play a pivotal role in distance learning and the digital education system in science and engineering (Dalgarno et al., 2009). The effect of virtual laboratories is positive, resulting in higher learning outcomes from experiments in science and engineering (Lindsay & Good, 2005; Koretsky et al., 2008; Achuthan et al., 2011; Nedungadi et al., 2017a, 2017b; Post et al., 2019).

Influential publications based on Altmetrics

Citation-based metrics have some limitations, such as the delay between publication and indexing in citation databases (Sud & Thelwall, 2014). To complement this traditional measure of scholarly impact (Thelwall et al., 2013), we also considered Altmetrics when ranking the top ten influential publications, thereby addressing RQ5. The potential advantages of Altmetrics for research evaluation cannot be ignored, as they may reflect impact that appears before citations.

The top ten influential publications based on Altmetric Attention Score (AAS) are listed in Table 7. AAS reflects the near real-time impact of papers by including mentions on the internet and on social media (Dinsmore et al., 2014). The publication titled “Education online: The virtual lab”, published in Nature, has the highest AAS (278). Ranked second is “Simulated Interactive Research Experiments as Educational Tools for Advanced Science”, with an AAS of 110 although it has only 5 citations. Conversely, the publication titled “Compiling and using input–output frameworks through collaborative virtual laboratories” has more citations (TC: 146), but its AAS is only 32. Some publications, such as “Remote Laboratory Exercise to Develop Micropipetting Skills”, have zero citations but a relatively high AAS (53), which suggests that the correlation between AAS and citations may be low (Haustein et al., 2013, 2015).

Table 7.

Top ten influential publications according to Altmetrics

| Author | Year | Journal | AAS | TC | Title |
|---|---|---|---|---|---|
| Waldrop, M. Mitchell | 2013 | Nature | 278 | 59 | Education online: The virtual lab |
| Tomandl, Mathias et al. | 2015 | Sci Rep | 110 | 5 | Simulated Interactive Research Experiments as Educational Tools for Advanced Science |
| Jawad, Mona Noor | 2021 | Journal of Microbiology and Biology Education | 53 | 0 | Remote Laboratory Exercise to Develop Micropipetting Skills |
| Figueiras, Edgar; | 2018 | European Journal of Physics | 47 | 5 | An open-source virtual laboratory for the Schrödinger equation |
| Afgan, Enis; | 2015 | PLOS One | 43 | 84 | Genomics Virtual Laboratory: A Practical Bioinformatics Workbench for the Cloud |
| Monzo, Carlos; | 2021 | Electronics | 37 | 1 | Remote Laboratory for Online Engineering Education: The RLAB-UOC-FPGA Case Study |
| Lenzen, Manfred; | 2014 | Science of the Total Environment | 32 | 146 | Compiling and using input–output frameworks through collaborative virtual laboratories |
| Stauffer, Sarah; | 2018 | Springer | 15 | 3 | Labster Virtual Lab Experiments: Basic Biology |
| Skilton, Ryan A.; | 2014 | Nature Chemistry | 14 | 50 | Remote-controlled experiments with cloud chemistry |

Going one step further, we studied the correlation between TC and AAS (Torres-Salinas et al., 2013). We identified the top 500 publications based on citations and their corresponding AAS. Correlation analysis was performed with Microsoft Excel, and significance was assessed at a predetermined threshold of p < 0.05. Several publications had no AAS, and we assigned them a value of zero. The correlation between TC and AAS was found to be low (r = 0.12). Past studies have also reported a weak or negligible relationship between citations and the Altmetric Attention Score (Costas et al., 2014).
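
The correlation step described above can be sketched in a few lines. This is an illustrative reconstruction under stated assumptions, not the Excel workbook used in the study: it assumes Pearson's r (the coefficient a spreadsheet correlation function such as Excel's `CORREL` computes), the function names are hypothetical, and the significance test is omitted. Missing AAS values are replaced by zero, as in the text.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def citation_attention_correlation(records, top_n=500):
    """records: iterable of (TC, AAS or None) pairs.

    Keeps the top_n records by citations, treats a missing AAS as zero,
    and returns Pearson's r between TC and AAS.
    """
    top = sorted(records, key=lambda rec: rec[0], reverse=True)[:top_n]
    tc = [float(rec[0]) for rec in top]
    aas = [0.0 if rec[1] is None else float(rec[1]) for rec in top]
    return pearson_r(tc, aas)


# (TC, AAS) pairs copied from Table 7. These nine rows were selected by AAS,
# not by citations, so the result is NOT the r = 0.12 reported for the top 500.
table_7_pairs = [(59, 278), (5, 110), (0, 53), (5, 47), (84, 43),
                 (1, 37), (146, 32), (3, 15), (50, 14)]
print(f"r = {citation_attention_correlation(table_7_pairs):.2f}")
```

Assigning zero to missing scores keeps every top-cited publication in the sample, at the cost of treating "no score recorded" the same as "no attention".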

Top citing journals and their Altmetrics

To address RQ6, we ranked the top citing journals based on publications and citations (Table 8). International Journal of Online Engineering (iJOE) has the most publications (TP:215) followed by Lecture Notes in Computer Science (TP:200). Though IEEE Transactions on Education has only 69 publications, the number of citations is highest (TC:2423) compared to other journals.

Table 8.

Top citing journals according to publications, citations and Altmetrics

| Journal | TP | TC | TPA (%) |
|---|---|---|---|
| Lecture Notes in Computer Science | 239 | 881 | 3.4 |
| International Journal of Online Engineering | 215 | 1215 | 0.9 |
| Advances in Intelligent Systems and Computing | 185 | 266 | 11.4 |
| IFAC Proceedings Volumes | 152 | 600 | 3.3 |
| Journal of Physics Conference Series | 148 | 231 | 1.4 |
| Lecture Notes in Networks and Systems | 138 | 245 | 4.4 |
| Computer Applications in Engineering Education | 94 | 1301 | 7.5 |
| IEEE Transactions on Education | 71 | 2513 | 19.7 |
| Journal of Chemical Education | 61 | 504 | 70.5 |
| IFAC-PapersOnLine | 61 | 289 | 3.3 |
| Communications in Computer and Information Science | 60 | 85 | 1.7 |
| IOP Conference Series Materials Science and Engineering | 51 | 36 | 0.0 |
| Applied Mechanics and Materials | 49 | 14 | 0.0 |
| AIP Conference Proceedings | 41 | 66 | 7.3 |
| IEEE Transactions on Industrial Electronics | 40 | 1733 | 12.5 |
| Procedia - Social and Behavioral Sciences | 40 | 258 | 5.0 |
| Lecture Notes in Electrical Engineering | 40 | 49 | 0.0 |
| Advances in Social Science, Education and Humanities Research | 39 | 29 | 0.0 |
| FASEB Journal | 39 | 6 | 0.0 |
| International Journal of Online and Biomedical Engineering | 37 | 45 | 2.7 |

Once again, we looked at the alternative metric Total Publications with Attention (TPA). The Journal of Chemical Education has the highest TPA (70.5%), though its publications (TP: 61) and citations (TC: 504) are far fewer than those of Lecture Notes in Computer Science (TP: 239, TC: 881). The TPA of Lecture Notes in Computer Science, which has the most publications in Table 8, is only 3.4%. The journal with the highest citations, IEEE Transactions on Education (TC: 2513), has a lower TPA (19.7%) than the Journal of Chemical Education (TC: 504).
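
The point that each indicator produces a different leader can be checked directly against Table 8. The snippet below is a small illustration: the five rows are copied from Table 8, while the `leader` helper and variable names are hypothetical.

```python
# Selected rows copied from Table 8: (journal, TP, TC, TPA %).
TABLE_8_SAMPLE = [
    ("Lecture Notes in Computer Science", 239, 881, 3.4),
    ("International Journal of Online Engineering", 215, 1215, 0.9),
    ("IEEE Transactions on Education", 71, 2513, 19.7),
    ("Journal of Chemical Education", 61, 504, 70.5),
    ("IEEE Transactions on Industrial Electronics", 40, 1733, 12.5),
]

COLUMN_INDEX = {"TP": 1, "TC": 2, "TPA": 3}


def leader(rows, column):
    """Return the journal with the largest value in the given column."""
    index = COLUMN_INDEX[column]
    return max(rows, key=lambda row: row[index])[0]


leaders = {column: leader(TABLE_8_SAMPLE, column) for column in COLUMN_INDEX}
for column, journal in leaders.items():
    print(f"Highest {column}: {journal}")
```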

Co-occurrence of keywords

There are several approaches to studying the network structure of a research topic such as bibliographic coupling (Boyack & Klavans, 2010), co-words analysis (Igami et al., 2014), and co-authorship analysis (Huang et al., 2015).

We used VOSviewer for co-word analysis to gain insight into RQ7, i.e., the major research themes, topics, and keywords in the intellectual structure of our research topic. The main objective of co-word analysis is to identify the research progress achieved by previous researchers and the future directions they explored. The keywords of an article can represent its main content, and their frequency of occurrence and co-occurrence can reflect, to some extent, the themes focused on in a specific field (Zong et al., 2013). We used VOSviewer to visualize clusters with high similarities between nodes (van Eck & Waltman, 2007, 2010, 2011).

Table 9 shows that the total number of keywords in the period 1991–2000 (T1) was 1738, increasing nearly sevenfold to 12,020 during 2001–2010 (T2) and then more than doubling to 31,725 in 2011–2021 (T3). Setting a threshold of 15 occurrences for each keyword resulted in the cluster formation detailed in Table 9.
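
The counting and thresholding behind a keyword co-occurrence network can be sketched as follows. This is a simplified illustration, not VOSviewer's implementation: the function name and toy keyword lists are hypothetical, lowercasing keywords before counting is an assumption, and the clustering step that VOSviewer performs on the resulting network is not reproduced.

```python
from collections import Counter
from itertools import combinations


def keyword_cooccurrence(keyword_lists, min_occurrences=15):
    """Count keyword occurrences and co-occurrences across publications.

    keyword_lists: one list of keywords per publication.
    Returns (occurrences, kept, links), where `kept` holds the keywords that
    meet the threshold and `links` counts publications in which two kept
    keywords appear together.
    """
    # Assumption: keywords are compared case-insensitively, once per document.
    docs = [
        sorted({kw.strip().lower() for kw in kws if kw.strip()})
        for kws in keyword_lists
    ]
    occurrences = Counter(kw for doc in docs for kw in doc)
    kept = {kw for kw, count in occurrences.items() if count >= min_occurrences}

    links = Counter()
    for doc in docs:
        retained = [kw for kw in doc if kw in kept]
        # doc is sorted, so each pair is always stored in the same order
        for first, second in combinations(retained, 2):
            links[(first, second)] += 1
    return occurrences, kept, links


# Toy example (made up) with a threshold of 2 instead of 15.
toy_keywords = [
    ["Virtual laboratories", "e-learning", "simulation"],
    ["virtual laboratories", "E-learning"],
    ["virtual laboratories", "robotics"],
]
occurrences, kept, links = keyword_cooccurrence(toy_keywords, min_occurrences=2)
print(sorted(kept))
print(dict(links))
```

Raising the threshold shrinks the network to frequently used keywords, which is why only 15, 151, and 546 keywords remain in T1, T2, and T3.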

Table 9.

Results of co-occurrence of keyword analysis for the period T1, T2 & T3

| | 1991–2000 (T1) | 2001–2010 (T2) | 2011–2021 (T3) |
|---|---|---|---|
| Keywords | 1738 | 12,020 | 31,725 |
| Keywords (threshold = 15) | 15 | 151 | 546 |
| Clusters | 1 | 4 | 7 |

Table 11.

Most researched UN SDG according to publications and citations

| SDG | TP | TC |
|---|---|---|
| 4 Quality Education | 1824 | 12,273 |
| 7 Affordable and Clean Energy | 201 | 1069 |
| 13 Climate Action | 89 | 554 |
| 3 Good Health and Well Being | 63 | 433 |
| 11 Sustainable Cities and Communities | 24 | 145 |
| 9 Industry, Innovation, and Infrastructure | 13 | 186 |
| 12 Responsible Consumption and Production | 13 | 125 |
| 8 Decent Work and Economic Growth | 9 | 57 |
| 6 Clean Water and Sanitation | 7 | 55 |
| 14 Life Below Water | 7 | 60 |
| 2 Zero Hunger | 5 | 33 |
| 15 Life on Land | 5 | 135 |
| 1 No Poverty | 3 | 1 |
| 16 Peace, Justice and Strong Institutions | 3 | 1 |
| 10 Reduced Inequalities | 1 | 17 |
| 17 Partnerships for the Goals | 1 | 0 |

TP indicates Total Publications, TC indicates Total Citations and TPA indicates Total Publications with Attention

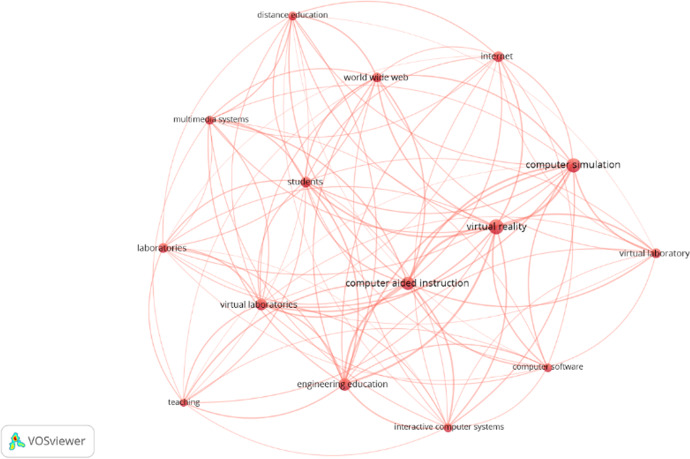

During T1, there was a single cluster of 15 keywords, such as computer-aided instruction, computer simulation, engineering education, virtual laboratories, and multimedia systems, which corresponds to the research theme of computer-aided learning (Fig. 5).

Fig. 5.

Co-occurrence of keywords for 1991 – 2000 (T1)

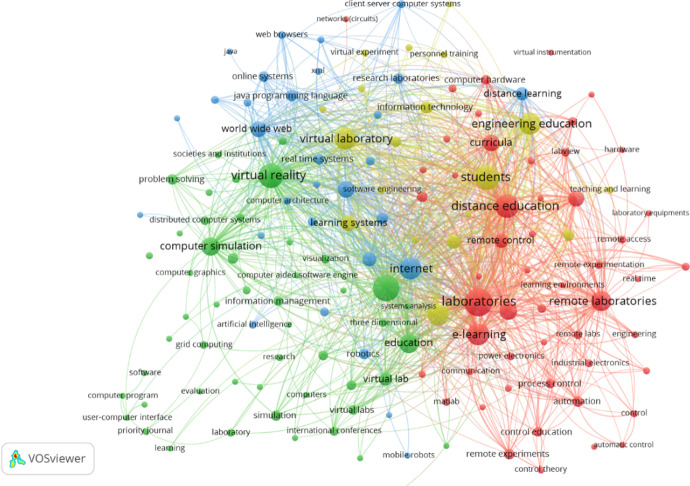

During T2, the number of clusters increased to four, as seen in Fig. 6. Cluster 1 (red) has 52 keywords, such as laboratories, distance education, e-learning, remote laboratories, and experiments, leading to the research theme of laboratories in distance learning. Cluster 2 (green) has 50 keywords, such as virtual laboratories, virtual reality, education, computer simulation, and problem solving, leading to the theme of virtual laboratories for problem solving. Cluster 3 (blue), with 30 keywords such as internet, computer software, user interface, multimedia systems, and distance learning, maps to the research theme of internet and computer software for distance learning. Finally, cluster 4 (yellow), with 19 keywords such as students, engineering education, teaching, learning systems, and computer aided instruction, maps to the theme of computer aided instruction in engineering education.

Fig. 6.

Co-occurrence of keywords for 2001 – 2010 (T2)

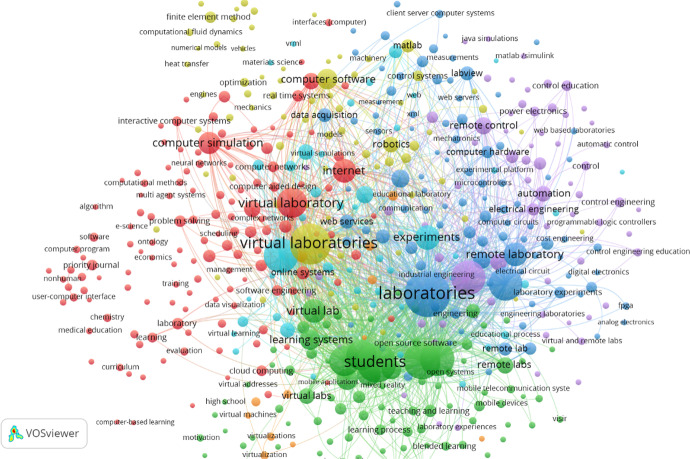

Finally, during T3, the number of clusters increased to seven, as seen in Fig. 7. Cluster 1 (red) has 136 keywords, such as virtual laboratory, internet, computer simulation, and world wide web, leading to the theme of computer simulation and virtual laboratory. Cluster 2 (green) has 108 keywords, such as students, e-learning, engineering education, teaching, and curricula, mapping to the theme of teaching–learning in engineering education using e-learning. Cluster 3 (blue) has 90 keywords, such as remote laboratories, remote laboratory, user interfaces, remote experiments, and labview, leading to the theme of remote access to the equipment in the labs. Cluster 4 (yellow) has 77 keywords, such as virtual laboratories, computer software, robotics, matlab, and finite element method, leading to the theme of computer software and virtual laboratories. Cluster 5 (purple) has 67 keywords, such as distance education, remote control, distance learning, automation, and electrical engineering, leading to the theme of distance learning and automation in engineering education. Cluster 6 (light blue) has 47 keywords, the main ones being virtual reality, virtual experiments, multimedia systems, information technology, and java programming language, mapping to the theme of virtual reality and virtual laboratories. Cluster 7 (orange) has 12 keywords, such as virtual laboratory, remote laboratory, virtual reality, automation, and virtualization, leading to the theme of computer programming and virtualisation.

Fig. 7.

Co-occurrence of keywords for 2011 – 2021 (T3)

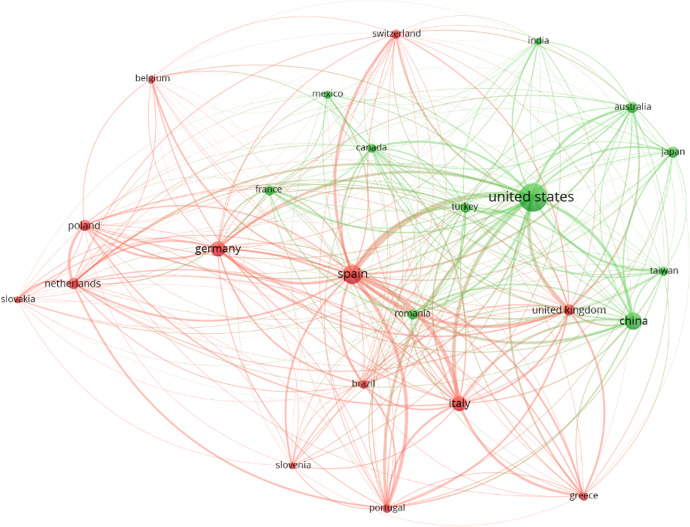

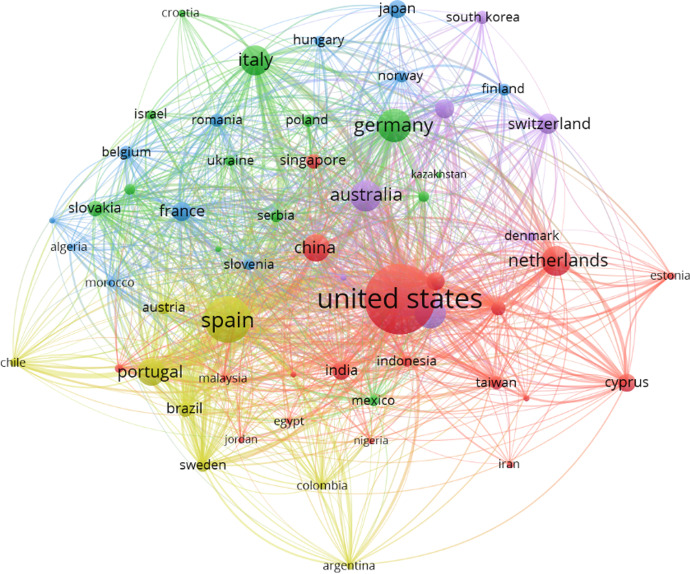

Bibliographic couplings - country analysis

Clusters representing countries were created using VOSviewer (Table 10). The number of countries with publications on virtual laboratories rose from 42 in 1991–2000 (T1) to 186 in 2001–2010 (T2) and to 253 in 2011–2021 (T3), showing broad participation in the recent decade. Setting a threshold of 20 publications resulted in a single cluster with 21 countries in T1, two clusters with 25 countries in T2, and five clusters with 58 countries in T3.

Table 10.

Clusters of countries using VOSviewer

| | 1991–2000 (T1) | 2001–2010 (T2) | 2011–2021 (T3) |
|---|---|---|---|
| Countries | 42 | 186 | 253 |
| Countries (threshold = 20 publications) | 21 | 25 | 58 |
| Clusters | 1 | 2 | 5 |
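
Bibliographic coupling links two publications when they cite the same references, and the coupling strength is the number of references they share. The sketch below aggregates that strength to country level after applying a minimum-publication threshold. It is a simplified illustration, not VOSviewer's algorithm: the function name and toy data are hypothetical, each publication is assigned to a single country (internationally co-authored papers are ignored), and no clustering is performed.

```python
from collections import Counter
from itertools import combinations


def country_coupling(docs, min_publications=20):
    """Aggregate bibliographic coupling strength between countries.

    docs: list of (country, set_of_cited_reference_ids), one per publication.
    Returns (kept, strength), where `kept` holds countries meeting the
    publication threshold and `strength` maps a sorted country pair to the
    total number of references shared by their publications.
    """
    publications = Counter(country for country, _ in docs)
    kept = {c for c, count in publications.items() if count >= min_publications}

    strength = Counter()
    # Pairwise comparison is quadratic; acceptable for a small illustration.
    for (c1, refs1), (c2, refs2) in combinations(docs, 2):
        if c1 == c2 or c1 not in kept or c2 not in kept:
            continue
        shared = len(refs1 & refs2)
        if shared:
            strength[tuple(sorted((c1, c2)))] += shared
    return kept, strength


# Toy data (made up) with a threshold of 2 publications instead of 20.
toy_docs = [
    ("Spain", {"r1", "r2", "r3"}),
    ("Spain", {"r2"}),
    ("Germany", {"r2", "r3"}),
    ("Germany", {"r9"}),
    ("Italy", {"r1"}),
]
kept, strength = country_coupling(toy_docs, min_publications=2)
print(sorted(kept))
print(dict(strength))
```

In the toy data, Italy falls below the threshold and drops out of the network, in the same way that the 20-publication threshold reduces 253 countries to 58 in T3.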

Figures 8, 9, and 10 show the network of countries based on TP in T1, T2, and T3. The United States had the highest number of publications in all three time periods: T1 (TP: 145), T2 (TP: 527), and T3 (TP: 1382). Other countries with a high number of publications in T2 and T3 include Germany, Spain, Italy, and the Netherlands. In T3, more developing countries join the network, and in particular there is an increase in the number of publications from Asian countries such as China, India, Indonesia, Taiwan, and Japan. The figures also demonstrate the increasing number of countries contributing to the area of virtual laboratories, which indicates faster adoption of virtual laboratories in T3.

Fig. 8.

Bibliographic network of countries during 1991–2000 (T1)

Fig. 9.

Bibliographic network of countries during 2001–2010 (T2)

Fig. 10.

Bibliographic network of countries during 2011–2021 (T3)

Virtual laboratory research related to the UN SDGs

At the UN Sustainable Development Summit in September 2015, world leaders adopted the 2030 Agenda for Sustainable Development, which is “a plan of action for people, planet, and prosperity” designed to “shift the world onto a sustainable and resilient path” (UN, 2015). This agenda is mainly represented by the Sustainable Development Goals (SDGs), which encompass “grand challenges” for society at all levels (George et al., 2016).

Contemporary technologies have been integrated into socio-economic, environmental, sustainability, and climate research applications to enhance the productivity and efficiency of a given system (Balogun et al., 2020; Ceipek et al., 2021). Information and communication technologies enable more efficient resource usage, education, and business operations, which is a critical success factor for achieving the SDGs (Tjoa et al., 2016). Virtual laboratories, accessible through the internet anytime and anywhere, can play a crucial role in achieving the United Nations Sustainable Development Goals (SDGs), specifically SDG 4 (Quality Education) (Benetazzo et al., 2000). Ahmed & Hasegawa (2021) found a positive relationship between their online virtual laboratory platform (OVLP) and the SDG related to education. Another example of using educational technology to achieve SDG 4 is Amrita Rural India Tablet enhanced Education, which utilizes multilingual virtual labs adapted to work with low-bandwidth internet in rural areas (Nedungadi et al., 2017a; Menon et al., 2021).

Table 11 shows the virtual laboratory’s research related to UN SDG. It can be seen that virtual laboratories contribute the most to achieving SDG 4 (Quality Education), both in terms of TP and TC.

The findings give new insight that virtual laboratories are essential to attaining the United Nations Sustainable Development Goals (Grosseck et al., 2019; Meschede, 2020). Quality education is the predominant goal, addressed in 19% of the papers, reflecting the important features virtual labs deliver towards the SDGs. The other SDGs are addressed in a mere 7% of all publications. Thus, the SDGs remain under-addressed, and countries worldwide should focus on virtual labs in higher education to achieve them, especially SDG 4 (Quality Education).

Impact of COVID-19

COVID-19 has substantially raised the importance of virtual labs in higher education due to sporadic shutdowns, online coverage of technical content, and inaccessibility of physical labs. In this health-induced economic crisis, virtual labs play a crucial role in experimentation, analysis, and the higher education of students in the sciences (Ray & Srivastava, 2020; Raman et al., 2021b). Before COVID-19, behavioral analysis of university students indicated the substantial popularity of virtual laboratories for skill training, along with a dependency on instructors. Adoption of virtual laboratories increased during the pandemic-imposed lockdowns, and learners became less instructor dependent (Radhamani et al., 2021). Therefore, it is vital to analyze the role of virtual labs in higher education during COVID-19. By applying bibliometric citation analysis to the literature on virtual labs in higher education, we identified the top 10 influential publications, thereby answering RQ9. Table 12 lists these publications and their details.

Table 12.

Influential publications analyzing the role of virtual labs during COVID-19

| Title | Journal | Year | Authors | TC | AAS |
|---|---|---|---|---|---|
| Virtualization of science education: a lesson from the COVID-19 pandemic | Journal of Proteins and Proteomics | 2020 | Ray, Sandipan et al. | 28 | 1 |
| Studying physics during the COVID-19 pandemic: Student assessments of learning achievement, perceived effectiveness of online recitations, and online laboratories | Physical Review Physics Education Research | 2021 | Klein, P. et al. | 23 | 13 |
| Virtual laboratories during coronavirus (COVID‐19) pandemic | Biochemistry and Molecular Biology Education | 2020 | Vasiliadou, Rafaela et al. | 20 | 4 |
| As Close as It Might Get to the Real Lab Experience Live-Streamed Laboratory Activities | Journal of Chemical Education | 2020 | Woelk, Klaus et al. | 14 | 2 |
| Lab-in-a-Box: A Guide for Remote Laboratory Instruction in an Instrumental Analysis Course | Journal of Chemical Education | 2020 | Miles, Deon T. et al. | 14 | 9 |
| Virtual Laboratory: A Boon to the Mechanical Engineering Education During COVID-19 Pandemic | Higher Education for the Future | 2020 | Kapilan, N. et al. | 12 | 9 |
| Developing Engaging Remote Laboratory Activities for a Nonmajors Chemistry Course During COVID-19 | Journal of Chemical Education | 2020 | Youssef, Mena et al. | 10 | 1 |
| Choose Your Own “Labventure”: A Click-Through Story Approach to Online Laboratories during a Global Pandemic | Journal of Chemical Education | 2020 | D’Angelo, John G. et al. | 10 | 2 |
| Pandemic Teaching: Creating and teaching cell biology labs online during COVID‐19 | Biochemistry and Molecular Biology Education | 2020 | Delgado, Tracie et al. | 7 | 9 |
| Transforming Traditional Teaching Laboratories for Effective Remote Delivery – A Review | Education for Chemical Engineers | 2021 | Bhute, Vijesh J. et al. | 6 | 4 |

TC indicates Total Citations, and AAS indicates Altmetrics Attention Score

The top three influential studies exploring the role of virtual labs in higher education during COVID-19 were Ray and Srivastava (2020), Klein et al. (2021), and Vasiliadou (2020). These recent studies explored research questions such as what role virtual labs play in science and engineering education during COVID-19.

The Altmetric Attention Score (AAS) was also retrieved to get a different perspective based on social media presence. AAS was highest (AAS: 13) for the publication by Klein et al. (2021) in Physical Review Physics Education Research. Interestingly, the top-cited publication had the lowest AAS in the table (AAS: 1).

Conclusion

Laboratory experimentation plays an essential role in engineering and scientific education. Virtual and remote labs reduce the costs that conventional hands-on labs incur for equipment, space, and maintenance staff, and offer additional benefits such as supporting distance learning, improving lab accessibility for people with disabilities, and increasing safety for dangerous experiments (Heradio et al., 2016). This study uses bibliometrics to develop a comprehensive overview of research contributions on virtual laboratories in higher education and uses bibliometric indicators (Garfield, 1956) to represent the bibliographic data, including the total number of publications and citations (Ding et al., 2014).

Our bibliometric analysis yielded significant insights from 9523 publications over 30 years between 1991 and 2021. The Scientific Procedures and Rationales for Systematic Literature Reviews (SPAR-4-SLR) protocol was used in the study. Research on virtual labs intensified from 2010 to 2021 due to the increased focus on the digitalization of education. The highest number of yearly publications occurred between 2019 and 2021, with COVID-19 motivating researchers to study the impact of online learning. Among the top contributing institutions, the National University of Distance Education (UNED) has the largest number of publications and citations, followed by the University of Deusto. Our study shows that European researchers dominated as key authors in terms of the number of publications and citations. The top authors publishing on virtual laboratories are Javier Garcia Zubia (TP: 115) and Manuel Alonso Castro (TP: 110), with the most influential publication coming from Ma & Nickerson (TC: 517). Among the countries associated with authors' contributions to the field, the United States had the most authors, followed by China and Spain. The major funding organizations were the European Commission (EC), followed by the National Natural Science Foundation of China (NSFC) and the Ministry of Economy, Industry, and Competitiveness (MINECO). The Field Weighted Citation Impact indicated a high impact for publications with international collaborations. The top citing journal source on virtual labs is the International Journal of Online Engineering (iJOE). For the first time in the context of virtual laboratories, Altmetrics has been included in a study, with the Altmetrics Attention Score (AAS) and Total Publications with Attention (TPA) used as an alternative to and extension of the traditional bibliometric indicators.

The top 500 publications on virtual labs by citations (TC) and their AAS were identified, and a low correlation was found between TC and AAS, similar to results from other studies. In the analysis of virtual labs addressing the UN SDGs, SDG4 (Quality Education) had the highest number of publications and citations. However, only 25% of all publications addressed SDGs, which is quite low considering the significant contribution of virtual labs to accessibility, personalized learning, and minimizing laboratory resources in a scalable environment.

The co-occurrence of keywords analysis highlights the evolution of virtual laboratories as a field of study, exposing the research emphasis over the three decades. Initially, the novel concept of computer-based instruction was explored, followed by research on enhancing methods for designing and building virtual laboratories, especially across multiple disciplines. During 1991–2000, studies focused on simulation and computer aided instruction, while in 2001–2010 the research focus shifted to remote access to equipment in engineering labs. During 2011–2021, we see a gradual shift towards virtual reality in virtual laboratories, computer programming, and virtualisation.

This study has some limitations. Some articles on virtual laboratories may have been missed if virtual laboratories are not mentioned in the title, abstract, or keywords, which could have introduced discrepancies in the statistical analysis. Moreover, the citation analysis used in this study captures only the magnitude of the impact of cited papers, and highly cited papers are not necessarily high-quality papers (Thompson & Walker, 2015). The tools and indicators used in this study, i.e., SciVal Field Weighted Citation Impact (FWCI), h-index, Dimensions, and Altmetrics, also have limitations. Citation-based metrics such as FWCI should not be interpreted as a direct measure of research quality, and only publications included in Scopus have an FWCI. The h-index is meaningless without context within the author's discipline, and it should be used with care for comparisons because of its bias against early career researchers and those who started late or had career breaks. It is also not comparable across disciplines. As Dimensions is a relatively new database, many publishers have not yet indexed their publications with it, which may affect the total size of the corpus. Finally, attention measured by Altmetrics does not necessarily indicate that an article is important or of high quality; it may simply indicate popularity with the public.

Complementing virtual labs with digital and classroom resources will provide comprehensive educational outcomes that allow students to understand theoretical aspects and develop practical skills (Bencomo, 2004). Studies show that “digital storytelling” and its associated components such as “virtual reality,” “critical thinking,” and “serious games” are the emerging themes of smart learning environments, and they need to be further developed to establish more ties with “smart learning” (Agbo et al., 2021). Future studies may examine the impact of Virtual Reality (VR) and Augmented Reality (AR) based virtual laboratories.

Author contribution

All the authors were involved in conceptualization, formal analysis, methodology, writing—review & editing, and have read and approved the final manuscript.

Data availability

The datasets generated and analyzed during the current study are not publicly available due to confidentiality but are available from the corresponding author on reasonable request.

Declarations

Competing interests

The authors declare that they have no conflict of interest.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Raghu Raman, Email: raghu@amrita.edu.

Krishnashree Achuthan, Email: krishna@amrita.edu.

Vinith Kumar Nair, Email: vinithkumarnair@am.amrita.edu.

Prema Nedungadi, Email: prema@amrita.edu.

References

- Achuthan, K., Freeman, J. D., Nedungadi, P., Mohankumar, U., Varghese, A., Vasanthakumari, A. M., & Kolil, V. K. (2020). Remote triggered dual-axis solar irradiance measurement system. IEEE Transactions on Industry Applications, 56(2), 1742–1751.

- Achuthan, K., Sreelatha, K. S., Surendran, S., Diwakar, S., Nedungadi, P., Humphreys, S., & Mahesh, S. (2011). The VALUE@ Amrita Virtual Labs Project: Using web technology to provide virtual laboratory access to students. In 2011 IEEE Global Humanitarian Technology Conference (pp. 117–121).

- Achuthan K, Francis SP, Diwakar S. Augmented reflective learning and knowledge retention perceived among students in classrooms involving virtual laboratories. Education and Information Technologies. 2017;22(6):2825–2855. doi: 10.1007/s10639-017-9626-x.

- Agbo FJ, Oyelere SS, Suhonen J, Tukiainen M. Scientific production and thematic breakthroughs in smart learning environments: a bibliometric analysis. Smart Learning Environments. 2021;8(1):1–25. doi: 10.1186/s40561-020-00145-4.

- Ahmed, M. E., & Hasegawa, S. (2021). Development of online virtual laboratory platform for supporting real laboratory experiments in multi domains. Education Sciences, 11(9), 548.

- Aktan, B., Bohus, C. A., Crowl, L. A., & Shor, M. H. (1996). Distance learning applied to control engineering laboratories. IEEE Transactions on Education, 39(3), 320–326.

- Ali, N., & Ullah, S. (2020). Review to analyze and compare virtual chemistry laboratories for their use in education. Journal of Chemical Education, 97(10), 3563–3574.

- Alkhaldi, G., Hamilton, F. L., Lau, R., Webster, R., Michie, S., & Murray, E. (2016). The effectiveness of prompts to promote engagement with digital interventions: a systematic review. Journal of Medical Internet Research, 18(1), e4790.

- Balamuralithara B, Woods PC. Virtual laboratories in engineering education: The simulation lab and remote lab. Computer Applications in Engineering Education. 2009;17(1):108–118. doi: 10.1002/cae.20186.

- Balogun, A. L., Marks, D., Sharma, R., Shekhar, H., Balmes, C., Maheng, D., ... & Salehi, P. (2020). Assessing the potentials of digitalization as a tool for climate change adaptation and sustainable development in urban centres. Sustainable Cities and Society, 53, 101888.

- Bencomo, D. S. (2004). Control learning: present and future. Annual Reviews in Control, 28(1).

- Benetazzo, L., Bertocco, M., Ferraris, F., Ferrero, A., Offelli, C., Parvis, M., & Piuri, V. (2000). A Web-based distributed virtual educational laboratory. IEEE Transactions on Instrumentation and Measurement, 49(2), 349–356.

- Bode C, Herzog C, Hook D, McGrath R. A guide to the Dimensions data approach. Dimensions Report. Digital Science; 2018.

- Bornmann, L. (2014). Do Altmetrics point to the broader impact of research? An overview of benefits and disadvantages of Altmetrics. In arXiv [cs.DL]. http://arxiv.org/abs/1406.7091

- Bornmann L. Field classification of publications in dimensions: A first case study testing its reliability and validity. Scientometrics. 2018;117(1):637–640. doi: 10.1007/s11192-018-2855-y.

- Bornmann L, Marx W. Critical rationalism and the search for standard (field-normalized) indicators in Bibliometrics. Journal of Informetrics. 2018;12(3):598–604. doi: 10.1016/j.joi.2018.05.002.

- Boyack KW, Klavans R. Co-citation analysis, bibliographic coupling, and direct citation: Which citation approach represents the research front most accurately? Journal of the American Society for Information Science and Technology. 2010;61(12):2389–2404. doi: 10.1002/asi.21419.

- Butt FM, Ashiq M, Rehman SU, Minhas KS, Ajmal Khan M. Bibliometric analysis of road traffic injuries research in the Gulf Cooperation Council region. F1000Research. 2020;9:1155. doi: 10.12688/f1000research.25903.1.

- Ceipek R, Hautz J, Petruzzelli AM, De Massis A, Matzler K. A motivation and ability perspective on engagement in emerging digital technologies: The case of Internet of Things solutions. Long Range Planning. 2021;54(5):101991. doi: 10.1016/j.lrp.2020.101991.

- Chandrashekhar, P., Prabhakaran, M., Gutjahr, G., Raman, R., & Nedungadi, P. (2020). Teacher perception of Olabs pedagogy. In Fourth International Congress on Information and Communication Technology (pp. 419–426). Springer.

- Chen, C., Ibekwe-SanJuan, F., & Hou, J. (2010). The structure and dynamics of co-citation clusters: A multiple-perspective co-citation analysis. In arXiv [cs.CY]. http://arxiv.org/abs/1002.1985

- Cobo MJ, López-Herrera AG, Herrera-Viedma E, Herrera F. Science mapping software tools: Review, analysis, and cooperative study among tools. Journal of the American Society for Information Science and Technology. 2011;62(7):1382–1402. doi: 10.1002/asi.21525.

- Colledge L, Verlinde R. Scival metrics guidebook. Elsevier; 2014.

- Costas, R., Zahedi, Z., & Wouters, P. (2014). Does Altmetrics correlate with citations? Extensive comparison of altmetric indicators with citations from a multidisciplinary perspective. In arXiv [cs.DL]. http://arxiv.org/abs/1401.4321

- Dalgarno B, Bishop AG, Adlong W, Bedgood DR Jr. Effectiveness of a virtual laboratory as a preparatory resource for distance education chemistry students. Computers & Education. 2009;53(3):853–865. doi: 10.1016/j.compedu.2009.05.005.

- Davenport JL, Rafferty AN, Yaron DJ. Whether and how authentic contexts using a virtual chemistry lab support learning. Journal of Chemical Education. 2018;95(8):1250–1259. doi: 10.1021/acs.jchemed.8b00048.

- de Jong T, Linn MC, Zacharia ZC. Physical and virtual laboratories in science and engineering education. Science (New York, NY). 2013;340(6130):305–308. doi: 10.1126/science.1230579.

- Ding Y, Zhang G, Chambers T, Song M, Wang X, Zhai C. Content-based citation analysis: The next generation of citation analysis. Journal of the Association for Information Science and Technology. 2014;65(9):1820–1833. doi: 10.1002/asi.23256.

- Dinsmore A, Allen L, Dolby K. Alternative perspectives on impact: the potential of ALMs and Altmetrics to inform funders about research impact. PLoS Biology. 2014;12(11):e1002003. doi: 10.1371/journal.pbio.1002003.

- El Kharki K, Berrada K, Burgos D. Design and implementation of a virtual laboratory for physics subjects in Moroccan universities. Sustainability. 2021;13(7):3711. doi: 10.3390/su13073711.

- Enneking KM, Breitenstein GR, Coleman AF, Reeves JH, Wang Y, Grove NP. The evaluation of a hybrid, general chemistry laboratory curriculum: Impact on students’ cognitive, affective, and psychomotor learning. Journal of Chemical Education. 2019;96(6):1058–1067. doi: 10.1021/acs.jchemed.8b00637.

- Farooq RK, Rehman SU, Ashiq M, Siddique N, Ahmad S. Bibliometric analysis of coronavirus disease (COVID-19) literature published in Web of Science 2019–2020. Journal of Family & Community Medicine. 2021;28(1):1–7. doi: 10.4103/jfcm.JFCM_332_20.

- Garfield E. Response: Citation indexes for science. Science (New York, NY). 1956;123(3185):62–62. doi: 10.1126/science.123.3185.62.a.

- Gaviria-Marin M, Merigó JM, Baier-Fuentes H. Knowledge management: A global examination based on bibliometric analysis. Technological Forecasting and Social Change. 2019;140:194–220. doi: 10.1016/j.techfore.2018.07.006.

- George G, Howard-Grenville J, Joshi A, Tihanyi L. Understanding and tackling societal grand challenges through management research. Academy of Management Journal. 2016;59(6):1880–1895. doi: 10.5465/amj.2016.4007.

- Gomes L, Bogosyan S. Current trends in Remote Laboratories. IEEE Transactions on Industrial Electronics. 2009;56(12):4744–4756. doi: 10.1109/TIE.2009.2033293.

- Grosseck G, Ţîru LG, Bran RA. Education for Sustainable Development: Evolution and perspectives: A bibliometric review of research, 1992–2018. Sustainability. 2019;11(21):6136. doi: 10.3390/su11216136.

- Harward, V. J., del Alamo, J. A., Lerman, S. R., Bailey, P. H., Carpenter, J., DeLong, K., Felknor, C., Hardison, J., Harrison, B., Jabbour, I., Long, P. D., Tingting Mao, Naamani, L., Northridge, J., Schulz, M., Talavera, D., Varadharajan, C., Shaomin Wang, Yehia, K., … Zych, D. (2008). The iLab shared architecture: A web services infrastructure to build communities of internet accessible laboratories. Proceedings of the IEEE, 96(6), 931–950.

- Haustein S, Costas R, Larivière V. Correction: Characterizing social media metrics of scholarly papers: the effect of document properties and collaboration patterns. PloS One. 2015;10(5):e0127830. doi: 10.1371/journal.pone.0127830.

- Haustein, S., Peters, I., Sugimoto, C. R., Thelwall, M., & Larivière, V. (2013). Tweeting biomedicine: an analysis of tweets and citations in the biomedical literature. In arXiv [cs.DL]. http://arxiv.org/abs/1308.1838

- Heradio R, de la Torre L, Galan D, Cabrerizo FJ, Herrera-Viedma E, Dormido S. Virtual and remote labs in education: A Bibliometric analysis. Computers & Education. 2016;98:14–38. doi: 10.1016/j.compedu.2016.03.010.

- Herzog C, Hook D, Konkiel S. Dimensions: Bringing down barriers between scientometricians and data. Quantitative Science Studies. 2020;1(1):387–395. doi: 10.1162/qss_a_00020.

- Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output. In arXiv [physics.soc-ph]. http://arxiv.org/abs/physics/0508025

- Huang M-H, Wu L-L, Wu Y-C. A study of research collaboration in the pre-web and post-web stages: A coauthorship analysis of the information systems discipline. Journal of the Association for Information Science and Technology. 2015;66(4):778–797. doi: 10.1002/asi.23196.

- Hung J-L. Trends of e-learning research from 2000 to 2008: Use of text mining and bibliometrics. British Journal of Educational Technology: Journal of the Council for Educational Technology. 2012;43(1):5–16. doi: 10.1111/j.1467-8535.2010.01144.x.

- Igami MZ, Bressiani J, Mugnaini R. A new model to identify the productivity of theses in terms of articles using co-word analysis. Journal of Scientometric Research. 2014;3(1):3. doi: 10.4103/2320-0057.143660.

- Jara CA, Candelas FA, Puente ST, Torres F. Hands-on experiences of undergraduate students in Automatics and Robotics using a virtual and remote laboratory. Computers & Education. 2011;57(4):2451–2461. doi: 10.1016/j.compedu.2011.07.003.

- Kapici HO, Akcay H, de Jong T. Using hands-on and virtual laboratories alone or together-which works better for acquiring knowledge and skills? Journal of Science Education and Technology. 2019;28(3):231–250. doi: 10.1007/s10956-018-9762-0.

- Kessler MM. Bibliographic coupling between scientific papers. American Documentation. 1963;14(1):10–25. doi: 10.1002/asi.5090140103.

- Khan AS, Ur Rehman S, AlMaimouni YK, Ahmad S, Khan M, Ashiq M. Bibliometric analysis of literature published on antibacterial dental adhesive from 1996–2020. Polymers. 2020;12(12):2848. doi: 10.3390/polym12122848.

- Klein, P., Ivanjek, L., Dahlkemper, M. N., Jeličić, K., Geyer, M.-A., Küchemann, S., & Susac, A. (2021). Studying physics during the COVID-19 pandemic: Student assessments of learning achievement, perceived effectiveness of online recitations, and online laboratories. Physical Review Physics Education Research, 17(1).

- Ko CC, Chen BM, Chen J, Zhuang Y, Tan KC. Development of a web-based laboratory for control experiments on a coupled tank apparatus. IEEE Transactions on Education. 2001;44(1):76–86. doi: 10.1109/13.912713.

- Ko CC, Chen BM, Hu S, Ramakrishnan V, Cheng CD, Zhuang Y, Chen J. A web-based virtual laboratory on a frequency modulation experiment. IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews). 2001;31(3):295–303. doi: 10.1109/5326.971657.

- Koretsky MD, Amatore D, Barnes C, Kimura S. Enhancement of student learning in experimental design using a virtual laboratory. IEEE Transactions on Education. 2008;51(1):76–85. doi: 10.1109/TE.2007.906894.

- Lindsay ED, Good MC. Effects of laboratory access modes upon learning outcomes. IEEE Transactions on Education. 2005;48(4):619–631. doi: 10.1109/TE.2005.852591.

- Ma J, Nickerson JV. Hands-on, simulated, and remote laboratories. ACM Computing Surveys. 2006;38(3):7. doi: 10.1145/1132960.1132961.

- Marín-Morales J. Affective computing in virtual reality: emotion recognition from brain and heartbeat dynamics using wearable sensors. Scientific Reports. 2018;8(1):13657. doi: 10.1038/s41598-018-32063-4.

- Martín-Gutiérrez J, Mora CE, Añorbe-Díaz B, González-Marrero A. Virtual technologies trends in education. Eurasia Journal of Mathematics, Science and Technology Education. 2017;13(2):469–486.

- Martín-Martín A, Thelwall M, Orduna-Malea E, López-Cózar ED. Google Scholar, Microsoft Academic, Scopus, Dimensions, Web of Science, and OpenCitations’ COCI: A multidisciplinary comparison of coverage via citations. Scientometrics. 2021;126(1):871–906. doi: 10.1007/s11192-020-03690-4.

- Menon, R., Sridharan, A., Sankar, S., Gutjahr, G., Chithra, V. V., & Nedungadi, P. (2021). Transforming attitudes to science in rural India through activity based learning. In AIP Conference Proceedings (Vol. 2336, No. 1, p. 040003). AIP Publishing LLC.