Abstract

Photoacoustic imaging (PAI) is an emerging hybrid imaging modality integrating the benefits of both optical and ultrasound imaging. Although PAI exhibits superior imaging capabilities, its translation into clinics is still hindered by various limitations. In recent years, deep learning (DL), a new paradigm of machine learning, has gained considerable attention due to its ability to improve medical images. Likewise, DL is being widely used in PAI to overcome some of its limitations. In this review, we provide a comprehensive overview of the various DL techniques employed in PAI along with their promising advantages.

Keywords: Photoacoustic tomography, Photoacoustic microscopy, Machine learning, Deep learning, Convolutional neural network

Introduction

Photoacoustic imaging (PAI) is a rapidly evolving hybrid imaging modality that integrates the benefits of optical and ultrasound imaging [1–7]. Its hybrid nature overcomes numerous challenges encountered in conventional purely optical imaging [8]. PAI relies on the photoacoustic (PA) effect for image formation. The PA effect is induced when a nanosecond laser pulse illuminates the tissue: light absorbed by tissue chromophores (such as haemoglobin and melanin) causes thermoelastic expansion, which generates ultrasound waves known as PA waves. These PA waves are detected by ultrasound detectors and used to form PA images. Depending on the imaging configuration, PAI can be broadly classified into photoacoustic microscopy (PAM) and photoacoustic tomography/photoacoustic computed tomography (PAT/PACT). PAM is an embodiment of PAI in which focused optical illumination and/or focused ultrasound detection is used for imaging [9–15]. Depending on whether the optical or the acoustic focus determines the resolution, PAM is categorized into optical-resolution PAM (OR-PAM) and acoustic-resolution PAM (AR-PAM). In OR-PAM, a tightly focused laser beam is used for illumination, and a confocally aligned single-element ultrasound transducer (SUT) acquires the PA signals. Because the optical focus is tighter than the acoustic focus, OR-PAM achieves a high spatial resolution of ~ 1–5 µm at an imaging depth of ~ 1–2 mm (limited by light diffusion) [16–19]. In AR-PAM, a weakly focused laser beam is used for illumination and a focused SUT acquires the PA signals. Here the acoustic focus is tighter than the optical focus, which makes the technique useful for visualizing structures at depths of ~ 3–5 mm with a lateral resolution of ~ 50–100 µm [20–22]. Typically, PAM images are acquired by point-by-point scanning of the confocally aligned laser beam and SUT over the sample. Scanning this confocal system along a line collects time-resolved PA signals (known as A-lines), which are placed side by side to form two-dimensional B-scans. Multiple B-scans are then stacked to obtain three-dimensional volumetric images or two-dimensional projections. As such, no reconstruction is needed to obtain the final images.
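The raster-scan image formation described above can be sketched in NumPy as follows. This is a minimal illustration, not any specific system's software: `acquire_a_line` is a hypothetical stand-in for the hardware (it returns noise plus one synthetic pulse), and the two-dimensional projection is formed as a maximum amplitude projection (MAP) of the Hilbert-transform envelope, which is one common choice rather than the only one.

```python
import numpy as np

def envelope(a_lines):
    """Envelope of real A-lines (last axis = time) via the FFT-based analytic signal."""
    n = a_lines.shape[-1]
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    analytic = np.fft.ifft(np.fft.fft(a_lines, axis=-1) * h, axis=-1)
    return np.abs(analytic)

def max_amplitude_projection(volume):
    """volume has shape (ny, nx, nt): ny B-scans, each made of nx A-lines of nt samples."""
    return envelope(volume).max(axis=-1)

rng = np.random.default_rng(0)
ny, nx, nt = 4, 5, 64
t = np.arange(nt)
pulse = np.sin(2 * np.pi * 0.2 * (t - 30)) * np.exp(-((t - 30) / 4.0) ** 2)

def acquire_a_line(iy, ix):
    """Hypothetical acquisition: noise everywhere, one absorber under scan position (2, 3)."""
    signal = 0.01 * rng.standard_normal(nt)
    if (iy, ix) == (2, 3):
        signal = signal + pulse
    return signal

# Raster scan: A-lines placed side by side form a B-scan; B-scans stack into a volume.
b_scans = [np.stack([acquire_a_line(iy, ix) for ix in range(nx)]) for iy in range(ny)]
volume = np.stack(b_scans)                    # shape (ny, nx, nt)
mip = max_amplitude_projection(volume)        # shape (ny, nx): 2D projection image
print(volume.shape, np.unravel_index(mip.argmax(), mip.shape))
```

No reconstruction step appears anywhere: the depth information is already encoded in the arrival time along each A-line.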

In PAT/PACT, the sample is typically illuminated with a broad, homogeneous pulsed laser beam, and an unfocused ultrasound detector (in a circular or linear configuration) acquires the PA signals from multiple positions around the sample boundary [23–26]. The PA signal acquired at a given detector position can be mathematically expressed as the spherical Radon transform of the optical absorption coefficient of the sample (similar to X-ray computed tomography, where the projections are related to the Radon transform of the X-ray absorption coefficient of the object). Therefore, to obtain the absorption map (or the initial pressure rise distribution map) of the object, one needs to invert the spherical Radon transform. Since an analytical inversion is challenging in practice, various reconstruction algorithms such as the delay-and-sum (DAS) beamformer, filtered back projection, model-based approaches, time reversal and frequency-domain reconstruction are employed for reconstructing PAT images [27–39]. Each of these techniques has its own shortcomings, which are further aggravated by hardware limitations [37, 40].
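To make the delay-and-sum idea concrete, the following NumPy sketch reconstructs a single point source for an assumed ring of 128 ideal point detectors. The speed of sound, sampling rate, ring radius and the impulse-like signals are all illustrative assumptions; DAS here simply adds, for every pixel, each detector's sample at the pixel-to-detector time of flight, without the filtering and weighting used in filtered back projection.

```python
import numpy as np

c = 1500.0            # speed of sound (m/s), assumed homogeneous
fs = 20e6             # sampling rate (Hz)
n_det, n_t = 128, 1024
radius = 0.02         # radius of the detector ring (m)
angles = 2 * np.pi * np.arange(n_det) / n_det
det_xy = radius * np.stack([np.cos(angles), np.sin(angles)], axis=1)   # (n_det, 2)

def simulate_point_source(src_xy):
    """Idealized sinogram: a single-sample pulse at each detector's time of flight."""
    sino = np.zeros((n_det, n_t))
    dist = np.linalg.norm(det_xy - src_xy, axis=1)
    idx = np.round(dist / c * fs).astype(int)
    sino[np.arange(n_det), idx] = 1.0
    return sino

def delay_and_sum(sino, grid):
    """For every pixel, add each detector's sample taken at the pixel-to-detector delay."""
    image = np.zeros(len(grid))
    for d in range(n_det):
        dist = np.linalg.norm(grid - det_xy[d], axis=1)
        idx = np.round(dist / c * fs).astype(int)
        valid = idx < n_t
        image[valid] += sino[d, idx[valid]]
    return image

xs = np.linspace(-0.005, 0.005, 41)                 # 10 mm x 10 mm grid, 0.25 mm pixels
gx, gy = np.meshgrid(xs, xs)
grid = np.stack([gx.ravel(), gy.ravel()], axis=1)   # (n_pixels, 2)
src_index = 12 * 41 + 24                            # place the source on a grid pixel
image = delay_and_sum(simulate_point_source(grid[src_index]), grid)
print(image.argmax() == src_index, image.max())
```

Only at the true source position do all 128 delayed samples line up; elsewhere they add incoherently, which is also why sparse or limited-view detector sets leave streak artifacts.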

In recent years, deep learning, a new paradigm of machine learning, has gained considerable attention in numerous scientific domains due to its ability to solve complex problems [41–45]. In PAI in particular, deep learning is used as a data-driven approach to overcome the limitations arising from hardware shortcomings and to improve the performance of traditional reconstruction algorithms [46–53]. In this review, we summarize the different deep learning optimization techniques employed to overcome the challenges encountered in PAI. The review concludes with an extensive discussion of the significance of deep learning for the future of PAI.

Brief overview of photoacoustic imaging systems

The main components of a conventional PAI system are shown in Fig. 1: excitation light sources, light delivery systems, ultrasound detection, and an image reconstruction/image processing unit. Conventionally, nanosecond laser pulses are used as excitation sources in PAI. The most commonly used excitation sources are solid-state lasers such as Nd:YAG, fiber lasers, optical parametric oscillator (OPO) lasers, and dye lasers. Owing to their low cost and high pulse repetition rate, diode sources such as pulsed laser diodes (PLD) and light-emitting diodes (LED) have also gained importance [54–56]. PAI systems with PLD and LED excitation sources have already been demonstrated for clinical and preclinical imaging [57–61]. Light delivery systems play a vital role in transporting the excitation beam from the laser source to the sample. In PAI, light is delivered to the target area using multiple optical components: lenses, prisms, filters, and diffusers are commonly used along with optical fibers for deflecting, focusing, diffusing, and shaping the beam so that it uniformly irradiates the target area. For detecting the generated PA signals, piezoelectric ultrasound transducers are most widely employed owing to their low cost and ready commercial availability. Detection techniques based on Fabry–Perot etalon films, capacitive micromachined ultrasound transducers (CMUT), polyvinylidene difluoride (PVDF), and optical interferometry have also been explored for PA signal acquisition [62, 63]. The PA signals acquired through these detection techniques are reconstructed or processed into images using various algorithms.

Fig. 1.

The main components of a conventional photoacoustic imaging (PAI) system

Image reconstruction/processing in photoacoustic imaging

Image reconstruction is one of the important stages of PAI, and the reconstruction technique employed depends on the imaging configuration. In PAT, reconstruction solves the acoustic inverse problem, mapping the acquired PA data into initial pressure distributions using algorithms such as the DAS beamformer, universal back projection, time reversal, and frequency-domain reconstruction. However, the acoustic inversion remains ill-posed due to factors such as erroneous speed-of-sound assumptions [64, 65], calibration errors, limited-view detection geometries, the limited acceptance angle of detectors, limited data [66, 67], and the limited bandwidth of detectors [68]. Furthermore, pulse-to-pulse fluctuations in laser energy also degrade the performance of reconstruction algorithms.

In PAM, although the acquired A-lines do not need to be reconstructed to form images, constraints in laser illumination or acoustic detection limit the quality and accuracy of the acquired images [69, 70]. Factors such as speed-of-sound assumptions, limited detector bandwidth [71, 72], a limited focal zone [73], the absorption saturation effect [13], and variations in the laser fluence irradiating the sample [74] affect the image quality of PAM. Moreover, acquiring high-resolution images requires a small scanning step size and high-frequency transducers with a high numerical aperture (NA). Decreasing the step size, however, increases the number of scan points and hence the acquisition time, resulting in a trade-off between resolution and imaging speed. Further, a high-NA detector degrades the resolution outside the focal plane and thereby limits the imaging field. In both PAM and PAT, multiple factors jointly affect image formation, so addressing them individually is cumbersome. Thus, there is a constant need for a technique that overcomes these challenges without degrading other imaging aspects.
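The step-size trade-off can be quantified with simple arithmetic. The sketch below assumes one laser pulse per A-line, no signal averaging and no scanner overhead, with an illustrative 10 kHz pulse repetition rate and a 5 mm × 5 mm field of view; the function name is hypothetical.

```python
def raster_scan_time(fov_mm, step_um, prf_hz):
    """Acquisition time (s) of a square raster scan, assuming one laser pulse per A-line."""
    points_per_axis = int(round(fov_mm * 1000 / step_um))
    return points_per_axis ** 2 / prf_hz

for step_um in (10.0, 5.0, 2.5):
    t = raster_scan_time(fov_mm=5.0, step_um=step_um, prf_hz=10_000)
    print(f"step {step_um:4.1f} um -> {t:6.1f} s")
```

Under these assumptions the scan takes 25 s at a 10 µm step, 100 s at 5 µm and 400 s at 2.5 µm: halving the step in both directions quadruples the number of A-lines and therefore the acquisition time.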

Deep learning overview

In recent years, machine learning has seen unprecedented growth due to the widespread availability of massive amounts of data and superior computational power [75, 76]. In particular, deep learning (DL), a subset of machine learning, is widely used in numerous applications such as image classification [77–79], image segmentation [80–83], object detection [84, 85], super-resolution [86, 87] and disease prediction [88]. In practice, deep learning is a data-driven optimization approach employing a wide variety of neural networks. Typically, DL architectures are optimized to learn an end-to-end mapping from the input to the ground-truth data; the training data therefore consist of paired inputs and ground truths. The effectiveness of DL generally depends on the quality of the datasets used. Optimization algorithms such as stochastic gradient descent (SGD) and adaptive moment estimation (Adam) are commonly employed to train neural networks by minimizing a loss function. TensorFlow, PyTorch, Theano and Caffe2 are the most common libraries used for DL implementation, in conjunction with programming languages such as Python, MATLAB, and C/C++.
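The training loop described above (paired inputs and ground truths, a loss function and an optimizer) can be illustrated without any framework. The NumPy sketch below fits a toy linear model, standing in for a neural network, to synthetic paired data by minimizing the mean squared error with a hand-written Adam update. The data, model and learning rate are assumptions chosen only for illustration, while β1, β2 and ε take the defaults proposed for Adam. In TensorFlow or PyTorch, the gradient would instead come from automatic differentiation and the update from the library's built-in optimizer.

```python
import numpy as np

rng = np.random.default_rng(0)
# Paired training data: inputs x and ground truths y from a toy linear mapping plus noise.
x = rng.standard_normal((256, 3))
true_w = np.array([2.0, -1.0, 0.5])
y = x @ true_w + 0.01 * rng.standard_normal(256)

def mse_loss_and_grad(w):
    """Mean squared error between predictions x @ w and ground truths y, and its gradient."""
    residual = x @ w - y
    return np.mean(residual ** 2), 2.0 * x.T @ residual / len(y)

# Adam: beta1, beta2 and eps at their usual defaults; the learning rate suits this toy problem.
w = np.zeros(3)
m, v = np.zeros(3), np.zeros(3)
lr, beta1, beta2, eps = 0.05, 0.9, 0.999, 1e-8
for step in range(1, 501):
    loss, g = mse_loss_and_grad(w)
    m = beta1 * m + (1 - beta1) * g           # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * g ** 2      # second-moment estimate
    m_hat = m / (1 - beta1 ** step)           # bias corrections
    v_hat = v / (1 - beta2 ** step)
    w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
print(f"final loss {loss:.2e}, weights {np.round(w, 3)}")
```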

Among neural networks, the convolutional neural network (CNN) is the most popular deep learning network for imaging-related tasks, because its convolution layers learn spatial features efficiently from images [89, 90]. Following the success of CNNs, technology companies such as Google, Facebook, and Microsoft have established dedicated research groups to explore new CNN architectures for computer vision and image processing applications. A conventional CNN architecture consists of a number of convolution layers, subsampling (pooling) layers, fully connected layers, and activation functions such as ReLU, ELU, sigmoid, linear, and SELU. The weights of a CNN are optimized by backpropagating the error between its output and the target. LeNet, developed in 1990, is one of the pioneering CNNs developed for image recognition tasks. Following this, numerous architectures with significant innovations and modifications have been developed. Notably, architectures with skip connections have gained popularity since the introduction of the concept by ResNet in 2015. U-Net is the most popular architecture used in medical image processing due to its exemplary performance in segmentation tasks [91]. It is based on an encoder-decoder architecture, with a contracting path that reduces the spatial dimensions while encoding information and an expanding path that recovers the spatial resolution, linked by skip connections in a symmetrical U-shape. Since its introduction, U-Net has been extended with various modifications to improve accuracy and performance for specific tasks. Furthermore, rapid developments in fast computing devices and graphics processing units (GPUs) have significantly reduced the time needed to train deep learning networks.
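The encoder-decoder structure with a skip connection can be sketched as a forward pass in plain NumPy. This is a deliberately tiny, untrained, one-level U-Net-style network with random weights, so its output is meaningless; the channel counts and helper names are hypothetical, and real U-Nets use several levels and learned weights. The sketch only shows how pooling halves the spatial size, up-sampling restores it, and the skip connection concatenates encoder features with decoder features before the next convolution.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv3x3(x, w):
    """'Same' 3x3 convolution (cross-correlation, as in DL libraries).
    x: (C_in, H, W), w: (C_out, C_in, 3, 3) -> (C_out, H, W)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    return out

def relu(z):
    return np.maximum(z, 0.0)

def max_pool2(x):
    """2x2 max pooling: halves H and W."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample2(x):
    """Nearest-neighbour up-sampling: doubles H and W."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def random_weights(c_out, c_in):
    """Hypothetical untrained weights; a real network would learn these."""
    return 0.1 * rng.standard_normal((c_out, c_in, 3, 3))

def tiny_unet(image):
    """One-level U-Net-style forward pass: encoder, bottleneck, decoder with one skip connection."""
    x = image[None]                                                     # (1, H, W)
    enc = relu(conv3x3(x, random_weights(8, 1)))                        # contracting path, (8, H, W)
    bottleneck = relu(conv3x3(max_pool2(enc), random_weights(16, 8)))   # (16, H/2, W/2)
    up = upsample2(bottleneck)                                          # expanding path, (16, H, W)
    merged = np.concatenate([up, enc], axis=0)                          # skip connection, (24, H, W)
    dec = relu(conv3x3(merged, random_weights(8, 24)))                  # (8, H, W)
    return conv3x3(dec, random_weights(1, 8))[0]                        # (H, W)

out = tiny_unet(rng.standard_normal((32, 32)))
print(out.shape)
```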

Application of deep learning in photoacoustic imaging

DL was adopted in PAI much later than in other imaging modalities. At present, the application of DL to overcome the challenges in PAI is being extensively studied and holds great promise. This review concentrates on summarizing the deep learning techniques employed to overcome some of the limitations of both PAT and PAM.

Deep learning in photoacoustic tomography image reconstruction

The reconstruction problem in PAT is ill-posed due to practical limitations in the detection geometry, the acceptance angle of transducers, and the estimation of the speed of sound. DL is well suited to address these challenges because they often occur together. Depending on the stage of PAT reconstruction at which DL is applied, the approaches can be broadly classified into four categories: pre-processing, post-processing, direct processing, and hybrid processing. Herein, we summarize recent DL-based advancements in PAT reconstruction according to these four categories.

Pre-processing approach using deep learning

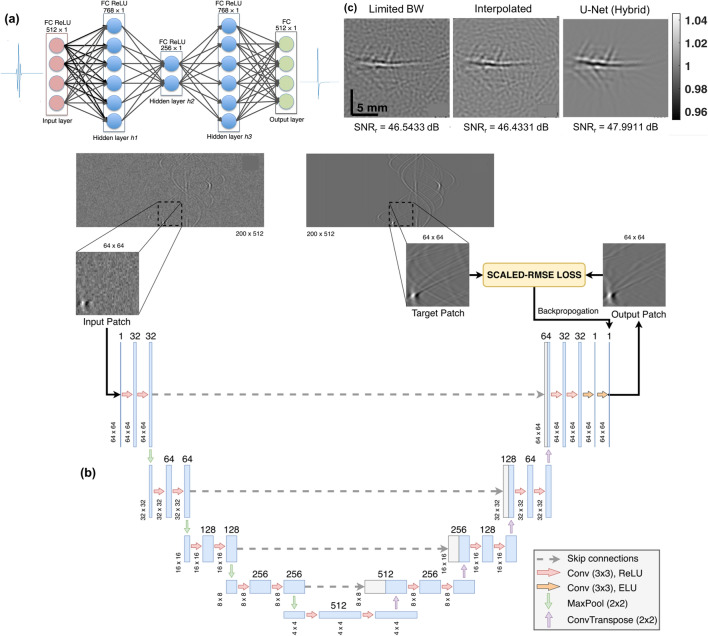

In this approach, DL is employed to process the acquired raw PA data prior to image reconstruction. Band-limited transducers are most widely employed in PAT. They function like a bandpass filter, suppressing the low-frequency components of the signal and thereby discarding valuable information about the imaged target [92]. To broaden the bandwidth of the acquired sinograms, a fully connected neural network (FCNN) (Fig. 2a) was employed, and its potential for improving the bandwidth of the PA data was validated using both simulated and experimental data [93]. Following this, a CNN architecture based on U-Net (Fig. 2b) was proposed to enhance the resolution and bandwidth of the sinograms [94]. Compared with conventional bandwidth enhancement techniques, the proposed method improved the structural similarity index (SSIM) by 33.81%, and it also improved experimental in vivo PAT brain images by denoising them (Fig. 2c). Furthermore, an FCNN was explored for spectral unmixing in multispectral photoacoustic imaging [95]. The proposed network consists of an initialization network and an unmixing network: the initialization network learns the spectra from the PA data, and the unmixing network extracts the endmember spectra. In summary, applying DL to the raw PA data enhances the resulting beamformed image by denoising, removing artifacts, and enhancing the bandwidth of the PA data without compromising the integrity of the acquired raw data.
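The information loss caused by a band-limited transducer can be illustrated numerically. The sketch below generates the analytical N-shaped PA signal of a uniformly absorbing sphere (1 mm radius, 20 mm from the detector) and passes it through an ideal brick-wall bandpass filter, a crude, assumed model of a transducer; all parameter values and the helper name are illustrative. A broadband 0.1–20 MHz detector keeps almost all of the signal energy, whereas an assumed 2–8 MHz transducer discards most of it, because the energy of this millimetre-sized absorber lies mainly below 2 MHz.

```python
import numpy as np

fs = 100e6                     # sampling rate (Hz), assumed
c = 1500.0                     # speed of sound (m/s), assumed
t = np.arange(4096) / fs
r, a = 0.02, 1e-3              # detector distance and sphere radius (m), assumed

# Analytical N-shaped PA signal of a uniformly absorbing sphere (up to a constant factor).
tau = r - c * t
p = np.where(np.abs(tau) < a, tau / (2 * r), 0.0)

def ideal_bandpass(signal, f_lo, f_hi):
    """Zero every FFT bin outside [f_lo, f_hi]: a crude model of a band-limited transducer."""
    spectrum = np.fft.rfft(signal)
    f = np.fft.rfftfreq(signal.size, d=1 / fs)
    spectrum[(f < f_lo) | (f > f_hi)] = 0.0
    return np.fft.irfft(spectrum, n=signal.size)

kept = {}
for band in [(0.1e6, 20e6), (2e6, 8e6)]:
    p_band = ideal_bandpass(p, *band)
    kept[band] = np.sum(p_band ** 2) / np.sum(p ** 2)   # fraction of signal energy retained
    print(f"{band[0] / 1e6:.1f}-{band[1] / 1e6:.0f} MHz: {100 * kept[band]:.0f}% of energy kept")
```

Recovering the discarded low-frequency content from such band-limited sinograms is precisely the task assigned to the bandwidth-enhancement networks in [93, 94].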

Fig. 2.

a Schematic of the fully connected neural network used for bandwidth enhancement in PAT. Reprinted with permission from SPIE [93]. b Schematic of the U-Net employed for bandwidth enhancement in PAT. c Reconstructed in vivo PAT brain image with U-Net based bandwidth enhancement. b, c Reprinted with permission from IEEE [94]

Post-processing approach using deep learning

In PAT, DL has been most actively explored to post-process the images produced by conventional reconstruction techniques. Although traditional reconstruction methods furnish a good approximation of the initial pressure distribution, the resulting images are marred by artifacts and poor resolution, which DL is well suited to overcome. To remove under-sampling artifacts from reconstructed PAT images, U-Net was first explored along with a simple three-layer CNN (S-Net) [96]. A more complex U-Net was then demonstrated for removing sparse-sampling and limited-view artifacts in PAT [97], and its ability to remove artifacts and improve image quality was verified on simulated data, experimental phantoms, and in vivo images. Following this, a dual-domain U-Net (DuDoUnet) using mutual information from the time and frequency domains was demonstrated for removing sparse-sampling artifacts [98]. Subsequently, a technique combining inverse compressed sensing and DL recovered high-resolution PAT images from sparse measurements, with a 30% improvement in image quality on experimental datasets [99]. Building on U-Net, an advanced architecture termed the fully dense U-Net (FD-UNet) was proposed to eliminate artifacts in sparse-view PAT systems [100]. The FD-UNet uses dense blocks to increase the depth of the network without increasing the number of layers. Furthermore, it reuses the features extracted by earlier convolution layers in subsequent convolution layers. The proposed FD-UNet outperforms the conventional U-Net and improves the visibility of the underlying structures in the reconstructed images.
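The feature reuse in FD-UNet's dense blocks follows the dense-connectivity pattern sketched below: each layer receives the concatenation of the block input and all preceding layer outputs, so the number of channels grows by a fixed growth rate per layer. FD-UNet's exact layer configuration is not reproduced here; the random 1×1 convolutions followed by ReLU are untrained, hypothetical stand-ins for its learned convolution layers, and the channel counts are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv1x1_relu(x, c_out):
    """Hypothetical stand-in for a trained conv layer: random 1x1 convolution + ReLU."""
    w = 0.1 * rng.standard_normal((c_out, x.shape[0]))
    return np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)

def dense_block(x, n_layers, growth_rate):
    """Each layer sees the concatenation of the block input and all earlier layer outputs."""
    features = [x]
    for _ in range(n_layers):
        features.append(conv1x1_relu(np.concatenate(features, axis=0), growth_rate))
    return np.concatenate(features, axis=0)

x = rng.standard_normal((16, 32, 32))       # 16 input feature maps
y = dense_block(x, n_layers=4, growth_rate=8)
print(y.shape)                              # 16 + 4 * 8 = 48 channels
```

Because the block input is passed through unchanged as the first 16 channels, features computed early remain available to every later layer.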

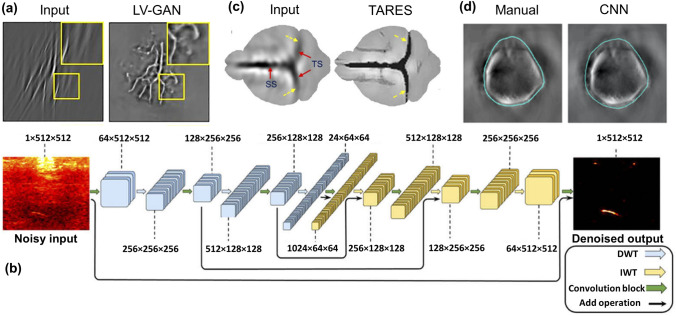

To tackle limited-view artifacts in PAT, generative adversarial networks (GANs) implementing U-Net as the generator network (LV-GAN) have also been explored [101]. The LV-GAN was trained and evaluated using a mix of simulated and experimental datasets. A representative image demonstrating the LV-GAN's capability to remove limited-view artifacts in vessel phantom PAT images is shown in Fig. 3a. Similarly, to address limited-view problems in linear-array PAT geometry, a Wasserstein generative adversarial network (WGAN) with gradient penalty was implemented and effectively removed artifacts, thus improving image quality [102]. A U-Net architecture with Monte Carlo (MC) dropout as a Bayesian approximation has also been proposed to remove limited-view artifacts in a linear-array PAT system [103]. Notably, in this approach the U-Net was trained on a dataset whose ground truths were derived from CMOS images. The network was validated on both simulated and experimental datasets, and it performed more robustly than techniques without MC dropout. Apart from U-Net-inspired architectures, a CNN termed RADL-net has been proposed to remove limited-view and under-sampling artifacts in three-quarter-ring transducer arrays [104]. CNN architectures have also been combined with truncated singular value decomposition (SVD) to eliminate limited-view artifacts [105]; however, this approach has been evaluated only on simulated datasets. DL has also been extended to remove limited-view and sparse-sampling artifacts in 3D through the dense dilated U-Net (DD-UNet) for artifact removal in 3D PAT [106]. This network combines the advantages of dense networks and dilated convolutions for correcting sparse-view artifacts. The DD-UNet outperformed the FD-UNet, and its performance has been validated on experimental phantom images.
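Monte Carlo dropout, used in [103] as a Bayesian approximation, amounts to keeping dropout active at inference time and running the network several times. The NumPy sketch below applies this idea to a tiny two-layer network with random, hypothetical weights in place of a trained U-Net: the mean of the stochastic predictions serves as the output and their standard deviation as a per-output uncertainty estimate. The dropout rate and number of samples are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
w1 = rng.standard_normal((64, 16)) / 4.0    # hypothetical "trained" weights
w2 = rng.standard_normal((16, 64)) / 8.0

def forward(x, drop_p=0.2, mc=True):
    """Two-layer network; with mc=True, dropout stays active at inference (MC dropout)."""
    h = np.maximum(w1 @ x, 0.0)
    if mc:
        keep = rng.random(h.shape) >= drop_p
        h = h * keep / (1.0 - drop_p)       # inverted-dropout scaling
    return w2 @ h

def mc_dropout_predict(x, n_samples=100):
    """Mean prediction and per-output standard deviation over stochastic forward passes."""
    samples = np.stack([forward(x) for _ in range(n_samples)])
    return samples.mean(axis=0), samples.std(axis=0)

x = rng.standard_normal(16)
mean, std = mc_dropout_predict(x)
print(np.round(mean[:4], 3), np.round(std[:4], 3))
```

For an image-to-image network the same procedure yields an uncertainty map, highlighting regions where artifact removal is least reliable.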

Fig. 3.

a Reconstructed limited-view vessel phantom image using LV-GAN. Reprinted with permission from Wiley–VCH [101]. b Schematic of the MWCNN architecture employed to improve contrast in low-fluence PAT settings. Reprinted with permission from OSA [110]. c In vivo PAT brain image with improved tangential resolution using the TARES network. Reprinted with permission from OSA [117]. d Boundary-segmented in vivo mouse liver images using CNN. Reprinted with permission from SPIE [132]

Aside from removing limited-view and sparse-sampling artifacts, DL has also been explored for improving image quality and resolution in PAT. In LED-based PAT systems in particular, images suffer from poor SNR due to the low power of the LED excitation source. A CNN architecture was first proposed to improve the SNR of reconstructed LED-PAT images, using experimental phantom images for training [107]. A U-Net-based CNN architecture has also been applied to improve the SNR, with high-energy laser images used as ground truths for training [108]. An architecture integrating a CNN with a recurrent neural network (RNN) has also been proposed to enhance the SNR by exploiting spatial information and temporal dependencies [109]. Compared with conventional CNN architectures, the hybrid CNN-RNN architecture exhibited superior performance on experimental phantom images. Following this, a multi-level wavelet CNN (MWCNN) based on the traditional U-Net architecture was devised to improve the quality of LED-PAT images by mapping images acquired with low-fluence excitation to their high-fluence counterparts [110]. Notably, in MWCNN the pooling and up-sampling operations of the contracting and expanding paths are replaced by the discrete wavelet transform and its inverse, respectively, to prevent the loss of spatial information. The schematic of the MWCNN architecture is depicted in Fig. 3b. MWCNN performed well in removing the noise in low-fluence experimental and in vivo images, with an eightfold improvement in frame rate. Similarly, a simple U-Net architecture has been used to enhance low-energy laser images, and its ability to improve the SNR has been validated on in vivo deep-tissue images [111]. In PAT, a greater imaging depth can be achieved by using excitation sources in the NIR-II spectral window [112, 113]; however, background noise and tissue scattering remain major limitations. To overcome this challenge, a DL architecture named IterNet was used, and its performance was evaluated on in vivo vasculature images [114]. In conventional PAT settings, image quality is also marred by aberrations caused by speed-of-sound variations. A U-Net-based approach has been used to correct these aberrations, and its performance was validated on in vivo human forearm images [115]. In addition, a CNN architecture named the PA-Fuse model was implemented to reconstruct PAT images by fusing deep features from the images generated by Tikhonov and total variation (TV) regularization methods [116].
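The motivation for replacing pooling with a wavelet transform in MWCNN can be checked numerically: 2×2 max pooling keeps one value out of four and cannot be undone, whereas a single-level 2D discrete wavelet transform halves the spatial size while storing four subbands from which the input is recovered exactly. The NumPy sketch below uses the orthonormal Haar wavelet for simplicity; it illustrates the principle and is not the MWCNN implementation.

```python
import numpy as np

def haar_dwt2(x):
    """Single-level orthonormal 2D Haar DWT: (H, W) -> (4, H/2, W/2) approximation + 3 detail subbands."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    approx = (a + b + c + d) / 2
    detail_1 = (a - b + c - d) / 2
    detail_2 = (a + b - c - d) / 2
    detail_3 = (a - b - c + d) / 2
    return np.stack([approx, detail_1, detail_2, detail_3])

def haar_idwt2(s):
    """Exact inverse of haar_dwt2."""
    approx, d1, d2, d3 = s
    h, w = approx.shape
    x = np.empty((2 * h, 2 * w))
    x[0::2, 0::2] = (approx + d1 + d2 + d3) / 2
    x[0::2, 1::2] = (approx - d1 + d2 - d3) / 2
    x[1::2, 0::2] = (approx + d1 - d2 - d3) / 2
    x[1::2, 1::2] = (approx - d1 - d2 + d3) / 2
    return x

rng = np.random.default_rng(0)
img = rng.random((64, 64))
sub = haar_dwt2(img)                                  # (4, 32, 32): nothing discarded
pooled = img.reshape(32, 2, 32, 2).max(axis=(1, 3))   # (32, 32): 3 of every 4 values lost
print(sub.shape, pooled.shape, np.allclose(haar_idwt2(sub), img))
```

In a network, the four subbands are simply treated as extra channels, so the contracting path reduces spatial size without throwing information away.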

DL has also been explored for resolution improvement in PAT. One of the most common problems in circular-scanning PAT is the poor tangential resolution near the SUT surface (i.e., far from the scanning center). To overcome this tangential resolution degradation, an architecture based on FD-UNet, termed the TARES network, has been proposed [117]. Although various other approaches to this problem have been proposed and validated [37, 118–120], the DL approach performs better and requires no hardware changes to the system. The network was trained on simulated images, and its performance was validated on experimental phantom and in vivo brain images (Fig. 3c). The TARES network improved the tangential resolution eightfold in comparison with conventional tangential resolution improvement techniques. Another common problem in multi-UST PAT systems, which use multiple ultrasound transducers, is the need for radius calibration. Recently, a DL architecture termed radius correction PAT (RACOR-PAT) was proposed to eliminate the need for radius calibration in multi-UST PAT systems [121]. The network was trained on hybrid datasets, and it outperforms U-Net and FD-UNet.

One of the major advantages of PAI is its multispectral imaging capability: the concentrations of endogenous chromophores and exogenous probes can be quantified by spectral unmixing of images acquired at multiple wavelengths. However, the optical fluence in an inhomogeneous medium (such as tissue) varies with wavelength, so assuming a constant fluence across wavelengths leads to erroneous quantification when traditional linear unmixing is employed [122]. DL offers a promising means to solve this problem. Residual U-Net is one of the first architectures explored for quantitative PAI (QPAI) of oxygen saturation (sO2) in blood vessels [123]. The network takes as input initial pressure images obtained at different wavelengths and returns the quantified sO2 image. Compared with traditional linear unmixing, Res U-Net exhibited superior performance, with a smaller reconstruction error and a short reconstruction time of 22 ms. Subsequently, U-Net-based architectures such as DR2U-Net [124] and absO2luteU-Net [125] have been devised to map blood oxygen saturation quantitatively and accurately. An architecture termed the Encoder-Decoder Aggregator network (EDA-Net) has also been proposed to quantify oxygen saturation in clinically obtained human breast datasets [122]. Similarly, an approach termed learned spectral decoloring for quantitative photoacoustic imaging (LSD-qPAI), comprising an FCNN, has been devised to compute sO2 [126]. The LSD-qPAI method provides accurate sO2 estimates on both experimental phantoms and in vivo data. Notably, an architecture named DL-eMSOT combines a CNN with an RNN to take advantage of sequential learning for estimating oxygen saturation [127]. For determining oxygen saturation in 3D volumetric PAT imaging, a 3D convolutional encoder-decoder network with skip connections (EDS) has also been successfully demonstrated and evaluated on simulated phantom datasets [128].
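The effect of wavelength-dependent fluence on linear unmixing can be reproduced in a few lines. The NumPy sketch below uses made-up absorption spectra for oxy- and deoxyhaemoglobin at four wavelengths (illustrative numbers, not tabulated haemoglobin values) and a hypothetical fluence that varies with wavelength. It assumes that the initial pressure is proportional to the absorption coefficient times the fluence, with a wavelength-independent Grüneisen parameter. With uniform fluence, least-squares unmixing recovers the true sO2 of 0.7; with the wavelength-dependent fluence, it returns roughly 0.88, the kind of bias that the learned approaches above aim to correct.

```python
import numpy as np

# Illustrative absorption spectra at four wavelengths (arbitrary units, NOT real
# haemoglobin data). Column 0: oxyhaemoglobin, column 1: deoxyhaemoglobin.
spectra = np.array([[0.3, 1.0],
                    [0.6, 0.8],
                    [0.9, 0.7],
                    [1.1, 0.5]])

def linear_unmix_so2(p0):
    """Least-squares unmixing of multiwavelength p0 into [HbO2, Hb]; returns sO2."""
    conc, *_ = np.linalg.lstsq(spectra, p0, rcond=None)
    return conc[0] / conc.sum()

true_conc = np.array([0.7, 0.3])                 # true sO2 = 0.7
mu_a = spectra @ true_conc                       # absorption coefficient per wavelength
fluences = {
    "uniform": np.ones(4),
    "wavelength-dependent": np.array([0.7, 0.8, 0.9, 1.0]),   # hypothetical fluence at depth
}

results = {}
for name, phi in fluences.items():
    p0 = mu_a * phi                              # p0 proportional to mu_a times fluence
    results[name] = float(linear_unmix_so2(p0))
    print(f"{name}: estimated sO2 = {results[name]:.3f}")
```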

Post-processing approaches employing DL have also been applied to image segmentation and disease detection in PAT. The feasibility of segmenting breast cancer PAT images was first demonstrated using architectures such as AlexNet and GoogLeNet [129]. Subsequently, a CNN-based partially learned algorithm was proposed for segmenting vasculature, and it outperformed traditional segmentation schemes [130]. A CNN architecture termed sparse UNet (S-UNet) was then put forward to automate the segmentation of vasculature in clinically obtained multispectral optoacoustic tomography (MSOT) images [131]. The S-UNet incorporates an embedded wavelength selection module that selects the wavelengths most important for the segmentation task; this module also reduces the scanning time and the volume of data acquired in multispectral imaging scenarios. Similarly, an automatic CNN segmentation network was proposed for segmenting the mouse boundary in a multispectral hybrid optoacoustic and ultrasound imaging (OPUS) system [132]. Compared with manual segmentation, the CNN-based architecture exhibits superior boundary segmentation in in vivo mouse brain, liver and kidney images; a representative image is shown in Fig. 3d. The same CNN segmentation network was later extended to whole-body mouse OPUS images, where it segmented the boundary robustly [133]. A mask generation technique for confidence estimation was also proposed using U-Net to improve quantification accuracy [134].

In PAT, the application of DL to disease diagnosis was first demonstrated using deep neural networks for prostate cancer detection in multispectral tomographic images [135]. Following this, the Inception-ResNet-v2 CNN was used for cancer detection in ex vivo histopathological prostate tissues [136]. This network uses transfer learning to transfer domain features learned from a thyroid dataset to prostate cancer detection, and it was later extended to the 3D photoacoustic domain [137]. A semi-supervised machine learning (ML) approach was also proposed to detect and remove hair that hampers the visibility of the underlying blood vessels, using prior knowledge of the orientation similarity of adjoining hairs [138]. This approach was successfully applied to experimental data to improve the visibility of the underlying blood vessels.

Direct processing using deep learning

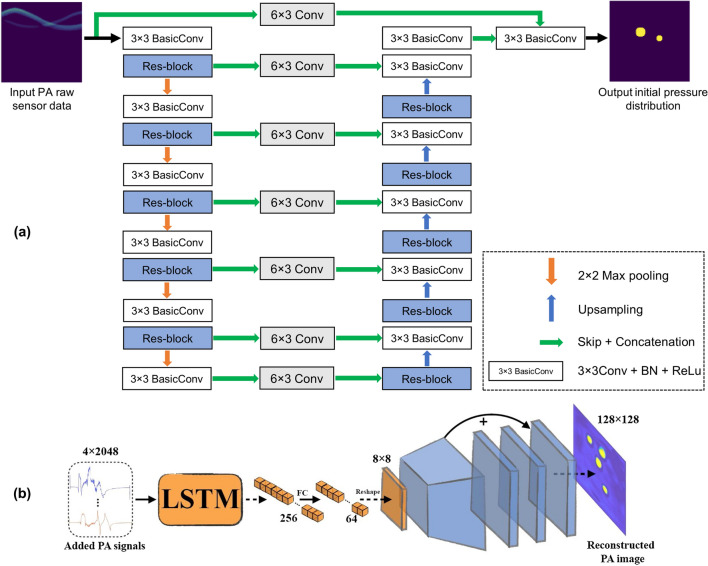

In direct processing, DL is employed to map the raw PA signals directly to initial pressure maps without conventional reconstruction methods. In PAT, direct processing with DL was first demonstrated by applying U-Net to reconstruct the initial pressure distribution from synthetically generated data of a 128-element linear-array transducer [139]. Following this, a CNN-based approach comprising densely connected convolution layers was applied to estimate the speed-of-sound (SOS) compensated initial pressure distribution from PA sinograms [140]. Similarly, a modified U-Net called end-to-end Res-U-Net was proposed to solve the inverse problem in PAT [141]. This network incorporates residual blocks in both the contracting and expanding paths of the U-Net to prevent degradation of image quality, and it improved the PSNR by 18%. The schematic of the end-to-end Res-U-Net is shown in Fig. 4a. In this approach, the Res-U-Net was trained on a simulated dataset and validated on experimental phantom images. A pixel-wise DL approach (Pixel-DL), combining pixel-wise interpolation with the FD-UNet, was then proposed to reconstruct artifact-free PAT images in a limited-view PAT system, and it outperformed the FD-UNet-based post-processing approach [142]. Similarly, for real-time reconstruction in limited-view linear-array PAT systems, a U-Net-based network, upgU-Net, was proposed to reconstruct PAT images from 3D-transformed, pre-delayed PA channel data [143]. Compared with conventional reconstruction methods and U-Net, upgU-Net achieved a higher structural similarity on both simulated and experimental data. To reconstruct images from multifrequency sensor data, a CNN termed DU-Net has been proposed; it consists of two stacked U-Nets with an auxiliary loss that constrains the first U-Net [144]. The VGG16 CNN has also been used to detect point sources directly from raw PA data [145], achieving a point-source detection accuracy of 96.67% on experimental phantom data. Similarly, an encoder-decoder architecture has been devised for target localization directly from the acquired PA signals [146]. To enable real-time reconstruction in PAT systems employing a single data acquisition channel, a DL architecture comprising an RNN (to extract semantic information) and a CNN (to convert the semantic information into an image) (Fig. 4b) was recently proposed, and its performance was validated on both synthetic and experimental data [147].
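One plausible reading of the pre-delayed channel data used as network input in [143] is the per-pixel, time-of-flight-aligned channel data that delay-and-sum would otherwise sum. The NumPy sketch below builds such a three-dimensional cube (depth × lateral position × channel) for an assumed 32-element linear array and checks that summing over the channel axis reproduces a DAS image. The array geometry, sampling rate, speed of sound and the function name `pre_delay` are illustrative assumptions, and the exact transformation used by upgU-Net may differ.

```python
import numpy as np

def pre_delay(channel_data, det_x, px_x, px_z, c=1540.0, fs=40e6):
    """Map (n_ch, n_t) channel data to a (n_z, n_x, n_ch) cube of time-of-flight-aligned samples.

    Linear array along x at z = 0, one-way (PA) propagation. Summing the last axis gives DAS.
    """
    n_ch, n_t = channel_data.shape
    zz, xx = np.meshgrid(px_z, px_x, indexing="ij")                      # (n_z, n_x)
    dist = np.sqrt((xx[..., None] - det_x) ** 2 + zz[..., None] ** 2)     # (n_z, n_x, n_ch)
    idx = np.round(dist / c * fs).astype(int)
    valid = idx < n_t
    ch = np.broadcast_to(np.arange(n_ch), dist.shape)
    cube = np.zeros(dist.shape)
    cube[valid] = channel_data[ch[valid], idx[valid]]
    return cube

c, fs = 1540.0, 40e6
det_x = (np.arange(32) - 15.5) * 0.3e-3          # element positions (m), 0.3 mm pitch
px_x = np.linspace(-3e-3, 3e-3, 21)              # lateral pixel positions (m)
px_z = np.linspace(5e-3, 15e-3, 21)              # depth pixel positions (m)

# Idealized channel data for a point source located at pixel (row 10, column 10).
src_x, src_z = px_x[10], px_z[10]
channel_data = np.zeros((32, 1024))
tof = np.round(np.sqrt((src_x - det_x) ** 2 + src_z ** 2) / c * fs).astype(int)
channel_data[np.arange(32), tof] = 1.0

cube = pre_delay(channel_data, det_x, px_x, px_z, c, fs)   # network input in this reading
das_image = cube.sum(axis=-1)                              # conventional DAS image
print(cube.shape, np.unravel_index(das_image.argmax(), das_image.shape))
```

Giving the network the unsummed cube rather than the DAS image leaves it free to learn its own, possibly nonlinear, combination across channels.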

Fig. 4.

a Deep learning architecture of the end-to-end Res-U-Net employed to reconstruct PAT images from PA sinograms. Reprinted with permission from OSA [141]. b Deep learning architecture used for real-time reconstruction in single channel PAT system. Reprinted with permission from SPIE [147]

As a shift from the conventional paradigm of employing DL architectures for the direct signal to image transformations, DL architectures have also been applied at different stages of reconstruction and integrated to enhance the reconstructed image quality. For removing the artifacts in a limited view imaging system, a DL technique employing a CNN architecture for direct processing coupled with a CNN for post-processing has been demonstrated [148]. In this methodology, a feature projection network was proposed for mapping the image from raw data and a conventional U-Net was employed to improve the quality of the images. Although this technique exhibits superior performance, optimizing both networks is computationally costly. Alternatively, DL has also been used as regularizers in model-based learning and reconstruction, where forward and adjoint operators correspond to the system’s physical parameters. Although model-based reconstructions produce high reconstruction quality images, the regularizers employed often fail to handle the variations encountered in complex experimental scenarios. To optimize the regularizer term for compressed sensing in PAT, a CNN architecture based on U-Net was first employed and was compared with the l1 minimization [149]. DL was also applied to replace total variation (TV) minimization in the deep gradient descent (DGD) algorithm to correct the artifacts and improve the quality of reconstructed images [150]. This method exhibited superior performance in comparison with the conventional DGD and was also verified on experimental images. Following this, a learned primal–dual (LPD) method with CNN replacing the primal and dual-domain was proposed to perform both reconstruction and segmentation in limited view detection geometry [130]. 
Similarly, to reconstruct limited-view PAT images while suppressing artifacts, a fast forward PAT (FF-PAT) network was devised; it reconstructed images 32 times faster than TV-regularized reconstruction [151]. A simultaneous reconstruction network (SR-Net), a CNN that reconstructs both the initial pressure and the speed-of-sound (SOS) distribution through an iterative fusion technique, was also proposed [152]. Furthermore, an RNN-based architecture called the recurrent inference machine has been combined with k-space methods to solve the inverse problem at an accelerated rate [153]. DL-based direct processing techniques have also been explored for disease diagnosis and detection directly from PA signals. A deep neural network with fully connected layers was employed to estimate the size of adipocytes in human tissue directly from the acquired PA spectrum [154]. In this approach, a relationship between the PA spectrum and adipocyte size was established by training the network on a dataset obtained from ex vivo human adipose tissue. Compared with the traditional method, the proposed method estimated adipocyte size more accurately.
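The idea of learned regularization in model-based reconstruction can be illustrated with an unrolled iterative scheme: each iteration takes a gradient step on the data-fidelity term and then applies an update that, in the learned variants described above, would be a trained CNN. The numpy sketch below is only an illustration under stated assumptions, not the method of [149] or [150]: a small random matrix `A` stands in for the PA forward operator, and the hypothetical `learned_update` is implemented as classical ℓ1 soft-thresholding (an ISTA step) in place of a trained network.

```python
import numpy as np

def soft_threshold(x, lam):
    """Proximal operator of lam * ||x||_1 (a classical, hand-crafted regularizer)."""
    return np.sign(x) * np.maximum(np.abs(x) - lam, 0.0)

def learned_update(x, grad, step, lam=0.01):
    # Hypothetical placeholder for a trained CNN mapping (x, grad) to the next
    # iterate; here it is a fixed proximal-gradient (ISTA) step.
    return soft_threshold(x - step * grad, step * lam)

def unrolled_reconstruction(A, y, n_iter=200):
    """Iterate x_{k+1} = G(x_k, A^T (A x_k - y)) starting from the adjoint image."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2      # 1 / Lipschitz constant of the gradient
    x = A.T @ y                                  # adjoint (back-projection) initialization
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)                 # gradient of 0.5 * ||A x - y||^2
        x = learned_update(x, grad, step)
    return x

rng = np.random.default_rng(0)
n_pixels, n_meas = 64, 32                        # fewer measurements than unknowns
A = rng.standard_normal((n_meas, n_pixels)) / np.sqrt(n_meas)
x_true = np.zeros(n_pixels)
x_true[[5, 20, 41]] = [1.0, 0.7, 0.5]            # sparse toy "absorbers"
y = A @ x_true

x_adj = A.T @ y
x_hat = unrolled_reconstruction(A, y)
print("adjoint error:", np.linalg.norm(x_adj - x_true))
print("unrolled error:", np.linalg.norm(x_hat - x_true))
```

In a learned scheme, `learned_update` would be replaced by a network and a fixed number of iterations would be unrolled and trained end to end, so the regularizer adapts to the data instead of being fixed by hand.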

Another imaging modality similar to PAT is thermoacoustic tomography (TAT), in which ultrasound waves are induced by pulsed microwave excitation instead of laser excitation. Recently, a direct processing technique has been demonstrated to map the acquired signals to tomographic images in TAT [155]. In this approach, a CNN based on an encoder-decoder architecture, called TAT-Net, was used to reconstruct the initial pressure distribution maps directly from the sinograms. The network was trained on a synthetically generated dataset and outperformed other DL architectures.

Hybrid-processing using deep learning

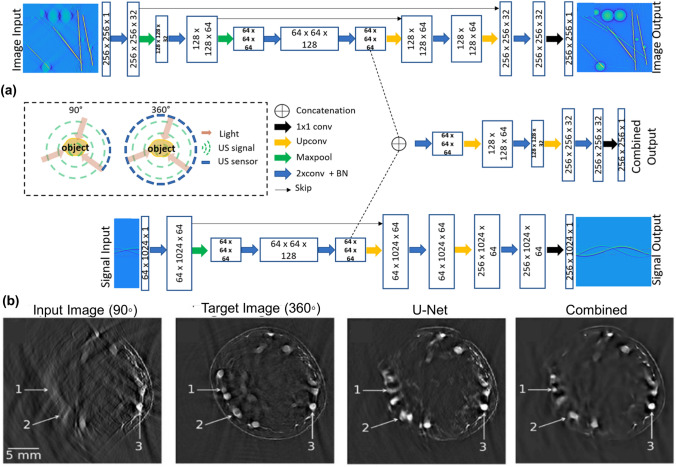

In the hybrid processing approach, DL is used to reconstruct PAT images by taking both the raw PA signals and conventionally reconstructed images as input. Hybrid processing DL models in PAT were introduced with a GAN architecture called knowledge infused GAN (KI-GAN) [156]. In KI-GAN, better PAT reconstruction was obtained by combining information from the raw PA signals with textural information, which was provided by reconstructing the PA data using a conventional DAS algorithm. As training KI-GAN required a large dataset, synthetic datasets were used. Compared with the conventional delay-and-sum beamformer and U-Net-based reconstruction, KI-GAN performed better on both fully sampled and sparsely sampled PA data. Following this, a DL architecture called Y-Net was proposed to reconstruct PAT images by integrating features from both the raw PA signals and conventional delay-and-sum beamformed images [157]. Y-Net consists of two encoder paths that embed textural and physical features and a decoder path that generates the output. Like KI-GAN, Y-Net was trained on a simulated dataset, and its performance was evaluated on synthetic data. Similarly, to reconstruct images from sparsely acquired PA data, an architecture termed attention-steered network (AS-Net) was demonstrated [158]. In AS-Net, the high-dimensional features extracted by a semantic feature extraction module are fused into the decoder path of the PA reconstruction module to achieve better reconstruction quality. Furthermore, a folded transformation technique was applied to convert the asymmetric dimensions of the PA sinograms into a symmetric input for the basic PA reconstruction module. 
The performance of AS-Net was verified on both simulated phantom and experimental in vivo datasets. Hybrid deep learning approaches have also been explored for improving image quality in limited-view conditions. Recently, a CNN-based approach (Fig. 5a) was demonstrated for enhancing the quality of optoacoustic images by combining information from conventional reconstructions and time-domain data [159]. The network was evaluated on limited-view in vivo human finger images (Fig. 5b), and it showed a significant improvement in SSIM, PSNR, and MSE compared with the traditional U-Net.
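Hybrid networks such as KI-GAN and Y-Net take the raw sinogram together with a conventional delay-and-sum (DAS) image. A minimal numpy sketch of producing such an input pair is shown below. It assumes a 2D geometry, a linear array along z = 0, a homogeneous speed of sound of 1500 m/s, a 40 MHz sampling rate, and an idealized spike-like sinogram from a point source; the helper names and the `hybrid_inputs` dictionary are illustrative, not the published pipelines.

```python
import numpy as np

C = 1500.0   # assumed speed of sound (m/s)
FS = 40e6    # assumed sampling rate (Hz)

def simulate_point_sinogram(src, det_x, n_samples):
    """Idealized sinogram: each element records a unit spike at the time of flight."""
    sino = np.zeros((len(det_x), n_samples))
    for i, dx in enumerate(det_x):
        dist = np.hypot(src[0] - dx, src[1])       # elements lie on the line z = 0
        sino[i, int(round(dist / C * FS))] = 1.0
    return sino

def delay_and_sum(sino, det_x, grid_x, grid_z):
    """Conventional DAS: for each pixel, sum every channel at its time-of-flight sample."""
    n_samples = sino.shape[1]
    X, Z = np.meshgrid(grid_x, grid_z)             # rows = depth, columns = lateral
    image = np.zeros_like(X)
    for i, dx in enumerate(det_x):
        idx = np.rint(np.hypot(X - dx, Z) / C * FS).astype(int)
        valid = idx < n_samples
        image[valid] += sino[i, idx[valid]]
    return image

det_x = np.linspace(-10e-3, 10e-3, 64)             # 64 elements over a 20 mm aperture
grid_x = np.linspace(-10e-3, 10e-3, 101)           # 0.2 mm pixels
grid_z = np.linspace(1e-3, 21e-3, 101)
sino = simulate_point_sinogram((0.0, 10e-3), det_x, n_samples=1024)
das = delay_and_sum(sino, det_x, grid_x, grid_z)

# A hybrid (e.g. Y-Net-style) model would receive both representations.
hybrid_inputs = {"raw": sino, "das": das}
row, col = np.unravel_index(np.argmax(das), das.shape)
print(f"DAS peak at x = {grid_x[col] * 1e3:.1f} mm, z = {grid_z[row] * 1e3:.1f} mm")
```

The raw sinogram keeps all physical information but is not spatially aligned with the image, while the DAS image is spatially aligned but carries reconstruction artifacts; feeding both lets a network draw on each.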

Fig. 5.

a CNN architecture used for enhancing the PAT image using both conventionally reconstructed PAT image and time domain data. b In vivo human finger image enhanced using the CNN architecture. All figures reprinted with permission from OSA [159]
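The comparison in [159] is reported in terms of SSIM, PSNR, and MSE. A minimal numpy sketch of these metrics is given below, assuming images normalized to [0, 1]. Note that `global_ssim` evaluates SSIM over the whole image as a single window, a simplification of the standard index, which averages local windowed values (as done, for example, by `skimage.metrics.structural_similarity`).

```python
import numpy as np

def mse(ref, img):
    """Mean squared error between a reference and a test image."""
    return float(np.mean((ref - img) ** 2))

def psnr(ref, img, data_range=1.0):
    """Peak signal-to-noise ratio in dB for images spanning data_range."""
    err = mse(ref, img)
    return float("inf") if err == 0 else float(10.0 * np.log10(data_range ** 2 / err))

def global_ssim(ref, img, data_range=1.0):
    """SSIM evaluated over the whole image as one window (simplified)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mu_x, mu_y = ref.mean(), img.mean()
    var_x, var_y = ref.var(), img.var()
    cov = np.mean((ref - mu_x) * (img - mu_y))
    return float((2 * mu_x * mu_y + c1) * (2 * cov + c2)
                 / ((mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)))

rng = np.random.default_rng(1)
ref = np.zeros((64, 64))
ref[:, 30:34] = 1.0                               # toy vessel
noisy = np.clip(ref + 0.1 * rng.standard_normal(ref.shape), 0, 1)
print(f"MSE={mse(ref, noisy):.4f}  PSNR={psnr(ref, noisy):.1f} dB  "
      f"SSIM={global_ssim(ref, noisy):.3f}")
```

Lower MSE, higher PSNR, and SSIM closer to 1 all indicate an output closer to the reference; SSIM additionally rewards preserved structure (means, variances, and correlation) rather than only pixel-wise error.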

Deep learning in photoacoustic microscopy

Because PAM does not require reconstruction algorithms, enhancing PAM images with mathematical and image processing tools is less widespread than in PAT. However, PAM also employs various algorithms for image improvement and segmentation. The synthetic aperture focusing technique, based on the concept of a virtual detector, is commonly applied to improve out-of-focus resolution in AR-PAM images [73, 160, 161]. Various filtering methods and noise-reduction algorithms have also been proposed for both OR- and AR-PAM images. Such techniques have been applied for deblurring, correcting motion artifacts, and reducing aberrations caused by the skull [162–165]. Images acquired using PAM are often used to determine oxygen saturation and hemodynamic responses. To that end, image processing and thresholding algorithms have also been proposed for vascular tree extraction and vessel segmentation [166]. Such image processing algorithms have helped improve PAM images; however, they also have limitations, such as long processing times, decreased signal-to-noise ratio, or a lack of adaptability to diverse sets of images. Hence, improved algorithms are constantly being proposed.

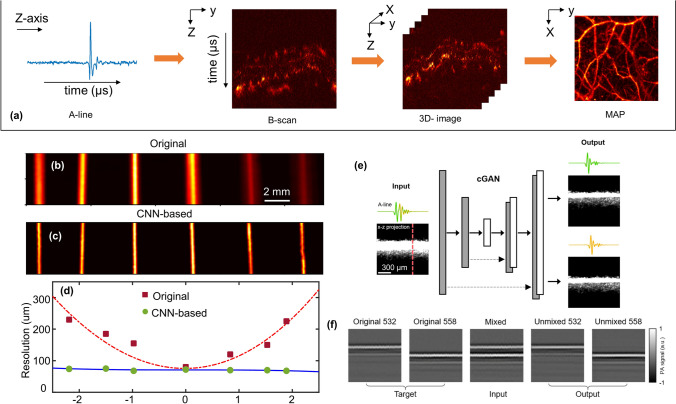

Figure 6a briefly describes image acquisition in PAM. Multiple time-resolved PA signals (A-lines), collected by moving the confocally aligned optical illumination and ultrasound detection in one direction, are placed side by side to form B-scan images. B-scans therefore contain depth-wise information of the target, i.e., along the axial direction of the transducer. Three-dimensional images of the target are generated by acquiring and combining multiple B-scans. Generally, two-dimensional projections, i.e., maximum amplitude projection (MAP) or maximum intensity projection (MIP) images, are used to visualize the sample. Various machine learning algorithms have recently been proposed to enhance PAM images. Typically, they are applied to two-dimensional PAM images, which can be either B-scans or MAPs of the final three-dimensional volume. Hence, based on whether the processing is done on time-resolved signals or on final images, the algorithms are classified into two categories: B-scan processing and MAP processing.
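The acquisition geometry described above maps directly onto array operations. The sketch below assumes a unidirectional raster scan in which consecutive groups of `n_x` A-lines form one B-scan (fast axis x), and takes the MAP as the maximum absolute amplitude along depth. Real systems often apply envelope detection (e.g., a Hilbert transform) to each A-line first, which is omitted here; function names are illustrative.

```python
import numpy as np

def form_volume(a_lines, n_x, n_y):
    """Stack raster-scanned A-lines of shape (n_y * n_x, n_t) into a (y, x, t) volume.

    Consecutive groups of n_x A-lines form one B-scan, so volume[j] is the j-th
    B-scan with shape (n_x, n_t): lateral position versus time (depth).
    """
    n_t = a_lines.shape[1]
    return a_lines.reshape(n_y, n_x, n_t)

def maximum_amplitude_projection(volume):
    """Collapse the depth (time) axis by taking the maximum absolute amplitude."""
    return np.abs(volume).max(axis=-1)

n_x, n_y, n_t = 4, 3, 5
a_lines = np.zeros((n_x * n_y, n_t))
a_lines[7, 2] = -3.0                      # one absorber seen by the 8th A-line
volume = form_volume(a_lines, n_x, n_y)
b_scan = volume[1]                        # second B-scan, shape (n_x, n_t)
map_image = maximum_amplitude_projection(volume)
print(map_image)
```

B-scan processing operates on slices such as `volume[j]`, which still contain the time (depth) axis, whereas MAP processing operates on the 2D `map_image`, in which depth information has been discarded.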

Fig. 6.

a Sequence of acquisition of PA signals for image formation in PAM. b Original and c CNN-processed MAP images of same-sized horse hairs placed at different depths from the focus. d Resolution of the AR-PAM system at various distances from the focus before and after processing by the CNN. b–d Reprinted with permission from OSA [170]. e Schematic of the cGAN used for spectral unmixing, showing examples of input and output B-scans and one representative A-line. f Representative B-scans showing the target, input, and output of the network shown in e for unmixing PA signals from 532 and 558 nm laser pulses. e, f Reprinted with permission from OSA [171]

B-scan processing

Machine learning has been used for processing time-resolved signals in both OR-PAM and AR-PAM. Initially, a dictionary-learning-based approach was developed for denoising OR-PAM images. Using the K-means singular value decomposition (K-SVD) method, an algorithm was developed and applied to the B-scans of a 3D image: an adaptive dictionary was trained on noisy images and then used to denoise new photoacoustic images [167]. Although the algorithm denoised the images efficiently, some weak vessels remained difficult to discern. Subsequently, a dictionary-learning-based method was also applied to remove reverberation artifacts from B-scans [168]. Here, dictionary learning was employed to adaptively learn a basis describing the PAM signal, eliminating the need to know the point spread function of the transducer. In vitro and in vivo validation showed that this method can suppress artifacts caused by skull reverberations and yield depth-resolved images without ghosting artifacts, which helped in visualizing microvasculature in deeper layers. Later, another machine learning approach to reduce skull-induced aberrations was proposed. This method, based on the vector space similarity (VSS) model, was employed to compensate for skull-induced aberrations in PA A-line signals [169]. Tests on a simulated numerical phantom demonstrated its ability to compensate for distortions such as amplitude attenuation, time shift, and reflections.
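The dictionary-learning idea behind [167] can be sketched with the generic K-SVD algorithm: alternate between sparse coding of training patches with orthogonal matching pursuit (OMP) and a rank-1 SVD update of each dictionary atom. The numpy sketch below is illustrative only; it assumes 1D segments of A-lines as patches and toy bipolar pulses as data, and the patch length, stride, number of atoms, and sparsity `k` are arbitrary choices, not the settings of [167].

```python
import numpy as np

def omp(D, x, k):
    """Orthogonal matching pursuit: code x with at most k columns (atoms) of D."""
    residual, support, sol = x.astype(float), [], np.zeros(0)
    coef = np.zeros(D.shape[1])
    for _ in range(k):
        if np.linalg.norm(residual) < 1e-10:
            break
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j in support:
            break
        support.append(j)
        sol, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        residual = x - D[:, support] @ sol
    coef[support] = sol
    return coef

def ksvd(Y, n_atoms, k, n_iter=5, seed=0):
    """Learn a dictionary D with unit-norm atoms such that Y ≈ D X with k-sparse X."""
    rng = np.random.default_rng(seed)
    D = Y[:, rng.choice(Y.shape[1], n_atoms, replace=False)].astype(float)
    D /= np.linalg.norm(D, axis=0) + 1e-12
    for _ in range(n_iter):
        X = np.stack([omp(D, y, k) for y in Y.T], axis=1)      # sparse coding stage
        for j in range(n_atoms):                                # atom-by-atom update
            users = np.nonzero(X[j])[0]
            if users.size == 0:
                continue
            # Residual of the signals using atom j, with atom j's contribution removed
            E = Y[:, users] - D @ X[:, users] + np.outer(D[:, j], X[j, users])
            U, s, Vt = np.linalg.svd(E, full_matrices=False)
            D[:, j], X[j, users] = U[:, 0], s[0] * Vt[0]
    return D

def segments(b_scan, patch, stride):
    """Overlapping 1D segments of every A-line (row), stacked as columns."""
    return np.stack([row[s:s + patch] for row in b_scan
                     for s in range(0, row.size - patch + 1, stride)], axis=1)

def denoise_b_scan(b_scan, D, k, stride=4):
    """Sparse-code overlapping segments of each A-line and average the overlaps."""
    patch = D.shape[0]
    out, weight = np.zeros(b_scan.shape), np.zeros(b_scan.shape)
    for r, row in enumerate(b_scan):
        for s in range(0, row.size - patch + 1, stride):
            out[r, s:s + patch] += D @ omp(D, row[s:s + patch], k)
            weight[r, s:s + patch] += 1
    return out / np.maximum(weight, 1)

rng = np.random.default_rng(0)
t = np.arange(128)
clean = np.stack([-(t - c) / 3 * np.exp(-0.5 * ((t - c) / 3) ** 2)
                  for c in rng.integers(20, 108, size=32)])    # toy bipolar PA pulses
noisy = clean + 0.1 * rng.standard_normal(clean.shape)
D = ksvd(segments(noisy, patch=16, stride=8), n_atoms=32, k=2)  # learned from noisy data
denoised = denoise_b_scan(noisy, D, k=2)
print("noisy MSE:", np.mean((noisy - clean) ** 2),
      "denoised MSE:", np.mean((denoised - clean) ** 2))
```

Because each segment is represented by only `k` atoms learned from the data itself, structured pulse content is kept while most of the unstructured noise energy falls outside the sparse representation.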

To enhance the out-of-focus resolution of AR-PAM images, an FD-UNet architecture was trained on simulated B-scans. This DL architecture aimed to improve the system resolution outside the focal plane while simultaneously reducing background noise [170]. Imaging of a phantom consisting of horse hairs placed at various depths showed degraded resolution and reduced signal strength outside the focal zone (Fig. 6b). Processing the B-scans with the trained network (Fig. 6c) showed that the model maintained similar resolution across the entire imaging depth of ~4 mm while reducing background noise and improving signal strength. Figure 6d shows the system resolution at various distances from the focus in both the original and CNN-processed images. Testing the trained model on in vivo rat vasculature images further validated its ability to improve images of complex vascular structures.
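Training pairs for such a network can be generated by blurring sharp synthetic B-scans with a lateral point spread function that widens away from the focus. The sketch below shows one way to do this, assuming a Gaussian-beam model of the acoustic focus; the focal width `w0` (chosen within the ~50–100 µm range quoted for AR-PAM), the Rayleigh range `z_rayleigh`, and the "width = 2σ" convention are illustrative assumptions, not the simulation settings of [170].

```python
import numpy as np

def beam_width(z, z_focus, w0=50e-6, z_rayleigh=0.5e-3):
    """Lateral beam width (m) at depth z for an assumed Gaussian-beam focus."""
    return w0 * np.sqrt(1.0 + ((z - z_focus) / z_rayleigh) ** 2)

def blur_b_scan(sharp, dx, depths, z_focus):
    """Blur a B-scan of shape (n_x, n_z) laterally with a depth-dependent Gaussian PSF."""
    n_x = sharp.shape[0]
    x = np.arange(n_x) * dx
    blurred = np.empty(sharp.shape)
    for iz, z in enumerate(depths):
        sigma = beam_width(z, z_focus) / 2.0            # assume width = 2 * sigma
        W = np.exp(-0.5 * ((x[:, None] - x[None, :]) / sigma) ** 2)
        W /= W.sum(axis=1, keepdims=True)               # each output is a weighted mean
        blurred[:, iz] = W @ sharp[:, iz]
    return blurred

# One point target (e.g. a hair cross-section) at lateral index 50 in each depth column
sharp = np.zeros((101, 3))
sharp[50, :] = 1.0
z_focus = 2e-3
depths = np.array([z_focus, z_focus + 1e-3, z_focus + 2e-3])
blurred = blur_b_scan(sharp, dx=10e-6, depths=depths, z_focus=z_focus)
print(blurred[50])     # peak amplitude drops as the target moves out of focus
```

The pair `(blurred, sharp)` would then serve as (input, target) for training, so the network learns to undo the depth-dependent loss of resolution.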

Recently, a conditional GAN (cGAN) was proposed for temporal and spectral unmixing of PA signals [171]. In this method, B-scans were acquired by irradiating the sample at two wavelengths (532 nm and 558 nm) separated by a short time delay of ~38 ns, which caused the PA signals generated at the two wavelengths to overlap. The cGAN shown in Fig. 6e was trained to unmix the PA signals acquired at these two wavelengths: the overlapping PA signal was provided as the network input, and the network was trained to output two separate signals, one for each wavelength. Examples of target, input, and output B-scans (Fig. 6f) confirmed that the network could unmix overlapping A-lines with a very small error (~5%). In vivo mouse brain imaging further validated the network performance: the SSIM between the cGAN-unmixed images and the ground-truth images was close to 1 throughout the field of view. This can enable faster and more accurate calculation of haemoglobin concentration, oxygen saturation, and blood flow. By breaking the physical limit of the A-line rate, this method offers a way to reduce scan time in functional PAM studies [171]. Apart from image enhancement, DL has also been employed to assess rectal cancer in endoscopic PA images. A CNN model was developed and trained to distinguish between normal and malignant colorectal tissue [172]. An endorectal probe was used to acquire AR-PAM/US images. In vivo patient data showed that, by employing the CNN, the PAM/US system was able to differentiate between residual tumours, normal rectal tissue, and tissue of treatment responders.
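The forward mixing that the cGAN learns to invert can be written as the sum of the two single-wavelength A-lines, with the 558 nm signal delayed by ~38 ns. The sketch below builds one (input, target) training pair under assumed conditions: a 500 MHz sampling rate and Gaussian-derivative pulses as idealized PA signals. It illustrates the data format only and is not the data pipeline of [171].

```python
import numpy as np

FS = 500e6        # assumed digitizer sampling rate (Hz)
DELAY = 38e-9     # delay between the 532 nm and 558 nm pulses (s)

def pa_pulse(n_samples, center, width=8.0, amplitude=1.0):
    """Bipolar Gaussian-derivative pulse, an idealized PA A-line."""
    t = (np.arange(n_samples) - center) / width
    return -amplitude * t * np.exp(-0.5 * t ** 2)

def make_training_pair(a_532, a_558):
    """Return the overlapped single-channel input and the two-channel target."""
    shift = int(round(DELAY * FS))              # 38 ns -> 19 samples at 500 MHz
    delayed_558 = np.roll(a_558, shift)
    delayed_558[:shift] = 0.0                   # no wrap-around from the end
    mixed = a_532 + delayed_558                 # what the transducer records
    target = np.stack([a_532, delayed_558])     # what the network should separate
    return mixed, target

def relative_error(pred, target):
    """Relative L2 error, a simple way to quantify unmixing accuracy."""
    return float(np.linalg.norm(pred - target) / np.linalg.norm(target))

a_532 = pa_pulse(256, center=100)
a_558 = pa_pulse(256, center=100, amplitude=0.8)  # e.g. different absorption
mixed, target = make_training_pair(a_532, a_558)
```

With pulses a few tens of samples long and a 19-sample delay, the two responses overlap in `mixed`, which is why a learned separation is needed instead of simple time gating.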

Maximum amplitude projection image processing

Visualization of PAM images is usually done by projecting the 3D volumetric images into 2D images. To overcome various artifacts present in these projection images, post-processing techniques are commonly used. Multiple filters and thresholding methods have been used to denoise the images. Using a blind deconvolution method, a ~twofold improvement in the resolution of an OR-PAM system was recently demonstrated [164]. Post-processing of MAP images has also been used for deblurring, noise reduction, motion correction, and vascular tree extraction. The limitations of these algorithms have led to increased interest in DL methods for processing MAP images.
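The blind deconvolution in [164] also estimates the point spread function (PSF). As a simpler illustration of deconvolution-based post-processing of MAP images, the sketch below implements non-blind Richardson-Lucy deconvolution with an assumed, known Gaussian PSF; this swapped-in technique is not the method of [164], and the PSF width and iteration count are arbitrary.

```python
import numpy as np

def gaussian_psf(shape, sigma):
    """Centered, normalized 2D Gaussian PSF (sigma in pixels; assumed PSF model)."""
    y = np.arange(shape[0]) - shape[0] // 2
    x = np.arange(shape[1]) - shape[1] // 2
    psf = np.exp(-0.5 * (y[:, None] ** 2 + x[None, :] ** 2) / sigma ** 2)
    return psf / psf.sum()

def fft_convolve(img, psf):
    """Circular convolution via FFT; psf is centered and has the same shape as img."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))
    return np.real(np.fft.ifft2(np.fft.fft2(img) * otf))

def richardson_lucy(blurred, psf, n_iter=50, eps=1e-12):
    """Non-blind Richardson-Lucy deconvolution (PSF assumed symmetric)."""
    blurred = np.clip(blurred, 0, None)
    estimate = np.full(blurred.shape, blurred.mean())
    for _ in range(n_iter):
        reblurred = np.maximum(fft_convolve(estimate, psf), eps)
        # Correlating with a symmetric PSF is the same as convolving with it
        estimate = np.clip(estimate * fft_convolve(blurred / reblurred, psf), 0, None)
    return estimate

point = np.zeros((64, 64))
point[32, 32] = 1.0                                # a single small absorber
psf = gaussian_psf(point.shape, sigma=2.0)
blurred = fft_convolve(point, psf)
restored = richardson_lucy(blurred, psf)
print(blurred.max(), restored.max())
```

A blind method would alternate a similar image update with an update of the PSF estimate, which matters when the system PSF is not known in advance.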

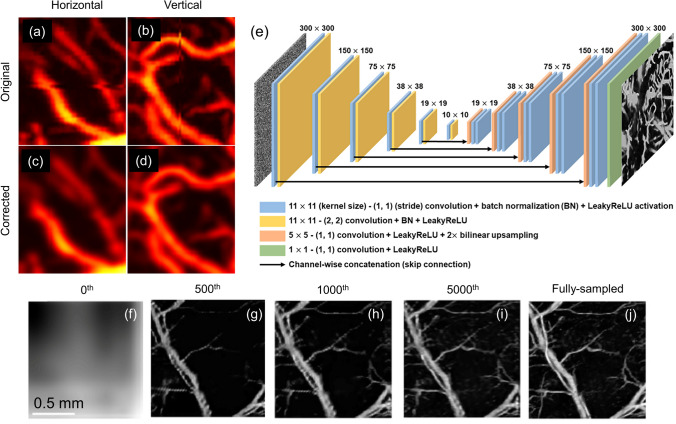

A three-layer CNN was implemented for correcting motion artifacts in OR-PAM MAP images. In this work, experimental OR-PAM images were acquired and distorted to include motion artifacts in both the horizontal and vertical directions [173]. The network was trained with these distorted images as input and the corresponding undistorted images as the target output. Figure 7a–d shows rat brain vasculature images acquired using an OR-PAM system. The initial images contained motion artifacts in the (a) horizontal and (b) vertical directions. The CNN successfully corrected these artifacts in experimental images, as shown in Fig. 7c, d, respectively. CNNs have also been used for deblurring OR-PAM images. A DL-based deblurring method has been shown to be generic, such that a single trained network can be applied to different optical microscopic imaging techniques, including OR-PAM [174]. By training the network on publicly available optical coherence tomography (OCT) images and testing it on simulated and experimental OR-PAM images, the authors showed the universal applicability of the method.
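The training-data preparation described above, distorting motion-free MAP images to create network inputs while keeping the originals as targets, can be sketched as follows. The random line-shift model (a fraction of scan lines displaced by a few pixels) and all parameter values are illustrative assumptions; [173] may have used a different distortion model.

```python
import numpy as np

def add_motion_artifacts(map_img, max_shift=3, fraction=0.2, axis=1, seed=0):
    """Displace randomly chosen scan lines of a MAP image to mimic motion.

    axis=1 shifts rows horizontally (horizontal artifacts);
    axis=0 shifts columns vertically (vertical artifacts).
    """
    rng = np.random.default_rng(seed)
    img = map_img if axis == 1 else map_img.T
    out = img.copy()
    n_lines = img.shape[0]
    lines = rng.choice(n_lines, size=max(1, int(fraction * n_lines)), replace=False)
    for r in lines:
        shift = int(rng.integers(1, max_shift + 1)) * int(rng.choice([-1, 1]))
        out[r] = np.roll(img[r], shift)
    return out if axis == 1 else out.T

rng = np.random.default_rng(42)
clean = rng.random((32, 32))                         # stand-in for a motion-free MAP image
distorted_h = add_motion_artifacts(clean, axis=1)    # horizontal displacement of rows
distorted_v = add_motion_artifacts(clean, axis=0)    # vertical displacement of columns
training_pairs = [(distorted_h, clean), (distorted_v, clean)]
```

Generating artifacts synthetically from real images gives exact ground truth for supervised training, which would otherwise be unavailable for motion-corrupted in vivo scans.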

Fig. 7.

a–d Correction of motion artifacts using a CNN. a, b Raw MAP PA vasculature images containing artifacts in the a horizontal and b vertical directions. c, d Corrected MAP images of a, b, respectively. a–d Reprinted with permission from Springer [173]. e Modified U-Net architecture used in the DIP model, with a 32-channel noise input and a 1-channel recovered MAP image output. f–i Recovered MAP images after different numbers of iterations (given on top) of the DIP model when the j fully sampled MAP image was undersampled by factors of [3, 7]. e–j Reprinted with permission from Elsevier [176]

DL has also been used to shorten OR-PAM acquisition time by accurately reconstructing undersampled OR-PAM images [175]. An FD-UNet-based network was trained and tested on experimentally obtained rat brain vasculature images that were artificially downsampled. Given these downsampled images as input, the network was trained to produce the fully sampled high-resolution images as output. Network performance was validated by reconstructing vasculature images containing only 2–20% of the original number of pixels and comparing them with fully sampled images. It was therefore concluded that this method can reduce scan time 5- to 50-fold when imaging speed is limited by the pulse repetition rate of the laser. This work was further extended using deep image prior (DIP), an unsupervised DL technique [176]. In this method, a noise input is passed through a CNN that aims to produce the fully sampled image as output. The CNN output is multiplied by the sampling mask and compared with the original undersampled image, and the resulting loss is used to iteratively update the weights of the CNN. The modified U-Net-based architecture shown in Fig. 7e was used in the DIP model, which was evaluated on mouse vasculature data. Figure 7f–i shows the model output for an image undersampled by factors of 7 and 3 in the x and y directions, respectively, at the 0th, 500th, 1000th, and 5000th iterations. Compared with the fully sampled image (Fig. 7j), the output had high SSIM and peak signal-to-noise ratio (PSNR) even though only 4.76% of the pixels (1/(7 × 3) = 1/21) were used. Although this method required no pre-training or ground-truth images, its performance in reconstructing undersampled images was comparable to that of the pre-trained FD-UNet architecture discussed above. 
Another CNN architecture containing residual blocks, Squeeze-and-Excitation blocks, and a perceptual loss function was also proposed to reconstruct PAM images from sparsely sampled data [177]. Here, the network was trained on downsampled leaf vein images, and its performance was validated on in vivo mouse ear and eye vasculature images.
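The undersampling and the DIP loss described above can be made concrete. The sketch below builds a regular sampling mask that keeps every 3rd row (y) and every 7th column (x), which retains 1/21 ≈ 4.76% of the pixels, and evaluates the masked loss that drives the DIP weight updates. The CNN itself is omitted; `guess` is a hypothetical stand-in for its output, and the image is random data.

```python
import numpy as np

def sampling_mask(shape, step_y, step_x):
    """Boolean mask that keeps every step_y-th row and every step_x-th column."""
    mask = np.zeros(shape, dtype=bool)
    mask[::step_y, ::step_x] = True
    return mask

def dip_loss(network_output, undersampled, mask):
    """Masked MSE: the CNN output is compared with the data only at acquired pixels."""
    diff = (network_output - undersampled)[mask]
    return float(np.mean(diff ** 2))

full = np.random.default_rng(0).random((210, 210))  # stand-in for a fully sampled MAP image
mask = sampling_mask(full.shape, step_y=3, step_x=7)
undersampled = full * mask                            # what the scanner actually acquires
print(f"kept pixels: {100 * mask.mean():.2f}%")       # prints kept pixels: 4.76%
print(f"speed-up for 20% and 2% sampling: {1 / 0.20:.0f}x, {1 / 0.02:.0f}x")

# Unacquired pixels do not enter the loss: this guess matches the data exactly
guess = undersampled.copy()
guess[~mask] = 0.5
print("loss of guess:", dip_loss(guess, undersampled, mask))
```

Because the loss ignores unacquired pixels, nothing in it forces plausible values there; the network architecture itself acts as the prior that fills in the missing pixels during the iterations.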

DL processing of MAP images has also been extended to image segmentation. A hybrid network consisting of a fully convolutional network and a U-Net was applied for vessel segmentation in OR-PAM images. Vasculature images acquired from the mouse ear were manually annotated using LabelMe to train the network [178]. Vascular segmentation using this method showed higher accuracy and robustness than conventional segmentation methods such as thresholding, k-means clustering, and region growing. Recently, multiple CNN architectures have been studied and optimized for denoising PAM images, with network improvements proposed using techniques such as residual learning and batch normalization [179]. Furthermore, DL has also been proposed for super-resolution imaging and for enhancing AR-PAM images to achieve optical resolution [180, 181]. However, most of these works are still at a preliminary stage, and further optimization is needed before these methods can be used in practice.
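Conventional baselines such as thresholding are what the DL segmentation above is compared against. The sketch below shows one such baseline, global Otsu thresholding, together with the Dice coefficient, a common score for comparing a segmentation with a manual annotation. The choice of Otsu's method and of Dice is an illustrative assumption, since the text does not specify them, and the image is a toy example.

```python
import numpy as np

def otsu_threshold(img, n_bins=256):
    """Threshold that maximizes the between-class variance of the histogram (Otsu)."""
    hist, edges = np.histogram(img, bins=n_bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    w0 = np.cumsum(hist).astype(float)            # background pixel count per split
    w1 = w0[-1] - w0                               # foreground pixel count per split
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return edges[1:][np.argmax(between)]          # upper edge of the last background bin

def dice(pred, truth):
    """Dice similarity coefficient between two binary masks."""
    inter = np.logical_and(pred, truth).sum()
    return 2.0 * inter / (pred.sum() + truth.sum())

rng = np.random.default_rng(0)
truth = np.zeros((64, 64), dtype=bool)
truth[10:54, 20:24] = True                         # toy vessel
truth[30:34, 5:60] = True                          # crossing vessel
img = np.where(truth, 0.9, 0.1) + 0.02 * rng.standard_normal(truth.shape)
seg = img >= otsu_threshold(img)
print("Dice vs. ground truth:", dice(seg, truth))
```

On this clean, well-separated toy image a single global threshold suffices; on real MAP images with uneven illumination, noise, and weak vessels, such a threshold tends to fail, which motivates learned segmentation.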

Conclusions and future directions

DL and PAI are both fast-evolving fields with a lot of room for innovation. Combining the two can advance PAI and accelerate its translation from bench to bedside. As a step towards providing a one-stop overview, we have summarized the up-to-date developments of DL in PAT and PAM in this review. Table 1 gives a concise summary of the DL architectures and their corresponding applications in PAT and PAM. One of the most evident trends in this review is the training of DL networks on synthetic datasets. This reliance on synthetic datasets can be attributed to the difficulty of generating large amounts of experimental data and the lack of ground truths. In the future, this reliance can be reduced by simulating datasets that closely resemble experimental scenarios or by training DL architectures on hybrid datasets containing both simulated and experimental data. Furthermore, there is a lack of standard datasets for evaluating the performance of DL models. Establishing a standard dataset that can be simulated and adapted to various PAI configurations will propel DL-based advancement in PAI. Organizations such as the International Photoacoustic Standardisation Consortium (IPASC) are working towards standardization, which may include standardized datasets for widespread adoption and uniformity. Another persistent challenge in PAI is integrating DL models for real-time imaging into existing PAI systems. Because DL techniques are typically customized for a particular PAI configuration, translating them to other systems is difficult. For example, if a network trained for a specific linear-array US probe is used with a different probe, the results may contain unrealistic features, calling the reliability of the model into question. Thus, analyzing the generalizability of a model before adapting it is crucial. 
Another perceptible trend is the use of U-Net in most applications, with results improving as network complexity increases. The published results also suggest that combining DL with conventional reconstruction is a good choice, because it has yielded better results in all the tasks where it has been applied. In particular, better information extraction was achieved when both the time-domain data and the conventionally reconstructed images served as network inputs. An important point to consider when designing a DL architecture is the training time. Because the size of the training data significantly influences training time, it is important to optimize the amount of data before training. Training time can also be reduced by distributing the training over multiple GPUs. As the GPU is central to DL, its specifications, such as the number of CUDA cores, bus interface, memory bandwidth, and clock speed, have to be considered, and choosing an appropriate GPU is key to obtaining optimized performance from DL models. Despite these challenges, DL holds huge potential for advancing PAI. This review is not a conclusion but a beginning to the realm of DL in PAI.

Table 1.

Deep learning architectures used for various applications in photoacoustic imaging

| Modality | Stage of DL application | Objective | Architectures |
|---|---|---|---|
| PAT | Pre-processing | Bandwidth enhancement | FCNN [93], U-Net [94] |
| | | Spectral unmixing | CNN [95] |
| | Post-processing | Sparse sampling | S-Net [96], U-Net [97], DuDoUnet [99], FD-UNet [100], DD-UNet [106], RADL-net [104], CNN [99] |
| | | Limited view | U-Net [103], LV-GAN [101], WGAN [102], DD-UNet [106], CNN [105] |
| | | Noise reduction | CNN [107], U-Net [108, 115], CNN + RNN [109], MWCNN [110], IterNet [114] |
| | | Resolution and image improvement | TARES [117], RACOR-PAT [121], PA-Fuse [116] |
| | | Chromophore quantification | Res-U-Net [123], DR2U-Net [124], absO2luteU-Net [125], EDA-Net [122], LSD-qPAI [126], CNN + RNN [127], EDS [128] |
| | | Segmentation | AlexNet [129], GoogLeNet [129], S-UNet [131], CNN [132, 133], U-Net [134] |
| | | Malady detection | Inception-ResNet-v2 [136, 137], ML [138] |
| | Direct-processing | Reconstruction | U-Net [139], Res-U-Net [141], FD-UNet [142], upgU-Net [143], DU-Net [144], CNN [140, 148], CNN + RNN [147] |
| | | Source detection | VGG16 [145], CNN [146] |
| | | Model-based reconstruction | U-Net [149], CNN [130, 150], FF-PAT [151], SR-Net [152], RNN [153] |
| | | Malady detection | FCNN [154] |
| | Hybrid-processing | Reconstruction | KI-GAN [156], Y-Net [157], AS-Net [158], CNN [159] |
| PAM | B-scan processing | Resolution enhancement | FD-UNet [170] |
| | | Temporal and spectral unmixing | cGAN [171] |
| | | Malady detection | CNN [172] |
| | MAP processing | Motion artifact correction | CNN [173] |
| | | Deblurring | CNN [174] |
| | | Sparse sampling | FD-UNet [175], DIP [176], CNN [177] |
| | | Vessel segmentation | Hybrid (FCN + U-Net) [178] |
| | | Denoising | CNN [179] |
| | | Resolution enhancement | CNN [180], PRU-Net [181] |

Acknowledgements

The authors would like to acknowledge support from the Tier 1 Grant funded by the Ministry of Education in Singapore (RG144/18, RG127/19).

Funding

The authors have no relevant financial interests in the manuscript.

Declarations

Ethical statement

This paper does not contain any studies with human participants or animals performed by any of the authors.

Conflict of interest

The authors declare that they have no conflict of interest.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Das D, Sharma A, Rajendran P, Pramanik M. Another decade of photoacoustic imaging. Phys Med Biol. 2021;66(5):05TR1. doi: 10.1088/1361-6560/abd669. [DOI] [PubMed] [Google Scholar]

- 2.Lin L, Hu P, Tong X, Na S, Cao R, Yuan X, Garrett DC, Shi J, Maslov K, Wang LV. High-speed three-dimensional photoacoustic computed tomography for preclinical research and clinical translation. Nat Commun. 2021;12(1):882. doi: 10.1038/s41467-021-21232-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Attia ABE, Balasundaram G, Moothanchery M, Dinish U, Bi R, Ntziachristos V, Olivo M. A review of clinical photoacoustic imaging: current and future trends. Photoacoustics. 2019;16:100144. doi: 10.1016/j.pacs.2019.100144. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Omar M, Aguirre J, Ntziachristos V. Optoacoustic mesoscopy for biomedicine. Nat Biomed Eng. 2019;3(5):354–370. doi: 10.1038/s41551-019-0377-4. [DOI] [PubMed] [Google Scholar]

- 5.Gottschalk S, Degtyaruk O, Mc Larney B, Rebling J, Hutter MA, Deán-Ben XL, Shoham S, Razansky D. Rapid volumetric optoacoustic imaging of neural dynamics across the mouse brain. Nat Biomed Eng. 2019;3(5):392–401. doi: 10.1038/s41551-019-0372-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Deán-Ben X, Gottschalk S, Mc Larney B, Shoham S, Razansky D. Advanced optoacoustic methods for multiscale imaging of in vivo dynamics. Chem Soc Rev. 2017;46(8):2158–2198. doi: 10.1039/C6CS00765A. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Upputuri PK, Pramanik M. Recent advances toward preclinical and clinical translation of photoacoustic tomography: a review. J Biomed Opt. 2017;22(4):041006. doi: 10.1117/1.JBO.22.4.041006. [DOI] [PubMed] [Google Scholar]

- 8.Zhang P, Li L, Lin L, Shi J, Wang LV. In vivo superresolution photoacoustic computed tomography by localization of single dyed droplets. Light Sci Appl. 2019;8(1):36. doi: 10.1038/s41377-019-0147-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Seong M, Chen SL. Recent advances toward clinical applications of photoacoustic microscopy: a review. Sci China Life Sci. 2020;63:1798–1812. doi: 10.1007/s11427-019-1628-7. [DOI] [PubMed] [Google Scholar]

- 10.Baik JW, Kim JY, Cho S, Choi S, Kim J, Kim C. Super wide-field photoacoustic microscopy of animals and humans in vivo. IEEE Trans Med Imaging. 2020;39(4):975–984. doi: 10.1109/TMI.2019.2938518. [DOI] [PubMed] [Google Scholar]

- 11.Zhang C, Zhao H, Xu S, Chen N, Li K, Jiang X, Liu L, Liu Z, Wang L, Wong KKY, Zou J, Liu C, Song L. Multiscale high-speed photoacoustic microscopy based on free-space light transmission and a MEMS scanning mirror. Opt Lett. 2020;45(15):4312–4315. doi: 10.1364/OL.397733. [DOI] [PubMed] [Google Scholar]

- 12.Li M, Chen J, Wang L. High acoustic numerical aperture photoacoustic microscopy with improved sensitivity. Opt Lett. 2020;45(3):628–631. doi: 10.1364/OL.384691. [DOI] [PubMed] [Google Scholar]

- 13.Liu C, Liang Y, Wang L. Optical-resolution photoacoustic microscopy of oxygen saturation with nonlinear compensation. Biomed Opt Express. 2019;10(6):3061–3069. doi: 10.1364/BOE.10.003061. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Periyasamy V, Das N, Sharma A, Pramanik M. 1064 nm acoustic resolution photoacoustic microscopy. J Biophotonics. 2019;12(5):e201800357. doi: 10.1002/jbio.201800357. [DOI] [PubMed] [Google Scholar]

- 15.Yao JJ, Maslov KI, Puckett ER, Rowland KJ, Warner BW, Wang LV. Double-illumination photoacoustic microscopy. Opt Lett. 2012;37(4):659–661. doi: 10.1364/OL.37.000659. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Chen Q, Xie H, Xi L. Wearable optical resolution photoacoustic microscopy. J Biophotonics. 2019;12(8):e201900066. doi: 10.1002/jbio.201900066. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Allen TJ, Ogunlade O, Zhang E, Beard PC. Large area laser scanning optical resolution photoacoustic microscopy using a fibre optic sensor. Biomed Opt Express. 2018;9(2):650–660. doi: 10.1364/BOE.9.000650. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Jin T, Guo H, Jiang H, Ke B, Xi L. Portable optical resolution photoacoustic microscopy (pORPAM) for human oral imaging. Opt Lett. 2017;42(21):4434–4437. doi: 10.1364/OL.42.004434. [DOI] [PubMed] [Google Scholar]

- 19.Hu S, Maslov K, Wang LV. Second-generation optical-resolution photoacoustic microscopy with improved sensitivity and speed. Opt Lett. 2011;36(7):1134–1136. doi: 10.1364/OL.36.001134. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Moothanchery M, Dev K, Balasundaram G, Bi R, Olivo M. Acoustic resolution photoacoustic microscopy based on MEMS scanner. J Biophotonics. 2019;13:e201960127. doi: 10.1002/jbio.201960127. [DOI] [PubMed] [Google Scholar]

- 21.Cai D, Li Z, Chen S-L. In vivo deconvolution acoustic-resolution photoacoustic microscopy in three dimensions. Biomed Opt Express. 2016;7(2):369–380. doi: 10.1364/BOE.7.000369. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Park S, Lee C, Kim J, Kim C. Acoustic resolution photoacoustic microscopy. Biomed Eng Lett. 2014;4(3):213–222. doi: 10.1007/s13534-014-0153-z. [DOI] [Google Scholar]

- 23.Rajendran P, Sahu S, Dienzo RA, Pramanik M. In vivo detection of venous sinus distension due to intracranial hypotension in small animal using pulsed-laser-diode photoacoustic tomography. J Biophotonics. 2020;13(6):e201960162. doi: 10.1002/jbio.201960162. [DOI] [PubMed] [Google Scholar]

- 24.Kalva SK, Upputuri PK, Pramanik M. High-speed, low-cost, pulsed-laser-diode-based second-generation desktop photoacoustic tomography system. Opt Lett. 2019;44(1):81–84. doi: 10.1364/OL.44.000081. [DOI] [PubMed] [Google Scholar]

- 25.Sharma A, Kalva SK, Pramanik M. A comparative study of continuous versus stop-and-go scanning in circular scanning photoacoustic tomography. IEEE J Sel Top Quantum Electron. 2019;25(1):7100409. doi: 10.1109/JSTQE.2018.2840320. [DOI] [Google Scholar]

- 26.Nishiyama M, Namita T, Kondo K, Yamakawa M, Shiina T. Ring-array photoacoustic tomography for imaging human finger vasculature. J Biomed Opt. 2019;24(9):096005. doi: 10.1117/1.JBO.24.9.096005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Prakash J, Kalva SK, Pramanik M, Yalavarthy PK. Binary photoacoustic tomography for improved vasculature imaging. J Biomed Opt. 2021;26(8):086004. doi: 10.1117/1.JBO.26.8.086004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Yalavarthy PK, Kalva SK, Pramanik M, Prakash J. Non-local means improves total-variation constrained photoacoustic image reconstruction. J Biophotonics. 2021;14(1):e202000191. doi: 10.1002/jbio.202000191. [DOI] [PubMed] [Google Scholar]

- 29.Poudel J, Na S, Wang LV, Anastasio MA. Iterative image reconstruction in transcranial photoacoustic tomography based on the elastic wave equation. Phys Med Biol. 2020;65(5):055009. doi: 10.1088/1361-6560/ab6b46. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Ma X, Peng C, Yuan J, Cheng Q, Xu G, Wang X, Carson PL. Multiple delay and sum with enveloping beamforming algorithm for photoacoustic imaging. IEEE Trans Med Imaging. 2020;39(6):1812–1821. doi: 10.1109/TMI.2019.2958838. [DOI] [PubMed] [Google Scholar]

- 31.Deán-Ben X, Razansky D. Optoacoustic image formation approaches: a clinical perspective. Phys Med Biol. 2019;64(18):18TR01. doi: 10.1088/1361-6560/ab3522. [DOI] [PubMed] [Google Scholar]

- 32.Prakash J, Sanny D, Kalva SK, Pramanik M, Yalavarthy PK. Fractional regularization to improve photoacoustic tomographic image reconstruction. IEEE Trans Med Imaging. 2019;38(8):1935–1947. doi: 10.1109/TMI.2018.2889314. [DOI] [PubMed] [Google Scholar]

- 33.Mozaffarzadeh M, Mahloojifar A, Periyasamy V, Pramanik M, Orooji M. Eigenspace-based minimum variance combined with delay multiply and sum beamforming algorithm: application to linear-array photoacoustic imaging. IEEE J Sel Top Quantum Electron. 2019;25(1):6800608. doi: 10.1109/JSTQE.2018.2856584. [DOI] [Google Scholar]

- 34.Paridar R, Mozaffarzadeh M, Periyasamy V, Basji M, Mehrmodammadi M, Pramanik M, Orooji M. Validation of delay-multiply-and-standard-deviation weighing factor for improved photoacoustic imaging of sentinel lymph node. J Biophotonics. 2019;12(6):e201800292. doi: 10.1002/jbio.201800292. [DOI] [PubMed] [Google Scholar]

- 35. Mozaffarzadeh M, Periyasamy V, Pramanik M, Makkiabadi B. Efficient nonlinear beamformer based on Pth root of detected signals for linear-array photoacoustic tomography: application to sentinel lymph node imaging. J Biomed Opt. 2018;23(12):121604. doi: 10.1117/1.JBO.23.12.121604.

- 36. Gutta S, Kalva SK, Pramanik M, Yalavarthy PK. Accelerated image reconstruction using extrapolated Tikhonov filtering for photoacoustic tomography. Med Phys. 2018;45(8):3749–3767. doi: 10.1002/mp.13023.

- 37. Pramanik M. Improving tangential resolution with a modified delay-and-sum reconstruction algorithm in photoacoustic and thermoacoustic tomography. J Opt Soc Am A. 2014;31(3):621–627. doi: 10.1364/JOSAA.31.000621.

- 38. Prakash J, Raju AS, Shaw CB, Pramanik M, Yalavarthy PK. Basis pursuit deconvolution for improving model-based reconstructed images in photoacoustic tomography. Biomed Opt Express. 2014;5(5):1363–1377. doi: 10.1364/BOE.5.001363.

- 39. Shaw CB, Prakash J, Pramanik M, Yalavarthy PK. Least squares QR-based decomposition provides an efficient way of computing optimal regularization parameter in photoacoustic tomography. J Biomed Opt. 2013;18(8):080501. doi: 10.1117/1.JBO.18.8.080501.

- 40. Kalva SK, Hui ZZ, Pramanik M. Calibrating reconstruction radius in a multi single-element ultrasound-transducer-based photoacoustic computed tomography system. J Opt Soc Am A. 2018;35(5):764–771. doi: 10.1364/JOSAA.35.000764.

- 41. Tian L, Hunt B, Bell MAL, Yi J, Smith JT, Ochoa M, Intes X, Durr NJ. Deep learning in biomedical optics. Lasers Surg Med. 2021;53:748–775. doi: 10.1002/lsm.23414.

- 42. Pradhan P, Guo S, Ryabchykov O, Popp J, Bocklitz TW. Deep learning a boon for biophotonics? J Biophotonics. 2020;13(6):e201960186. doi: 10.1002/jbio.201960186.

- 43. Shen C, Nguyen D, Zhou Z, Jiang SB, Dong B, Jia X. An introduction to deep learning in medical physics: advantages, potential, and challenges. Phys Med Biol. 2020;65(5):05TR01. doi: 10.1088/1361-6560/ab6f51.

- 44. Razzak MI, Naz S, Zaib A. Deep learning for medical image processing: overview, challenges and the future. In: Dey N, Ashour AS, Borra S, editors. Classification in BioApps. Lecture notes in computational vision and biomechanics. Cham: Springer; 2018. pp. 323–350.

- 45. Shen D, Wu G, Suk H-I. Deep learning in medical image analysis. Annu Rev Biomed Eng. 2017;19:221–248. doi: 10.1146/annurev-bioeng-071516-044442.

- 46. Gröhl J, Schellenberg M, Dreher K, Maier-Hein L. Deep learning for biomedical photoacoustic imaging: a review. Photoacoustics. 2021;22:100241. doi: 10.1016/j.pacs.2021.100241.

- 47. Manwar R, Zafar M, Xu Q. Signal and image processing in biomedical photoacoustic imaging: a review. Optics. 2021;2(1):1–24. doi: 10.3390/opt2010001.

- 48. DiSpirito A III, Vu T, Pramanik M, Yao J. Sounding out the hidden data: a concise review of deep learning in photoacoustic imaging. Exp Biol Med. 2021;246(12):1355–1367. doi: 10.1177/15353702211000310.

- 49. Deng H, Qiao H, Dai Q, Ma C. Deep learning in photoacoustic imaging: a review. J Biomed Opt. 2021;26(4):040901. doi: 10.1117/1.JBO.26.4.040901.

- 50. Yang C, Lan H, Gao F, Gao F. Review of deep learning for photoacoustic imaging. Photoacoustics. 2021;21:100215. doi: 10.1016/j.pacs.2020.100215.

- 51. Hsu K-T, Guan S, Chitnis PV. Comparing deep learning frameworks for photoacoustic tomography image reconstruction. Photoacoustics. 2021;23:100271. doi: 10.1016/j.pacs.2021.100271.

- 52. Hauptmann A, Cox B. Deep learning in photoacoustic tomography: current approaches and future directions. J Biomed Opt. 2020;25(11):112903. doi: 10.1117/1.JBO.25.11.112903.

- 53. Yang C, Lan H, Gao F, Gao F. Deep learning for photoacoustic imaging: a survey. arXiv:2008.04221. 2020.

- 54. Cho SW, Park SM, Park B, Kim DY, Lee TG, Kim BM, Kim C, Kim J, Lee SW, Kim CS. High-speed photoacoustic microscopy: a review dedicated on light sources. Photoacoustics. 2021;24:100291. doi: 10.1016/j.pacs.2021.100291.

- 55. Kuniyil Ajith Singh M, Xia W. Portable and affordable light source-based photoacoustic tomography. Sensors. 2020;20(21):6173. doi: 10.3390/s20216173.

- 56. Erfanzadeh M, Zhu Q. Photoacoustic imaging with low-cost sources: a review. Photoacoustics. 2019;14:1–11. doi: 10.1016/j.pacs.2019.01.004.

- 57. Kalva SK, Pramanik M. Photoacoustic tomography using high-energy pulsed laser diodes. Bellingham: SPIE Press; 2020.

- 58. Erfanzadeh M, Kumavor PD, Zhu Q. Laser scanning laser diode photoacoustic microscopy system. Photoacoustics. 2018;9:1–9. doi: 10.1016/j.pacs.2017.10.001.

- 59. Upputuri PK, Pramanik M. Fast photoacoustic imaging systems using pulsed laser diodes: a review. Biomed Eng Lett. 2018;8(2):167–181. doi: 10.1007/s13534-018-0060-9.

- 60. Dai X, Yang H, Jiang H. In vivo photoacoustic imaging of vasculature with a low-cost miniature light emitting diode excitation. Opt Lett. 2017;42(7):1456–1459. doi: 10.1364/OL.42.001456.

- 61. Upputuri PK, Pramanik M. Pulsed laser diode based optoacoustic imaging of biological tissues. Biomed Phys Eng Express. 2015;1(4):045010. doi: 10.1088/2057-1976/1/4/045010.

- 62. Chan J, Zheng Z, Bell K, Le M, Reza PH, Yeow JTW. Photoacoustic imaging with capacitive micromachined ultrasound transducers: principles and developments. Sensors. 2019;19(16):3617. doi: 10.3390/s19163617.

- 63. Zhang E, Laufer J, Beard P. Backward-mode multiwavelength photoacoustic scanner using a planar Fabry-Perot polymer film ultrasound sensor for high-resolution three-dimensional imaging of biological tissues. Appl Opt. 2008;47(4):561–577. doi: 10.1364/AO.47.000561.

- 64. Lin X, Sun M, Liu Y, Shen Z, Shen Y, Feng N. Variable speed of sound compensation in the linear-array photoacoustic tomography using a multi-stencils fast marching method. Biomed Signal Process Control. 2018;44:67–74. doi: 10.1016/j.bspc.2018.04.012.

- 65. Deán-Ben XL, Özbek A, Razansky D. Accounting for speed of sound variations in volumetric hand-held optoacoustic imaging. Front Optoelectron. 2017;10(3):280–286. doi: 10.1007/s12200-017-0739-z.

- 66. Gutta S, Bhatt M, Kalva SK, Pramanik M, Yalavarthy PK. Modeling errors compensation with total least squares for limited data photoacoustic tomography. IEEE J Sel Top Quantum Electron. 2019;25(1):6800214. doi: 10.1109/JSTQE.2017.2772886.

- 67. Awasthi N, Kalva SK, Pramanik M, Yalavarthy PK. Vector extrapolation methods for accelerating iterative reconstruction methods in limited-data photoacoustic tomography. J Biomed Opt. 2018;23(7):071204. doi: 10.1117/1.JBO.23.7.071204.

- 68. Haltmeier M, Zangerl G. Spatial resolution in photoacoustic tomography: effects of detector size and detector bandwidth. Inverse Problems. 2010;26(12):125002. doi: 10.1088/0266-5611/26/12/125002.

- 69. Liu W, Zhou Y, Wang M, Li L, Vienneau E, Chen R, Luo J, Xu C, Zhou Q, Wang LV, Yao J. Correcting the limited view in optical-resolution photoacoustic microscopy. J Biophotonics. 2017;11:e201700196. doi: 10.1002/jbio.201700196.

- 70. Yang X, Zhang Y, Zhao K, Zhao Y, Liu Y, Gong H, Luo Q, Zhu D. Skull optical clearing solution for enhancing ultrasonic and photoacoustic imaging. IEEE Trans Med Imaging. 2016;35(8):1903–1906. doi: 10.1109/TMI.2016.2528284.

- 71. Langer G, Buchegger B, Jacak J, Klar TA, Berer T. Frequency domain photoacoustic and fluorescence microscopy. Biomed Opt Express. 2016;7(7):2692–2702. doi: 10.1364/BOE.7.002692.

- 72. Yao J, Wang LV. Sensitivity of photoacoustic microscopy. Photoacoustics. 2014;2(2):87–101. doi: 10.1016/j.pacs.2014.04.002.

- 73. Mozaffarzadeh M, Varnosfaderani MHH, Sharma A, Pramanik M, de Jong N, Verweij MD. Enhanced contrast acoustic-resolution photoacoustic microscopy using double-stage delay-multiply-and-sum beamformer for vasculature imaging. J Biophotonics. 2019;12(11):e201900133. doi: 10.1002/jbio.201900133.

- 74. Asadollahi A, Latifi H, Pramanik M, Qazvini H, Rezaei A, Nikbakht H, Abedi A. Axial accuracy and signal enhancement in acoustic-resolution photoacoustic microscopy by laser jitter effect correction and pulse energy compensation. Biomed Opt Express. 2021;12(4):1834–1845. doi: 10.1364/BOE.419564.

- 75. Brnabic A, Hess LM. Systematic literature review of machine learning methods used in the analysis of real-world data for patient-provider decision making. BMC Med Inform Decis Mak. 2021;21(1):54. doi: 10.1186/s12911-021-01403-2.

- 76. Emmert-Streib F, Yang Z, Feng H, Tripathi S, Dehmer M. An introductory review of deep learning for prediction models with big data. Front Artif Intell. 2020;3:4. doi: 10.3389/frai.2020.00004.

- 77. Liu J-E, An F-P. Image classification algorithm based on deep learning-kernel function. Sci Program. 2020;2020:7607612.

- 78. Huang M-L, Wu Y-S. Classification of atrial fibrillation and normal sinus rhythm based on convolutional neural network. Biomed Eng Lett. 2020;10(2):183–193. doi: 10.1007/s13534-020-00146-9.

- 79. Yadav SS, Jadhav SM. Deep convolutional neural network based medical image classification for disease diagnosis. J Big Data. 2019;6(1):113. doi: 10.1186/s40537-019-0276-2.

- 80. Minaee S, Boykov YY, Porikli F, Plaza AJ, Kehtarnavaz N, Terzopoulos D. Image segmentation using deep learning: a survey. IEEE Trans Pattern Anal Mach Intell. 2021. doi: 10.1109/TPAMI.2021.3059968.

- 81. Zhou T, Ruan S, Canu S. A review: Deep learning for medical image segmentation using multi-modality fusion. Array. 2019;3–4:100004. doi: 10.1016/j.array.2019.100004.

- 82. Hegazy MAA, Cho MH, Cho MH, Lee SY. U-net based metal segmentation on projection domain for metal artifact reduction in dental CT. Biomed Eng Lett. 2019;9(3):375–385. doi: 10.1007/s13534-019-00110-2.

- 83. Hooda R, Mittal A, Sofat S. Segmentation of lung fields from chest radiographs-a radiomic feature-based approach. Biomed Eng Lett. 2019;9(1):109–117. doi: 10.1007/s13534-018-0086-z.

- 84. Kim S-H, Hwang Y. A survey on deep learning based methods and datasets for monocular 3D object detection. Electronics. 2021;10(4):517. doi: 10.3390/electronics10040517.

- 85. Gupta A, Anpalagan A, Guan L, Khwaja AS. Deep learning for object detection and scene perception in self-driving cars: survey, challenges, and open issues. Array. 2021;10:100057. doi: 10.1016/j.array.2021.100057.

- 86. Li Y, Sixou B, Peyrin F. A review of the deep learning methods for medical images super resolution problems. IRBM. 2021;42(2):120–133. doi: 10.1016/j.irbm.2020.08.004.

- 87. Yang W, Zhang X, Tian Y, Wang W, Xue J, Liao Q. Deep Learning for single image super-resolution: a brief review. IEEE Trans Multimed. 2019;21(12):3106–3121. doi: 10.1109/TMM.2019.2919431.

- 88. Shahid AH, Singh MP. A deep learning approach for prediction of Parkinson's disease progression. Biomed Eng Lett. 2020;10(2):227–239. doi: 10.1007/s13534-020-00156-7.

- 89. Zhao W, Wang H, Gemmeke H, van Dongen KWA, Hopp T, Hesser J. Ultrasound transmission tomography image reconstruction with a fully convolutional neural network. Phys Med Biol. 2020;65(23):235021. doi: 10.1088/1361-6560/abb5c3.

- 90. Yamashita R, Nishio M, Do RKG, Togashi K. Convolutional neural networks: an overview and application in radiology. Insights Imaging. 2018;9(4):611–629. doi: 10.1007/s13244-018-0639-9.

- 91. Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. In: Medical image computing and computer-assisted intervention: MICCAI 2015. Cham: Springer; 2015. pp. 234–241.

- 92. Rejesh NA, Pullagurla H, Pramanik M. Deconvolution-based deblurring of reconstructed images in photoacoustic/thermoacoustic tomography. J Opt Soc Am A. 2013;30(10):1994–2001. doi: 10.1364/JOSAA.30.001994.

- 93. Gutta S, Kadimesetty VS, Kalva SK, Pramanik M, Ganapathy S, Yalavarthy PK. Deep neural network-based bandwidth enhancement of photoacoustic data. J Biomed Opt. 2017;22(11):116001. doi: 10.1117/1.JBO.22.11.116001.

- 94. Awasthi N, Jain G, Kalva SK, Pramanik M, Yalavarthy PK. Deep neural network based sinogram super-resolution and bandwidth enhancement for limited-data photoacoustic tomography. IEEE Trans Ultrason Ferroelectr Freq Control. 2020;67(12):2660–2673. doi: 10.1109/TUFFC.2020.2977210.

- 95. Durairaj DA, Agrawal S, Johnstonbaugh K, Chen H, Karri SPK, Kothapalli S-R. Unsupervised deep learning approach for photoacoustic spectral unmixing. Proc SPIE. 2020;11240:112403H.

- 96. Antholzer S, Haltmeier M, Schwab J. Deep learning for photoacoustic tomography from sparse data. Inverse Probl Sci Eng. 2019;27(7):987–1005. doi: 10.1080/17415977.2018.1518444.

- 97. Davoudi N, Deán-Ben XL, Razansky D. Deep learning optoacoustic tomography with sparse data. Nat Mach Intell. 2019;1(10):453–460. doi: 10.1038/s42256-019-0095-3.