Abstract

Introduction

Ultrasound-guided regional anesthesia (UGRA) involves the acquisition and interpretation of ultrasound images to delineate sonoanatomy. This study explores the utility of a novel artificial intelligence (AI) device designed to assist in this task (ScanNav Anatomy Peripheral Nerve Block; ScanNav), which applies a color overlay on real-time ultrasound to highlight key anatomical structures.

Methods

Thirty anesthesiologists, 15 non-experts and 15 experts in UGRA, performed 240 ultrasound scans across nine peripheral nerve block regions. Half were performed with ScanNav. After scanning each block region, participants completed a questionnaire on the utility of the device in relation to training, teaching, and clinical practice in ultrasound scanning for UGRA. Ultrasound and color overlay output were recorded from scans performed with ScanNav. Experts present during the scans (real-time experts) were asked to assess potential for increased risk associated with use of the device (eg, needle trauma to safety structures). This was compared with experts who viewed the AI scans remotely.

Results

Non-experts were more likely to provide positive and less likely to provide negative feedback than experts (p=0.001). Positive feedback was provided most frequently by non-experts on the potential role for training (37/60, 61.7%); for experts, it was for its utility in teaching (30/60, 50%). Real-time and remote experts reported a potentially increased risk in 12/254 (4.7%) vs 8/254 (3.1%, p=0.362) scans, respectively.

Discussion

ScanNav shows potential to support non-experts in training and clinical practice, and experts in teaching UGRA. Such technology may aid the uptake and generalizability of UGRA.

Trial registration number

Keywords: regional anesthesia, ultrasonography, technology, education, nerve block

Introduction

The performance of ultrasound-guided regional anesthesia (UGRA) relies on the optimal acquisition and interpretation of ultrasound images.1–3 This skill is underpinned by knowledge of the underlying anatomy.4 5 However, even experienced anesthesiologists can find this challenging, particularly in the context of anatomical variation or other difficulties in scanning (eg, obesity).6 7 Furthermore, sonographic image interpretation is variable, even among experts.8–10 In the authors’ experience, though ultrasound technology yields ever-greater image resolution, acumen in ultrasound image acquisition and interpretation has not demonstrated an equal improvement since the introduction of ultrasound guidance in regional anesthesia in 1989.11

The implementation of artificial intelligence (AI) in clinical practice continues to grow and regional anesthesia has potential to embrace this technology for patient benefit, such as supporting UGRA training and practice to increase patient access to these techniques, as well as improve patient safety.12 Broadly speaking, AI includes any technique that enables computers to undertake tasks associated with human intelligence.12 Common techniques in this field include machine learning (ML) and deep learning (DL). ML uses algorithms, rule-based problem-solving instructions implemented by the computer,12 to learn: training data are analyzed to identify patterns (statistical correlations) in this information. If the algorithm is informed of the desired endpoints (eg, by labeling the images during the training stage) and then looks for correlations between the raw input data and the endpoints, this is called supervised ML. In unsupervised ML, the algorithm is given no instruction as to what endpoints are desired but looks for clusters of similarity in the dataset. Semisupervised ML uses a mixture of these two approaches. DL is a method of implementing ML. The algorithm encodes a network of artificial neurons (mathematical functions) which are arranged in layers. Successive layers of the network operate on the data as it is passed through the system, extracting progressively more information. DL is a common technique used in computer vision—a branch of AI which allows computers to interact with the visual world—a field that is readily applicable to medical image analysis.
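As an illustration of the layered structure described above, the following minimal Python sketch passes a few input values through two layers of artificial neurons. The weights, layer sizes and function names are hypothetical and chosen by hand; this is not the ScanNav model, whose architecture is not described in this article.

```python
import math


def dense(inputs, weights, biases):
    """One layer: each neuron outputs a weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Non-linearity applied between layers (negative values become zero)."""
    return [max(0.0, v) for v in values]


def softmax(scores):
    """Convert raw output scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def forward(pixels, layers):
    """Pass the data through successive layers, as in a deep network."""
    activations = pixels
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:  # no ReLU after the final layer
            activations = relu(activations)
    return softmax(activations)


# Hypothetical hand-picked weights: 3 inputs -> 2 hidden neurons -> 2 classes.
HIDDEN = ([[1.0, -1.0, 0.5], [-0.5, 1.0, 1.0]], [0.0, 0.1])
OUTPUT = ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0])

# Three made-up "pixel" intensities standing in for an image patch.
probabilities = forward([0.2, 0.8, 0.5], [HIDDEN, OUTPUT])
print(probabilities)
```

In supervised ML the weights would be learned from labeled training images rather than set by hand; the sketch shows only how data flow through the layers.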

ScanNav Anatomy Peripheral Nerve Block (ScanNav, formerly known as AnatomyGuide; Intelligent Ultrasound, Cardiff, UK) is an AI-based device that uses DL to apply a color overlay to real-time B-mode ultrasound.13 It highlights relevant anatomical structures on the ultrasound image, aiming to assist ultrasound image interpretation (see online supplemental files A–D). In a previous expert evaluation of ultrasound videos, the color overlay was considered helpful in identifying specific structures and confirming an appropriate block view in over 99% of cases.14 Similar systems include Nerveblox (Smart Alfa Teknoloji San. Ve Tic AS, Ankara, Turkey), which also applies a color overlay of anatomical structures on ultrasound in regions commonly scanned for UGRA,15 and NerveTrack (Samsung Medison, Seoul, South Korea), which applies a bounding box around the median and ulnar nerves when scanning the forearm.16

rapm-2021-103368supp001.mp4 (16.7MB, mp4)

rapm-2021-103368supp002.mp4 (17.4MB, mp4)

rapm-2021-103368supp003.mp4 (15.9MB, mp4)

rapm-2021-103368supp004.mp4 (15.6MB, mp4)

Though highlighting accuracy data have been published for ScanNav and Nerveblox,14 15 there are limited data from real-world use of these systems—particularly on their utility for operators. We therefore aimed to assess the subjective utility of ScanNav as an aid to identifying relevant structures, teaching/learning UGRA scanning, and increasing operator confidence. We also assessed UGRA experts’ perception of potential risks associated with the use of ScanNav, including increased risk of block failure or unwanted needle trauma to important safety structures (eg, nerves, arteries, and pleura/peritoneum).

Methods

The study was registered with www.clinicaltrials.gov.

Non-expert participants (ultrasound scanners)

Fifteen non-experts in UGRA were recruited: US postgraduate year 2–4 medical doctors enrolled in the anesthesiology residency training program at Oregon Health & Science University (OHSU).

Expert participants (ultrasound scanners/real-time assessors)

Fifteen experts in UGRA were recruited: US board-certified anesthesiologists and current or former anesthesiology faculty at OHSU. All were competent to perform UGRA independently and met at least two of the following three characteristics: completed fellowship training in UGRA, regularly delivered direct clinical care using UGRA (including for ‘awake’ surgery), and regularly taught UGRA in the course of their clinical work (including advanced techniques).

Remote expert assessors

Three remote experts were recruited to subsequently review ultrasound videos recorded when a subject was scanned using ScanNav (unmodified videos presented with videos to which the AI color overlay was applied). One was a UK-based consultant anesthetist and two were US-based attending anesthesiologists. All met the criteria defined previously (with the exception that one was a pediatric anesthesiologist, thus their conduct of ‘awake’ surgery is limited by their patient population). With the exception of one participant (GW, who is an author of this article and was not remunerated for work in this study), participants and remote expert assessors did not form part of the investigator group and were compensated for their time.

Subjects

Two healthy volunteers for ultrasound scanning were recruited from a professional modeling agency and compensated for their services. Half of the scans were performed on each subject. The only exclusion criterion was pathology of the areas to be scanned.

Equipment

Ultrasound scanning was performed using the X-Porte or PX SonoSite ultrasound machines (Fujifilm SonoSite, Bothell, Washington, USA). Participants were free to choose from a selection of compatible probes for each machine: X-Porte with HFL50xp/L38xp linear or C60xp curvilinear probe, or PX with L15-5/L12-3 linear or C5-1 curvilinear probe.

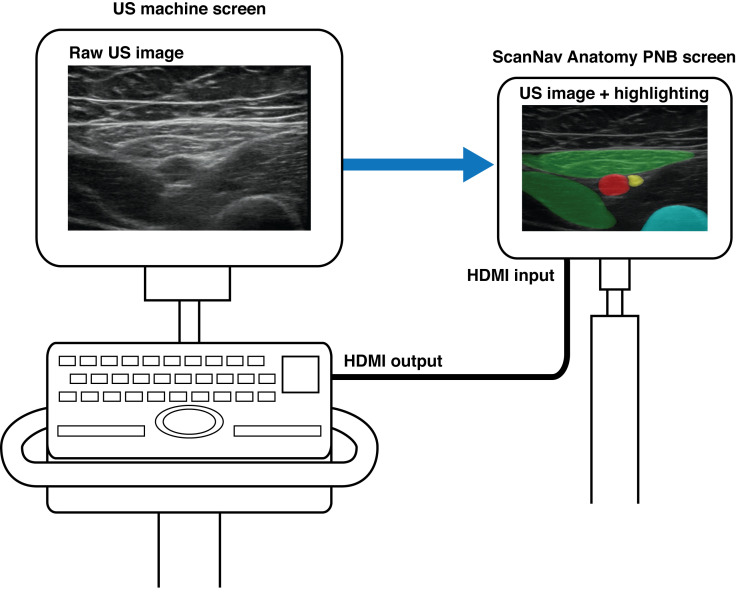

ScanNav (US V.1.0) was connected to the main ultrasound machine high-definition multimedia interface (HDMI) output. It displayed the same ultrasound image, with color overlay, on a secondary monitor (see figure 1). It is important to note that this device is not intended to replace clinician judgment and has been submitted to the US Food and Drug Administration for regulatory approval as a scanning-only device (for use prior to needle insertion or local anesthetic injection).

Figure 1.

Schematic of ScanNav connected to an ultrasound machine, displaying the same ultrasound image with a color overlay. US, ultrasound.

Scanning protocol

After gaining informed consent (both scanners and subjects), ultrasound assessment was performed at the typical locations for nine peripheral nerve blocks. The upper limb included the interscalene-level, upper trunk-level, supraclavicular-level and axillary-level brachial plexus regions. The trunk included the erector spinae plane and rectus sheath plane block regions. Lower limb scanning included regions for the suprainguinal-level fascia iliaca plane, adductor canal and popliteal-level sciatic nerve blocks.

Participants were free to set the depth and gain settings deemed appropriate for each scan and use the scanning technique they would in their normal clinical practice. The first scan was performed by an expert and performed on both sides, once with the use of ScanNav and once without. Eight participants performed the first scan with the use of ScanNav and seven without (order alternating between participants). Subsequently, a non-expert participant entered the room and was asked to scan for the same block in the same subject, under the supervision of the expert. The expert taught and/or supported the non-expert, as necessary, to achieve an optimal block view. As before, both sides were scanned, once with the use of the AI color overlay and once without (order alternating as above). This same process of four scans was then repeated on the same subject, scanning for a different block. The expert/non-expert pair then repeated the scanning protocol on a second subject (the scanning sequence is summarized in online supplemental file E).

rapm-2021-103368supp005.pdf (9.1MB, pdf)

This process of 16 scans (8 by the expert and 8 by the non-expert) was performed by 15 expert/non-expert pairs (total 240 scans). All AI-assisted scans (n=120) were recorded for later analysis by remote experts.
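The scan counts above follow directly from the protocol, and can be checked with a short Python sketch. The variable names are illustrative assumptions, and the enumeration ignores which side of the body or which scan order was used for each condition.

```python
from itertools import product

PAIRS = range(1, 16)                      # 15 expert/non-expert pairs
SUBJECTS = (1, 2)                         # two healthy volunteers
BLOCKS_PER_SUBJECT = (1, 2)               # two block regions per subject
OPERATORS = ("expert", "non-expert")
CONDITIONS = ("ScanNav", "no ScanNav")    # one side of the body for each

# Every combination corresponds to one scan in the protocol.
scans = list(product(PAIRS, SUBJECTS, BLOCKS_PER_SUBJECT, OPERATORS, CONDITIONS))

scans_per_pair = sum(1 for scan in scans if scan[0] == 1)
ai_scans = [scan for scan in scans if scan[4] == "ScanNav"]
ai_scans_by_experts = [scan for scan in ai_scans if scan[3] == "expert"]

print(scans_per_pair, len(scans), len(ai_scans), len(ai_scans_by_experts))
```

The four counts correspond to the 16 scans per pair, 240 scans in total, 120 AI-assisted scans, and 60 AI-assisted scans by each operator group described in the text.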

Utility: experts versus non-experts

After scanning for each block, the participants were asked to complete a questionnaire evaluating the AI color overlay for the images acquired (n=120, half by non-experts and half by experts; questionnaires available in online supplemental file E). Non-experts were asked to compare their experience of scanning with versus without ScanNav using the following metrics:

Confidence in scanning (0–10: 0, low confidence; 10, confident).

Identifying relevant anatomical structures on ultrasound (assisted, no difference, hindered).

Identifying the correct view for the block (easier, no difference, harder).

Learning scanning for the block (easier to learn, no difference, harder to learn).

Facilitating training (beneficial, no difference, detrimental).

Modify level of support required from your supervisor (less support required, no change, more support required).

Experts were similarly asked to compare their experience of scanning with versus without ScanNav:

Confidence in their own scanning (0–10: 0, low confidence; 10, confident).

Identifying relevant anatomical structures on ultrasound (assisted, no difference, hindered).

Teaching scanning for the block (easier to teach, no difference, harder to teach).

Supervising scanning for the block (beneficial, no difference, detrimental).

Reduce the frequency of intervention during supervision of the non-expert (yes, no difference, no).

Confidence in the non-expert’s scanning ability (0–10: 0, low confidence; 10, confident).

Potential risks: real-time expert users versus remote experts

For each scan performed with ScanNav (n=120), experts were asked to report potential for increased risk of block failure or unwanted needle trauma to ‘safety critical’ structures as relevant to each block (eg, nerves, arteries, pleura, and peritoneum; see online supplemental file E). The risk of complications related to each structure included:

Nerve injury/postoperative neurological symptoms (PONS, nerves).

Local anesthetic systemic toxicity (LAST, arteries).

Pneumothorax (pleura).

Peritoneal violation (peritoneum).

Block failure (overall).

The real-time expert assessment was compared with that of the panel of remote experts, who viewed the recorded ultrasound with the unmodified video presented adjacent to the AI-color overlay video. Three remote experts viewed each ultrasound video and a majority view for each question was taken. In cases where no majority was reached, this was classified as ‘no consensus’.
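The majority rule for the remote panel can be expressed as a small Python sketch. The function name and the response labels are hypothetical; the study questionnaire wording is in online supplemental file E and is not reproduced here.

```python
from collections import Counter


def panel_view(responses):
    """Return the answer given by more than half of the assessors,
    or 'no consensus' when no answer reaches a majority."""
    counts = Counter(responses)
    answer, votes = counts.most_common(1)[0]
    if votes > len(responses) / 2:
        return answer
    return "no consensus"


# Hypothetical responses from three remote experts for one question.
print(panel_view(["increased risk", "no increased risk", "no increased risk"]))
print(panel_view(["increased risk", "no increased risk", "unsure"]))
```

With three assessors, a lack of majority can arise only when all three give different responses (or when a response is missing), which is why a third label is assumed in the second call.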

Data analysis

Data were reported descriptively and, where appropriate, statistical evaluation (using R software V.4.1.1) was used to assess the relationship between variables. A χ2 test was used to compare feedback (expert vs non-expert, real-time user vs remote expert, and subject 1 vs subject 2) except for participant confidence, which used a Mann-Whitney U test to compare ordinal data. Statistical significance was deemed as a p value of <0.05.

Results

In total, 240 ultrasound scans were performed, 120 with ScanNav and 120 without. Of the 120 scans performed with ScanNav, 60 were performed by non-experts in UGRA (under the supervision of experts) and 60 by experts. Both scan subjects were adult men; one was 34 years old with a body mass index (BMI) of 37.2 kg/m² and the other was 41 years old with a BMI of 28.9 kg/m². The data are summarized in tables 1–3 and a full breakdown is in online supplemental file E.

Table 1.

Non-expert feedback on benefits (n=60 scans with ScanNav)

| | Positive | Neutral | Negative |
| --- | --- | --- | --- |
| Identifying structures | 31 (51.7%) | 27 (45.0%) | 2 (3.3%) |
| Acquisition of correct view | 22 (36.7%) | 37 (61.7%) | 1 (1.7%) |
| Learning scan | 36 (60.0%) | 23 (38.3%) | 1 (1.7%) |
| Helped training | 37 (61.7%) | 23 (38.3%) | 0 (0%) |
| Supervisor support | 8 (13.3%) | 51 (85.0%) | 1 (1.7%) |
| Confidence | 31 (51.7%) | 25 (41.7%) | 4 (6.7%) |
| Overall (/360) | 165 (45.8%) | 186 (51.7%) | 9 (2.5%) |

Table 2.

Expert feedback on benefits (n=60 scans with ScanNav)

| | Positive | Neutral | Negative |
| --- | --- | --- | --- |
| Identifying structures | 15 (25.0%) | 42 (70.0%) | 3 (5.0%) |
| Teaching | 30 (50.0%) | 29 (48.3%) | 1 (1.7%) |
| Supervising | 27 (45.0%) | 29 (48.3%) | 4 (6.7%) |
| Frequency of intervention | 16 (26.7%) | 34 (56.7%) | 10 (16.7%) |
| Confidence (own scanning) | (Increase) 14 (23.3%) | (No difference) 44 (73.3%) | (Decrease) 2 (3.3%) |
| Confidence (supervising non-expert) | (Increase) 29 (48.3%) | (No difference) 24 (40.0%) | (Decrease) 7 (11.7%) |
| Overall (/360) | 131 (36.4%) | 202 (56.1%) | 27 (7.5%) |

Table 3.

Reported potential risks: real-time expert versus remote expert assessor (max n=103)

| | Real-time expert | Remote expert |
| --- | --- | --- |
| Nerve injury/PONS (/67) | 5 (7.5%) | 0 (0%) |
| LAST (/71) | 3 (4.2%) | 0 (0%) |
| Pneumothorax (/16) | 0 (0%) | 0 (0%) |
| Peritoneum violation (/8) | 0 (0%) | 0 (0%) |
| Block failure (/92) | 4 (4.3%) | 8 (8.7%)* |
| Total (/254) | 12 (4.7%) | 8 (3.1%)* |

N.B. Although 103 block feedback sheets were returned, not all feedback elements were completed by participants for all blocks (hence, n<103 for block failure).

*8/92 of all block views considered (7/8 of these views considered ‘inadequate’ by expert panel).

LAST, local anesthetic systemic toxicity; PONS, postoperative neurological symptoms.

Utility: non-experts versus experts

Non-experts provided positive feedback more frequently and provided negative feedback less frequently than experts (p=0.001). The most frequent positive feedback provided by non-experts was on ScanNav’s role in their training (37/60, 61.7%); for experts, it was for ScanNav’s use in teaching (30/60, 50%). Overall, 70% of participants reported that ScanNav aided in the identification of key anatomical structures for a peripheral nerve block, and 63% believed that it assisted in confirming the correct ultrasound view during scanning. Non-experts’ most frequent negative feedback was that it may decrease their confidence in scanning (4/60, 6.7%); for experts, it was that ScanNav may increase the frequency of supervisor intervention (10/60, 16.7%). Non-expert median confidence in their own scanning was 6 (IQR 5–8) without ScanNav and 7 (IQR 5.75–9) with ScanNav (p=0.07). Experts reported median confidence of 10 (IQR 8–10) vs 10 (IQR 8–10), respectively (p=0.57). When supervising a non-expert scanning, experts’ median confidence in the non-expert’s scanning was 7 (IQR 4.75–8) without ScanNav and 8 (IQR 4–9) with ScanNav (p=0.23). Overall, there was no significant difference in reporting between the two subjects scanned (p=0.562).
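For readers wishing to check the expert versus non-expert comparison, the Python sketch below computes a Pearson χ2 test on the ‘Overall’ rows of tables 1 and 2. The article does not state exactly which counts entered the test, so the use of the pooled 2×3 table is an assumption, as are the function and variable names; the original analysis was run in R.

```python
import math


def chi_square_statistic(table):
    """Pearson chi-square statistic for a contingency table (list of rows)."""
    row_totals = [sum(row) for row in table]
    column_totals = [sum(column) for column in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * column_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    return statistic


# Overall positive / neutral / negative counts from tables 1 and 2.
feedback = [
    [165, 186, 9],    # non-experts
    [131, 202, 27],   # experts
]

statistic = chi_square_statistic(feedback)
# A 2x3 table has 2 degrees of freedom, for which the chi-square
# upper-tail probability has the closed form exp(-x / 2).
p_value = math.exp(-statistic / 2)
print(round(statistic, 2), round(p_value, 3))
```

Under these assumptions the p value rounds to the reported 0.001. The closed form applies only to 2 degrees of freedom; other table sizes would need a general χ2 distribution function.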

Potential risks: real-time user expert versus remote expert

Of the 120 scans performed with ScanNav, real-time expert user data were collected on 103 scans (17 lost), and remote expert assessment was thus compared for the same 103 scans. Real-time and remote experts reported a potential increase in risk in 12/254 (4.7%) vs 8/254 (3.1%) risk assessments, respectively (p=0.362, table 3).
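The comparison of risk reports can be approximated with a 2×2 Pearson χ2 test on the totals in table 3. The Python sketch below assumes no continuity correction, because the uncorrected statistic gives a p value close to the one reported; the article does not specify this setting, and the variable names are illustrative.

```python
import math

# Totals from table 3: risk reported vs not reported, out of 254 assessments.
real_time_risk, real_time_none = 12, 254 - 12
remote_risk, remote_none = 8, 254 - 8

a, b, c, d = real_time_risk, real_time_none, remote_risk, remote_none
n = a + b + c + d

# Shortcut formula for the Pearson chi-square statistic of a 2x2 table.
statistic = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))

# With 1 degree of freedom, the upper-tail probability is erfc(sqrt(x / 2)).
p_value = math.erfc(math.sqrt(statistic / 2))
print(round(statistic, 3), round(p_value, 3))
```

The result agrees with the reported p=0.362 to within rounding in the third decimal place. Applying a continuity correction would give a noticeably larger p value, so the choice of setting matters when reproducing the figure.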

Discussion

This study explores the potential role of assistive AI in the acquisition and interpretation of ultrasound scans for UGRA, with real-world users in a simulation setting. Positive sentiment (36.4%–45.8%) about ScanNav was reported by users more commonly than negative sentiment (2.5%–7.5%), while the majority (63%–70%) reported that it aided in the identification of key anatomical structures on ultrasound and in confirmation of the correct ultrasound view. Non-experts derived most benefit from ScanNav; ≥50% reported it to aid in the identification of sonoanatomical structures, learning the scanning for a block, benefits to training and improving their confidence in scanning. These data suggest a role for ScanNav in the training of non-experts in UGRA. Training can be in the form of non-patient-facing activities such as formal teaching and educational courses. However, teaching during clinical practice plays a fundamental role in medical training. Thus, although it requires additional financial resources, ScanNav may be used for educational purposes in both settings, supporting widespread adoption of UGRA17 and patient access to these techniques.18

The areas receiving the highest frequency of negative feedback (frequency of supervisor intervention and decreasing confidence of performing/supervising ultrasound scanning) are perhaps unsurprising. This is a new medical device, based on AI technology with which many clinicians are unfamiliar, and the participants in this study had not used it prior to participating in this study. Initial use of any new technology may be associated with more frequent intervention and lower confidence, which may improve with time and increased familiarity with the device.

There was a low perception of increased risk associated with AI highlighting by ScanNav (3.1%–4.7%), though potential complications considered may be clinically important (eg, nerve injury/PONS and LAST). Potential causes of error include those related to device performance, such as incorrect highlighting, which may cause anesthesiologists to misinterpret the ultrasound image(s). Block failure or unwanted needle trauma to safety critical structures may therefore be more likely if the anesthesiologist is falsely reassured by the presence or absence of color on the screen. Others may be associated with use of the device, such as correct highlighting causing distraction by drawing focus away from other relevant structures. The current technology therefore provides additional information for the anesthesiologist rather than a decision-making system on ultrasound image interpretation (much as is the case for other image augmentation systems, eg, color Doppler). Furthermore, correct structure/block view identification alone does not ensure safe or effective UGRA, nor does the device guide needle placement. It is therefore the practitioner’s responsibility to identify hazards and undertake safe practice. Overall, real-time users took a more critical view than those viewing the video remotely. Real-time users have a richer source of information as they performed the dynamic scan; however, they had to subsequently give their assessment from their memory of the preceding few minutes. Remote users had the benefit of scrolling back and forth through the video to carefully scrutinize the unmodified ultrasound video. In addition, three remote experts assessed each video compared with one real-time user expert. It is not possible to determine, with these limited data, whether one cohort or the other is correct; it is, however, important to be aware of the range of opinion, and more work must be done to explore this facet of the data. Nevertheless, a more cautious view adopted by real-time users is perhaps a welcome inadvertent safety feature, showing a desire to maintain the use of clinical judgment which has been developed over years of training.

This study has limitations. These data are subjective, based on a limited use of the device, and may not necessarily reflect clinical practice. Over time, clinical data may support or refute the conclusions drawn here and could include studies in other settings (eg, UGRA in the emergency department or prehospital emergency care). Outcome data, including patient outcomes and resource-use metrics, will be crucial in validating the clinical utility of ScanNav. This study included only 2 scan subjects and 30 anesthesiologist participants; these data may need to be replicated with a larger number of participants and subjects with different demographics, such as body habitus, comorbid status or anatomical abnormalities. Also, all participants were from a single institution, while UGRA practices and experiences may vary between institutions, regions or countries. In addition, a three-point scale was given for participants to provide their assessment, whereas a five-point scale may have allowed greater discrimination of subjective assessment. Finally, only two ultrasound machines were assessed; further work is required to ensure generalizability of these data.

Conclusion

The few studies conducted in this field so far report little in the way of contemporaneous feedback by users or evaluation of the clinical utility of AI devices to support ultrasound scanning in UGRA. We have demonstrated that ScanNav may support non-experts in their training and clinical practice, and experts in their teaching of UGRA. It may help by drawing attentional focus to the area of interest to aid in confirmation of the correct ultrasound view and the identification of sonoanatomical structures on that view. We believe that AI-augmented ultrasound scanning, through devices such as ScanNav, may support the uptake and generalizability of ultrasound-guided techniques in the future.

Acknowledgments

The authors thank Drs Boyne Bellew, David Maduram and Bryant Tran for their remote review of artificial intelligence-overlay ultrasound videos in the study.

Footnotes

Twitter: @bowness_james, @elboghdadly, @woodworgMD, @AlisonNoble_OU, @HelenEHigham, @davidbstl

Contributors: Study concept and design: JSB and DBSL; data collection: GW; manuscript preparation: JSB; manuscript editing, review and approval: all authors.

Funding: This work was funded by Intelligent Ultrasound Limited (Cardiff, UK). Data from this study were included in medical device regulatory approval submissions in the USA.

Competing interests: JSB is a Senior Clinical Advisor for Intelligent Ultrasound, receiving research funding and honoraria. KEB is an Editor for Anaesthesia and has received research, honoraria and educational funding from Fisher and Paykel Healthcare Ltd, GE Healthcare and Ambu. DBSL is a Clinical Advisor for Intelligent Ultrasound, receiving honoraria. JAN is a Senior Scientific Advisor for Intelligent Ultrasound.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Ethics statements

Patient consent for publication

Not applicable.

Ethics approval

This study involves human participants and ethical approval was granted by the Oregon Health & Science University Institutional Review Board (STUDY00022920). Participants gave informed consent to participate in the study before taking part.

References

- 1. Woodworth GE, Carney PA, Cohen JM, et al. Development and validation of an assessment of regional anesthesia ultrasound interpretation skills. Reg Anesth Pain Med 2015;40:306–14. doi:10.1097/AAP.0000000000000236

- 2. Sites BD, Chan VW, Neal JM, et al. The American Society of Regional Anesthesia and Pain Medicine and the European Society of Regional Anaesthesia and Pain Therapy Joint Committee recommendations for education and training in ultrasound-guided regional anesthesia. Reg Anesth Pain Med 2009;34:40–6. doi:10.1097/AAP.0b013e3181926779

- 3. Henderson M, Dolan J. Challenges, solutions, and advances in ultrasound-guided regional anaesthesia. BJA Educ 2016;16:374–80. doi:10.1093/bjaed/mkw026

- 4. Bowness J, Taylor A. Ultrasound-guided regional anaesthesia: visualising the nerve and needle. Adv Exp Med Biol 2020;1235:19–34. doi:10.1007/978-3-030-37639-0_2

- 5. Mariano ER, Marshall ZJ, Urman RD, et al. Ultrasound and its evolution in perioperative regional anesthesia and analgesia. Best Pract Res Clin Anaesthesiol 2014;28:29–39. doi:10.1016/j.bpa.2013.11.001

- 6. Tremlett M. Final Fellowship of the Royal College of Anaesthetists (FRCA) Examination Chairman’s Report (Academic Year September 2013 - July 2014): Review of the RCoA Final Exam 2013 - 2014;2014.

- 7. Bowness J, Turnbull K, Taylor A, et al. Identifying the emergence of the superficial peroneal nerve through deep fascia on ultrasound and by dissection: implications for regional anesthesia in foot and ankle surgery. Clin Anat 2019;32:390–5. doi:10.1002/ca.23323

- 8. Williams L, Carrigan A, Auffermann W, et al. The invisible breast cancer: experience does not protect against inattentional blindness to clinically relevant findings in radiology. Psychon Bull Rev 2021;28:503–11. doi:10.3758/s13423-020-01826-4

- 9. Drew T, Võ ML-H, Wolfe JM. The invisible gorilla strikes again: sustained inattentional blindness in expert observers. Psychol Sci 2013;24:1848–53. doi:10.1177/0956797613479386

- 10. Bowness J, Turnbull K, Taylor A, et al. Identifying variant anatomy during ultrasound-guided regional anaesthesia: opportunities for clinical improvement. Br J Anaesth 2019;122:e75–7. doi:10.1016/j.bja.2019.02.003

- 11. Ting PL, Sivagnanaratnam V. Ultrasonographic study of the spread of local anaesthetic during axillary brachial plexus block. Br J Anaesth 1989;63:326–9. doi:10.1093/bja/63.3.326

- 12. Bowness J, El-Boghdadly K, Burckett-St Laurent D. Artificial intelligence for image interpretation in ultrasound-guided regional anaesthesia. Anaesthesia 2021;76:602–7. doi:10.1111/anae.15212

- 13. Bowness J, Macfarlane AJR, Noble JA. Anaesthesia, nerve blocks and artificial intelligence. Anaesthesia News 2021;408:4–6.

- 14. Bowness J, Varsou O, Turbitt L, et al. Identifying anatomical structures on ultrasound: assistive artificial intelligence in ultrasound-guided regional anesthesia. Clin Anat 2021;34:802–9. doi:10.1002/ca.23742

- 15. Gungor I, Gunaydin B, Oktar SO, et al. A real-time anatomy identification via tool based on artificial intelligence for ultrasound-guided peripheral nerve block procedures: an accuracy study. J Anesth 2021;35:591–4. doi:10.1007/s00540-021-02947-3

- 16. Solution Brief. Available: https://www.intel.com/content/dam/www/public/us/en/documents/solution-briefs/samsung-nervetrack-solution-brief.pdf [Accessed 25 Dec 2021].

- 17. Turbitt LR, Mariano ER, El-Boghdadly K. Future directions in regional anaesthesia: not just for the cognoscenti. Anaesthesia 2020;75:293–7. doi:10.1111/anae.14768

- 18. Mudumbai SC, Auyong DB, Memtsoudis SG, et al. A pragmatic approach to evaluating new techniques in regional anesthesia and acute pain medicine. Pain Manag 2018;8:475–85. doi:10.2217/pmt-2018-0017
