Abstract

Photoacoustic imaging has shown great potential for guiding minimally invasive procedures by accurate identification of critical tissue targets and invasive medical devices (such as metallic needles). The use of light emitting diodes (LEDs) as the excitation light sources accelerates its clinical translation owing to their high affordability and portability. However, needle visibility in LED-based photoacoustic imaging is compromised, primarily due to the low optical fluence. In this work, we propose a deep learning framework based on U-Net to improve the visibility of clinical metallic needles with an LED-based photoacoustic and ultrasound imaging system. To address the complexity of capturing ground truth for real data and the poor realism of purely simulated data, this framework included the generation of semi-synthetic training datasets that combine simulated data representing features of the needles with in vivo measurements of the tissue background. Evaluation of the trained neural network was performed with needle insertions into blood-vessel-mimicking phantoms, pork joint tissue ex vivo and measurements on human volunteers. This deep learning-based framework substantially improved the needle visibility in photoacoustic imaging in vivo compared to conventional reconstruction by suppressing background noise and image artefacts, achieving 5.8 and 4.5 times improvements in terms of signal-to-noise ratio and the modified Hausdorff distance, respectively. Thus, the proposed framework could be helpful for reducing complications during percutaneous needle insertions by accurate identification of clinical needles in photoacoustic imaging.

Keywords: Photoacoustic imaging, Needle visibility, Light emitting diodes, Deep learning, Minimally invasive procedures

1. Introduction

Ultrasound (US) imaging is widely used for guiding minimally invasive percutaneous procedures such as peripheral nerve blocks [1], tumour biopsy [2] and fetal blood sampling [3]. During these procedures, a metallic needle is inserted percutaneously into the body towards the target under real-time US guidance. Accurate and efficient identification of the target and the needle is of paramount importance to ensure the efficacy and safety of the procedure. Despite a number of prominent advantages associated with US imaging, such as its real-time imaging capability, high affordability and accessibility, it suffers from intrinsically low soft tissue contrast that sometimes results in insufficient visibility of critical tissue structures such as nerves and small blood vessels. Moreover, visibility of clinical needles with US imaging is strongly dependent on the insertion angle and depth of the needle. With steep insertion angles, US reflections can readily fall outside the transducer aperture, leading to poor needle visibility. Loss of visibility of tissue targets or the needle can cause significant complications [4].

Various methods have been developed for enhancing needle visualisation with US imaging, including echogenic needles [5], Doppler imaging [6], electromagnetic tracking [7], and ultrasonic needle tracking [8], [9], [10]. Although promising results have been reported, these methods usually require specialised equipment. Image-based needle tracking algorithms leveraging linear features of needles in US images have also been investigated, such as random sample consensus [11], [12], Hough Transform [13], [14], line filtering [15], [16], and graph cut [17]. However, it is challenging to automate these algorithms on US images with a variety of tissue backgrounds and needle contrasts. Deep learning (DL) based models, especially convolutional neural networks, have demonstrated competitive robustness and accuracy [18], [19], [20]. However, clinical applications usually require large clinical datasets with fine annotations, which are difficult to obtain.

Photoacoustic (PA) imaging has been of growing interest in the past two decades for its various potential preclinical and clinical applications, owing to its unique ability to resolve spectroscopic signatures of tissues at high spatial resolution and depths [21], [22], [23]. In recent years, several research groups have proposed the combination of US and PA imaging for guiding minimally invasive procedures, with the two modalities offering complementary information: US imaging provides tissue structural information, while PA imaging identifies critical tissue structures and invasive surgical devices such as metallic needles [24], [25], [26], [27]. Recently, laser diodes (LDs) and light emitting diodes (LEDs) have shown promising results as alternatives to the solid-state lasers that are commonly used as PA excitation sources, due to their favourable portability and affordability, which is advantageous for clinical translation [28], [29], [30].

DL has been demonstrated as a powerful tool for signal and image processing, leading to remarkable successes in medical imaging [31], [32], [33], [34]. DL-based approaches have been proposed for PA imaging enhancement, especially photoacoustic tomography [35], where they process raw channel data for image reconstruction [36], [37], [38] and enhancement [39], [40] as well as reconstructed images for image segmentation or classification [41], [42].

DL has been recently used by several research groups for improving the imaging quality of LED-based PA/US imaging systems. Anas et al. [43] exploited the use of a combination of a convolutional neural network (CNN) and a recurrent neural network (RNN) to enhance the quality of PA images by leveraging both the spatial features and temporal information in repeated PA image acquisitions. Kuniyil Ajith Singh et al. [29] proposed a U-Net model to improve the SNR by training a neural network using PA images acquired by an improved PA imaging system with a higher laser energy and broadband ultrasound transducers. The pre-trained model was proven effective with LED-based PA images acquired from phantoms. Hariri et al. [44] proposed a multi-level wavelet-CNN to enhance noisy PA images associated with low fluence LED illumination by learning from PA images acquired with high fluence illumination sources. Enhancements were achieved on unseen in vivo data with improved image contrast. Most recently, Kalloor Joseph et al. [45] developed a generative adversarial network (GAN)-based framework for PA image reconstruction to mitigate the impact of the limited aperture and bandwidth of the ultrasound transducer. The proposed model was trained on simulated images from artificial blood vessels and tested on in vivo measurements of the human forearm. The proposed approach was able to remove artefacts caused by the limited bandwidth and detection view.

Although prominent attention has been given to improving the visualisation of tissue structures, notably, not much effort has been made to improve the visualisation of invasive medical devices in PA imaging. In this work, we proposed a DL-based framework to enhance the visibility of clinical needles with PA imaging for guiding minimally invasive procedures. As clinical needles have relatively simple geometries whilst background biological tissues such as blood vessels are complex, as opposed to using purely synthetic data [46], [47], [48], [49], a hybrid method was proposed for generating semi-synthetic datasets [50]. The DL model was trained and validated using such semi-synthetic datasets and blind to the test data obtained from tissue-mimicking phantoms, ex vivo tissue and human fingers in vivo. The applicability of the proposed model on diverse in vivo image data was further assessed on PA video sequences and compared with the standard Hough Transform. To the best of our knowledge, this is the first work that exploits DL for improving needle visualisation with PA imaging as well as utilises semi-synthetic datasets for DL in PA imaging.

2. Material and methods

2.1. System description

A commercial LED-based PA/US imaging system (AcousticX, CYBERDYNE INC, Tsukuba, Japan) was used for acquiring experimental data. A detailed description of the system can be found elsewhere [51]. Briefly, PA excitation is provided by two LED arrays that sandwich a linear array US probe at a fixed angle. Each array consists of four rows of LEDs with 36 elements of 1 mm × 1 mm on each row. The LED arrays can be driven at different pulse repetition frequencies from 1 kHz to 4 kHz, and the maximum pulse energy from each array is 200 µJ. The LED pulse duration is controllable between 30 ns and 150 ns. In this study, a pulse width of 70 ns at 850 nm was selected for optimal energy efficiency [52], [53]. The illumination area formed by the LED arrays was approximately a rectangle (50 mm × 7 mm), resulting in an optical fluence of 0.11 mJ/cm² at the maximum pulse energy of 400 µJ. The US probe has 128 elements over a linear array distance of 40.3 mm, with each element having a pitch of 0.315 mm, a central frequency of 7 MHz, and a −6 dB fractional bandwidth of 80.9%.
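
The fluence and aperture figures quoted above can be checked with a few lines of arithmetic. The sketch below assumes that the pulse energies are in microjoules and that the 400 µJ total is spread uniformly over the 50 mm × 7 mm rectangle; the variable names are illustrative only.

```python
# Back-of-envelope check of the quoted system parameters.
# Assumption: uniform illumination over the rectangular area.
pulse_energy_uj = 2 * 200.0          # two LED arrays at 200 microjoules each
area_cm2 = (50.0 / 10.0) * (7.0 / 10.0)   # 50 mm x 7 mm expressed in cm^2

fluence_mj_per_cm2 = (pulse_energy_uj / 1000.0) / area_cm2

n_elements = 128
pitch_mm = 0.315
aperture_mm = n_elements * pitch_mm  # total length of the linear array

print(f"fluence  = {fluence_mj_per_cm2:.3f} mJ/cm^2")
print(f"aperture = {aperture_mm:.2f} mm")
```

Under these assumptions the result is about 0.114 mJ/cm² and 40.32 mm, in line with the 0.11 mJ/cm² and 40.3 mm stated in the text.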

Radio-frequency (RF) data for PA and US imaging were collected simultaneously from 128 channels on the probe with sampling rates of 40 MHz and 20 MHz, respectively. Interleaved PA and US imaging can be performed in real-time with image reconstruction performed on a graphics processing unit (GPU). Meanwhile, a maximum of 1536 PA frames and 1536 US frames corresponding to a total duration of 20 s could be saved in memory at one time, available for offline reconstruction.

2.2. Semi-synthetic dataset generation

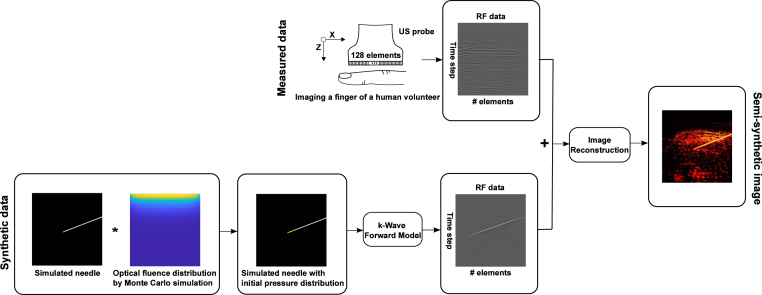

The process of semi-synthetic dataset generation comprised three main steps as shown in Fig. 1: (1) acquisition of in vivo data to account for background PA signals originated from biological tissue; (2) generation of synthetic sensor data from needles; (3) image reconstruction with raw channel data combining synthetic and measurement data.

Fig. 1.

Flowchart illustration of the process of semi-synthetic training dataset generation. Top row: acquisition of sensor data from human finger vasculature in vivo as background. Bottom row: synthetic radio-frequency (RF) sensor data generation from a simulated needle.

In vivo data for background vasculature were collected by imaging the fingers of 13 healthy human volunteers using AcousticX. Experiments on human volunteers were approved by the King’s College London Research Ethics Committee (study reference: HR-18/19-8881). For each measurement, a total of 1536 PA frames and 1536 US frames were saved to the hard drive of the system’s workstation for offline reconstruction. The RF data of one PA or US frame was a 2D matrix with dimensions of 1024 × 128. The first 150 (out of 1024) time steps were zeroed to remove strong LED-induced noise that spanned the upper 5 mm depth in PA and US images. Averaging over 128 frames was implemented to suppress random noise in the background. Reconstructed PA and US images corresponded to a field-of-view of 40.3 mm (X) × 39.4 mm (Z) according to the geometry of the linear transducer and the total number of time steps (1024) at a 40 MHz sampling rate.
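
As a rough illustration of how the depth figures follow from the sampling parameters, the sketch below converts a number of PA time steps into depth using one-way acoustic propagation. The speed of sound of 1540 m/s is an assumed typical soft-tissue value and is not stated in the text; `depth_mm` is a hypothetical helper.

```python
SPEED_OF_SOUND_M_S = 1540.0   # assumption: typical soft-tissue value
SAMPLING_RATE_HZ = 40e6       # PA sampling rate given in the text


def depth_mm(n_time_steps, fs_hz=SAMPLING_RATE_HZ, c_m_s=SPEED_OF_SOUND_M_S):
    """Depth reached after n_time_steps, assuming one-way (PA) propagation."""
    return n_time_steps / fs_hz * c_m_s * 1e3


print(f"full record (1024 steps): {depth_mm(1024):.1f} mm")
print(f"zeroed region (150 steps): {depth_mm(150):.1f} mm")
```

With this assumed speed of sound, 1024 time steps correspond to about 39.4 mm, matching the stated axial field-of-view, and the 150 zeroed steps correspond to roughly 5.8 mm, consistent with the "upper 5 mm" described above.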

Simulations of the sensor data originating from the needle were performed using the k-Wave toolbox [54]. Initial pressure distribution maps were created by simulating the optical fluence distributions on the needle shaft using Monte Carlo simulations [55]. A 40.0 mm (X) × 40.0 mm (Z) region with a grid size of 0.1 mm was constructed to represent the background tissue. A uniform refractive index, optical scattering coefficient and anisotropy of 1.4, 10 mm⁻¹ and 0.9, respectively, were assigned to this region [56]. Three optical absorption coefficients of 1 mm⁻¹, 1.5 mm⁻¹ and 2 mm⁻¹ accounted for the variations in standard tissue. A homogeneous photon beam with a finite size of 38.4 mm was applied to the surface of the simulation area. Each simulation was run for around 10 min with approximately 100,000 photon packets.

A linear array of 128 US transducer elements (with a pitch of 0.315 mm over a total length of 40.3 mm) was assigned to a forward model in k-Wave to receive the PA signals generated from the initial pressure distribution maps. The US transducer was assigned a central frequency of 7 MHz and a fractional −6 dB bandwidth of 80.9% according to the specifications of AcousticX. RF data collected by the transducer elements were subsequently down-sampled to 40 MHz to match the sampling rate of the measured data. To cover the variations of needle insertions, simulations were conducted for clinically relevant needle insertion depths and angles, spanning from 5 mm to 25 mm with an increment of 5 mm, and from 20 degrees to 65 degrees with a step of 5 degrees, respectively.
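
The parameter ranges above can be enumerated as follows. This is only a sketch: it assumes a full factorial combination of depths, angles and the three absorption coefficients from the Monte Carlo step, which the text does not state explicitly, and the dictionary keys are hypothetical names.

```python
from itertools import product

depths_mm = list(range(5, 26, 5))      # 5, 10, 15, 20, 25 mm
angles_deg = list(range(20, 66, 5))    # 20, 25, ..., 65 degrees
absorption_per_mm = [1.0, 1.5, 2.0]    # optical absorption coefficients

# Assumption: every combination is simulated once (full factorial design).
configs = [
    {"depth_mm": d, "angle_deg": a, "mu_a_per_mm": mu}
    for d, a, mu in product(depths_mm, angles_deg, absorption_per_mm)
]

print(len(depths_mm), "depths x", len(angles_deg), "angles x",
      len(absorption_per_mm), "absorption values =", len(configs), "configurations")
```

Under that assumption the grid contains 5 depths and 10 angles, giving 150 needle configurations before pairing with different in vivo backgrounds.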

To form a semi-synthetic image, the simulated RF data were normalised to the maximum amplitude of ex vivo needle signals collected by AcousticX. Subsequently, a pair of 2D data matrices (1024 × 128), consisting of RF data from a simulation of a needle and a measurement on a human finger, were added to form a single 2D data matrix and then fed to a Fourier domain algorithm for image reconstruction [57]. The reconstructed images based on the semi-synthetic data were then interpolated to 578 × 565 pixels with a uniform pixel size of 70 µm × 70 µm. To facilitate network implementations, the images were cropped to 512 × 512 pixels by removing the corresponding rows from top to bottom and the same number of columns from left to centre and right to centre respectively.
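
The combination step described above (scale the simulated needle RF data, then add it to the measured background RF data) can be sketched as below. This is a minimal illustration on nested lists rather than the authors' code; `peak_abs`, `make_semi_synthetic` and the `needle_peak` argument (the maximum amplitude of the ex vivo needle signal) are hypothetical names, and image reconstruction is left out.

```python
def peak_abs(rf):
    """Largest absolute sample in a 2D RF matrix (list of rows)."""
    return max(abs(v) for row in rf for v in row)


def make_semi_synthetic(sim_rf, measured_rf, needle_peak):
    """Scale simulated RF data to `needle_peak`, then add it sample by sample
    to the measured background RF data of the same shape."""
    if len(sim_rf) != len(measured_rf) or any(
        len(s) != len(m) for s, m in zip(sim_rf, measured_rf)
    ):
        raise ValueError("simulated and measured RF matrices must share a shape")
    sim_peak = peak_abs(sim_rf)
    if sim_peak == 0:
        raise ValueError("simulated RF data contain no signal")
    scale = needle_peak / sim_peak
    return [
        [scale * s + m for s, m in zip(s_row, m_row)]
        for s_row, m_row in zip(sim_rf, measured_rf)
    ]


# Toy 2 x 2 example; real frames would be 1024 x 128.
sim = [[0.0, 2.0], [-4.0, 1.0]]
background = [[1.0, 1.0], [1.0, 1.0]]
combined = make_semi_synthetic(sim, background, needle_peak=2.0)
print(combined)
```

Adding the data in the RF domain, before reconstruction, means that the simulated needle and the measured background pass through the same reconstruction and therefore share its artefacts.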

Finally, a total number of 2000 semi-synthetic images with substantial variations on both the needle and background were used for model training with the corresponding initial pressure distributions as the ground truths.

2.3. Acquisition of phantom, ex vivo and in vivo data for evaluation

Evaluation of the trained neural network was performed on PA images acquired with in-plane needle (20G, BD, USA) insertions into blood-vessel-mimicking phantoms, pork joint tissue ex vivo and human fingers in vivo (needle outside of tissue; see Supplementary Materials; Video S1). It is noted that the fingers with needle insertions were used to obtain representative real in vivo data, but there is no corresponding clinical scenario.

The blood-vessel-mimicking phantoms were created by affixing several carbon fibre bundles in a plastic box filled with 1% Intralipid dilution (Intralipid 20% emulsion, Scientific Laboratory Supplies, UK) that had an estimated optical reduced scattering coefficient of 0.96 mm⁻¹ at 850 nm [58]. The fibre bundles were randomly positioned to mimic different orientations of blood vessels. The acquired PA images were prepared following the pipeline used for processing the semi-synthetic data.

2.4. Network implementation

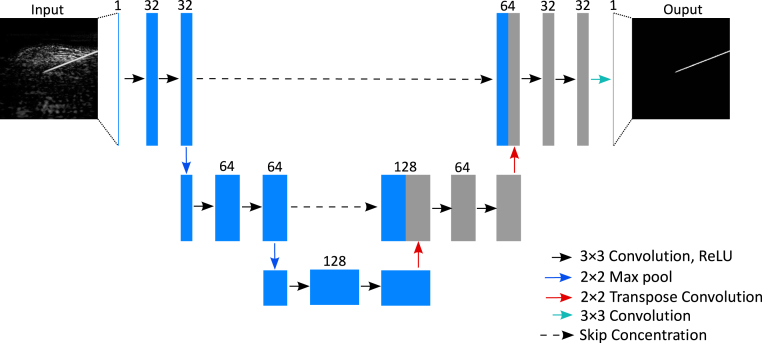

The network architecture implemented in this work was derived from the U-Net architecture proposed in Ref. [35]. In general, this model followed the original U-Net architecture [59] but had fewer scales and a reduced number of filters at each scale to accommodate a small input size. Experiments on the model capacity (see Supplementary Materials; Section 5) showed that the model in Fig. 2 was able to learn the regularities of the training data and generalise well to unseen data. In addition, the smaller and lighter model reduces both size and latency, which is beneficial for real-time applications.

Fig. 2.

Architecture of the proposed network for improving needle visibility in photoacoustic imaging.

In Fig. 2, following an encoder path, each scale consisted of two convolutional layers followed by a 2 × 2 max pooling layer. For the decoder path, similarly, each scale contained two convolutional layers but followed by a transposed convolutional layer with an up-sampling factor of 2. The model was trained using the input pairs with a smaller resolution of 128 × 128 pixels that adapted well to the receptive field of the model by resizing from the initial size of 512 × 512 pixels via bicubic interpolation and evaluated on the real images with a size of 256 × 256 (see 4. Discussion & Conclusions regarding the choice of the image resolution).

Our network was implemented in Python using PyTorch v1.2.0. The semi-synthetic dataset was randomly split into training, test, and validation sets with a ratio of 8:1:1. Training minimised the mean square error (MSE) loss on the validation set over 5000 iterations with a batch size of 4, using the Adam optimiser [60] (initial learning rate: 0.001) on NVIDIA Tesla V100 GPUs. The CosineAnnealingLR learning rate scheme [61] was employed to steadily decrease the learning rate during training.
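
To make the training configuration concrete, the sketch below computes the split sizes for the 2000 semi-synthetic images and evaluates the closed-form cosine annealing schedule. The schedule length (`t_max` of 5000 iterations) and the minimum learning rate of 0 are assumptions, as the text only gives the initial learning rate; `split_counts` and `cosine_annealing_lr` are hypothetical helpers, not PyTorch calls.

```python
import math


def split_counts(n_total, ratios=(8, 1, 1)):
    """Integer sizes for a ratio-based split; any remainder goes to the first set."""
    total = sum(ratios)
    counts = [n_total * r // total for r in ratios]
    counts[0] += n_total - sum(counts)
    return tuple(counts)


def cosine_annealing_lr(step, t_max, lr_max=1e-3, lr_min=0.0):
    """Closed-form cosine annealing: lr_max at step 0, lr_min at step t_max."""
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * step / t_max))


print(split_counts(2000))  # training, test, validation
for step in (0, 1250, 2500, 3750, 5000):
    print(step, f"{cosine_annealing_lr(step, t_max=5000):.6f}")
```

With these assumed settings the learning rate falls smoothly from 0.001 to 0 over the 5000 iterations, passing through 0.0005 at the midpoint.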

2.5. Post-processing

A post-processing algorithm based on maximum contour selection [62] was employed to further improve the outputs of the trained neural network and to fit the needle trajectory. It was assumed that only in-plane placements of a single needle were performed in all the experiments. Isolated outliers in the outputs of the U-Net could be discriminated from the enhanced needle based on the differences in region size. Thus, the post-processing algorithm detected all contours in the outputs of the proposed model and retained the largest contour, determined by counting the number of pixels on each contour boundary, as the one belonging to the needle.
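
The selection step can be approximated as follows. This is a stand-in rather than the contour detector of Ref. [62]: it labels 8-connected regions of a thresholded output, counts the boundary pixels of each region (pixels with at least one 4-neighbour outside the region), and keeps the region with the most boundary pixels. The function names and the binary-mask input are assumptions.

```python
from collections import deque


def is_boundary(mask, r, c):
    """True if a foreground pixel touches background or the image edge (4-neighbours)."""
    h, w = len(mask), len(mask[0])
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        rr, cc = r + dr, c + dc
        if not (0 <= rr < h and 0 <= cc < w) or not mask[rr][cc]:
            return True
    return False


def keep_largest_contour(mask):
    """Return a mask that keeps only the region with the most boundary pixels."""
    if not mask or not mask[0]:
        return []
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    best, best_count = [], -1
    for r0 in range(h):
        for c0 in range(w):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first search over the 8-connected region starting here.
            region, queue = [], deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                region.append((r, c))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if (0 <= rr < h and 0 <= cc < w
                                and mask[rr][cc] and not seen[rr][cc]):
                            seen[rr][cc] = True
                            queue.append((rr, cc))
            count = sum(1 for r, c in region if is_boundary(mask, r, c))
            if count > best_count:
                best, best_count = region, count
    out = [[0] * w for _ in range(h)]
    for r, c in best:
        out[r][c] = 1
    return out


# Toy output: a diagonal "needle" plus one isolated false positive at (1, 5).
toy = [
    [1, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 1],
    [0, 0, 1, 0, 0, 0],
    [0, 0, 0, 1, 0, 0],
    [0, 0, 0, 0, 1, 0],
]
cleaned = keep_largest_contour(toy)
```

In the toy example the five-pixel diagonal is retained and the isolated pixel is removed, which mirrors the single-needle assumption stated above.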

2.6. Comparison method

As a further evaluation step considering processing multiple PA frames from video sequences during dynamic needle insertions, the performance of the trained neural network on needle identification was compared with the standard Hough Transform (SHT), which is a classical baseline method for line detection [63]. The SHT is designed to identify straight lines in images. It employs the parametric representation of a straight line, which is also called the Hesse normal form and can be expressed as:

$$ \rho = x \cos\theta + y \sin\theta \tag{1} $$

where $\rho$ is the shortest distance from the origin to the line, and $\theta$ is the angle between the $x$-axis and the perpendicular projection from the origin to the line. Therefore, a straight line can be associated with a pair of parameters ($\rho$, $\theta$), and each image point corresponds to a sinusoidal curve in Hough space. Points on the same straight line produce a set of sinusoidal curves that cross at the same point ($\rho$, $\theta$), which exactly represents that line. In this study, the SHT was implemented with a two-dimensional accumulator matrix whose columns and rows were used to save the $\rho$ and $\theta$ values, respectively. For each point in the image, $\rho$ was calculated for each $\theta$, and the corresponding bin in the matrix was incremented. Finally, the potential straight lines in the image were extracted by selecting the local maxima of the accumulator matrix.
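
The voting procedure can be sketched in a few lines. The example below assumes the normal form rho = x cos(theta) + y sin(theta), a 1-degree angular resolution and a 1-pixel resolution in rho; these resolutions, the function names and the use of a single global maximum instead of several local maxima are illustrative choices, not the settings used in the study.

```python
import math


def hough_accumulator(points, width, height, n_theta=180):
    """Vote in (theta, rho) space for a list of (x, y) foreground points.

    Rows index theta in [0, pi); columns index rho shifted by rho_max so that
    negative distances fit in the matrix.
    """
    rho_max = int(math.ceil(math.hypot(width, height)))
    thetas = [math.pi * k / n_theta for k in range(n_theta)]
    acc = [[0] * (2 * rho_max + 1) for _ in range(n_theta)]
    for x, y in points:
        for k, theta in enumerate(thetas):
            rho = int(round(x * math.cos(theta) + y * math.sin(theta)))
            acc[k][rho + rho_max] += 1
    return acc, thetas, rho_max


def strongest_line(acc, thetas, rho_max):
    """Return (rho, theta, votes) for the accumulator bin with the most votes."""
    votes, k, j = max(
        (v, k, j) for k, row in enumerate(acc) for j, v in enumerate(row)
    )
    return j - rho_max, thetas[k], votes


# Toy input: 20 points on the horizontal line y = 5.
line_points = [(x, 5) for x in range(20)]
acc, thetas, rho_max = hough_accumulator(line_points, width=32, height=32)
rho, theta, votes = strongest_line(acc, thetas, rho_max)
print(rho, round(math.degrees(theta), 1), votes)
```

Because the bins are discrete, neighbouring angles can collect the same number of votes for a short segment, which is one reason the resolution and peak-selection parameters need tuning in practice.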

2.7. Evaluation protocol and performance metrics

The needles in the acquired PA images from three different media (phantoms, pork joint tissue ex vivo, and human fingers) were manually labelled as line segments by an experienced observer. The needle line segment was generated by connecting two points on the needle: the needle tip and the point on the needle shaft farthest from the tip that was visualised in a PA image. The needle tip had a good contrast on US images when it was surrounded by water in the liquid-based tissue-mimicking phantoms, but had poor visibility for ex vivo and in vivo measurements. Therefore, solid glass spheres (0–63 µm, Boud Minerals Limited, UK) were injected through the needle after being diluted with water to enhance the contrast of the tip, thus improving the accuracy and precision of manual labelling. For each medium, 20 representative measurements with different backgrounds and needle locations were used for metrics calculation.

To assess the accuracy of needle extraction using the proposed DL-based approach, a metric called the modified Hausdorff distance (MHD) was employed [64]. The MHD was adapted from the generalised Hausdorff distances proposed for object matching, with improved discriminatory power and greater robustness to outliers. Consider two point sets $A = \{a_1, \ldots, a_{N_a}\}$ and $B = \{b_1, \ldots, b_{N_b}\}$. The distance between a point $a$ from $A$ and the set of points $B$ was defined as $d(a, B) = \min_{b \in B} \lVert a - b \rVert$. The directed distance measure was defined as:

$$ d(A, B) = \frac{1}{N_a} \sum_{a \in A} d(a, B) \tag{2} $$

Then, the directed distance measures $d(A, B)$ and $d(B, A)$ were combined in the following way, resulting in the definition of the MHD as:

$$ \mathrm{MHD}(A, B) = \max\big(d(A, B),\, d(B, A)\big) \tag{3} $$

The MHD was defined in units of pixels, which could be converted to real distance using the pixel size of 70 µm per pixel. Signal-to-noise ratio (SNR) was also used to assess the performance of the needle enhancement and was defined as SNR = $S / \sigma$, where $S$ is the mean amplitude over the needle region and $\sigma$ is the standard deviation of the background. The mean amplitude of the needle region was calculated by taking the average of the pixel values over the line segment. The background was defined as one of the largest rectangular regions that excluded the needle pixels.
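
Both metrics are short enough to sketch directly. The example below follows the standard definition of the modified Hausdorff distance (mean of nearest-neighbour distances in each direction, then the larger of the two) and an SNR computed as mean needle amplitude over background standard deviation. The use of the population standard deviation and the function names are assumptions.

```python
import math
from statistics import mean, pstdev


def directed_distance(a_pts, b_pts):
    """Mean over points in A of the Euclidean distance to the nearest point in B."""
    return mean(min(math.dist(a, b) for b in b_pts) for a in a_pts)


def modified_hausdorff(a_pts, b_pts):
    """Larger of the two directed distances between point sets A and B."""
    return max(directed_distance(a_pts, b_pts), directed_distance(b_pts, a_pts))


def snr(needle_values, background_values):
    """Mean needle amplitude divided by the background standard deviation."""
    return mean(needle_values) / pstdev(background_values)


PIXEL_SIZE_UM = 70.0  # pixel size given in the text

detected = [(0, 0), (0, 1)]   # toy detected needle pixels
labelled = [(0, 0), (3, 0)]   # toy manually labelled pixels
mhd_px = modified_hausdorff(detected, labelled)
print(f"MHD = {mhd_px} px = {mhd_px * PIXEL_SIZE_UM} um")
print(f"SNR = {snr([10, 12, 14], [1, 3])}")
```

In the toy case the two directed distances are 0.5 and 1.5 pixels, so the MHD is 1.5 pixels (105 µm), which shows how the metric penalises the less well matched direction.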

3. Results

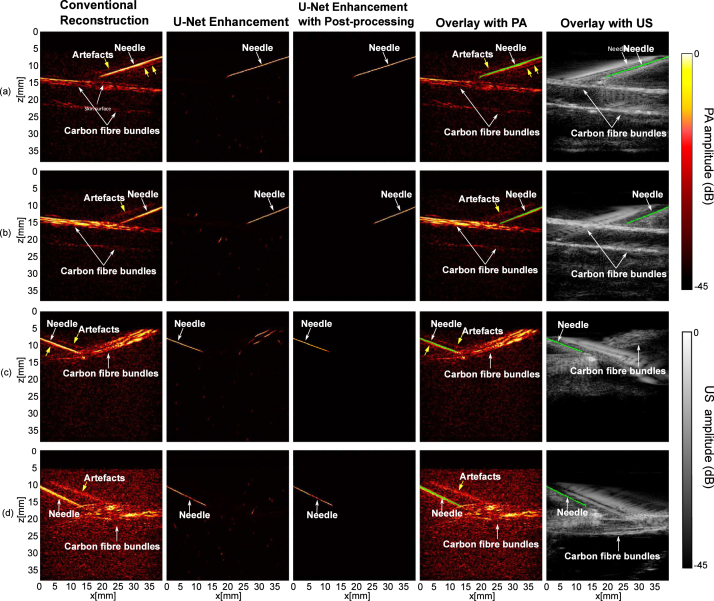

3.1. Blood-vessel-mimicking phantoms

The results of imaging on blood-vessel-mimicking phantoms are shown in Fig. 3. Compared to conventional reconstructions, noticeable improvements in terms of removing background noise and artefacts can be observed in the outputs of the U-Net. The proposed model successfully identified the needle insertion without being perturbed by the background vessels that shared similar line-shape features. The false positives in the U-Net enhanced images were further suppressed by the post-processing. Besides, the proposed model demonstrated robustness to noise and strong artefacts (e.g., Fig. 3(d)). The composite images indicated that the proposed model was able to detect the needle insertion with good correspondence to the conventional reconstructions and the US images.

Fig. 3.

Photoacoustic imaging with needle insertions into a blood-vessel-mimicking phantom with conventional reconstruction, U-Net enhancement and U-Net enhancement with post-processing.

The performance of the proposed model was quantified with SNR and MHD (Table 1). Compared to the conventional reconstruction, the proposed model achieved a significant improvement in SNR by a factor of 8.3. The MHD had large values as the noise level and artefacts increased. The proposed U-Net led to an initial 2.4 times decrease in MHD (from 63.2 ± 15.9 to 26.4 ± 23.3), which was further reduced by the subsequent post-processing to the smallest MHD of 1.4 ± 1.3.

Table 1.

Quantitative evaluation of the trained neural network using blood-vessel-mimicking phantoms. These performance metrics are expressed as mean ± standard deviation from 20 measurements acquired from different phantoms and needle positions.

| Metrics | Conventional reconstruction | U-Net enhancement | U-Net enhancement with post-processing |
|---|---|---|---|
| SNR | 8.7 ± 2.3 | – | |
| MHD | 63.2 ± 15.9 | 26.4 ± 23.3 | 1.4 ± 1.3 |

3.2. Pork joint tissue ex vivo

The proposed model was also applied to images acquired from needle insertions into ex vivo tissue. Fig. 4 shows representative results comparing the conventional reconstruction and the proposed model. The proposed model robustly enhanced the needle visibility at different insertion depths and angles, and significantly suppressed image artefacts and background noise. It also achieved a 4.8 times improvement in SNR compared to the conventional reconstruction (Table 2). The MHD substantially decreased from 28.7 ± 16.3 to 4.5 ± 7.0 after post-processing the output of the U-Net.

Fig. 4.

Photoacoustic imaging with needle insertions into ex vivo tissue with conventional reconstruction, U-Net enhancement, and U-Net enhancement with post-processing.

Table 2.

Quantitative evaluation of the trained neural network using ex vivo needle images. These performance metrics are expressed as mean ± standard deviation from 20 measurements acquired from different spatial locations of the ex vivo tissue and needle positions.

| Metrics | Conventional reconstruction | U-Net enhancement | U-Net enhancement with post-processing |
|---|---|---|---|
| SNR | 91.3 ± 47.3 | – | |
| MHD | 28.7 ± 16.3 | 6.3 ± 9.1 | 4.5 ± 7.0 |

It is worth noting that the performance of the proposed model was slightly degraded when visualising the region near the needle tip, which could be attributed to the large depths of around 2.5 cm (Fig. 4(b) and (d)). However, within smaller imaging depths, the proposed model was still effective for increasing the imaging speed by reducing the number of averages required to visualise the needle with a high SNR (see Supplementary Materials; Figure S4).

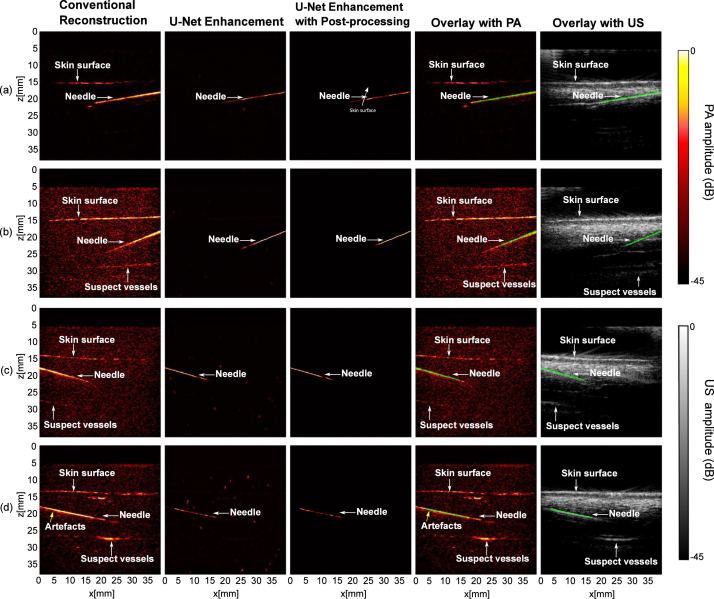

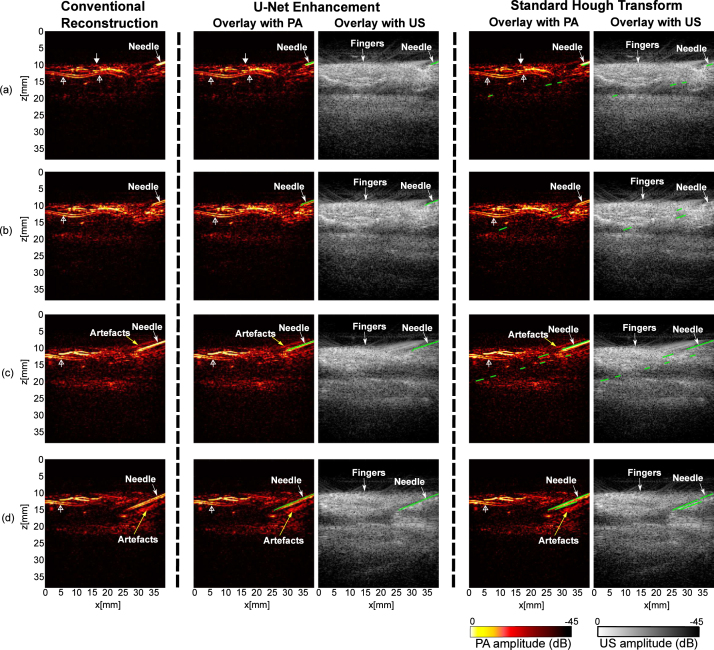

3.3. In vivo imaging

Fig. 5 shows the results of PA imaging with a 20G needle inserted between two fingers of a human volunteer immersed in a water tank (needle outside of the fingers). A main digital artery, which has a two-layered feature, is apparent on PA images (marked by hollow triangle wide arrows). A 22 s video consisting of 128 frames was saved during the needle insertions (see Supplementary Materials; Video S2). Fig. 5 shows four frames acquired at different time points with the conventional reconstructions and the overlays of PA and US images after the U-Net enhancement and SHT. The SHT was able to detect the needle, but resulted in excessive false positives. This is because the performance of the SHT is sensitive to the choice of hyperparameters, such as the detectable length of line segments and the search resolution of $\rho$ and $\theta$. Fine-tuning the hyperparameters based on observations of the needle on a frame-by-frame basis is not trivial. In comparison, the proposed model showed good robustness and generalisation. The in vivo results demonstrated that it is insensitive to constantly changing lengths and angles of the needle, and to images with excessive noise and artefacts.

Fig. 5.

Photoacoustic (PA) imaging with needle insertions into human fingers in vivo with conventional reconstruction, U-Net enhancement, and standard Hough Transform. Signals from the skin surface are indicated by triangle wide arrows, and signals that may be from digital arteries are indicated by hollow triangle wide arrows. The outcomes of U-Net enhancement and standard Hough Transform are denoted by green lines in PA and ultrasound (US) overlays. (a) - (d) are from a reconstructed PA image sequence recorded during needle insertions in real time.

Quantitative results are summarised in Table 3. On average, a 5.8 times improvement in SNR was observed with the U-Net enhancement compared to the conventional reconstruction at different time points during the insertion. For MHD, the proposed model outperformed the conventional reconstruction and the SHT with a 4.5- and 2.9-fold reduction, respectively. The post-processing algorithm effectively suppressed the outliers in the output of the U-Net, leading to an MHD as small as 0.6.

Table 3.

Quantitative evaluation of the trained neural network using in vivo needle images. These performance metrics are expressed as mean ± standard deviation from 20 measurements acquired at different time points during the insertion.

Conventional reconstruction.

Standard hough transform.

To further evaluate the model performance, needle detection rate was measured with three in vivo PA sequences (Table 4; Supplementary Materials; Video S3). For each sequence containing 128 frames, the number of frames with the identifiable needle was counted by the observer as the reference for calculation. It is worth noting that the proposed model enhanced almost all the frames that contained the needle with a true positive rate up to 100%, 90.6%, and 97.0%, respectively.

Table 4.

Quantitative performance on three PA video sequences in vivo with the proposed model.

| | Sequence 1 | Sequence 2 | Sequence 3 |
|---|---|---|---|
| Frames with needle / Total frames | 92/128 | 53/128 | 99/128 |
| Frames without needle / Total frames | 36/128 | 75/128 | 29/128 |
| Needle missed | 0 | 5 | 3 |
| True positives | 92 | 48 | 96 |
| False positives | 0 | 1 | 1 |
| True positive rate (%) | 100 | 90.6 | 97.0 |
| False positive rate (%) | 0 | 1.3 | 3.4 |
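
The rates in Table 4 can be reproduced from the frame counts. The sketch below assumes that the true positive rate is computed relative to the frames containing the needle and the false positive rate relative to the frames without the needle; these denominators are inferred from the table values rather than stated in the text.

```python
# Frame counts taken from Table 4.
sequences = {
    "Sequence 1": {"with_needle": 92, "without_needle": 36, "true_pos": 92, "false_pos": 0},
    "Sequence 2": {"with_needle": 53, "without_needle": 75, "true_pos": 48, "false_pos": 1},
    "Sequence 3": {"with_needle": 99, "without_needle": 29, "true_pos": 96, "false_pos": 1},
}


def detection_rates(counts):
    """Return (true positive rate, false positive rate) in percent."""
    tpr = 100.0 * counts["true_pos"] / counts["with_needle"]
    fpr = 100.0 * counts["false_pos"] / counts["without_needle"]
    return tpr, fpr


for name, counts in sequences.items():
    tpr, fpr = detection_rates(counts)
    print(f"{name}: TPR = {tpr:.1f}%, FPR = {fpr:.1f}%")
```

With these denominators the computed values round to the tabulated 100/90.6/97.0% and 0/1.3/3.4%.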

3.4. Impact of the needle diameter

To further assess the generalisability of the proposed model, needles with different diameters (16G, 18G, 20G, BD, USA; 25G, 30G, Meso-relle, Italy) were imaged with AcousticX during in-plane insertions into pork joint tissue ex vivo. The results (see Supplementary Materials; Figure S5) were consistent with the previous results for the 20G needle. For the needles with small diameters, the acquired PA images readily suffered from lower SNRs. However, as expected, the proposed model yielded robust enhancement of the needles at different contrast levels. For SNR and MHD, the proposed model outperformed the conventional reconstruction with an average of 11.2 and 6.5 times improvement, respectively (see Supplementary Materials; Table S1).

4. Discussion

Previous works on DL in PA imaging mainly focused on improving the visualisation of the vasculature by denoising and artefacts removal. However, the performances of DL networks are highly dependent on the training dataset. Networks that are specifically trained to enhance the visualisation of vasculature usually have poor performance on visualising needles due to their different image features. In this work, we are the first to apply DL to specifically improve the needle visualisation with PA imaging for minimally invasive guidance. Considering the relatively simple geometries of clinical needles compared to vasculature, a prominent contribution of this work is that we developed a semi-synthetic approach to address the challenges associated with obtaining ground truth for in vivo data as well as the poor realism of purely simulated data.

According to our experimental results (not shown for the sake of brevity), the simulated optical fluence distribution had a minimal effect on the performance of the proposed model when evaluated on unseen real needle images, even with deep insertion angles. This is because the DL-based method was able to enhance the visualisation of the needle by learning its relatively simple spatial features, which remained largely consistent. In addition, we found that the inference performance of the trained model on the real images did not benefit from input data with a higher resolution than that currently used (128 × 128 pixels; see Supplementary Materials; Figure S7). The low resolution images performed sufficiently well considering the lightweight model and simple features of the input data. Compared to a high resolution input, a low resolution input is also advantageous in terms of computational cost; the inference time for one image was around 90 ms using one GPU (NVIDIA GeForce RTX 2070 Max-Q). Further reduction of the inference time for real-time applications could be realised by using more powerful GPUs.

During minimally invasive procedures, accurate and clear visualisation of the needle is essential for successful outcomes. Needle visibility has been greatly improved by PA imaging as compared to US imaging, but the image quality in terms of SNR with the LED light source is still suboptimal due to the low pulse energy. Frame averaging is effective for reducing background noise, but it reduces the imaging speed and introduces movement artefacts. Further, blood vessels in the background with line-shaped structures similar to the needle can distract clinicians from identifying the needle trajectory. Finally, line artefacts above or beneath the needle shaft are often non-negligible and can lead to misinterpretation of the needle position. Therefore, in this work, PA images of needle insertions into different types of blood-vessel-mimicking phantoms, ex vivo tissue, and in vivo human fingers were acquired to evaluate the proposed model.

Qualitative results demonstrated that our proposed model was able to achieve substantial enhancement on the needle visualisation regarding noise suppression, artefacts removal, and needle detection. The enhancement was further quantified by the SNR and MHD. Performance of the proposed model was compared to the conventional reconstruction and the standard Hough transform on images acquired from blood-vessel-mimicking phantoms, ex vivo pork joint tissue, and human fingers as shown in Supplementary Materials (Figure S1; Figure S2; Figure S3). For SNR, our proposed model achieved 8.3, 4.8, and 5.8 times enhancement for phantom, ex vivo, and in vivo data respectively. The MHD as a measure of similarity of two objects was employed for its great robustness and discriminatory power. It was observed that the MHD had the smallest values with our proposed model compared to the conventional reconstruction and the SHT (1.4, 4.5, and 0.6 for phantom, ex vivo, and in vivo data respectively). Additionally, it is evidenced that the post-processing method based on maximum contour selection was effective to remove the false positives of the U-Net enhancement while preserving the needle pixels.

We also compared the proposed model with a conventional line detection algorithm, the SHT, on in vivo video sequences. The SHT performed well in some cases with carefully chosen hyperparameters, but its performance was readily affected by errors from the preceding edge detection step and was sensitive to some decision criteria such as empirical values of and that are directly related to the detection efficiency. Fine-tuning of these hyperparameters is impractical for real-time applications, where the effective length and angle of the needle can vary constantly from frame to frame. In contrast, our proposed model can efficiently improve the needle visualisation on a variety of PA images from in vivo measurements in near real-time.
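
The sensitivity of Hough-based detection to fixed decision criteria can be illustrated with a toy accumulator. The sketch below is not the SHT implementation evaluated in this work; the function `hough_lines`, its `threshold` (minimum number of votes) and the bin sizes are assumptions chosen for illustration, and they are not necessarily the criteria tuned by the authors.

```python
import math
from collections import Counter


def hough_lines(points, threshold, n_theta=180, rho_step=1.0):
    """Toy Hough transform over edge points given as (x, y) tuples.

    Each point votes for every (rho, theta) bin it could lie on, with
    rho = x*cos(theta) + y*sin(theta). Bins with at least `threshold`
    votes are returned as (rho, theta, votes) tuples.
    """
    votes = Counter()
    for x, y in points:
        for t in range(n_theta):
            theta = math.pi * t / n_theta
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(round(rho / rho_step), t)] += 1
    return [(r * rho_step, math.pi * t / n_theta, v)
            for (r, t), v in votes.items() if v >= threshold]


# Two synthetic horizontal "needles" of different visible length.
long_needle = [(x, 5) for x in range(20)]
short_needle = [(x, 5) for x in range(8)]

# A vote threshold suited to the long needle misses the short one.
found_long = hough_lines(long_needle, threshold=20)
found_short = hough_lines(short_needle, threshold=20)
```

With the threshold fixed at 20 votes, the 20-pixel line is detected while the 8-pixel line returns no candidates. Lowering the threshold recovers the short line but would also admit more spurious lines in a noisy image, which is the trade-off that makes per-frame tuning impractical.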

Nonetheless, the DL-based enhancement was sensitive to the SNRs of the images. More importantly, the visibility of the needle, especially its tip, was still limited to a depth of around 1 cm in in vivo measurements. In the future, deep neural networks could be applied for real-time denoising [44] as an alternative to frame-to-frame averaging to improve the imaging depth. For needle tip visualisation, a fibre-optic US transmitter could be integrated within the needle cannula so that the needle tip can be unambiguously visualised in PA imaging with high SNRs [10]. Light-absorbing coatings based on elastomeric nanocomposites could also be applied to the needle shaft to enhance its visualisation for guiding minimally invasive procedures [65].

5. Conclusions

In this work, we presented a DL-based framework for enhancing needle visualisation with PA imaging. The DL model was built using only semi-synthetic data generated by combining simulated data and in vivo measurements. Evaluation was performed on unseen real data acquired by inserting needles into blood-vessel-mimicking phantoms, ex vivo tissue and human fingers (needle outside tissue). Compared to the conventional reconstruction, the proposed framework substantially improved the needle visualisation with PA imaging. It also outperformed the standard Hough transform on PA in vivo videos with improved robustness and generalisability. Therefore, our framework could be useful for guiding minimally invasive procedures that involve percutaneous needle insertions through accurate identification of clinical needles.

Declaration of Competing Interest

One or more of the authors of this paper have disclosed potential or pertinent conflicts of interest, which may include receipt of payment, either direct or indirect, institutional support, or association with an entity in the biomedical field which may be perceived to have potential conflict of interest with this work. For full disclosure statements refer to https://doi.org/10.1016/j.pacs.2022.100351. Adrien E. Desjardins reports a relationship with Echopoint Medical that includes: equity or stocks. Tom Vercauteren reports relationships with Hypervision Surgical Ltd and Mauna Kea Technologies that include: equity or stocks.

Acknowledgements

This work was funded in whole, or in part, by the Wellcome Trust, United Kingdom [203148/Z/16/Z, WT101957, 203145Z/16/Z], the Engineering and Physical Sciences Research Council (EPSRC), United Kingdom (NS/A000027/1, NS/A000050/1, NS/A000049/1), and King’s–China Scholarship Council PhD Scholarship Program (K-CSC) (202008060071). For the purpose of open access, the author has applied a CC BY public copyright licence to any Author Accepted Manuscript version arising from this submission.

Biographies

Mengjie Shi is a Ph.D. student in the School of Biomedical Engineering & Imaging Sciences at King’s College London, UK. She completed her bachelor’s degree in Optoelectricity Information of Science and Technology at Nanjing University of Science and Technology, China in 2019 and master’s degree in Communications and Signal Processing at Imperial College London in 2020. Her research interests focus on improving photoacoustic imaging with affordable light sources for guiding minimally invasive procedures.

Tianrui Zhao is a Ph.D. student in the School of Biomedical Engineering & Imaging Sciences at King’s College London, UK. He received his B.Sc. in Materials Science and Engineering from Northwestern Polytechnical University, China, and M.Sc. degree in Materials for Energy and Environment from University College London, UK, in 2015 and 2016, respectively. His research interests include developing minimally invasive imaging devices based on photoacoustic imaging.

Dr. Sim West is a consultant anaesthetist at UCLH. He graduated from Sheffield in 2000, and completed his training in anaesthesia in North London, spending 2012 as the Smiths Medical Innovation Fellow. He was appointed to UCLH in 2013 and is lead for regional anaesthesia and the orthopaedic hub. His research interests include improving visualisation of needles, catheters and nerves.

Dr. Adrien Desjardins is a Professor in the Department of Medical Physics and Biomedical Engineering at University College London, where he leads the Interventional Devices Group. His research interests are centred on the development of new imaging and sensing modalities to guide minimally invasive medical procedures. He has a particular interest in the application of photoacoustic imaging and optical ultrasound to guide interventional devices for diagnosis and therapy.

Tom Vercauteren has been a Professor of Interventional Image Computing at King’s College London since 2018, where he holds the Medtronic/Royal Academy of Engineering Research Chair in Machine Learning for Computer-assisted Neurosurgery. From 2014 to 2018, he was Associate Professor at UCL where he acted as Deputy Director for the Wellcome/EPSRC Centre for Interventional and Surgical Sciences (2017–18). From 2004 to 2014, he worked for Mauna Kea Technologies, Paris where he led the research and development team designing image computing solutions for the company’s CE-marked and FDA-cleared optical biopsy device. His work is now used in hundreds of hospitals worldwide. He is a Columbia University and Ecole Polytechnique graduate and obtained his Ph.D. from Inria in 2008. Tom is also an established open-source software supporter.

Dr. Wenfeng Xia is a Lecturer in the School of Biomedical Engineering & Imaging Sciences at King’s College London, UK. He received a B.Sc. in Electrical Engineering from Shanghai Jiao Tong University, China, and an M.Sc. in Medical Physics from University of Heidelberg, Germany, in 2005 and 2007, respectively. In 2013, he obtained his Ph.D. from University of Twente, Netherlands. From 2014 to 2018, he was a Research Associate / Senior Research Associate in the Department of Medical Physics and Biomedical Engineering at University College London, UK. He currently leads the Photons+ Ultrasound Research Laboratory (https://www.purlkcl.org/). His research interests include non-invasive and minimally invasive photoacoustic imaging, and ultrasound-based medical device tracking for guiding interventional procedures.

Footnotes

Supplementary material related to this article can be found online at https://doi.org/10.1016/j.pacs.2022.100351.

Appendix A. Supplementary data

The following is the Supplementary material related to this article.

Additional experimental results for model validation and evaluation of impact of needle diameters, input size, and model capacity.

Photoacoustic imaging of needle insertions between human fingers.

Video displaying of in vivo needle insertions in PA and US overlays with U-Net enhancement and Standard Hough Transform (enhancement results are denoted by green lines).

Three PA in vivo sequences with U-Net enhancement (enhancement results are denoted by green lines).

References

- 1. Chin K.J., Perlas A., Chan V.W.S., Brull R. Needle visualization in ultrasound-guided regional anesthesia: Challenges and solutions. Reg. Anesth. Pain Med. 2008;33(6):532–544. doi: 10.1136/rapm-00115550-200811000-00005.

- 2. Helbich T.H., Matzek W., Fuchsjäger M.H. Stereotactic and ultrasound-guided breast biopsy. Eur. Radiol. 2004;14(3):383–393. doi: 10.1007/s00330-003-2141-z.

- 3. Daffos F., Capella-Pavlovsky M., Forestier F. Fetal blood sampling during pregnancy with use of a needle guided by ultrasound: A study of 606 consecutive cases. Am. J. Obstet. Gynecol. 1985;153(6):655–660. doi: 10.1016/s0002-9378(85)80254-4.

- 4. Rathmell J.P., Benzon H.T., Dreyfuss P., Huntoon M., Wallace M., Baker R., Riew K.D., Rosenquist R.W., Aprill C., Rost N.S., Buvanendran A., Kreiner D.S., Bogduk N., Fourney D.R., Fraifeld E., Horn S., Stone J., Vorenkamp K., Lawler G., Summers J., Kloth D., O’Brien D., Tutton S. Safeguards to prevent neurologic complications after epidural steroid injections: Consensus opinions from a multidisciplinary working group and national organizations. Anesthesiology. 2015;122(5):974–984. doi: 10.1097/ALN.0000000000000614.

- 5. Hovgesen C.H., Wilhjelm J.E., Vilmann P., Kalaitzakis E. Echogenic surface enhancements for improving needle visualization in ultrasound: A PRISMA systematic review. J. Ultrasound Med. 2022;41(2):311–325. doi: 10.1002/jum.15713.

- 6. Fronheiser M.P., Idriss S.F., Wolf P.D., Smith S.W. Vibrating interventional device detection using real-time 3-D color Doppler. IEEE Trans. Ultrason. Ferroelectr. Freq. Control. 2008;55(6):1355–1362. doi: 10.1109/TUFFC.2008.798.

- 7. Klein S.M., Fronheiser M.P., Reach J., Nielsen K.C., Smith S.W. Piezoelectric vibrating needle and catheter for enhancing ultrasound-guided peripheral nerve blocks. Anesth. Analg. 2007;105(6):1858–1860. doi: 10.1213/01.ane.0000286814.79988.0a.

- 8. Xia W., Mari J.M., West S.J., Ginsberg Y., David A.L., Ourselin S., Desjardins A.E. In-plane ultrasonic needle tracking using a fiber-optic hydrophone. Med. Phys. 2015;42(10):5983–5991. doi: 10.1118/1.4931418.

- 9. Xia W., West S.J., Finlay M.C., Mari J.-M., Ourselin S., David A.L., Desjardins A.E. Looking beyond the imaging plane: 3D needle tracking with a linear array ultrasound probe. Sci. Rep. 2017;7(1):3674. doi: 10.1038/s41598-017-03886-4.

- 10. Xia W., Noimark S., Ourselin S., West S.J., Finlay M.C., David A.L., Desjardins A.E. In: Medical Image Computing and Computer-Assisted Intervention - MICCAI 2017, Vol. 10434. Descoteaux M., Maier-Hein L., Jannin P., Collins D.L., Duchesne S., editors. Springer International Publishing; Cham: 2017. Ultrasonic needle tracking with a fibre-optic ultrasound transmitter for guidance of minimally invasive fetal surgery; pp. 637–645.

- 11. Uherčík M., Kybic J., Liebgott H., Cachard C. Model fitting using RANSAC for surgical tool localization in 3-D ultrasound images. IEEE Trans. Biomed. Eng. 2010;57(8):1907–1916. doi: 10.1109/TBME.2010.2046416.

- 12. Waine M., Rossa C., Sloboda R., Usmani N., Tavakoli M. 2015 IEEE International Conference on Robotics and Automation (ICRA) 2015. 3D shape visualization of curved needles in tissue from 2D ultrasound images using RANSAC; pp. 4723–4728.

- 13. Ding M., Fenster A. A real-time biopsy needle segmentation technique using Hough transform. Med. Phys. 2003;30(8):2222–2233. doi: 10.1118/1.1591192.

- 14. Okazawa S.H., Ebrahimi R., Chuang J., Rohling R.N., Salcudean S.E. Methods for segmenting curved needles in ultrasound images. Med. Image Anal. 2006;10(3):330–342. doi: 10.1016/j.media.2006.01.002.

- 15. Kaya M., Bebek O. 2014 IEEE International Conference on Robotics and Automation (ICRA) 2014. Needle localization using Gabor filtering in 2D ultrasound images; pp. 4881–4886.

- 16. Uherčík M., Kybic J., Zhao Y., Cachard C., Liebgott H. Line filtering for surgical tool localization in 3D ultrasound images. Comput. Biol. Med. 2013;43(12):2036–2045. doi: 10.1016/j.compbiomed.2013.09.020.

- 17. Ayvaci A., Yan P., Xu S., Soatto S., Kruecker J. Biopsy needle detection in transrectal ultrasound. Comput. Med. Imaging Graph. 2011;35(7–8):653–659. doi: 10.1016/j.compmedimag.2011.03.005.

- 18. Pourtaherian A., Ghazvinian Zanjani F., Zinger S., Mihajlovic N., Ng G.C., Korsten H.H.M., de With P.H.N. Robust and semantic needle detection in 3D ultrasound using orthogonal-plane convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 2018;13(9):1321–1333. doi: 10.1007/s11548-018-1798-3.

- 19. Arif M., Moelker A., van Walsum T. Automatic needle detection and real-time bi-planar needle visualization during 3D ultrasound scanning of the liver. Med. Image Anal. 2019;53:104–110. doi: 10.1016/j.media.2019.02.002.

- 20. Gillies D.J., Rodgers J.R., Gyacskov I., Roy P., Kakani N., Cool D.W., Fenster A. Deep learning segmentation of general interventional tools in two-dimensional ultrasound images. Med. Phys. 2020;47(10):4956–4970. doi: 10.1002/mp.14427.

- 21. Wang L.V., Yao J. A practical guide to photoacoustic tomography in the life sciences. Nature Methods. 2016;13(8):627–638. doi: 10.1038/nmeth.3925.

- 22. Ntziachristos V. Going deeper than microscopy: The optical imaging frontier in biology. Nature Methods. 2010;7(8):603–614. doi: 10.1038/nmeth.1483.

- 23. Beard P. Biomedical photoacoustic imaging. Interface Focus. 2011;1(4):602–631. doi: 10.1098/rsfs.2011.0028.

- 24. Kuniyil Ajith Singh M., Steenbergen W., Manohar S. In: Frontiers in Biophotonics for Translational Medicine: In the Celebration of Year of Light (2015) Olivo M., Dinish U.S., editors. Springer; Singapore: 2016. Handheld probe-based dual mode ultrasound/photoacoustics for biomedical imaging; pp. 209–247. (Progress in Optical Science and Photonics).

- 25. Park S., Jang J., Kim J., Kim Y.S., Kim C. Real-time triple-modal photoacoustic, ultrasound, and magnetic resonance fusion imaging of humans. IEEE Trans. Med. Imaging. 2017;36(9):1912–1921. doi: 10.1109/TMI.2017.2696038.

- 26. Xia W., Nikitichev D.I., Mari J.M., West S.J., Pratt R., David A.L., Ourselin S., Beard P.C., Desjardins A.E. Performance characteristics of an interventional multispectral photoacoustic imaging system for guiding minimally invasive procedures. J. Biomed. Opt. 2015;20(8) doi: 10.1117/1.JBO.20.8.086005.

- 27. Zhao T., Desjardins A.E., Ourselin S., Vercauteren T., Xia W. Minimally invasive photoacoustic imaging: Current status and future perspectives. Photoacoustics. 2019;16 doi: 10.1016/j.pacs.2019.100146.

- 28. Xia W., Kuniyil Ajith Singh M., Maneas E., Sato N., Shigeta Y., Agano T., Ourselin S., West S.J., Desjardins A.E. Handheld real-time LED-based photoacoustic and ultrasound imaging system for accurate visualization of clinical metal needles and superficial vasculature to guide minimally invasive procedures. Sensors. 2018;18(5):1394. doi: 10.3390/s18051394.

- 29. Kuniyil Ajith Singh M., Xia W. Portable and affordable light source-based photoacoustic tomography. Sensors. 2020;20(21):6173. doi: 10.3390/s20216173.

- 30. Joseph J., Ajith Singh M.K., Sato N., Bohndiek S.E. Technical validation studies of a dual-wavelength LED-based photoacoustic and ultrasound imaging system. Photoacoustics. 2021;22 doi: 10.1016/j.pacs.2021.100267.

- 31. Lundervold A.S., Lundervold A. An overview of deep learning in medical imaging focusing on MRI. Z. Med. Phys. 2019;29(2):102–127. doi: 10.1016/j.zemedi.2018.11.002.

- 32. Liu S., Wang Y., Yang X., Lei B., Liu L., Li S.X., Ni D., Wang T. Deep learning in medical ultrasound analysis: A review. Engineering. 2019;5(2):261–275. doi: 10.1016/j.eng.2018.11.020.

- 33. Domingues I., Pereira G., Martins P., Duarte H., Santos J., Abreu P.H. Using deep learning techniques in medical imaging: A systematic review of applications on CT and PET. Artif. Intell. Rev. 2020;53(6):4093–4160. doi: 10.1007/s10462-019-09788-3.

- 34. Gröhl J., Schellenberg M., Dreher K., Maier-Hein L. Deep learning for biomedical photoacoustic imaging: A review. Photoacoustics. 2021;22 doi: 10.1016/j.pacs.2021.100241.

- 35. Hauptmann A., Cox B. Deep learning in photoacoustic tomography: Current approaches and future directions. J. Biomed. Opt. 2020;25(11) doi: 10.1117/1.JBO.25.11.112903.

- 36. Antholzer S., Haltmeier M., Nuster R., Schwab J. Photons Plus Ultrasound: Imaging and Sensing 2018. Vol. 10494. SPIE; 2018. Photoacoustic image reconstruction via deep learning; pp. 433–442.

- 37. Davoudi N., Deán-Ben X.L., Razansky D. Deep learning optoacoustic tomography with sparse data. Nat. Mach. Intell. 2019;1(10):453–460. doi: 10.1038/s42256-019-0095-3.

- 38. Lan H., Jiang D., Gao F., Gao F. Deep learning enabled real-time photoacoustic tomography system via single data acquisition channel. Photoacoustics. 2021;22 doi: 10.1016/j.pacs.2021.100270.

- 39. Allman D., Reiter A., Bell M.A.L. Photoacoustic source detection and reflection artifact removal enabled by deep learning. IEEE Trans. Med. Imaging. 2018;37(6):1464–1477. doi: 10.1109/TMI.2018.2829662.

- 40. Awasthi N., Jain G., Kalva S.K., Pramanik M., Yalavarthy P.K. Deep neural network-based sinogram super-resolution and bandwidth enhancement for limited-data photoacoustic tomography. IEEE Trans. Ultrason. Ferroelectr. Freq. Control. 2020;67(12):2660–2673. doi: 10.1109/TUFFC.2020.2977210.

- 41. Boink Y.E., Manohar S., Brune C. A partially-learned algorithm for joint photo-acoustic reconstruction and segmentation. IEEE Trans. Med. Imaging. 2020;39(1):129–139. doi: 10.1109/TMI.2019.2922026.

- 42. Allman D., Assis F., Chrispin J., Bell M.A.L. Photons Plus Ultrasound: Imaging and Sensing 2019. Vol. 10878. SPIE; 2019. A deep learning-based approach to identify in vivo catheter tips during photoacoustic-guided cardiac interventions; pp. 454–460.

- 43. Anas E.M.A., Zhang H.K., Kang J., Boctor E. Enabling fast and high quality LED photoacoustic imaging: A recurrent neural networks based approach. Biomed. Opt. Express. 2018;9(8):3852. doi: 10.1364/BOE.9.003852.

- 44. Hariri A., Alipour K., Mantri Y., Schulze J.P., Jokerst J.V. Deep learning improves contrast in low-fluence photoacoustic imaging. Biomed. Opt. Express. 2020;11(6):3360–3373. doi: 10.1364/BOE.395683.

- 45. Kalloor Joseph F., Arora A., Kancharla P., Kuniyil Ajith Singh M., Steenbergen W., Channappayya S.S. In: Photons Plus Ultrasound: Imaging and Sensing 2021. Oraevsky A.A., Wang L.V., editors. SPIE; Online Only, United States: 2021. Generative adversarial network-based photoacoustic image reconstruction from bandlimited and limited-view data; p. 54.

- 46. Heimann T., Mountney P., John M., Ionasec R. Real-time ultrasound transducer localization in fluoroscopy images by transfer learning from synthetic training data. Med. Image Anal. 2014;18(8):1320–1328. doi: 10.1016/j.media.2014.04.007.

- 47. Unberath M., Zaech J.-N., Gao C., Bier B., Goldmann F., Lee S.C., Fotouhi J., Taylor R., Armand M., Navab N. Enabling machine learning in X-ray-based procedures via realistic simulation of image formation. Int. J. CARS. 2019;14(9):1517–1528. doi: 10.1007/s11548-019-02011-2.

- 48. Maneas E., Hauptmann A., Alles E.J., Xia W., Vercauteren T., Ourselin S., David A.L., Arridge S., Desjardins A.E. Deep learning for instrumented ultrasonic tracking: From synthetic training data to in vivo application. IEEE Trans. Ultrason. Ferroelectr. Freq. Control. 2021:1. doi: 10.1109/TUFFC.2021.3126530.

- 49. Movshovitz-Attias Y., Kanade T., Sheikh Y. In: Computer Vision – ECCV 2016 Workshops. Hua G., Jégou H., editors. Springer International Publishing; Cham: 2016. How useful is photo-realistic rendering for visual learning? pp. 202–217. (Lecture Notes in Computer Science).

- 50. Garcia-Peraza-Herrera L.C., Fidon L., D’Ettorre C., Stoyanov D., Vercauteren T., Ourselin S. Image compositing for segmentation of surgical tools without manual annotations. IEEE Trans. Med. Imaging. 2021;40(5):1450–1460. doi: 10.1109/TMI.2021.3057884.

- 51. Singh M.K.A. 2020. LED-based photoacoustic imaging: From bench to bedside.

- 52. Agano T., Singh M.K.A., Nagaoka R., Awazu K. Effect of light pulse width on frequency characteristics of photoacoustic signal – an experimental study using a pulse-width tunable LED-based photoacoustic imaging system. Int. J. Eng. Technol. 2018;7(4):4300–4303. doi: 10.14419/ijet.v7i4.19907.

- 53. Hariri A., Lemaster J., Wang J., Jeevarathinam A.S., Chao D.L., Jokerst J.V. The characterization of an economic and portable LED-based photoacoustic imaging system to facilitate molecular imaging. Photoacoustics. 2018;9:10–20. doi: 10.1016/j.pacs.2017.11.001.

- 54. Treeby B.E., Cox B.T. K-wave: MATLAB toolbox for the simulation and reconstruction of photoacoustic wave fields. J. Biomed. Opt. 2010;15(2) doi: 10.1117/1.3360308.

- 55. Wang L., Jacques S.L., Zheng L. MCML—Monte Carlo modeling of light transport in multi-layered tissues. Comput. Methods Prog. Biomed. 1995;47(2):131–146. doi: 10.1016/0169-2607(95)01640-F.

- 56. Jacques S.L. Optical properties of biological tissues: A review. Phys. Med. Biol. 2013;58(11):R37–R61. doi: 10.1088/0031-9155/58/11/R37.

- 57. Jaeger M., Schüpbach S., Gertsch A., Kitz M., Frenz M. Fourier reconstruction in optoacoustic imaging using truncated regularized inverse k-space interpolation. Inverse Problems. 2007;23(6):S51–S63. doi: 10.1088/0266-5611/23/6/S05.

- 58. van Staveren H.J., Moes C.J.M., van Marle J., Prahl S.A., van Gemert M.J.C. Light scattering in Intralipid-10% in the wavelength range of 400–1100 nm. Appl. Opt. 1991;30(31):4507–4514. doi: 10.1364/AO.30.004507.

- 59. Ronneberger O., Fischer P., Brox T. 2015. U-net: Convolutional networks for biomedical image segmentation. arXiv:1505.04597 [cs]

- 60. Kingma D.P., Ba J. 2017. Adam: A method for stochastic optimization. arXiv:1412.6980 [cs]

- 61. Loshchilov I., Hutter F. 2017. SGDR: Stochastic gradient descent with warm restarts. arXiv:1608.03983 [cs, math]

- 62. Arbeláez P., Maire M., Fowlkes C., Malik J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2011;33(5):898–916. doi: 10.1109/TPAMI.2010.161.

- 63. Duda R.O., Hart P.E. Use of the Hough transformation to detect lines and curves in pictures. Commun. ACM. 1972;15(1):11–15. doi: 10.1145/361237.361242.

- 64. Dubuisson M.-P., Jain A.K. Proceedings of 12th International Conference on Pattern Recognition, Vol. 1. IEEE; 1994. A modified Hausdorff distance for object matching; pp. 566–568.

- 65. Xia W., Noimark S., Maneas E., Brown N.M., Singh M.K.A., Ourselin S., West S.J., Desjardins A.E. Photons Plus Ultrasound: Imaging and Sensing 2019, Vol. 10878. International Society for Optics and Photonics; 2019. Enhancing photoacoustic visualization of medical devices with elastomeric nanocomposite coatings; p. 108783G.
