Abstract

Background

Early detection of dementia is critical for intervention and care planning but remains difficult. Computerized cognitive testing provides an accessible and promising solution to address these current challenges.

Objective

The aim of this study was to evaluate a computerized cognitive testing battery (BrainCheck) for its diagnostic accuracy and ability to distinguish the severity of cognitive impairment.

Methods

A total of 99 participants diagnosed with dementia, mild cognitive impairment (MCI), or normal cognition (NC) completed the BrainCheck battery. Statistical analyses compared participant performances on BrainCheck based on their diagnostic group.

Results

BrainCheck battery performance showed significant differences between the NC, MCI, and dementia groups, achieving 88% or higher sensitivity and specificity (ie, true positive and true negative rates) for separating dementia from NC, and 77% or higher sensitivity and specificity in separating the MCI group from the NC and dementia groups. Three-group classification found true positive rates of 80% or higher for the NC and dementia groups and true positive rates of 64% or higher for the MCI group.

Conclusions

BrainCheck was able to distinguish between diagnoses of dementia, MCI, and NC, providing a potentially reliable tool for early detection of cognitive impairment.

Keywords: cognitive test, mild cognitive impairment, dementia, cognitive decline, repeatable battery, discriminant analysis

Introduction

In proportion with the growth of the aging population, the incidence of dementia is on the rise and is projected to affect nearly 14 million people in the United States and upwards of 152 million people globally in the coming decades [1-3]. Current rates of undetected dementia are reported to be as high as 61.7% [4], and available treatments are limited to promoting quality of life rather than reversal or cure of the disease process. The ability to properly identify and treat dementia at this scale requires an active approach focused on early identification. Early detection of dementia provides access to timely interventions and knowledge to promote patient health and quality of life before symptoms become severe [5-8]. Early and accurate diagnosis also allows for proper preparation for patients, caregivers, and their families, resulting in improved caregiver well-being and delayed nursing home placements [9-12]. Further, it helps to characterize patients with early-stage dementia for clinical trials, exploring the latest therapeutics and validating biomarkers indicative of specific pathologies. Despite the benefits, early detection is a challenge with current clinical protocols, leaving many patients undiagnosed until symptoms become noticeable in later stages of the illness [13].

Considered an early symptomatic stage of dementia, mild cognitive impairment (MCI) signifies a level of cognitive impairment between normal cognition (NC) and dementia [14]. While not all MCI cases progress, the conversion rate of MCI to dementia has been observed to be approximately 5% to 10% [15]. This underscores the importance of identifying MCI in early detection and clinical intervention for dementia, which is included in recommendations from the National Institute on Aging and the Alzheimer’s Association [16]. Detection of MCI has been successful when using brief cognitive screening assessments. The widely used Montreal Cognitive Assessment (MoCA) has demonstrated 83% sensitivity and 88% specificity in distinguishing MCI from NC, and 90% sensitivity and 63% specificity in distinguishing dementia from MCI [17-19]. Similar performance has also been observed for the Mini-Mental State Examination (MMSE) and the Saint Louis University Mental Status (SLUMS) exam [20-22]. While these screening tests perform well in detecting MCI, they have several limitations. First, these tests are time- and labor-intensive (ie, verbal administration by a physician or test administrator and hours for training, recording responses, scoring, and interpreting results). Second, these paper-based tests do not allow for tracking of timing, which is an important indicator of an individual’s cognitive health [23]. Third, they offer little detailed insight into different cognitive domains because their individual subtests are, by design, simple and suffer from ceiling effects [19,24,25].

Neuropsychological tests (NPTs) represent a more extensive and comprehensive class of cognitive evaluation [26]. They allow examination of specific cognitive domains (eg, attention, working memory, language, visuospatial skills, executive functioning, and memory), which is used to support clinical diagnoses and further delineate specific neurocognitive disorders. NPTs can determine patterns of cognitive functioning that relate to normal aging, MCI, and dementia progression with a specificity of 67% to 99% [27]. A major strength of NPTs is their ability to characterize cognitive impairment, providing clues to underlying pathology, and thereby improving diagnostic accuracy to guide appropriate treatment. However, NPTs come with downsides, including financial cost, long appointment times, and high levels of training and expertise required to conduct and interpret tests. Prior studies have also shown that some NPTs demonstrate high accuracy in differentiating dementia patients from healthy participants, but do not have adequate psychometrics to distinguish MCI from dementia [28-31].

Computerized cognitive assessment tools have been developed to address the issues of accessibility and efficiency [31-34]. They are more comprehensive than screening tests but less expensive and quicker than clinical NPTs, and they aim to maximize accessibility to both patients and providers. They also yield multiple benefits, including maintaining testing standardization, alleviating the time pressures of modern clinical practice, and providing a comprehensive assessment of cognitive function to strengthen a clinical diagnosis. Importantly, in the new era of practicing amid the COVID-19 pandemic [35-37], increasing the accessibility of remote cognitive testing for vulnerable and high-risk patients is essential.

This study evaluated BrainCheck, a computerized cognitive test battery available on smartphones, tablets, and computers, making it portable and allowing it to be administered remotely. In addition to offering automated scoring and instant interpretation, BrainCheck requires short administration and testing times, comparable to traditional screening instruments, while providing the kind of detailed insight into multiple aspects of cognitive functioning otherwise available only from comprehensive NPTs. BrainCheck has previously been validated for its diagnostic accuracy in detecting concussion [38] and dementia-related cognitive decline [39]. Furthering its validation for dementia-related cognitive decline, we sought to assess BrainCheck’s utility as a diagnostic aid to accurately assess the severity of cognitive impairment. We measured BrainCheck’s ability to distinguish individuals with different levels of cognitive impairment (ie, NC, MCI, and dementia) based on their comprehensive clinical diagnoses. Our goal was to further demonstrate the utility of BrainCheck for cognitive assessment, specifically as a diagnostic aid in cases where NPT may be unavailable or when a comprehensive evaluation is not indicated.

Methods

Ethics Approval

This study was approved by the University of Washington (UW) Institutional Review Board (IRB) for human subject participation (review number STUDY00000790).

Recruitment

Participants were recruited from a research registry maintained by the Alzheimer’s Disease Research Center associated with the UW Medicine Memory and Brain Wellness Center clinic [40]. This registry is a continually updated database of individuals who have expressed interest and signed an IRB-approved consent form to be contacted about participation in Alzheimer disease (AD) and related dementias research studies, many of whom have been recently evaluated at the clinic and, hence, have a clinical diagnosis or evaluation. Those with listed addresses within a 70-mile radius of Seattle, Washington, were contacted by phone or email, based on information provided within the registry. If the person was unable to physically use an iPad, if the person was too cognitively impaired to understand or follow instructions, or if the primary contact (eg, spouse) indicated that the person was unable to participate, they were not recruited for the study. When study procedures were modified from in-person to remote administration due to the COVID-19 pandemic (approximately March 2020), participants outside the initial geographical range were contacted to explore remote testing capabilities. We required that these participants have access to either an iPad with iOS 10 or later or a touchscreen computer and Wi-Fi connectivity to participate in the study.

Using the primary cognitive diagnosis provided within the registry, participants were assigned to one of three groups: (1) NC, indicated by subjective cognitive complaint or no diagnosis of cognitive impairment, some of whom were self-reported; (2) MCI, representing both amnestic and nonamnestic subtypes; or (3) dementia, which included dementia due to AD, frontotemporal dementia, vascular dementia, Lewy body dementia, mixed dementia, or atypical AD.

A total of 5 participants were not recruited from the registry but via snowball sampling from other participants. These participants were recruited for convenience; each was typically a family member or friend who was also available at the time of testing. Of these 5 participants, 4 were placed into the NC group after their self-reports denied symptoms or a history of cognitive impairment; these 4 were not patients of the memory clinic. The remaining participant was a patient of the memory clinic who was not part of the registry and was placed in the dementia group based on their most recent diagnosis retrieved from their medical records. Testing for these 5 participants was administered on-site.

Study Design and Procedures

On-site Administration

Data for on-site administration were collected from October 2019 to February 2020. A session was held either in the participant’s home or in a well-lit, quiet, and distraction-free public setting. Consent forms were reviewed and signed by the participant or their legally authorized representative and an examiner, with both parties obtaining a copy. The study was designed for participants to complete one session with a moderator using a provided iPad (model MR7G3LL/A; Apple Inc) connected to Wi-Fi to complete the BrainCheck battery. Prior to testing, participants were briefed on BrainCheck, and moderator guidance was limited to questions and assistance requested by the participant during the practice portions. Participants received a gift card (US $20) for participation at the conclusion of the study session.

Remote Administration

Due to the COVID-19 pandemic and interest in preliminary data on remote cognitive testing, study procedures were modified to accommodate stay-at-home orders in Washington state. Data collection resumed from April to May 2020, with modified procedures using remote administration. These participants provided written and verbal consent and were administered the BrainCheck battery remotely over a video call with the moderator. Participants used their personal iPads or touchscreen computer browsers to complete the BrainCheck battery. The same method for on-site administration, as described above, was used for remote administration.

Measurements

A short description for each of the seven assessments comprising the BrainCheck battery (V4.0.0) is listed in Table S1 in Multimedia Appendix 1. More detailed descriptions may be found in a previous validation study [38]. After completion of the BrainCheck battery, the score for each assessment was calculated using assessment-specific measurements by the BrainCheck software (Table S1 in Multimedia Appendix 1). The BrainCheck Overall Score is a single, cumulative score for the BrainCheck battery that represents general cognitive functioning. This score was calculated by taking the average of all completed assessment scores. If an assessment timed out, a penalty was applied by setting that assessment score to zero. The normalized assessment scores and BrainCheck Overall Scores were corrected for participant age and device used (ie, iPad vs computer) using the mean and SD of the corresponding score from a normative database previously collected by BrainCheck [38,39]. The resulting score followed a standard normal distribution, where a lower score indicates lower assessment performance and cognitive functioning.
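As a rough illustration of the scoring described above, the following Python sketch averages the attempted assessment scores, counts timed-out assessments as zero, and standardizes the result against a normative mean and SD. The function names, the example scores, and the normative values are hypothetical, and counting the zero-scored assessments in the average is an assumption; the actual BrainCheck scoring and normative database are not reproduced here.

```python
from statistics import mean
from typing import Dict, Optional, Set


def overall_score(scores: Dict[str, Optional[float]], timed_out: Set[str]) -> float:
    """Average the assessment scores, counting timed-out assessments as zero."""
    values = []
    for name, score in scores.items():
        if name in timed_out:
            values.append(0.0)    # penalty: a timed-out assessment scores zero
        elif score is not None:
            values.append(score)  # completed assessment
        # assessments that were never attempted (None) are left out
    return mean(values)


def normalize(raw: float, norm_mean: float, norm_sd: float) -> float:
    """Standardize a raw score against the normative mean and SD
    for the matching age band and device (hypothetical inputs)."""
    return (raw - norm_mean) / norm_sd


# Hypothetical raw scores; "trails_a" timed out and "flanker" was not attempted.
example_scores = {"stroop": 60.0, "trails_a": 90.0, "digit_symbol": 75.0, "flanker": None}
raw = overall_score(example_scores, timed_out={"trails_a"})
z = normalize(raw, norm_mean=60.0, norm_sd=10.0)
```

Because the normalized score is a z-score, a value such as -1.5 would mean 1.5 SDs below the normative mean for that age band and device.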

Statistical Analysis

Statistical analyses were performed using Python (version 3.8.5; Python Software Foundation) and R (version 3.6.2; The R Foundation) programming languages. All tests were 2-sided, and significance was accepted at the 5% level (α=.05). Comparison of means of groups was made by an analysis of variance test for normally distributed data. The chi-square test was used to analyze differences in categorical variables.

To evaluate BrainCheck performance among participants in different diagnostic groups while adjusting for age, sex, and administration type, linear regression was used in which the outcome variables were duration to complete BrainCheck battery, individual BrainCheck assessment scores, and BrainCheck Overall Scores. P values were corrected using the Tukey method for multiple comparisons. To assess the accuracy of the BrainCheck Overall Score in the binary classification of participants in the different diagnostic groups (ie, dementia vs NC, MCI vs NC, and dementia vs MCI), receiver operating characteristic (ROC) curves with area under the curve (AUC) calculations were generated to determine diagnostic sensitivity and specificity. In these binary classifications, sensitivity (ie, true positive rate) and specificity (ie, true negative rate) are measured, with the more severe group as cases and the less severe group as controls. For example, the MCI group represents cases in the MCI versus NC classification, but it represents controls in the dementia versus MCI classification. In assessing BrainCheck for three-group classification, we used volume under the three-class ROC surface method from Luo and Xiong [41] to define optimal cutoffs for the BrainCheck Overall Score and find the maximum diagnostic accuracy.
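The binary classification metrics described above can be sketched in a few lines of Python. This is a minimal illustration, not the analysis code used in the study: the scores are invented, the cutoff is arbitrary, and treating scores at or below the cutoff as positive is an assumption. The AUC is computed as the proportion of case-control pairs in which the case has the lower score, which is equivalent to the area under the empirical ROC curve.

```python
from typing import List, Tuple


def sens_spec(cases: List[float], controls: List[float], cutoff: float) -> Tuple[float, float]:
    """Sensitivity and specificity when scores at or below the cutoff are
    called positive (a lower score indicates greater impairment)."""
    true_pos = sum(score <= cutoff for score in cases)
    true_neg = sum(score > cutoff for score in controls)
    return true_pos / len(cases), true_neg / len(controls)


def auc(cases: List[float], controls: List[float]) -> float:
    """Probability that a random case scores lower than a random control,
    with ties counted as one half."""
    wins = 0.0
    for case in cases:
        for control in controls:
            if case < control:
                wins += 1.0
            elif case == control:
                wins += 0.5
    return wins / (len(cases) * len(controls))


# Hypothetical normalized overall scores, not study data.
dementia_scores = [-6.1, -5.2, -4.8, -3.9, -1.0]  # cases (more severe group)
nc_scores = [-1.5, -0.2, 0.4, 0.9, 1.3]           # controls (less severe group)

sensitivity, specificity = sens_spec(dementia_scores, nc_scores, cutoff=-2.0)
area = auc(dementia_scores, nc_scores)
```

Sweeping the cutoff across all observed scores and plotting sensitivity against 1 minus specificity would trace out the ROC curve itself.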

Results

Participant Characteristics and Demographics

A total of 241 individuals were contacted to participate, and 99 participants completed the study. Demographic details of the participants are provided in Table 1. The three groups did not differ to a significant degree in terms of education, administration type, or recruitment type, but there were differences in age and sex.

Table 1.

Participant demographics.

| Demographics | Normal cognition (n=35) | Mild cognitive impairment (n=22) | Dementia (n=42) | P value |
| --- | --- | --- | --- | --- |
| Participants (N=99), n (%)a | 35 (35) | 22 (22) | 42 (42) | —b |
| Age (years), mean (SD) | 67.8 (9.6) | 73.5 (5.9) | 71.5 (9.0) | .04c |
| Sex, n (%) | | | | .005d |
| Female | 25 (71) | 8 (36) | 16 (38) | |
| Male | 10 (29) | 14 (64) | 26 (62) | |
| Education level, n (%) | | | | .70d |
| Some college or less | 2 (6) | 2 (9) | 8 (19) | |
| Bachelor of Arts or Bachelor of Science college graduate | 10 (29) | 6 (27) | 11 (26) | |
| Post–bachelor’s degree | 14 (40) | 9 (41) | 16 (38) | |
| N/Ae | 9 (26) | 5 (23) | 7 (17) | |
| Administration type, n (%) | | | | .37d |
| On-site | 29 (83) | 16 (73) | 29 (69) | |
| Remote | 6 (17) | 6 (27) | 13 (31) | |
| Recruitment type, n (%) | | | | .09d |
| Registry | 31 (89) | 22 (100) | 41 (98) | |
| Snowball | 4 (11) | 0 (0) | 1 (2) | |

aPercentages in this row were calculated based on the total sample number.

bNo statistical test was run.

cThis P value was calculated using the analysis of variance test.

dThis P value was calculated using the chi-square test.

eN/A: not applicable; a response was not given.
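As a worked check of the chi-square comparison for sex in Table 1, the sketch below computes the Pearson chi-square statistic from the reported counts in plain Python. It is an illustration rather than the authors' code. A 2×3 table has 2 degrees of freedom, and for 2 degrees of freedom the upper-tail probability of the chi-square distribution has the closed form exp(-x/2), so no statistics library is needed here.

```python
import math
from typing import List


def chi_square_statistic(table: List[List[int]]) -> float:
    """Pearson chi-square statistic for a contingency table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected
    return statistic


# Sex by diagnostic group from Table 1; columns are NC, MCI, dementia.
sex_counts = [
    [25, 8, 16],   # female
    [10, 14, 26],  # male
]

statistic = chi_square_statistic(sex_counts)
# (2 - 1) * (3 - 1) = 2 degrees of freedom, so the P value is exp(-x / 2).
p_value = math.exp(-statistic / 2)
```

The resulting P value is roughly .005, in line with the value reported for sex in Table 1.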

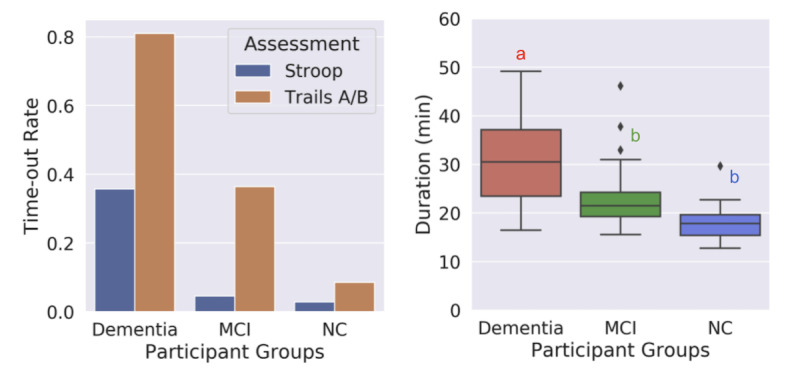

Completion of Assessments

We found that most participants in the NC group were able to complete the assessments, whereas the dementia group had a higher time-out rate, with the MCI group falling in between the two (Figure 1). The time-out function occurs when a participant cannot complete a trial of the assessment in 30 seconds; it is embedded in the assessments of the Stroop test and the Trail Making Test, Parts A and B (Trails A/B). Time-outs were mainly due to response delays, where participants were attempting the test but could not answer quickly enough. Overall, the dementia group took significantly more time to complete the BrainCheck battery (median 30.5, IQR 23.4-37.1 min) compared to the MCI group (median 21.5, IQR 19.3-24.2 min) and the NC group (median 17.8, IQR 15.4-19.6 min).

Figure 1.

Completion of assessments and durations to complete BrainCheck battery. A. Time-out rates of the Stroop test and Trails A/B assessments for each diagnostic group. The BrainCheck Stroop and Trails A/B assessments time out if participants cannot complete a trial of the assessment in 30 seconds. B. Duration (min) to complete the BrainCheck battery for each diagnostic group. Letters (a, b) indicate significant differences between groups (P<.05) in the linear regression model, with age, sex, and administration type as regressors; any two groups sharing a letter are not significantly different. MCI: mild cognitive impairment; NC: normal cognition; Trails A/B: Trail Making Test, Parts A and B.

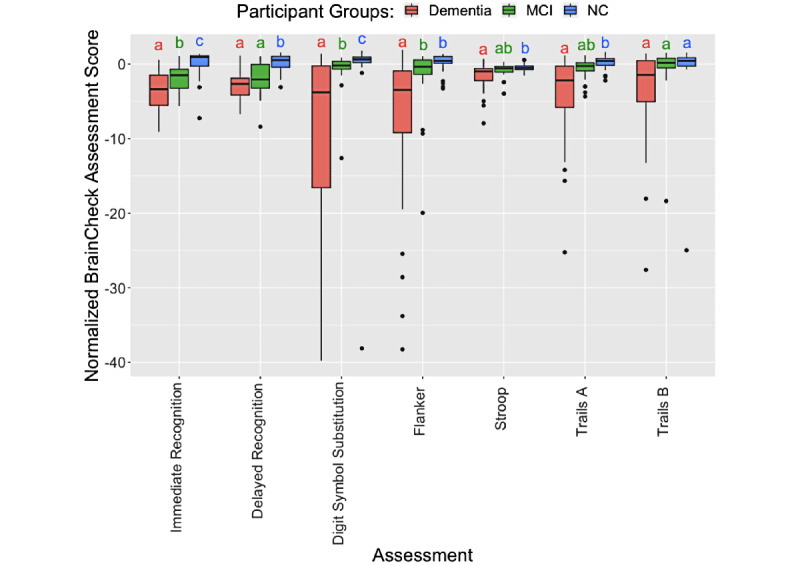

BrainCheck Performance

BrainCheck assessments were compared across the three groups using a linear regression model with age, sex, and administration type as regressors (Figure 2 and Table 2). Individual assessment scores, like the BrainCheck Overall Score, were normalized for age and device. Overall, participants with greater cognitive impairment showed lower BrainCheck assessment scores. All individual assessments except Trails B showed significant differences in performance between the NC and dementia groups, whereas two of the seven assessments (ie, Immediate Recognition and Digit Symbol Substitution) showed significant differences in performance between all three groups (Figure 2 and Table 2). Digit Symbol Substitution, Flanker, and Trails A/B assessments showed long tails in the scores of the dementia group because some participants in the dementia group only completed parts of the assessments or exhibited low accuracy (Figure S1 in Multimedia Appendix 1).

Figure 2.

Pairwise comparison of participant groups based on normalized scores of BrainCheck assessments. For each assessment, any two groups sharing a letter are not significantly different. Otherwise, they are significantly different (P<.05) in linear regression models, with age, sex, and administration type as regressors. The outliers identified by the IQR method in each assessment were removed before the comparison. MCI: mild cognitive impairment; NC: normal cognition; Trails A: Trail Making Test, Part A; Trails B: Trail Making Test, Part B.

Table 2.

Linear regression model analyses using each BrainCheck assessment score and the BrainCheck Overall Score as the outcome variable in separate models, with age, sex, and administration type as regressors.

| Assessment | NCa, estimated marginal mean (SE) | MCIb, estimated marginal mean (SE) | Dementia, estimated marginal mean (SE) | Dementia vs NC, contrast estimate (P value) | Dementia vs MCI, contrast estimate (P value) | MCI vs NC, contrast estimate (P value) |
| --- | --- | --- | --- | --- | --- | --- |
| Immediate Recognitionc | 0.17 (0.44) | –1.93 (0.50) | –3.36 (0.36) | –3.54 (<.001) | –1.43 (.04) | –2.10 (.005) |
| Delayed Recognition | 0.06 (0.34) | –2.16 (0.39) | –2.92 (0.28) | –2.98 (<.001) | –0.76 (.23) | –2.23 (<.001) |
| Digit Symbol Substitutionc | 0.80 (0.27) | –0.21 (0.29) | –1.23 (0.29) | –2.02 (<.001) | –1.01 (.04) | –1.01 (.03) |
| Flanker | 0.76 (0.45) | –0.74 (0.51) | –2.64 (0.41) | –3.4 (<.001) | –1.89 (.009) | –1.5 (.06) |
| Stroop test | –0.43 (0.12) | –0.63 (0.13) | –0.91 (0.12) | –0.49 (.01) | –0.28 (.23) | –0.21 (.46) |
| Trail Making Test, Part A | –0.01 (0.33) | –0.75 (0.36) | –1.69 (0.30) | –1.67 (<.001) | –0.94 (.11) | –0.74 (.29) |
| Trail Making Test, Part B | 0.51 (0.21) | 0.21 (0.23) | –0.16 (0.24) | –0.67 (.08) | –0.37 (.47) | –0.30 (.57) |
| Normalized BrainCheck Overall Scorec | 0.71 (0.55) | –2.15 (0.62) | –5.63 (0.45) | –6.34 (<.001) | –3.48 (<.001) | –2.86 (.002) |

aNC: normal cognition.

bMCI: mild cognitive impairment.

cThese assessments indicate significant differences across all three diagnostic groups.
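The contrast estimates in Table 2 appear to be pairwise differences between the estimated marginal means of the groups. The short Python check below, offered as a reader's sanity check rather than part of the original analysis, recomputes each difference from the rounded means copied from Table 2 and records the largest gap from the reported contrast. Small gaps are expected because the published values are rounded to two decimals.

```python
# Estimated marginal means (NC, MCI, dementia) and reported contrasts
# (dementia vs NC, dementia vs MCI, MCI vs NC), copied from Table 2.
table2 = {
    "Immediate Recognition": ((0.17, -1.93, -3.36), (-3.54, -1.43, -2.10)),
    "Delayed Recognition": ((0.06, -2.16, -2.92), (-2.98, -0.76, -2.23)),
    "Digit Symbol Substitution": ((0.80, -0.21, -1.23), (-2.02, -1.01, -1.01)),
    "Flanker": ((0.76, -0.74, -2.64), (-3.40, -1.89, -1.50)),
    "Stroop test": ((-0.43, -0.63, -0.91), (-0.49, -0.28, -0.21)),
    "Trail Making Test, Part A": ((-0.01, -0.75, -1.69), (-1.67, -0.94, -0.74)),
    "Trail Making Test, Part B": ((0.51, 0.21, -0.16), (-0.67, -0.37, -0.30)),
    "Normalized BrainCheck Overall Score": ((0.71, -2.15, -5.63), (-6.34, -3.48, -2.86)),
}


def max_contrast_gap(rows: dict) -> float:
    """Largest absolute gap between a recomputed difference of means
    and the contrast reported in the table."""
    gap = 0.0
    for (nc, mci, dementia), reported in rows.values():
        recomputed = (dementia - nc, dementia - mci, mci - nc)
        for mine, theirs in zip(recomputed, reported):
            gap = max(gap, abs(mine - theirs))
    return gap


largest_gap = max_contrast_gap(table2)
```

A largest gap on the order of 0.01 is consistent with rounding alone.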

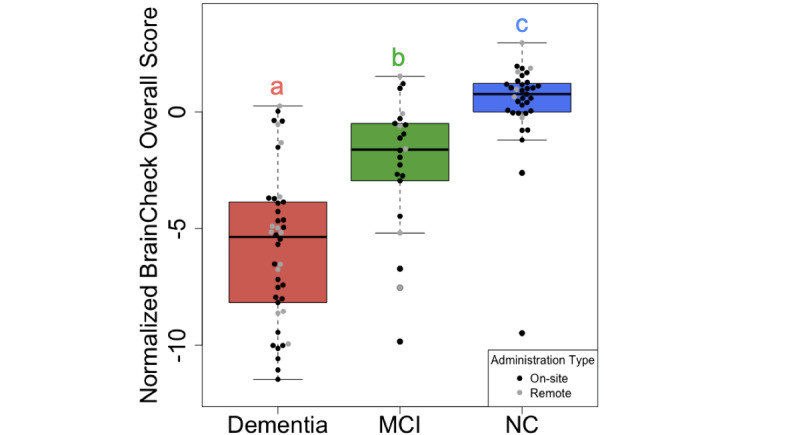

The BrainCheck Overall Score is a composite of all individual assessments within the BrainCheck battery, representing overall performance (see details in the Measurements section). Using an existing normative population database, partly compiled from controls in previous studies [38,39], the BrainCheck Overall Score was adjusted for age and the device used to generate the normalized BrainCheck Overall Scores. The normalized BrainCheck Overall Scores differed significantly among these three groups (P<.001). Pairwise comparisons with Tukey adjustments for multiple comparisons show that the NC group scored significantly higher than the MCI group (P=.002) and the dementia group (P<.001), and the MCI group scored significantly higher than the dementia group (P<.001; Figure 3).

Figure 3.

Comparison of normalized BrainCheck Overall Scores among groups. The normalized BrainCheck Overall Score follows a standard normal distribution. Letters (a, b, c) indicate significant differences (P<.05) on the linear regression model, with age, sex, and administration type as regressors. MCI: mild cognitive impairment; NC: normal cognition.

BrainCheck Diagnostic Accuracy

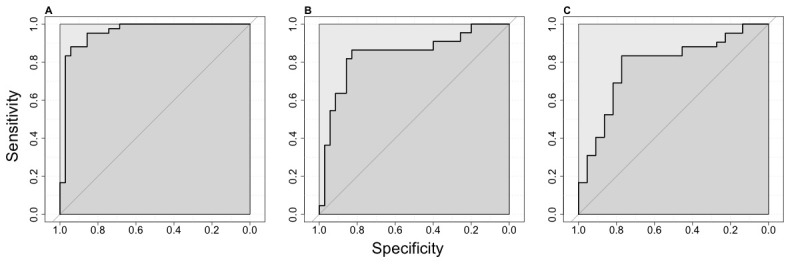

Using ROC analysis, BrainCheck Overall Scores achieved a sensitivity of 88% and a specificity of 94% for classifying between dementia and NC participants (AUC=0.95), a sensitivity of 86% and a specificity of 83% for classifying between MCI and NC participants (AUC=0.84), and a sensitivity of 83% and a specificity of 77% for classifying between dementia and MCI participants (AUC=0.79; Figure 4).

Figure 4.

ROC curves for the BrainCheck Overall Score in classifying participants of different groups. ROC curves with AUCs for the BrainCheck Overall Score in the binary classification of (A) dementia vs NC, (B) MCI vs NC, and (C) dementia vs MCI. In these binary classifications, sensitivity (ie, true positive rate) and specificity (ie, true negative rate) are measured with the more severe group as cases and the less severe group as controls. AUC: area under the curve; MCI: mild cognitive impairment; NC: normal cognition; ROC: receiver operating characteristic.

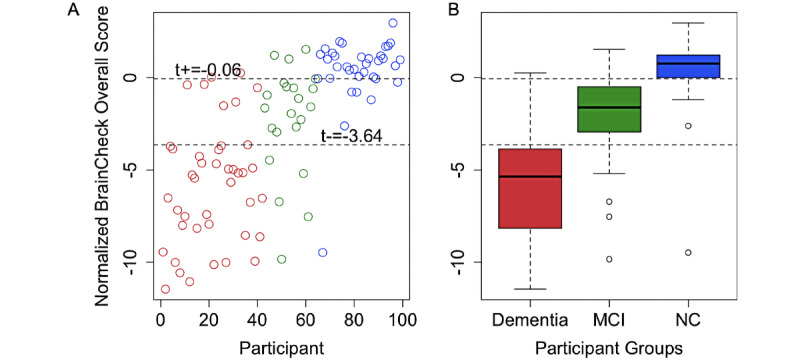

Using methods described by Luo and Xiong for three-group classification [41], the optimal lower and upper cutoffs of the normalized BrainCheck Overall Score in maximizing diagnostic accuracy were –3.64 and –0.06, respectively. This achieved true positive rates of 80% for the NC group, 64% for the MCI group, and 81% for the dementia group (Figure 5).
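To make the two-cutoff rule concrete, the sketch below applies the reported cutoffs (–3.64 and –0.06) to normalized BrainCheck Overall Scores and computes a true positive rate per group. The example scores are invented for illustration, and the handling of scores exactly equal to a cutoff is an assumption; this is not the Luo and Xiong optimization procedure itself, only the classification step that follows it.

```python
from typing import Dict, List

LOWER_CUTOFF = -3.64  # reported optimal lower cutoff
UPPER_CUTOFF = -0.06  # reported optimal upper cutoff


def classify(score: float) -> str:
    """Assign a group from a normalized overall score (lower = more impaired).
    Treating a score equal to a cutoff as the less severe group is an assumption."""
    if score < LOWER_CUTOFF:
        return "dementia"
    if score < UPPER_CUTOFF:
        return "MCI"
    return "NC"


def true_positive_rates(scores_by_group: Dict[str, List[float]]) -> Dict[str, float]:
    """Fraction of each diagnostic group that the cutoffs classify correctly."""
    return {
        group: sum(classify(s) == group for s in scores) / len(scores)
        for group, scores in scores_by_group.items()
    }


# Hypothetical scores, not study data.
example = {
    "NC": [0.9, 0.3, -0.5, 1.2],
    "MCI": [-2.0, -0.01, -4.0, -1.1],
    "dementia": [-5.5, -6.0, -3.0, -4.2],
}
rates = true_positive_rates(example)
```

In the study, the same kind of per-group rate is what yields the 80%, 64%, and 81% figures for the NC, MCI, and dementia groups.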

Figure 5.

Optimal BrainCheck cutoff scores for distinguishing NC, MCI, and dementia groups. A. Individual participant normalized BrainCheck Overall Scores, where the x-axis is the index of the participant, sorted by primary diagnosis (dementia: red, MCI: green, and NC: blue). The values t+ and t-, respectively, represent the optimal upper and lower cutoffs of the normalized BrainCheck Overall Score in maximizing diagnostic accuracy. B. Box plots of normalized BrainCheck Overall Scores for each diagnostic group. The normalized BrainCheck Overall Score follows a standard normal distribution. The dashed lines label the optimal cutoff scores for distinguishing the diagnostic groups. MCI: mild cognitive impairment; NC: normal cognition.

Discussion

Principal Findings

Consistent with prior findings in concussion [38] and in dementia and cognitive decline [39] samples, this study demonstrated that BrainCheck is consistent in its capability to detect cognitive impairment and can reliably detect severity and differentiate between cognitive impairment groups (ie, NC, MCI, and dementia). As expected, participants with more severe cognitive impairment performed worse across the individual assessments and on BrainCheck Overall Scores. The BrainCheck Overall Scores separated participants of different diagnostic groups successfully with high sensitivity and specificity.

BrainCheck Overall Scores were more robust in distinguishing between these groups where participants in the dementia group had significantly lower scores than those in the NC group. The BrainCheck battery was able to distinguish between NC and dementia participants, with 88% sensitivity and 94% specificity. These findings show that the BrainCheck Overall Score demonstrates better accuracy for differentiating NC from dementia, compared to the MMSE, SLUMS, and MoCA screening measures [22,41,42]. People with MCI usually experience fewer cognitive deficits and preserved functioning in activities of daily living compared to those with dementia [43], and the sensitivity and specificity we found for separating MCI from the other groups were slightly lower than those for the NC versus dementia differentiation (Figure 4). Nonetheless, the BrainCheck Overall Score showed sensitivities and specificities of 77% or higher in distinguishing MCI from NC and dementia groups, which is comparable to the MoCA, SLUMS, and MMSE [18-22,42]. Furthermore, a review of validated computerized cognitive tests indicated AUCs ranging from 0.803 to 0.970 for detecting MCI, and AUCs of 0.98 and 0.99 in detecting dementia due to AD [44], which were comparable with the results found in this study.

Although not all individual assessments in the BrainCheck battery differentiated between NC, MCI, and dementia, we observed a general trend for each assessment showing that dementia participants had the lowest scores, whereas the NC participants had the highest scores. Individual assessments that did show significant differences in the scores between NC and MCI groups and between dementia and MCI groups included Immediate Recognition and Digit Symbol Substitution. Notably, Digit Symbol Substitution showed significant differences in performance between all three diagnostic groups, whereas a previous study found that Digit Symbol Substitution did not show significant differences between cognitively healthy and cognitively impaired groups (n=18, P=.29) [39], likely due to this study having a larger sample size. Individual assessments with no significant differences between the MCI group and the NC and dementia groups were the Stroop and Trails A/B tests (Figure 2 and Table 2). All of these tests include time-out mechanisms if participants are unable to complete the test, and time-out rates were higher in the more cognitively impaired groups (Figure 1). Therefore, when calculating the BrainCheck Overall Score, we introduced a penalty mechanism for timed-out assessments.

In comparison to comprehensive NPTs, which can typically last a few hours and sometimes require multiple visits [43], BrainCheck demonstrated shorter test duration, with median completion times of 17.8 (IQR 15.4-19.6) minutes for NC participants and 30.5 (IQR 23.4-37.1) minutes for dementia participants (Figure 1). Shorter test durations observed in individuals with no or less cognitive impairment suggest that computerized cognitive tests could be useful for rapid early detection in this population, prompting further evaluation, whereas those with dementia have likely already undergone a comprehensive evaluation. The wide variance in completion time for the dementia group, compared to the lower variance observed in the NC group, may reflect the difficulty that participants with more severe cognitive impairment faced in completing the BrainCheck battery.

A limitation of this study was that participants were not diagnosed by a physician at the time point of BrainCheck testing. Thus, participants were placed into the diagnostic groups based on their most recent clinical diagnosis available in their electronic health record, or for the few NC participants without medical evaluations, based on their report of no cognitive symptoms or diagnosis of cognitive impairment. The period from the most recent clinical diagnosis to the date of BrainCheck testing varied among the diagnostic groups; the dementia group had the fewest days from their latest clinical evaluation (median 82.5, IQR 44.5-141.25 days), followed by the MCI group (median 244, IQR 105-346.5 days) and the NC group (median 645, IQR 225.5-1112.5 days). These large time intervals in a degenerative population leave room for cognition to worsen over time, potentially blurring the lines in the severity of cognitive impairment, where participants may have progressed to MCI from NC and to dementia from MCI during that period. This would make distinguishing NC from cognitive impairment more difficult, yet diagnostic accuracy among the groups remains high. Furthermore, the median number of days since the last clinical evaluation for NC participants was as high as 645 days. This could suggest that the NC participants did not feel an inclination to seek out further cognitive evaluation during the extended time period, and may not have experienced noticeable cognitive decline. Future validity studies should ensure that a physician evaluation and diagnosis occur closer to the time of BrainCheck testing to address these limitations.

Another limitation was that although not all individual assessment scores could differentiate the three groups, the pattern of differences across these scores may contain useful diagnostic information. The use of the BrainCheck Overall Score as an average of all individual assessment scores appears to work effectively, but does not take into account the other relationships seen across individual scores. Furthermore, some individual scores may be more informative for detecting cases, whereas others may be informative for gauging severity. Future studies recruiting a larger sample size in each group will allow for an investigation into whether machine learning methods can extract these relationships and improve the diagnostic accuracy of BrainCheck.

When administration type was included as a regressor in the linear regression models, scores differed significantly among the three diagnostic groups but not between administration types. While remote administration was not designed into the original study, stay-at-home orders due to COVID-19 required modifications, and efforts were made to provide preliminary data for remote use. With preliminary outcomes indicating feasibility for remote administration, a more robust study and increased sample size will be needed to fully validate BrainCheck’s cognitive assessment via its remote feature.

Conclusions

The use of computerized cognitive tests provides the opportunity to increase test accessibility for an aging population with an increased risk of cognitive impairment. The findings in this study demonstrate that BrainCheck could distinguish between three levels of cognitive impairment: NC, MCI, and dementia. BrainCheck is automated and quick to administer, both in person and remotely, which could help increase accessibility to testing and early detection of cognitive decline in an ever-aging population. This study paves the way for a comprehensive longitudinal study, exploring BrainCheck in early detection of dementia and monitoring of cognitive symptoms over time, including further comparison to gold-standard neuropsychological assessments.

Acknowledgments

Funding was provided by BrainCheck, Inc. The registry was provided by a National Institutes of Health–funded research resource center associated with the UW Memory and Brain Wellness Center and the UW Alzheimer’s Disease Research Center. We would like to thank Carolyn M Parsey, a neuropsychologist at the UW Memory and Brain Wellness Center at Harborview and a UW assistant professor of neurology, for advising throughout the entirety of this study.

Abbreviations

- AD: Alzheimer disease

- AUC: area under the curve

- IRB: Institutional Review Board

- MCI: mild cognitive impairment

- MMSE: Mini-Mental State Examination

- MoCA: Montreal Cognitive Assessment

- NC: normal cognition

- NPT: neuropsychological test

- ROC: receiver operating characteristic

- SLUMS: Saint Louis University Mental Status

- Trails A/B: Trail Making Test, Parts A and B

- UW: University of Washington

Supplementary information.

Footnotes

Authors' Contributions: BH and RHG were responsible for the conceptualization of the study and provided study supervision. SY, KS, and BH were responsible for the formal analysis. RHG was responsible for funding acquisition. KS, HQP, and BK were responsible for study investigation. SY, BH, and RHG were responsible for the study methodology. KS and RHG were responsible for securing study resources. SY, KS, HQP, BK, and BH wrote the original draft of the manuscript. All authors reviewed and edited the manuscript.

Conflicts of Interest: The principal investigator, RHG, serves as Chief Medical Officer of BrainCheck. All authors report personal fees from BrainCheck, outside of the submitted work, and KS, DH, BH, and RHG report receiving stock options from BrainCheck. The study was funded by BrainCheck.

