Abstract

Time is a fundamental dimension of our perception of the world and is therefore of critical importance to the organization of human behavior. A corpus of work — including recent optogenetic evidence — implicates striatal dopamine as a crucial factor influencing the perception of time. Another stream of literature implicates dopamine in reward and motivation processes. However, these two domains of research have remained largely separated, despite neurobiological overlap and the apothegmatic notion that “time flies when you’re having fun”. This article constitutes a review of the literature linking time perception and reward, including neurobiological and behavioral studies. Together, these provide compelling support for the idea that time perception and reward processing interact via a common dopaminergic mechanism.

Keywords: Time perception, Timing, Reward, Motivation, Dopamine

1. Introduction

All behaviors unfold over time, and as a result, time is an implicit dimension of all behaviors whether they relate to movement, identification, feeding, communication, co-operation, or competition. Ongoing research has identified that the neurotransmitter dopamine, conventionally associated with reward and motivation, is critically involved in human time perception. Given this neurobiological overlap, there is reason to believe that time perception and reward processing may share common information processing pathways. However, the subfields that study time perception, reward, and motivation have remained largely independent.

Conjecture regarding the relationship between reward and timing systems in the brain has focused on the idea of an internal clock modulated by the reward-dependent release of dopamine. The studies covered in this review build a compelling narrative for such an interaction, but present the need for a more nuanced account. We present an open question regarding the direction of modulation, asking whether dopamine and reward affect the internal clock, or if the internal clock informs dopaminergic signals of reward expectation that then guide timing behavior. While an internal clock would not inherently need reward-related information to function, the reward system must rely on an internal timing mechanism to produce temporally informative signals about when to expect reward. Evolving a system to learn the temporal landscape of rewards would require the use of an internal clock, which may have constituted the evolutionary pressure for timing and reward processing centers to evolve as interconnected systems (Namboodiri et al., 2014a, c). Therefore, studying these systems in conjunction may lead to new insights into the neural forms of reward and time.

To examine the relationship between time perception and reward in the brain, we will review (1) some proposed mechanisms of timing and their potential neural substrates, (2) the role of dopamine in reward and motivation, and (3) evidence supporting the interaction of dopamine and reports of time perception.

2. The internal clock

In physics, time is a singular, fundamental quantity, inexorably bound to the second law of thermodynamics. Psychological time, on the other hand, is both relativistic and multifaceted. It is well known that the perceptual experience of duration can be distorted by external factors, such as music and emotion (Droit-Volet and Meck, 2007). Phenomena such as these have long been recognized, and thus models describing how we perceive time have a substantial history.

While the passage of time is experienced on many scales, from milliseconds to hours to decades (Paton and Buonomano, 2018), we focus here on the sub-second to minutes range. A system capable of measuring time has, presumably, a few necessary components. First, it requires a progression through time in some stereotypical way. Second, a timing system needs a means to store states corresponding to temporal intervals in memory. Lastly, the system requires a means to compare the current state of the clock with such a memory. An analog clock tracks time with the physical movement of its hands, allowing one to remember their relative positions so as to tell how much time has passed with a high degree of precision and accuracy. A neural clock must similarly possess temporal predictability, though widely observed and curious deviations in precision and accuracy are well-documented (Namboodiri et al., 2014a). With regard to precision, humans and animals commonly exhibit errors in time perception that scale in direct proportion to the interval being timed (Gibbon, 1991), an expression of Weber’s law in the time domain termed the “scalar timing property”. With regard to accuracy, when asked to categorize an interval as nearer a shorter or longer standard, humans and animals report the temporal bisection point (the subjectively equivalent interval) as lying, varyingly, across a span of values ranging from the harmonic mean through the geometric mean to the arithmetic mean of the standards (Killeen and Fetterman, 1983; Kopec and Brody, 2010; Wearden, 1991). These behavioral observations regarding temporal precision and accuracy provide clues to understanding how and why time is represented in the brain as it is.
Indeed, the merit of any theory of timing and time perception rests on its ability to account for the accuracy and precision of temporal behaviors observed, to deduce the neural form that time’s representation takes, and to provide the purpose rationalizing why it takes the form that it does.
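
The two behavioral regularities described above can be stated compactly. The notation is introduced here only for illustration and is not taken from the cited studies: $\sigma_T$ is the standard deviation of timed responses to an interval $T$, $w$ is the Weber fraction, and $S$ and $L$ are the short and long standards in a bisection task.

```latex
% Scalar timing property: variability grows in proportion to the timed interval
\frac{\sigma_T}{T} = w \quad \text{(approximately constant across } T\text{)}

% Range of reported bisection points between standards S < L
\underbrace{\frac{2SL}{S+L}}_{\text{harmonic mean}}
\;\le\;
\underbrace{\sqrt{SL}}_{\text{geometric mean}}
\;\le\;
\underbrace{\frac{S+L}{2}}_{\text{arithmetic mean}}
```

For example, with standards of 2 s and 8 s the three candidate bisection points are 3.2 s, 4 s, and 5 s, respectively.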

2.1. Theoretical models of timing

Theories of timing and time perception must make a reckoning of time perception’s form, accounting for its well-characterized imprecision and inaccuracy. The most prominent class of timing models is the pacemaker-accumulator model, in which a pacemaker emits counts over time and a reference memory system accumulates and stores those counts (Treisman, 1963). An advantage of this class of model is its ability to describe directional inaccuracies in time perception consistently in terms of the number of pulses accumulated. To see how this is helpful, imagine an individual whose internal clock has been artificially slowed. When asked how long a 60 s stimulus lasts, this individual may report 50 s, an underestimation, as too few pulses were accumulated over 60 s. However, when asked to produce a 60 s interval by holding down a button, this individual would press for 72 s, an overproduction, as it now takes longer to accumulate 60 s worth of pulses. These terms —underestimation and overproduction— indicate the same bias in terms of psychological time, although they manifest as negative and positive errors when dealing with estimation and production paradigms, respectively. The opposite bias in psychological time is also possible: a “faster” psychological clock would result in overestimation and underproduction, in each respective task.
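
The arithmetic of the slowed-clock example can be made explicit in a few lines. This is a minimal sketch, assuming that the clock's speed acts as a simple multiplicative factor on the pulse count; the function names and the `clock_speed` parameter are hypothetical and are not part of any of the cited models.

```python
def estimate(real_duration_s, clock_speed):
    """Verbal estimate of an elapsed interval.

    Pulses accumulate at `clock_speed` times the nominal rate and are read
    out as if the clock were running at its nominal rate.
    """
    return real_duration_s * clock_speed


def produce(target_duration_s, clock_speed):
    """Real time needed to accumulate the target's worth of pulses."""
    return target_duration_s / clock_speed


slowed = 50 / 60  # assumed: the clock runs at 5/6 of its nominal speed

print(round(estimate(60, slowed), 1))  # underestimation of a 60 s stimulus
print(round(produce(60, slowed), 1))   # overproduction of a 60 s target
```

With a clock speed of 5/6, a 60 s stimulus is estimated at about 50 s and a 60 s target is produced as about 72 s, matching the figures in the text. A clock speed above 1 reverses both errors.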

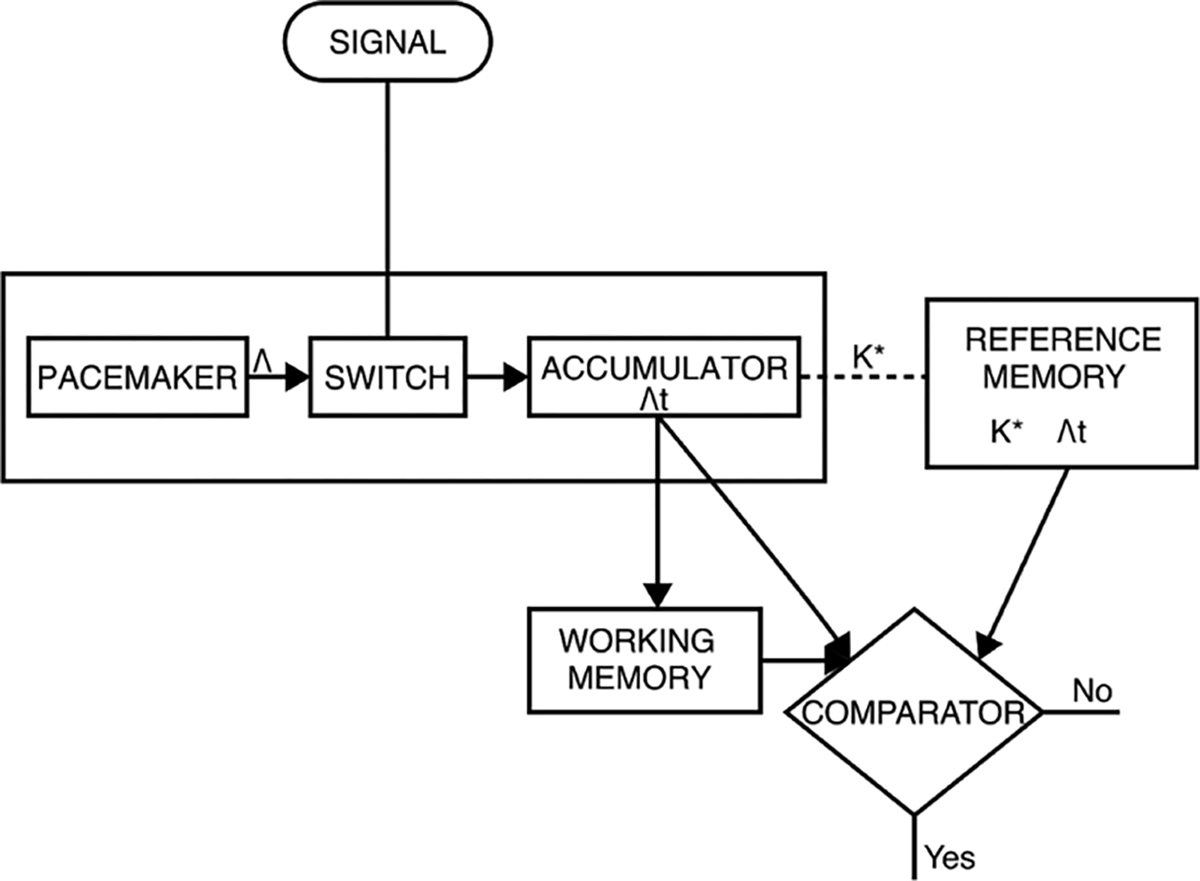

There are many different versions of the basic pacemaker-accumulator conception (Gibbon, 1977; Gibbon et al., 1984; Killeen and Fetterman, 1988; Matell and Meck, 2000; Namboodiri et al., 2015; Simen et al., 2013, 2011; Treisman, 1963). Accounting in part for their success, pacemaker-accumulator models of timing demonstrate how Weber’s law in time can be abided through the accumulation of Poisson processes, though they make varying predictions and assertions about the neural form of time’s representation and how it is read out (Balci et al., 2011; Gibbon, 1977; Gibbon et al., 1984; Killeen and Fetterman, 1988; Matell and Meck, 2000; Simen et al., 2013, 2011; Treisman, 1966, 1963). The canonical and perhaps most popular of these models is scalar expectancy theory (SET, (Gibbon, 1977)). SET extends the simple pacemaker-accumulator idea to include additional psychological modules: a switch, a fallible memory system, and a comparator (Fig. 1, (Church, 1984)). To process temporal information, the switch controls whether the pacemaker is currently in an ‘off’ or ‘on’ state. When the pacemaker is ‘on’, it generates counts (or pulses) as a Poisson process, which are stored in the accumulator. This count can be transferred to a reference memory which provides the basis for relative comparisons of duration against the current count in working memory. Finally, a comparison process identifies whether the count in working memory matches that in the reference memory. By constructing a linear representation of time from the accumulation of a Poisson process and employing a ratio decision rule, SET can exhibit the temporal scalar property. SET therefore also predicts that the point of subjective equality in temporal bisection should be the geometric mean, as often observed experimentally (Church and Deluty, 1977).
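
A short derivation shows why a ratio rule applied to a linear representation of time places the bisection point at the geometric mean. It is a sketch under one common reading of the ratio rule, in which a probe of duration $t$ is classified according to which standard it is proportionally closer to. The symbols $t$, $S$, and $L$ are notation assumed here.

```latex
% The probe is equally similar to both standards when its ratio to the
% short standard equals the ratio of the long standard to the probe:
\frac{t}{S} = \frac{L}{t}
\;\Longrightarrow\;
t^{2} = SL
\;\Longrightarrow\;
t = \sqrt{SL}
```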

Fig. 1.

The psychological modules in scalar expectancy theory. Multiplicative factors such as the pacemaker rate (Λ) and memory constant (K*) can affect the pacemaker pulse count and result in scalar timing. Adapted from Church (1984).

Another highly influential model of time is the Behavioral Theory of Timing (BeT). This model is similarly based on a pacemaker-accumulator process, but assumes that agents do not have direct access to an internal pacemaker and instead indirectly measure time via changes in adjunctive behavioral states (behaviors that are not directly related to the task, e.g. a change from the state of ‘preening’ to the state of ‘yawning’) (Killeen and Fetterman, 1988). When the interval to be timed is the delay to reinforcement, BeT assumes that the pacemaker rate is proportional to the rate of reinforcement (Bizo and White, 1994), resulting in time’s neural representation being dependent on the interval to be timed (in contrast to SET, where the rate of accumulation is independent of the interval to be timed). BeT yields the scalar property by setting the rate of accumulation to a constant threshold and applying a subtractive decision rule. Mathematically mimicking BeT, Simen et al. (Simen et al., 2013) present a more biologically rooted model of timing (“Time-adaptive, opponent drift diffusion model”; TopDDM). In TopDDM, two opponent Poisson processes linearly sum, such that the rate of accumulation to a constant threshold is tuned to produce the interval to be timed. Importantly, this model not only exhibits the scalar timing property but also produces distributions whose skewness accords well with that widely reported experimentally (Balci and Simen, 2016). BeT and TopDDM, therefore, account for the scalar property of timing while directly connecting reward rate to time’s representation. As will be demonstrated in later sections, the idea that reward rate relates to timing behavior is of critical relevance to this review; it is therefore useful to note that this relationship between reward and time perception has precedent in early psychological models and their modern implementations.
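
How tuning the rate of accumulation to a constant threshold can yield the scalar property is easiest to see in simulation. The sketch below is a simplified illustration and not an implementation of BeT or TopDDM: it assumes a single Poisson pacemaker whose rate is set so that a fixed number of pulses spans the target interval, and the threshold of 25 pulses, the trial count, and the function names are all hypothetical choices.

```python
import random
import statistics


def time_to_threshold(target_interval_s, threshold_pulses, rng):
    """Real time taken by a Poisson pacemaker to emit `threshold_pulses` pulses.

    The rate is tuned to the target so that the threshold is reached, on
    average, exactly at the target interval.
    """
    rate = threshold_pulses / target_interval_s  # pulses per second
    # The waiting time for the N-th event of a Poisson process follows a gamma
    # distribution with shape N and scale 1 / rate.
    return rng.gammavariate(threshold_pulses, 1.0 / rate)


def coefficient_of_variation(target_interval_s, threshold_pulses=25,
                             n_trials=20000, seed=0):
    """Standard deviation of produced times divided by their mean."""
    rng = random.Random(seed)
    times = [time_to_threshold(target_interval_s, threshold_pulses, rng)
             for _ in range(n_trials)]
    return statistics.stdev(times) / statistics.mean(times)


for interval in (2.0, 20.0):
    print(interval, round(coefficient_of_variation(interval), 2))
```

Because the threshold is fixed, the coefficient of variation stays near 1/sqrt(threshold) whatever interval is timed, so the spread of timed responses grows in proportion to the interval. With a fixed pacemaker rate and a threshold that grows with the interval, the coefficient of variation would instead shrink as intervals lengthen.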

While the above models of timing explain how fundamental features of timing could arise, they do not explain why time takes its apparent neural form, i.e. what purpose it may serve. Previously, we have proposed a theory of decision making that is based on the normative principle of reward rate maximization, called TIMERR (Training-integrated Maximized Estimation of Reward Rate) that rationalizes observations in decision making and time perception (Namboodiri et al., 2014b, c). To determine whether a pursuit is worth its cost in time, in TIMERR the agent “looks back” into its recent past to determine the rate of experienced reward, choosing pursuits that maximize the total reward rate over this look-back time and the time of the pursuit. TIMERR derives the form that the neural representation of time takes by postulating that time is represented so that the ratio of the valuation of the pursuit to the neural representation of its delay equals the resulting change in reward rate. Time’s neural representation under TIMERR, then, is not a linear function of objective time, as in the above models, but rather is a non-linear, concave function, whose degree of non-linearity is controlled by the look-back time. An implementation-level model of TIMERR (Namboodiri et al., 2015) that also uses opponent diffusion processes, as in TopDDM, can also produce temporal distributions with the appropriate skewness that conform to Weber’s law in time perception. In addition, TIMERR rationalizes why reports of the temporal bisection point range from the harmonic to the arithmetic mean: as the look-back time goes from being diminishingly small to increasingly large, the degree of non-linearity in the neural representation of time goes from its maximal to its minimal value, resulting in the temporal bisection point sliding from the harmonic to the arithmetic mean.
Therefore, how, as well as why, time takes its neural form is explained by TIMERR as being in the service of reward rate maximization.
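
The sliding bisection point can be illustrated numerically. The sketch below assumes a specific concave representation, `t / (1 + t / lookback)`, which is not given in this text and is used here only as a stand-in for a representation whose non-linearity is controlled by the look-back time. It also assumes that bisection occurs at the midpoint between the two standards in subjective time. The function names and the 2 s and 8 s standards are hypothetical.

```python
def subjective_time(t, lookback):
    """Assumed concave representation; nearly linear when lookback >> t."""
    return t / (1.0 + t / lookback)


def objective_time(s, lookback):
    """Inverse of `subjective_time`."""
    return s / (1.0 - s / lookback)


def bisection_point(short_s, long_s, lookback):
    """Objective interval whose representation lies midway between the standards."""
    midpoint = 0.5 * (subjective_time(short_s, lookback)
                      + subjective_time(long_s, lookback))
    return objective_time(midpoint, lookback)


short_s, long_s = 2.0, 8.0
harmonic = 2 * short_s * long_s / (short_s + long_s)
arithmetic = (short_s + long_s) / 2

print("harmonic mean:", harmonic, "arithmetic mean:", arithmetic)
for lookback in (1e-3, 4.0, 1e6):
    print(lookback, round(bisection_point(short_s, long_s, lookback), 3))
```

Under these assumptions, a very short look-back time places the bisection point near the harmonic mean, a very long one places it near the arithmetic mean, and intermediate values fall in between.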

An implementation-level model of timing of particular importance to this review is that of Murray and Escola (Murray and Escola, 2017), who provide a formal framework for understanding sequential activation patterns and properties observed in the striatum of rodents during timing tasks (Akhlaghpour et al., 2016; Bakhurin et al., 2017; Gouvea et al., 2015; Mello et al., 2015; Zhou et al., 2020). In Murray and Escola’s model, a recurrent network based on the inhibitory connections learns to produce sequential patterns. Importantly, in their network, excitatory inputs (as if from cortex) provide the means to flexibly rescale clock speed, though the authors also note how dopamine could change sequence speed in their model. Indeed, both sequential activation during interval timing and the ability to rescale speed have been observed in striatal neurons (Mello et al., 2015).

2.2. Timing in the Basal Ganglia

Numerous brain regions are associated with timing and time perception, including anterior and posterior cingulate cortex, anterior insular cortex, prefrontal cortex and dorsolateral prefrontal cortex, inferior frontal gyrus, posterior and inferior parietal cortex, superior temporal gyrus, the supplementary motor area, cerebellum, hippocampus, and even primary sensory areas (Shuler, 2016; for meta-analyses see (Lewis and Miall, 2003; Macar et al., 2002; Meck et al., 2008; Wiener et al., 2010)). However, it is often unclear to which aspects of timing different brain areas relate; i.e. whether the responsive regions are involved in the encoding of a time interval (i.e. memory processes), the reproduction of an interval (i.e. motor processes), or decision-making processes. We have restricted this review to focus on a brain region at the intersection of timing, reward, and dopamine: the basal ganglia.

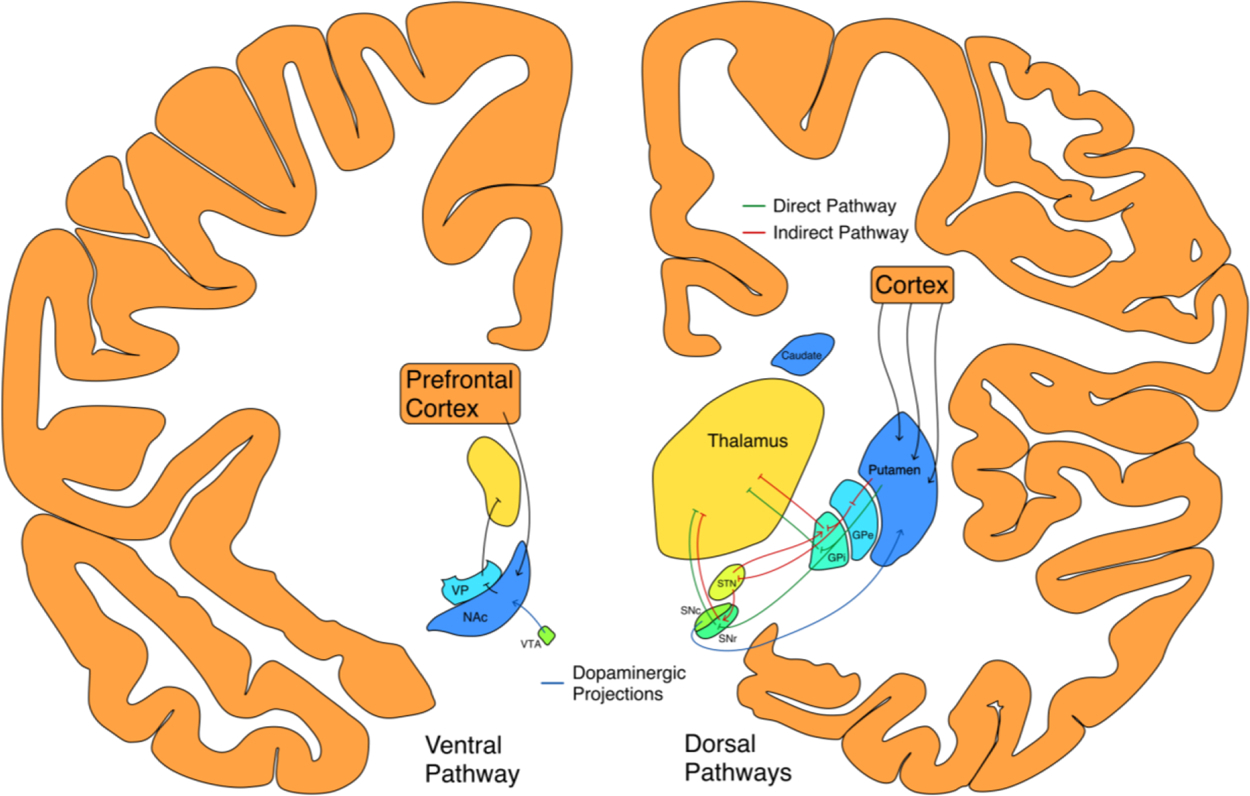

The basal ganglia have been implicated in timing both by brain measures of the precision and accuracy of timing judgments and by the altered timing behavior seen in diseases of the basal ganglia. Anatomically, the basal ganglia are a collection of nuclei interconnected primarily by inhibitory projections. The bulk of excitatory inputs from cortex to the basal ganglia arrive in the striatum, which in humans is divided into the caudate, putamen, and nucleus accumbens. The caudate and putamen make up the dorsal striatum and feed into the direct and indirect pathways, sending signals through the globus pallidus, subthalamic nucleus, and substantia nigra pars reticulata before they are output to the thalamus (see Fig. 2). The ventral region of the striatum is the nucleus accumbens, which projects to the ventral pallidum, an output nucleus. The substantia nigra pars compacta contains dopamine neurons projecting to the dorsal striatum, while the ventral tegmental area contains those projecting to the nucleus accumbens (Lanciego et al., 2012).

Fig. 2.

The direct (green) and indirect (red) pathways of the basal ganglia, with dopamine projections in blue. NAc, nucleus accumbens; GPe, globus pallidus external segment; GPi, globus pallidus internal segment; VP, ventral pallidum; STN, subthalamic nucleus; SNc, substantia nigra pars compacta; SNr, substantia nigra pars reticulata; VTA, ventral tegmental area. Off-plane nuclei depicted in their approximate ML DV position. The Allen Human Brain Atlas was used as an anatomical reference in creating this figure (Allen Institute for Brain Science, 2010; Ding et al., 2016).

The nigrostriatal pathway has been implicated as part of a core timing mechanism, which recurs in multiple brain imaging studies (Coull et al., 2011; Meck et al., 2008; Teki et al., 2011). For example, Harrington et al. (Harrington et al., 2004) asked participants to complete a temporal discrimination task (where a target time must be categorized as shorter or longer than a reference interval of either 1200 or 1800 ms; see Fig. 3C) while they were scanned using fMRI. The authors found that the coefficient of variation of time estimates was correlated with activation in the right caudate, right inferior parietal, left declive and left tuber, suggesting that these areas were associated with timing precision (Harrington et al., 2004). In another study, Pouthas et al. (Pouthas et al., 2005) asked subjects to complete a temporal generalization task (where a target time must be categorized as equivalent or different to a reference interval of 450 or 1300 ms) while they were scanned using fMRI. By comparing brain activation during trials in which the short reference was used versus those in which the long reference was used, the authors could identify which areas were sensitive to interval duration. They found that activation in the right caudate, preSMA, anterior cingulate cortex, right inferior frontal gyrus, and bilateral premotor cortex corresponded to the duration of the interval. These two studies showed, respectively, that the activation of the striatum is associated with both the precision (Harrington et al., 2004) and the duration (Pouthas et al., 2005) of time estimates. The involvement of the basal ganglia is also evident in meta-analyses of studies that have varied in their procedures (e.g. (Macar et al., 2002; Meck et al., 2008; Wiener et al., 2010)).
It should be noted, however, that while these studies do implicate basal ganglia structures in timing, there have been examples of patients with significant bilateral lesions in the caudate, putamen, and globus pallidus that were able to perform estimation and production tasks with accuracy, although they showed impairments in paced tapping (Coslett et al., 2010).

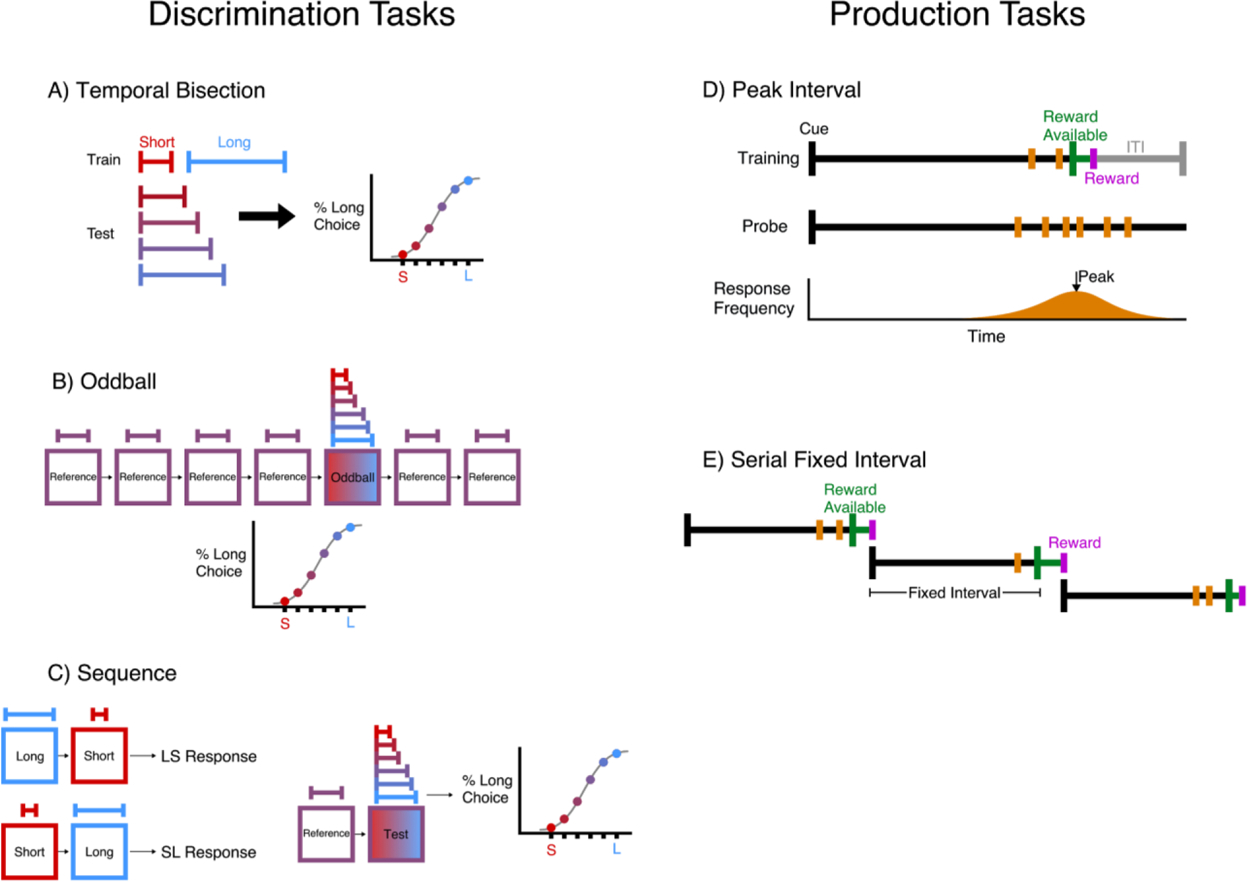

Fig. 3.

Schematic references for common timing tasks. Across panels red is used to indicate short durations while blue is used to indicate long durations. Interval discrimination tasks: (A) The temporal bisection paradigm trains subjects to give different responses to short and long reference intervals, then presents intervals of intermediate durations to find the point of subjective equality between the short and long intervals. This generates a response curve that can shift left or right to reflect changes in the subject’s report of time. (B) The oddball paradigm presents a series of cues, all of the same duration. One of the later cues is randomly replaced with an oddball cue of a different duration, which the subject must classify as longer or shorter than the rest. (C) Sequence paradigms present two cues in a row, asking subjects to determine whether the second was longer or shorter than the first. Sometimes the duration of the first is held constant while the second varies, while in other tasks the order of the long and short cues is randomly assigned. Interval production tasks: (D) The peak interval procedure provides a reward for the first response a fixed duration after a cue. On a small number of probe trials, no reward is available and the peak rate of responding is taken as the subject’s estimate of the duration. (E) The serial fixed interval task is similar, except that the previous reward is used as the cue to begin the interval. In some versions of this task, the most recent response resets the interval, requiring animals to withhold responding for the entire duration.

Another corpus of literature that points to the basal ganglia as a key neuroanatomical substrate for time perception relates to pathology (for detailed reviews of disorders that affect time perception see (Allman and Meck, 2012; Teixeira et al., 2013)). In particular, Parkinson’s patients, who have a loss of dopamine-producing neurons in the substantia nigra (Damier et al., 1999), have robust impairments in time perception across a range of tasks (Carroll et al., 2009; Jones et al., 2008; Malapani et al., 1998; Parker et al., 2013; Perbal et al., 2005; Smith et al., 2007). This manifests primarily as motor timing difficulties, including tapping synchronization (Elsinger et al., 2003; O’Boyle et al., 1996), but also as perceptual timing impairments, including temporal bisection (Carroll et al., 2009; Elsinger et al., 2003; Parker et al., 2013; Smith et al., 2007). More specifically, it has been reported that Parkinson’s patients tend to underestimate intervals (Lange et al., 1995; Pastor et al., 1992), which could indicate a slowed internal clock.

Apart from Parkinson’s disease, other disorders also affect timing behavior. Patients with schizophrenia tend to perform poorly on a range of timing tasks (Bolbecker et al., 2014; Ciullo et al., 2016; Peterburs et al., 2013), and this has been suggested to arise as a result of the impaired function of dopaminergic brain areas (Brisch et al., 2014; Guillin et al., 2007). Similarly, patients diagnosed with attention deficit hyperactivity disorder (ADHD) also often exhibit impairments in the accuracy of temporal reproductions (Barkley et al., 2001a, b; Plummer and Humphrey, 2009; West et al., 2000). Dopamine agonists such as methylphenidate (the most common treatment for ADHD) can alleviate the timing impairments present in the disorder (Rubia et al., 2009).

In summary, fMRI studies of healthy individuals, as well as diseases and disorders in which time perception is affected, point toward dopaminergic areas of the basal ganglia as a core aspect of the timing mechanism. Studies in non-human animals complement these findings with invasive recordings and interventions not possible in humans. Electrophysiological recordings in monkeys trained to select the longer of two cues revealed striatal neurons that vary their firing rate in response to cue duration (Chiba et al., 2015). In rats performing a temporal bisection task (see Fig. 3A), striatal neurons displayed sparse population coding, activating in sequence over the course of the timed interval (Gouvea et al., 2015). When the neural population moved through the sequence more quickly, rats were more likely to classify the interval as long. Conversely, moving through the sequence more slowly coincided with short classifications, indicating a relationship between time perception and the speed of sequential activation in the striatum. This striatal population clock-like activity was also observed in rats performing an interval production task (see Fig. 3E), with the added observation that changing the target interval elicited rescaling of the population code to the new duration (Mello et al., 2015). This type of sequential activation during intervals is not unique to the striatum; however, studies in mice have shown that, compared to orbitofrontal cortex (Bakhurin et al., 2017) and secondary motor cortex (Zhou et al., 2020), striatal activity exhibits a higher degree of sequentiality during timing tasks. Zhou et al. went on to show that biologically constrained decoder networks performed better when parsing time from inputs with greater sequentiality, indicating that the striatum provides a more optimized set of signals for downstream areas to read out time.

With these indications that the basal ganglia and midbrain dopamine system are involved in timing, we now consider the canonical relationship between dopamine and the basal ganglia in reward and motivation and examine how they interact with regards to time perception.

3. Reward, motivation, and dopamine

Many researchers have drawn a distinction between the motivation toward reward (i.e. “wanting”; (Berridge, 2007)) and the hedonic experience of reward receipt (i.e. “liking”; (Wise, 1980)). These states differ in when they are experienced relative to reward. “Wanting” occurs before a reward is received, whereas “liking” occurs while a reward is being consumed. There has been much debate about which one of these constructs the dopaminergic system principally subserves, or whether they are even mutually exclusive (Bromberg-Martin et al., 2010), and it is unclear precisely which motivational processes (e.g. arousal, effort, persistence) are influenced by midbrain dopamine (Salamone and Correa, 2012).

It should be noted that much research into the neurobiology of reward and motivation typically focuses on the ventral tegmental area and the nucleus accumbens. This is in contrast to time perception research, which is more often related to the nigrostriatal pathway (this pathway is also commonly associated with movement; see (Meck, 2006)). However, these pathways are not independent, and the nigrostriatal pathway has also been shown to be critical for reward processing (Wise, 2009).

3.1. Reward prediction error

Early studies implicated dopamine as the principal neurotransmitter responsible for the hedonic nature of “liking” (Wise, 1980). This perspective has rapidly evolved, and dopaminergic signals are now more often conceptualized as a reinforcement signal that facilitates learning, rather than directly causing pleasure. This is due to the central finding that phasic dopamine activity reports a reward prediction error (Schultz, 1998), a key component of temporal difference learning (Sutton and Barto, 1998). Temporal difference learning simplifies an environment into a collection of states, then learns values associated with each state to guide choices while navigating the environment. Reward prediction errors allow a temporal difference learning agent to update state values by reporting the difference between expected and actual value after each transition between states. When an unexpected reward is encountered, the reward prediction error generated increases the value of the previous state, such that the reward can be better anticipated and thus shape behavior. Temporal difference learning is one of several reinforcement learning algorithms that could be used to learn the temporal structure of a rewarding environment. These range from model-based to model-free algorithms, which vary in their speed and flexibility when learning intervals to rewards (See the recent review by Petter et al.; (Petter et al., 2018)).
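
In the standard textbook formulation of temporal difference learning, the reward prediction error and the value update it drives can be written as follows. The symbols are conventional notation rather than anything defined in this review: $V(s)$ is the learned value of state $s$, $r_{t+1}$ is the reward received on the transition out of state $s_t$, $\gamma$ is a discount factor between 0 and 1, and $\alpha$ is a learning rate.

```latex
% Reward prediction error: received plus predicted value, minus prior expectation
\delta_t = r_{t+1} + \gamma\, V(s_{t+1}) - V(s_t)

% Value update for the state that was just left
V(s_t) \leftarrow V(s_t) + \alpha\, \delta_t
```

A positive $\delta_t$ (outcome better than expected) raises the value of the preceding state, and a negative $\delta_t$ lowers it.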

Foundational work by Schultz et al. observed two key properties of reward prediction errors in the phasic activity of dopamine neurons in monkeys (Schultz et al., 1997). The first is that when a cue is predictably paired with a reward, the reward prediction error for the reward will slowly transfer to the cue. The second is that when an expected reward is omitted, a negative reward prediction error is produced to signal that the previous state overestimated the upcoming reward. These observations necessitate that the brain keeps track of the time elapsed between the cue and the reward, and indicate that dopamine neurons have access to this temporal prediction information. The following studies are part of a growing body of causal evidence supporting dopamine’s role as a reward prediction error signal (Adamantidis et al., 2011; Chang et al., 2016; Hollerman and Schultz, 1998; Kim et al., 2012; Schoenbaum et al., 2013; Steinberg et al., 2013) and are corroborated in humans using fMRI of the striatum (Berns et al., 2001; McClure et al., 2003; Pagnoni et al., 2002).
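
Both properties fall out of a very small temporal difference simulation. The sketch below is an illustrative toy and not a model from the cited studies. It assumes that the interval between cue and reward is represented as a chain of five time-step states, that the cue arrives unpredictably (so the pre-cue state keeps a value of zero), and that the learning rate, discount factor, and function name are arbitrary choices.

```python
def run_trial(values, reward, alpha=0.1, gamma=1.0):
    """Run one cue-reward trial with TD(0), updating `values` in place.

    `values[t]` is the learned value of the state t steps after cue onset.
    Returns the prediction error at cue onset and at the usual reward time.
    """
    n = len(values)
    # The cue cannot be anticipated, so the pre-cue state has value 0 and the
    # error at cue onset is simply the value of the first post-cue state.
    cue_rpe = gamma * values[0] - 0.0
    reward_rpe = 0.0
    for t in range(n):
        next_value = values[t + 1] if t + 1 < n else 0.0
        r = reward if t == n - 1 else 0.0  # reward arrives after the last state
        delta = r + gamma * next_value - values[t]
        values[t] += alpha * delta
        if t == n - 1:
            reward_rpe = delta
    return cue_rpe, reward_rpe


values = [0.0] * 5
print("first trial:", run_trial(values, reward=1.0))
for _ in range(2000):
    run_trial(values, reward=1.0)
print("after training:", run_trial(values, reward=1.0))
print("reward omitted:", run_trial(values, reward=0.0))
```

On the first trial the error occurs at the reward. After training it occurs at the cue, while the fully predicted reward evokes almost none. Omitting the reward then produces a negative error at the moment the reward was due, which is only possible because the chain of states keeps track of elapsed time.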

Dopamine neurons respond differently to cues that predict rewards with different anticipated delays (Fiorillo et al., 2008; Gregorios-Pippas et al., 2009; Kobayashi and Schultz, 2008), as well as differences in reward probability, magnitude and type (Lak et al., 2014; Stauffer et al., 2014). Their decreased response to cues predicting longer reward delays typifies the principle of temporal discounting: rewards are devalued as a function of delay until their receipt. In fact, the decrease in activity approximates a hyperbolic discounting function (Kobayashi and Schultz, 2008), which matches that observed for human temporal discounting choice data (Kobayashi and Schultz, 2008; Mazur, 1987). When the delay between a cue and the reward it predicts is longer, there is a greater dopamine response to the reward itself (Fiorillo et al., 2008). This has been assumed to reflect the greater uncertainty in temporal precision, which is manifested as the scalar property of time perception, as well as reduced associative learning (Fiorillo et al., 2005). These findings indicate that dopamine neurons are sensitive to temporal information about predicted rewards, as time is an integral aspect of reward processing (Daw et al., 2006; Gershman et al., 2014; Kirkpatrick, 2014); and see (Bermudez and Schultz, 2014).
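
The shape of a hyperbolic discounting function, and how it differs from exponential discounting, can be sketched in a few lines. The hyperbolic form below is the standard one associated with Mazur (1987). The discount rate `k = 0.2` and the delays are hypothetical values chosen only for illustration, and the exponential function is included purely for contrast.

```python
import math


def hyperbolic_value(amount, delay_s, k=0.2):
    """Discounted value under hyperbolic discounting: amount / (1 + k * delay)."""
    return amount / (1.0 + k * delay_s)


def exponential_value(amount, delay_s, k=0.2):
    """Discounted value under exponential discounting, for comparison."""
    return amount * math.exp(-k * delay_s)


for delay in (0, 2, 4, 8, 16):
    print(delay,
          round(hyperbolic_value(1.0, delay), 3),
          round(exponential_value(1.0, delay), 3))
```

Under hyperbolic discounting, value falls steeply over short delays and more gradually over long ones, so each added second of delay costs proportionally less as delays grow. Exponential discounting removes the same proportion of value with every added second.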

The reward prediction error findings above pertain largely to phasic dopamine signals; however, dopamine neurons also exhibit “quasi-phasic” responses (Lloyd and Dayan, 2015) and tonic responses. The quasi-phasic responses manifest as a ramping up of activity as reward approaches (Fiorillo et al., 2003), perhaps as an anticipatory signal that may be involved in motivation and persistence (Bromberg-Martin et al., 2010; Guru et al., 2020; Howe et al., 2013). Similar anticipatory signals have also been observed in human BOLD imaging studies in striatum (Jimura et al., 2013), as well as in other areas sensitive to subjective value, such as medial prefrontal cortex (McGuire and Kable, 2015). Notably, the persistence of these dynamics after learning does not fit neatly into the reward prediction error theory that the phasic activity of these neurons supports (Niv, 2013). The tonic activity of dopamine neurons in the striatum takes place over a longer time scale (seconds to minutes) and is thought to be related to the calibration of the vigor of actions (Niv et al., 2007, 2005). However, earlier suggestions have associated the basal firing rates of dopaminergic neurons projecting to the striatum with the rate of the pacemaker in pacemaker-accumulator models (Meck, 1988).

3.2. Motivation

Motivation is defined by the hedonistic principle as the process of maximizing pleasure (Young, 1959). Motivational states therefore relate to reward-directed behavior, including approach, behavioral activation and invigoration (Kleinginna and Kleinginna, 1981). In regard to this review, the most important part of this definition is the temporal sequence: motivation is the behavioral or cognitive state of an agent due to a prospective reward (Botvinick and Braver, 2015). Notably, some researchers have proposed that the behavioral energization that would conventionally be considered to be an aspect of goal-directed motivation may also occur generally, when a reward is not specified (Niv et al., 2006).

The incentive salience hypothesis proposes that dopamine signals serve to motivate and invigorate actions, separate from signaling reward (Berridge, 2007). Evidence for this comes from studies showing, for example, that dopamine depletion in the ventral striatum can impair high-effort reward-seeking (Aberman and Salamone, 1999); and for review see (Salamone et al., 2007). Motivational deficits are also present in dopaminergic diseases like depression and schizophrenia (Salamone et al., 2015).

Early experimental studies first demonstrated an effect of motivation on time perception by incentivizing subjects to complete irrelevant tasks before interrupting them and eliciting a time estimate. For example, Rosenzweig and Koht (Rosenzweig and Koht, 1933), who refer to motivation as “need-tension”, asked subjects to complete an unsolvable puzzle and told subjects that the puzzle constituted a practice (low motivation) or a test (high motivation). While no inferential statistics were provided, the authors reported a tendency for durations in the low motivation condition to be overestimated relative to durations in the high motivation condition (over durations of 1–10 min). Other early experiments report the same tendencies using similar paradigms, which differed slightly in their manipulation of motivation (Meade, 1963, 1959).

One key proposal that has been advanced to account for these findings is that in highly motivated states, agents are engaged with their goals and “lose track of time” (Meade, 1963). Such an effect has been explained by assuming a common, finite attentional resource for temporal and non-temporal information (Alonso et al., 2014; Buhusi and Meck, 2009). In terms of a pacemaker-accumulator model, if more of this attentional resource is allocated to one’s goals, temporal information processing is inhibited, resulting in fewer pulses accumulating in the memory store (Zakay and Block, 1995). This idea has been shown to generalize to task engagement more broadly, especially in dual-task paradigms, where a time estimation must be made concurrently with a non-temporal task. The results from these paradigms indicate that time is consistently underestimated relative to when the distractor task is not present, suggesting that the distractor task interferes with the accumulation of pulses (Brown, 1997). Thus, one explanation for the effect of motivation on time perception is a lack of attention to time.
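The attentional account above can be illustrated with a minimal sketch of a pacemaker-accumulator with a shared attentional resource. The function names, the 10 Hz pacemaker rate, and the linear gating of pulses by `attention_to_time` are all illustrative assumptions rather than parameters taken from the cited models.

```python
def accumulated_pulses(duration_s, pacemaker_hz=10.0, attention_to_time=1.0):
    """Pulses reaching the accumulator over an interval.

    attention_to_time is the share (0 to 1) of a finite attentional
    resource devoted to timing; the rest goes to the non-temporal task.
    Linear gating is an assumption made for this sketch.
    """
    if not 0.0 <= attention_to_time <= 1.0:
        raise ValueError("attention_to_time must lie between 0 and 1")
    return pacemaker_hz * duration_s * attention_to_time


def estimated_duration(duration_s, pacemaker_hz=10.0, attention_to_time=1.0):
    """Read the pulse count back as seconds, assuming the observer's
    calibration was learned under full attention to time."""
    pulses = accumulated_pulses(duration_s, pacemaker_hz, attention_to_time)
    return pulses / pacemaker_hz


# Same 60 s interval, with and without an engaging concurrent goal.
full_attention = estimated_duration(60.0, attention_to_time=1.0)
engaged_in_goal = estimated_duration(60.0, attention_to_time=0.7)
print(full_attention, engaged_in_goal)
```

Under these assumptions, diverting attention away from time reduces the pulse count and therefore the estimate, which is the direction of the dual-task effect described above.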

More recently, this idea has been investigated using an experience sampling method (Conti, 2001). In this study, participants were queried about their current activities, intrinsic and extrinsic motivation, time awareness, time estimation, checking of time, and perceived speed of time during normal behavior over a five-day period. It was found that higher intrinsic motivation was associated with a lack of attention to time and underestimations of time. Importantly, while this finding would seem to recapitulate the idea that high motivation leads to a lack of attention to time and, in turn, to underestimations of time, intrinsic motivation and attention to time were each independently associated with time perception after controlling for the other. Thus, a lack of attention to time does not seem to fully account for the relationship between motivation and time perception.

Some studies have used more explicit incentives to assess how motivation can influence time perception. For example, using a version of the peak-interval procedure modified for humans, Balci, Wiener, Çavdaroğlu, & Coslett (Balci et al., 2013) assessed the effect of increased monetary reward payoff on timing. Here, participants were instructed to bracket the target time by holding a response key. If the response was initiated after the target time or terminated before it, the reward was not received. The study found that when larger rewards were available, participants with particular genetic polymorphisms (low PFC dopamine and low D2, or high PFC dopamine and high D2) initiated their responses earlier. However, as these participants did not also terminate their responses earlier, this was taken to indicate a strategic change in the decision process rather than a change in their internal clock speed (see Balci, 2014).

4. Effects of dopamine on time perception

Psychologists and neuroscientists have long endeavored to gain insight into the neural form of time’s representation in the brain by observing behavioral patterns in timing tasks (Namboodiri and Hussain Shuler, 2016). Numerous different tasks are used to elicit timing behavior, though they can typically be categorized as either discrimination tasks or production tasks. Discrimination tasks ask subjects to classify an interval as long or short compared to a middling duration or bounding references. Production tasks require responses from the subject after a learned duration to reward availability. Common versions of these tasks are schematized in Fig. 3 for reference, as they are used in many of the following studies to measure changes in time perception under the effects of dopamine and reward manipulations.

4.1. Dopamine Clock Hypothesis

A prominent hypothesis regarding the relationship between dopamine and time is the “dopamine clock hypothesis” (Meck, 1996, 1983), which proposes that increases or decreases in dopamine quicken or slow the internal clock, respectively (see Fig. 4A and Table 1 for a quick reference to its predictions; for reviews see Coull et al., 2011; Jones and Jahanshahi, 2009). As a result, the duration of timed intervals shifts in proportion to the interval to be produced (Balci, 2014). Supporting experimental evidence stems, in part, from pharmacological manipulations of dopamine in non-human animals. When given dopamine agonists (e.g. methamphetamine) during a peak interval procedure, rats’ response rates peaked earlier, as if their internal clock was accelerated (Buhusi and Meck, 2002). When given dopamine antagonists (e.g. haloperidol), peak responses were later, which would correspond to a slowed internal clock (Buhusi and Meck, 2002). When both drugs were delivered simultaneously, rats’ peak responses were similar to those in a control condition (Maricq and Church, 1983; Namboodiri et al., 2016). In a human fMRI study, dopamine precursor depletion revealed reduced activity in the putamen and supplementary motor area with impairments in performing time perception tasks (Coull et al., 2012).
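The predictions of the dopamine clock hypothesis can be made concrete with a small sketch in which dopamine acts only as a multiplicative gain on clock speed. The function names, the `clock_speed` values, and the use of the geometric mean as the bisection point are assumptions chosen for illustration, not details drawn from the studies cited above.

```python
import math


def clock_reading(actual_s, clock_speed=1.0):
    """Subjective time accumulated after actual_s seconds of real time."""
    return clock_speed * actual_s


def peak_response_time(target_s, clock_speed=1.0):
    """Real time at which the clock reading reaches the learned target
    (production task, e.g. the peak interval procedure)."""
    return target_s / clock_speed


def bisection_choice(actual_s, short_ref_s, long_ref_s, clock_speed=1.0):
    """Classify a probe as 'long' or 'short' (discrimination task).

    The bisection point is assumed to sit at the geometric mean of the
    two learned references.
    """
    bisection_point = math.sqrt(short_ref_s * long_ref_s)
    reading = clock_reading(actual_s, clock_speed)
    return "long" if reading > bisection_point else "short"


# A faster clock (speed 1.25) shifts peak times earlier by the same
# fraction of every target interval, i.e. a proportional shift.
for target in (10.0, 20.0, 40.0):
    shift = peak_response_time(target, clock_speed=1.25) - target
    print(target, shift, shift / target)

# The same 3.8 s probe between 2 s and 8 s references flips from
# "short" to "long" when the clock is sped up.
print(bisection_choice(3.8, 2.0, 8.0, clock_speed=1.0))
print(bisection_choice(3.8, 2.0, 8.0, clock_speed=1.25))
```

In this sketch a single gain parameter produces both earlier production responses and a bias toward "long" classifications, and a gain below 1 produces the reverse pattern.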

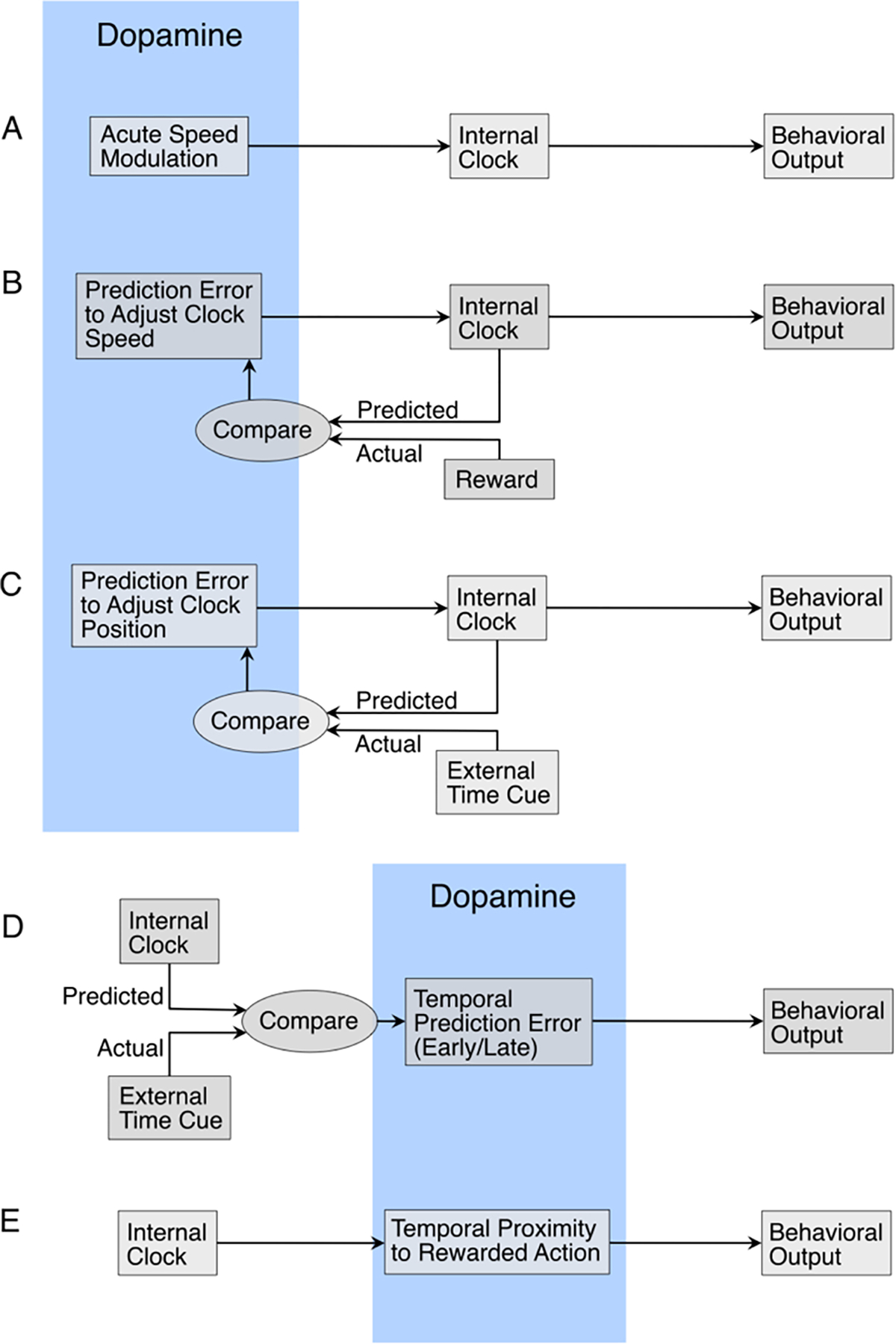

Fig. 4.

Hypothetical Roles for Dopamine. (A) The dopamine clock hypothesis. Under the dopamine clock hypothesis, high levels of dopamine cause the internal clock to speed up, which in turn causes the behavioral output to exhibit overestimation or underproduction depending on the task. (B) Dopamine signals reflect reward prediction errors, which are used to rescale the speed of the clock depending on whether the reward arrived earlier or later than expected. This would change the speed of the clock on subsequent trials with the goal of increasing temporal accuracy when predicting reward. (C) Dopamine signals reflect temporal prediction errors when external cues of time disagree with the internal clock. In this case, dopamine signals could adjust the position of the internal clock to agree with the external cues. (D) External timing cues compared with the output of the internal clock produce dopaminergic prediction errors that are then used to inform the behavioral output. (E) Dopamine signals inform the behavioral output by ramping to a threshold for action initiation. The level of dopamine activity encodes the temporal proximity to reward, which is informed by the internal clock.

Table 1.

The effects of dopamine modulation on time production and discrimination as proposed by the dopamine clock hypothesis. The hypothetical clock is proposed to respond to increases and decreases in dopamine by respectively increasing and decreasing its speed.

| Dopamine | Hypothetical Clock | Production | Discrimination | Experience |
|---|---|---|---|---|
| Increase | Quickens | Early response/Underproduction | Chooses long/Overestimation | Less time seems like more |
| Decrease | Slows | Late response/Overproduction | Chooses short/Underestimation | More time seems like less |

The bidirectional effects of dopamine on apparent clock speed are additionally supported by genetic manipulations in mice. Genetic knockout of the gene for the dopamine transporter (DAT), which increases extracellular levels of dopamine, has been shown to cause either a total loss of temporally sensitive behavior (Meck et al., 2012) or earlier peak responses indicating a faster internal clock (Balci et al., 2010). Genetic over-expression of the D2 receptor causes later peak responses (Drew et al., 2007), implying a slowing of the internal clock and suggesting possible divergent mechanisms for dopamine action on timing across the direct and indirect pathways. Many studies also report an increase in the variance of responses (i.e. non-directional impairments) in timing tasks due to dopamine antagonists (Coull et al., 2012; Rammsayer, 1999, 1997, 1993), which could indicate a role for dopamine in temporal error correction or in signaling timed actions downstream from the timekeeping mechanism. Nevertheless, the bulk of evidence has generally supported the idea that increasing dopamine levels increases the speed of the internal clock (Abner et al., 2001; Buhusi and Meck, 2002; Cevik, 2003; Cheng et al., 2007, 2006; Cheng and Liao, 2007; Cheung et al., 2006; Chiang et al., 2000; Maricq et al., 1981; Maricq and Church, 1983; Matell et al., 2006, 2004; Meck et al., 2012) and that decreasing dopamine levels decreases the speed of the internal clock (Buhusi and Meck, 2002; Cheng and Liao, 2007; Drew et al., 2003; Lustig and Meck, 2005; Macdonald and Meck, 2006; MacDonald and Meck, 2005; Maricq and Church, 1983).

Some recreational drugs can also have profound effects on the experience of time. Amphetamines are known to increase extracellular dopamine (Erin and Calipari, 2013) and are often used in studies of time perception (Balci et al., 2008; Buhusi and Meck, 2002; Chiang et al., 2000; Lake and Meck, 2013; Spetch and Treit, 1984; Taylor et al., 2007; Weiner and Ross, 1962; Williamson et al., 2008). Tetrahydrocannabinol, the main active component of cannabis, is also known to increase dopamine levels and has been shown to induce consistent overestimations and underproductions in time discrimination and production tasks (Sewell et al., 2013). However, the effects of drug-based dopaminergic manipulations on timing can be hard to parse, due to the non-specificity of different drugs, non-linear dose-response curves (Cools and D’Esposito, 2011; Floresco, 2013; Gjedde et al., 2010; Monte-Silva et al., 2009), and known collateral effects that could themselves impact timing behavior (Rammsayer et al., 2001; Seiden et al., 1993; Wittmann et al., 2007). Individual differences between subjects may moderate the effects of dopamine on time perception, as has been suggested by some human genotyping work (Balci et al., 2013). In support of this, whether individuals make early or late responses during a peak interval procedure while under the influence of amphetamine has been shown to differ as a function of their enjoyment of the drug, as measured by self-report (Lake and Meck, 2013). The authors of this study concluded that in the participants who reported greater enjoyment, the euphoria and positive affect induced by amphetamine competed for attentional resources (Buhusi and Meck, 2009), and thus caused underestimations of time in this group. They liken this explanation to the “time flies when you’re having fun” idiom: if attentional resources are reallocated to positive experiences, then it might be expected that time will be retrospectively underestimated.

Instead of using pharmacological manipulations of dopamine, Failing and Theeuwes (Failing and Theeuwes, 2016) assessed participants’ temporal perception of reward-signaling stimuli. In their first experiment, the authors used a temporal oddball paradigm where the color of the oddball stimulus signaled whether a correct response would be rewarded or not. Presumably, the oddball should act as a predictive cue for reward and lead to phasic dopamine signals. They found that when the oddball indicated that reward would be available, participants were more likely to classify its duration as longer than the reference stimuli, indicating an increase in their internal clock speed during the presentation of the oddball. However, this could also be explained by an increase in the attentional resources dedicated to the oddball. In a second experiment, the color of the reference stimuli presented around the oddball signaled reward availability. In this case, the precision of judgments increased, with no change in tendency to classify the oddball as longer or shorter. A third experiment, nearly identical to the first, compared two oddball stimuli that signaled either low or high rewards. The results of this experiment showed that the perceived duration of the oddball was longer for the high reward oddball than the low reward oddball, suggesting a parametric effect (Failing and Theeuwes, 2016). In summary, this study appeared to show a dissociation between motivational effects and reward prediction errors: reward prediction errors increased the clock speed, whereas motivational differences only affected precision.

In a similar study, Toren et al. (Toren et al., 2020) also showed that positive prediction errors are associated with longer perceived durations. They asked participants to determine which of two images was presented longer, where the second image was overlaid with a positive or negative monetary value. When the second image induced a positive reward prediction error, its duration was overestimated, while the opposite was true for negative prediction errors. These results may be attributed to phasic increases in dopamine during reward prediction errors increasing the speed of the internal clock. However, it has also been shown that affective states related to appraisal can influence time perception, as Uusberg et al. (Uusberg et al., 2018) demonstrated by testing participants’ temporal bisection of cues during a gambling task. Goal relevance, congruence, and approach type all increased the subjective duration of cues. Although it is possible that these increases in subjective duration are all elicited by dopamine upregulation of internal clock speed, there may be other systems capable of influencing time perception that also respond to reward.

4.2. Complicating evidence

In contrast with the studies suggesting that dopamine increases correlate with overestimations of time, several studies imply the opposite. In a temporal binding paradigm, where the compression of time between an action and its consequence is measured (Moore and Obhi, 2012), priming participants with visually rewarding images increased time compression (Aarts et al., 2012), indicating a slower internal clock. Notably, this effect was primarily driven by individuals with higher spontaneous blink rates, which may indicate higher levels of tonic dopamine in the striatum (Karson, 1983; Taylor et al., 1999). Similar results have been observed when the actions are paired with monetary rewards, particularly in individuals with high trait sensitivity to reward (Muhle-Karbe and Krebs, 2012). This suggests that, especially in individuals with higher levels of tonic striatal dopamine, reward can lead to underestimations of time - consistent with a slowing of clock speed. However, another study showed that on a temporal bisection task, participants tended to overestimate the length of intervals if they had blinked on the previous trial (Terhune et al., 2016).

Clock speed also appears to slow following caloric rewards, as Fung et al. (Fung et al., 2017b) showed in a temporal production task. Participants were presented with a cue indicating a reward magnitude and a time interval (ranging from 4 to 10 seconds), then were asked to make a response to divide the interval in half. In between trials, participants were given the magnitude of reward corresponding to the previous cue. For caloric rewards, responses were later immediately after consuming larger volumes of liquid, indicating a slower internal clock, while the magnitude of the prospective reward and consumption of non-caloric rewards had no effect. Notably, calorie consumption has been shown to strongly elicit activity in dopaminergic midbrain areas, relative to artificial sweeteners (Frank et al., 2008). In a similar non-human study, rats trained on a peak-interval procedure tended to produce later response peaks after being pre-fed sucrose, while rats pre-fed with other substances (lecithin, casein, and saccharin) showed no change (Meck and Church, 1987). In a human temporal bisection task, the durations of images of appetizing foods were shown to be underestimated compared to generally positive or neutral images, implying a slowed internal clock (Gable and Poole, 2012). This effect is opposite to that for images overlaid with positive numerical reward values (Failing and Theeuwes, 2016; Toren et al., 2020).

A compelling demonstration of the importance of dopamine in time perception comes from Soares, Atallah, and Paton (Soares et al., 2016), who used optogenetic techniques to directly and specifically manipulate neural activity in the SNc of mice during a temporal bisection task. However, the direction of the effects they observed was opposite to that predicted by the dopamine clock hypothesis, raising the question of how these observations can be reconciled (Simen and Matell, 2016). Instead of increasing perceived time, optogenetic activation of dopamine neurons caused mice to more often classify the timed interval as short, implying a slowed internal clock. The dopamine recordings taken to complement this experiment may provide a clue to explain these results. The interval to be classified was marked by the temporal separation of two identical cues. When the second cue came relatively early, i.e. the interval was short, dopamine neurons responded more strongly to it than when the second cue came relatively late. This was modeled by the authors as a linear combination of reward expectation (based on performance) and the inverse likelihood of the second cue (high at first and low at the end). In other words, dopamine responses were greater when the second cue was more surprising. An earlier second cue also meant that reward from a correct response would be received earlier than expected. Under temporal difference learning, this would produce a jump in state value and therefore a larger reward prediction error. When the experimenters optogenetically stimulated dopamine neurons, they artificially increased dopamine levels both during the interval and after the second cue. This creates two possibilities for what caused the shift toward classifying intervals as “short”: (1) elevated dopamine during the interval caused the internal clock to slow (opposite to the dopamine clock hypothesis), or (2) elevated dopamine after the second cue caused the dopamine response to look more similar to those that followed a short interval. For the second case to affect the probability of “short” classifications, the dopamine signals would need to act as an intermediary between the internal clock and the motor structures planning the behavioral output (see Fig. 4D). Future work could attempt to isolate these effects.
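The temporal difference argument above can be sketched numerically. The example below is a deliberately simplified illustration and not the model fitted by Soares et al.: it assumes discrete time steps, a fixed delay from the second cue to reward, a discount factor of 0.9, and a waiting-state value that presumes the second cue will arrive at a single expected step (ignoring the changing likelihood of the cue over the interval). All names and parameter values are hypothetical.

```python
def td_error_at_second_cue(cue_step, expected_cue_step, delay_to_reward=3,
                           gamma=0.9, reward=1.0):
    """TD error on the transition into the second-cue state.

    delta = r + gamma * V(next) - V(current), with r = 0 on this transition.
    """
    if not 1 <= cue_step <= expected_cue_step:
        raise ValueError("sketch only covers cues at or before the expected step")
    # Value of the cue state: reward follows delay_to_reward steps later.
    v_cue = reward * gamma ** delay_to_reward
    # Value of the preceding waiting state, which still assumes the cue
    # will arrive at expected_cue_step (a simplifying assumption).
    steps_to_cue = expected_cue_step - (cue_step - 1)
    v_wait = reward * gamma ** (steps_to_cue + delay_to_reward)
    return 0.0 + gamma * v_cue - v_wait


early = td_error_at_second_cue(cue_step=2, expected_cue_step=8)
late = td_error_at_second_cue(cue_step=6, expected_cue_step=8)
on_time = td_error_at_second_cue(cue_step=8, expected_cue_step=8)
print(early, late, on_time)
```

Under these assumptions the prediction error is largest when the second cue arrives earliest and shrinks to zero when it arrives exactly when expected, which matches the qualitative pattern described for the recorded dopamine responses.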

Another option is that reward prediction errors may provide temporal feedback to adjust the internal clock. As mentioned previously, time cells in the striatum exhibit the ability to dynamically rescale in response to changing the duration to reward (Mello et al., 2015). Mikhael and Gershman (Mikhael and Gershman, 2019) developed a theoretical model for how a population clock can achieve this rescaling using dopaminergic reward prediction errors. In this model, the direction of dopamine’s effect on clock speed is dependent on whether it is encountered before or after the expected time of reward (see Fig. 4B). However, the studies examining the effects of reward prediction error on time perception discussed earlier (Failing and Theeuwes, 2016; Gable and Poole, 2012; Toren et al., 2020) measure the subjective duration of the reward-informing cue, not the duration to the reward itself. The prediction errors are for either the magnitude or availability of the reward and are not temporal in nature, so any influence on the internal clock would be akin to an off-target effect. That being said, the reward prediction errors potentially generated in these experiments signal that upcoming rewards are closer than expected. In Mikhael and Gershman’s model, these pre-reward dopamine signals may cause the clock to speed up, which could cause reward predicting cues to be perceived as longer, as is the case in the above studies. Interestingly, studies examining self-reports of temporal accuracy and the ability to improve show conflicting results regarding how aware human subjects are of the direction and magnitude of their errors without external feedback (Akdoğan and Balci, 2017; Riemer et al., 2019; Ryan, 2016). As such, the temporal reward prediction error applied in Mikhael and Gershman’s proposed model may not be accessible for conscious evaluation or articulation.
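The general idea of feedback-driven clock rescaling can be illustrated with a toy delta rule. This is not Mikhael and Gershman’s model, which operates on a population clock and uses reward prediction errors; here a scalar `timing_error` stands in for the dopaminergic teaching signal, and the function name, learning rate, and update form are assumptions made purely for illustration.

```python
def rescale_clock(speed, reward_time_s, target_reading, learning_rate=0.3):
    """One trial of a delta-rule update on clock speed.

    target_reading is the clock reading at which reward is expected.
    If reward arrives before the clock gets there, the clock ran too
    slowly and is sped up; if reward arrives after, it is slowed down.
    """
    reading_at_reward = speed * reward_time_s
    timing_error = target_reading - reading_at_reward  # > 0: reward came early
    return speed + learning_rate * timing_error / reward_time_s


# The clock was calibrated for reward at 10 s (reading 10 at speed 1.0).
# Reward now arrives at 5 s, so the clock gradually speeds up.
speed = 1.0
for trial in range(30):
    speed = rescale_clock(speed, reward_time_s=5.0, target_reading=10.0)
print(speed)
```

In this sketch the sign of the update depends only on whether reward arrives before or after the clock expects it, and repeated trials bring the clock reading at reward back to the target reading.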

Another possible role for dopamine in timing is in sending prediction error signals to adjust the position of the clock to correct for new information. If an unexpected cue indicates that reward is much closer or further than projected, the prediction error signal could update the temporal reward expectation by jumping the clock to a later or earlier time point in the state space (see Fig. 4C). In a study where temporal prediction errors were artificially induced, participants completed tasks while, unbeknownst to them, the clocks on display moved either faster or slower than real time (Harvey and Monello, 1974). Adjusting their internal senses of time to match the fallacious clocks had a profound effect on the subjective experience of the participants. Individuals who had been tricked into thinking less time had passed rated the task as boring, while those believing more time had passed found it more enjoyable. A more recent series of experiments used similar methodology to create the illusion of slow or fast time and showed that ratings of hedonic experience are higher when time is thought to have passed more quickly (Sackett et al., 2010). It would be of interest to measure dopamine signals produced from cues indicating positive and negative temporal prediction errors.

Apart from modulating clock speed or adjusting clock position, dopamine may play a more direct role in action initiation. In their recent paper, Hamilos et al. (Hamilos et al., 2020) observed ramping dopamine activity during a fixed interval timing task, where mice were required to withhold their lick behavior for 3.3 s after a cue to receive reward. Dopamine signals increased slowly until the time of the first lick, after which they rapidly peaked and decayed. The slope of the ramp was a consistent predictor of lick time, with activity climbing to a threshold level before action initiation. Theoretical work offers a means to explain how dopamine ramps could reflect a continuous prediction error (Hamilos and Assad, 2020; Mikhael et al., 2019). An alternative explanation is that dopamine ramps reflect a reward-related readout of the internal clock as a ramp-to-threshold action gating mechanism (see Fig. 4E). As such, increasing dopamine levels on production tasks would drive the ramp to the threshold earlier, resulting in early responses (underproduction) as is commonly observed (Balci et al., 2010; Buhusi and Meck, 2002; Drew et al., 2007; Hamilos et al., 2020; Matell et al., 2006; Sewell et al., 2013).
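The ramp-to-threshold account can be sketched with a linear ramp. The linear form, the unit threshold, and the parameter names below are illustrative assumptions and are not fitted to the recordings of Hamilos et al.

```python
def first_lick_time(slope_per_s, threshold=1.0, baseline=0.0):
    """Time at which a linear ramp starting at baseline reaches threshold."""
    if slope_per_s <= 0:
        raise ValueError("the ramp must rise for the threshold to be reached")
    if baseline >= threshold:
        return 0.0  # already at or above threshold at cue onset
    return (threshold - baseline) / slope_per_s


shallow = first_lick_time(slope_per_s=0.25)
steep = first_lick_time(slope_per_s=0.5)
raised_baseline = first_lick_time(slope_per_s=0.25, baseline=0.2)
print(shallow, steep, raised_baseline)
```

In this sketch both a steeper ramp and a raised baseline bring the threshold crossing forward, so either could in principle yield the early responses (underproduction) described above.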

5. Conclusion

The literature relating psychologically rewarding factors to human time perception is often equivocal. Many of the directional effects that increased reward magnitude can have on timing behavior in the non-human animal literature may be accounted for by motivational effects (Balci, 2014), which may be distinct from an effect on the perception of time itself. There is also considerable evidence that physiological and metabolic factors are important to time perception, which raises the question of whether different types of rewards (e.g. primary versus secondary) could affect time perception in different ways.

As a whole, this literature suggests that dopamine likely has nuanced and varied roles across different temporal behaviors. In addition to modulating speed, dopamine may relay the output of the internal clock to help govern the production of timed behaviors, in which case dopamine manipulations could alter the report of time by disrupting the channel through which timing information guides behavior. The internal clock may contribute temporal information to the generation of dopaminergic prediction errors, while dopamine signals send prediction errors back to adjust the internal clock. The varied approaches taken in studies of time perception may help elucidate the complex role of dopamine in timing. Dopamine induced by rewards or reward predicting cues will be accompanied by a variety of other responses throughout the brain, whereas optogenetic activation of dopamine neurons may have more isolated effects. Classification and production style tasks address the same question, but may engage separate systems or the same systems in different ways. Dopaminergic activity may have different effects depending on when it occurs throughout the timing process, whether it is elevated during the interval or at the point of decision or action. While the monotonic clock speed modulation proposed by the dopamine clock hypothesis presents a straightforward role for dopamine in timing, accumulating evidence suggests that dopamine may impact timing and time perception at multiple stages of temporal processing.

For humans and other organisms, one could argue that the paramount function of time perception is in causal inference and prediction. Indeed, contemporary models of learning rely on reward predictions that are time-sensitive (Fiorillo et al., 2008). However, there is a lack of explicit investigation of how the characteristics of neurobiological reward prediction, and reward prediction error signals, map onto what is known about time perception and interval judgments. Above and beyond merely learning from and acting in a time-ordered environment, humans also experience time as an abstract perceptual sensation, and very few theorists have attempted to integrate these perceptual aspects of human time perception into models of learning (notable exceptions are Gershman et al., 2014, and Mikhael and Gershman, 2019). Such details would provide a link between the principles and algorithms underlying learning and our high-level perceptual experience.

A relationship between time perception and reward processing also makes sense from an ecological perspective, given that animals have a fundamental requirement for energy (i.e. reward). If organisms rely on their current reward state to inform their sense of urgency, they can act to seek future reward in an optimal fashion (i.e. optimal foraging; Namboodiri et al., 2016; Namboodiri et al., 2014a, c; Stephens and Krebs, 1986). There is indeed some evidence of adjustments to timed behavior as a function of energy state and reward rates (Fung et al., 2017a; Niv et al., 2007; Yoon et al., 2018), yet these studies do not refer to experiential aspects of time perception or urgency in a broader sense. There are, however, substantial theoretical efforts to reconcile characteristics of time perception in models of reward-seeking and decision making (Bateson, 2003; Cui, 2011; Hills and Adler, 2002; Namboodiri and Hussain Shuler, 2016; Namboodiri et al., 2014a; Ray and Bossaerts, 2011; Takahashi and Han, 2012), which demonstrate that the combination of these two streams of literature has strong utility, by adding psychologically plausible constraints to limit the hypothesis space.

This reasoning, along with the evidence presented in this review, suggests that a consideration of the perceptual characteristics of time perception should aid in the specification of models of reward processing and learning. Similarly, time perception researchers could benefit by putting greater emphasis on the functional and evolutionary role of time perception, and by considering that it may be an epiphenomenal consequence of reward processing mechanisms.

Acknowledgements

Gracious thanks to Stefan Bode and Carsten Murawski for their insight and help with editing.

Funding

This work was supported in part by a research grant, R01 MH123446, from the U.S. National Institute of Mental Health to MGHS.

Footnotes

Declaration of Competing Interest

The authors have no competing interests to declare.

References

- Aarts H, Bijleveld E, Custers R, Dogge M, Deelder M, Schutter D, Haren NE, 2012. Positive priming and intentional binding: eye-blink rate predicts reward information effects on the sense of agency. Soc. Neurosci 7, 105–112. [DOI] [PubMed] [Google Scholar]

- Aberman JE, Salamone JD, 1999. Nucleus accumbens dopamine depletions make rats more sensitive to high ratio requirements but do not impair primary food reinforcement. Neuroscience 92, 545–552. [DOI] [PubMed] [Google Scholar]

- Abner RT, Edwards T, Douglas A, Brunner D, 2001. Pharmacology of temporal cognition in two mouse strains. Int. J. Comp. Psychol 14. [Google Scholar]

- Adamantidis AR, Tsai H-C, Boutrel B, Zhang F, Stuber GD, Budygin Ea., Touriño C, Bonci A, Deisseroth K, de Lecea L, 2011. Optogenetic interrogation of dopaminergic modulation of the multiple phases of reward-seeking behavior. J. Neurosci 31, 10829–10835. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Akdoğan B, Balci F, 2017. Are you early or late?: temporal error monitoring. J. Exp. Psychol. Gen 146, 347–361. [DOI] [PubMed] [Google Scholar]

- Akhlaghpour H, Wiskerke J, Choi JY, Taliaferro JP, Au J, Witten IB, 2016. Dissociated sequential activity and stimulus encoding in the dorsomedial striatum during spatial working memory. Elife 5. 10.7554/eLife.19507. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Allen Institute for Brain Science, 2010. Allen Human Brain Atlas. [Google Scholar]

- Allman MJ, Meck WH, 2012. Pathophysiological distortions in time perception and timed performance. Brain 135, 656–677. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alonso R, Brocas I, Carrillo JD, 2014. Resource allocation in the brain. Rev. Econ. Stud. [Google Scholar]

- Bakhurin KI, Goudar V, Shobe JL, Claar LD, Buonomano DV, Masmanidis SC, 2017. Differential Encoding of Time by Prefrontal and Striatal Network Dynamics. J. Neurosci 37, 854–870. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Balci F, Simen P, 2016. A decision model of timing. Curr. Opin. Behav. Sci 8, 94–101. [Google Scholar]

- Balci F, Ludvig EA, Gibson JM, Allen BD, Frank KM, Kapustinski BJ, Fedolak TE, Brunner D, 2008. Pharmacological manipulations of interval timing using the peak procedure in male C3H mice. Psychopharmacology 201, 67–80. [DOI] [PubMed] [Google Scholar]

- Balci F, Ludvig EA, Abner R, Zhuang X, Poon P, Brunner D, 2010. Motivational effects on interval timing in dopamine transporter (DAT) knockdown mice. Brain Res. 1325, 89–99. [DOI] [PubMed] [Google Scholar]

- Balci F, Freestone D, Simen P, Desouza L, Cohen JD, Holmes P, 2011. Optimal temporal risk assessment. Front. Integr. Neurosci 5, 56. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Balci F, Wiener M, Cavdaroglu B, Branch Coslett H, 2013. Epistasis effects of dopamine genes on interval timing and reward magnitude in humans. Neuropsychologia 51, 293–308. [DOI] [PubMed] [Google Scholar]

- Balci F, 2014. Interval timing, dopamine, and motivation. Timing Time Percept. 2, 379–410. [Google Scholar]

- Barkley RA, Edwards G, Laneri M, Fletcher K, Metevia L, 2001a. Executive functioning, temporal discounting, and sense of time in adolescents with attention deficit hyperactivity disorder (ADHD) and oppositional defiant disorder (ODD). J. Abnorm. Child Psychol 29, 541–556. [DOI] [PubMed] [Google Scholar]

- Barkley RA, Murphy KR, Bush T, 2001b. Time perception and reproduction in young adults with attention deficit hyperactivity disorder. Neuropsychology 15, 351–360. [DOI] [PubMed] [Google Scholar]

- Bateson M, 2003. Interval timing and optimal foraging. Functional and neural mechanisms of interval timing 19. [Google Scholar]

- Bermudez MA, Schultz W, 2014. Timing in reward and decision processes. Philos. Trans. R. Soc. Lond., B, Biol. Sci 369, 20120468. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berns GS, McClure SM, Pagnoni G, Montague PR, 2001. Predictability modulates human brain response to reward. J. Neurosci 21, 2793–2798. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berridge KC, 2007. The debate over dopamine’s role in reward: the case for incentive salience. Psychopharmacology 191, 391–431.

- Bizo LA, White KG, 1994. The behavioral theory of timing: reinforcer rate determines pacemaker rate. J. Exp. Anal. Behav 61, 19–33.

- Bolbecker AR, Westfall DR, Howell JM, Lackner RJ, Carroll CA, O’Donnell BF, Hetrick WP, 2014. Increased timing variability in schizophrenia and bipolar disorder. PLoS One 9, e97964.

- Botvinick M, Braver T, 2015. Motivation and cognitive control: from behavior to neural mechanism. Annu. Rev. Psychol 66, 83–113.

- Brisch R, Saniotis A, Wolf R, Bielau H, Bernstein HG, Steiner J, Bogerts B, Braun K, Jankowski Z, Kumaratilake J, Henneberg M, Gos T, 2014. The role of dopamine in schizophrenia from a neurobiological and evolutionary perspective: old fashioned, but still in vogue. Front. Psychiatry 5, 47.

- Bromberg-Martin ES, Matsumoto M, Hikosaka O, 2010. Distinct tonic and phasic anticipatory activity in lateral habenula and dopamine neurons. Neuron 67, 144–155.

- Brown SW, 1997. Attentional resources in timing: interference effects in concurrent temporal and nontemporal working memory tasks. Percept. Psychophys 59, 1118–1140.

- Buhusi CV, Meck WH, 2002. Differential effects of methamphetamine and haloperidol on the control of an internal clock. Behav. Neurosci 116, 291–297.

- Buhusi CV, Meck WH, 2009. Relative time sharing: new findings and an extension of the resource allocation model of temporal processing. Philos. Trans. R. Soc. Lond., B, Biol. Sci 364, 1875–1885.

- Carroll CA, O’Donnell BF, Shekhar A, Hetrick WP, 2009. Timing dysfunctions in schizophrenia span from millisecond to several-second durations. Brain Cogn. 70, 181–190.

- Cevik MO, 2003. Effects of methamphetamine on duration discrimination. Behav. Neurosci 117, 774–784.

- Chang CY, Esber GR, Marrero-Garcia Y, Yau HJ, Bonci A, Schoenbaum G, 2016. Brief optogenetic inhibition of dopamine neurons mimics endogenous negative reward prediction errors. Nat. Neurosci 19, 111–116.

- Cheng RK, Liao RM, 2007. Dopamine receptor antagonists reverse amphetamine-induced behavioral alteration on a differential reinforcement for low-rate (DRL) operant task in the rat. Chin. J. Physiol 50, 77–88.

- Cheng RK, MacDonald CJ, Meck WH, 2006. Differential effects of cocaine and ketamine on time estimation: implications for neurobiological models of interval timing. Pharmacol. Biochem. Behav 85, 114–122.

- Cheng RK, Hakak OL, Meck WH, 2007. Habit formation and the loss of control of an internal clock: inverse relationship between the level of baseline training and the clock-speed enhancing effects of methamphetamine. Psychopharmacology 193, 351–362.

- Cheung THC, Bezzina G, Asgari K, Body S, Fone KCF, Bradshaw CM, Szabadi E, 2006. Evidence for a role of D1 dopamine receptors in d-amphetamine’s effect on timing behaviour in the free-operant psychophysical procedure. Psychopharmacology 185, 378–388.

- Chiang TJ, Al-Ruwaitea AS, Mobini S, Ho MY, Bradshaw CM, Szabadi E, 2000. The effect of d-amphetamine on performance on two operant timing schedules. Psychopharmacology 150, 170–184.

- Chiba A, Oshio K, Inase M, 2015. Neuronal representation of duration discrimination in the monkey striatum. Physiol. Rep 3 10.14814/phy2.12283.

- Church RM, 1984. Properties of the internal clock. Ann. N. Y. Acad. Sci 423, 566–582.

- Church RM, Deluty MZ, 1977. Bisection of temporal intervals. J. Exp. Psychol. Anim. Behav. Process 3, 216–228.

- Ciullo V, Spalletta G, Caltagirone C, Jorge RE, Piras F, 2016. Explicit time deficit in schizophrenia: systematic review and meta-analysis indicate it is primary and not domain specific. Schizophr. Bull 42, 505–518.

- Conti R, 2001. Time flies: investigating the connection between intrinsic motivation and the experience of time. J. Pers 10.1111/1467-6494.00134.

- Cools R, D’Esposito M, 2011. Inverted-U-shaped dopamine actions on human working memory and cognitive control. Biol. Psychiatry 69, e113–25.

- Coslett HB, Wiener M, Chatterjee A, 2010. Dissociable neural systems for timing: evidence from subjects with basal ganglia lesions. PLoS One 5, e10324.

- Coull JT, Cheng R-K, Meck WH, 2011. Neuroanatomical and neurochemical substrates of timing. Neuropsychopharmacology 36, 3–25.

- Coull JT, Hwang HJ, Leyton M, Dagher A, 2012. Dopamine precursor depletion impairs timing in healthy volunteers by attenuating activity in putamen and supplementary motor area. J. Neurosci 32, 16704–16715.

- Cui X, 2011. Hyperbolic discounting emerges from the scalar property of interval timing. Front. Integr. Neurosci 5, 24.

- Damier P, Hirsch EC, Agid Y, Graybiel AM, 1999. The substantia nigra of the human brain. II. Patterns of loss of dopamine-containing neurons in Parkinson’s disease. Brain 122 (Pt 8), 1437–1448.

- Daw ND, Courville AC, Touretzky DS, 2006. Representation and timing in theories of the dopamine system. Neural Comput. 18, 1637–1677.

- Ding S-L, Royall JJ, Sunkin SM, Ng L, Facer BAC, Lesnar P, Guillozet-Bongaarts A, McMurray B, Szafer A, Dolbeare TA, Stevens A, Tirrell L, Benner T, Caldejon S, Dalley RA, Dee N, Lau C, Nyhus J, Reding M, Riley ZL, Sandman D, Shen E, van der Kouwe A, Varjabedian A, Wright M, Zöllei L, Dang C, Knowles JA, Koch C, Phillips JW, Sestan N, Wohnoutka P, Zielke HR, Hohmann JG, Jones AR, Bernard A, Hawrylycz MJ, Hof PR, Fischl B, Lein ES, 2016. Comprehensive cellular-resolution atlas of the adult human brain. J. Comp. Neurol 524, 3127–3481.

- Drew MR, Fairhurst S, Malapani C, Horvitz JC, Balsam PD, 2003. Effects of dopamine antagonists on the timing of two intervals. Pharmacol. Biochem. Behav 75, 9–15.

- Drew MR, Simpson EH, Kellendonk C, Herzberg WG, Lipatova O, Fairhurst S, Kandel ER, Malapani C, Balsam PD, 2007. Transient overexpression of striatal D2 receptors impairs operant motivation and interval timing. J. Neurosci 27, 7731–7739.

- Droit-Volet S, Meck WH, 2007. How emotions colour our perception of time. Trends Cogn. Sci. (Regul. Ed.) 11, 504–513.

- Elsinger CL, Rao SM, Zimbelman JL, Reynolds NC, Blindauer KA, Hoffmann RG, 2003. Neural basis for impaired time reproduction in Parkinson’s disease: an fMRI study. J. Int. Neuropsychol. Soc 9, 1088–1098.

- Calipari ES, Ferris MJ, 2013. Amphetamine mechanisms and actions at the dopamine terminal revisited. J. Neurosci 33, 8923.

- Failing M, Theeuwes J, 2016. Reward alters the perception of time. Cognition 148, 19–26.

- Fiorillo CD, Tobler PN, Schultz W, 2003. Discrete coding of reward probability and uncertainty by dopamine neurons. Science 299, 1898–1902.

- Fiorillo CD, Tobler PN, Schultz W, 2005. Evidence that the delay-period activity of dopamine neurons corresponds to reward uncertainty rather than backpropagating TD errors. Behav. Brain Funct 1, 7.

- Fiorillo CD, Newsome WT, Schultz W, 2008. The temporal precision of reward prediction in dopamine neurons. Nat. Neurosci 11, 966–973.

- Floresco SB, 2013. Prefrontal dopamine and behavioral flexibility: shifting from an “inverted-U” toward a family of functions. Front. Neurosci 7, 62.

- Frank GKW, Oberndorfer TA, Simmons AN, Paulus MP, Fudge JL, Yang TT, Kaye WH, 2008. Sucrose activates human taste pathways differently from artificial sweetener. Neuroimage 39, 1559–1569.

- Fung BJ, Bode S, Murawski C, 2017a. High monetary reward rates and caloric rewards decrease temporal persistence. Proc. Biol. Sci 284 10.1098/rspb.2016.2759.

- Fung BJ, Murawski C, Bode S, 2017b. Caloric primary rewards systematically alter time perception. J. Exp. Psychol. Hum. Percept. Perform 43, 1925–1936.

- Gable PA, Poole BD, 2012. Time flies when you’re having approach-motivated fun: effects of motivational intensity on time perception. Psychol. Sci 23, 879–886.

- Gershman SJ, Moustafa AA, Ludvig EA, 2014. Time representation in reinforcement learning models of the basal ganglia. Front. Comput. Neurosci 7, 194.

- Gibbon J, 1977. Scalar expectancy theory and Weber’s law in animal timing. Psychol. Rev 84, 279–325.

- Gibbon J, 1991. Origins of scalar timing. Learn. Motiv 22, 3–38.

- Gibbon J, Church RM, Meck WH, 1984. Scalar timing in memory. Ann. N. Y. Acad. Sci 423, 52–77.

- Gjedde A, Kumakura Y, Cumming P, Linnet J, Møller A, 2010. Inverted-U-shaped correlation between dopamine receptor availability in striatum and sensation seeking. Proc. Natl. Acad. Sci. U. S. A 107, 3870–3875.

- Gouvea TS, Monteiro T, Motiwala A, Soares S, Machens C, Paton JJ, 2015. Striatal dynamics explain duration judgments. Elife 4. 10.7554/eLife.11386.

- Gregorios-Pippas L, Tobler PN, Schultz W, 2009. Short-term temporal discounting of reward value in human ventral striatum. J. Neurophysiol 101, 1507–1523.

- Guillin O, Abi-Dargham A, Laruelle M, 2007. Neurobiology of dopamine in schizophrenia. Int. Rev. Neurobiol 78, 1–39.

- Guru A, Seo C, Post RJ, Kullakanda DS, Schaffer JA, Warden MR, 2020. Ramping activity in midbrain dopamine neurons signifies the use of a cognitive map. bioRxiv. 10.1101/2020.05.21.108886.

- Hamilos AE, Assad JA, 2020. Application of a unifying reward-prediction error (RPE)-based framework to explain underlying dynamic dopaminergic activity in timing tasks. bioRxiv. 10.1101/2020.06.03.128272.

- Hamilos AE, Spedicato G, Hong Y, Sun F, Li Y, Assad JA, 2020. Dynamic dopaminergic activity controls the timing of self-timed movement. bioRxiv. 10.1101/2020.05.13.094904.

- Harrington DL, Boyd LA, Mayer AR, Sheltraw DM, Lee RR, Huang M, Rao SM, 2004. Neural representation of interval encoding and decision making. Brain Res. Cogn. Brain Res 21, 193–205.

- Harvey L, Monello L, 1974. Cognitive manipulation of boredom. In: Nisbett RE (Ed.), Thought and Feeling: Cognitive Alteration of Feeling States, pp. 74–82.

- Hills TT, Adler FR, 2002. Time’s crooked arrow: optimal foraging and rate-biased time perception. Anim. Behav 64, 589–597.

- Hollerman JR, Schultz W, 1998. Dopamine neurons report an error in the temporal prediction of reward during learning. Nat. Neurosci 1, 304–309.

- Howe MW, Tierney PL, Sandberg SG, Phillips PEM, Graybiel AM, 2013. Prolonged dopamine signalling in striatum signals proximity and value of distant rewards. Nature 500, 575–579.

- Jimura K, Chushak MS, Braver TS, 2013. Impulsivity and self-control during intertemporal decision making linked to the neural dynamics of reward value representation. J. Neurosci 33, 344–357.

- Jones CR, Jahanshahi M, 2009. The substantia nigra, the basal ganglia, dopamine and temporal processing. J. Neural Transm. Suppl 161–171.

- Jones CR, Malone TJ, Dirnberger G, Edwards M, Jahanshahi M, 2008. Basal ganglia, dopamine and temporal processing: performance on three timing tasks on and off medication in Parkinson’s disease. Brain Cogn. 68, 30–41.

- Karson CN, 1983. Spontaneous eye-blink rates and dopaminergic systems. Brain 106 (Pt 3), 643–653.

- Killeen PR, Fetterman JG, 1983. Time’s causes. Time 1–48.

- Killeen PR, Fetterman JG, 1988. A behavioral theory of timing. Psychol. Rev 95, 274–295.

- Kim KM, Baratta MV, Yang A, Lee D, Boyden ES, Fiorillo CD, 2012. Optogenetic mimicry of the transient activation of dopamine neurons by natural reward is sufficient for operant reinforcement. PLoS One 7, e33612.

- Kirkpatrick K, 2014. Interactions of timing and prediction error learning. Behav. Processes 101C, 135–145.

- Kleinginna PR, Kleinginna AM, 1981. A categorized list of motivation definitions, with a suggestion for a consensual definition. Motiv. Emot 5, 263–291.

- Kobayashi S, Schultz W, 2008. Influence of reward delays on responses of dopamine neurons. J. Neurosci 28, 7837–7846.

- Kopec CD, Brody CD, 2010. Human performance on the temporal bisection task. Brain Cogn. 74, 262–272.

- Lak A, Stauffer WR, Schultz W, 2014. Dopamine prediction error responses integrate subjective value from different reward dimensions. Proc. Natl. Acad. Sci. U. S. A 111, 2343–2348.