Abstract

The COVID-19 virus has caused and continues to cause unprecedented impacts on the life trajectories of millions of people globally. Recently, to combat the transmission of the virus, vaccination campaigns around the world have become prevalent. However, while many see such campaigns as positive (e.g., protecting lives), others see them as negative (e.g., the side effects that are not fully understood scientifically), resulting in diverse sentiments towards vaccination campaigns. In addition, the diverse sentiments have seldom been systematically quantified, let alone their dynamic changes over space and time. To shed light on this issue, we propose an approach to analyze vaccine sentiments in space and time by using supervised machine learning combined with word embedding techniques. Taking the United States as a test case, we utilize a Twitter dataset (approximately 11.7 million tweets) from January 2015 to July 2021 and measure and map vaccine sentiments (Pro-vaccine, Anti-vaccine, and Neutral) across the nation. In doing so, we can capture the heterogeneous public opinions within social media discussions regarding vaccination among states. Results show that positive sentiment in social media has a strong correlation with the actual vaccinated population. Furthermore, we introduce a simple ratio between Anti- and Pro-vaccine sentiment as a proxy to quantify vaccine hesitancy and show how our results align with other traditional survey approaches. The proposed approach illustrates the potential to monitor the dynamics of vaccine opinion distribution online, which, we hope, can help explain vaccination rates for the ongoing COVID-19 pandemic.

Keywords: COVID-19 Pandemic, Vaccination, Sentiment Analysis, Time & Space, Social Media, United States

1. Introduction

Over the last two decades there have been several disease outbreaks, such as H1N1 influenza, the Ebola and Zika viruses, and the current COVID-19 outbreak. Some of these diseases have been more localised than others (e.g., Ebola), but without exception they have brought tremendous economic losses and deaths. Take the ongoing COVID-19 pandemic as an example: declared an international public health emergency by the World Health Organization (WHO) in early 2020, it has now spread all around the world. The pandemic has affected hundreds of millions of people’s lives in many aspects, including environmental, psychological, social, and economic ones (Saadat et al., 2020, Saladino et al., 2020, Sharifi and Khavarian-Garmsir, 2020).

In order to prevent the spread of COVID-19 in society, governments in different countries have put in place various prevention and control measures, such as social distancing, stay-at-home constraints, closure of educational institutions and workplaces, and restrictions on internal and external movement (GÜNER et al., 2020). Although such countermeasures for infection control can slow the transmission of the disease (Ge et al., 2022, Lai et al., 2020, Ruktanonchai et al., 2020), thus far they cannot stop the disease from spreading entirely. One possible solution to stop the spread, however, is vaccination, which can also help reduce mortality and economic losses and help the world gradually return to some sort of normalcy.

However, notwithstanding the continuous development and maturation of vaccine research since the 18th century (Plotkin, 2014), the implementation of vaccination campaigns is still challenging. This is mainly due to the potential impacts of vaccines on human longevity and health, such as side effects that are not fully understood scientifically, and the concerns related to religion and philosophical beliefs (Calandrillo, 2004, Phipps, 2020). These impacts and concerns have slowly taken root in people’s consciousness, resulting in diverse public sentiments (e.g., positive, negative, or neutral) towards vaccination.

With the proliferation of social media platforms, such as Facebook, Twitter, Weibo, and so on, people now have more flexibility than ever to share their attitudes regarding vaccination (Liu, 2012). However, the convenience of the digital era has also put people in an environment flooded with diverse information. This information, especially that from Anti-vaccine groups who disseminate negative views about vaccines, can alter the public’s perceptions regarding vaccination. As such, vaccine hesitancy was identified by the WHO (2019) as one of the ten main threats to global health in 2019. Negative or hesitant vaccine sentiment raises risks of vaccine-preventable diseases (Dubé et al., 2013, Puri et al., 2020), contributing to a suboptimal uptake of vaccination. From this point of view, it is crucial to understand the heterogeneous public opinions regarding vaccination so as to provide insights for enhancing vaccine coverage.

One way to explore this is via sentiment analysis, which is one of the most active research fields in Natural Language Processing (NLP) and has been widely used to detect and classify sentiments from text data. Although social media platforms, as information carriers, have the potential to amplify negative voices on vaccination, they also serve as digital Petri dishes, opening up a host of new possibilities for sentiment analysis by making it more affordable and convenient to collect large-scale data. Recently, researchers have used different techniques, such as lexicon-based or learning-based sentiment analysis, to assess public attitudes towards vaccination in online social media (Hu et al., 2021, Villavicencio et al., 2021, Yousefinaghani et al., 2021, Yuan et al., 2019), but most of them focused solely on a short study period, and no one to our knowledge has compared the dynamic changes of vaccine sentiments before and after a disease outbreak over a prolonged period of time. We take the promising intersection of sentiment analysis and social media data as a starting point to further unpack the potential dynamic changes of the public’s vaccine attitudes over a long-term period. We argue that a sentiment analysis lens on large-scale social media data can complement the survey-based understanding of public opinions regarding vaccination with views from both spatial and temporal perspectives.

To illustrate this potential, we took the United States as a test case and utilized a Twitter dataset from January 2015 to July 2021. We started by developing a classifier to detect three vaccine sentiments (Pro-vaccine, Anti-vaccine, and Neutral) based on an approach that combines machine learning and word embedding techniques. Subsequently, taking the time of the COVID-19 outbreak as the demarcation point, we divided the study period into two phases: before and after the COVID-19 outbreak. In doing so, we can compare the three vaccine sentiments before and after the outbreak and as such reveal specific changes or trends in public attitudes towards vaccination in the United States. Moreover, we proposed a metric (A2P Ratio) derived from the identified Pro- and Anti-vaccine sentiments to evaluate vaccine hesitancy and validated it against the estimated vaccine hesitancy from the Centers for Disease Control and Prevention (CDC).

Doing this allows us to address questions such as: What is the dominant vaccine sentiment before and after the outbreak? Did vaccine sentiment change over time, and where did such changes take place? What are the relationships between different vaccine sentiments and the actual vaccination rates? These questions, and others like them, have direct policy implications, for example for discussions surrounding the effectiveness of interventions/strategies for enhancing vaccine uptake and immunization coverage, and the psychological, social, and political factors that sustain public trust in vaccines (Larson et al., 2011, Odone et al., 2015). We argue that tracking the dynamics of vaccine sentiments over space and time can generate informed insights for these questions. This is especially the case when combined with the analytical latitude offered by social media data, which give more possibilities for assessing vaccine sentiments at larger scales in terms of space and time.

In the remainder of the paper, we provide an overview of the vaccination background and the commonly used techniques for sentiment analysis (Section 2). After that, we describe the details of the data sets and the methodology utilized to identify public attitudes towards vaccination in the online discussion (Section 3). We discuss the results through a series of visualizations and specific state examples in Section 4 and, lastly, outline the core findings and potential future work in Section 5.

2. Background

Modern-day vaccinations can be traced back to the late 18th and early 19th century, when Edward Jenner created the world’s first ever vaccine to contain smallpox (Battley, 1982, Edward, 1802). However, the term vaccine at that time was only used to refer to the smallpox vaccine. It was not until Louis Pasteur and his cure for rabies in the late 19th century that the term vaccine became more broadly used (Stern and Markel, 2005). This medical cure for rabies changed the American public’s perceptions and expectations of science (Hansen, 1998) and led to the widespread adoption of other vaccines, such as those for measles, rubella, tetanus, and diphtheria. As such, vaccination campaigns were designated as one of the top ten public health achievements of the United States in the 20th century (CDC, 1999).

Although vaccination has been a crucial part of preventative health care that has saved millions of lives, some have negative opinions of it. One typical example is the autism-vaccine controversy, where Wakefield et al. (1998) linked the rise in the ratio of children with autism to measles, mumps, and rubella (MMR) vaccination. Although the claim was eventually proved false (Wakefield et al., 1998), people became conscious about vaccine safety not only in the United States but also more globally (Motta and Stecula, 2021). Over time, public debate regarding vaccination has gradually become polarized. People who support vaccination claim that it is part of a citizen’s responsibility to be vaccinated as it helps mitigate risks of disease transmission in society (Blume, 2006). Anti-vaccinists, by contrast, appeal more to human rights and liberties, fighting against the mandatory nature of vaccination based on concerns related to vaccine safety and to religious and philosophical beliefs (Calandrillo, 2004, Phipps, 2020).

The anti-vaccine movement may put vaccine practices at risk of being rejected, and its reach can be significantly amplified in the age of information because anti-vaccinists can disseminate negative views about vaccination through social media (Evrony and Caplan, 2017, Kata, 2012, Smith and Graham, 2019). As such, the anti-vaccine movement can alter public perceptions about vaccination, and the resulting vaccine hesitancy is one such risk. Vaccine hesitancy can be colloquially referred to as a kind of behavioral phenomenon in which people are not confident in or are dissatisfied with vaccination and may potentially delay or even refuse vaccination (Finney Rutten et al., 2021). Research has provided evidence that vaccine hesitancy is one of the factors leading to the decline of vaccine coverage, which raises disease transmission risks that, in practice, can be mitigated by vaccination (Dubé et al., 2013, Puri et al., 2020). This has increased difficulties in the uptake of vaccines, particularly in the context of the ongoing COVID-19 pandemic, which has caused and continues to cause unprecedented impacts on the life trajectories of millions of people globally (Palacios Cruz et al., 2020, Velavan and Meyer, 2020, Xiong et al., 2020). As such, understanding public attitudes towards vaccination in response to the COVID-19 pandemic is of utmost importance to the current global public health emergency.

In this context, many studies have used different sentiment analysis techniques to unfold the public sentiments or their emotions regarding vaccination, especially in the era of smart technologies, where crowd sourced data (e.g., social media data, customer reviews) have become more and more available (Yang et al., 2017). These data sources provide researchers more opportunities and flexibility to perform sentiment analysis on a large scale. Generally, sentiment analysis techniques can be classified into two major categories, namely lexical rule-based approaches and learning-based methods. More precisely, the lexical rule-based approaches utilize the manually created and validated lexicon with pre-assigned valence scores to analyze sentiment polarities. One typical example is the Valence Aware Dictionary and sEntiment Reasoner (VADER) model developed by Hutto and Gilbert (2014). VADER has the ability to capture both the polarity (positive or negative) and intensity (how positive or negative) of sentiments. For example, Hu et al. (2021) utilized VADER combined with topic modeling to measure public perceptions on COVID-19 vaccines by different topics extracted from tweets in the United States and compared them to spatio-temporal shifts of the identified sentiments from early 2020 to early 2021. Similarly, Yousefinaghani et al. (2021) used VADER to measure public opinions and emotions on vaccinations with respect to emotional expression based on Twitter posts, but in multiple countries. However, as noted earlier, the lexical rule-based approaches require manually generating and verifying predefined dictionaries, which can be a time-intensive process and the lexicon may need to be revised after a certain time. In addition, they only work on individual words without evaluating the context of words, which may result in errors, especially for sarcastic or ironic texts.

Therefore, other studies have turned their attention to more automated approaches, namely learning-based sentiment analysis methods such as machine learning. Machine learning algorithms generally require textual data to be transformed into numeric representations before they can process them. To this end, different methods have been used, for example, the Term Frequency-Inverse Document Frequency (TF-IDF) algorithm. TF-IDF is based on the Bag-of-Words (BoW) model, which measures the mathematical importance of words in documents from a probabilistic point of view (Aizawa, 2003). For example, Yuan et al. (2019) investigated the polarized online MMR vaccine debate on Twitter after the California Disneyland measles outbreak in 2015. They used TF-IDF to extract features of the input dataset before classifying sentiments with different machine learning techniques. In another example, Villavicencio et al. (2021) studied the stance of Filipinos regarding COVID-19 vaccines. They also utilized TF-IDF to generate word vectors from Twitter posts and performed sentiment analysis using the Naïve Bayes classification algorithm. However, the traditional TF-IDF approach may be slow for enormous vocabularies because it works directly in the word-count space. Moreover, by design, it cannot capture the position of words in a text or the semantic relations between them.

In order to overcome these limitations, word embedding techniques, such as Word2Vec (Mikolov et al., 2013), have been proposed, which can not only efficiently compute vector representations of words for large datasets, but can also capture the syntactic and semantic relations among words (Rezaeinia et al., 2019). Although Word2Vec has been used in sentiment analysis related to the COVID-19 pandemic (e.g., Mostafa, 2021, Sciandra, 2020, Yu et al., 2020), to the authors' knowledge, no one has yet used it to analyze public attitudes towards vaccination in the online discussion, let alone the dynamic changes of vaccine sentiments over space and time. Against this backdrop, we propose to analyze public attitudes towards vaccination in the online debate from both spatial and temporal perspectives through the combination of Word2Vec and supervised machine learning techniques.

3. Methodology

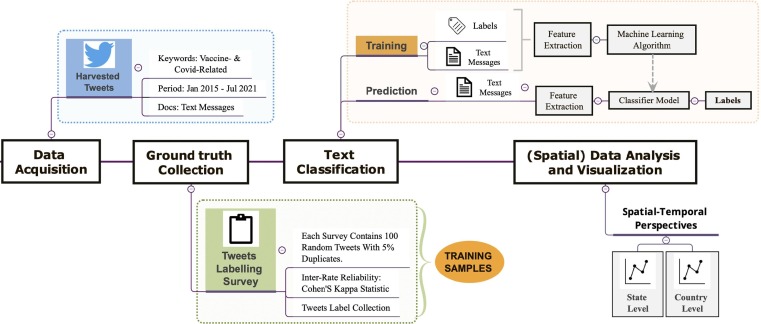

Sentiment analysis has been widely used in different fields to study public perceptions of health-related issues, especially in the context of infodemiology related to the COVID-19 pandemic. However, as far as we know, no one has compared public attitudes towards vaccination before and after a major disease outbreak. In this paper, we take the United States as a case study and utilize a large-scale Twitter dataset to understand the dynamic public attitudes on social media towards vaccination from both spatial and temporal perspectives. More precisely, we start by introducing the Twitter dataset used in this study (Section 3.1), followed by hand-labeled tweets collection through the designed dynamic online labeling questionnaire (Section 3.2). Then we demonstrate an ensemble approach combining Word2Vec and machine learning techniques to develop a classifier for sentiment analysis (Section 3.3). The classifier was subsequently applied to identify the sentiment of unlabeled tweets. Lastly, in Section 3.4, we introduce a simple ratio between Anti and Pro-vaccine as a proxy to quantify vaccine hesitancy. Fig. 1 illustrates an overview of the research outline.

Fig. 1.

An overview of the research outline.

3.1. Twitter data collection

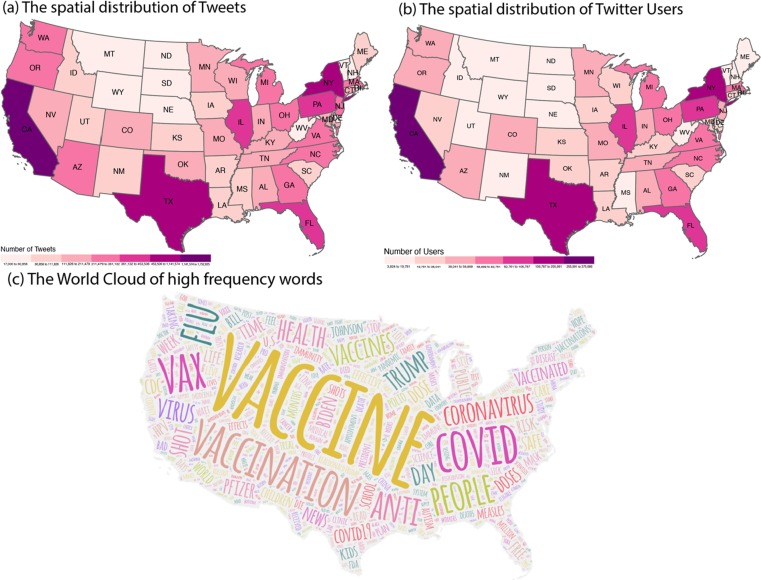

To illustrate the aforementioned approach, we collected tweets sent in the United States between January 2015 and July 2021 utilizing the geosocial system (Croitoru et al., 2013), using the keyword “vaccination” or its derivatives that are often encountered in social media (i.e., “vaccine”, “vaccines”, “vax”, “vaxine”, and “vaxx”), which have been shown to be representative of the vaccine debate in social media (e.g., Radzikowski et al., 2016). However, due to the data collection infrastructure of the geosocial system, and the Twitter API more generally, non-related tweets can show up in the dataset. For example, if someone wrote “Who should get the new vaccine?” and another person quoted this tweet saying “Apparently me sometime before today”, both tweets would be assigned the vaccination label even if the latter one does not directly mention vaccines. To obtain a cleaner dataset, we removed these non-related tweets, yielding a total of 11,676,612 tweets sent by 2,591,363 users. Among them, 0.21% (24,791) have precise GPS locational information, where the coordinates were directly collected when a user shared their location at the time of the tweet with a GPS-enabled device. A further 3.07% (358,443) were geolocated by Twitter using its API. The remainder of the tweets (96.7%) had moderate locational information, as they were geolocated based on the place name given in users' public profile description. Generally, the place name can be used to infer a user's location at city or state level using a gazetteer lookup, such as Geonames (Schulz et al., 2013) and Yahoo! Placemaker (Kinsella et al., 2011), which has been shown to be an effective way to geolocate tweets (e.g., Cheng et al., 2010, Croitoru et al., 2013, Hecht et al., 2011). Fig. 2 displays the spatial distribution of tweets and Twitter users at the state level, as well as the word cloud of high-frequency words appearing in the Twitter dataset used.

Fig. 2.

The spatial distributions of tweets and Twitter users (a) the spatial distribution of Tweets; (b) the spatial distribution of Twitter users; (c) Word Cloud of high frequency words in the Twitter data set.

In order to check whether the Twitter data is representative of the vaccination narrative at large, we compared the percentage of keywords that appeared in tweets every month with the proportion of keyword queries analyzed in Google Trends. Although certain months may have either more or fewer tweets with the same keywords than Google Trends, we can observe a strong positive correlation (R = 0.89, p < 0.001) between the two, as shown in Fig. 3. In addition, we can also see how Twitter has the ability to capture important news or announcements (see Fig. 3(c)). Both analyses indicate that the Twitter data is representative of the vaccination debate and can be used for subsequent vaccine sentiment analysis.
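The comparison above rests on a Pearson correlation between two monthly series. As a minimal sketch, assuming each series is simply a list of monthly shares, the coefficient could be computed as follows. The function name `pearson_r` and the sample values are hypothetical and are not taken from the study's data.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (>= 2)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and of squared deviations around each mean.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical monthly shares (illustrative values only, not the paper's data).
twitter_share = [1.2, 1.5, 1.1, 2.0, 3.4, 2.8]
trends_share = [1.0, 1.6, 1.3, 2.2, 3.0, 2.9]
r = pearson_r(twitter_share, trends_share)
```

A significance test (the reported p-value) would need an additional step that is not shown here.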

Fig. 3.

Comparison between Twitter data and Google Trends. (a) Distribution of keyword searches and tweets over time; (b) Correlation between Google Trends and Twitter data for keyword searches; (c) Important news or announcements captured in Twitter activity (Note: the period is the shaded area in (a)).

3.2. Hand-labeled tweets collection

In order to carry out supervised learning, a certain number of the tweets needs to be classified as pro, anti or neutral with respect to their vaccine stance. This lays the foundation for developing the sentiment classifier during the model training process (Section 3.3). The labeling of tweets was done by creating a dynamic online labeling questionnaire. Each questionnaire contained 100 random tweets, and each tweet needed to be assigned a label from the three sentiment options: “Pro-vaccine”, “Neutral”, and “Anti-vaccine”. Table 1 lists examples of manually labeled tweets of each type of sentiment.

Table 1.

Examples of hand-labeled tweets of each type of sentiment.

| Sentiment Labels | Examples |
|---|---|
| Pro-vaccine | Measles can be serious for young children. Make sure your child is up to date on MMR vaccine. |
| Neutral | State records show Blair County kindergarten measles vaccination rate is among lowest in the state. |
| Anti-vaccine | The CDC states the 0 have died in the past 10 years of #measles while the vaccine has killed 108 according to VAERS. |

To quantify the reliability of the hand-labeled tweets, we made some adjustments to the designed questionnaire. Specifically, we first created a sample pool that contained 15,000 random tweets. Each tweet was assigned a random value from a uniform distribution and was ranked based on the assigned value. Similarly, we generated 100 random values from the uniform distribution for each questionnaire and ranked them from 1 to 100. Subsequently, we matched the ranks in the sample pool and extracted the matching tweets. It is important to point out that the ranks in both the sample pool and the questionnaire vary each time the random selection is conducted. As such, we can ensure that there are some overlapping tweets between questionnaires to evaluate how consistent labels are across different participants. Moreover, we also made sure there were 5% repeated tweets in each questionnaire. This ensured that a participant was presented with 5 of the same tweets twice, which allowed us to examine participants' self-consistency. The dynamic questionnaires were sent to 150 participants randomly. In the end, we received 96 responses, resulting in a total of 5,969 unique hand-labeled tweets. Simultaneously, we gathered all duplicated tweets from the questionnaires and asked two researchers with domain knowledge to label them manually, and used these labels as references in the reliability measurement.
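The questionnaire design described above can be sketched roughly as follows. This is a simplified illustration, not the authors' implementation: it re-ranks the pool with fresh uniform draws on every call and takes the top-ranked tweets, and it assumes that the 5 repeated tweets count towards the 100 items shown (95 unique tweets plus 5 repeats), which the text does not state explicitly. The function name `build_questionnaire` and its parameters are hypothetical.

```python
import random


def build_questionnaire(pool, size=100, n_repeats=5, rng=None):
    """Draw one questionnaire: random tweets from the pool plus a few repeats."""
    rng = rng or random.Random()
    n_unique = size - n_repeats  # assumption: repeats are part of the 100 items
    if n_unique > len(pool) or n_repeats > n_unique:
        raise ValueError("pool too small or too many repeats requested")
    # Give every pooled tweet a fresh Uniform(0, 1) value and rank by it, so the
    # ranking (and therefore the selection) changes on every call.
    ranked = sorted(pool, key=lambda _tweet: rng.random())
    unique_items = ranked[:n_unique]
    # Show a handful of the selected tweets a second time to test self-consistency.
    repeats = rng.sample(unique_items, n_repeats)
    items = unique_items + repeats
    rng.shuffle(items)
    return items


pool = [f"tweet-{i}" for i in range(15000)]  # placeholder tweet identifiers
questionnaire = build_questionnaire(pool, rng=random.Random(0))
```

Because every questionnaire is drawn from the same pool, some tweets will also appear in more than one questionnaire, which is what makes the cross-participant comparison possible.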

Reliability measurement aims to identify participants who are not only self-consistent (i.e., internal consistency, which refers to the consistency of a participant's responses when shown the same tweet multiple times) but also have a certain degree of agreement with others in labeling behavior (i.e., inter-rater reliability, which refers to the consistency between different participants in their judgments of tweet sentiment). As the quality of the hand-labeled tweets has profound implications for the model development, we only utilized the hand-labeled tweets from participants who passed the reliability test. To be precise, we first measured the percentage of agreement on the duplicated tweets for each participant and only retained participants whose self-agreement was higher than 50%. Afterward, we used Cohen’s kappa statistic (McHugh, 2012) to examine the inter-rater reliability between participants and the researchers and excluded participants with scores below 0.4 (i.e., moderate agreement). In doing so, we identified 39 reliable participants, with a total of 2,032 unique hand-labeled tweets. Beyond ensuring data quality, we expanded the training data, while still preserving its quality, by combining it with other tweets from the same data corpus that were labeled by Yuan et al. (2019). In the end, we obtained a total of 7,086 hand-labeled tweets for subsequent sentiment analysis. For interested readers, we provide source code and labeled tweets at: https://figshare.com/s/7b6a91c6949d63280e99.
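The two reliability checks can be illustrated with a small sketch. The thresholds (self-agreement above 50%, kappa of at least 0.4) come from the text. The function names, the label strings, and the example ratings are hypothetical.

```python
from collections import Counter


def self_agreement(first_pass, second_pass):
    """Share of repeated tweets that received the same label both times."""
    if len(first_pass) != len(second_pass) or not first_pass:
        raise ValueError("need two non-empty label lists of equal length")
    matches = sum(a == b for a, b in zip(first_pass, second_pass))
    return matches / len(first_pass)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the two raters' marginal label frequencies.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single, identical label throughout
    return (observed - expected) / (1 - expected)


def is_reliable(first_pass, second_pass, participant, reference):
    """Apply both filters: self-agreement > 50% and kappa >= 0.4."""
    return (self_agreement(first_pass, second_pass) > 0.5
            and cohens_kappa(participant, reference) >= 0.4)


# Hypothetical labels for six duplicated tweets.
participant = ["pro", "pro", "anti", "neutral", "pro", "anti"]
reference = ["pro", "pro", "anti", "pro", "pro", "neutral"]
kappa = cohens_kappa(participant, reference)
```

In this toy case the two raters agree on 4 of 6 tweets, chance agreement is 15/36, and kappa works out to 3/7, just above the 0.4 cut-off.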

3.3. Sentiment classification

Sentiment analysis is a common technique used to identify and categorize public attitudes and emotions expressed on social media platforms in response to different events - in our case, the vaccination debate. To tackle the sentiment analysis, we began with a minimal text preprocessing (Section 3.3.1), followed by machine labeling (Section 3.3.2).

3.3.1. Preprocessing

Text preprocessing generally relates to removing noise in the contents that does not contribute to the classification process. In this paper, we cleaned the contents by pruning multiple consecutive identical characters, removing Unicode characters, hashtags, and URLs, and replacing emoji with the respective emotion, which is common practice (e.g., Solomon, 2021, Surikov and Egorova, 2020). In addition, as tweets are limited in their length and people may use the same language to support opposing viewpoints, sentiment classification on tweets is sensitive to some preprocessing steps, such as stop word and punctuation removal (Saif et al., 2014, Symeonidis et al., 2018). For example, one person may post “It is insane to get vaccinated” while another may say “It is insane to not get vaccinated”, and if we remove the stop word “not”, a classifier may not distinguish the difference between the two. Therefore, we decided to keep both stop words and punctuation to help maintain the context of each tweet.
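A minimal sketch of these cleaning steps is given below, under several assumptions that the text leaves open: runs of three or more identical characters are pruned to two, "removing hashtags" is read as dropping the whole hashtag token, "Unicode characters" is read as non-ASCII characters, and the emoji mapping is a tiny hypothetical stand-in for a full emoji lexicon. Stop words and punctuation are deliberately left in place.

```python
import re

# Hypothetical, deliberately tiny emoji-to-emotion mapping for illustration.
EMOJI_TO_WORD = {"😀": "happy", "😡": "angry", "😢": "sad"}

URL_RE = re.compile(r"https?://\S+|www\.\S+")
HASHTAG_RE = re.compile(r"#\w+")
REPEAT_RE = re.compile(r"(.)\1{2,}")  # any character repeated 3+ times


def preprocess(text):
    """Clean a tweet while keeping stop words and punctuation."""
    # Replace emoji first, before non-ASCII characters are stripped.
    for emoji, word in EMOJI_TO_WORD.items():
        text = text.replace(emoji, f" {word} ")
    text = URL_RE.sub(" ", text)
    text = HASHTAG_RE.sub(" ", text)  # assumption: drop the whole hashtag
    text = text.encode("ascii", "ignore").decode("ascii")  # drop non-ASCII
    text = REPEAT_RE.sub(r"\1\1", text)  # "sooooo" -> "soo", "!!!!" -> "!!"
    return " ".join(text.split())  # normalise whitespace


cleaned = preprocess("It is insane to not get vaccinated!!!! 😡 #vaxx https://t.co/abc")
```

Note that the negation "not" survives the cleaning, which is the point of keeping stop words in this setting.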

3.3.2. Machine labeling

After preprocessing the tweets, we applied Word2Vec to convert words into a vector representation. Compared to other methods like the BoW approach used within TF-IDF, the advantage of Word2Vec is that it represents a word in a relatively low-dimensional vector space and can capture non-trivial (grammatical) associations between words while preserving their contexts. That is, words with semantic relations (i.e., similar meanings) are more likely to appear close to each other in the vector space, and vice versa. In this study, we used a pre-trained word2vec model released by Google (Rezaeinia et al., 2019) to compute the numeric representation of the input words for each tweet. The embeddings were then used as the input to a set of machine learning algorithms for classification, including Naive Bayes, Support Vector Machine (SVM), Logistic Regression, and Extreme Gradient Boosting (XGBoost), in order to see which one provides the best performance. More specifically, we used stratified sampling to split the hand-labeled tweets into a training (80%) and a test (20%) set. The training set was utilized for model training and the test set was used to evaluate model performance. In addition, we used 5-fold Cross-Validation (CV) and hyperparameter tuning to optimize the model performance. By comparing the performance on the test set, we found that XGBoost stands out from the other algorithms with an accuracy of 74% (see Table 3). The hyperparameter settings of XGBoost are shown in Table 2. Then, the XGBoost classifier was applied to detect the sentiment (i.e., Pro-vaccine, Anti-vaccine, Neutral) of each tweet in the rest of the data corpus.
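Two steps of this pipeline can be sketched without external libraries: turning a tweet into a single feature vector and making a stratified 80/20 split. The text does not say how word vectors are combined per tweet, so averaging them is an assumption here, chosen because it is a common baseline. The toy two-dimensional embeddings and the function names `tweet_vector` and `stratified_split` are hypothetical stand-ins for the pre-trained model and the authors' code.

```python
import random
from collections import defaultdict


def tweet_vector(tokens, embeddings, dim):
    """Average the vectors of known tokens (assumed aggregation, see lead-in)."""
    vectors = [embeddings[token] for token in tokens if token in embeddings]
    if not vectors:
        return [0.0] * dim  # no known word: fall back to a zero vector
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return (train_indices, test_indices) preserving the label proportions."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)
    train, test = [], []
    for indices in by_label.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


# Toy embeddings standing in for the pre-trained word2vec vectors.
toy_embeddings = {"get": [1.0, 0.0], "vaccinated": [0.0, 1.0]}
features = tweet_vector(["get", "vaccinated", "today"], toy_embeddings, dim=2)

labels = ["Pro-vaccine"] * 10 + ["Anti-vaccine"] * 5 + ["Neutral"] * 5
train_idx, test_idx = stratified_split(labels)
```

The resulting feature vectors and index lists would then be passed to whichever classifier is being compared.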

Table 3.

Performance metrics of the XGBoost classifier.

| | Precision | Recall | F1 Score |
|---|---|---|---|
| Anti-vaccine | 0.78 | 0.61 | 0.69 |
| Neutral | 0.71 | 0.59 | 0.65 |
| Pro-vaccine | 0.74 | 0.87 | 0.80 |
| Macro average | 0.75 | 0.69 | 0.71 |
| Weighted average | 0.74 | 0.74 | 0.74 |
| Accuracy | 0.74 | | |

Table 2.

Parameter settings that generated the best performance for the XGBoost classifier.

| Parameters | Values |
|---|---|
| Number of trees | 500 |
| Maximum depth of a tree | 8 |
| Learning rate | 0.2 |
| γ | 0.0 |
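For readers who want to reuse these settings, they could be written as a plain configuration dictionary. The values are those in Table 2. The keyword names follow the scikit-learn style wrapper of the xgboost package (`XGBClassifier`), and mapping "γ" to its `gamma` argument is an assumption about how the table corresponds to that API.

```python
# Values from Table 2; key names assume xgboost's XGBClassifier keywords.
XGB_PARAMS = {
    "n_estimators": 500,   # number of trees
    "max_depth": 8,        # maximum depth of a tree
    "learning_rate": 0.2,
    "gamma": 0.0,          # assumed to be the table's γ (minimum loss reduction to split)
}

# With the xgboost package installed, the model could be built as:
#   from xgboost import XGBClassifier
#   model = XGBClassifier(**XGB_PARAMS)
```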

3.4. Vaccine hesitancy estimation

In addition to detecting the three sentiments (Pro-vaccine, Anti-vaccine, Neutral), we took one step further to analyze vaccine hesitancy. As discussed earlier, vaccine hesitancy is one of the main challenges for improving vaccine coverage. However, precise quantification of vaccine hesitancy can be challenging as vaccine hesitancy itself can be affected by various factors (e.g., confidence, complacency, convenience, demographic, cultural, social, and political factors), and can vary across time, place, and vaccines (Dubé et al., 2013, MacDonald, 2015). Traditional methods for estimating vaccine hesitancy generally rely on surveys or focus group discussions (e.g., Detoc et al., 2020, Krishnamoorthy et al., 2019, Larson et al., 2016). These methods are inherently time-consuming, labor-intensive, and difficult to scale up. Moreover, survey samples may also be geographically biased; for example, individuals who live in poorly accessible areas could be underrepresented. To circumvent these limitations, and given the nuanced nature of vaccine hesitancy, we proposed a metric called “A2P Ratio” (i.e., the ratio of Anti-vaccine to Pro-vaccine) as a simple proxy to quantify it. The larger the ratio, the higher the vaccine hesitancy. To validate the proposed metric, we further compared it with the estimated vaccine hesitancy from the CDC at the state level. By doing so, we are able to identify the potential correlation between the two, so as to use the “A2P Ratio” as a simple proxy to quantify vaccine hesitancy in a more efficient way. However, it is important to stress that the “A2P Ratio” introduced here only focuses on sentiment derived from the online vaccination discussions, and as such works as a simple alternative way to provide informed insights into vaccine hesitancy. Yet, developing a more comprehensive understanding of vaccine hesitancy will require incorporating many of the other aforementioned factors, such as demographic, political, and cultural factors, which is beyond the scope of the current paper. We will come back to this issue in Section 5.
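As a worked illustration of the metric, the ratio can be computed directly from Anti-vaccine and Pro-vaccine counts. The sketch below applies it to the nationwide user counts reported in Table 4. Whether the ratio is taken over users or tweets is not specified at this point in the text, so using user counts is an assumption, and the function name `a2p_ratio` is hypothetical.

```python
def a2p_ratio(anti_count, pro_count):
    """Ratio of Anti-vaccine to Pro-vaccine counts (higher = more hesitancy)."""
    if pro_count <= 0:
        raise ValueError("the Pro-vaccine count must be positive")
    return anti_count / pro_count


# Nationwide user counts from Table 4 (assumption: users, not tweets).
ratio_before = a2p_ratio(178_339, 544_365)    # before COVID-19
ratio_after = a2p_ratio(675_706, 1_631_444)   # after COVID-19
```

With these counts the ratio rises from roughly 0.33 before the outbreak to roughly 0.41 after it. The same function could be applied per state once state-level counts are available.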

4. Results

Building upon our methodology, we now turn to the results of our study. We will first discuss the results of the classified vaccine sentiments at both the country and state levels. This will be followed by an exploration of the potential correlation between the “Pro-vaccine” sentiment and the actual vaccination records at the state level. Finally, we will compare the “A2P Ratio” with the estimated vaccine hesitancy from the CDC to evaluate the rationality of the proposed metric for estimating vaccine hesitancy.

Table 4 displays the results that compare the public’s attitudes towards vaccination from Twitter before and after the COVID-19 outbreak. We used “1st December 2019”, which is the date of symptom onset of the 1st patient in Wuhan, China (Huang et al., 2020), as the demarcation point and split the entire study period into two phases. In other words, any dates before the demarcation point were called “before COVID-19”, otherwise they were referred to as “after COVID-19”. Among the Twitter users in the whole dataset, “Pro-vaccine” users accounted for the greatest proportion (56.97%), followed by the “Anti-vaccine” users, which made up 22.80% of total users, and the proportion of “Neutral” users was the smallest (20.22%). This result indicated that the positive vaccine sentiment was the dominant opinion. Nonetheless, the rate of “Pro-vaccine” users decreased after the outbreak, dropping from 61.56% to 56.20%. Concurrently, the percentage of “Anti-vaccine” users revealed a modest increment after the outbreak, which grew from 20.17% to 23.28%, implying that the outbreak indeed moderately shifted public attitudes towards vaccination.
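The before/after comparison amounts to assigning each record to a phase by date and computing the sentiment shares within each phase. A minimal sketch follows, using the demarcation date given above. The function names and the toy records are hypothetical, and the sketch counts records rather than distinct users, which is a simplification.

```python
from collections import Counter
from datetime import date

DEMARCATION = date(2019, 12, 1)  # symptom onset of the first patient


def phase(day):
    """Dates before the demarcation point are 'before', all others 'after'."""
    return "before COVID-19" if day < DEMARCATION else "after COVID-19"


def sentiment_shares(records):
    """Map each phase to the share of every sentiment label within it."""
    counts = {}
    for day, sentiment in records:
        counts.setdefault(phase(day), Counter())[sentiment] += 1
    return {
        name: {label: n / sum(counter.values()) for label, n in counter.items()}
        for name, counter in counts.items()
    }


# Toy (date, sentiment) records for illustration only.
records = [
    (date(2019, 1, 5), "Pro-vaccine"),
    (date(2019, 6, 1), "Anti-vaccine"),
    (date(2020, 3, 1), "Pro-vaccine"),
    (date(2020, 4, 1), "Pro-vaccine"),
    (date(2021, 1, 1), "Neutral"),
    (date(2021, 2, 1), "Anti-vaccine"),
]
shares = sentiment_shares(records)
```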

Table 4.

Number of Tweets and Twitter Users by each sentiment category.

| Sentiments | Number of Users | Number of Tweets |
|---|---|---|
| Entire period | | |
| Pro-vaccine | 2,055,959 (56.97%) | 7,190,846 (61.58%) |
| Neutral | 729,868 (20.22%) | 2,158,271 (18.48%) |
| Anti-vaccine | 822,926 (22.80%) | 2,327,495 (19.93%) |
| Before COVID-19 | | |
| Pro-vaccine | 544,365 (61.56%) | 1,655,642 (60.56%) |
| Neutral | 161,609 (18.28%) | 457,925 (16.75%) |
| Anti-vaccine | 178,339 (20.17%) | 620,103 (22.68%) |
| After COVID-19 | | |
| Pro-vaccine | 1,631,444 (56.20%) | 5,535,204 (61.89%) |
| Neutral | 595,655 (20.52%) | 1,700,346 (19.01%) |
| Anti-vaccine | 675,706 (23.28%) | 1,707,392 (19.09%) |
| Average number of tweets per user: 5 tweets | | |
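As a quick check of the percentages in Table 4, the user shares and their change across the two phases can be recomputed from the raw counts. The sketch below uses only the user counts reported in the table; the variable and function names are illustrative.

```python
# User counts copied from Table 4.
USERS = {
    "Before COVID-19": {
        "Pro-vaccine": 544_365,
        "Neutral": 161_609,
        "Anti-vaccine": 178_339,
    },
    "After COVID-19": {
        "Pro-vaccine": 1_631_444,
        "Neutral": 595_655,
        "Anti-vaccine": 675_706,
    },
}


def shares(counts):
    """Convert raw counts into percentages rounded to two decimals."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 2) for label, n in counts.items()}


before = shares(USERS["Before COVID-19"])
after = shares(USERS["After COVID-19"])

# Change in percentage points between the two phases.
change = {label: round(after[label] - before[label], 2) for label in before}
```

With these counts, the “Pro-vaccine” share falls by 5.36 percentage points and the “Anti-vaccine” share rises by 3.11 percentage points, in line with the figures quoted in the text.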

To further investigate the dynamics of vaccine sentiment over time, we broke down the proportions of the three vaccine sentiments by year. Fig. 4 shows the percentages of users (orange) and tweets (green) for the three sentiments in each year. The share of “Anti-vaccine” users peaked in 2020 and then shrank slightly in 2021, although it remained higher than before the outbreak. One possible reason is that the uptake of the coronavirus vaccine(s) is in some cases accompanied by side effects that have not been comprehensively explained scientifically (Ledford, 2020). Moreover, the severity of the side effects (e.g., minor to moderate adverse effects such as fever, headache, tiredness, and muscle pain, or serious safety problems such as anaphylaxis and thrombosis with thrombocytopenia syndrome (CDC, 2020)) varies across vaccine brands and individuals (Menni et al., 2021), raising vaccine safety concerns. This finding coincides with the results of Yousefinaghani et al. (2021), who found that negative vaccine sentiment increased significantly in late 2020, when different brands of coronavirus vaccines came out. A similar trend was observed when disaggregating the sentiments at the state level, as shown in Fig. 5. For most states, the share of “Anti-vaccine” users increased in 2020 compared to 2019 and dropped minimally in 2021, while the share of “Pro-vaccine” users moved in the opposite direction.

Fig. 4.

Distribution of tweets and Twitter users by different sentiment before and after the COVID-19 outbreak.

Fig. 5.

Distribution of state-level vaccination sentiment from 2015 to 2021, (a) Percentage of Pro-vaccine users; (b) Percentage of Anti-vaccine users.

Moreover, to understand the potential correlation between the positive vaccine attitude online and the actual vaccination rate offline, especially after the COVID-19 outbreak, we compared the odds ratio of Pro-vaccine users after the outbreak with that of coronavirus vaccinations in each state. The coronavirus vaccination data come from “Our World in Data” (Ritchie et al., 2020), which records the total number of people in each state who have been fully vaccinated and is updated daily according to governmental reports. To be in line with the study period, we chose the number of vaccinations reported on 6th July 2021 as the reference for the comparison. The odds ratio is a statistic generally used in medical studies (Bland and Altman, 2000). However, it is increasingly being used in a geographic context, especially in social media analysis, to alleviate size-related issues such as the uneven spatial distribution of tweets (e.g., Shelton et al., 2015, Zook et al., 2017). In this paper, we calculated the odds ratios of the “Pro-vaccine” users and vaccination records as follows:

| (4.1) |

| (4.2) |

where refers to the total numbers.
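Equations (4.1) and (4.2) are not legible in this version of the text, so the following is only a sketch under a stated assumption: it uses the share-over-share form of the odds ratio that is common in geographic analyses of social media, i.e., a state's share of the phenomenon divided by its share of a reference total. The function name, the choice of reference totals, and the state-level counts are hypothetical.

```python
def odds_ratio(local_count, total_count, local_reference, total_reference):
    """Assumed form: (local share of phenomenon) / (local share of reference)."""
    return (local_count / total_count) / (local_reference / total_reference)


# Hypothetical state-level counts, for illustration only.
pro_users = {"A": 300, "B": 500, "C": 200}   # Pro-vaccine users per state
all_users = {"A": 400, "B": 1000, "C": 600}  # all users per state (assumed reference)

total_pro = sum(pro_users.values())
total_all = sum(all_users.values())

pro_odds = {
    state: odds_ratio(pro_users[state], total_pro, all_users[state], total_all)
    for state in pro_users
}
```

Under this form, a value above 1 means a state holds a larger share of Pro-vaccine users than its share of all users would suggest, and a value below 1 means the opposite. The same function could be applied to vaccination counts with population as the reference, again as an assumption.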

Fig. 6 (a) and (b) display the spatial distribution of the odds ratios of the “Pro-vaccine” users and the vaccination records, respectively. The results reveal geographic differences in Pro-vaccine sentiment on Twitter. More specifically, states such as Massachusetts (MA), Connecticut (CT), Vermont (VT), Colorado (CO), Washington (WA), and New York (NY) had relatively higher Pro-vaccine odds than other states (see Fig. 6 (a)). Part of the reason could be the relatively complete health systems in these states, as it has been shown that a well-functioning health system is crucial for improving vaccine coverage (Lahariya, 2015). According to the Commonwealth Fund’s (2020) scorecard on state health system performance, which is based on a series of indicators including access to health care, quality of care, service use and costs of care, health outcomes, and income-based health care disparities, these states were ranked in the top 10. The spatial pattern of Pro-vaccine odds follows a similar trend to that of the actual vaccination rate (see Fig. 6 (b)), which implies that the positive attitude towards vaccines identified from social media data can, to some extent, reflect the actual vaccination rate offline. This was further validated by measuring the correlation coefficient between the two. Fig. 6 (c) presents the correlation between the odds ratio of actual vaccination records and the odds ratio of Pro-vaccine users, where a positive correlation () between the two was observed. We argue that the proposed approach for identifying positive vaccine sentiments online can be used as an indicator for evaluating offline vaccination rates.
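The correlation check described above can be sketched as follows. The text does not state which correlation coefficient was used, so this sketch assumes Pearson's r; the odds ratio values are hypothetical and serve only to show the calculation.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Hypothetical state-level odds ratios, for illustration only.
pro_vaccine_odds = [0.8, 1.0, 1.2, 1.5]
vaccination_odds = [0.9, 0.95, 1.1, 1.3]
r = pearson_r(pro_vaccine_odds, vaccination_odds)
```

A rank-based coefficient such as Spearman's would be a reasonable alternative if the state-level odds ratios were strongly skewed.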

Fig. 6.

Correlation between Pro-vaccine users and actual vaccination records (a) Spatial distribution of odds ratio of Pro-vaccine users; (b) Spatial distribution of odds ratio of actual vaccination records; (c) Correlation between the Pro-vaccine users and the actual vaccination records.

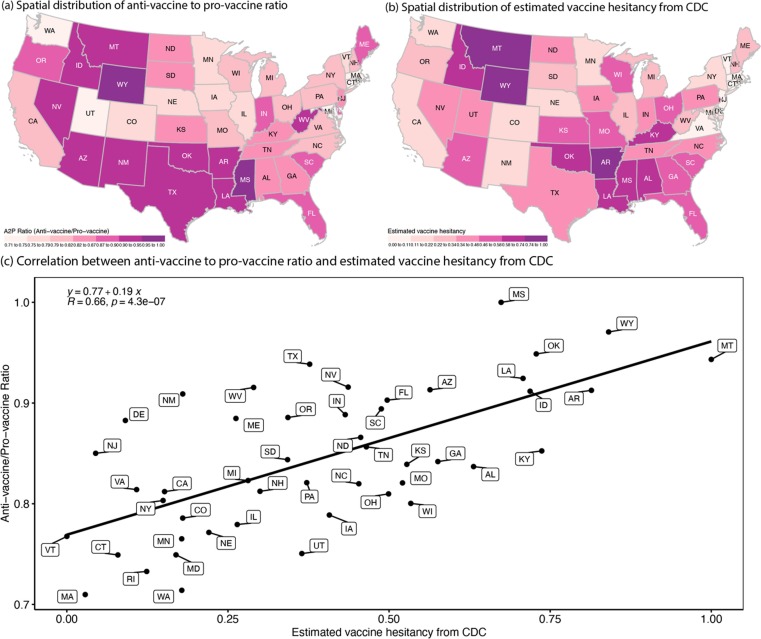

In addition, to demonstrate that the public’s attitudes identified from online vaccine discussions can be useful for predicting the tendency of vaccine hesitancy offline, we used the A2P Ratio (see Equation (4.3)) as a proxy for vaccine hesitancy and compared it with the estimated vaccine hesitancy rate from the CDC¹, which is based on the U.S. Census Bureau's Household Pulse Survey (HPS)². In doing so, we were able to detect the correlation between the A2P ratio and the estimated vaccine hesitancy. Fig. 7 maps the spatial distribution of both the normalized A2P ratio and the estimated vaccine hesitancy. We observed that relatively higher A2P ratios and estimated vaccine hesitancy are mostly found in states in the West and South, such as Wyoming (WY), Arkansas (AR), Florida (FL), Louisiana (LA), and Nevada (NV). We also observed that WY stood out from the other states in both maps, appearing as the most vaccine-hesitant state in the country, which was consistent with the result in Douthit et al.’s (2015) study. An important reason may be inequality in the allocation and distribution of resources. More specifically, these states have a high proportion of people living in rural areas who have greater difficulty seeking medical care due to the poor quality of medical services, scarcity of medical staff, lack of public transport, and more (Douthit et al., 2015). Other possible reasons include cultural conservatism, safety concerns, distrust of government, and low health literacy (Tram et al., 2021). The results indicate that the proposed A2P ratio can capture a pattern comparable to the estimated vaccine hesitancy obtained from survey research. To quantify the relationship between the two, we conducted a correlation analysis and found that they indeed had a positive correlation () as shown in Fig. 7. This finding implies that the proposed A2P ratio can effectively estimate vaccine hesitancy and help address the limitations of survey-based estimates, which are difficult to scale up, time-consuming, and labor-intensive.

| (4.3) |
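Equation (4.3) defines the A2P Ratio, which the text describes as the ratio of Anti-vaccine to Pro-vaccine. A minimal sketch of that ratio is given below. Two points are assumptions rather than details from the paper: the ratio is computed here on user counts (the text does not say whether users or tweets are used), and the normalization applied before mapping is taken to be min-max scaling. The state-level counts are hypothetical; the national counts come from Table 4.

```python
def a2p_ratio(anti_count, pro_count):
    """Anti-vaccine to Pro-vaccine ratio; larger values suggest more hesitancy."""
    if pro_count == 0:
        raise ValueError("Pro-vaccine count must be positive")
    return anti_count / pro_count


def min_max(values):
    """Rescale a {key: value} mapping to [0, 1] (assumed normalization)."""
    low, high = min(values.values()), max(values.values())
    if high == low:
        return {key: 0.0 for key in values}
    return {key: (value - low) / (high - low) for key, value in values.items()}


# Whole-period user counts from Table 4 (Anti-vaccine, Pro-vaccine).
national_a2p = a2p_ratio(822_926, 2_055_959)

# Hypothetical state-level (anti, pro) user counts, for illustration only.
state_counts = {"X": (200, 800), "Y": (300, 600), "Z": (450, 600)}
state_a2p = {s: a2p_ratio(anti, pro) for s, (anti, pro) in state_counts.items()}
normalized = min_max(state_a2p)
```

For the whole-period user counts in Table 4, the ratio is roughly 0.40, i.e., about two Anti-vaccine users for every five Pro-vaccine users.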

Fig. 7.

Vaccine hesitancy (a) Spatial distribution of Anti-vaccine to Pro-vaccine ratio; (b) Spatial distribution of estimated vaccine hesitancy from CDC; (c) Correlation between Anti-vaccine to Pro-vaccine ratio and the estimated vaccine hesitancy from CDC.

5. Discussion and conclusion

In this study, we proposed a method to identify dynamic changes in the public's sentiments towards vaccination across space and time based on social media data. We did so by using sentiment analysis techniques to categorize vaccine sentiment into three classes, namely “Pro-vaccine”, “Anti-vaccine” and “Neutral”. The sentiment analysis was operationalized by combining a machine learning algorithm with a pre-trained Word2Vec model and applying it to a large-scale Twitter dataset. The analytical latitude offered by social media data gives more possibilities for comparing changes in vaccine sentiments before and after a disease outbreak. As such, we argue that the methodology presented here offers a way to track the changing pulse of the public’s perceptions regarding vaccination, which can inform vaccination campaigns and policy decision-making for the current COVID-19 pandemic and future public health challenges. In addition, we introduced a metric named the “A2P ratio”, which is derived from the identified positive and negative vaccine sentiments, to estimate vaccine hesitancy. The proposed A2P ratio provides a way to monitor vaccine hesitancy in near real-time, which could help address the limitations of survey-based estimates of vaccine hesitancy, such as the difficulty of scaling up and the time-consuming and labor-intensive nature of interviewing people.

Using the United States as a test case, we showed how sentiment analysis techniques combined with social media data can be used to capture a comprehensive picture of vaccine sentiment in online discussions from 2015 to 2021. Furthermore, we showed that the odds ratio is a helpful method for alleviating size-related issues (Shelton et al., 2015, Zook et al., 2017) when it comes to vaccine sentiment. The analysis illustrates its capacity to identify geographic and temporal differences among Twitter users. Specifically, we found that positive vaccine sentiment was dominant throughout the years, although there was a slight decline in 2020 when different brands of coronavirus vaccines came out. One possible reason is concern about side effects that have not been fully understood scientifically. A similar pattern was observed when disaggregating vaccine sentiments at the state level. Moreover, we found that relatively higher Pro-vaccine odds generally appeared in states that have relatively complete health systems. This is reasonable, as a well-functioning health system plays an influential role in vaccination campaigns (Lahariya, 2015). In addition, the Pro-vaccine odds displayed a positive correlation with the actual vaccination odds. Although the correlation was moderate, it still demonstrates that Pro-vaccine odds can be used as a preliminary reflection of the vaccination rate.

However, the most intriguing insight is the proposed A2P ratio for evaluating vaccine hesitancy. We found that states with relatively high vaccine hesitancy were generally concentrated in the West and South, such as Wyoming and Arkansas. Wyoming, in particular, stood out from the other states, appearing as the most vaccine-hesitant state in the country. As noted above, part of the reason for this could be the unequal distribution of medical resources. Moreover, the results showed a positive relationship between the A2P ratio and the estimated vaccine hesitancy based on survey research. As discussed earlier, vaccine hesitancy has been one of the key challenges in improving vaccination campaigns. As such, effectively evaluating vaccine hesitancy is crucial for enhancing vaccine uptake. However, deriving vaccine hesitancy directly from tweets, as opposed to pro- and anti-vaccine sentiment, is a major challenge. We would argue that the proposed A2P ratio could provide informed insights into vaccine hesitancy in near real-time, which could help health professionals and policymakers propose more precise promotion plans and more effective strategies to improve vaccine coverage and thereby control the transmission of viruses in society.

The study presented here can certainly be expanded. First, we solely tested the methodology on the Twitter dataset, while other social media datasets, such as Facebook and Weibo, should theoretically be compatible with it. Second, social media data have certain limitations, such as sampling bias and a lack of demographic information (Yuan et al., 2020). As such, future studies can make more efforts to generalize these sorts of data, such as calibrating or evaluating bias (Jiang et al., 2019, Longley et al., 2015), before utilizing them in public health policy. Third, it is crucial to mention that the “A2P Ratio” metric introduced here is not intended to precisely predict vaccine hesitancy based only on “Anti-vaccine” and “Pro-vaccine” sentiment derived from online vaccination discussions, but rather to provide an initial, quantitatively informed insight into the potential trend of vaccine hesitancy. Thus, integrating this metric with other data sources, such as census data, could enhance our understanding of vaccine hesitancy. Moreover, because different public concerns, views, and responses may result in negative sentiments or vaccine hesitancy, it would be of interest to explore the key topics or themes discussed online about vaccination in the future. One such approach could be semantic network analysis, which deduces themes from discussions by analyzing word co-occurrence in the texts (e.g., Featherstone et al., 2020, Ruiz et al., 2021). This could be helpful for identifying more specific factors that drive different public attitudes regarding vaccination.
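To make the word co-occurrence idea behind semantic network analysis concrete, the sketch below counts how often pairs of words appear in the same text; such pair counts are the edge weights from which a semantic network could be built. It is an illustration only, not a method used in this study: the whitespace tokenization is deliberately naive, and the example texts are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(documents):
    """Count how often each unordered word pair appears in the same document."""
    pairs = Counter()
    for text in documents:
        # Naive tokenization: lowercase, split on whitespace, unique words only.
        words = sorted(set(text.lower().split()))
        pairs.update(combinations(words, 2))
    return pairs


# Hypothetical texts, for illustration only.
texts = [
    "vaccine side effects worry",
    "vaccine side effects fever",
    "vaccine protects family",
]
counts = cooccurrence_counts(texts)
strongest = counts.most_common(3)
```

Pairs are stored in alphabetical order, so `("effects", "side")` and `("side", "effects")` are counted as the same edge.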

Nonetheless, the study demonstrates the effectiveness and practicality of integrating machine learning with a pre-trained Word2Vec model, allowing quantitative research on social media data to uncover insights into public perceptions of vaccination. The methodology proposed here takes into account the complex and changing sentiments of the public on vaccination and can be expanded to assess other general public opinions related to health issues.

CRediT authorship contribution statement

Qingqing Chen: Methodology, Formal analysis, Visualization, Writing – original draft, Writing – review & editing. Andrew Crooks: Supervision, Conceptualization, Methodology, Writing – review & editing.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

1. Vaccine Hesitancy for COVID-19: state, county, and local estimates.

2. Household Pulse Survey: Measuring Social and Economic Impacts during the Coronavirus Pandemic.

References

- Aizawa A. An information-theoretic perspective of tf–idf measures. Information Processing & Management. 2003;39:45–65. doi: 10.1016/S0306-4573(02)00021-3.

- Battley E.H. Jenner’s smallpox vaccine. The riddle of vaccinia virus and its origin. Derrick Baxby. The Quarterly Review of Biology. 1982;57:303–304. doi: 10.1086/412809.

- Bland J.M., Altman D.G. The odds ratio. British Medical Journal. 2000;320:1468. doi: 10.1136/bmj.320.7247.1468.

- Blume S. Anti-vaccination movements and their interpretations. Social Science & Medicine. 2006;62:628–642. doi: 10.1016/j.socscimed.2005.06.020.

- Calandrillo S.P. Vanishing vaccinations: why are so many Americans opting out of vaccinating their children? Univ Mich J Law Reform. 2004;37:353–440.

- CDC, 2020. Safety of COVID-19 vaccines. Centers for Disease Control and Prevention. URL https://www.cdc.gov/coronavirus/2019-ncov/vaccines/safety/safety-of-vaccines.html (accessed 1.27.22).

- CDC. Ten great public health achievements - United States, 1900–1999 [WWW Document]. 1999. https://www.cdc.gov/mmwr/preview/mmwrhtml/00056796.htm (accessed 1.26.22).

- Cheng Z., Caverlee J., Lee K. You are where you tweet: a content-based approach to geo-locating twitter users. Association for Computing Machinery; New York: 2010. pp. 759–768.

- Croitoru A., Crooks A., Radzikowski J., Stefanidis A. Geosocial gauge: a system prototype for knowledge discovery from social media. International Journal of Geographical Information Science. 2013;27:2483–2508. doi: 10.1080/13658816.2013.825724.

- Detoc M., Bruel S., Frappe P., Tardy B., Botelho-Nevers E., Gagneux-Brunon A. Intention to participate in a COVID-19 vaccine clinical trial and to get vaccinated against COVID-19 in France during the pandemic. Vaccine. 2020;38:7002–7006. doi: 10.1016/j.vaccine.2020.09.041.

- Douthit N., Kiv S., Dwolatzky T., Biswas S. Exposing some important barriers to health care access in the rural USA. Public Health. 2015;129:611–620. doi: 10.1016/j.puhe.2015.04.001.

- Dubé E., Laberge C., Guay M., Bramadat P., Roy R., Bettinger J.A. Vaccine hesitancy: An overview. Human Vaccines & Immunotherapeutics. 2013;9(8):1763–1773. doi: 10.4161/hv.24657.

- Edward, J., 1802. An inquiry into the causes and effects of the variolae vaccinae: a disease discovered in some of the western counties of England, particularly Gloucestershire, and known by the name of the cow pox [WWW Document]. National Library of Medicine. URL https://collections.nlm.nih.gov/catalog/nlm:nlmuid-2559001R-bk (accessed 1.25.22).

- Evrony A., Caplan A. The overlooked dangers of anti-vaccination groups’ social media presence. Human Vaccines & Immunotherapeutics. 2017;13:1475–1476. doi: 10.1080/21645515.2017.1283467.

- Featherstone J.D., Ruiz J.B., Barnett G.A., Millam B.J. Exploring childhood vaccination themes and public opinions on Twitter: A semantic network analysis. Telematics and Informatics. 2020;54 doi: 10.1016/j.tele.2020.101474.

- Finney Rutten L.J., Zhu X., Leppin A.L., Ridgeway J.L., Swift M.D., Griffin J.M., St Sauver J.L., Virk A., Jacobson R.M. Evidence-based strategies for clinical organizations to address COVID-19 vaccine hesitancy. Mayo Clinic Proceedings. 2021;96:699–707. doi: 10.1016/j.mayocp.2020.12.024.

- Ge Y., Zhang W.-B., Liu H., Ruktanonchai C.W., Hu M., Wu X., Song Y., Ruktanonchai N.W., Yan W., Cleary E., Feng L., Li Z., Yang W., Liu M., Tatem A.J., Wang J.-F., Lai S. Impacts of worldwide individual non-pharmaceutical interventions on COVID-19 transmission across waves and space. International Journal of Applied Earth Observation and Geoinformation. 2022;106 doi: 10.1016/j.jag.2021.102649.

- Güner R., Hasanoğlu İ., Aktaş F. COVID-19: Prevention and control measures in community. Turk J Med Sci. 2020;50:571–577. doi: 10.3906/sag-2004-146.

- Hansen B. America’s first medical breakthrough: how popular excitement about a French rabies cure in 1885 raised new expectations for medical progress. The American Historical Review. 1998;103:373–418. doi: 10.1086/ahr/103.2.373.

- Hecht, B., Hong, L., Suh, B., Chi, E.H., 2011. Tweets from Justin Bieber’s heart: the dynamics of the location field in user profiles, in: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’11. Association for Computing Machinery, Vancouver, BC, Canada, pp. 237–246. Doi: 10.1145/1978942.1978976.

- Hu T., Wang S., Luo W., Zhang M., Huang X., Yan Y., Liu R., Ly K., Kacker V., She B., Li Z. Revealing public opinion towards COVID-19 vaccines with Twitter data in the United States: spatiotemporal perspective. Journal of Medical Internet Research. 2021;23 doi: 10.2196/30854.

- Huang C., Wang Y., Li X., Ren L., Zhao J., Hu Y., Zhang L., Fan G., Xu J., Gu X., Cheng Z., Yu T., Xia J., Wei Y., Wu W., Xie X., Yin W., Li H., Liu M., Xiao Y., Gao H., Guo L., Xie J., Wang G., Jiang R., Gao Z., Jin Q., Wang J., Cao B. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet. 2020;395:497–506. doi: 10.1016/S0140-6736(20)30183-5.

- Hutto C., Gilbert E. VADER: A parsimonious rule-based model for sentiment analysis of social media text. In: Proceedings of the International AAAI Conference on Web and Social Media. Presented at the Eighth International AAAI Conference on Weblogs and Social Media. 2014. pp. 216–225.

- Jiang Y., Li Z., Ye X. Understanding demographic and socioeconomic biases of geotagged Twitter users at the county level. Cartography and Geographic Information Science. 2019;46:228–242. doi: 10.1080/15230406.2018.1434834.

- Kata A. Anti-vaccine activists, Web 2.0, and the postmodern paradigm – an overview of tactics and tropes used online by the anti-vaccination movement. Vaccine. 2012;30:3778–3789. doi: 10.1016/j.vaccine.2011.11.112.

- Kinsella, S., Murdock, V., O’Hare, N., 2011. “I’m eating a sandwich in Glasgow”: modeling locations with tweets, in: Proceedings of the 3rd International Workshop on Search and Mining User-Generated Contents, SMUC ’11. Association for Computing Machinery, Glasgow, Scotland, UK, pp. 61–68. Doi: 10.1145/2065023.2065039.

- Krishnamoorthy Y., Kannusamy S., Sarveswaran G., Majella M.G., Sarkar S., Narayanan V. Factors related to vaccine hesitancy during the implementation of measles-rubella campaign 2017 in rural Puducherry - a mixed-method study. Journal of Family Medicine and Primary Care. 2019;8:3962–3970. doi: 10.4103/jfmpc.jfmpc_790_19.

- Lahariya C. Health system approach for improving immunization program performance. Journal of Family Medicine and Primary Care. 2015;4:487–494. doi: 10.4103/2249-4863.174263.

- Lai S., Ruktanonchai N.W., Zhou L., Prosper O., Luo W., Floyd J.R., Wesolowski A., Santillana M., Zhang C., Du X., Yu H., Tatem A.J. Effect of non-pharmaceutical interventions to contain COVID-19 in China. Nature. 2020;585:410–413. doi: 10.1038/s41586-020-2293-x.

- Larson H.J., Cooper L.Z., Eskola J., Katz S.L., Ratzan S. Addressing the vaccine confidence gap. Lancet. 2011;378:526–535. doi: 10.1016/S0140-6736(11)60678-8.

- Larson H.J., de Figueiredo A., Xiahong Z., Schulz W.S., Verger P., Johnston I.G., Cook A.R., Jones N.S. The state of vaccine confidence 2016: global insights through a 67-country survey. EBioMedicine. 2016;12:295–301. doi: 10.1016/j.ebiom.2016.08.042.

- Ledford H. Oxford COVID-vaccine paper highlights lingering unknowns about results. Nature. 2020;588:378–379. doi: 10.1038/d41586-020-03504-w.

- Liu B. Sentiment analysis and opinion mining. Synthesis Lectures on Human Language Technologies. 2012;5:1–167. doi: 10.2200/S00416ED1V01Y201204HLT016.

- Longley P.A., Adnan M., Lansley G. The Geotemporal Demographics of Twitter Usage. Environ Plan A. 2015;47:465–484. doi: 10.1068/a130122p.

- MacDonald N.E. Vaccine hesitancy: definition, scope and determinants. Vaccine. 2015;33:4161–4164. doi: 10.1016/j.vaccine.2015.04.036.

- McHugh M.L. Interrater reliability: the kappa statistic. Biochem Med (Zagreb) 2012;22:276–282.

- Menni C., Klaser K., May A., Polidori L., Capdevila J., Louca P., Sudre C.H., Nguyen L.H., Drew D.A., Merino J., Hu C., Selvachandran S., Antonelli M., Murray B., Canas L.S., Molteni E., Graham M.S., Modat M., Joshi A.D., Mangino M., Hammers A., Goodman A.L., Chan A.T., Wolf J., Steves C.J., Valdes A.M., Ourselin S., Spector T.D. Vaccine side-effects and SARS-CoV-2 infection after vaccination in users of the COVID symptom study app in the UK: a prospective observational study. The Lancet Infectious Diseases. 2021;21:939–949. doi: 10.1016/S1473-3099(21)00224-3.

- Mikolov, T., Chen, K., Corrado, G., Dean, J., 2013. Efficient Estimation of Word Representations in Vector Space.

- Mostafa L. Egyptian student sentiment analysis using Word2vec during the coronavirus (Covid-19) pandemic, in. Springer; Cairo, Egypt: 2021. pp. 195–203.

- Motta, M., Stecula, D., 2021. Quantifying the effect of Wakefield et al. (1998) on skepticism about MMR vaccine safety in the U.S. Plos One 16, e0256395. Doi: 10.1371/journal.pone.0256395.

- Odone A., Ferrari A., Spagnoli F., Visciarelli S., Shefer A., Pasquarella C., Signorelli C. Effectiveness of interventions that apply new media to improve vaccine uptake and vaccine coverage. Human Vaccines & Immunotherapeutics. 2015;11:72–82. doi: 10.4161/hv.34313.

- Palacios Cruz, M., Santos, E., Velázquez Cervantes, M.A., León Juárez, M., 2020. COVID-19, a worldwide public health emergency. Revista Clinica Espanola S0014-2565(20)30092–8. Doi: 10.1016/j.rce.2020.03.001.

- Phipps H. Vaccines: personal liberty or social responsibility? Digital Commons. 2020

- Plotkin S. History of vaccination. Proceedings of the National Academy of Sciences of the United States of America. 2014;111:12283–12287. doi: 10.1073/pnas.1400472111.

- Puri N., Coomes E.A., Haghbayan H., Gunaratne K. Social media and vaccine hesitancy: new updates for the era of COVID-19 and globalized infectious diseases. Human Vaccines & Immunotherapeutics. 2020;16:2586–2593. doi: 10.1080/21645515.2020.1780846.

- Radzikowski J., Stefanidis A., Jacobsen K.H., Croitoru A., Crooks A., Delamater P.L. The measles vaccination narrative in Twitter: a quantitative analysis. JMIR Public Health and Surveillance. 2016;2 doi: 10.2196/publichealth.5059.

- Rezaeinia S.M., Rahmani R., Ghodsi A., Veisi H. Sentiment analysis based on improved pre-trained word embeddings. Expert Systems with Applications. 2019;117:139–147. doi: 10.1016/j.eswa.2018.08.044.

- Ritchie H., Mathieu E., Rodés-Guirao L., Appel C., Giattino C., Ortiz-Ospina E., Hasell J., Macdonald B., Beltekian D., Roser M. Our World in Data; 2020. Coronavirus (COVID-19) vaccinations.

- Ruiz J., Featherstone J.D., Barnett G.A. Identifying Vaccine Hesitant Communities on Twitter and their Geolocations: A Network Approach. Presented at the Hawaii International Conference on System Sciences. 2021 doi: 10.24251/HICSS.2021.480.

- Ruktanonchai N.W., Floyd J.R., Lai S., Ruktanonchai C.W., Sadilek A., Rente-Lourenco P., Ben X., Carioli A., Gwinn J., Steele J.E., Prosper O., Schneider A., Oplinger A., Eastham P., Tatem A.J. Assessing the impact of coordinated COVID-19 exit strategies across Europe. Science. 2020;369(6510):1465–1470. doi: 10.1126/science.abc5096.

- Saadat S., Rawtani D., Hussain C.M. Environmental perspective of COVID-19. Science of The Total Environment. 2020;728 doi: 10.1016/j.scitotenv.2020.138870.

- Saif, H., Fernandez, M., He, Y., Alani, H., 2014. On stopwords, filtering and data sparsity for sentiment analysis of Twitter, in: Proceedings of the Ninth International Conference on Language Resources and Evaluation. Reykjavik, Iceland, pp. 810–817.

- Saladino V., Algeri D., Auriemma V. The psychological and social impact of Covid-19: new perspectives of well-being. Frontiers in Psychology. 2020;11 doi: 10.3389/fpsyg.2020.577684.

- Schulz A., Hadjakos A., Paulheim H., Nachtwey J., Mühlhäuser M. A multi-indicator approach for geolocalization of Tweets. Proceedings of the International AAAI Conference on Web and Social Media. 2013;7:573–582.

- Sciandra A. COVID-19 outbreak through Tweeters’ words: monitoring Italian social media communication about COVID-19 with Text Mining and Word Embeddings. IEEE; Rennes, France: 2020. pp. 1–6.

- Sharifi A., Khavarian-Garmsir A.R. The COVID-19 pandemic: Impacts on cities and major lessons for urban planning, design, and management. Sci Total Environ. 2020;749 doi: 10.1016/j.scitotenv.2020.142391.

- Shelton T., Poorthuis A., Zook M. Social media and the city: Rethinking urban socio-spatial inequality using user-generated geographic information. Landscape and Urban Planning. 2015;142:198–211. doi: 10.1016/j.landurbplan.2015.02.020.

- Smith N., Graham T. Mapping the anti-vaccination movement on Facebook. Information, Communication & Society. 2019;22:1310–1327. doi: 10.1080/1369118X.2017.1418406.

- Solomon, B., 2021. demoji: Accurately remove and replace emojis in text strings.

- Stern A.M., Markel H. The history of vaccines and immunization: familiar patterns, new challenges. Health Affairs. 2005;24:611–621. doi: 10.1377/hlthaff.24.3.611.

- Surikov A., Egorova E. Alternative method sentiment analysis using emojis and emoticons. Procedia Computer Science, 9th International Young Scientists Conference in Computational Science. 2020; pp. 182–193.

- Symeonidis S., Effrosynidis D., Arampatzis A. A comparative evaluation of pre-processing techniques and their interactions for Twitter sentiment analysis. Expert Systems with Applications. 2018;110:298–310.

- The Commonwealth Fund. 2020 scorecard on state health system performance [WWW Document]. 2020. https://www.commonwealthfund.org/publications/scorecard/2020/sep/2020-scorecard-state-health-system-performance (accessed 1.27.22).

- Tram, K.H., Saeed, S., Bradley, C., Fox, B., Eshun-Wilson, I., Mody, A., Geng, E., 2021. Deliberation, dissent, and distrust: understanding distinct drivers of coronavirus disease 2019 vaccine hesitancy in the United States. Clinical Infectious Diseases ciab633. Doi: 10.1093/cid/ciab633.

- Velavan T.P., Meyer C.G. The COVID-19 epidemic. Tropical Medicine and International Health. 2020;25:278–280. doi: 10.1111/tmi.13383.

- Villavicencio C., Macrohon J.J., Inbaraj X.A., Jeng J.-H., Hsieh J.-G. Twitter sentiment analysis towards COVID-19 vaccines in the Philippines using Naïve Bayes. Information. 2021;12:204. doi: 10.3390/info12050204.

- Wakefield A.J., Murch S.H., Anthony A., Linnell J., Casson D.M., Malik M., Berelowitz M., Dhillon A.P., Thomson M.A., Harvey P., Valentine A., Davies S.E., Walker-Smith J.A. Ileal-lymphoid-nodular hyperplasia, non-specific colitis, and pervasive developmental disorder in children. Lancet. 1998;351:637–641. doi: 10.1016/s0140-6736(97)11096-0.

- Xiong J., Lipsitz O., Nasri F., Lui L.M.W., Gill H., Phan L., Chen-Li D., Iacobucci M., Ho R., Majeed A., McIntyre R.S. Impact of COVID-19 pandemic on mental health in the general population: a systematic review. Journal of Affective Disorders. 2020;277:55–64. doi: 10.1016/j.jad.2020.08.001.

- Yang C., Huang Q., Li Z., Liu K., Hu F. Big data and cloud computing: innovation opportunities and challenges. International Journal of Digital Earth. 2017;10:13–53. doi: 10.1080/17538947.2016.1239771.

- Yousefinaghani S., Dara R., Mubareka S., Papadopoulos A., Sharif S. An analysis of COVID-19 vaccine sentiments and opinions on Twitter. International Journal of Infectious Diseases. 2021;108:256–262. doi: 10.1016/j.ijid.2021.05.059.

- WHO, 2019. Ten threats to global health in 2019. World Health Organization. URL https://www.who.int/news-room/spotlight/ten-threats-to-global-health-in-2019 (accessed 12.13.21).

- Yu, X., Zhong, C., Li, D., Xu, W., 2020. Sentiment analysis for news and social media in COVID-19, in: Proceedings of the 6th ACM SIGSPATIAL International Workshop on Emergency Management Using GIS. Association for Computing Machinery, New York, pp. 1–4. Doi: 10.1145/3423333.3431794.

- Yuan, X., Schuchard, R.J., Crooks, A.T., 2019. Examining Emergent Communities and Social Bots Within the Polarized Online Vaccination Debate in Twitter. Social Media + Society 5, 2056305119865465. Doi: 10.1177/2056305119865465.

- Yuan Y., Lu Y., Chow T.E., Ye C., Alyaqout A., Liu Y. The Missing Parts from Social Media-Enabled Smart Cities: Who, Where, When, and What? Annals of the American Association of Geographers. 2020;110:462–475. doi: 10.1080/24694452.2019.1631144.

- Zook, M., Poorthuis, A., Donohue, R., 2017. Mapping spaces: cartographic representations of online data, in: The SAGE Handbook of Online Research Methods. London, pp. 542–560. Doi: 10.4135/9781473957992.n31.