Abstract

Experiencing positive emotions together facilitates interpersonal understanding and promotes subsequent social interaction among individuals. However, the neural underpinnings of this emotional-social effect remain to be discovered. The current study employed functional near-infrared spectroscopy (fNIRS)-based hyperscanning to investigate this relationship. After dyads of participants watched happy or neutral movie clips together, they performed an interpersonal cooperative task while the activation of their prefrontal cortex was recorded simultaneously via fNIRS. Results showed higher interpersonal neural synchronization (INS) in the left inferior frontal gyrus when dyads watched the happy movie together than when they watched the neutral movie together. Subsequently, dyads in the happiness condition showed more effective coordination interaction during the interpersonal cooperation task than those in the neutral condition, and this facilitation was associated with increased cooperation-related INS in the left middle frontal cortex. A mediation analysis showed that coordination interaction fully mediated the relationship between emotion-induced INS during happy movie-viewing and cooperation-related INS during interpersonal cooperation. Taken together, our findings suggest that the facilitatory effect of experiencing happiness together on interpersonal cooperation can be reliably reflected by INS magnitude at the brain level.

Keywords: emotional movie-viewing, interpersonal neural synchronization (INS), interpersonal cooperation, coordination interaction, fNIRS hyperscanning

Introduction

While negative emotions prompt specific and immediate responses that aid an individual’s survival, positive emotions are thought to elicit more general responses in individuals’ daily lives (Bell et al., 2013). Over the past two decades, an increasing number of studies have offered evidence that positive emotions play an essential role in creating strong social bonds among individuals and immediately influence social interaction behaviors (Tracy and Randles, 2011; Cohen and Mor, 2018). When humans experience positive feelings, their attention is drawn away from themselves toward others, which inspires more prosocial behavior and predicts increased interpersonal cooperation (Drouvelis and Grosskopf, 2016; Meneghel et al., 2016; Aknin et al., 2018). For example, employees’ positive moods promoted cooperative processes (helping co-workers and receiving help from co-workers) and predicted better work performance (Collins et al., 2016). Yet, despite the evident link between positive emotions and human social interaction, little is known about the behavioral dynamics and neural underpinnings that may underlie this association.

Earlier neurophysiological evidence suggests that such influences are accomplished through the dopamine-mediated reward system of the brain, encompassing the frontal lobe, ventromedial prefrontal cortex, orbitofrontal cortex (OFC), anterior cingulate cortex, amygdala and ventral striatum, areas that have been associated with positive feelings and reward processing (Silvetti et al., 2014; Kawamichi et al., 2015; Banich and Floresco, 2019; Rolls, 2019). However, one source of confusion is that much of this previous work neither experimentally identified the neural associations between positive emotion and social interaction nor disentangled the neural underpinnings of positive emotion from those of social interaction. Instead, changes in the activation patterns of certain regions during social interaction were often interpreted as evidence that emotion had been elicited (Lotz et al., 2011; Ho et al., 2012). Direct measurement of emotion-related neural activity is therefore a critical step toward understanding how the brain mediates the influence of emotion on social behavior. One functional magnetic resonance imaging (fMRI) study experimentally manipulated emotional state and then used a separate probe to measure social judgment (Bhanji and Beer, 2012). The results showed that the emotional influence on social judgment was reflected in activation changes in the left lateral OFC at emotion elicitation and in the medial OFC at the time of social judgment. We therefore tested whether the brain does something during emotional experience, especially positive emotional experience, that predicts its influence on subsequent social interaction (i.e. interpersonal cooperation), using a cooperative setting with improved ecological validity.

Humans spend much of their lives with others and collectively engage in emotional events, such as watching entertainment movies together or attending weddings (or funerals), socially but silently. Recent neuroimaging studies have shown that temporal synchrony of emotion-related neural activity across individuals allows them to ‘tune in’ or ‘sync’ with each other. This view is supported by accumulating studies showing that when participants co-view emotional movies, their emotional (Bruder et al., 2012) and neurophysiological (Nummenmaa et al., 2012; Golland et al., 2015) responses to the movies become similar, thereby facilitating a strong bond between them (Golland et al., 2017) and mutual interpersonal understanding (Nummenmaa et al., 2018). In sum, neural synchrony across individuals during positive emotional experience is thought to establish the common ground on which social interactions unfold.

In fact, synchrony is socially important and plays a central role in almost every aspect of group behavior. There is evidence of inter-individual motion synchrony when two or more participants engage in spontaneous rhythmic action (Richardson et al., 2007; Trainor and Cirelli, 2015). Synchronous biological rhythms such as heart rate and respiration have been linked to interpersonal behavioral coordination (Waters et al., 2017; Thorson et al., 2018). At the neural level, interpersonal neural synchronization (INS) has been found to facilitate interpersonal information flow when assuming the mental and bodily perspectives of others (Nguyen et al., 2019; Xie et al., 2020) and to predict the quality of interpersonal cooperation (Cui et al., 2012; Pan et al., 2017). Going one step further, a growing body of research shows that higher levels of INS during cooperative interactions are associated with better task performance in terms of joint goal achievement, indicating that INS can serve as a neural indicator of cooperative performance (Pan et al., 2017; Feng et al., 2020). Therefore, compared with traditional single-brain measures (which scan a single participant engaged in a simulated interactive task), INS, as a neurophysiological substrate of the ‘social brain’, may play a useful role in elucidating the neural associations between positive emotion and interpersonal cooperation.

On the other hand, some researchers have argued that emotion rarely plays a direct role in social interactions and instead indirectly regulates social motivation during real-time interaction (Gable and Harmon-Jones, 2016). The motivational dimensional model posits that positive emotion (e.g. happiness, amusement and contentment) is related to approach motivation as one engages in goal pursuit; this intrinsically motivated interest in the task is likely to assist goal-directed action and increase the likelihood of goal accomplishment (Brandstätter et al., 2001; Harmon-Jones et al., 2012). Experiments have revealed that positive emotions have implications for breadth of attention (Fredrickson and Branigan, 2005; Gable and Harmon-Jones, 2016), memory (Kaplan et al., 2012), cognitive categorization (Isen, 2002; Harmon-Jones et al., 2012) and higher-order cognitive processes such as cognitive flexibility, response inhibition and task switching (Magnano et al., 2016; Harmon-Jones, 2019). In interpersonal cooperation, co-actors need to coordinate their actions with their partners to achieve common cooperative goals. More effective coordinated interaction is shaped by motivations or desires to affiliate with others and acts as a ‘social glue’ (Lakin et al., 2003; Miles et al., 2010), ensuring that individuals accurately represent others’ intentions while taking into account the joint actions of themselves and others (Kirschner and Tomasello, 2010). Thus, we speculated that coordinated interaction, as a core element of interpersonal cooperation, might mediate the relationship between INS associated with positive emotional experience during movie-viewing and INS during interpersonal cooperation.

To test this hypothesis, the present study adopted a two-person button-pressing setup (i.e. interpersonal cooperative and competitive tasks) in a face-to-face context, following Cui et al. (2012). Happiness was used as the positive emotion condition because numerous studies have shown that the experience of happiness reflects an urge to share a laugh that builds and solidifies enduring social bonds with others (Fredrickson, 2013; Fine and Corte, 2017; Smirnov et al., 2019). In addition, a non-emotional (neutral) movie clip served as the control condition. Functional near-infrared spectroscopy (fNIRS) was chosen as the neuroimaging technique because it measures local hemodynamic responses with high temporal resolution in more naturalistic environments than fMRI and is less susceptible to motion artifacts than electroencephalography (EEG) (Funane et al., 2011; Yücel et al., 2017). A single device was used to measure both participants simultaneously, obviating the need for a calibration process. The prefrontal cortex (PFC) was chosen as the a priori region of interest because this region has been reported to be crucial for the neural correlates of emotion (Jaaskelainen et al., 2008; Smirnov et al., 2019), and INS during interpersonal cooperation has been identified in this region in many previous studies (Funane et al., 2011; Cui et al., 2012; Tang et al., 2016; Pan et al., 2017).

We hypothesized that (i) INS across individuals would be higher during happiness movie-viewing than during neutral movie-viewing; (ii) because positive emotion would enhance motivation in cooperative goal pursuit, INS in the happiness condition, compared with the neutral condition, would relate to more effective coordination interaction in the subsequent cooperation task, so that INS during happiness movie-viewing would be positively associated with coordination interaction in interpersonal cooperation; (iii) given previous evidence linking better cooperation performance to increased cooperation-related INS (Cui et al., 2012; Pan et al., 2017; Wang et al., 2019), better coordination interaction would be associated with stronger cooperation-related INS; and (iv) coordination interaction would mediate the association between increased INS during emotional movie-viewing and INS during the interpersonal cooperation task.

Materials and methods

Participants

Sixty-two healthy young adults (31 dyads; 24 males) with a mean age of 21.96 years (s.d. = 2.64) were recruited. All participants were right-handed as assessed by the Edinburgh Handedness Inventory (Oldfield, 1971), with normal or corrected-to-normal vision and hearing. Exclusion criteria included any history of psychopathology in the participants or their immediate family. Each dyad consisted of two individuals of the same gender who were unacquainted with each other before the experiment. Informed consent was obtained from participants before the experiment, and payment was based on task performance (ranging from 80 to 100 RMB). The University Committee on Human Research Protection of East China Normal University approved all aspects of the experiments, and the experiment was performed in accordance with all relevant guidelines and regulations.

Experiment tasks and procedures

After confirming that the two members of each dyad were unfamiliar with each other, they were brought into a quiet room. The timeline of the experiment is shown in Figure 1A. The experimental procedure consisted of three resting-state sessions and two task-state sessions, with each task-state session occurring between two resting-state sessions. Each task-state session included a movie-viewing phase (happiness or neutral film clip) and a button-pressing task phase. The participants, labeled #1 and #2, were seated face-to-face on opposite sides of a table, each in front of a separate computer screen and keyboard. A baffle was placed between the two monitors to preserve real social interaction within each dyad while preventing participants from imitating each other’s actions (Figure 1B). Participants first completed questionnaires on demographic characteristics and pre-movie emotional state. It was then explained that they would watch two movie clips in sequence and rate their emotional state based on their actual feelings while watching each clip. The order of the movie clips was randomized across dyads. Although the two participants watched the same movie clips simultaneously, each used their own computer, screen and headset, so that they never heard or observed each other’s voice or responses. An emotion self-assessment questionnaire was administered after each movie to measure its emotional effect. The participants then completed the button-pressing task, after which four subjective evaluations of their cooperation and competition performance were collected. The tasks were implemented using E-Prime 2.0 (Psychology Software Tools Inc, Pittsburgh, PA, USA). Tasks and measurements are described below.

Fig. 1.

Experimental design. (A) Timeline of an experimental session. The order of the two task-state sessions was counterbalanced. (B) Two participants sat on opposite sides of a table, in front of individual monitors and keyboards. (C) The fNIRS optode probe set was placed over the prefrontal cortex. Numbers indicate measurement channels (CHs). (D) Button-pressing task design: one trial of the interpersonal cooperation task (top) and of the competition task (bottom).

Emotion induction and assessment

The movie clips were selected according to the following criteria (Ge et al., 2019): (i) intelligibility—movie clips can be easily understood (Chinese subtitles were also added to guarantee a full understanding of the contents); (ii) length—they were relatively short, lasting for 4–5 min; (iii) discreteness—they were likely to elicit a specific reported emotional state (happiness and neutral in this study) based on the subjective feeling of the viewers.

The happiness clip was drawn from the movie Hail the Judge, while the neutral clip was adapted from urban documentaries, as in previous research (Ge et al., 2019). In addition, to allow participants to focus on the visual content of the landscapes in the neutral condition, the original speaker-based soundtrack typical of neutral clips was replaced with ambient music (Maffei et al., 2015). The audio of all movie clips was presented through stereo headphones.

Button-pressing task

The button-pressing task included a cooperation task and a competition task (Figure 1D). Each task consisted of one block of 20 trials, and the order of the cooperation and competition tasks was counterbalanced across dyads. Each trial started with a hollow gray circle that remained on the screen for an unpredictable interval between 0.6 and 1.5 s. The circle then changed color from gray to green, cueing both participants to press a button with their right index or middle finger. Participant #1 was instructed to press the ‘0’ key, and participant #2 the ‘1’ key. After the buttons were pressed, a feedback screen was displayed for 2 or 4 s. Eight practice trials (four for the cooperation task and four for the competition task) were administered to familiarize the participants with the task.

Interpersonal cooperation task.

Participants were instructed to press their buttons as simultaneously as possible. If the difference between their response times was below a threshold (1/8 of the two participants’ mean response time on that trial), the dyad earned one point together; otherwise, no point was awarded. The parameter 1/8 was chosen to keep the cooperation task moderately difficult. After the slower member’s press, a feedback screen was displayed for 4 s, showing the result of the current trial (‘Win!’ or ‘Lost!’) along with the cumulative points. The feedback also indicated, on the right-hand side of each participant’s monitor, whether that participant had responded faster (green ‘+’) or slower (white ‘−’) than the partner. After the feedback, an inter-trial interval (black screen, 2 s) was shown, followed by the next trial.
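The trial-level scoring rule above can be sketched as follows. This is a minimal Python illustration, not the authors’ E-Prime 2.0 implementation: the function names are hypothetical, response times are assumed to be in milliseconds from the color change, and the treatment of exact ties in the speed feedback is an assumption because the text does not specify it.

```python
# Minimal sketch of the cooperation-task scoring rule (not the authors' E-Prime code).
# Assumption: RTs are in ms from the gray-to-green color change; names are hypothetical.


def cooperation_point(rt1: float, rt2: float, fraction: float = 1 / 8) -> bool:
    """True if the dyad earns a joint point: |RT1 - RT2| is below
    `fraction` (1/8 in the study) of the two participants' mean RT on this trial."""
    threshold = fraction * (rt1 + rt2) / 2
    return abs(rt1 - rt2) < threshold


def speed_feedback(rt_self: float, rt_partner: float) -> str:
    """Green '+' if this participant was faster than the partner, white '-' otherwise.
    Assumption: exact ties are shown as '-' (the text does not specify ties)."""
    return "+" if rt_self < rt_partner else "-"


if __name__ == "__main__":
    # Mean RT is 300 ms, so the threshold is 37.5 ms.
    print(cooperation_point(290.0, 310.0))  # 20 ms apart -> True
    print(cooperation_point(260.0, 340.0))  # 80 ms apart -> False
    print(speed_feedback(290.0, 310.0), speed_feedback(310.0, 290.0))  # + -
```

Because the threshold scales with the trial’s mean RT, a dyad that responds slowly gets a proportionally wider window; this is one way a fixed ratio such as 1/8 keeps difficulty roughly constant across dyads.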

Interpersonal competition task.

To rule out the possibility that the observed cooperation-related INS was driven merely by synchronous action without any cooperative intent, we used interpersonal competition as the control task. Previous studies have shown that during competition, which does not require an understanding of the other’s actions or mind, the behaviors of interacting dyads are independent and do not lead to increased INS (Cui et al., 2012; Liu and Pelowski, 2014). This task was identical to the cooperation task except for its objective: each participant was instructed to respond as quickly as possible, faster than their partner. In each trial, only the faster participant received one point. After the responses, the feedback screen showed ‘Win!’ on the faster player’s side and ‘Lost!’ on the slower player’s side, along with each participant’s cumulative points. An inter-trial interval (2 s) was shown before the next trial.

Questionnaire measures

Emotion self-assessment measurements.

To verify that the intended emotions were induced, a questionnaire based on the Chinese version of the Scale of Positive and Negative Affectivity (Huang et al., 2003) was used to rate participants’ emotional state. To avoid exhausting participants with an overly long protocol, some items were removed, and two items, ‘happily’ and ‘peaceful’, were added to directly measure levels of happiness and neutral mood. The emotion words used in the scale (13 items in total) are presented in Supplementary Table S1. Items were rated on a 5-point Likert scale ranging from 1 (‘not at all’) to 5 (‘extremely’). Internal consistency was assessed with Cronbach’s alpha; all values exceeded 0.79, indicating acceptable internal consistency. An additional item asked whether participants had experienced any other emotion while viewing the movie; it was intended to exclude participants in whom the emotion induction failed or who experienced target-unrelated emotions. Participants who answered affirmatively were asked to describe their feelings using mood adjectives. All participants were instructed to answer without thinking too long.
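Cronbach’s alpha, used above to check internal consistency, can be computed from an items × respondents rating matrix. The sketch below is a minimal, hypothetical helper using only the Python standard library; it is not the software the authors used, and the toy ratings are illustrative rather than study data.

```python
from statistics import pvariance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items rated by the same n respondents.

    items[i][j] is respondent j's rating on item i (e.g. a 1-5 Likert score).
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)).
    Population variances are used throughout; the n / (n - 1) factor cancels.
    """
    k = len(items)
    n = len(items[0])
    totals = [sum(item[j] for item in items) for j in range(n)]
    item_variance_sum = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / pvariance(totals))


if __name__ == "__main__":
    # Two identical items give alpha = 1; two unrelated items give alpha = 0.
    print(cronbach_alpha([[1, 2, 3], [1, 2, 3]]))
    print(cronbach_alpha([[1, 2, 1, 2], [1, 1, 2, 2]]))
```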

Subjective measurements of the button-pressing task.

After completing the cooperation and competition tasks, both members of the dyad filled out a post-experiment questionnaire on their general performance and efficacy in the tasks. Specifically, one open-ended question identified the strategy participants adopted: participants who used the same button-press strategy in both tasks were asked to describe that strategy, and those who did not were asked to describe the strategies used in the cooperation and competition tasks separately. In addition, three evaluations of cooperation performance were rated on a 5-point Likert scale from 1 (‘not at all’) to 5 (‘very much’): (i) satisfaction with one’s own performance, (ii) satisfaction with the partner’s performance and (iii) perceived cooperativeness during the cooperation task.

fNIRS data collection

fNIRS data acquisition

Hemodynamic signals were acquired from both members of each dyad simultaneously using a multichannel, high-speed, continuous-wave system (LABNIRS, Shimadzu Corp., Kyoto, Japan) with laser diodes at three wavelengths (780, 805 and 830 nm). Light absorption in tissue at the three wavelengths was converted into hemoglobin concentration changes via the modified Beer–Lambert law (Pellicer and del Carmen Bravo, 2011), with raw optical density changes converted into relative chromophore concentration changes (i.e. Δoxy-Hb, Δdeoxy-Hb and ΔTotal-Hb).
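For reference, the textbook form of the modified Beer–Lambert law is written out below, with HbO, HbR and HbT standing for oxy-Hb, deoxy-Hb and Total-Hb. The symbols are generic assumptions (extinction coefficients ε, emitter–detector distance d, differential pathlength factor DPF); the specific coefficients and DPF values applied by the LABNIRS software are not given in this section. With three wavelengths and two unknown chromophores the system is overdetermined, and a least-squares solution is a standard way to resolve it.

```latex
\begin{aligned}
\Delta\mathrm{OD}(\lambda_i) &= \bigl(\varepsilon_{\mathrm{HbO}}(\lambda_i)\,\Delta[\mathrm{HbO}] + \varepsilon_{\mathrm{HbR}}(\lambda_i)\,\Delta[\mathrm{HbR}]\bigr)\, d\,\mathrm{DPF}(\lambda_i),
  \quad \lambda_i \in \{780, 805, 830\}\ \text{nm} \\
\begin{bmatrix}\Delta[\mathrm{HbO}] \\ \Delta[\mathrm{HbR}]\end{bmatrix} &= (E^{\top}E)^{-1}E^{\top}\,\Delta\mathbf{OD},
  \quad E_{ij} = \varepsilon_{j}(\lambda_i)\, d\,\mathrm{DPF}(\lambda_i),\ j \in \{\mathrm{HbO}, \mathrm{HbR}\} \\
\Delta[\mathrm{HbT}] &= \Delta[\mathrm{HbO}] + \Delta[\mathrm{HbR}]
\end{aligned}
```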

Optode arrangement

A 3×5 optode patch was placed over each participant’s forehead. Seven emitters and eight detectors were positioned alternately, for a total of 15 probes, resulting in 22 measurement channels (CHs). The center of the lowest probe row was placed at Fpz according to the international 10/20 system; the bottom of the patch touched the tops of the participant’s eyebrows, and the middle probe column was aligned above the nose (Figure 1C). The distance between each emitter and detector was set at 3 cm, which allows measurement of cerebral blood volume at a depth of 2–3 cm from the scalp. Resistance for each CH was checked before recording to ensure acceptable signal-to-noise ratios, and adjustments (including clearing hair from the CH area before placing each optode) were made until the minimum criteria defined in the LABNIRS recording standards were met (Noah et al., 2015; Hirsch et al., 2017).

Data analysis

From the initial sample, one female pair was excluded because of a technical problem with data recording, and two pairs (one female and one male) were excluded because of target-unrelated emotions reported in the subjective assessment (one female participant reported feeling ‘disgusted’ during happiness movie-viewing, and one male participant reported feeling ‘bored’ during neutral movie-viewing); these participants also gave extreme ratings (5) on target-irrelevant items such as ‘irritable’ and ‘distressed’ in the emotion self-assessment questionnaire. Thus, 28 pairs (11 male pairs) were retained for subsequent analysis.

Behavioral performance

Manipulation check in the validation of emotion

As a manipulation check of emotion induction, two-tailed paired t-tests were computed for each item, with each participant’s self-report scores as the unit of analysis, to identify differences between the two movies (Happiness vs Neutral). Bonferroni correction was applied for multiple comparisons.

Behavioral performance analysis in the button-pressing task

Outliers (response times outside the mean ± 3 s.d.) may reflect lapses of attention or distraction, indicated by spuriously long response times (> mean + 3 s.d.), or premature button presses in anticipation of the impending stimulus, indicated by spuriously short response times (< mean − 3 s.d.) (see Supplementary Table S3 in the Supplementary Materials for details). Because the median is robust to such outliers, the median response time (median-RT) and the median relative difference in response time (median-rDRT, with rDRT = |RT1 − RT2|/|RT1 + RT2|) were calculated for the cooperative and competitive tasks in each dyad (in accordance with Pan et al., 2017; Reindl et al., 2018).
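A minimal sketch of the RT summaries described above, assuming per-trial RTs for the two dyad members are stored in matching trial order. The function names are hypothetical, and pooling both members’ RTs for median-RT is an assumption, since the text does not say whether median-RT is computed per member or per dyad.

```python
from statistics import mean, median, stdev


def flag_outliers(rts: list[float], k: float = 3.0) -> list[int]:
    """Indices of RTs outside mean ± k·s.d. (k = 3 in the text)."""
    m, s = mean(rts), stdev(rts)
    return [i for i, rt in enumerate(rts) if abs(rt - m) > k * s]


def dyad_rt_summary(rt1: list[float], rt2: list[float]) -> tuple[float, float]:
    """Return (median-RT, median-rDRT) for one dyad in one task.

    rt1[t] and rt2[t] are the two members' RTs on trial t.
    rDRT_t = |RT1 - RT2| / |RT1 + RT2|, as defined in the text.
    Assumption: median-RT pools both members' RTs.
    """
    rdrt = [abs(a - b) / abs(a + b) for a, b in zip(rt1, rt2)]
    return median(rt1 + rt2), median(rdrt)


if __name__ == "__main__":
    print(dyad_rt_summary([300, 320, 310], [300, 280, 330]))  # (305.0, 0.03125)
    print(flag_outliers([300] * 20 + [1000]))                 # [20]
```

Dividing by the summed RT makes rDRT scale-free, so a 20 ms gap counts for less in a slow dyad than in a fast one.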

To further quantify the quality of interpersonal cooperation, we calculated the effectiveness of coordination interaction (ECI) and the mean cooperation rate. (i) ECI comprised ‘Effective Adjustments’ and the ‘Post-failure Effective Adjustment Rate’. As noted above, the degree of coordination interaction depends on how well co-actors adjust the speed of their button presses: to achieve synchronized pressing in the cooperation task, participants readjusted their response speed according to the feedback. If a participant’s response speed (faster or slower) was consistent with the feedback on the prior trial (‘−’ or ‘+’, respectively), the trial was treated as an effective adjustment toward successful cooperation and counted as an ‘Effective Adjustment’. The Effective Adjustments of the two participants were summed to create a dyad-level variable, with more effective adjustments indicating more efficient coordination interaction. Moreover, because participants could actively adjust their pressing speed after negative feedback (‘Lost!’) but keep a stable response speed to maintain continued cooperation after positive feedback (‘Win!’), we also calculated the rate of effective adjustments after failed cooperation (Post-failure Effective Adjustment Rate, PFEA):

Effective Adjustments = Effective Adjustment times (Sub01) + Effective Adjustment times (Sub02)

PFEA = Effective Adjustments after Lost trials / Total number of Lost trials.

(ii) Mean Cooperation Rate: the percentage of cooperation trials on which each dyad achieved a joint win.
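The ECI measures and the Mean Cooperation Rate can be sketched as below. This is one possible reading of the definitions, not the authors’ code: an adjustment on trial t is assumed to be judged against the participant’s own RT on trial t − 1 and the feedback shown after trial t − 1, and PFEA is computed literally as the dyad’s summed Effective Adjustments after Lost trials divided by the number of Lost trials that were followed by another trial. All helper names are hypothetical.

```python
def _feedback(rt_self: float, rt_partner: float) -> str:
    """'+' = faster than partner, '-' = slower (assumption: ties count as '-')."""
    return "+" if rt_self < rt_partner else "-"


def _is_effective(prev_rt: float, cur_rt: float, prev_feedback: str) -> bool:
    """Assumed reading of 'consistent with the feedback': speed up after '-',
    slow down after '+', relative to one's own RT on the previous trial."""
    return cur_rt < prev_rt if prev_feedback == "-" else cur_rt > prev_rt


def coordination_metrics(rt1: list[float], rt2: list[float]) -> tuple[int, float, float]:
    """Return (Effective Adjustments, PFEA, Mean Cooperation Rate in %) for one dyad."""
    n = len(rt1)
    # Win rule of the cooperation task: |RT1 - RT2| < 1/8 of the mean RT,
    # i.e. |RT1 - RT2| < (RT1 + RT2) / 16.
    won = [abs(a - b) < (a + b) / 16 for a, b in zip(rt1, rt2)]
    effective_total = 0
    effective_after_loss = 0
    losses_followed = 0  # Lost trials that have a following trial
    for t in range(1, n):
        fb1 = _feedback(rt1[t - 1], rt2[t - 1])
        fb2 = _feedback(rt2[t - 1], rt1[t - 1])
        effective = int(_is_effective(rt1[t - 1], rt1[t], fb1)) + int(
            _is_effective(rt2[t - 1], rt2[t], fb2))
        effective_total += effective
        if not won[t - 1]:
            effective_after_loss += effective
            losses_followed += 1
    pfea = effective_after_loss / losses_followed if losses_followed else float("nan")
    cooperation_rate = 100.0 * sum(won) / n
    return effective_total, pfea, cooperation_rate


if __name__ == "__main__":
    # The first trial is lost (50 ms gap); both members then adjust in the right direction.
    print(coordination_metrics([300, 280, 290], [250, 270, 300]))
```

Because both members’ adjustments are summed before dividing, this literal reading lets PFEA range from 0 to 2; dividing by 2 would give a per-person rate between 0 and 1.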

Exploratory behavioral analyses.

As an exploratory analysis of the temporal variation of cooperation, the cooperation performance of each member of a dyad was examined as a function of trial (i.e. over time). We hypothesized that interpersonal cooperation performance, especially coordination interaction within the dyad, would evolve in a time-sensitive manner as individuals engaged in interactive cooperation in our focus condition.

The fNIRS data analysis

Preprocessing

Preprocessing of the fNIRS data was performed in MATLAB (MathWorks, Natick, MA), following procedures similar to those of previous fNIRS studies. First, because head and body movements can cause artifacts in fNIRS signals, the correlation-based signal improvement method was used to remove motion artifacts from each CH, as implemented in the toolbox (for details, see Cui et al., 2012). Only Δoxy-Hb (HbO) time series were analyzed in the present study, because the HbO signal is more sensitive to changes in cerebral blood flow than the Δdeoxy-Hb signal (Lindenberger et al., 2009) and correlates robustly with the blood-oxygen-level-dependent (BOLD) signal measured by fMRI (Huppert et al., 2006; Hoshi, 2007). To reduce temporal autocorrelation in the fNIRS signals (Yang et al., 2020), the HbO time series in each CH, acquired at a raw sampling rate of 24 Hz, were downsampled to 10 Hz using MATLAB’s resample function, which uses interpolation and a finite impulse response anti-aliasing filter to resample the signal to the desired frequency and compensates for the filter’s delay (Duan et al., 2015; Rösch et al., 2021).
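As a worked check on the resampling step: MATLAB’s `resample(x, p, q)` (Signal Processing Toolbox) resamples a signal at p/q times its original rate, so going from 24 Hz to 10 Hz corresponds to the reduced ratio p/q = 5/12. The exact call used in the study is not reported; the values below simply follow from the stated rates. The anti-aliasing filter has to suppress content above the new Nyquist frequency, which lies far above the highest frequency analyzed later (0.7 Hz).

```latex
\begin{aligned}
\frac{f_{\text{new}}}{f_{\text{raw}}} &= \frac{10\ \text{Hz}}{24\ \text{Hz}} = \frac{5}{12}
  \;\Rightarrow\; p = 5,\ q = 12 \\
f_{\text{Nyquist,new}} &= \frac{f_{\text{new}}}{2} = 5\ \text{Hz} \;\gg\; 0.7\ \text{Hz}
\end{aligned}
```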

Inter-brain analysis

Wavelet transform coherence (WTC) analysis was used to assess the local correlation between the two HbO time series of each pair of participants in each CH as a function of frequency and time (for details, see Grinsted et al., 2004). WTC is considered more suitable than correlational approaches because it normalizes signal amplitude within each time window and is therefore less vulnerable to transient spikes induced by movement (Nozawa et al., 2016). Channel-wise coherence was calculated with the WTC package (http://noc.ac.uk/using-science/crosswavelet-wavelet-coherence) in custom MATLAB code. This method yields a two-dimensional matrix of coherence values, with columns representing time and rows representing frequency. Coherence values for all 22 CHs were obtained for each task-state session and each resting-state session.
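For reference, the wavelet coherence defined by Grinsted et al. (2004) is reproduced below in their notation. Here X and Y would be the two partners’ HbO series in the same CH, n indexes time, s is the wavelet scale, the asterisk denotes complex conjugation, and S is a smoothing operator in time and scale; the smoothing and wavelet settings are package defaults that are not restated in this section.

```latex
R_n^2(s) = \frac{\left| S\!\left( s^{-1} W_n^{XY}(s) \right) \right|^2}
                {S\!\left( s^{-1} \left| W_n^{X}(s) \right|^2 \right) \cdot S\!\left( s^{-1} \left| W_n^{Y}(s) \right|^2 \right)},
\qquad W_n^{XY}(s) = W_n^{X}(s)\, W_n^{Y*}(s)
```

Because the numerator is normalized by each series’ own smoothed power, R² lies between 0 and 1 at every time-scale point, which is why a transient amplitude spike in one series has limited influence on the coherence map.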

Following previous studies, INS during the resting state was regarded as spurious synchronization (Iacoboni et al., 2004). Thus, the averaged coherence values (estimated by WTC) of the baseline phase were subtracted from those of the task session to obtain task-related INS (i.e. INS(task-related) = INS(task) − INS(rest)). The initial resting-state phase (180 s) served as the baseline for the first task session, and the inter-task resting phase (the second 180 s rest) served as the baseline for the second task session.

INS analysis in emotional movie-viewing.

We first tested the hypothesis that INS would be higher during happiness movie-viewing than during neutral movie-viewing. For this purpose, coherence values were time-averaged across each movie-viewing phase and the corresponding resting-state session. Emotion-induced INS was calculated as movie-viewing coherence minus resting-state coherence and then converted into Fisher-z values for further analysis (Chang and Glover, 2010; Cui et al., 2012). To identify the frequency characteristics of the effect, paired t-tests comparing the two movie-viewing conditions were performed on the coherence values across the full frequency range (0.01–0.7 Hz) for all CHs. Following previous studies, data above 0.7 Hz were excluded to avoid aliasing of higher-frequency physiological noise such as cardiac activity (∼0.8–2.5 Hz) (Tong et al., 2011; Barrett et al., 2019), and data below 0.01 Hz were excluded to remove very low-frequency fluctuations (Guijt et al., 2007). This analysis yielded a series of P-values that were FDR-corrected across all frequency bands (75 in total), which allowed us to determine the frequency of interest (FOI). Finally, paired-sample t-tests compared coherence values between the happiness and neutral movie-viewing conditions within the identified FOI, with FDR correction across all CHs (P < 0.05).
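The text does not name the FDR procedure; Benjamini–Hochberg is the most common choice, so the sketch below assumes it. It adjusts a list of P-values (for example one per frequency band, or one per CH) and returns the indices that survive at q = 0.05. It is a stdlib-only illustration with made-up P-values, not the study’s analysis code.

```python
def fdr_bh(p_values: list[float]) -> list[float]:
    """Benjamini-Hochberg adjusted P-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest P-value down so adjusted values stay monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def significant(p_values: list[float], q: float = 0.05) -> list[int]:
    """Indices (e.g. frequency bands or CHs) that survive FDR at level q."""
    return [i for i, p_adj in enumerate(fdr_bh(p_values)) if p_adj < q]


if __name__ == "__main__":
    p = [0.01, 0.04, 0.03, 0.5]
    print(fdr_bh(p))       # approx. [0.04, 0.0533, 0.0533, 0.5]
    print(significant(p))  # [0]
```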

INS analysis in cooperation tasks.

WTC analysis was also used to calculate INS between the members of each dyad per CH during the cooperation task. We focused on the period band of 3.2–12.8 s (0.08–0.31 Hz); this range corresponds to the duration of our task trials (average duration: 7.8 s for cooperative trials and 5.4 s for competitive trials) and is consistent with the FOIs selected in past research (Cheng et al., 2015; Pan et al., 2017). Cooperation-related INS was defined as task coherence minus resting-state coherence in this frequency band across all trials, and coherence values were converted to Fisher-z values before statistical analysis. The difference in cooperation-related coherence between emotion conditions was calculated for each pair of participants, and CHs with a significant positive difference were identified with paired t-tests, FDR-corrected across all CHs. In addition, to rule out the possibility that INS during cooperation simply emerges from synchronous finger movement without any inclination to cooperate, a parallel analysis was conducted on the competition task in the same frequency band.
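The period-to-frequency conversion (f = 1/T gives 1/12.8 ≈ 0.078 Hz and 1/3.2 ≈ 0.31 Hz) and the band-limited, baseline-corrected INS can be sketched as below. The coherence matrices are toy values rather than real WTC output, and the helper names are hypothetical. The sketch applies the Fisher-z transform after the task-minus-rest subtraction because that is the order stated in the text; transforming each value before subtracting is another option.

```python
import math


def period_band_to_hz(p_min_s: float, p_max_s: float) -> tuple[float, float]:
    """Convert a period band in seconds to a frequency band in Hz (f = 1 / T)."""
    return 1.0 / p_max_s, 1.0 / p_min_s


def band_mean(coherence, periods, p_min_s, p_max_s):
    """Average WTC coherence over time and over rows whose period lies in the band.

    coherence[r][t]: row r = period/frequency, column t = time (as in WTC output).
    """
    values = [v for row, p in zip(coherence, periods) if p_min_s <= p <= p_max_s
              for v in row]
    return sum(values) / len(values)


def task_related_ins(coh_task, coh_rest, periods, p_min_s=3.2, p_max_s=12.8):
    """Band-averaged task coherence minus rest coherence, then Fisher-z (atanh)."""
    diff = band_mean(coh_task, periods, p_min_s, p_max_s) - band_mean(
        coh_rest, periods, p_min_s, p_max_s)
    return math.atanh(diff)


if __name__ == "__main__":
    print(period_band_to_hz(3.2, 12.8))  # about (0.078, 0.3125), i.e. 0.08-0.31 Hz
    periods = [2.0, 4.0, 8.0, 16.0]  # hypothetical scale grid in seconds
    task = [[0.9, 0.9], [0.6, 0.8], [0.7, 0.5], [0.1, 0.1]]
    rest = [[0.9, 0.9], [0.4, 0.4], [0.4, 0.4], [0.1, 0.1]]
    print(task_related_ins(task, rest, periods))  # atanh(0.65 - 0.40)
```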

The t values of 22 CHs were converted using the xjview toolbox into an image file (t-test map) (http://www.alivelearn.net/xjview/), and the image file was visualized by BrainNet Viewer toolbox (http://www.nitrc.org/projects/bnv/).

Validating the INS through permutation test

To test whether the higher INS found during happiness movie-viewing was merely a by-product of the shared video stimulus, we added a validation analysis using a pair randomization permutation test. If the higher INS were driven merely by processing of the stimulus material, it should persist when partners are shuffled; if it reflects the experience shared within genuine dyads, it should disappear for shuffled pairs. Each participant was randomly re-paired with a new partner with whom he or she had not interacted but who had completed the same task, with the same time series, in the same condition. In this way, 28 new pseudo-dyads were created, and the INS analysis was then repeated.

The same validation was applied to the cooperation task to rule out the possibility that the higher INS found in our focal condition merely resulted from the two participants performing a common task (i.e. doing roughly the same activity) rather than from any additional social factors. Note that, within a pair, the length of each participant’s time series during the cooperation task was not identical owing to differences in response times and variable inter-trial intervals, whereas wavelet coherence analysis requires both time series to be equal in duration (Grinsted et al., 2004). To address this, the longer time series in each random pair was cut to the length of the shorter one (in accordance with Reindl et al., 2018).

The permutation was conducted 1000 times to yield a null distribution of the coherence values for each emotion condition separately. Significance (P < 0.05) was assessed by comparing the coherence values from the original dyads with those from the 1000 random-pair permutations. We expected genuine interactions to induce significantly higher INS than pseudo-interactions.
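The re-pairing procedure described above can be sketched as a permutation test over partner assignments. Several choices here are assumptions rather than details given in the text: partners are re-paired with a derangement (so no one keeps the original partner), the P-value is one-sided with a +1 correction, and a plain Pearson correlation (`pearson`, hypothetical) stands in for wavelet coherence purely to keep the example self-contained. The simulated dyads are hypothetical; in the study, the test would be run separately for each emotion condition.

```python
import random
from statistics import mean
from typing import Callable, List, Sequence, Tuple

Series = Sequence[float]


def pearson(x: Series, y: Series) -> float:
    """Stand-in synchrony measure for this sketch; NOT wavelet coherence."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def trim_to_shorter(x: Series, y: Series) -> Tuple[Series, Series]:
    """Cut the longer series so both have equal length."""
    n = min(len(x), len(y))
    return x[:n], y[:n]


def derangement(n: int, rng: random.Random) -> List[int]:
    """Random permutation with no fixed points: nobody keeps the original partner."""
    if n < 2:
        raise ValueError("need at least two dyads to re-pair partners")
    while True:
        perm = list(range(n))
        rng.shuffle(perm)
        if all(i != p for i, p in enumerate(perm)):
            return perm


def pair_permutation_test(members_a: List[Series], members_b: List[Series],
                          sync: Callable[[Series, Series], float],
                          n_perm: int = 1000, seed: int = 0) -> Tuple[float, float]:
    """Compare mean synchrony of real dyads with re-paired pseudo-dyads."""
    rng = random.Random(seed)

    def group_mean(pairs) -> float:
        return mean(sync(*trim_to_shorter(a, b)) for a, b in pairs)

    observed = group_mean(zip(members_a, members_b))
    n = len(members_a)
    null = []
    for _ in range(n_perm):
        perm = derangement(n, rng)
        null.append(group_mean((members_a[i], members_b[perm[i]]) for i in range(n)))
    # One-sided P-value; the +1 terms count the observed pairing itself.
    p = (1 + sum(v >= observed for v in null)) / (n_perm + 1)
    return observed, p


# Hypothetical dyads whose members share a common signal plus private noise.
sim = random.Random(1)
a_members, b_members = [], []
for d in range(8):
    shared = [sim.gauss(0, 1) for _ in range(50)]
    a_members.append([s + 0.3 * sim.gauss(0, 1) for s in shared])
    # Unequal lengths mimic differing response times; trimming handles them.
    b_members.append([s + 0.3 * sim.gauss(0, 1) for s in shared] + [0.0] * d)
obs, p = pair_permutation_test(a_members, b_members, pearson, n_perm=200, seed=2)
```

With genuinely coupled simulated dyads, every pseudo-dyad average falls below the observed one, so `p` reaches its floor of 1/(n_perm + 1).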

Correlation and mediation analysis

To identify mechanistic processes that may link cooperation behavior with fNIRS signals, Pearson’s correlation coefficients were calculated between cooperation behavior efficiency (both ECI and mean cooperation rate) and cooperation-related INS at the CHs that showed significant effects. Next, because the primary interest of our study was the neural underpinnings of positive emotion and interpersonal cooperation, we employed Pearson’s correlation analysis to assess the relationship between emotion-induced INS and ECI. Finally, we performed a mediation analysis to test which factor (i.e. effective adjustments or PFEA) mediates the effect of emotion-induced INS on cooperation-related INS.

Results

Validation of emotion induction

Behavioral ratings confirmed that, compared with the neutral movie, the happiness movie elicited strong positive emotional experiences on the happiness-related items of the emotion self-assessment questionnaire (e.g. ‘Interested’, ‘Happily’, ‘Enthusiastic’ and ‘Active’), with mean ratings ranging from 3.13 to 3.77 on the 5-point Likert scale. In the neutral condition, all mean item ratings were below 2.55 except for ‘Peaceful’ (3.73). Paired t-tests (Bonferroni corrected; see Supplementary Table S1 in the Supplementary Materials) showed significantly higher ratings on the above happiness-related items in the happiness condition than in the neutral condition (all Ps < 0.001, ts > 4.92) but a lower rating on ‘Peaceful’ (t(55) = −6.87, P < 0.001, Cohen’s d = −1.85). Furthermore, this emotion induction effect remained significant after controlling for baseline ratings (pre-video emotional state scores) (Bonferroni corrected; see Supplementary Table S2 in the Supplementary Materials).

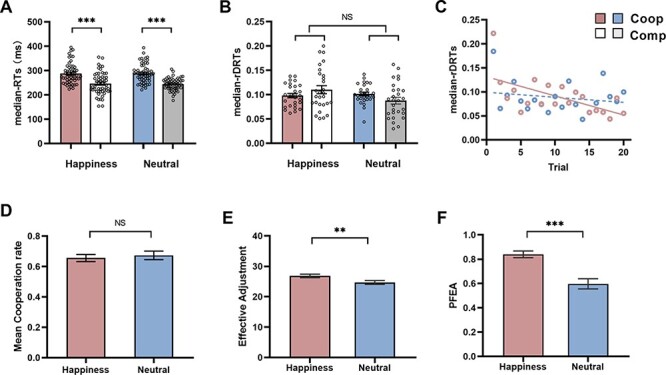

Behavioral performance in button pressing task

A two-way repeated-measures analysis of variance (task type: Cooperation vs Competition × emotion condition: Happiness vs Neutral) on the median-RTs revealed a significant main effect of task type (F(1, 55) = 17.01, P < 0.001, η² = 0.75). Participants responded faster in the competition task than in the cooperation task (Figure 2A). This finding probably reflects the different strategies that participants adopted in the cooperation and competition tasks, which was confirmed by their subjective reports. Specifically, in the cooperation task, most participants (78% of the total) reported that they timed their button press according to their partner’s response. In contrast, in the competition task almost all participants (92% of the total) reported pressing the button as fast as possible after the circle changed color from gray to green. Neither the main effect of emotion nor the interaction was significant (Ps > 0.1). A similar analysis of the median-rDRTs revealed no main effects or interaction (all Fs < 2.39, Ps > 0.05) (Figure 2B).

Fig. 2.

Button-pressing task performance. (A) The median response times (median-RTs) in the happiness and neutral emotion conditions on both the interpersonal cooperation and competition tasks. (B) The median relative difference in response times (median-rDRTs). (C) Median-rDRTs as a function of trial number for the two conditions. (D) The mean cooperation rate in each condition. (E) The number of effective adjustments on the cooperation task in each condition. (F) Post-failure Effective Adjustment Rate (PFEA) on the cooperation task in each condition. Coop: cooperation. Comp: competition. *P < 0.05, **P < 0.01, ***P < 0.001; NS, not significant. Error bars in all panels indicate SEM.

To quantify interpersonal cooperation, a paired t-test on the mean cooperation rate revealed no significant difference between emotion conditions (P > 0.05) (Figure 2D). Notably, for the ECI, a paired t-test indicated more effective adjustments in the happiness condition (M = 27, SEM = 0.57) than in the neutral condition (M = 25, SEM = 0.62) (t(27) = 3.04, P = 0.005, Cohen’s d = 1.17) (Figure 2E). Moreover, we examined the effectiveness of coordinated interaction after cooperation failed, i.e. the PFEA. As expected, PFEA was significantly higher in the happiness condition (M = 0.84, SEM = 0.07) than in the neutral condition (M = 0.60, SEM = 0.042) during the interpersonal cooperation task, t(27) = 5.179, P < 0.001, Cohen’s d = 1.99 (Figure 2F). A series of paired t-tests was conducted to estimate the effect of emotion condition on participants’ subjective ratings of cooperation performance. Each rating score was averaged within the dyad to create a dyad-level variable for analysis. The results revealed no differences between the happiness and neutral conditions in the evaluation of cooperation performance (all Ps > 0.05), implying that the happiness and neutral conditions produced similar subjective attitudes toward cooperation in the present study.
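The paragraph above reports paired t-tests together with Cohen’s d, but it does not state which d formula was used. The reported effect sizes are numerically consistent with the conversion d = 2t/√df (e.g. 2 × 3.04/√27 ≈ 1.17 and 2 × 5.179/√27 ≈ 1.99); the sketch below assumes that convention and, for comparison, also shows the within-pairs d_z = t/√n, which gives a much smaller value for the same t. The helper names are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Paired-samples t statistic and its degrees of freedom."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def d_from_t(t: float, df: int) -> float:
    """Cohen's d via d = 2t / sqrt(df) (assumed convention)."""
    return 2 * t / math.sqrt(df)


def dz_from_t(t: float, n: int) -> float:
    """Alternative within-pairs convention: d_z = t / sqrt(n)."""
    return t / math.sqrt(n)


# Reported statistics for 28 dyads (df = 27).
print(round(d_from_t(3.04, 27), 2))   # 1.17 (effective adjustments)
print(round(d_from_t(5.179, 27), 2))  # 1.99 (PFEA)
print(round(dz_from_t(3.04, 28), 2))  # 0.57 under the d_z convention
```

Because the two conventions differ by roughly a factor of two for paired designs, naming the formula alongside each reported d makes the effect sizes comparable with other studies.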

Finally, we examined the temporal dynamics of the cooperation task in a more fine-grained way. Although no significant emotion difference in median-rDRTs was observed in the interpersonal cooperation task, linear regression analyses revealed a linear decrease in median-rDRTs across trials (i.e. the difference between partners’ response times became smaller over time) in the happiness condition (β = −0.602, P < 0.001) but not in the neutral condition (β = −0.191, P = 0.42) (Figure 2C). This finding suggests that the responses of the two members of a dyad became progressively aligned in the happiness condition, reflecting coordination interaction during interpersonal cooperation (see Supplementary Figure S1 in the Supplementary Material).
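The trend analysis above regresses median-rDRT on trial number and reports a standardized β. A minimal sketch, assuming ordinary least squares with trial number as the only predictor: in that case β equals the raw slope rescaled by SD(trial)/SD(rDRT), which is identical to Pearson’s r between trial number and rDRT. The function name `trial_trend` and the five-trial series are hypothetical, and the sketch does not compute the P-value.

```python
from statistics import mean, pstdev
from typing import Sequence, Tuple


def trial_trend(y: Sequence[float]) -> Tuple[float, float]:
    """OLS of y on trial number 1..n: returns (raw slope, standardized beta)."""
    x = list(range(1, len(y) + 1))
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    # With a single predictor, beta = slope * SD(x) / SD(y), which equals Pearson's r.
    beta = slope * pstdev(x) / pstdev(y)
    return slope, beta


# Hypothetical median-rDRTs shrinking over five trials (not study data).
slope, beta = trial_trend([10, 8, 6, 4, 2])
print(slope, round(beta, 3))  # -2.0 -1.0
```

A perfectly linear decline gives β = −1; the reported β = −0.602 in the happiness condition therefore indicates a clear but noisy convergence of partners’ response times across trials.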

INS during emotional movie-viewing

In terms of frequency characteristics, INS was significantly higher during happiness movie-viewing than during neutral movie-viewing at frequencies between 0.13 and 0.18 Hz (7 frequency bands in total; all FDR-corrected; Figure 3A). These frequencies lie outside the ranges of physiological responses associated with cardiac pulsation (∼0.8–2.5 Hz), respiration (∼0.2–0.3 Hz) and Mayer waves (∼0.07–0.12 Hz), and this range was therefore chosen as the FOI for subsequent analyses. Paired t-tests on the coherence values within these FOIs (FDR-corrected) showed significantly larger coherence values during happiness than during neutral movie-viewing at CH17 (t(27) = 4.12, P-corrected = 0.004) and CH22 (t(27) = 5.02, P-corrected < 0.001), roughly located in the left inferior frontal gyrus (IFG) (see Figure 3B).

Fig. 3.

(A) Selection of FOI range. Paired t-test results for interpersonal neural synchronization of dyads during emotional movie-viewing (i.e. coherence values in happiness movie-viewing vs coherence values in neutral movie-viewing) across the full frequency range (0.01–0.7 Hz) on 22 CHs. Note that frequencies are not evenly spaced on the x-axis. The red dashed box highlights the FOIs (∼0.13–0.22 Hz). (B) The emotion-induced coherence values at CH17 and CH22 were significantly higher in the happiness than in the neutral condition in the 0.13–0.22 Hz frequency band. ***P < 0.001, FDR-corrected.

Increased cooperation-related INS in the happiness condition

A paired t-test on Happiness_Coop vs Neutral_Coop showed greater coherence values in the happiness condition than in the neutral condition during cooperation at CH4 after FDR correction (t(27) = 3.75, P-corrected = 0.02, Cohen’s d = 1.43), roughly located in the left middle frontal cortex (LMFC) (Figure 4A and B).

Fig. 4.

INS during the button-pressing task: differences between emotion conditions. (A) Paired t-test map for Happiness_Coop vs Neutral_Coop. (B) Comparison of coherence values at CH4 during cooperation under the two emotion conditions. (C) Paired t-test map for Happiness_Comp vs Neutral_Comp. (D) The correlation between PFEA and coherence values at CH4 was significant for the happiness (purple) but not for the neutral (gray) condition. Error bars indicate SEM. *P < 0.05, **P < 0.01.

Furthermore, a parallel analysis was performed on the coherence values in the interpersonal competition task (Happiness_Comp vs Neutral_Comp), but no significant difference in coherence values was found at any of the 22 CHs (all ts < 1.73, Ps > 0.05, uncorrected; Figure 4C). Thus, synchronized button-pressing movements alone appear to have little power to explain the cooperation-related INS we identified.

Validation analyses

The pair randomization permutation procedure showed that the higher INS was robust for happiness movie-viewing but not for neutral movie-viewing. In addition, during the cooperation task, the average cooperation-related coherence values of the original dyads in each emotion condition significantly exceeded those of the 1000 random pairings in the same frequency band (all Ps < 0.001). (See Supplementary Figures S3 and S4 in the Supplementary Materials for further details.)

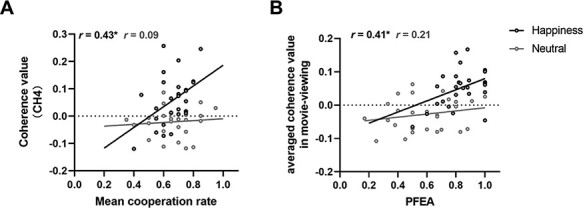

Association of the cooperation-related INS with cooperation efficiency

Pearson’s correlation analysis was conducted between the ECI indices and the cooperation-related INS at the significant channel (CH4). The coherence values at CH4 were positively associated with PFEA in the happiness condition (r = 0.50, P = 0.007) but not in the neutral condition (r = 0.10, P = 0.60) (Figure 4D). We then compared these two correlations statistically using the tests implemented in the R package cocor (Diedenhofen and Musch, 2015). Silver’s z test (Silver et al., 2004) revealed a marginally significant difference between the two correlations, z = 1.59, P = 0.056 (one-tailed). This finding suggests that the association between PFEA and cooperation-related INS during interpersonal cooperation was specific to dyads who had experienced happiness together. However, effective adjustments were not significantly correlated with cooperation-related INS in either the happiness or the neutral condition (Ps > 0.05).

Additionally, to test whether cooperation-related INS can serve as a neural indicator of cooperation performance, we examined its relationship with the mean cooperation rate (in accordance with Cui et al., 2012; Pan et al., 2017). Pearson’s correlation analysis demonstrated a significant positive association between INS at CH4 and the mean cooperation rate in the happiness condition (r = 0.43, P = 0.021) but not in the neutral condition (r = 0.093, P > 0.05) (Figure 5A). However, the difference between these two correlations was not significant (Silver’s z test, z = 1.30, P = 0.1, one-tailed).

Fig. 5.

(A) Correlation between the cooperation-related coherence at CH4 and the mean cooperation rate was significant in happiness but not in neutral condition. (B) Correlation between the emotion-induced INS and PFEA was significant in happiness but not in neutral condition. *P < 0.05.

In addition, to address the potential contribution of subjective experience during the cooperation task to the cooperation-related INS, we examined whether self-reported satisfaction with one’s own or the partner’s performance and perceived cooperativeness correlated with cooperation-related INS. None of these correlations was significant (all Ps > 0.05).

Association of emotion-induced INS with coordination interaction

Pearson’s correlation analysis was employed to assess whether the emotion-induced INS plays a direct role in cooperation-related INS. As expected, no significant correlation was found between INS in the left IFG during happiness movie-viewing and cooperation-related INS in the LMFC (P > 0.05). Notably, however, the averaged coherence values during happiness movie-viewing were significantly positively associated with the coordination interaction index PFEA (r = 0.41, P = 0.031, Figure 5B).

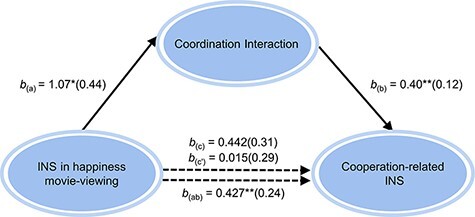

Mediation analysis

The above analyses revealed that (i) increased emotion-induced INS in dyads watching the happiness movie was associated with higher coordination interaction (indexed by PFEA) in interpersonal cooperation; (ii) in the happiness condition, cooperation-related INS was positively related to PFEA, and this neural synchronization can be regarded as a neural indicator of cooperation performance given its positive correlation with the mean cooperation rate; and (iii) no direct correlation between emotion-induced INS and cooperation-related INS was found. Given these pairwise relationships, we inferred that enhanced emotion-induced INS during happiness movie-viewing enabled dyads to coordinate their actions with their partners more effectively during the interpersonal cooperation task, which may in turn be characterized by higher cooperation-related INS. To test this, a mediation analysis was conducted using Model 4 of the PROCESS macro for SPSS (Hayes, 2017) with 5,000 bootstrap samples to generate 95% confidence intervals (CIs), a method that does not rely on the assumption of a normally distributed sampling distribution of the indirect effect (Preacher and Hayes, 2008). Mediated effects were considered statistically significant at the 0.05 level if the 95% CI did not include zero. The indirect effect of INS during happiness movie-viewing on cooperation-related INS through PFEA was significant (b = 0.427, SEM = 0.24, 95% CI [0.0447, 0.9759]), suggesting that higher INS during the shared experience of happiness increases PFEA, which in turn produces greater cooperation-related INS. The direct effect was not significant (b = 0.015, SEM = 0.294, t = 0.513, 95% CI [−0.591, 0.621]), indicating that INS during happiness movie-viewing had no effect on cooperation-related INS once its effect through PFEA was controlled for.
All paths for the full process model and their corresponding coefficients are illustrated in Figure 6.
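For readers without SPSS, the logic of a simple mediation model with a bootstrapped indirect effect can be sketched in Python. This is not the PROCESS macro and will not reproduce its exact numbers; it assumes ordinary least squares for the paths (a: M regressed on X; b and c′: Y regressed on X and M) and a percentile bootstrap CI for a × b. In the present study X would be the emotion-induced INS during happiness movie-viewing, M the PFEA and Y the cooperation-related INS. The function names and the simulated data are hypothetical.

```python
import random
from statistics import mean
from typing import List, Sequence, Tuple


def mediation_paths(x: Sequence[float], m: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """OLS paths of a simple mediation model: a (M ~ X), b and c' (Y ~ X + M)."""
    mx, mm, my = mean(x), mean(m), mean(y)
    dx = [v - mx for v in x]
    dm = [v - mm for v in m]
    dy = [v - my for v in y]
    sxx = sum(u * u for u in dx)
    smm = sum(u * u for u in dm)
    sxm = sum(u * v for u, v in zip(dx, dm))
    sxy = sum(u * v for u, v in zip(dx, dy))
    smy = sum(u * v for u, v in zip(dm, dy))
    a = sxm / sxx
    det = sxx * smm - sxm ** 2
    b = (sxx * smy - sxm * sxy) / det
    c_prime = (smm * sxy - sxm * smy) / det
    return a, b, c_prime


def bootstrap_indirect(x, m, y, n_boot: int = 5000, level: float = 0.95, seed: int = 0):
    """Indirect effect a*b with a percentile bootstrap confidence interval."""
    rng = random.Random(seed)
    n = len(x)
    a, b, _ = mediation_paths(x, m, y)
    estimates: List[float] = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        try:
            ai, bi, _ = mediation_paths([x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx])
        except ZeroDivisionError:
            continue  # degenerate resample with no variance; skip it
        estimates.append(ai * bi)
    estimates.sort()
    k = int(round((1 - level) / 2 * len(estimates)))
    return a * b, (estimates[k], estimates[len(estimates) - 1 - k])


# Hypothetical data in which M carries the effect of X on Y.
sim = random.Random(5)
xs = [sim.gauss(0, 1) for _ in range(100)]
ms = [0.8 * v + 0.5 * sim.gauss(0, 1) for v in xs]
ys = [0.7 * v + 0.5 * sim.gauss(0, 1) for v in ms]
indirect, (ci_lo, ci_hi) = bootstrap_indirect(xs, ms, ys, n_boot=500, seed=1)
```

As in the decision rule above, the indirect effect is deemed significant when the bootstrap CI excludes zero; a full mediation pattern additionally shows a c′ whose CI includes zero.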

Fig. 6.

The mediation model. More effective coordination interaction (PFEA) mediated the effect of emotion-induced INS in happiness movie-viewing on cooperation-related INS. Standard errors in parentheses, *P < 0.05, **P < 0.01.

Discussion

Positive emotions have been found to facilitate social interaction (for a review, see van Kleef and Fischer, 2016). It is therefore important to understand the neural processes through which positive emotion exerts this social facilitation. The present research showed that when individuals experience happiness together with others, their brain activity becomes more synchronized, which is associated with a greater inclination to cooperate with their partner through more effective coordination interaction during a subsequent interpersonal cooperation task and, consequently, with stronger cooperation-related INS within the dyad. These results are discussed in detail below.

We found that brain activity within dyads was more synchronized during happiness movie-viewing than during non-emotional (neutral) movie-viewing, and the detected INS was roughly located in the left IFG. This area is generally considered a component of the mirror neuron system, which plays a role in shared intention (Tanabe et al., 2012; Cacioppo et al., 2014), social processing (Liu et al., 2015) and empathy with others’ emotions (Decety, 2010; Asada, 2015). More recent work from the perspective of ‘second-person neuroscience’ has shown that the IFG exhibits a common pattern of activity across speakers and listeners and underlies the alignment of emotion with others (Golland et al., 2017; Smirnov et al., 2019). Here, we found higher neural synchronization in the left IFG when dyads viewed a highly positive rather than an emotionally neutral movie. Taken together with previous models of IFG function, our findings further support the role of the left IFG as a neural mechanism through which affective information is shared between individuals, which subsequently increases the similarity in how individuals perceive and experience their common social environment.

Note that the INS during happiness movie-viewing was associated with subsequent mutual coordination interaction (i.e. feedback-based adjustment of button pressing) for cooperation, as indexed by PFEA (Figure 5B). As described previously, interpersonal cooperation in the button-pressing task involved interactive exchanges in the form of simultaneous button presses, and the dyad members depended only on the feedback of each trial to coordinate with their partner, which triggers synchronized mentalization processes (a tendency to cooperate with each other) and self-control processes (pressing the button faster or slower on the next trial). Given these characteristics, the higher PFEA in the happiness condition than in the neutral condition may suggest that a shared positive emotional experience helps dyad members coordinate their concrete actions for cooperation success. This may be interpreted in light of a previous study showing that the perceived level of happiness or pleasure signals motivational congruence, as when the other person is a friend or ally (De Melo et al., 2014). Although the participants within each dyad were unacquainted before the experiment, the shared emotional experience may have made them feel similar to each other and strongly motivated them to adjust their actions and devote themselves to the task, as reflected in the higher PFEA in the interpersonal cooperation task. However, our study did not determine whether social bonding between dyad members was stronger in the happiness condition; further investigation may clarify this issue.

Consistent with earlier research, the present study also provides information about the inter-brain neural mechanisms involved in cooperation. We observed higher cooperation-related INS in the LMFC, and its association with cooperation performance (both PFEA and mean cooperation rate) was confirmed in the happiness but not in the neutral condition. The LMFC is considered to be engaged in adaptive behavior during human interaction and in processing and predicting relevant information about others in social interactions (Koster-Hale et al., 2013). Previous studies have found greater LMFC activity even when people simply watch others in social interaction (Iacoboni et al., 2004) or participate in one-way interactions (Schippers et al., 2010). INS in the LMFC during a simultaneous time-counting task can also predict subsequent prosocial inclination (Hu et al., 2017). Our study therefore further supports the importance of the LMFC in social interactions.

Further, the significant positive correlation between PFEA and cooperation-related INS indicated that coordination interaction was also mirrored by inter-brain coupling in the dyads: the more effective the coordination interaction, the larger the cooperation-related INS in the LMFC (Figure 4D). This finding echoes recent neuroimaging studies showing that mental effort toward cooperation promotes inter-brain synchronization (Pan et al., 2017; Wang et al., 2019). Moreover, previous studies using the same experimental task reported positive associations between brain coherence and mean cooperation rate (Cheng et al., 2015; Baker et al., 2016; Pan et al., 2017; Wang et al., 2019), and our study provides further evidence for this relationship (Figure 5A). In addition, control analyses were conducted to clarify the explanatory power of the enhanced INS observed in the interpersonal cooperation task by ruling out two alternative accounts: (i) synchronous finger movements within the dyad without a common goal to achieve and (ii) individuals engaged in similar task situations without genuine interaction. As expected, these control analyses confirmed that the dyads’ interaction efficiency was reflected in pair-specific cooperation-related INS. Hence, our results further support the ‘cooperative interaction hypothesis’ that the INS in the present study derives from synchronized mentalization processes and continuously coherent interpersonal interaction (predicting the other’s movements and controlling one’s own) (Pan et al., 2017; Shamay-Tsoory et al., 2019).

More interestingly, we found that more effective coordination interaction (PFEA) fully mediated the relationship between emotion-induced INS during happiness movie-viewing and cooperation-related INS (Figure 6). A previous study suggested that in a Prisoner’s Dilemma game, larger INS during interactive decision-making can be driven by a higher level of perceived cooperativeness in the dyad (Hu et al., 2018), reflecting the so-called ‘mentalizing’ process (Frith and Frith, 2003). Consistent with these findings, our results further support a role of mentalizing, expressed through immediately profitable actions, in the INS that favors efficient dyadic cooperation. In addition, previous studies have shown that emotions are associated with INS when individuals are exposed to similar emotional content (Nummenmaa et al., 2012, 2018). We extend these findings with new insights into social facilitation. Indeed, previous research suggested that the increased INS during cooperation in lover dyads compared with friend and stranger dyads might be accounted for by emotional involvement (Pan et al., 2017). In a recent fNIRS-based hyperscanning study, Reindl et al. (2018) found that the emotional connection between parent and child was related to INS during their cooperation. However, these studies did not explicitly examine the predictive relationship between the two measures. Taken together, our study extends previous findings by showing that higher INS during a shared experience of happiness within dyads, which may improve mutual understanding and shared representation, predicts higher cooperation-related INS, and that this effect is mediated by more effective ongoing coordination interaction between the interacting partners.

Regarding the other coordination interaction index tested in the present study, ‘effective adjustments’, although it showed a condition difference favoring the happiness condition, it was not significantly associated with cooperation-related INS. We posit that effective adjustments were less influenced by whether cooperation succeeded or failed and were linked only to the feedback (‘+’ or ‘−’) on the screen. In addition, autonomic physiological fluctuations may have confounded participants’ pressing times.

Several limitations of this study should be noted. First, our fNIRS optode probe set covered only the prefrontal regions, leaving other brain regions unexplored. However, previous studies revealed that emotional movies elicit neural coupling in occipital, prefrontal and temporal cortices (Nummenmaa et al., 2012; Bacha-Trams et al., 2020) and that cooperation-related INS occurs in the right temporo-parietal region (Tang et al., 2016; Wang et al., 2019). The roles of these regions could be further examined with whole-brain measurement. Second, previous studies have found significant differences in mean cooperation rate among experimental conditions (e.g. a higher cooperation rate in lover dyads than in friend and stranger dyads; Pan et al., 2017), but our study found no significant effect on cooperation behavior outcomes. One possible explanation, following Wang et al. (2019), is that cooperation rates change over the course of the task together with increasing INS (in blocks 2 and 3), implying that cooperation requires a learning process. In the present study, the short experimental setting (only one block of 20 trials for the cooperation task) may have masked such dynamic facilitation effects on the mean cooperation rate; future research could use more trials to further assess the effects of emotion-dependent INS on cooperation outcomes. Third, the computer-based button-pressing task has low ecological validity compared with real-life social situations. Therefore, future research should use ‘real-social’ tasks that are relevant to specific work environments.

Conclusion

Here, we provide empirical hyperscanning evidence that contributes, from the perspective of two-person neuroscience, to a better understanding of the neural underpinnings through which positive emotion modifies behavioral and neural features of human interpersonal cooperation. We propose that INS across individuals during a shared positive emotional experience attunes their behavior to each other and enables more effective coordination interaction during the interpersonal cooperation task, subsequently facilitating cooperation-related INS. Extrapolating from these experimental results to the real world, our study may help explain why people prefer to share positive emotional experiences (e.g. watching a movie or a football final together), which may be essential for building and maintaining partnerships and facilitating interpersonal interactions.

Supplementary Material

Acknowledgements

We would like to thank Jieqiong Liu, Xiaoyu Zhou and Enhui Xie for their valuable comments on earlier drafts. We would also like to thank all the participants of our experiment.

Contributor Information

Yangzhuo Li, School of Psychology and Cognitive Science, East China Normal University, Shanghai 200062, China.

Mei Chen, School of Psychology and Cognitive Science, East China Normal University, Shanghai 200062, China.

Ruqian Zhang, School of Psychology and Cognitive Science, East China Normal University, Shanghai 200062, China.

Xianchun Li, School of Psychology and Cognitive Science, East China Normal University, Shanghai 200062, China; Shanghai Key Laboratory of Mental Health and Psychological Crisis Intervention, Affiliated Mental Health Center (ECNU), School of Psychology and Cognitive Science, East China Normal University, Shanghai 200062, China.

Funding

This work was supported by the Shanghai Key Base of Humanities and Social Sciences (Psychology-2018), the National Natural Science Foundation of China (32071082 and 71942001), Key Specialist Projects of Shanghai Municipal Commission of Health and Family Planning (ZK2015B01) and the Programs Foundation of Shanghai Municipal Commission of Health and Family Planning (201540114).

Supplementary data

Supplementary data are available at SCAN online.

Conflict of interest

The authors declare that they have no actual or potential conflicts of interest concerning this work.

References

- Aknin L.B., Van de Vondervoort J.W., Hamlin J.K. (2018). Positive feelings reward and promote prosocial behavior. Current Opinion in Psychology, 20, 55–9. [DOI] [PubMed] [Google Scholar]

- Asada M. (2015). Development of artificial empathy. Neuroscience Research, 90, 41–50. [DOI] [PubMed] [Google Scholar]

- Bacha-Trams M., Ryyppo E., Glerean E., Sams M., Jaaskelainen I.P. (2020). Social perspective taking shapes brain hemodynamic activity and eye-movements during movie viewing. Social Cognitive and Affective Neuroscience, 15(2), 175–91. doi: 10.1093/scan/nsaa033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baker J.M., Liu N., Cui X., et al. (2016). Sex differences in neural and behavioral signatures of cooperation revealed by fNIRS hyperscanning. Scientific Reports, 6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Banich M.T., Floresco S. (2019). Reward systems, cognition, and emotion: introduction to the special issue. Cognitive, Affective and Behavioral Neuroscience, 19(3), 409–14. [DOI] [PubMed] [Google Scholar]

- Barrett K., Barman S., Boitano S. (2019). Ganong’s Review of Medical Physiology. Appleton and Lange ISE. NY: McGraw-Hill Education. [Google Scholar]

- Bell R., Röer J.P., Buchner A. (2013). Adaptive memory: the survival-processing memory advantage is not due to negativity or mortality salience. Memory and Cognition, 41(4), 490–502. [DOI] [PubMed] [Google Scholar]

- Bhanji J.P., Beer J.S. (2012). Unpacking the neural associations of emotion and judgment in emotion-congruent judgment. Social Cognitive and Affective Neuroscience, 7(3), 348–56. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brandstätter V., Lengfelder A., Gollwitzer P.M. (2001). Implementation intentions and efficient action initiation. Journal of Personality and Social Psychology, 81(5), 946. [DOI] [PubMed] [Google Scholar]

- Bruder M., Dosmukhambetova D., Nerb J., Manstead A.S.R. (2012). Emotional signals in nonverbal interaction: dyadic facilitation and convergence in expressions, appraisals, and feelings. Cognition and Emotion, 26(3), 480–502. [DOI] [PubMed] [Google Scholar]

- Cacioppo S., Zhou H., Monteleone G., et al. (2014). You are in sync with me: neural correlates of interpersonal synchrony with a partner. Neuroscience, 277, 842–58. [DOI] [PubMed] [Google Scholar]

- Chang C., Glover G.H. (2010). Time–frequency dynamics of resting-state brain connectivity measured with fMRI. NeuroImage, 50(1), 81–98. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cheng X.J., Li X.C., Hu Y. (2015). Synchronous brain activity during cooperative exchange depends on gender of partner: a fNIRS-based hyperscanning study. Human Brain Mapping, 36(6), 2039–48. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen N., Mor N. (2018). Enhancing reappraisal by linking cognitive control and emotion. Clinical Psychological Science, 6(1), 155–63.

- Collins A.L., Jordan P.J., Lawrence S.A., Troth A.C. (2016). Positive affective tone and team performance: the moderating role of collective emotional skills. Cognition and Emotion, 30(1), 167–82.

- Cui X., Bryant D.M., Reiss A.L. (2012). NIRS-based hyperscanning reveals increased interpersonal coherence in superior frontal cortex during cooperation. NeuroImage, 59(3), 2430–7.

- De Melo C.M., Carnevale P.J., Read S.J., Gratch J. (2014). Reading people’s minds from emotion expressions in interdependent decision making. Journal of Personality and Social Psychology, 106(1), 73.

- Decety J. (2010). The neurodevelopment of empathy in humans. Developmental Neuroscience, 32(4), 257–67.

- Diedenhofen B., Musch J. (2015). cocor: a comprehensive solution for the statistical comparison of correlations. PLoS One, 10(6), e0121945.

- Drouvelis M., Grosskopf B. (2016). The effects of induced emotions on pro-social behaviour. Journal of Public Economics, 134, 1–8.

- Duan L., Dai R., Xiao X., Sun P., Li Z., Zhu C. (2015). Cluster imaging of multi-brain networks (CIMBN): a general framework for hyperscanning and modeling a group of interacting brains. Frontiers in Neuroscience, 9, 267.

- Feng X., Sun B., Chen C., et al. (2020). Self–other overlap and interpersonal neural synchronization serially mediate the effect of behavioral synchronization on prosociality. Social Cognitive and Affective Neuroscience, 15(2), 203–14. doi: 10.1093/scan/nsaa017.

- Fine G.A., Corte U. (2017). Group pleasures: collaborative commitments, shared narrative, and the sociology of fun. Sociological Theory, 35(1), 64–86.

- Fredrickson B.L. (2013). Positive emotions broaden and build. Advances in Experimental Social Psychology, 47, 1–53. Elsevier.

- Fredrickson B.L., Branigan C. (2005). Positive emotions broaden the scope of attention and thought‐action repertoires. Cognition and Emotion, 19(3), 313–32.

- Frith U., Frith C.D. (2003). Development and neurophysiology of mentalizing. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences, 358(1431), 459–73.

- Funane T., Kiguchi M., Atsumori H., Sato H., Kubota K., Koizumi H. (2011). Synchronous activity of two people’s prefrontal cortices during a cooperative task measured by simultaneous near-infrared spectroscopy. Journal of Biomedical Optics, 16(7), 077011.

- Gable P.A., Harmon-Jones E. (2016). Assessing the motivational dimensional model of emotion–cognition interaction: comment on Domachowska, Heitmann, Deutsch, et al. (2016). Journal of Experimental Social Psychology, 67, 57–9.

- Ge Y., Zhao G., Zhang Y., Houston R.J., Song J. (2019). A standardised database of Chinese emotional film clips. Cognition and Emotion, 33(5), 976–90.

- Golland Y., Arzouan Y., Levit-Binnun N. (2015). The mere co-presence: synchronization of autonomic signals and emotional responses across co-present individuals not engaged in direct interaction. PLoS One, 10(5), e0125804.

- Golland Y., Levit-Binnun N., Hendler T., Lerner Y. (2017). Neural dynamics underlying emotional transmissions between individuals. Social Cognitive and Affective Neuroscience, 12(8), 1249–60. doi: 10.1093/scan/nsx049.

- Grinsted A., Moore J.C., Jevrejeva S. (2004). Application of the cross wavelet transform and wavelet coherence to geophysical time series. Nonlinear Processes in Geophysics, 11(5–6), 561–6.

- Guijt A.M., Sluiter J.K., Frings-Dresen M.H. (2007). Test-retest reliability of heart rate variability and respiration rate at rest and during light physical activity in normal subjects. Archives of Medical Research, 38(1), 113–20.

- Harmon-Jones E., Gable P., Price T.F. (2012). The influence of affective states varying in motivational intensity on cognitive scope. Frontiers in Integrative Neuroscience, 6, 73.

- Harmon-Jones E. (2019). On motivational influences, moving beyond valence, and integrating dimensional and discrete views of emotion. Cognition and Emotion, 33(1), 101–8.

- Hayes A.F. (2017). Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-based Approach. New York, NY: Guilford Press.

- Hirsch J., Zhang X., Noah J.A., Ono Y. (2017). Frontal temporal and parietal systems synchronize within and across brains during live eye-to-eye contact. NeuroImage, 157, 314–30.

- Ho S.S., Gonzalez R.D., Abelson J.L., Liberzon I. (2012). Neurocircuits underlying cognition-emotion interaction in a social decision making context. NeuroImage, 63(2), 843–57.

- Hoshi Y. (2007). Functional near-infrared spectroscopy: current status and future prospects. Journal of Biomedical Optics, 12(6), 062106.

- Hu Y., Hu Y., Li X., Pan Y., Cheng X. (2017). Brain-to-brain synchronization across two persons predicts mutual prosociality. Social Cognitive and Affective Neuroscience, 12(12), 1835–44.

- Hu Y., Pan Y., Shi X., Cai Q., Li X., Cheng X. (2018). Inter-brain synchrony and cooperation context in interactive decision making. Biological Psychology, 133, 54–62.

- Huang L., Yang T., Li Z. (2003). Applicability of the positive and negative affect scale in Chinese. Chinese Mental Health Journal, 17(1), 54–56.

- Huppert T.J., Hoge R.D., Diamond S.G., Franceschini M.A., Boas D.A. (2006). A temporal comparison of BOLD, ASL, and NIRS hemodynamic responses to motor stimuli in adult humans. NeuroImage, 29(2), 368–82.

- Iacoboni M., Lieberman M.D., Knowlton B.J., et al. (2004). Watching social interactions produces dorsomedial prefrontal and medial parietal BOLD fMRI signal increases compared to a resting baseline. NeuroImage, 21(3), 1167–73.

- Isen A.M. (2002). Missing in action in the AIM: positive affect’s facilitation of cognitive flexibility, innovation, and problem solving. Psychological Inquiry, 13(1), 57–65.

- Jaaskelainen I.P., Koskentalo K., Balk M.H., et al. (2008). Inter-subject synchronization of prefrontal cortex hemodynamic activity during natural viewing. The Open Neuroimaging Journal, 2, 14–9.

- Kaplan R.L., Van Damme I., Levine L.J. (2012). Motivation matters: differing effects of pre-goal and post-goal emotions on attention and memory. Frontiers in Psychology, 3, 404.

- Kawamichi H., Yoshihara K., Sasaki A.T., et al. (2015). Perceiving active listening activates the reward system and improves the impression of relevant experiences. Social Neuroscience, 10(1), 16–26.

- Kirschner S., Tomasello M. (2010). Joint music making promotes prosocial behavior in 4-year-old children. Evolution and Human Behavior, 31(5), 354–64.

- Koster-Hale J., Saxe R., Dungan J., Young L.L. (2013). Decoding moral judgments from neural representations of intentions. Proceedings of the National Academy of Sciences, 110(14), 5648–53.

- Lakin J.L., Jefferis V.E., Cheng C.M., Chartrand T.L. (2003). The chameleon effect as social glue: evidence for the evolutionary significance of nonconscious mimicry. Journal of Nonverbal Behavior, 27(3), 145–62.

- Lindenberger U., Li S.C., Gruber W., Muller V. (2009). Brains swinging in concert: cortical phase synchronization while playing guitar. BMC Neuroscience, 10, 22.

- Liu T., Saito H., Oi M. (2015). Role of the right inferior frontal gyrus in turn-based cooperation and competition: a near-infrared spectroscopy study. Brain and Cognition, 99, 17–23.

- Liu T., Pelowski M. (2014). A new research trend in social neuroscience: towards an interactive-brain neuroscience. PsyCh Journal, 3(3), 177–88.

- Lotz S., Okimoto T.G., Schlosser T., Fetchenhauer D. (2011). Punitive versus compensatory reactions to injustice: emotional antecedents to third-party interventions. Journal of Experimental Social Psychology, 47(2), 477–80.

- Maffei A., Vencato V., Angrilli A. (2015). Sex differences in emotional evaluation of film clips: interaction with five high arousal emotional categories. PLoS One, 10(12), e0145562.

- Magnano P., Craparo G., Paolillo A. (2016). Resilience and emotional intelligence: which role in achievement motivation. International Journal of Psychological Research, 9(1), 9–20.

- Meneghel I., Salanova M., Martínez I.M. (2016). Feeling good makes us stronger: how team resilience mediates the effect of positive emotions on team performance. Journal of Happiness Studies, 17(1), 239–55.

- Miles L.K., Griffiths J.L., Richardson M.J., Macrae C.N. (2010). Too late to coordinate: contextual influences on behavioral synchrony. European Journal of Social Psychology, 40(1), 52–60.

- Nguyen M., Vanderwal T., Hasson U. (2019). Shared understanding of narratives is correlated with shared neural responses. NeuroImage, 184, 161–70.

- Noah J.A., Ono Y., Nomoto Y., et al. (2015). fMRI validation of fNIRS measurements during a naturalistic task. Journal of Visualized Experiments: JoVE, (100), e52116. doi: 10.3791/52116.

- Nozawa T., Sasaki Y., Sakaki K., Yokoyama R., Kawashima R. (2016). Interpersonal frontopolar neural synchronization in group communication: an exploration toward fNIRS hyperscanning of natural interactions. NeuroImage, 133, 484–97.

- Nummenmaa L., Glerean E., Viinikainen M., Jaaskelainen I.P., Hari R., Sams M. (2012). Emotions promote social interaction by synchronizing brain activity across individuals. Proceedings of the National Academy of Sciences of the United States of America, 109(24), 9599–604.

- Nummenmaa L., Hari R., Hietanen J.K., Glerean E. (2018). Maps of subjective feelings. Proceedings of the National Academy of Sciences, 115(37), 9198–203.

- Oldfield R.C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia, 9(1), 97–113.

- Pan Y., Cheng X., Zhang Z., Li X., Hu Y. (2017). Cooperation in lovers: an fNIRS-based hyperscanning study. Human Brain Mapping, 38(2), 831–41.

- Pellicer A., del Carmen Bravo M. (2011). Near-infrared spectroscopy: a methodology-focused review. Seminars in Fetal and Neonatal Medicine, 16(1), 42–9.

- Preacher K.J., Hayes A.F. (2008). Asymptotic and resampling strategies for assessing and comparing indirect effects in multiple mediator models. Behavior Research Methods, 40(3), 879–91.

- Reindl V., Gerloff C., Scharke W., Konrad K. (2018). Brain-to-brain synchrony in parent-child dyads and the relationship with emotion regulation revealed by fNIRS-based hyperscanning. NeuroImage, 178, 493–502.

- Richardson M.J., Marsh K.L., Isenhower R.W., Goodman J.R., Schmidt R.C. (2007). Rocking together: dynamics of intentional and unintentional interpersonal coordination. Human Movement Science, 26(6), 867–91.

- Rolls E.T. (2019). The cingulate cortex and limbic systems for emotion, action, and memory. Brain Structure and Function, 224(9), 3001–18.

- Rösch S.A., Schmidt R., Lührs M., Ehlis A.C., Hesse S., Hilbert A. (2021). Evidence of fNIRS-based prefrontal cortex hypoactivity in obesity and binge-eating disorder. Brain Sciences, 11(1), 19.

- Schippers M.B., Roebroeck A., Renken R., Nanetti L., Keysers C. (2010). Mapping the information flow from one brain to another during gestural communication. Proceedings of the National Academy of Sciences of the United States of America, 107(20), 9388–93.

- Shamay-Tsoory S.G., Saporta N., Marton-Alper I.Z., Gvirts H.Z. (2019). Herding brains: a core neural mechanism for social alignment. Trends in Cognitive Sciences, 23(3), 174–86.

- Silver N.C., Hittner J.B., May K. (2004). Testing dependent correlations with nonoverlapping variables: a Monte Carlo simulation. The Journal of Experimental Education, 73(1), 53–69.

- Silvetti M., Alexander W., Verguts T., Brown J.W. (2014). From conflict management to reward-based decision making: actors and critics in primate medial frontal cortex. Neuroscience and Biobehavioral Reviews, 46, 44–57.

- Smirnov D., Saarimäki H., Glerean E., Hari R., Sams M., Nummenmaa L. (2019). Emotions amplify speaker–listener neural alignment. Human Brain Mapping, 40(16), 4777–88.

- Tanabe H.C., Kosaka H., Saito D.N., et al. (2012). Hard to "tune in": neural mechanisms of live face-to-face interaction with high-functioning autistic spectrum disorder. Frontiers in Human Neuroscience, 6, 268.