Abstract

Artificial intelligence (AI) for breast imaging has rapidly moved from the experimental phase to the implementation phase. As of this writing, Food and Drug Administration (FDA)-approved mammographic applications are available for triage, lesion detection and classification, and breast density assessment. For sonography and MRI, FDA-approved applications are available for lesion classification. Numerous other interpretive and noninterpretive AI applications are in the development phase. This article reviews AI applications for mammography, sonography, and MRI that are currently available for clinical use. In addition, clinical implementation and the future of AI for breast imaging are discussed.

Keywords: Artificial intelligence, breast imaging, implementation, machine learning, mammography

Introduction

Artificial intelligence (AI) for breast imaging has quickly moved from the research and development phase to the clinical implementation phase. This rapid growth has resulted from improved computer processing power, advanced algorithms, and the expansion of electronic health data1. AI includes the subfields of machine learning (ML) and deep learning (DL) (Figure 1)2. ML is defined as computers learning from images and other data without being explicitly programmed. DL, a subfield of ML, is based on multilayer neural networks that extract high-level features from data. The convolutional neural network is the most common network used for the analysis of images3.

Figure 1. Artificial intelligence and its subfields.

As of this writing, there are twenty AI applications that are approved by the Food and Drug Administration (FDA) for mammography, breast sonography, and breast MRI (Table 1)4. For mammography, the applications are intended for triage, lesion detection and classification, and density assessment. For sonography and MRI, the applications are intended for lesion classification. The data that led to FDA approval, particularly for the lesion detection and classification applications, are mostly based on multireader studies; however, the results of these types of studies may not directly translate to clinical practice, and thus post-implementation studies in the clinical environment are essential to understand the real-world impact of AI applications on radiologists’ performance and workflows5.

Table 1. Food and Drug Administration (FDA)-approved applications for breast imaging*.

| Tool (Company) | Modality | Application | Date of Approval |
|---|---|---|---|
| MammoScreen (Therapixel)10 | Mammography | Lesion detection and classification | 3/25/2020 |
| Genius AI Detection (Hologic)11 | Mammography | Lesion detection and classification | 11/18/2020 |
| ProFound AI Software V3.0 (iCAD)12,13 | Mammography | Lesion detection and classification | 3/12/2021 |
| Transpara 1.7.0 (ScreenPoint Medical B.V.)14 | Mammography | Lesion detection and classification | 6/2/2021 |
| Lunit INSIGHT MMG (Lunit)15 | Mammography | Lesion detection and classification | 11/17/2021 |
| cmTriage (CureMetrix)16 | Mammography | Triage | 3/8/2019 |
| HealthMammo (Zebra Medical Vision)17 | Mammography | Triage | 7/16/2020 |
| Saige-Q (DeepHealth)18 | Mammography | Triage | 4/16/2021 |
| Quantra 2.1/2.2 (Hologic)19 | Mammography | Density assessment | 10/20/2017 |
| Insight BD (Siemens Healthineers)20 | Mammography | Density assessment | 2/6/2018 |
| DM-Density (Densitas)21 | Mammography | Density assessment | 2/23/2018 |
| DenSeeMammo (Statlife)22 | Mammography | Density assessment | 6/26/2018 |
| densityai (Densitas)23 | Mammography | Density assessment | 2/19/2020 |
| WRDensity (Whiterabbit.ai)24 | Mammography | Density assessment | 10/30/2020 |
| Visage Breast Density (Visage Imaging GmbH)25 | Mammography | Density assessment | 1/29/2021 |
| Powerlook Density Assessment V2.1/V4.0 (iCAD)26 | Mammography | Density assessment | 7/12/2021 |
| Volpara Imaging Software (Volpara Health Technologies Limited)27 | Mammography | Density assessment | 7/27/2021 |
| ClearView cCAD (ClearView Diagnostics)42 | Ultrasound | Lesion classification | 12/28/2016 |
| Koios DS (Koios Medical)43 | Ultrasound | Lesion classification | 7/3/2019 |
| QuantX (Qlarity Imaging)56 | MRI | Lesion classification | 5/17/2017 |

*Based on FDA documents and the American College of Radiology website4.

In addition to the approved applications described above, numerous other interpretive and noninterpretive AI applications for breast imaging are in the development phase. The success of these applications will depend on their real-world performance in the clinical environment and their integration into existing clinical workflows. This article reviews FDA-approved AI applications for mammography, sonography, and MRI and the evidence supporting their use. In addition, clinical implementation and the future of AI for breast imaging are discussed.

Mammography

FDA-Approved Applications for Mammography

Mammography is the only modality for breast cancer screening shown to decrease breast cancer-related deaths, but there is wide variability in performance metrics and potential for improvement of its sensitivity and specificity6–8. Also, more time is needed to interpret digital breast tomosynthesis (DBT) examinations compared to digital 2D mammography (DM) examinations9. AI is a promising tool to address these limitations. As of this writing, and per the American College of Radiology (ACR) website, there are more than 15 FDA-approved mammographic applications for triage, lesion detection and classification, and breast density assessment (Table 1)4,10–27. These are intended for DM, synthetic DM, and/or DBT. Examples of these FDA-approved applications and the data supporting their use are discussed below.

One of the FDA-approved applications for triage, Saige-Q, can be applied to screening DM and DBT examinations18. Saige-Q provides a code for each mammogram, which indicates the algorithm’s suspicion that the mammogram has at least one concerning finding. These assigned codes are available on the picture archiving and communication system (PACS) workstation and can be used to prioritize or triage examinations. According to the FDA documentation for Saige-Q, the area under the receiver operating characteristic curve (AUC) was 0.97 for DM and 0.99 for DBT in studies conducted across multiple clinical sites in two states. Performance was similar across subcategories of patient age, breast density, lesion type, and lesion size.
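The worklist prioritization described above can be sketched in a few lines. This is only an illustration: the `Exam` fields, the binary `suspicion_code`, and the accession numbers are hypothetical and do not reflect the actual output format of Saige-Q or any PACS interface.

```python
from dataclasses import dataclass


@dataclass
class Exam:
    accession: str       # hypothetical exam identifier
    suspicion_code: int  # hypothetical code: 1 = flagged as suspicious, 0 = not flagged
    arrival_order: int   # position in the original first-in, first-out queue


def prioritize(worklist):
    """Place flagged exams first, preserving arrival order within each group."""
    return sorted(worklist, key=lambda e: (-e.suspicion_code, e.arrival_order))


worklist = [
    Exam("A001", 0, 1),
    Exam("A002", 1, 2),
    Exam("A003", 0, 3),
    Exam("A004", 1, 4),
]
ordered = [e.accession for e in prioritize(worklist)]
print(ordered)  # ['A002', 'A004', 'A001', 'A003']
```

Every examination is still read in this sketch; only the reading order changes.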

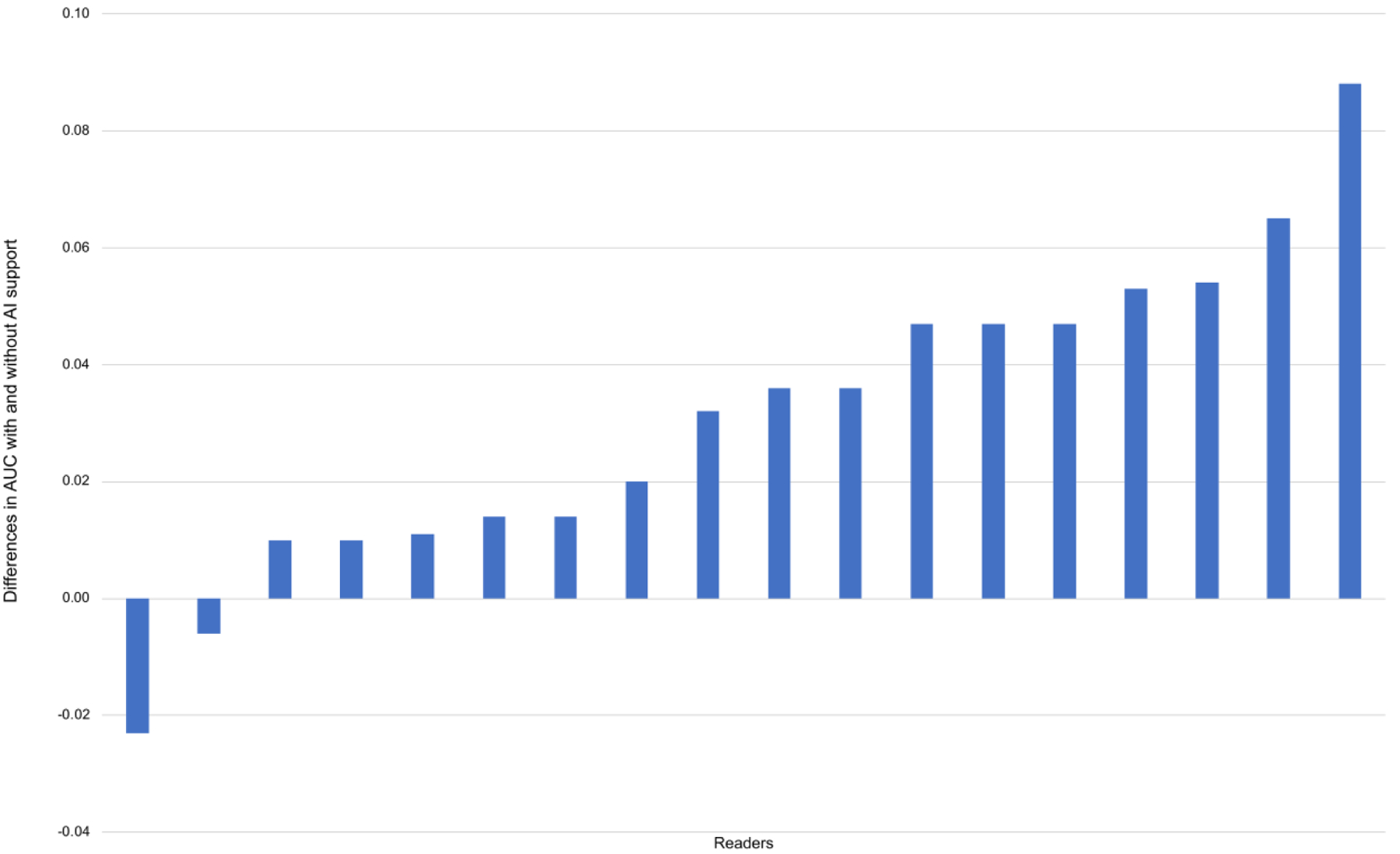

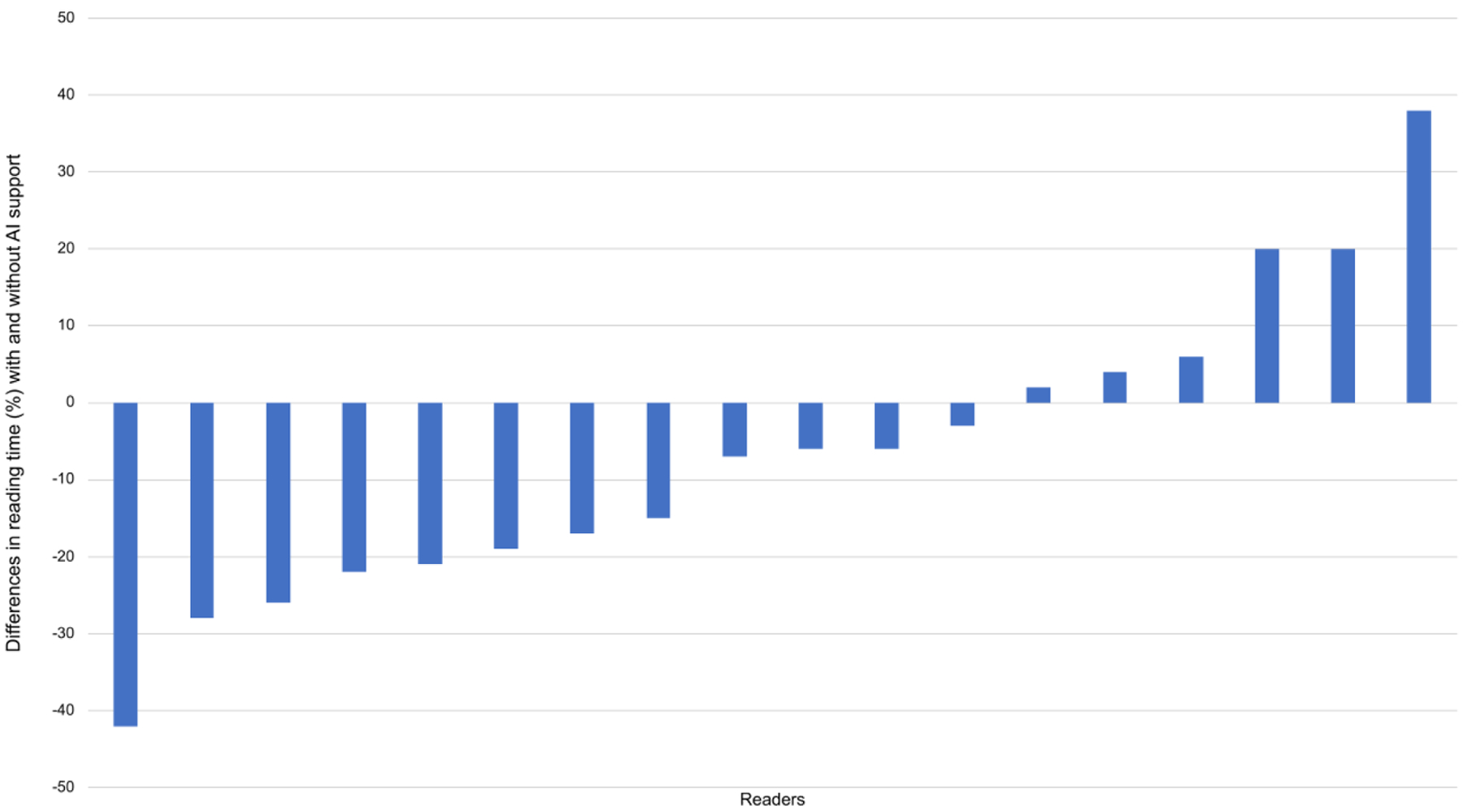

One of the FDA-approved applications for lesion detection and classification, Transpara, detects suspicious mammographic lesions and assigns a probability of malignancy score from 1 to 100 to each one14,28. An examination score from 1 to 10 is also provided, which indicates likelihood of malignancy. In a multi-reader multi-case study validating this application, 18 radiologists each read 240 DBT examinations, aided and unaided by the AI system28. The mean reader AUC improved from 0.83 without AI support to 0.86 with AI support (p=0.0025). Sixteen of 18 radiologists achieved a better AUC with AI support (Figure 2). Mean sensitivity increased from 75% to 79% (p=0.016), while specificity remained unchanged. Given that the standalone AI system performance did not differ from that of the radiologist readers, the authors suggested that this system could be used in place of the second reader or could even be used for standalone interpretation, particularly to triage out negative screening mammograms. Of note, reading time per examination decreased from 41 seconds to 36 seconds with AI support (p<0.001) (Figure 3). This reduction in interpretation time was largely due to the examination scores, suggesting that readers felt comfortable spending less time on examinations categorized as likely normal.

Figure 2. Differences in the area under the receiver operating characteristic curve (AUC) with and without artificial intelligence (AI) support from a mammographic lesion detection and classification algorithm.

Sixteen of 18 readers achieved a better AUC with AI support. (With permission and adapted from reference 28.)

Figure 3. Differences in reading time with and without artificial intelligence (AI) support from a mammographic lesion detection and classification algorithm.

Reading time per examination decreased from 41 seconds to 36 seconds with AI support (p<0.001). (With permission and adapted from reference 28.)
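For readers less familiar with how a per-reader AUC comparison is tabulated, the following minimal sketch computes AUC with the rank-based (Mann-Whitney) formulation and counts how many readers improved with AI support. The reader names and suspicion ratings are invented for illustration, and the sketch does not reproduce the statistical testing used in the multi-reader multi-case study.

```python
def auc(scores_pos, scores_neg):
    """AUC as the chance that a random cancer case outscores a random
    non-cancer case, with ties counted as one half."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical suspicion ratings (1-5): (cancer cases, non-cancer cases).
readers = {
    "reader_1": {
        "unaided": ([4, 3, 5, 2], [1, 2, 3, 1]),
        "aided": ([4, 4, 5, 3], [1, 2, 2, 1]),
    },
    "reader_2": {
        "unaided": ([5, 3, 3, 4], [2, 3, 1, 2]),
        "aided": ([5, 3, 3, 4], [2, 3, 1, 2]),
    },
}

unaided = {name: auc(*r["unaided"]) for name, r in readers.items()}
aided = {name: auc(*r["aided"]) for name, r in readers.items()}
mean_unaided = sum(unaided.values()) / len(unaided)
mean_aided = sum(aided.values()) / len(aided)
improved = sum(aided[name] > unaided[name] for name in readers)

print(mean_unaided, mean_aided, improved)  # 0.90625 0.96875 1
```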

There are nearly ten FDA-approved applications for breast density assessment, which is of heightened interest in the setting of density notification legislation and the recognition that dense tissue not only masks breast cancers but is also an independent breast cancer risk factor4,19–27,29. Densitas offers both an ML-based density application, DM-Density, and a DL-based density application, densityai21,23. In a study comparing densityai output to consensus assessment of four expert radiologists’ independent breast density readings, accuracies for four-class prediction were 78% for almost entirely fatty breasts, 76% for scattered fibroglandular densities, 83% for heterogeneously dense breasts, and 89% for extremely dense breasts, and accuracies for two-class prediction were 88% for non-dense breasts and 96% for dense breasts23. AI-based breast density is also being used in conjunction with clinical risk factors and conventional risk prediction models to generate estimates of breast cancer risk30,31.
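The relationship between the four-class and two-class results can be made concrete with a small sketch. It assumes that "accuracy" for a class means the fraction of reference cases in that class that the algorithm labeled identically; the source does not spell out the definition, and the eight cases below are invented.

```python
# Density categories named in the text, ordered from least to most dense.
CATEGORIES = [
    "almost entirely fatty",
    "scattered fibroglandular densities",
    "heterogeneously dense",
    "extremely dense",
]
DENSE = {"heterogeneously dense", "extremely dense"}


def to_two_class(category):
    """Collapse the four categories into dense versus non-dense."""
    return "dense" if category in DENSE else "non-dense"


def per_class_accuracy(reference, predicted, classes):
    """Fraction of cases in each reference class given the same label by the algorithm."""
    result = {}
    for c in classes:
        idx = [i for i, r in enumerate(reference) if r == c]
        if idx:
            result[c] = sum(predicted[i] == c for i in idx) / len(idx)
    return result


A, B, C, D = CATEGORIES
reference = [A, A, B, B, C, C, D, D]  # hypothetical radiologist consensus
predicted = [A, B, B, B, C, D, D, D]  # hypothetical algorithm output

four_class = per_class_accuracy(reference, predicted, CATEGORIES)
two_class = per_class_accuracy(
    [to_two_class(r) for r in reference],
    [to_two_class(p) for p in predicted],
    ["non-dense", "dense"],
)
print(four_class)
print(two_class)  # {'non-dense': 1.0, 'dense': 1.0}
```

In this toy data both disagreements fall between adjacent categories on the same side of the dense/non-dense boundary, so they disappear in the two-class comparison. This is one reason two-class agreement is typically higher than four-class agreement.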

Other commercial applications for mammography, which are marketed as clinical decision support and thus do not require FDA approval, are also available for clinical use. For example, risk prediction models based on DM and DBT images offer one-year and two-year estimates of risk32. In addition, AI applications for image quality control, currently available for clinical use, offer immediate feedback to technologists about the adequacy of compression and various patient positioning parameters33,34. Such applications have the potential to decrease technical recalls and can automatically generate image quality reports at the individual or practice level.

Future of AI for Mammography

Research is in progress for other AI applications for mammography, including long-term breast cancer risk assessment. Accurate assessment of risk is necessary to inform decisions about genetic testing, chemoprevention, and supplemental screening with MRI, and several studies have demonstrated that DL models based on mammographic images can provide accurate long-term risk estimates35,36. For example, a deep learning model based on mammography to estimate risk at several timepoints achieved five-year AUCs of 0.78 and 0.79, respectively, in two external cohorts36. Furthermore, the mammography-based model identified 42% of women who would develop cancer in five years as high risk, whereas the Tyrer-Cuzick model (a model based on traditional risk factors) identified only 23% of women who would develop cancer in five years (p<0.001). The imaging-based model could potentially be improved if prior mammograms (and not just the current one) were also used.

Future research involves the development of mammography-specific deep learning architectures that take advantage of the unique attributes of high-resolution mammographic imaging37. In addition, as there are fewer DBT than DM datasets available for algorithm training, techniques such as transfer learning – in which a pre-trained DM model is modified with a smaller DBT dataset – can be utilized38. AI algorithms must be validated not only across diverse patient populations but also across different mammography machines from different vendors; and, to facilitate clinical adoption and acceptance, the rationale behind the decision-making process of algorithms must be understandable to radiologists and other clinicians37–39.
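The idea behind transfer learning can be sketched with a deliberately simple stand-in. Below, a logistic regression model (not a deep network) is first trained on a large synthetic "DM" dataset and then fine-tuned on a much smaller, related "DBT" dataset, starting from the pre-trained weights rather than from scratch. All data, weights, and hyperparameters are invented; real mammography models fine-tune convolutional networks on images.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(weights, data):
    """Mean binary cross-entropy of a logistic model on (features, label) pairs."""
    total = 0.0
    for x, y in data:
        p = sigmoid(sum(w * xi for w, xi in zip(weights, x)))
        p = min(max(p, 1e-12), 1 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train(weights, data, steps, lr):
    """Full-batch gradient descent starting from the given weights."""
    w = list(weights)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj / len(data)
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w


def make_data(rng, n, true_w):
    """Synthetic stand-in for image features; the last feature is a constant bias term."""
    data = []
    for _ in range(n):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1), 1.0]
        p = sigmoid(sum(w * xi for w, xi in zip(true_w, x)))
        data.append((x, 1 if rng.random() < p else 0))
    return data


rng = random.Random(0)
dm_data = make_data(rng, 500, [3.0, -2.0, 0.5])  # large synthetic "DM" set
dbt_data = make_data(rng, 40, [3.5, -1.5, 0.0])  # small, related synthetic "DBT" set

pretrained = train([0.0, 0.0, 0.0], dm_data, steps=300, lr=0.5)
fine_tuned = train(pretrained, dbt_data, steps=50, lr=0.1)  # start from pre-trained weights

loss_before = log_loss(pretrained, dbt_data)
loss_after = log_loss(fine_tuned, dbt_data)
print(loss_before, loss_after)
```

The lower learning rate and small number of steps in the second stage are a common design choice: the model adapts to the new data without discarding what it learned from the larger dataset.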

Breast Ultrasound

FDA-Approved Applications for Breast Ultrasound

In the setting of breast density notification legislation and supplemental screening options for dense-breasted women, ultrasound is an increasingly utilized modality for screening purposes; however, its specificity is reported to be relatively low40,41. As of this writing and per the ACR website, there are two FDA-approved applications for breast ultrasound, both of which are intended for lesion classification and therefore could assist radiologists in distinguishing between benign and malignant lesions (Table 1)4,42,43. One of the applications, Koios DS, provides a probability of cancer for a reader-selected region-of-interest containing a breast lesion44,45. In a study validating this application, nine breast imaging radiologists interpreted 319 breast lesions on ultrasound aided and unaided by the AI system45. With the AI system set to its original mode, the mean reader AUC was 0.82 with and without AI support (p=0.92). When the AI system was set to a high-sensitivity mode or high-specificity mode, the mean reader AUC improved from 0.83 to 0.88 (p<0.001) and from 0.82 to 0.89 (p<0.001), respectively. Readers reacted more frequently to prompts in the high-specificity mode, which provided fewer false-positive cues, suggesting that radiologists are more likely to trust the recommendations provided by more specific AI-based systems46.

In a second evaluation of this AI system, Koios DS, 15 physicians, including 11 radiologists, interpreted 900 breast lesions on ultrasound, aided and unaided by this AI system44. The mean reader AUC increased with use of the AI system from 0.83 to 0.87 (p<0.0001). Fourteen of 15 readers achieved a better AUC with AI support. The authors noted that the impact of the AI system on each reader’s performance depended on that reader’s initial operating point; that is, readers who were more sensitive but less specific demonstrated improvements in specificity. Use of the AI algorithm was also found to decrease inter-reader and intra-reader variability. Limitations of this study include that its results may not directly translate to clinical practice (as it was a reader study) and that Breast Imaging Reporting and Data System (BI-RADS) assessments were given by readers solely based on two orthogonal static ultrasound images (without any other imaging or clinical data).
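The notion of an operating point, and of high-sensitivity versus high-specificity modes, can be illustrated by applying two different thresholds to the same set of AI probabilities. The probabilities, labels, and threshold values below are hypothetical; the source does not describe how the commercial system implements its modes.

```python
def sensitivity_specificity(probabilities, labels, threshold):
    """Call a lesion suspicious when its AI probability is at or above the threshold."""
    pairs = list(zip(probabilities, labels))
    tp = sum(p >= threshold and y == 1 for p, y in pairs)
    fn = sum(p < threshold and y == 1 for p, y in pairs)
    tn = sum(p < threshold and y == 0 for p, y in pairs)
    fp = sum(p >= threshold and y == 0 for p, y in pairs)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical AI probabilities for four malignant (1) and four benign (0) lesions.
probabilities = [0.95, 0.80, 0.60, 0.35, 0.70, 0.40, 0.20, 0.10]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

# Hypothetical thresholds standing in for the two modes.
modes = {"high-sensitivity": 0.30, "high-specificity": 0.75}
results = {
    name: sensitivity_specificity(probabilities, labels, t)
    for name, t in modes.items()
}
print(results)
# {'high-sensitivity': (1.0, 0.5), 'high-specificity': (0.5, 1.0)}
```

Lowering the threshold catches every cancer in this toy set at the cost of more false-positive cues; raising it does the reverse.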

Future of AI for Breast Ultrasound

AI applications for breast ultrasound largely focus on lesion classification (that is, distinguishing between cancerous and noncancerous lesions), as discussed above. AI could also be used to detect and segment lesions on ultrasound, triage examinations, and predict Tumor Node Metastasis (TNM) classification and response to treatment47–49. For example, in a feasibility study that applied DL-based models to ultrasound images in women with breast cancer and clinically negative axillary lymph nodes, the best-performing model attained an AUC of 0.90 in predicting axillary lymph node metastasis and outperformed three experienced radiologists50. To better understand the predictions made by the AI system, the authors used “class activation mapping” to indicate the parts of the ultrasound image most predictive of metastasis.
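As a rough illustration of class activation mapping, the sketch below follows the general formulation in which the map for a class is the weighted sum of the final convolutional feature maps, using the weights that connect the pooled features to that class. The tiny 2×2 feature maps and weights are invented, and the architecture used in the cited study is not described here.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of the last convolutional feature maps for one class.

    feature_maps: list of K maps, each a list of H rows of W floats.
    class_weights: K weights linking the pooled features to the class score.
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, w in zip(feature_maps, class_weights):
        for i in range(height):
            for j in range(width):
                cam[i][j] += w * fmap[i][j]
    return cam


# Two hypothetical 2x2 feature maps and their weights for the "metastasis" class.
feature_maps = [
    [[0.0, 1.0], [0.0, 2.0]],
    [[1.0, 0.0], [1.0, 0.0]],
]
class_weights = [2.0, -1.0]

cam = class_activation_map(feature_maps, class_weights)
print(cam)  # [[-1.0, 2.0], [-1.0, 4.0]]
```

The largest value (bottom-right cell) marks the region contributing most to the prediction; in practice the map is upsampled and overlaid on the image as a heat map.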

DL-based models have also been applied to ultrasound images to predict neoadjuvant chemotherapy response. For example, in a study of 168 women with breast cancer, ultrasound examinations were obtained before neoadjuvant chemotherapy, after the second round of therapy, and after the fourth round of therapy51. The models to predict treatment response after the second round achieved an AUC of 0.81 and after the fourth round 0.94. One of the models accurately identified 19 of 21 non-responsive patients, suggesting that this AI system could inform necessary adjustments early during treatment and thus help personalize treatment approaches.

The data supporting the aforementioned lesion classification applications are based on reader studies and, as with other applications for breast imaging, will require thorough validation in the clinical environment. Radiologists, clinicians, and patients are more likely to trust AI systems that offer clear and transparent rationale for their predictions, and thus methods to explain AI output (such as the class activation maps described above) will continue to be critical for successful clinical adoption and acceptance of AI algorithms50,52. In addition, the diagnostic accuracy of DL-based applications for ultrasound, which are currently trained with 2D images, could potentially be improved if 3D imaging, Doppler imaging data, shear wave elastography, and/or cine clips were also used for training purposes47,53.

Breast MRI

FDA-Approved Applications for Breast MRI

MRI is the most sensitive of the breast imaging modalities but has variable specificity, though false-positive rates have been found to decrease after the first examination, with increasing radiologist experience, and with higher spatial and temporal resolution of MRI54,55. QuantX, an FDA-approved algorithm for breast MRI, addresses the specificity metric by assisting radiologists in differentiating between noncancerous and cancerous lesions (Table 1)56,57. To use QuantX, the reader first identifies and localizes the lesion. The AI system, which offers both image registration and lesion segmentation, then generates a list of key features, such as the time to peak enhancement and washout rate. It also synthesizes multiple features into the QuantX score, which reflects cancer likelihood.
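To give a sense of what kinetic features such as time to peak enhancement and washout rate capture, the sketch below derives both from a lesion time-intensity curve. The definitions used here (percent enhancement relative to the pre-contrast baseline, and washout as the drop in percent enhancement per minute after the peak) are assumptions for illustration; the source does not state how QuantX defines these features, and the curve values are invented.

```python
def kinetic_features(times_s, intensities):
    """Time to peak (seconds) and washout rate (percent per minute) from a
    lesion time-intensity curve whose first point is the pre-contrast baseline."""
    baseline = intensities[0]
    # Percent enhancement relative to baseline at each time point.
    enhancement = [100.0 * (s - baseline) / baseline for s in intensities]
    peak_index = max(range(len(enhancement)), key=lambda i: enhancement[i])
    time_to_peak = times_s[peak_index] - times_s[0]
    # Assumed definition: fall in percent enhancement from peak to last point, per minute.
    elapsed_min = (times_s[-1] - times_s[peak_index]) / 60.0
    if elapsed_min == 0:
        washout_rate = 0.0  # peak occurs at the last time point, so no washout is observed
    else:
        washout_rate = (enhancement[peak_index] - enhancement[-1]) / elapsed_min
    return time_to_peak, washout_rate


times_s = [0, 60, 120, 180, 240]                  # hypothetical acquisition times
intensities = [100.0, 220.0, 250.0, 230.0, 210.0]  # hypothetical mean lesion signal

time_to_peak, washout_rate = kinetic_features(times_s, intensities)
print(time_to_peak, washout_rate)  # 120 20.0
```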

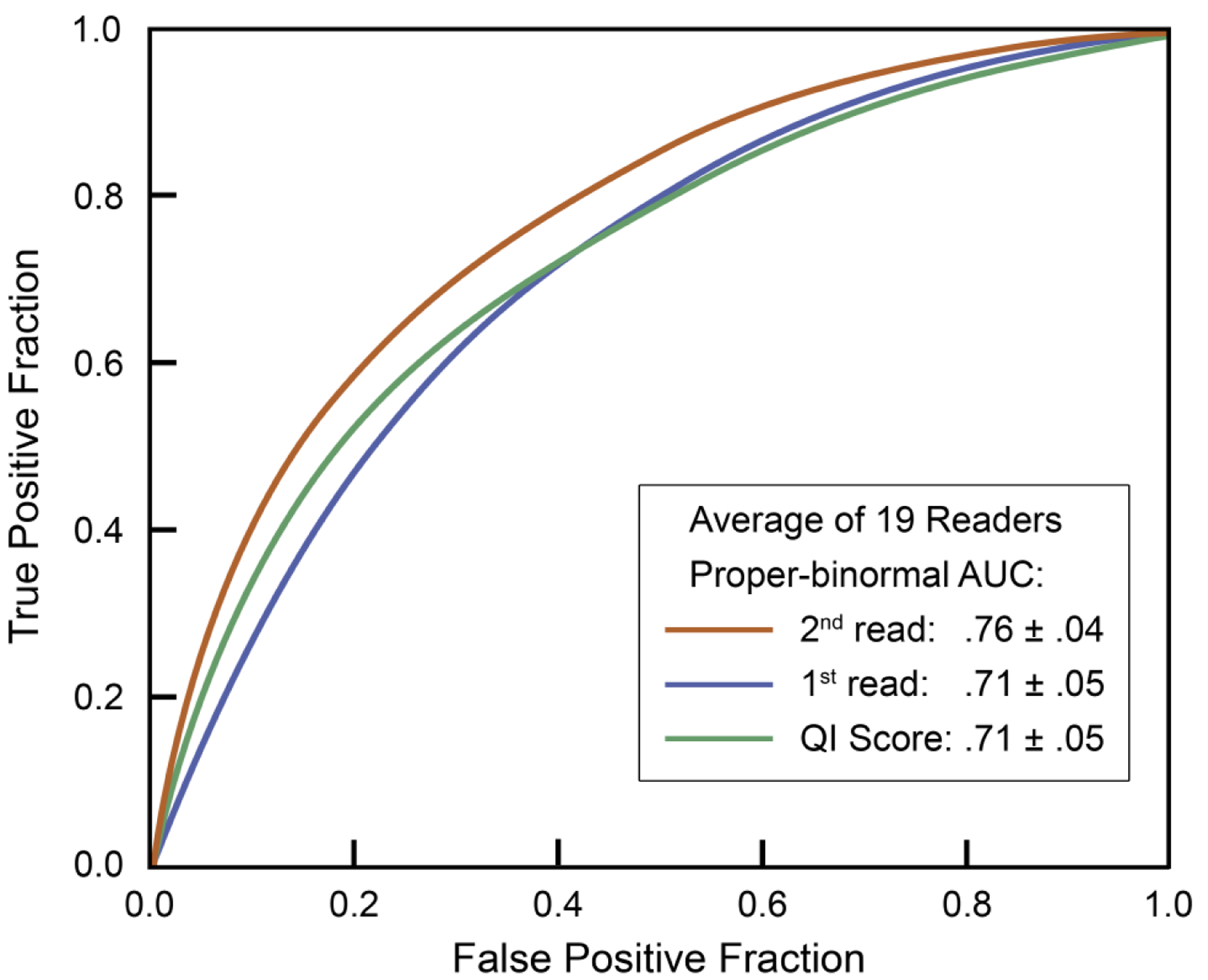

In a multireader study, 19 radiologists with breast imaging fellowship training or a minimum of two years of breast imaging experience interpreted 111 breast MRI examinations, with and without the use of QuantX57. In distinguishing noncancerous and cancerous lesions, the standalone performance of the AI system based on user-identified seed points had a mean AUC of 0.71. For radiologists, the mean AUC increased from 0.71 without use of the AI system to 0.76 with use of the AI system (p=0.04) (Figure 4). Of note, radiologists without fellowship training in breast imaging had a higher increase in AUC than those who were fellowship-trained. The sensitivity of radiologists improved with use of the AI system when BI-RADS category 3 was used as the cut point, but no differences in specificity were observed. This particular AI system could help radiologists reduce classification errors (i.e., benign versus malignant) but not detection errors, as lesions must first be identified by the radiologist to make use of the AI system. An additional drawback of this study is that radiologists’ behavior in a reader study may not reflect their behavior in clinical practice5.

Figure 4. Average receiver operating characteristic (ROC) curves with and without artificial intelligence (AI) support from an MRI lesion classification algorithm.

The standalone performance of the AI tool had a mean AUC of 0.71. For radiologists, the mean AUC increased from 0.71 without use of the AI tool (1st read) to 0.76 with use of the AI tool (2nd read) (p=0.04). (With permission and adapted from reference 57.)

Future of AI for Breast MRI

Currently, per the ACR website, the only FDA-approved application for breast MRI is QuantX, which is intended for lesion classification, as discussed above4. Other potential applications of AI for breast MRI include lesion detection; prediction of neoadjuvant chemotherapy response, tumor markers, lymph node status, and recurrence risk; breast cancer risk assessment; and image processing (i.e., tissue and lesion segmentation and image quality improvement)58–60. For example, with regard to prognostic imaging, a DL-based model was applied to an MRI dataset in order to predict the Oncotype Dx Recurrence Score, which is a validated genomic assay used in women with invasive cancer61. For three-class prediction (Recurrence Score <18, 18–30, and >30), the algorithm attained an AUC of 0.92, sensitivity of 60%, and specificity of 90%. The authors concluded that an AI algorithm could be used to predict the Oncotype Dx Recurrence Score, which is an invasive and expensive test, but further validation and correlation with clinical outcomes are needed.

One study applied a DL-based model to MRI examinations of 141 women with breast cancer who were given neoadjuvant chemotherapy and subsequently underwent surgery62. Women were categorized into three groups based on their neoadjuvant chemotherapy response: complete response, partial response, and no response. A DL-based model was applied to the MRI examinations obtained before the initiation of chemotherapy and attained an accuracy of 88% in the three-group prediction. While current prediction models are based on interval imaging after the start of treatment, this AI model uses a baseline breast MRI to predict response and could thus help guide the upfront use of novel therapies in women who are unlikely to respond to conventional agents and also reduce toxicities from treatments that are unlikely to be effective.

An active area of research is segmentation and quantification of background parenchymal enhancement on MRI, which is a known marker of breast cancer risk. For example, in a study of 137 patients63, a DL-based algorithm was shown to give reliable segmentation and classification results for background parenchymal enhancement, with an accuracy of 83% and a correlation of 0.96 with manual segmentation and quantification. Another study of 133 high-risk women, 46 of whom developed breast cancer, showed that quantitative background parenchymal enhancement features extracted from MR imaging are in fact related to breast cancer risk64. Of note, the predictive performance of these background parenchymal enhancement features outperformed that of radiologists’ subjective background parenchymal enhancement assessments.
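Agreement between automated and manual quantification of background parenchymal enhancement is often summarized with a correlation coefficient. The sketch below computes a Pearson correlation on invented values; the source does not specify which correlation statistic the cited study used, so Pearson is an assumption here.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical percent background parenchymal enhancement for five patients.
manual_bpe = [5.0, 12.0, 20.0, 33.0, 41.0]
automated_bpe = [6.0, 10.0, 22.0, 30.0, 45.0]

r = pearson(manual_bpe, automated_bpe)
print(round(r, 2))
```

A high correlation shows that the two methods rank patients similarly; it does not by itself show that the absolute values agree.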

Of the emerging AI applications for breast MRI, lesion segmentation and classification are thought to be the most mature at this time, but the potential of AI to extract rich biological and prognostic data from MR imaging data has yet to be realized65. In terms of challenges, available training datasets for MRI are relatively small, as compared to those available for digital 2D mammography, and there exists a lack of standardization for segmentation, feature extraction, and feature selection for MRI59,65. Future directions in this domain include enhancing personalized risk assessment with background parenchymal enhancement analysis, developing the role of noncontrast MRI, and applying AI to MRI acquisition and reconstruction techniques in order to decrease scan time59.

Implementation of AI

As the number of commercial AI algorithms for breast imaging continues to increase, and radiologists seek to determine which models to implement in practice, recent publications have addressed key questions to consider before purchasing an AI tool66,67. For example, the ÉCLAIR guidelines set forth a series of issues to consider when evaluating a commercial model: (1) relevance (i.e., intended use, indications of use, benefits, and risks), (2) performance and validation (i.e., how algorithm was trained and how performance has been evaluated), (3) usability and integration (i.e., impact of AI tool on workflow, information technology infrastructure that is required), (4) regulatory and legal aspects (i.e., compliance of AI tool with local and data protection regulations), and (5) financial and support services (i.e., licensing model and maintenance of product)67.

After selecting a candidate AI tool, a breast imaging practice may choose to validate the tool using its own data66,68. Models that were trained with large, diverse patient populations may perform well across many or all practices, but certain models will require fine-tuning with local data to achieve an acceptable level of performance69. A plan for ongoing monitoring to ensure that the tool maintains an acceptable level of performance over time could also be needed66,68–70. Even an AI tool with high performance will likely fail if not well-integrated into existing clinical workflows, which requires close collaboration with AI vendors and local information technology groups. The algorithm may be integrated into practice via a platform through which other AI vendors could also deploy their tools or integrated into practice as a standalone application, which offers maximum flexibility but is less scalable66.
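One simple form that ongoing monitoring could take is a periodic comparison of locally observed performance against the level established during validation. The sketch below flags months in which sensitivity falls below a baseline minus a tolerance. The metric, baseline, tolerance, and monthly counts are all hypothetical choices; a practice would select its own metrics and thresholds.

```python
def flag_performance_drift(monthly_counts, baseline_sensitivity, tolerance):
    """Return months whose observed sensitivity falls below baseline minus tolerance.

    monthly_counts: list of (month, true_positives, false_negatives) from local audits.
    """
    flagged = []
    for month, tp, fn in monthly_counts:
        if tp + fn == 0:
            continue  # no confirmed cancers that month, so sensitivity is undefined
        sensitivity = tp / (tp + fn)
        if sensitivity < baseline_sensitivity - tolerance:
            flagged.append((month, round(sensitivity, 2)))
    return flagged


# Hypothetical audit data: (month, cancers flagged by the tool, cancers missed by the tool).
monthly_counts = [
    ("2022-01", 18, 2),
    ("2022-02", 17, 3),
    ("2022-03", 14, 6),
    ("2022-04", 0, 0),
]
flagged = flag_performance_drift(monthly_counts, baseline_sensitivity=0.88, tolerance=0.05)
print(flagged)  # [('2022-03', 0.7)]
```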

With regard to financial considerations, purchase of an AI tool may require a one-time fee, pay-per-use, or an ongoing subscription66,67. Additional fees may be incurred for installation, maintenance, future updates, and training of new users. Some vendors may offer a trial period, as discussed above, before purchasing decisions are made. In addition, or alternatively, the contract between the AI vendor and practice may include contingencies if agreed-upon performance metrics are not attained66. Currently there are no separate billing codes to generate additional revenue from the use of AI tools, but payment structures may evolve over time66.

Conclusions

AI for breast imaging has rapidly progressed from the research and development phase to clinical implementation. FDA-approved mammographic applications are available for triage, lesion detection and classification, and breast density assessment. FDA-approved sonographic and MRI applications are available for lesion classification. Post-implementation studies in the clinical environment are essential to understand the real-world impact of these AI applications on radiologists’ performance and workflows. A multitude of other applications, including noninterpretive applications (such as for breast cancer risk assessment), are in development. The future of AI for breast imaging is bright, with its success depending on validation in real-world clinical settings, demonstrated improvements in patient outcomes and clinical efficiency, and seamless integration into existing clinical workflows.

Abbreviations:

- AUC: Area under the receiver operating characteristic curve
- AI: Artificial intelligence
- DL: Deep learning
- DM: Digital 2D mammography
- DBT: Digital breast tomosynthesis
- FDA: Food and Drug Administration
- ML: Machine learning


References

- 1.Trister AD, Buist DSM, Lee CI. Will machine learning tip the balance in breast cancer screening? JAMA Oncol. Nov 1 2017;3(11):1463–1464. doi: 10.1001/jamaoncol.2017.0473

- 2.Chartrand G, Cheng PM, Vorontsov E, et al. Deep learning: a primer for radiologists. Radiographics. Nov-Dec 2017;37(7):2113–2131. doi: 10.1148/rg.2017170077

- 3.Cheng PM, Montagnon E, Yamashita R, et al. Deep learning: an update for radiologists. Radiographics. Sep-Oct 2021;41(5):1427–1445. doi: 10.1148/rg.2021200210

- 4.American College of Radiology Data Science Institute. AI Central. https://aicentral.acrdsi.org/; 2021. Accessed 1 December 2021.

- 5.Hsu W, Hoyt AC. Using time as a measure of impact for AI systems: implications in breast screening. Radiol Artif Intell. Jul 2019;1(4):e190107. doi: 10.1148/ryai.2019190107

- 6.Lehman CD, Arao RF, Sprague BL, et al. National performance benchmarks for modern screening digital mammography: update from the Breast Cancer Surveillance Consortium. Radiology. Apr 2017;283(1):49–58. doi: 10.1148/radiol.2016161174

- 7.Duffy SW, Tabar L, Yen AM, et al. Mammography screening reduces rates of advanced and fatal breast cancers: results in 549,091 women. Cancer. Jul 1 2020;126(13):2971–2979. doi: 10.1002/cncr.32859

- 8.Duffy SW, Vulkan D, Cuckle H, et al. Effect of mammographic screening from age 40 years on breast cancer mortality (UK Age trial): final results of a randomised, controlled trial. Lancet Oncol. Sep 2020;21(9):1165–1172. doi: 10.1016/S1470-2045(20)30398-3

- 9.Dang PA, Freer PE, Humphrey KL, Halpern EF, Rafferty EA. Addition of tomosynthesis to conventional digital mammography: effect on image interpretation time of screening examinations. Radiology. Jan 2014;270(1):49–56. doi: 10.1148/radiol.13130765

- 10.Food and Drug Administration. MammoScreen. https://www.accessdata.fda.gov/cdrh_docs/pdf19/K192854.pdf; 2020. Accessed 1 December 2021.

- 11.Food and Drug Administration. Genius AI Detection. https://www.accessdata.fda.gov/cdrh_docs/pdf20/K201019.pdf; 2020. Accessed 1 December 2021.

- 12.Food and Drug Administration. ProFound AI Software V2.1. https://www.accessdata.fda.gov/cdrh_docs/pdf19/K191994.pdf; 2019. Accessed 1 December 2021.

- 13.Food and Drug Administration. 510(k) Premarket Notification. https://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfpmn/pmn.cfm?ID=K203822; 2021. Accessed 1 December 2021.

- 14.Food and Drug Administration. Transpara 1.7.0. https://www.accessdata.fda.gov/cdrh_docs/pdf21/K210404.pdf; 2021. Accessed 1 December 2021.

- 15.Food and Drug Administration. Lunit INSIGHT MMG. https://www.accessdata.fda.gov/cdrh_docs/pdf21/K211678.pdf; 2021. Accessed 12 December 2021.

- 16.Food and Drug Administration. cmTriage. https://www.accessdata.fda.gov/cdrh_docs/pdf18/K183285.pdf; 2019. Accessed 1 December 2021.

- 17.Food and Drug Administration. HealthMammo. https://www.accessdata.fda.gov/cdrh_docs/pdf20/K200905.pdf; 2020. Accessed 1 December 2021.

- 18.Food and Drug Administration. Saige-Q. https://www.accessdata.fda.gov/cdrh_docs/pdf20/K203517.pdf; 2021. Accessed 1 December 2021.

- 19.Food and Drug Administration. Quantra. https://www.accessdata.fda.gov/cdrh_docs/pdf16/K163623.pdf; 2017. Accessed 1 December 2021.

- 20.Food and Drug Administration. Insight BD. https://www.accessdata.fda.gov/cdrh_docs/pdf17/K172832.pdf; 2018. Accessed 1 December 2021.

- 21.Food and Drug Administration. DM-Density. https://www.accessdata.fda.gov/cdrh_docs/pdf17/K170540.pdf; 2018. Accessed 1 December 2021.

- 22.Food and Drug Administration. DenSeeMammo. https://www.accessdata.fda.gov/cdrh_docs/pdf17/K173574.pdf; 2018. Accessed 1 December 2021.

- 23.Food and Drug Administration. densityai. https://www.accessdata.fda.gov/cdrh_docs/pdf19/K192973.pdf; 2020. Accessed 1 December 2021.

- 24.Food and Drug Administration. WRDensity by Whiterabbit.ai. https://www.accessdata.fda.gov/cdrh_docs/pdf20/K202013.pdf; 2020. Accessed 1 December 2021.

- 25.Food and Drug Administration. Visage Imaging GmbH. https://www.accessdata.fda.gov/cdrh_docs/pdf20/K201411.pdf; 2021. Accessed 1 December 2021.

- 26.Food and Drug Administration. PowerLook Density Assessment V4.0. https://www.accessdata.fda.gov/cdrh_docs/pdf21/K211506.pdf; 2021. Accessed 1 December 2021.

- 27.Food and Drug Administration. Volpara Imaging Software. https://www.accessdata.fda.gov/cdrh_docs/pdf21/K211279.pdf; 2021. Accessed 1 December 2021.

- 28.van Winkel SL, Rodriguez-Ruiz A, Appelman L, et al. Impact of artificial intelligence support on accuracy and reading time in breast tomosynthesis image interpretation: a multi-reader multi-case study. Eur Radiol. Nov 2021;31(11):8682–8691. doi: 10.1007/s00330-021-07992-w

- 29.Freer PE. Mammographic breast density: impact on breast cancer risk and implications for screening. Radiographics. Mar–Apr 2015;35(2):302–15. doi: 10.1148/rg.352140106

- 30.Densitas. densitas riskai. https://densitas.health/riskai; 2021. Accessed 4 December 2021.

- 31.Volpara Health. Scorecard. https://www.volparahealth.com/breast-health-platform/products/scorecard/; 2021. Accessed 4 December 2021.

- 32.iCAD. ProFound AI Risk. https://www.icadmed.com/profoundai-risk.html; 2021. Accessed 4 December 2021.

- 33.Densitas. densitas qualityai. https://densitas.health/solutions/quality/; 2021. Accessed 4 December 2021.

- 34.Volpara Health. Live. https://www.volparahealth.com/breast-health-platform/products/live/; 2021. Accessed 4 December 2021.

- 35.Dembrower K, Liu Y, Azizpour H, et al. Comparison of a deep learning risk score and standard mammographic density score for breast cancer risk prediction. Radiology. Dec 17 2020;294(2):265–272. doi: 10.1148/radiol.2019190872

- 36.Yala A, Mikhael PG, Strand F, et al. Toward robust mammography-based models for breast cancer risk. Sci Transl Med. Jan 27 2021;13(578):eaba4373. doi: 10.1126/scitranslmed.aba4373

- 37.Geras KJ, Mann RM, Moy L. Artificial intelligence for mammography and digital breast tomosynthesis: current concepts and future perspectives. Radiology. Nov 2019;293(2):246–259. doi: 10.1148/radiol.2019182627

- 38.Bahl M. Artificial intelligence: a primer for breast imaging radiologists. J Breast Imaging. Aug 2020;2(4):304–314. doi: 10.1093/jbi/wbaa033

- 39.Hu Q, Giger ML. Clinical artificial intelligence applications: breast imaging. Radiol Clin North Am. Nov 2021;59(6):1027–1043. doi: 10.1016/j.rcl.2021.07.010

- 40.Brem RF, Lenihan MJ, Lieberman J, Torrente J. Screening breast ultrasound: past, present, and future. AJR Am J Roentgenol. Feb 2015;204(2):234–240. doi: 10.2214/AJR.13.12072

- 41.Berg WA, Vourtsis A. Screening breast ultrasound using handheld or automated technique in women with dense breasts. J Breast Imaging. 2019;1(4):283–296. doi: 10.1093/jbi/wbab013

- 42.Food and Drug Administration. ClearView cCAD. https://www.accessdata.fda.gov/cdrh_docs/pdf16/K161959.pdf; 2016. Accessed 12 December 2021.

- 43.Food and Drug Administration. Koios DS for Breast. https://www.accessdata.fda.gov/cdrh_docs/pdf19/K190442.pdf; 2019. Accessed 1 December 2021.

- 44.Mango VL, Sun M, Wynn RT, Ha R. Should we ignore, follow, or biopsy? Impact of artificial intelligence decision support on breast ultrasound lesion assessment. AJR Am J Roentgenol. Jun 2020;214(6):1445–1452. doi: 10.2214/AJR.19.21872

- 45.Berg WA, Gur D, Bandos AI, et al. Impact of original and artificially improved artificial intelligence-based computer-aided diagnosis on breast US interpretation. J Breast Imaging. 2021;3(3):301–311. doi: 10.1093/jbi/wbab013

- 46.Bahl M. Artificial intelligence for breast ultrasound: will it impact radiologists’ accuracy? J Breast Imaging. May–Jun 2021;3(3):312–314. doi: 10.1093/jbi/wbab022

- 47.Wu GG, Zhou LQ, Xu JW, et al. Artificial intelligence in breast ultrasound. World J Radiol. Feb 28 2019;11(2):19–26. doi: 10.4329/wjr.v11.i2.19

- 48.Fujioka T, Mori M, Kubota K, et al. The utility of deep learning in breast ultrasonic imaging: a review. Diagnostics (Basel). Dec 6 2020;10(12):1055. doi: 10.3390/diagnostics10121055

- 49.Shen Y, Shamout FE, Oliver JR, et al. Artificial intelligence system reduces false-positive findings in the interpretation of breast ultrasound exams. Nat Commun. Sep 24 2021;12(1):5645. doi: 10.1038/s41467-021-26023-2

- 50.Zhou LQ, Wu XL, Huang SY, et al. Lymph node metastasis prediction from primary breast cancer US images using deep learning. Radiology. Jan 2020;294(1):19–28. doi: 10.1148/radiol.2019190372

- 51.Gu J, Tong T, He C, et al. Deep learning radiomics of ultrasonography can predict response to neoadjuvant chemotherapy in breast cancer at an early stage of treatment: a prospective study. Eur Radiol. Oct 15 2021; doi: 10.1007/s00330-021-08293-y

- 52.Bae MS. Using deep learning to predict axillary lymph node metastasis from US images of breast cancer. Radiology. Jan 2020;294(1):29–30. doi: 10.1148/radiol.2019192339

- 53.Akkus Z, Cai J, Boonrod A, et al. A survey of deep-learning applications in ultrasound: artificial intelligence-powered ultrasound for improving clinical workflow. J Am Coll Radiol. Sep 2019;16(9 Pt B):1318–1328. doi: 10.1016/j.jacr.2019.06.004

- 54.Mann RM, Cho N, Moy L. Breast MRI: state of the art. Radiology. Sep 2019;292(3):520–536. doi: 10.1148/radiol.2019182947

- 55.Mann RM, Kuhl CK, Moy L. Contrast-enhanced MRI for breast cancer screening. J Magn Reson Imaging. Aug 2019;50(2):377–390. doi: 10.1002/jmri.26654

- 56.Food and Drug Administration. QuantX. https://www.accessdata.fda.gov/cdrh_docs/pdf17/K170195.pdf; 2017. Accessed 1 December 2021.

- 57.Jiang Y, Edwards AV, Newstead GM. Artificial intelligence applied to breast MRI for improved diagnosis. Radiology. Jan 2021;298(1):38–46. doi: 10.1148/radiol.2020200292

- 58.Codari M, Schiaffino S, Sardanelli F, Trimboli RM. Artificial intelligence for breast MRI in 2008–2018: a systematic mapping review. AJR Am J Roentgenol. Feb 2019;212(2):280–292. doi: 10.2214/AJR.18.20389

- 59.Reig B, Heacock L, Geras KJ, Moy L. Machine learning in breast MRI. J Magn Reson Imaging. Oct 2020;52(4):998–1018. doi: 10.1002/jmri.26852

- 60.Sheth D, Giger ML. Artificial intelligence in the interpretation of breast cancer on MRI. J Magn Reson Imaging. May 2020;51(5):1310–1324. doi: 10.1002/jmri.26878

- 61.Ha R, Chang P, Mutasa S, et al. Convolutional neural network using a breast MRI tumor dataset can predict Oncotype Dx Recurrence Score. J Magn Reson Imaging. Feb 2019;49(2):518–524. doi: 10.1002/jmri.26244

- 62.Ha R, Chin C, Karcich J, et al. Prior to initiation of chemotherapy, can we predict breast tumor response? Deep learning convolutional neural networks approach using a breast MRI tumor dataset. J Digit Imaging. Oct 2019;32(5):693–701. doi: 10.1007/s10278-018-0144-1

- 63.Ha R, Chang P, Mema E, et al. Fully automated convolutional neural network method for quantification of breast MRI fibroglandular tissue and background parenchymal enhancement. J Digit Imaging. Feb 2019;32(1):141–147. doi: 10.1007/s10278-018-0114-7

- 64.Saha A, Grimm LJ, Ghate SV, et al. Machine learning-based prediction of future breast cancer using algorithmically measured background parenchymal enhancement on high-risk screening MRI. J Magn Reson Imaging. Aug 2019;50(2):456–464. doi: 10.1002/jmri.26636

- 65.Meyer-Base A, Morra L, Meyer-Base U, Pinker K. Current status and future perspectives of artificial intelligence in magnetic resonance breast imaging. Contrast Media Mol Imaging. 2020;2020:6805710. doi: 10.1155/2020/6805710

- 66.Filice RW, Mongan J, Kohli MD. Evaluating artificial intelligence systems to guide purchasing decisions. J Am Coll Radiol. Nov 2020;17(11):1405–1409. doi: 10.1016/j.jacr.2020.09.045

- 67.Omoumi P, Ducarouge A, Tournier A, et al. To buy or not to buy-evaluating commercial AI solutions in radiology (the ECLAIR guidelines). Eur Radiol. Jun 2021;31(6):3786–3796. doi: 10.1007/s00330-020-07684-x

- 68.Pierce JD, Rosipko B, Youngblood L, Gilkeson RC, Gupta A, Bittencourt LK. Seamless integration of artificial intelligence into the clinical environment: our experience with a novel pneumothorax detection artificial intelligence algorithm. J Am Coll Radiol. Nov 2021;18(11):1497–1505. doi: 10.1016/j.jacr.2021.08.023

- 69.Allen B, Dreyer K, Stibolt R Jr., et al. Evaluation and real-world performance monitoring of artificial intelligence models in clinical practice: try it, buy it, check it. J Am Coll Radiol. Nov 2021;18(11):1489–1496. doi: 10.1016/j.jacr.2021.08.022

- 70.Pianykh OS, Langs G, Dewey M, et al. Continuous learning AI in radiology: implementation principles and early applications. Radiology. Oct 2020;297(1):6–14. doi: 10.1148/radiol.2020200038