Abstract

An important aspect of diagnosis in daily clinical practice is the analysis of dental radiographs, because the dentist must interpret different types of tooth-related problems during the diagnostic process, including tooth numbering and related diseases. For panoramic radiographs, this paper proposes a convolutional neural network (CNN) that performs multiclass classification of X-ray images into three classes: cavity, filling, and implant. The CNN takes the form of a NASNet model combined with different numbers of max-pooling layers, dropout layers, and activation functions. The data are first augmented and preprocessed, and a multioutput model is then constructed, compiled, and trained. The model is evaluated using its loss and accuracy curves. It achieves an accuracy of 96.51%, outperforming the AlexNet (93%) and CNN (95%) models it was compared with.

1. Introduction

Tooth decay, also referred to as dental caries, is a disease that affects millions of people [1]. Depending on the extent of the lesion, dental caries can be categorized as normal, initial, moderate, or extensive [2]. Early identification of dental caries can save money in the long run by avoiding more invasive procedures. Bitewing radiography is the gold standard for diagnosing demineralized proximal caries, which are difficult to detect by clinical examination alone [3]. Combining bitewing radiography with visual assessment makes proximal caries easier to detect. Fluorescence-based technologies such as DIAGNOdent (KaVo, Charlotte, NC, USA) and fiber-optic transillumination can also be used to detect dental caries. Their disadvantages include an inability to detect incipient proximal caries in posterior teeth and the cost of the additional devices. Therefore, bitewing radiographs remain the most widely used approach in clinical settings [4].

Dental informatics is a new and growing field in dentistry that can improve treatment and diagnostics and reduce stress and fatigue in everyday practice. Dental procedures generate vast amounts of data from sources such as high-resolution medical imaging and biosensors with continuous output [5]. Computer programs can support dental professionals in various decision-making processes, including prevention, diagnosis, and treatment planning [6]. Deep learning is currently one of the artificial intelligence methods used in clinical settings [7]. Its success is mainly attributable to advances in computing power, the availability of large datasets, and new methods. The approach has proven effective in image-based diagnostics and is widely used [8]. Deep learning-based applications have grown rapidly in popularity over the last decade, and convolutional neural networks (CNNs) in particular have become a popular choice for medical image analysis. CNNs have been used successfully in clinical skin screening, mammography, and eye examinations for diabetic retinopathy, and they have been particularly successful in cancer diagnosis [9].

Although radiographs are widely accepted as a diagnostic tool, dental caries can be difficult to diagnose with them [10]. Even when examining the same radiograph, observers often disagree on whether caries lesions are present. Inter-rater agreement can be affected by factors such as the quality of the radiograph, how the dentist views it, and the time taken to complete each examination. In a prior study in which 34 raters examined the same bitewing radiographs, the mean kappa values for the presence or absence of dental caries and their extent ranged from 0.30 to 0.72. Consistency is therefore a significant issue, especially when detecting the early stages of dental caries.
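The kappa statistic quoted above corrects raw agreement for the agreement expected by chance: κ = (p_o − p_e)/(1 − p_e), where p_o is the observed proportion of agreement and p_e is the chance agreement implied by each rater's label frequencies. The following minimal sketch computes Cohen's kappa for two raters. The function name `cohens_kappa` and the example ratings are hypothetical, and how the prior study aggregated kappa over its 34 raters (for example, by averaging pairwise values) is not stated here.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items (hypothetical helper)."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("ratings must be non-empty sequences of equal length")
    n = len(rater_a)
    # Observed agreement p_o: fraction of items on which both raters chose the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement p_e: probability that both raters pick the same label independently,
    # estimated from each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1.0:
        # Both raters used one and the same label throughout, so kappa is 0/0 (undefined);
        # this sketch reports that case as perfect agreement.
        return 1.0
    return (p_o - p_e) / (1.0 - p_e)


# 1 = caries present, 0 = absent, for ten hypothetical radiographs.
dentist_1 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]
dentist_2 = [1, 0, 0, 0, 1, 0, 1, 1, 0, 0]
print(round(cohens_kappa(dentist_1, dentist_2), 3))  # 0.583
```

Here the two dentists agree on 8 of 10 images (p_o = 0.8), but chance agreement is already 0.52, so κ ≈ 0.58, which falls inside the 0.30 to 0.72 range reported above.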

Researchers have recently investigated the use of deep learning and convolutional neural networks (CNNs) to interpret various types of medical images extensively, with promising results [11]. The growing use of deep learning in disease diagnosis has resulted in improved clinical outcomes. Deep convolutional networks have been studied in dentistry since 2015.

The paper is organised as follows: Section 2 reviews related work, Section 3 explains the proposed methodology, and Section 4 presents the results and discussion. Section 5 gives the conclusion and future work, followed by the references.

2. Related Work

Computer-aided image segmentation in dentistry has been introduced in the literature using various techniques [12, 13]. Deep learning is used for computer vision problems such as image classification and object detection, and deep learning-based algorithms have recently become popular for image segmentation. Fully convolutional networks (FCNs) are a common segmentation approach that can be used in various imaging applications.

Using 1500 panoramic X-ray radiographs, Jader et al. [14] delineated the profile of each tooth with a mask region-based convolutional neural network (Mask R-CNN). The reported accuracy, F1-score, precision, recall, and specificity were 0.98, 0.88, 0.94, 0.84, and 0.99, respectively. Muramatsu et al. [15] built an object detection network using fourfold cross-validation on 100 dental panoramic radiographs; the sensitivity and accuracy of tooth detection were 96.4% and 93.2%, respectively. Miki et al. [16] used an AlexNet-based deep convolutional neural network (DCNN) to classify tooth types in dental cone-beam computed tomography (CBCT) images. The network was trained on 42 images and tested on ten, achieving an accuracy above 80%. Furthermore, Chen et al. [17] used the TensorFlow toolkit to detect and count teeth in dental periapical films with Faster R-CNN features. In this case, 800 images were used for training, 200 for testing, and 250 for validation, and the reported recall and precision were 0.728 and 0.771, respectively. A neural network was also used to predict missing teeth.
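The studies above report their results with standard confusion-matrix metrics. As a reminder of how these metrics relate to one another, the following sketch computes them from true/false positive and negative counts for a binary detection task. The helper name `detection_metrics` is hypothetical, and the counts are invented for illustration; they do not come from any cited study.

```python
def detection_metrics(tp, fp, tn, fn):
    """Standard binary metrics from confusion-matrix counts (illustrative helper)."""

    def ratio(num, den):
        # Return 0.0 instead of raising when a denominator is zero (e.g. no positives).
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)             # also called positive predictive value (PPV)
    recall = ratio(tp, tp + fn)                # also called sensitivity
    specificity = ratio(tn, tn + fp)
    npv = ratio(tn, tn + fn)                   # negative predictive value
    accuracy = ratio(tp + tn, tp + fp + tn + fn)
    f1 = ratio(2 * precision * recall, precision + recall)  # harmonic mean of precision and recall
    return {"precision": precision, "recall": recall, "specificity": specificity,
            "npv": npv, "accuracy": accuracy, "f1": f1}


# Hypothetical counts: 80 correct detections, 5 false alarms, 100 correct rejections, 15 misses.
for name, value in detection_metrics(tp=80, fp=5, tn=100, fn=15).items():
    print(f"{name}: {value:.3f}")
```

With these counts the accuracy is 0.90 while the recall is only about 0.84, which is why the studies above report several metrics rather than accuracy alone.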

Zhang et al. [18] applied Faster R-CNN and R-FCN (region-based fully convolutional networks) to periapical images. A total of 700 images were used for training, 200 for testing, and 100 for validation, and the model achieved a precision of 95.78% and a recall of 96.1%. Velemínská et al. [19] compared the accuracy of an RBFNN and a GAME neural network in estimating the age of individuals from the Czech population aged 3 to 17 years, using panoramic X-rays of 1393 people; the standard deviation was used to characterize the variance of the estimates. Afterwards, Tuzoff et al. [20] used a Faster R-CNN architecture and 1352 panoramic images to detect teeth [3], achieving a sensitivity of 0.9941 and a precision of 0.9945.

Schwendicke et al. implemented ResNet-18 and ResNeXt-50 CNNs, which were trained and validated using 10-fold cross-validation. A one-cycle learning rate policy was applied during training, with a minimum learning rate of 10⁻⁵ and a maximum learning rate of 10⁻³. Metrics such as the positive and negative predictive values (PPV/NPV) and the area under the receiver operating characteristic curve (AUC) were used to evaluate performance, and feature visualisation was used to check whether the CNNs relied on features that dentists would also use. Approximately 41% of the teeth were found to have caries lesions. Raith et al. [21] identified teeth using an artificial neural network built with the PyBrain package, achieving a performance of 0.93. Oktay [22] used an AlexNet-based network on 100 panoramic radiographs to recognize teeth by type (molar, incisor, or premolar), with an accuracy above 0.92.
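The one-cycle policy mentioned above raises the learning rate from a low value to a peak and then anneals it back down within a single cycle. The sketch below uses the bounds quoted for Schwendicke et al. (10⁻⁵ and 10⁻³). The cosine shape, the 30% warm-up fraction (which matches the default `pct_start` of PyTorch's `OneCycleLR`), and the function name `one_cycle_lr` are assumptions. Library implementations such as fastai's and PyTorch's also differ in details, for example by ending below the starting rate.

```python
import math


def one_cycle_lr(step, total_steps, lr_min=1e-5, lr_max=1e-3, warmup_frac=0.3):
    """Learning rate at `step` for a simplified cosine one-cycle schedule (sketch)."""
    if not 0 <= step < total_steps:
        raise ValueError("step must satisfy 0 <= step < total_steps")
    warmup_steps = max(1, int(total_steps * warmup_frac))
    if step < warmup_steps:
        # Phase 1: cosine ramp from lr_min up towards lr_max.
        t = step / warmup_steps
        return lr_min + (lr_max - lr_min) * (1 - math.cos(math.pi * t)) / 2
    # Phase 2: cosine anneal from lr_max back down to lr_min at the final step.
    t = (step - warmup_steps) / max(1, total_steps - 1 - warmup_steps)
    return lr_max - (lr_max - lr_min) * (1 - math.cos(math.pi * t)) / 2


schedule = [one_cycle_lr(s, 100) for s in range(100)]
print(f"start={schedule[0]:.1e}, peak={max(schedule):.1e}, end={schedule[-1]:.1e}")
```

The low starting rate stabilizes early training, the peak allows fast progress, and the final annealing lets the weights settle.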

Fukuda [23] used panoramic radiography and a convolutional neural network (CNN) to determine whether vertical root fractures (VRFs) could be detected. In this study, 330 VRF teeth with clearly visible fracture lines were selected from 300 panoramic images in the hospital imaging database, and two radiologists and an endodontist confirmed the presence of the VRF lines. Of the 300 images, 240 were assigned to a training set and 60 to a test set. CNN-based deep learning models for detecting VRFs were created using DIGITS version 5.01. The method achieved a precision of 0.93 and an F-measure of 0.83. Although a number of works have addressed the detection of objects of interest in dental X-ray images, there is still a need for an automated technique that detects the desired objects accurately and efficiently.

It was demonstrated that SegNet [24], which uses an encoder-decoder architecture, could be trained more quickly. U-Net uses an encoder-decoder architecture with skip connections between the downsampling and upsampling layers, which lets it combine high-resolution encoder features with the decoded output. U-Net variants such as 3D U-Net [25], V-Net [26], and attention U-Net [27] have also been proposed to improve performance. Fan et al. [28] developed PraNet, an efficient network that balances inference speed and segmentation accuracy. Beyond these general frameworks for image segmentation, multiple deep learning-based algorithms have been used to segment X-ray images. Al-Antari et al. directly segmented lesions in X-ray mammograms using DeepLab [29]. Blain et al. presented a modified U-Net for detecting COVID-19 infections in chest X-ray images [30]. Moeskops et al. trained a multitask segmentation model on a variety of imaging modalities [31]. Trullo et al. first integrated an RNN into an FCN as a conditional random field module [32–34].
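To make the skip-connection idea concrete, the following is a minimal two-level U-Net-style encoder-decoder in TensorFlow/Keras. It is a toy sketch for illustration only. The layer sizes and the names `conv_block` and `tiny_unet` are arbitrary choices, and it simplifies the original U-Net, which is deeper, uses unpadded convolutions, and upsamples with transposed convolutions.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers


def conv_block(x, filters):
    # Two 3x3 convolutions per stage; "same" padding keeps spatial sizes aligned for concatenation.
    x = layers.Conv2D(filters, 3, padding="same", activation="relu")(x)
    return layers.Conv2D(filters, 3, padding="same", activation="relu")(x)


def tiny_unet(input_shape=(128, 128, 1), base_filters=16):
    inputs = tf.keras.Input(shape=input_shape)
    # Encoder (downsampling path): keep each stage's output for the skip connections.
    e1 = conv_block(inputs, base_filters)
    e2 = conv_block(layers.MaxPooling2D()(e1), base_filters * 2)
    # Bottleneck at 1/4 of the input resolution.
    b = conv_block(layers.MaxPooling2D()(e2), base_filters * 4)
    # Decoder (upsampling path): concatenate upsampled features with the matching encoder output.
    d2 = conv_block(layers.Concatenate()([layers.UpSampling2D()(b), e2]), base_filters * 2)
    d1 = conv_block(layers.Concatenate()([layers.UpSampling2D()(d2), e1]), base_filters)
    # A 1x1 convolution gives a per-pixel foreground probability.
    outputs = layers.Conv2D(1, 1, activation="sigmoid")(d1)
    return tf.keras.Model(inputs, outputs)


model = tiny_unet()
mask = model.predict(np.zeros((1, 128, 128, 1), dtype="float32"), verbose=0)
print(mask.shape)  # (1, 128, 128, 1)
```

Because the network pools twice, the input height and width must be divisible by 4 so that the upsampled maps line up with the encoder outputs they are concatenated with.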

3. Materials and Methods

3.1. Datasets

The dental image dataset consists of de-identified, anonymized panoramic dental X-ray images of 116 patients, acquired from Noor Medical Imaging Center, Qom, Iran. It covers a range of dental conditions, from healthy teeth to partially and completely edentulous cases. All cases were segmented manually by two dentists. The dataset is publicly available on Kaggle at https://www.kaggle.com/daverattan/dental-xrary-tfrecords.

3.2. Proposed Methodology

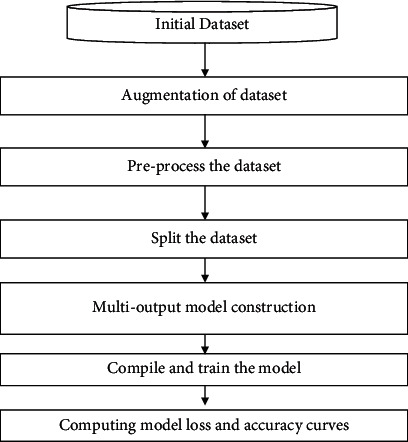

In this work, X-ray images of teeth are used to perform multiclass classification into three classes: “cavity,” “filling,” and “implant.” In the initial phase, the data are augmented and then preprocessed. Next, the data are split into training and validation sets. Finally, the multioutput model is constructed, compiled, and trained. Figure 1 illustrates the flowchart of the proposed work.

Figure 1.

Flowchart of the proposed work.

3.2.1. Data Augmentation

Data augmentation was performed by applying operations such as scaling, rotation, translation, Gaussian blur, and Gaussian noise, using helper functions such as bounding_box and image_aug. Augmentation increased the size of the dataset from 83 to 245 images, with the aim of obtaining more accurate results.
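Geometric augmentations must move the bounding boxes together with the pixels. The paper does not show its bounding_box and image_aug functions, so the NumPy helpers below are hypothetical. They illustrate only two of the listed operations, translation and additive Gaussian noise, and they assume boxes are stored in pixels as (x_min, y_min, x_max, y_max).

```python
import numpy as np


def translate_with_boxes(image, boxes, dx, dy):
    """Shift an image by (dx, dy) pixels and move its boxes by the same offset.

    Exposed borders are zero-filled, and boxes are clipped to the image frame.
    """
    h, w = image.shape[:2]
    if abs(dx) >= w or abs(dy) >= h:
        raise ValueError("shift must be smaller than the image size")
    out = np.zeros_like(image)
    # Source/destination slices along each axis for a shift that may be negative.
    src_y, dst_y = (slice(0, h - dy), slice(dy, h)) if dy >= 0 else (slice(-dy, h), slice(0, h + dy))
    src_x, dst_x = (slice(0, w - dx), slice(dx, w)) if dx >= 0 else (slice(-dx, w), slice(0, w + dx))
    out[dst_y, dst_x] = image[src_y, src_x]
    shifted = np.asarray(boxes, dtype=np.float32) + np.array([dx, dy, dx, dy], dtype=np.float32)
    return out, np.clip(shifted, 0, [w, h, w, h])


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise to a uint8 image; boxes are unaffected."""
    noisy = image.astype(np.float32) + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
xray = rng.integers(0, 256, size=(256, 512)).astype(np.uint8)  # stand-in grayscale image
boxes = np.array([[100, 80, 140, 160]])                         # one hypothetical tooth box
shifted_img, shifted_boxes = translate_with_boxes(xray, boxes, dx=20, dy=-10)
noisy_img = add_gaussian_noise(shifted_img, sigma=8.0, rng=rng)
print(shifted_boxes)
```

Shifting by 20 pixels right and 10 pixels up moves the example box to (120, 70, 160, 150). Photometric operations such as blur and noise leave the boxes unchanged.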

3.2.2. Preprocessing of the Image Dataset

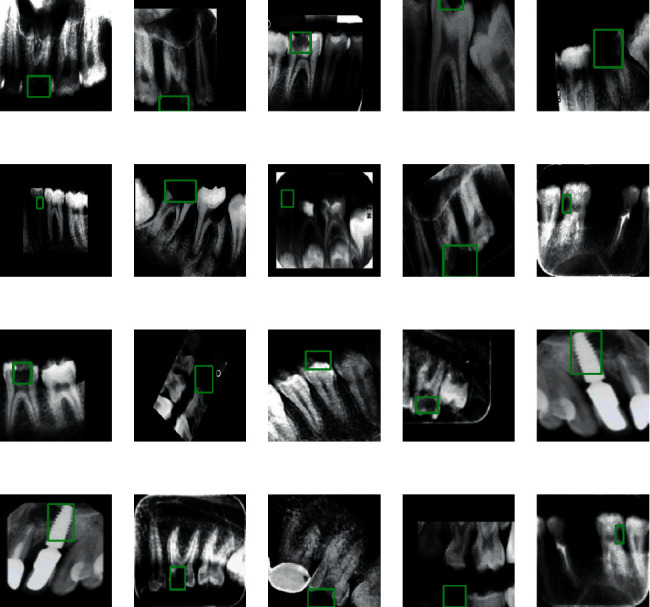

The dataset is preprocessed by scaling the bounding-box coordinates to the range 0 to 1 so that the model can learn from them more easily. Further label-encoding steps are also performed (label_encoder, integer_labels, and onehot_labels): label_encoder converts the textual class names into numerical form, and onehot_labels converts the categorical labels into one-hot numerical vectors. Figure 2 illustrates images of different types of teeth diseases.

Figure 2.

Different types of images based on teeth disease.
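The preprocessing steps of Section 3.2.2 can be sketched with NumPy alone. The paper names label_encoder, integer_labels, and onehot_labels but does not show their code, so this is a hedged illustration. Here `np.unique` plays the role of the label encoder, assigning integer ids in alphabetical order as scikit-learn's `LabelEncoder` also does. Boxes are assumed to be pixel coordinates (x_min, y_min, x_max, y_max) that are normalized by the image width and height.

```python
import numpy as np


def normalize_boxes(boxes, width, height):
    """Scale pixel boxes (x_min, y_min, x_max, y_max) into the [0, 1] range."""
    scale = np.array([width, height, width, height], dtype=np.float32)
    return np.asarray(boxes, dtype=np.float32) / scale


def encode_labels(class_names):
    """Return (classes, integer_labels, onehot_labels) for a list of class-name strings."""
    # np.unique sorts the distinct names and gives each input its index in that sorted list.
    classes, integer_labels = np.unique(np.asarray(class_names), return_inverse=True)
    integer_labels = integer_labels.reshape(-1)
    # Row i of the identity matrix is the one-hot vector for class i.
    onehot_labels = np.eye(len(classes), dtype=np.float32)[integer_labels]
    return classes, integer_labels, onehot_labels


classes, integer_labels, onehot_labels = encode_labels(["implant", "cavity", "filling", "cavity"])
print(classes.tolist(), integer_labels.tolist())  # ['cavity', 'filling', 'implant'] [2, 0, 1, 0]
print(normalize_boxes([[100, 50, 300, 150]], width=400, height=200))
```

Keeping the box targets in [0, 1] lets a sigmoid output layer predict them directly, independent of the original image resolution.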

Splitting the dataset: the dataset is split into training and validation sets, with 90% of the data used for training and 10% for validation.
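A shuffled 90/10 split can be written in a few lines. In this sketch, the fixed seed, the floor rounding of the training size, the optional `groups` argument, and the name `split_indices` are all assumptions. Because augmentation precedes the split in the pipeline described above, assigning all augmented copies of one source radiograph to the same side (via `groups`) is one way to keep near-duplicates out of both sets. The paper does not say whether its split did this.

```python
import numpy as np


def split_indices(n_samples, train_fraction=0.9, groups=None, seed=42):
    """Return (train_idx, val_idx) for a shuffled split.

    If `groups` is given (e.g. the source image of each augmented sample),
    whole groups are assigned to one side so copies never straddle the split.
    """
    rng = np.random.default_rng(seed)
    groups = np.arange(n_samples) if groups is None else np.asarray(groups)
    if len(groups) != n_samples:
        raise ValueError("groups must have one entry per sample")
    # Shuffle the distinct groups, then give the first train_fraction of them to training.
    unique_groups = rng.permutation(np.unique(groups))
    n_train_groups = int(len(unique_groups) * train_fraction)
    train_mask = np.isin(groups, unique_groups[:n_train_groups])
    all_idx = np.arange(n_samples)
    return all_idx[train_mask], all_idx[~train_mask]


train_idx, val_idx = split_indices(100)
print(len(train_idx), len(val_idx))  # 90 10
```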

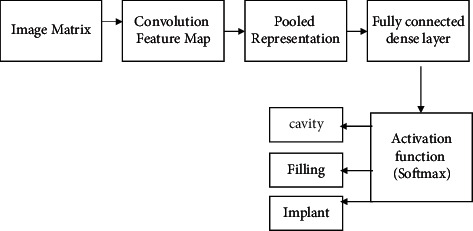

3.2.3. Construction of the Multioutput Model

In this work, transfer learning has been implemented using a convolutional neural network. The network is constructed from different numbers of max-pooling layers, dropout layers, and activation functions. Figure 3 shows the architecture of the proposed model, in which the image matrix is passed through convolutional layers to produce feature maps. The pooled feature representation is fed into a fully connected dense layer, and finally an activation function is applied to perform the multiclass classification.

Figure 3.

Architecture of the proposed model.
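The model description in Section 3.2.3 can be sketched in TensorFlow/Keras. This is a hedged illustration, not the authors' code. The layer counts, filter sizes, and dropout rate are arbitrary. It also assumes that "multioutput" means one softmax head for the three classes plus one head that regresses the four normalized bounding-box coordinates, which would be consistent with the box scaling in the preprocessing step. The head names `class_label` and `bbox` are hypothetical.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers


def build_multioutput_cnn(input_shape=(224, 224, 3), num_classes=3):
    inputs = tf.keras.Input(shape=input_shape)
    x = inputs
    # Convolution + max-pooling blocks produce progressively smaller feature maps.
    for filters in (32, 64, 128):
        x = layers.Conv2D(filters, 3, padding="same", activation="relu")(x)
        x = layers.MaxPooling2D(pool_size=2)(x)
    # Pool the feature maps into a vector, then apply a dense layer with dropout.
    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Dense(128, activation="relu")(x)
    x = layers.Dropout(0.5)(x)
    # Head 1: class probabilities for cavity / filling / implant.
    class_out = layers.Dense(num_classes, activation="softmax", name="class_label")(x)
    # Head 2: four box coordinates in [0, 1], matching the normalized boxes.
    bbox_out = layers.Dense(4, activation="sigmoid", name="bbox")(x)
    model = tf.keras.Model(inputs, [class_out, bbox_out])
    model.compile(
        optimizer="adam",
        loss={"class_label": "categorical_crossentropy", "bbox": "mse"},
        metrics={"class_label": ["accuracy"]},
    )
    return model


model = build_multioutput_cnn(input_shape=(128, 128, 3))
probs, boxes = model.predict(np.zeros((2, 128, 128, 3), dtype="float32"), verbose=0)
print(probs.shape, boxes.shape)  # (2, 3) (2, 4)
```

Training would then pass one target per head, e.g. `model.fit(images, {"class_label": onehot_labels, "bbox": normalized_boxes}, validation_data=...)`, where the variable names are placeholders for the preprocessed arrays.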

3.2.4. Proposed NASNet Model

NASNet (Neural Architecture Search Network) is a machine learning model whose building blocks were discovered by an automated architecture search rather than designed by hand, which distinguishes it from established models such as GoogLeNet. It was therefore expected to perform well in the context of this work. Designing the most efficient architecture by hand requires extensive knowledge of the subject matter.

Constructing neural networks requires a lot of trial and error, which can be very time-consuming and costly. Even if human experts have built a successful model architecture, this does not guarantee that the whole architecture space has been explored and the optimal solution found. Neural architecture search (NAS) makes automated network architecture design possible: it is a search algorithm that seeks the best architecture for a specific task. Many proposals for faster, more accurate, and less expensive NAS approaches have appeared in response to the ground-breaking work of Zoph & Le and Baker et al. in 2017 [24]. For example, Google's AutoML (a commercial service) and Auto-Keras (an open-source library) make NAS accessible to the broader machine learning community. NAS involves three key components: the search space, the search strategy, and the performance estimation strategy.
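The three NAS components can be illustrated with a toy random search. This is a deliberately simplified sketch. NASNet's own search used a reinforcement-learning controller and trained child networks to estimate their performance. Here, by contrast, the search strategy is plain random sampling and the performance estimate is a cheap stand-in formula. The search space, the scoring function, and all names are hypothetical.

```python
import random

# Search space (hypothetical): each architecture is one choice per hyperparameter.
SEARCH_SPACE = {
    "num_cells": [2, 4, 6],
    "filters": [16, 32, 64],
    "kernel_size": [3, 5],
}


def sample_architecture(rng):
    """Search strategy: draw one candidate uniformly at random from the search space."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def estimate_performance(arch):
    """Performance estimation (stand-in proxy only).

    A real NAS run would train the candidate, or a cheap approximation of it, and
    return validation accuracy. This toy score prefers num_cells * filters close
    to 128 and smaller kernels.
    """
    return -abs(arch["num_cells"] * arch["filters"] - 128) - arch["kernel_size"]


def random_search(num_trials, seed=0):
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = estimate_performance(arch)
        if score > best_score:  # keep the best candidate seen so far
            best_arch, best_score = arch, score
    return best_arch, best_score


print(random_search(num_trials=50))
```

Swapping in a smarter search strategy (reinforcement learning, evolution) or a more faithful estimator (short training runs) changes only the corresponding function, which is why NAS is usually described in terms of these three components.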

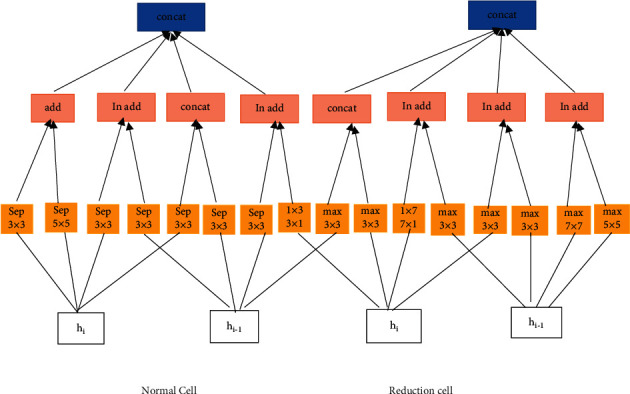

Figure 4 represents the NASNet model, which is built from two types of cells: a normal cell and a reduction cell. A normal cell returns a feature map of the same spatial size as its input, whereas a reduction cell halves the height and width. Within the network, features pass through operations such as max pooling, dilated convolutions, and depthwise-separable convolutions, together with dropout layers and activation functions. NASNet achieved state-of-the-art performance while using fewer floating-point operations and parameters than comparable architectures.

Figure 4.

NASNet model.
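In Keras, a NASNet backbone is available as `tf.keras.applications.NASNetMobile` (a larger `NASNetLarge` also exists). The sketch below attaches two hypothetical heads to it, one for the class and one for the bounding box. The paper does not say which NASNet variant, input size, or head layout was used, so these are assumptions. For transfer learning one would normally pass `weights="imagenet"`, which downloads pretrained ImageNet weights. The example call uses `weights=None` so that it runs offline.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers


def build_nasnet_multioutput(input_shape=(224, 224, 3), num_classes=3, weights="imagenet"):
    inputs = tf.keras.Input(shape=input_shape)
    # Scale pixels from [0, 255] to [-1, 1], the same scaling as keras' nasnet.preprocess_input.
    x = layers.Rescaling(scale=1.0 / 127.5, offset=-1.0)(inputs)
    backbone = tf.keras.applications.NASNetMobile(
        input_shape=input_shape, include_top=False, weights=weights, pooling="avg"
    )
    x = backbone(x)  # stacked normal and reduction cells, globally average-pooled
    x = layers.Dropout(0.5)(x)
    class_out = layers.Dense(num_classes, activation="softmax", name="class_label")(x)
    bbox_out = layers.Dense(4, activation="sigmoid", name="bbox")(x)
    model = tf.keras.Model(inputs, [class_out, bbox_out])
    model.compile(
        optimizer="adam",
        loss={"class_label": "categorical_crossentropy", "bbox": "mse"},
        metrics={"class_label": ["accuracy"]},
    )
    return model


model = build_nasnet_multioutput(weights=None)
probs, boxes = model.predict(np.zeros((1, 224, 224, 3), dtype="float32"), verbose=0)
print(probs.shape, boxes.shape)  # (1, 3) (1, 4)
```

A common variation is to set `backbone.trainable = False` inside the function when using pretrained weights, train only the heads first, and then unfreeze the backbone for fine-tuning with a lower learning rate.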

4. Results and Discussion

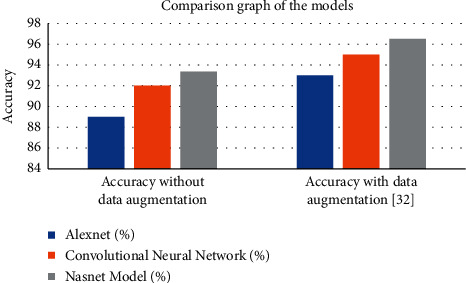

The work is implemented in Python. Accuracy and loss are used as the performance parameters for validating the models. The proposed model achieved an accuracy of 96.51% with data augmentation and 93.36% without augmentation. Table 1 compares the proposed NASNet model with AlexNet and a convolutional neural network. Without augmentation, the NASNet model outperformed AlexNet by 4.36 percentage points and the CNN by 1.36 percentage points. With data augmentation, the proposed NASNet model reached 96.51%, outperforming AlexNet (93%) and the CNN (95%). Figure 5 displays the comparison graph of the proposed model with the existing models.

Table 1.

Comparison of the proposed model with the existing models.

| Metric | AlexNet (%) | Convolutional neural network (%) | NASNet model (%) |
|---|---|---|---|
| Accuracy without data augmentation | 89 | 92 | 93.36 |
| Accuracy with data augmentation [33] | 93 | 95 | 96.51 |

Figure 5.

Comparison graph of the implemented model.

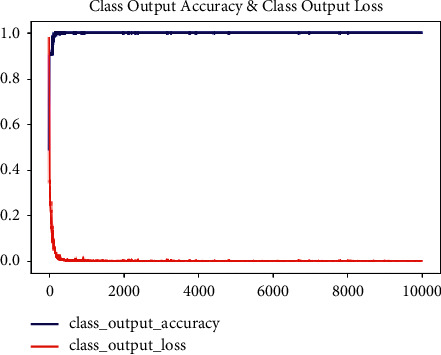

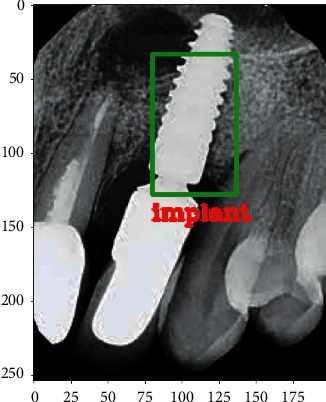

Figure 6 shows the output accuracy and output loss curves. The accuracy and loss of the proposed model were computed over 10,000 training epochs, and the final loss was 0.0407. Figure 7 shows an image predicted as the implant class.

Figure 6.

Graph of output accuracy and output loss.

Figure 7.

Tooth image predicted as the implant class.

5. Conclusion

This paper proposes a method for multiclass classification of dental X-ray images. The work implements a convolutional neural network with a transfer learning approach, namely NASNet, to classify images into three classes: cavity, filling, and implant. These assessments suggest that the procedure can serve as an initial stage in processing and analysing dental images in clinical practice. The limited dataset of 116 patients should also be taken into account when interpreting these results. The images are processed by neural networks with multiple max-pooling layers, dropout layers, and activation functions. Although this work addresses dental images, the strategy given here can be applied to similar challenges in other domains, such as separating cell instances. In future work, multiclass classification can be performed on larger datasets with more classes, and an advanced hybrid deep learning model could be implemented to improve on the current performance.

Acknowledgments

This project was funded by the Deanship of Scientific Research (DSR), King Abdulaziz University, Jeddah, Saudi Arabia under Grant no. (D-672-611-1443). The authors, therefore, gratefully acknowledge DSR technical and financial support.

Data Availability

Data are publicly available in Kaggle (https://www.kaggle.com/daverattan/dental-xrary-tfrecords).

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

References

- 1. Tian S., Dai N., Zhang B., Yuan F., Yu Q., Cheng X. Automatic classification and segmentation of teeth on 3D dental model using hierarchical deep learning networks. IEEE Access. 2019;7:84817–84828. doi: 10.1109/ACCESS.2019.2924262.

- 2. Megalan Leo L., Kalapalatha Reddy T. Learning compact and discriminative hybrid neural network for dental caries classification. Microprocessors and Microsystems. 2021;82:103836. doi: 10.1016/j.micpro.2021.103836.

- 3. Koundal D., Gupta S., Singh S. Automated delineation of thyroid nodules in ultrasound images using spatial neutrosophic clustering and level set. Applied Soft Computing. 2016;40:86–97. doi: 10.1016/j.asoc.2015.11.035.

- 4. Aggarwal S., Gupta S., Alhudhaif A., Koundal D., Gupta R., Polat K. Automated COVID‐19 detection in chest X‐ray images using fine‐tuned deep learning architectures. Expert Systems. 2021;39(3). doi: 10.1111/exsy.12749.

- 5. Shah N. Recent advances in imaging technologies in dentistry. World Journal of Radiology. 2014;6(10):794–807. doi: 10.4329/wjr.v6.i10.794.

- 6. Masic F. Information systems in dentistry. Acta Informatica Medica. 2012;20(1):47–55. doi: 10.5455/aim.2012.20.47-55.

- 7. Nair R., Alhudhaif A., Koundal D., et al. Deep learning-based COVID-19 detection system using pulmonary CT scans. Turkish Journal of Electrical Engineering & Computer Sciences. 2021;29(SI-1):2716–2727. doi: 10.3906/elk-2105-243.

- 8. Niazi M. K. K., Parwani A. V., Gurcan M. N. Digital pathology and artificial intelligence. The Lancet Oncology. 2019;20(5):e253–e261. doi: 10.1016/S1470-2045(19)30154-8.

- 9. Vishwakarma S. K., Verma S. S., Nair R., Roy V., Agrawal A. Detection of sleep apnea through heart rate signal using convolutional neural network. International Journal of Pharmaceutical Research. 2021;12(4). doi: 10.31838/ijpr/2020.12.04.654.

- 10. Yılmaz H., Keleş S. Recent methods for diagnosis of dental caries in dentistry. Meandros Medical and Dental Journal. 2018;19(1):1–8. doi: 10.4274/meandros.21931.

- 11. Bhalla K., Koundal D., Bhatia S., Khalid Imam Rahmani M., Tahir M. Fusion of infrared and visible images using fuzzy based siamese convolutional network. Computers, Materials & Continua. 2022;70(3):5503–5518. doi: 10.32604/cmc.2022.021125.

- 12. Singh P., Kaur R. An integrated fog and Artificial Intelligence smart health framework to predict and prevent COVID-19. Global Transitions. 2020;2:283–292. doi: 10.1016/j.glt.2020.11.002.

- 13. Singh P., Kaur R. Implementation of the QoS framework using fog computing to predict COVID-19 disease at early stage. World Journal of Engineering. 2021;19(1):80–89. doi: 10.1108/wje-12-2020-0636.

- 14. Jader G., Fontineli J., Ruiz M., Abdalla K., Pithon M., Oliveira L. Deep instance segmentation of teeth in panoramic X-ray images. Proceedings of the 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI); November 2019; Parana, Brazil. IEEE.

- 15. Muramatsu C. Tooth detection and classification on panoramic radiographs for automatic dental chart filing: improved classification by multi-sized input data. Oral Radiology. 2021;37(1):13–19. doi: 10.1007/s11282-019-00418-w.

- 16. Miki Y., Muramatsu C., Hayashi T., et al. Classification of teeth in cone-beam CT using deep convolutional neural network. Computers in Biology and Medicine. 2017;80:23–29. doi: 10.1016/j.compbiomed.2016.11.003.

- 17. Chen H., Zhang K., Lyu P., et al. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Scientific Reports. 2019;9(1):3840. doi: 10.1038/s41598-019-40414-y.

- 18. Zhang K., Wu J., Chen H., Lyu P. An effective teeth recognition method using label tree with cascade network structure. Computerized Medical Imaging and Graphics. 2018;68:61–70. doi: 10.1016/j.compmedimag.2018.07.001.

- 19. Velemínská J., Pilný A., Čepek M., Kot’ová M., Kubelková R. Dental age estimation and different predictive ability of various tooth types in the Czech population: data mining methods. Anthropologischer Anzeiger. 2013;70(3):331–345. doi: 10.1127/0003-5548/2013/0311.

- 20. Tuzoff D. V., Tuzova L. N., Bornstein M. M., et al. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofacial Radiology. 2019;48(4):20180051. doi: 10.1259/dmfr.20180051.

- 21. Raith S. Artificial Neural Networks as a powerful numerical tool to classify specific features of a tooth based on 3D scan data. Computers in Biology and Medicine. 2017;80:65–76. doi: 10.1016/j.compbiomed.2016.11.013.

- 22. Oktay A. B. Tooth detection with convolutional neural networks. Proceedings of the 2017 Medical Technologies National Conference (TIPTEKNO 2017); October 2017; Trabzon, Turkey.

- 23. Fukuda M. Evaluation of an artificial intelligence system for detecting vertical root fracture on panoramic radiography. Oral Radiology. 2020;36(4):337–343. doi: 10.1007/s11282-019-00409-x.

- 24. Badrinarayanan V., Kendall A., Cipolla R. SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2017;39(12):2481–2495. doi: 10.1109/TPAMI.2016.2644615.

- 25. Çiçek Ö., Abdulkadir A., Lienkamp S. S., Brox T., Ronneberger O. 3D U-Net: learning dense volumetric segmentation from sparse annotation. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). New York, NY, USA: Springer; 2016.

- 26. Milletari F., Navab N., Ahmadi S. A. V-Net: fully convolutional neural networks for volumetric medical image segmentation. Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV); June 2016; Stanford, CA, USA. IEEE.

- 27. El Jurdi R., Petitjean C., Honeine P., Abdallah F. BB-UNet: U-Net with bounding box prior. IEEE Journal of Selected Topics in Signal Processing. 2020;14(6):1189–1198. doi: 10.1109/JSTSP.2020.3001502.

- 28. Fan D. P. PraNet: parallel reverse attention network for polyp segmentation. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). New York, NY, USA: Springer; 2020.

- 29. Al-antari M. A., Al-masni M. A., Choi M. T., Han S. M., Kim T. S. A fully integrated computer-aided diagnosis system for digital X-ray mammograms via deep learning detection, segmentation, and classification. International Journal of Medical Informatics. 2018;117:44–54. doi: 10.1016/j.ijmedinf.2018.06.003.

- 30. Blain M. Determination of disease severity in COVID-19 patients using deep learning in chest X-ray images. Diagnostic and Interventional Radiology. 2021;27(1):20–27. doi: 10.5152/dir.2020.20205.

- 31. Moeskops P. Deep learning for multi-task medical image segmentation in multiple modalities. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). New York, NY, USA: Springer; 2017.

- 32. Trullo R., Petitjean C., Ruan S., Dubray B., Nie D., Shen D. Segmentation of organs at risk in thoracic CT images using a SharpMask architecture and conditional random fields. Proceedings of the IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017); April 2017; Melbourne, Australia. IEEE.

- 33. Singh P., Sehgal P. Numbering and classification of panoramic dental images using 6-layer convolutional neural network. Pattern Recognition and Image Analysis. 2020;30(1):125–133. doi: 10.1134/S1054661820010149.

- 34. Zoph B., Le Q. V. Neural architecture search with reinforcement learning. 2017. https://arxiv.org/abs/1611.01578.
