Abstract

Machine learning approaches are just emerging in eating disorders research. Promising early results suggest that such approaches may be a particularly promising and fruitful future direction. However, there are several challenges related to the nature of eating disorders in building robust, reliable and clinically meaningful prediction models. This article aims to provide a brief introduction to machine learning and to discuss several such challenges, including issues of sample size, measurement, imbalanced data and bias; I also provide concrete steps and recommendations for each of these issues. Finally, I outline key outstanding questions and directions for future research in building, testing and implementing machine learning models to advance our prediction, prevention, and treatment of eating disorders.

Keywords: computational methods, eating disorders, machine learning, prediction

1 |. INTRODUCTION

Research over the past century has made significant advances in understanding eating disorders (EDs), including identifying genetic (Duncan et al., 2017) and environmental (Culbert et al., 2015) risk factors, mapping their longitudinal course and outcome (Eddy et al., 2017), and developing evidence-based treatments (Wilson & Shafran, 2005). While these explanatory approaches have proliferated, less attention has focused on predicting EDs. Although many studies use longitudinal data to identify factors associated with EDs at later timepoints, traditional statistical methods are ill-suited to accurately forecast future outcomes. Reflecting this misalignment, conventional approaches have yielded risk factors that often fail to replicate (Vall & Wade, 2015) and response rates for even our best treatments remain low (Kass et al., 2013). Advanced computational methods such as machine learning (ML) are better equipped to advance ED prediction and treatment. These approaches are just emerging in ED research, with promising results (Cyr et al., 2018; Haynos et al., 2020; Sadeh-Sharvit et al., 2020).

As such, this article aims to highlight some key considerations for responsible ML application in EDs. Of note, this paper does not provide a comprehensive overview of all potential challenges, nor is it a primer or tutorial on ML methods. Rather, I hope to provide an accessible introduction to ML for ED researchers and highlight important points to consider before conducting such studies. Although many challenges reviewed are relevant to psychiatry broadly, all examples will be drawn from ED research and suggestions will be tailored to ED investigators, given recent increasing interest in ML methods in this field.

2 |. WHAT IS MACHINE LEARNING, AND HOW IS IT DIFFERENT FROM TRADITIONAL STATISTICAL METHODS?

Several excellent tutorials have reviewed ML (Dwyer et al., 2018; Yarkoni & Westfall, 2017) and interested readers can find even greater detail in textbooks (James et al., 2013; Kuhn & Johnson, 2013). Rather than reiterate these details, this section will briefly highlight key differences between traditional statistics and ML to familiarize readers with overarching concepts/terminology. Of note, ML techniques can be broadly grouped into supervised and unsupervised methods. While supervised methods (e.g., elastic net, support vector machines) are applied to labelled data to learn functions that best map input to output variables (e.g., predicting ED onset), unsupervised methods (e.g., clustering, dimension reduction) are applied to unlabelled data to identify underlying data structures (e.g., identifying subtypes of ED patients). This paper focuses on supervised methods which may be most applicable to questions in the field (e.g., predicting ED treatment outcomes).

In brief, there are two critical advantages of ML compared to traditional statistical methods and algorithms. First, regarding methods, traditional statistical frameworks aim to identify variables significantly (e.g., p < 0.05) associated with an outcome by fitting models on entire data sets. However, as any data set is influenced by underlying data-generating mechanisms and sampling error, such models will be overfit and likely generalize poorly. Supervised ML methods focus instead on optimizing predictive accuracy for new data, using internal model validation to evaluate performance of models (built on subsets of a full data set) in held-out validation data. A common approach is k-fold cross-validation, which involves (1) splitting a data set into k subsets, (2) training/tuning models on k−1 subsets, (3) testing on the k held-out subset and (4) repeating k times so each subset serves as the validation set.

Second, ML algorithms are more complex and flexible than traditional statistical models. Although hundreds of algorithms exist (see James et al., 2013), common algorithms in psychiatry can be broadly classified in three groups: regularization (e.g., lasso, elastic net), tree-based methods (e.g., random forests, decision trees) and support vector machines. These algorithms’ flexibility is reflected in tuning parameters, which control aspects of the algorithm to obtain accurate and generalizable predictions. For example, lasso can be viewed as an extension of linear regression, where coefficients (βs) are chosen to minimize sum-of-squared errors (SSE) between observed and predicted responses:

where yi = observed outcome and = predicted response. This is prone to overfitting by inflating coefficient estimates and cannot handle high collinearity among predictors (Kuhn & Johnson, 2013), quite common in clinical data sets. Lasso addresses these limitations with a penalty term:

This forces the model to minimize SSE and the absolute value of all coefficients. Thus, lasso shrinks coefficients, protects against overfitting and increases likelihood of good prediction in new data. During internal cross-validation, researchers tune λ (controlling shrinkage/regularization) to optimize out-of-sample prediction. Unlike traditional statistical methods, most ML models have one or more tuning parameters.

3 |. CHALLENGES AND RECOMMENDATIONS FOR MACHINE LEARNING IN EATING DISORDERS RESEARCH

The promise of ML in psychiatry has generated immense enthusiasm, with a proliferation of papers applying ML to predict depression (Chekroud et al., 2016; Kessler et al., 2016), schizophrenia (Koutsouleris et al., 2016), suicide and self-injury (Fox et al., 2019; Wang et al., 2021), anxiety (Boeke et al., 2020; Bokma et al., 2020) and obsessive–compulsive disorder (Lenhard et al., 2018); ML approaches are also emerging in ED research. For instance, ML has shown promise in predicting longitudinal illness course from self-report data (Haynos et al., 2020), detecting ED symptoms from Internet and/or social media data (Hwang et al., 2020; Sadeh-Sharvit et al., 2020), identifying deteriorating patients in need of medical attention (Ioannidis et al., 2020), and identifying biomarkers from neuroimaging data (Cerasa et al., 2015; Cyr et al., 2018). As these methods become more widely applied in ED research, this section describes challenges and considerations for responsible ML application, including sample size, measurement, imbalanced data and bias. Although these are general issues in ML for psychiatry, this section focuses specifically on how these issues may arise in ED research, providing steps and recommendations for ED researchers to address each challenge.

3.1 |. Sample size

3.1.1 |. Challenge

Though small sample sizes are generally a concern in psychiatry (Reardon et al., 2019), they are particularly problematic in ML and can lead to overfitting and vastly inflated estimates of model accuracy. Current recommendations suggest avoiding ML with fewer than several hundred observations (Poldrack et al., 2020). However, it is notoriously difficult to recruit large ED samples, particularly when constrained to specific clinical presentations (e.g., anorexia nervosa [AN] or bulimia nervosa [BN]).

3.1.2 |. Recommendation

There are several avenues for increasing sample size. First, online methods are time- and cost-effective for reaching large, representative samples (Smith et al., 2021). Second, collaborative multi-site studies could yield large samples; for instance, Haynos et al. (2020) leveraged data from the McKnight Longitudinal Study of Eating Disorders, a multisite study with 400+ participants. Third, ML models for other clinical concerns are often built using electronic health records with millions of patients (Barak-Corren et al., 2016); such data sources may prove useful for predicting EDs. Fourth, recent large-scale projects have embraced an open science model of publicly releasing data that can be harnessed for ML. For example, the Adolescent Brain Cognitive Development study is following >11,000 children for 10 years and has included ED assessments at each visit, with studies already applying ML (Adeli et al., 2020) and investigating ED (Rozzell et al., 2019) using this data.

When faced with smaller samples, researchers can make methodological choices to protect against over-fitting. For instance, prediction accuracy may be evaluated using average cross-validated estimates rather than entirely separate, held-out data (Kuhn & Johnson, 2013). Nested cross-validation methods, which allow for inner and outer cross-validation loops, may also protect against overfitting, particularly in small data sets (Cearns et al., 2019). Researchers should also avoid complex “black box” algorithms (e.g., deep neural networks) in favour of simpler and more interpretable options (e.g., elastic net, decision trees). Model robustness checks (e.g., comparing fivefold vs. 10-fold cross-validation accuracy) can also help ensure results are not spurious.

3.2 |. Measurement

3.2.1 |. Challenge

Questionable measurement practices limit our ability to build accurate ML models. As Jacobucci and Grimm (2020) put it: “throwing the same set of poorly measured variables that have been analysed before into ML algorithms is highly unlikely to produce new insights or findings.” For example, take restrictive eating—this construct is often poorly defined, as it is unclear which behaviours it describes (e.g., fasting vs. reducing portion sizes vs. reducing carbohydrates). Because these behaviours may be predicted by different variables, a model considering them as equivalent may find it difficult to determine consistent relationships between predictor and outcome variables. Furthermore, many scales used to measure restrictive eating are misaligned with the theoretical construct (measuring cognitive restraint rather than actual restricting behaviours) (Haynos et al., 2015; Stice et al., 2004, 2007). If these scales emerged as important predictors of treatment response in ML models, interventions may inadvertently target the wrong construct.

3.2.2 |. Recommendation

In a seminal paper, Flake and Fried (2020) pose six questions to guide transparent measurement practices. Their first questions involve defining and justifying constructs of interest, which are highly relevant for ML studies in ED research. For instance, should researchers build models predicting any ED diagnosis, a specific diagnosis (e.g., AN vs. BN), severity of overall ED psychopathology or specific symptoms? Typical outcomes include diagnoses and/or ED scale sum scores, but these have critical issues. For instance, EDs are characterized by high diagnostic instability and crossover (Eddy et al., 2008) with boundary conditions between diagnoses often governed solely by BMI; treating these as distinct categories may not be accurately “carving nature at its joints”. Moreover, sum scores of global ED psychopathology make the assumption that all symptoms are equally good and interchangeable indicators of EDs (Fried & Nesse, 2015). As a solution, researchers might consider defining and measuring outcomes at the symptom (rather than diagnostic or sum-score) level to provide more precise predictions about transdiagnostic ED features.

After identifying constructs of interest, it is critical to match the measurement to the construct. How should a construct such as restrictive eating be measured? There are many options ranging from self-report scales to behavioural tasks (e.g., test meal intake) to wearable biosensors, and many choices within each of those categories. While a large body of literature on construct validation (e.g., Clark & Watson, 2019; Strauss & Smith, 2009) cannot be adequately summarized here, researchers should clearly justify and transparently report details of measures used. Despite these measurement details not receiving as much attention in ML research as in more traditional clinical or psychometric studies, they are no less critical; even advanced ML algorithms and big data cannot overcome poor measurement. For example, above, I highlighted the potential of harnessing data from large-scale studies or multi-site collaborations. However, the quality of ED data in such studies relies on researchers’ measurement choices. For instance, many popular diagnostic interviews (e.g., MINI, SCID-5) (First et al., 2015; Sheehan et al., 1998) screen for AN based solely on BMI and skip questions about restricting, fear of weight gain and body dissatisfaction if individuals are not underweight. This fails to detect many individuals with ED psychopathology, and may cast doubt on prediction models built using this data. Increased collaboration between ED researchers and investigators from other subfields in developing general psychopathology assessments and designing large-scale studies could improve the validity of ED data available in large data sets for ML.

3.3 |. Imbalanced data

3.3.1 |. Challenge

In ML, “imbalanced data” refers to a classification problem in which outcomes are not equally likely to occur, leading to overly optimistic performance estimates. For instance, an algorithm predicting suicide risk in the general population could achieve 99% accuracy by simply predicting that no one attempts suicide, but this would not be very useful. ED researchers are likely to encounter many cases of imbalanced data (e.g., predicting ED onset in the general population).

3.3.2 |. Recommendation

Several sampling methods can address class imbalance during model training, including up-sampling (simulating or imputing minority class cases), down-sampling (reducing majority class cases) and hybrid methods (Kuhn & Johnson, 2013). It is imperative that subsampling occurs within the training set only. Test data sets should never be artificially balanced, but reflect “true” class imbalance anticipated in future data sets. Such subsampling methods were recently applied by Haynos et al. (2020) to predict outcomes including persistence of ED diagnosis and underweight BMI using data that showed significant class imbalance (e.g., 93.4% participants showing persistence of EDs at 1-year follow-up), allowing for more accurate estimates of model performance.

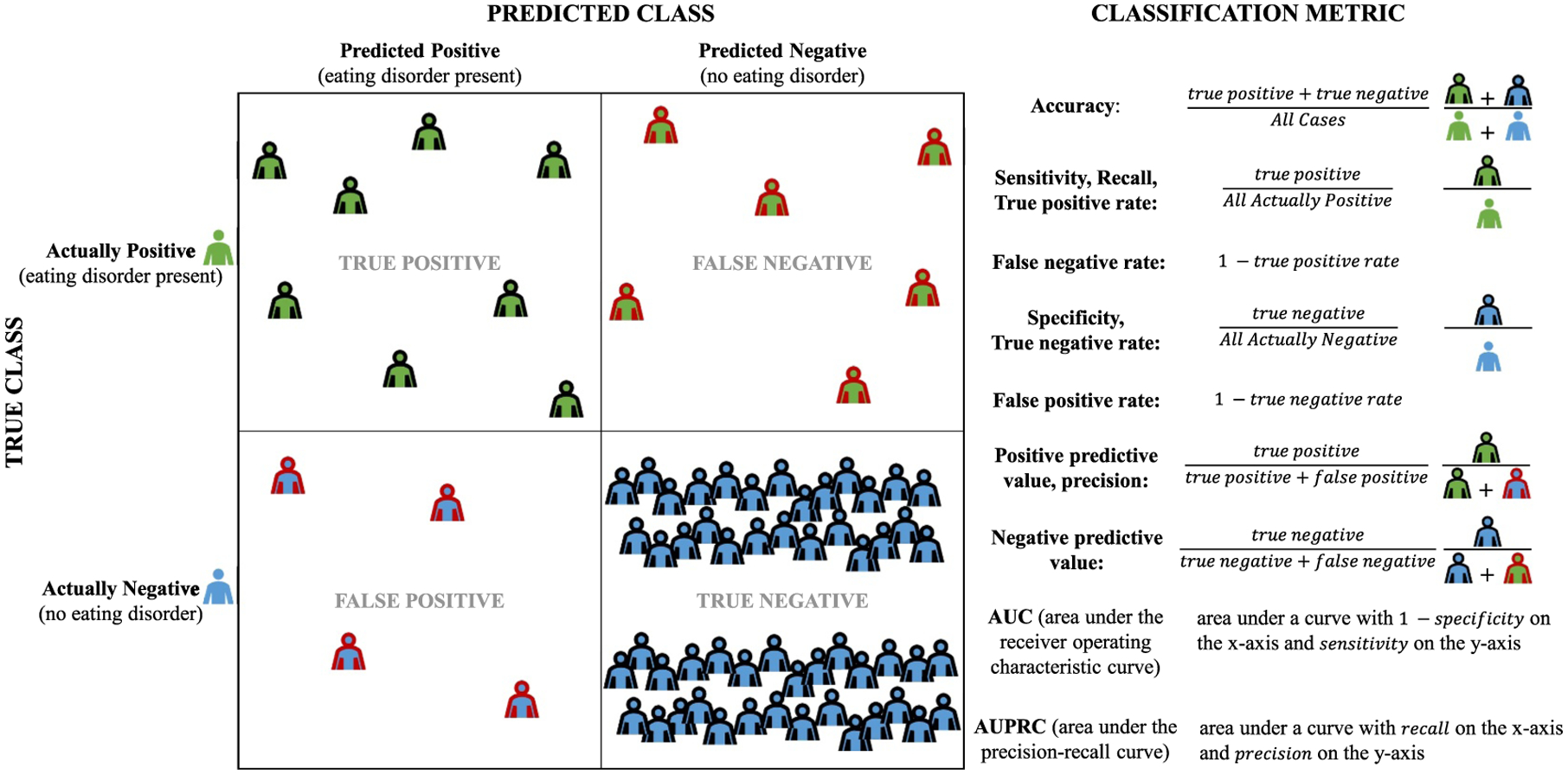

Model performance metrics are also important. As noted above, accuracy (proportion of all correctly classified cases) can be artificially optimistic. Fortunately, numerous other metrics are available (Figure 1). In psychiatry, area under the receiver operating characteristic curve (AUC) has been a standard metric. However, while many models have achieved high AUC (e.g., >0.80 in suicide prediction), their positive predictive values (PPV) remain untenably low. Indeed, a review of 64 suicide prediction studies found extremely low (<0.01) PPVs for most models, indicating 99 out of every 100 individuals predicted to attempt suicide will not (Belsher et al., 2019). This illustrates the importance of thoughtfully selecting metrics that capture multiple important characteristics. The internal model validation process requires researchers to select a metric to optimize; many models default to AUC, but other options may be more suitable for imbalanced data (e.g., area under the precision-recall curve).

FIGURE 1.

Metrics for measuring performance of classifiers

3.4 |. Bias

3.4.1 |. Challenge

Though often heralded as ‘objective’, algorithms reflect the nature of the data used to train them, and often detect, learn, and perpetuate societal injustices (Birhane & Cummins, 2019). Recidivism algorithms are biased against Black defendants (Angwin et al., 2016), predictive policing software disproportionately target non-White, low-income communities (Lum & Isaac, 2016), and STEM advertisements are less likely to be displayed to women (Lambrecht & Tucker, 2019). There are substantial disparities in the ED field (Sonneville & Lipson, 2018), with White women vastly overrepresented across clinical and research settings. Training ML models using such data sets risks perpetuating algorithmic bias with underdetection of EDs in marginalized communities.

3.4.2 |. Recommendation

As ML models are built and tested, ED researchers must carefully collect and select data sets diverse in race, ethnicity, gender, socioeconomic status and other key demographics. Researchers should also monitor and test for algorithmic bias at all stages (Gianfrancesco et al., 2018), centre individuals from marginalized communities in the model-building and decision-making process, and consider whether any prediction model would combat or entrench existing injustice (Birhane & Cummins, 2019). Beyond technical solutions, researchers must also recognize the entire construct of EDs—including the criteria used to diagnose them, the institutions that provide treatment, and the clinical interventions offered—result from systems built on foundations of discrimination. For instance, ED screening and assessment tools were primarily developed for and validated among cisgender heterosexual White women and may not accurately capture ED symptoms in racial/ethnic minorities, sexual minorities, transgender, non-binary or gender nonconforming individuals, who have been historically underrepresented in ED research (Burke et al., 2020; Coelho et al., 2019). Addressing these systemic issues will require (but is not limited to) carefully designing and validating culturally sensitive assessments for individuals from historically marginalized backgrounds, intentionally recruiting diverse research samples and reducing barriers to treatment access. As researchers of colour are more likely than White researchers to publish work highlighting race and recruit more participants of colour into studies (Roberts et al., 2020), increasing workforce diversity is also critical; current estimates demonstrate a striking lack of diversity in the ED field, with only 3% being Black/African-American, 3% Asian/Asian American and 14% Latino/Hispanic (Jennings Mathis et al., 2020).

Modelling ED data inherently involves working within a societal and political landscape. ML and other computational methods are not exempt by their data-driven nature and technical sophistication, but also emerge from history ripe with racism, sexism and white supremacy (Birhane & Guest, 2020). Thus, researchers must actively work to change the overwhelming whiteness and masculinity of computational sciences, which excludes minoritized groups. Increasing representation of researchers and clinicians from marginalized backgrounds is critical for advancing assessment, prediction and treatment of EDs in diverse populations.

4 |. CONCLUSION AND FUTURE DIRECTIONS

ML is rapidly emerging in the ED field, with promising early results. Where should we go from here? There are several important future directions. First, external model validation is critical before clinical implementation. This is perhaps best illustrated via COVID-19 prediction models: when submitting 22 published models to external validation, none beat simple univariate predictors (Gupta et al., 2020). Despite challenges inherent in collecting large clinical data sets, external model validation is a crucial step.

Although this article focuses on data-driven computational approaches, I also believe theory-driven methods are needed for making meaningful progress. One exciting method involves formalizing theories as mathematical models. Although we have numerous influential ED theories (e.g., cognitive-behavioural theory) (Fairburn, 2008), all theories to date have been instantiated verbally, rendering them underspecified due to the inherent imprecision of language. Other areas of science (e.g., ecology, physics) instantiate theories using formal mathematical notation (e.g., differential equations) and computer code, providing greater precision and specificity. Formal theories also allow for simulation of theory-implied data, which can be used to revise theories based on their ability to capture and recapitulate empirical data patterns. These methods have recently been applied to formalize a panic disorder theory (Robinaugh et al., 2019), with emerging efforts to also formalize theories of suicide and EDs, representing an exciting new direction in psychopathology research.

Both theory- and data-driven computational methods are critical for advancing ED research. As ML methods are increasingly applied, I encourage researchers to collaborate with interdisciplinary experts, including computer scientists, statisticians, clinical practitioners, ethicists and those with lived experience. Diverse perspectives are crucial for advancing science and practice, and voices of those who have been historically excluded from the ED field should be included and valued throughout. Collaboration and careful thinking at each step in the data collection, model building, testing and implementation process can allow for meaningful advances in the prediction, prevention and treatment of EDs to reduce their global burden.

Highlights.

Machine learning holds significant promise to advance eating disorders research

Some key considerations for responsible machine learning application in eating disorders research include issues of sample size, measurement, imbalanced data and bias

Future research should prioritize external validation of machine learning models

ACKNOWLEDGEMENTS

I thank Kathryn Fox, Ruben Van Genugten, Rowan Hunt, Patrick Mair and Matthew Nock for their helpful discussion and feedback on earlier drafts of this manuscript. Shirley B. Wang, is supported by the National Science Foundation Graduate Research Fellowship under Grant No. DGE-1745303. The content is solely the responsibility of the author and does not necessarily represent the official views of the National Science Foundation.

Abbreviations:

- AN

anorexia nervosa

- AUC

area under the receiver operating characteristic curve

- BN

bulimia nervosa

- ED

eating disorder

- ML

machine learning

- PPV

positive predictive value

- SSE

sum-of-squared errors

Footnotes

CONFLICTS OF INTEREST

I report no conflicts of interest.

DATA AVAILABILITY STATEMENT

Data sharing not applicable to this article as no data sets were generated or analysed for this paper.

REFERENCES

- Adeli E, Zhao Q, Zahr NM, Goldstone A, Pfefferbaum A, Sullivan EV, & Pohl KM (2020). Deep learning identifies morphological determinants of sex differences in the pre-adolescent brain. NeuroImage, 223, 117293. 10.1016/j.neuroimage.2020.117293 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Angwin J, Larson J, Mattu S, & Kirchner L (2016). Machine bias risk assessments in criminal sentencing. ProPublica. May, 23. [Google Scholar]

- Barak-Corren Y, Castro VM, Javitt S, Hoffnagle AG, Dai Y, Perlis RH, Nock MK, Smoller JW, & Reis BY (2016). Predicting suicidal behavior from longitudinal electronic health records. American Journal of Psychiatry, 174(2), 154–162. 10.1176/appi.ajp.2016.16010077 [DOI] [PubMed] [Google Scholar]

- Belsher BE, Smolenski DJ, Pruitt LD, Bush NE, Beech EH, Workman DE, Morgan RL, Evatt DP, Tucker J, & Skopp NA (2019). Prediction models for suicide attempts and deaths: A systematic review and simulation. JAMA Psychiatry, 76(6), 642. 10.1001/jamapsychiatry.2019.0174 [DOI] [PubMed] [Google Scholar]

- Birhane A, & Cummins F (2019). Algorithmic injustices: Towards a relational ethics. ArXiv:1912.07376 [Cs]. https://arxiv.org/abs/1912.07376 [Google Scholar]

- Birhane A, & Guest O (2020). Towards decolonising computational sciences. ArXiv:2009.14258 [Cs]. https://arxiv.org/abs/2009.14258 [Google Scholar]

- Boeke EA, Holmes AJ, & Phelps EA (2020). Toward robust anxiety biomarkers: A machine learning approach in a large-scale sample. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 5(8), 799–807. 10.1016/j.bpsc.2019.05.018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bokma WA, Zhutovsky P, Giltay EJ, Schoevers RA, Penninx BWJH, van Balkom ALJM, Batelaan NM, & van Wingen GA (2020). Predicting the naturalistic course in anxiety disorders using clinical and biological markers: A machine learning approach. Psychological Medicine, 1–11. 10.1017/S0033291720001658 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Burke NL, Schaefer LM, Hazzard VM, & Rodgers RF (2020). Where identities converge: The importance of intersectionality in eating disorders research. International Journal of Eating Disorders, 53(10), 1605–1609. 10.1002/eat.23371 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cearns M, Hahn T, & Baune BT (2019). Recommendations and future directions for supervised machine learning in psychiatry. Translational Psychiatry, 9(1), 271. 10.1038/s41398-019-0607-2 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cerasa A, Castiglioni I, Salvatore C, Funaro A, Martino I, Alfano S, Donzuso G, Perrotta P, Gioia MC, Gilardi MC, & Quattrone A (2015). Biomarkers of eating disorders using support vector machine analysis of structural neuroimaging data: Preliminary results. Behavioural Neurology, 10, e924814. 10.1155/2015/924814 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chekroud AM, Zotti RJ, Shehzad Z, Gueorguieva R, Johnson MK, Trivedi MH, Cannon TD, Krystal JH, & Corlett PR (2016). Cross-trial prediction of treatment outcome in depression: A machine learning approach. The Lancet Psychiatry, 3(3), 243–250. 10.1016/S2215-0366(15)00471-X [DOI] [PubMed] [Google Scholar]

- Clark LA, & Watson D (2019). Constructing validity: New developments in creating objective measuring instruments. Psychological Assessment, 31(12), 1412–1427. 10.1037/pas0000626 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Coelho JS, Suen J, Clark BA, Marshall SK, Geller J, & Lam P-Y (2019). Eating disorder diagnoses and symptom presentation in transgender youth: A scoping review. Current Psychiatry Reports, 21(11), 107. 10.1007/s11920-019-1097-x [DOI] [PubMed] [Google Scholar]

- Culbert KM, Racine SE, & Klump KL (2015). Research Review: What we have learned about the causes of eating disorders – a synthesis of sociocultural, psychological, and biological research. Journal of Child Psychology and Psychiatry, 56(11), 1141–1164. 10.1111/jcpp.12441 [DOI] [PubMed] [Google Scholar]

- Cyr M, Yang X, Horga G, & Marsh R (2018). Abnormal fronto-striatal activation as a marker of threshold and subthreshold Bulimia Nervosa. Human Brain Mapping, 39(4), 1796–1804. 10.1002/hbm.23955 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Duncan L, Yilmaz Z, Gaspar H, Walters R, Goldstein J, Anttila V, Bulik-Sullivan B, Ripke S, Thornton L, Hinney A, Daly M, Sullivan PF, Zeggini E, Breen G, Bulik CM, Duncan L, Yilmaz Z, Gaspar H, Walters R, … Bulik CM (2017). Significant locus and metabolic genetic correlations revealed in genome-wide association study of anorexia nervosa. American Journal of Psychiatry, 174(9), 850–858. 10.1176/appi.ajp.2017.16121402 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dwyer DB, Falkai P, & Koutsouleris N (2018). Machine learning approaches for clinical psychology and psychiatry. Annual Review of Clinical Psychology, 14(1), 91–118. 10.1146/annurev-clinpsy-032816-045037 [DOI] [PubMed] [Google Scholar]

- Eddy KT, Dorer DJ, Franko DL, Tahilani K, Thompson-Brenner H, & Herzog DB (2008). Diagnostic crossover in anorexia nervosa and bulimia nervosa: Implications for DSM-V. American Journal of Psychiatry, 165(2), 245–250. 10.1176/appi.ajp.2007.07060951 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eddy KT, Tabri N, Thomas JJ, Murray HB, Keshaviah A, Hastings E, Edkins K, Krishna M, Herzog DB, Keel PK, & Franko DL (2017). Recovery from anorexia nervosa and bulimia nervosa at 22-year follow-up. The Journal of Clinical Psychiatry, 78(02), 184–189. 10.4088/JCP.15m10393 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fairburn CG (2008). Cognitive behavior therapy and eating disorders. https://www.semanticscholar.org/paper/Cognitive-Behavior-Therapy-and-Eating-Disorders-Fairburn/c25dfa46ef03eced6c48e35e0acf50bcb7e1538f [DOI] [PMC free article] [PubMed]

- First M, Williams J, Karg R, Spitzer R, & others (2015). Structured clinical interview for DSM-5—Research version (SCID-5 for DSM-5, research version; SCID-5-RV) (pp. 1–94). American Psychiatric Association. [Google Scholar]

- Flake JK, & Fried EI (2020). Measurement schmeasurement: Questionable measurement practices and how to avoid them. Advances in Methods and Practices in Psychological Science, 3(4), 456–465. 10.1177/2515245920952393 [DOI] [Google Scholar]

- Fox KR, Huang X, Linthicum KP, Wang SB, Franklin JC, & Ribeiro JD (2019). Model complexity improves the prediction of nonsuicidal self-injury. Journal of Consulting and Clinical Psychology, 87, 684–692. 10.1037/ccp0000421 [DOI] [PubMed] [Google Scholar]

- Fried EI, & Nesse RM (2015). Depression sum-scores don’t add up: Why analyzing specific depression symptoms is essential. BMC Medicine, 13(1), 72. 10.1186/s12916-015-0325-4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gianfrancesco MA, Tamang S, Yazdany J, & Schmajuk G (2018). Potential biases in machine learning algorithms using electronic health record data. JAMA Internal Medicine, 178(11), 1544. 10.1001/jamainternmed.2018.3763 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gupta RK, Marks M, Samuels THA, Luintel A, Rampling T, Chowdhury H, Quartagno M, Nair A, Lipman M, Abubakar I, van Smeden M, Wong WK, Williams B, & Noursadeghi M (2020). Systematic evaluation and external validation of 22 prognostic models among hospitalised adults with COVID-19: An observational cohort study. European Respiratory Journal, 56, 2003498. 10.1183/13993003.03498-2020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haynos AF, Field AE, Wilfley DE, & Tanofsky-Kraff M (2015). A novel classification paradigm for understanding the positive and negative outcomes associated with dieting: PSYCHO-BEHAVIORAL DIETING PARADIGM. International Journal of Eating Disorders, 48(4), 362–366. 10.1002/eat.22355 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haynos AF, Wang SB, Lipson S, Peterson CB, Mitchell JE, Halmi KA, Agras WS, & Crow SJ (2020). Machine learning enhances prediction of illness course: A longitudinal study in eating disorders. Psychological Medicine, 51, 1–1402. 10.1017/S0033291720000227 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hwang Y, Kim HJ, Choi HJ, & Lee J (2020). Exploring abnormal behavior patterns of online users with emotional eating behavior: Topic modeling study. Journal of Medical Internet Research, 22(3), e15700. 10.2196/15700 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ioannidis K, Serfontein J, Deakin J, Bruneau M, Ciobanca A, Holt L, Snelson S, & Stochl J (2020). Early warning systems in inpatient anorexia nervosa: A validation of the MARSIPAN-based modified early warning system. European Eating Disorders Review, 28(5), 551–558. 10.1002/erv.2753 [DOI] [PubMed] [Google Scholar]

- Jacobucci R, & Grimm KJ (2020). Machine learning and psychological research: The unexplored effect of measurement. Perspectives on Psychological Science, 15(3), 809–816. [DOI] [PubMed] [Google Scholar]

- James G, Witten D, Hastie T, & Tibshirani R (2013). An introduction to statistical learning (Vol. 103). Springer. 10.1007/978-1-4614-7138-7 [DOI] [Google Scholar]

- Jennings Mathis K, Anaya C, Rambur B, Bodell LP, Graham AK, Forney KJ, Anam S, & Wildes JE (2020). Work-force diversity in eating disorders: A multi-methods study. Western Journal of Nursing Research, 42(12), 1068–1077. 10.1177/0193945920912396 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kass AE, Kolko RP, & Wilfley DE (2013). Psychological treatments for eating disorders. Current Opinion in Psychiatry, 26(6), 549–555. 10.1097/YCO.0b013e328365a30e [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kessler RC, van Loo HM, Wardenaar KJ, Bossarte RM, Brenner LA, Cai T, Ebert DD, Hwang I, Li J, De Jonge P, Nierenberg AA, Petukhova MV, Rosellini AJ, Sampson NA, Schoevers RA, Wilcox MA, & Zaslavsky AM (2016). Testing a machine-learning algorithm to predict the persistence and severity of major depressive disorder from baseline self-reports. Molecular Psychiatry, 21(10), 1366–1371. 10.1038/mp.2015.198 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Koutsouleris N, Kahn RS, Chekroud AM, Leucht S, Falkai P, Wobrock T, Derks EM, Fleischhacker WW, & Hasan A (2016). Multisite prediction of 4-week and 52-week treatment outcomes in patients with first-episode psychosis: A machine learning approach. The Lancet Psychiatry, 3(10), 935–946. 10.1016/S2215-0366(16)30171-7 [DOI] [PubMed] [Google Scholar]

- Kuhn M, & Johnson K (2013). Applied predictive modeling. Springer-Verlag. 10.1007/978-1-4614-6849-3 [DOI] [Google Scholar]

- Lambrecht A, & Tucker C (2019). Algorithmic bias? An empirical study into apparent gender-based discrimination in the display of STEM career ads. SSRN Electronic Journal, 65(7), 2966–2981. 10.2139/ssrn.2852260 [DOI] [Google Scholar]

- Lenhard F, Sauer S, Andersson E, Månsson KN, Mataix-Cols D, Rück C, & Serlachius E (2018). Prediction of outcome in internet-delivered cognitive behaviour therapy for paediatric obsessive-compulsive disorder: A machine learning approach. International Journal of Methods in Psychiatric Research, 27(1), e1576. 10.1002/mpr.1576 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lum K, & Isaac W (2016). To predict and serve? Significance, 13(5), 14–19. 10.1111/j.1740-9713.2016.00960.x [DOI] [Google Scholar]

- Poldrack RA, Huckins G, & Varoquaux G (2020). Establishment of best practices for evidence for prediction: A review. JAMA Psychiatry, 77(5), 534–540. 10.1001/jamapsychiatry.2019.3671 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Reardon KW, Smack AJ, Herzhoff K, & Tackett JL (2019). An N-pact factor for clinical psychological research. Journal of Abnormal Psychology, 128(6), 493–499. 10.1037/abn0000435 [DOI] [PubMed] [Google Scholar]

- Roberts SO, Bareket-Shavit C, Dollins FA, Goldie PD, & Mortenson E (2020). Racial inequality in psychological research: Trends of the past and recommendations for the future. Perspectives on Psychological Science, 15(6), 1295–1309. 10.1177/1745691620927709 [DOI] [PubMed] [Google Scholar]

- Robinaugh D, Haslbeck JMB, Waldorp L, Kossakowski JJ, Fried EI, Millner A, McNally RJ, van Nes EH, Scheffer M, Kendler KS, & Borsboom D (2019). Advancing the network theory of mental disorders: A computational model of panic disorder [preprint]. PsyArXiv. 10.31234/osf.io/km37w [DOI] [Google Scholar]

- Rozzell K, Moon DY, Klimek P, Brown T, & Blashill A (2019). Prevalence of eating disorders among US children aged 9 to 10 Years: Data from the adolescent brain cognitive development (ABCD) study. JAMA Pediatrics, 173(1), 100–101. 10.1001/jamapediatrics.2018.3678 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sadeh-Sharvit S, Fitzsimmons-Craft EE, Taylor CB, & Yom-Tov E (2020). Predicting eating disorders from Internet activity. International Journal of Eating Disorders, 53(9), 1526–1533. 10.1002/eat.23338 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sheehan DV, Lecrubier Y, Sheehan KH, Amorim P, Janavs J, Weiller E, Hergueta T, Baker R, & Dunbar GC (1998). The Mini-International Neuropsychiatric Interview (M.I.N.I): The development and validation of a structured diagnostic psychiatric interview for DSM-IV and ICD-10. The Journal of Clinical Psychiatry, 59(Suppl 20), 22–33. [PubMed] [Google Scholar]

- Smith DMY, Lipson SM, Wang SB, & Fox KR (2021). Online methods in adolescent self-injury research: Challenges and recommendations. Journal of Clinical Child & Adolescent Psychology, 1–12. 10.1080/15374416.2021.1875325 [DOI] [PubMed] [Google Scholar]

- Sonneville KR, & Lipson SK (2018). Disparities in eating disorder diagnosis and treatment according to weight status, race/ethnicity, socioeconomic background, and sex among college students. International Journal of Eating Disorders, 51(6), 518–526. 10.1002/eat.22846 [DOI] [PubMed] [Google Scholar]

- Stice E, Cooper J, Schoeller D, Tappe K, & Lowe M (2007). Are dietary restraint scales valid measures of moderate- to long-term dietary restriction? Objective biological and behavioral data suggest not. Psychological Assessment, 19, 449–458. 10.1037/1040-3590.19.4.449 [DOI] [PubMed] [Google Scholar]

- Stice E, Fisher M, & Lowe MR (2004). Are dietary restraint scales valid measures of acute dietary restriction? Unobtrusive observational data suggest not. Psychological Assessment. [DOI] [PubMed] [Google Scholar]

- Strauss ME, & Smith GT (2009). Construct validity: Advances in theory and methodology. Annual Review of Clinical Psychology, 5, 1–25. 10.1146/annurev.clinpsy.032408.153639 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Vall E, & Wade TD (2015). Predictors of treatment outcome in individuals with eating disorders: A systematic review and meta-analysis. International Journal of Eating Disorders, 48(7), 946–971. 10.1002/eat.22411 [DOI] [PubMed] [Google Scholar]

- Wang SB, Coppersmith DDL, Kleiman EM, Bentley KH, Millner AJ, Fortgang R, Mair P, Dempsey W, Huffman JC, & Nock MK (2021). A pilot study using frequent inpatient Assessments of suicidal thinking to predict short-term postdischarge suicidal behavior. JAMA Network Open, 4(3), e210591. 10.1001/jamanetworkopen.2021.0591 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wilson GT, & Shafran R (2005). Eating disorders guidelines from NICE. The Lancet, 365(9453), 79–81. 10.1016/S0140-6736(04)17669-1 [DOI] [PubMed] [Google Scholar]

- Yarkoni T, & Westfall J (2017). Choosing prediction over explanation in psychology: Lessons from machine learning. Perspectives on Psychological Science: A Journal of the Association for Psychological Science, 12(6), 1100–1122. 10.1177/1745691617693393 [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

Data sharing not applicable to this article as no data sets were generated or analysed for this paper.