Abstract

Experiments aiming at high sensitivities usually demand very high statistics in order to reach more precise measurements. However, for those exploiting Low Temperature Detectors (LTDs), a high source activity may represent a drawback if the mean time between events becomes comparable with the detector characteristic temporal response. Indeed, since commonly used optimum filtering approaches can only process LTD signals that are well isolated in time, a non-negligible part of the recorded experimental data-set is discarded and hence constitutes the dead-time. In the presented study we demonstrate that, thanks to the matrix optimum filtering approach, the dead-time of an experiment exploiting LTDs can be strongly reduced.

Introduction

Low Temperature Detectors (LTDs) are among the most suitable detectors for experiments demanding a very high energy resolution for energies up to the MeV scale [1]. This requirement usually stems from the need for a high background rejection power and a good sensitivity to a low-probability fraction of the measured energy spectrum. However, whenever the mean time between background or signal events becomes comparable to the LTD characteristic temporal profile, the experiment may suffer from a sensitivity loss. Indeed, in this case a non-negligible fraction of the collected data-set is composed of pile-up on tail events, which have to be discarded. This is due to the fact that the optimum filter technique described in [2] cannot correctly process pile-up on tail events, since it is non-causal. The amount of discarded data, due to the limited performance of this filter, constitutes the dead-time.

A large dead-time translates into heavily reduced signal statistics, which in turn worsens the target measurement. With the aim of reducing the amount of dead-time, we investigated an alternative approach, presented in [3], for the processing of LTD pulses. In this paper we report the study carried out on this optimum filter [3] (matrix filter in the following) in the framework of the HOLMES experiment [4]. We probed the performance of this alternative optimum filtering technique on both real and simulated HOLMES microcalorimeter data by computing the experimental energy resolution and the projected dead-time.

Pulse processing techniques

In order to reduce noise contributions, microcalorimeter pulses are usually processed before estimating their parameters of interest. For example, in order to evaluate the energy of an event, a processing technique optimizing the evaluation of the pulse amplitude has to be exploited. In this section we describe two pulse processing techniques for pulse amplitude evaluation. The first one is the best known and most widely used technique: the standard optimum filter of [2] (standard filter in the following). The second one is the matrix optimum filter of [3] (matrix filter in the following). Both filtering techniques require that the processed pulses are acquired in record windows whose length is determined by the detector characteristic time profile in such a way that, within their record windows, pulses can fully recover. Moreover, the theoretical derivations of the two filters assume that the pulses have a fixed shape and that the noise to which the signals are subject is ergodic.

Standard filter

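Let $S(\omega)$ be the Fourier Transform of the pulse $S(t)$ and $N(\omega)$ the microcalorimeter noise power spectral density. The standard filter $H(\omega)$ is derived, as in [2], by maximizing the signal-to-noise ratio ($\rho$) of the processed pulse (written here up to a constant factor that depends on the Fourier convention):

$$\rho = \frac{\left|\int_{-\infty}^{+\infty} H(\omega)\,S(\omega)\,e^{i\omega t_M}\,d\omega\right|}{\sqrt{\int_{-\infty}^{+\infty} |H(\omega)|^{2}\,N(\omega)\,d\omega}} \qquad (1)$$

where $t_M$ is the time at which the pulse reaches its maximum value. Equation (1) can be maximized by applying the Schwarz inequality, leading to:

$$H(\omega) = k\,\frac{S^{*}(\omega)}{N(\omega)}\,e^{-i\omega t_M} \qquad (2)$$

where $S^{*}(\omega)$ is the complex conjugate of the pulse Fourier Transform and $k$ is an arbitrary normalization constant. The pulse amplitude $A$ is then estimated by evaluating the maximum of the filtered pulse $f(t)$:

$$A = \max_{t} f(t) \qquad (3)$$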
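The steps above can be sketched numerically. The following is a minimal illustration, not the analysis code of the experiment: the double-exponential template, its time constants, the white noise spectrum and all function names are assumptions made for the example. The phase factor with the peak time is omitted, so the filtered template peaks at zero lag, and the filter is normalized so that a unit-amplitude template returns an amplitude of one.

```python
import numpy as np

def pulse_template(n=1024, pretrigger=100, tau_rise=5.0, tau_decay=60.0):
    """Unit-amplitude double-exponential pulse (hypothetical shape, times in samples)."""
    t = np.arange(n) - pretrigger
    s = np.where(t >= 0, np.exp(-t / tau_decay) - np.exp(-t / tau_rise), 0.0)
    return s / s.max()

def optimum_filter(template, noise_psd):
    """Frequency-domain filter H = S*/N, scaled so the filtered template peaks at 1."""
    S = np.fft.rfft(template)
    H = np.conj(S) / noise_psd
    peak = np.fft.irfft(H * S, n=len(template)).max()
    return H / peak

def estimate_amplitude(record, H):
    """Amplitude estimate: maximum of the filtered record."""
    filtered = np.fft.irfft(H * np.fft.rfft(record), n=len(record))
    return filtered.max()

n = 1024
s = pulse_template(n)
# Assumed white noise spectrum: one value per rfft bin. Any strictly
# positive array of the same length could be supplied instead.
noise_psd = np.ones(n // 2 + 1)
H = optimum_filter(s, noise_psd)

rng = np.random.default_rng(0)
record = 3000.0 * s + rng.normal(0.0, 5.0, n)   # one isolated pulse plus white noise
amplitude = estimate_amplitude(record, H)
clean_amplitude = estimate_amplitude(3000.0 * s, H)
```

With a white spectrum the filter reduces to a matched filter. The sketch uses circular (FFT) correlation, which is adequate only when the pulse fully recovers inside the record window, in line with the assumption stated above.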

Matrix filter

The matrix filter is derived by maximizing the likelihood $L$ between the processed pulse and its model. Let $\mathbf{d}$ and $\mathbf{m}$ be respectively the pulse data vector and its model, both of length $n$, corresponding to the number of pulse sampling points. Since the model generally depends on a certain number of parameters, it is better to refer to the model as $\mathbf{m}(\mathbf{p})$, where $\mathbf{p}$ is the parameters vector of length $k$. If $R$ is the noise covariance matrix of the data, of dimensions $n \times n$, the likelihood between the model and the data is:

$$L = \frac{1}{\sqrt{(2\pi)^{n}\det R}}\,\exp\left\{-\frac{1}{2}\,[\mathbf{d}-\mathbf{m}(\mathbf{p})]^{T} R^{-1}\,[\mathbf{d}-\mathbf{m}(\mathbf{p})]\right\} \qquad (4)$$

Assuming that the microcalorimeter pulses always have the same shape and that their amplitude depends linearly on the energy released, the model $\mathbf{m}(\mathbf{p})$ can be taken as the average detector response $\mathbf{s}$, of length $n$ and unitary amplitude, multiplied by just one parameter $A$: the pulse amplitude value. It is worth noting that this model also works when the relation between the pulse amplitude and the energy released is no longer linear, provided that the conversion between these two quantities is well known.

In a more realistic picture, the pulse model should account for more elements, such as a constant vector modeling the possible flat baseline over which the pulse rises. In this case the model $\mathbf{m}$ can be written as:

$$\mathbf{m} = A\,\mathbf{s} + B\,\mathbf{b} \qquad (5)$$

where $\mathbf{b}$ is the constant vector and $B$ is the parameter representing the baseline level. The model can therefore be recast as $\mathbf{m} = M\mathbf{p}$, where $\mathbf{p} = (A, B)^{T}$ is the parameters vector and $M = [\mathbf{s}\;\mathbf{b}]$ is the matrix collecting the pulse model elements, of dimension $n \times k$ (here $k = 2$).

Under this assumption, the maximization of the likelihood in (4) returns the best estimate of the parameters vector, $\hat{\mathbf{p}}$, which is:

$$\hat{\mathbf{p}} = \left(M^{T} R^{-1} M\right)^{-1} M^{T} R^{-1}\,\mathbf{d} \qquad (6)$$

From this relation it is possible to identify the filter $q$ as

$$q = \left(M^{T} R^{-1} M\right)^{-1} M^{T} R^{-1} \qquad (7)$$

The filter q accounts for both the components of the processed pulse model and the noise covariance matrix, as the standard filter does. Moreover, in order to get the pulse amplitude estimate, it is sufficient to compute the matrix product between the filter and the data. Unlike the standard filter, the matrix one permits the introduction of additional elements to better model the processed data. For example, an extra s vector modeling a second pulse in the record would in principle allow the processing of pile-up on tail events.

We observed that this approach depends heavily on the temporal discretization of the recorded pulses. Indeed, in order to mitigate possible artifacts, e.g. incorrect amplitude evaluation due to sub-sample pulse arrival times, a second derivative vector of the averaged detector response is introduced in the matrix M for every s array.
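A minimal numerical sketch of the matrix filter for a model made of a pulse template plus a flat baseline is given below. It is an illustration under stated assumptions rather than the implementation used in the study: the template shape, the AR(1)-like covariance, the record length and all function names are hypothetical, and the derivative vector mentioned above is left out for brevity.

```python
import numpy as np

def pulse_template(n, pretrigger, tau_rise=5.0, tau_decay=40.0):
    """Unit-amplitude double-exponential pulse (hypothetical shape, times in samples)."""
    t = np.arange(n) - pretrigger
    s = np.where(t >= 0, np.exp(-t / tau_decay) - np.exp(-t / tau_rise), 0.0)
    return s / s.max()

def ar1_covariance(n, sigma=5.0, phi=0.6):
    """Assumed noise covariance R_ij = sigma^2 * phi^|i-j| (stationary, AR(1)-like)."""
    lags = np.abs(np.subtract.outer(np.arange(n), np.arange(n)))
    return sigma**2 * phi**lags

def matrix_filter(M, R):
    """q = (M^T R^-1 M)^-1 M^T R^-1, using linear solves instead of explicit inverses."""
    Rinv_M = np.linalg.solve(R, M)                  # R^-1 M, shape (n, k)
    return np.linalg.solve(M.T @ Rinv_M, Rinv_M.T)  # shape (k, n); R is symmetric

n, pretrigger = 256, 25
s = pulse_template(n, pretrigger)
M = np.column_stack([s, np.ones(n)])   # columns: pulse template, constant baseline
R = ar1_covariance(n)
q = matrix_filter(M, R)

d = 3000.0 * s + 12.0                  # noiseless record: amplitude 3000, baseline 12
A_hat, B_hat = q @ d

rng = np.random.default_rng(1)
noise = np.linalg.cholesky(R) @ rng.normal(size=n)   # noise drawn with covariance R
A_noisy, B_noisy = q @ (d + noise)
```

Because q M is the identity, a noiseless record that matches the model is reconstructed exactly, and each row of q acts as a linear filter returning one parameter. Adding a column to M is all that is needed to extend the model.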

The HOLMES experiment data-set

The HOLMES experiment, which aims to measure the electron anti-neutrino mass with a sensitivity of 2 eV, will exploit 1024 Mo/Cu Transition-Edge Sensor (TES) [5] based microcalorimeters implanted with $^{163}$Ho. The HOLMES TES pulses are acquired with a derivative threshold trigger and recorded in windows of fixed length, during which the trigger is paralyzed. Moreover, in order to also collect information on the pulse baseline, the record window is shifted backward in time, with respect to the pulse triggering time, by about one tenth of the window length. This part of the window is hence called pretrigger.

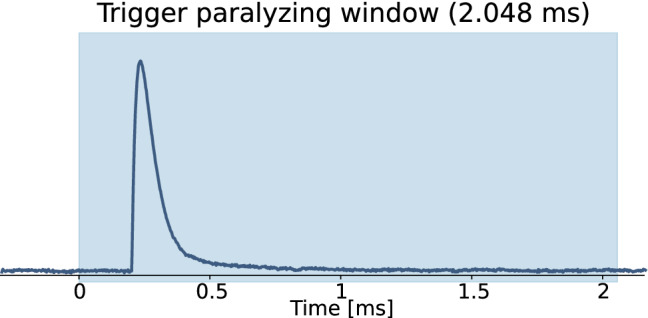

The window length is chosen on the basis of the microcalorimeter characteristic temporal response, in order to allow the full recovery of the signals to the baseline level within the window. As a rule of thumb, we suppose that a window length larger than five times the pulse decay time guarantees its complete recovery. Assuming the temporal characteristics of the microcalorimeters studied in [6], a record window of 2.048 ms, composed of 1024 points (of which 100 are pretrigger) with a sampling frequency of 500 kHz, would be suitable for the experimental purposes. Indeed, with these choices the signals can fully recover within the window, as shown in Fig. 1, which displays a pulse simulated to reproduce those collected in the study presented in [6].
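The record-window numbers quoted above follow from simple arithmetic, sketched here with variable names chosen for the example. The last line applies the rule of thumb stated in the text to obtain the longest decay time the window could accommodate.

```python
sampling_frequency_hz = 500e3   # 500 kHz
n_samples = 1024                # points per record window
n_pretrigger = 100              # points before the trigger

window_ms = n_samples / sampling_frequency_hz * 1e3
pretrigger_ms = n_pretrigger / sampling_frequency_hz * 1e3
post_trigger_ms = window_ms - pretrigger_ms
pretrigger_fraction = n_pretrigger / n_samples

# Rule of thumb from the text: window length larger than five decay times.
max_decay_time_ms = window_ms / 5.0
```

The window is 2.048 ms long, of which 0.2 ms (about one tenth) is pretrigger, leaving 1.848 ms after the trigger; the rule of thumb is then satisfied for decay times below roughly 0.41 ms.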

Fig. 1.

Example of a simulated pulse of a HOLMES microcalorimeter. In its 2.048 ms record window (light-blue in the figure), the pulse is sampled with 1024 points (out of which 100 are of pretrigger) with a sampling frequency of 500 kHz

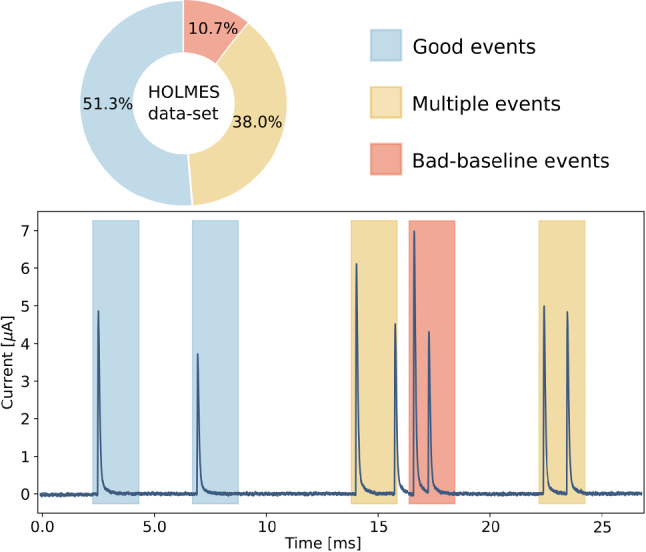

Each record window is then classified according to its features. If the pretrigger is characterized by a flat baseline and only one pulse is present in the whole window, the record is tagged as a good event. If the baseline is flat but the record features more than one pulse, it is classified as a multiple event. If the baseline is non-flat, the record is labeled as bad-baseline.

Simulation of the data-set

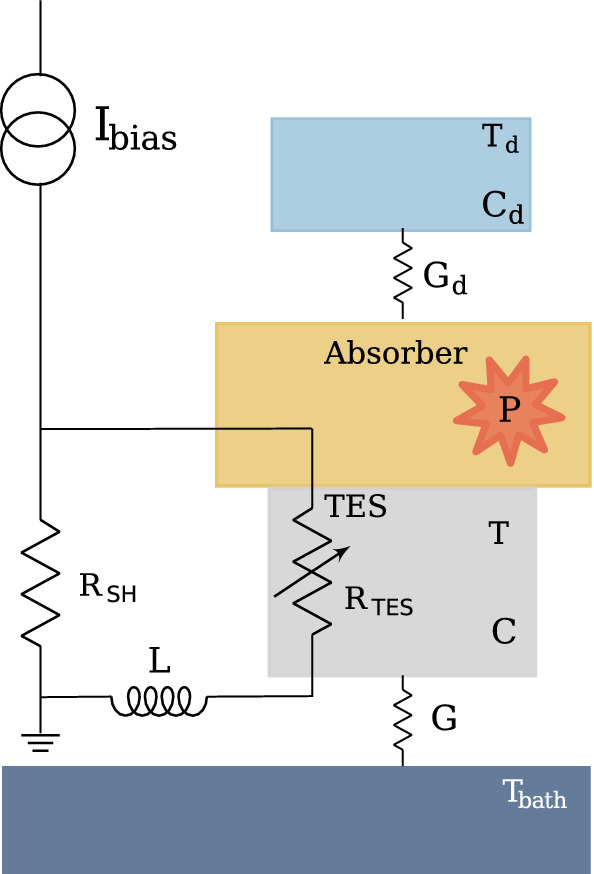

TESs are sensitive to temperature variations. If properly biased with a voltage producing a current, their response to a release of energy E, corresponding to a power P, can be calculated by solving the thermal and electrical differential equations of the TES model [5]. The so-computed response depends both on external parameters, such as the environment temperature and the electrical circuit inductance (L), and on the TES properties, such as its resistance, its temperature (T), its heat capacity (C), the bias voltage, the current flowing in it and the thermal conductance between the TES and the environment (G).

For the HOLMES TESs a good agreement was found between real pulses and those simulated with the two-body dangling model [7, 8] presented in Fig. 2 and described by the following set of equations:

| 8 |

In Eq. (8), the dangling body of the model (depicted in light-blue in Fig. 2) enters through its own heat capacity and temperature, while K and the analogous constant for the dangling body depend on the thermal conductance G and on the thermal conductance towards the dangling body, respectively.

Fig. 2.

Two-body dangling model for HOLMES microcalorimeter pulses simulation. The TES is represented by the gray body, the absorber part of the microcalorimeter where the $^{163}$Ho is implanted is depicted in yellow and the dangling body is illustrated in light-blue. Whenever a $^{163}$Ho decay occurs in the absorber, a release of energy E, corresponding to a power P (depicted in red in the scheme), triggers the microcalorimeter response, which depends on both the electrical and the thermal circuit parameters

Equations in (8) can be solved with a fourth order Runge-Kutta method, by discretizing the time variable so as to reproduce the sampling of the HOLMES data acquisition system. In order to study realistic data and simulate the temporal jitter effect, a pulse interpolation with a random shift within the interval in terms of sampling points is applied. The so-obtained pulses were then superimposed on random samples of noise. The latter were obtained by extracting the parameters of the AutoRegressive Moving Average model (ARMA(p,q)) [9] from the comparison between theoretical and experimental noise power spectral densities of the device.
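As an illustration of the integration scheme only, the sketch below applies a classical fourth order Runge-Kutta step to a toy one-body thermal model. This toy model is not the two-body system of Eq. (8); its equation, the parameter values and the function names are assumptions chosen so that the result can be compared with a known analytic solution. The time step matches the 500 kHz sampling mentioned in the text.

```python
import numpy as np

def rk4_step(f, t, y, dt):
    """One classical fourth order Runge-Kutta step for dy/dt = f(t, y)."""
    k1 = f(t, y)
    k2 = f(t + dt / 2, y + dt / 2 * k1)
    k3 = f(t + dt / 2, y + dt / 2 * k2)
    k4 = f(t + dt, y + dt * k3)
    return y + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)

def simulate(f, y0, dt, n_steps):
    """Integrate from y0 on a fixed time grid, one sample per step."""
    y = np.empty((n_steps + 1, len(y0)))
    y[0] = y0
    for i in range(n_steps):
        y[i + 1] = rk4_step(f, i * dt, y[i], dt)
    return y

# Toy one-body model (NOT Eq. (8)): C dT/dt = -G (T - T_bath).
# Hypothetical values: heat capacity in J/K, conductance in W/K, bath temperature in K.
C, G, T_bath = 1.0e-12, 5.0e-9, 0.05

def toy_thermal(t, y):
    return -G / C * (y - T_bath)

dt = 2e-6              # 2 us per sample, i.e. 500 kHz
delta_T0 = 1e-3        # initial temperature excursion (assumed)
n_steps = 1024
trace = simulate(toy_thermal, np.array([T_bath + delta_T0]), dt, n_steps)

# Analytic solution of the toy model, used only as a cross-check.
times = np.arange(n_steps + 1) * dt
analytic = T_bath + delta_T0 * np.exp(-G / C * times)
```

For the coupled thermal and electrical equations of a real TES model, the state vector would simply hold more components and the right-hand side function would be replaced accordingly.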

In order to properly simulate the data-set to analyze with the two different filters, a Monte Carlo study considering the HOLMES experiment final configuration ( detectors with 300 Bq of $^{163}$Ho activity each [4]), was performed. With a conservative approach, in this study we labeled as bad-baseline every record window whose starting point is less than 1.848 ms away from the previous pulse arrival time. The results of this simulation are reported in Fig. 3. The study reveals that for a 300 Bq $^{163}$Ho source, which is the target activity per single HOLMES microcalorimeter [4], only half of the recorded events are good, and hence processable with the standard filter.
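The classification logic can be illustrated with a toy Monte Carlo. This is a simplified sketch under stated assumptions, not the simulation used for Fig. 3: arrivals are taken as a pure Poisson process, the trigger is modeled as simply disabled until the end of each record window, the bad-baseline tag takes priority over the multiple tag, and the function names are hypothetical. The fractions it produces are therefore not expected to match those of the full study.

```python
import numpy as np

def label_record(bad_baseline, n_pulses):
    """Bad-baseline takes priority; otherwise count the pulses in the record."""
    if bad_baseline:
        return "bad-baseline"
    return "multiple" if n_pulses > 1 else "good"

def classify_records(arrival_times, window=2.048e-3, pretrigger=0.2e-3):
    """Classify records produced by a trigger that is disabled during each window."""
    post = window - pretrigger          # 1.848 ms of record after the trigger
    labels = []
    window_end = -np.inf
    previous_pulse = -np.inf
    n_in_window = 0
    bad_baseline = False
    for t in arrival_times:
        if t < window_end:
            n_in_window += 1            # extra pulse inside the open record
        else:
            if n_in_window > 0:         # close the previous record
                labels.append(label_record(bad_baseline, n_in_window))
            window_start = t - pretrigger
            window_end = window_start + window
            # Conservative criterion from the text: previous pulse too close.
            bad_baseline = (window_start - previous_pulse) < post
            n_in_window = 1
        previous_pulse = t
    if n_in_window > 0:
        labels.append(label_record(bad_baseline, n_in_window))
    return labels

rng = np.random.default_rng(42)
rate_hz = 300.0                          # activity per detector
arrivals = np.cumsum(rng.exponential(1.0 / rate_hz, size=200_000))
labels = classify_records(arrivals)
fractions = {k: labels.count(k) / len(labels)
             for k in ("good", "multiple", "bad-baseline")}
```

As a usage example, `classify_records([0.0, 0.001, 0.003, 0.010])` yields one multiple record, one bad-baseline record and one good record, in that order.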

Fig. 3.

Results of a HOLMES experiment TES simulation: composition of the HOLMES experiment data-set (top); part of the raw data flow from a HOLMES microcalorimeter with triggered record windows colored according to the classification of the events (bottom)

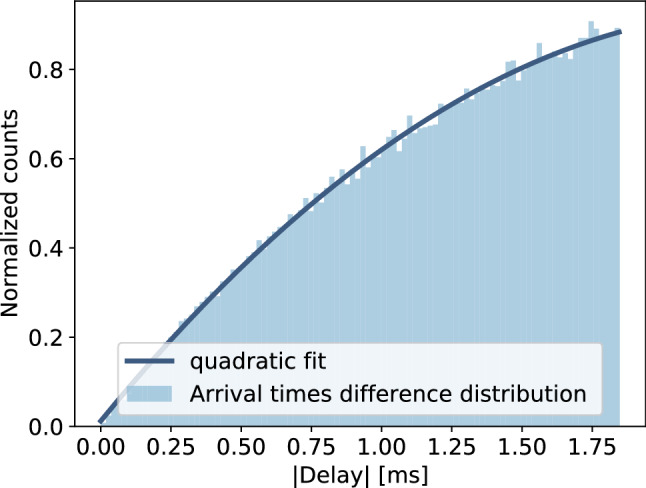

Moreover, the same study provides an estimate of the distribution of the arrival-time differences between the triggered pulses of bad-baseline events and the pulses preceding them. This distribution, which is well described by a quadratic function, is reported in Fig. 4. For the sake of clarity, from now on we will simply refer to the difference between the arrival times of two consecutive pulses as the delay.

Fig. 4.

Distribution of arrival times difference (delay) between the triggered pulse and its previous one in bad-baseline events. It follows a quadratic trend

For this work we have simulated a HOLMES data-set following the results of the Monte Carlo of Fig. 3. For the sake of simplicity, the data-set we simulated accounts for good events, multiple events with two pulses only and bad-baseline events featuring one pulse, with the correct proportions and temporal distributions. Good and bad-baseline events were generated by assuming a release of 3000 eV. This energy value was chosen because it is very close to the $^{163}$Ho spectrum end-point (at 2833 ± 30 (stat) ± 15 (sys) eV [10]), which is the HOLMES experiment region of interest (ROI); we therefore expect to obtain, for this energy value, the same energy response that would be observed in the ROI. Moreover, multiple events were split into two categories: Multi1, with first pulses corresponding to 3000 eV and second pulses randomly generated according to the $^{163}$Ho spectrum, and Multi2, representing the reverse case.

Detector responses with the two different filters

In order to estimate the amount of expected dead-time depending on the exploited filter, it is necessary to study how the detector response (R(E)), which is the distribution of measured energies in response to a monochromatic input, is modified according to the considered sub-set of events. For a microcalorimeter, assuming ergodic noise, R(E) is a Gaussian distribution when only good events are analyzed. The standard deviation of this distribution is defined by the filter performance in discriminating the signal from the noise contributions. We verified with the study reported in [11] that the two filters are comparable in terms of energy resolution, measured as the full width at half maximum (FWHM) of the K$\alpha$ X-ray peak of Mn. Indeed we found for the matrix filter an energy resolution of eV at 5.89875 keV, in line with the one measured with the standard filter, of eV [6] at the same energy peak, obtained with the same set of data.
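For a Gaussian detector response, the FWHM used above as the figure of merit and the standard deviation returned by a Gaussian fit differ by a fixed factor. The relation is standard; the symbol $\sigma$ for the standard deviation of R(E) is a notation assumed here:

```latex
\mathrm{FWHM} = 2\sqrt{2\ln 2}\;\sigma \approx 2.355\,\sigma
```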

As one would expect, the detector response is altered once non-good events are processed, since these records deviate from the model (S(t) for the standard filter and m(p) for the matrix one). Indeed, in this case, the processing of non-good pulses alters R(E) in such a way that it is not possible to find a correct analytical model. Therefore, it is better to reduce the set of analyzed data up to the point at which the deviations from the analytical R(E) (Gaussian in our case) no longer introduce systematics in the measured parameters of interest. To perform this kind of study, we analyzed the different detector responses obtained with the two filters by varying the set of analyzed data.

Standard filter detector responses

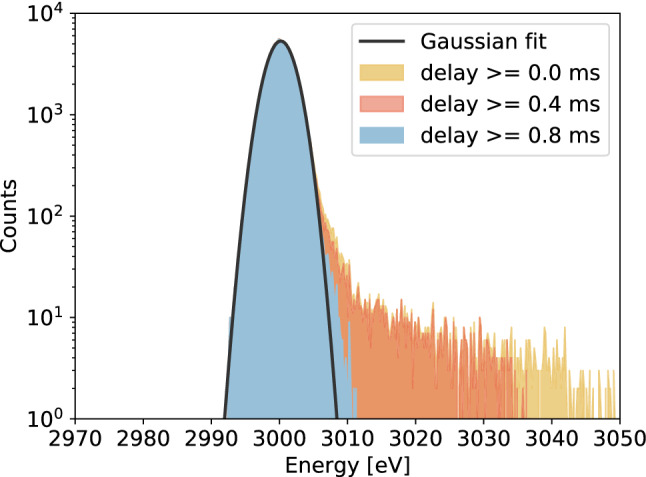

Since the standard filter, being non-causal, cannot process events with more than one pulse, we can only try to include in the processing the single bad-baseline records as well, which constitute only 6.1% of the data-set. The detector responses are obtained by considering several sub-sets of data created by defining different lower thresholds on the delay, varied in steps of 0.1 ms. The standard filter detector response changes according to the studied sub-set of data. In particular, including more single bad-baseline pulses in the analyzed data-set produces a larger tail at higher energies, while leaving the Gaussian distribution peaked at 3000 eV unaltered. As an illustration, three examples of detector responses characterized by three different lower thresholds on the delay are depicted in Fig. 5.
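The construction of the sub-sets can be sketched as a simple selection on the delay. The arrays below are hypothetical stand-ins for the per-event quantities (an event class flag and the delay in ms), and the function name is chosen for the example; only the 0.1 ms threshold step comes from the text.

```python
import numpy as np

def select_subset(is_good, delay_ms, threshold_ms):
    """Keep good events plus bad-baseline events whose delay exceeds the threshold."""
    return is_good | (delay_ms > threshold_ms)

# Lower thresholds on the delay: 0.0, 0.1, ..., 1.8 ms
thresholds_ms = np.arange(0, 19) * 0.1

# Hypothetical events: one good event and three single bad-baseline events.
is_good = np.array([True, False, False, False])
delay_ms = np.array([np.inf, 0.35, 0.95, 1.55])   # delay is irrelevant for good events

kept_per_threshold = [int(select_subset(is_good, delay_ms, thr).sum())
                      for thr in thresholds_ms]
```

Each mask would then be used to histogram the reconstructed energies of the selected events, giving one detector response R(E) per threshold.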

Fig. 5.

Detector responses (R(E)) generated with the application of the standard filter to different sub-sets of the data-set. In yellow R(E) obtained with the complete data-set (good plus all single bad-baseline events), in red R(E) including only bad-baseline single pulses with delay greater than 0.4 ms and in light-blue R(E) considering those with delay greater than 0.8 ms. The plot also shows the Gaussian fit (in black) of the yellow detector response. The values of the Gaussian parameters from the fit are: mean eV, standard deviation eV and norm

Matrix filter detector responses

The matrix filter can in principle be applied to each class of events. However, while for good events its computational cost is similar to that of the standard filter, for non-good events the matrix filter has to be adjusted case by case in order to correctly process each pulse in the records. This implies that, for the processing of non-good events, this filter has to be applied offline.

In order to construct the matrix filter detector responses, as in the case of the standard filter, different sub-sets of data are created. In this analysis we considered good pulses, bad-baseline signals, Multi1 records and Multi2 events with delays smaller than 1.756 ms. The latter limit is a consequence of the fact that, with longer delays, the second pulse cannot even reach its maximum within the record window (which lasts for just 1.848 ms after the first pulse arrival time). The value for this limit was set by requiring that the detector response R(E), at large lower thresholds on the delay, is well-fitted by a Gaussian distribution (p-value of fit ).

In both cases, bad-baseline and multiple events, the information on the delay has to be extracted from the records before computing the matrix filter q. The delay is the temporal difference between the record pulse and the prior one in the bad-baseline case, and the temporal difference between the two pulses in the record in the multiple event case. To assess the pulse arrival times, we exploit a simple threshold triggering technique on the pulse rising edge. Notice that, in the case of bad-baseline records, evaluating the delay requires information on the event recorded before the analyzed one. This is always possible in the HOLMES experiment, since an absolute temporal value referring to the start of the data taking (timestamp) is saved for each recorded event. Once the delay is assessed, two extra vectors are included in the matrix model M defined in Sect. 2: one average detector response s shifted in time by an amount equal to the computed delay, and its second derivative vector. By shifting the s vector forward (backward) in time, for events classified as multiple (bad-baseline), some void points at the beginning (end) of the vector are created. These void points are filled with zeros for both multiple and bad-baseline events. On the one hand, this is the most natural choice for the multiple case, in which the pulse is shifted forward and does not contribute at all to the model prior to its arrival. On the other hand, for the bad-baseline case this choice was dictated by the results of an analysis revealing that, when the shifted s vector is completed with the average value of its last 10 points, the detector response shows a slightly worse resolution.
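The extension of the model to a second pulse can be sketched as follows for a multiple event. This is an illustrative example under simplifying assumptions: the delay is an integer number of samples and is taken as known, the noise covariance is white, the template shape is hypothetical, and the derivative vectors described above are omitted. All function names are chosen for the example.

```python
import numpy as np

def pulse_template(n, pretrigger, tau_rise=5.0, tau_decay=40.0):
    """Unit-amplitude double-exponential pulse (hypothetical shape, times in samples)."""
    t = np.arange(n) - pretrigger
    s = np.where(t >= 0, np.exp(-t / tau_decay) - np.exp(-t / tau_rise), 0.0)
    return s / s.max()

def shift_with_zeros(v, shift):
    """Shift v by an integer number of samples, filling the void points with zeros."""
    out = np.zeros_like(v)
    if shift > 0:
        out[shift:] = v[:-shift]      # forward shift: zeros at the beginning
    elif shift < 0:
        out[:shift] = v[-shift:]      # backward shift: zeros at the end
    else:
        out[:] = v
    return out

def matrix_filter(M, R):
    """q = (M^T R^-1 M)^-1 M^T R^-1, using linear solves instead of explicit inverses."""
    Rinv_M = np.linalg.solve(R, M)
    return np.linalg.solve(M.T @ Rinv_M, Rinv_M.T)

n, pretrigger, delay = 256, 25, 60
s = pulse_template(n, pretrigger)
s_delayed = shift_with_zeros(s, delay)            # second pulse of a multiple event
M = np.column_stack([s, s_delayed, np.ones(n)])   # two pulses plus flat baseline
R = 25.0 * np.eye(n)                              # assumed white noise covariance
q = matrix_filter(M, R)

d = 3000.0 * s + 1200.0 * s_delayed + 12.0        # noiseless pile-up record
A_first, A_second, baseline = q @ d
```

For a bad-baseline record the same construction applies with a negative shift, so that only the tail of the earlier pulse falls inside the window and the void points at the end are zero-filled.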

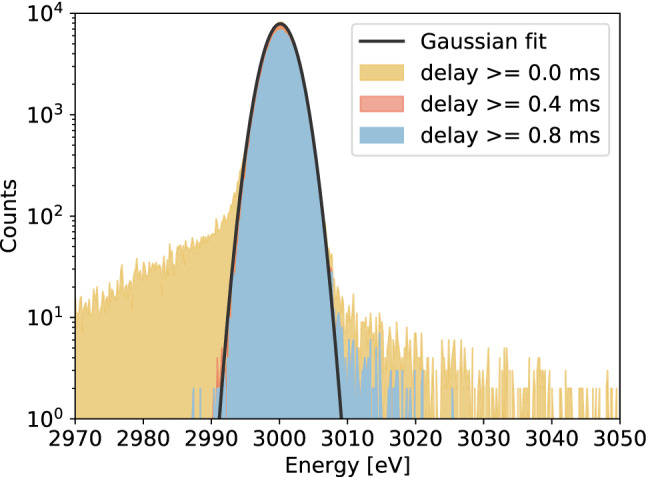

In Fig. 6 three examples of matrix filter detector responses characterized by three different lower thresholds on the delay (applied both to multiple and bad-baseline events) are reported. Unlike in the standard filter case, one can notice that, by increasing the lower threshold on the delay, not only the distribution tails are modified, but also the Gaussian peak. Indeed, as clearly visible from the comparison of the red and light-blue R(E) of Fig. 6, a significant portion of the counts in the Gaussian peak centered at 3000 eV is removed. This means that, unlike the standard filter, this filter also correctly estimates the amplitudes of the pulses belonging to events with small delays. However, we have observed that there exists a lower threshold on the delay below which the amplitudes seem to be no longer correctly reconstructed, causing a broadening of the detector response (as can be seen from the yellow distribution in Fig. 6). We found that this effect cannot be ascribed to the matrix filter but rather to the intrinsic non-linearity of the TES response to multiple releases of energy very close in time, introduced by the equations in (8). This was proved by an analysis performed on multiple events generated by linearly superimposing simulated single pulses. Indeed, for this latter case we obtained a much less broadened detector response.

Fig. 6.

Detector responses (R(E)) generated with the application of the matrix filter to different sub-sets of the data-set, by omitting the evaluation of Multi2 events with a delay larger than 1.756 ms. In yellow R(E) obtained with the complete data-set (good plus all bad-baseline and all multiple events), in red R(E) including only bad-baseline and multiple events with delay greater than 0.4 ms and in light-blue R(E) considering those with delay greater than 0.8 ms. The plot also shows the Gaussian fit (in black) of the yellow detector response. The values of the Gaussian parameters from the fit are: mean eV, standard deviation eV and norm

Dead-time evaluation for standard and matrix filters

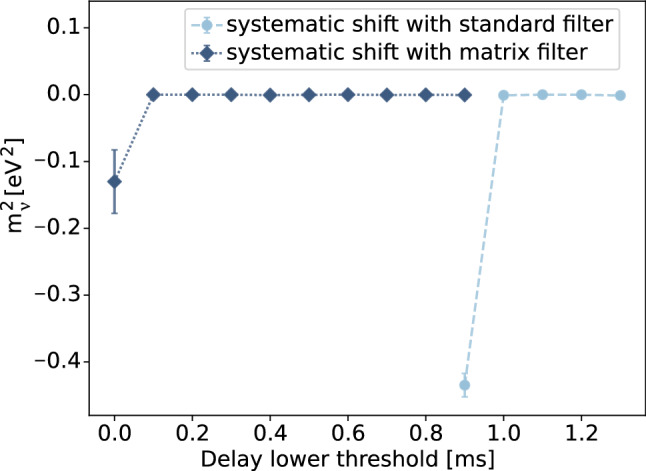

In order to evaluate the expected experimental dead-time according to the exploited filter, we studied the systematic effects on the neutrino mass induced by the deviation of the detector response from the analytical one (Gaussian distribution). The neutrino mass is the parameter that the HOLMES experiment aims to assess by means of a fit of the measured $^{163}$Ho spectrum. With the detector responses previously obtained, we convolved, bin-by-bin, 10 simulated $^{163}$Ho spectra comprising events, which correspond to the hypothetical amount of energy releases expected in one TES implanted with a 300 Bq activity source in four months of data-taking. We then fitted the obtained convolved histograms with a $^{163}$Ho spectrum profile smeared with a Gaussian distribution with fixed variance (obtained from the Gaussian fit of the responses R(E)). In the fit procedure the squared neutrino mass, the spectrum normalization and its end-point were left as free fit parameters. For this study we decided to set 0.1 eV$^2$ as the preliminary maximum acceptable absolute value of the systematic effect on the squared neutrino mass $m_\nu^2$. This value will be properly tuned once the final configuration of the experiment is defined.
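Two ingredients of this procedure can be sketched numerically: the order of magnitude of the number of events and the bin-by-bin convolution. The example below is a toy under stated assumptions, not the analysis code: months are taken as 30 days, the spectrum is an arbitrary shape rather than the $^{163}$Ho one, the detector response is assumed to be the same at every energy, and the binning and function names are hypothetical.

```python
import numpy as np

# Energy releases in one detector: 300 Bq for four months (30-day months assumed).
activity_bq = 300.0
seconds = 4 * 30 * 24 * 3600
expected_events = activity_bq * seconds        # about 3.1e9

def smear(spectrum, response):
    """Convolve a binned spectrum with a binned detector response (same bin width).

    The response is normalized to unit area so that counts are conserved, and it
    must be sampled on an odd number of bins centered on zero energy offset.
    """
    kernel = response / response.sum()
    return np.convolve(spectrum, kernel, mode="same")

# Toy spectrum on 400 bins, non-zero only far from the histogram edges.
spectrum = np.zeros(400)
spectrum[150:250] = np.linspace(100.0, 0.0, 100)

# Toy response: Gaussian core plus a small tail at higher energies.
offsets = np.arange(-20, 21)                   # energy offset in bins
response = np.exp(-0.5 * (offsets / 3.0) ** 2)
response[offsets > 10] += 0.01

smeared = smear(spectrum, response)
```

Fitting `smeared` with a purely Gaussian-smeared model is what exposes the systematic shift caused by the non-Gaussian tail.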

In Fig. 7, the average of the resulting values for the squared neutrino mass is plotted for each lower threshold on the delay considered in the study.

Fig. 7.

Systematic shift introduced by ignoring the non-Gaussian detector response R(E) (computed for several lower thresholds on the delay with a step of 0.1 ms) caused by the application of both standard (light-blue) and matrix (blue) filters. In this study, in a conservative way, 0.1 eV$^2$ was established as the maximum acceptable absolute value of the systematic shift on $m_\nu^2$

As we can clearly see from Fig. 7, the standard filter already produces a detector response that induces a shift larger than 0.1 eV$^2$ for a lower threshold on the delay between 0.9 and 1.0 ms, while for the matrix filter this happens between 0.0 and 0.1 ms, due to the non-linearity effects.

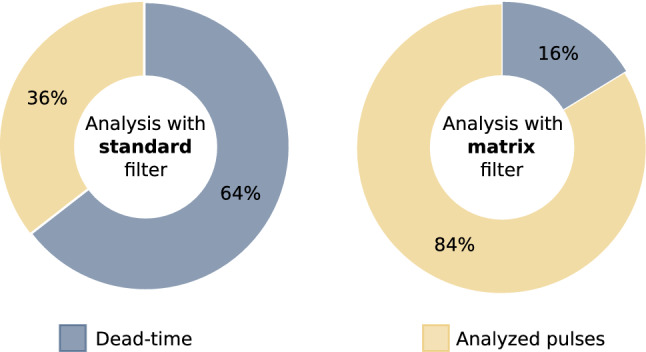

Once the lower threshold on the delay is defined, set to 1.0 ms for the standard filter and to 0.1 ms for the matrix one, we can compute the fraction of the data-set that can be processed with each filter. In a conservative way, in the computation of the expected dead-time we decided to discard a priori the fraction of the data-set composed of multiple and bad-baseline events containing more than two pulses. The results of this study, expressed in terms of percentages of processable pulses, are reported in Fig. 8, both for the standard and matrix filters.

Fig. 8.

Dead-time (fraction of pulses that have to be discarded in the analysis phase) for both standard and matrix filters. Percentages are computed on a pulse-based and not event-based analysis

We can conclude that in terms of expected dead-time, the matrix filter represents a great advantage for the HOLMES experiment. Indeed, it reduces the dead-time by a factor of about four.

Conclusion

The matrix filter of [3] for microcalorimeter pulse processing represents a valid alternative to the standard optimum filter described in [2]. Indeed, it provides very similar energy resolutions and, at the same time, it also allows the analysis of non-good events. This property is of great advantage for experiments exploiting microcalorimeters subject to very high source activities. Indeed, as demonstrated with this study in the specific framework of the HOLMES experiment, it can strongly reduce the expected dead-time. The obtained reduction of dead-time, which translates into an increase of the statistics for the measurement of the neutrino mass by a factor greater than two, improves the experimental sensitivity on $m_\nu$. The results found in this study show that, by exploiting the matrix filter instead of the standard one for the HOLMES experiment, the neutrino mass sensitivity, which scales with the inverse of the fourth root of the statistics, improves by about 19%.
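As a rough cross-check of the quoted figure, the scaling stated above can be applied to a statistics gain of exactly two (the text only states that the gain is greater than two). Here $\Sigma$ and $N_{\mathrm{ev}}$ are labels introduced for the sensitivity and the number of processed events:

```latex
\Sigma(m_\nu) \propto N_{\mathrm{ev}}^{-1/4}
\quad\Longrightarrow\quad
\frac{\Sigma_{\mathrm{standard}}}{\Sigma_{\mathrm{matrix}}}
= \left(\frac{N_{\mathrm{ev}}^{\mathrm{matrix}}}{N_{\mathrm{ev}}^{\mathrm{standard}}}\right)^{1/4}
= 2^{1/4} \approx 1.19
```

A doubling of the statistics therefore corresponds to an improvement of about 19%, consistent with the value quoted above.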

Acknowledgements

This work was supported by the European Research Council (FP7/2007-2013), under Grant Agreement HOLMES No. 340321, and by the INFN Astroparticle Physics Commission 2 (CSN2). We also acknowledge the support from the NIST Innovations in Measurement Science program for the TES detector development.

Data Availability

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: Data are not provided since they can be fully reconstructed from the information given in the paper.]

References

- 1. N.E. Booth, B. Cabrera, E. Fiorini, Annu. Rev. Nucl. Part. Sci. 46 (1996). 10.1146/annurev.nucl.46.1.471

- 2. E. Gatti, P. Manfredi, Riv. Nuovo Cim. 9(1) (1986). 10.1007/BF02822156

- 3. J.W. Fowler et al., Astrophys. J. Suppl. Ser. 219 (2015). 10.1088/0067-0049/219/2/35

- 4. B. Alpert et al., Eur. Phys. J. C 75 (2015). 10.1140/epjc/s10052-015-3329-5

- 5. K. Irwin, G. Hilton, Cryog. Part. Det. 99 (2005). 10.1007/10933596_3

- 6. B. Alpert et al., Eur. Phys. J. C 79 (2019). 10.1140/epjc/s10052-019-6814-4

- 7. M. Borghesi et al., Eur. Phys. J. C 81 (2021). 10.1140/epjc/s10052-021-09157-x

- 8. I.J. Maasilta, AIP Adv. 2 (2012). 10.1063/1.4759111

- 9. G.E.P. Box, G.M. Jenkins, G.C. Reinsel, Time Series Analysis: Forecasting and Control, 5th edn. (Wiley, 2015). ISBN: 978-1-118-67502-1

- 10. S. Eliseev et al., Phys. Rev. Lett. 115 (2015). 10.1103/PhysRevLett.115.062501

- 11. C. Ferrari, M. Borghesi, M. Faverzani, E. Ferri, A. Giachero, A. Nucciotti, Il Nuovo Cimento C (2020). 10.1393/ncc/i2021-21090-9
