Abstract

Background

Mobile health (mHealth) apps are revolutionizing the way clinicians and researchers monitor and manage the health of their participants. However, many studies using mHealth apps are hampered by substantial participant dropout or attrition, which may impact the representativeness of the sample and the effectiveness of the study. Therefore, it is imperative for researchers to understand what makes participants stay with mHealth apps or studies using mHealth apps.

Objective

This study aimed to review the current peer-reviewed research literature to identify the notable factors and strategies used in adult participant engagement and retention.

Methods

We conducted a systematic search of the PubMed, MEDLINE, and PsycINFO databases for mHealth studies published from 2015 to 2020 that evaluated issues or strategies related to the engagement and retention of adults. We followed the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines. Notable themes were identified and narratively compared among different studies. A binomial regression model was generated to examine the factors affecting retention.

Results

Of the 389 identified studies, 62 (15.9%) were included in this review. Overall, most studies were partially successful in maintaining participant engagement. Factors related to particular elements of the app (eg, feedback, appropriate reminders, and in-app support from peers or coaches) and research strategies (eg, compensation and niche samples) that promote retention were identified. Factors that obstructed retention were also identified (eg, lack of support features, technical difficulties, and usefulness of the app). The regression model results showed that a participant is more likely to drop out than to be retained.

Conclusions

Retaining participants is an omnipresent challenge in mHealth studies. The insights from this review can help inform future studies about the factors and strategies to improve participant retention.

Keywords: mobile phone, mHealth, retention, engagement

Introduction

Background

Today, 85% of the US population owns a smartphone, and daily use averages 4.5 hours [1]. With the rise in smartphone ownership and use, smartphones have become one of the most accessible and cost-effective platforms in health care and research. Smartphones are also pervasive across age, race, and socioeconomic status, allowing researchers to reach a wide range of population-level samples inexpensively. Specifically, the adoption of mobile health (mHealth) apps (mobile apps that help monitor and manage the health of participants through smartphones, tablets, and other wireless network devices) has been increasing in the research sphere. The mHealth market is expected to grow at a compound annual growth rate of 17.6% from 2021 to 2028 [2]. In addition, the recent COVID-19 pandemic has led to a rise in the downloads and use of various mHealth apps, highlighting the importance of technology-based remote monitoring and diagnosis for continued advancement in modern health care (eg, [3]).

The greatest advantage of mHealth apps is their convenience. Unlike traditional in-person study settings, mHealth apps can be easily accessed from anywhere at the participant’s convenience. Using apps for remote assessment allows participants to make fewer site visits, substantially reducing the burden of travel and the time needed to participate in laboratory studies. With lowered barriers, it becomes easier for participants to complete repeated testing and share real-time data based on their daily life experiences. Some mHealth research apps also allow participants to communicate directly with their providers via the app, which may enhance both the effectiveness of the app in its goals (eg, in disease management) and adherence in research studies. Given the ubiquity of smartphones among US adults, mHealth apps for research stand to better meet participants where they are.

For researchers, the convenience of mHealth apps allows them to reach out to large and diverse participant populations more inexpensively and efficiently than traditional in-person studies. Recently, several large-scale studies were able to recruit thousands of participants within a span of a few months using Apple’s ResearchKit framework (eg, [4-7]). Using these apps, researchers can monitor day-to-day fluctuations of a wide range of real-time data. For example, self-reported emotional outcomes can be assessed together with passive location data to then infer many other real-time variables, such as physical activity, weather, and air quality, that could potentially affect mood throughout the day.

Despite these overwhelming advantages, many mHealth studies experience high participant attrition rooted in the fundamental challenges of keeping participants engaged. For example, consistent with other large-scale mHealth studies, the notable Stanford-led MyHeart Counts study experienced substantial dropout rates; mean engagement with the app was only 4.1 days [8]. It is a ubiquitous problem across all app uses; approximately 71% of app users are estimated to disengage within 90 days of a new activity [9].

It is imperative for mHealth studies to minimize participant dropout, as substantial attrition may reduce study power and threaten the representativeness of the sample. One potential benefit of mHealth research studies is easier access to well-balanced samples that are representative in terms of race, ethnicity, gender, age, education status, and other characteristics. However, because many studies lose participants, systematic differences between participants who complete a study and those who do not may introduce bias to the sample. Differential retention makes it difficult to conclude whether any observed effects were caused by the intervention itself, retention bias, or inherent differences between groups. Participant dropout also precludes the conduct of longitudinal research.

In an effort to understand the various factors affecting participant retention, recent studies have evaluated recruitment and retention in several remotely conducted mHealth studies. In their cross-study evaluation of 100,000 participants, Pratap et al [10] analyzed individual-level study app use data from 8 studies that accumulated nearly 3.5 million remote health evaluations. Their study identified 4 factors that were significantly associated with increased participant retention: clinician referral, compensation, having the clinical condition of interest, and older age. However, the study focused only on large-scale observational studies run through Sage or ResearchKit, which have especially low barriers to entry and exit. This raises the question of whether these findings apply to smaller-scale studies with varying levels of participation. To our knowledge, other published systematic reviews and meta-analyses on engagement and retention are narrowly focused on one subfield of mHealth research, such as depression or smoking, or are based on only a few studies [11-13]. Thus, their findings cannot readily be extrapolated to mHealth apps outside the same subfield.

Retention strategies could be incorporated as app features to prevent participant dropout. For example, gamifying mHealth apps by incorporating badges, competitions, and rankings should make the experience more enjoyable and provide better incentives for participants. The addition of reminders, such as push notifications and SMS text messages, and enabling communication with clinicians are also expected to increase participant retention. However, the extent of their effectiveness in successfully engaging and retaining participants is not yet well defined.

This Study

One fundamental challenge for many mHealth app studies is the rapid and substantial participant dropout. This study aimed to better understand how mHealth studies conducted in the past 5 years have addressed the challenges of participant engagement and retention. We conducted a systematic review of the literature to identify notable factors and strategies used in participant engagement and retention. We hypothesize that participant attrition will be high overall and that there will be shared challenges across different studies that researchers should be cognizant of in future research.

Methods

Search Criteria and Eligibility

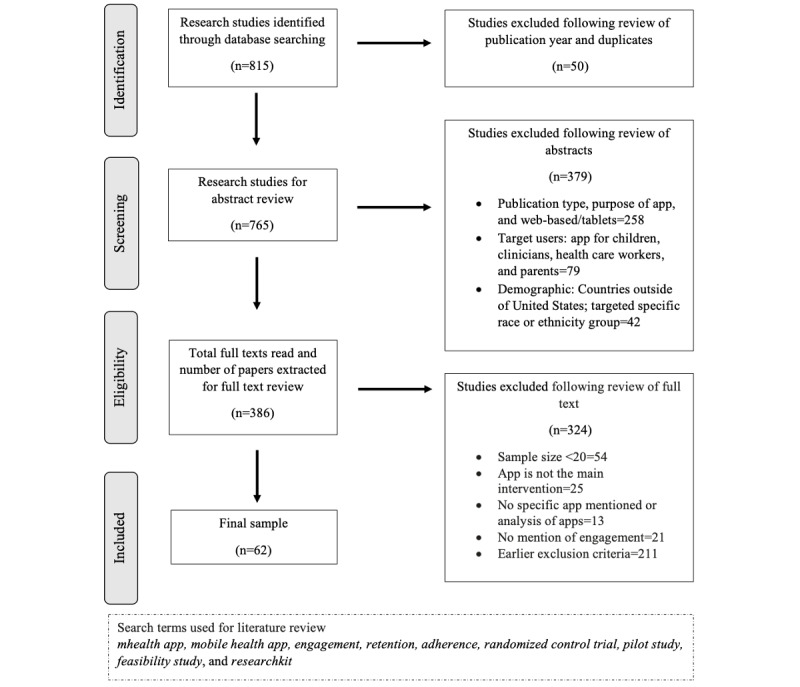

Our methodology was guided by the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) statement [14]. We identified 3 main databases for this search: PubMed, MEDLINE, and PsycINFO. This review aimed to evaluate the engagement and retention of adults in evaluation research on mHealth apps. Studies were included if they were peer-reviewed publications from the last 5 years (January 2015 to October 2020), were conducted within the United States, and had a minimum of 20 adult participants. Refer to Figure 1 for more details on the search strategy and exact search terms. Although mHealth takes many forms, we were exclusively interested in mobile-based apps rather than SMS text messaging, tablets, or web-based interventions. We included studies that used a variety of research methods and designs, including qualitative, quantitative, and mixed methods. To conduct a comprehensive analysis, we also included mHealth apps in various research areas, ranging from smoking cessation to cardiovascular health research. Articles that were written purely as study protocols or design pieces were excluded. As we were primarily interested in mHealth for intervention purposes, we excluded studies that used fitness app data exclusively (eg, Fitbit and digital pedometers), unless they were specifically geared toward a particular health population (eg, breast cancer survivors and patients with other chronic illnesses). We also excluded evaluations of mHealth apps that focused solely on participant education or where the clinician was the focus of the intervention.

Figure 1.

Study selection flowchart.
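The inclusion and exclusion criteria described in this section can be read as a single screening rule. The following is a minimal sketch of such a rule, not the authors' actual screening procedure. The `Study` record, all of its field names, and the exact day-level boundaries of the publication window are assumptions made for illustration; the source states only "January 2015 to October 2020."

```python
from dataclasses import dataclass
from datetime import date

# Window and threshold follow the stated criteria; the exact days are assumed.
WINDOW_START = date(2015, 1, 1)
WINDOW_END = date(2020, 10, 31)
MIN_ADULTS = 20


@dataclass
class Study:
    # Hypothetical coding fields, not the authors' extraction sheet.
    published: date
    peer_reviewed: bool
    conducted_in_us: bool
    n_adults: int
    delivery: str  # eg, "app", "sms", "tablet", "web"
    protocol_only: bool
    education_only: bool
    clinician_focused: bool
    fitness_tracker_only: bool
    targets_health_population: bool


def is_eligible(s: Study) -> bool:
    """Return True when a study passes every stated inclusion and exclusion rule."""
    if not (s.peer_reviewed and s.conducted_in_us):
        return False
    if not (WINDOW_START <= s.published <= WINDOW_END):
        return False
    if s.n_adults < MIN_ADULTS or s.delivery != "app":
        return False
    if s.protocol_only or s.education_only or s.clinician_focused:
        return False
    # Fitness-tracker-only studies are kept only when aimed at a specific health population.
    if s.fitness_tracker_only and not s.targets_health_population:
        return False
    return True


# Hypothetical record: a 2018 app-based study of 45 US adults.
example = Study(date(2018, 5, 1), True, True, 45, "app",
                False, False, False, False, False)
print(is_eligible(example))
```

Writing the criteria as one predicate makes the order of the checks explicit and shows that the fitness-tracker exclusion is conditional rather than absolute.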

Data Extraction and Analysis

We initially extracted basic information from each study: title, year, author, target population, operating system, definition of engagement, sample size and type (clinical vs nonclinical), participant age, study duration, main findings, possible implications, and whether participants were compensated. Most of these data (ie, app system, sample size, sample type, compensation, and participant age) were analyzed quantitatively and are reported as descriptive statistics in the Results section. These data were also used to develop a binomial regression model to determine the factors affecting retention. For the remaining variables, such as the definition of engagement, findings, and implications, we extracted whole sentences or paragraphs that mentioned these items.

Following the narrative approach described by Mays et al [15], the first (SA) and second (SP) authors analyzed the findings and implications of the initial sample extraction to determine potential themes around retention and engagement. At this point, codes were applied to individual considerations of retention and engagement (or lack thereof) within the articles. After several readings of all extracted findings and implications, the second author initially determined approximately 5 themes related to support and barriers to engagement. These themes were developed from sets of codes, and a set of codes was considered a theme once it was identified in at least 2 unique articles. After discussion and agreement with the first author, the second author reread the full-text articles to continue to refine these themes and consolidate the findings. We reached saturation when we could no longer identify new themes during the analysis, a process Saunders et al [16] considered inductive thematic saturation. Descriptions of these themes are presented in the qualitative findings of the Results section. The definitions of engagement themes and success rates were processed in a similar way and are described in the quantitative findings of the Results section.

Results

Final Sample of Studies

After locating all studies that met our search criteria (N=389) and downloading the full text, the first and second authors briefly reviewed the abstracts and full text to determine whether the selected studies met our inclusion criteria. In this process, we confirmed that all the studies that should have been excluded were, in fact, excluded. In a random sample of 100 articles, the authors agreed on 91% (91/100) of these decisions. After reaching an agreement about the other 9% (9/100), the first and second authors divided the rest of the articles for a more detailed review. The final sample comprised 62 articles. Refer to Figure 1 for the study selection flowchart and Multimedia Appendix 1 [4-8,17-73] for characteristics of the studies.

Descriptive Findings

The mean age of mHealth app users across the 62 studies was 44.14 years (range 32-64.9 years), and most samples were drawn from a clinical population (48/62, 77%). The sample size ranged from 20 (our predetermined minimum) to 101,108 participants, with most studies reporting a sample size of <100 (34/62, 55%). Most studies reported compensating participants (34/62, 55%). Nearly half of the articles described apps that were available for both iPhone and Android users (29/62, 47%). Refer to Table 1 for more information about the descriptive statistics.

Table 1.

Descriptive statistics of the 62 studies.

| Characteristic | Values |
| --- | --- |
| Age of users (years), mean (range) | 44.14^a (32-64.9) |
| Sample size^b (n=108), n (%) | |
| 20-49 | 17 (27) |
| 50-99 | 17 (27) |
| 100-499 | 12 (19) |
| >500 | 16 (25) |
| Platform, n (%) | |
| Android | 11 (17) |
| iPhone | 11 (17) |
| Both | 29 (46) |
| Not reported | 11 (17) |
| Clinical vs nonclinical, n (%) | |
| Clinical | 48 (77) |
| Nonclinical | 14 (22) |
| Compensation, n (%) | |
| Provided compensation | 34 (54) |
| No compensation | 28 (45) |
| Success code, n (%) | |
| Not successful | 13 (21) |
| Partially successful | 42 (67) |
| Successful | 6 (9) |
| Not able to calculate | 1 (1) |

^a Adequate information to calculate the mean age was not provided for 13 of the 62 studies. We excluded these studies from the mean age calculation.

^b The sample size ranged from 20 to 101,108. The median was 90.5 (IQR 436).

Definitions

Engagement, Retention, Adherence, Compliance, Completion, etc

We identified 2 main themes regarding the use of definitions in the literature sample. The first finding was that there is no clear agreement on the definition of engagement. This was likely because the literature in this space varies widely across questions, motivations, and perspectives. The second finding was that engagement was often captured by many different terms. In our sample, terms such as retention, adherence, compliance, and completion were sometimes used interchangeably. Despite this lack of clarity, we categorized our final sample into 3 distinct areas of engagement. Almost all studies (59/62, 95%) described or measured some form of engagement around opening or using a specific app. Depending on the interface of the app being studied, this open or use definition encompasses nearly any type of app interaction. In some cases, the number of app opens and the time spent in the app were collected via a backend system, whereas in others, the data that users logged within the app were part of this definition. The 3 articles that did not fit our open or use category relied on self-reported use of the app or measured the completion of intervention activities from the app.

Success

We asked about the extent to which the research was successful in maintaining participant engagement. Regardless of the term used for engagement or retention, we defined success as the percentage of participants with complete data from the initial sample after an intervention. We defined a Success Code variable with 3 categories based on information from the mean and SD. Percentages below the mean minus one SD were considered not successful and percentages above the mean plus one SD were considered successful. Everything else in between was considered partially successful (42/62, 68%). Only 19% (12/62) were considered not successful and 3% (2/62) could not be calculated because they relied on self-reported app use.
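The Success Code rule described above can be sketched in a few lines. This is a minimal illustration rather than the authors' code. The function name, the use of the sample SD (the source does not say which SD was used), and the example percentages are all assumptions.

```python
from statistics import mean, stdev


def success_codes(retention_pct):
    """Label each study's retention percentage relative to the mean +/- 1 SD."""
    m = mean(retention_pct)
    sd = stdev(retention_pct)  # sample SD; an assumption, the source does not specify
    low, high = m - sd, m + sd
    labels = []
    for pct in retention_pct:
        if pct < low:
            labels.append("not successful")
        elif pct > high:
            labels.append("successful")
        else:
            labels.append("partially successful")
    return labels


# Hypothetical retention percentages for 8 studies (not data from this review).
example = [5.0, 40.0, 55.0, 60.0, 62.0, 70.0, 75.0, 98.0]
for pct, label in zip(example, success_codes(example)):
    print(f"{pct:5.1f}% -> {label}")
```

Because the cutoffs are defined relative to the sample's own mean and SD, most studies fall into the middle category by construction. This is worth keeping in mind when interpreting the share of studies coded as partially successful.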

Simultaneously, we developed a binomial regression model to examine the factors that could affect retention. The outcome of our binomial regression model was the proportion of complete data from the final sample. The model was weighted by the sample size of the studies. Table 2 shows the odds ratio estimates and CIs from the binomial regression model. For any given participant, it is more likely that they will not be retained than that they will be retained. Furthermore, the odds of staying in the study were approximately 4 times higher for participants with a clinical condition of interest than for those without one. Moreover, the odds of staying in the study were approximately 10 times higher for participants who were compensated than for those who were not.

Table 2.

Results from the binomial regression model.

| Variable | Odds ratio (95% CI) |
| --- | --- |
| Intercept | 0.09^a (0.093-0.094) |
| Clinical | 4.34^a (4.16-4.52) |
| Compensation | 10.32^a (9.48-11.25) |

^a P<.001.
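To make the odds ratios in Table 2 easier to interpret, the following sketch converts them into approximate retention probabilities. It rests on 3 assumptions that the source does not state: the intercept represents a nonclinical, uncompensated reference group; the model contains only these 2 main effects with no interaction term; and the rounded estimates from the table are precise enough for illustration. The function names are hypothetical.

```python
def odds_to_probability(odds: float) -> float:
    """Convert odds of retention into a probability of retention."""
    return odds / (1.0 + odds)


# Rounded estimates as reported in Table 2.
BASELINE_ODDS = 0.09      # intercept; assumed to be the nonclinical, uncompensated group
OR_CLINICAL = 4.34
OR_COMPENSATION = 10.32


def retention_probability(clinical: bool, compensated: bool) -> float:
    """Predicted retention probability under a main-effects-only logit model."""
    odds = BASELINE_ODDS
    if clinical:
        odds *= OR_CLINICAL
    if compensated:
        odds *= OR_COMPENSATION
    return odds_to_probability(odds)


for clinical in (False, True):
    for compensated in (False, True):
        p = retention_probability(clinical, compensated)
        print(f"clinical={clinical!s:5} compensated={compensated!s:5} p={p:.2f}")
```

Under these assumptions, the predicted retention probability is roughly 0.08 at baseline, 0.28 for clinical samples, 0.48 for compensated samples, and 0.80 when both apply. An odds ratio of about 10 therefore does not mean that a compensated participant is 10 times as likely to be retained in terms of probability; the multiplier applies to the odds.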

Qualitative Findings

Our qualitative findings represent the recurring themes around engagement listed in the findings, discussion, limitations, or conclusions sections of the articles. To be considered a stand-alone theme, the concept must have appeared in at least two independent studies in our sample.

Support Themes

We identified 3 major themes (ie, app affordances, successful recruitment, and low barriers to entry) that researchers mentioned that might have kept the participants engaged in their mHealth apps. Even if the article in the sample did not specifically use these supports, we noted where researchers recommended more work to address these supports in future research.

App Affordances

Affordances are “the quality or property of an object that defines its possible uses or makes clear how it can or should be used” [74]. In the technology space, this word is often used to describe the possibilities of specific actions that software or hardware allows. On the level of the app being studied (either compared with business-as-usual, another app, or something else altogether), there were several affordances that made research participants more engaged or more likely to stay engaged across the study span. One such factor was gamification. According to Fernandez et al [57], “Gamification or the use of game design elements (badges, leaderboards, rewards, and avatars) can help maintain user engagement.” Very few studies have actually implemented gamification, but this theme was often mentioned as a possibility for future research to evaluate. Approximately one-quarter of our sample mentioned gamification as a future tool for promoting or sustaining engagement in a given mHealth app.

Although it was sometimes an area of interest in its own right, most articles mentioned some level of app reminders, feedback, or notifications that promoted engagement. Indeed, Bidargaddi et al [22] tested the effect of timing on weekends versus weekdays and found that users were most likely to engage with the app within 24 hours if prompted midday on the weekend. It is clear that reminders or other feedback through notifications were a supportive element for producing more engagement and less attrition.

Approximately half of the articles mentioned some form of social support provided by coaches or peers within the app. Apps that included a coaching element, either from paraprofessionals, other participants, or the research study team, reported that this social support was critical for maintaining engagement throughout. One specific study by Mao et al [64] reported that 90% of participants who downloaded the app completed 4 months of coaching. This finding was likely because of a combination of participant-selected professional coaches who provided accountability and the social nature of the coaching relationship. In addition to social support, apps featuring tailored and personalized content were more likely to support engagement and adherence to the study.

Successful Recruitment

A total of 2 subthemes were drawn from the discussion of recruitment as support for engagement: recruiting highly motivated niche groups and providing some type of motivator in the form of either an incentive or a compensation. mHealth apps that were focused on a niche or highly motivated group of users tended to be more successful in engaging participants over the course of the study. For example, mHealth apps created to support smoking cessation for adult smokers were more likely to be successful when participants were already highly motivated to stop smoking (eg, [18,40,72]). Most studies also mentioned either some form of compensation or other incentives or motivators that could engage more study participants for a longer period. More than half of the studies mentioned providing some type of compensation. Several articles mentioned that there was also a necessary balance needed to use compensation effectively. Providing too little incentive might make participants less compelled to continue in the research, but at the same time, providing too much incentive could also backfire by reducing their intrinsic motivation to continue. This balance continues to be important for researchers to consider moving forward.

Low Barriers to Entry

Related to both app affordances and recruitment strategies, another subtheme that emerged was apps with extremely low barriers to entry. This theme was best described by McConnell et al [7] in the MyHeart Counts Study. Their app was based on Apple’s ResearchKit and enabled nearly 50,000 participants to register and provide consent for research. By launching a free app on smartphones, the authors stated “...the bar for entry to this study was much lower than that for equivalent studies performed using in-person visits. This lowering has the demonstrated advantage that many people consented...” Several other large-scale studies developed using ResearchKit had the advantage of recruiting and enrolling several thousands of participants [75]. This initial engagement was noted as a benefit, but as we learned later, such a low barrier to entry also often meant a low barrier to exit.

Barrier Themes

Researchers have also mentioned barriers that might diminish participant engagement. Here, we also noted barriers that were addressed in the discussion or limitations section of the articles, even though they were not actively described in the measures or results. These themes were described as (1) the lack of support features; (2) low barriers to exit; (3) technical difficulties in using the app; and (4) somewhat counterintuitively, the usefulness of an app.

Lack of Support Features

Most barriers, either explicitly described or implied, were those that counteracted the support features. Articles routinely mentioned the lack of app affordances and recruitment success. Research involving apps without gamification, notifications of some sort, or support from peers or coaches was more likely to mention these as potential rationales for poor engagement and areas that could be improved in the future. A similar phenomenon was found in terms of recruitment strategies, where the lack of compensation or the lack of a niche group for the app was regularly noted as a barrier to retention.

Low Barriers to Exit

In the same manner that large smartphone-based studies using the ResearchKit format provided a low barrier to entry, they also provided an equally low barrier to exit. For example, the MyHeart Counts Study further noted that when there is a low barrier to entry, there is a “notable disadvantage that those individuals are by definition less invested in the study and thus less likely to complete all portions” [7]. Almost all the apps available from ResearchKit in our sample represent the highest end of the sample size; however, none of the studies received even a partially successful code in our analysis.

Technical Difficulties

Articles that mentioned occasional glitches or bugs in the use of their apps were also likely to describe technical difficulties as a reason for lack of engagement. One study explicitly mentioned the use of the research support team to troubleshoot any technical difficulties for users [35], but most articles did not mention how they handled technology support requests. It is likely that some of the technical difficulties could have been on the app side, especially when the apps tested were in a pilot or beta form, but it is also possible that the participants had their own technical difficulties. None of the studies we evaluated performed any kind of pretest to measure participant comfort or familiarity with apps in general or apps similar to the one being studied. Generally, participants who were young adults or middle-aged were assumed to be good with technology overall. In addition, despite nearly a third of the articles mentioning usability and feasibility as a main investigation, only 5 studies mentioned participant results from the System Usability Scale [76], a standardized measure of usability frequently included in the human-computer interaction research space. Otherwise, usability and feasibility analyses were conducted on a study-by-study basis.

Usefulness of App

Although it may seem counterintuitive, apps that were extremely useful for participants were also some of the apps anecdotally deemed poor at engagement. For example, participants who successfully quit smoking while using a smoking cessation app generally had poor engagement in the long term. Indeed, if an app works, or achieves what it is meant to achieve, and does not offer some kind of regular check-in or maintenance program, it may be reasonable that participants taper the use of the app. In these cases, reduced engagement is a sign of success rather than failure and could actually be considered the goal of the app.

Discussion

Principal Findings

This study synthesizes the literature on mHealth apps and engagement strategies. As mHealth apps continue to grow in popularity and research in this space follows that trend, researchers need to identify what makes participants stay engaged in the app or in studies using the app.

Our review found that most (48/62, 77%) studies were at least partially successful in maintaining participant engagement throughout. Many of these successes were because of the support features of the research or app and the lack of barriers to entry. We determined the categories of strategies that support or detract from engagement. We identified particular elements of the app (eg, feedback, appropriate reminders, and in-app support from peers or coaches) and strategies for research that promote retention (eg, compensation and niche samples) as well as those that do not support retention (eg, lack of support features, technical difficulties, and usefulness of app). Research on the massive population-level ResearchKit apps appeared in both cases, using both successful and unsuccessful engagement and retention techniques. Although low barriers to initial entry could allow thousands of participants to be recruited, the same features also functioned as low barriers to exit. Recruiting a large number of participants is certainly beneficial, but that benefit may be substantially reduced if retention is poor. Future research should consider how to better balance these needs and incorporate factors such as clinical status, referral from providers, and compensation into recruitment plans for population-level apps.

This study used a binomial regression model to assess whether having a clinical condition of interest or receiving compensation affects retention rates. The empirical outcomes of the binomial regression model revealed that (1) any participant is more likely to not be retained than to be retained, (2) participants who have the clinical condition targeted by the study have 4.34 times the odds of staying in the study compared with participants who do not have that condition, and (3) participants receiving compensation have 10.32 times the odds of staying in the study compared with participants who do not receive compensation. These findings, in line with previous research [10], demonstrate that retaining participants is a true challenge for studies using mHealth apps. Unlike that study [10], we were unable to incorporate clinician referral and age as part of our model because of inconsistent reporting in the articles. Although we planned to include other factors of interest, such as participant gender, income level, years of education, and smartphone platform type, the inconsistent reporting across studies made it challenging to accurately compare these variables. We also recognize that our definition of success relies on a normal distribution rather than some other indicator, which might be more appropriate for research with mobile apps that are still in their infancy. To summarize, scientists and researchers must consider different strategies to incentivize and encourage participant retention.

Of course, there is a balance when it comes to successful recruitment strategies, specifically compensation and niche groups. Strong participant engagement or retention may not accurately demonstrate the effectiveness of an app if the participants are overly compensated. Likewise, recruiting a niche group that is highly motivated to use a particular app presents a selection bias and leads to a lack of generalizability of the evaluation findings. Researchers and industry alike would do well to consider this balance when implementing studies using mHealth apps.

Limitations

Although we offer new insights into mHealth apps and participant engagement, this study has some limitations. First, because this was a systematic review, we were unable to make claims about all studies on apps. Owing to the file drawer phenomenon and our use of only peer-reviewed published articles, we do not report any studies that might have found null results, even though they might have described different interesting supports and barriers for engagement. Therefore, we encourage readers to refrain from generalizations about research on all mHealth apps. Second, we initially extracted information about the diversity of the sample; however, not all articles were clear about the diversity and the possible limitations of their own samples. Unfortunately, we were unable to describe these features in detail, although this is a critical area for more scholarship. Future research should consider the diversity in the demographics of published articles on mHealth apps and provide guidance about that.

Implications

We recommend that future mHealth apps consider potential support and barriers to participant engagement. Although the promise of moving health experiences onto the devices that people are currently using is great, many of the same barriers to participant engagement still exist and should be considered before moving research onto smartphone administration exclusively.

Conclusions

Retaining participants is a ubiquitous challenge for studies using mHealth apps. Despite the continued success of mHealth apps in the research sphere, there are many barriers to participant retention and long-term engagement. The insights from this review will help inform future studies about the potential different strategies and factors to consider and improve mHealth app engagement and retention.

Abbreviations

- mHealth: mobile health

- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

Multimedia Appendix 1. Study characteristics of the 62 articles.

Footnotes

Conflicts of Interest: None declared.

