Abstract

Objectives

(1) Systematically review the literature on computerized audit and feedback (e-A&F) systems in healthcare. (2) Compare features of current systems against e-A&F best practices. (3) Generate hypotheses on how e-A&F systems may impact patient care and outcomes.

Methods

We searched MEDLINE (Ovid), EMBASE (Ovid), and CINAHL (Ebsco) databases to December 31, 2020. Two reviewers independently performed selection, extraction, and quality appraisal (Mixed Methods Appraisal Tool). System features were compared with 18 best practices derived from Clinical Performance Feedback Intervention Theory. We then used realist concepts to generate hypotheses on mechanisms of e-A&F impact. Results are reported in accordance with the PRISMA statement.

Results

Our search yielded 4301 unique articles. We included 88 studies evaluating 65 e-A&F systems, spanning a diverse range of clinical areas, including medical, surgical, and general practice settings. Systems adopted a median of 8 best practices (interquartile range 6–10), with 32 systems providing near real-time feedback data and 20 systems incorporating action planning. High-confidence hypotheses suggested that favorable e-A&F systems prompted specific actions, particularly when enabled by timely and role-specific feedback (including patient lists and individual performance data) and embedded action plans, to improve system usage, care quality, and patient outcomes.

Conclusions

e-A&F systems continue to be developed for many clinical applications. Yet, several systems still lack basic features recommended by best practice, such as timely feedback and action planning. Systems should focus on actionability, by providing real-time data for feedback that is specific to user roles, with embedded action plans.

Protocol Registration

PROSPERO CRD42016048695.

Keywords: clinical audit, feedback, quality improvement, benchmarking, informatics, systematic review

INTRODUCTION

Audit and feedback (A&F) is widely used to improve care quality and health outcomes.1 Through summarizing clinical performance over time (audit), and presenting this information to health professionals and their organizations (feedback), it can drive improvements in health outcomes.1–3 There is established literature on predictors of A&F effectiveness, such as targeting low baselines, delivering feedback through supervisors, and frequent feedback.1,3,4 This has led to theories of how A&F produces change in clinical practice and hypothesized features of best practice.5,6 We previously developed a clinical performance feedback intervention theory (CP-FIT): a framework for A&F interventions describing how feedback works and factors that influence success.5 However, little is known about the extent to which this translates to automated or computerized forms of A&F using digital care records and computational approaches, which are increasingly being adopted.

Computerized or electronic audit and feedback (e-A&F) systems, often delivered as “dashboards,” generally incorporate visualization elements to deliver feedback of clinical performance.7 With increasing availability of linked care record data, they offer potential advantages over manual A&F methods through lower costs of producing the audits and quicker feedback.7 The development of e-A&F systems has also changed the dynamics of how clinical performance is understood, evolving from single graphical displays requiring human assistance for feedback into automated multi-functional feedback displays with interactive components.8 Over the last decade, e-A&F systems have moved away from static reports, as interactive interfaces enable users to “drill down,” filter, and prioritize the data, carrying greater potential for flexibility and specificity in feedback.1 e-A&F systems are generally used away from the point-of-care (unlike clinical decision support tools), but can produce timely improvements on individual, team, or organizational levels depending on how feedback data are used to review care performance.7

Two previous systematic reviews examining e-A&F yielded limited insights into the characteristics of successful systems due to the heterogeneity of studies and inclusion criteria.7,9 The most recent (2017) review focused on behavior change theory and included only 7 randomized controlled trials (RCTs).7 This needed updating and extending to consider a wider range of current e-A&F systems in more detail.

A&F systems continue to demonstrate highly variable effects on patient care, though effect sizes have been plateauing for some time.4 Rather than simply studying outcomes, a greater focus on optimization of intervention design is required.10 There is a need for more comprehensive evidence of e-A&F that considers and extends best practice theory to define successful features and components of these systems.7,9 Previous studies have shown that contextual factors need to be considered, which directly affect e-A&F implementation, such as data infrastructure and existing ways of working.7,9,11 A narrative synthesis allows deeper exploration of intervention components, contextual factors, and mechanisms of action to generate further hypotheses regarding outcomes and effect modifiers.12

The aim of this study was to summarize and evaluate the current state of e-A&F, synthesizing the literature to provide useful evidence through learning from successes and failures. Using an extended theoretical framework, we explored how e-A&F system design may be optimized to reduce variability in outcomes.

OBJECTIVES

Objective 1: Systematically review and summarize the literature on published e-A&F systems in healthcare.

Objective 2: Compare features of these e-A&F systems against generic A&F best practices.

Objective 3: Generate hypotheses on how e-A&F systems may impact patient care and outcomes.

METHODS

This article is consistent with PRISMA standards for systematic reviews.13 The protocol of our study is published on the International Prospective Register of Systematic Reviews [PROSPERO CRD42016048695].

Search strategy

We replicated the search strategy of the latest Cochrane review on A&F.1 The search terms for RCT filters were replaced with those relating to computerization (Supplementary File S1), based on the scoping search (described in our protocol) and previous literature.1,5 We searched MEDLINE (Ovid), EMBASE (Ovid), and CINAHL (Ebsco) databases from January 1, 1999 (the earliest publication date of papers from our scoping searches) to December 31, 2020. For each included article, we performed a supplementary search (undertaken up to January 31, 2021) that consisted of reference list, citation, and related article searching to identify further relevant articles. Related article and citation searching was performed in Google Scholar and limited to the first 100 articles to maintain relevance.

Study selection and data extraction

The inclusion criteria are presented in Table 1. We included all peer-reviewed studies on interactive e-A&F systems used by health professionals for care improvements that were implemented in clinical practice. Two reviewers (JT and BB) independently screened titles and abstracts using the inclusion criteria. Full texts were obtained for citations deemed relevant by either reviewer. All full manuscripts were then independently read by the 2 reviewers, and the inclusion criteria reapplied, with any disagreements resolved through discussion. Data extraction and quality appraisal (see below) were undertaken concurrently using a standardized data extraction tool (Supplementary File S2) by JT and reviewed independently by a second researcher (BB). Further discussion of the data and resolution of discrepancies occurred at weekly meetings. Data were collected regarding studies’ characteristics, outcomes, and features of the e-A&F system being studied.

Table 1.

Inclusion criteria and typical examples of exclusions

| Inclusion criteria | Typical exclusion examples |
|---|---|
| Population | |
| Intervention | |
| Outcome | |
| Study type | |

Quality appraisal

We performed quality appraisal (risk of bias) using the Mixed Methods Appraisal Tool (MMAT) version 2011.14 The MMAT is a validated tool that includes assessment criteria of methodological quality for quantitative, qualitative, and mixed methods studies.14,15 These criteria include 2 screening questions and 3–4 design-specific questions, with different study designs having different quality criteria. The results are presented as 1–4 stars, allowing direct comparison between different study types. This was incorporated into a GRADE-CERQual assessment to explicitly evaluate the confidence placed in each individual set of findings from objective 3 (see below).16 The GRADE-CERQual approach incorporates 4 components: methodological limitations, relevance to the review question, coherence of the finding, and adequacy of data. Ratings of “high,” “moderate,” or “low” confidence were given by considering these 4 components in the context of reviewing the evidence supporting the findings, and its relation to the wider review question. Thus, quality appraisal was used to inform data synthesis rather than determine study inclusion, to avoid excluding “low quality” studies that still generated valuable insights.17

Analysis and synthesis

CP-FIT took a central role in framing the analysis and synthesis of data.5 CP-FIT builds on 30 pre-existing theories from a range of disciplines including behavior change, goal setting, context, psychological, sociological, and technology theories.5 It outlines factors for successful feedback cycles in producing behavior changes in health professionals.5 To achieve each of our objectives, we undertook the following analyses:

Objective 1: systematically review the literature on e-A&F systems in healthcare

We categorized common conceptual domains and dimensions of e-A&F systems, allowing grouping and contrasting of interventions to supplement further analyses. Using thematic analysis, we developed codes that described and categorized different features of the e-A&F systems.18 Codes were created both inductively from the data, and by deductively applying codes that describe A&F systems taken from CP-FIT.5

Objective 2: compare features of e-A&F systems against generic A&F “best practices”

We compared each e-A&F system to a list of features from current literature thought to be associated with effective A&F, determining whether each feature was present, absent, or not reported.1,5,6 We focused on 18 features that could be measured more objectively, including those from the latest Cochrane review and theorized features within CP-FIT.1,5 These included a list of defined “cointerventions,” such as “clinical education” and “financial rewards,” but more subjective features of best practice such as credibility and adaptability were excluded.5 We assumed that existing “best practices” for A&F would be applicable to e-A&F systems, but also looked to refine these best practices to increase their relevance to e-A&F. We used linear regression to estimate the trend of best practice features adopted over time.
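The coding step described above can be sketched as follows. This is a minimal illustration rather than the extraction tool used in the review: the system names, the three example practices, and the decision to count a “not reported” feature as not adopted are all assumptions made for the example.

```python
from enum import Enum


class Status(Enum):
    PRESENT = "present"
    ABSENT = "absent"
    NOT_REPORTED = "not reported"


# Hypothetical coding of two systems against three of the 18 best practices.
# System names and codings are illustrative, not extracted from the review.
coding = {
    "system_A": {
        "timely_feedback": Status.PRESENT,
        "action_planning": Status.ABSENT,
        "benchmarking": Status.PRESENT,
    },
    "system_B": {
        "timely_feedback": Status.NOT_REPORTED,
        "action_planning": Status.PRESENT,
        "benchmarking": Status.ABSENT,
    },
}


def count_adopted(features):
    """Count features coded as present (assumption: 'not reported' is not counted)."""
    return sum(1 for status in features.values() if status is Status.PRESENT)


adopted = {name: count_adopted(features) for name, features in coding.items()}
print(adopted)
```

Keeping “not reported” as its own status, rather than folding it into “absent” at the coding stage, leaves room to rerun the count under a different assumption.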

Objective 3: generate hypotheses on how e-A&F systems may impact patient care and outcomes

We adopted realist concepts to summarize our findings and to explore features of e-A&F systems as interventions implemented within complex health and social contexts.5,19 Moving beyond traditional review methods, realist methodology allowed us to look past overall successes or failures of e-A&F systems to generate explanations about how and why these systems work, for whom, and in what contexts.19 Drawing on findings developed in objectives 1 and 2, descriptive and analytical themes were organized into intervention-context-mechanism-outcome (ICMO) configurations to generate further hypotheses.19,20 The resulting synthesis highlighted possible intervention factors (I) of e-A&F systems that when implemented in a specific context (C), acted through various mechanisms (M) to produce particular outcomes (O) of interest (including usage, care quality, and patient outcomes). As in CP-FIT, mechanisms (M) were defined as underlying explanations of how and why an intervention works, related to the feedback itself, the recipient, and the wider context.5,19 Each ICMO configuration was assessed through GRADE-CERQual to explicitly evaluate our confidence for each hypothesis. Included papers were then reread to iteratively test and refine our emerging hypotheses, starting with papers with higher scores of the quality appraisal and GRADE-CERQual.19
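One way to picture an ICMO configuration is as a small record carrying its four elements and its confidence rating. The sketch below is only an illustration of that structure: the class name, field names, and the three example configurations (including their ratings) are hypothetical and do not reproduce the configurations in Supplementary File S5.

```python
from dataclasses import dataclass

# Confidence levels used for GRADE-CERQual ratings, ordered for sorting.
CONFIDENCE_ORDER = {"high": 0, "moderate": 1, "low": 2}


@dataclass(frozen=True)
class ICMO:
    intervention: str
    context: str
    mechanism: str
    outcome: str
    confidence: str  # "high", "moderate", or "low"


# Hypothetical configurations; the wording and ratings are illustrative only.
configs = [
    ICMO("embedded action plans", "primary care", "actionability", "care quality", "high"),
    ICMO("annual feedback", "hospital care", "actionability", "usage", "moderate"),
    ICMO("peer discussion", "hospital care", "social influence", "usage", "low"),
]

# Order configurations so the highest-confidence hypotheses are reviewed first.
ranked = sorted(configs, key=lambda c: CONFIDENCE_ORDER[c.confidence])
high_confidence = [c for c in ranked if c.confidence == "high"]
print([c.intervention for c in ranked])
```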

RESULTS

Study selection

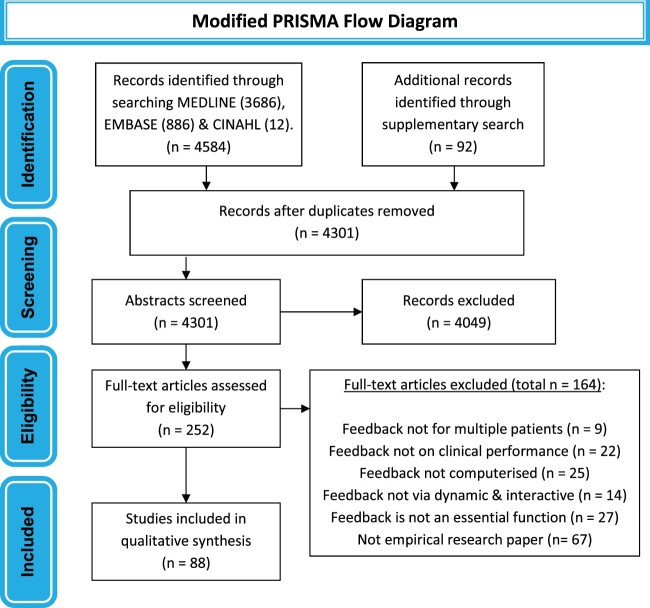

The search of the 3 databases yielded 4584 articles, with 92 more articles being identified in the supplementary search (Figure 1). After removing duplicates, 4301 abstracts were screened. Most articles removed at this stage did not describe an e-A&F system impacting clinical care. A total of 252 full-text articles were assessed and 88 papers studying 65 systems were included in total.

Figure 1.

Flow diagram summarizing the steps of the study selection process.
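The counts reported in the text imply the number of records removed at each stage. The short check below derives them, assuming that database and supplementary records were pooled before duplicates were removed (as in a standard PRISMA flow diagram); the derived figures are arithmetic consequences of that assumption, not numbers stated in the article.

```python
# Counts reported in the text of this review.
database_records = 4584
supplementary_records = 92
screened_after_deduplication = 4301
full_text_assessed = 252
included_papers = 88

# Assumption: database and supplementary records were pooled before
# duplicates were removed, as in a standard PRISMA flow diagram.
identified = database_records + supplementary_records
duplicates_removed = identified - screened_after_deduplication
excluded_at_screening = screened_after_deduplication - full_text_assessed
excluded_at_full_text = full_text_assessed - included_papers

print(identified, duplicates_removed, excluded_at_screening, excluded_at_full_text)
```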

Systematic review of published e-A&F systems (Objective 1)

Included studies varied in study type, timeframe, and reporting of results, with some studies looking at clinician performance, others looking at outcome measures, and some examining systems utilization and integration.21–108 The main characteristics are summarized in Table 2 with full details in Supplementary File S3.

Table 2.

Frequency of main study characteristics

| Characteristic | Category | Count (%)a |
|---|---|---|
| Publication year | 2016–2020 | 43 (49%) |
| | 2011–2015 | 34 (39%) |
| | 2005–2010 | 11 (12%) |
| Quality appraisal (4* being lowest risk of bias) | 4* | 4 (4%) |
| | 3* | 37 (42%) |
| | 2* | 33 (38%) |
| | 1* | 14 (16%) |
| Study type | Randomized controlled trial | 21 (24%) |
| | Nonrandomized controlled trial | 3 (3%) |
| | Cohort study | 5 (6%) |
| | Before and after study | 8 (9%) |
| | Cross sectional study | 3 (3%) |
| | Other quantitative study | 11 (12%) |
| | Qualitative study | 27 (31%) |
| | Mixed methods study | 10 (11%) |
| Continent | North America | 57 (65%) |
| | Europe | 26 (30%) |
| | Asia | 4 (4%) |
| | Australia | 1 (1%) |
| Setting | Hospital care (including secondary and tertiary settings) | 51 (58%) |
| | Outpatient care (including specialty and primary care settings) | 36 (41%) |
| | Nursing home | 1 (1%) |
| Specialty area | Medication safety | 19 (22%) |
| | Diabetes | 17 (19%) |
| | Cardiovascular | 15 (17%) |
| | Respiratory | 6 (7%) |
| | Oncology | 9 (10%) |
| | Nephrology | 2 (2%) |
| | Geriatrics | 4 (4%) |
| | General medicine | 4 (4%) |
| | Infectious disease | 11 (12%) |
| | Surgery | 5 (6%) |
| | Obstetrics | 1 (1%) |
| | Pediatrics | 3 (3%) |
| | Radiology | 4 (4%) |
| | Psychiatry (including substance misuse) | 5 (6%) |

a Counts may add to more than 100% where papers are in multiple categories.

A summary of e-A&F system features is presented in Table 3. Systems targeted a diverse range of aspects of care, the most common being prescribing (32 out of 65 systems) and chronic disease management (24 systems). Most systems (57 of 65) were used by doctors, with 29 systems being designed for doctors alone and 21 systems also involving users with managerial or senior leadership roles. For feedback display, over 70% of systems (46 of 65) included graphical elements. These systems varied in their presentation of line, bar, pie, and box and whisker plots, with some systems (27 of 65) presenting more than one type of graph. Over 80% of systems (53 of 65) incorporated benchmarking elements, with a similar number (51 of 65) displaying specific performance data at individual or practice level. About two-thirds (43 of 65) provided lists of patients, with over a third (24 of 65) providing detailed patient-level data. Over half (34 of 65) deployed interactive functions for prioritization, including sorting and color coding functions.

Table 3.

Summary of computerized audit and feedback (e-A&F) system features

| Feature | Supporting references |
|---|---|
| Prescribing | 27,28,32,35,37,48,50,53,55–57,61,62,65,67,69,72,74,75,77,79,81,84,85,89,93–95,98,100,102,103 |
| Blood test use and monitoring | 22,39,55,63,80,81 |
| Skill-based performance (eg, surgical/radiological) | 24,31,40,42,51,96,99,107 |
| Chronic disease management | 21,26,32,33,35,37,39,43,48,54–56,61,69,75,77–79,84,91,93,97,101,103,104 |
| Acute condition management | 22,37,41,53,67,70,86,92 |
| Disease prevention and screening | 25,27,35,39,54,55,60,61,71,74,75,79,101,103,104 |
| Nursing care | 40,52,59,73,75 |
| Discharge care | 21,80 |
| Patient experience | 25,51,103 |
| | |
| Doctors only | 24–26,31–33,37,42,48,60–63,69,71,74,79,80,84,89,92,93,95–97,99,101,103,104 |
| Doctors and nurses | 27,40,41,51,54,67,78,91,105 |
| Doctors and pharmacists | 28,57,65,81,98 |
| Doctors, nurses, and pharmacists | 21,56,75,77,86,94 |
| Doctors, nurses, and allied health | 22,35,39,43,50,53,70,107 |
| Nurses only | 52,59,73 |
| Pharmacists only | 72,85,100,102 |
| Also involved senior leadership or managerial users | 24,27,28,35,39,40,43,50,51,53,54,57,59,65,73,75,77,81,86,95,105 |
| | |
| Electronic health record data | 21,24,26,28,32,35,39–41,43,53,56,57,63,65,67,69,71,72,74,75,77,79–81,84,86,91,93–95,97,98,101,104,107 |
| Specific prescribing system data | 27,62,65,74,89,92,100,102 |
| Separate biochemistry, laboratory or radiological database | 22,24,41,70,78,91 |
| External national or regional database | 26,37,42,48,50,54,60,73,85,99,103,105 |
| Nursing data | 22,41,52,59,73,75 |
| Healthcare staff self-reported data | 31,33,92 |
| Patient reported outcomes data | 25,51,103 |
| | |
| Graphical elements | 21,22,24–28,31,33,37,40,42,43,48,50,51,53–57,59,60,67,69,72–75,77–81,84–86,89,91,93,94,96,101–103,107 |
| Benchmarking | 21,22,24,25,27,28,31–33,37,39,40,42,43,48,50–57,59,60,63,65,67,69,73–75,77–81,84–86,89,91–93,95–97,99–101,103–105,107 |
| Patient lists | 21,22,24,26,28,35,39–41,48,52,54,55,57,60,62,63,65,67,69–72,74,75,78–81,84,91,92,96–98,101–105,107 |
| Detailed patient-level data | 22,24,26,28,35,39,40,48,55,57,63,65,67,69,70,72,75,77–79,91,92,97,102 |
| Individual performance levels | 22,25,27,31,32,35,37,40,42,43,48,50,51,53,54,56,59–61,63,65,67,69,72–74,77,79,84–86,89,91–97,99,100,103,104,107 |
| Individual practice performance levels (primary care) | 26,57,71,78,80,81,101,104 |
| Qualitative data (free text communication) | 24,52,72,91 |
| Prioritization (color coding or sorting functions) | 21,26,27,35,39,41,43,48,53–55,57,60,65,69,70,72,74,75,77–81,85,86,91,92,95,100–103,107 |
| | |
| Action plans | 24,25,27,32,33,35,42,43,54–56,62,72,73,75,79,84,91,99,101 |
| Financial reward or alignment | 25,28,32,56,57,74,77,79,81,84,103,104 |
| Clinical education | 28,32,33,37,52,53,65,80,81,86,91,92,99,100,105 |
| Peer discussion | 25,27,37,40,43,48,59,65,80,81,86,91,103 |
| External change agent | 43,59,71,77,93 |
| Clinical decision support, reminders, or alerts | 26,32,53,57,71,72,75,79,80,84,91,95,97,102,104 |
| Patient education | 21,65,92 |
| | |
| Leadership support | 21,24,25,27,33,35,39,40,43,50–55,57,59,65,73–75,77,80,81,85,86,93–95,100,103,105 |
| Intraorganizational networks | 21,24,25,27,28,33,39–41,43,50,53,55,57,59,65,70,73–75,77,78,81,86,94,100,102,103,105 |
| Extraorganizational networks | 37,39,40,42,43,50,53–55,57,60,65,75,79,81,85,86,99,101,103–105 |
| Limited reporting of organizational support | 22,26,31,48,56,61–63,67,71,72,84,89,92,96–98,101,107 |
| Champions | 51,55,65,74,75,77,86,105 |
| Feedback delivered to a group | 25,27,33,37,40,43,59,62,74,80,100 |
| Workflow fit considered | 21,25,28,32,55,56,69,74,80,81,84,92,103,104 |
| Limited reporting of implementation process | 26,33,37,40,41,50,51,56,57,61,62,67,70–72,74,78,85,92,95,96,98,99,102,104,107 |

Note: A descriptive summary of the differing features and characteristics of e-A&F systems based on clinical performance feedback intervention theory.

Comparison against generic A&F “best practices” (Objective 2)

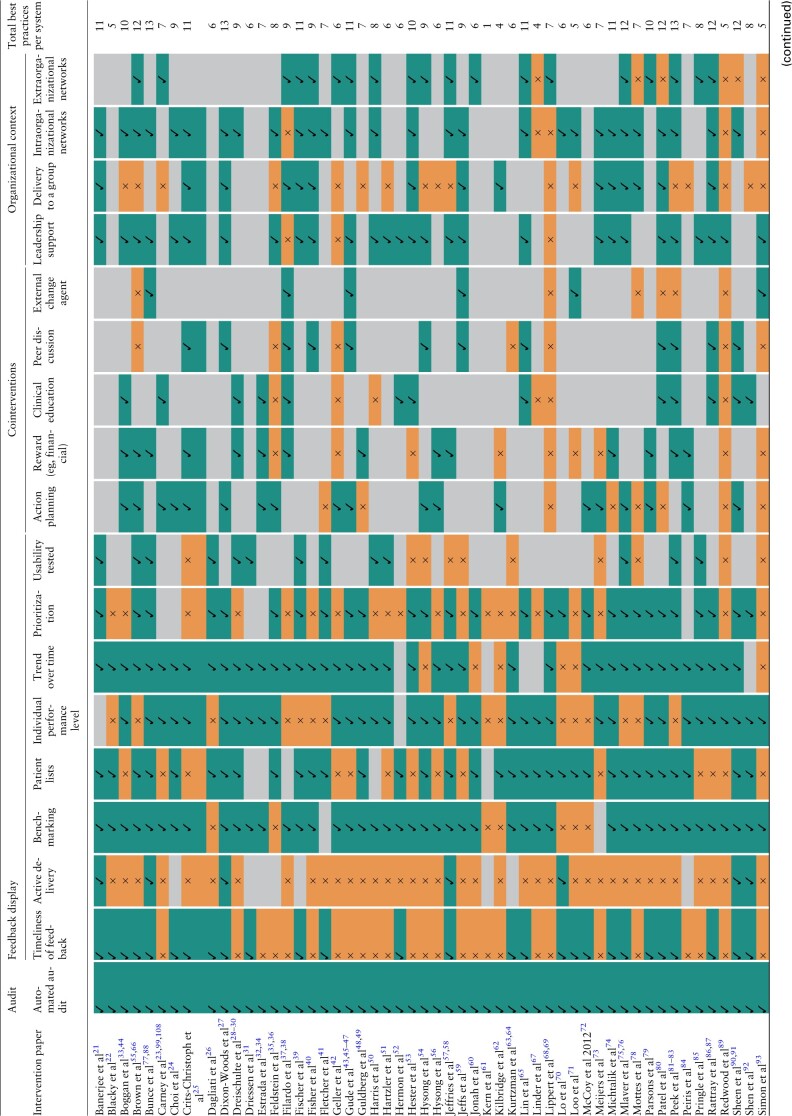

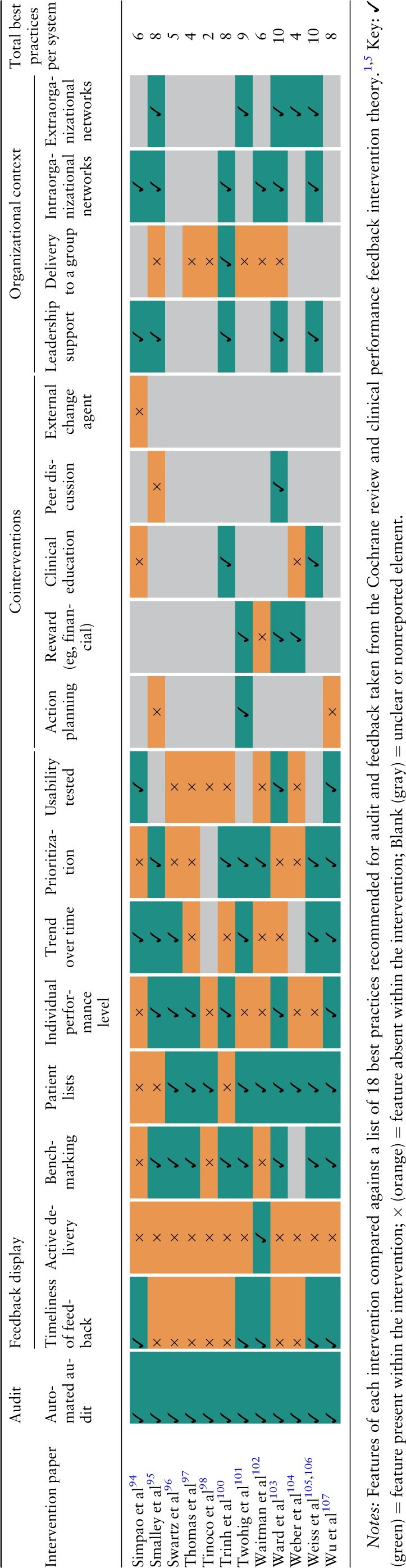

Table 4 summarizes the number of characteristics each e-A&F system had compared against a list of 18 recommended best practices for generic A&F.1,5 Systems adopted a median of 8 best practices (interquartile range 6–10). None of the 65 systems exhibited all 18 best practices (range 1–14). The number of best practice features adopted increased over time, with linear regression estimating 0.40 (95% CI, 0.32–0.48) new features per year (Supplementary File S4).
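The summary statistics reported above (a median with interquartile range, and a regression slope with a 95% confidence interval) can be computed as in the following sketch. The data points are made up for illustration and are not the review's data, and the interval uses a normal approximation (z = 1.96) as a simplifying assumption; the analysis behind Supplementary File S4 may have used a different quantile method or a t-based interval.

```python
import math
import statistics

# Hypothetical data: (publication year, number of best practices adopted).
# These values are made up for illustration and are not the review's data.
systems = [(2006, 4), (2008, 5), (2010, 6), (2012, 7), (2014, 8),
           (2016, 9), (2018, 10), (2020, 11), (2019, 8), (2011, 8)]

counts = [n for _, n in systems]
median = statistics.median(counts)
q1, _, q3 = statistics.quantiles(counts, n=4, method="inclusive")

# Ordinary least squares slope: features adopted per additional year.
years = [y for y, _ in systems]
mean_x, mean_y = statistics.fmean(years), statistics.fmean(counts)
sxx = sum((x - mean_x) ** 2 for x in years)
sxy = sum((x - mean_x) * (y - mean_y) for x, y in systems)
slope = sxy / sxx
intercept = mean_y - slope * mean_x

# Standard error of the slope from the residual variance (n - 2 degrees of freedom).
residuals = [y - (intercept + slope * x) for x, y in systems]
residual_variance = sum(r ** 2 for r in residuals) / (len(systems) - 2)
slope_se = math.sqrt(residual_variance / sxx)

# Assumption: a normal approximation (z = 1.96) is used for the 95% interval;
# a t quantile would give a slightly wider interval for small samples.
ci_low, ci_high = slope - 1.96 * slope_se, slope + 1.96 * slope_se

print(f"median={median}, IQR={q1}-{q3}")
print(f"slope={slope:.2f} per year (95% CI {ci_low:.2f} to {ci_high:.2f})")
```

A slope whose interval excludes zero, as reported for the included systems, indicates that the upward trend is unlikely to be due to chance alone.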

Table 4.

Comparison of computerized audit and feedback systems against theorized best practices


All systems adopted automated audit, with 48 systems showing data on trend over time in uses and functions. Timeliness of feedback data varied, with 32 systems reporting immediate or “near real-time” feedback, and most others (21 systems) reporting feedback monthly or less frequently. “Cointerventions” that were defined as part of recommended best practices were commonly offered alongside e-A&F systems (Tables 3 and 4). Action planning was encouraged by 20 systems, with some containing embedded recommended actions within systems and others encouraging users to define their own action plans. Other common cointerventions included financial or other rewards (17 systems) and clinical education (15 systems). Organizational context was often poorly reported, with 19 systems stating limited information on organizational support and 26 systems having a limited description of their implementation process. For those that specified, 33 systems had leadership support, with 34 systems involving intraorganizational networks and 24 systems involving extraorganizational networks. Intraorganizational networks frequently involved management roles and included specialty committees, working groups, and primary care practice teams. Extraorganizational networks were varied, encompassing widespread academic networks, governmental agencies, and pharmacy chains.

How e-A&F systems may impact patient care and outcomes (Objective 3)

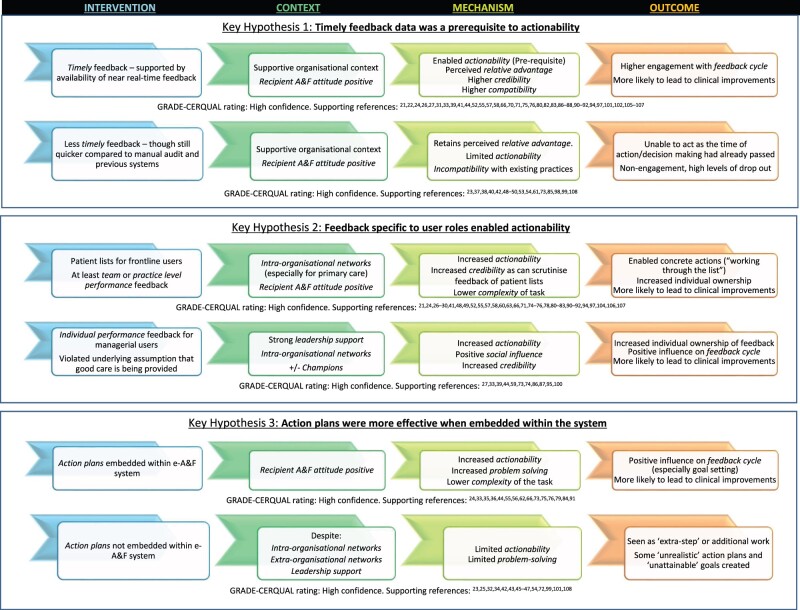

Key findings supported by ICMO configurations are presented in Figure 2. For readability, we focus on high-confidence and novel findings related to e-A&F, with a full list of ICMO configurations and GRADE-CERQual ratings in Supplementary File S5. A substantial proportion of studies (over 30%) reported nonsignificant results or included negative findings, allowing us to compare and contrast ICMOs for these systems.23,25,32,34,37,38,40,42,43,45–47,50,53,54,61,62,70,72,73,77,85,89,93,99,101,108 A large majority of the codes arose from CP-FIT, though some nuanced codes building on CP-FIT were identified inductively (see Supplementary Files S5 and S6).5 When compared with the other mechanisms within CP-FIT, actionability appeared to be the most important mechanism in producing clinical improvements.21–63,65–97,99–108 Actionability is the ability of e-A&F systems to directly facilitate behaviors for users. That is, the more an e-A&F system successfully and directly supported clinical behaviors with tangible or concrete next steps, the more users felt empowered and motivated to act on these behaviors more effectively, also increasing achievability and controllability of the task.21–63,65–97,99–108 Other mechanisms within CP-FIT (eg, reduced complexity, perceived relative advantage, see Supplementary File S6 for full descriptions and explanations) often contributed to successful e-A&F systems, but were less important as influencing factors, and were insufficient to produce clinical improvements alone.23,25,32,34,37,38,40,42,43,45–47,50,53,54,61,62,70,72,73,77,85,89,93,99,101,108,109 Contextual factors were also key effect modifiers of e-A&F systems, as they significantly enabled or limited implementation and engagement with each system.21,23–25,27–30,32–35,37–59,63,65–70,73–91,93–95,99–108 However, despite strong organizational and contextual backing, systems without actionable feedback were unlikely to result in clinical improvements.23,25,32,34,37,38,40,42,43,45–47,50,53,54,61,62,70,72,73,77,85,89,93,99,101,108,109

Figure 2.

Summary of key findings on how computerized audit and feedback systems impact patient care and outcomes. It presents key findings, supported by intervention-context-mechanism-outcome (ICMO) configurations along with supporting references and GRADE-CERQual assessments.16,19 Three key intervention factors were identified that enhanced actionability and were more likely to result in clinical improvements, including the availability of timely data for feedback, feedback functions specific to user roles, and action plans embedded within systems. For a more comprehensive list of ICMOs see Supplementary File S5, with further descriptions and explanations of mechanism constructs in Supplementary File S6. Constructs taken from clinical performance feedback intervention theory are in italics.5

Three key e-A&F intervention factors were identified that enhanced actionability and were more likely to result in clinical improvements:

The availability of real-time data for feedback

Feedback functions specific to user roles

Action plans embedded within systems

Timely feedback data as a prerequisite to actionability

Systems that provided immediately updated or “near real-time” feedback resulted in higher engagement and were more likely to report successful outcomes.21,22,24,26,27,31,33,39,41,44,52,55,57,66,70,71,74–77,79–83,86–88,90–92,94,97,102,105–107 The timeliness of feedback enabled the data to be viewed as more credible and representative of performance.21,22,24,26,27,31,33,39,41,44,52,55,57,58,66,70,71,75,76,80,82,83,86–88,90–92,94,97,101,102,105–107 Importantly, it was reported as a prerequisite for actionability, with less timely feedback frequently being seen as extra work that occurred outside existing workflow.24,27,55,77,83,102,107 Although almost all systems provided more timely feedback compared with manual audit and previous systems, several of these studies reported that without immediate feedback, the delay remained too long for effective action to be taken, despite many users finding the feedback “helpful” or “insightful.”40,48–50,53,54,61,73,85,98 Likewise, the lack of real-time feedback was reported to be a barrier to usage in several studies.48–50,53,73,85

No e-A&F systems providing annual feedback reported significant improvements in patient care, with several studies reporting low usage and high dropout.23,37,38,42,50,61,73,99,108 For instance, the “Web-based Tailored Educational Intervention Data System” produced yearly feedback for users only once, with only 55% of enrolled participants using the system, and large dropout and a null effect by the end.99 This was despite more than 80% of users rating the intervention “very helpful” in several domains, including the usefulness of the feedback for evaluating their practice.23 Similarly, a web-based benchmarking tool for heart failure and pneumonia provided annual retrospective data and had a dropout rate of more than 50% by the end of the study, failing to detect any differences in care performance.37,38

Feedback specific to user roles enabled actionability

e-A&F systems were designed for a wide range of users that fell into 2 main roles. The majority were “frontline” users responsible for delivering care (eg, doctors, nurses, pharmacists), with others being “managerial” users (eg, managers, leadership, or organizational roles). To be directly actionable, feedback needed to be specific to user roles: feedback to “frontline” users mainly required patient lists, whereas for feedback to “managerial” users, the priority was highlighting the specifics of individual performance. Many successful systems presented specific feedback on both patient lists and individual or practice performance levels,24,27–30,35,36,48,60,68,69,74,77–80,84,88,90,91,96,97,103,107 with many using functions such as color coding and sorting21,26,27,35,36,41,48,49,55,57,58,60,66,68–70,74–80,83,90–92,102,107 to enhance prioritization of actions to be taken.

Patient lists to “frontline” users generally highlighted gaps in recommended care, supported by team or practice level performance feedback (particularly for primary care).26,55,57,58,66,71,81–83,104 These electronic patient lists were seen as more efficient than standard care, with the e-A&F system reporting superior effects to alerts within the electronic medical record.57,71,80,104 Many studies without user-specific feedback, including those lacking patient lists23,37,38,42,53,85,89,93,99,108 or individual performance data,40,61,62,70 did not demonstrate significant improvements to patient outcomes. Several of these studies reported insufficient specificity of the data (both on an individual practitioner level and a patient level) to be a barrier to actionability and usage.37,38,54,85 For example, Filardo et al37,38,109 described a benchmarking and case review tool, which combined education initiatives with feedback on aggregate measures, rather than highlighting individual performances.37,38,109 This resulted in no significant effects on patient care, with only 26% completing the full intervention.37,38

Nevertheless, within a strong organizational context, individual clinician performance feedback (even without patient lists) given to “managerial” users or senior staff, particularly from leadership or management, was also effective.27,33,39,44,59,73,74,86,87,95,100 Although this entailed an extra step to deliver feedback to frontline care staff and often required good interdisciplinary collaboration, the process appeared to increase motivation and accountability.27,39,59,86,87,95,100 This process influenced individual users to take ownership of the feedback, including the responsibility to directly address the care gaps highlighted, and prevented the assumption that someone else would.27,39,54,73,74,86,87,95,100 For example, Dixon-Woods et al27 described how the leadership team closely scrutinized the data and set up meetings that effectively targeted individuals who were underperforming in one area or another. With a strong “improvement culture” led by the leadership team, staff viewed their own feedback critically, which over time enabled downstream improvements even without prompts from the leadership team.27 In contrast, Crits-Christoph et al25 designed a system to collect performance ratings of therapeutic alliance, treatment satisfaction, and drug and alcohol use. To protect clinician employment and confidentiality, individual clinicians and patients could not be identified, and so users struggled to act on the feedback.25 Despite monthly meetings, leadership support, and financial incentives, no significant improvements in clinical outcome measures were noted.25

Action plans were more effective when embedded within the system

The e-A&F systems that incorporated action plans as part of their multi-faceted interventions appeared to produce better results.24,33,35,36,44,55,56,62,66,73,75,76,79,84,91 For example, Feldstein et al35,36 designed a dashboard that not only showed color-coded graphs of clinical performance compared with guidelines but also had a list of prompts for how to achieve recommended targets for individual patients (eg, prompts to conduct a screening test or adjust a medication dose). This resulted in significant improvements in care scores for several chronic disease areas, with users feeling “empowered” to proactively manage wider patient needs, particularly for broader clinical roles.35 Similarly, a website reported percentages of patients meeting blood pressure targets to primary care professionals, and importantly included suggested actions designed to be simple and achievable.56 This allowed direct actions to address gaps in performance and resulted in significant increases in the use of guideline-recommended medications for blood pressure.56

Conversely, when users were asked to come up with their own action plan, either as part of meetings or as part of wider quality improvement activity groups, actionability was reduced, and at times this resulted in unrealistic action plans and unattainable goals.23,25,32,34,42,43,45–47,54,72,99,101,108 In a medication safety system targeting patients with acute kidney injury, pharmacists input their own recommendations for doctors, rather than doctors being able to directly action changes in medication.72 This resulted in a time delay before the action plan could be implemented and no improvements in adverse drug reactions or time taken to stop nephrotoxic medications.72

DISCUSSION

This review summarized 88 studies of e-A&F systems, demonstrating their wide range of settings, applications, and characteristics. Despite the advantages of automated audit and analysis over manual methods, it was insufficient for e-A&F systems simply to feed back more data, or solely to present measurements and targets for performance. When compared with generic A&F best practices, there was an increased expectation for e-A&F systems to present more precise and nuanced feedback, and to make it easy to act on or present viable next steps to improve patient care. Established effective components of wider A&F interventions include timely feedback, individualized feedback, and action planning.1,5 Yet, even some recent e-A&F systems lacked these, with extensive inconsistencies between different systems. Our review highlights more nuanced requirements for e-A&F, including the availability of immediate or “near real-time” data for feedback; feedback functions that were specific to user roles (including “patient lists” for frontline users and “individual performance feedback” for senior or managerial users); and embedding action plans within systems. A key consideration for successful e-A&F was enabling feedback to be actionable, yet underlying contexts of organizations, resources, and user characteristics deeply affected the uptake of e-A&F systems, considerably influencing their effects in several studies.

Comparison with existing literature

Our review builds on wider evidence regarding A&F, revealing important findings for computerized interventions.1,3,4 In particular, e-A&F systems offer opportunities to enhance the positive effects of 3 known generic A&F best practices: timeliness, specificity, and action planning.1,3,4,6,10 Our findings present a more explicit understanding of these, recommending the provision of real-time data, feedback functions tailored to user roles (particularly patient lists to frontline users and individual performance data to managerial roles), along with embedded action plans. With an increasing uptake of e-A&F, wider A&F best practices could be extended to take these into account.1,5,6 Our review utilized a list of 18 best practices, focusing on more objective features to aid clarity, but this was only one way of classifying e-A&F system components. Though there is considerable overlap, others have proposed slightly different classifications.4,6,10,110 Our approach was guided by the reporting within papers and explicitly considered organizational factors and cointerventions, though it omitted more complex and subjective characteristics that were less evidently reported, such as trust or identity.1,5,6,10

Two systematic reviews on e-A&F systems have been performed previously, in 2015 and 2017. Dowding et al9 included 11 studies on dashboards, highlighting that contextual factors were key to the usage of e-A&F systems and hence the effect on outcomes. Tuti et al7 examined 7 RCTs, but noted highly heterogeneous effect sizes. Our review builds on these findings, adopting broader inclusion criteria to examine a wider range of studies in a narrative synthesis and to identify characteristics of e-A&F systems more likely to result in care improvements. Consistent with findings from these 2 previous reviews, several contextual factors within included “best practices” appeared to be beneficial in encouraging the uptake of systems and positive outcomes. In particular, leadership support and intraorganizational networks appeared to support user role-specific feedback, strengthening motivation and accountability to act on feedback data.

Implications for practice

This review complements wider literature in advocating an “action over measurement” approach.111,112 With limited time and resources in healthcare, actionability within e-A&F systems appears important to enable tangible changes in care, rather than simply chasing targets or measuring performance.113 Important features highlighted by this review to enable actionability include the availability of real-time data, feedback specific to user roles, and embedded action plans. However, even some recent systems lacked basic features recommended by best practice, such as timely feedback and action planning. With e-A&F systems increasing in their potential functions and complexity, this suggests a need for codesign with relevant stakeholders to increase usability, participation, and sustainability that takes into account theorized “best practices.”114,115 Otherwise, with increasing complexity, computerized tools are more likely to result in nonadoption and abandonment.116,117 Enhancing functionality of e-A&F systems alone would be futile if computerized tools failed in their uptake, implementation, or sustainability.

Strengths and limitations

This is the largest review of studies focusing on e-A&F to date. It incorporated CP-FIT and applied realist principles in exploring a wide range of literature, from RCTs to qualitative studies, to generate a rich insight into the current state of e-A&F systems. Our synthesis considered all studies regardless of methodological quality, but confidence in the findings was guided by our quality appraisal and GRADE-CERQual assessment. Applying CP-FIT allowed a greater depth of analysis based on theoretical findings for wider A&F and a framework of hypothesized “best practices.” However, use of CP-FIT may at the same time have limited novel themes, as findings may have been biased toward preformed constructs. Through CP-FIT, we aimed to extend existing knowledge frameworks on wider A&F through application to e-A&F systems. Though we attempted to focus on findings specific to e-A&F, it was not always possible to ascertain whether features for success or failure were specific to just e-A&F or inherent to A&F interventions more generally.

As with other literature syntheses, our results are limited by the reporting and transparency of the authors within original studies. Though we propose and prioritize key mechanisms for success, our review was not designed to quantify causal effects or relative effect sizes. There is a degree of uncertainty as to whether our highlighted mechanisms have a significant causal effect on process and outcomes, and it is possible that underreported features may have greater effects on patient care. Our review likely identified studies with a predisposition towards recruiting participants from organizations with better resources and infrastructures, particularly in information technology, and hence our findings may be less applicable to low-resource settings. We also restricted our search to published articles within medical databases and Google Scholar to focus on systems for healthcare, but searching further technology-focused databases (eg, IEEE Xplore and ACM Digital Library) may have yielded further studies. Iterative interpretation of data is a core component of realist synthesis, but this has obvious implications for the replication of findings from the review, as others may have interpreted the evidence differently.

CONCLUSIONS

e-A&F systems continue to be developed for a wide range of clinical applications. Yet several systems still lack basic features recommended by best practice, such as timely feedback and action planning. e-A&F systems should consistently incorporate best practices that enhance actionability by using real-time data, feeding back in ways that are specific to user roles, and providing embedded action plans. Future research needs to address inconsistencies in e-A&F system features, to ensure development incorporates features recommended by best practice, which can increase actionability of feedback and may improve outcomes.

FUNDING

This article is linked to independent research funded by the National Institute for Health Research (NIHR) through the Greater Manchester Patient Safety Translational Research Centre (award No. PSTRC-2016-003).

AUTHOR CONTRIBUTIONS

JT and BB conceived of the article and developed the study design. JT and BB performed study selection, screening, extraction, and quality appraisal. Results were developed by JT and BB under the supervision of SV and NP. JT wrote the article with contributions and comments from BB, SV, NP, IB. JT is guarantor of the article.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

CONFLICT OF INTEREST STATEMENT

The authors declare that they have no competing interests. The views expressed in this document are those of the authors and not necessarily those of the NHS, NIHR, or the Department of Health and Social Care.

DATA AVAILABILITY

The data supporting the findings of this study are available within Supplementary Files, with further datasets available upon reasonable request.

Contributor Information

Jung Yin Tsang, Centre for Health Informatics, Division of Informatics, Imaging and Data Science, Faculty of Biology, Medicine and Health, Manchester Academic Health Science Centre, The University of Manchester, Manchester, UK; Centre for Primary Care and Health Services Research, University of Manchester, Manchester, UK; NIHR Greater Manchester Patient Safety Translational Research Centre (GMPSTRC), University of Manchester, Manchester, UK.

Niels Peek, Centre for Health Informatics, Division of Informatics, Imaging and Data Science, Faculty of Biology, Medicine and Health, Manchester Academic Health Science Centre, The University of Manchester, Manchester, UK; NIHR Greater Manchester Patient Safety Translational Research Centre (GMPSTRC), University of Manchester, Manchester, UK; NIHR Applied Research Collaboration Greater Manchester, University of Manchester, Manchester, UK.

Iain Buchan, Institute of Population Health, University of Liverpool, Liverpool, UK.

Sabine N van der Veer, Centre for Health Informatics, Division of Informatics, Imaging and Data Science, Faculty of Biology, Medicine and Health, Manchester Academic Health Science Centre, The University of Manchester, Manchester, UK.

Benjamin Brown, Centre for Health Informatics, Division of Informatics, Imaging and Data Science, Faculty of Biology, Medicine and Health, Manchester Academic Health Science Centre, The University of Manchester, Manchester, UK; Centre for Primary Care and Health Services Research, University of Manchester, Manchester, UK; NIHR Greater Manchester Patient Safety Translational Research Centre (GMPSTRC), University of Manchester, Manchester, UK.

REFERENCES

- 1. Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev 2012; 6: CD000259.

- 2. Jamtvedt G, Young JM, Kristoffersen DT, O’Brien MA, Oxman AD. Does telling people what they have been doing change what they do? A systematic review of the effects of audit and feedback. Qual Saf Health Care 2006; 15 (6): 433–6.

- 3. Hysong SJ. Meta-analysis: audit and feedback features impact effectiveness on care quality. Med Care 2009; 47 (3): 356–63.

- 4. Ivers NM, Grimshaw JM, Jamtvedt G, et al. Growing literature, stagnant science? Systematic review, meta-regression and cumulative analysis of audit and feedback interventions in health care. J Gen Intern Med 2014; 29 (11): 1534–41.

- 5. Brown B, Gude WT, Blakeman T, et al. Clinical Performance Feedback Intervention Theory (CP-FIT): a new theory for designing, implementing, and evaluating feedback in health care based on a systematic review and meta-synthesis of qualitative research. Implement Sci 2019; 14 (1): 40.

- 6. Brehaut JC, Colquhoun HL, Eva KW, et al. Practice feedback interventions: 15 suggestions for optimizing effectiveness. Ann Intern Med 2016; 164 (6): 435–41.

- 7. Tuti T, Nzinga J, Njoroge M, et al. A systematic review of electronic audit and feedback: intervention effectiveness and use of behaviour change theory. Implement Sci 2017; 12 (1): 61.

- 8. Wu DTY, Chen AT, Manning JD, et al. Evaluating visual analytics for health informatics applications: a systematic review from the American Medical Informatics Association Visual Analytics Working Group Task Force on Evaluation. J Am Med Inform Assoc 2019; 26 (4): 314–23.

- 9. Dowding D, Randell R, Gardner P, et al. Dashboards for improving patient care: review of the literature. Int J Med Inform 2015; 84 (2): 87–100.

- 10. Colquhoun HL, Carroll K, Eva KW, et al. Advancing the literature on designing audit and feedback interventions: identifying theory-informed hypotheses. Implement Sci 2017; 12 (1): 117.

- 11. Ivers N, Barnsley J, Upshur R, et al. “My approach to this job is…one person at a time”: perceived discordance between population-level quality targets and patient-centred care. Can Fam Physician 2014; 60 (3): 258–66.

- 12. Barnett-Page E, Thomas J. Methods for the synthesis of qualitative research: a critical review. BMC Med Res Methodol 2009; 9: 59.

- 13. Moher D, Shamseer L, Clarke M, et al.; PRISMA-P Group. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev 2015; 4 (1): 1–9.

- 14. Pluye P, Gagnon M-P, Griffiths F, Johnson-Lafleur J. A scoring system for appraising mixed methods research, and concomitantly appraising qualitative, quantitative and mixed methods primary studies in Mixed Studies Reviews. Int J Nurs Stud 2009; 46 (4): 529–46.

- 15. Pace R, Pluye P, Bartlett G, et al. Testing the reliability and efficiency of the pilot Mixed Methods Appraisal Tool (MMAT) for systematic mixed studies review. Int J Nurs Stud 2012; 49 (1): 47–53.

- 16. Lewin S, Glenton C, Munthe-Kaas H, et al. Using qualitative evidence in decision making for health and social interventions: an approach to assess confidence in findings from qualitative evidence syntheses (GRADE-CERQual). PLoS Med 2015; 12 (10): e1001895.

- 17. Dixon-Woods M, Sutton A, Shaw R, et al. Appraising qualitative research for inclusion in systematic reviews: a quantitative and qualitative comparison of three methods. J Health Serv Res Policy 2007; 12 (1): 42–7.

- 18. Green J, Thorogood N. Qualitative Methods for Health Research. Los Angeles: Sage; 2018.

- 19. Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist review-a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy 2005; 10 (Suppl 1): 21–34.

- 20. Punton M, Vogel I, Lloyd R. Reflections from a realist evaluation in progress: scaling ladders and stitching theory. Brighton: Institute of Development Studies; 2016.

- 21. Banerjee D, Thompson C, Kell C, et al. An informatics-based approach to reducing heart failure all-cause readmissions: the Stanford heart failure dashboard. J Am Med Inform Assoc 2017; 24 (3): 550–5.

- 22. Blacky A, Mandl H, Adlassnig K-P, Koller W. Fully automated surveillance of healthcare-associated infections with MONI-ICU: a breakthrough in clinical infection surveillance. Appl Clin Inform 2011; 2 (3): 365–72.

- 23. Carney PA, Geller BM, Sickles EA, et al. Feasibility and satisfaction with a tailored web-based audit intervention for recalibrating radiologists’ thresholds for conducting additional work-up. Acad Radiol 2011; 18 (3): 369–76.

- 24. Choi HH, Clark J, Jay AK, Filice RW. Minimizing barriers in learning for on-call radiology residents-end-to-end web-based resident feedback system. J Digit Imaging 2018; 31 (1): 117–23.

- 25. Crits-Christoph P, Ring-Kurtz S, McClure B, et al. A randomized controlled study of a web-based performance improvement system for substance abuse treatment providers. J Subst Abuse Treat 2010; 38 (3): 251–62.

- 26. Dagliati A, Sacchi L, Tibollo V, et al. A dashboard-based system for supporting diabetes care. J Am Med Inform Assoc 2018; 25 (5): 538–47.

- 27. Dixon-Woods M, Redwood S, Leslie M, Minion J, Martin GP, Coleman JJ. Improving quality and safety of care using “technovigilance”: an ethnographic case study of secondary use of data from an electronic prescribing and decision support system. Milbank Q 2013; 91 (3): 424–54.

- 28. Dreischulte T, Donnan P, Grant A, Hapca A, McCowan C, Guthrie B. Safer prescribing—a trial of education, informatics, and financial incentives. N Engl J Med 2016; 374 (11): 1053–64.

- 29. Grant A, Dreischulte T, Guthrie B. Process evaluation of the Data-driven Quality Improvement in Primary Care (DQIP) trial: case study evaluation of adoption and maintenance of a complex intervention to reduce high-risk primary care prescribing. BMJ Open 2017; 7 (3): e015281.

- 30. Grant A, Dreischulte T, Guthrie B. Process evaluation of the data-driven quality improvement in primary care (DQIP) trial: active and less active ingredients of a multi-component complex intervention to reduce high-risk primary care prescribing. Implement Sci 2017; 12 (1): 4.

- 31. Driessen SRC, Van Zwet EW, Haazebroek P, et al. A dynamic quality assessment tool for laparoscopic hysterectomy to measure surgical outcomes. Am J Obstet Gynecol 2016; 215: 754.e1–8.

- 32. Estrada CA, Safford MM, Salanitro AH, et al. A web-based diabetes intervention for physician: a cluster-randomized effectiveness trial. Int J Qual Health Care 2011; 23 (6): 682–9.

- 33. Boggan JC, Cheely G, Shah BR, et al. A novel approach to practice-based learning and improvement using a web-based audit and feedback module. J Grad Med Educ 2014; 6 (3): 541–6.

- 34. Billue KL, Safford MM, Salanitro AH, et al. Medication intensification in diabetes in rural primary care: a cluster-randomised effectiveness trial. BMJ Open 2012; 2 (5): e000959.

- 35. Feldstein AC, Schneider JL, Unitan R, et al. Health care worker perspectives inform optimization of patient panel-support tools: a qualitative study. Popul Health Manag 2013; 16 (2): 107–19.

- 36. Feldstein AC, Perrin NA, Unitan R, et al. Effect of a patient panel-support tool on care delivery. Am J Manag Care 2010; 16 (10): e256–66.

- 37. Filardo G, Nicewander D, Herrin J, et al. A hospital-randomized controlled trial of a formal quality improvement educational program in rural and small community Texas hospitals: one year results. Int J Qual Health Care 2009; 21 (4): 225–32.

- 38. Filardo G, Nicewander D, Herrin J, et al. Challenges in conducting a hospital-randomized trial of an educational quality improvement intervention in rural and small community hospitals. Am J Med Qual 2008; 23 (6): 440–7.

- 39. Fischer MJ, Kourany WM, Sovern K, et al. Development, implementation and user experience of the Veterans Health Administration (VHA) dialysis dashboard. BMC Nephrol 2020; 21 (1): 136.

- 40. Fisher JC, Godfried DH, Lighter-Fisher J, et al. A novel approach to leveraging electronic health record data to enhance pediatric surgical quality improvement bundle process compliance. J Pediatr Surg 2016; 51 (6): 1030–3.

- 41. Fletcher GS, Aaronson BA, White AA, Julka R. Effect of a real-time electronic dashboard on a rapid response system. J Med Syst 2017; 42 (1): 5.

- 42. Geller BM, Ichikawa L, Miglioretti DL, Eastman D. Web-based mammography audit feedback. Am J Roentgenol 2012; 198 (6): 562–7.

- 43. Gude WT, van Engen-Verheul MM, van der Veer SN, et al. Effect of a web-based audit and feedback intervention with outreach visits on the clinical performance of multidisciplinary teams: a cluster-randomized trial in cardiac rehabilitation. Implement Sci 2016; 11 (1): 160.

- 44. Boggan JC, Swaminathan A, Thomas S, Simel DL, Zaas AK, Bae JG. Improving timely resident follow-up and communication of results in ambulatory clinics utilizing a web-based audit and feedback module. J Grad Med Educ 2017; 9 (2): 195–200.

- 45. Gude WT, van Engen-Verheul MM, van der Veer SN, de Keizer NF, Peek N. How does audit and feedback influence intentions of health professionals to improve practice? A laboratory experiment and field study in cardiac rehabilitation. BMJ Qual Saf 2017; 26 (4): 279–87.

- 46. Gude WT, Van Der Veer SN, Van Engen-Verheul MMM, De Keizer NF, Peek N. Inside the black box of audit and feedback: a laboratory study to explore determinants of improvement target selection by healthcare professionals in cardiac rehabilitation. Stud Health Technol Inform 2015; 216: 424–8.

- 47. van Engen-Verheul MM, Gude WT, van der Veer SN, et al. Improving guideline concordance in multidisciplinary teams: preliminary results of a cluster-randomized trial evaluating the effect of a web-based audit and feedback intervention with outreach visits. AMIA Annu Symp Proc 2015; 2015: 2101–10.

- 48. Guldberg TL, Vedsted P, Kristensen JK, Lauritzen T. Improved quality of Type 2 diabetes care following electronic feedback of treatment status to general practitioners: A cluster randomized controlled trial. Diabet Med 2011; 28 (3): 325–32.

- 49. Guldberg TL, Vedsted P, Lauritzen T, Zoffmann V. Suboptimal quality of type 2 diabetes care discovered through electronic feedback led to increased nurse-GP cooperation. A qualitative study. Prim Care Diabetes 2010; 4 (1): 33–9.

- 50. Harris S, Morgan M, Davies E. Web-based reporting of the results of the 2006 Four Country Prevalence Survey of Healthcare Associated Infections. J Hosp Infect 2008; 69 (3): 258–64.

- 51. Hartzler AL, Chaudhuri S, Fey BC, Flum DR, Lavallee D. Integrating patient-reported outcomes into spine surgical care through visual dashboards: lessons learned from human-centered design. EGEMS (Wash DC) 2015; 3 (2): 1133.

- 52. Hermon A, Pain T, Beckett P, et al. Improving compliance with central venous catheter care bundles using electronic records. Nurs Crit Care 2015; 20 (4): 196–203.

- 53. Hester G, Lang T, Madsen L, Tambyraja R, Zenker P. Timely data for targeted quality improvement interventions: use of a visual analytics dashboard for bronchiolitis. Appl Clin Inform 2019; 10 (1): 168–74.

- 54. Hysong SJ, Knox MK, Haidet P. Examining clinical performance feedback in Patient-Aligned Care Teams. J Gen Intern Med 2014; 29 (S2): S667–74.

- 55. Brown B, Balatsoukas P, Williams R, Sperrin M, Buchan I. Multi-method laboratory user evaluation of an actionable clinical performance information system: implications for usability and patient safety. J Biomed Inform 2018; 77: 62–80.

- 56. Hysong SJ, Kell HJ, Petersen LA, Campbell BA, Trautner BW. Theory-based and evidence-based design of audit and feedback programmes: examples from two clinical intervention studies. BMJ Qual Saf 2017; 26 (4): 323–34.

- 57. Jeffries M, Phipps DL, Howard RL, Avery AJ, Rodgers S, Ashcroft DM. Understanding the implementation and adoption of a technological intervention to improve medication safety in primary care: a realist evaluation. BMC Health Serv Res 2017; 17 (1): 196.

- 58. Jeffries M, Phipps D, Howard RL, Avery A, Rodgers S, Ashcroft D. Understanding the implementation and adoption of an information technology intervention to support medicine optimisation in primary care: qualitative study using strong structuration theory. BMJ Open 2017; 7 (5): e014810.

- 59. Jeffs L, Beswick S, Lo J, Lai Y, Chhun A, Campbell H. Insights from staff nurses and managers on unit-specific nursing performance dashboards: a qualitative study. BMJ Qual Saf 2014; 23 (12): 1001–6.

- 60. Jonah L, Pefoyo AK, Lee A, et al. Evaluation of the effect of an audit and feedback reporting tool on screening participation: The Primary Care Screening Activity Report (PCSAR). Prev Med 2017; 96: 135–43.

- 61. Kern LM, Malhotra S, Barrón Y, et al. Accuracy of electronically reported “meaningful use” clinical quality measures: a cross-sectional study. Ann Intern Med 2013; 158 (2): 77–83.

- 62. Kilbridge PM, Noirot LA, Reichley RM, et al. Computerized surveillance for adverse drug events in a pediatric hospital. J Am Med Inform Assoc 2009; 16 (5): 607–12.

- 63. Kurtzman G, Dine J, Epstein A, et al. Internal medicine resident engagement with a laboratory utilization dashboard: mixed methods study. J Hosp Med 2017; 12 (9): 743–6.

- 64. Ryskina K, Dine CJ, Gitelman Y, et al. Effect of social comparison feedback on laboratory test ordering for hospitalized patients: a randomized controlled trial. J Gen Intern Med 2018; 33 (10): 1639–45.

- 65. Lin LA, Bohnert ASB, Kerns RD, Clay MA, Ganoczy D, Ilgen MA. Impact of the Opioid Safety Initiative on opioid-related prescribing in veterans. Pain 2017; 158 (5): 833–9.

- 66. Tsang JY, Brown B, Peek N, Campbell S, Blakeman T. Mixed methods evaluation of a computerised audit and feedback dashboard to improve patient safety through targeting acute kidney injury (AKI) in primary care. Int J Med Inform 2021; 145: 104299.

- 67. Linder JA, Schnipper JL, Tsurikova R, et al. Electronic health record feedback to improve antibiotic prescribing for acute respiratory infections. Am J Manag Care 2010; 16 (12 Suppl HIT): e311–9.

- 68. Lippert ML, Kousgaard MB, Bjerrum L. General practitioners uses and perceptions of voluntary electronic feedback on treatment outcomes—a qualitative study. BMC Fam Pract 2014; 15: 193.

- 69. Schroll H, Christensen RD, Thomsen JL, Andersen M, Friborg S, Søndergaard J. The Danish model for improvement of diabetes care in general practice: impact of automated collection and feedback of patient data. Int J Family Med 2012; 2012: 1–5.

- 70. Lo Y-S, Lee W-S, Chen G-B, Liu C-T. Improving the work efficiency of healthcare-associated infection surveillance using electronic medical records. Comput Methods Programs Biomed 2014; 117 (2): 351–9.

- 71. Loo TS, Davis RB, Lipsitz LA, et al. Electronic medical record reminders and panel management to improve primary care of elderly patients. Arch Intern Med 2011; 171 (17): 1552–8. [DOI] [PubMed] [Google Scholar]

- 72. McCoy AB, Cox ZL, Neal EB, et al. Real-time pharmacy surveillance and clinical decision support to reduce adverse drug events in acute kidney injury. Appl Clin Inform 2012; 3 (2): 221–38. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73. Meijers JMM, Halfens RJG, Mijnarends DM, Mostert H, Schols JMGA. A feedback system to improve the quality of nutritional care. Nutrition 2013; 29 (7-8): 1037–41. [DOI] [PubMed] [Google Scholar]

- 74. Michtalik HJ, Carolan HT, Haut ER, et al. Use of provider-level dashboards and pay-for-performance in venous thromboembolism prophylaxis. J Hosp Med 2015; 10 (3): 172–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75. Mlaver E, Schnipper JL, Boxer RB, et al. User-centered collaborative design and development of an inpatient safety dashboard. Jt Comm J Qual Patient Saf 2017; 43 (12): 676–85. [DOI] [PubMed] [Google Scholar]

- 76. Bersani K, Fuller TE, Garabedian P, et al. Use, perceived usability, and barriers to implementation of a patient safety dashboard integrated within a vendor EHR. Appl Clin Inform 2020; 11 (1): 34–45. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77. Bunce AE, Gold R, Davis JV, et al. “Salt in the Wound”: safety net clinician perspectives on performance feedback derived from EHR data. J Ambul Care Manage 2017; 40 (1): 26–35. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78. Mottes TA, Goldstein SL, Basu RK. Process based quality improvement using a continuous renal replacement therapy dashboard. BMC Nephrol 2019; 20 (1): 17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 79. Parsons A, McCullough C, Wang J, Shih S. Validity of electronic health record-derived quality measurement for performance monitoring. J Am Med Inform Assoc 2012; 19 (4): 604–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80. Patel S, Rajkomar A, Harrison JD, et al. Next-generation audit and feedback for inpatient quality improvement using electronic health record data: a cluster randomised controlled trial. BMJ Qual Saf 2018; 27 (9): 691–9. [DOI] [PubMed] [Google Scholar]

- 81. Peek N, Gude WT, Keers RN, et al. Evaluation of a pharmacist-led actionable audit and feedback intervention for improving medication safety in UK primary care: An interrupted time series analysis. PLoS Med 2020; 17 (10): e1003286. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82. Jeffries M, Gude WT, Keers RN, et al. Understanding the utilisation of a novel interactive electronic medication safety dashboard in general practice: a mixed methods study. BMC Med Inform Decis Mak 2020; 20 (1): 69. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83. Jeffries M, Keers RN, Phipps DL, et al. Developing a learning health system: Insights from a qualitative process evaluation of a pharmacist-led electronic audit and feedback intervention to improve medication safety in primary care. PLoS One 2018; 13 (10): e0205419. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84. Peiris D, Usherwood T, Panaretto K, et al. Effect of a computer-guided, quality improvement program for cardiovascular disease risk management in primary health care: the treatment of cardiovascular risk using electronic decision support cluster-randomized trial. Circ Cardiovasc Qual Outcomes 2015; 8 (1): 87–95.

- 85. Pringle JL, Kearney SM, Grasso K, Boyer AD, Conklin MH, Szymanski KA. User testing and performance evaluation of the Electronic Quality Improvement Platform for Plans and Pharmacies. J Am Pharm Assoc 2015; 55 (6): 634–41.

- 86. Rattray NA, Damush TM, Miech EJ, et al. Empowering implementation teams with a learning health system approach: leveraging data to improve quality of care for transient ischemic attack. J Gen Intern Med 2020; 35 (Suppl 2): 823–31.

- 87. Bravata DM, Myers LJ, Perkins AJ, et al. Assessment of the protocol-guided rapid evaluation of veterans experiencing new transient neurological symptoms (PREVENT) program for improving quality of care for transient ischemic attack: a nonrandomized cluster trial. JAMA Netw Open 2020; 3 (9): e2015920.

- 88. Gold R, Nelson C, Cowburn S, et al. Feasibility and impact of implementing a private care system’s diabetes quality improvement intervention in the safety net: a cluster-randomized trial. Implement Sci 2015; 10: 83.

- 89. Redwood S, Ngwenya NB, Hodson J, et al. Effects of a computerized feedback intervention on safety performance by junior doctors: results from a randomized mixed method study. BMC Med Inform Decis Mak 2013; 13: 63.

- 90. Sheen Y-J, Huang C-C, Huang S-C, Lin C-H, Lee I-T, Sheu WH-H. Electronic dashboard-based remote glycemic management program reduces length of stay and readmission rate among hospitalized adults. J Diabetes Investig 2021; 12 (9): 1697–707.

- 91. Sheen Y-J, Huang C-C, Huang S-C, et al. Implementation of an electronic dashboard with a remote management system to improve glycemic management among hospitalized adults. Endocr Pract 2020; 26 (2): 179–91.

- 92. Shen X, Lu M, Feng R, et al. Web-based just-in-time information and feedback on antibiotic use for village doctors in rural Anhui, China: randomized controlled trial. J Med Internet Res 2018; 20 (2): e53.

- 93. Simon SR, Soumerai SB. Failure of Internet-based audit and feedback to improve quality of care delivered by primary care residents. Int J Qual Health Care 2005; 17 (5): 427–31.

- 94. Simpao AF, Ahumada LM, Desai BR, et al. Optimization of drug-drug interaction alert rules in a pediatric hospital’s electronic health record system using a visual analytics dashboard. J Am Med Inform Assoc 2015; 22 (2): 361–9.

- 95. Smalley CM, Willner MA, Muir MR, et al. Electronic medical record-based interventions to encourage opioid prescribing best practices in the emergency department. Am J Emerg Med 2020; 38 (8): 1647–51.

- 96. Swartz J, Koziatek C, Theobald J, Smith S, Iturrate E. Creation of a simple natural language processing tool to support an imaging utilization quality dashboard. Int J Med Inform 2017; 101: 93–9.

- 97. Thomas KG, Thomas MR, Stroebel RJ, et al. Use of a registry-generated audit, feedback, and patient reminder intervention in an internal medicine resident clinic: a randomized trial. J Gen Intern Med 2007; 22 (12): 1740–4.

- 98. Tinoco A, Evans RS, Staes CJ, et al. Comparison of computerized surveillance and manual chart review for adverse events. J Am Med Inform Assoc 2011; 18 (4): 491–7.

- 99. Carney PA, Abraham L, Cook A, et al. Impact of an educational intervention designed to reduce unnecessary recall during screening mammography. Acad Radiol 2012; 19 (9): 1114–20.

- 100. Trinh LD, Roach EM, Vogan ED, Lam SW, Eggers GG. Impact of a quality-assessment dashboard on the comprehensive review of pharmacist performance. Am J Health Syst Pharm 2017; 74 (17 Suppl 3): S75–S83.

- 101. Twohig PA, Rivington JR, Gunzler D, Daprano J, Margolius D. Clinician dashboard views and improvement in preventative health outcome measures: a retrospective analysis. BMC Health Serv Res 2019; 19 (1): 475.

- 102. Waitman LR, Phillips IE, McCoy AB, et al. Adopting real-time surveillance dashboards as a component of an enterprisewide medication safety strategy. Jt Comm J Qual Patient Saf 2011; 37 (7): 326–32.

- 103. Ward CE, Morella L, Ashburner JM, Atlas SJ. An interactive, all-payer, multidomain primary care performance dashboard. J Ambul Care Manage 2014; 37 (4): 339–48.

- 104. Weber V, Bloom F, Pierdon S, Wood C. Employing the electronic health record to improve diabetes care: a multifaceted intervention in an integrated delivery system. J Gen Intern Med 2008; 23 (4): 379–82.

- 105. Weiss D, Dunn SI, Sprague AE, et al. Effect of a population-level performance dashboard intervention on maternal-newborn outcomes: an interrupted time series study. BMJ Qual Saf 2018; 27 (6): 425–36.

- 106. Reszel J, Ockenden H, Wilding J, et al. Use of a maternal newborn audit and feedback system in Ontario: A collective case study. BMJ Qual Saf 2019; 28 (8): 635–44.

- 107. Wu DTY, Vennemeyer S, Brown K, et al. Usability testing of an interactive dashboard for surgical quality improvement in a large congenital heart center. Appl Clin Inform 2019; 10 (5): 859–69.

- 108. Carney PA, Bowles EJA, Sickles EA, et al. Using a tailored web-based intervention to set goals to reduce unnecessary recall. Acad Radiol 2011; 18 (4): 495–503.

- 109. Filardo G, Nicewander D, Hamilton C, et al. A hospital-randomized controlled trial of an educational quality improvement intervention in rural and small community hospitals in Texas following implementation of information technology. Am J Med Qual 2007; 22 (6): 418–27.

- 110. Colquhoun H, Michie S, Sales A, et al. Reporting and design elements of audit and feedback interventions: a secondary review. BMJ Qual Saf 2017; 26 (1): 54–60.

- 111. Jamtvedt G, Flottorp S, Ivers N. Audit and feedback as a quality strategy. In: Busse R, Klazinga N, Panteli D, et al., eds. Improving Healthcare Quality in Europe: Characteristics, Effectiveness and Implementation of Different Strategies. Health Policy Series, No. 53. European Observatory on Health Systems and Policies; 2019.

- 112. Foy R, Skrypak M, Alderson S, et al. Revitalising audit and feedback to improve patient care. BMJ 2020; 368: m213.

- 113. Gude WT, Brown B, van der Veer SN, et al. Clinical performance comparators in audit and feedback: a review of theory and evidence. Implement Sci 2019; 14 (1): 39.

- 114. Slattery P, Saeri AK, Bragge P. Research co-design in health: a rapid overview of reviews. Health Res Policy Syst 2020; 18 (1): 17.

- 115. Maguire M. Methods to support human-centred design. Int J Hum Comput Stud 2001; 55 (4): 587–634.

- 116. Greenhalgh T, Wherton J, Papoutsi C, et al. Beyond adoption: a new framework for theorizing and evaluating nonadoption, abandonment, and challenges to the scale-up, spread, and sustainability of health and care technologies. J Med Internet Res 2017; 19 (11): e367.

- 117. Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q 2004; 82 (4): 581–629.

Data Availability Statement

The data supporting the findings of this study are available within Supplementary Files, with further datasets available upon reasonable request.