Abstract

Brain imaging research enjoys increasing adoption of supervised machine learning for single-participant disease classification. Yet, the success of these algorithms likely depends on population diversity, including demographic differences and other factors that may be outside of primary scientific interest. Here, we capitalize on propensity scores as a composite confound index to quantify diversity due to major sources of population variation. We delineate the impact of population heterogeneity on the predictive accuracy and pattern stability in 2 separate clinical cohorts: the Autism Brain Imaging Data Exchange (ABIDE, n = 297) and the Healthy Brain Network (HBN, n = 551). Across various analysis scenarios, our results uncover the extent to which cross-validated prediction performances are interlocked with diversity. The instability of extracted brain patterns attributable to diversity is located preferentially in regions part of the default mode network. Collectively, our findings highlight the limitations of prevailing deconfounding practices in mitigating the full consequences of population diversity.

Brain-imaging research enjoys increasing adoption of supervised machine learning for single-subject disease classification. This study explores the contribution of diversity-aware machine learning models to tracking, unpacking and understanding out-of-distribution generalization in large-scale neuroimaging datasets, and shows that population diversity is a key factor contributing to generalization performance.

Introduction

Brain scanning technology opens a noninvasive window into the structure and function of the human brain. Combined with machine learning algorithms, brain imaging research is now gaining momentum to transition from group-level contrast analyses toward single-participant prediction [1–3]. In supervised learning, the main purpose is to learn coherent patterns from brain measurements (i.e., brain signatures) that can be used to make accurate forecasts for new participants [4,5]. The prediction paradigm holds the promise of improving disease diagnosis, enhancing prognostic estimates, and ultimately paving the way to precision medicine [6,7]. Machine learning methods are now increasingly adopted for the goal of classifying various conditions, including autism spectrum disorder (ASD) [8–11], attention-deficit/hyperactivity disorder (ADHD) [12–15], anxiety (ANX) [16,17], or schizophrenia [18–22]. However, there is a large variability in the diagnostic accuracy reported across studies [23–25]. Counterintuitively, the larger the clinical cohorts have become, the worse the prediction performance of machine learning algorithms has become. The unknown reasons behind this varying success hamper the translation of emerging neuroimaging findings into clinical practice.

In the current landscape of machine learning applications in neuroimaging, researchers are facing challenges related to the generalizability and replicability of brain patterns valuable for prediction. Careful validation of machine learning tools is therefore needed to ascertain the robustness of the learned brain patterns. When using small and homogeneous datasets, however, common cross-validation (CV) procedures are more likely to yield high prediction accuracies, although at the expense of producing biased biomarkers with poor generalization to new or future cohorts. The last 2 decades have witnessed an unprecedented growth in the number of open-access data-sharing initiatives in the neuroscientific community [26–31]. In these initiatives, data are collected from multiple acquisition sites to aggregate larger and demographically more heterogeneous datasets. Such multisite data pooling efforts are more likely to reflect the true diversity in the wider populations. This first wave of retrospective data collection initiatives offers great opportunities for the advancement of scientific discoveries in neuroscience [32,33].

However, these efforts also pose new hurdles due to the diverging characteristics and backgrounds of the participants who are recruited at each site. Discrepancies in demographic and clinical variables may interfere with the identification of robust relationships between imaging-based features and the target variables (e.g., diagnosis). These challenges may entail potential bias for downstream decision-making by healthcare professionals and other stakeholders. Moreover, gathering brain scans from multiple sites comes with the potential cost of increased heterogeneity due to different sources of variation, such as batch effects [34,35]. Although the higher heterogeneity in multisite, compared to single site, cohorts may provide better generalization to participants from unseen scanning sites, classification in multisite cohorts has generally shown lower performance [23,36–38]. When handled with insufficient care, these general sources of variation that tell people apart may prevent predictive models from learning accurate patterns and weaken their performance. For these reasons, faithful disease classification in multisite cohorts may increasingly require sharp tools that take several dimensions of diversity into account.

Previous studies have typically focused on assessing generalizability along a single dimension, for example, evaluating a trained predictive model on a specific subset of participants from unseen scanning sites (e.g., leave-N-sites-out), a subset of participants with a specific sex or age range (e.g., [39–42]). The increasingly embraced CV tactics can monitor and probe the effects from one specific source of heterogeneity. Yet, cohort heterogeneity may stem from multiple aspects of diversity (e.g., age, sex, and acquisition site). For example, a performance decay in held-out acquisition sites might be in part attributable to other aspects of population variation (e.g., age and sex distribution). To deal with such diversity, we need approaches that can appropriately incorporate sources of population heterogeneity that should be acknowledged in the analytical workflow (cf [43]).

Our study aims to provide investigators with a handle to directly quantify the ramifications of population diversity. We propose to recast the propensity score framework to faithfully track out-of-distribution behavior of predictive analysis pipelines. Briefly, the propensity score has originally been introduced for computing the probability of treatment assignment given an individual’s collective covariates [44]. An appealing property of propensity scores is the opportunity to encapsulate a mixed envelope of covariates and express them as a single dimension of variation. Once built, a propensity score model can be deployed for a variety of purposes, including matching, stratification, covariate adjustment, and weighting [45,46]. Henceforth, our notion of diversity relies on continuous differences in the propensity scores of the participants in a cohort. Participants who are closer to each other on the propensity score spectrum can be assumed to have more similar constellations of covariates. Larger differences in propensity scores indicate bigger gaps in population stratification or background between a given pair of participants. After stratifying the cohort by means of propensity scores, we examined different sampling schemes to analyze the impact of diversity on the performance of our predictive models and assess their generalizability. It is worth noting here that the purpose of our study was not to improve the generalization abilities of these predictive models [7,47], but rather to provide a principled way to characterize population diversity. Hence, our aim was to investigate and understand the role of diversity in brain imaging prediction (i.e., is there an impact of common dimensions of population variation on neuroimaging-based machine learning?). For the estimation of propensity scores and thus diversity, we considered a set of nonimaging covariates with potential confounding effects that are widely used and readily available in most neuroimaging datasets [48–50]: age, sex assigned at birth, and scanning site. Results on the classification of participants with a neurodevelopmental diagnosis versus healthy controls from both the Autism Brain Imaging Data Exchange (ABIDE) and Healthy Brain Network (HBN) cohorts using functional and structural brain measurements show that, even after rigorous matching and nuisance deconfounding, diversity exerts a substantial impact on the generalization accuracy of the predictive models and on the pattern stability of the extracted biomarkers.

Methods

Participants and brain scanning resources

We examined resting-state functional MRI (rs-fMRI) and cortical thickness data from both waves of the openly shared ABIDE initiative (ABIDE I and II; http://fcon_1000.projects.nitrc.org/indi/abide) [29,30] and the HBN dataset [26], releases 1–8 collected between June 2017 and January 2020.

For ABIDE, we considered data from all acquisition sites with at least 10 participants per group and with both children and adults. After detailed quality control, only cases with acceptable T1-weighted (T1w) MRI, surface extraction, and head motion in rs-fMRI were included. Participants with ASD were diagnosed based on an in-person interview administered by board-certified mental health professionals using the gold standard diagnostics of the Autism Diagnostic Observation Schedule (ADOS) [51] and/or Autism Diagnostic Interview-Revised (ADI-R) [52,53]. Typically developing (TD) participants had no history of mental disorders. For all groups, participants were excluded on the basis of genetic disorders associated with autism (i.e., Fragile X), contraindications to MRI scanning, or current pregnancy. The ABIDE data collections were performed in accordance with local Institutional Review Board guidelines, and data were fully anonymized. These quality procedures resulted in a total of 297 participants (151 ASD and 146 TD) from 4 different sites (i.e., New York University Langone Medical Center [NYU], University of Pittsburgh, School of Medicine [PITT], University of Utah, School of Medicine [USM], and Trinity Centre for Health Sciences [TCD]) in ABIDE.

For HBN, we included 102 TD individuals and 449 participants carrying a diagnosis of ASD, ADHD, and/or ANX. From all participants with at least one diagnosis, 53 were diagnosed with ASD and ADHD, 12 diagnosed with ASD and ANX, 99 with ANX and ADHD, and 17 participants were diagnosed with all 3. Only 24 ASD, 171 ADHD, and 73 ANX participants had no comorbidity. The HBN cohort offers a broad range of heterogeneity in developmental psychopathology. Participants were recruited using a community referral model (for inclusion criteria, see https://fcon_1000.projects.nitrc.org/indi/cmi_healthy_brain_network/Recruitement.html#inclusion-exclusion). Psychiatric diagnoses were assessed and reported by clinicians according to DSM-5 criteria [54]. HBN was approved by the Chesapeake Institutional Review Board. Written informed consent was obtained from all participants and from legal guardians of participants younger than 18 years. Hence, after assessing the same criteria for quality control, we ended up with a total of 551 participants, with several participants having more than one neurodevelopmental diagnosis, from 3 different sites (i.e., Staten Island [SI], Rutgers University Brain Imaging Center [RU], and CitiGroup Corcell Brain Imaging Center [CBIC]) in HBN.

More details about the demographic information of the participants included in our study and about acquisition settings used for both datasets are reported in Table 1 and S1 Table, respectively.

Table 1. Demographics for each site and group.

| Site | N | Sex (M/F) | Age | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| TD | ASD | ADHD | ANX | TD | ASD | ADHD | ANX | TD | ASD | ADHD | ANX | ||

| ABIDE | NYU | 70 | 56 | - | - | 69/1 | 52/4 | - | - | 15.4 ± 7.0 | 14.5 ± 7.9 | - | - |

| PITT | 22 | 20 | - | - | 22/- | 20/- | - | - | 19.7 ± 7.0 | 20.8 ± 7.3 | - | - | |

| TCD | 19 | 18 | - | - | 19/- | 18/- | - | - | 15.8 ± 3.2 | 14.5 ± 3.3 | - | - | |

| USM | 40 | 52 | - | - | 40/- | 52/- | - | - | 21.5 ± 7.8 | 23.6 ± 7.6 | - | - | |

| All | 151 | 146 | - | - | 150/1 | 142/4 | - | - | 17.7 ± 7.3 | 18.6 ± 8.4 | - | - | |

| HBN | CBIC | 23 | 41 | 172 | 86 | 11/12 | 37/4 | 119/53 | 52/34 | 11.7 ± 3.3 | 12.5 ± 4.0 | 11.5 ± 3.3 | 12.4 ± 3.7 |

| RU | 33 | 49 | 130 | 74 | 19/14 | 36/13 | 87/43 | 43/31 | 11.6 ± 3.5 | 12.7 ± 4.0 | 11.6 ± 3.3 | 12.1 ± 3.5 | |

| SI | 46 | 16 | 38 | 41 | 20/26 | 11/5 | 24/14 | 23/18 | 11.8 ± 3.7 | 12.6 ± 3.4 | 12.8 ± 4.1 | 12.8 ± 3.8 | |

| All | 102 | 106 | 340 | 201 | 50/52 | 84/22 | 230/110 | 118/83 | 11.7 ± 3.5 | 12.6 ± 3.9 | 11.7 ± 3.4 | 12.4 ± 3.6 | |

Number of participants (N), males/females (M/F), and mean age and standard deviation for each site and group. Note that some participants from HBN are diagnosed with more than one disorder.

ABIDE, Autism Brain Imaging Data Exchange; ADHD, attention-deficit/hyperactivity disorder; ANX, anxiety; ASD, autism spectrum disorder; CBIC, CitiGroup Corcell Brain Imaging Center; HBN, Healthy Brain Network; NYU, New York University Langone Medical Center; PITT, University of Pittsburgh, School of Medicine; RU, Rutgers University Brain Imaging Center; SI, Staten Island; TCD, Trinity Centre for Health Sciences, Trinity College Dublin; TD, typically developing; USM, University of Utah, School of Medicine.

Brain scan preprocessing workflow

For both ABIDE and HBN datasets, structural brain scans (T1w MRI) were preprocessed with FreeSurfer [55–57]. The pipeline performed automated bias field correction, registration to stereotaxic space, intensity normalization, skull stripping, and tissue segmentation. White and pial surfaces were reconstructed using triangular surface tessellation and topology corrected. Surfaces were inflated and spherically registered to the fsaverage5 template. Segmentations and surfaces were subject to visual inspection. Participants with erroneous segmentations were excluded from further analysis.

For the resting-state functional brain scans in ABIDE, we built on data previously made available by the Preprocessed Connectomes initiative (http://preprocessed-connectomes-project.org/abide). The preprocessing was performed with C-PAC (https://fcp-indi.github.io) and included slice time correction, head motion correction, skull stripping, and intensity normalization. The rs-fMRI data were detrended and adjusted for common nuisance effects related to head motion, white matter, and cerebrospinal fluid signals using CompCor [58], followed by band-pass filtering (0.01 to 0.1 Hz). For the rs-fMRI data in HBN, we discarded the first 5 volumes, removed the skull, and corrected for head motion. Magnetic field inhomogeneity was corrected using topup with reversed phase-encoded data [59]. After applying a high-pass filter at 0.01 Hz, nuisance effects were removed using ICA-FIX [60]. We excluded participants who had mean framewise displacement greater than 0.3. Individual rs-fMRI data were mapped to the corresponding mid-thickness surfaces, resampled to the Conte69 template (https://github.com/Washington-University/Pipelines), and smoothed using a 5 mm full-width-at-half-maximum (FWHM) kernel.

Pattern learning pipeline for disease classification

The construction of the feature space from the brain scans was based on a topographical brain parcellation: 100 functionally defined cortical regions from a widely used reference atlas [61]. For cortical thickness, each participant’s fingerprint consisted of a 100-feature vector corresponding to the mean cortical thickness of the vertices within each of the 100 atlas regions. Similarly, for intrinsic functional fluctuations, the time series were averaged over all vertices in each of the 100 atlas regions and used to build functional connectivity profiles based on all pairwise correlations (Pearson correlation coefficient) between the 100 region time series. For the rs-fMRI, the feature vectors thus consisted of 4,950 unique region–region connectivity strengths.

The predictive model we used for classification (e.g., ASD versus TD) is the commonly used logistic regression model with an optimization loss that includes a penalty term for Tikhonov (l2) regularization. Let w be the coefficients (i.e., weights) of our model and x the feature vector (e.g., of cortical thickness values) corresponding to a given participant. For prediction of continuous class assignments in form of log odds, the model specification involves mapping the weighted combination of features (i.e., z = wTx) from input space (cf feature engineering above) into a probability using the sigmoid function. For gradient descent–based estimation of this convex optimization problem, the set of final model coefficients indicating the global minimum was obtained by solving a structured risk minimization problem based on the following cost function:

where n is the number of observations, y(i) carries the class label (i.e., ASD or TD), z(i) is the weighted combination of features of the i-th observation, and φ denotes the sigmoid function, which is defined as φ(z) = 1/(1+e−z). The first term is the log-likelihood function, and the second term penalizes the weight coefficients, with λ denoting the hyperparameter that controls the regularization strength of the l2 penalty term. Tikhonov regularization is known to exert smooth parameter shrinkage by acting on the low-variation directions that underlie the input space relatively more than the dominant high-variation patterns in the data [62]. This model has been shown to perform better than several competing machine learning models in comprehensive benchmark studies of ASD classification [39] and other commonly studied phenotype predictions [41,63].

To rigorously evaluate our analytical workflow, we adopted a nested CV strategy. Regardless of how the outer CV was implemented (see paragraph below on out-of-distribution prediction), we consistently used an inner CV based on 5-fold for tuning the hyperparameter controlling the regularization strength (i.e., λ). The value for the complexity parameter λ was chosen from a grid of 7 equidistant points in logarithmic scale in the interval [1e-3, 1e+3]. Prior to fitting our model to the data, brain features were z-scored across participants on the training set at hand (i.e., respecting the mechanics of CV), and the derived parameters were then used to apply z-scoring of the features to the participants in the test set.

Quantifying diversity using propensity scores

Our overarching aim was to characterize the role of population heterogeneity in forming prediction by means of pattern extraction. The notion of diversity used throughout this work leans on 3 important facets of population variation that are available in most population datasets: participant age, participant sex, and scanning site. In our study, we proposed to get a handle on this notion of participant diversity by virtue of the propensity score framework that is established in a variety of domains [44]. Diversity, thus, denoted the distance based on these common covariates between participants in our cohorts. Observational studies have benefited from propensity scores to successfully estimate the probability of a participant receiving treatment given the covariates. Here, we concentrated on estimating the probability that a given participant carries a disease diagnosis based on a set of covariates, i.e.,

where Y is the target variable, with Y = 1 for ASD and Y = 0 for TD. C are the covariates, which collectively provided the basis for quantifying population diversity. In other words, we estimated a model for the purpose of deriving a propensity score as a function of the covariates of a given participant. Although other machine learning tools have sometimes been used to obtain propensity scores [64–66], logistic regression is probably the most common implementation in this context. This natural choice of method yields the following model specification for our propensity scores framework:

where k denotes the number of covariates or sources of population variation, β0 is the intercept capturing the average classification log odds in the participant data at hand, while logit(·) is a nonlinear transformation (called “link function” in the generalized linear modeling family) that maps from probability space to log odds space. In other words, we invoked a conditional expectation that is implemented by a logistic regression model to predict diagnosis (e.g., ASD) using the covariates of each participant as features. To prevent class imbalance from skewing the probabilities, we further used probability calibration based on Platt scaling [67] to produce the final propensity scores. Although propensity scores are often based on treatment assignment, for simplicity, we use them throughout this work to refer to the probability we defined above. Note that the propensity scores were estimated using a conventional logistic regression model based solely on the participant covariates as input variables—without any access to brain imaging information. In contrast, the predictive model used for the actual disease classification problem (see Pattern learning pipeline for disease classification section) employed a regularized logistic regression with the l2-norm to predict disease diagnosis based on brain imaging features (e.g., functional connectivity). We reiterate that the propensity score model itself did not involve or had access to any of the brain imaging features of primary scientific interest.

A key asset of the propensity score framework is the opportunity to seamlessly construct subsets of participants with homogeneous background profiles with respect to the observed covariates. This follows because the propensity score is a balancing score. At each value p of the propensity score, the distributions of the covariates are the same in both groups [44,68], i.e.,

where π(C) denotes the propensity score corresponding to the covariate set C, and Y is a binary variable holding the diagnosis.

Multiple applications of propensity scores can be used for confounding adjustment including matching, stratification and inverse probability weighting [45,46]. In previous neuroimaging studies, propensity scores have been used to address confounding in permutation testing [69], classification [70], and regression [49] problems. Note that, unlike these methods, our proposed approach leverages propensity scores to investigate the relationship between population variation and out-of-distribution prediction rather than to perform deconfounding. An appealing quality of propensity scores is a form of dimensionality reduction: representing a mixed envelope of covariates as a single number that coherently encapsulates the sources of interparticipant variation indexed by the joint covariates. Matching, for example, can then be performed directly on the propensity scores rather than on the covariates, which rapidly becomes infeasible with high-dimensional vectors of covariates. Moreover, propensity scores can be readily used to handle both continuous (e.g., age) and categorical (e.g., site or sex) covariates in a single coherent framework. Any possible collinearity in coefficient estimation of the propensity score model does not affect the results because this modeling step follows the prediction goal [47]. In other words, we are not concerned with point estimate, interval estimate, or subject matter interpretation of any specific coefficient inside the propensity score model itself [71].

After estimating the propensity scores, participants were spanned out along a one-dimensional continuum that gauges the diversity among them: The more dissimilar the participants in their covariates, the more distant they were from each other on the propensity score spectrum. By chunking the participants into strata with similar confounding architecture, within each of which participants had minimal diversity relative to each other, we could assess the generalizability of our predictive models sampling different strata for train and test in an operationalizable series of experiments.

Matching and stratification

Naively partitioning the data points, however, may produce strata with unbalanced classes. To safeguard against skewing of results due to class imbalance, we initially matched participants based on their propensity score estimates. We employed a pair matching approach: One specific participant in the TD group was allocated to one participant in the ASD group. To identify these pairs in a data-driven fashion, we carried out the matching procedure using the Hungarian algorithm [72]. The Hungarian algorithm obtained a globally optimal pairing by minimizing the global distance (i.e., a “cost” quantity) between paired participants based on the absolute difference of their estimated propensity scores. Nonetheless, this approach is not exhaustive, in that, the absolute differences between matched participants are unlikely to be zero. This circumstance especially comes into play when there is imperfect overlap between the overall distributions of estimated propensity scores in ASD and TD. This is because there is a risk of ending up with paired participants with very dissimilar propensity scores and hence different positioning according to directions of population variation.

To overcome this hurdle, we added a restriction to the Hungarian algorithm such that the absolute difference in the propensity scores of matched participants must not exceed a prespecified threshold—the so-called caliper [73]. This constraint is commonly achieved by setting the cost of matching pairs of participants to a large value when their difference exceeds the threshold. Then, paired participants returned by the Hungarian algorithm are inspected to discard those pairs that exceed the threshold. The value must be larger than all values in the cost matrix of the Hungarian algorithm. In this work, the caliper was set to 0.2, which is a popular choice in the literature [74,75]. The maximum acceptable distance for pairs of participants to be considered a match was then set to 0.2*dsd, where dsd was the standard deviation of all pairwise absolute differences between the participants in our dataset. The benefit of using a caliper was that we achieved a better matching of the considered participants, at the possible expense of reducing the number of participants in a data analysis scenario. Thus, final pairs of TD–ASD participants had very similar covariates. As shown in Fig 1A, distributions of propensity scores after matching showed better overlap (middle panel) than the unmatched original distributions (left panel), especially in HBN. The demographic information of the participants after matching is reported in S2 Table. The quality of matching was assessed using standardized mean difference (SMD). SMD is a widely used diagnostic to examine the balance in the covariates between the groups (i.e., ASD and TD). For a continuous covariate, the absolute SMD is defined as

where xi and denote the mean and variance of the covariate in the group indexed by subscript i (i = 0 for TD, and i = 1 for ASD), respectively. For binary variables,

where pi denotes the prevalence in each group. Usually, a covariate with an SMD <0.1 is considered well balanced [46]. As shown in S1 Fig, matching on the propensity scores allowed us to achieve balance in both ABIDE and HBN. The only exception was the sex covariate in ABIDE, although its SMD was very close from 0.1. Moreover, for our analyses, this was unlikely to have any impact because the results because the proportion of females in ABIDE was negligible. After matching, we ended up with 290 participants (145 participants per group) in ABIDE and 126 (63 participants per group) in HBN, which were used subsequently for the classification of ASD versus TD in both datasets.

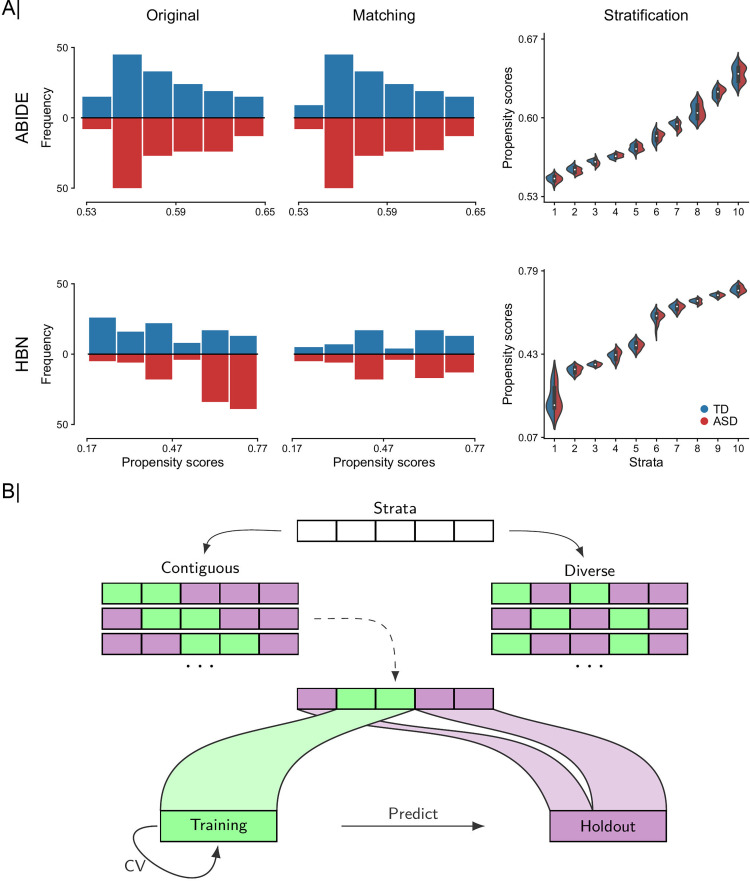

Fig 1. Workflow for systematic participant stratification and diversity-based participant sampling.

We repurpose propensity scores as a tool to translate several different indicators of population variation into a single diversity index. (A) From left to right: 2-sided histograms in which we show bin counts bins of estimated propensity score distributions for TD (blue) and ASD (red) participants before (i.e., using all observations in original dataset) and after matching (based on age, sex, and site) and partitioning of matched participants in 10 equally sized participant sets (i.e., strata) based on similarities of their propensity scores for ABIDE (top) and HBN (bottom) cohorts. Distributions of propensity scores after matching showed better overlap (middle panel) than the unmatched original distributions (left panel), especially in HBN. Propensity scores are computed as a function of age, sex, and site, which are commonly available in many future population cohorts. (B) Diversity-based participant sampling: Given the attribution of each participant to 1 of q homogeneous strata, a subset of r strata is picked and combined to form the training set used for estimating the predictive model (green) and the q-r remaining strata served as held-out set for testing the performance of that learning model (violet). Two different sampling regimes are used to form the training set: (i) a contiguous scheme where the training set is composed of adjacent strata of similar diversity; and (ii) a diverse scheme where the training data are composed of noncontiguous (at least one) strata with participants pulled from diverse populations. For each analysis setting, the classification accuracy is assessed within the training set based on rigorous 10-fold fit–tune–predict CV cycles, and then all the training data are used to predict disease status in unseen holdout participants. Data underlying this figure can be found in S1 Data. ABIDE, Autism Brain Imaging Data Exchange; ASD, autism spectrum disorder; CV, cross-validation; HBN, Healthy Brain Network; TD, typically developing.

Based on the matched data, the aligned participant pairs were ranked according to their mean estimated propensity scores (i.e., the mean propensity score of a matched pair of participants) and stratified into mutually exclusive subsets, henceforth “strata.” In our work, participants were divided into 10 equally sized strata using the deciles of the estimated propensity scores. Although matching based on the mean propensity score of paired participants may not be perfect in all cases, Fig 1A shows good overlap between the distributions of propensity scores for each group (i.e., ASD and TD) within each of the 10 strata. In ABIDE, in all participants, we obtained propensity scores above 0.5. Propensity scores above 0.5 show that participants had demographic properties, captured by the covariate configurations alone, which were more indicative of the disease group. Nonetheless, using high propensity scores was unlikely to have any impact on our disease classification results because we are not interested in the values of the propensity scores per se, but rather in their distance. In other words, diversity was the same when participants had the same distance between their propensity scores, regardless of their positions on the propensity score spectrum. Moreover, as shown in S1 Fig, we achieved strong balance with matching, indicating that the estimated propensity scores provided a robust and useful proxy for the underlying covariates. In HBN, the nonuniform distribution of sex across the strata, with females only at the lowest end of the propensity score spectrum, corroborated the validity of our approach to characterize diversity. Strata containing female participants were closer to each other (lower diversity) than to strata containing only male participants (higher diversity). Note that, in the present work, the reason behind stratifying the participants in our dataset based on their estimated propensity scores was different from that used in observational studies, where stratification can be employed for example to estimate stratum-specific effects. Stratification was here repurposed as a tool for the sole aim of training and testing predictive models on sets of participants whose similarities and differences we could control in our data analysis experiments.

Out-of-distribution prediction

To illustrate the role of diversity in single-participant prediction, we started by comparing distinct participant sampling schemes when forming the training and holdout sets. In the contiguous scheme, we only selected adjacent strata for training and the remaining strata were “kept in a vault” for later testing of the generalizability in classifying ASD from TD. In the diverse scheme, the training set was composed of (at least one) noncontiguous strata. As a baseline, we furthermore used a random scheme where training data were drawn by taking into account the class proportions but irrespective of their propensity scores or strata. The random scheme amounted to a conventional CV strategy.

This analysis was repeated for different sizes of the training set, ranging from 2 to 8 strata. In the random scheme, we hence pulled 20% to 80% of the total participants in our dataset for training of the predictive model. For each number of strata (or subset of participants in case of random sampling) used for training, we performed 20 draws and computed the average of the ensuing model performances. In the diverse scheme, we did not consider all possible combinations of training/test strata, but rather focused on the combinations that provided the most diverse strata for training. For each diverse draw (i.e., combination of training strata with at least one nonadjacent stratum), we computed the mean absolute difference in propensity scores of the participants in the training strata (i.e., within-distribution diversity). We ranked the draws according to their within-distribution diversity and then chose the top 20 draws. In the contiguous scheme, the number of possible draws was less than 20 (e.g., 9 maximum draws when using 2 contiguous strata for training).

To ensure a consistent number of draws, additional draws with similar size for the training set (e.g., 20% of pairs when using 2 strata for training) were sampled by considering paired participants in consecutive order (according to their propensity scores) for training and the remaining for holdout. These sampling schemes allowed us to compare out-of-distribution (i.e., participants with unseen propensity scores) performance when we learned from data that spanned the wider space parameterized by propensity scores (i.e., diverse), focused on a narrow subspace (i.e., contiguous), or completely ignored this kind of information in predictive modeling (i.e., random). Moreover, we also assessed performance in the within-distribution setting. That is, based solely on the training strata, we used a 10-fold CV to evaluate performance when our model was trained and tested using participants with similar propensity scores. Although train and test participants had similar propensity scores when assessing within-distribution performance, predictive performance was not based on the same participant data used to build the predictive models.

Note that the matching step was important to prevent the contiguous and diverse sampling schemes from producing unbalanced train/test splits that may potentially bias our results. Without matching, we would have ended up with substantially unbalanced strata, especially in the HBN dataset (see Fig 1A). In the case of imbalance in the training strata, the predictive models would have likely overpredicted toward the majority class. On the other hand, an imbalance in the testing strata would have favored predictive models that tend toward the majority class and also required the consideration of additional evaluation metrics (e.g., specificity, sensitivity, precision, etc.). More importantly, there might have been draws with imbalance in both train and test data, although inverted when going from training to the testing stage. This would have occurred, for example, in the unmatched HBN dataset when using the strata with the lowest/highest propensity scores for training/testing. Here, the proportion of TD would have been substantially higher/lower in the training/testing strata. In this case, we would not have been able to tell if the observed prediction accuracy was related to the diversity in the data, to the imbalance in the strata, or even to both. With matching, we avoided class imbalance and ensured that changes in prediction accuracy were due to diversity.

Assessing the relationship of performance with diversity

The previous analyses offered an initial depiction of the implications of participant diversity on out-of-distribution performance. Yet, the analysis only considered 20 draws of training/test strata. In such cases, the contiguous and diverse sampling schemes may have been dominated by the strata at both extremes of the diversity spectrum, i.e., those strata of pulled participants with the lowest/highest propensity scores. These strata were likely to be part of the test set in most draws in the contiguous scheme (because only adjacent strata were used for training). In the diverse scheme, on the other hand, these strata were likely to be part of the training set (because they were the most distant strata). The overrepresentation of the strata at both ends of the propensity score spectrum may have contributed to the obtained constellation of findings in terms of performance as well as in terms of the stability of the predictive patterns. Furthermore, diversity was experimentally controlled with a focus on the training set, rather than the holdout participants. In another set of analyses, we examined the relationship between model performance and diversity for each draw of training/test strata. To this end, we used 5 strata for training (and the remaining 5 for holdout) and considered all possible combinations of participant strata to form the training set (i.e., 252 different draws, picking 5 out of 10 strata to form the training set).

Within-distribution performance was reported based on the average performance using a 10-fold CV strategy, whereas out-of-distribution performance was reported separately for each holdout stratum. For assessment of within-distribution performance, diversity was computed as the average of all pairwise absolute differences in propensity scores:

where Nt is the total number of participants in the training strata, and πi denotes the propensity score of the i-th participant. For the purpose of out-of-distribution prediction, diversity denotes the mean absolute difference in propensity scores between all pairs of participants in the training set and those in the holdout stratum:

where Ns is the number of participants in the holdout stratum. This analysis allowed us to provide a clearer look into the impact of diversity on predictive modeling, by directly inspecting the relationship between participant diversity and predictive accuracy. Note that we assessed out-of-distribution performance for each stratum separately to prevent that sampling variation due to the diversity in the testing data (using all testing strata) influences our CV procedure. Stratification assigned individuals with similar covariates to the same stratum. Therefore, when evaluating performance of a built predictive model in a single holdout stratum, the remaining diversity among the participants in the stratum (as captured by covariate configurations) was substantially minimized and unlikely to impact performance. Moreover, diversity was roughly the same across all strata. On the other hand, if we had used the participants in all the testing strata to report performance, our results would be potentially biased because the diversity in the testing data substantially changed from one draw to another (as the strata used for testing change). Therefore, reporting out-of-distribution performance for each single holdout stratum ensured that differences in prediction accuracy were not participant to the diversity among testing participants, but only to the diversity between the training and testing participants.

Relation to common deconfounding practices

The covariates used in this study to investigate the impact of diversity on the classification of ASD may have a confounding effect in how the brain scanning measurements are used to predict diagnostic categories. In fact, numerous earlier studies have tried to account for nuisance sources by deconfounding the imaging-derived variables in what is often thought as a data cleaning step [43,76–78]. For example, considering the sex of the participants, we could find that males are more prone to be classified as ASD than females. This can be seen, for example, in the distribution of sex across the different strata in the HBN dataset (see S2 Fig). We noted that females occurred in the strata with the lowest propensity scores, while only males were in the highest propensity score strata. Regarding scanning site as a dimension of diversity, the variance of the brain imaging–derived variables may differ from one site to another. This could lead to a biased estimation of the underlying ASD-related pattern in the brain since the model may have learned differences in variance rather than the true putative pattern [48,79]. In such scenarios, it is important to seek to remove these sources of confounding.

To shed light on these circumstances, we explored (i) a conventional linear regression model; and (ii) ComBat [80] for the purpose of variable deconfounding before the actual predictive model of interest. The first method is a standard approach to control for confounds in neuroimaging. It estimates the effect of confounds using a linear regression model to then remove their contribution to the data. The residualized functional connectivity strengths or regional morphological measurements then served as input variables of interest fed into the quantitative model of interest, such as a machine learning model [78,81]. For categorical confounds such as the imaging site, this amounts to centering the data within each site, without considering the potential intersite differences in variance [48]. To deal with the latter point, we also carried out ComBat: a recently proposed approach for better correcting intersite effects [80,82]. ComBat has been previously applied to multiple neuroimaging data modalities including cortical thickness [82,83], resting-state functional connectivity [35,84], and diffusion tensor imaging [82,85]. Briefly, ComBat involves Bayesian hierarchical regression modeling to estimate and remove effects attributable to acquisition site additive and structured noise from the brain imaging features. ComBat might also remove the effects of other factors (e.g., age and sex) if they are colinear with site. For example, if age covaried to a certain extent with site, ComBat may remove some variation related to age when removing site effects [43]. However, we cannot rely on ComBat to remove the variation related to other factors. Here, in addition to the site covariate used for harmonization, our ComBat model further incorporated the age and sex covariates. Thus, the ComBat-based deconfounding used in our work was composed of 2 steps: (1) remove site-specific effects while preserving variation related to age and sex using ComBat, and then (2) regress out age and sex effects using conventional linear regression.

We performed deconfounding using all participants in our dataset, prior to matching and running our analysis. Note that in alternative settings, to prevent data leakage, only the training data should be used to build the deconfounding model, without considering the test data [86]. However, the main reason behind our choice was that deconfounding in data points matched based on the same covariates may have little impact on the results [70], as shown in S3 Fig.

Dissecting the impact of cohort diversity

To provide a richer picture into how diversity affects disease classification, we next used confusion matrices to tease apart the cases in which our predictive models succeeded or failed. Moreover, prediction success may be contingent on the different covariates used to build the propensity scores. Here, we laid out the implications of diversity by taking into consideration the covariates of the holdout participants. Given a particular holdout stratum, prediction performance was assessed for participants from each of the different scanning sites. The analogous procedure was carried out to study sex. We also inspected the changes in performance that were attributable to age differences between the participants in the training set and each holdout stratum.

Finally, we analyzed the degree to which diversity affects the stability of the extracted predictive patterns. To this end, we performed the analysis at both the regional (i.e., 100 target regions) and network levels (i.e., 7 target canonical networks) as defined by the widely used Schaefer–Yeo atlas [61,87]. First, we extracted the coefficients (i.e., weight vectors) from our 252 predictive model instances (corresponding to all possible combinations of picking 5 out of 10 strata to form the training set). These model coefficients were then assigned to 5 different groups according to the diversity of the training set used to learn the models (i.e., the average of all pairwise absolute differences in propensity scores of the participants in the training set). We tested for significant coefficient differences between groups using classical 1-way analysis of variance (ANOVA) with 5 levels (i.e., 5 groups: from very low to very high diversity, no continuous input variables). At the region level, we ended up with 252 coefficients corresponding to our collection of models. Differences in coefficient estimates were analyzed on a region-by-region basis using separate ANOVAs. This approach allowed identifying the cortical regions whose variance in predictive contributions (across the 252 previously obtained predictive models) could be partitioned according to the diversity factor levels in a statistically defensible fashion. Note that in the case of functional connectivity, we used model coefficients for each node (average of the regression coefficients across all edges of a node), and the threshold-free cluster enhancement approach was used with 1,000 permutations to correct for multiple comparisons [88]. At the network level, we analyzed the relationships between model coefficients and diversity by computing Pearson correlation coefficient between the network-aggregated coefficients of predictive models and the diversity of the training participants (i.e., the average of all the pairwise absolute differences in propensity scores of the training observations). These systematic assessments were aimed to uncover which parts of the brain are most at stake when predictive models from heterogeneous participant observations are to be interpreted by the neuroscientific investigator.

Results

In this work, we explored the value of propensity scores as a handle to detect and monitor the role of cohort diversity in predictive modeling. With our approach, we were able to encompass multiple sources of population variation into a single dimension that meticulously recapitulated the diversity among the participants in our cohorts. Tailoring the propensity score framework to brain imaging predictions allowed us to bring to the fore the relationship between diversity and prediction accuracy in a rigorous manner. More specifically, we investigated how diversity plays out in classification of neuropsychiatric disorders using structural and functional brain imaging profiles constructed from the ABIDE [29,30] and the HBN [26] datasets. Specific site inclusion criteria and data quality control resulted in a total of 297 participants (151/146 ASD/TD) from 4 different acquisition sites in ABIDE and 208 participants (106/102 ASD/TD) from 3 sites in HBN (S1 and S2 Tables). Our image processing strategy involved the mapping of functional signals to cortical surfaces as well as surface-based alignment. Functional connectivity matrices were calculated at a single-participant level based on a widely used parcellation atlas with 100 cortical regions [61].

Prior to predictive modeling, ASD and TD participants were matched and grouped into strata with homogeneous backgrounds based on their propensity scores (see Fig 1). This step yielded a total of 290 participants (145 participants per group) in ABIDE and 126 in HBN. In ABIDE, a very small number of participants was discarded from subsequent analyses after matching by age, sex, and acquisition site. However, with HBN around one-third of the original participants was excluded because of the modest overlap between the distributions of estimated propensity scores between TD and ASD groups. In Fig 1A, we can see that in HBN the distribution of TD participants was skewed to the right, while for ASD it was skewed to the left. In HBN, we further explored the role of diversity in the participant classification of ADHD versus TD and ANX versus TD. After matching, we submitted a total of 180 (90 ADHD/TD) and 188 (94 ANX/TD) participants to each classification analysis, respectively. Further information about the datasets, image processing, matching, and classification settings is provided in the Methods section.

Benchmarking out-of-distribution prediction performance

Results in the classification of ASD versus TD participants in both ABIDE and HBN datasets are shown in Fig 2, with performance reported in terms of area under the receiver operating characteristic curve (hereafter, AUC) and F1 score. In the ABIDE cohort, when we trained and tested on data with similar distributions (first column), the contiguous scheme reached better prediction performance than the diverse scheme, with the random scheme lying in between. This trend remained stable as we increased the number of strata used for training. However, we can observe in the holdout dataset (second column) that the difference in prediction accuracy showed an inverted trend. The predictive model trained on the contiguous strata showed poor generalization abilities. We note a clear decay in classification performance as we increased the number of strata used for training, which may suggest that the model was overfitting to participants that share similar demographic status (e.g., age and sex), and hence may have been limited to generalize to more diverse data. On the other hand, the diverse and random schemes performed better as we grew the size of the training set. In the HBN dataset, we observed the opposite trends with the diverse analysis scenario, which showed higher performance within-distribution and poor generalizability.

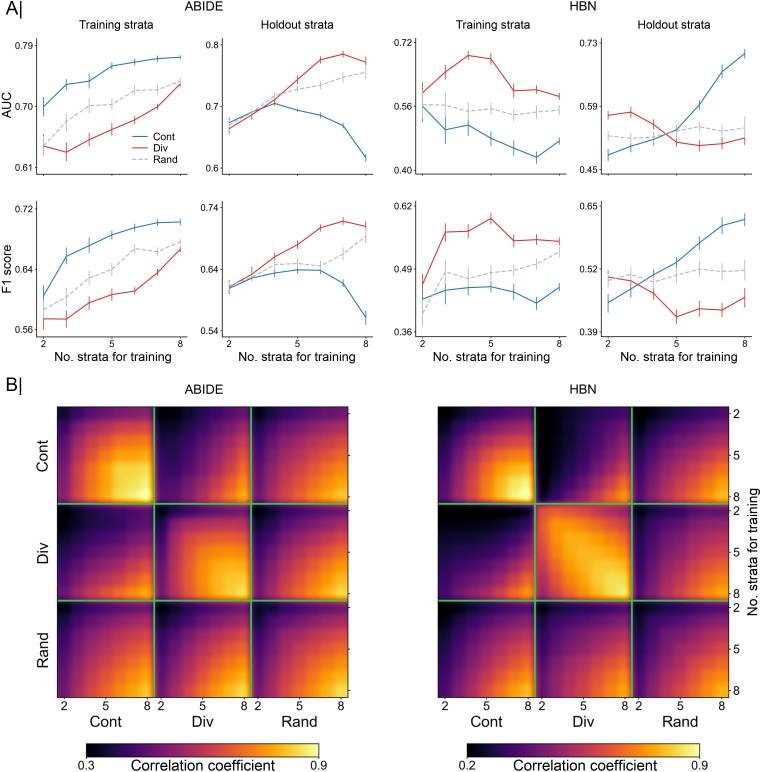

Fig 2. Accuracy of out-of-distribution prediction and consistency of extracted predictive patterns.

Results are based on the classification of ASD versus TD using functional connectivity profiles. (A) Comparison of model accuracy based on contiguous (Cont) and diverse (Div) training sets in the classification of autism. Prediction performance is reported as AUC (top) and F1 score (bottom) in 2 separate cohorts: ABIDE (left) and HBN (right). Accuracy is assessed using different training sets sized from 2 to 8 combined strata. For each cohort, the first column indicates the prediction accuracy using a 10-fold CV strategy based solely on participants from the training strata. Folds were randomly sampled, without considering the propensity scores of the participants. The second column displays the performance in the holdout strata, which contains the remaining participants (from untouched strata). As a baseline for comparison, we used an additional sampling scheme (Rand): Participants for the training set were randomly chosen regardless of their propensity scores. (B) Consistency of model coefficients was quantified by Pearson correlation coefficient across 2 given models in the contiguous, diverse, and random data scenarios. For each of these sampling schemes, consistency is shown for different numbers of combined strata used for predictive model training (from 2 to 8 combined strata), delineated by the green segments. Building models based on diverse strata of participants entailed considerable differences in predictive patterns from those learned based on models with similar participants (i.e., contiguous). Data underlying this figure can be found in S1 Data. ABIDE, Autism Brain Imaging Data Exchange; ASD, autism spectrum disorder; AUC, area under the curve; CV, cross-validation; HBN, Healthy Brain Network; TD, typically developing.

In the context of these diverging results obtained for ABIDE and HBN, we note the difference in the occurrence of females in each dataset. In HBN, 36% of participants were females (45/126), whereas in ABIDE, the proportion of females was negligible (<0.02%, 5/290). Sex showed an expected strong contribution to the computation of diversity (through the propensity scores) and to its relationship with prediction accuracy in HBN. In ABIDE, however, the sex covariate had almost no contribution (see S4 Fig). Thus, the difference in the sex covariate between HBN and ABIDE (with practically no females in ABIDE) may be a potential factor behind the opposite results in these datasets. Nonetheless, in both datasets, we observed a strong impact of diversity on model performance. This finding indicates that within-distribution performance is not in all cases an accurate proxy for out-of-distribution performance when there is a shift in the distribution of the covariates.

Such tendency received further support from our results in classifying ASD versus TD using cortical thickness in the ABIDE dataset and the other 2 neuropsychiatric disorders (i.e., ADHD versus TD and ANX versus TD) from the HBN dataset based on functional connectivity (see S5 Fig). With cortical thickness, we found similar performance trends as the ones obtained with functional connectivity in ABIDE, with the contiguous scheme outperforming the diverse scheme within-distribution but showing lower accuracy in the holdout participants. The same performance pattern was found in ANX. On the other hand, ADHD showed similar trends to the ones found in the classification of ASD in HBN. Model accuracy in the classification of ADHD, however, was very close to 0.5, which may suggest that there may not be enough signal in the data.

We also analyzed the relationship between the functional connectivity patterns (i.e., weights) learned by the models based on the 2 different sampling schemes. More specifically, we calculated the Pearson correlation coefficient to quantify the consistency of model coefficients that we obtained in the scenarios with contiguous, diverse, and random sampling schemes. Results are shown in Fig 2B for different sizes of the training set (from 2 to 8 strata for model training) in both ABIDE and HBN datasets for the classification of ASD. With the exception of 2 strata, where the model may not have enough data to extract a robust pattern, the models were able to identify robust patterns (correlation coefficient r > 0.7) in both datasets with the diverse sampling. With the contiguous and random schemes, the extracted patterns showed high consistency only when large numbers of strata were used for training, although in ABIDE the contiguous scheme was able to extract stable patterns. This is mainly due to the overlap of the training data, which may relate to our observation of strong pattern stability comparing the sampling schemes when using a large number of strata for training (e.g., 8 strata). Overall, however, the patterns identified by the contiguous and diverse schemes showed very low correlations, which highlights the important role of diversity in obtaining robust and reproducible biomarkers. This was also illustrated by the low pattern correlations of the random scheme, where training participants were chosen without considering the criterion of diversity. S6 Fig shows the consistency of patterns obtained in the classification of ASD using cortical thickness in the ABIDE dataset and ADHD and ANX from HBN based on functional connectivity. In accordance with the previous results obtained in the classification of ASD based on functional connectivity, we found a low consistency between the patterns extracted from contiguous and those from diverse strata.

Charting the tension between prediction accuracy and participant diversity

Fig 3 displays the relationship between model performance and diversity in both ABIDE and HBN datasets in the classification of ASD from functional connectivity. For each cohort, the first column shows within-distribution performance based on a 10-fold CV strategy using only the training set, and the second column shows performance in the holdout data. Results were obtained using 5 strata to build our predictive models (and the remaining 5 for holdout). Performance is reported in terms of AUC and F1 score. With both performance metrics, we can observe a decline in performance in ABIDE as diversity increased in both within (AUC: r = −0.630, F1 score: r = −0.568) and out-of-distribution (AUC: r = −0.671, F1 score: r = −0.579) predictions. In the HBN cohort, we found the opposite relationship, with performance being negatively correlated with diversity when assessing both within (AUC: r = 0.427, F1 score: r = 0.413) and out-of-distribution (AUC: r = 0.414, F1 score: r = 0.397) predictions. In both datasets, we found strong associations between performance and diversity, although with different signs. In light of the diverging results observed in the 2 cohorts and the negligible number of females in ABIDE, we further inspected the role of diversity in the HBN dataset when considering males and females separately. Diversity was thus only defined by age and scanning site. As illustrated in S7 Fig, performance (both within and out-of-distribution) decayed with increasing diversity in both female and male subsets, showing therefore similar trends to that found in the ABIDE cohort. Moreover, S5 Fig shows the relationship between performance and diversity in the classification of ASD in ABIDE based on cortical thickness and ADHD and ANX in HBN using functional connectivity. In ADHD, we can observe a positive correlation of performance with diversity. Yet, it was very weak in holdout participants. For the 2 other cases (i.e., ASD versus TD using cortical thickness and ANX versus TD using functional connectivity), we found negative correlations, with performance decreasing the farther the held-out stratum was from the strata used for training.

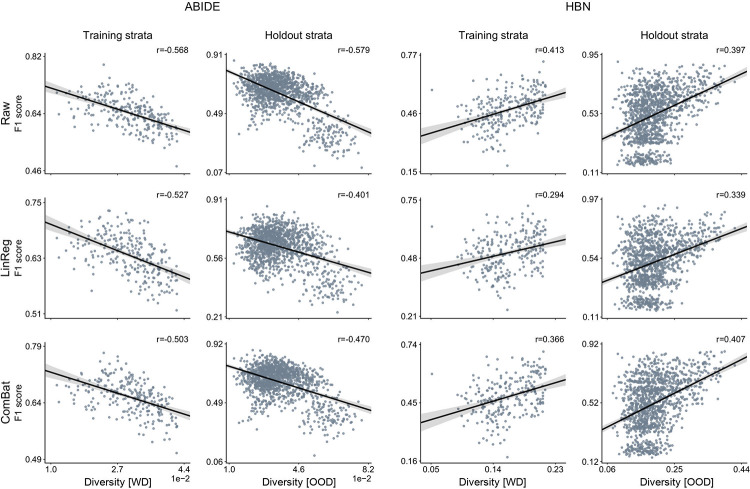

Fig 3. Participant diversity is a major determinant for the classification accuracy of predictive models.

Results are based on the classification of ASD versus TD using functional connectivity profiles. For each dataset, results show prediction accuracy for each possible combination of 5 out of 10 strata for training and the remaining 5 strata as holdout. Prediction accuracy based on AUC (top) and F1 score (bottom) in 2 different cohorts: ABIDE (left) and HBN (right). For each cohort, the first column indicates the predictive model performance using a 10-fold CV strategy based solely on the training set, where diversity is computed as the average of all pairwise absolute differences in propensity scores (i.e., WD). The second column displays the performance for each single stratum in the holdout strata. Diversity denotes the mean absolute difference in propensity scores between the participants of the training set and those in the held-out strata with unseen participants (i.e., OOD). The strength of the association between performance and diversity is reported with Pearson correlation coefficient (r). Our empirical results show a strong relationship between predictive performance and diversity, although different correlation directions were found in ABIDE and HBN cohorts. Data underlying this figure can be found in S1 Data. ABIDE, Autism Brain Imaging Data Exchange; ASD, autism spectrum disorder; AUC, area under the curve; CV, cross-validation; HBN, Healthy Brain Network; OOD, out of distribution; TD, typically developing; WD, within distribution.

The aforementioned analysis is based on the stratification of the matched participants, as explained in the Methods section (subsection Matching and stratification). To illustrate the importance of the stratification and matching steps, we further assessed out-of-distribution performance (i.e., holdout strata) without using stratification and without using matching and compared the results to the ones in Fig 3. As shown in S8 and S9 Figs, respectively, stratification was necessary to produce train–test draws with different propensity scores and allowed us to assess the impact of diversity in performance, while matching was required to prevent class imbalance from biasing results. Furthermore, to better understand the incongruent results obtained from the ABIDE and HBN cohorts when using both males and females, we generated 2 different single-feature toy datasets. In the first synthetic dataset (ABIDE like), the group-wise feature difference decreased as we moved toward the lowest/highest propensity scores, while it increased in the second synthetic dataset (HBN like). Based on these datasets, we assessed the relationship between diversity and AUC using the same approach used in the main analysis. As shown in S10 Fig, these results recapitulate the same trends as the ones found in the actual ABIDE and HBN participants and therefore may explain to a great extent the divergent results obtained in ABIDE and HBN.

Exploring the role of deconfounding practices

So far, we have shown that diversity had a considerable impact on model performance, but we did this based on the original raw functional connectivity data. In this section, our aim is to investigate if deconfounding can help us remove, at least to some extent, the impact of diversity on performance. To do so, we used 2 different deconfounding approaches. The first deconfounding approach is the traditional linear regression–based approach that removes the contribution of the confounds from the raw brain imaging data. For the second deconfounding approach, we used ComBat to deal with site effects. Similar to the computation of the propensity scores, we performed deconfounding prior to our analyses, based on all the available participants instead of using only the training data. The relationship of performance with diversity after deconfounding is reported using AUC in Fig 4 and F1 score in S11 Fig. We refer to the absence of deconfounding as raw data (first row). In ABIDE, the correlations of AUC and diversity in the holdout dataset when using linear regression–based (r = −0.539) and ComBat (r = −0.599) deconfounding approaches were slightly lower than the correlation obtained when using the raw data (r = −0.671). In HBN, we also found small differences with the linear regression–based (r = 0.327) and ComBat (r = 0.392) approaches than when using the raw data (r = 0.414). Similar results were obtained with F1 score. Note that the computation of the diversity index and the nuisance deconfounding procedures were based on the same set of covariates (i.e., age, sex, and site). Nonetheless, these results show that, although slightly decreased, a strong correspondence persisted between diversity and prediction accuracy after deconfounding. Since diversity captured the variation in the collective covariates, the large proportion of variation in the data that was still explained by diversity (even after deconfounding) suggests that existing deconfounding approaches do not completely eliminate the effects of diversity (i.e., known sources of population variation) from the brain imaging data. It is worth mentioning here that, although there were slight differences, we cannot expect to find major changes because our data were already matched. Nonetheless, these results further emphasized the importance of taking diversity into consideration when using CV strategies to assess the performance of our predictive models.

Fig 4. Established and new deconfounding strategies appear insufficient to counteract escalating population diversity.

A means to quantify the behavior of predictive models is comparing its predictions on training and testing information. Performance is measured by AUC metric on the original brain imaging data (Raw, top), and after carrying out deconfounding steps on the brain feature prior to the pattern classification pipeline using standard linear regression–based deconfounding (LinReg, middle) and recently proposed ComBat (bottom) approaches. Compared with the conventional nuisance removal using regression residuals, ComBat is a more advanced hierarchical regression to control for site differences. Results are reported for 2 different clinical cohorts: ABIDE (left) and HBN (right). For each cohort, the first column shows the model prediction performance using a 10-fold CV strategy. Diversity is computed as the average of pairwise absolute differences in propensity scores between all participants (i.e., WD). Each dot is a cross-validated accuracy. The second column, for each cohort, displays the performance in the holdout participants. Instead of reporting performance on all the participants in the holdout data, here, performance is assessed independently for participants in each single stratum from the holdout data. Thus, diversity denotes the mean absolute difference in propensity scores between the participants in the training set and those in the held-out stratum (i.e., OOD). Both ComBat and linear regression–based deconfounding failed to mitigate the impact of diversity on prediction accuracy. Data underlying this figure can be found in S1 Data. ABIDE, Autism Brain Imaging Data Exchange; AUC, area under the curve; CV, cross-validation; HBN, Healthy Brain Network; OOD, out of distribution; WD, within distribution.

Dissecting the impact of diversity on prediction accuracy

To better understand the impact of diversity on performance, we inspected the out-of-distribution performance of our predictive models using confusion matrices. First, the holdout strata were ranked and divided into 6 chunks according to their diversity (with respect to the training strata) to then generate the respective average confusion matrices. Fig 5A shows out-of-distribution confusion matrices in the classification of ASD in both ABIDE and HBN datasets. In ABIDE, with low diversity (e.g., the first confusion matrices) we see that the predictive models achieved good discriminative power, with a proportion of true positives (TP) and true negatives (TN) of 0.33 and 0.35, respectively. But as diversity increased, the model tended to classify most participants as TD. In the confusion matrix corresponding to the highest diversity, the proportion of TN was 0.37 and that of false positives (FP) was 0.38. In the HBN dataset, the impact of diversity was reflected in the opposite direction, starting with low performances (e.g., proportion of TP and TN in the first confusion matrix of 0.22 and 0.25) to then achieve better predictions as diversity increased (e.g., proportion of TP and TN of 0.33 and 0.39 in the last confusion matrix).

Fig 5. Breakdown of out-of-distribution predictions reveals unstable model behavior according to diversity subdimensions: acquisition sites, participant age, and sex.

(A) Error matrices that summarize the relationship of diversity (between the training and testing strata, i.e., OOD) with success and failure rate of the predictive models. These matrices are computed across sets of diagnostic classifications sorted in ascending order by diversity. (B) Relationship of out-of-distribution performance with diversity and age for each scanning site in ABIDE (left; NYU, PITT, TCD, and USM) and HBN (right; CBIC, RU, and SI). Performance is shown in terms of proportion of FN, FP, TN, and TP cases of model-based classification of clinical diagnoses. (C) Relationship of out-of-distribution performance with diversity for female (F) and male (M) participants. Predictive models show unstable behavior across each diversity dimension, underscoring the inconsistent results across the different sites and between females and males. Data underlying this figure can be found in S1 Data. ABIDE, Autism Brain Imaging Data Exchange; CBIC, CitiGroup Corcell Brain Imaging Center; FN, false negatives; FP, false positives; HBN, Healthy Brain Network; NYU, New York University Langone Medical Center; OOD, out of distribution; PITT, University of Pittsburgh, School of Medicine; RU, Rutgers University Brain Imaging Center; SI, Staten Island; TCD, Trinity Centre for Health Sciences, Trinity College Dublin; TN, true negatives; TP, true positives; USM, University of Utah, School of Medicine.

We also examined the impact of diversity for each of the covariates that contributed to its computation (i.e., age, sex, and site). As shown in Fig 5B, the impact of diversity on classification performance (in terms of true/FP/negatives) varied from one scanning site to another. Considering, for example, the proportion of TN in ABIDE, we can see that it decreased with diversity in most sites except in TCD, where it increased. Similar results could be found in HBN, where the proportion of TN increased with diversity in one single scanning site (i.e., CBIC) but decayed in the remaining sites. This indicates that diversity impacts the performance in each site differently, which might account for the divergent results observed in ABIDE and HBN. When plotting the same performance results against age, we found different trends. This occurred because the distribution of age across the strata was not monotonically increasing with diversity, as shown in S2 Fig. Nonetheless, in HBN, e.g., we can see that the proportion of false negatives (FN) increased with age in CBIC, while it decreased in the rest of the scanning sites. Finally, when using sex (see Fig 5C), we found a clear decrease in the proportion of FN in females while a slight improvement was observed in males in the ABIDE dataset. Note, however, that there was a substantially reduced number of females in ABIDE compared to HBN. In HBN, we see that the proportion of FP, for example, increased in females and decreased in males. These results indicate that the formed predictive models exhibit unstable performance behavior as a function of population variation, given that prediction accuracy showed varying links with diversity along each dimension. The considered subanalyses need not align with the global trend observed when collapsing all these dimensions of population variation into a single composite index. Furthermore, the divergence in trends between the individual dimensions of interindividual variation (e.g., participant age) and their composite score (i.e., diversity) spotlights the need for propensity score frameworks because they can serve as a handle to monitor and manage diversity in modern multisite datasets in neuroscience. Since we are only interested in the overall trends, the results in Fig 5B and 5C were smoothed using a Gaussian-weighted moving average for visualization purposes.

Quantifying the impact of diversity on pattern consistency

Fig 6A displays the coefficients extracted by the predictive models with increasing diversity in both ABIDE and HBN cohorts. Model coefficients were z-scored, grouped into 5 chunks, and averaged according to diversity, with positive and negative coefficients shown separately. In both datasets, we can observe that these coefficients underwent considerable drifts in the face of diversity. For example, the coefficients of both the medial frontal and posterior cingulate cortices changed considerably with diversity, going from positive to negative in ABIDE and vice versa in HBN. Moreover, Fig 6B illustrates how consistency changed between patterns extracted from participants in the training set with different diversity. We can see that the extracted patterns gradually diverged as diversity in the training data increased. This is shown in both datasets, but more markedly in HBN. Similar results can be seen in S12 Fig in the classification of ASD using cortical thickness and ADHD and ANX based on functional connectivity. The regions whose coefficients underwent significant changes with diversity are shown in Fig 7A and 7B for ABIDE and HBN, respectively. Although this is not directly related to the discriminative importance of such regions, it does show that the patterns extracted from homogeneous observations differ from those obtained when training with more heterogeneous observations. At the network level, we found strong associations of network coefficients with diversity in the default mode (consistency of predictive patterns: r = −0.286/0.161 in ABIDE/HBN), frontoparietal (r = 0.413/−0.291), limbic (r = 0.373/0.110), and dorsal (r = 0.137/−0.247) and ventral (r = 0.298/0.330) attention networks. There does not seem to be a strong effect of diversity in the model coefficients corresponding to the somatomotor (r = −0.112/−0.099) and visual (r = 0.046/−0.108) networks.

Fig 6. Varying participant diversity is detrimental for consistency of model-derived predictive patterns.

Results are based on all possible combinations of 5 out of 10 strata for training and the remaining 5 strata as holdout. (A) Drifts in model coefficients when estimated repeatedly (rows) with increasing diversity, separately in ABIDE (top) and HBN (bottom) cohorts. Model coefficients were ranked according to diversity and grouped into 5 chunks. Coefficients averaged within each chunk are displayed with increasing diversity. Positive and negative coefficients are shown in separate brain renderings for visibility. For each node, positive/negative coefficients were computed by averaging the edges with only positive/negative coefficients. (B) From top to bottom, changes in predictive model coefficients with increasing diversity in ABIDE and HBN cohorts. Consistency of model coefficients, in terms of Pearson correlation, is obtained for each possible combination of training participants (5 strata combined for training), where diversity is computed as the mean absolute difference in the propensity scores of the training participants (i.e., WD). Each entry in the correlation matrices corresponds to the correlation between the coefficients obtained by 2 predictive models trained on different combinations of training strata. Model coefficients were sorted according to the diversity of their corresponding training observations (arranged from low to high). Each matrix shows correlations based on the model coefficients learned when using the raw data (lower triangular part) and the ComBat-deconfounded data (upper part). Our results show that the consistency of model-derived predictive patterns decays with increasing diversity of the training set, even under deconfounding. Data underlying this figure can be found in S1 Data. ABIDE, Autism Brain Imaging Data Exchange; HBN, Healthy Brain Network; WD, within distribution.

Fig 7. Anatomical hot spots where predictive rules risk to become brittle in the face of diversity.

(A) ABIDE cohort: relationship between network-aggregated coefficients of predictive model and level of population diversity. Diversity is computed on the training strata (i.e., WD). Model coefficients are averaged for each intrinsic connectivity network. (B) HBN cohort: relationship of network-aggregated model coefficients with diversity. Brain renderings in A and B expose regions whose coefficients show a significant association with diversity. Consistent across both clinical cohorts, predictive model coefficients in regions of the highly associative DMN showed a privileged relation to escalating population variation. Data underlying this figure can be found in S1 Data. ABIDE, Autism Brain Imaging Data Exchange; DAN, dorsal attention network; DMN, default mode network; FPN, frontoparietal network; LSN, limbic system network; SMN, somatomotor network; VAN, ventral attention network; VN, visual network; WD, within distribution.

Discussion

In our quest toward realizing single-patient prediction in real-world settings, MRI-based machine learning approaches seek to provide accurate and replicable biomarkers. Due to the recent rise of large-scale brain scanning collections, analytical tools are now urgently needed to account for potential distributional shifts as a consequence of increasing diversity of the participant cohorts. In multisite neuroimaging studies, the generalization power of the predictive models is more likely to be affected by several sources of population variation. Some previous research studied their impact on prediction accuracy and biomarker robustness [42,48,89]. Yet, such analyses typically focused on a single source of participant heterogeneity. Our study sought to accommodate cohort diversity coming from multiple sources simultaneously to examine out-of-distribution generalization. We conducted computational experiments that delineate how success and failure of brain-based machine learning predictions are conditioned on sample diversity based on brain imaging–derived profiles of structure and function in 2 different ASD cohorts. Our findings spotlight the heteromodal association cortex, especially the default mode network, as a candidate substrate where participant diversity was linked to the biggest instability of extracted predictive signatures. Our results further show that this interdependence was not only specific to ASD and functional connectivity. Predictive signatures were also driven by participant diversity when studying structural features (i.e., cortical thickness) and functional connectivity in other neuropsychiatric disorders (i.e., ADHD and ANX).