Abstract

Mesoscopic photoacoustic imaging (PAI) enables non-invasive visualisation of tumour vasculature. The visual or semi-quantitative 2D measurements typically applied to mesoscopic PAI data fail to capture the 3D vessel network complexity and lack robust ground truths for assessment of accuracy. Here, we developed a pipeline for quantifying 3D vascular networks captured using mesoscopic PAI and tested the preservation of blood volume and network structure with topological data analysis. Ground truth data of in silico synthetic vasculatures and a string phantom indicated that learning-based segmentation best preserves vessel diameter and blood volume at depth, while rule-based segmentation with vesselness image filtering accurately preserved network structure in superficial vessels. Segmentation of vessels in breast cancer patient-derived xenografts (PDXs) compared favourably to ex vivo immunohistochemistry. Furthermore, our findings underscore the importance of validating segmentation methods when applying mesoscopic PAI as a tool to evaluate vascular networks in vivo.

Keywords: Photoacoustic imaging, Vasculature, Segmentation, Topology

1. Introduction

Tumour blood vessel networks are often chaotic and immature [2], [3], [4], [5], [1], with inadequate oxygen perfusion and therapeutic delivery [6], [7]. The association of tumour vascular phenotypes with poor prognosis across many solid cancers [1] has generated substantial interest in non-invasive imaging of the structure and function of tumour vasculature, particularly longitudinally during tumour development. Imaging methods that have been tested to visualise the vasculature include whole-body macroscopic methods, such as computed tomography and magnetic resonance imaging, as well as localised methods, such as ultrasound and photoacoustic imaging (PAI) [1]. Microscopy methods can achieve much higher spatial resolution but are typically depth limited, at up to ~1 mm depth, and frequently applied ex vivo [8], [9], [10], [11], [1].

Of the available tumour vascular imaging methods, PAI is highly scalable and, as such, applicable for studies from microscopic to macroscopic regimes. By measuring ultrasound waves emitted from endogenous molecules, including haemoglobin, following the absorption of light, PAI can reconstruct images of vasculature at depths beyond the optical diffusion limit of ~1 mm [11], [13], [12], [14]. State-of-the-art mesoscopic systems now bridge the gap between macroscopy and microscopy, achieving ~20 µm resolution at up to 3 mm in depth [15], [16]. Preclinically, mesoscopic PAI has been used to monitor the development of vasculature in several tumour xenograft models [17], [18], [19] and can differentiate aggressive from slow-growing vascular phenotypes [19]. Studies to date, however, have been largely restricted to qualitative analyses due to the challenges of accurate 3D vessel segmentation, quantification and robust statistical analyses [17], [20], [15], [18], [19], [21]. Instead, PAI quantification is typically manual and ad hoc, with 2D measurements often extracted from 3D PAI data [17], [20], [22], [19], [23], reducing repeatability and comparability across datasets.

To assess the performance and accuracy of vessel analyses, ground truth datasets are needed with a priori known features [24]. Creating full-network ground truth reference annotations could be achieved through comprehensive manual labelling of PAI data, but this is difficult due to: the lack of available experts to perform annotation with a new imaging modality; the time taken to label images; and the inherent noise and artefacts present in PAI data. Despite the numerous software packages available to analyse vascular networks [2], their performance in mesoscopic PAI has yet to be evaluated, hence there is an unmet need to improve the quantification of vessel networks in PAI, particularly given the increasing application of PAI in the study of tumour biology [17], [15], [19].

To quantify PAI vascular images and generate further insights into the role of vessel networks in tumour development and therapy response, accurate segmentation of the vessels must be performed [2] (see step 1 in Fig. 1). A plethora of segmentation methods exist and can be broadly split into two categories: rule-based and machine learning-based methods. Rule-based segmentation methods encompass techniques that automatically delineate the vessels from the background based on a custom set of rules [25]. These methods provide less flexibility and tend to consider only a few features of the image, such as voxel intensity [17], [19], [26], [23] but they are easy-to-use, with no training dataset requirements. On the other hand, machine learning-based methods, such as random forest classifiers, delineate vessels based on self-learned features [27], [25]. Nonetheless, learning-based methods are data-driven, requiring large and high-quality annotated datasets for training and can have limited applicability to new datasets. To tackle some of these issues, several software packages have been developed in recent years, and have become increasingly popular in life science research [2], [28], [29]. Prior to segmentation, denoising and feature enhancement methods, such as Hessian-matrix based filtering, can also be applied to overcome the negative impact of noise and/or to enhance certain vessel structures within an image [30], [31], [32].

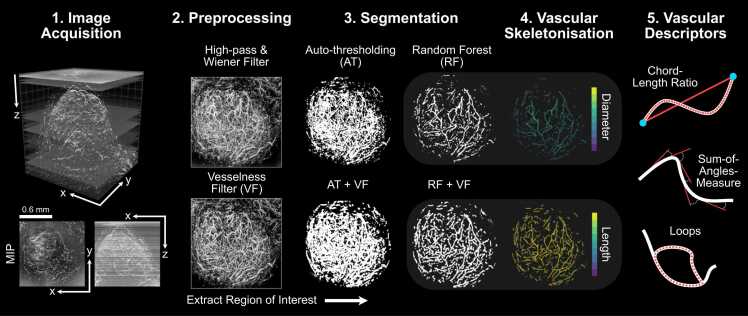

Fig. 1.

The mesoscopic photoacoustic image analysis pipeline. 1) Images are acquired and reconstructed at a resolution of 20 × 20 × 4 µm³ (PDX tumour example shown with axial and lateral maximum intensity projections – MIPs). 2) Image volumes are pre-processed to remove noise and homogenise the background signal (high-pass and Wiener filtering followed by slice-wise background correction). Vesselness image filtering (VF) is an optional and additional feature enhancement method. 3) Regions of interest (ROIs) are extracted and segmentation is performed on standard and VF images using auto-thresholding (AT or AT+VF, respectively) or random forest-based segmentation with ilastik (RF or RF+VF, respectively). 4) Each segmented image volume is skeletonised (skeletons with diameter and length distributions shown for RF and RF+VF, respectively). 5) Statistical and topological analyses are performed on each skeleton to quantify vascular structures for a set of vascular descriptors. All images in steps 2–4 are shown as x-y MIPs.

Here, we establish ground truth PAI data using two approaches: simulations conducted on synthetic vascular architectures generated in silico, and a photoacoustic string phantom composed of a series of synthetic blood vessels (strings) of known structure, which can be imaged in real-time. Against these ground truths, we compare and validate the performance of two common vessel segmentation methods, with or without the application of 3D Hessian matrix-based vesselness image filtering feature enhancement of blood vessels (steps 2 & 3 in Fig. 1). Following skeletonisation of the segmentation masks, we perform statistical and topological analyses to establish how segmentation influences the architectural characteristics of a vascular network acquired using PAI (steps 4 & 5 in Fig. 1). Finally, we apply our segmentation and analysis pipeline to two in vivo breast cancer models and undertake a biological validation of the segmentation and subsequent statistical and topological descriptors using ex vivo immunohistochemistry (IHC). Compared to a rule-based auto-thresholding method, our findings indicate that a learning-based segmentation, via a random forest classifier, is better able to account for the artefacts observed in our 3D mesoscopic PAI datasets, providing a more accurate segmentation of vascular networks. Statistical and topological descriptors of vascular structure are influenced by the chosen segmentation method, highlighting a need to validate and standardise segmentation methods in PAI for increased reproducibility and repeatability of mesoscopic PAI in biomedical applications.

2. Results

2.1. In silico simulations of synthetic vasculature enable segmentation precision to be evaluated against a known ground truth

Our ground truth consisted of a reference dataset of synthetic vascular network binary masks (n = 30) generated from a Lindenmayer System, referred to as L-nets (Fig. 2; Supplementary Movie 1 for 3D visualisation). We simulated PAI mesoscopy data from these L-nets (Fig. 2A) and subsequently used vesselness filtering (VF) as an optional and additional feature enhancement method (Fig. 2B). The four segmentation pipelines selected for testing (Fig. 1) were applied to the simulated PAI data (Fig. 2C), that is, all images were segmented with:

1. Auto-thresholding using a moment preserving method (AT);
2. Auto-thresholding using a moment preserving method with vesselness filtering pre-segmentation (AT+VF);
3. Random forest classifier (RF);
4. Random forest classifier with vesselness filtering pre-segmentation (RF+VF).

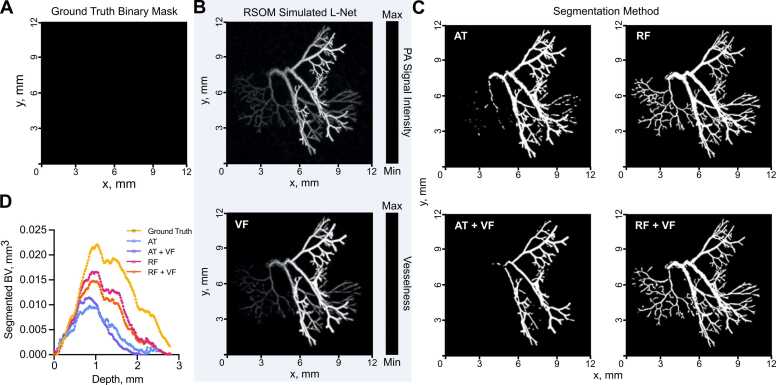

Fig. 2.

Exemplar vascular architectures generated in silico and processed through our photoacoustic image analysis pipeline. (A-C) XY maximum intensity projections of L-net vasculature. (A) Ground truth L-Net binary mask used to simulate raster-scanning optoacoustic mesoscopy (RSOM) image shown in (B, top) and subsequent optional vesselness filtering (VF) (B, bottom). (C) Segmented binary masks generated using either auto-thresholding (AT), auto-thresholding after vesselness filtering (AT + VF), random forest classification (RF); or random forest classification after vesselness filtering (RF+VF). (D) Segmented blood volume (BV) average across L-net image volumes, plotted against image volume depth (mm). For (D) n = 30 L-nets. See Supplementary Movie 1 for 3D visualisation.

Visually, RF methods appear to segment a larger portion of synthetic blood vessels (Fig. 2C) and they are particularly good at segmenting vessels at depths furthest from the simulated light source (Fig. 2D). A key image quality metric in the context of segmentation is the signal-to-noise ratio (SNR), which is degraded at greater depth (Fig. 3A). To evaluate the relative performance of the methods, we compared the segmented and skeletonised blood volumes (BV) from the simulated PAI data to the known ground truth from the L-net. Here, we found that the learning-based RF segmentation outperformed the others in making the segmentation masks, with significantly higher R² (segmented BV: AT: 0.68, AT+VF: 0.58, RF: 0.84, RF+VF: 0.89, Fig. 3B; skeleton BV: AT: 0.59, AT+VF: 0.73, RF: 0.90, RF+VF: 0.93, Fig. 3C) and lower mean-squared error (MSE) (Fig. 3D), with respect to the ground truth L-net volumes, compared to both AT methods (p < 0.0001 for all comparisons). Bland-Altman plots, which we used to illustrate the level of agreement between segmented and ground truth vascular volumes, showed a mean difference compared to the reference volume of 0.61 mm³ (limits of agreement, LOA −0.48 to 1.7 mm³, Fig. 3E) and F1 score of 0.73 ± 0.11 (0.49–0.88) for RF segmentation, albeit with a wide variation indicated by the LOA. RF+VF segmentation resulted in a similar mean difference of 0.74 mm³ (LOA −0.50 to 2.0 mm³, Fig. 3F) and F1 score of 0.66 ± 0.11 (0.44–0.84). In comparison, the rule-based AT segmentation showed poor performance in segmenting vessels at depth (Fig. 2C, Supplementary Movie 1), yielding a mean difference of 1.1 mm³ (LOA −0.60 to 2.8 mm³) and, as with RF+VF, AT+VF did not improve the result, yielding the same mean difference of 1.1 mm³ (LOA −0.52 to 2.8 mm³) (Fig. 3G,H). F1 scores were poor for both AT methods, with 0.39 ± 0.10 (0.21–0.59) for AT and 0.37 ± 0.09 (0.16–0.52) for AT+VF.
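As a rough illustration of the agreement metrics reported above, the sketch below computes a Bland-Altman mean difference with limits of agreement, and an F1 score for a pair of binary masks. It is not the analysis code used in this study: the function names, the sign convention (ground truth minus segmented) and the use of mean ± 1.96 standard deviations for the LOA are assumptions, and the example values are invented for demonstration.

```python
from statistics import mean, stdev


def bland_altman(reference, measured):
    """Return (mean difference, lower LOA, upper LOA).

    Assumptions: difference = reference - measured, and
    LOA = mean difference +/- 1.96 * sample standard deviation.
    """
    diffs = [r - m for r, m in zip(reference, measured)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)
    return bias, bias - half_width, bias + half_width


def f1_score(pred_mask, true_mask):
    """F1 overlap of two flattened binary masks (sequences of 0/1)."""
    pairs = list(zip(pred_mask, true_mask))
    tp = sum(1 for p, t in pairs if p and t)      # vessel in both masks
    fp = sum(1 for p, t in pairs if p and not t)  # segmented but not in truth
    fn = sum(1 for p, t in pairs if t and not p)  # in truth but missed
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


# Invented example volumes (mm^3) for four hypothetical networks.
ground_truth_bv = [1.0, 2.0, 3.0, 4.0]
segmented_bv = [0.8, 1.5, 2.6, 3.1]
bias, loa_low, loa_high = bland_altman(ground_truth_bv, segmented_bv)

# Invented flattened masks for the overlap score.
overlap = f1_score([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
```

With this sign convention, a positive mean difference corresponds to a segmentation that underestimates the ground truth volume.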

Fig. 3.

Learning-based random forest classifier outperforms rule-based auto-thresholding in segmenting simulated PAI vascular networks. (A) Depth-wise comparison of signal-to-noise ratio (SNR) measured in PAI-simulated L-nets across depth. (B,C) A comparison between ground truth blood volume (BV) and (B) segmented or (C) skeletonised blood volumes (BV). The dashed line indicates a 1:1 relationship. (D) Heat map displaying normalised (with respect to the maximum of each individual descriptor) mean-squared error comparing our vascular descriptors, calculated from segmented and skeletonised L-nets compared to ground truth L-nets, to each segmentation method. Abbreviations defined: connected components, β0 (CC), chord-to-length ratio (CLR), sum-of-angle measure (SOAM). (E-H) Bland-Altman plots comparing blood volume measurements from ground truth L-nets with that of each segmentation method: (E) RF, (F) RF+VF, (G) AT, (H) AT+VF. Pink lines indicate mean difference to ground truth, whilst dotted black lines indicate limits of agreement (LOA). For all subfigures n = 30 L-nets.

In all cases, the mean difference shown in Bland-Altman plots increased with ground truth vascular volume, especially in the rule-based AT segmentation, which would be expected due to the restricted illumination geometry of photoacoustic mesoscopy. Since more vessel structures lie at a greater distance from the simulated light source in larger L-nets, they suffer from the depth-dependent decrease in SNR (Fig. 3A). RF segmentation was better able to cope with the SNR degradation, particularly at distances beyond ~1.5 mm, compared to the AT segmentation, which consistently underestimated the vascular volume.

Next, we skeletonised each segmentation mask to enable us to perform statistical and topological data analysis (TDA) to test how each segmentation method quantitatively influences a core set of vessel network descriptors [33]. These descriptors allowed us to evaluate the performance of the different segmentation methods in respect of the biological characterisation of the tumour networks. We used the following statistical descriptors: vessel diameters and lengths, vessel tortuosity (sum-of-angles measure, SOAM) and vessel curvature (chord-to-length ratio, CLR). Our topological network descriptors are connected components (Betti number β0) and looping structures (1D holes, Betti number β1) (see Table S1 for descriptor descriptions).
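To make the two statistical shape descriptors concrete, the sketch below evaluates them on a vessel centreline given as a list of 3D points. It is a simplified illustration rather than the implementation used in this study: the function names are hypothetical, and SOAM is approximated here as the sum of bending angles between consecutive segments divided by path length, which may differ from the definition in Table S1 (for example, torsional angles are ignored).

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _norm(v):
    return math.sqrt(sum(x * x for x in v))


def path_length(points):
    """Total length of the polyline through the centreline points."""
    return sum(_norm(_sub(q, p)) for p, q in zip(points, points[1:]))


def chord_to_length_ratio(points):
    """Straight-line end-to-end distance divided by path length (1 = straight)."""
    length = path_length(points)
    return _norm(_sub(points[-1], points[0])) / length if length else 1.0


def sum_of_angles_measure(points):
    """Sum of bending angles (radians) per unit path length (simplified SOAM)."""
    total = 0.0
    for p, q, r in zip(points, points[1:], points[2:]):
        u, v = _sub(q, p), _sub(r, q)
        nu, nv = _norm(u), _norm(v)
        if nu == 0 or nv == 0:
            continue  # skip repeated points
        cos_angle = sum(a * b for a, b in zip(u, v)) / (nu * nv)
        total += math.acos(max(-1.0, min(1.0, cos_angle)))
    length = path_length(points)
    return total / length if length else 0.0


straight = [(0, 0, 0), (1, 0, 0), (2, 0, 0)]
bent = [(0, 0, 0), (1, 0, 0), (1, 1, 0)]  # one right-angle bend
clr_straight = chord_to_length_ratio(straight)
clr_bent = chord_to_length_ratio(bent)
soam_straight = sum_of_angles_measure(straight)
soam_bent = sum_of_angles_measure(bent)
```

In this toy case the straight vessel has a CLR of 1 and a SOAM of 0, whereas the bent vessel has a lower CLR and a higher SOAM.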

Here, the accuracy and strength of relationship between the segmented and ground truth vascular descriptors, calculated by MSE (see Fig. 3D) and R² values (Figure S1A-I) respectively, gave the same conclusions. Across all skeletons, we measured an increased number of connected components (β0) and changes to the number of looping structures (β1) from the simulated compared to the ground truth L-nets, resulting in low R² and high MSE for all methods (Fig. 3D). The observed changes in these topological descriptors arise due to depth-dependent SNR and PAI echo artefacts. For all other descriptors, AT+VF outperformed the other segmentation methods in its ability to accurately preserve the architecture of the L-nets, with the highest R² and lowest MSE values for vessel lengths, CLR, SOAM, number of edges and number of nodes (Fig. 3D).

Vessel diameters are accurately preserved by both RF segmentation methods, supporting our observation that these methods perform accurate vascular volume segmentation. We note that the number of edges and nodes are also well preserved by RF and RF+VF. This further supports the high accuracy of both RF methods to segment vascular structures.

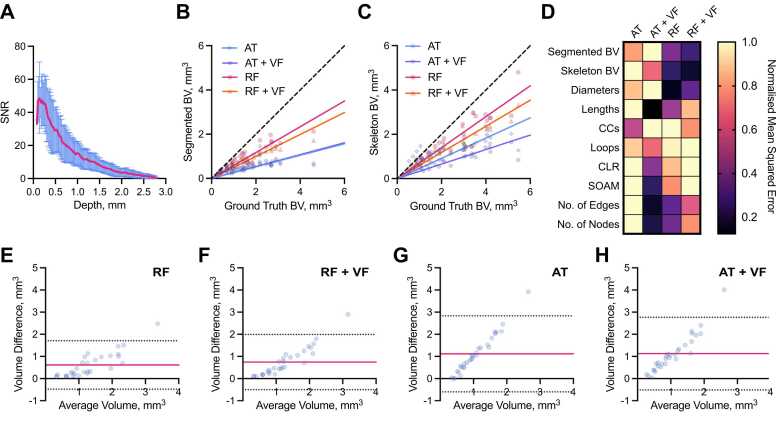

2.2. Random forest classifier accurately segments a string phantom

We next designed a phantom test object (Supplementary Figure 2) to further compare the performance of our segmentation pipelines in a ground truth scenario. Agar phantom images (n = 7) were acquired using a photoacoustic mesoscopy system and contained three strings of the same known diameter (126 µm), length (~8.4 mm) and consequently volume (~0.105 mm³), positioned at three different depths: 0.5 mm, 1 mm and 2 mm (Fig. 4A,B; Supplementary Movie 2). Consistent with our in silico experiments, the accuracy of skeletonised string volumes decreased as a function of depth across all methods (Fig. 4C), due to the decreased SNR with depth (Fig. 4D). Interestingly, the significance of this decrease was very high for all comparisons (top vs. middle, top vs. bottom and middle vs. bottom) in both AT methods (all p < 0.001), but we observed an improvement in string volume predictions across depth for both RF methods, such that middle vs. bottom string volumes were not significantly different in RF+VF (p = 0.42).

Fig. 4.

Random forest classifier outperforms auto-thresholding in segmenting a string phantom. XY maximum intensity projections of string phantom imaged with RSOM show that random forest-based segmentation outmatches auto-thresholding when correcting for depth-dependent SNR. (A) Photoacoustic mesoscopy (RSOM) image shows measured string PA signal intensity with top (0.5 mm), middle (1 mm) and bottom (2 mm) strings labelled. (B) Binary masks are shown following segmentation using: (AT) auto-thresholding; (RF) random forest classifier; (AT+VF) vesselness filtered strings with auto-thresholding; and (RF+VF) vesselness filtered strings with random forest classifier. (C) Skeletonised string volume calculated from segmented images of 3 strings placed at increasing depths in an agar phantom. Results from all 4 segmentation pipelines are shown. All volume comparisons (top vs. middle, top vs. bottom, middle vs. bottom) were significant (p < 0.05) except middle vs. bottom for RF+VF (p = 0.42). (D) SNR decreases with increasing depth. (E) Illumination geometry: known cross-section of string outlined (left); during measurement, signal is detected from the partially illuminated section (outlined) resulting in an underestimation in string volume (right). (F) String volume calculated pixel-wise from the segmented binary mask. (C,D,F) Data represented by truncated violin plots with interquartile range (bold) and median (dotted), ****=p < 0.0001 (n = 7 scans). (C,F) Dotted line indicates ground truth volume 0.105 mm³. See Supplementary Movie 2 for 3D visualisation.

The illumination geometry of the photoacoustic mesoscopy system means that vessels or strings are underrepresented when detected as the illumination source is located at the top surface of the tissue or phantom (Fig. 4E). As a result, all string volumes computed from the segmented images are inaccurate relative to ground truth suggesting that blood volume cannot be accurately predicted from segmented PA images (Fig. 4F). Skeletonisation provides a more accurate prediction of vessel and string volume as it approximates the undetected section by representing these objects as axisymmetric tubes (Fig. 4C,F).
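The difference between the two volume estimates can be sketched as follows. The helper names are hypothetical and this is not the code used in the study: the tube model assumes each skeleton segment is a straight cylinder of constant diameter, and the voxel size is taken from the reconstruction resolution quoted in Fig. 1.

```python
import math


def tube_volume(segments):
    """Skeleton-based volume: sum of pi * r^2 * length over segments.

    `segments` is a list of (diameter, length) pairs in consistent units.
    """
    return sum(math.pi * (d / 2) ** 2 * length for d, length in segments)


def voxel_volume(n_voxels, voxel_size):
    """Pixel-wise volume: number of segmented voxels times the voxel volume."""
    dx, dy, dz = voxel_size
    return n_voxels * dx * dy * dz


# Ground truth string: 126 um diameter, ~8.4 mm long, expressed in mm.
string_mm3 = tube_volume([(0.126, 8.4)])  # about 0.105 mm^3

# Voxel count that a fully detected string would occupy at 20 x 20 x 4 um.
voxel_mm = (0.020, 0.020, 0.004)
full_voxels = string_mm3 / voxel_volume(1, voxel_mm)

# If only part of the cross-section is detected (here, hypothetically half),
# the pixel-wise estimate falls short while the tube model does not.
partial_mm3 = voxel_volume(full_voxels / 2, voxel_mm)
```

The tube model recovers the full cross-section from a measured diameter, which is why it is less sensitive to the partially illuminated string than a direct voxel count.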

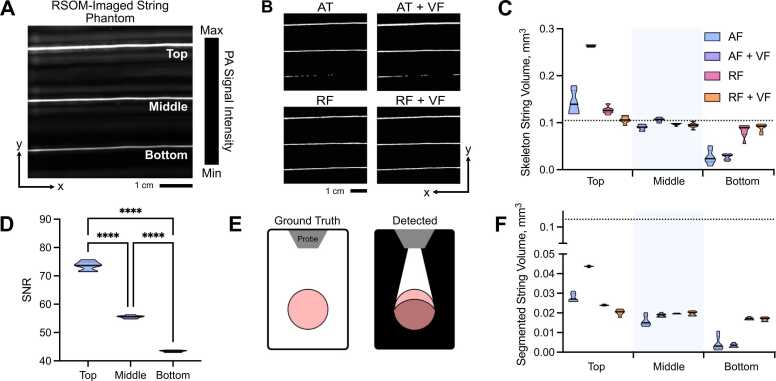

2.3. Vesselness filtering of in vivo tumour images impacts computed blood volume

Having established the performance of our AT- and RF-based segmentation methods in silico and in a string phantom, next we sought to determine the influence of the chosen method in quantifying tumour vascular networks from size-matched breast cancer patient-derived xenograft (PDX) tumours of two subtypes (ER- n = 6; ER+ n = 8, total n = 14).

Visual inspection of the tumour networks subjected to our processing pipelines suggests that VF increases vessel diameters in vivo (Fig. 5A-C; see Supplementary Movie 3 for 3D visualisation). This could be due to acoustic reverberations observed surrounding vessels in vivo, which VF scores with high vesselness, spreading the apparent extent of a given vessel and ultimately increasing its volume. Our quantitative analysis confirmed this observation: significantly higher skeletonised blood volumes were calculated in the AT+VF and RF+VF masks compared to AT and RF alone (Fig. 5D).

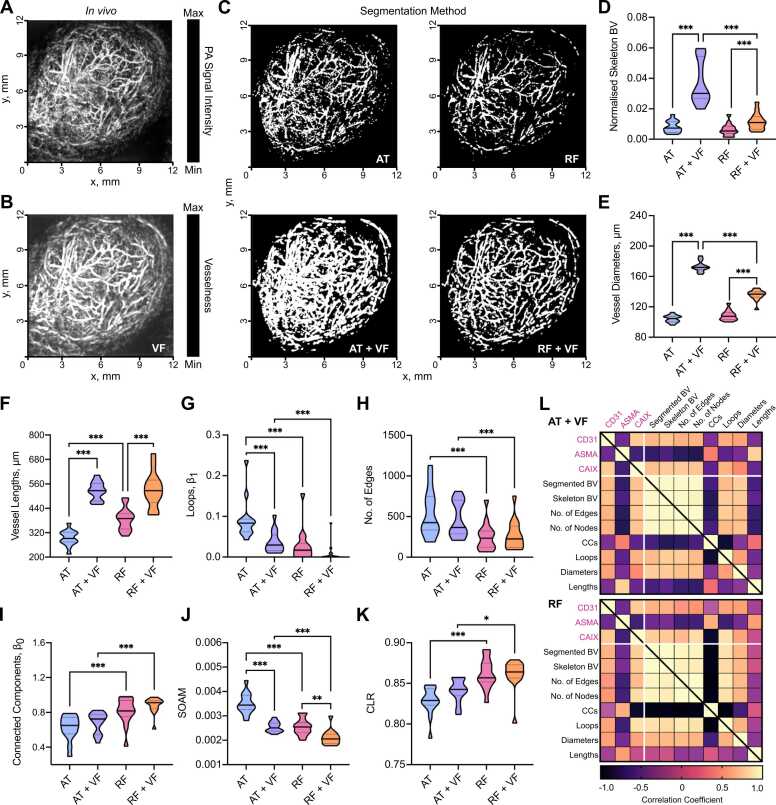

Fig. 5.

In vivo vascular network analyses and comparisons to ex vivo immunohistochemistry in patient-derived breast tumours are dependent on segmentation method employed. XY maximum intensity projections of breast PDX tumours imaged with RSOM: (A) original image before segmentation; (B) original image with vesselness filtering (VF) applied; (C) a panel showing segmentation with each method (AT: auto-thresholding, AT+VF: auto-thresholding with VF, RF: random forest classifier, and RF+VF: random forest with VF). (D) Skeletonised tumour blood volume (BV) from all 4 segmentation methods normalised to ROI volume. Statistical and topological data analyses were performed on skeletonised tumour vascular networks for the following descriptors: (E) Vessel diameters; (F) Vessel lengths; (G) loops normalised by network volume, β1; (H) Total number of edges; (I) Connected components normalised by network volume, β0; (J) sum-of-angle measures (SOAM); and (K) chord-to-length ratios (CLR). In panels (D-K), data are represented by truncated violin plots with interquartile range (dotted) and median (bold). Pairwise comparisons of AT vs. AT+VF, AT vs. RF, RF vs. RF+VF and AT+VF vs. RF+VF calculated using a linear mixed effects model (*=p < 0.05, **=p < 0.01, ***=p < 0.001). (L) Matrix of correlation coefficients for comparisons between IHC, BV and vascular descriptors for (top) AT+VF and (bottom) RF segmented networks. Pearson or Spearman coefficients are used as appropriate, depending on data distribution. For (D) n = 14, (E-K) n = 13 due to an imaging artefact in one image that would impact our vascular descriptors. For (L) comparisons involving BV n = 14, all other vascular descriptors n = 13. See Supplementary Movie 3 for 3D visualisation.

2.4. Network structure analyses and comparisons to ex vivo immunohistochemistry of tumour vasculature are impacted by the choice of segmentation method

Next, we computed vascular descriptors for our dataset of segmented in vivo images. As expected from our initial in silico and phantom evaluations, VF led to increased vessel diameters and lengths (Fig. 5E,F), as well as blood volume. Our in silico analysis indicated that AT performs poorly in differentiating vessels from noise and introduces many vessel discontinuities (Table S1). This was exacerbated in vivo where more complex vascular networks and real noise lead to an increase in segmented blood volume (p < 0.01), looping structures (Fig. 5G), a greater number of edges (Fig. 5H), and reduced number of connected components (Fig. 5I).

Our prior in silico and phantom experiments indicated that RF-based methods had a greater capacity to segment vessels at depth. Similarly, we observe more connected components for RF-based methods in vivo (Fig. 5I) along with lower SOAM (Fig. 5J) and higher CLR (Fig. 5K), suggesting that RF-segmented vessels have reduced tortuosity and curvature compared to AT+VF segmented vessels. These in vivo findings support our observations from in silico and phantom studies where RF-based methods provide the most reliable prediction of vascular volume, whereas AT+VF best preserves architecture towards the tissue surface.

Next, we sought to assess how our vascular metrics correlated with the following ex vivo IHC descriptors: CD31 staining area (to mark vessels), ASMA vessel coverage (as a marker of pericyte/smooth muscle coverage and vessel maturity) and CAIX (as a marker of hypoxia) to provide ex vivo biological validation of our in vivo descriptors. Our in silico, phantom and in vivo analyses indicate that AT+VF and RF are the top performing segmentation methods and so we focussed on these (results for AT and RF+VF can be found in Figure S3). We note that none of the vascular metrics derived from AT segmented networks correlated with IHC descriptors.

Both AT+VF and RF skeletonised blood volume correlate with CD31 staining area (r = 0.54, p = 0.05; and r = 0.61, p = 0.02 respectively; Fig. 5L). This is as expected as elevated CD31 indicates a higher number of blood vessels and, consequently, higher vascular volume. The following correlations are observed for ASMA vessel coverage: vessel diameters (r = −0.41, p = 0.17; and r = −0.43, p = 0.14, respectively); looping structures (r = −0.68, p = 0.01; and r = −0.58, p = 0.04, respectively); number of edges (r = −0.69, p = 0.01; and r = −0.65, p = 0.02, respectively); number of nodes (r = −0.70, p = 0.01; and r = −0.65, p = 0.02, respectively); vessel lengths (r = 0.76, p = 0.03; and r = 0.5, p = 0.08, respectively); connected components (r = 0.38, p = 0.22; and r = 0.59, p = 0.03, respectively). Considering the strengths of AT+VF and RF, these results are biologically intuitive as tumour vessel maturation may lead to higher pericyte coverage, lower vessel density and the pruning of redundant vessels. Elevated pericyte coverage is known to decrease vessel diameters [34], whereas high vessel density resulting from high angiogenesis rates can result in immature vessel networks [1]. Pruning may lead to a reduction in looping structures and, consequently, an increase in vessel lengths or vascular subnetworks.

Finally, levels of hypoxia in the tumours, measured by CAIX IHC, positively correlated in both AT+VF and RF methods with skeletonised blood volume (r = 0.72, p = 0.007; and r = 0.72, p = 0.004, respectively), number of edges (r = 0.59, p = 0.04; and r = 0.84, p < 0.001, respectively), nodes (r = 0.72, p = 0.007; and r = 0.84, p < 0.001, respectively) and looping structures (r = 0.61, p = 0.03; and r = 0.85, p < 0.001, respectively). In the case of blood volume, edges and nodes, these results are expected as it has been shown that breast cancer tumours with dense but immature and dysfunctional vasculatures exhibit elevated hypoxia [35], [1], likely due to poor perfusion. CAIX negatively correlated with connected components for RF networks (r = −0.87, p < 0.001) (Fig. 5L), reflecting results for ASMA vessel coverage. Our cross-validation between ex vivo IHC and vascular descriptors indicates that RF and AT+VF segmentation methods can reliably capture biological characteristics in tumours.

2.5. Ex vivo immunohistochemistry and network structural analyses highlight distinct vascular networks between ER- and ER+ breast patient-derived xenograft tumours

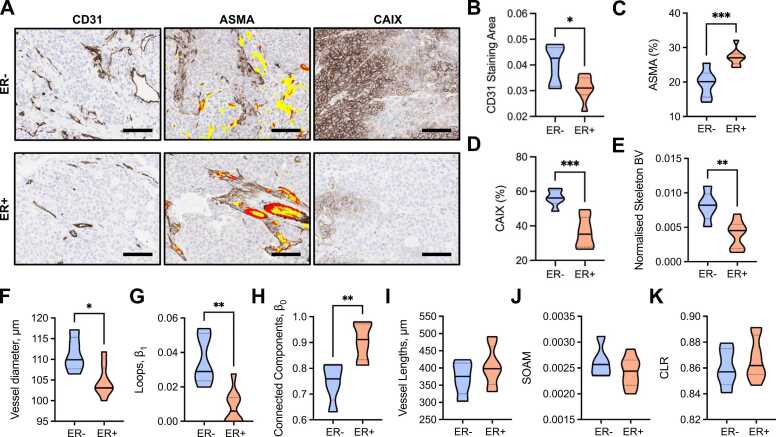

Finally, we quantified and compared IHC and our vascular descriptors between the two breast cancer subtypes represented (RF in Fig. 6; AT+VF in Figure S4; similar trends and significances are observed unless stated otherwise). From analysis of IHC images (Fig. 6A), ER- tumours had higher CD31 staining area (Fig. 6B), poorer ASMA+ pericyte vessel coverage (Fig. 6C) and higher CAIX levels (Fig. 6D) compared to ER+ tumours. Our IHC data supports our RF-derived vascular descriptors, where we found that ER- tumours had denser networks, with higher blood volume, diameter and looping structures (Fig. 6E,F,G). ER+ tumours have a sparse network but showed more subnetworks (Fig. 6H) with significantly longer vessels in AT+VF segmented networks (p < 0.05, Figure S4C), which could indicate a more mature vessel network based on our prior correlative analyses. No significant differences between the two models were observed for blood vessel tortuosity and curvature (Fig. 6J,K).

Fig. 6.

ER- PDX tumours have dense and immature vascular networks which result in hypoxic tumour tissue. (A) Exemplar IHC images of CD31, ASMA and CAIX stained ER- and ER+ tumours. Scale bar = 100 µm. Brown staining indicates positive expression of marker. ASMA sections display CD31 overlay, where red indicates areas where CD31 and ASMA are colocalised (ASMA vessel coverage) and yellow indicates areas where CD31 is alone. (B) CD31 staining area quantified from CD31 IHC sections and normalised to tumour area. (C) ASMA vessel coverage of CD31+ vessels (number of red pixels/number of red+yellow pixels, expressed as a percentage) on ASMA IHC sections. (D) CAIX total positive pixels as a percentage of the total tumour area pixels on CAIX IHC sections. (E-K) Statistical and topological data analyses comparing ER- and ER+ tumours. Data are represented by truncated violin plots with interquartile range (dotted black) and median (solid black). Comparisons between ER- and ER+ tumours made with unpaired t-test. *=p < 0.05, **=p < 0.01, ***=p < 0.001. For (B-E) ER- n = 6, ER+ n = 8. For (F-K) ER- n = 5, ER+ n = 8, one ER- image excluded with artefact that would impact the measured vascular descriptors.

3. Discussion

Mesoscopic PAI enables longitudinal visualisation of blood vessel networks at high resolution, non-invasively and at depths beyond the optical diffusion limit of ~1 mm [11], [13], [15], [14]. To quantify the vasculature, PA images need to be accurately segmented. Manual annotation of vasculature in 3D PAI is difficult due to depth-dependent signal-to-noise ratio and imaging artefacts. Whilst a plethora of vascular segmentation techniques are available [2], [27], their application in PAI has been limited due to a lack of an available ground truth for comparison and validation.

In this study, we first sought to address the need for ground truth data in PAI segmentation. We generated two ground truth datasets to assess the performance of rule-based and machine learning-based segmentation approaches with or without feature enhancement via vesselness filtering. The first is an in silico dataset where PAI was simulated on 3D synthetic vascular architectures; the second is an experimental dataset acquired from a vessel-like string phantom. These allowed us to evaluate the ability of different segmentation methods to preserve blood volume and vascular network structure.

Our first key finding is that machine learning-based segmentation using RF classification provided the most accurate segmentation of vessel volumes across our in silico, phantom and in vivo datasets, particularly at depths beyond ~1.5 mm, where SNR diminishes due to optical attenuation. Compared to the AT approaches, RF-based segmentation partially overcomes the depth dependence of PAI SNR since it identifies and learns edge and texture features of vessels at different scales and contrasts. Such intrinsic depth-dependent limitations are often ignored in the literature, where analyses are typically performed on 2D maximum intensity projections for simplicity [17], [20], [22], [18], [19], [23], suggesting that a fully 3D machine learning-based segmentation is needed to accurately recapitulate the complexity of in vivo vasculatures measured using PAI.

As blood vessel networks can be represented as complex, interconnected graphs, we performed statistical and topological data analyses [36], [33] to further assess the strengths and weaknesses of our chosen segmentation methods.
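For a skeleton treated as an undirected graph, the two topological descriptors used here can be computed directly from the node and edge lists. The sketch below is a minimal illustration under that assumption, not the TDA code used in this study; the function name is hypothetical. It counts connected components (β0) with a union-find structure and obtains loops from the standard graph identity β1 = E − V + β0.

```python
def betti_numbers(n_nodes, edges):
    """Return (beta0, beta1) for an undirected graph.

    n_nodes: number of nodes, labelled 0 .. n_nodes - 1
    edges:   list of (a, b) node-index pairs, one per vessel segment
    """
    parent = list(range(n_nodes))

    def find(x):
        # Follow parent pointers to the component root, compressing the path.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b in edges:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_a] = root_b  # merge the two components

    beta0 = len({find(i) for i in range(n_nodes)})
    beta1 = len(edges) - n_nodes + beta0  # independent loops
    return beta0, beta1


# Toy network: one triangular loop (nodes 0-2) plus a separate segment (3-4).
intact = betti_numbers(5, [(0, 1), (1, 2), (2, 0), (3, 4)])

# The same network after a segmentation gap removes edge (1, 2):
# the loop opens, but the component count is unchanged.
broken = betti_numbers(5, [(0, 1), (2, 0), (3, 4)])
```

A gap in a looping vessel reduces β1, whereas a gap in a non-looping vessel increases β0, which is one way segmentation errors can propagate into these descriptors.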

Our second key finding is that AT methods struggle to segment vessels with low SNR, but adding VF outperforms all other methods in preserving vessel lengths, loops, curvature and tortuosity. Additionally, where intensity varies across a vessel structure, this results in many disconnected vessels when segmenting with AT alone, as only the highest intensity voxels will pass the threshold. Only when vesselness filtering is applied does AT do well at preserving topology. VF alters the intensity values from a measure of PA signal to a prediction of ‘vesselness’, generating a more homogeneous intensity across the vessel structures and ultimately a more continuous vessel structure to segment. This likely explains why AT+VF best preserves vessel length and, subsequently, network structure, while AT alone performs poorly. For AT, VF improved BV predictions in silico via better preservation of lengths but not diameters, as our phantom experiments indicate that AT+VF overestimates diameter.
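The fragmentation effect described above can be illustrated with a one-dimensional toy example. The intensity values, the threshold and the function name below are invented for demonstration and do not come from the study data or from the moment-preserving threshold used in the pipeline.

```python
def count_fragments(profile, threshold):
    """Count contiguous runs of samples at or above the threshold."""
    fragments = 0
    inside = False
    for value in profile:
        above = value >= threshold
        if above and not inside:
            fragments += 1  # a new run starts here
        inside = above
    return fragments


# Invented intensity profile sampled along a single continuous vessel.
raw_profile = [0.9, 0.8, 0.3, 0.85, 0.2, 0.9]

# Invented profile after a filter that homogenises intensity along the vessel.
homogenised_profile = [0.8, 0.8, 0.75, 0.8, 0.7, 0.8]

raw_fragments = count_fragments(raw_profile, threshold=0.5)
homogenised_fragments = count_fragments(homogenised_profile, threshold=0.5)
```

In this toy case the raw profile is split into three pieces by the threshold, while the homogenised profile remains a single continuous run.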

Owing to the homogeneous intensity of vessels introduced by VF, one could therefore assume that RF+VF would be the most accurate method at preserving network structure (by combining the machine-learning accuracy of segmentation with the shape enhancement of VF). However, this is not the case: RF alone can account for discontinuities in vessel intensity, unlike AT, meaning it does not rely on VF to enhance structural preservation, which is our third key finding. In fact, the slight inaccuracy in diameter preservation introduced by VF in silico appears to decrease topology preservation in RF+VF compared to RF alone. As expected, all methods led to an increase in the number of subnetworks (connected components) in silico, as these segmentation methods cannot reconnect vessel subnetworks that were disconnected due to poor SNR or imaging artefacts. Given the better segmentation at depth by RF methods, we hypothesise that these increasingly small subnetworks might have biased the segmentations to underperform in our vascular descriptors. This could be explored in future work, for example, by developing string phantoms with more complex topologies.

Taken together, our results suggest that RF performs feature detection across scales in the manually labelled voxels to learn discriminating characteristics for vessel classification and segmentation. Adding VF before RF segmentation may confound this segmentation framework, because VF systematically smooths images and removes non-cylindrical raw image information, which may have been vital in the RF learning of vascular structures on the training dataset.

Applying statistical and topological analyses to our in vivo tumour PDX dataset we observed trends consistent with our in silico and phantom experiments. Cross-validating our vascular descriptors with ex vivo IHC confirmed that we can extract biologically relevant information from mesoscopic PA images. In our analyses, we revealed that predictions of BV correlated with endothelial cell and hypoxia markers via CD31 and CAIX staining, respectively; and vascular descriptors relating to the maturation of vascular structures correlated with ASMA vessel coverage. Applying our segmentation pipeline to compare ER- and ER+ breast cancer PDX models showed that descriptors of network structure can capture the higher density and immaturity of ER- vessel networks which result in decreased oxygen delivery and high hypoxia levels in comparison to ER+ tumours.

Prior work applying topological data analysis after skeletonisation of PAI data in a skin burn model also showed biologically relevant differences between healthy and burnt skin in rats [37]. Looking further afield, vascular descriptors such as these have also shown response to anti-angiogenic drugs and radiotherapy, with decreased SOAM (tortuosity), loops and increased vessel lengths detected in response to treatment in mouse colon carcinomas [33]. Stolz et al. also showed an increase in tortuosity and looping structures upon addition of a vascular-promoting agent. These vascular descriptors are able to capture vascular structures of varying disorder, meaning they could be widely applied to study tumour vasculature, which is often chaotic and tortuous due to excessive angiogenesis [1].

While our pipeline yields encouraging correlations to the underlying tumour vasculature, avenues of further development exist to improve the realism of our ground truth data, including advances in simulation complexity, and tissue-specific synthetic and phantom vasculatures. While our in silico PAI dataset incorporated the effects of depth-dependent SNR and Gaussian noise found in in vivo PAI mesoscopic data, further development of the optical simulations could, for example, recapitulate the raster-scanning motion of illumination optical fibres, instead of approximating a simultaneous illumination plane of single-point sources. The limited aperture of the raster-scanning ultrasound transducer could not be simulated in k-Wave as it is not yet implemented for 3D structures. In terms of vascular complexity, our string phantom represents a highly idealised vessel network, but future work could introduce more complex and interconnected vessel-like networks in order to replicate more realistic vascular topologies [38]. Our ex vivo IHC descriptors were used to confirm our in vivo tumour analyses but did not exhibit correlations across all vascular descriptors. This may be expected as the 2D IHC analysis does not fully encompass the 3D topological characteristics of the vascular network. 3D IHC, microCT or light sheet fluorescence microscopy may provide improved ex vivo validation using exogenous labelling to identify 3D vascular structures, such as tortuosity, at endpoint [39], [40]. It should also be noted that we cannot discount the effect of unconscious biases on segmentation performance when manually labelling images with and without VF to train the classifier. The segmentation accuracy of classifiers trained by multiple users could be explored in future work to formally investigate these effects.

Furthermore, the past decade has seen the rise of a multitude of blood vessel segmentation methods using convolutional neural networks and deep learning [41]. Applying deep learning to mesoscopic PAI could provide a means to overcome several equipment-related limitations such as: vessel discontinuities induced by breathing motion in vivo; vessel orientation relative to the ultrasound transducer; shadow and reflection artefacts; or underestimation of vessel diameter in the z-direction due to surface illumination. Whilst we found that skeletonisation addressed diameter underestimation and observed the influence of discontinuities on the extracted statistical and topological descriptors, they were not deeply characterised or corrected. Nonetheless, whilst deep learning may provide superior performance when fine-tuned to specific tasks, the resulting methods may lack generalisability across tissues with differing SNR and blood structures, requiring large datasets for training. In this study we chose to use software that is open-source and widely accessible to biologists in the life sciences. We believe that such a platform shows more potential to be employed widely with limited computational expertise.

In summary, we developed an in silico, phantom, in vivo, and ex vivo-validated end-to-end framework for the segmentation and quantification of vascular networks captured using mesoscopic PAI. We created in silico and string phantom ground truth PAI datasets to validate segmentation of 3D mesoscopic PA images. We then applied a range of segmentation methods to these and images of breast PDX tumours obtained in vivo, including cross-validation of in vivo images with ex vivo IHC. We have shown that learning-based segmentation, via a random forest classifier, best accounted for the artefacts present in mesoscopic PAI, providing a robust segmentation of blood volume at depth in 3D and a good approximation of vessel network structure. Despite the promise of the learning-based approach to account for depth-dependent variation in SNR, auto-thresholding with vesselness filtering more accurately represents statistical and topological characteristics in the superficial blood vessels as it better preserves vessel lengths. Therefore, when quantifying PA images, users need to consider the relative importance of each descriptor as the choice of segmentation method can directly impact the resulting analyses. We have highlighted the potential of statistical and topological analyses to provide a detailed parameterisation of tumour vascular networks, from classic statistical descriptors such as vessel diameters and lengths to more complex descriptors of network topology characterising vessel connectivity and loops. Our results further underscore the potential of photoacoustic mesoscopy as a tool to provide biological insight into vascular networks in vivo by providing life scientists with a readily deployable and cross-validated pipeline for data analysis.

4. Materials and methods

4.1. Generating ground truth vascular architectures in silico

To generate an in silico ground truth vascular network, we utilised Lindenmayer systems (L-Systems, see Figure S5) [42]. L-Systems are language-theoretic models that were originally developed to model cellular interactions but have been extended to model numerous developmental processes in biology [43]. Here, we apply L-Systems to generate realistic, 3D vascular architectures [44], [45] (referred to as L-nets) and corresponding binary image volumes. A stochastic grammar was used [44] to create a string that was evaluated using a lexical and syntactic analyser to build a graphical representation of each L-net. To transfer the L-net to a discretised binary image volume, we used a modified Bresenham’s algorithm [46] for 3D to create a vessel skeleton. Voxels within a vessel volume were then identified using the associated vessel diameter for each centreline (Figure S5).
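The transfer from a centreline segment to voxels can be illustrated with a minimal Python sketch. It assumes a standard integer 3D Bresenham line rather than the modified variant used in this work, and the function names `bresenham_3d` and `fill_vessel` are hypothetical. Coordinates and the radius are expressed in voxels.

```python
def bresenham_3d(start, end):
    """Integer voxels on the straight line from start to end (inclusive).

    Standard 3D Bresenham; the modified variant used in the paper is not
    reproduced here.
    """
    pos = list(start)
    delta = [abs(e - s) for s, e in zip(start, end)]
    step = [1 if e > s else -1 for s, e in zip(start, end)]
    drive = delta.index(max(delta))            # axis with the largest extent
    others = [axis for axis in range(3) if axis != drive]
    err = {axis: 2 * delta[axis] - delta[drive] for axis in others}

    voxels = [tuple(pos)]
    while pos[drive] != end[drive]:
        pos[drive] += step[drive]
        for axis in others:
            if err[axis] >= 0:                 # time to step along this axis
                pos[axis] += step[axis]
                err[axis] -= 2 * delta[drive]
            err[axis] += 2 * delta[axis]
        voxels.append(tuple(pos))
    return voxels


def fill_vessel(centreline, radius):
    """Voxels within `radius` (in voxels) of any centreline voxel."""
    r = int(radius)
    offsets = [(i, j, k)
               for i in range(-r, r + 1)
               for j in range(-r, r + 1)
               for k in range(-r, r + 1)
               if i * i + j * j + k * k <= radius * radius]
    return {(x + i, y + j, z + k)
            for x, y, z in centreline
            for i, j, k in offsets}


skeleton = bresenham_3d((0, 0, 0), (4, 2, 0))
vessel = fill_vessel(skeleton, radius=1)
```

Using a set for the filled volume avoids double-counting voxels where neighbouring centreline points overlap; a binary image volume would be obtained by writing these coordinates into an array.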

4.2. Photoacoustic image simulation of synthetic ground truths

To test the accuracy of the segmentation pipelines, the L-nets were then used to simulate in vivo photoacoustic vascular networks embedded in muscle tissue using the Simulation and Image Processing for Photoacoustic Imaging (SIMPA) Python package (SIMPA v0.1.1, https://github.com/CAMI-DKFZ/simpa) [47] and the k-Wave MATLAB toolbox (k-Wave v1.3, MATLAB v2020b, MathWorks, Natick, MA, USA) [48]. Planar illumination of the L-nets on the XY plane was achieved using Monte-Carlo eXtreme (MCX v2020, 1.8) simulation on the L-net computational grid of size 10.24 × 10.24 × 2.80 mm³ with 20 µm isotropic resolution. The optical forward modelling was conducted at 532 nm using the optical absorption spectrum of 50% oxygenated haemoglobin for vessels (an approximation of tumour vessel oxygenation based on previously collected photoacoustic data [35]) and of water for muscle. Next, 3D acoustic forward modelling was performed on the illuminated L-nets assuming a speed of sound of 1500 m s⁻¹ in k-Wave. The photoacoustic response of the illuminated L-nets was measured with a planar array of sensors positioned on the surface of the XY plane, with transducer elements of central frequency 50 MHz (100% bandwidth), using 1504 time steps (where a time step is 5 × 10⁻⁸ Hz⁻¹). Finally, the 3D initial PA wave-field was reconstructed using fast Fourier transform-based reconstruction [48], after adding uniform Gaussian noise to the collected wave-field.

4.3. String phantom

We used a string phantom as a ground truth structure (see Supplementary Materials). The agar phantom was prepared as described previously [49] including intralipid (I141–100 ML, Merck, Gillingham, UK) to mimic tissue-like scattering conditions. Red-coloured synthetic fibres (Smilco, USA) were embedded at three different depths defined by the frame of the phantom to provide imaging targets with a known diameter of 126 µm. The top string was positioned at 0.5 mm from the agar surface, the middle one at 1 mm, and the bottom one at 2 mm, as shown in Figure S2.

4.4. Animals

All animal procedures were conducted in accordance with project and personal licences, issued under the United Kingdom Animals (Scientific Procedures) Act, 1986 and approved locally under compliance forms CFSB1567 and CFSB1745. For in vivo vascular tumour models, cryopreserved breast PDX tumour fragments in freezing media composed of heat-inactivated foetal bovine serum (10500064, Gibco™, Fisher Scientific, Göteborg, Sweden) and 10% dimethyl sulfoxide (D2650, Merck) were defrosted at 37 °C, washed with Dulbecco’s Modified Eagle Medium (41965039, Gibco) and mixed with matrigel (354262, Corning®, NY, USA) before surgical implantation. One estrogen receptor negative (ER-, n = 6) PDX model and one estrogen receptor positive (ER+, n = 8) PDX model were implanted subcutaneously into the flank of 6–9 week-old NOD scid gamma (NSG) mice (#005557, Jax Stock, Charles River, UK) as per standard protocols [50]. Once tumours had reached ~1 cm mean diameter, tumours were imaged and mice were sacrificed afterwards, with tumours collected in formalin for IHC.

4.5. Photoacoustic imaging

Mesoscopic PAI was performed using the raster-scan optoacoustic mesoscopy (RSOM) Explorer P50 (iThera Medical GmbH, Munich, Germany). The system uses a 532 nm laser for excitation. Two optical fibre bundles are arranged either side of a transducer, which provide an elliptical illumination beam of approximately 4 mm × 2 mm in size. The transducer and lasers collectively raster-scan across the field-of-view. A high-frequency single-element transducer with a centre frequency of 50 MHz (>90% bandwidth) detects ultrasound. The system achieves a lateral resolution of 40 µm, an axial resolution of 10 µm and a penetration depth of up to ~3 mm [51].

For image acquisition of both phantom and mice, degassed commercial ultrasound gel (AquaSonics Parker Lab, Fairfield, NJ, USA) was applied to the surface of the imaging target for coupling to the scan interface. Images were acquired over a field of view of 12 × 12 mm² (step size: 20 µm) at either 100% (phantom) or 85% (mice) laser energy and a laser pulse repetition rate of 2 kHz (phantom) or 1 kHz (mice). Image acquisition took approximately 7 min. Animals were anaesthetised using 3–5% isoflurane in 50% oxygen and 50% medical air. Mice were shaved and depilatory cream applied to remove fur that could generate image artefacts; single mice were placed into the PAI system, on a heat-pad maintained at 37 °C. Respiratory rate was maintained between 70 and 80 bpm using isoflurane (~1–2% concentration) throughout image acquisition.

4.6. Segmentation and extraction of structural and topological vascular descriptors

All acquired data were subjected to pre-processing prior to segmentation, skeletonisation and structural analyses of the vascular network, with an optional step of vesselness filtering also tested (Fig. 1). Prior to segmentation, data were filtered in the Fourier domain in the XY plane to remove reflection lines, before being reconstructed using a backprojection algorithm in viewRSOM software (v2.3.5.2 iThera Medical GmbH), with motion correction for in vivo images, at a voxel size of 20 × 20 × 4 µm³ (X,Y,Z). To reduce background noise and artefacts from the data acquisition process, reconstructed images were subjected to a high-pass filter, to remove echo noise, followed by a Wiener filter in MATLAB (v2020b, MathWorks, Natick, MA, USA) to remove stochastic noise. Then, a built-in slice-wise background correction [52] was performed in Fiji [53] to achieve a homogeneous background intensity (see exemplars of each pre-processing step in Figure S6).

4.7. Image segmentation using auto-thresholding or a random forest classifier

Using two common tools adopted in the life sciences, we tested both a rule-based moment preserving thresholding method (included in Fiji v2.1.0) and a learning-based segmentation method based on random forest classifiers (with ilastik v1.3.3 [28]). These popular packages were chosen to enable widespread application of our findings. Moment preserving thresholding, referred to as auto-thresholding (AT) for the remainder of this work, computes the intensity moments of an image and segments the image while preserving these moments [54]. Training of the random forest (RF) backend was performed on 3D voxel features in labelled regions, including intensity features, as with the AT method, combined with edge filters, to account for the intensity gradient between vessels and background, and texture descriptors, to discern artefacts in the background from the brighter and more uniform vessel features, each evaluated at different scales (up to a sigma of 5.0).
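The idea behind moment-preserving thresholding can be sketched in a few lines of Python. This is an illustrative two-level version that works on raw intensity values; it is not the Fiji implementation, which operates on an image histogram, and the function name `moment_preserving_threshold` is hypothetical. The threshold is chosen so that replacing each voxel by one of two representative levels leaves the first three intensity moments unchanged.

```python
import math


def moment_preserving_threshold(values):
    """Two-level moment-preserving threshold for a flat list of intensities.

    Illustrative sketch only: works on raw values rather than the binned
    histogram an image-analysis package would typically use.
    Returns (threshold, p0), where p0 is the background fraction.
    """
    n = len(values)
    # First three raw moments of the intensity distribution (m0 = 1).
    m1 = sum(values) / n
    m2 = sum(v ** 2 for v in values) / n
    m3 = sum(v ** 3 for v in values) / n

    cd = m2 - m1 * m1                       # variance of the intensities
    if cd <= 0:
        raise ValueError("image is constant; no threshold exists")

    # Representative levels z0 < z1 are the roots of z^2 + c1*z + c0 = 0.
    c0 = (m1 * m3 - m2 * m2) / cd
    c1 = (m1 * m2 - m3) / cd
    disc = math.sqrt(max(c1 * c1 - 4.0 * c0, 0.0))
    z0 = 0.5 * (-c1 - disc)
    z1 = 0.5 * (-c1 + disc)
    if z1 == z0:
        raise ValueError("degenerate moments")

    # Fraction of voxels assigned to the darker level so the mean is preserved.
    p0 = (z1 - m1) / (z1 - z0)

    # Threshold is the intensity at the p0-quantile; brighter voxels are vessel.
    ordered = sorted(values)
    k = min(max(round(p0 * n), 1), n)
    return ordered[k - 1], p0


# Toy image: 70 background voxels and 30 vessel voxels.
voxels = [0.0] * 70 + [1.0] * 30
threshold, background_fraction = moment_preserving_threshold(voxels)
mask = [v > threshold for v in voxels]
```

Because the rule depends only on global intensity statistics, it has no notion of depth or local contrast, which is consistent with the difficulty AT has with low-SNR vessels described in the Discussion.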

A key consideration in the machine learning-based segmentation is the preparation of training and testing data (Table S2). For the in silico ground truth L-net data, all voxel labels are known. All vessel labels were used for training; however, to minimise computational expense, only partial background labels were supplied, by labelling the 10 voxel radius surrounding all vessels as well as 3 planes parallel to the Z-axis (edges and middle) as background (Figure S7A,B). For the phantom data, manual segmentation of the strings from background was performed to provide ground truth. Strings were segmented in all slices on which they appeared and background was segmented tightly around the string (Figure S7C). For the in vivo tumour data, manual segmentation of vessels was made by a junior user (TLL) supervised by an experienced user (ELB), including images of varying signal-to-noise ratio (SNR) to increase the robustness of the algorithm for application in a range of unseen data. Up to 10 XY slices per image stack in the training dataset were segmented with pencil size 1 at different depths to account for depth-dependent SNR differences (Figure S7D).

Between pre-processing and segmentation, feature enhancement was tested as a variable in our segmentation pipeline. In Fiji, we adapted a modified version of Sato filtering (α = 0.25) [55] to calculate vesselness from Hessian matrix eigenvalues [56] across multiple scales. Five scales (20, 40, 60, 80, and 100 µm) in a linear Gaussian normalised scale space were used, from which the maximal response was taken to produce the final vesselness-filtered images [55].

Finally, all segmented images (either from Fiji or ilastik) were passed through a built-in 3D median filter in Fiji, to remove impulse noise (Figure S8). To summarise the pipeline (Fig. 1), the methods under test for all datasets were:

1. Auto-thresholding using a moment preserving method (AT);
2. Auto-thresholding using a moment preserving method with vesselness filtering pre-segmentation (AT+VF);
3. Random forest classifier (RF);
4. Random forest classifier with vesselness filtering pre-segmentation (RF+VF).

Computation times are summarised in Table S3.

4.8. Extracting tumour ROIs using a 3D CNN

To analyse the tumour data in isolation from the surrounding tissue required delineation of tumour regions of interest (ROIs). To achieve this, we trained a 3D convolutional neural network (CNN) to fully automate extraction of tumour ROIs from PAI volumes. The 3D CNN is based on the U-Net architecture [57] extended for volumetric delineation [58]. Details on the CNN architecture and training are provided in the Supplementary Materials and Figures S9–S10.

4.9. Network structure and topological data analysis

Topological data analysis (TDA) of the vascular networks was performed using previously reported software that performs TDA and structural analyses on vasculature [36], [33]. Prior to these analyses, segmented image volumes were skeletonised into 3D axisymmetric tubes using the open-source package Russ-learn [59], [60]. Direct skeletonisation was performed using a trained convolutional-recurrent neural network [59] and post-processed using a homotopic thinning algorithm [61], followed by a pruning phase to remove artificial branches.

Our vascular descriptors comprised a set of statistical descriptors: vessel diameters and lengths, vessel tortuosity (sum-of-angles measure, SOAM) and curvature (chord-to-length ratio, CLR). In addition, the following descriptors were used to define network topology: the number of connected components (Betti number β0) and looping structures (1D holes, Betti number β1). Full descriptions of the vascular descriptors are provided in Table S1 while outputs are shown in Tables S4–S7.
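To make these descriptors concrete, the following Python sketch computes simplified versions of them. It is not the analysis software used in this work: the function names are hypothetical, SOAM is reduced here to the sum of angles between successive segments divided by path length (the exact definition is given in Table S1), and the Betti numbers are computed for a skeleton treated as a graph, where β1 = E − V + β0. Consecutive centreline points are assumed to be distinct.

```python
import math


def path_length(points):
    """Total length of a vessel centreline given as a list of 3D points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def chord_to_length_ratio(points):
    """CLR: straight-line end-to-end distance over path length (1 = straight)."""
    return math.dist(points[0], points[-1]) / path_length(points)


def sum_of_angles_measure(points):
    """Simplified SOAM: summed turning angle (radians) per unit path length."""
    total = 0.0
    for a, b, c in zip(points, points[1:], points[2:]):
        u = [q - p for p, q in zip(a, b)]
        v = [q - p for p, q in zip(b, c)]
        cos = sum(x * y for x, y in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))
        total += math.acos(max(-1.0, min(1.0, cos)))  # clamp rounding error
    return total / path_length(points)


def betti_numbers(n_nodes, edges):
    """(beta0, beta1) of a graph: connected components and independent loops."""
    parent = list(range(n_nodes))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]   # path halving
            i = parent[i]
        return i

    for a, b in edges:
        parent[find(a)] = find(b)           # merge the two components
    beta0 = len({find(i) for i in range(n_nodes)})
    beta1 = len(edges) - n_nodes + beta0    # Euler characteristic of a graph
    return beta0, beta1


# A vessel with one right-angle bend, and a network made of a triangular
# loop (nodes 0-2) plus a separate two-node segment (nodes 3-4).
bent = [(0, 0, 0), (1, 0, 0), (1, 1, 0)]
clr = chord_to_length_ratio(bent)
soam = sum_of_angles_measure(bent)
b0, b1 = betti_numbers(5, [(0, 1), (1, 2), (2, 0), (3, 4)])
```

In this toy network β0 = 2 and β1 = 1, which illustrates why segmentation-induced breaks in a vessel raise the connected component count while removing loops.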

4.10. Immunohistochemistry

For ex vivo validation, formalin-fixed paraffin-embedded (FFPE) tumour tissues were sectioned. Following deparaffinising and rehydration, IHC was performed for the following antibodies: CD31 (anti-mouse 77699, Cell signalling, London, UK), α-smooth muscle actin (ASMA) (anti-mouse ab5694, abcam, Cambridge, UK), carbonic anhydrase-IX (CAIX) (anti-human AB1001, Bioscience Slovakia, Bratislava, Slovakia) at 1:100, 1:500 and 1:1000, respectively, using a BOND automated stainer with a bond polymer refine detection kit (Leica Biosystems, Milton Keynes, UK) and 3,3′-diaminobenzidine as a substrate. Stained FFPE sections were scanned at 20x magnification using an Aperio ScanScope (Leica Biosystems, Milton Keynes, UK) and analysed using ImageScope software (Leica Biosystems, Milton Keynes, UK) or HALO Software (v2.2.1870, Indica Labs, Albuquerque, NM, USA). ROIs were drawn over the whole viable tumour area and built-in algorithms customised to analyse the following: CD31 positive area (µm²) normalised to the ROI area (µm²) (referred to as CD31 vessel area), area of CD31 positive pixels (µm²) colocalised on adjacent serial section with ASMA positive pixels/CD31 positive area (µm²) (reported as ASMA vessel coverage (%)) and CAIX positive pixel count per total ROI pixel count (reported as CAIX (%)).

4.11. Statistical analysis

Statistical analyses were conducted using Prism (v9, GraphPad Software, San Diego, CA, USA) and R (v4.0.1 [62], R Foundation, Vienna, Austria). We used the mean square error and R-squared statistics to quantify the accuracy and strength of the relationship between the segmented networks and the ground truth L-nets. For each outcome of interest, we predicted the ground truth (on a scale compatible with the normality assumption according to model checks) from each method's estimates using a linear model. As model performance statistics are typically overestimated when assessing the model fit on the same data used to estimate the model parameters, we used bootstrapping (R = 500) to correct for the optimism bias and obtain unbiased estimates [63]. Bland-Altman plots were produced for each paired comparison of segmented volume to the ground truth volume in L-nets and associated bias and limits of agreement (LOA) are reported. For L-nets, F1 scores were calculated [64]. PAI quality pre-segmentation was quantified by measuring SNR, defined as the mean of signal over the standard deviation of the background signal. Comparisons of string volume, as well as SNR, were completed using one-way ANOVA with Tukey multiplicity correction.
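Three of the quantities above have simple closed forms, sketched here in Python for illustration. These are not the Prism or R routines used in this work. The function names are hypothetical, the sample standard deviation is assumed for both SNR and the limits of agreement, and the conventional bias ± 1.96 SD is assumed for the LOA.

```python
from statistics import mean, stdev


def snr(signal, background):
    """Mean of the signal over the standard deviation of the background."""
    return mean(signal) / stdev(background)   # sample SD is an assumption


def f1_score(predicted, truth):
    """F1 score between two binary voxel labellings of equal length."""
    tp = sum(1 for p, t in zip(predicted, truth) if p and t)
    fp = sum(1 for p, t in zip(predicted, truth) if p and not t)
    fn = sum(1 for p, t in zip(predicted, truth) if not p and t)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0   # 0 when no vessel voxels at all


def bland_altman(measured, reference):
    """Bias and 95% limits of agreement for paired measurements."""
    diffs = [m - r for m, r in zip(measured, reference)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)              # conventional 95% limits
    return bias, (bias - spread, bias + spread)


# Toy example: segmented versus ground truth blood volumes (arbitrary units).
bias, (lower, upper) = bland_altman([1.0, 2.0, 4.0], [1.0, 1.0, 2.0])
overlap = f1_score([1, 1, 0, 0], [1, 0, 1, 0])
quality = snr([10.0, 10.0], [1.0, 2.0, 3.0])
```

A positive bias indicates that the segmentation overestimates volume relative to the ground truth, while the width of the limits of agreement reflects how consistently it does so.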

For each outcome of interest, in vivo data were analysed as follows. A linear mixed effect model was fitted on a response scale (log, square root or cube root) compatible with the normality assumption according to model checks, with the segmentation methods as a 4-level fixed predictor and animal as random effect, to take the within-mouse dependence into account. Noting that the residual variance was sometimes different for each segmentation group, we also fitted a heteroscedastic linear mixed effect model allowing the variance to be a function of the segmentation group. The results of the heteroscedastic model were preferred to results of the homoscedastic model when the likelihood ratio test comparing both models led to a p-value < 0.05. Two multiplicity corrections were performed to achieve a 5% family-wise error rate for each dataset. For each outcome, a parametric multiplicity correction on the segmentation method parameters was first used [65]. A conservative Bonferroni p-value adjustment was then added to it to account for the number of outcomes in the entire in vivo dataset. The following pairwise comparisons were considered: AT vs. AT+VF, AT vs. RF, RF vs. RF+VF and AT+VF vs. RF+VF. Comparisons of our vascular descriptors between ER- and ER+ tumours were completed with an unpaired Student’s t-test. All p-values < 0.05 after multiplicity correction were considered statistically significant.

CRediT authorship contribution statement

Paul W. Sweeney, Emma L. Brown, Thierry L. Lefebvre, Sarah E. Bohndiek: Conceptualization. Paul W. Sweeney, Emma L. Brown, Lina Hacker, Thierry L. Lefebvre, Ziqiang Huang, Sarah E. Bohndiek: Methodology. Paul W. Sweeney, Bernadette J. Stolz, Janek Gröhl, Thierry L. Lefebvre, Ziqiang Huang: Software. Paul W. Sweeney, Emma L. Brown, Lina Hacker, Thierry L. Lefebvre: Validation. Paul W. Sweeney, Emma L. Brown, Thierry L. Lefebvre: Formal analysis. Paul W. Sweeney, Emma L. Brown, Lina Hacker, Thierry L. Lefebvre: Investigation. Heather A. Harrington, Helen M. Byrne: Resources. Paul W. Sweeney, Janek Gröhl, Thierry L. Lefebvre: Data curation. Paul W. Sweeney, Emma L. Brown, Lina Hacker, Dominique-Laurent Couturier, Thierry L. Lefebvre, Sarah E. Bohndiek: Writing – original draft. Paul W. Sweeney, Emma L. Brown, Janek Gröhl, Lina Hacker, Bernadette J. Stolz, Heather A. Harrington, Helen M. Byrne, Thierry L. Lefebvre, Sarah E. Bohndiek: Writing – review & editing. Paul W. Sweeney, Emma L. Brown, Thierry L. Lefebvre: Visualisation. Sarah E. Bohndiek: Supervision. Paul W. Sweeney, Sarah E. Bohndiek: Project administration. Paul W. Sweeney, Thierry L. Lefebvre, Sarah E. Bohndiek: Funding acquisition.

Declaration of Competing Interest

The authors declare the following financial interests/personal relationships which may be considered as potential competing interests: Sarah Bohndiek reports a relationship with EPFL Center for Biomedical Imaging that includes: speaking and lecture fees. Sarah Bohndiek reports a relationship with PreXion Inc that includes: funding grants. Sarah Bohndiek reports a relationship with iThera Medical GmbH that includes: non-financial support. The other authors have no conflict of interest related to the present manuscript to disclose.

Acknowledgements

ELM, PWS, TLL, JG, LH, DLC, and SEB acknowledge the support from Cancer Research UK under grant numbers C14303/A17197, C9545/A29580, C47594/A16267, C197/A16465, C47594/A29448, and Cancer Research UK RadNet Cambridge under the grant number C17918/A28870. PWS acknowledges the support of the Wellcome Trust and University of Cambridge through an Interdisciplinary Fellowship under grant number 204845/Z/16/Z. TLL is supported by the Cambridge Trust. LH is funded from NPL’s MedAccel programme financed by the Department of Business, Energy and Industrial Strategy’s Industrial Strategy Challenge Fund. BJS, HMB, and HAH are members of the Centre for Topological Data Analysis, funded by the EPSRC grant (EP/R018472/1). We thank the Cancer Research UK Cambridge Institute Biological Resources Unit, Imaging Core, Histopathological Core, Preclinical Genome Editing Core, Light Microscopy and Research Instrumentation and Cell Services for their support in conducting this research. Particular thanks go to Cara Brodie in the Histopathology Core for analyses support. ELB and SEB would like to thank Prof. Carlos Caldas, Dr Alejandra Bruna and Dr Wendy Greenwood for providing PDX tissue from their biobank at the CRUK Cambridge Institute and for assisting in the establishment of a sub-biobank that contributed the in vivo data presented in this manuscript.

Code Availability

Code to generate synthetic vascular trees (LNets) is available on GitHub (https://github.com/psweens/V-System). In silico photoacoustic simulations were performed using the SIMPA toolkit (https://github.com/CAMI-DKFZ/simpa). Both the trained 3D CNN to extract tumour ROIs from RSOM images (https://github.com/psweens/Predict-RSOM-ROI) and vascular TDA package are available on GitHub (https://github.com/psweens/Vascular-TDA).

Biography

Sarah Bohndiek completed her PhD in Radiation Physics at University College London in 2008 and then worked in both the UK (at Cambridge) and the USA (at Stanford) as a postdoctoral fellow in molecular imaging. Since 2013, Sarah has been a Group Leader at the University of Cambridge, where she is jointly appointed in the Department of Physics and the Cancer Research UK Cambridge Institute. She was appointed as Full Professor of Biomedical Physics in 2020. Sarah was recently awarded the CRUK Future Leaders in Cancer Research Prize and SPIE Early Career Achievement Award in recognition of her innovation in biomedical optics.

Footnotes

Supplementary data associated with this article can be found in the online version at doi:10.1016/j.pacs.2022.100357.

Appendix A. Supplementary material


Data Availability

Exemplar datasets for the in silico, phantom, and in vivo data can be found at https://doi.org/10.17863/CAM.78208. The authors declare that all data supporting the findings of this study is available upon request.

References

- 1.Brown E., Brunker J., Bohndiek S.E. Photoacoustic imaging as a tool to probe the tumour microenvironment. Dis. Models Amp;Amp; Mech. 2019;12(7):dmm039636. doi: 10.1242/dmm.039636. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Corliss B.A., Mathews C., Doty R., Rohde G., Peirce S.M. Methods to label, image, and analyze the complex structural architectures of microvascular networks. Microcirc. (N. Y., N. Y.: 1994) 2019;26(5) doi: 10.1111/micc.12520. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Hanahan D., Weinberg R. a. Hallmarks of cancer: the next generation. Cell. 2011;144(5):646–674. doi: 10.1016/j.cell.2011.02.013. [DOI] [PubMed] [Google Scholar]

- 4.Krishna Priya S., Nagare R.P., Sneha V.S., Sidhanth C., Bindhya S., Manasa P., Ganesan T.S. Tumour angiogenesis—Origin of blood vessels. Int. J. Cancer. 2016;139(4):729–735. doi: 10.1002/ijc.30067. [DOI] [PubMed] [Google Scholar]

- 5.Nagy J.A., Dvorak H.F. Heterogeneity of the tumor vasculature: the need for new tumor blood vessel type-specific targets. Clin. Exp. Metastas. 2012;29(7):657–662. doi: 10.1007/s10585-012-9500-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Michiels C., Tellier C., Feron O. Cycling hypoxia: A key feature of the tumor microenvironment. Biochim. Et. Biophys. Acta (BBA) - Rev. Cancer. 2016;1866(1):76–86. doi: 10.1016/j.bbcan.2016.06.004. [DOI] [PubMed] [Google Scholar]

- 7.Trédan O., Galmarini C.M., Patel K., Tannock I.F. Drug resistance and the solid tumor microenvironment. JNCI: J. Natl. Cancer Inst. 2007;99(19):1441–1454. doi: 10.1093/jnci/djm135. [DOI] [PubMed] [Google Scholar]

- 8.Jährling N., Becker K., Dodt H.-U. 3D-reconstruction of blood vessels by ultramicroscopy. Organogenesis. 2009;5(4):227–230. doi: 10.4161/org.5.4.10403. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Kelch I.D., Bogle G., Sands G.B., Phillips A.R.J., LeGrice I.J., Rod Dunbar P. Organ-wide 3D-imaging and topological analysis of the continuous microvascular network in a murine lymph node. Sci. Rep. 2015;5(1):16534. doi: 10.1038/srep16534. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Keller, P.J., & Dodt, H.U., 2012, Light sheet microscopy of living or cleared specimens. In Current Opinion in Neurobiology (Vol. 22, Issue 1, pp. 138–143). Curr Opin Neurobiol. 〈https://doi.org/10.1016/j.conb.2011.08.003〉. [DOI] [PubMed]

- 11.Ntziachristos V. Going deeper than microscopy: the optical imaging frontier in biology. Nat. Methods. 2010;7(8):603–614. doi: 10.1038/nmeth.1483. [DOI] [PubMed] [Google Scholar]

- 12.Beard P. Biomedical photoacoustic imaging. Interface Focus. 2011;1(4):602–631. doi: 10.1098/rsfs.2011.0028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Ntziachristos V., Ripoll J., Wang L.V., Weissleder R. Looking and listening to light: the evolution of whole-body photonic imaging. Nat. Biotechnol. 2005;23:313. doi: 10.1038/nbt1074. [DOI] [PubMed] [Google Scholar]

- 14.Wang L.V., Yao J. A practical guide to photoacoustic tomography in the life sciences. Nat. Methods. 2016;13(8):627–638. doi: 10.1038/nmeth.3925. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Omar M., Aguirre J., Ntziachristos V. Optoacoustic mesoscopy for biomedicine. Nat. Biomed. Eng. 2019;3(5):354–370. doi: 10.1038/s41551-019-0377-4. [DOI] [PubMed] [Google Scholar]

- 16.Omar M., Soliman D., Gateau J., Ntziachristos V. Ultrawideband reflection-mode optoacoustic mesoscopy. Opt. Lett. 2014;39(13):3911–3914. doi: 10.1364/OL.39.003911. [DOI] [PubMed] [Google Scholar]

- 17.Haedicke K., Agemy L., Omar M., Berezhnoi A., Roberts S., Longo-Machado C., Skubal M., Nagar K., Hsu H.-T., Kim K., Reiner T., Coleman J., Ntziachristos V., Scherz A., Grimm J. High-resolution optoacoustic imaging of tissue responses to vascular-targeted therapies. Nat. Biomed. Eng. 2020;4(3):286–297. doi: 10.1038/s41551-020-0527-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Omar M., Schwarz M., Soliman D., Symvoulidis P., Ntziachristos V. Pushing the Optical Imaging Limits of Cancer with Multi-Frequency-Band Raster-Scan Optoacoustic Mesoscopy (RSOM). Neoplasia. 2015;17(2):208–214. doi: 10.1016/j.neo.2014.12.010.

- 19.Orlova A., Sirotkina M., Smolina E., Elagin V., Kovalchuk A., Turchin I., Subochev P. Raster-scan optoacoustic angiography of blood vessel development in colon cancer models. Photoacoustics. 2019;13:25–32. doi: 10.1016/j.pacs.2018.11.005.

- 20.Imai T., Muz B., Yeh C.-H., Yao J., Zhang R., Azab A.K., Wang L. Direct measurement of hypoxia in a xenograft multiple myeloma model by optical-resolution photoacoustic microscopy. Cancer Biol. Ther. 2017;18(2):101–105. doi: 10.1080/15384047.2016.1276137.

- 21.Rebling J., Greenwald M.B.-Y., Wietecha M., Werner S., Razansky D. Long-term imaging of wound angiogenesis with large scale optoacoustic microscopy. Adv. Sci. 2021;8(13):2004226. doi: 10.1002/ADVS.202004226.

- 22.Lao Y., Xing D., Yang S., Xiang L. Noninvasive photoacoustic imaging of the developing vasculature during early tumor growth. Phys. Med. Biol. 2008;53(15):4203. doi: 10.1088/0031-9155/53/15/013.

- 23.Soetikno B., Hu S., Gonzales E., Zhong Q., Maslov K., Lee J.-M., Wang L.V. Vessel segmentation analysis of ischemic stroke images acquired with photoacoustic microscopy. Proc. SPIE. 2012;8223.

- 24.Krig S. Computer Vision Metrics. Apress; 2014. Ground truth data, content, metrics, and analysis; pp. 283–311.

- 25.Zhao F., Chen Y., Hou Y., He X. Segmentation of blood vessels using rule-based and machine-learning-based methods: a review. Multimed. Syst. 2019;25(2):109–118. doi: 10.1007/s00530-017-0580-7.

- 26.Raumonen P., Tarvainen T. Segmentation of vessel structures from photoacoustic images with reliability assessment. Biomed. Opt. Express. 2018;9(7):2887–2904. doi: 10.1364/BOE.9.002887.

- 27.Moccia S., De Momi E., El Hadji S., Mattos L.S. Blood vessel segmentation algorithms – Review of methods, datasets and evaluation metrics. Comput. Methods Prog. Biomed. 2018;158:71–91. doi: 10.1016/j.cmpb.2018.02.001.

- 28.Berg S., Kutra D., Kroeger T., Straehle C.N., Kausler B.X., Haubold C., Schiegg M., Ales J., Beier T., Rudy M., Eren K., Cervantes J.I., Xu B., Beuttenmueller F., Wolny A., Zhang C., Koethe U., Hamprecht F.A., Kreshuk A. ilastik: interactive machine learning for (bio)image analysis. Nat. Methods. 2019;16(12):1226–1232. doi: 10.1038/s41592-019-0582-9.

- 29.Sommer C., Straehle C., Kothe U., Hamprecht F.A. Ilastik: Interactive learning and segmentation toolkit. Eighth IEEE International Symposium on Biomedical Imaging. 2011:230–233. doi: 10.1109/ISBI.2011.5872394.

- 30.Oruganti T., Laufer J.G., Treeby B.E. Vessel filtering of photoacoustic images. Proc. SPIE. 2013:8581. doi: 10.1117/12.2005988.

- 31.Ul Haq I., Nagaoka R., Makino T., Tabata T., Saijo Y. 3D Gabor wavelet based vessel filtering of photoacoustic images. Annual International Conference of the IEEE Engineering in Medicine and Biology Society. 2016;2016. doi: 10.1109/EMBC.2016.7591576.

- 32.Zhao H., Liu C., Li K., Chen N., Zhang K., Wang L., Lin R., Gong X., Song L., Liu Z. Multiscale vascular enhancement filter applied to in vivo morphologic and functional photoacoustic imaging of rat ocular vasculature. IEEE Photonics J. 2019;11(6) doi: 10.1109/JPHOT.2019.2948955.

- 33.Stolz B.J., Kaeppler J., Markelc B., Mech F., Lipsmeier F., Muschel R.J., Byrne H.M., Harrington H.A. Multiscale topology characterises dynamic tumour vascular networks. arXiv. 2020 doi: 10.48550/arXiv.2008.08667.

- 34.Barlow K.D., Sanders A.M., Soker S., Ergun S., Metheny-Barlow L.J. Pericytes on the tumor vasculature: jekyll or hyde? Cancer Microenviron.: Off. J. Int. Cancer Microenviron. Soc. 2013;6(1):1–17. doi: 10.1007/s12307-012-0102-2.

- 35.Quiros-Gonzalez I., Tomaszewski M.R., Aitken S.J., Ansel-Bollepalli L., McDuffus L.-A., Gill M., Hacker L., Brunker J., Bohndiek S.E. Optoacoustics delineates murine breast cancer models displaying angiogenesis and vascular mimicry. Br. J. Cancer. 2018;118(8):1098–1106. doi: 10.1038/s41416-018-0033-x.

- 36.Chung M.K., Lee H., DiChristofano A., Ombao H., Solo V. Exact topological inference of the resting-state brain networks in twins. Netw. Neurosci. 2019;3(3):674–694. doi: 10.1162/NETN_A_00091.

- 37.Meiburger K.M., Nam S.Y., Chung E., Suggs L.J., Emelianov S.Y., Molinari F. Skeletonization algorithm-based blood vessel quantification using in vivo 3D photoacoustic imaging. Phys. Med. Biol. 2016;61(22):7994–8009. doi: 10.1088/0031-9155/61/22/7994.

- 38.Dantuma M., van Dommelen R., Manohar S. Semi-anthropomorphic photoacoustic breast phantom. Biomed. Opt. Express. 2019;10(11):5921–5939. doi: 10.1364/BOE.10.005921.

- 39.Epah J., Pálfi K., Dienst F.L., Malacarne P.F., Bremer R., Salamon M., Kumar S., Jo H., Schürmann C., Brandes R.P. 3D imaging and quantitative analysis of vascular networks: A comparison of ultramicroscopy and micro-computed tomography. Theranostics. 2018;8(8):2117–2133. doi: 10.7150/THNO.22610.

- 40.Hlushchuk R., Haberthür D., Djonov V. Ex vivo microangioCT: Advances in microvascular imaging. Vasc. Pharmacol. 2019;112:2–7. doi: 10.1016/J.VPH.2018.09.003.

- 41.Jia D., Zhuang X. Learning-based algorithms for vessel tracking: A review. Comput. Med. Imaging Graph. 2021;89 doi: 10.1016/j.compmedimag.2020.101840.

- 42.Lindenmayer A. Mathematical models for cellular interactions in development I. Filaments with one-sided inputs. J. Theor. Biol. 1968;18(3):280–299. doi: 10.1016/0022-5193(68)90079-9.

- 43.Rozenberg G., Salomaa Arto, editors. Lindenmayer Systems: Impacts on Theoretical Computer Science, Computer Graphics, and Development Biology. Springer-Verlag Berlin Heidelberg; 1992.

- 44.Galarreta-Valverde M.A. Instituto de Matemática e Estatística; 2012. Geração de redes vasculares sintéticas tridimensionais utilizando sistemas de Lindenmayer estocásticos e parametrizados.

- 45.Galarreta-Valverde M.A., Macedo M.M.G., Mekkaoui C., Jackowski M.P. Three-dimensional synthetic blood vessel generation using stochastic L-systems. Med. Imaging 2013: Image Process. 2013;86691I doi: 10.1117/12.2007532.

- 46.Bresenham J.E. Algorithm for computer control of a digital plotter. IBM Syst. J. 1965;4(1) doi: 10.1147/sj.41.0025.

- 47.Gröhl J., Dreher K.K., Schellenberg M., Rix T., Holzwarth N., Vieten P., Ayala L., Bohndiek S.E., Seitel A., Maier-Hein L. SIMPA: an open-source toolkit for simulation and image processing for photonics and acoustics. J. Biomed. Opt. 2022;27(8):1–21. doi: 10.1117/1.JBO.27.8.083010.

- 48.Treeby B.E., Cox B.T. k-Wave: MATLAB toolbox for the simulation and reconstruction of photoacoustic wave fields. J. Biomed. Opt. 2010;15(2):1–12. doi: 10.1117/1.3360308.

- 49.Joseph J., Tomaszewski M.R., Quiros-Gonzalez I., Weber J., Brunker J., Bohndiek S.E. Evaluation of Precision in Optoacoustic Tomography for Preclinical Imaging in Living Subjects. J. Nucl. Med. 2017;58(5):807–814. doi: 10.2967/jnumed.116.182311. 〈http://jnm.snmjournals.org/content/58/5/807.abstract〉

- 50.Bruna A., Rueda O.M., Greenwood W., Batra A.S., Callari M., Batra R.N., Pogrebniak K., Sandoval J., Cassidy J.W., Tufegdzic-Vidakovic A., Sammut S.-J., Jones L., Provenzano E., Baird R., Eirew P., Hadfield J., Eldridge M., McLaren-Douglas A., Barthorpe A., Caldas C. A biobank of breast cancer explants with preserved intra-tumor heterogeneity to screen anticancer compounds. Cell. 2016;167(1):260–274.e22. doi: 10.1016/j.cell.2016.08.041.

- 51.Omar M., Gateau J., Ntziachristos V. Raster-scan optoacoustic mesoscopy in the 25–125 MHz range. Opt. Lett. 2013;38(14):2472–2474. doi: 10.1364/OL.38.002472.

- 52.Sternberg S.R. Biomedical image processing. Computer. 1983;16(1):22–34. doi: 10.1109/MC.1983.1654163.

- 53.Schindelin J., Arganda-Carreras I., Frise E., Kaynig V., Longair M., Pietzsch T., Preibisch S., Rueden C., Saalfeld S., Schmid B., Tinevez J.-Y., White D.J., Hartenstein V., Eliceiri K., Tomancak P., Cardona A. Fiji: an open-source platform for biological-image analysis. Nat. Methods. 2012;9(7):676–682. doi: 10.1038/nmeth.2019.

- 54.Tsai W.-H. Moment-preserving thresolding: A new approach. Comput. Vis., Graph., Image Process. 1985;29(3):377–393. doi: 10.1016/0734-189X(85)90133-1.

- 55.Sato Y., Nakajima S., Shiraga N., Atsumi H., Yoshida S., Koller T., Gerig G., Kikinis R. Three-dimensional multi-scale line filter for segmentation and visualization of curvilinear structures in medical images. Med. Image Anal. 1998;2(2):143–168. doi: 10.1016/S1361-8415(98)80009-1.

- 56.Frangi A.F., Niessen W.J., Vincken K.L., Viergever M.A. In: Medical Image Computing and Computer-Assisted Intervention – MICCAI’98. Wells W.M., Colchester A., Delp S., editors. Springer Berlin Heidelberg; 1998. Multiscale vessel enhancement filtering; pp. 130–137.

- 57.Ronneberger O., Fischer P., Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Lect. Notes Comput. Sci. 2015;9351:234–241. doi: 10.1007/978-3-319-24574-4.

- 58.Çiçek Ö., Abdulkadir A., Lienkamp S.S., Brox T., Ronneberger O. 3D U-net: Learning dense volumetric segmentation from sparse annotation. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016. Lect. Notes Comput. Sci. 2016;9901. doi: 10.1007/978-3-319-46723-8_49.

- 59.Bates R. University of Oxford; 2017. Learning to Extract Tumour Vasculature: Techniques in Machine Learning for Medical Image Analysis.

- 60.Bates R. GitLab; 2018. Russ-learn: set of tools for application and training of deep learning methods for image segmentation and vessel analysis.

- 61.Pudney C. Distance-Ordered Homotopic Thinning: A Skeletonization Algorithm for 3D Digital Images. Comput. Vis. Image Underst. 1998;72(3):404–413. doi: 10.1006/cviu.1998.0680.

- 62.R Core Team. R Foundation for Statistical Computing; Vienna, Austria: 2021. R: A Language and Environment for Statistical Computing. https://www.r-project.org/

- 63.Harrell F.E. Springer International Publishing; 2016. Regression Modeling Strategies.

- 64.Dice L.R. Measures of the amount of ecologic association between species. Ecology. 1945;26(3):297–302. doi: 10.2307/1932409.

- 65.Bretz F., Hothorn T., Westfall P. Chapman and Hall/CRC; 2010. Multiple Comparisons Using R.

Supplementary Materials

Supplementary material related to this article can be found online

Data Availability Statement

Exemplar datasets for the in silico, phantom, and in vivo data can be found at https://doi.org/10.17863/CAM.78208. The authors declare that all data supporting the findings of this study are available upon request.