Abstract

Background

Quality indicators are used to quantify the quality of care. A large number of quality indicators makes assessment of overall quality difficult, time consuming and impractical. There is consequently an increasing interest in composite measures based on a combination of multiple indicators.

Objective

To examine the use of different approaches to construct composite measures of quality of care and to assess the use of methodological considerations and justifications.

Methods

We conducted a literature search on PubMed and EMBASE databases (latest update 1 December 2020). For each publication, we extracted information on the weighting and aggregation methodology that had been used to construct composite indicator(s).

Results

A total of 2711 publications were identified, of which 145 were included after a screening process. Opportunity scoring with equal weights was the most used approach (86/145, 59%), followed by all-or-none scoring (48/145, 33%). Other approaches regarding aggregation or weighting of individual indicators were used in 32 publications (22%). The rationale for selecting a specific type of composite measure was reported in 36 publications (25%), whereas 22 papers (15%) addressed limitations regarding the composite measure.

Conclusion

Opportunity scoring and all-or-none scoring are the most frequently used approaches when constructing composite measures of quality of care. The attention towards the rationale and limitations of the composite measures appears low.

Discussion

Considering the widespread use and the potential implications for decision-making of composite measures, a high level of transparency regarding the construction process of the composite and the functionality of the measures is crucial.

Introduction

Quality of health care is essential for all healthcare stakeholders, including patients, healthcare providers, institutes, insurers, policy makers and governments. Hence, valid assessment of quality is crucial in order to monitor, evaluate and improve the quality of healthcare services. Quality indicators are measurement tools that are used to quantify the quality of care. They serve various purposes such as documenting the quality, benchmarking, setting priorities, facilitating quality improvement and supporting patient choice of providers. The indicators can be classified in various ways, with Donabedian’s structure, process and outcome indicator classification being the most frequently adopted approach [1]. The use of quality indicators is widespread in health care and the number of indicators is very large. Consequently, there is an increasing interest in composite measures based on a combination of multiple indicators.

A composite measure can be defined as a combination of multiple individual indicators [2]. Individual indicators are useful for measuring specific aspects of quality; however, an overall measure that reflects multiple aspects and dimensions of quality may have considerable advantages over individual indicators. Indeed, composite indicators can summarize the quality of care as a single value. Hence, they can be helpful when comparing, rating, ranking and selecting healthcare providers as an alternative to assessing providers’ performance according to many individual indicators [3]. Using composite indicators rather than individual indicators may also result in increased reliability, since combining multiple individual indicators increases the underlying number of observations [4].

However, composite indicators also come with limitations. Differences and relationships between individual indicators may be masked and information regarding specific aspect(s) of performance can be lost [2]. Using different approaches for construction of the composite measures may give different results. In other words, composite measures can be sensitive to the methodology that has been used [5]. Therefore, if the construction process for the composite indicators is not transparent, composite indicators may be misused and if not constructed in a methodologically sound way, the results obtained by using these indicators (such as hospital rankings) may not be reliable [6].

Detailed recommendations on the construction of reliable composite indicators have been published previously [2, 7]. The steps include development of a theoretical framework, selection of indicators, multivariable assessment of indicators, weighting and aggregation of indicators and as the last step, validation of the composite indicator [2, 7].

The aim of this study was to investigate the use of composite measures of quality of care based on process indicators in the peer-reviewed literature. In addition, we examined whether methodological considerations were provided in the publications.

Methods

This review was done in accordance with the recommendations in the PRISMA statement [8].

Definitions and terminology

Different approaches for construction of composite indicators exist, which mostly differ in terms of weighting and aggregation of individual indicators. Some of the methods to construct composite indicators are introduced (Table 1).

Table 1. Examples of methods for constructing composite measures.

| Methods | Definition |
|---|---|
| Overall percentage (Opportunity scoring) | The composite score is calculated as the total number of processes of care delivered to all patients divided by the total number of eligible care processes [9]: $\sum_{i=1}^{n} d_i \big/ \sum_{i=1}^{n} e_i$, where $d_i$ is the number of care processes delivered to patient $i$, $e_i$ is the number of care processes for which patient $i$ is eligible and $n$ denotes the number of patients in a provider (or other level of interest). |
| Patient average (Opportunity scoring) | Composite scores are calculated for each patient (number of care processes delivered divided by number of patient specific eligible care processes) and can then be averaged to obtain provider-level composite scores [9]: $\frac{1}{n} \sum_{i=1}^{n} d_i / e_i$, where $d_i$ and $e_i$ are the numbers of delivered and eligible care processes for patient $i$ and $n$ denotes the number of patients in a provider (or other level of interest). |
| Indicator average | For each indicator the percentage of times that indicator is fulfilled is calculated and then averaged across all indicators [9]: $\frac{1}{k} \sum_{j=1}^{k} p_j$, where $p_j$ is the proportion of eligible patients for whom indicator $j$ is fulfilled and $k$ denotes the number of indicators. |
| All-or-none (defect-free scoring) | The composite measure is calculated at patient level. Each patient gets either 1 (all eligible care processes are fulfilled) or 0 (at least 1 of the eligible care processes is unachieved). This approach can be preferred especially (1) when process indicators interact or partial achievement of a series of steps is insufficient to obtain the desired result, (2) when adherence rates for indicators are very high, so that methods that award partially provided care will neither help to distinguish between providers’ performance nor motivate providers to improve the quality of care [10]. The provider-level score is $\frac{1}{n} \sum_{i=1}^{n} a_i$, where $a_i$ is the 0/1 score of patient $i$ and $n$ denotes the number of patients in a provider (or other level of interest). |
| 70% standard and other thresholds | This approach is similar to all-or-none scoring but using a lower threshold than 100% [8]. |

In the patient average, all-or-none scoring and 70% standard approaches, composite scores are calculated at the patient level and require patient-level information. The scores obtained for each patient can subsequently be averaged to obtain scores at the provider level, region level or any other level of interest.
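The aggregation methods in Table 1 can be illustrated with a minimal Python sketch. The patient records, indicator names and function names below are hypothetical and chosen only for illustration; the sketch assumes that every patient is eligible for at least one indicator and that the threshold approach counts a patient as a success when the patient-level score is at or above the threshold.

```python
from statistics import mean

# Hypothetical patient-level data for one provider. Each patient maps
# indicator name -> True (delivered) or False (not delivered).
# Indicators a patient is not eligible for are simply absent.
patients = [
    {"aspirin": True, "beta_blocker": True, "smoking_advice": True},
    {"aspirin": True, "beta_blocker": False},
    {"aspirin": False, "beta_blocker": True, "smoking_advice": True, "statin": True},
    {"aspirin": True},
]


def overall_percentage(patients):
    """All delivered care processes divided by all eligible care processes."""
    delivered = sum(sum(p.values()) for p in patients)
    eligible = sum(len(p) for p in patients)
    return delivered / eligible


def patient_scores(patients):
    """Per-patient share of eligible care processes that were delivered."""
    return [sum(p.values()) / len(p) for p in patients]


def patient_average(patients):
    """Mean of the per-patient scores."""
    return mean(patient_scores(patients))


def indicator_average(patients):
    """Mean of the per-indicator adherence rates."""
    indicators = sorted({name for p in patients for name in p})
    rates = []
    for name in indicators:
        results = [p[name] for p in patients if name in p]
        rates.append(sum(results) / len(results))
    return mean(rates)


def threshold_score(patients, threshold=1.0):
    """Share of patients whose score reaches the threshold.

    threshold=1.0 gives all-or-none scoring; threshold=0.7 gives the
    70% standard (assumed here to mean 'at least 70%').
    """
    return mean([1 if s >= threshold else 0 for s in patient_scores(patients)])


print("overall percentage:", round(overall_percentage(patients), 3))
print("patient average:   ", round(patient_average(patients), 3))
print("indicator average: ", round(indicator_average(patients), 3))
print("all-or-none:       ", round(threshold_score(patients, 1.0), 3))
print("70% standard:      ", round(threshold_score(patients, 0.7), 3))
```

For this toy dataset the five methods give different scores for the same provider, because eligibility differs between patients.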

There are several approaches for assigning weights to individual indicators before aggregating them into composites. Some of the methods to assign weights to individual indicators are provided (Table 2).

Table 2. Examples of weighting approaches for constructing composite measures.

| Weighting approach | Definition |
|---|---|
| Equal weights | All indicators receive the same weight. This approach generally indicates that all indicators are equally important in the composite [7]. |
| Expert weights | An expert panel assigns weights to individual indicators depending on the panel’s criteria, such as indicators’ importance, impact, evidence score, feasibility and reliability. |
| Regression weights | Each indicator is weighted according to the degree of its association with an outcome, e.g., 30-day mortality. Using regression weights, the indicator with the strongest association with the outcome receives the highest weight [11]. This approach may be preferred if there is a gold standard end point. |
| Principal component analysis-based weights | PCA-based weights may be preferred when individual indicators are highly correlated. In this approach, correlated indicators are grouped, since they may share underlying characteristics, and each indicator is weighted according to its proportional factor loading [12]. This should not be confused with methods in which factor analysis is used only as a part of the selection process for individual indicators. |
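How explicitly assigned weights enter a composite can be sketched as a weighted average of indicator-level adherence rates. The adherence rates, the weights and the function name `weighted_composite` are hypothetical; in practice the weights would come from an expert panel, a regression model or a principal component analysis.

```python
def weighted_composite(rates, weights):
    """Weighted average of indicator-level adherence rates."""
    if set(rates) != set(weights):
        raise ValueError("rates and weights must cover the same indicators")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(rates[name] * weights[name] for name in rates) / total


# Hypothetical adherence rates for one provider.
rates = {"aspirin": 0.90, "beta_blocker": 0.60, "smoking_advice": 0.30}

# Equal weights: every indicator counts the same.
equal_weights = {name: 1.0 for name in rates}

# Hypothetical expert panel weights (illustrative values only).
expert_weights = {"aspirin": 3.0, "beta_blocker": 2.0, "smoking_advice": 1.0}

equal_score = weighted_composite(rates, equal_weights)
expert_score = weighted_composite(rates, expert_weights)
print(round(equal_score, 3), round(expert_score, 3))
```

With these made-up numbers the composite moves from 0.6 under equal weights to 0.7 under the expert weights, which shows how the choice of weights alone can change a provider's score.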

Note that in the literature opportunity scoring is sometimes also referred to as the denominator-based weights approach, since it is a weighted average in which the weights are the rates of eligibility for each indicator. In our review, we preferred to distinguish between this kind of weights, which occur naturally due to the aggregation method, and the weights that are additionally assigned to indicators by investigators according to each indicator’s association with the outcome, reliability, feasibility, importance or an expert judgement. Therefore, if opportunity scoring is used in a study without further assignment of weights to individual indicators and no differentiation is made between indicators, we refer to this method as “opportunity scoring with equal weights”. Furthermore, the scores obtained by using the patient average, overall percentage and indicator average methods will be the same if all of the patients are eligible for all indicators, even though the interpretation of the results will differ. Finally, it should be emphasised that weighting individual indicators before aggregation is not relevant in all-or-none scoring.
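A short derivation may make these two remarks concrete. The notation is introduced here for illustration only: for a given provider, let $E_j$ be the number of patients eligible for indicator $j$ ($j = 1, \dots, k$), $D_j$ the number of those patients for whom the indicator was fulfilled, and $p_j = D_j / E_j$ the adherence rate. Overall percentage can then be written as a weighted average of the indicator rates, with weights equal to each indicator's share of all eligible care processes:

$$
\frac{\sum_{j=1}^{k} D_j}{\sum_{j=1}^{k} E_j}
= \sum_{j=1}^{k} \frac{E_j}{\sum_{l=1}^{k} E_l}\, p_j .
$$

If all $n$ patients are eligible for all $k$ indicators, then $E_j = n$ for every $j$, the weights reduce to $1/k$, and overall percentage equals the indicator average. Writing $d_i$ for the number of care processes delivered to patient $i$, so that $\sum_{i=1}^{n} d_i = \sum_{j=1}^{k} D_j$, the patient average gives the same value:

$$
\frac{1}{n} \sum_{i=1}^{n} \frac{d_i}{k}
= \frac{\sum_{j=1}^{k} D_j}{nk}
= \frac{1}{k} \sum_{j=1}^{k} p_j .
$$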

Search strategy

We conducted a literature search to identify publications that used composite measures to assess quality of care. We queried the PubMed and EMBASE databases (latest update 1 December 2020), using the following terms: composite measures, quality of health care and other variations (S1 Appendix). We did not use any restrictions on date of publication in our search. The full search string is provided (S1 Appendix).

Eligibility criteria

In this review, we included studies using composite measures based exclusively on process of care indicators. Process indicators (for example, β-blocker prescription at discharge for patients with acute myocardial infarction, oxygenation assessment for patients with pneumonia or eye examination for patients with diabetes) have some advantages over outcome indicators. First, these indicators reflect actual care delivered to the patients; hence, they can be more actionable. Second, outcome indicators like 30-day mortality or readmission rate may be influenced by confounding factors, e.g., age, sex, severity of underlying disease or level of comorbidity, which may not be completely eliminated by risk adjustment [13]. Third, process indicators are a particularly appealing alternative in clinical scenarios where the most relevant outcome requires long follow-up time, e.g., recurrence of cancer [13]. Fourth, composite measures consisting of a combination of both process and outcome indicators come with additional challenges due to the inherent problem of meaningful weighting and aggregation of these two different types of indicators (for example, assessment of the relative importance of providing a CT scan to patients compared to an outcome indicator such as mortality). In conclusion, we restricted this review to process indicators only, as it would not be feasible to cover composite measures of multiple types of indicators satisfactorily in a single paper. However, we recognize the value of outcome indicators, and an assessment of composite measures of outcome indicators could be a relevant scope for a separate study.

Whereas clinical process indicators reflect actual delivered care, indicators reflecting utilization or access to care were not included as they reflect a complex result of organizational factors, patient preferences and patient compliance [14]. These indicators are therefore not under the full control of the healthcare system.

Even though patient-reported indicators may carry useful information, they typically lack details on the timeliness and appropriateness of individual clinical processes, which are crucial when evaluating the quality of care. Therefore, the studies that used these types of indicators in the composite measure were also excluded from the current review.

The exclusion criteria were as follows: (1) the composite measure included other types of indicators besides process indicators, (2) the composite measure included indicators related to access or utilization, (3) the composite measure included patient-reported indicators, (4) the scientific contribution was a protocol, trial design, purely methodological, letter, comment, editorial or a review, (5) the publication was not in English and (6) full text was not available.

Study selection

Study selection was performed using Rayyan [15]. Records were screened independently by two reviewers (PK and JBV).

Information retrieved from included studies

For each publication, we extracted information on the weighting and aggregation methodology that had been used to construct the composite indicator(s).

In addition, we registered justifications made for the selected methodology. We defined “justification” as the presence of any stated methodological argument for the methodology that had been used in the individual publications. We preferred not to use a very strict and detailed criterion to prevent subjective use and understanding of the term. We also obtained information on limitations and advantages of using composite indicators stated in the included publications. Finally, we examined whether publications using a single approach for construction of composite measures mentioned any alternative approaches.

We preferred to accept publications that only provided a reference to this information (for example, the publication did not itself describe limitations regarding the use of composite indicators but informed the reader about the presence of limitations and included a reference for further information) as “provided information”. We recognize that, especially when the main aim is not to assess the use of composite indicators directly but rather to use composite indicators to support operational use (for example, a study that investigates the effect of participation in a programme and uses composite indicators as a tool to support decision making), providing detailed information about composite indicators may seem out of the scope of the publication. However, it is still important to provide the reader with some information about the presence of potential limitations in relation to these indicators and the approaches used to construct them.

Results

Study selection

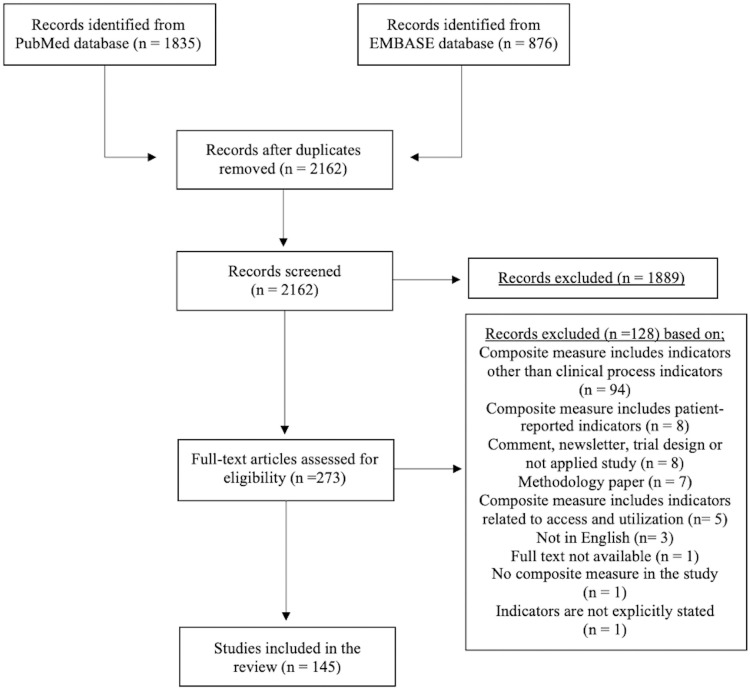

The search resulted in a total of 2711 publications: 1835 publications from the PubMed database and 876 publications from the EMBASE database. After removing 549 duplicates, 2162 unique publications were screened. First, titles and abstracts were assessed for eligibility and 1889 publications were excluded since at least one of the exclusion reasons mentioned above was present. A total of 273 publications were included for the full-text screening and 145 of those met the full inclusion criteria. The list of included publications is provided (S1 Appendix). A detailed summary of the literature search is presented (Fig 1).

Fig 1. PRISMA diagram.
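The counts reported for the study selection can be cross-checked with a few lines of arithmetic. The variable names are illustrative; the number of publications excluded at the full-text stage is not stated in the text and is derived here as the difference between the two reported totals.

```python
# Counts as reported in the study selection paragraph.
pubmed, embase = 1835, 876
duplicates = 549
excluded_title_abstract = 1889
included = 145

identified = pubmed + embase                      # records identified
screened = identified - duplicates                # unique records screened
full_text = screened - excluded_title_abstract    # full texts assessed

# Derived, not reported: exclusions at the full-text stage.
excluded_full_text = full_text - included

print(identified, screened, full_text, excluded_full_text)
```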

Context

We categorized the publications according to (1) methodology that was used, (2) whether the publication used a single approach or multiple approaches to construct composite indicator(s), and (3) context. For context, we classified the primary objective of the publications under two main categories: operational use (for example, the composite indicator in the study was constructed to evaluate the effect of a quality improvement program or to compare performance of healthcare providers) and research purposes (for example, to investigate the association between process and outcome indicators or to assess the construction, use and implementation of composite indicators) (Table 3). We provided tables for characteristics of the included studies (S1 Table) and classification of context for each included publication (S2 Table).

Table 3. Examples for investigated questions in included publications.

| Primary aim | Examples for investigated questions |
|---|---|
| Operational use (n = 61) | Effect of a program participation, implementation or intervention (n = 51) |
| | Quality of care over time in a provider, pure evaluation of quality of care in healthcare providers and/or comparison of healthcare providers (n = 10) |
| Research purposes (n = 84) | Association between process and outcome indicators (n = 25) |
| | Association between hospital and/or patient characteristics and quality of care (n = 32) |
| | Use, implementation or comparison of composite indicators (n = 26) |
| | Correlation between quality of care for heart failure and acute myocardial infarction (n = 1) |

Of the publications classified as operational use (n = 61, 42%), 51 publications [16–66] investigated whether program participation or implementation of an intervention was associated with improved quality of care as measured by a composite indicator. In 10 publications [67–76] the primary aim was to measure hospital performance and/or changes in performance over time (Table 3).

Of the studies classified as research (n = 84, 58%), 25 publications [77–101] reported on the association between processes of care, assessed by one or more composite indicators, and outcome indicators. In 32 studies [102–133] the attention was on the link between hospital and/or patient characteristics and quality of care. Finally, other research aims were addressed in the remaining 26 studies [11, 12, 134–157] including whether composite indicators could better inform hospital performance than single indicators, the reliability and/or validity of composite indicators, development and implementation of composite indicators and the impact of using different methodologies for the construction of composite indicators. One study [158] investigated the correlation between quality of care for two clinical conditions (Table 3).

Out of 145 publications, three included composite measures for mental health care, including depression [145], bipolar disorder [146], and overall mental health care [94]. Two publications [52, 99] addressed both somatic and mental health care components, whereas the remaining 140 publications were focused on composite indicators for somatic diseases.

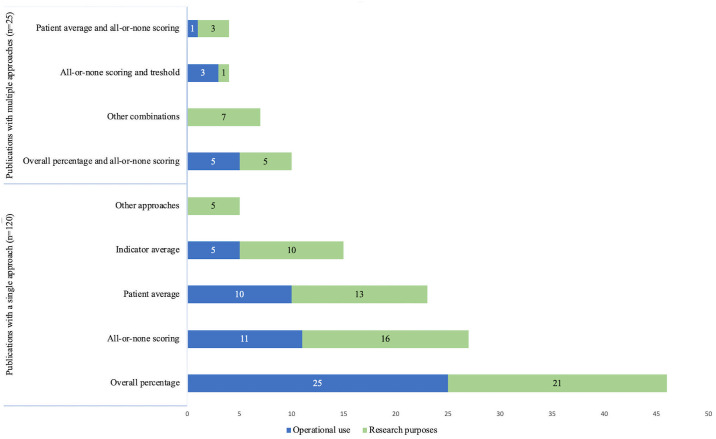

Preferred composite score methodology

Opportunity scoring was the most used scoring method and was represented in 89 (61%) publications. Of these, 62 (43%) publications applied the overall percentage approach and 28 (19%) publications applied patient average. The second most used method was all-or-none scoring (n = 48, 33%). Out of 145 publications, 8 (6%) publications used the 70% standard and other thresholds approach. The indicator average approach was present in 19 (13%) publications. Other approaches included two publications using latent variable models, one publication with principal component analysis and one publication with the 70% standard approach at indicator level rather than patient level (Fig 2). References corresponding to each aggregation method are provided (S3 Table). We also included a table to illustrate examples of selected process indicators for each methodology (S4 Table).

Fig 2. Preferred composite score methodology.

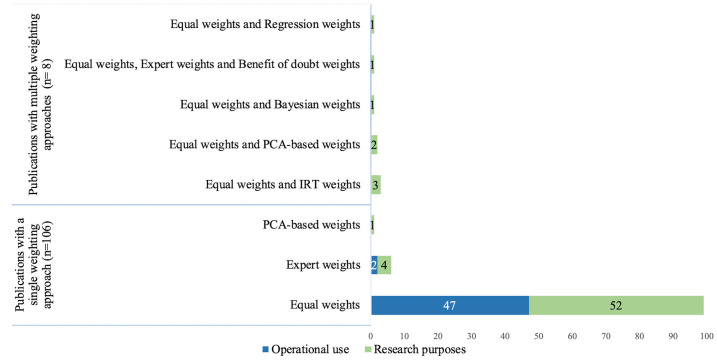

Weighting of individual indicators before aggregation was not relevant in the 27 publications using only the all-or-none scoring approach. Of the remaining 118 publications, 107 used equal weights. Differential weights were present in 16 publications: 7 publications used weights obtained by expert opinion/subjective assessment, one publication used regression weights, three publications used weights obtained by item response theory, one publication used weights obtained by a Bayesian approach, one publication used the benefit of doubt approach (assigning hospital specific weights in order to maximize performance) and three publications applied principal component analysis based weights (Fig 3). References corresponding to each weighting method are provided (S5 Table).

Fig 3. Weights used for constructing composite measures.

One publication [98] used patient specific weights according to care needs, i.e., care of patients is weighted by the natural logarithm of the total number of indicated care components, ln(n), to account for differences in number of needed care components for each case.
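One possible reading of such a patient specific weighting can be sketched as follows. The way the weighted patient scores are normalized to a provider-level score is an assumption made for illustration and is not described in the text above; the function name and the example data are hypothetical. Note that under this reading a patient with a single indicated care component receives weight ln(1) = 0.

```python
import math


def log_weighted_provider_score(patients):
    """Provider score as a ln(n)-weighted mean of patient-level scores.

    patients: list of (delivered, indicated) pairs, where `indicated`
    is the number of care components needed by the patient.
    Assumption: weighted patient scores are normalized by the sum of
    the weights; the source does not specify this step.
    """
    numerator = 0.0
    denominator = 0.0
    for delivered, indicated in patients:
        if indicated < 1 or not 0 <= delivered <= indicated:
            raise ValueError("need 0 <= delivered <= indicated and indicated >= 1")
        weight = math.log(indicated)  # ln(n) for this patient
        numerator += weight * delivered / indicated
        denominator += weight
    if denominator == 0:
        raise ValueError("all weights are zero (every patient has one component)")
    return numerator / denominator


# Hypothetical provider with three patients: (delivered, indicated).
example = [(2, 2), (3, 6), (1, 1)]
unweighted = sum(d / n for d, n in example) / len(example)
weighted = log_weighted_provider_score(example)
print(round(unweighted, 3), round(weighted, 3))
```

In this toy example the patient with six indicated components dominates the weighted score, so it falls below the unweighted patient average.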

All of the studies that were conducted in order to support operational use preferred either equal weights or expert weights whereas more diverse approaches were used in publications with research purposes.

It was not possible to determine the methodology in four publications [22, 91, 95, 97]. In 26 publications, more than one aggregation and/or weighting approach was present.

Other considerations and findings

Justification for the selected methodology used in the construction of the composite measure was found in 36 (25%) publications. The justifications included both limited justifications and justifications that were referenced (Table 4). Examples of the used justifications are provided (S6 Table). A summary of findings for each publication included in the review is provided (S2 Table).

Table 4. Methodological information in publications reporting composite measures of quality of health care based on process indicators.

| Methodological information | Number of papers (%) | References |
|---|---|---|
| Any justification for the selected methodology was provided or referenced | 36 (25%) | [11, 12, 23, 25, 27, 34, 54, 55, 57, 75, 86, 87, 98, 100, 118, 122, 123, 127, 134–136, 140–147, 149–153, 156, 157] |
| Any limitation regarding composite measures is given or referenced | 22 (15%) | [11, 53, 75, 80, 98, 99, 116, 122, 134, 135, 139–142, 144–147, 149, 151, 152, 157] |
| Any advantage regarding composite measures is given or referenced | 42 (29%) | [11, 23, 31, 38, 52, 53, 55, 59, 66, 75, 80, 83, 86, 87, 100, 101, 116, 122, 123, 127, 134–136, 138–156] |
| For papers that used a single approach, presence of any other approach was mentioned or referenced | 10 (8%) | [75, 87, 93, 122, 123, 139, 142, 146, 152, 156] |

Of 145 publications, methodological limitations of composite measures were addressed in 22 (15%) publications, including limitations that were referenced (Table 4). The reported limitations included concerns regarding loss of important information (n = 6, 4%), findings being sensitive to the choice of methodology for construction of the composite measures (n = 9, 6%), concerns over the construction process, such as weighting and aggregation methods or the selection of indicators included in the composite (n = 7, 5%), concerns over transparency (n = 3, 2%) and oversimplifying complex data (n = 2, 1%).

Of 145 publications, 42 (29%) publications mentioned specific advantages of composite indicators (Table 4). Reported advantages included the comprehensiveness of the composite indicator (for example, summarizing overall quality or presenting an overall picture) (n = 26, 18%), facilitation of comparisons (n = 10, 7%), interpretability and being easier to understand (n = 8, 6%), increased reliability and stability (n = 6, 4%), and simplification (for example, reduced number of indicators and numbers in quality reports) (n = 5, 3%).

Of the 119 publications that used a single composite score methodology, a total of 10 (8%) publications mentioned the presence of alternative methods for the construction of composite indicators (Table 4).

Some examples of methodological statements found in the literature are provided (S7 Table).

Discussion

Despite the importance and widespread use of composite indicators to summarize the quality of health care, we found that methodological considerations were not addressed in the majority of the publications and that there was only modest variation regarding the chosen methodology for construction of the composite measure(s). Opportunity-based scoring, indicator average and all-or-none scoring were the most frequently preferred approaches to obtain composite measures, whereas use of other methods was sparse.

To our knowledge, this is the first review that investigates the use of composite measures of quality of health care based on process indicators. Some strengths of this review were: (1) it complied with the PRISMA guideline for systematic reviews whenever possible, (2) a large number of studies were included, as we did not restrict our search to a specific clinical condition but considered all clinical conditions and disease areas relevant, and (3) all publications were carefully screened by two independent reviewers to reduce possible bias.

This review has several limitations. First, it was restricted to peer-reviewed publications included in the PubMed and EMBASE databases. However, these two databases cover a substantial number of publications within the field. Second, relevant publications might have been excluded if they were not in English. Third, although guidelines for validating composite measures of quality of health care have been developed [2], we did not investigate the extent to which the applied composite measures were validated in the publications under review, as this is a broad topic outside the scope of this review.

Implications and recommendations

Composite measures have the advantage of summarizing the quality of care with a single number and have been increasingly used to evaluate the quality of healthcare services. One of the concerns regarding use of composite measures is the lack of a standard approach to construct them and possible effects and consequences of using different approaches. Several studies in the literature examined the effects of using different approaches regarding weighting and aggregation. However, the findings have been contradictory with some studies indicating that the use of different methods provided substantially different results, whereas others have found highly correlated results, e.g., in healthcare provider rankings, using different methods.

Jacobs et al. [5] investigated the effect of using different aggregation and weighting methods on hospital rankings and concluded that these measures are sensitive to the methodology and that hospital rankings can change substantially depending on the method that has been used. Simms et al. [11] constructed composite indicators for acute myocardial infarction care, using opportunity scoring with equal weights, opportunity scoring with regression weights and all-or-none scoring approaches. While these composite indicators were associated with the outcome, the rankings of hospitals were substantially influenced by the method that had been used to construct the composite indicators.

In contrast, Eapen et al. [149] compared two methodologies that are most commonly used for composite indicators: overall percentage with equal weights and all-or-none scoring to examine their effects on hospital rankings for acute myocardial infarction care. In their study, the rankings obtained by these two methods were highly correlated (r = 0.93).
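A comparison of this kind can be sketched by ranking the same hospitals under two composite methods and correlating the ranks. The hospital scores below are invented for illustration, and the use of Spearman's rank correlation is an assumption; the text above does not state which correlation coefficient the cited study used.

```python
def average_ranks(values):
    """Rank values from 1 (smallest) upward; ties share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for i in order[pos:end + 1]:
            ranks[i] = mean_rank
        pos = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equally long numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical composite scores for five hospitals under two methods.
hospitals = ["A", "B", "C", "D", "E"]
overall_pct = [0.92, 0.85, 0.88, 0.70, 0.79]
all_or_none = [0.70, 0.62, 0.55, 0.30, 0.41]

rho = spearman(overall_pct, all_or_none)
print(round(rho, 2))
```

In this toy example only hospitals B and C swap places between the two methods, giving a rank correlation of 0.9. A high correlation can therefore coexist with changes in the position of individual providers.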

Kolfschoten et al. [150] investigated several types of composite measures at both patient level (patient average, all-or-none and 70% standard) and hospital level (overall percentage, indicator average, patient average, all-or-none and 70% standard) and these measures’ association with morbidity and mortality for patients with rectum carcinoma and colon carcinoma. They found that none of the patient-level composite measures were associated with the outcome, except for an association between the 70% standard method and morbidity for patients with rectum carcinoma. In contrast, all hospital-level composite indicators were associated with morbidity for both rectum carcinoma and colon carcinoma. This difference between patient-level and hospital-level composite measures was attributed to other factors that may have a larger effect at the patient level, whereas at the hospital level composite scores may better represent the quality of care in a hospital. This finding indicates the importance of a clear framework for the composite measure, including consideration of the purpose for which and by whom it will be used.

The requirements for specific clinical conditions should be taken into account when selecting the most suitable methodology. As an example, the two most commonly used approaches, opportunity-based scoring and all-or-none scoring, emphasize different aspects of quality. Opportunity-based scoring rewards partial performance and improvements, whereas all-or-none scoring promotes excellence and defect-free care. All-or-none scoring can be more suitable for conditions that require 100% adherence, where anything other than ideal care is not enough to achieve success, while opportunity-based scoring can be more useful to investigate and reward improvements over time. Among statistical approaches for construction, principal component analysis can be beneficial when individual indicators are highly correlated with each other and can be grouped together (for example, a composite indicator with multiple care dimensions for diagnosis, treatment and consultation), whereas regression weights can be considered when there is a gold standard end point (for example, mortality).

Selection of individual indicators to be included in the composite requires careful evaluation. Investigating the overall structure of the dataset, including correlations and interrelationships between indicators, may help to obtain meaningful composite measures and to prevent possible problems such as double counting, and it can inform the decision on assigning weights to individual indicators. Including clinical experts (for example, by establishing an expert panel) in indicator selection and weighting of indicators can also be considered in order to achieve potentially more clinically meaningful composite indicators.

Validation of the final composite indicator is an important step of construction in order to assure that the composite indicator is fit for purpose, reliable, accurate and robust. The National Quality Forum states that even if studies use already validated individual indicators, the final composite measure may not be a true reflection of quality after the weighting and aggregation steps [159]. Hence, a separate validation process of the composite is still warranted in order to obtain a reliable composite score. Although it may be difficult to select the most suitable methodology to construct composite measures and to perform validation when a study lacks a gold standard, readers should be informed about possible limitations and challenges regarding the specific composite measures and the presence of alternative approaches.

Conclusion

This review provides an overview of the methodologies for composite measures used in the peer-reviewed literature to evaluate the quality of care based on process indicators, including the justifications and methodological considerations made regarding these measures. An increased awareness among researchers and healthcare professionals is warranted regarding the presence of alternative methodologies and the importance of a transparent and robust methodology when constructing and reporting composite measures of process quality of health care.

Data Availability

All relevant data are within the paper and its Supporting information files.

Funding Statement

This research project was funded by the Marie Sklodowska-Curie Innovative Training Network HealthPros (Healthcare Performance Intelligence Professionals; https://www.healthpros-h2020.eu/) under the European Union’s Horizon 2020 research and innovation program, grant agreement no. 765141. The funder provided support through Aalborg University Hospital in the form of salaries for PK. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Mainz J. Defining and classifying clinical indicators for quality improvement. Int J Qual Health Care. 2003;15: 523–530. doi: 10.1093/intqhc/mzg081

- 2. Peterson ED, DeLong ER, Masoudi FA, O’Brien SM, Peterson PN, Rumsfeld JS, et al. ACCF/AHA 2010 Position Statement on Composite Measures for Healthcare Performance Assessment: a report of American College of Cardiology Foundation/American Heart Association Task Force on Performance Measures (Writing Committee to Develop a Position Statement on Composite Measures). J Am Coll Cardiol. 2010;55: 1755–1766. doi: 10.1016/j.jacc.2010.02.016

- 3. Shwartz M, Restuccia JD, Rosen AK. Composite Measures of Health Care Provider Performance: A Description of Approaches. Milbank Q. 2015;93: 788–825. doi: 10.1111/1468-0009.12165

- 4. Austin PC, Ceyisakar IE, Steyerberg EW, Lingsma HF, Marang-van de Mheen PJ. Ranking hospital performance based on individual indicators: can we increase reliability by creating composite indicators? BMC Med Res Methodol. 2019;19: 131. doi: 10.1186/s12874-019-0769-x

- 5. Jacobs R, Goddard M, Smith PC. How robust are hospital ranks based on composite performance measures? Med Care. 2005;43: 1177–1184. doi: 10.1097/01.mlr.0000185692.72905.4a

- 6. Barclay M, Dixon-Woods M, Lyratzopoulos G. The problem with composite indicators. BMJ Qual Saf. 2019;28: 338–344. doi: 10.1136/bmjqs-2018-007798

- 7. Nardo M, Saisana M, Saltelli A, et al. Handbook on constructing composite indicators: methodology and user guide. 2008. https://www.oecd.org/els/soc/handbookonconstructingcompositeindicatorsmethodologyanduserguide.htm

- 8. Moher D, Liberati A, Tetzlaff J, Altman DG, The PRISMA Group. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Medicine. 2009;6: e1000097. doi: 10.1371/journal.pmed.1000097

- 9. Reeves D, Campbell SM, Adams J, Shekelle PG, Kontopantelis E, Roland MO. Combining multiple indicators of clinical quality: an evaluation of different analytic approaches. Med Care. 2007;45: 489–496. doi: 10.1097/MLR.0b013e31803bb479

- 10. Nolan T, Berwick DM. All-or-none measurement raises the bar on performance. JAMA. 2006;295: 1168–1170. doi: 10.1001/jama.295.10.1168

- 11. Simms AD, Batin PD, Weston CF, Fox KAA, Timmis A, Long WR, et al. An evaluation of composite indicators of hospital acute myocardial infarction care: a study of 136,392 patients from the Myocardial Ischaemia National Audit Project. Int J Cardiol. 2013;170: 81–87. doi: 10.1016/j.ijcard.2013.10.027

- 12. Glickman SW, Boulding W, Roos JM, Staelin R, Peterson ED, Schulman KA. Alternative pay-for-performance scoring methods: implications for quality improvement and patient outcomes. Med Care. 2009;47: 1062–1068. doi: 10.1097/MLR.0b013e3181a7e54c

- 13. Lilford RJ, Brown CA, Nicholl J. Use of process measures to monitor the quality of clinical practice. BMJ. 2007;335: 648–650. doi: 10.1136/bmj.39317.641296.AD

- 14. National Academies of Sciences, Engineering, and Medicine. Health-Care Utilization as a Proxy in Disability Determination. Washington, DC: National Academies Press; 2018.

- 15. Ouzzani M, Hammady H, Fedorowicz Z, Elmagarmid A. Rayyan-a web and mobile app for systematic reviews. Syst Rev. 2016;5: 210–4. doi: 10.1186/s13643-016-0384-4

- 16. Wang Y, Li Z, Zhao X, Wang C, Wang X, Wang D, et al. Effect of a Multifaceted Quality Improvement Intervention on Hospital Personnel Adherence to Performance Measures in Patients With Acute Ischemic Stroke in China: A Randomized Clinical Trial. JAMA. 2018;320: 245–254. doi: 10.1001/jama.2018.8802

- 17. Hsieh F, Jeng J, Chern C, Lee T, Tang S, Tsai L, et al. Quality improvement in acute ischemic stroke care in Taiwan: the breakthrough collaborative in stroke. PLoS One. 2016;11: e0160426. doi: 10.1371/journal.pone.0160426

- 18. Holbrook A, Thabane L, Keshavjee K, Dolovich L, Bernstein B, Chan D, et al. Individualized electronic decision support and reminders to improve diabetes care in the community: COMPETE II randomized trial. CMAJ. 2009;181: 37–44. doi: 10.1503/cmaj.081272

- 19. Bouadma L, Mourvillier B, Deiler V, Le Corre B, Lolom I, Régnier B, et al. A multifaceted program to prevent ventilator associated pneumonia: Impact on compliance with preventive measures. Critical care medicine. 2010;38: 789–796. doi: 10.1097/CCM.0b013e3181ce21af

- 20. Zurovac D, Sudoi RK, Akhwale WS, Ndiritu M, Hamer DH, Rowe AK, et al. The effect of mobile phone text-message reminders on Kenyan health workers’ adherence to malaria treatment guidelines: a cluster randomised trial. The Lancet. 2011;378: 795–803. doi: 10.1016/S0140-6736(11)60783-6

- 21. Lewis WR, Peterson ED, Cannon CP, Super DM, LaBresh KA, Quealy K, et al. An organized approach to improvement in guideline adherence for acute myocardial infarction: results with the Get With The Guidelines quality improvement program. Arch Intern Med. 2008;168: 1813–1819. doi: 10.1001/archinte.168.16.1813

- 22. Jacobson JO, Neuss MN, McNiff KK, Kadlubek P, Thacker LR, Song F, et al. Improvement in oncology practice performance through voluntary participation in the Quality Oncology Practice Initiative. J Clin Oncol. 2008;26: 1893–1898. doi: 10.1200/JCO.2007.14.2992

- 23. Sperl-Hillen JM, Solberg LI, Hroscikoski MC, Crain AL, Engebretson KI, O’Connor PJ. The effect of advanced access implementation on quality of diabetes care. Prev Chronic Dis. 2008;5: A16.

- 24. Glickman SW, Ou FS, DeLong ER, Roe MT, Lytle BL, Mulgund J, et al. Pay for performance, quality of care, and outcomes in acute myocardial infarction. JAMA. 2007;297: 2373–2380. doi: 10.1001/jama.297.21.2373

- 25. Krantz MJ, Baker WA, Estacio RO, Haynes DK, Mehler PS, Fonarow GC, et al. Comprehensive coronary artery disease care in a safety-net hospital: results of Get With The Guidelines quality improvement initiative. J Manag Care Pharm. 2007;13: 319–325. doi: 10.18553/jmcp.2007.13.4.319

- 26. Landon BE, Hicks LS, O’Malley AJ, Lieu TA, Keegan T, McNeil BJ, et al. Improving the management of chronic disease at community health centers. N Engl J Med. 2007;356: 921–934. doi: 10.1056/NEJMsa062860

- 27. Lindenauer PK, Remus D, Roman S, Rothberg MB, Benjamin EM, Ma A, et al. Public reporting and pay for performance in hospital quality improvement. N Engl J Med. 2007;356: 486–496. doi: 10.1056/NEJMsa064964

- 28. Alvarez Morán JL, Alé FGB, Rogers E, Guerrero S. Quality of care for treatment of uncomplicated severe acute malnutrition delivered by community health workers in a rural area of Mali. Matern Child Nutr. 2018;14: e12449. doi: 10.1111/mcn.12449 Epub 2017 Apr 5.

- 29. Loftus TJ, Stelton S, Efaw BW, Bloomstone J. A System-Wide Enhanced Recovery Program Focusing on Two Key Process Steps Reduces Complications and Readmissions in Patients Undergoing Bowel Surgery. J Healthc Qual. 2017;39: 129–135. doi: 10.1111/jhq.12068

- 30. Baker DW, Persell SD, Kho AN, Thompson JA, Kaiser D. The marginal value of pre-visit paper reminders when added to a multifaceted electronic health record based quality improvement system. J Am Med Inform Assoc. 2011;18: 805–811. doi: 10.1136/amiajnl-2011-000169

- 31. Vichare A, Eads N, Punglia R, Potters L. American Society for Radiation Oncology’s Performance Assessment for the Advancement of Radiation Oncology Treatment: A practical approach for informing practice improvement. Pract Radiat Oncol. 2013;3: 37. doi: 10.1016/j.prro.2012.09.005

- 32. Cadilhac D, Grimley R, Kilkenny M, Andrew N, Lannin N, Hill K, et al. Multicenter, Prospective, Controlled, Before-and-After, Quality Improvement Study (Stroke123) of Acute Stroke Care. Stroke. 2019;50: 1525–1530. doi: 10.1161/STROKEAHA.118.023075

- 33. Tawfiq E, Alawi SAS, Natiq K. Effects of Training Health Workers in Integrated Management of Childhood Illness on Quality of Care for Under-5 Children in Primary Healthcare Facilities in Afghanistan. International journal of health policy and management. 2019;9: 17–26. doi: 10.15171/ijhpm.2019.69

- 34. Schneider EC, Sorbero ME, Haas A, Ridgely MS, Khodyakov D, Setodji CM, et al. Does a quality improvement campaign accelerate take-up of new evidence? A ten-state cluster-randomized controlled trial of the IHI’s Project JOINTS. Implementation science: IS. 2017;12: 51. doi: 10.1186/s13012-017-0579-7

- 35. Heselmans A, Delvaux N, Laenen A, Van de Velde S, Ramaekers D, Kunnamo I, et al. Computerized clinical decision support system for diabetes in primary care does not improve quality of care: a cluster-randomized controlled trial. Implementation science: IS. 2020;15: 5. doi: 10.1186/s13012-019-0955-6

- 36. Wolfe HA, Morgan RW, Zhang B, Topjian AA, Fink EL, Berg RA, et al. Deviations from AHA guidelines during pediatric cardiopulmonary resuscitation are associated with decreased event survival. Resuscitation. 2020;149: 89–99. doi: 10.1016/j.resuscitation.2020.01.035

- 37. Schumacher DJ, Martini A, Holmboe E, Carraccio C, van der Vleuten C, Sobolewski B, et al. Initial Implementation of Resident-Sensitive Quality Measures in the Pediatric Emergency Department: A Wide Range of Performance. Academic medicine. 2020;95: 1248–1255. doi: 10.1097/ACM.0000000000003147

- 38. Jung K. The impact of information disclosure on quality of care in HMO markets. International journal for quality in health care. 2010;22: 461–468. doi: 10.1093/intqhc/mzq062

- 39. Wu Y, Li S, Patel A, Li X, Du X, Wu T, et al. Effect of a Quality of Care Improvement Initiative in Patients With Acute Coronary Syndrome in Resource-Constrained Hospitals in China: A Randomized Clinical Trial. JAMA cardiology. 2019;4: 418–427. doi: 10.1001/jamacardio.2019.0897

- 40. Lytle BL, Li S, Lofthus DM, Thomas L, Poteat JL, Bhatt DL, et al. Targeted versus standard feedback: Results from a Randomized Quality Improvement Trial. The American heart journal. 2014;169: 132–141.e2. doi: 10.1016/j.ahj.2014.08.017

- 41. Schwamm LH, Fonarow GC, Reeves MJ, Pan W, Frankel MR, Smith EE, et al. Get With the Guidelines-Stroke is associated with sustained improvement in care for patients hospitalized with acute stroke or transient ischemic attack. Circulation. 2009;119: 107–115. doi: 10.1161/CIRCULATIONAHA.108.783688

- 42. Brush JE, Rensing E, Song F, Cook S, Lynch J, Thacker L, et al. A statewide collaborative initiative to improve the quality of care for patients with acute myocardial infarction and heart failure. Circulation. 2009;119: 1609–1615. doi: 10.1161/CIRCULATIONAHA.108.764613

- 43. Hicks LS, O’Malley AJ, Lieu TA, Keegan T, McNeil BJ, Guadagnoli E, et al. Impact of Health Disparities Collaboratives on Racial/Ethnic and Insurance Disparities in US Community Health Centers. Arch Intern Med. 2010;170: 279–286. doi: 10.1001/archinternmed.2010.493

- 44. Xian Y, Pan W, Peterson ED, Heidenreich PA, Cannon CP, Hernandez AF, et al. Are quality improvements associated with the Get With the Guidelines-Coronary Artery Disease (GWTG-CAD) program sustained over time? A longitudinal comparison of GWTG-CAD hospitals versus non-GWTG-CAD hospitals. Am Heart J. 2010;159: 207–214. doi: 10.1016/j.ahj.2009.11.002

- 45. Laskey W, Spence N, Zhao X, Mayo R, Taylor R, Cannon CP, et al. Regional differences in quality of care and outcomes for the treatment of acute coronary syndromes: an analysis from the get with the guidelines coronary artery disease program. Crit Pathw Cardiol. 2010;9: 1–7. doi: 10.1097/HPC.0b013e3181cdb5a5

- 46. Birtcher KK, Pan W, Labresh KA, Cannon CP, Fonarow GC, Ellrodt G. Performance achievement award program for Get With The Guidelines—Coronary Artery Disease is associated with global and sustained improvement in cardiac care for patients hospitalized with an acute myocardial infarction. Crit Pathw Cardiol. 2010;9: 103–112. doi: 10.1097/HPC.0b013e3181ed763e

- 47. Shubrook JH, Snow RJ, McGill SL. Effects of repeated use of the American Osteopathic Association’s Clinical Assessment Program on measures of care for patients with diabetes mellitus. J Am Osteopath Assoc. 2011;111: 13–20.

- 48. Morgan DJ, Day HR, Harris AD, Furuno JP, Perencevich EN. The impact of Contact Isolation on the quality of inpatient hospital care. PLoS One. 2011;6: e22190. doi: 10.1371/journal.pone.0022190

- 49. Appari A, Johnson ME, Anthony DL. Meaningful use of electronic health record systems and process quality of care: evidence from a panel data analysis of U.S. acute-care hospitals. Health Serv Res. 2013;48: 354–375. doi: 10.1111/j.1475-6773.2012.01448.x

- 50. Perlin JB, Horner SJ, Englebright JD, Bracken RM. Rapid core measure improvement through a business case for quality. J Healthc Qual. 2014;36: 50–61. doi: 10.1111/j.1945-1474.2012.00218.x

- 51. McHugh M, Neimeyer J, Powell E, Khare RK, Adams JG. An early look at performance on the emergency care measures included in Medicare’s hospital inpatient Value-Based Purchasing Program. Ann Emerg Med. 2013;61: 616–623.e2. doi: 10.1016/j.annemergmed.2013.01.012

- 52. Paustian ML, Alexander JA, El Reda DK, Wise CG, Green LA, Fetters MD. Partial and Incremental PCMH Practice Transformation: Implications for Quality and Costs. Health Serv Res. 2014;49: 52–74. doi: 10.1111/1475-6773.12085

- 53. Peacock WF, Kontos MC, Amsterdam E, Cannon CP, Diercks D, Garvey L, et al. Impact of Society of Cardiovascular Patient Care accreditation on quality: an ACTION Registry®-Get With The Guidelines™ analysis. Crit Pathw Cardiol. 2013;12: 116–120. doi: 10.1097/HPC.0b013e31828940e3

- 54. Mitchell J, Probst J, Brock-Martin A, Bennett K, Glover S, Hardin J. Association between clinical decision support system use and rural quality disparities in the treatment of pneumonia. J Rural Health. 2014;30: 186–195. doi: 10.1111/jrh.12043

- 55. Mitchell J, Probst JC, Bennett KJ, Glover S, Martin AB, Hardin JW. Differences in pneumonia treatment between high-minority and low-minority neighborhoods with clinical decision support system implementation. Inform Health Soc Care. 2016;41: 128–142. doi: 10.3109/17538157.2014.965304

- 56. Herrera AL, Góngora-Rivera F, Muruet W, Villarreal HJ, Gutiérrez-Herrera M, Huerta L, et al. Implementation of a Stroke Registry Is Associated with an Improvement in Stroke Performance Measures in a Tertiary Hospital in Mexico. J Stroke Cerebrovasc Dis. 2015;24: 725–730. doi: 10.1016/j.jstrokecerebrovasdis.2014.09.008

- 57. Bogh SB, Falstie-Jensen AM, Bartels P, Hollnagel E, Johnsen SP. Accreditation and improvement in process quality of care: a nationwide study. Int J Qual Health Care. 2015;27: 336–343. doi: 10.1093/intqhc/mzv053

- 58. Nkoy F, Fassl B, Stone B, Uchida DA, Johnson J, Reynolds C, et al. Improving Pediatric Asthma Care and Outcomes Across Multiple Hospitals. Pediatrics. 2015;136: e1602–e1610. doi: 10.1542/peds.2015-0285

- 59. Baack Kukreja JE, Kiernan M, Schempp B, Siebert A, Hontar A, Nelson B, et al. Quality Improvement in Cystectomy Care with Enhanced Recovery (QUICCER) study. BJU Int. 2017;119: 38–49. doi: 10.1111/bju.13521

- 60. Li Z, Wang C, Zhao X, Liu L, Wang C, Li H, et al. Substantial Progress Yet Significant Opportunity for Improvement in Stroke Care in China. Stroke. 2016;47: 2843–2849. doi: 10.1161/STROKEAHA.116.014143

- 61. Diop M, Fiset-Laniel J, Provost S, Tousignant P, Borgès Da Silva R, Ouimet M, et al. Does enrollment in multidisciplinary team-based primary care practice improve adherence to guideline-recommended processes of care? Quebec’s Family Medicine Groups, 2002–2010. Health Policy. 2017;121: 378–388. doi: 10.1016/j.healthpol.2017.02.001

- 62. Ryan AM, Krinsky S, Maurer KA, Dimick JB. Changes in Hospital Quality Associated with Hospital Value-Based Purchasing. N Engl J Med. 2017;376: 2358–2366. doi: 10.1056/NEJMsa1613412

- 63. Ndumele CD, Schpero WL, Schlesinger MJ, Trivedi AN. Association Between Health Plan Exit From Medicaid Managed Care and Quality of Care, 2006–2014. JAMA. 2017;317: 2524–2531. doi: 10.1001/jama.2017.7118

- 64. Peterson GG, Geonnotti KL, Hula L, Day T, Blue L, Kranker K, et al. Association Between Extending CareFirst’s Medical Home Program to Medicare Patients and Quality of Care, Utilization, and Spending. JAMA Intern Med. 2017;177: 1334–1342. doi: 10.1001/jamainternmed.2017.2775

- 65. Falstie-Jensen AM, Bogh SB, Hollnagel E, Johnsen SP. Compliance with accreditation and recommended hospital care-a Danish nationwide population-based study. Int J Qual Health Care. 2017;29: 625–633. doi: 10.1093/intqhc/mzx104

- 66. Starks MA, Dai D, Nichol G, Al-Khatib SM, Chan P, Bradley SM, et al. The association of Duration of participation in get with the guidelines-resuscitation with quality of Care for in-Hospital Cardiac Arrest. Am Heart J. 2018;204: 156–162. doi: 10.1016/j.ahj.2018.04.018

- 67. Dentan C, Forestier E, Roustit M, Boisset S, Chanoine S, Epaulard O, et al. Assessment of linezolid prescriptions in three French hospitals. Eur J Clin Microbiol Infect Dis. 2017;36: 1133–1141. doi: 10.1007/s10096-017-2900-4

- 68. Katzenellenbogen JM, Bond-Smith D, Ralph AP, Wilmot M, Marsh J, Bailie R, et al. Priorities for improved management of acute rheumatic fever and rheumatic heart disease: analysis of cross-sectional continuous quality improvement data in Aboriginal primary healthcare centres in Australia. Australian health review: a publication of the Australian Hospital Association. 2020;44: 212–221. doi: 10.1071/AH19132

- 69. Ranasinghe WG, Beane A, Vithanage TDP, Priyadarshani GDD, Colombage DDE, Ponnamperuma CJ, et al. Quality evaluation and future priorities for delivering acute myocardial infarction care in Sri Lanka. Heart (British Cardiac Society). 2020;106: 603–608. doi: 10.1136/heartjnl-2019-315396

- 70. Wang Y, Li Z, Zhao X, Wang C, Wang X, Wang D, et al. China Stroke Statistics 2019: A Report From the National Center for Healthcare Quality Management in Neurological Diseases, China National Clinical Research Center for Neurological Diseases, the Chinese Stroke Association, National Center for Chronic and Non-communicable Disease Control and Prevention, Chinese Center for Disease Control and Prevention and Institute for Global Neuroscience and Stroke Collaborations. Stroke Vasc Neurol. 2020;5: 211–239. doi: 10.1136/svn-2020-000457 Epub 2020 Aug 21.

- 71. Bintabara D, Nakamura K, Ntwenya J, Seino K, Mpondo B. Adherence to standards of first-visit antenatal care among providers: A stratified analysis of Tanzanian facility-based survey for improving quality of antenatal care. PLoS One. 2019;14: e0216520. doi: 10.1371/journal.pone.0216520

- 72. Hong JY, Kang YA. Evaluation of the Quality of Care among Hospitalized Adult Patients with Community-Acquired Pneumonia in Korea. Tuberc Respir Dis (Seoul). 2018;81: 175–186.

- 73. Nuti SV, Wang Y, Masoudi FA, Bratzler DW, Bernheim SM, Murugiah K, et al. Improvements in the distribution of hospital performance for the care of patients with acute myocardial infarction, heart failure, and pneumonia, 2006–2011. Med Care. 2015;53: 485–491. doi: 10.1097/MLR.0000000000000358

- 74. Flotta D, Rizza P, Coscarelli P, Pileggi C, Nobile CGA, Pavia M. Appraising hospital performance by using the JCHAO/CMS quality measures in Southern Italy. PLoS One. 2012;7: e48923. doi: 10.1371/journal.pone.0048923

- 75. Williams SC, Koss RG, Morton DJ, Loeb JM. Performance of top-ranked heart care hospitals on evidence-based process measures. Circulation. 2006;114: 558–564. doi: 10.1161/CIRCULATIONAHA.105.600973

- 76. Plackett TP, Cherry DC, Delk G, Satterly S, Theler J, McVay D, et al. Clinical practice guideline adherence during Operation Inherent Resolve. J Trauma Acute Care Surg. 2017;83: S66–S70. doi: 10.1097/TA.0000000000001473

- 77. Starks MA, Wu J, Peterson ED, Stafford JA, Matsouaka RA, Boulware LE, et al. In-Hospital Cardiac Arrest Resuscitation Practices and Outcomes in Maintenance Dialysis Patients. Clinical journal of the American Society of Nephrology. 2020;15: 219–227. doi: 10.2215/CJN.05070419

- 78. Getachew T, Abebe SM, Yitayal M, Persson LÅ, Berhanu D. Assessing the quality of care in sick child services at health facilities in Ethiopia. BMC health services research. 2020;20: 574. doi: 10.1186/s12913-020-05444-7

- 79. Zhang X, Li Z, Zhao X, Xian Y, Liu L, Wang C, et al. Relationship between hospital performance measures and outcomes in patients with acute ischaemic stroke: a prospective cohort study. BMJ open. 2018;8: e020467. doi: 10.1136/bmjopen-2017-020467

- 80. Rehman S, Li X, Wang C, Ikram M, Rehman E, Liu M. Quality of Care for Patients with Acute Myocardial Infarction (AMI) in Pakistan: A Retrospective Study. International journal of environmental research and public health. 2019;16: 3890. doi: 10.3390/ijerph16203890

- 81. Bosko T, Wilson K. Assessing the relationship between patient satisfaction and clinical quality in an ambulatory setting. J Health Organ Manag. 2016;30: 1063–1080. doi: 10.1108/JHOM-11-2015-0181

- 82. Sequist TD, Schneider EC, Anastario M, Odigie EG, Marshall R, Rogers WH, et al. Quality monitoring of physicians: linking patients’ experiences of care to clinical quality and outcomes. J Gen Intern Med. 2008;23: 1784–1790. doi: 10.1007/s11606-008-0760-4

- 83. Arora VM, Plein C, Chen S, Siddique J, Sachs GA, Meltzer DO. Relationship between quality of care and functional decline in hospitalized vulnerable elders. Med Care. 2009;47: 895–901. doi: 10.1097/MLR.0b013e3181a7e3ec

- 84. Patterson ME, Hernandez AF, Hammill BG, Fonarow GC, Peterson ED, Schulman KA, et al. Process of Care Performance Measures and Long-Term Outcomes in Patients Hospitalized With Heart Failure. Med Care. 2010;48: 210. doi: 10.1097/MLR.0b013e3181ca3eb4

- 85. Shafi S, Parks J, Ahn C, Gentilello L, Nathens A, Hemmila M, et al. Centers for Medicare and Medicaid Services Quality Indicators Do Not Correlate With Risk-Adjusted Mortality at Trauma Centers. J Trauma. 2010;68: 771–777. doi: 10.1097/TA.0b013e3181d03a20

- 86. Stulberg JJ, Delaney CP, Neuhauser DV, Aron DC, Fu P, Koroukian SM. Adherence to surgical care improvement project measures and the association with postoperative infections. JAMA. 2010;303: 2479–2485. doi: 10.1001/jama.2010.841

- 87. Aaronson DS, Bardach NS, Lin GA, Chattopadhyay A, Goldman LE, Dudley RA. Prediction of hospital acute myocardial infarction and heart failure 30-day mortality rates using publicly reported performance measures. J Healthc Qual. 2013;35: 15–23. doi: 10.1111/j.1945-1474.2011.00173.x

- 88. Sills MR, Ginde AA, Clark S, Camargo CA. Multicenter analysis of quality indicators for children treated in the emergency department for asthma. Pediatrics. 2012;129: 325. doi: 10.1542/peds.2010-3302

- 89. Sequist TD, Von Glahn T, Li A, Rogers WH, Safran DG. Measuring chronic care delivery: patient experiences and clinical performance. Int J Qual Health Care. 2012;24: 206–213. doi: 10.1093/intqhc/mzs018

- 90. Saleh S, Callan M, Kassak K. The association between the hospital quality alliance’s pneumonia measures and discharge costs. J Health Care Finance. 2012;38: 50–60.

- 91. Ashby J, Juarez DT, Berthiaume J, Sibley P, Chung RS. The relationship of hospital quality and cost per case in Hawaii. Inquiry. 2012;49: 65–74. doi: 10.5034/inquiryjrnl_49.01.06

- 92. Hasegawa K, Chiba T, Hagiwara Y, Watase H, Tsugawa Y, Brown DFM, et al. Quality of Care for Acute Asthma in Emergency Departments in Japan: A Multicenter Observational Study. J Allergy Clin Immunol Pract. 2013;1: 509–515. doi: 10.1016/j.jaip.2013.05.001

- 93. Kontos MC, Rennyson SL, Chen AY, Alexander KP, Peterson ED, Roe MT. The association of myocardial infarction process of care measures and in-hospital mortality: A report from the NCDR. Am Heart J. 2014;168: 766–775. doi: 10.1016/j.ahj.2014.07.005

- 94. Dusheiko M, Gravelle H, Martin S, Smith PC. Quality of Disease Management and Risk of Mortality in English Primary Care Practices. Health Serv Res. 2015;50: 1452–1471. doi: 10.1111/1475-6773.12283

- 95. Congiusta S, Solomon P, Conigliaro J, O’Gara-Shubinsky R, Kohn N, Nash IS. Clinical Quality and Patient Experience in the Adult Ambulatory Setting. Am J Med Qual. 2019;34: 87–91. doi: 10.1177/1062860618777878

- 96. Spece LJ, Donovan LM, Griffith MF, Collins MP, Feemster LC, Au DH. Quality of Care Delivered to Veterans with COPD Exacerbation and the Association with 30-Day Readmission and Death. COPD. 2018;15: 489–495. doi: 10.1080/15412555.2018.1543390

- 97. Barbayannis G, Chiu I, Sargsyan D, Cabrera J, Beavers TE, Kostis JB, et al. Relation Between Statewide Hospital Performance Reports on Myocardial Infarction and Cardiovascular Outcomes. Am J Cardiol. 2019;123: 1587–1594. doi: 10.1016/j.amjcard.2019.02.016

- 98. Ido MS, Frankel MR, Okosun IS, Rothenberg RB. Quality of Care and Its Impact on One-Year Mortality: The Georgia Coverdell Acute Stroke Registry. Am J Med Qual. 2018;33: 86–92. doi: 10.1177/1062860617696578

- 99. Smith LM, Anderson WL, Lines LM, Pronier C, Thornburg V, Butler JP, et al. Patient experience and process measures of quality of care at home health agencies: Factors associated with high performance. Home Health Care Serv Q. 2017;36: 29–45. doi: 10.1080/01621424.2017.1320698

- 100. Mason MC, Chang GJ, Petersen LA, Sada YH, Tran Cao HS, Chai C, et al. National Quality Forum Colon Cancer Quality Metric Performance: How Are Hospitals Measuring Up? Ann Surg. 2017;266: 1013–1020. doi: 10.1097/SLA.0000000000002003

- 101.Chui PW, Parzynski CS, Nallamothu BK, Masoudi FA, Krumholz HM, Curtis JP. Hospital Performance on Percutaneous Coronary Intervention Process and Outcomes Measures. J Am Heart Assoc. 2017;6: e004276. doi: 10.1161/JAHA.116.004276 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 102.Wang C, Li X, Su S, Wang X, Li J, Bao X, et al. Factors analysis on the use of key quality indicators for narrowing the gap of quality of care of breast cancer. BMC cancer. 2019;19: 1099. doi: 10.1186/s12885-019-6334-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.Hess BJ, Weng W, Holmboe ES, Lipner RS. The Association Between Physiciansʼ Cognitive Skills and Quality of Diabetes Care. Academic medicine. 2012;87: 157–163. [DOI] [PubMed] [Google Scholar]

- 104.Levine DA, Kollman CD, Olorode T, Giordani B, Lisabeth LD, Zahuranec DB, et al. Physician decision-making and recommendations for stroke and myocardial infarction treatments in older adults with mild cognitive impairment. PLoS One. 2020;15: e0230446. doi: 10.1371/journal.pone.0230446 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 105.Al Qawasmeh M, Alhusban A, Alfwaress F, El-Salem K. An Assessment of Patients Factors Effect on Prescriber Adherence to Ischemic Stroke Secondary Prevention Guidelines. Current clinical pharmacology. 2020;15. doi: 10.2174/1574884715666200123145350 [DOI] [PubMed] [Google Scholar]

- 106.Kovács N, Varga O, Nagy A, Pálinkás A, Sipos V, Kőrösi L, et al. The impact of general practitioners’ gender on process indicators in Hungarian primary healthcare: a nation-wide cross-sectional study. BMJ open. 2019;9: e027296. doi: 10.1136/bmjopen-2018-027296 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 107.Min LC, Reuben DB, MacLean CH, Shekelle PG, Solomon DH, Higashi T, et al. Predictors of overall quality of care provided to vulnerable older people. J Am Geriatr Soc. 2005;53: 1705–1711. doi: 10.1111/j.1532-5415.2005.53520.x [DOI] [PubMed] [Google Scholar]

- 108.Holmboe ES, Wang Y, Meehan TP, Tate JP, Ho SY, Starkey KS, et al. Association between maintenance of certification examination scores and quality of care for medicare beneficiaries. Arch Intern Med. 2008;168: 1396–1403. doi: 10.1001/archinte.168.13.1396 [DOI] [PubMed] [Google Scholar]

- 109.Correa-de-Araujo R, McDermott K, Moy E. Gender differences across racial and ethnic groups in the quality of care for diabetes. Womens Health Issues. 2006;16: 56–65. doi: 10.1016/j.whi.2005.08.003

- 110.Lindenauer PK, Pekow P, Gao S, Crawford AS, Gutierrez B, Benjamin EM. Quality of care for patients hospitalized for acute exacerbations of chronic obstructive pulmonary disease. Ann Intern Med. 2006;144: 894–903. doi: 10.7326/0003-4819-144-12-200606200-00006

- 111.Landon BE, Normand ST, Lesser A, O’Malley AJ, Schmaltz S, Loeb JM, et al. Quality of care for the treatment of acute medical conditions in US hospitals. Arch Intern Med. 2006;166: 2511–2517. doi: 10.1001/archinte.166.22.2511

- 112.Mehta RH, Liang L, Karve AM, Hernandez AF, Rumsfeld JS, Fonarow GC, et al. Association of Patient Case-Mix Adjustment, Hospital Process Performance Rankings, and Eligibility for Financial Incentives. JAMA. 2008;300: 1897–1903. doi: 10.1001/jama.300.16.1897

- 113.Colwell C, Mehler P, Harper J, Cassell L, Vazquez J, Sabel A. Measuring quality in the prehospital care of chest pain patients. Prehosp Emerg Care. 2009;13: 237–240. doi: 10.1080/10903120802706138

- 114.López L, Hicks LS, Cohen AP, McKean S, Weissman JS. Hospitalists and the quality of care in hospitals. Arch Intern Med. 2009;169: 1389–1394. doi: 10.1001/archinternmed.2009.222

- 115.Blustein J, Borden WB, Valentine M. Hospital performance, the local economy, and the local workforce: findings from a US National Longitudinal Study. PLoS Med. 2010;7: e1000297. doi: 10.1371/journal.pmed.1000297

- 116.Reeves MJ, Gargano J, Maier KS, Broderick JP, Frankel M, LaBresh KA, et al. Patient-level and hospital-level determinants of the quality of acute stroke care: a multilevel modeling approach. Stroke. 2010;41: 2924–2931. doi: 10.1161/STROKEAHA.110.598664

- 117.O’Connor CM, Albert NM, Curtis AB, Gheorghiade M, Heywood JT, McBride ML, et al. Patient and practice factors associated with improvement in use of guideline-recommended therapies for outpatients with heart failure (from the IMPROVE HF trial). Am J Cardiol. 2011;107: 250–258. doi: 10.1016/j.amjcard.2010.09.012

- 118.Ross JS, Arling G, Ofner S, Roumie CL, Keyhani S, Williams LS, et al. Correlation of inpatient and outpatient measures of stroke care quality within Veterans Health Administration hospitals. Stroke. 2011;42: 2269–2275. doi: 10.1161/STROKEAHA.110.611913

- 119.Kapoor JR, Fonarow GC, Zhao X, Kapoor R, Hernandez AF, Heidenreich PA. Diabetes, quality of care, and in-hospital outcomes in patients hospitalized with heart failure. Am Heart J. 2011;162: 480–486.e3. doi: 10.1016/j.ahj.2011.06.008

- 120.Bulger JB, Shubrook JH, Snow R. Racial disparities in African Americans with diabetes: process and outcome mismatch. Am J Manag Care. 2012;18: 407–413.

- 121.Gale CP, Cattle BA, Baxter PD, Greenwood DC, Simms AD, Deanfield J, et al. Age-dependent inequalities in improvements in mortality occur early after acute myocardial infarction in 478,242 patients in the Myocardial Ischaemia National Audit Project (MINAP) registry. Int J Cardiol. 2013;168: 881–887. doi: 10.1016/j.ijcard.2012.10.023

- 122.Schiele F, Capuano F, Loirat P, Desplanques-Leperre A, Derumeaux G, Thebaut J, et al. Hospital case volume and appropriate prescriptions at hospital discharge after acute myocardial infarction: a nationwide assessment. Circ Cardiovasc Qual Outcomes. 2013;6: 50–57. doi: 10.1161/CIRCOUTCOMES.112.967133

- 123.Ukawa N, Ikai H, Imanaka Y. Trends in hospital performance in acute myocardial infarction care: a retrospective longitudinal study in Japan. Int J Qual Health Care. 2014;26: 516–523. doi: 10.1093/intqhc/mzu073

- 124.Seghieri C, Policardo L, Francesconi P, Seghieri G. Gender differences in the relationship between diabetes process of care indicators and cardiovascular outcomes. Eur J Public Health. 2016;26: 219–224. doi: 10.1093/eurpub/ckv159

- 125.Policardo L, Barchielli A, Seghieri G, Francesconi P. Does the hospitalization after a cancer diagnosis modify adherence to process indicators of diabetes care quality? Acta Diabetol. 2016;53: 1009–1014. doi: 10.1007/s00592-016-0898-1

- 126.Pan Y, Chen R, Li Z, Li H, Zhao X, Liu L, et al. Socioeconomic Status and the Quality of Acute Stroke Care: The China National Stroke Registry. Stroke. 2016;47: 2836–2842. doi: 10.1161/STROKEAHA.116.013292

- 127.Desai NR, Udell JA, Wang Y, Spatz ES, Dharmarajan K, Ahmad T, et al. Trends in Performance and Opportunities for Improvement on a Composite Measure of Acute Myocardial Infarction Care. Circ Cardiovasc Qual Outcomes. 2019;12: e004983. doi: 10.1161/CIRCOUTCOMES.118.004983

- 128.Ji R, Wang D, Liu G, Shen H, Li H, Schwamm LH, et al. Impact of macroeconomic status on prehospital management, in-hospital care and functional outcome of acute stroke in China. Clin Pract. 2013;10: 701–712.

- 129.Halim SA, Mulgund J, Chen AY, Roe MT, Peterson ED, Gibler WB, et al. Use of Guidelines-Recommended Management and Outcomes Among Women and Men With Low-Level Troponin Elevation: Insights From CRUSADE. Circ Cardiovasc Qual Outcomes. 2009;2: 199–206. doi: 10.1161/CIRCOUTCOMES.108.810127

- 130.Seghieri G, Seghieri C, Policardo L, Gualdani E, Francesconi P, Voller F. Adherence to diabetes care process indicators in migrants as compared to non-migrants with diabetes: a retrospective cohort study. Int J Public Health. 2019;64: 595–601. doi: 10.1007/s00038-019-01220-5

- 131.Su S, Bao H, Wang X, Wang Z, Li X, Zhang M, et al. The quality of invasive breast cancer care for low reimbursement rate patients: A retrospective study. PLoS One. 2017;12: e0184866. doi: 10.1371/journal.pone.0184866

- 132.McDermott M, Lisabeth LD, Baek J, Adelman EE, Garcia NM, Case E, et al. Sex Disparity in Stroke Quality of Care in a Community-Based Study. J Stroke Cerebrovasc Dis. 2017;26: 1781–1786. doi: 10.1016/j.jstrokecerebrovasdis.2017.04.006

- 133.Cross DA, Cohen GR, Lemak CH, Adler-Milstein J. Outcomes For High-Needs Patients: Practices With A Higher Proportion Of These Patients Have An Edge. Health Aff (Millwood). 2017;36: 476–484. doi: 10.1377/hlthaff.2016.1309

- 134.Murtas R, Decarli A, Greco MT, Andreano A, Russo AG. Latent composite indicators for evaluating adherence to guidelines in patients with a colorectal cancer diagnosis. Medicine (Baltimore). 2020;99: e19277. doi: 10.1097/MD.0000000000019277

- 135.Halterman JS, Kitzman H, McMullen A, Lynch K, Fagnano M, Conn KM, et al. Quantifying preventive asthma care delivered at office visits: the Preventive Asthma Care-Composite Index (PAC-CI). J Asthma. 2006;43: 559–564. doi: 10.1080/02770900600859172

- 136.Samuel CA, Zaslavsky AM, Landrum MB, Lorenz K, Keating NL. Developing and Evaluating Composite Measures of Cancer Care Quality. Med Care. 2015;53: 54–64. doi: 10.1097/MLR.0000000000000257

- 137.Halasyamani LK, Davis MM. Conflicting measures of hospital quality: ratings from “Hospital Compare” versus “Best Hospitals”. J Hosp Med. 2007;2: 128–134.

- 138.Kaplan SH, Griffith JL, Price LL, Pawlson LG, Greenfield S. Improving the Reliability of Physician Performance Assessment: Identifying the “Physician Effect” on Quality and Creating Composite Measures. Med Care. 2009;47: 378–387. doi: 10.1097/MLR.0b013e31818dce07

- 139.Wang C, Su S, Li X, Li J, Bao X, Liu M. Identifying Performance Outliers for Stroke Care Based on Composite Score of Process Indicators: an Observational Study in China. J Gen Intern Med. 2020;35: 2621–2628. doi: 10.1007/s11606-020-05923-x

- 140.O’Brien SM, Ferraris VA, Haan CK, Rich JB, Shewan CM, Dokholyan RS, et al. Quality measurement in adult cardiac surgery: part 2—Statistical considerations in composite measure scoring and provider rating. Ann Thorac Surg. 2007;83: 13. doi: 10.1016/j.athoracsur.2007.01.055

- 141.Shwartz M, Ren J, Peköz EA, Wang X, Cohen AB, Restuccia JD. Estimating a composite measure of hospital quality from the Hospital Compare database: differences when using a Bayesian hierarchical latent variable model versus denominator-based weights. Med Care. 2008;46: 778–785. doi: 10.1097/MLR.0b013e31817893dc

- 142.Scholle SH, Roski J, Adams JL, Dunn DL, Kerr EA, Dugan DP, et al. Benchmarking physician performance: reliability of individual and composite measures. Am J Manag Care. 2008;14: 833–838.

- 143.Bilimoria KY, Raval MV, Bentrem DJ, Wayne JD, Balch CM, Ko CY. National assessment of melanoma care using formally developed quality indicators. J Clin Oncol. 2009;27: 5445–5451. doi: 10.1200/JCO.2008.20.9965

- 144.Willis CD, Stoelwinder JU, Lecky FE, Woodford M, Jenks T, Bouamra O, et al. Applying composite performance measures to trauma care. J Trauma. 2010;69: 256–262. doi: 10.1097/TA.0b013e3181e5e2a3

- 145.Holmboe ES, Weng W, Arnold GK, Kaplan SH, Normand S, Greenfield S, et al. The Comprehensive Care Project: Measuring Physician Performance in Ambulatory Practice. Health Serv Res. 2010;45: 1912–1933. doi: 10.1111/j.1475-6773.2010.01160.x

- 146.Kilbourne AM, Farmer Teh C, Welsh D, Pincus HA, Lasky E, Perron B, et al. Implementing composite quality metrics for bipolar disorder: towards a more comprehensive approach to quality measurement. Gen Hosp Psychiatry. 2010;32: 636–643. doi: 10.1016/j.genhosppsych.2010.09.011

- 147.Couralet M, Guérin S, Le Vaillant M, Loirat P, Minvielle E. Constructing a composite quality score for the care of acute myocardial infarction patients at discharge: impact on hospital ranking. Med Care. 2011;49: 569–576. doi: 10.1097/MLR.0b013e31820fc386

- 148.Martirosyan L, Haaijer-Ruskamp FM, Braspenning J, Denig P. Development of a minimal set of prescribing quality indicators for diabetes management on a general practice level. Pharmacoepidemiol Drug Saf. 2012;21: 1053–1059. doi: 10.1002/pds.2248

- 149.Eapen ZJ, Fonarow GC, Dai D, O’Brien SM, Schwamm LH, Cannon CP, et al. Comparison of composite measure methodologies for rewarding quality of care: an analysis from the American Heart Association’s Get With The Guidelines program. Circ Cardiovasc Qual Outcomes. 2011;4: 610–618. doi: 10.1161/CIRCOUTCOMES.111.961391

- 150.Kolfschoten NE, Gooiker GA, Bastiaannet E, van Leersum NJ, van de Velde CJH, Eddes EH, et al. Combining process indicators to evaluate quality of care for surgical patients with colorectal cancer: are scores consistent with short-term outcome? BMJ Qual Saf. 2012;21: 481–489. doi: 10.1136/bmjqs-2011-000439

- 151.de Wet C, McKay J, Bowie P. Combining QOF data with the care bundle approach may provide a more meaningful measure of quality in general practice. BMC Health Serv Res. 2012;12: 351. doi: 10.1186/1472-6963-12-351

- 152.Simms AD, Baxter PD, Cattle BA, Batin PD, Wilson JI, West RM, et al. An assessment of composite measures of hospital performance and associated mortality for patients with acute myocardial infarction. Analysis of individual hospital performance and outcome for the National Institute for Cardiovascular Outcomes Research (NICOR). Eur Heart J Acute Cardiovasc Care. 2013;2: 9–18. doi: 10.1177/2048872612469132

- 153.Weng W, Hess BJ, Lynn LA, Lipner RS. Assessing the Quality of Osteoporosis Care in Practice. J Gen Intern Med. 2015;30: 1681–1687. doi: 10.1007/s11606-015-3342-2

- 154.Amoah AO, Amirfar S, Sebek K, Silfen SL, Singer J, Wang JJ. Developing Composite Quality Measures for EHR-Enabled Primary Care Practices in New York City. J Med Pract Manage. 2015;30: 231–239.

- 155.Kinnier CV, Ju MH, Kmiecik T, Barnard C, Halverson T, Yang AD, et al. Development of a Novel Composite Process Measure for Venous Thromboembolism Prophylaxis. Med Care. 2016;54: 210–217. doi: 10.1097/MLR.0000000000000474

- 156.Aliprandi-Costa B, Sockler J, Kritharides L, Morgan L, Snell L, Gullick J, et al. The contribution of the composite of clinical process indicators as a measure of hospital performance in the management of acute coronary syndromes—insights from the CONCORDANCE registry. Eur Heart J Qual Care Clin Outcomes. 2017;3: 37–46. doi: 10.1093/ehjqcco/qcw023

- 157.Normand ST, Wolf RE, McNeil BJ. Discriminating Quality of Hospital Care in the United States. Med Decis Making. 2008;28: 308–322. doi: 10.1177/0272989X07312710

- 158.Wang TY, Dai D, Hernandez AF, Bhatt DL, Heidenreich PA, Fonarow GC, et al. The importance of consistent, high-quality acute myocardial infarction and heart failure care: results from the American Heart Association’s Get with the Guidelines Program. J Am Coll Cardiol. 2011;58: 637–644. doi: 10.1016/j.jacc.2011.05.012

- 159.National Quality Forum. Composite Performance Measure Evaluation Guidance. 2013. https://www.qualityforum.org/publications/2013/04/composite_performance_measure_evaluation_guidance.aspx