Key Points

Question

Is it feasible to assess solid tumor response to treatment from electronic health record (EHR) documentation and how does it correlate with clinical trial standards?

Findings

This cohort study found that a modified version of RECIST 1.1 with centralized independent readouts (imaging response based on RECIST) enabled patient-level benchmarking of an EHR-based response variable. In a concordance analysis (N = 100), agreement between the 2 measures was 71% for confirmed and 74% for unconfirmed response.

Meaning

The use of a modified version of RECIST with independent centralized review was feasible; however, the concordance between clinician-assessed response and centralized imaging response based on RECIST was moderate.

This cohort study examines the feasibility of assessing solid tumor response to treatment from electronic health record (EHR) documentation and the concordance between EHR-based assessment and clinical trial standards.

Abstract

Importance

In observational oncology studies of solid tumors, response to treatment can be evaluated based on electronic health record (EHR) documentation (clinician-assessed response [CAR]), an approach different from standardized radiologist-measured response (Response Evaluation Criteria in Solid Tumours [RECIST] 1.1).

Objective

To evaluate the feasibility of an imaging response based on RECIST (IRb-RECIST), assess the concordance between CAR and IRb-RECIST assessments, and investigate the causes of discordance.

Design, Setting, and Participants

This cohort study used an EHR-derived, deidentified database that included patients with stage IV non–small cell lung cancer (NSCLC) diagnosed between January 1, 2011, and June 30, 2019, selected from 3 study sites. Data analysis was conducted in August 2020.

Exposures

Undergoing first-line therapy and imaging assessments of response to treatment.

Main Outcomes and Measures

In this study, CAR assessments (referred to in prior publications as “real-world response” [rwR]) were defined as clinician-documented changes in disease burden at radiologic evaluation time points; they were abstracted manually and assigned to response categories. The RECIST-based assessments accommodated routine practice patterns by using a modified version of RECIST 1.1 (IRb-RECIST), with independent radiology reads. Concordance was calculated as the percent agreement across all response categories and across a dichotomous stratification (response [complete or partial] vs no response), with and without response confirmation.

Results

This study found that, in 100 patients evaluated for concordance, agreement between CAR and IRb-RECIST was 71% (95% CI, 61%-80%) for confirmed and 74% (95% CI, 64%-82%) for unconfirmed response. There were more responders using CAR than IRb-RECIST (40 vs 29 with confirmation; 64 vs 43 without confirmation). The main sources of discordance were the differing use of tumor size-change thresholds in RECIST vs routine care, and unavailable baseline or follow-up scans, which resulted in inconsistent anatomic coverage over time.

Conclusions and Relevance

In this cohort study of patients with stage IV NSCLC, we collected routine-care imaging, showing the feasibility of response evaluation using IRb-RECIST criteria with independent centralized review. Concordance between CAR and centralized IRb-RECIST was moderate. Future work is needed to evaluate the generalizability of these results to broader populations, and investigate concordance in other clinical settings.

Introduction

Data collection on patient response to treatment outside of the clinical trial setting and the evidence it generates are valuable areas of research to complement and supplement clinical trials.1 Such evidence can address issues such as generalizability to diverse populations, or investigation of rare populations, becoming a component of clinical development programs and/or regulatory decision making.2,3

These types of data analyses require metrics and end points distinct from clinical trials to accommodate particularities such as source heterogeneity or missing information.4 In clinical trials of solid tumors in oncology, treatment response or disease progression end points are typically measured via RECIST as standard research practice.5 In contrast, the definition and validation of response or progression end points for observational studies is an area of active investigation.4,6,7,8

Our work focused on deidentified EHR-derived data; previously, our team characterized a response variable based on clinician-assessed response (CAR, referred to in prior publications as “real-world response”).9 This variable, derived from EHR notes anchored at imaging assessment time points throughout lines of systemic therapy, relies on the treating clinician’s written synopses of patients’ status, not solely on radiology reports. We compared cohort-level response-rate estimates using clinical trial RECIST and CAR on analogous patient cohorts side by side,9 and investigated the correlation between end point results with either variable, accounting for differences in cohort-level patient characteristics.9 The CAR variable could provide clinically meaningful information comparable to RECIST at the cohort level, but the relationship between CAR and standardized, centralized, radiologist-measured response (RECIST 1.1) at the patient level within the same cohort remained an open question.

We report the results from Retrospective Unscheduled scan collection assessing disease Burden, a non-Interventional Endpoint Snapshot (RUBIES), a study aimed at further characterizing the relationship between a RECIST-based response with independent review and CAR in patients with metastatic (stage IV) non–small cell lung cancer (mNSCLC). Our objectives were (1) to develop and examine the feasibility of conducting centralized imaging response based on RECIST (IRb-RECIST) in community settings, (2) to evaluate the patient-level concordance between CAR and IRb-RECIST evaluations performed on routine-care imaging, as a first step to assess the degree of comparability of both variables, and (3) to investigate causes of discordance, to better understand the nature of the clinical information captured by the CAR variable. We focused our work on a patient cohort engineered to optimize the availability of documentation necessary for our analyses.

Methods

Data Source

This retrospective observational study used the Flatiron Health nationwide longitudinal deidentified EHR-derived database,10 comprising patient-level structured and unstructured data, curated via technology-enabled abstraction.11 During the study period, the database included data from approximately 280 US cancer clinics (approximately 800 sites of care). Institutional review board (IRB) approval of the study protocol, with a waiver of informed consent, was obtained prior to study conduct, covering the data from all sites represented. Approval was granted by the WCG IRB (Flatiron Health Real-World Evidence Parent Protocol, Tracking # FLI118044). The study followed the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) reporting guideline.

Cohort Selection

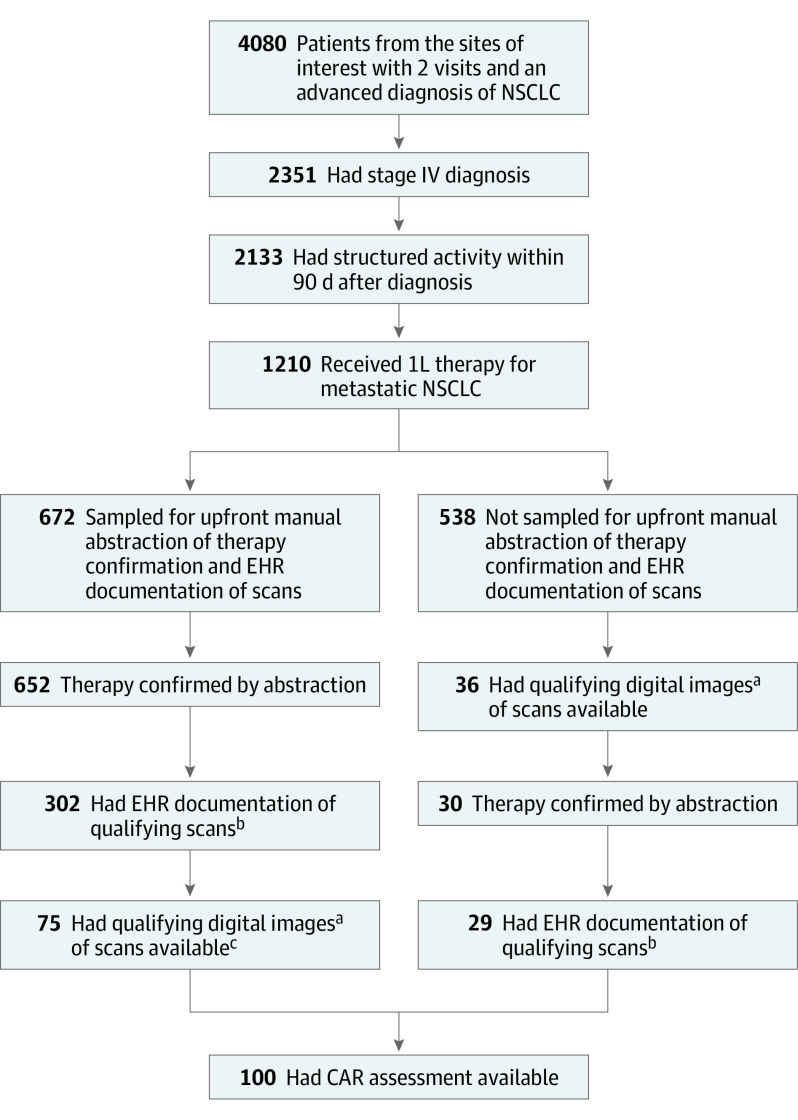

The first step of cohort selection was to apply a series of feasibility criteria. Patients whose EHRs originated from 3 study sites (community oncology practices) were included if they had a stage IV diagnosis between January 1, 2011, and June 30, 2019, had documentation of treatment (first-line therapy, 1L), and at least 2 EHR-documented clinical visits on or after January 1, 2011. Patients without structured activity (ie, a visit, vital, medication order, or medication administration) within 90 days after the initial metastatic diagnosis date were excluded (Figure).

Figure. Patient Sample Flow Through Cohort Selection Steps.

CAR indicates clinician-assessed response; EHR, electronic health record; NSCLC, non–small cell lung cancer.

ᵃ At least 1 baseline CT covering any of the chest, abdomen, or pelvis (CAP), and at least 1 postbaseline scan of any modality of interest.

ᵇ At least 1 baseline CT covering any of the chest, abdomen, or pelvis (CAP), and at least 1 postbaseline scan of any modality of interest.

ᶜ Attrition in some instances was owing to operational constraints, when study staff may not have been able to go through all patients with EHR documentation of scans.

Within the cohort generated via feasibility criteria, we applied additional criteria as a second step, the documented-scan criteria. These included having EHR documentation of at least 1 chest, abdomen, or pelvis (C/A/P) computed tomography (CT) scan performed within 6 weeks prior to 1L treatment initiation (ie, baseline window). Patients were also required to have at least 1 EHR-documented scan performed any time during 1L treatment (ie, follow-up window). To define 1L treatment, receipt of therapy was confirmed by manual abstraction. Qualifying images included CT, bone scans, positron emission tomography (PET)-CT, and magnetic resonance imaging (MRI), regardless of contrast dye administration.

In a subsequent step, a third set of criteria (not included in the prior steps), the available-scan criteria, required patients to have at least 1 C/A/P CT scan available in the baseline window and at least 1 scan of any qualifying modality (as described above) available in the follow-up window. All available digital images from patients with documented scans (as per the documented-scan criteria) were obtained retrospectively from the treating centers directly and/or via picture archiving and communication systems (PACS) and were uploaded into a viewing system in the Digital Imaging and Communications in Medicine (DICOM) format. Deidentified study IDs were entered for each patient to ensure attribution and traceability.

To be eligible for the concordance analysis, patients had to meet both the documented-scan and the available-scan criteria, and had to have at least 1 clinician assessment of tumor burden documented in the EHR following radiographic evaluations (CAR assessment).
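The layered scan criteria above can be expressed as a small eligibility check. The sketch below is a minimal illustration, not the study's abstraction logic: the `Scan` record, its field names, the modality labels, and the boundary handling (baseline scans strictly before 1L start and no more than 42 days earlier; follow-up scans after 1L start and on or before the end of 1L) are all hypothetical assumptions made for this example.

```python
from dataclasses import dataclass
from datetime import date, timedelta

BASELINE_WINDOW = timedelta(weeks=6)  # "within 6 weeks prior to 1L treatment initiation"
QUALIFYING_MODALITIES = {"CT", "bone scan", "PET-CT", "MRI"}  # hypothetical labels


@dataclass
class Scan:  # hypothetical record, for illustration only
    day: date
    modality: str
    covers_cap: bool  # imaging of the chest, abdomen, or pelvis
    documented: bool  # scan documented in the EHR
    available: bool  # digital image retrieved for central reads


def meets_scan_criteria(scans, start, end, field):
    """field='documented' -> documented-scan criteria; 'available' -> available-scan criteria."""
    pool = [s for s in scans if getattr(s, field) and s.modality in QUALIFYING_MODALITIES]
    # Baseline: at least 1 C/A/P CT in the 6 weeks before 1L start (assumed boundaries).
    has_baseline = any(
        s.modality == "CT" and s.covers_cap and start - BASELINE_WINDOW <= s.day < start
        for s in pool
    )
    # Follow-up: at least 1 qualifying scan during 1L (assumed boundaries).
    has_follow_up = any(start < s.day <= end for s in pool)
    return has_baseline and has_follow_up


def concordance_eligible(scans, start, end, n_car_assessments):
    """Both scan criteria plus at least 1 CAR assessment documented in the EHR."""
    return (
        meets_scan_criteria(scans, start, end, "documented")
        and meets_scan_criteria(scans, start, end, "available")
        and n_car_assessments >= 1
    )
```

Checking the documented and available flags separately mirrors the distinction in the text: a patient can satisfy the documented-scan criteria while failing the available-scan criteria when an image could not be retrieved.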

Variables and Assessments

Imaging Response Based on RECIST (IRb-RECIST)

The study team developed a radiology charter that modified RECIST 1.1,5 for application to radiologic images collected retrospectively during routine care, aiming to improve feasibility while minimizing the effect on RECIST performance, based on clinical judgment (Table 1).

Table 1. Key Features in the Application of RECIST 1.1 to Retrospectively Collected Radiologic Images Performed as Part of Routine Care.

| RECIST 1.1 | Imaging response based on RECIST | Observed deviations from RECIST 1.1 and potential effect |
|---|---|---|

Abbreviations: CR, complete response; NA, not applicable; NSCLC, non–small cell lung cancer; PR, partial response.

Qualifying images included computed tomography, bone scans, positron emission tomography, and magnetic resonance imaging, regardless of contrast dye administration.

Scans performed within 14 days of an initial follow-up scan were bundled and read as a single assessment time point, dated to the earliest scan, because a single routine assessment may span a succession of scans (eFigure in the Supplement). The independent centralized review of available images, per the radiology charter, used 2 primary readers plus adjudication. Response was categorized based on lesion measurements and the presence or absence of new lesions at each follow-up assessment. The best overall response was the best response recorded from the start of treatment until the earliest of progression or the end of treatment, taking into account any requirement for confirmation. At each time point, digital images were assessed for readability (readable, readable but suboptimal, or not readable) according to radiology standards established by the review committee.
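The 14-day bundling rule can be sketched as follows. This is a hypothetical illustration rather than the charter's procedure: we assume each bundle is anchored on its earliest scan, that a scan exactly 14 days later still joins the bundle, and that the time point takes the date of the earliest scan; the exact rule is described only in the eFigure.

```python
from datetime import date, timedelta

BUNDLE_WINDOW = timedelta(days=14)  # window from the text; inclusive boundary is assumed


def bundle_scans(scan_dates):
    """Group follow-up scan dates into assessment time points.

    Assumed rule: a bundle is anchored on its earliest scan; later scans within
    14 days of that anchor join it, and the first scan beyond the window starts
    a new bundle. Returns (time_point_date, [scan_dates]) pairs, where the time
    point is dated to the anchor (earliest) scan.
    """
    bundles = []
    for d in sorted(scan_dates):
        if bundles and d - bundles[-1][0] <= BUNDLE_WINDOW:
            bundles[-1][1].append(d)
        else:
            bundles.append((d, [d]))
    return bundles


# Example: a chest CT and a bone scan 9 days apart form one time point.
for tp, scans in bundle_scans([date(2019, 3, 1), date(2019, 3, 10), date(2019, 3, 20), date(2019, 6, 1)]):
    print(tp, len(scans))
```

Anchoring on the first scan (rather than chaining each scan to the previous one) keeps a run of closely spaced scans from merging indefinitely into a single time point; a chained rule would be an equally plausible reading of the text.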

Clinician-Assessed Response

Clinician-assessed response (referred to in prior publications as “real-world response” [rwR])9 was defined as a change in disease burden documented in the EHR by the treating clinician at time points associated with radiologic evaluations during a line of therapy, abstracted manually from EHR documentation, and classified into real-world response categories: complete response, partial response, stable disease, progressive disease, indeterminate response, and not documented.9 Response classifications could refer to preceding assessments for comparison (not necessarily to baseline, attempting to reflect routine care practices).9 Response confirmation was based on documentation of continued response in subsequent time points (which could be noted as “stable” referring to an immediately prior response [partial or complete] documented in the EHR).

Best overall response was the best time point response recorded from the treatment start until the earliest date of progression or the end of treatment, taking into account a requirement for confirmation when applicable.9
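As a rough illustration of how best overall response with confirmation might be derived from a patient's chronological sequence of time-point responses, consider the sketch below. The category labels, their ranking, the use of only the immediately following time point for confirmation, and the downgrade of an unconfirmed response to SD (a convention borrowed from RECIST 1.1) are assumptions. The hypothetical `stable_confirms` flag models the CAR rule that a “stable” note following a response counts as continued response.

```python
# Hypothetical ranking of response categories, best first.
RANK = {"CR": 0, "PR": 1, "SD": 2, "PD": 3, "Indeterminate": 4, "Not documented": 5}


def best_overall_response(timepoints, require_confirmation=True, stable_confirms=True):
    """timepoints: chronological response categories for one line of therapy."""
    # Keep time points up to and including the first PD (progression ends the window).
    window = []
    for tp in timepoints:
        window.append(tp)
        if tp == "PD":
            break
    best = "Not documented"
    for i, tp in enumerate(window):
        category = tp
        if require_confirmation and tp in ("CR", "PR"):
            nxt = window[i + 1] if i + 1 < len(window) else None
            if nxt in ("CR", "PR"):
                # Confirmed at the weaker of the two response levels.
                category = max(tp, nxt, key=RANK.get)
            elif not (nxt == "SD" and stable_confirms):
                # Unconfirmed response: downgraded to SD (assumed convention).
                category = "SD"
        if RANK[category] < RANK[best]:
            best = category
    return best


print(best_overall_response(["PR", "SD", "PD"]))
print(best_overall_response(["PR", "SD", "PD"], stable_confirms=False))
```

The two calls differ only in how a “stable” note after a response is treated (PR under the CAR-style rule, SD under a stricter rule), which is one way confirmed CAR and confirmed IRb-RECIST readouts could diverge for the same patient.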

Statistical Analyses

Scan Availability and Readability Analyses

We assessed the feasibility of the IRb-RECIST approach by calculating the rate of patient-level scan availability in the cohort meeting the feasibility criteria. Feasibility for a patient was defined as (1) having 1L therapy confirmed by abstraction, and (2) meeting both documented-scan and available-scan criteria.

For the cohort where the IRb-RECIST approach was feasible and CAR assessments were also available (ie, concordance cohort), we estimated imaging-level scan availability and rate of readability. Imaging-level scan availability was defined as the percentage of EHR-documented scans that had a corresponding digital image available for radiology reads. Readability rate was based on the proportion of available scans that yielded a time point response readout by the centralized readers.

Concordance Analysis

Patients meeting the documented-scan and available-scan criteria, and with at least 1 CAR assessment, were included in the concordance analysis. Analyses were reported at the patient level: we calculated the agreement between paired CAR and IRb-RECIST assessments for the same patient using percent agreement and Cohen’s κ (with point estimates and 95% CIs). The primary outcome of the study was a dichotomous response outcome (response [CR/PR] vs no response [non-CR/PR]); we also performed the analysis using best response across all response categories and using a dichotomous disease control outcome (ever achieved SD or better vs never achieved at least SD). For all patient-level response outcomes, we performed analyses on the basis of both unconfirmed and confirmed responses. Sensitivity analyses were performed on the dichotomous and best response outcomes, stratified by therapy class and by scan availability.
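Percent agreement and Cohen’s κ can be computed from a cross-tabulation of paired assessments. The sketch below uses the confirmed dichotomous counts reported in Table 3 as input. It assumes an exact (Clopper-Pearson) binomial interval for percent agreement and the simple large-sample standard error for κ; these choices appear consistent with the reported intervals, but the study does not name the R functions it used, and the helper names here are hypothetical.

```python
from math import comb, sqrt


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def clopper_pearson(k, n, alpha=0.05):
    """Exact binomial CI for k agreements out of n, found by bisection."""
    def root(f):  # f increases in p; return p where f(p) == 0
        lo, hi = 0.0, 1.0
        for _ in range(100):
            mid = (lo + hi) / 2
            if f(mid) < 0:
                lo = mid
            else:
                hi = mid
        return (lo + hi) / 2

    lower = 0.0 if k == 0 else root(lambda p: (1 - binom_cdf(k - 1, n, p)) - alpha / 2)
    upper = 1.0 if k == n else root(lambda p: alpha / 2 - binom_cdf(k, n, p))
    return lower, upper


def agreement_and_kappa(table, z=1.96):
    """table[i][j] = patients with IRb-RECIST category i and CAR category j
    (same category order on both axes). Returns (p_o, kappa, kappa CI)."""
    n = sum(map(sum, table))
    k = len(table)
    p_o = sum(table[i][i] for i in range(k)) / n  # observed agreement
    row_p = [sum(row) / n for row in table]
    col_p = [sum(table[i][j] for i in range(k)) / n for j in range(k)]
    p_e = sum(r * c for r, c in zip(row_p, col_p))  # chance agreement
    kappa = (p_o - p_e) / (1 - p_e)
    se = sqrt(p_o * (1 - p_o) / (n * (1 - p_e) ** 2))  # simple large-sample SE
    return p_o, kappa, (kappa - z * se, kappa + z * se)


# Confirmed dichotomous counts: rows = IRb-RECIST response / no response,
# columns = CAR response / no response.
p_o, kappa, kappa_ci = agreement_and_kappa([[20, 9], [20, 51]])
agree_ci = clopper_pearson(20 + 51, 100)
print(p_o, round(kappa, 2), [round(x, 2) for x in kappa_ci])  # 0.71 0.37 [0.17, 0.56]
```

Passing the full cross-tabulation instead, with IRb-RECIST NE and CAR indeterminate or not documented added as extra categories so the table is square, yields 52% agreement and κ ≈ 0.29, in line with the all-categories column of Table 3.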

An exploratory qualitative review of EHR charts was performed for the cases with disagreement between CAR and IRb-RECIST assessments, to catalog any sources of discordance.

Analyses were conducted in August 2020 using R statistical software (version 3.6.1; R Foundation).

Results

Feasibility criteria were met by 1210 patients; 100 patients were included in the concordance analysis (Figure). Study cohorts were largely similar to the broader advanced NSCLC population in the originating EHR-derived longitudinal database, with a median age at diagnosis of 67.5 to 69 years and a predominance of patients with tumors of nonsquamous histology and of patients with a smoking history (Table 2). The concordance cohort included greater proportions of White patients and of patients with nonsquamous cell carcinomas (Table 2; eTable 1 in the Supplement).

Table 2. Characteristics of the Patient Cohort for the Concordance Study.

| Characteristic | Feasibility cohort (n = 1210), No. (%) | Concordance cohort (n = 100), No. (%) |
|---|---|---|
| Age at stage IV diagnosis, median (IQR), y | 69.0 (62.0-76.0) | 67.5 (60.8-76.0) |
| Sex | | |
| Female | 591 (48.8) | 51 (51.0) |
| Male | 619 (51.2) | 49 (49.0) |
| Race | | |
| Asian | 31 (2.6) | 3 (3.0) |
| Black/African American | 42 (3.5) | 1 (1.0) |
| Unknownᵃ | 155 (12.8) | 6 (6.0) |
| White | 886 (73.2) | 84 (84.0) |
| Otherᵇ | 96 (7.9) | 6 (6.0) |
| Histologic findings | | |
| Nonsquamous cell | 919 (76.0) | 83 (83.0) |
| NOS | 49 (4.0) | 0 |
| Squamous cell | 242 (20.0) | 17 (17.0) |
| Smoking history | | |
| Yes | 992 (82.0) | 82 (82.0) |
| No | 215 (17.8) | 18 (18.0) |
| Unknown | 3 (0.2) | 0 |
| Stage IV diagnosis year | | |
| 2011-2014 | 497 (41.1) | 36 (36.0) |
| 2015-2017 | 501 (41.4) | 49 (49.0) |
| ≥2018 | 212 (17.5) | 15 (15.0) |
| EGFR variant | | |
| Tested | 585 (48.3) | 54 (54.0) |
| Variation negative | 433 (74.0) | 37 (68.5) |
| Variation positive | 114 (19.5) | 13 (24.1) |
| Other | 38 (6.5) | 4 (7.4) |
| ALK alteration | | |
| Tested | 580 (47.9) | 52 (52.0) |
| Rearrangement not present | 496 (85.5) | 48 (92.3) |
| Rearrangement present | 25 (4.3) | 3 (5.8) |
| Other | 59 (10.2) | 1 (1.9) |
| KRAS variant | | |
| Tested | 195 (16.1) | 17 (17.0) |
| Variation negative | 121 (62.1) | 7 (41.2) |
| Variation positive | 62 (31.8) | 9 (52.9) |
| Other | 12 (6.2) | 1 (5.9) |
| 1L therapy | | |
| ALK inhibitor | 22 (1.8) | 3 (3.0) |
| Anti–VEGF-containing | 192 (15.9) | 27 (27.0) |
| Clinical study | 6 (0.5) | 0 |
| EGFR TKI | 128 (10.6) | 19 (19.0) |
| Anti-EGFR antibody-based | 3 (0.2) | 1 (1.0) |
| Nonplatinum-based chemotherapy combination | 3 (0.2) | 0 |
| Other | 1 (0.1) | 0 |
| PD-(L)1-based | 182 (15.1) | 15 (15.0) |
| Platinum-based chemotherapy combination | 631 (52.4) | 35 (35.0) |
| Single-agent chemotherapy | 37 (3.1) | 0 |

Abbreviations: ALK, anaplastic lymphoma kinase; EGFR, epidermal growth factor receptor; IQR, interquartile range; NOS, not otherwise specified; PD-(L)1, programmed cell death-(ligand) 1; TKI, tyrosine kinase inhibitor; VEGF, vascular endothelial growth factor.

ᵃ Patients whose race was either not captured or who had only “Hispanic or Latino” ethnicity captured without race were categorized as “Unknown” owing to small cohort size.

ᵇ Patients with a race category not listed above were categorized as “Other.”

Scan Availability and Readability Analyses

Of 1210 patients meeting the feasibility criteria, we selected an upfront sample of 672 for manual abstraction to efficiently confirm receipt of therapy (1L); confirmation was possible for 652 (97%) patients. Of them, 302 (46%) patients met the documented scan criteria, and 75 (12%) met both the documented and available scan criteria. Of the 538 patients not sampled for upfront abstraction, 29 met both the documented and available scan criteria and their receipt of therapy was subsequently confirmed by manual abstraction. Of these 104 patients who met both the documented and available scan criteria, 100 patients had at least 1 CAR assessment in the EHR.

There were a total of 1259 EHR-documented scans, of which 1002 (80%) were available for centralized IRb-RECIST reads; 322 of 434 (74%) of the baseline scans and 680 of 825 (82%) of the follow-up scans were available. Imaging was most frequently unavailable when it had been performed in a radiology facility separate from the oncology clinic. In addition, 177 scans were available for IRb-RECIST reads but not documented in the EHR.

As described in the methods, scans were bundled into assessment time points (within 14-day windows) in the concordance cohort. Few time points (3 [<1%]) had scans classified as unreadable, 332 (34%) as suboptimal but readable, and 629 (65%) as optimal by the independent radiology reading facility.
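The imaging-level availability and readability rates defined under Statistical Analyses are simple proportions. The sketch below recomputes them from the counts reported above; the readability denominator (the 964 time points across the 3 readability categories) is our inference, as the text does not state it, and the 177 available-but-undocumented scans are excluded because availability is defined relative to EHR-documented scans.

```python
# Counts as reported in the text: phase -> (EHR-documented scans, available for central reads)
SCAN_COUNTS = {"baseline": (434, 322), "follow-up": (825, 680)}
# Bundled time points by readability category (denominator inferred as their sum)
READABILITY = {"unreadable": 3, "suboptimal but readable": 332, "optimal": 629}


def availability_rates(scan_counts):
    """Percent of EHR-documented scans with an available digital image, per phase and overall."""
    rates = {phase: 100 * avail / doc for phase, (doc, avail) in scan_counts.items()}
    total_doc = sum(doc for doc, _ in scan_counts.values())
    total_avail = sum(avail for _, avail in scan_counts.values())
    rates["overall"] = 100 * total_avail / total_doc
    return rates


def readability_rates(timepoint_counts):
    """Percent of bundled time points in each readability category."""
    total = sum(timepoint_counts.values())
    return {label: 100 * n / total for label, n in timepoint_counts.items()}


for label, pct in {**availability_rates(SCAN_COUNTS), **readability_rates(READABILITY)}.items():
    print(f"{label}: {pct:.1f}%")
```

Rounded to whole percentages, these reproduce the reported figures: 74% of baseline, 82% of follow-up, and 80% of all documented scans available, and <1%, 34%, and 65% of time points unreadable, suboptimal but readable, and optimal.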

Concordance Results

In the cohort of patients eligible for the concordance analysis (N = 100), percent agreement between CAR and IRb-RECIST for the dichotomous outcome (response vs no response) with confirmation was 71% (95% CI, 61%-80%); results for unconfirmed response were similar, at 74% (95% CI, 64%-82%). Percent agreement was lower in the analysis based on best response categories, at 52% (95% CI, 42%-62%) (Table 3; eTable 2 in the Supplement).

Table 3. Concordance Results From the Analyses Across All Categories, Best Response, and in the Dichotomized Response/Nonresponse Categories.

| Imaging response based on RECIST (n = 100) | CAR: CR, No. (%) | CAR: PR, No. (%) | CAR: SD, No. (%) | CAR: PD, No. (%) | CAR: indeterminate, No. (%) | CAR: not documented, No. (%) |
|---|---|---|---|---|---|---|
| CR | 0 (0.0) | 2 (2.0) | 1 (1.0) | 0 | 0 | 0 |
| PR | 1 (1.0) | 17 (17.0) | 8 (8.0) | 0 | 0 | 0 |
| SD | 1 (1.0) | 15 (15.0) | 24 (24.0) | 8 (8.0) | 0 | 1 (1.0) |
| PD | 0 | 4 (4.0) | 5 (5.0) | 11 (11.0) | 0 | 0 |
| NE | 0 | 0 | 0 | 2 (2.0) | 0 | 0 |

| Imaging response based on RECIST, dichotomized | CAR response (CR/PR), No. (%) | CAR no response, No. (%) |
|---|---|---|
| Response (CR/PR) | 20 (20.0) | 9 (9.0) |
| No response | 20 (20.0) | 51 (51.0) |

| Statistic | All categories | Dichotomized |
|---|---|---|
| Agreement, % (95% CI) | 52 (41.8-62.1) | 71ᵃ (61.1-79.6) |
| κ statistic (95% CI) | 0.29 (0.14-0.43) | 0.37ᵇ (0.17-0.56) |
| Unconfirmed agreement, % (95% CI) | 58 (47.7-67.8) | 74 (64.3-82.3) |
| Unconfirmed κ statistic (95% CI) | 0.37 (0.22-0.52) | 0.50 (0.33-0.67) |

Abbreviations: CAR, clinician-assessed response; CR, complete response; NA, not applicable; NE, not evaluable; PD, progressive disease; PR, partial response; SD, stable disease.

In the analysis based on the dichotomous disease control outcome (ever achieved SD or better vs never achieved at least SD), agreement was 82% (95% CI, 73.1%-89.0%) and κ was 0.48 (95% CI, 0.25-0.70).

Analyses based on confirmed response, except when noted (N = 100).

The qualitative EHR medical chart review revealed 2 potential drivers of discordance between CAR and IRb-RECIST assessments in the dichotomous analysis: lack of standardized quantitative thresholds in the assessments by treating clinicians, which resulted in different meanings for partial response as a CAR category compared with the IRb-RECIST category, and unavailability of baseline or follow-up scans (eTable 3 in the Supplement; see Table 1 for the potential effect of some of the RECIST criteria adaptations on the subsequent concordance analysis).
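To make the threshold issue concrete, the sketch below classifies a time point from sums of target-lesion diameters using the published RECIST 1.1 rules (reference 5): PR requires at least a 30% decrease from the baseline sum, and PD requires at least a 20% increase over the smallest sum recorded (nadir) together with an absolute increase of at least 5 mm, or any new lesion. It deliberately simplifies RECIST (no nontarget lesions, no lymph-node short-axis rule for CR) and does not reproduce the study's modified charter in Table 1; the function name is hypothetical.

```python
def recist_target_response(baseline_sum, nadir_sum, current_sum, new_lesions=False):
    """Time-point response from sums of target-lesion diameters (mm).

    Simplified RECIST 1.1: ignores nontarget lesions and the lymph-node
    short-axis rule for CR. nadir_sum is the smallest sum recorded so far,
    including baseline.
    """
    if new_lesions:
        return "PD"
    increase = current_sum - nadir_sum
    # PD: >=20% increase over nadir AND >=5 mm absolute increase
    # (5 * increase >= nadir_sum is an exact, float-safe form of the 20% test).
    if 5 * increase >= nadir_sum and increase >= 5:
        return "PD"
    if current_sum == 0:
        return "CR"
    # PR: >=30% decrease from baseline (10 * decrease >= 3 * baseline).
    if 10 * (baseline_sum - current_sum) >= 3 * baseline_sum:
        return "PR"
    return "SD"


print(recist_target_response(100, 100, 70))  # 30% shrinkage
print(recist_target_response(100, 100, 75))  # 25% shrinkage
```

Under these rules a 25% shrinkage is SD, whereas a treating clinician may reasonably document it as a response; this is the kind of threshold mismatch the chart review identified as a driver of discordance in the dichotomous analysis.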

Sensitivity/Subgroup Analyses

Concordance between CAR and IRb-RECIST varied across therapy classes (Table 4): it was highest for chemotherapy and lowest for targeted therapy and immunotherapy. This pattern was consistent in both the dichotomous and best-response analyses with response confirmation.

Table 4. Sensitivity Analyses of Concordance Rates (by Percent Agreement) According to Treatment/Therapeutic Class and to Scan Availability.

| Analysis | No. | Best response, unconfirmed, % (95% CI) | Best response, confirmedᵃ, % (95% CI) | Dichotomized response (y/n), unconfirmed, % (95% CI) | Dichotomized response (y/n), confirmedᵃ, % (95% CI) |
|---|---|---|---|---|---|
| According to therapy class | | | | | |
| Anti–VEGF-containing | 27 | 63 (42.4-80.6) | 51.9 (31.9-71.3) | 81.5 (61.9-93.7) | 70.4 (49.8-86.2) |
| Chemotherapy | 35 | 54.3 (36.6-71.2) | 62.9 (44.9-78.5) | 71.4 (53.7-85.4) | 82.9 (66.4-93.4) |
| Targeted | 23 | 65.2 (42.7-83.6) | 43.5 (23.2-65.5) | 78.3 (56.3-92.5) | 56.5 (34.5-76.8) |
| PD-1/PD-L1-based | 15 | 46.7 (21.3-73.4) | 40 (16.3-67.7) | 60 (32.3-83.7) | 66.7 (38.4-88.2) |
| According to scan availability | | | | | |
| All EHR-documented scans available | 23 | 60.9 (38.5-80.3) | 60.9 (38.5-80.3) | 73.9 (51.6-89.8) | 73.9 (51.6-89.8) |
| At least 1 EHR-documented scan unavailable | 77 | 57.1 (45.4-68.4) | 49.4 (37.8-61) | 74.0 (62.8-83.4) | 70.1 (58.6-80) |

Abbreviations: EHR, electronic health record; PD-(L)1, programmed cell death-(ligand) 1; Targeted therapy, anaplastic lymphoma kinase (ALK) and epidermal growth factor receptor (EGFR) tyrosine kinase inhibitors (TKIs), and anti-EGFR antibody-based; VEGF, vascular endothelial growth factor.

Patients with an initial response, but without subsequent scan to confirm it, would be considered unconfirmed.

A separate subanalysis investigated agreement rates according to scan availability. Concordance was generally greater among patients with all EHR-documented scans available than among patients with at least 1 unavailable scan, except for unconfirmed dichotomized response (73.9% vs 74.0%) (Table 4; eTable 2 in the Supplement).

Discussion

This study is one of the first to systematically collect routine care solid tumor imaging from EHR-derived data sources to show the feasibility of implementing a RECIST adaptation using independent centralized imaging review (IRb-RECIST), which was then used to benchmark a response variable derived from clinician-documented response in the EHR. These 2 approaches had moderate concordance overall in the patient cohort studied, possibly affected by factors such as treatment type or availability of scans.

The CAR evaluation yielded more responders compared with centralized IRb-RECIST assessment. This may be owing to reasons intrinsic to the CAR variable, such as treating clinicians not relying on stringent response classification thresholds relative to a baseline, but rather on holistic clinical judgments. These features probably compound more general discrepancy patterns between local-investigator and centralized assessments, well documented in oncology studies.12,13,14,15,16,17

Overall, a formal requirement for response confirmation had little effect on concordance (71% with vs 74% without confirmation in the dichotomous analysis). Given that dedicated confirmation assessments are not typical in routine practice (where surveillance aims to monitor response durability rather than confirmation), this lack of effect mitigates a potential concern that could have invalidated the CAR variable. The variability according to therapy class may be owing to the different depth and duration of benefit across therapies, as well as the different routine assessment frequencies for different therapies.

Other imaging-based studies are actively developing methodology for oncology real-world data (RWD) analyses. Kehl et al8 focused specifically on radiology reports to develop a machine-learning model capable of annotating imaging reports to categorize response or progression. They did not examine their model’s performance relative to RECIST, so their results cannot be directly compared with ours; however, their work is an important step toward scalable analysis of large RWD samples.

Feinberg et al18 applied RECIST to clinical imaging in EHR-based sources and to associated clinicians’ assessments. They used an adaptation of RECIST 1.1 for real-world application, but their study and ours differ across several points: Feinberg et al opted for local rwRECIST assessment by treating clinicians rather than centralized review, they allowed rwRECIST application on available imaging reports in addition to images themselves, and they evaluated single, best response follow-up time points for each patient (as opposed to all assessment time points). Interestingly, some of their findings, such as clinicians’ assessments yielding more responders relative to their rwRECIST adaptation, are consistent with ours, probably owing to similar factors.

Limitations

Among the study limitations are, first, its scope, as defined by cohort size, number and type of study sites (3 community clinics), and clinical setting (single tumor type, single therapy line). We provide context about the representativeness of the study cohort, but these findings on imaging availability may be highly specific to the 3 study sites and to the study processes; indeed, we cannot rule out selection bias associated with the imaging availability requirements that may have affected concordance, although our sensitivity analyses suggest that the association was probably minor. Investigating the generalizability of these results by expanding the scope of future research is therefore warranted. Second, scan availability and consistency were variable (in terms of imaging modalities, contrast administration, anatomic coverage, and timing) despite our eligibility criteria, illustrating challenges associated with data collection from routine clinical care. We used an IRb-RECIST approach (more flexible than RECIST 1.1) as a benchmark; therefore, our reference was not strictly equal to RECIST clinical trial methodology, and the precise effect of our adaptations is not known. Applying criteria and standards conceived for clinical trials to RWD may pose a challenge. Imaging-based studies such as this one may require calibrating the stringency of technical or timing requirements to maintain inclusive cohorts and maximize data availability, followed by deeper research to characterize the analytic effect of those calibrations. Understanding which factors affect concordance will guide data collection improvements and iterations that could mitigate that effect.
Nonetheless, in this study we were able to conduct centralized imaging readouts by modifying RECIST; sensitivity analyses that assessed the effect of data heterogeneity produced largely consistent results with the main analyses, indicating that the confounding effects associated with missing or inconsistent imaging were probably not major.

The implications of this study are 2-fold. In terms of feasibility, we believe our modifications to the RECIST approach largely succeeded in enabling centralized real-world imaging review. At the same time, we acknowledge that a relatively large initial patient sample was required to arrive at the concordance-analysis sample. Adaptation of clinical trial standards such as this may provide objective and reproducible measures of response (or other outcomes) to inform decision making in oncology drug development and could be considered in future studies. In addition, the concordance results add to the body of evidence characterizing a response variable derived from abstracted EHR documentation. Yet further understanding of the utility of these variables (IRb-RECIST and CAR) in different applications, such as real-world comparator cohorts or comparative effectiveness studies, will be needed. It may be premature for CAR to be used for direct statistical comparisons with clinical trial RECIST response, but it could provide valuable information to characterize observational outcomes in a population of interest, or to make cross-cohort comparisons using the same variable. It could also be used to generate external context for results from single-arm clinical trials, with the appropriate adjustment methodology to incorporate concordance benchmarks.

Conclusions

We developed an approach to collect and centralize the evaluation of routine-care imaging in patients with mNSCLC (IRb-RECIST), and applied the ensuing readouts to better characterize an EHR-based CAR variable, performing a thorough quantitative assessment across both. This is key in the development of a framework for generating quantitative, objective response measures from routine-care imaging. Prior work9 indicated that the CAR variable produced clinically meaningful cohort-level results in their correlation with RECIST results and their association with survival. The present patient-level results reinforce the clinical value of deriving response information based on routine imaging, and highlight areas of future improvement (optimizing data completeness and collection, refining standardization of routine documentation practices) as we progress toward reliable and high-quality metrics and end points for observational oncology studies.

eFigure. Scan Timepoint Bundling Guide

eTable 1. Cohort characteristics for the feasibility evaluation and the concordance analysis. Comparison with the parent database where the cohorts were sourced

eTable 2. Detailed concordance results according to scan availability and modality of available scans

eTable 3. Reasons for discordant cases between CAR and Imaging Response Based on RECIST in binary confirmed response

References

1. Khozin S, Blumenthal GM, Pazdur R. Real-world data for clinical evidence generation in oncology. J Natl Cancer Inst. 2017;109(11):djx187. doi: 10.1093/jnci/djx187

2. Mahendraratnam N, Mercon K, Gill M, Benzing L, McClellan MB. Understanding use of real-world data and real-world evidence to support regulatory decisions on medical product effectiveness. Clin Pharmacol Ther. 2022;111(1):150-154. doi: 10.1002/cpt.2272

3. Seifu Y, Gamalo-Siebers M, Barthel FM, et al. Real-world evidence utilization in clinical development reflected by US product labeling: statistical review. Ther Innov Regul Sci. 2020;54(6):1436-1443. doi: 10.1007/s43441-020-00170-y

4. Stewart M, Norden AD, Dreyer N, et al. An exploratory analysis of real-world end points for assessing outcomes among immunotherapy-treated patients with advanced non-small-cell lung cancer. JCO Clin Cancer Inform. 2019;3:1-15. doi: 10.1200/CCI.18.00155

5. Eisenhauer EA, Therasse P, Bogaerts J, et al. New response evaluation criteria in solid tumours: revised RECIST guideline (version 1.1). Eur J Cancer. 2009;45(2):228-247. doi: 10.1016/j.ejca.2008.10.026

6. Luke JJ, Ghate SR, Kish J, et al. Targeted agents or immuno-oncology therapies as first-line therapy for BRAF-mutated metastatic melanoma: a real-world study. Future Oncol. 2019;15(25):2933-2942. doi: 10.2217/fon-2018-0964

7. Feinberg BA, Bharmal M, Klink AJ, Nabhan C, Phatak H. Using response evaluation criteria in solid tumors in real-world evidence cancer research. Future Oncol. 2018;14(27):2841-2848. doi: 10.2217/fon-2018-0317

8. Kehl KL, Elmarakeby H, Nishino M, et al. Assessment of deep natural language processing in ascertaining oncologic outcomes from radiology reports. JAMA Oncol. 2019;5(10):1421-1429. doi: 10.1001/jamaoncol.2019.1800

9. Ma X, Bellomo L, Magee K, et al. Characterization of a real-world response variable and comparison with RECIST-based response rates from clinical trials in advanced NSCLC. Adv Ther. 2021;38(4):1843-1859. doi: 10.1007/s12325-021-01659-0

10. Ma X, Long L, Moon S, Adamson BJS, Baxi SS. Comparison of population characteristics in real-world clinical oncology databases in the US: Flatiron Health, SEER, and NPCR. medRxiv. 2020:2020.03.16.20037143. doi: 10.1101/2020.03.16.20037143

11. Birnbaum B, Nussbaum N, Seidl-Rathkopf K, et al. Model-assisted cohort selection with bias analysis for generating large-scale cohorts from the EHR for oncology research. arXiv. Published online January 13, 2020. doi: 10.48550/arXiv.2001.09765

12. Dello Russo C, Cappoli N, Navarra P. A comparison between the assessments of progression-free survival by local investigators versus blinded independent central reviews in phase III oncology trials. Eur J Clin Pharmacol. 2020;76(8):1083-1092. doi: 10.1007/s00228-020-02895-z

13. Dello Russo C, Cappoli N, Pilunni D, Navarra P. Local investigators significantly overestimate overall response rates compared to blinded independent central reviews in phase 2 oncology trials. J Clin Pharmacol. 2021;61(6):810-819.

14. Zhang JJ, Chen H, He K, et al. Evaluation of blinded independent central review of tumor progression in oncology clinical trials: a meta-analysis. Ther Innov Regul Sci. 2013;47(2):167-174. doi: 10.1177/0092861512459733

15. Dodd LE, Korn EL, Freidlin B, et al. Blinded independent central review of progression-free survival in phase III clinical trials: important design element or unnecessary expense? J Clin Oncol. 2008;26(22):3791-3796. doi: 10.1200/JCO.2008.16.1711

16. Mannino FV, Amit O, Lahiri S. Evaluation of discordance measures in oncology studies with blinded independent central review of progression-free survival using an observational error model. J Biopharm Stat. 2013;23(5):971-985. doi: 10.1080/10543406.2013.813516

17. Zhang J, Zhang Y, Tang S, et al. Evaluation bias in objective response rate and disease control rate between blinded independent central review and local assessment: a study-level pooled analysis of phase III randomized control trials in the past seven years. Ann Transl Med. 2017;5(24):481. doi: 10.21037/atm.2017.11.24

18. Feinberg BA, Zettler ME, Klink AJ, Lee CH, Gajra A, Kish JK. Comparison of solid tumor treatment response observed in clinical practice with response reported in clinical trials. JAMA Netw Open. 2021;4(2):e2036741. doi: 10.1001/jamanetworkopen.2020.36741
