Abstract

The COVID-19 pandemic continues to wreak havoc on the health and well-being of the world's population. Successful screening of infected patients is a critical step in the fight against the disease, and radiological examination using chest radiography is one of the most important screening methods. Reverse-transcriptase polymerase chain reaction (RT-PCR) remains the gold standard for the definitive diagnosis of COVID-19. However, currently available lab tests may not detect all infected individuals, so new screening methods are required. Motivated by this need and by the open-source efforts in this research area, we propose a Multi-Input Transfer Learning COVID-Net fuzzy convolutional neural network to detect COVID-19 cases from chest X-rays. Furthermore, we use an explainability method to investigate the predictions of several convolutional COVID-Net networks, in an effort not only to gain deeper insight into the critical factors associated with COVID-19 cases but also to aid clinicians in improving screening. We show that, using transfer learning and pre-trained models, COVID-19 can be detected with a high degree of accuracy. We chose four neural networks to predict the probability of the disease from X-ray images. Finally, to achieve better results, we considered various methods to verify the techniques proposed here. As a result, we obtained a model with an AUC of 1.0 and accuracy, precision, and recall of 0.97. The model was quantized for use in Internet of Things devices and maintained an accuracy of 0.95.

Keywords: Soft computing, X-ray, COVID-19, Multi-input convolutional network, Internet of Things, XAI

1. Introduction

Coronavirus disease 2019 (COVID-19) is a viral disease caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). The outbreak has had a detrimental impact on the economy and on health. Many nations lack the medical tools necessary for COVID-19 detection and are looking to develop a low-cost, fast tool to detect and diagnose the virus efficiently. Even though a chest X-ray (CXR) scan is a useful candidate, the images it produces must be analyzed, and large numbers of examinations need to be processed. CXR screening of individuals is a vital step in the struggle against COVID-19. The disease causes pulmonary opacities and bilateral parenchymal ground-glass opacities, sometimes with a peripheral lung distribution and morphology. Several Deep Learning (DL) techniques have shown promising accuracy in detecting COVID-19 patients from CXRs [1], [2]. Because most hospitals have X-ray machines, CXR is the radiologists' first choice. Automatic diagnosis of COVID-19 from chest images is particularly desirable because radiologists are few and busy in pandemic conditions. Although most machine learning models have a margin of error, automation can be critical for screening patients, who can then be assessed with more precise tests.

Image segmentation is an essential procedure for most medical image analysis tasks. Good segmentations give clinicians and patients essential information for 2-D and 3-D visualization, surgical preparation, and early disease detection [3]. Segmentation delineates regions of interest (ROIs), e.g., lung, lobes, bronchopulmonary segments, and infected areas or lesions, in CXR or computed tomography (CT) images. Segmented regions can be further used to extract features for description and other applications [4]. Automated computer-aided diagnostic (CADx) tools powered by artificial intelligence (AI) techniques to detect and distinguish COVID-19-related pulmonary abnormalities could be tremendously valuable, given the significant number of patients. These tools are particularly vital in places with inadequate CT accessibility or radiological expertise, where CXRs provide fast, high-throughput triage in mass casualty situations. They combine radiological image processing components with computer vision to identify common disease indications and localize problematic ROIs. Recent advances in machine learning (ML), especially DL approaches using convolutional neural networks (CNNs), have demonstrated promising performance in identifying, classifying, and measuring disease patterns in CT scans and CXRs [5], [6], [7], [8], [9], [10], [11], [12], [13].

In the past decades, fuzzy logic has played a vital role in many research areas [10]. Fuzzy logic is an offshoot of fuzzy set theory that mimics human reasoning and thinking to improve a procedure's efficacy when handling uncertain or vague data [13].

Post-training quantization is a conversion technique that can reduce model size while improving CPU and hardware accelerator latency, with little loss in model accuracy. An already-trained TensorFlow floating-point model can be quantized by converting it to TensorFlow Lite format with the TensorFlow Lite Converter.

The goal of this research is therefore to use ML to identify COVID-19 from X-rays. VGG16, ResNet152V2, InceptionV3, and EfficientNetB3 were chosen as the neural networks to predict the disease probability. To obtain better results, we use several techniques proposed here, such as fuzzy filters and multi-input networks. Fuzzy rough set-based approaches find a reduct directly on the initial data according to a fuzzy equivalence relation; the fuzzy relation preserves the differences between items, and a fuzzy rough set approach can improve classification precision. As a result, we were able to produce models with an Area Under Curve (AUC) of 1.0 and several variations with very high values of performance metrics such as precision, accuracy, and recall. To quantize the model, we use the TensorFlow Lite Converter; the quantized model reaches an accuracy of 0.95.

The main novelty of this study is the use of a multi-input network that combines segmented and non-segmented images in a neural network composed of two pre-trained networks. In a nutshell, the primary contributions of this paper are:

- the use of a multi-input approach to classify CXRs with COVID-19;
- the application of a trapezoidal membership function to generate fuzzy edge images of CXRs with COVID-19;
- classification models with an AUC of 0.99 and a recall of 100% for COVID-19 detection on CXRs.

2. Literature review

In epidemic regions, patients suspected of having COVID-19 are in immediate need of identification and suitable therapy. Nevertheless, medical images, mainly chest CT, include hundreds of slices that take experts a very long time to diagnose. Additionally, COVID-19, being a new disease, has manifestations similar to several other kinds of pneumonia, so radiologists need to accumulate considerable experience to reach an accurate diagnosis. Therefore, AI-assisted diagnosis using medical images is highly desirable [4].

Several studies aim to separate COVID-19 patients from non-COVID-19 subjects. Researchers distinguished COVID-19 pneumonia manifestations from those of other viral pneumonia on chest CT scans with high specificity; COVID-19 pneumonia was found to be peripherally distributed, with ground-glass opacities (GGO) and vascular thickening [14]. Abdel-Basset et al. [1] propose a hybrid COVID-19 detection model based on an improved marine predators algorithm (IMPA) for X-ray image segmentation. A ranking-based diversity reduction (RDR) strategy enhances the IMPA so that it reaches better solutions in fewer iterations. The experimental results show that the hybrid model outperforms all other algorithms on a range of metrics. Abdul Waheed et al. [1] present a method to generate synthetic chest X-ray (CXR) images by developing an Auxiliary Classifier Generative Adversarial Network (ACGAN) [14].

Segmentation approaches in COVID-19 applications can mostly be grouped into two classes: lung-region-oriented approaches and lung-lesion-oriented approaches. The former aim to separate lung regions, i.e., the entire lung and the lung lobes, from other regions in CT or X-ray images, which is considered a prerequisite step in COVID-19 applications [4]. Jin et al. [15] present a two-stage pipeline for screening COVID-19 in CT images, in which the entire lung region is first detected by an efficient segmentation network based on UNet+. Wang proposes a novel COVID-19 Pneumonia Lesion segmentation network (COPLE-Net) to better deal with lesions of various scales and appearances [16]. Chouhan et al. [6] extract features from images using several pre-trained neural network models. The study uses five distinct models, examines their performance, and combines their outputs in an ensemble that beats the individual models, reaching state-of-the-art performance in pneumonia identification. It reaches an accuracy of 96.4% with a recall of 99.62% on unseen data from the Guangzhou Women and Children's Medical Center dataset. Zheng et al. [17] developed weakly supervised DL-based software that uses 3D CT volumes to identify COVID-19. The lung region was segmented using a pre-trained UNet; the segmented 3D lung region was then fed into a 3D deep neural network to predict the probability of COVID-19 infection.

The present study takes a different approach from the studies above, using a multi-input architecture that combines segmented and non-segmented images. Multi-input networks have been applied in other domains. To extract information from fabric images, Lin et al. propose a multi-input neural network whose inputs are the segmented small-scale image and the related features collected using standard methods. Their experiments suggest that including these manually extracted features in a neural network can increase its performance to a degree [18]. For identifying autism, Epalle et al. propose a multi-input deep neural network model. The architecture is built to accommodate neuroimaging data preprocessed using three different reference atlases. For each training example, the network receives data from three alternative parcellation schemes at the same time and automatically learns discriminative features from the three input sets. As a result, the learned features become more general and less reliant on a single brain parcellation approach. The study used a collection of 1,038 real participants and an augmented set of 10,038 samples to validate the model with cross-validation. On real data, the study achieves a classification accuracy of 78.07%, and on augmented data, the model reaches 79.13%, which is about 9% higher than previously reported results [19].

3. Background

3.1. Blind/referenceless image spatial quality evaluator

Blind/referenceless image spatial quality evaluator (BRISQUE) [20], [21] is a no-reference image quality assessment technique. The BRISQUE algorithm estimates the quality score of an image at low computational cost. It uses pointwise statistics of locally normalized luminance signals and measures image naturalness based on measured deviations from a natural image model. The algorithm also models the distribution of pairwise statistics of neighboring normalized luminance signals, which provides distortion orientation information. Although the model is multiscale, its features are simple to compute, making it computationally fast and time-efficient [20], [21].

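The BRISQUE model operates in the spatial domain. First, the locally normalized luminance, also known as the Mean Subtracted Contrast Normalized (MSCN) coefficient [20], is calculated as:

$$\hat{I}(m,n) = \frac{I(m,n) - \mu(m,n)}{\sigma(m,n) + C} \tag{1}$$

where $I(m,n)$ is the intensity image, $\mu(m,n)$ is the local mean, and $\sigma(m,n)$ is the local variance used for normalization; $m \in \{1, 2, \dots, M\}$ and $n \in \{1, 2, \dots, N\}$ are spatial indices, $M$ and $N$ are the image height and width, respectively, and $C$ is a small constant that avoids a zero denominator. The local mean $\mu(m,n)$ and local variance $\sigma(m,n)$ are calculated using the following equations:

$$\mu(m,n) = \sum_{k=-K}^{K} \sum_{l=-L}^{L} w_{k,l}\, I(m+k,\, n+l) \tag{2}$$

$$\sigma(m,n) = \sqrt{\sum_{k=-K}^{K} \sum_{l=-L}^{L} w_{k,l}\, \bigl[ I(m+k,\, n+l) - \mu(m,n) \bigr]^2} \tag{3}$$

where $w = \{ w_{k,l} \mid k = -K, \dots, K,\ l = -L, \dots, L \}$ is a 2D circularly symmetric Gaussian weighting function.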
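The MSCN computation can be sketched with NumPy as follows. This is a minimal illustration rather than the reference BRISQUE implementation: the 7 × 7 window ($K = L = 3$), the Gaussian width `sigma = 7/6`, the constant `c = 1`, and the reflect padding at the borders are assumptions chosen for the example, and the function names are hypothetical.

```python
import numpy as np

def gaussian_kernel(half_width=3, sigma=7.0 / 6.0):
    """2D circularly symmetric Gaussian window, rescaled to sum to 1."""
    ax = np.arange(-half_width, half_width + 1)
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()

def local_weighted_sum(image, kernel):
    """Weighted sum over each pixel's neighbourhood (reflect padding at borders)."""
    k = kernel.shape[0] // 2
    padded = np.pad(image, k, mode="reflect")
    rows, cols = image.shape
    out = np.zeros((rows, cols), dtype=np.float64)
    for dk in range(-k, k + 1):
        for dl in range(-k, k + 1):
            window = padded[k + dk : k + dk + rows, k + dl : k + dl + cols]
            out += kernel[dk + k, dl + k] * window
    return out

def mscn(image, c=1.0):
    """Mean Subtracted Contrast Normalized coefficients of a grayscale image."""
    image = np.asarray(image, dtype=np.float64)
    w = gaussian_kernel()
    mu = local_weighted_sum(image, w)
    # Because the weights sum to 1, sum(w * (I - mu)^2) = sum(w * I^2) - mu^2.
    var = local_weighted_sum(image ** 2, w) - mu ** 2
    sigma = np.sqrt(np.maximum(var, 0.0))  # clip tiny negative rounding errors
    return (image - mu) / (sigma + c)
```

On a perfectly flat image the local mean equals the pixel value everywhere, so all MSCN coefficients are (numerically) zero; textured regions produce coefficients whose distribution BRISQUE then compares with natural-image statistics.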

3.2. K-means segmentation method

K-means clustering is a common segmentation technique among pixel-based methods. Pixel-based clustering approaches have low complexity compared with region-based approaches. K-means clustering is adequate for medical image segmentation because the number of clusters is usually known for images of particular areas of the body. K-means partitions data by grouping data points with similar feature vectors into clusters. Let the feature vectors obtained from the $l$ data points to be clustered be $X = \{x_i \mid i = 1, 2, \dots, l\}$. The generalized algorithm initializes $k$ cluster centroids by randomly choosing $k$ feature vectors from $X$. Next, the feature vectors are grouped into $k$ clusters using a chosen distance measure, such as Euclidean distance, and the centroids are recomputed until the assignment stabilizes [22].
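A minimal NumPy sketch of this procedure, applied to pixel intensities, is given below. It assumes a single feature per pixel (the gray level), a fixed number of iterations instead of a convergence test, and a hypothetical function name `kmeans_segment`; it is meant to illustrate the algorithm, not to reproduce the segmentation code used in the experiments.

```python
import numpy as np

def kmeans_segment(image, k=2, n_iter=20, seed=0):
    """Cluster pixel intensities into k groups; returns (label image, centroids)."""
    rng = np.random.default_rng(seed)
    pixels = np.asarray(image, dtype=np.float64).reshape(-1, 1)  # one feature per pixel
    # Initialise the centroids with k randomly chosen feature vectors.
    centroids = pixels[rng.choice(len(pixels), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Assignment step: nearest centroid (Euclidean distance in 1-D is |x - c|).
        labels = np.abs(pixels - centroids.T).argmin(axis=1)
        # Update step: each centroid becomes the mean of its members.
        for j in range(k):
            members = pixels[labels == j]
            if len(members):  # keep the old centroid if a cluster is empty
                centroids[j] = members.mean(axis=0)
    labels = np.abs(pixels - centroids.T).argmin(axis=1)
    return labels.reshape(np.shape(image)), centroids.ravel()
```

For a CXR, `k` would be set to the number of tissue classes expected in the image, and the returned label image can be used directly as a segmentation mask.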

3.3. Fuzzy Edge images using trapezoidal membership functions

Edge detection is the strategy used most often for segmenting images based on changes in intensity. It is a prerequisite for image segmentation because it usually allows the image to be represented in black and white, and it identifies the size and shape of an object. A better edge detection method is therefore likely to be a valuable tool for several applications. A digital image is a discrete description of reality, composed of the colors of the pixels and their positions. Any treatment of the image has to account for its discretization issues. For instance, it is sometimes not possible to discern which pixel belongs to which object, and even a human has some difficulty placing the edges in an image. Conventional segmentation techniques such as watershed, region growing, and thresholding are suitable for segmenting regions with clear boundaries. However, for cases with blurred boundaries and inhomogeneity, these methods cannot segment the areas properly. Fuzzy logic is therefore a suitable choice for representing these edges [23], [24], [25], [26], [27].

Fuzzy systems are an alternative to classic Boolean logic, which has only two states: false or true. In a fuzzy system, membership values range from 0 (completely false) to 1 (completely true). Fuzzy systems handle the uncertainties in the information and are used to solve image processing problems [28]. In a fuzzy inference system (FIS) [24], a fuzzy set represents each fuzzy number over a predetermined range of crisp values, each with a grade of membership. The fuzzy sets of the input membership functions convert crisp inputs into fuzzy inputs. A fuzzy set $A$ is defined as $A = \{(x, \mu_A(x)) \mid x \in X\}$, where $x$ is an element of the set $X$ and the membership value $\mu_A(x) \in [0, 1]$ expresses the grade of membership of each element in $A$.

3.3.1. Fuzzy Trapezoidal membership function

A membership function (MF) is a curve that defines how every pixel of the input is mapped to a membership value between 0 and 1. The trapezoidal MF curve is a function of a vector and is determined by four scalar parameters a, b, c, and d [29], [30].
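The standard trapezoidal membership function rises linearly from 0 at $a$ to 1 at $b$, stays at 1 up to $c$, and falls linearly back to 0 at $d$. The sketch below implements that textbook definition with NumPy, assuming $a \le b \le c \le d$; the function name is hypothetical, and it is an illustration rather than the library routine used for the experiments.

```python
import numpy as np

def trapezoidal_mf(x, a, b, c, d):
    """Trapezoidal membership values in [0, 1] for an array x (requires a <= b <= c <= d)."""
    if not (a <= b <= c <= d):
        raise ValueError("parameters must satisfy a <= b <= c <= d")
    x = np.asarray(x, dtype=np.float64)
    y = np.zeros_like(x)
    if b > a:  # rising edge; skipped when the left side is vertical
        rising = (x > a) & (x < b)
        y[rising] = (x[rising] - a) / (b - a)
    y[(x >= b) & (x <= c)] = 1.0  # plateau
    if d > c:  # falling edge; skipped when the right side is vertical
        falling = (x > c) & (x < d)
        y[falling] = (d - x[falling]) / (d - c)
    return y
```

Applied to a grayscale image array, the function returns an array of the same shape in which each pixel holds its membership grade; the degenerate cases $a = b$ or $c = d$ give a vertical edge instead of a ramp.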

3.4. Transfer learning

The use of DL and CNN methodologies in computer vision applications has grown quickly. DL draws its power from optimizing multiple layers of neurons connected as a system that combines linear and non-linear operators.

A convolutional neural network is a type of feed-forward neural network broadly employed for image classification, object detection, and recognition. Its fundamental principle is convolution, which generates filtered feature maps stacked on top of each other [31].

A CNN is a DL structure that computes the convolution between its weights and an input image. Unlike conventional ML methods, it learns features from the input data. During the learning process, the optimal values of the convolution coefficients are found using a pre-defined cost function, and the features are determined automatically from them. Convolution takes a small matrix of numbers (known as a kernel or filter), passes it over an image, and transforms the image based on the filter values. The resulting feature map values are calculated according to the following formula [32]:

$$G[m,n] = (f * h)[m,n] = \sum_{j} \sum_{k} h[j,k]\, f[m-j,\, n-k] \tag{4}$$

where $f$ is the input image, $h$ is the kernel, and $m$ and $n$ index the rows and columns of the resulting feature map $G$.
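As an illustration of the operation, the sketch below computes a 2D convolution in "valid" mode (no padding, stride 1) with NumPy, i.e., each output value is the sum of the products between the flipped kernel and the image patch under it. The function name is hypothetical. Note that DL frameworks usually skip the kernel flip (cross-correlation); since the kernel is learned, the distinction does not matter in practice.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """2D convolution without padding: G[m, n] = sum_jk h[j, k] * f[m - j, n - k]."""
    image = np.asarray(image, dtype=np.float64)
    kernel = np.asarray(kernel, dtype=np.float64)
    kh, kw = kernel.shape
    flipped = kernel[::-1, ::-1]  # true convolution flips the kernel on both axes
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for m in range(out_h):
        for n in range(out_w):
            # Dot product between the kernel and the patch it currently covers.
            out[m, n] = np.sum(image[m : m + kh, n : n + kw] * flipped)
    return out
```

A 3 × 3 kernel applied to a 5 × 5 image yields a 3 × 3 feature map, because the kernel fits in only three positions along each axis.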

The convolutional layer outputs a convolved feature map, obtained by applying the dot product between each small region of the input and the filter weights connected to it. Then, the pooling layer performs a downsampling operation. In a convolutional neural network, the output size of a layer can be computed using the following formula [31]:

$$O = \frac{W - F + 2P}{S} + 1 \tag{5}$$

where $O$ is the output size, $W$ is the input size, $F$ is the kernel size, $P$ is the padding value, and $S$ is the stride.
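The relation between input size, kernel size, padding, and stride, $(W - F + 2P)/S + 1$, can be checked with a few lines of Python. The helper name is hypothetical, integer (floor) division is assumed when the stride does not divide evenly, and the 224-pixel input is an assumed example size.

```python
def conv_output_size(w, f, p=0, s=1):
    """Spatial output size of a convolution or pooling layer: (W - F + 2P) // S + 1."""
    if s <= 0:
        raise ValueError("stride must be positive")
    if w + 2 * p < f:
        raise ValueError("kernel larger than padded input")
    return (w - f + 2 * p) // s + 1

# A 3x3 convolution with padding 1 and stride 1 preserves the spatial size,
# while a 2x2 pooling with stride 2 halves it.
size = 224
size = conv_output_size(size, f=3, p=1, s=1)   # still 224
size = conv_output_size(size, f=2, p=0, s=2)   # 112
```

Applying the 2 × 2, stride-2 pooling five times to a 224-pixel input gives 112, 56, 28, 14, and finally 7 pixels per side.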

Transfer learning has gained considerable importance because it can work with little or no data in the training phase. That is, well-established knowledge is transferred from one domain to another. Transfer learning is well suited to scenarios where a model performs poorly due to obsolete or scant data [33], [34]. The form of transfer learning used in DL is known as inductive transfer: the scope of feasible models, i.e., the model bias, is narrowed in a beneficial way by using a model fit on a different but related task.

Since AlexNet won the ImageNet competition in 2012, CNNs have been used for a broad range of DL applications, and researchers have been applying CNNs to many different tasks [35].

3.4.1. VGG16

The VGG16 network is composed of 13 convolutional layers with 3 × 3 kernels and three fully connected layers. Max-pooling layers follow some of the convolutional layers. The convolution stride is set to 1 pixel, and a padding of 1 pixel is used for the 3 × 3 convolutional layers. The five max-pooling layers use a 2 × 2-pixel window with a stride of 2. All hidden layers of the network use ReLU as the activation function [36].

3.4.2. ResNet

Deep Residual Network (ResNet) is an Artificial Neural Network (ANN) that overcomes the loss of accuracy observed when a plain ANN is made deeper than a shallower one. Its purpose is to build ANNs with more layers and higher accuracy. The idea is to create an ANN in which weight updates can still reach the shallower layers, i.e., to reduce gradient degradation [37]. ResNet restructures the layers so that they learn residual functions with reference to the layer inputs instead of learning unreferenced functions. This restructuring alleviated the vanishing gradient problem in CNNs and allowed considerably deeper neural networks to be trained. ResNet-152 has 152 layers, 8 times deeper than VGG nets, yet has lower complexity. An ensemble of ResNet-152 models attained a 3.57% top-5 error on the ImageNet test dataset and won first place in the ILSVRC 2015 classification challenge.

3.4.3. InceptionV3

Google's Inception V3 is the third version of the Inception series of DL architectures. It is trained on 1000 classes of the original ImageNet dataset, which contains more than 1 million images [38].

3.4.4. EfficientNets

EfficientNet is a family of DL models with fewer parameters than state-of-the-art alternatives. The models improve performance through a balanced combination of depth, width, and resolution, which are scaled jointly using a compound coefficient. The advantage of EfficientNets over other CNNs is the decrease in the number of FLOPS and parameters combined with an increase in accuracy. The classification accuracy of EfficientNet can also be better than that of models with similar complexity [35], [39], [40].

3.5. Class activation map

Convolutional layers can act as object detectors even when no annotated bounding boxes are provided for the training samples. A CNN loses this ability when fully connected layers are used for classification. Whereas a traditional CNN only identifies the class of an image, a class activation map produces a heatmap that shows which areas of the image were significant for the classification. Class activation mapping is thus a method to highlight the discriminative regions that link an image to a specific class [41], [42].

3.6. Quantization

Deep learning has a long track record of success, but the use of heavy algorithms on large graphical processing units is not ideal. In response to this disparity, a new class of deep learning methods known as quantization has emerged. Quantization is used to reduce the size of the neural network while maintaining high performance accuracy. This is particularly important for on-device applications, where memory and computation capacity are constrained. The process of approximating a neural network that uses floating-point numbers by a neural network with low bit width numbers is known as quantization for deep learning. The memory requirements and computational costs of using neural networks are drastically reduced as a result of this.
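To make the idea concrete, the following NumPy sketch maps floating-point weights to 8-bit integers with an affine (scale and zero-point) scheme and maps them back. It is an illustration of the principle only, under the assumption of per-tensor asymmetric int8 quantization; the function names are hypothetical, and this is not the TensorFlow Lite implementation.

```python
import numpy as np

def quantize_int8(weights):
    """Map float weights to int8 using real_value = scale * (q - zero_point)."""
    w_min = min(float(weights.min()), 0.0)  # make sure 0.0 is representable
    w_max = max(float(weights.max()), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # fall back to 1.0 for all-zero tensors
    zero_point = int(round(-128 - w_min / scale))
    q = np.clip(np.round(weights / scale) + zero_point, -128, 127).astype(np.int8)
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    """Recover approximate float values from the int8 representation."""
    return (q.astype(np.float32) - zero_point) * scale

rng = np.random.default_rng(0)
weights = rng.normal(size=1000).astype(np.float32)
q, scale, zero_point = quantize_int8(weights)
max_error = float(np.abs(dequantize(q, scale, zero_point) - weights).max())
```

The int8 tensor occupies a quarter of the memory of the float32 original, and the reconstruction error stays on the order of one quantization step (`scale`), which is why accuracy typically drops only slightly after quantization.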

4. Proposed method

The proposed method uses a multi-input network with two input images: a non-segmented image and a second image segmented using either a fuzzy trapezoidal membership function or K-means clustering. The proposal consists of four stages. The first stage reads the images of the two datasets (Section 4.1) and shuffles the data. The second stage applies the fuzzy filter and, separately, K-means segmentation, in order to compare which achieves the best accuracy. The fuzzy filter applies a trapezoidal fuzzy number defined as:

$$\mu_A(x; a, b, c, d) = \begin{cases} 0, & x \le a \\ \dfrac{x - a}{b - a}, & a < x < b \\ 1, & b \le x \le c \\ \dfrac{d - x}{d - c}, & c < x < d \\ 0, & x \ge d \end{cases} \tag{6}$$

We use the BRISQUE score to select the best parameters (a,b,c,d) for the fuzzy filter. Initially, images treated with the fuzzy filter and images segmented with K-means are used to train four networks using transfer learning, namely VGG16, InceptionV3, ResNet152V2, and EfficientNetB3. This test compares the performance of the fuzzy filter with that of K-means clustering. We evaluate the tests using the AUC, accuracy, precision, and recall metrics.

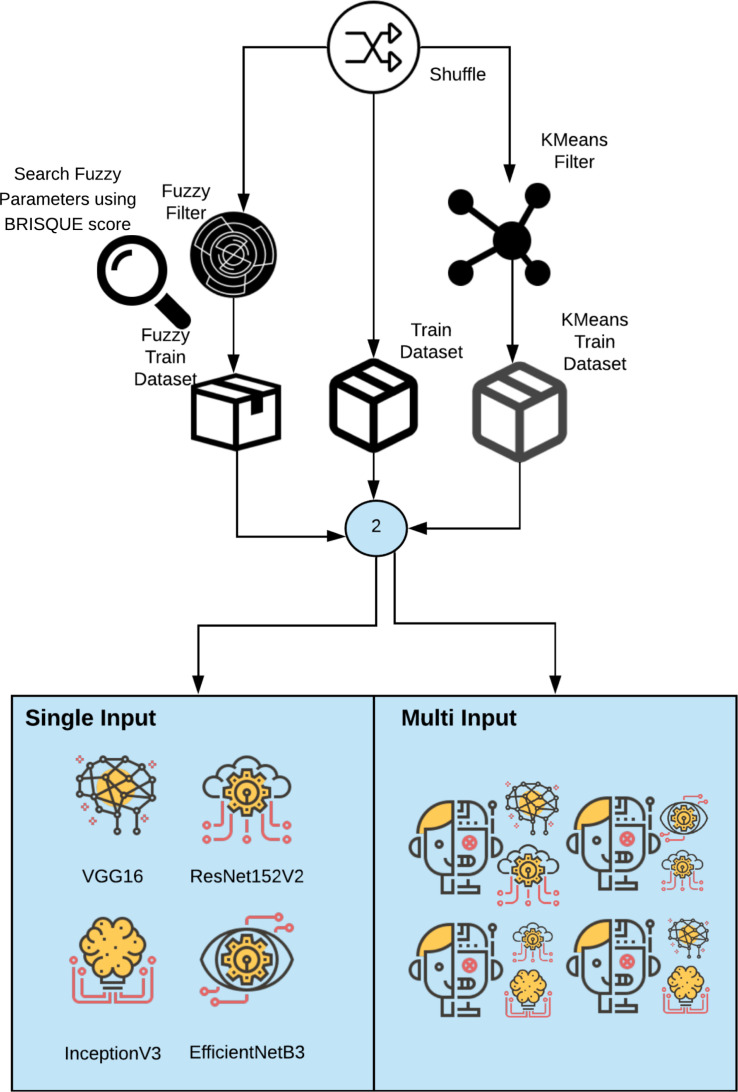

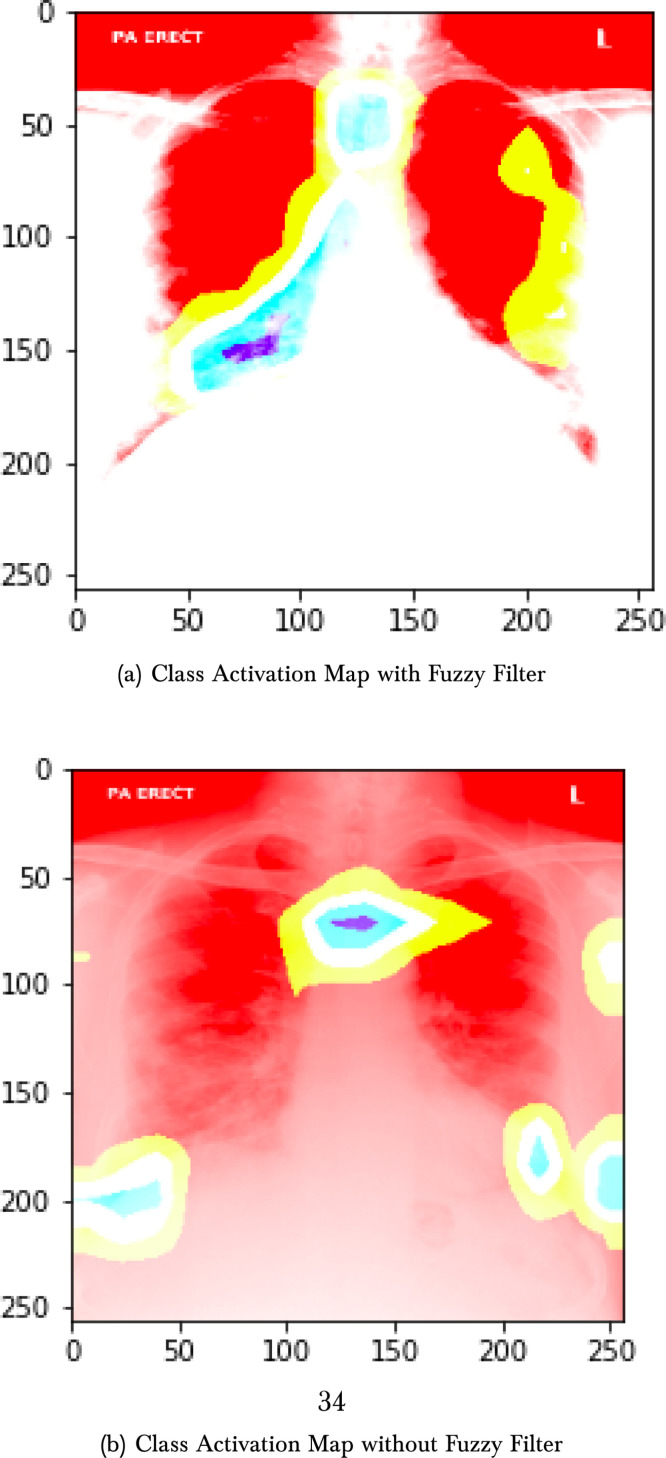

The third stage consists of tests with a multi-input network built from two pre-trained networks. We altered the networks by replacing the last layer with a fully connected layer of 20 nodes followed by a fully connected sigmoid layer with a single node. The tests cover the 12 ordered combinations of VGG16, InceptionV3, ResNet152V2, and EfficientNetB3 and were run for ten epochs. The combination with the best AUC score was chosen to be tuned and evaluated. The fourth stage consists of tuning the chosen model: we train it for 100 epochs and present the ROC curve, F1-score, and recall by epoch. The last step uses class activation maps to compare the explainable ML results with and without the fuzzy filter (see Fig. 1, Fig. 3).

Fig. 1.

The second phase of proposed method.

Fig. 3.

X-ray samples with fuzzy filter.

4.0.1. Training and implementation details

We use Adaptive Moment Estimation (Adam) as the optimizer, binary cross-entropy as the loss function, and sigmoid as the networks' output activation function. The initial learning rate was 0.001. We chose the simple learning rate schedule of decreasing the learning rate by a constant factor when the performance metric plateaus on the validation set (commonly called reduce learning rate on plateau). We configure it to monitor the validation loss with a factor of 0.2 and a patience of 2. The best combination was obtained after 100 epochs.
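The behaviour of this schedule can be sketched in plain Python. The function below is a simplified model of the reduce-on-plateau rule with the settings given above (initial rate 0.001, factor 0.2, patience 2); it ignores refinements such as a minimum improvement threshold, cooldown periods, and a lower bound on the learning rate, and the function name and loss values are hypothetical.

```python
def reduce_lr_on_plateau(val_losses, initial_lr=0.001, factor=0.2, patience=2):
    """Return the learning rate used in each epoch for a sequence of validation losses."""
    lr = initial_lr
    best = float("inf")
    wait = 0          # epochs since the last improvement
    schedule = []
    for loss in val_losses:
        schedule.append(lr)  # rate in effect during this epoch
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                lr *= factor  # plateau detected: shrink the learning rate
                wait = 0
    return schedule

# Hypothetical validation losses: two stagnating epochs trigger each reduction.
losses = [0.90, 0.70, 0.71, 0.72, 0.60, 0.61, 0.62, 0.63]
rates = reduce_lr_on_plateau(losses)
```

With these example losses, the rate stays at 0.001 for the first four epochs, drops to 0.0002 after the validation loss fails to improve twice in a row, and drops again to 0.00004 for the last epoch.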

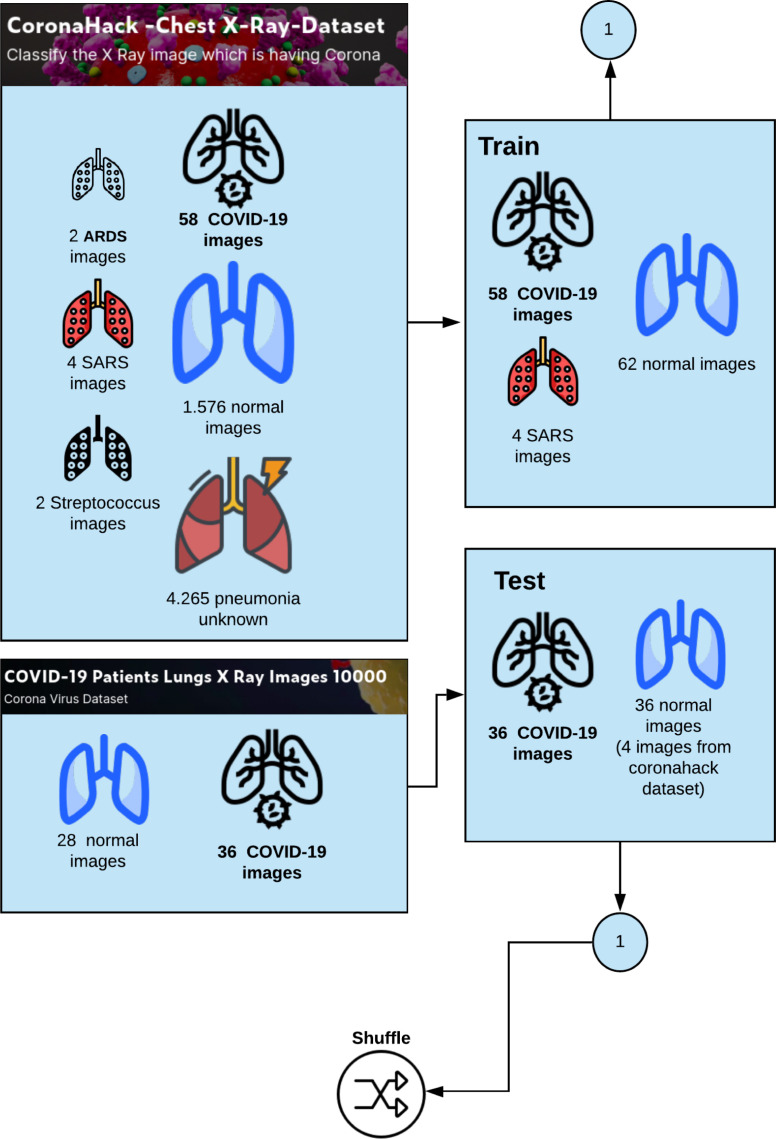

4.1. The dataset

We use two datasets to train the proposed COVID-Net. The first dataset is available at https://github.com/ieee8023/covid-chestxray-dataset and was approved by the University of Montreal's Ethics Committee (Fig. 2). It is a collection of CXRs of healthy patients and of patients affected by pneumonia (including COVID-19), along with a few other categories such as SARS (Severe Acute Respiratory Syndrome), Streptococcus, and ARDS (Acute Respiratory Distress Syndrome). The second dataset is available on the Kaggle platform at https://www.kaggle.com/nabeelsajid917/covid-19-X-ray-10000-images and was used to test the model. Fig. 4 shows the diversity of patient cases in the dataset. The first dataset contains 5,888 images: 1,576 without pneumonia, 4,265 with pneumonia of unknown cause, 58 COVID-19 cases, and 4 SARS images. We use a third dataset, available at https://www.kaggle.com/alifrahman/covid19-chest-xray-image-dataset, to improve the test results.

Fig. 2.

Image samples of the chosen datasets.

Fig. 4.

Description of the dataset used in this study.

4.2. Model analysis

We use the AUC, accuracy, precision, and recall to compare the models.

4.2.1. The AUC metric

The trapezoidal rule is used to compute the AUC. The resulting area is equal to the Mann–Whitney U statistic divided by $n_1 n_2$, where $n_1$ and $n_2$ are the numbers of instances in the positive and negative classes, respectively. The AUC can be interpreted as the probability that the classifier ranks a randomly selected positive case above a randomly selected negative case.
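The equivalence with the Mann–Whitney U statistic gives a direct way to compute the AUC: count the positive/negative pairs in which the positive case receives the higher score (ties count one half) and divide by the number of pairs. The sketch below is a straightforward quadratic-time illustration with a hypothetical function name; labels are assumed to be 1 for positive and 0 for negative.

```python
def auc_mann_whitney(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    u = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                u += 1.0   # positive ranked above negative
            elif p == n:
                u += 0.5   # ties count as half
    return u / (len(pos) * len(neg))

# Three of the four positive/negative pairs are ordered correctly.
example_auc = auc_mann_whitney([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

A classifier that scores every positive case above every negative case reaches an AUC of 1.0, while random scoring gives about 0.5.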

4.2.2. Confusion matrix

Let $I(x,y)$ be a medical image and $S(I(x,y))$ a binary decision for image $I(x,y)$. Following [43], with the gold standard denoted by $G$ and the result by $R$, each decision can be classified as:

- true positive (TP): $G = 1 \wedge R = 1$,
- false positive (FP): $G = 0 \wedge R = 1$,
- true negative (TN): $G = 0 \wedge R = 0$,
- false negative (FN): $G = 1 \wedge R = 0$.

4.2.3. Precision

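The precision is given by:

$$P = \frac{TP}{TP + FP} \tag{7}$$

where $TP$ is the number of true positives and $FP$ the number of false positives.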

4.2.4. Recall

The recall is given by:

$$R = \frac{TP}{TP + FN} \tag{8}$$

where $R$ is the recall, $TP$ is the number of true positives, and $FN$ the number of false negatives. Recall is the ability of the classifier to find all the positive samples. The best value is 1, and the worst value is 0.

4.2.5. Accuracy

Accuracy measures the model's performance in classifying both the positive and the negative classes. It is the ratio of correctly classified samples to all samples:

$$Accuracy = \frac{TP + TN}{TP + TN + FP + FN} \tag{9}$$
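The three metrics follow directly from the confusion-matrix counts, as the short sketch below shows. The function name and the counts in the example are hypothetical and serve only to illustrate the arithmetic.

```python
def classification_metrics(tp, fp, tn, fn):
    """Precision, recall, and accuracy from confusion-matrix counts."""
    total = tp + fp + tn + fn
    if total == 0:
        raise ValueError("at least one sample is required")
    precision = tp / (tp + fp) if (tp + fp) else 0.0  # no positive predictions
    recall = tp / (tp + fn) if (tp + fn) else 0.0     # no positive samples
    accuracy = (tp + tn) / total
    return {"precision": precision, "recall": recall, "accuracy": accuracy}

# Hypothetical balanced test set: 36 positive and 36 negative images,
# with one error in each class.
metrics = classification_metrics(tp=35, fp=1, tn=35, fn=1)
```

With these counts, precision, recall, and accuracy all equal 35/36 ≈ 0.972. A classifier that labels every image of the same set as positive (tp=36, fp=36, tn=0, fn=0) would instead reach a recall of 1.0 with a precision and accuracy of only 0.5, which is why the metrics need to be read together.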

4.3. Big O for convolutional networks

The number of features in each feature map in a CNN is at most a constant times the number of input pixels n (usually 1). Because each output is merely the sum product of k pixels in the picture and k weights in the filter, and k does not vary with n, convolving a fixed size filter across an image with n pixels requires O(n) time.

4.4. Pseudo-code and source code

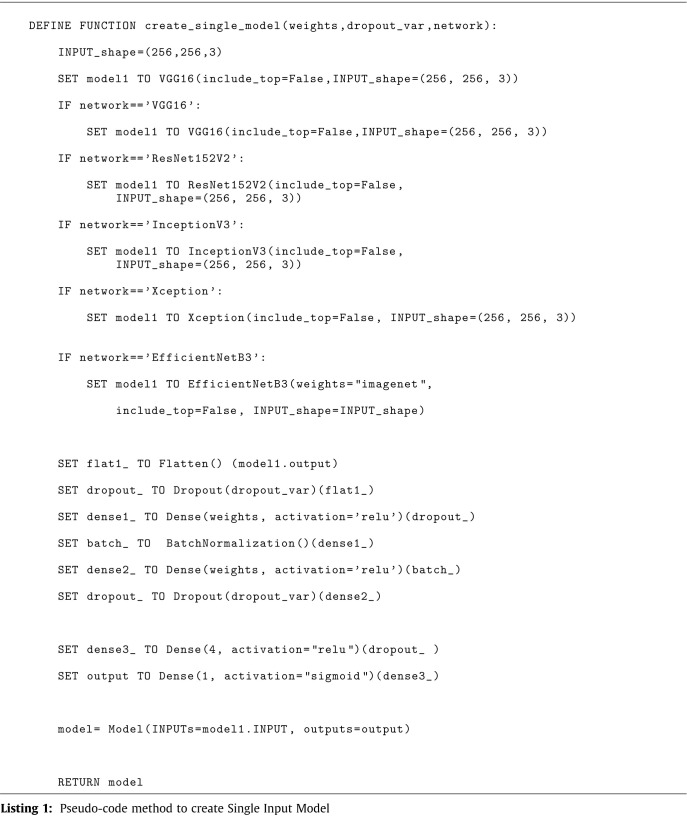

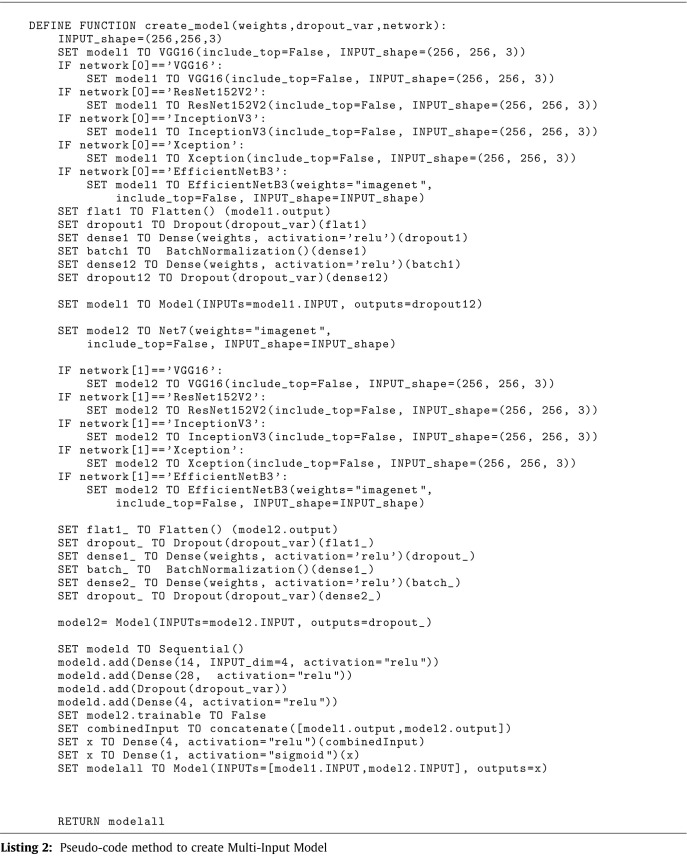

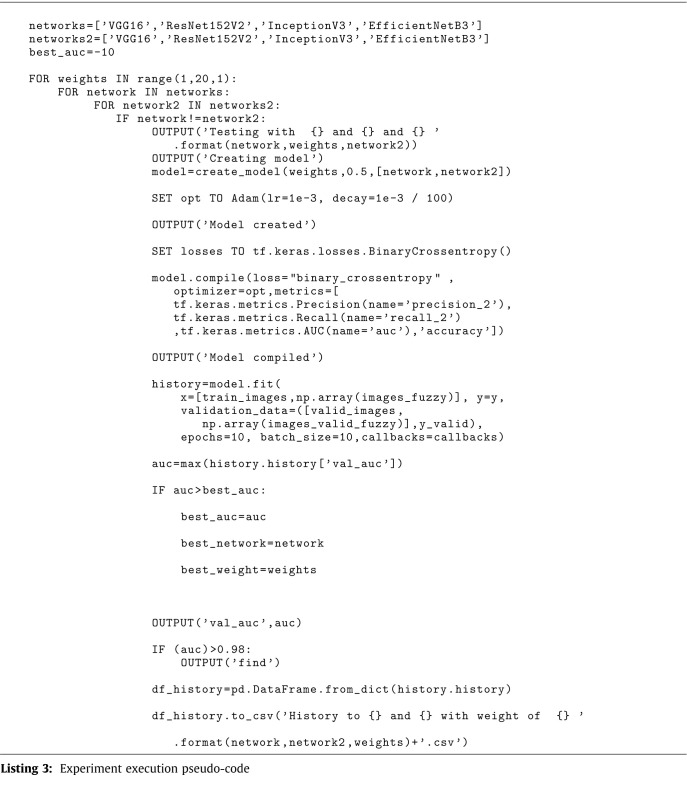

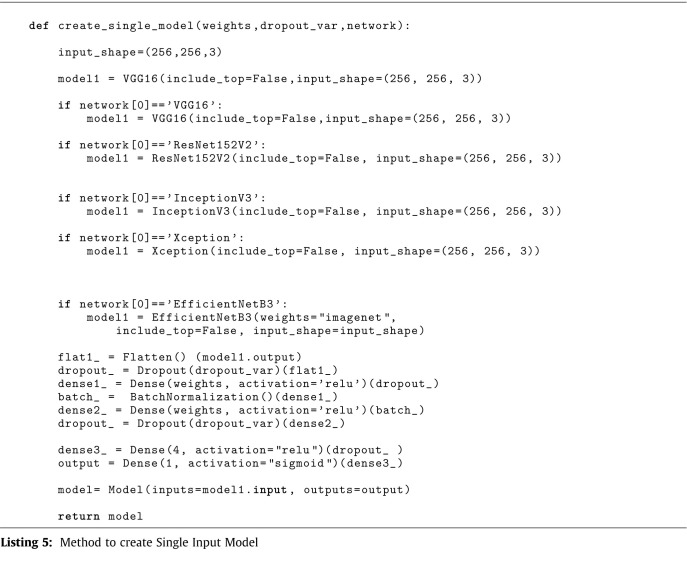

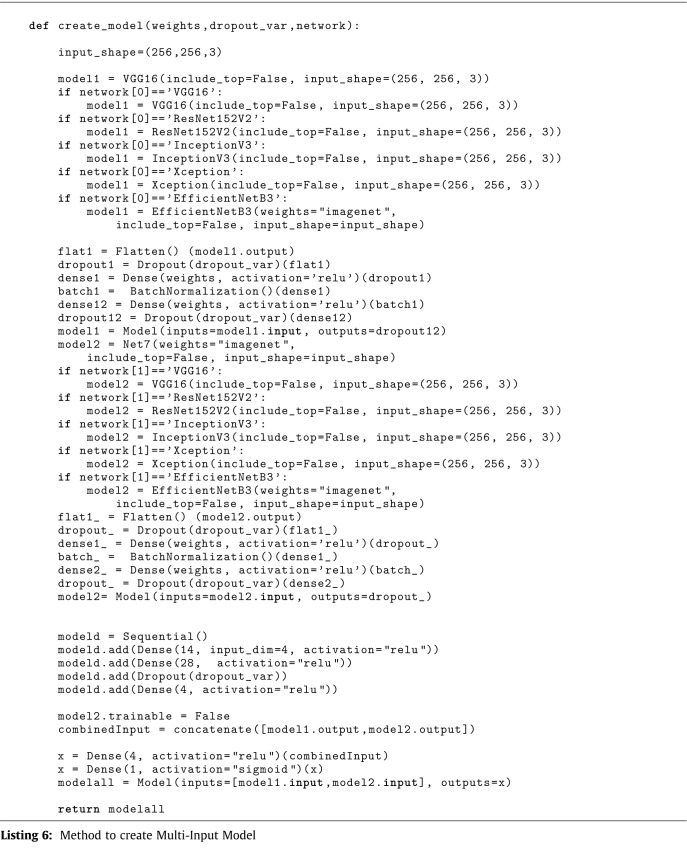

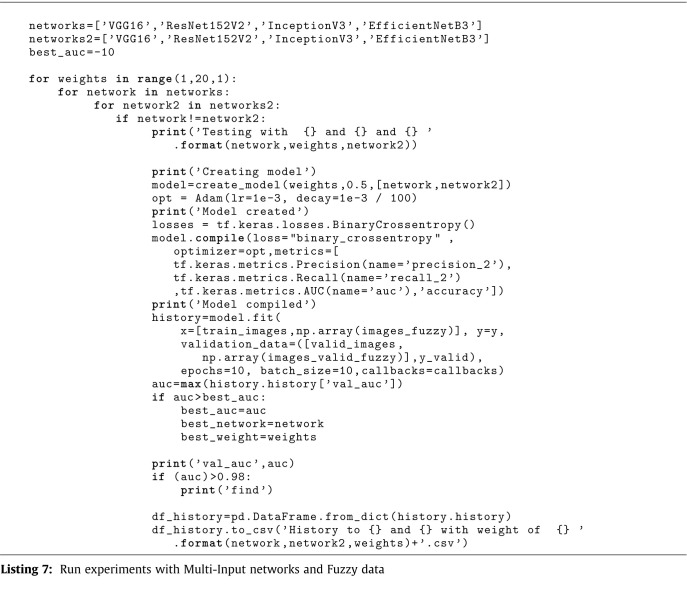

The process of building the single-input model is described in the algorithm in Listing 1. The method requires three parameters: the weights of the pre-trained network (the model uses transfer learning, adding layers to a pre-trained network), the Dropout value used for network generalization, and the network to be created. BatchNormalization is used in this method; batch normalization is a transformation that keeps the mean of the output close to 0 and its standard deviation close to 1. The algorithm in Listing 2 describes the creation of the multi-input model. The approach requires the same three parameters used to generate the single-input model, except that the last parameter is an array containing the list of networks used to build the model. The call concatenate([model1.output, model2.output]) concatenates the outputs of the two networks, while Model(inputs=[model1.input, model2.input], outputs=x) builds a single model that takes the inputs of both networks. The pseudo-code for experiment execution is shown in Listing 3. There are two network lists, and the algorithm combines the networks and the layer weights. For each combination, the model is trained with original and fuzzy images using the Adam optimizer and the binary cross-entropy loss function.
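The multi-input construction can be sketched with the Keras functional API as follows. This is an illustration under stated assumptions, not the listing used in the experiments: the function name, the input size of 224 × 224 × 3, the dropout rate of 0.3, the global average pooling of each backbone, and the ReLU activation of the 20-node layer are all assumptions, and `weights=None` is used so the example runs without downloading pre-trained weights (transfer learning would pass `weights="imagenet"`).

```python
import tensorflow as tf
from tensorflow.keras import layers, Model

def build_multi_input(backbones, input_shape=(224, 224, 3), dropout=0.3, weights=None):
    """Join two (or more) backbone networks into one binary classifier."""
    branches = [
        net(include_top=False, weights=weights, input_shape=input_shape, pooling="avg")
        for net in backbones
    ]
    # Merge the feature vectors produced by each branch.
    x = layers.concatenate([b.output for b in branches])
    x = layers.BatchNormalization()(x)
    x = layers.Dropout(dropout)(x)
    x = layers.Dense(20, activation="relu")(x)        # 20-node layer (activation assumed)
    out = layers.Dense(1, activation="sigmoid")(x)    # single-node sigmoid output
    # One model with one input per branch, e.g. original image and fuzzy image.
    model = Model(inputs=[b.input for b in branches], outputs=out)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),
        loss="binary_crossentropy",
        metrics=[tf.keras.metrics.AUC(name="auc")],
    )
    return model

model = build_multi_input(
    [tf.keras.applications.VGG16, tf.keras.applications.InceptionV3]
)
```

Training such a model would pass one image array per input, for example `model.fit([x_original, x_fuzzy], y, ...)`, where `x_original` and `x_fuzzy` are hypothetical arrays holding the non-segmented and fuzzy-filtered versions of the same X-rays.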

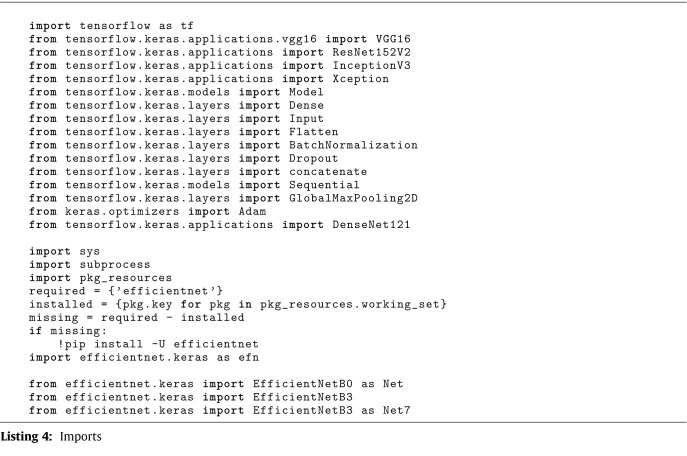

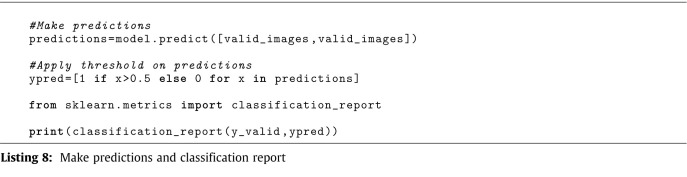

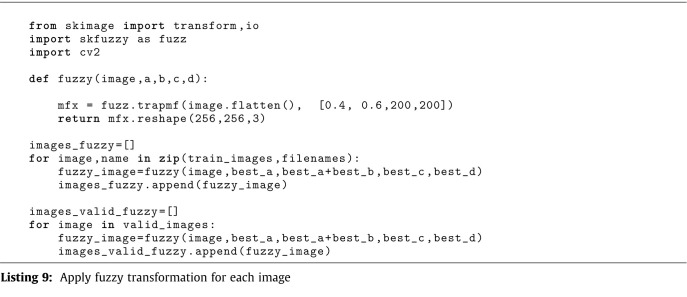

Listing 4 shows the main imports. Listings 5 and 6 describe the methods for creating single-input and multi-input models, respectively. Listing 7 shows the code for training and evaluating several neural network configurations. The code for executing the predictions is given in Listing 8. The procedure for applying the fuzzy transformation to each image, which is provided by the Python library skfuzzy, is shown in Listing 9. The fuzzy method's parameters were chosen using the BRISQUE score.

5. Results and discussion

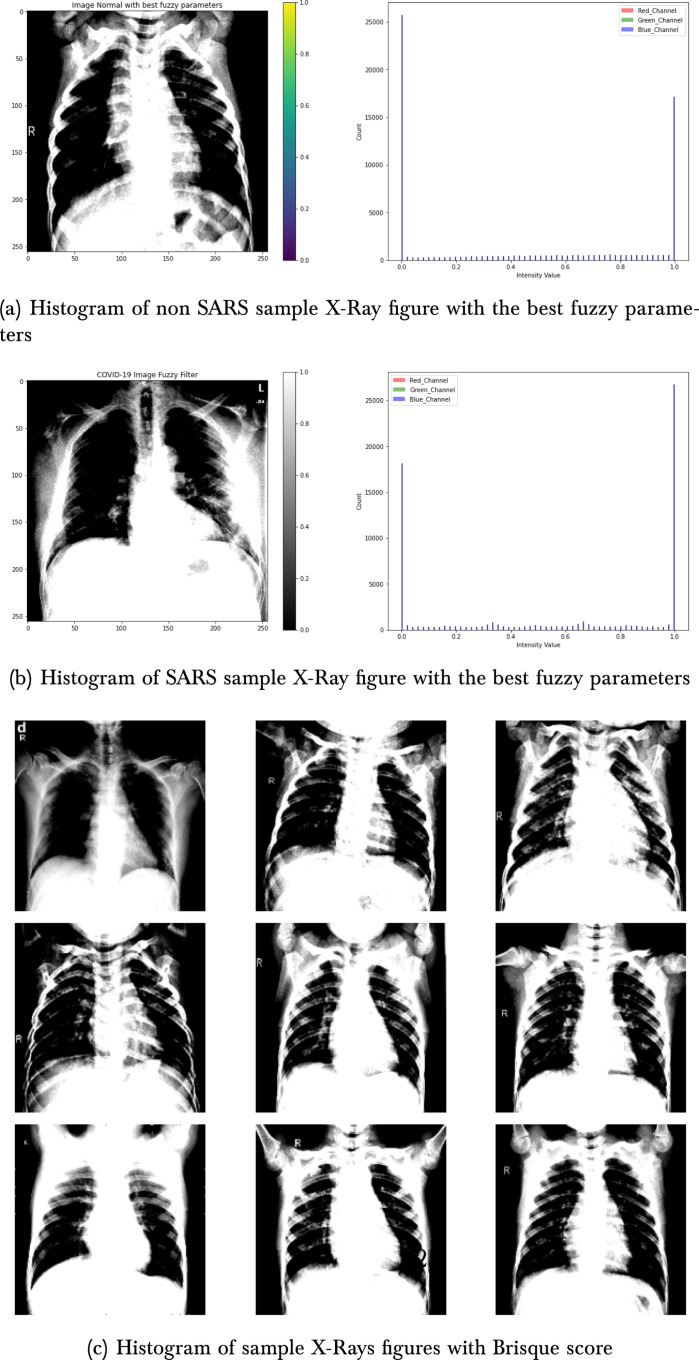

This study's primary goal is to present a monitoring model and reduce human errors in COVID-19 diagnosis. The proposed model's performance was measured using the Area Under the receiver operating characteristic Curve (AUC). The code and datasets used in these experiments are available at https://www.kaggle.com/naubergois/covid-xray-classification-with-fuzzy-1-0-recall and https://www.kaggle.com/naubergois/fork-of-covid-xray-classification-with-fuzzy-1-0-r. The model requires O(n) time. We use BRISQUE values to tune the fuzzy filter parameters and obtain a=0.2, b=0.4, c=200, and d=200. Fig. 3(c) shows samples of the X-rays with their respective BRISQUE scores. Fig. 3(a) presents a non-SARS sample with the best fuzzy parameters. Fig. 3(b) presents a non-SARS sample with the best fuzzy parameters.

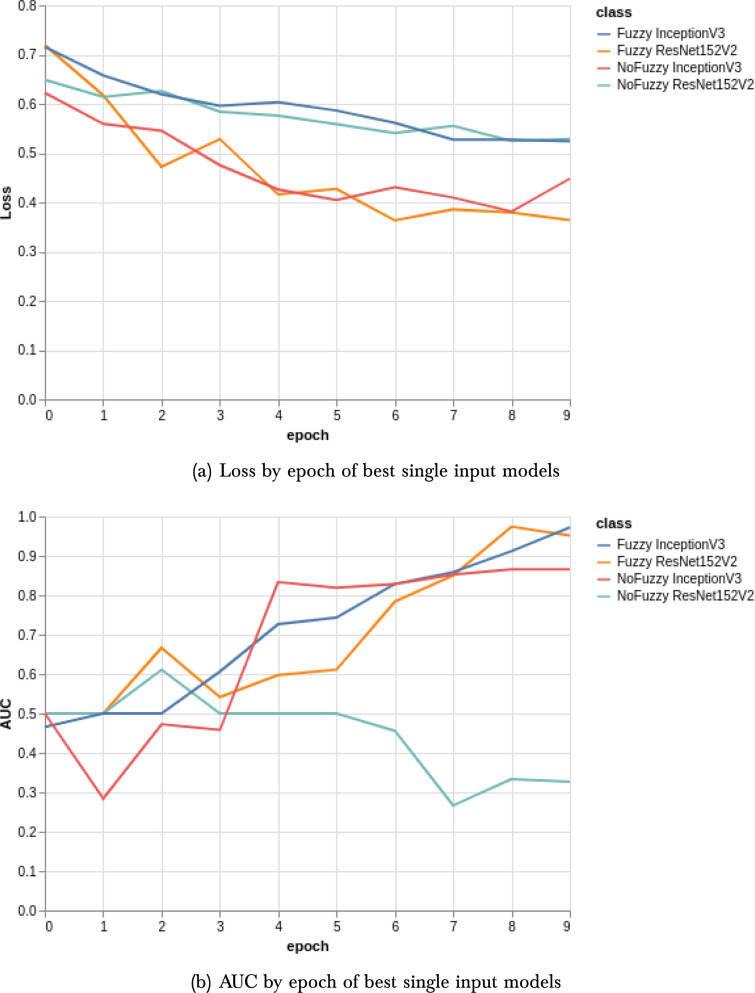

The first results compare the fuzzy filter with K-means segmentation. Table 1 shows the results obtained with K-means; each table reports the AUC, accuracy, precision, and recall of each model. With K-means, EfficientNetB3 obtains the best precision and InceptionV3 the best recall. Table 2 and Table 3 show the results for all pre-trained networks with and without the fuzzy filter. The fuzzy filter considerably improves the results of the ResNet152V2 model and raises the AUC of InceptionV3 and EfficientNetB3, whereas the AUC of VGG16 decreases. On the other hand, the fuzzy filter reduces the recall of VGG16 and EfficientNetB3. In terms of AUC, the fuzzy filter surpassed K-means clustering in all experiments. Fig. 5(a) presents the loss by epoch of the best single-input models. Fig. 5(b) presents the AUC by epoch of the best single-input models.

Table 1.

Results of experiment single input classification with K-Means cluster.

| Model | AUC | Accuracy | Precision | Recall |
|---|---|---|---|---|
| VGG16 | 0.861497 | 0.763889 | 0.711111 | 0.888889 |
| ResNet152V2 | 0.700231 | 0.527778 | 0.517241 | 0.833333 |
| InceptionV3 | 0.870370 | 0.500000 | 0.500000 | 1.000000 |
| EfficientNetB3 | 0.756944 | 0.680556 | 1.000000 | 0.361111 |

Table 2.

Results of experiment single input classification with Fuzzy.

| Model | AUC | Accuracy | Precision | Recall |
|---|---|---|---|---|
| VGG16 | 0.900463 | 0.597222 | 1.000000 | 0.194444 |
| ResNet152V2 | 0.951389 | 0.777778 | 0.692308 | 1.000000 |
| InceptionV3 | 0.912037 | 0.875000 | 0.829268 | 0.944444 |
| EfficientNetB3 | 0.992670 | 0.736111 | 1.000000 | 0.472222 |

Table 3.

Results of experiment single input classification without Fuzzy.

| Model | AUC | Accuracy | Precision | Recall |
|---|---|---|---|---|
| VGG16 | 0.989197 | 0.972222 | 0.972222 | 0.972222 |
| ResNet152V2 | 0.611111 | 0.611111 | 0.562500 | 1.000000 |
| InceptionV3 | 0.852623 | 0.847222 | 0.765957 | 1.000000 |
| EfficientNetB3 | 0.977238 | 0.916667 | 0.941176 | 0.888889 |

Fig. 5.

Loss and AUC by epoch results.

Table 5 and Table 6 present the results obtained with the multi-input technique without and with the fuzzy filter, respectively. For the combinations of VGG16 with ResNet152V2 and with InceptionV3, the fuzzy filter raises the AUC to 1.00 (from 0.99 and 0.98, respectively), whereas the remaining combinations score equal or slightly lower AUC with the filter. These results suggest that the fuzzy filter can contribute to distinguishing COVID-positive from COVID-negative cases when combined with suitable networks. EfficientNetB3 shows a decrease in accuracy with the fuzzy filter in the single-input setting (Table 4), and the same behavior is observed with the multi-input technique. Table 7 compares the results of multi-input and single-input networks. Except for the EfficientNetB3 model, multi-input models obtained better AUC and accuracy values.

Table 5.

Results of experiment MultiInput classification without Fuzzy.

| Model 1 | Model 2 | AUC | Acc | Prec. | Recall |
|---|---|---|---|---|---|
| VGG16 | ResNet152V2 | 0.99 | 0.94 | 0.97 | 0.92 |
| VGG16 | InceptionV3 | 0.98 | 0.94 | 0.97 | 0.92 |
| VGG16 | EfficientNetB3 | 0.97 | 0.93 | 0.92 | 0.94 |
| ResNet152V2 | VGG16 | 0.99 | 0.94 | 0.97 | 0.92 |
| ResNet152V2 | InceptionV3 | 1.00 | 0.97 | 0.95 | 1.00 |
| ResNet152V2 | EfficientNetB3 | 0.99 | 0.88 | 0.80 | 1.00 |
| InceptionV3 | VGG16 | 0.98 | 0.94 | 0.97 | 0.92 |
| InceptionV3 | ResNet152V2 | 1.00 | 0.97 | 0.95 | 1.00 |
| InceptionV3 | EfficientNetB3 | 0.99 | 0.96 | 0.97 | 0.94 |
| EfficientNetB3 | VGG16 | 0.97 | 0.93 | 0.92 | 0.94 |
| EfficientNetB3 | ResNet152V2 | 0.99 | 0.88 | 0.80 | 1.00 |
| EfficientNetB3 | InceptionV3 | 0.99 | 0.96 | 0.97 | 0.94 |

Table 6.

Results of experiment MultiInput classification with Fuzzy.

| Model 1 | Model 2 | AUC | Acc | Prec. | Recall |
|---|---|---|---|---|---|
| VGG16 | ResNet152V2 | 1.00 | 0.94 | 1.00 | 0.89 |
| VGG16 | InceptionV3 | 1.00 | 0.97 | 0.97 | 0.97 |
| VGG16 | EfficientNetB3 | 0.88 | 0.72 | 0.64 | 1.00 |
| ResNet152V2 | VGG16 | 1.00 | 0.94 | 1.00 | 0.89 |
| ResNet152V2 | InceptionV3 | 0.99 | 0.96 | 0.92 | 1.00 |
| ResNet152V2 | EfficientNetB3 | 0.98 | 0.92 | 0.94 | 0.89 |
| InceptionV3 | VGG16 | 1.00 | 0.97 | 0.97 | 0.97 |
| InceptionV3 | ResNet152V2 | 0.99 | 0.96 | 0.92 | 1.00 |
| InceptionV3 | EfficientNetB3 | 0.96 | 0.94 | 0.97 | 0.92 |
| EfficientNetB3 | VGG16 | 0.88 | 0.72 | 0.64 | 1.00 |
| EfficientNetB3 | ResNet152V2 | 0.98 | 0.92 | 0.94 | 0.89 |
| EfficientNetB3 | InceptionV3 | 0.96 | 0.94 | 0.97 | 0.92 |

Table 4.

Comparison of single-input results using images with and without the fuzzy filter.

| Model | AUC (fuzzy) | Accuracy (fuzzy) | AUC (no fuzzy) | Accuracy (no fuzzy) |
|---|---|---|---|---|
| ResNet152V2 | 0.951389 | 0.777778 | 0.611111 | 0.611111 |
| InceptionV3 | 0.912037 | 0.875000 | 0.852623 | 0.847222 |
| EfficientNetB3 | 0.992670 | 0.736111 | 0.977238 | 0.916667 |

Table 7.

Comparison of multi-input and single-input classification results with the fuzzy filter (mean values by network).

| Setting | Model | AUC | Acc | Precision | Recall |
|---|---|---|---|---|---|
| Multi-input | VGG16 | 0.97 | 0.90 | 0.90 | 0.96 |
| Multi-input | ResNet152V2 | 0.99 | 0.94 | 0.95 | 0.94 |
| Multi-input | InceptionV3 | 0.99 | 0.96 | 0.96 | 0.97 |
| Multi-input | EfficientNetB3 | 0.95 | 0.88 | 0.88 | 0.93 |
| Single-input | VGG16 | 0.90 | 0.60 | 1.00 | 0.19 |
| Single-input | ResNet152V2 | 0.95 | 0.78 | 0.69 | 1.00 |
| Single-input | InceptionV3 | 0.91 | 0.88 | 0.83 | 0.94 |
| Single-input | EfficientNetB3 | 0.99 | 0.74 | 1.00 | 0.47 |

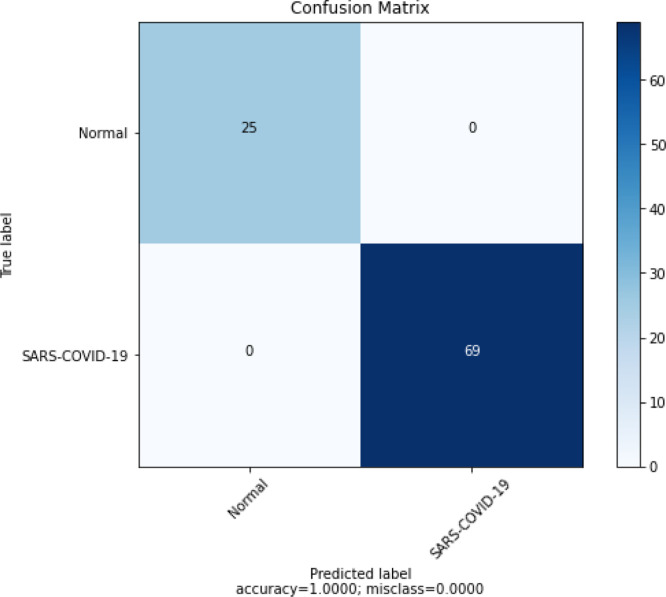

The combination of the VGG16 and ResNet152V2 networks achieved the best result. This combination was trained for 100 epochs. The VGG16 network received the images with the fuzzy filter, and ResNet152V2 received the images without the filter. Table 8 shows the precision, recall, and F1 score achieved by the model. The model had a recall of 1.00 for class 1.

Table 8.

F1 score of the ResNet152V2-VGG16 model.

| Class | Precision | Recall | F1-score | Support |
|---|---|---|---|---|
| 0 | 1.00 | 0.94 | 0.97 | 36 |
| 1 | 0.95 | 1.00 | 0.97 | 36 |
| accuracy | | | 0.97 | 72 |
| macro avg | 0.97 | 0.97 | 0.97 | 72 |
| weighted avg | 0.97 | 0.97 | 0.97 | 72 |
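The best combination described above feeds fuzzy-filtered images to VGG16 and unfiltered images to ResNet152V2. A minimal Keras sketch of such a two-branch network is given below. It is not the authors' implementation: the concatenation of pooled features, the 128-unit dense layer, the frozen backbones, the Adam optimizer, and all names (`build_multi_input_model`, `fuzzy_image`, `plain_image`) are assumptions made for illustration.

```python
import tensorflow as tf


def build_multi_input_model(input_shape=(224, 224, 3), weights="imagenet"):
    """Two-input classifier: VGG16 on filtered images, ResNet152V2 on raw images."""
    fuzzy_in = tf.keras.Input(shape=input_shape, name="fuzzy_image")
    plain_in = tf.keras.Input(shape=input_shape, name="plain_image")

    # Pre-trained backbones without their classification heads;
    # global average pooling turns each feature map into a vector.
    vgg = tf.keras.applications.VGG16(
        include_top=False, weights=weights,
        input_shape=input_shape, pooling="avg")
    resnet = tf.keras.applications.ResNet152V2(
        include_top=False, weights=weights,
        input_shape=input_shape, pooling="avg")

    # Assumption: backbones are frozen and only the new head is trained.
    vgg.trainable = False
    resnet.trainable = False

    # Assumption: the two feature vectors are fused by concatenation.
    merged = tf.keras.layers.Concatenate()([vgg(fuzzy_in), resnet(plain_in)])
    hidden = tf.keras.layers.Dense(128, activation="relu")(merged)
    output = tf.keras.layers.Dense(
        1, activation="sigmoid", name="covid_probability")(hidden)

    model = tf.keras.Model(inputs=[fuzzy_in, plain_in], outputs=output)
    model.compile(
        optimizer="adam",
        loss="binary_crossentropy",
        metrics=[tf.keras.metrics.AUC(name="auc"), "accuracy"])
    return model
```

Calling `build_multi_input_model()` with the default `weights="imagenet"` downloads the pre-trained weights; passing `weights=None` builds the same architecture with random initialization. Each backbone normally expects its own input preprocessing, which is omitted here for brevity.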

Fig. 6(a) shows the model’s confusion matrix; it is important to note that no COVID-19 case was misclassified. Fig. 7 presents the class activation maps with and without the fuzzy filter. We can observe that the regions of the map are well delimited in the images with the fuzzy filter.

Fig. 6.

Loss and AUC by epoch results.

Fig. 7.

Class Activation Map of SARS sample X-ray.

Several approaches to detecting COVID-19 are trained to detect pneumonia, a potentially life-threatening illness caused by several pathogens. In common practice, most studies classify the presence of pneumonia associated with COVID-19. COVID-19 and pneumonia are both respiratory disorders that share many symptoms, but they are also more closely connected: as a result of the viral infection that causes COVID-19 or the flu, some patients develop pneumonia. In COVID-19 patients, pneumonia often develops in both lungs, putting the patient at serious risk of respiratory problems. Pneumonia can also be caused by bacteria, fungi, and other microorganisms in patients who have neither COVID-19 nor the flu. However, COVID-19 pneumonia is a distinct infection with unusual characteristics [44]. Some studies attempt to distinguish between common pneumonia and COVID-19 pneumonia [45] [46] [47]. Several datasets with X-ray images of cases (pneumonia or COVID-19) and controls have been made available to develop machine-learning-based algorithms that aid diagnosis. These datasets, however, are primarily assembled from different sources that combine pre-COVID-19 and COVID-19 datasets. Some studies have found significant bias in some of the publicly available datasets used to train and test diagnostic systems, implying that the published results are optimistic and may overestimate the real predictive capacity of the approaches [48] [49] [50]. This study does not attempt to distinguish between common pneumonia and pneumonia caused by COVID-19; that is a goal for future research.

This research presents an approach in which transfer learning combined with fuzzy filters allows the classification of CXRs. This study achieves a higher AUC than the studies covered in the literature review: Jin et al. report an AUC of 0.979, and Zheng et al. obtained an AUC of 0.959. Chouhan et al. achieved a recall of 99.62%, and Abdul et al. obtained an accuracy of 85%. However, the proposed solution must be validated on a larger sample set and in clinical tests before it is used in a clinical environment. The fuzzy filter improves the AUC and precision results. Future work should apply the techniques presented in this study to the segmentation of computed tomography scans.

Finally, we quantized the model with the quantize_model method (tfmot.quantization.keras.quantize_model) from the TensorFlow Model Optimization Toolkit; the quantized model reached an accuracy of 0.95.
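To illustrate why quantization can cost a small amount of accuracy, the sketch below applies 8-bit affine quantization to a weight tensor and measures the round-trip error. It does not call the quantize_model method used in this study; it is a plain NumPy illustration of the underlying arithmetic, and the function names `quantize_int8` and `dequantize` are hypothetical.

```python
import numpy as np


def quantize_int8(weights):
    """Map float weights to int8 using a per-tensor scale and zero point."""
    # Include 0.0 in the range so that zero is exactly representable.
    w_min = min(float(weights.min()), 0.0)
    w_max = max(float(weights.max()), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # fall back to 1.0 for all-zero tensors
    zero_point = int(round(-128 - w_min / scale))
    q = np.clip(np.round(weights / scale) + zero_point, -128, 127)
    return q.astype(np.int8), scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float weights from their int8 representation."""
    return (q.astype(np.float32) - zero_point) * scale


rng = np.random.default_rng(0)
w = rng.normal(size=(4, 4)).astype(np.float32)  # stand-in for a layer's weights
q, scale, zero_point = quantize_int8(w)
w_hat = dequantize(q, scale, zero_point)
max_error = float(np.max(np.abs(w - w_hat)))
print(f"scale={scale:.5f}, max round-trip error={max_error:.5f}")
```

Each weight is stored in one byte instead of four, at the cost of a rounding error on the order of the scale. Small perturbations of this kind across all layers are one plausible reason for a modest drop in accuracy after quantization.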

6. Conclusion

In this paper, we show that by using transfer learning and leveraging pre-trained models, we can achieve very high accuracy in detecting COVID-19. Together with the fuzzy filter, this study also shows that it is possible to achieve a recall of 1.0 with more than one pre-trained model. The best model was a combination of VGG16 and ResNet152V2. Finally, after quantization, the model retained an accuracy of 0.95. Although we achieved good COVID-19 detection accuracy, sensitivity, and specificity, this does not imply that the solution is ready for production, especially given the small number of COVID-19 images currently available. The aim of this work is to provide radiologists, data scientists, and the research community with a multi-input CNN model that may be used to diagnose COVID-19 early, in the hope that it will be extended to speed up research in this area.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

QH received support from the Guangdong Province Science and Technology Plan Project, China (2018A050506086). VHCA received support from the Brazilian National Council for Research and Development (CNPq, Grant # 304315/2017-6 and #430274/2018-1). Nauber Gois received support from FUNCAP (Project 06629650/2021). LZ received support from the Research Start-up Funds of DGUT, China (GC300502-60). LY received support from the Innovation Center of Robotics And Intelligent Equipment in DGUT, China (KCYCXPT2017006) and the Key Laboratory of Robotics and Intelligent Equipment of Guangdong Regular Institutions of Higher Education, China (2017KSYS009).

References

- 1. Waheed A., Goyal M., Gupta D., Khanna A., Al-Turjman F., Pinheiro P.R. Covidgan: Data augmentation using auxiliary classifier GAN for improved Covid-19 detection. IEEE Access. 2020;8:91916–91923. doi: 10.1109/ACCESS.2020.2994762.

- 2. Abdel-Basset M., Mohamed R., Elhoseny M., Chakrabortty R.K., Ryan M. A hybrid COVID-19 detection model using an improved marine predators algorithm and a ranking-based diversity reduction strategy. IEEE Access. 2020;8:79521–79540. doi: 10.1109/ACCESS.2020.2990893.

- 3. Ng H.P., Ong S.H., Foong K.W.C., Goh P.S., Nowinski W.L. 2006 IEEE Southwest Symposium on Image Analysis and Interpretation. 2001. Medical image segmentation using k-means clustering and improved watershed algorithm; pp. 61–65. URL https://www.researchgate.net/publication/4243554.

- 4. Shi F., Wang J., Shi J., Wu Z., Wang Q., Tang Z., He K., Shi Y., Shen D. Review of artificial intelligence techniques in imaging data acquisition, segmentation and diagnosis for COVID-19. IEEE Rev. Biomed. Eng. 2020;3333(c):1–13. doi: 10.1109/RBME.2020.2987975. arXiv:2004.02731.

- 5. Santos M.A., Munoz R., Olivares R., Rebouças Filho P.P., Del Ser J., de Albuquerque V.H.C. Online heart monitoring systems on the internet of health things environments: A survey, a reference model and an outlook. Inf. Fusion. 2020;53:222–239.

- 6. Chouhan V., Singh S.K., Khamparia A., Gupta D., Tiwari P., Moreira C., Damaševičius R., de Albuquerque V.H.C. A novel transfer learning based approach for pneumonia detection in chest X-ray images. Appl. Sci. (Switzerland) 2020;10(2) doi: 10.3390/app10020559.

- 7. Ohata E.F., Bezerra G.M., das Chagas J.V.S., Neto A.V.L., Albuquerque A.B., de Albuquerque V.H.C., Reboucas Filho P.P. Automatic detection of COVID-19 infection using chest X-ray images through transfer learning. IEEE/CAA J. Autom. Sin. 2020.

- 8. Rodrigues M.B., Da Nóbrega R.V.M., Alves S.S.A., Rebouças Filho P.P., Duarte J.B.F., Sangaiah A.K., De Albuquerque V.H.C. Health of things algorithms for malignancy level classification of lung nodules. IEEE Access. 2018;6:18592–18601.

- 9. Dourado C.M., Da Silva S.P.P., Da Nóbrega R.V.M., Rebouças Filho P.P., Muhammad K., De Albuquerque V.H.C. An open ioht-based deep learning framework for online medical image recognition. IEEE J. Sel. Areas Commun. 2020.

- 10. De Souza R.W.R., De Oliveira J.V.C., Passos L.A., Ding W., Papa J.P., Albuquerque V. A novel approach for optimum-path forest classification using fuzzy logic. IEEE Trans. Fuzzy Syst. 2019;6706(c):1. doi: 10.1109/tfuzz.2019.2949771.

- 11. Ding W., Abdel-Basset M., Eldrandaly K.A., Abdel-Fatah L., de Albuquerque V.H.C. Smart supervision of cardiomyopathy based on fuzzy harris hawks optimizer and wearable sensing data optimization: A new model. IEEE Trans. Cybern. 2020 doi: 10.1109/TCYB.2020.3000440.

- 12. Muhammad K., Khan S., Del Ser J., de Albuquerque V.H.C. Deep learning for multigrade brain tumor classification in smart healthcare systems: A prospective survey. IEEE Trans. Neural Netw. Learn. Syst. 2020 doi: 10.1109/TNNLS.2020.2995800.

- 13. Selvachandran G., Quek S.G., Lan L.T.H., Son L.H., Long Giang N., Ding W., Abdel-Basset M., Albuquerque V.H.C. A new design of mamdani complex fuzzy inference system for multi-attribute decision making problems. IEEE Trans. Fuzzy Syst. 2019;6706(c):1. doi: 10.1109/tfuzz.2019.2961350.

- 14. Rajaraman S., Siegelman J., Alderson P.O., Folio L.S., Folio L.R., Antani S.K. Iteratively pruned deep learning ensembles for COVID-19 detection in chest X-rays. IEEE Access. 2020:1. doi: 10.1109/access.2020.3003810. arXiv:2004.08379.

- 15. Jin S., Wang B., Xu H., Luo C., Wei L., Zhao W., Hou X., Ma W., Xu Z., Zheng Z., Sun W., Lan L., Zhang W., Mu X., Shi C., Wang Z., Lee J., Jin Z., Lin M., Jin H., Zhang L., Guo J., Zhao B., Ren Z., Wang S., You Z., Dong J., Wang X., Wang J., Xu W. 2020. AI-Assisted CT imaging analysis for COVID-19 screening: Building and deploying a medical AI system in four weeks; pp. 1–22. MedRxiv URL http://medrxiv.org/content/early/2020/03/23/2020.03.19.20039354.abstract.

- 16. Wang G., Liu X., Li C., Xu Z., Ruan J., Zhu H., Meng T., Li K., Huang N., Zhang S. A noise-robust framework for automatic segmentation of COVID-19 pneumonia lesions from CT images. IEEE Trans. Med. Imaging. 2020;1(c):1. doi: 10.1109/tmi.2020.3000314.

- 17. Zheng C., Deng X., Fu Q., Zhou Q., Feng J., Ma H., Liu W., Wang X. 2020. Deep learning-based detection for COVID-19 from chest CT using weak label. MedRxiv, URL http://medrxiv.org/content/early/2020/03/17/2020.03.12.20027185.abstract.

- 18. Lin J., Wang N., Zhu H., Zhang X., Zheng X. 2021 27th International Conference on Mechatronics and Machine Vision in Practice (M2VIP) IEEE; 2021. Fabric defect detection based on multi-input neural network; pp. 458–463.

- 19. Epalle T.M., Song Y., Liu Z., Lu H. Multi-atlas classification of autism spectrum disorder with hinge loss trained deep architectures: ABIDE I results. Appl. Soft Comput. 2021;107.

- 20. Chow L.S., Rajagopal H. Modified-BRISQUE as no reference image quality assessment for structural MR images. Magn. Reson. Imaging. 2017;43:74–87. doi: 10.1016/j.mri.2017.07.016.

- 21. Sandilya M., Nirmala S.R. 2018 International Conference on Information, Communication, Engineering and Technology, ICICET 2018. Vol. 15. IEEE; 2018. Determination of reconstruction parameters in compressed sensing MRI using BRISQUE score; pp. 1–5.

- 22. Wu M.N., Lin C.C., Chang C.C. Proceedings - 3rd International Conference on Intelligent Information Hiding and Multimedia Signal Processing, IIHMSP 2007. Vol. 2. 2007. Brain tumor detection using color-based K-means clustering segmentation; pp. 245–248.

- 23. Lopez-Molina C., Bustince H., Fernandez J., De Baets B. Proceedings of the 7th Conference of the European Society for Fuzzy Logic and Technology, EUSFLAT 2011 and French Days on Fuzzy Logic and Applications, LFA 2011. Vol. 1. 2011. Generation of fuzzy edge images using trapezoidal membership functions; pp. 327–333.

- 24. Kumar E.B., Sundaresan M. 2014 International Conference on Computing for Sustainable Global Development, INDIACom 2014. 2014. Edge detection using trapezoidal membership function based on fuzzy’s mamdani inference system; pp. 515–518.

- 25. Jabbar S.I., Day C.R., Chadwick E.K. IEEE International Conference on Fuzzy Systems. 2019-June. IEEE; 2019. Using fuzzy inference system for detection the edges of musculoskeletal ultrasound images; pp. 1–7.

- 26. Madrid-Herrera L., Chacon-Murguia M.I., Posada-Urrutia D.A., Ramirez-Quintana J.A. 2019 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE) IEEE; 2019. Human image complexity analysis using a fuzzy inference system; pp. 1–6.

- 27. Albashah N.L.S.B., Asirvadam V.S., Dass S.C., Meriaudeau F. 2018 IEEE EMBS Conference on Biomedical Engineering and Sciences, IECBES 2018 - Proceedings. IEEE; 2019. Segmentation of blood clot MRI images using intuitionistic fuzzy set theory; pp. 533–538.

- 28. Sharma T., Singh V., Sudhakaran S., Verma N.K. IEEE International Conference on Fuzzy Systems. 2019-June. IEEE; 2019. Fuzzy based pooling in convolutional neural network for image classification; pp. 1–6.

- 29. Kala R., Deepa P. 2017 4th International Conference on Advanced Computing and Communication Systems, ICACCS 2017. 2017. Removal of rician noise in MRI images using bilateral filter by fuzzy trapezoidal membership function.

- 30. Kala R., Deepa P. Proceedings of the 2018 International Conference on Current Trends Towards Converging Technologies, ICCTCT 2018. IEEE; 2018. Intuitionistic fuzzy C-means clustering using rough set for mri segmentation; pp. 1–9.

- 31. Mehta S., Paunwala C., Vaidya B. 2019 International Conference on Intelligent Computing and Control Systems, ICCS 2019. IEEE; 2019. CNN based traffic sign classification using adam optimizer; pp. 1293–1298.

- 32. Yemini M., Zigel Y., Lederman D. 2018 IEEE International Conference on the Science of Electrical Engineering in Israel, ICSEE 2018. IEEE; 2019. Detecting masses in mammograms using convolutional neural networks and transfer learning; pp. 1–4.

- 33. Liu X., Liu Z., Wang G., Cai Z., Zhang H. Ensemble transfer learning algorithm. IEEE Access. 2017;6:2389–2396. doi: 10.1109/ACCESS.2017.2782884.

- 34. Zuo H., Lu J., Zhang G., Liu F. Fuzzy transfer learning using an infinite Gaussian mixture model and active learning. IEEE Trans. Fuzzy Syst. 2019;27(2):291–303. doi: 10.1109/TFUZZ.2018.2857725.

- 35. Pour A.M., Seyedarabi H., Jahromi S.H.A., Javadzadeh A. Automatic detection and monitoring of diabetic retinopathy using efficient convolutional neural networks and contrast limited adaptive histogram equalization. IEEE Access. 2020:1. doi: 10.1109/access.2020.3005044.

- 36. Qu Z., Mei J., Liu L., Zhou D.Y. Crack detection of concrete pavement with cross-entropy loss function and improved VGG16 network model. IEEE Access. 2020;8:54564–54573. doi: 10.1109/ACCESS.2020.2981561.

- 37. Budhiman A., Suyanto S., Arifianto A. 2019 2nd International Seminar on Research of Information Technology and Intelligent Systems, ISRITI 2019. IEEE; 2019. Melanoma cancer classification using ResNet with data augmentation; pp. 17–20.

- 38. Kristiani E., Yang C.T., Huang C.Y. ISEC: An optimized deep learning model for image classification on edge computing. IEEE Access. 2020;8:27267–27276. doi: 10.1109/ACCESS.2020.2971566.

- 39. Karim Z., Van Zyl T. 2020 International SAUPEC/RobMech/PRASA Conference, SAUPEC/RobMech/PRASA 2020. IEEE; 2020. Deep learning and transfer learning applied to sentinel-1 DInSAR and Sentinel-2 optical satellite imagery for change detection.

- 40. Sun Y., Binti Hamzah F.A., Mochizuki B. 2020. Optimized light-weight convolutional neural networks for histopathologic cancer detection; pp. 11–14.

- 41. Charuchinda P., Kasetkasem T., Kumazawa I., Chanwimaluang T. Proceedings of the 16th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, ECTI-CON 2019. IEEE; 2019. On the use of class activation map for land cover mapping; pp. 653–656.

- 42. Bhaswara I.D., Marsiano A.F.D., Suryani O.F., Adji T.B., Ardiyanto I. IES 2019 - International Electronics Symposium: The Role of Techno-Intelligence in Creating An Open Energy System Towards Energy Democracy, Proceedings. IEEE; 2019. Class activation mapping-based Car Saliency Region and detection for in-vehicle surveillance; pp. 349–353.

- 43. Popovic A., de la Fuente M., Engelhardt M., Radermacher K. Statistical validation metric for accuracy assessment in medical image segmentation. Int. J. Comput. Assist. Radiol. Surg. 2007;2(3–4):169–181. doi: 10.1007/s11548-007-0125-1.

- 44. Gattinoni L., Chiumello D., Rossi S. COVID-19 pneumonia: ARDS or not? Crit. Care. 2020;24(1):1–3. doi: 10.1186/s13054-020-02880-z.

- 45. Yan T., Wong P.K., Ren H., Wang H., Wang J., Li Y. Automatic distinction between COVID-19 and common pneumonia using multi-scale convolutional neural network on chest CT scans. Chaos Solitons Fractals. 2020;140 doi: 10.1016/j.chaos.2020.110153.

- 46. Varela-Santos S., Melin P. A new approach for classifying coronavirus COVID-19 based on its manifestation on chest X-rays using texture features and neural networks. Inform. Sci. 2021;545:403–414. doi: 10.1016/j.ins.2020.09.041.

- 47. Jin W., Dong S., Dong C., Ye X. Hybrid ensemble model for differential diagnosis between COVID-19 and common viral pneumonia by chest X-ray radiograph. Comput. Biol. Med. 2021;131 doi: 10.1016/j.compbiomed.2021.104252.

- 48. Catalá O.D.T., Igual I.S., Pérez-Benito F.J., Escrivá D.M., Castelló V.O., Llobet R., Peréz-Cortés J.-C. Bias analysis on public X-ray image datasets of pneumonia and COVID-19 patients. IEEE Access. 2021;9:42370–42383. doi: 10.1109/ACCESS.2021.3065456.

- 49. Santa Cruz B.G., Bossa M.N., Sölter J., Husch A.D. Public Covid-19 X-ray datasets and their impact on model bias–a systematic review of a significant problem. Med. Image Anal. 2021;74 doi: 10.1016/j.media.2021.102225.

- 50. Vaid S., Kalantar R., Bhandari M. Deep learning COVID-19 detection bias: accuracy through artificial intelligence. Int. Orthopaed. 2020;44(8):1539–1542. doi: 10.1007/s00264-020-04609-7.