Abstract

In recent years, many types of systems have been modeled using fractional derivatives, which make it possible to describe certain phenomena more accurately. This article presents a system consisting of a two-dimensional fractional differential equation with the Riemann–Liouville derivative, together with a numerical algorithm for its solution. The presented algorithm uses the alternating direction implicit method (ADIM). Further, an algorithm for solving the inverse problem, which consists of determining unknown parameters of the model, is also described. For this purpose, the objective function was minimized using the ant colony optimization algorithm and the Hooke–Jeeves method. Inverse problems with fractional derivatives are important in many engineering applications, such as modeling anomalous diffusion, designing electrical circuits with a supercapacitor, and applying fractional-order control theory. This paper presents a numerical example illustrating the effectiveness and accuracy of the described methods. The example also allows a comparison of the methods used to search for the minimum of the objective function. The presented algorithms can be used as a tool for parameter training in artificial neural networks.

Keywords: inverse problem, fractional system, fractional derivative, parameter identification, fractional differential equation, heuristic algorithm, computational methods

1. Introduction

Fractional calculus is widely used in various fields of science and technology, e.g., in the design of sensors, in signal processing, and in sensor networks [1,2,3,4,5]. In [2], the authors describe the use of fractional calculus in artificial neural networks, where fractional derivatives are mainly used for parameter training with optimization algorithms, system synchronization, and system stabilization. As the authors note, such systems have been used in unmanned aerial vehicles (UAVs), circuit realization, robotics, and many other engineering applications. The paper [3] covers applications of fractional calculus in sensing and filtering. The authors present the most important achievements in the fields of fractional-order sensors and fractional-order analog and digital filters. In [5], the authors present a new fractional sensor based on a classical accelerometer and the concepts of fractional calculus. To this end, two synthesis methods were presented: in the first, the successive stages follow an identical analytical recursive formulation; in the second, a particle swarm optimization (PSO) algorithm determines the fractional system elements numerically.

In addition to applications in electronics, neural networks, and sensors, fractional calculus is also used in modeling thermal processes [6,7] and anomalous diffusion [8,9], in medicine [10], and in control theory [11,12]. The authors of [6] model heat transfer in a two-dimensional plate using the Caputo operator. The theoretical results are verified against experimental data from a thermal camera, showing that the fractional model is more accurate than the integer-order model in terms of the mean square error cost function.

Applications of fractional calculus often require differential equations with fractional derivatives to be solved numerically, which is why developing algorithms for this type of problem is important. Many papers presenting numerical solutions of fractional partial differential equations have been published in recent years. In [13], the author used an artificial neural network to construct a solution method for the one-phase Stefan problem. In turn, [14] presented an algorithm for solving fractional-order delay differential equations. Bu et al. [15] presented a space–time finite element method for a two-dimensional diffusion equation. The paper describes a fully discrete scheme for the considered equation; the authors also present results on the existence and stability of the method and on error estimation, along with numerical examples. Another interesting study is [16], which describes an ADI method for fractional reaction–diffusion equations with Dirichlet boundary conditions. The authors used a new fractional version of the alternating direction implicit method and presented a numerical example.

In this paper, the authors present a solution to an inverse problem that consists of selecting the model input parameters so that the system response fits the measurement data. Inverse problems are a very important part of many engineering problems [17]. In [18], an inverse problem is considered for a fractional partial differential equation with a nonlocal integral-type condition. The considered equation is a generalization of the Barenblatt–Zheltov–Kochina differential equation, which models the filtration of a viscoelastic fluid in fractured porous media. In [19], the authors considered two inverse problems with a fractional derivative. The first is to reconstruct the state function from its value and the value of its derivative at the final time. The second consists of recovering the source function in fractional diffusion and wave equations, where the additional information consists of measurements in a neighborhood of the final time. The authors prove the uniqueness of the solution to these problems and derive explicit solutions for some particular cases. In [20], a fractional inverse heat conduction problem is considered, consisting of finding the thermal conductivity in the presented model. The authors also compare two optimization methods: an iterative method and a swarm algorithm.

The learning algorithm constitutes the main part of deep learning. A deep neural network differs from a shallow one in its number of layers: the more layers, the deeper the network. Each layer can be specialized to detect a specific aspect or feature. The goal of the learning algorithm is to find optimal values of the weight vectors for solving a class of problems in a given domain; training algorithms achieve this by reducing the cost function. While the weights are learned from the training dataset, there are additional crucial parameters, called hyperparameters, that are not learned directly from the training data. These hyperparameters can take a range of values and make it more complex to find the optimal architecture and model [21]. Deep learning can be optimized in different areas; for example, training algorithms can be fine-tuned at different levels by incorporating heuristics for hyperparameter optimization. Training time is a major factor in assessing the performance of an algorithm or network, which makes efficient training important. Training optimization in a deep learning application can also be viewed as solving an inverse problem: an inverse problem consists of selecting the model input parameters so as to obtain the desired output data. To solve it, we create an objective function that compares the desired values (targets) with the network outputs computed for the current values of the sought parameters (weights). By finding the minimum of the objective function, we find the sought weights.

Section 2 of this paper presents a system consisting of a 2D fractional partial differential diffusion equation with the Riemann–Liouville derivative, supplemented with Dirichlet boundary conditions. This type of model can be used in the design of heat conduction processes in porous media. Section 2.2 presents a numerical scheme for the considered equation based on the alternating direction implicit method (ADIM). In Section 3, the inverse problem is formulated; it consists of identifying two parameters of the presented model from measurements of the state function at selected points of the domain. The inverse problem is reduced to an optimization problem, for which two algorithms were used and compared: the probabilistic ant colony optimization (ACO) algorithm and the deterministic Hooke–Jeeves (HJ) method. Section 4 presents a numerical example illustrating the operation of the described methods. Section 5 provides the conclusions.

2. Fractional Model

This section first describes the considered anomalous diffusion model with a fractional derivative and then presents a numerical algorithm for solving the resulting differential equation.

2.1. Model Description

Models using fractional derivatives have recently been widely used in various engineering problems, e.g., in electronics for modeling a supercapacitor, in mechanics for modeling heat flow in porous materials, in automation for describing problems in control theory, or in biology for modeling drug transport. In this study, we consider the following model of anomalous diffusion:

| (1) |

| (2) |

The differential Equation (1) describes anomalous diffusion (e.g., heat conduction in porous materials [22,23,24]) and is defined in the domain , where c, ρ, λ are parameters defining material properties, u is the state function, and f is an additional term in the model. Using the terminology of heat conduction theory, c is the specific heat, ρ is the density, λ is the heat conduction coefficient, and the function f describes an additional heat source. All parameters are multiplied by constants of value one that carry the units ensuring the dimensional consistency of the entire equation. The state function u describes the temperature distribution in time and space. Equation (2) defines the initial and boundary conditions needed to solve the differential equation uniquely: the state function u is equal to 0 on the boundary, and at the initial moment u is given by a known function. Equation (1) also contains the fractional derivative with respect to x of order α. In the model under consideration, it is defined using the Riemann–Liouville [25] derivatives:

| (3) |

| (4) |

Formula (3) defines the left-sided derivative and Formula (4) the right-sided derivative; in both cases, it is assumed that . In addition, the derivative with respect to y of order β in Equation (1) is also defined as a Riemann–Liouville derivative.

2.2. Numerical Solution of Direct Problem

We now present the numerical solution of the model defined by Equations (1) and (2). If all data of the model are known, such as the parameters c, ρ, λ, α, β, the initial and boundary conditions, and the geometry of the domain, solving Equation (1) constitutes the direct problem. To solve this problem, we rewrite Equation (1) as follows:

| (5) |

Next, we discretize the domain using a uniform mesh in each dimension. We use the following notation: , , , , , , , , , where  are the mesh sizes and  are the mesh points. The values of the functions at the grid points are denoted by . We approximate the Riemann–Liouville derivative using the shifted Grünwald formula [26]:

| (6) |

| (7) |

where

Similarly, we approximate the fractional derivative with respect to the spatial variable y. For the time derivative, we use the difference quotient:

| (8) |
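The shifted Grünwald approximation (6)–(7) can be illustrated with a short sketch. It assumes the standard weights w_k = (−1)^k binom(α, k), computed with the recurrence w_k = w_{k−1}(1 − (α + 1)/k), and a shift of one node; the symbol w_k and the function names are ours. The test function u(x) = x² on [0, 1] is also our choice, because its left Riemann–Liouville derivative with lower terminal 0 is known in closed form, 2x^(2−α)/Γ(3−α); the order α = 1.8 is illustrative only.

```python
import math


def grunwald_weights(alpha: float, n: int) -> list[float]:
    """Return w_0..w_n with w_k = (-1)^k * binom(alpha, k).

    The recurrence w_k = w_{k-1} * (1 - (alpha + 1) / k) avoids evaluating
    large binomial coefficients directly.
    """
    w = [1.0]
    for k in range(1, n + 1):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / k))
    return w


def shifted_grunwald(u: list[float], h: float, alpha: float, i: int) -> float:
    """Approximate the left Riemann-Liouville derivative of order alpha at x_i.

    Shifted formula (shift of one node): h^(-alpha) * sum_{k=0}^{i+1} w_k u_{i-k+1},
    with u sampled at x_j = j*h and x_0 at the lower terminal.
    """
    w = grunwald_weights(alpha, i + 1)
    s = sum(w[k] * u[i - k + 1] for k in range(i + 2))
    return s / h**alpha


if __name__ == "__main__":
    alpha, h = 1.8, 1e-3
    u = [(j * h) ** 2 for j in range(1001)]      # u(x) = x^2 on [0, 1]
    i = 500                                      # x_i = 0.5
    approx = shifted_grunwald(u, h, alpha, i)
    exact = 2.0 * (i * h) ** (2.0 - alpha) / math.gamma(3.0 - alpha)
    print(f"approx={approx:.6f} exact={exact:.6f}")
```

The approximation is first-order accurate in h, so halving the step should roughly halve the difference from the exact value.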

Let us use the following notation:

| (9) |

| (10) |

where  denotes the first-order derivative (at ) of the function with respect to x. Analogous notation is used for the variable y. Substituting Formulas (6)–(10) and rearranging, we can write the difference scheme for Equation (5) in the following form:

| (11) |

where , and .

To simplify the description of the numerical algorithm to be implemented, we write the difference scheme (11) in matrix form by introducing the following matrices:

where

| (12) |

| (13) |

Now we define two block matrices, S and H. First, we create the matrix S of dimension , which is a block-diagonal matrix with the matrices  on the main diagonal and zeros elsewhere.

| (14) |

Second, we create matrix H, which has the same dimension as matrix S, in the following form:

| (15) |

Now it is possible to write the difference scheme (11) in matrix form:

| (16) |

where

The matrices in the difference scheme (16) are large, so solving the resulting system of equations is time-consuming. We therefore applied the alternating direction implicit method (ADIM) to the difference scheme (11), which significantly reduces the computation time (details can be found in [27]). This is important for inverse problems, in which the direct problem must be solved many times. Let us write the scheme (11) in directionally split (product) form:

| (17) |

The numerical scheme (17) is split into two parts, which are solved first in the x direction and then in the y direction. With this approach, the matrices of the resulting systems of equations have significantly lower dimensions than for the scheme (11). The numerical algorithm consists of two main steps:

- For each fixed , solve the numerical scheme in the x direction. This yields a temporary solution :

(18)

- Then, for each fixed , solve the numerical scheme in the y direction:

(19)

This process is depicted schematically in Figure 1. For the boundary nodes and the initial condition, we applied:

Figure 1.

Numerical solution in horizontal direction (for a fixed node ) (a) and vertical direction (for a fixed node ) (b).
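The two-step procedure (18)–(19) can be illustrated with a small, self-contained sketch. Because Equation (1) is not reproduced here in full, the sketch assumes a model of the form cρ ∂u/∂t = λ(∂^α u/∂x^α + ∂^β u/∂y^β) + f with left-sided Riemann–Liouville derivatives, zero Dirichlet boundary values, orders in (1, 2), and the splitting (I − r_x A_x)(I − r_y A_y)u^{n+1} = u^n + Δt f^{n+1}/(cρ), where A_x, A_y are shifted Grünwald matrices, r_x = λΔt/(cρ Δx^α) and r_y = λΔt/(cρ Δy^β). All function names are ours, and dense Gaussian elimination stands in for the Bi-CGSTAB solver used by the authors; this is not the authors' implementation.

```python
def grunwald_matrix(order, m):
    """Shifted Grunwald matrix on m interior nodes (zero Dirichlet boundary).

    Entry (i, j) equals w_{i-j+1} for j <= i + 1, with
    w_k = (-1)^k * binom(order, k); all other entries are zero.
    """
    w = [1.0]
    for k in range(1, m + 1):
        w.append(w[-1] * (1.0 - (order + 1.0) / k))
    a = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in range(min(i + 2, m)):
            a[i][j] = w[i - j + 1]
    return a


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            if factor != 0.0:
                for k in range(c, n + 1):
                    m[r][k] -= factor * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def adi_step(u, f, dt, dx, dy, alpha, beta, lam, c_rho):
    """Advance interior values u[i][j] (i: x index, j: y index) by one time step.

    f holds the source term at the new time level on the same interior grid.
    The matrices are rebuilt on every call for clarity, not for speed.
    """
    mx, my = len(u), len(u[0])
    rx = lam * dt / (c_rho * dx**alpha)
    ry = lam * dt / (c_rho * dy**beta)
    ax, ay = grunwald_matrix(alpha, mx), grunwald_matrix(beta, my)
    bx = [[float(i == j) - rx * ax[i][j] for j in range(mx)] for i in range(mx)]
    by = [[float(i == j) - ry * ay[i][j] for j in range(my)] for i in range(my)]

    # Step 1 (x direction): for each fixed y_j solve (I - rx*Ax) v = u^n + dt*f/(c*rho).
    v = [[0.0] * my for _ in range(mx)]
    for j in range(my):
        rhs = [u[i][j] + dt * f[i][j] / c_rho for i in range(mx)]
        line = solve_dense(bx, rhs)
        for i in range(mx):
            v[i][j] = line[i]

    # Step 2 (y direction): for each fixed x_i solve (I - ry*Ay) u^{n+1} = v.
    return [solve_dense(by, v[i]) for i in range(mx)]


if __name__ == "__main__":
    m, h = 9, 0.1  # 9 interior nodes per direction on the unit square
    u = [[(i + 1) * h * (1 - (i + 1) * h) * (j + 1) * h * (1 - (j + 1) * h)
          for j in range(m)] for i in range(m)]
    f = [[0.0] * m for _ in range(m)]
    for _ in range(10):
        u = adi_step(u, f, dt=0.01, dx=h, dy=h, alpha=1.8, beta=1.6, lam=1.0, c_rho=1.0)
    print(max(max(row) for row in u))
```

For orders in (1, 2), each one-dimensional matrix I − rA is strictly diagonally dominant with nonpositive off-diagonal entries, so with f = 0 the sketch keeps a nonnegative solution nonnegative and does not increase its maximum.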

The ADIM equations can also be written in matrix form, as follows. First, for each , we define auxiliary vectors :

| (20) |

where . Hence, we obtain an auxiliary matrix of dimension . Then, the numerical scheme (18) can be written in the following matrix form (for ):

| (21) |

where the temporary solution has the form , and , . We thus obtain  systems of equations, each of dimension . Next, we write the scheme (19) for the y direction in matrix form (for ):

| (22) |

where  and . At this stage of the algorithm, we solve  systems of equations of dimension  each. The Bi-CGSTAB method [28,29] is used to solve these systems, which has a significant influence on the computation time. More implementation details and a comparison of computation times for the described method can be found in [27,30].
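The Bi-CGSTAB iteration mentioned above can be sketched in plain Python for a small dense nonsymmetric system, such as those produced by the shifted Grünwald matrices. This is the textbook unpreconditioned variant written from scratch (the function names are ours), without the sparse storage, preconditioning, or breakdown handling a production solver would need; SciPy, for example, provides `scipy.sparse.linalg.bicgstab` for that purpose.

```python
import math


def matvec(a, x):
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))


def bicgstab(a, b, x0=None, tol=1e-10, max_iter=1000):
    """Unpreconditioned Bi-CGSTAB for a dense, nonsymmetric system a x = b.

    Stops when ||r|| / ||b|| < tol. Breakdown (rho == 0 or <r_hat, v> == 0)
    is not handled in this sketch.
    """
    n = len(b)
    x = list(x0) if x0 is not None else [0.0] * n
    r = [bi - axi for bi, axi in zip(b, matvec(a, x))]
    r_hat = r[:]  # fixed shadow residual
    rho_old = alpha = omega = 1.0
    v = [0.0] * n
    p = [0.0] * n
    b_norm = math.sqrt(dot(b, b)) or 1.0
    for _ in range(max_iter):
        if math.sqrt(dot(r, r)) / b_norm < tol:
            break
        rho = dot(r_hat, r)
        beta = (rho / rho_old) * (alpha / omega)
        p = [ri + beta * (pk - omega * vk) for ri, pk, vk in zip(r, p, v)]
        v = matvec(a, p)
        alpha = rho / dot(r_hat, v)
        s = [ri - alpha * vk for ri, vk in zip(r, v)]
        t = matvec(a, s)
        tt = dot(t, t)
        # tt == 0 means s == 0 (for nonsingular a): the half step already converged.
        omega = dot(t, s) / tt if tt > 0.0 else 0.0
        x = [xk + alpha * pk + omega * sk for xk, pk, sk in zip(x, p, s)]
        r = [sk - omega * tk for sk, tk in zip(s, t)]
        rho_old = rho
    return x


if __name__ == "__main__":
    a = [[4.0, 1.0, 0.0], [2.0, 5.0, 1.0], [0.0, 1.0, 3.0]]
    b = [1.0, 2.0, 3.0]
    print(bicgstab(a, b))
```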

3. Inverse Problem

In many engineering problems, particularly in simulations and mathematical modeling, an inverse problem must be solved. Here, the inverse problem consists of selecting the input parameters of the model (1) and (2) so as to obtain the desired output data. Values of the state function u at selected points of the domain (so-called measurement points) are treated as input data for the inverse problem, and the task is to select the unknown model parameters so that u takes the given values at these points. Problems of this type are ill-conditioned, which may result in instability or non-uniqueness of the solution [31,32]. Details of the solution algorithm are presented in the following sections.

3.1. Parameter Identification

In the model (1) and (2), the following data are assumed:

| (23) |

| (24) |

where . The inverse problem consists of determining the parameters λ and α. The input data for the inverse problem are values of the function u at selected points of the domain. Additionally, to test the algorithm, the following is assumed:

- Two different grids: 100 × 100 × 200 and 160 × 160 × 250;

- Different levels of measurement data disturbances (errors with a normal distribution): 0%, 2%, 5%, and 10%.

Figure 2.

Arrangements of measuring points.
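The text states only that the measurement data are disturbed by normally distributed errors at the listed levels. A minimal sketch, assuming a relative perturbation u·(1 + δ·ξ) with ξ drawn from the standard normal distribution and δ the noise level, could look like this; the function name, the seed argument, and the sample values are hypothetical.

```python
import random


def add_noise(values, level, seed=0):
    """Return perturbed measurements u * (1 + level * xi), xi ~ N(0, 1).

    `level` is the relative noise level (0.02 for 2%); a fixed seed makes the
    perturbed data set reproducible between runs of the inverse solver.
    """
    rng = random.Random(seed)
    return [u * (1.0 + level * rng.gauss(0.0, 1.0)) for u in values]


exact = [300.0, 310.5, 320.25]  # hypothetical exact values at measuring points
for level in (0.0, 0.02, 0.05, 0.10):
    print(level, add_noise(exact, level, seed=42))
```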

To solve the problem, we create an objective function that compares the values of u computed at the measurement points for the current values of the sought parameters with the measurement data. The objective function is defined as follows:

| (25) |

where  and  are the number of measuring points and the number of measurements at a given measuring point, respectively. In the considered example, , and  depends on the mesh used. By  we denote the values of the function u obtained in the algorithm for fixed parameters λ and α, and by  the measurement data. By finding the minimum of the objective function (25), we find the sought parameters.
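Since Formula (25) is not reproduced here, the sketch below assumes the usual least-squares form J = Σ_i Σ_j (u_ij − û_ij)², summing over measuring points i and measurement times j. The direct solver is passed in as a callable; `toy_forward` is a hypothetical stand-in used only to show the calling convention, not the model (1)–(2).

```python
def objective(params, forward, measured):
    """Least-squares misfit J = sum_i sum_j (u_ij(params) - m_ij)^2.

    forward(params) must return computed values u[i][j] at measuring point i
    and measurement time j, in the same layout as `measured`.
    """
    computed = forward(params)
    if len(computed) != len(measured):
        raise ValueError("different number of measuring points")
    total = 0.0
    for u_row, m_row in zip(computed, measured):
        if len(u_row) != len(m_row):
            raise ValueError("different number of measurements at a point")
        total += sum((u - m) ** 2 for u, m in zip(u_row, m_row))
    return total


def toy_forward(params):
    """Hypothetical stand-in for the direct solver (not the model (1)-(2))."""
    lam, alpha = params
    points, times = (0.25, 0.5, 0.75), (0.1, 0.2, 0.3)
    return [[lam * t * x ** alpha for t in times] for x in points]


measured = toy_forward((240.0, 0.8))
print(objective((240.0, 0.8), toy_forward, measured))  # 0.0
print(objective((250.0, 0.7), toy_forward, measured) > 0.0)  # True
```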

3.2. Function Minimization

Any heuristic algorithm (e.g., a swarm algorithm) can be used to minimize the objective function. In this paper, we decided to use two algorithms:

Ant colony optimization algorithm (ACO).

Hooke–Jeeves algorithm (HJ).

In this section, we describe both algorithms.

3.2.1. Ant Colony Optimization Algorithm

The presented ACO algorithm is probabilistic, so each execution may give a different result. With properly selected algorithm parameters, the results of successive runs should converge to the same solution. The algorithm is inspired by the behavior of ant colonies in nature. More about the ACO algorithm and its applications can be found in [33,34,35]. To describe the algorithm, we introduce the following notation:

Algorithm 1 presents the ACO algorithm step by step. The number of objective function evaluations in the ACO algorithm is equal to .
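Because the step-by-step listing of Algorithm 1 is not reproduced here, the following sketch shows one common continuous ACO variant (archive-based, in the style of ACO_R) using the quantities from the Nomenclature: L pheromone spots, M ants (threads) per iteration, I iterations, and the parameter q. The spread factor `xi`, the Gaussian rank weights, and the function names are assumptions of this sketch and may differ from the authors' Algorithm 1. In this sketch the number of objective function evaluations is L + I·M.

```python
import math
import random


def aco_r(f, bounds, L=10, M=10, I=100, q=0.1, xi=0.85, seed=0):
    """Archive-based continuous ant colony optimization (ACO_R-style sketch).

    L: number of pheromone spots (archive size, L >= 2), M: ants per iteration,
    I: number of iterations, q: locality of the search (small q favours the best
    spots), xi: spread of the Gaussian sampling. Returns (x_best, f_best, evals).
    """
    rng = random.Random(seed)
    evals = 0

    def evaluate(x):
        nonlocal evals
        evals += 1
        return f(x)

    # Initial pheromone spots: L random points in the search box, best first.
    archive = []
    for _ in range(L):
        x = [rng.uniform(lo, hi) for lo, hi in bounds]
        archive.append((evaluate(x), x))
    archive.sort(key=lambda spot: spot[0])

    # Rank-based selection weights (Gaussian in the rank, width q*L).
    weights = [math.exp(-(rank ** 2) / (2.0 * (q * L) ** 2)) for rank in range(L)]

    for _ in range(I):
        new_spots = []
        for _ in range(M):
            guide = rng.choices(archive, weights=weights)[0][1]
            x = []
            for d, (lo, hi) in enumerate(bounds):
                # Standard deviation: mean distance of the archive to the guide.
                sigma = xi * sum(abs(s[1][d] - guide[d]) for s in archive) / (L - 1)
                x.append(min(hi, max(lo, rng.gauss(guide[d], sigma))))
            new_spots.append((evaluate(x), x))
        # The L best solutions found so far become the new pheromone spots.
        archive = sorted(archive + new_spots, key=lambda spot: spot[0])[:L]

    best_value, best_x = archive[0]
    return best_x, best_value, evals


if __name__ == "__main__":
    def quad(p):
        return (p[0] - 1.0) ** 2 + (p[1] - 2.0) ** 2

    x, fx, n = aco_r(quad, [(-5.0, 5.0), (-5.0, 5.0)], I=200, seed=3)
    print(x, fx, n)  # n == 2010 (= L + I*M)
```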

3.2.2. Hooke–Jeeves Algorithm

The Hooke–Jeeves algorithm is a deterministic algorithm for searching for the minimum of an objective function. It is based on two main operations:

Exploratory move. It is used to test the behavior of the objective function in a small selected region by taking trial steps along all directions of the orthogonal basis.

Pattern move. It consists of moving in a strictly determined manner to the next area where the next trial step is considered, but only if at least one of the steps performed was successful.

In this algorithm, we consider the following parameters:

Pseudocode for the Hooke–Jeeves method is presented in Algorithm 2. A drawback of this method is that it may get trapped in a local minimum when the objective function is more complicated. More details about the algorithm and its applications can be found in [36,37].
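The exploratory and pattern moves described above can be sketched as follows. The sketch uses the parameters listed in the Nomenclature (starting point, step length vector, stop criterion) plus a step reduction factor `shrink`, which is our assumption; it is a generic Hooke–Jeeves implementation, not a transcription of Algorithm 2.

```python
def hooke_jeeves(f, x0, step, eps=1e-6, shrink=0.5):
    """Minimize f by Hooke-Jeeves pattern search.

    x0: starting point, step: initial step lengths per coordinate,
    eps: stop criterion (largest step length), shrink: step reduction factor.
    Returns (x, f(x), number_of_objective_evaluations).
    """
    evals = 0

    def F(x):
        nonlocal evals
        evals += 1
        return f(x)

    def explore(base, f_base, h):
        # Exploratory move: try +h_i, then -h_i, along each basis vector e_i.
        x, fx = list(base), f_base
        for i in range(len(x)):
            for d in (h[i], -h[i]):
                trial = list(x)
                trial[i] += d
                ft = F(trial)
                if ft < fx:
                    x, fx = trial, ft
                    break
        return x, fx

    xb = list(x0)
    fb = F(xb)
    h = list(step)
    while max(h) > eps:
        xn, fn = explore(xb, fb, h)
        if fn < fb:
            # Pattern moves: keep jumping along xn - xb while they pay off.
            while True:
                xp = [2.0 * a - b for a, b in zip(xn, xb)]
                xb, fb = xn, fn
                xe, fe = explore(xp, F(xp), h)
                if fe < fb:
                    xn, fn = xe, fe
                else:
                    break
        else:
            h = [s * shrink for s in h]  # no improvement: refine the steps
    return xb, fb, evals


if __name__ == "__main__":
    def quad(p):
        return (p[0] - 1.0) ** 2 + (p[1] - 2.0) ** 2

    x, fx, n = hooke_jeeves(quad, [0.0, 0.0], [0.5, 0.5])
    print(x, fx)  # [1.0, 2.0] 0.0
```

Because the method is deterministic, the returned point and the evaluation count depend only on the starting point, the step vector, and the stop criterion, which is consistent with the per-starting-point evaluation counts reported later in Table 2.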

Algorithm 1. Ant Colony Optimization algorithm (ACO).

Algorithm 2. Hooke–Jeeves algorithm (pseudocode).

4. Results—Numerical Examples

We consider the inverse problem described in Section 3.1. In the model (1) and (2), we set the data given by Equations (23) and (24). We used two different grids, 100 × 100 × 200 and 160 × 160 × 250, and different levels of measurement data disturbances (input data for the inverse problem): 0%, 2%, 5%, and 10%. The unknown data in the model are λ and α, and these need to be identified using the presented algorithm. To examine and test the algorithm, we use the known exact values of these parameters: λ = 240 and α = 0.8.

First, we present the results obtained using the ACO algorithm. We set the following parameters of the ant algorithm:

Based on these parameters, we can determine the number of calls to the objective function, which in our example is 656. The obtained results are presented in Table 1. The best results were obtained for exact input data: for the 100 × 100 × 200 mesh, the relative errors of reconstruction of λ and α are 2.83 × 10⁻²% and 5.84 × 10⁻¹%, respectively, and for the 160 × 160 × 250 mesh, these errors are 1.51 × 10⁻¹% and 6.87 × 10⁻¹%. For input data with a pseudo-random error, the obtained results are also very good, and the errors of the reconstructed parameters do not exceed the input data disturbance errors. In particular, the errors of reconstruction of the coefficient λ are very small and do not exceed 1% (except for input data disturbed by a 10% error on the 100 × 100 × 200 grid). The relative errors of the reconstructed parameter α are greater than those of λ, most likely because the sought value of α is significantly lower than that of λ. As expected, the values of the minimized objective function increase with the level of input data disturbances. Except in a few cases, the mesh density did not significantly affect the results.
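The text does not state how the relative errors in Table 1 are defined. Assuming the usual definition expressed in percent, with the tilde denoting the reconstructed value (our notation) and λ = 240 the exact value, the entry for 10% noise on the 100 × 100 × 200 mesh is reproduced as follows; the error for α is computed in the same way.

```latex
\delta_{\lambda} = \frac{|\tilde{\lambda} - \lambda|}{\lambda} \cdot 100\%,
\qquad
\delta_{\lambda} = \frac{|236.61 - 240|}{240} \cdot 100\%
                 = \frac{3.39}{240} \cdot 100\% \approx 1.41\%.
```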

Table 1.

Results of calculations for the ACO algorithm: λ, reconstructed value of the thermal conductivity coefficient; α, reconstructed value of the x-direction derivative order; δλ, δα, relative errors of reconstruction [%]; J, value of the objective function; σJ, standard deviation of the objective function.

| Mesh Size | Noise | λ | δλ [%] | α | δα [%] | J | σJ |
|---|---|---|---|---|---|---|---|
| 100 × 100 × 200 | 0% | 240.06 | 2.83 × 10⁻² | 0.8046 | 5.84 × 10⁻¹ | 2.24 | 8.72 |
| | 2% | 240.71 | 2.95 × 10⁻¹ | 0.7934 | 8.14 × 10⁻¹ | 725.13 | 5.23 |
| | 5% | 241.49 | 6.21 × 10⁻¹ | 0.7735 | 3.31 | 4994.21 | 14.72 |
| | 10% | 236.61 | 1.41 | 0.7798 | 2.52 | 19,424.61 | 6.44 |
| 160 × 160 × 250 | 0% | 239.63 | 1.51 × 10⁻¹ | 0.8054 | 6.87 × 10⁻¹ | 1.72 | 19.17 |
| | 2% | 239.11 | 3.71 × 10⁻¹ | 0.8131 | 1.64 | 1020.84 | 11.39 |
| | 5% | 241.28 | 5.36 × 10⁻¹ | 0.7943 | 7.03 × 10⁻¹ | 5396.34 | 5.41 |
| | 10% | 241.76 | 7.34 × 10⁻¹ | 0.7761 | 2.98 | 23,675.2 | 2.66 |

Figure 3 shows how the value of the objective function changes with the iteration number for the four input data cases. The plots omit the objective function values for the initial iterations, because these values were relatively high and including them would reduce legibility. In the last few (2–5) iterations, the values of the objective function no longer change. Appropriate selection of the ACO parameters affects the computation time and is not always a simple task: it depends on the complexity of the objective function and on the number of sought parameters (the size of the problem). In particular, a situation in which the algorithm does not change the solution over a dozen or more consecutive iterations should be avoided. In the presented example, the selected ACO parameters, such as the number of iterations and the population size, appear appropriate.

Figure 3.

Values of objective function J in iterations of ACO algorithm for different levels of input data noise: (a) 0%, (b) 2%, (c) 5%, (d) 10%.

For comparison, we now use the deterministic Hooke–Jeeves algorithm with the following parameters:

Since this is a deterministic algorithm, the resulting solution, as well as the number of calls to the objective function, depends on the starting point and the stop criterion . In our example, we consider four different starting points: (100, 0.2), (300, 0.1), (450, 0.5), and (500, 0.9). Regardless of the selected starting point, the same solution was always obtained; note, however, that whenever the value of any of the reconstructed parameters exceeded the predetermined limits, a so-called penalty function was applied. This was significant for one of the starting points, for which the algorithm without the penalty exceeded the limits and stopped at a local minimum; e.g., for the  grid and  disturbances, we obtained the results . Similar results were obtained for the remaining cases with that starting point. Table 2 shows the results obtained using the Hooke–Jeeves algorithm. Comparing the results of both algorithms, we can see that in most cases the errors in the reconstruction of the parameters are smaller for the Hooke–Jeeves algorithm; e.g., for the  mesh and  input data disturbance errors, the errors in the sought parameters λ and α for the HJ algorithm were  and , respectively, while for the ACO algorithm these errors were  and . In addition, the value of the objective function was smaller for the HJ algorithm: , . As mentioned earlier, without the penalty function the HJ algorithm returned unsatisfactory results for that starting point. This should be kept in mind when the objective function is more complicated, for example, when the number of parameters to be found increases.
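The text does not give the form of the penalty function. One common choice, shown here as an assumption, evaluates the objective at the point projected onto the admissible box and adds a weighted squared distance to that box, so the direct solver is never called with inadmissible parameters. The limits for (λ, α) and the weight below are hypothetical.

```python
def with_penalty(f, bounds, weight=1.0e6):
    """Return an objective that stays finite outside the admissible box.

    Outside `bounds`, f is evaluated at the nearest admissible point and
    weight * (squared distance to the box) is added, so the direct solver
    never receives inadmissible parameters.
    """
    def penalized(x):
        clipped = [min(hi, max(lo, xi)) for xi, (lo, hi) in zip(x, bounds)]
        violation = sum((xi - ci) ** 2 for xi, ci in zip(x, clipped))
        return f(clipped) + weight * violation
    return penalized


if __name__ == "__main__":
    # Hypothetical limits for (lambda, alpha); the paper's limits are not given here.
    limits = [(100.0, 500.0), (0.1, 0.9)]
    J = with_penalty(lambda p: (p[0] - 240.0) ** 2 + (p[1] - 0.8) ** 2, limits)
    print(J([240.0, 0.8]), J([240.0, 1.2]) > J([240.0, 0.9]))
```

Any minimizer, including a Hooke–Jeeves search, can then be run on the wrapped function instead of the raw objective.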

Table 2.

Results of calculations for the Hooke–Jeeves algorithm: λ, reconstructed value of the thermal conductivity coefficient; α, reconstructed value of the x-direction derivative order; δλ, δα, relative errors of reconstruction [%]; J, value of the objective function; Evaluations, number of objective function evaluations; SP, starting point. The reconstructed values are the same for all starting points.

| Mesh Size | Noise | SP | λ | δλ [%] | α | δα [%] | J | Evaluations |
|---|---|---|---|---|---|---|---|---|
| 100 × 100 × 200 | 0% | (100, 0.2) | 240.15 | 6.57 × 10⁻² | 0.7993 | 8.33 × 10⁻² | 0.0182 | 272 |
| | | (300, 0.1) | | | | | | 246 |
| | | (450, 0.5) | | | | | | 240 |
| | | (500, 0.9) | | | | | | 299 |
| | 2% | (100, 0.2) | 240.38 | 1.59 × 10⁻¹ | 0.7971 | 3.61 × 10⁻¹ | 724.57 | 254 |
| | | (300, 0.1) | | | | | | 217 |
| | | (450, 0.5) | | | | | | 235 |
| | | (500, 0.9) | | | | | | 270 |
| | 5% | (100, 0.2) | 241.44 | 6.03 × 10⁻¹ | 0.7757 | 3.03 | 4993.85 | 230 |
| | | (300, 0.1) | | | | | | 203 |
| | | (450, 0.5) | | | | | | 257 |
| | | (500, 0.9) | | | | | | 255 |
| | 10% | (100, 0.2) | 236.86 | 1.31 | 0.7781 | 2.73 | 19,424.36 | 217 |
| | | (300, 0.1) | | | | | | 199 |
| | | (450, 0.5) | | | | | | 239 |
| | | (500, 0.9) | | | | | | 245 |
| 160 × 160 × 250 | 0% | (100, 0.2) | 240.06 | 2.51 × 10⁻² | 0.7997 | 3.21 × 10⁻² | 0.0036 | 265 |
| | | (300, 0.1) | | | | | | 225 |
| | | (450, 0.5) | | | | | | 221 |
| | | (500, 0.9) | | | | | | 292 |
| | 2% | (100, 0.2) | 239.95 | 1.98 × 10⁻² | 0.8018 | 2.31 × 10⁻¹ | 1014.21 | 257 |
| | | (300, 0.1) | | | | | | 231 |
| | | (450, 0.5) | | | | | | 233 |
| | | (500, 0.9) | | | | | | 284 |
| | 5% | (100, 0.2) | 240.85 | 3.55 × 10⁻¹ | 0.7935 | 8.11 × 10⁻¹ | 5393.44 | 241 |
| | | (300, 0.1) | | | | | | 213 |
| | | (450, 0.5) | | | | | | 243 |
| | | (500, 0.9) | | | | | | 266 |
| | 10% | (100, 0.2) | 241.44 | 6.02 × 10⁻¹ | 0.7817 | 2.28 | 23,673.38 | 255 |
| | | (300, 0.1) | | | | | | 227 |
| | | (450, 0.5) | | | | | | 273 |
| | | (500, 0.9) | | | | | | 280 |

We now present the errors of reconstruction of the state function u at the grid points, summarized in Table 3. The mean errors of reconstruction of u are low and do not exceed 0.26 K in any of the analyzed cases. We can also observe that the maximum errors are in most cases greater for the 100 × 100 × 200 grid; this is particularly visible for the input data noised by 5% and 10% errors.
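The average and maximal absolute errors reported in Table 3 can be computed as below, assuming they are taken over all grid nodes of the compared solutions; the exact set of nodes used by the authors (e.g., which time levels) is not stated, and the function name is ours.

```python
def reconstruction_errors(u_exact, u_rec):
    """Return (average, maximal) absolute error over all grid nodes.

    Both arguments are nested lists with the same layout, e.g. u[i][j] for the
    spatial nodes at one time level (flatten the time levels first if needed).
    """
    diffs = [abs(a - b)
             for row_e, row_r in zip(u_exact, u_rec)
             for a, b in zip(row_e, row_r)]
    return sum(diffs) / len(diffs), max(diffs)


print(reconstruction_errors([[1.0, 2.0], [3.0, 4.0]], [[1.0, 2.5], [2.0, 4.0]]))
# (0.375, 1.0)
```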

Table 3.

Errors of reconstruction of the function u at the grid points when two parameters are reconstructed (Δavg, average absolute error; Δmax, maximal absolute error).

| Mesh | Algorithm | Error | 0% | 2% | 5% | 10% |
|---|---|---|---|---|---|---|
| 100 × 100 × 200 | ACO | Δavg [K] | 3.04 × 10⁻² | 2.94 × 10⁻² | 1.37 × 10⁻¹ | 2.59 × 10⁻¹ |
| | | Δmax [K] | 1.95 × 10⁻¹ | 2.68 × 10⁻¹ | 1.13 | 2.46 |
| | HJ | Δavg [K] | 6.28 × 10⁻³ | 1.36 × 10⁻² | 1.24 × 10⁻¹ | 2.59 × 10⁻¹ |
| | | Δmax [K] | 1.11 × 10⁻¹ | 1.24 × 10⁻¹ | 1.04 | 2.42 |
| 160 × 160 × 250 | ACO | Δavg [K] | 2.77 × 10⁻² | 6.55 × 10⁻² | 4.65 × 10⁻² | 1.77 × 10⁻¹ |
| | | Δmax [K] | 2.19 × 10⁻¹ | 5.27 × 10⁻¹ | 3.11 × 10⁻¹ | 9.96 × 10⁻¹ |
| | HJ | Δavg [K] | 2.68 × 10⁻³ | 1.08 × 10⁻² | 3.36 × 10⁻² | 8.84 × 10⁻² |
| | | Δmax [K] | 4.72 × 10⁻² | 7.43 × 10⁻² | 2.53 × 10⁻¹ | 7.55 × 10⁻¹ |

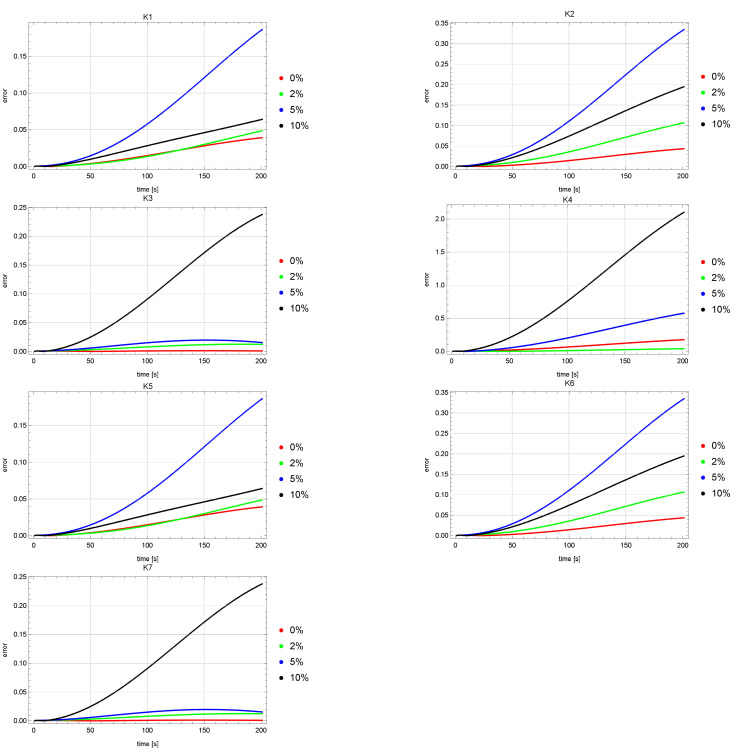

Figures 4 and 5 show the errors of reconstruction of the state function u at the measurement points. The error plots for the ACO and HJ algorithms are quite similar. For some of the measurement points, greater errors were obtained for the input data noised by the  error than for the input data disturbed by the  error. The u reconstruction errors for the unperturbed input data and for the input data perturbed by the  error (red and green) are much lower than for the other input data (blue and black).

Figure 4.

Errors of reconstruction of the state function u at the measurement points for the ACO algorithm.

Figure 5.

Errors of reconstruction of the state function u at the measurement points for the HJ algorithm.

Sensitivity Analysis

A sensitivity analysis was also performed for both reconstructed parameters [38]. The sensitivity coefficients are the derivatives of the measured quantity with respect to the reconstructed quantity:

| (26) |

| (27) |

In the calculations, both of the above derivatives are approximated by central difference quotients:

| (28) |

| (29) |

where [39], and denotes the state function determined for a given value of p.
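The central difference quotients (28)–(29) can be sketched as follows for a direct solver that returns the state at one measuring point for all time levels. The relative perturbation dp = eps·p, the value of `eps`, and the toy state function are hypothetical choices for illustration; the step actually used by the authors follows [39].

```python
def sensitivity(u, params, name, eps=1.0e-3):
    """Central-difference sensitivity coefficient du/dp along the time axis.

    u(params) returns the state at one measuring point for all time levels;
    the perturbation is relative, dp = eps * p (eps is a hypothetical choice).
    """
    p = params[name]
    dp = eps * p
    up = u({**params, name: p + dp})
    um = u({**params, name: p - dp})
    return [(a - b) / (2.0 * dp) for a, b in zip(up, um)]


def toy_state(params):
    # Hypothetical stand-in for the direct solver at one measuring point.
    return [params["lam"] * t ** params["alpha"] for t in (1.0, 2.0)]


print(sensitivity(toy_state, {"lam": 240.0, "alpha": 0.8}, "lam"))
print(sensitivity(toy_state, {"lam": 240.0, "alpha": 0.8}, "alpha"))
```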

We considered a test case with  and . Figure 6 shows the variability of the sensitivity coefficients at the measurement points over the entire analyzed time interval. The obtained results were symmetric with respect to the vertical axis of symmetry of the domain (the line ). Therefore, the sensitivity coefficients at points , , and  are equal to the coefficients at points , , and , respectively. The sensitivity analysis showed that the positions selected for the measurement points are appropriate: they ensure sufficient sensitivity of the state function to changes in the values of the reconstructed parameters.

Figure 6.

Sensitivity coefficient in measurement points along the time domain: (a) , (b) .

5. Conclusions

This paper presents algorithms for solving the direct and inverse problems for a model consisting of a differential equation with a Riemann–Liouville fractional derivative with respect to the spatial variables. Equations of this type are used to describe anomalous diffusion, e.g., anomalous heat transfer in porous media. The inverse problem was reduced to finding the minimum of a suitably constructed objective function. Two algorithms were used for this purpose: the ant colony optimization algorithm and the Hooke–Jeeves method. From the presented numerical example, we can draw the following conclusions:

The obtained results are satisfactory, and the errors of parameter reconstruction are small.

Both presented algorithms returned similar results, but in the case of the HJ algorithm, it was necessary to use the penalty function for one of the starting points.

The number of objective function evaluations was smaller for the HJ algorithm (199–299) than for the ACO algorithm (656).

The difference scheme used is unconditionally stable and has an approximation order equal to  [26]. The scheme converges quickly: already for coarse meshes, the approximation errors of the direct problem solution are small [27]. In addition, for the inverse problem considered in this paper, a relatively coarse mesh suffices to reconstruct the sought parameters very well. The presented method can be used as a tool for parameter training in artificial neural networks.

Nomenclature

The following symbols are used in this manuscript:

| Symbol | Description |
|---|---|
| c | specific heat |
| | i-th vector of the orthogonal basis in the HJ method |
| f | additional source term |
| | value of function f at point |
| g | auxiliary coefficient to determine |
| I | number of iterations in the ACO algorithm |
| J | objective function |
| | i-th measurement point |
| L | number of pheromone spots in the ACO algorithm |
| | length in the x-direction |
| | length in the y-direction |
| | mesh size in the x-direction |
| | mesh size in the y-direction |
| n | number of sought parameters in the ACO algorithm |
| | number of threads in the ACO algorithm |
| N | mesh size in time |
| | coefficients of matrices |
| | auxiliary matrices to describe the solution of the direct problem |
| t | time |
| u | state function (temperature) |
| | value of the state function at point |
| q | parameter in the ACO algorithm |
| x | spatial variable |
| | value of the x variable for |
| | starting point in the HJ method |
| y | spatial variable |
| | value of the y variable for |
| T | final moment of time |
| | value of time for |
| Greek Symbols | |
| α | order of derivative in the x-direction |
| β | order of derivative in the y-direction |
| Γ | gamma function |
| | auxiliary operators to describe the solution of the direct problem |
| | time step |
| | mesh step in the x-direction |
| | mesh step in the y-direction |
| λ | thermal conductivity |
| | value of thermal conductivity at point |
| | temperature in |
| ρ | mass density |
| | stop criterion in the HJ method |
| | step length vector in the HJ method |
| | weight in the shifted Grünwald formula |
| | domain of the differential equation |

Author Contributions

Conceptualization, R.B. and D.S.; methodology, R.B., G.C. and G.L.S.; software, R.B.; validation, A.W., G.L.S. and D.S.; formal analysis, D.S.; investigation, R.B. and A.W.; supervision, D.S. and G.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Dong N.P., Long H.V., Giang N.L. The fuzzy fractional SIQR model of computer virus propagation in wireless sensor network using Caputo Atangana–Baleanu derivatives. Fuzzy Sets Syst. 2022;429:28–59. doi: 10.1016/j.fss.2021.04.012.

2. Viera-Martin E., Gomez-Aguilar J.F., Solis-Perez J.E., Hernandez-Perez J.A., Escobar-Jimenez R.F. Artificial neural networks: A practical review of applications involving fractional calculus. Eur. Phys. J. Spec. Top. 2022:1–37. doi: 10.1140/epjs/s11734-022-00455-3.

3. Muresan C.I., Birs I.R., Dulf E.H., Copot D., Miclea L. A Review of Recent Advances in Fractional-Order Sensing and Filtering Techniques. Sensors. 2021;21:5920. doi: 10.3390/s21175920.

4. Fuss F.K., Tan A.M., Weizman Y. ‘Electrical viscosity’ of piezoresistive sensors: Novel signal processing method, assessment of manufacturing quality, and proposal of an industrial standard. Biosens. Bioelectron. 2019;141:111408. doi: 10.1016/j.bios.2019.111408.

5. Lopes A.M., Tenreiro Machado J.A., Galhano A.M. Towards fractional sensors. J. Vib. Control. 2019;25:52–60. doi: 10.1177/1077546318769163.

6. Oprzędkiewicz K., Mitkowski W., Rosół M. Fractional Order Model of the Two Dimensional Heat Transfer Process. Energies. 2021;14:6371. doi: 10.3390/en14196371.

7. Fahmy M.A. A new LRBFCM-GBEM modeling algorithm for general solution of time fractional-order dual phase lag bioheat transfer problems in functionally graded tissues. Numer. Heat Transf. Part A Appl. 2019;75:616–626. doi: 10.1080/10407782.2019.1608770.

8. Gao X., Jiang X., Chen S. The numerical method for the moving boundary problem with space-fractional derivative in drug release devices. Appl. Math. Model. 2015;39:2385–2391. doi: 10.1016/j.apm.2014.10.053.

9. Błasik M., Klimek M. Numerical solution of the one phase 1D fractional Stefan problem using the front fixing method. Math. Methods Appl. Sci. 2014;38:3214–3228. doi: 10.1002/mma.3292.

10. Andreozzi A., Brunese L., Iasiello M., Tucci C., Vanoli G.P. Modeling Heat Transfer in Tumors: A Review of Thermal Therapies. Ann. Biomed. Eng. 2018;47:676–693. doi: 10.1007/s10439-018-02177-x.

11. Chen D., Zhang J., Li Z. A Novel Fixed-Time Trajectory Tracking Strategy of Unmanned Surface Vessel Based on the Fractional Sliding Mode Control Method. Electronics. 2022;11:726. doi: 10.3390/electronics11050726.

12. Khooban M., Gheisarnejad M., Vafamand N., Boudjadar J. Electric Vehicle Power Propulsion System Control Based on Time-Varying Fractional Calculus: Implementation and Experimental Results. IEEE Trans. Intell. Veh. 2019;4:255–264. doi: 10.1109/TIV.2019.2904415.

13. Błasik M. Numerical Method for the One Phase 1D Fractional Stefan Problem Supported by an Artificial Neural Network. Adv. Intell. Syst. Comput. 2021;1288:568–587. doi: 10.1007/978-3-030-63128-4_44.

14. Amin R., Shah K., Asif M., Khan I. A computational algorithm for the numerical solution of fractional order delay differential equations. Appl. Math. Comput. 2021;402:125863. doi: 10.1016/j.amc.2020.125863.

15. Bu W., Shu S., Yue X., Xiao A., Zeng W. Space–time finite element method for the multi-term time–space fractional diffusion equation on a two-dimensional domain. Comput. Math. Appl. 2019;78:1367–1379. doi: 10.1016/j.camwa.2018.11.033.

16. Concezzi M., Spigler R. An ADI Method for the Numerical Solution of 3D Fractional Reaction-Diffusion Equations. Fractal Fract. 2020;4:57. doi: 10.3390/fractalfract4040057.

17. Moura Neto F.D., da Silva Neto A.J. An Introduction to Inverse Problems with Applications. Springer; Berlin, Germany: 2013.

18. Yuldashev T.K., Kadirkulov B.J. Inverse Problem for a Partial Differential Equation with Gerasimov–Caputo-Type Operator and Degeneration. Fractal Fract. 2021;5:58. doi: 10.3390/fractalfract5020058.

19. Kinash N., Janno J. An Inverse Problem for a Generalized Fractional Derivative with an Application in Reconstruction of Time- and Space-Dependent Sources in Fractional Diffusion and Wave Equations. Mathematics. 2019;7:1138. doi: 10.3390/math7121138.

20. Brociek R., Chmielowska A., Słota D. Comparison of the probabilistic ant colony optimization algorithm and some iteration method in application for solving the inverse problem on model with the Caputo type fractional derivative. Entropy. 2020;22:555. doi: 10.3390/e22050555.

21. Shrestha A., Mahmood A. Review of deep learning algorithms and architectures. IEEE Access. 2019;7:53040–53065. doi: 10.1109/ACCESS.2019.2912200.

22. Voller V.R. Anomalous heat transfer: Examples, fundamentals, and fractional calculus models. Adv. Heat Transf. 2018;50:338–380.

23. Sierociuk D., Dzieliński A., Sarwas G., Petras I., Podlubny I., Skovranek T. Modelling heat transfer in heterogeneous media using fractional calculus. Philos. Trans. R. Soc. A. 2013;371:20120146. doi: 10.1098/rsta.2012.0146.

24. Bagiolli M., La Nave G., Phillips P.W. Anomalous diffusion and Noether’s second theorem. Phys. Rev. E. 2021;103:032115. doi: 10.1103/PhysRevE.103.032115.

25. Podlubny I. Fractional Differential Equations. Academic Press; San Diego, CA, USA: 1999.

26. Tian W.Y., Zhou H., Deng W.H. A class of second order difference approximations for solving space fractional diffusion equations. Math. Comput. 2015;84:1703–1727. doi: 10.1090/S0025-5718-2015-02917-2.

27. Brociek R., Wajda A., Słota D. Inverse problem for a two-dimensional anomalous diffusion equation with a fractional derivative of the Riemann–Liouville type. Energies. 2021;14:3082. doi: 10.3390/en14113082.

28. Barrett R., Berry M., Chan T.F., Demmel J., Donato J., Dongarra J., Eijkhout V., Pozo R., Romine C., van der Vorst H. Templates for the Solution of Linear Systems: Building Blocks for Iterative Methods. SIAM; Philadelphia, PA, USA: 1994.

29. van der Vorst H.A. Bi-CGSTAB: A fast and smoothly converging variant of Bi-CG for the solution of nonsymmetric linear systems. SIAM J. Sci. Stat. Comput. 1992;13:631–644. doi: 10.1137/0913035.

30. Yang S., Liu F., Feng L., Turner I.W. Efficient numerical methods for the nonlinear two-sided space-fractional diffusion equation with variable coefficients. Appl. Numer. Math. 2020;157:55–68. doi: 10.1016/j.apnum.2020.05.016.

31. Jin B., Rundell W. A tutorial on inverse problems for anomalous diffusion processes. Inverse Probl. 2015;31:035003. doi: 10.1088/0266-5611/31/3/035003.

32. Mohammad-Djafari A. Regularization, Bayesian Inference, and Machine Learning Methods for Inverse Problems. Entropy. 2021;23:1673. doi: 10.3390/e23121673.

33. Socha K., Dorigo M. Ant colony optimization for continuous domains. Eur. J. Oper. Res. 2008;185:1155–1173. doi: 10.1016/j.ejor.2006.06.046.

34. Wu Y., Ma W., Miao Q., Wang S. Multimodal continuous ant colony optimization for multisensor remote sensing image registration with local search. Swarm Evol. Comput. 2019;47:89–95. doi: 10.1016/j.swevo.2017.07.004.

35. Brociek R., Słota D. Application of real ant colony optimization algorithm to solve space fractional heat conduction inverse problem. Commun. Comput. Inf. Sci. 2016;639:369–379. doi: 10.1007/978-3-319-46254-7_29.

36. Hooke R., Jeeves T.A. “Direct Search” Solution of Numerical and Statistical Problems. J. ACM. 1961;8:212–229. doi: 10.1145/321062.321069.

37. Shakya A., Mishra M., Maity D., Santarsiero G. Structural health monitoring based on the hybrid ant colony algorithm by using Hooke–Jeeves pattern search. SN Appl. Sci. 2019;1:799. doi: 10.1007/s42452-019-0808-6.

38. Marinho G.M., Júnior J.L., Knupp D.C., Silva Neto A.J., Vieira Vasconcellos J.F. Inverse problem in space fractional advection diffusion equation. Proceeding Ser. Braz. Soc. Comput. Appl. Math. 2020;7:1–7. doi: 10.5540/03.2020.007.01.0394.

39. Özişik M., Orlande H. Inverse Heat Transfer: Fundamentals and Applications. Taylor & Francis; New York, NY, USA: 2000.