ABSTRACT

Introduction

Computational brain network modeling using The Virtual Brain (TVB) simulation platform acts synergistically with machine learning (ML) and multi‐modal neuroimaging to reveal mechanisms and improve diagnostics in Alzheimer's disease (AD).

Methods

We enhance large‐scale whole‐brain simulation in TVB with a cause‐and‐effect model linking local amyloid beta (Aβ) positron emission tomography (PET) with altered excitability. We use PET and magnetic resonance imaging (MRI) data from 33 participants of the Alzheimer's Disease Neuroimaging Initiative (ADNI3) combined with frequency compositions of TVB‐simulated local field potentials (LFP) for ML classification.

Results

The combination of empirical neuroimaging features and simulated LFPs significantly outperformed the classification accuracy of empirical data alone by about 10% (weighted F1‐score empirical 64.34% vs. combined 74.28%). Informative features showed high biological plausibility regarding the AD‐typical spatial distribution.

Discussion

The cause‐and‐effect implementation of local hyperexcitation caused by Aβ can improve the ML–driven classification of AD and demonstrates TVB's ability to decode information in empirical data using connectivity‐based brain simulation.

Keywords: Alzheimer's disease, machine learning, multi‐scale brain simulation, positron emission tomography, The Virtual Brain

1. INTRODUCTION

Alzheimer's disease (AD) is a health problem with broad impact on a patient's personal life, as well as on our aging society. However, early diagnosis remains a challenge, and the knowledge of underlying disease mechanisms is still incomplete. Besides the two hallmark proteins amyloid beta (Aβ) 1 , 2 and tau, 3 , 4 other involved factors have been identified, such as impairment of the blood–brain barrier, 5 synaptic dysfunction, 6 network disruption, 7 mitochondrial dysfunction, 8 neuroinflammation, 9 and genetic risk factors. 10 While Aβ and tau are widely accepted as involved core features, 11 , 12 their mutual interaction 13 and interaction with other factors 5 are incompletely understood. Comprehensive knowledge of this multifactorial interaction in the pathogenesis of AD is crucial for further therapeutic strategies, including recent developments of potentially disease‐modifying anti‐Aβ therapy with aducanumab. 14

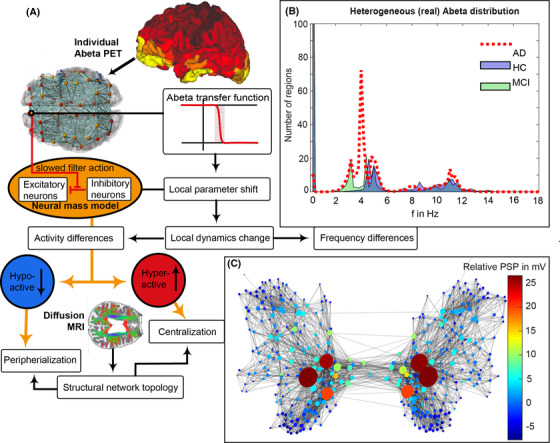

The Virtual Brain (TVB, www.thevirtualbrain.org) is an open‐source platform for modeling and simulating large‐scale brain networks by using personalized structural connectivity models. 15 , 16 TVB enables model‐based inference of underlying neurophysiological mechanisms across different brain scales that are involved in the generation of macroscopic neuroimaging signals including functional magnetic resonance imaging (MRI), electroencephalography (EEG), and magnetoencephalography. Moreover, TVB facilitates the reproduction and evaluation of individual configurations of the brain by using subject‐specific data. In this study, we make use of virtual local field potentials (LFPs) from simulated brain data from a recent experiment with TVB. 17 In our previous work, 17 we integrated individual Aβ patterns obtained from positron emission tomography (PET) with the Aβ‐binding tracer 18F‐AV‐45 into the brain model. Consequently, distinct spectral patterns in simulated LFPs and EEG could be observed for patients with AD, mild cognitive impairment (MCI), and healthy control (HC) subjects (Figure 1). This integration was done by translating the local concentration of Aβ into a variation in the brain model's local excitation–inhibition balance. This resulted in a shift from alpha to theta rhythms in AD patients, which followed a spatial pattern similar to that of local hyperexcitation in core structures of the brain network. The frequency shift was reversible by applying “virtual memantine,” that is, virtual N‐methyl‐D‐aspartate (NMDA) antagonistic drug therapy. An overview of the study results is provided in Figure 1.

FIGURE 1.

Modified from Stefanovski et al. 17 Aβ‐PET‐driven brain simulation model of AD. (A) Regional PET intensity constrains regional parameters. A sigmoidal transfer function translates the regional Aβ load to changes in the excitation‐inhibition balance. (B) Virtual AD patient brains exhibited significantly slower simulated LFPs than MCI and HC virtual brains and showed a shift from alpha to theta frequency range. While the AD group is solely dominated by two clusters in the alpha and theta band, the groups of HC and MCI have an additional strong cluster exhibiting no oscillations (frequency of zero), called a stable focus. This phenomenon is absent in the AD group. The stable focus in HC and MCI virtual brains provides an additional, simulation‐inferred, distinctive criterion between groups. Although a correlation between high Aβ burdens and lower LFP frequencies has been observed only in the AD group, 17 the spatial distribution of this LFP slowing is also determined by network characteristics. Moreover, the observed slowing was spatially associated with local hyperexcitation. (C) The graph represents the SC, wherein the nodes’ size reflects the degree, while color corresponds to the relative postsynaptic potentials (relative to the mean postsynaptic potential of the simulation). The graph indicates that local hyperexcitation occurs in central parts of the networks. Aβ, amyloid beta; AD, Alzheimer's disease; HC, healthy controls; LFP, local field potential; MCI, mild cognitive impairment; MRI, magnetic resonance imaging; PET, positron emission tomography; PSP, postsynaptic potential; SC, structural connectivity

AD‐specific pathologies, such as deposition of Aβ in neuritic plaques, tau deposition in neurofibrillary tangles, and atrophy of neural tissue, have been widely studied with machine learning (ML) approaches. 18 , 19 The major advantage of using ML‐based classification algorithms on neuroimaging data is the potential for recognizing complex high dimensional previously unknown disease patterns in the data, potentially identifying AD before clinical manifestation or predicting a disease trajectory.

We further argue that the current sample size of 33 subjects is sufficient to achieve a reliable proof of concept, considering the following three main aspects:

This study aims to show an information gain provided by TVB with regard to differential classification among HC, MCI, and AD populations. We do not aim to push generalizability performance of state‐of‐the‐art ML methodologies with this sample size. This leads to a primary focus on the group‐level significance of the decoding accuracy rather than the accuracies themselves. 20

This information gain and the significance of the model performances are validated by comparing the distributions of model accuracies between feature sets and against null distributions of accuracies approximated using permutation testing. 20

As implemented in our approach, nested cross‐validation still represents the best way to estimate generalizability in the given context. 21 In combination with the previous points, this leads to a feasible and robust estimation of the information gain.

We show that TVB simulations provide additional unique diagnostic information that is not readily available using the available empirical data alone. This supports the idea that TVB provides value and real‐world applicability above and beyond merely reorganizing empirical data.

2. MATERIALS AND METHODS

2.1. Alzheimer's Disease Neuroimaging Initiative database

Data used in the preparation of this article were obtained from the Alzheimer's Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). The ADNI was launched in 2003 as a public–private partnership, led by Principal Investigator Michael W. Weiner, MD. The primary goal of ADNI has been to test whether serial MRI, PET, other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of MCI and early AD. For up‐to‐date information, see www.adni‐info.org.

2.2. Data acquisition, processing, and brain simulation

Detailed methodology of data acquisition, selection, processing, and simulation is described in a previous study. 17 We included 33 ADNI‐3 participants: 10 AD patients, 15 HC participants, and 8 MCI patients. The selection criteria included availability of both Aβ and tau PET, diffusion‐weighted MRI, and all MRI sequences necessary to fulfill the standards of the human connectome project minimal preprocessing pipeline. 22 The number of participants was limited because of restricted availability of all data modalities at once and the need for comparable scanners (only the largest subcohort, scanned on Siemens 3T models, was included). 17

In addition to the data used in our previous study, 17 we also used the distribution of tau in 18F‐AV‐1451 PET for our analyses to obtain the best available empirical data basis. The nuclear signal intensity for both Aβ and tau PET is related to a reference volume in the cerebellum.

For the subcortical volumetrics used in this study, we obtained the volumetry statistics provided by the ‐autorecon2 command. The segmentation is performed with the modified Fischl parcellation 23 of subcortical regions in FreeSurfer. 24

A detailed description of image processing can be found in Appendix A in supporting information.

Whole‐brain simulations with TVB are based on a structural connectivity (SC) matrix derived from diffusion‐weighted MRI. After processing the empirical imaging data, we used the SC of the HC population to generate an averaged standard SC for all participants. For the simulations, we made use of the Jansen‐Rit neural mass model. 25 , 26 Neural mass models use a mean field simplification to compute electrical potentials on a regional level by using systems of oscillatory equations. 27 The variables, parameters, and model equations can be found in Stefanovski et al. 17 Parameter settings were chosen based on theoretical considerations in previous studies. 17 , 28 We explored a range of the global scaling factor G, a coefficient that scales the connection between distant brain regions, to capture different dynamic states of the simulation. The novelty in our recent simulation study was the introduction of a mechanistic model for Aβ‐driven effects. We linked local Aβ concentrations, measured by Aβ PET in 379 regions of the Glasser 29 and Fischl 23 parcellations, to the excitation–inhibition balance in the model by defining the inhibitory time constant τi as a sigmoidal function of local Aβ burden. 17
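The shape of such a sigmoidal link can be illustrated with a minimal sketch. The actual functional form and parameter values are those given in Stefanovski et al. 17; the parameter names and numbers below (`tau_healthy`, `tau_max`, `steepness`, `midpoint`), as well as the assumption that a higher Aβ burden maps to a larger τi, are hypothetical placeholders chosen only for illustration.

```python
import math


def inhibitory_time_constant(abeta_suvr, tau_healthy=20.0, tau_max=40.0,
                             steepness=10.0, midpoint=1.5):
    """Map a regional Abeta SUVR to an inhibitory time constant (ms).

    All default values are hypothetical placeholders, not the published
    model parameters. The function rises smoothly from tau_healthy
    (low Abeta) to tau_max (high Abeta) around the midpoint SUVR.
    """
    sigmoid = 1.0 / (1.0 + math.exp(-steepness * (abeta_suvr - midpoint)))
    return tau_healthy + (tau_max - tau_healthy) * sigmoid


# One value per region; in the study there would be 379 regional values.
regional_suvr = [1.0, 1.5, 2.0]
regional_tau_i = [inhibitory_time_constant(s) for s in regional_suvr]
```

The saturation at both ends means that regions with very low or very high tracer uptake change τi only marginally, while regions near the midpoint are most sensitive to differences in Aβ burden.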

The simulation models electrical potentials in the whole brain, here measured on the region level by LFPs using the same 379 regions as above. In addition, we calculate the EEG signal as a projection of the LFP from within the brain to the surface of the head, using a lead‐field matrix that simplifies the head to three compartment borders: brain–skull, skull–scalp, and scalp–air. 15 , 30 , 31 , 32
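Conceptually, this projection is a matrix–vector product between the lead‐field matrix (channels × regions) and the regional LFP values at each time point. The following sketch shows that operation on a toy example; the matrix entries and dimensions are hypothetical and are not taken from the study.

```python
def project_to_eeg(lead_field, lfp):
    """Project regional LFP values to EEG channels (eeg = lead_field @ lfp)."""
    return [sum(weight * value for weight, value in zip(row, lfp))
            for row in lead_field]


# Hypothetical toy example: 2 EEG channels, 3 brain regions.
lead_field = [[1.0, 0.0, 0.0],
              [0.5, 0.5, 0.0]]
lfp = [2.0, 4.0, 6.0]  # regional LFP values at one time point
eeg = project_to_eeg(lead_field, lfp)
```

In the study itself the lead‐field matrix would have one column per region (379) and one row per EEG channel.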

A detailed description of the simulations can be found in Appendix B in supporting information.

2.3. Machine learning approach

Our primary objective is to determine whether extracted features from TVB add to the classifiers’ predictive power. To achieve this, we repeated the ML procedure with three different feature sets: (1) using empirical features alone, (2) using simulated features alone, and (3) using both types of features to create a combined model.

RESEARCH IN CONTEXT

Systematic Review: Machine learning has been proven to augment diagnostics of dementia in several ways. Imaging‐based approaches enable early diagnostic predictions. However, individual projections of long‐term outcome as well as differential diagnosis remain difficult, as the mechanisms behind the used classifying features often remain unclear. Mechanistic whole‐brain models in synergy with powerful machine learning aim to close this gap.

Interpretation: Our work demonstrates that multi‐scale brain simulations considering amyloid beta distributions and cause‐and‐effect regulatory cascades reveal hidden electrophysiological processes that are not readily accessible through measurements in humans. We demonstrate that these simulation‐inferred features hold the potential to improve diagnostic classification of Alzheimer's disease.

Future Directions: The simulation‐based classification model needs to be tested for clinical usability in a larger cohort with an independent test set, either with another imaging database or a prospective study to assess its capability for long‐term disease trajectories.

As simulated features, we used the 379 regional LFP frequencies from the simulations from our previous study. 17 As empirical features, we used the global average and the corresponding 379 regional values in Glasser 29 and Fischl parcellation 23 for each Aβ PET standardized uptake value ratio (SUVR) and tau PET SUVR, moreover 40 subcortical volumes, leading to 800 empirical features. The combined feature space contains all the above with 1179 features (see the supporting information Data section containing a list with all these features). Therefore, we developed a methodology using extensive feature reduction to minimize overfitting.
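The feature counts follow directly from the numbers given above and can be checked with a few lines of arithmetic. The variable names are illustrative; the last line computes the integer square root of the combined feature count, which corresponds to the cap of 34 features used for feature selection in this section.

```python
import math

N_REGIONS = 379              # Glasser + Fischl parcellation regions
N_SUBCORTICAL_VOLUMES = 40   # subcortical volumetry features

per_tracer = 1 + N_REGIONS   # global average + regional SUVR values
n_empirical = 2 * per_tracer + N_SUBCORTICAL_VOLUMES  # Abeta PET + tau PET + volumes
n_simulated = N_REGIONS      # one simulated LFP frequency per region
n_combined = n_empirical + n_simulated

max_selected = math.isqrt(n_combined)  # floor of the square root
```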

Two types of ML classifiers that are suitable for small‐sample classification problems were used: the kernel‐based support vector machine (SVM) 33 and the decision‐tree–based random forest (RF). 34

By training two classifiers based on different underlying ML mechanisms, we provide more robust evidence that the pattern in classification performance, when combining simulated and empirical features, is reliable and clinically relevant. Further, this pattern is driven by a reliably reoccurring subset of the features themselves, rather than by particular mechanisms underlying a classification algorithm.

Our main results make use of a hybrid classification approach in which a RF is used for feature selection to take advantage of its ability to select features based on interactions between many features together in an interpretable way, 35 and an SVM is used for classification due to its relative reliability in small‐sample non‐linear classification problems. 36 The number of features selected by the RF is restricted to a maximum of 34 features, the square root of the total feature number (P = 1179). To validate our hybrid classification approach, we ran experiments using either the RF or SVM alone as comparisons. These results, along with additional details of the methodology, are presented in Appendix C in supporting information. To summarize, they show a significant improvement in classification performance using the hybrid classification approach over either individual classifier. Our ML approach is primarily designed to satisfy two goals:

Providing a robust, reproducible, and accurate evaluation of classification performance with the data.

Facilitating exploration of the empirical and simulated features that are most important for achieving optimal separation between the AD, MCI, and HC groups.

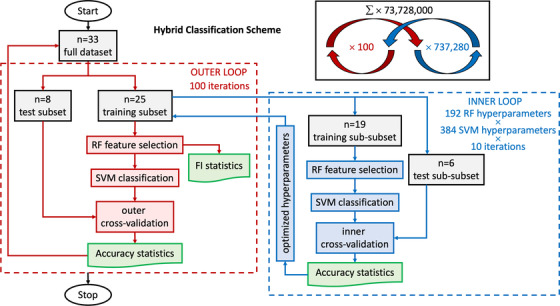

To satisfy the first goal, we implemented a strict nested cross‐validation scheme that allows us to obtain statistically reliable classification performance metrics while minimizing overfitting in a P >> N setting (i.e., we have a small sample size N, but a very large number of features P). Our cross‐validation method is adapted from earlier work in ML for clinical neuroscience, 37 and is described in greater detail in Figure 2.

FIGURE 2.

Nested cross‐validation loop design. In this hybrid classification scheme, random forest (RF; feature selection) and support vector machine (SVM; classification) are used jointly in both the inner and the outer loop of a nested cross‐validation design. Starting in the outer loop: stochastic cross‐validation starts with 100 iterations using 25% of data (randomly selected per iteration without taking into account age or sex) for testing. The training subset goes to the inner loop after the train–test split. In the inner loop: we split the data again, just as in the outer loop, to obtain a training set and a validation set for 10 inner cross‐validation iterations with each hyperparameter setting (in total 192 combinations for RF and 384 for SVM, leading to 73,728 combinations with 10 iterations each). Next, we scale training features by subtracting the median and dividing by the interquartile range (which makes the scaling robust to outliers). We apply these scaling statistics calculated from the training set also to the test set. Then, we iterate through hyperparameters (Tables SC.1 and SC.2 in supporting information). RF is used for feature selection. Afterward, the remaining features are used for training the SVM classifier with specific hyperparameter settings. We track the selected features for each run and compute the frequency with which they are selected across iterations for the outer loop. The SVM classifications are validated with the test sub‐subset (inner cross‐validation). This provides optimized hyperparameter settings from the inner cross‐validation loop. Back in the outer loop, we recombine training and validation data (which were separated in the inner loop), still keeping test data separate. We set hyperparameters to the best settings obtained in the inner loop. Then, we train the model and record results: RF is again used for feature selection, which leads to feature importance (FI) statistics used for the results. Afterward, the SVM classifies using the remaining features, and its predictions are validated with the test set (outer cross‐validation). After this, the next iteration of the outer loop begins
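The scaling step in this scheme, in which the median and interquartile range are estimated on the training data only and then reused for the held‐out data, can be sketched as follows. This is a minimal standard‐library illustration rather than the pipeline used in the study; the quartile convention (`method="inclusive"`) and the toy values are assumptions.

```python
import statistics


def fit_robust_scaler(train_column):
    """Estimate median and interquartile range from training data only."""
    median = statistics.median(train_column)
    q1, _, q3 = statistics.quantiles(train_column, n=4, method="inclusive")
    iqr = q3 - q1
    # Guard against constant features, which would give a zero IQR.
    return median, (iqr if iqr > 0 else 1.0)


def apply_robust_scaler(column, median, iqr):
    """Scale values with statistics that were fitted on the training set."""
    return [(value - median) / iqr for value in column]


# Hypothetical values of one feature; 100.0 is a deliberate outlier.
train = [1.0, 2.0, 3.0, 4.0, 100.0]
test = [3.0, 5.0]

median, iqr = fit_robust_scaler(train)
train_scaled = apply_robust_scaler(train, median, iqr)
test_scaled = apply_robust_scaler(test, median, iqr)  # no refitting on test data

# Size of the joint hyperparameter grid stated in the caption.
n_grid_combinations = 192 * 384
```

Because the median and interquartile range barely react to the single outlier, the remaining training values stay on a comparable scale, and reusing the training statistics for the test data avoids leaking information from the held‐out subjects.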

We satisfy the second goal in two ways. First, our cross‐validation scheme provides a natural metric for feature relevance, that is, feature selection frequency across cross‐validation runs. Additionally, we use feature importance metrics inherent to each feature selection method explored. In our case, the F‐statistic and the entropy criterion were two metrics used for feature selection for the SVM and the RF, respectively.
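Feature selection frequency can be computed by counting, for each feature, the share of cross‐validation runs in which it was selected. The sketch below assumes that each run yields a list of selected feature names; the names used here are hypothetical examples.

```python
from collections import Counter


def selection_frequency(selected_per_run):
    """Return the fraction of runs in which each feature was selected."""
    n_runs = len(selected_per_run)
    # set() ensures a feature is counted at most once per run.
    counts = Counter(f for run in selected_per_run for f in set(run))
    return {feature: count / n_runs for feature, count in counts.most_common()}


# Hypothetical selections from four cross-validation runs.
runs = [
    ["tau_entorhinal_L", "lfp_thalamus_R"],
    ["tau_entorhinal_L"],
    ["tau_entorhinal_L", "abeta_insula_L"],
    ["lfp_thalamus_R"],
]
frequencies = selection_frequency(runs)
```

Features that are selected in most runs are robust to the particular train–test split, whereas features selected only occasionally are more likely to reflect sampling noise.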

Currently, the most reliable method for statistical control of prediction accuracy is permutation testing. 20 To this end, we performed the same classification pipeline, including all feature preprocessing, feature selection, and cross‐validation steps, using randomly shuffled class labels. This was repeated 750 times to achieve a robust estimate of the null model as an approximation for the inherent prediction error of the model and chance classification results.
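The logic of building such a null distribution can be sketched as follows. In the actual analysis, each permutation reruns the complete pipeline on shuffled labels; here `toy_score` is a hypothetical stand‐in that only compares fixed predictions with the shuffled labels. The empirical P value at the end is a commonly used convention and is an assumption of this sketch, not the Wilcoxon comparison reported in the Results.

```python
import random


def permutation_null(score_fn, labels, n_permutations=750, seed=0):
    """Score the pipeline on randomly shuffled labels to build a null distribution."""
    rng = random.Random(seed)
    null_scores = []
    for _ in range(n_permutations):
        shuffled = list(labels)
        rng.shuffle(shuffled)
        null_scores.append(score_fn(shuffled))
    return null_scores


def empirical_p_value(observed, null_scores):
    """Share of null scores at least as large as the observed score (+1 correction)."""
    exceed = sum(score >= observed for score in null_scores)
    return (exceed + 1) / (len(null_scores) + 1)


# Group sizes as in the study; predictions are a toy stand-in for a trained model.
labels = ["AD"] * 10 + ["HC"] * 15 + ["MCI"] * 8
predictions = list(labels)


def toy_score(candidate_labels):
    """Accuracy of the fixed toy predictions against the given labels."""
    hits = sum(p == t for p, t in zip(predictions, candidate_labels))
    return hits / len(predictions)


observed = toy_score(labels)
null_scores = permutation_null(toy_score, labels)
p_value = empirical_p_value(observed, null_scores)
```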

A detailed technical description of the ML methodology can be found in Appendix C.

3. RESULTS

3.1. Data properties

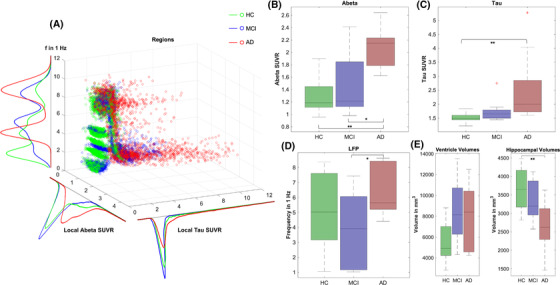

We used basic descriptive statistics to assess data quality prior to ML analysis. The distribution of simulated LFP frequencies, Aβ PET SUVR, tau SUVR, and regional volumes and their interdependency are shown in Figure 3. Aβ (P = 0.002) and tau SUVR (P < 0.001) are significantly different between AD and HC after Bonferroni correction. LFP frequency differs significantly between AD and MCI (P = 0.032) but is not significant after Bonferroni correction. We do not see significant differences in overall brain volume (AD and MCI [P = 0.706], AD and HC [P = 0.510], or HC and MCI [P = 0.141]), but a tendency toward ventricle enlargement and significant hippocampal atrophy in AD.

FIGURE 3.

Characteristics of empirical feature space. A, Regional distributions of Aβ, tau, and LFP frequency are shown for all groups in a 3D scatterplot. Red data points symbolize regions of AD patients, green points MCI patients, and blue points HC. Each scatter point stands for one region of one subject. Color density is normalized between groups. A kernel density estimate of the corresponding histograms is shown (projection of the 3D plot to one axis). In particular, a string of outliers with very high tau values can be seen in the AD group and, in part, in the MCI group, which does not appear for HC. Moreover, AD participants’ regions show higher Aβ values, in particular for lower frequencies. In addition, boxplots are presented for groupwise comparisons for the features mean Aβ per subject, mean tau per subject, mean simulated LFP frequency per subject, and mean volume per subject. A Kruskal–Wallis test was performed to assess significance: * marks significance with P < 0.05; ** marks significance after Bonferroni‐correction with P < 0.003 (for 15 tests). B, Aβ SUVR is significantly different between AD and HC (P = 0.002) and between AD and MCI (P = 0.045), but not between HC and MCI (P = 0.811). C, Tau SUVR is only significantly different between AD and HC (P < 0.001), but not between AD and MCI (P = 0.174) or HC and MCI (P = 0.267). D, LFP frequency is only significantly different between AD and MCI (P = 0.032), but not between AD and HC (P = 0.216) or HC and MCI (P = 0.472). E, As the mean volume of all regions (including, e.g., ventricles and white matter) does not show significant differences (as expected because of volume shifts between parenchyma and CSF), we explored the data regarding ventricle enlargement 39 and hippocampal atrophy. 40 Although we see a tendency for both in the AD group, only the difference in hippocampal volume reaches significance between AD and HC. Ventricle volumes: HC and MCI (P = 0.056), HC and AD (P = 0.116), MCI and AD (P = 0.910). Hippocampal volumes: HC and MCI (P = 0.556), HC and AD (P = 0.003), MCI and AD (P = 0.144). Aβ, amyloid beta; AD, Alzheimer's disease; CSF, cerebrospinal fluid; HC, healthy controls; LFP, local field potential; MCI, mild cognitive impairment; MRI, magnetic resonance imaging; PET, positron emission tomography; PSP, postsynaptic potential; SC, structural connectivity; SUVR, standardized uptake value ratio
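The Bonferroni threshold quoted in the caption follows from dividing the nominal significance level by the number of tests (0.05 / 15 ≈ 0.0033). The short sketch below applies it to a subset of the P values reported above; the dictionary keys are illustrative labels, and only exactly reported P values are included.

```python
ALPHA = 0.05
N_TESTS = 15
bonferroni_threshold = ALPHA / N_TESTS  # approximately 0.0033

# Subset of the reported P values (those given as exact numbers).
p_values = {
    "Abeta: AD vs HC": 0.002,
    "Abeta: AD vs MCI": 0.045,
    "Abeta: HC vs MCI": 0.811,
    "LFP: AD vs MCI": 0.032,
}

nominal = [name for name, p in p_values.items() if p < ALPHA]
corrected = [name for name, p in p_values.items() if p < bonferroni_threshold]
```

With these inputs, two Aβ comparisons and the LFP comparison are nominally significant, but only the Aβ difference between AD and HC remains significant after correction, in line with the description in Section 3.1.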

3.2. Classification performance

Overall, we performed nine experiments spanning three different classification schemes and three feature sets (see Appendix D in supporting information). The hybrid classification scheme with SVM and RF performed best. For all schemes, the combined feature space outperformed both the empirical and the simulated feature space (Table SD.1 in supporting information). The results of the hybrid classification approach are given below.

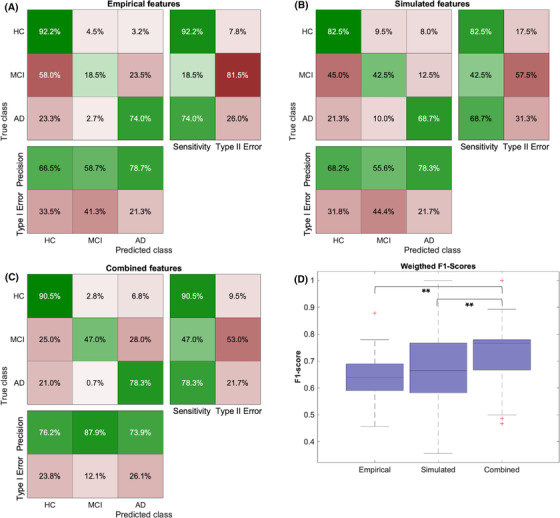

Weighted F1‐scores (wF1) and normalized confusion matrices are given in Figure 4. The combined approach (wF1 = 0.743) outperformed the empirical one (wF1 = 0.643) by about 0.1 (Figure 4D), mainly because of an improvement in the classification of the MCI group (Figure 4A–C). We used the Wilcoxon signed rank test from 100 cross‐validation runs to assess significance (Shapiro–Wilk test of normality for the wF1 distributions revealed P < 0.001 for empirical and combined approach and P = 0.070 for the simulated approach, leading to the usage of a nonparametric test). The differences between the combined approach and both individual approaches (empirical and simulated) were highly significant with P < 0.001; meanwhile, there was no significant difference between the empirical and simulated approaches (P = 0.340). Additionally, the hybrid classification approach outperformed the SVM‐only approach (wF1 = 0.718) and the RF‐only approach (wF1 = 0.670) for the combined features.

FIGURE 4.

Results of the nested cross‐validation classification approach. A–C, Confusion matrices are computed by summing the confusion matrices across all 100 cross‐validation runs and normalizing per class. In particular, the combined approach improved the prediction of MCI participants, as AD and HC were already quite well distinguishable by the empirical features. D, Boxplots of mean weighted F1‐scores for three different feature spaces. The combined approach (wF1 = 0.743) outperformed the empirical one (wF1 = 0.643) by about 0.1. Significance assessment with the Wilcoxon signed rank test from 100 cross‐validation runs: combined versus empirical: P < 0.001; combined versus simulated: P < 0.001; empirical versus simulated: P = 0.340. AD, Alzheimer's disease; HC, healthy controls; MCI, mild cognitive impairment
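The aggregation of confusion matrices described in the caption can be sketched as follows. The sketch assumes that "normalizing per class" means dividing each row (true class) by its row sum; the two toy runs are hypothetical.

```python
CLASSES = ["HC", "MCI", "AD"]


def confusion_matrix(y_true, y_pred):
    """Count matrix with true classes as rows and predicted classes as columns."""
    index = {cls: i for i, cls in enumerate(CLASSES)}
    matrix = [[0] * len(CLASSES) for _ in CLASSES]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def sum_and_normalize(matrices):
    """Sum matrices element-wise across runs, then normalize each row to sum to 1."""
    n = len(CLASSES)
    total = [[sum(m[i][j] for m in matrices) for j in range(n)] for i in range(n)]
    return [[value / sum(row) if sum(row) else 0.0 for value in row]
            for row in total]


# Two hypothetical cross-validation runs.
run1 = confusion_matrix(["HC", "HC", "MCI", "AD"], ["HC", "MCI", "MCI", "AD"])
run2 = confusion_matrix(["HC", "MCI", "AD", "AD"], ["HC", "HC", "AD", "AD"])
normalized = sum_and_normalize([run1, run2])
```

Each row of the result then gives, for one true class, the proportions of subjects assigned to each predicted class across all runs.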

3.3. Classification validity

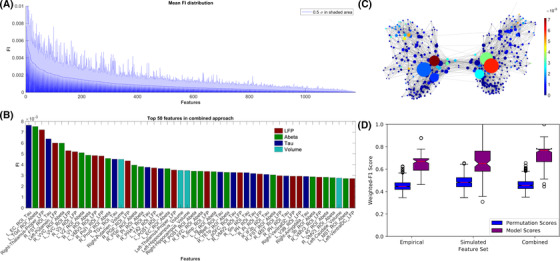

As a further analysis to understand this classification improvement, we calculated the feature importance. Figure 5A shows the mean entropy‐based feature importance given by the RF classifier for 100 outer cross‐validation runs. This is used to show that there is a decreasing curve, as we would expect if meaningful features were found (as opposed to a more uniform distribution). Many of the more important features seem to be biologically plausible in the context of AD (Figures 5B and 6, full list in supporting information Data).

FIGURE 5.

Feature importance (FI) distribution. A, Mean random forest (RF)‐derived feature importance from 100 outer cross‐validation runs. Entropy criterion with combined feature types shown here. Feature importance values are normalized, so all features sum to one. In shaded blue, half standard deviation is displayed for each feature. B, Top 50 features across all cross‐validation runs. Both empirical (tau in dark blue, amyloid beta [Aβ] in green, volume in light blue), as well as simulated frequencies (red), contributed to the improved classification. Many features seem moreover to be biologically plausible in the context of Alzheimer's disease (AD), as, for example, tau in entorhinal cortex (Braak stage 1), 41 thalamic dysfunction (as significant rhythm generator), 42 and volumes in hippocampus (as signs of atrophy). 40 C, Visualization of the structural connectivity (SC) graph with color indicating FI of the regional local field potential (LFP) frequencies, while vertex diameter reflects the structural degree. It shows a network dependency of the LFP FI. Only edges with connection strength above the 95th percentile are shown. D, The distributions of weighted F1 scores for permutation based null model (left box) and corresponding true model (right box). All models significantly outperform the null model with the combined model showing the greatest average distance to its null model, indicating the gain in differentiating information

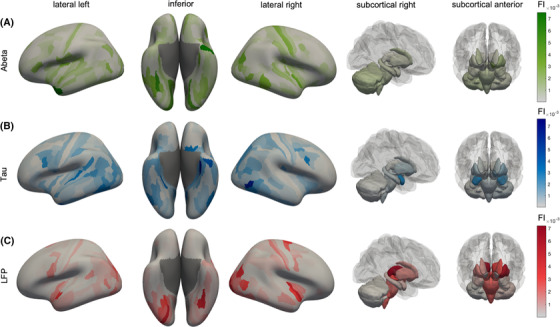

FIGURE 6.

Anatomical representation of feature importance (FI) distribution. Displayed are cortical regions from left, right, and inferior as well as subcortical regions. The color indicates the FI. A, Aβ FI. The anatomical patterns reveal high importance of left‐temporal regions, as well as the left dorsal stream in the parietal and occipital cortex. The Aβ top features showed a more disseminated allocation mostly in the temporal, occipital, frontal, and insular cortices, which is also in line with typical amyloid deposition and locations of increased AV‐45 uptake in AD. 43 B, Tau features show a pattern similar to that of Aβ, but with a stronger focus on typical Braak stage 1 regions (such as the entorhinal cortex). Most of the tau top features can be allocated to the temporal lobe, which is also the location of early tau deposition according to the neuropathological Braak and Braak stages I–III 41 , 44 and the location of increased in vivo binding of 18F‐AV‐1451 in AD. 45 In particular, the entorhinal cortex is a consistent starting point of the sequential spread of tau through the brain 44 , 45 and also showed the most robust relationship between flortaucipir and memory scores in a recent machine learning study. 46 C, Simulated frequencies do not show as strong a laterality as the empirical features but seem to have a focus in both occipital lobes, where alpha oscillations typically occur. The occipito‐temporal and occipito‐parietal regions of the first area are typical alpha‐rhythm generators in resting‐state electroencephalogram. 47 Alteration of these posterior alpha sources is a typical phenomenon in AD and MCI compared to HC. 48 The ventral or “what” stream and the dorsal or “where” stream have been implicated in object recognition and spatial localization and are essential for accurate visuospatial navigation. 49 Impairment in visuospatial navigation is a potential cognitive marker in early AD/MCI that could be more specific than episodic memory or attention deficits. 50 Besides this, subcortical areas like the thalami play a more crucial role than for Aβ and tau. Aβ, amyloid beta; AD, Alzheimer's disease; FI, feature importance; HC, healthy controls; MCI, mild cognitive impairment

We also showed that feature relevance is dependent on the structural degree of the regions in the underlying SC network (Figure 5C). This is an indicator of network effects contributing to the improved classification and another indicator for meaningful classification results.

Using the Wilcoxon signed rank test, we could further show that the classification performance was significantly higher than that of the null model (with P < 0.001 for all three approaches). The average performance of the combined approach showed the greatest distance to the corresponding null model, lying outside the 100% interval (Figure 5D).

4. DISCUSSION

In this study, we show that the inclusion of virtual, simulated TVB features into ML classification can lead to an improved classification among HC, MCI, and AD.

The diagnostic value of the underlying empirical features can be improved by integrating the features into a multi‐scale brain simulation framework in TVB. We showed an improvement in classification performance when combining both the empirical and the virtual derived features. The absolute gain in weighted F1‐score was about 10 percentage points (64.34% vs. 74.28%). Keeping in mind that all differences between the subjects have to be derived from their Aβ PET signal (because all other factors, e.g., the underlying SC, are the same), this provides evidence that TVB is able to decode the information that is contained in empirical data like the amyloid PET. More specifically for PET and its usage in diagnostics, it highlights the relevance of spatial distribution, which is often not considered in its analysis.

The main reason for this improvement seems to be a better classification of MCI subjects. Without the simulated features, the models frequently misclassify MCI subjects as HC. In contrast, the simulated features alone result in more misclassification of HCs as either MCI or AD subjects compared to using the empirical features alone. However, combining the empirical features with the simulated features appears to complement their strengths in a clinically useful way; these models retain all or most of the ability to correctly classify healthy controls with the empirical features and retain much of the simulated features’ ability to classify MCI patients. The processing inside TVB seems to reorganize the existing data beneficially.

In theory, a larger number of available features could provide a ML algorithm greater flexibility in finding useful combinations. This is the case simply due to a higher degree of freedom during feature selection and weighting. However, the equal empirical data foundation (only PET as individual features) in combination with a nested cross‐validation method protects from an overfitting bias due to the larger feature space, with additional evidence of this provided by the chance level performance of the null distributions. If the explanation for the improvement in classification accuracy were simply the presence of additional noisy features, we would see a flatter feature importance distribution than shown in Figure 5, and therefore a more random distribution of selected features across the 100 cross‐validation iterations. Instead, we see that only a few features with high importance are consistently guiding classification, indicating that they in fact provide useful discriminative information. Preventing this kind of overfitting via feature selection is a key motivation behind our use of the nested cross‐validation approach (Figure 2): Because the features are selected on the training and validation (test) set in the inner loop, any overfitting due to feature selection should not be transferred to the test set in the outer loop.

We have shown that only a few selected features seem to play a crucial role in classification throughout the cross‐validation iterations and that these features play a biologically plausible role in the context of AD (Figures 5 and 6).

As a limitation of our study, the simulated feature we used, the mean simulated LFP frequency (averaged across a wide range of the large‐scale coupling parameter G), is not directly equivalent to a biophysical measurement such as empirically measured LFP. G scales the strength of long‐range connections in the brain network model and is a crucial factor in the simulation. Many different dynamics can develop across the dimension of G, some of which are similar to empirically observed phenomena, while others are not. Our previous work has found that particular ranges of G with non‐plausible frequency patterns hold the potential to differentiate between diagnosis groups. 17 This is mainly because of the underlying mathematics of the Jansen‐Rit model: besides two limit cycles that produce alpha‐like and theta‐like activity, the local dynamic model has a region of stable focus wherein no oscillations are produced in the absence of noise. Technically, this stable focus is represented as a zero‐line artifact that appears mainly in the HC group, because only Aβ values above a critical value lead to the presence of the slower theta‐limit cycle. By averaging LFP frequencies across the whole spectrum of G, we incorporate this zero‐line information, which leads to apparently higher mean LFP frequencies for the AD group compared to non‐AD groups. In contrast, in the region of biologically plausible results, AD has lower frequencies, as would be expected. 17 This can also be seen as another advantage of TVB: it does not just reproduce data that could also be obtained with EEG or intracranial electrodes, but delivers “artificial” data that are still informative. While particular parameter ranges deliver biologically plausible results, other (less plausible) parameter settings also provide unique individual patterns and can contribute to the classification.
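
The effect of averaging across G can be made concrete with a small worked example. The frequency values below are invented purely for illustration and are not results of this study; the choice of five G values and of which ones count as "biologically plausible" is likewise an assumption.

```python
from statistics import mean

# Hypothetical dominant LFP frequencies (Hz) for one region at five values of G.
# These numbers are invented for illustration and are not taken from the study.
# 0.0 encodes the stable focus (no oscillation without noise), the "zero line".
hc_freqs = [0.0, 0.0, 0.0, 10.0, 10.0]
ad_freqs = [5.0, 5.0, 5.0, 8.0, 8.0]

# Assumed indices of the G values that give biologically plausible dynamics.
plausible = [3, 4]

# Mean over the whole G range: zero-line entries pull the HC mean down.
hc_mean_all = mean(hc_freqs)
ad_mean_all = mean(ad_freqs)

# Mean restricted to the plausible range: the expected slowing in AD reappears.
hc_mean_plausible = mean(hc_freqs[i] for i in plausible)
ad_mean_plausible = mean(ad_freqs[i] for i in plausible)

print(hc_mean_all, ad_mean_all)
print(hc_mean_plausible, ad_mean_plausible)
```

With these toy values, the mean over all G is higher for the AD‐like series than for the HC‐like series, whereas within the assumed plausible range the AD‐like series is slower. The ordering reverses only because the zero‐line entries are included in the average.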

This work's primary aim is not to develop a ready‐to‐use ML classifier for AD, but to show the potential of brain simulation to enhance empirical datasets in clinically relevant ways. The limited sample size used in this study would potentially be problematic in a more traditional ML study aimed at providing an ML‐based diagnostic aid; given our careful cross‐validation methodology, however, it does not detract from our primary conclusion. Future studies will have to reproduce these results in a more extensive cohort before this work can be used clinically. Ideally, external validation with a dataset outside of ADNI would be performed.

We used ML to compare classifier performance on empirical data with performance on simulated data, which are wholly derived from the empirical data. An improvement in classification is then strong evidence for successful processing of the empirical data in TVB: TVB decodes information embedded within the empirical data that cannot be detected by statistics or ML classifiers alone. We showed in ADNI data that TVB can derive additional information from the spatial distribution pattern in PET images.

Our work provides novel evidence that TVB can act as a biophysical brain model and not merely as a black box. Complex multi‐scale brain simulation in TVB can yield additional information that goes beyond the implemented empirical data. Our analysis of feature importance supports this hypothesis, as the features with the highest relevance are already well‐known AD factors and hence biologically plausible surrogates for clinically relevant information in the data. Moreover, in this pilot study, we demonstrate that TVB simulation can improve the diagnostic value of empirical data and might become a clinically relevant tool.

CONFLICTS OF INTEREST

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The disclosures are based on the disclosure form of the International Committee of Medical Journal Editors (ICMJE). PR, ARM, and VJ report the following patent application: McIntosh AR, Mersmann J, Jirsa VK, Ritter P. Method and Computing System for Modeling a Primate Brain. Patent Application 137PCT1754. VJ reports stock or stock options in Virtual Brain Technologies (VB‐Tech). VB‐Tech performs activities in the domain of brain simulation. There is no relation to the field of dementia, nor to the content of the manuscript. All other authors, namely PT, LS, KD, MD, PB, KB, RP, ASp, and ASo, have nothing to declare.

AUTHOR CONTRIBUTIONS

All authors have made substantial intellectual contributions to this work and approved it for publication. PT and LS contributed equally to this work. Particular roles according to CRediT 38: Paul Triebkorn: conceptualization, data curation, investigation, methodology, visualization, writing – original draft. Leon Stefanovski: conceptualization, formal analysis, investigation, methodology, visualization, writing – original draft. Kiret Dhindsa: formal analysis, methodology, software, writing – review and editing. Margarita‐Arimatea Diaz‐Cortes: methodology, software, writing – review and editing. Patrik Bey: methodology, software, writing – review and editing. Konstantin Bülau: validation, writing – review and editing. Roopa Pai: data curation, writing – review and editing. Andreas Spiegler: methodology, writing – review and editing. Ana Solodkin: writing – review and editing. Viktor Jirsa: writing – review and editing. Anthony Randal McIntosh: writing – review and editing. Petra Ritter: conceptualization, funding acquisition, methodology, project administration, supervision, writing – review and editing.

Supporting information

SUPPORTING INFORMATION (APPENDICES A ‐ D)

SUPPORTING DATA

ACKNOWLEDGMENTS

Data collection and sharing for this project was funded by the Alzheimer's Disease Neuroimaging Initiative (ADNI; National Institutes of Health Grant U01 AG024904) and DOD ADNI (Department of Defense award number W81XWH‐12‐2‐0012). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: AbbVie; Alzheimer's Association; Alzheimer's Drug Discovery Foundation; Araclon Biotech; BioClinica, Inc.; Biogen; Bristol‐Myers Squibb Company; CereSpir, Inc.; Cogstate; Eisai Inc.; Elan Pharmaceuticals, Inc.; Eli Lilly and Company; EuroImmun; F. Hoffmann‐La Roche Ltd and its affiliated company Genentech, Inc.; Fujirebio; GE Healthcare; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC; Johnson & Johnson Pharmaceutical Research & Development LLC; Lumosity; Lundbeck; Merck & Co., Inc.; Meso Scale Diagnostics, LLC; NeuroRx Research; Neurotrack Technologies; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Piramal Imaging; Servier; Takeda Pharmaceutical Company; and Transition Therapeutics. The Canadian Institutes of Health Research is providing funds to support ADNI clinical sites in Canada. Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer's Therapeutic Research Institute at the University of Southern California. ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of Southern California.

Computation of underlying data has been performed on the HPC for Research cluster of the Berlin Institute of Health. PR acknowledges support by EU H2020 Virtual Brain Cloud 826421; Human Brain Project SGA2 785907; Human Brain Project SGA3 945539; ERC Consolidator 683049; German Research Foundation SFB 1436 (project ID 425899996); SFB 1315 (project ID 327654276); SFB 936 (project ID 178316478); SFB‐TRR 295 (project ID 424778381); SPP Computational Connectomics RI 2073/6‐1, RI 2073/10‐2, RI 2073/9‐1; PHRASE Horizon EIC grant 101058240; Berlin Institute of Health & Foundation Charité, Johanna Quandt Excellence Initiative; ERAPerMed Pattern‐Cog.

Triebkorn P, Stefanovski L, Dhindsa K, et al. Brain simulation augments machine‐learning–based classification of dementia. Alzheimer's Dement. 2022;8:e12303. 10.1002/trc2.12303

Paul Triebkorn and Leon Stefanovski contributed equally to this article.

Data used in preparation of this article were obtained from the Alzheimer's Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.usc.edu/wp‐content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf

REFERENCES

- 1. Selkoe DJ, Hardy J. The amyloid hypothesis of Alzheimer's disease at 25 years. EMBO Mol Med. 2016;8:595–608.

- 2. Sadigh‐Eteghad S, Sabermarouf B, Majdi A, Talebi M, Farhoudi M, Mahmoudi J. Amyloid‐beta: a crucial factor in Alzheimer's Disease. Med Princ Pract. 2015;24:1–10.

- 3. Tapia‐Rojas C, Cabezas‐Opazo F, Deaton CA, Vergara EH, Johnson GVW, Quintanilla RA. It's all about tau. Prog Neurobiol. 2019;175:54–76.

- 4. Jadhav S, Avila J, Scholl M, et al. A walk through tau therapeutic strategies. Acta Neuropathol Commun. 2019;7:22.

- 5. Zetterberg H, Schott JM. Biomarkers for Alzheimer's disease beyond amyloid and tau. Nat Med. 2019;25:201–203.

- 6. Jackson J, Jambrina E, Li J, et al. Targeting the synapse in Alzheimer's disease. Front Neurosci. 2019;13:735. 10.3389/fnins.2019.00735

- 7. Selkoe DJ. Early network dysfunction in Alzheimer's disease. Science. 2019;365(6453):540–541. 10.1126/science.aay5188

- 8. Swerdlow RH, Khan SM. The Alzheimer's disease mitochondrial cascade hypothesis: an update. Exp Neurol. 2009;218:308–315.

- 9. Heneka MT, Carson MJ, Khoury JE, et al. Neuroinflammation in Alzheimer's disease. Lancet Neurol. 2015;14:388–405.

- 10. Takatori S, Wang W, Iguchi A, Tomita T. Genetic risk factors for Alzheimer disease: emerging roles of microglia in disease pathomechanisms. Adv Exp Med Biol. 2019;1118:83–116.

- 11. Blennow K, de Leon MJ, Zetterberg H. Alzheimer's disease. Lancet. 2006;368:387–403.

- 12. Jack CR Jr, Bennett DA, Blennow K, et al. NIA‐AA research framework: toward a biological definition of Alzheimer's disease. Alzheimers Dement. 2018;14:535–562.

- 13. Bloom GS. Amyloid‐β and tau: the trigger and bullet in Alzheimer disease pathogenesis. JAMA Neurol. 2014;71:505–508.

- 14. Cummings J, Aisen P, Lemere C, Atri A, Sabbagh M, Salloway S. Aducanumab produced a clinically meaningful benefit in association with amyloid lowering. Alzheimers Res Ther. 2021;13:98.

- 15. Ritter P, Schirner M, McIntosh AR, Jirsa VK. The virtual brain integrates computational modeling and multimodal neuroimaging. Brain Connect. 2013;3:121–145.

- 16. Sanz Leon P, Knock SA, Woodman MM, et al. The virtual brain: a simulator of primate brain network dynamics. Front Neuroinform. 2013;7:10. 10.3389/fninf.2013.00010

- 17. Stefanovski L, Triebkorn P, Spiegler A, et al. Linking molecular pathways and large‐scale computational modeling to assess candidate disease mechanisms and pharmacodynamics in Alzheimer's Disease. Front Comput Neurosci. 2019;13:54. 10.3389/fncom.2019.00054

- 18. Forlenza OV, Diniz BS, Teixeira AL, Stella F, Gattaz W. Mild cognitive impairment (part 2): biological markers for diagnosis and prediction of dementia in Alzheimer's disease. Braz J Psychiatry. 2013;35:284–294.

- 19. van Rossum IA, Vos S, Handels R, Visser PJ. Biomarkers as predictors for conversion from mild cognitive impairment to Alzheimer‐type dementia: implications for trial design. J Alzheimers Dis. 2010;20:881–891.

- 20. Stelzer J, Chen Y, Turner R. Statistical inference and multiple testing correction in classification‐based multi‐voxel pattern analysis (MVPA): random permutations and cluster size control. NeuroImage. 2013;65:69–82.

- 21. Varoquaux G. Cross‐validation failure: small sample sizes lead to large error bars. NeuroImage. 2018;180:68–77.

- 22. Glasser MF, Sotiropoulos SN, Wilson JA, et al. The minimal preprocessing pipelines for the Human Connectome Project. NeuroImage. 2013;80:105–124.

- 23. Fischl B, Salat DH, Busa E, et al. Whole brain segmentation: automated labeling of neuroanatomical structures in the human brain. Neuron. 2002;33:341–355.

- 24. Freesurfer. http://freesurfer.net/fswiki/SubcorticalSegmentation. Accessed 06/30/2021.

- 25. Jansen BH, Rit VG. Electroencephalogram and visual evoked potential generation in a mathematical model of coupled cortical columns. Biol Cybern. 1995;73:357–366.

- 26. Jansen BH, Zouridakis G, Brandt ME. A neurophysiologically‐based mathematical model of flash visual evoked potentials. Biol Cybern. 1993;68:275–283.

- 27. Sanz‐Leon P, Knock SA, Spiegler A, Jirsa VK. Mathematical framework for large‐scale brain network modeling in the virtual brain. NeuroImage. 2015;111:385–430.

- 28. Spiegler A, Kiebel SJ, Atay FM, Knösche TR. Bifurcation analysis of neural mass models: impact of extrinsic inputs and dendritic time constants. NeuroImage. 2010;52:1041–1058.

- 29. Glasser MF, Coalson TS, Robinson EC, et al. A multi‐modal parcellation of human cerebral cortex. Nature. 2016;536:171–178.

- 30. Bojak I, Oostendorp TF, Reid AT, Kotter R. Connecting mean field models of neural activity to EEG and fMRI data. Brain Topogr. 2010;23:139–149.

- 31. Jirsa VK, Jantzen KJ, Fuchs A, Kelso JAS. Spatiotemporal forward solution of the EEG and MEG using network modeling. IEEE Trans Med Imaging. 2002;21:493–504.

- 32. Litvak V, Mattout J, Kiebel S, et al. EEG and MEG data analysis in SPM8. Comput Intell Neurosci. 2011;2011:852961.

- 33. Cortes C, Vapnik V. Support‐vector networks. Mach Learn. 1995;20:273–297.

- 34. Breiman L. Random forests. Mach Learn. 2001;45:5–32.

- 35. Suk HI, Lee SW, Shen D. Hierarchical feature representation and multimodal fusion with deep learning for AD/MCI diagnosis. NeuroImage. 2014;101:569–582.

- 36. Zhang D, Wang Y, Zhou L, Yuan H, Shen D. Multimodal classification of Alzheimer's disease and mild cognitive impairment. NeuroImage. 2011;55:856–867.

- 37. Boshra R, Dhindsa K, Boursalie O, et al. From group‐level statistics to single‐subject prediction: machine learning detection of concussion in retired athletes. IEEE Trans Neural Syst Rehabil Eng. 2019;27:1492–1501.

- 38. Brand A, Allen L, Altman M, Hlava M, Scott J. Beyond authorship: attribution, contribution, collaboration, and credit. Learn Publ. 2015;28:151–155.

- 39. Nestor SM, Rupsingh R, Borrie M, et al. Ventricular enlargement as a possible measure of Alzheimer's disease progression validated using the Alzheimer's disease neuroimaging initiative database. Brain. 2008;131:2443–2454.

- 40. Jack CR, Petersen RC, Xu Y, et al. Rates of hippocampal atrophy correlate with change in clinical status in aging and AD. Neurology. 2000;55:484–490.

- 41. Braak H, Braak E. Frequency of stages of Alzheimer‐related lesions in different age categories. Neurobiol Aging. 1997;18:351–357.

- 42. Aggleton JP, Pralus A, Nelson AJD, Hornberger M. Thalamic pathology and memory loss in early Alzheimer's disease: moving the focus from the medial temporal lobe to Papez circuit. Brain. 2016;139:1877–1890.

- 43. Thal DR, Rub U, Orantes M, Braak H. Phases of A beta‐deposition in the human brain and its relevance for the development of AD. Neurology. 2002;58:1791–1800.

- 44. Braak H, Braak E. Neuropathological stageing of Alzheimer‐related changes. Acta Neuropathol. 1991;82:239–259.

- 45. Cho H, Choi JY, Hwang MS, et al. Tau PET in Alzheimer disease and mild cognitive impairment. Neurology. 2016;87:375–383.

- 46. Knopman DS, Lundt ES, Therneau TM, et al. Entorhinal cortex tau, amyloid‐β, cortical thickness and memory performance in non‐demented subjects. Brain. 2019;142:1148–1160.

- 47. Barzegaran E, Vildavski VY, Knyazeva MG. Fine structure of posterior alpha rhythm in human EEG: frequency components, their cortical sources, and temporal behavior. Sci Rep. 2017;7:8249.

- 48. Babiloni C, Del Percio C, Lizio R, et al. Abnormalities of resting state cortical EEG rhythms in subjects with mild cognitive impairment due to Alzheimer's and Lewy body diseases. J Alzheimers Dis. 2018;62:247–268.

- 49. Grill‐Spector K, Malach R. The human visual cortex. Annu Rev Neurosci. 2004;27:649–677.

- 50. Coughlan G, Laczó J, Hort J, Minihane A‐M, Hornberger M. Spatial navigation deficits – overlooked cognitive marker for preclinical Alzheimer disease? Nat Rev Neurol. 2018;14:496–506.
