Abstract

Objective

To assess the quantity and impact of research publications among US acute care hospitals; to identify hospital characteristics associated with publication volumes; and to estimate the independent association of bibliometric indicators with Hospital Compare quality measures.

Data Sources

Hospital Compare; American Hospital Association Survey; Magnet Recognition Program; Science Citation Index Expanded.

Study Design

In cross‐sectional studies using a 40% random sample of US Medicare‐participating hospitals, we estimated associations of hospital characteristics with publication volumes and associations of hospital‐linked bibliometric indicators with 19 Hospital Compare quality metrics.

Data Collection/Extraction Methods

Using standardized search strategies, we identified all publications attributed to authors from these institutions from January 1, 2015 to December 31, 2016 and their subsequent citations through July 2020.

Principal Findings

Only 647 of 1604 study hospitals (40.3%) had ≥1 publication. Council of Teaching Hospitals and Health Systems (COTH) hospitals had significantly more publications (average 599 vs. 11 for non‐COTH teaching and 0.6 for nonteaching hospitals), and their publications were cited more frequently (average 22.6/publication) than those from non‐COTH teaching (18.2 citations) or nonteaching hospitals (12.8 citations). In multivariable regression, teaching intensity, hospital beds, New England or Pacific region, and not‐for‐profit or government ownership were significant predictors of higher publication volumes; the percentage of Medicaid admissions was inversely associated. In multivariable linear regression, hospital publications were associated with significantly lower risk‐adjusted mortality rates for acute myocardial infarction (coefficient −0.52, p = 0.01), heart failure (coefficient −0.74, p = 0.004), pneumonia (coefficient −1.02, p = 0.001), chronic obstructive pulmonary disease (coefficient −0.48, p = 0.005), and coronary artery bypass surgery (coefficient −0.73, p < 0.0001); higher overall Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) ratings (coefficient 2.37, p = 0.04); and greater patient willingness to recommend (coefficient 3.38, p = 0.01).

Conclusions

A minority of US hospitals published in the biomedical literature. Publication quantity and impact indicators are independently associated with lower risk‐adjusted mortality and higher HCAHPS scores.

Keywords: outcomes, publications, quality, research

What is known on this topic

Although hospital teaching intensity is associated with better outcomes, the association between hospital‐based research and outcomes is less well characterized.

Previous studies have primarily focused on clinical trial participation and outcomes.

No large‐scale studies in the United States have analyzed the association of hospital‐linked publications, a widely accepted research metric, with clinical outcomes while adjusting for multiple potential confounders.

What this study adds

A minority of US acute care hospitals are linked to even one publication. When evaluating hospital quality, staff publications are a useful and objective structural metric that may be routinely assessed.

Significant predictors of higher publication volumes include teaching intensity, number of hospital beds, New England or Pacific region, not‐for‐profit or government ownership, and a lower percentage of Medicaid admissions.

The number and impact (e.g., citations, journal impact factor) of publications attributed to hospital staff are independently associated with Hospital Compare outcomes, plausibly through a variety of direct and indirect mechanisms.

1. INTRODUCTION

Hospitals have many functions, the most fundamental of which is direct patient care. To varying extents, they also educate the next generations of health care providers; they collaborate with their communities to address social determinants of health, sometimes serving as the primary health care contact for vulnerable populations 1 ; and some conduct clinical or laboratory research.

While the societal impact of other hospital roles is more transparent and measurable, the value of research is often neither immediately apparent nor easily quantified 2 and may be regarded by some as a distraction from direct patient care. Although various measures of research impact in health care have been proposed, 2 , 3 , 4 , 5 , 6 , 7 , 8 , 9 , 10 , 11 , 12 , 13 , 14 , 15 , 16 arguably the most convincing would be an independent, hospital‐level association of research and clinical quality. Because the extents of research and teaching activities at hospitals are often correlated (e.g., both are more common at academic medical centers), and because hospital characteristics, including teaching intensity, 17 , 18 , 19 , 20 , 21 , 22 have been associated with better outcomes, analyses to demonstrate the independent value of research must account for these known confounders.

Publication quantity and quality 3 , 23 , 24 , 25 are among the most comprehensive and universally accepted markers of research productivity. The objectives of this study are to evaluate the hospital characteristics associated with publication volumes and to estimate the independent association of various bibliometric indicators with a broad portfolio of CMS Hospital Compare quality metrics from a large random sample of US acute care hospitals.

2. METHODS

Using downloadable files from CMS Hospital Compare, we identified 4008 US acute care hospitals that reported 30‐day risk‐adjusted mortality rates for acute myocardial infarction (AMI), heart failure (HF), pneumonia (PN), chronic obstructive pulmonary disease (COPD), or coronary artery bypass grafting (CABG) during the 2018 reporting period. We chose 2018 Hospital Compare data because the data collection periods used to calculate hospital performance overlapped with our publication search period (2015–2016). Veterans Administration hospitals, hospitals in US territories, and hospitals with unavailable data were excluded.

We linked these hospitals with the 2016 American Hospital Association (AHA) survey to obtain official hospital names, addresses, teaching status (Council of Teaching Hospitals and Health Systems [COTH] member, non‐COTH teaching, or nonteaching), ownership, bed size, geographic region, metropolitan location, total annual admissions, and percentage of Medicaid admissions. The ANCC Magnet Recognition Program was queried to identify hospital Magnet recognition, a measure of nursing excellence, at any time between 2015 and 2016.

Hospital Medicare Provider Identification numbers from the CMS Hospital File were used to combine data from Hospital Compare, AHA, and Magnet sources. From these linked data, we randomly sampled 40% of hospitals based on their teaching status, bed size, and geographic region, resulting in a final study cohort of 1604 US hospitals. We applied a standardized search strategy using the Science Citation Index Expanded (a subset of Web of Science; see Appendix S1) to identify all publications attributed to these institutions in 2015 and 2016, based on an author's designated affiliation. A hospital was credited only once per publication regardless of the number of coauthors from that hospital, and multiple hospital affiliations were possible for a single paper.
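The crediting rule described above can be illustrated with a minimal sketch. This is not the authors' search procedure (which relied on Web of Science queries and librarian review); the function name, the record layout, and the sample records are hypothetical.

```python
from collections import defaultdict

def credit_publications(author_affiliations):
    """Count publications per hospital, crediting each hospital once per paper.

    author_affiliations: iterable of (paper_id, hospital_id) pairs, one per
    author affiliation. The same pair may repeat when several coauthors
    share a hospital. (Hypothetical record layout.)
    """
    papers_by_hospital = defaultdict(set)
    for paper_id, hospital_id in author_affiliations:
        # A set collapses repeated coauthors from the same hospital.
        papers_by_hospital[hospital_id].add(paper_id)
    return {hospital: len(papers) for hospital, papers in papers_by_hospital.items()}

# Hypothetical records: paper P1 has two authors at hospital A and one at B.
records = [("P1", "A"), ("P1", "A"), ("P1", "B"), ("P2", "A")]
counts = credit_publications(records)
print(counts)
```

In this sketch, paper P1 counts once for hospital A despite its two coauthors there, and it also counts for hospital B, which mirrors the statement that a single paper may carry multiple hospital affiliations.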

The validity of our search process was highly dependent upon manual review by an experienced librarian and, despite prespecified rules, required considerable case‐by‐case judgment. Accordingly, after completion of the initial search, we performed a two‐step validation in which publications associated with a subset of hospitals in the study cohort were independently assessed by a second credentialed librarian (Appendix S2). Overall, 15% of COTH hospitals and 5% of non‐COTH teaching and nonteaching hospitals were included in the validation.

Multiple bibliometric indices were used to measure the quantity and quality of hospital publications, including total publications and citations, as well as publications and citations per 1000 admissions to standardize for institution size. We also explored the possibility of adjusting hospital publications by the number of hospital clinical staff. Several physician variables were obtained from the AHA survey, including “number of full‐time physicians,” “number of full‐time physician FTEs,” and “number of privileged physicians.” Missing responses for these hospital‐reported data were frequent (approximately 27%–37% across different variables). Discussions with AHA led us to the conclusion that reported physician numbers were likely underestimated at many institutions and that hospitals varied substantially in their methods of counting staff. Accordingly, we did not regard these data as sufficiently reliable and did not pursue analyses that controlled for the number of clinical staff.

To gauge the quality of the journals in which these articles were published, a cumulative journal impact factor (JIF) was estimated by aggregating the JIFs for all journals in which a hospital had publications, as calculated by Journal Citation Reports in 2015 and 2016 (available at Web of Science). A few journals had no JIF because they were new or their impact was too low, in which case we assigned JIF = 0 and included them in the analyses. A sensitivity analysis excluding these journals was performed. We also estimated an institutional h‐index 26 , 27 reflecting citations through June/July 2020 that were attributable to a hospital's publications during the 2015–2016 study period.
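The two indicators described above can be sketched as follows. The sketch assumes that "aggregating" means summing the JIF over each of a hospital's publications (the source does not spell out the exact aggregation), and it uses the standard h-index definition: the largest h such that h publications each have at least h citations. Function names and sample values are hypothetical.

```python
def cumulative_jif(journal_jifs):
    """Sum JIF over a hospital's publications; a missing JIF (None) counts as 0."""
    return sum(jif if jif is not None else 0.0 for jif in journal_jifs)

def h_index(citation_counts):
    """Largest h such that h publications each have at least h citations."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, citations in enumerate(ranked, start=1):
        if citations >= rank:
            h = rank
        else:
            break
    return h

# Hypothetical hospital with five publications.
jifs = [3.5, None, 10.0, 2.5, 4.0]   # None marks a journal with no JIF
citations = [25, 8, 5, 3, 0]

print(cumulative_jif(jifs), h_index(citations))
```

Treating a missing JIF as 0 keeps the publication in the analysis without adding to the cumulative score, which corresponds to the primary analysis; dropping the `None` entries instead would correspond to the sensitivity analysis.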

We estimated associations between hospital characteristics and expected publication rates per annual admission using negative binomial (NB) regression models to account for overdispersion. Publication count was the dependent variable, and the annual number of hospital admissions (in thousands) was used in an offset term to account for variation in institutional size across hospitals (size may be a proxy for greater resources, more physician staff, and the well‐described association between volume and outcomes). Independent variables in the models included hospital teaching status (COTH, non‐COTH teaching, nonteaching [reference]); bed size (continuous variable); census region (New England, Mid/South Atlantic, East North/South Central, West North/South Central, Mountain [reference], and Pacific); hospital ownership (not‐for‐profit, government‐owned, and investor‐owned [reference]); metropolitan location (yes/no); Magnet status (yes/no); and percentage of Medicaid admissions.
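To make the role of the offset concrete, the sketch below computes the expected publication count under a log-link count model of the form log(mu) = log(admissions / 1000) + intercept + sum of coefficient × covariate. It illustrates only the mean structure; it does not fit a model or estimate the NB dispersion parameter, and the intercept and coefficients are hypothetical values, not the fitted estimates.

```python
import math

def expected_publications(admissions, intercept, coefficients, covariates):
    """Expected publication count under a log-link count model with an offset.

    log(mu) = log(admissions / 1000) + intercept + sum(beta_j * x_j)
    """
    offset = math.log(admissions / 1000.0)
    linear_predictor = intercept + sum(
        coefficients[name] * value for name, value in covariates.items()
    )
    return math.exp(offset + linear_predictor)

# Hypothetical coefficients (log rate ratios); NOT the study's fitted values.
betas = {"coth": math.log(20.0), "beds_per_10": math.log(1.02)}

base = expected_publications(10_000, -2.0, betas, {"coth": 0, "beds_per_10": 30})
coth = expected_publications(10_000, -2.0, betas, {"coth": 1, "beds_per_10": 30})

# The ratio of expected counts at equal admissions recovers exp(beta_coth).
rate_ratio = coth / base
print(round(rate_ratio, 2))
```

Because the offset enters with a fixed coefficient of 1, doubling admissions doubles the expected count while leaving every rate ratio unchanged, which is why the exponentiated coefficients can be read as ratios of publication rates per admission.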

Metrics encompassing multiple dimensions of hospital quality (patient outcomes, patient experience, hospital safety, and cost efficiency) were extracted from Hospital Compare files, which include publicly available performance data for all US Medicare‐certified hospitals. Specific measures included 30‐day risk‐standardized mortality (AMI, HF, PN, COPD, CABG); Hip/Knee replacement risk‐standardized complication rates; 30‐day risk‐standardized unplanned readmission rates (AMI, HF, PN, COPD, CABG, and Hip/Knee); Hospital Consumer Assessment of Healthcare Providers and Systems Survey (HCAHPS) percent “top box” (rated 9 or 10) overall hospital rating and percent willingness to recommend the hospital; Medicare Spending per Beneficiary, an efficiency measure; PSI‐90 composite; and central line‐associated bloodstream infections (CLABSI), catheter‐associated urinary tract infections (CAUTI), and surgical site infections after colon surgery (SSI‐Colon). Descriptions and data collection periods for each measure are provided in Appendix S3.

Multivariable linear regression was used to estimate the associations between the number of hospital publications and Hospital Compare measures. Separate models were estimated using specific hospital quality measures as dependent variables. Independent variables included various hospital characteristics and three categories of total publication volume during the study period: 0 publications [reference], 1–46, and >46 publications (>95th percentile of total publications among study hospitals). Hospital characteristics included in the models were identical to those in the NB regression, except that the COTH and non‐COTH teaching categories were combined to mitigate multicollinearity issues since there were no COTH hospitals in the zero‐publication group. Subsequent analyses were performed using two teaching groups (teaching vs. nonteaching [reference]).
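The three-level publication exposure described above amounts to a simple binning rule, sketched here. The function name and group labels are hypothetical; the default cutoff of 46 is the reported 95th percentile of total publications among study hospitals.

```python
def publication_group(total_publications, upper_cutoff=46):
    """Assign a hospital to one of three publication-volume groups."""
    if total_publications < 0:
        raise ValueError("publication count cannot be negative")
    if total_publications == 0:
        return "0"                      # reference group
    if total_publications <= upper_cutoff:
        return f"1-{upper_cutoff}"
    return f">{upper_cutoff}"

print([publication_group(n) for n in (0, 1, 46, 47)])
```

Passing a different `upper_cutoff` would give the analogous grouping for another indicator, provided the cutoff is computed from that indicator's own distribution.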

Because hospital volumes are associated with outcomes for many procedures and conditions, we also performed a sensitivity analysis using publications per 1000 admissions to define hospital publication groups (no publication, ≤95th percentile, and >95th percentile, based on publications per 1000 admissions). Additionally, we repeated analyses using beta regression for those Hospital Compare aggregate outcomes represented by proportions (mortality, complications, readmissions, and HCAHPS scores) to assess whether the use of linear regression in our primary analyses impacted the estimated associations for outcomes that are bounded between 0 and 1.

Finally, in separate multivariable analyses, we replaced publication volume categories with publication quality and impact indicators, including total citations per 1000 admissions and cumulative JIF for all publications attributed to a hospital, using three categories for each: no publications [reference], ≤95th percentile, and >95th percentile of their respective distributions. We estimated the association of each publication quality/impact indicator with Hospital Compare metrics.

Statistical analyses were performed using SAS software version 9.4 (SAS Institute, Inc.).

3. RESULTS

Among the 1604 study hospitals, 957 (59.7%) had no publications in 2015–2016 (Table 1). Only 647 hospitals (40.3%) had 1 or more publications, for a total of 58,347 publications (mean 36.4 publications per hospital, range 0–11,258). Among hospitals with publications, more than half (53.5% of 647 hospitals) had ≤3 publications. In descriptive analyses, average numbers of publications were higher among larger, metropolitan, not‐for‐profit hospitals, hospitals located in New England, COTH members, Magnet Recognition recipients, and hospitals with higher percentages of Medicaid admissions. Hospitals with no publications were often smaller, rural, nonteaching, investor‐owned, and without Magnet recognition; they also had fewer Medicaid patients and were often located in the Central regions.

TABLE 1.

Publication volume (2015–2016) by hospital characteristics

| Hospital characteristic | # hospitals | Total publications | Mean (median) publications | 0 publications, n (%) a | 1–10 publications, n (%) a | 11–100 publications, n (%) a | 101–500 publications, n (%) a | >500 publications, n (%) a |
|---|---|---|---|---|---|---|---|---|
| All hospitals | 1604 | 58,347 | 36.38 (0) | 957 (59.66%) | 481 (29.99%) | 123 (7.67%) | 28 (1.75%) | 15 (0.94%) |
| Teaching status | | | | | | | | |
| COTH | 86 | 51,524 | 599.12 (81) | 0 | 8 (9.30%) | 42 (48.84%) | 22 (25.58%) | 14 (16.28%) |
| Non‐COTH teaching | 562 | 6252 | 11.12 (2) | 186 (33.10%) | 293 (52.14%) | 76 (13.52%) | 6 (1.07%) | 1 (0.18%) |
| Nonteaching | 956 | 571 | 0.60 (0) | 771 (80.65%) | 180 (18.83%) | 5 (0.52%) | 0 | 0 |
| Hospital ownership | | | | | | | | |
| Not‐for‐profit | 1016 | 51,385 | 50.58 (0) | 530 (52.17%) | 350 (34.45%) | 104 (10.24%) | 21 (2.07%) | 11 (1.08%) |
| Government‐owned | 325 | 6444 | 19.83 (0) | 253 (77.85%) | 48 (14.77%) | 13 (4.00%) | 7 (2.15%) | 4 (1.23%) |
| Investor‐owned | 263 | 518 | 1.97 (0) | 174 (66.16%) | 83 (31.56%) | 6 (2.28%) | 0 | 0 |
| Metro location | | | | | | | | |
| Yes | 954 | 58,024 | 60.82 (1) | 396 (41.51%) | 395 (41.40%) | 120 (12.58%) | 28 (2.94%) | 15 (1.57%) |
| No | 650 | 323 | 0.50 (0) | 561 (86.31%) | 86 (13.23%) | 3 (0.46%) | 0 | 0 |
| Magnet status in 2015–2016 | | | | | | | | |
| Yes | 137 | 28,834 | 210.47 (9) | 11 (8.03%) | 61 (44.53%) | 44 (32.12%) | 13 (9.49%) | 8 (5.84%) |
| No | 1467 | 29,513 | 20.12 (0) | 946 (64.49%) | 420 (28.63%) | 79 (5.39%) | 15 (1.02%) | 7 (0.48%) |
| Bed size | | | | | | | | |
| <100 beds | 755 | 189 | 0.25 (0) | 681 (90.20%) | 71 (9.40%) | 3 (0.40%) | 0 | 0 |
| 100–299 beds | 550 | 2180 | 3.96 (1) | 248 (45.09%) | 270 (49.09%) | 29 (5.27%) | 3 (0.55%) | 0 |
| 300–499 beds | 188 | 5302 | 28.20 (4) | 22 (11.70%) | 109 (57.98%) | 50 (26.60%) | 4 (2.13%) | 3 (1.60%) |
| ≥500 beds | 111 | 50,676 | 456.54 (43) | 6 (5.41%) | 31 (27.93%) | 41 (36.94%) | 21 (18.92%) | 12 (10.81%) |
| Percent of Medicaid admissions among all inpatient admissions | | | | | | | | |
| Low tercile | 535 | 2291 | 4.28 (0) | 382 (71.40%) | 122 (22.80%) | 25 (4.67%) | 6 (1.12%) | 0 |
| Medium tercile | 550 | 26,505 | 48.19 (0) | 323 (58.73%) | 177 (32.18%) | 42 (7.64%) | 3 (0.55%) | 5 (0.91%) |
| High tercile | 519 | 29,551 | 56.94 (1) | 252 (48.55%) | 182 (35.07%) | 56 (10.79%) | 19 (3.66%) | 10 (1.93%) |
| Regions | | | | | | | | |
| Mountain | 126 | 357 | 2.83 (0) | 77 (61.11%) | 42 (33.33%) | 6 (4.76%) | 1 (0.79%) | 0 |
| Mid/South Atlantic | 394 | 7031 | 17.85 (1) | 181 (45.94%) | 151 (38.32%) | 48 (12.18%) | 12 (3.05%) | 2 (0.51%) |
| Pacific | 176 | 3760 | 21.36 (1) | 85 (48.30%) | 61 (34.66%) | 23 (13.07%) | 4 (2.27%) | 3 (1.70%) |
| East North/South Central | 404 | 13,851 | 34.28 (0) | 268 (66.34%) | 103 (25.50%) | 24 (5.94%) | 5 (1.24%) | 4 (0.99%) |
| West North/South Central | 437 | 15,213 | 34.81 (0) | 319 (73.00%) | 97 (22.20%) | 15 (3.43%) | 3 (0.69%) | 3 (0.69%) |
| New England | 67 | 18,135 | 270.67 (1) | 27 (40.30%) | 27 (40.30%) | 7 (10.45%) | 3 (4.48%) | 3 (4.48%) |

Note: Number of hospitals, total publications, and mean (median) publications per hospital, overall and for various subgroups. The number of hospitals in each publication category is also provided.

Abbreviation: COTH, Council of Teaching Hospitals and Health Systems.

a Row percentages sum to 100%.

Validation results for the publication search strategy are summarized in Table S1, showing an absolute overall discordance of only 36 publications, or 0.27% of the total publications (13,162) in the original search.

Compared with publications from hospitals in other teaching intensity categories, COTH hospital publications were more likely to appear in high‐impact journals with journal impact factor >10 (Table 2; 11.71% for COTH, 6.49% for non‐COTH teaching, and 3.50% for nonteaching hospital publications). Among the 58,334 publications with available citation data, 90.64% (52,876) were cited at least once as of June/July 2020. Average COTH hospital citations per publication (22.6) exceeded those of non‐COTH teaching (18.2) or nonteaching hospitals (12.8).

TABLE 2.

Journal impact factor (JIF) and citations for publications from three hospital categories of teaching intensity

| Measure | All hospitals (n = 1604) | COTH (n = 86) | Non‐COTH teaching (n = 562) | Nonteaching (n = 956) |
|---|---|---|---|---|
| Journal impact factor (JIF) a | | | | |
| Number of publications (%) b | 58,347 (100%) | 51,524 (88.31%) | 6252 (10.72%) | 571 (0.98%) |
| Average JIF per publication (median) | 5.69 (3.49) | 5.86 (3.56) | 4.54 (2.98) | 3.39 (2.57) |
| JIF categories, number of publications (%) c | | | | |
| JIF: 0–5 | 39,916 (68.41%) | 34,575 (67.10%) | 4869 (77.88%) | 472 (82.66%) |
| JIF: 5–10 | 11,974 (20.52%) | 10,918 (21.19%) | 977 (15.63%) | 79 (13.84%) |
| JIF: >10 | 6457 (11.07%) | 6031 (11.71%) | 406 (6.49%) | 20 (3.50%) |
| Citations | | | | |
| Number of publications with available citation data (%) b | 58,334 (100%) | 51,515 (88.31%) | 6248 (10.71%) | 571 (0.98%) |
| Average citations per publication (median) | 22.00 (9) | 22.57 (9) | 18.17 (7) | 12.80 (6) |
| Citation categories, number of publications (%) c | | | | |
| Citations: 0–10 | 32,690 (56.04%) | 28,445 (55.22%) | 3851 (61.64%) | 394 (69.00%) |
| Citations: 11–50 | 20,967 (35.94%) | 18,810 (36.51%) | 2005 (32.09%) | 152 (26.62%) |
| Citations: 51–100 | 2879 (4.94%) | 2616 (5.08%) | 244 (3.91%) | 19 (3.33%) |
| Citations: 101–300 | 1453 (2.49%) | 1329 (2.58%) | 118 (1.89%) | 6 (1.05%) |
| Citations: >300 | 345 (0.59%) | 315 (0.61%) | 30 (0.48%) | 0 |

Note: Journal Impact Factor and citation numbers for all hospitals and for the three categories of hospital teaching intensity. Number of citations and Journal impact factor increased with teaching intensity (i.e., COTH > non‐COTH teaching > nonteaching).

Abbreviation: JIF, journal impact factor.

a Some journals have no impact factor because they are new or because their impact is too low. We assigned these journals a JIF of 0 and included them in the analyses. A sensitivity analysis excluding these journals yielded similar findings.

b Percentages for COTH, non‐COTH teaching, and nonteaching hospitals sum to the 100% shown for all hospitals.

c Percentages across JIF categories and across citation categories each sum to 100%.

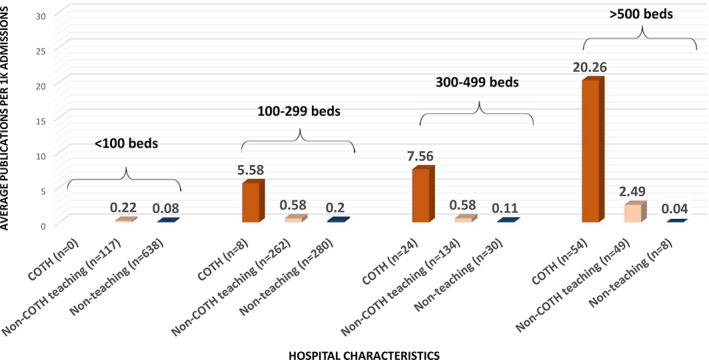

Figure 1 displays the number of publications per 1000 admissions, and Supplemental Figure S1 shows citations per 1000 hospital admissions, both stratified by teaching status and bed size. COTH teaching hospitals with >500 beds had the highest average number of publications (20.3) and citations per 1000 admissions (454.2). In each bed size category, they consistently had higher average publications and citations per 1000 hospital admissions compared to non‐COTH teaching and nonteaching hospitals. The magnitude of differences in publications and citations between COTH, non‐COTH teaching, and nonteaching hospitals increased with the number of hospital beds.

FIGURE 1.

Average publications per 1000 hospital admissions, stratified by hospital teaching category and beds. Council of Teaching Hospitals and Health Systems (COTH) hospitals had the highest numbers of publications per 1000 admissions in every category of hospital size (measured by number of beds).

We were able to estimate hospital‐level h‐indices for 614 hospitals (94.9% of the 647 hospitals with publications in our study), based on their 2015–2016 publications (Table S2). These 614 hospitals included 100% of COTH, 64% of non‐COTH teaching, and 17% of nonteaching hospitals. The average institutional h‐index was 27.2 for COTH, 4.3 for non‐COTH teaching, and 1.9 for nonteaching hospitals. The five highest hospital h‐indices were from major academic medical centers with scores of 177, 168, 134, 130, and 94.

Table 3 reports the estimates from the NB regression analysis. Hospital characteristics associated with higher expected rates of publications included COTH (rate ratio [RR]: 24.29, 95% confidence interval [CI]: 15.03–38.86) and non‐COTH teaching (RR: 3.09, 95% CI: 2.44–3.94) status; increased number of hospital beds (RR: 1.02 per increase of 10 beds, 95% CI: 1.01–1.03); New England (RR: 3.90, 95% CI: 2.16–6.96) and Pacific region (RR: 1.99, 95% CI: 1.23–3.19); and not‐for‐profit (RR: 1.39, 95% CI: 1.04–1.86) and government ownership (RR: 2.59, 95% CI: 1.75–3.78). Although initial descriptive analyses (Table 1) suggested that hospitals with a higher percentage of Medicaid admissions had higher publication rates, the direction of this association reversed after adjusting for other hospital characteristics in the multivariable model (RR: 0.99 per 1% increase, 95% CI: 0.98–0.998).
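Rate ratios for continuous covariates depend on the unit of increase, so small per-unit values can correspond to sizable differences over realistic ranges. The sketch below re-expresses the reported per-10-bed and per-1% Medicaid rate ratios on larger scales, assuming the log-linear form of the model. Because the published values are rounded to two decimals, the rescaled figures are approximate illustrations rather than reported results; the function name is hypothetical.

```python
def rescale_rate_ratio(rate_ratio, original_units, new_units):
    """Re-express a rate ratio per `original_units` as one per `new_units`.

    Under a log-linear model the coefficient scales linearly with the
    covariate, so the rate ratio scales as a power.
    """
    return rate_ratio ** (new_units / original_units)

# Reported RR of 1.02 per 10 beds, re-expressed per 100 beds.
beds_rr_per_100 = rescale_rate_ratio(1.02, 10, 100)

# Reported RR of 0.99 per 1 percentage point of Medicaid admissions,
# re-expressed per 10 percentage points.
medicaid_rr_per_10 = rescale_rate_ratio(0.99, 1, 10)

print(round(beds_rr_per_100, 3), round(medicaid_rr_per_10, 3))
```

On these rounded inputs, a 100-bed difference corresponds to roughly a 22% higher expected publication rate, and a 10-point higher Medicaid share to roughly a 10% lower rate, all else being equal.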

TABLE 3.

Association between hospital characteristics and hospital publication volumes using negative binomial regression

| Variables | Rate ratio | 95% confidence interval | p value |
|---|---|---|---|
| Teaching status | | | |
| COTH teaching | 24.29 | 15.03–38.86 | <0.0001 |
| Non‐COTH teaching | 3.09 | 2.44–3.94 | <0.0001 |
| Nonteaching hospitals | ref | ref | ref |
| Hospital ownership | | | |
| Not‐for‐profit | 1.39 | 1.04–1.86 | 0.03 |
| Government‐owned | 2.59 | 1.75–3.78 | <0.0001 |
| Investor‐owned | ref | ref | ref |
| Metropolitan location | | | |
| Yes | 1.16 | 0.87–1.54 | 0.32 |
| No | ref | ref | ref |
| Magnet status in 2015–2016 | | | |
| Yes | 1.36 | 0.97–1.90 | 0.07 |
| No | ref | ref | ref |
| Hospital beds (per 10 beds increase) | 1.02 | 1.01–1.03 | <0.0001 |
| Percent of Medicaid admissions among all inpatient admissions, 1% increase | 0.99 | 0.98–0.998 | 0.01 |
| Regions (Census divisions) | | | |
| Mid/South Atlantic | 1.02 | 0.66–1.58 | 0.92 |
| Pacific | 1.99 | 1.23–3.19 | 0.01 |
| East North/South Central | 0.99 | 0.63–1.55 | 0.97 |
| West North/South Central | 1.42 | 0.90–2.23 | 0.12 |
| New England | 3.90 | 2.16–6.96 | <0.0001 |
| Mountain | ref | ref | ref |

Note: Negative binomial regression for number of publications with an offset for annual admissions was used to estimate the association between hospital characteristics and expected publication rates. The rate ratio refers to the ratio of expected rate of publications per annual admission relative to the reference group after adjustment for the other variables listed. Significant predictors of high publication rates included teaching intensity, number of hospital beds, New England or Pacific region, and not‐for‐profit or government ownership. Percentage of Medicaid admissions was negatively associated with publication rates.

In multivariable linear regression analyses, hospitals with more publications had lower 30‐day mortality rates for common index diagnoses (Table 4). Compared with hospitals having 0 publications, those with >46 publications had average 30‐day adjusted mortality rates (adjusted for patient characteristics, per CMS models) that were lower, in relative terms, by 4.8% for AMI, 12.7% for heart failure, 7.9% for pneumonia, 5.5% for COPD, and 27.2% for CABG. In multivariable linear regression with additional adjustment for hospital characteristics, having >46 publications was significantly and inversely associated (p values from 0.01 to <0.0001) with risk‐adjusted mortality rates for each diagnosis or procedure studied; hospitals with 1–46 publications also had significantly lower risk‐adjusted mortality rates for CABG (p = 0.02). The HCAHPS percentage of “top box” (rated 9 or 10) overall hospital rating and patient willingness to recommend were higher in hospitals with >46 versus 0 publications (p = 0.04 and 0.01, respectively). No statistically significant difference was found in either HCAHPS measure for hospitals with 1–46 publications versus 0 publications.
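The percentage differences in mortality quoted above are relative differences between group means, and they can be reproduced from the Mean (SD) columns of Table 4. The short check below uses those published means; the function and variable names are hypothetical.

```python
def relative_reduction(reference_rate, comparison_rate):
    """Percent by which comparison_rate is below reference_rate."""
    return 100.0 * (reference_rate - comparison_rate) / reference_rate

# Mean 30-day risk-adjusted mortality (%) from Table 4:
# (hospitals with 0 publications, hospitals with >46 publications)
mortality = {
    "AMI": (13.39, 12.75),
    "HF": (12.16, 10.62),
    "PN": (15.95, 14.69),
    "COPD": (8.42, 7.96),
    "CABG": (3.64, 2.65),
}

reductions = {
    condition: round(relative_reduction(zero_pubs, high_pubs), 1)
    for condition, (zero_pubs, high_pubs) in mortality.items()
}
print(reductions)
```

These relative differences come from group means that are risk-adjusted for patient characteristics only. The adjusted coefficients in Table 4 are absolute differences in percentage points after further adjustment for hospital characteristics, so the two sets of numbers are not directly comparable.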

TABLE 4.

Unadjusted and adjusted associations between hospital publications and core quality and process measures a from Hospital Compare

| Measure | Hospitals with available HC data, n | >46 publications, mean (SD) | 1–46 publications, mean (SD) | 0 publications, mean (SD) | >46 vs. 0 publications, adjusted coefficient (95% CI) | p value | 1–46 vs. 0 publications, adjusted coefficient (95% CI) | p value |
|---|---|---|---|---|---|---|---|---|
| 30‐day risk adjusted mortality rate, % | | | | | | | | |
| AMI | 918 | 12.75 (1.32) | 13.27 (1.33) | 13.39 (1.13) | −0.52 (−0.92, −0.12) | 0.01 | 0.01 (−0.19, 0.20) | 0.95 |
| HF | 1404 | 10.62 (1.56) | 11.69 (1.80) | 12.16 (1.56) | −0.74 (−1.24, −0.23) | 0.004 | −0.07 (−0.30, 0.15) | 0.51 |
| PN | 1594 | 14.69 (1.89) | 15.85 (2.04) | 15.95 (1.90) | −1.02 (−1.61, −0.44) | 0.001 | 0.04 (−0.21, 0.30) | 0.73 |
| COPD | 1424 | 7.96 (0.95) | 8.48 (1.26) | 8.42 (0.98) | −0.48 (−0.82, −0.14) | 0.005 | 0.06 (−0.08, 0.21) | 0.40 |
| CABG | 417 | 2.65 (0.67) | 3.26 (0.88) | 3.64 (1.05) | −0.73 (−1.09, −0.37) | <0.0001 | −0.28 (−0.52, −0.04) | 0.02 |
| Risk‐adjusted complication rate for hip/knee replacement, % | | | | | | | | |
| Hip/knee | 1026 | 2.59 (0.65) | 2.60 (0.54) | 2.68 (0.49) | −0.02 (−0.19, 0.15) | 0.82 | −0.05 (−0.13, 0.03) | 0.17 |
| 30‐day risk adjusted unplanned readmission rate, % | | | | | | | | |
| AMI | 848 | 16.09 (1.13) | 16.00 (1.15) | 16.14 (0.88) | 0.03 (−0.31, 0.37) | 0.88 | −0.05 (−0.22, 0.12) | 0.57 |
| HF | 1430 | 21.68 (1.89) | 21.52 (1.72) | 21.77 (1.43) | −0.21 (−0.68, 0.26) | 0.39 | −0.25 (−0.46, −0.04) | 0.02 |
| PN | 1584 | 17.17 (1.51) | 16.80 (1.50) | 16.57 (1.15) | 0.13 (−0.26, 0.52) | 0.51 | 0.00 (−0.17, 0.18) | 0.99 |
| COPD | 1444 | 19.65 (1.03) | 19.62 (1.26) | 19.62 (1.04) | −0.26 (−0.61, 0.09) | 0.14 | −0.14 (−0.29, 0.01) | 0.07 |
| CABG | 417 | 13.19 (1.37) | 13.21 (1.26) | 13.51 (1.17) | −0.13 (−0.64, 0.39) | 0.63 | −0.15 (−0.48, 0.19) | 0.38 |
| Hip/Knee | 1039 | 4.18 (0.54) | 4.18 (0.54) | 4.19 (0.40) | −0.03 (−0.19, 0.13) | 0.72 | −0.03 (−0.10, 0.04) | 0.43 |
| HCAHPS (Hospital Consumer Assessment of Healthcare Providers and Systems), % of “top box” | | | | | | | | |
| Overall rating, % “top box” (scored 9 or 10) | 1523 | 72.97 (6.93) | 70.38 (7.25) | 72.65 (8.62) | 2.37 (0.14, 4.60) | 0.04 | −0.69 (−1.66, 0.27) | 0.16 |
| Willingness to recommend, % yes | 1523 | 75.56 (7.60) | 70.90 (8.43) | 70.93 (9.51) | 3.38 (0.83, 5.92) | 0.01 | −0.14 (−1.23, 0.96) | 0.81 |
| Medicare spending per beneficiary (MSPB), ratio of hospital cost/cost of national median hospital | | | | | | | | |
| MSPB | 1177 | 0.99 (0.04) | 1.00 (0.07) | 0.98 (0.09) | −0.02 (−0.04, 0.01) | 0.21 | 0.01 (−0.01, 0.02) | 0.13 |
| AHRQ patient safety indicators: PSI 90, weighted average of 10 component observed/expected ratios | | | | | | | | |
| PSI 90 composite | 1201 | 1.05 (0.22) | 1.01 (0.23) | 0.99 (0.13) | 0.02 (−0.04, 0.07) | 0.55 | 0.01 (−0.02, 0.03) | 0.87 |
| Health care associated infection measures, standardized infection observed/expected ratio | | | | | | | | |
| Central line associated bloodstream infection | 1333 | 0.93 (0.36) | 0.85 (0.81) | 0.64 (1.95) | 0.10 (−0.37, 0.58) | 0.67 | 0.10 (−0.11, 0.31) | 0.34 |
| Catheter associated urinary tract infection | 1387 | 1.00 (0.46) | 0.96 (0.86) | 1.11 (7.49) | 0.22 (−1.53, 1.97) | 0.80 | 0.04 (−0.71, 0.80) | 0.91 |
| Surgical site infection for colon surgery | 1223 | 1.16 (0.64) | 0.89 (0.99) | 0.79 (1.78) | 0.30 (−0.16, 0.75) | 0.20 | 0.09 (−0.11, 0.29) | 0.36 |

Note: Hospital Compare data in seven categories of quality indicators for hospitals in various total publication categories. Adjusted multivariable regression coefficients relating publication volume categories to Hospital Compare outcomes demonstrate that publications were associated with lower risk‐adjusted mortality for AMI, HF, PN, COPD, and CABG, and with higher overall hospital rating and willingness to recommend in HCAHPS. Similar findings were observed in sensitivity analyses when using publications per 1000 inpatient admissions to define hospital publication categories (Table S3).

Abbreviations: AHRQ, Agency for Healthcare Research and Quality; AMI, acute myocardial infarction; CABG, coronary artery bypass grafting surgery; COPD, chronic obstructive pulmonary disease; HCAHPS, Hospital Consumer Assessment of Healthcare Providers and Systems; HF, heart failure; MSPB, Medicare spending per beneficiary; PN, pneumonia; PSI, patient safety indicator; SD, standard deviation.

a Definitions of core quality and process measures are available in Appendix S3.

Risk‐adjusted 30‐day unplanned readmission rates for heart failure were significantly lower (p = 0.02) for hospitals with 1–46 publications compared with hospitals having 0 publications. Apart from this exception, there were no statistically significant associations between publications and complication rates, readmission rates, Medicare Spending per Beneficiary, PSI 90 scores, or standardized infection ratios (Table 4). Results using beta regression were similar.

Generally, similar findings were observed when using publications per 1000 hospital admissions or either of the publication quality and impact measures (number of publication citations per 1000 admissions or the cumulative journal impact factor) as bibliometric indicators (Tables S3–S5).

4. DISCUSSION

The current study contributes three important findings. First, having staff who publish in credible peer‐reviewed journals is not the norm among US hospitals: only 40% of hospitals in this study had even a single publication. Second, publications are overwhelmingly produced by COTH (RR = 24.29) and non‐COTH teaching (RR = 3.09) hospitals rather than nonteaching institutions, even after accounting for the volume of admissions (a reasonable proxy for the number of physicians, direct data for which were unavailable, as previously described) and other hospital characteristics. Third, publication quantity and quality are significantly and independently associated with lower risk‐adjusted mortality for five index conditions and procedures and higher patient experience of care scores, even after adjustment for hospital teaching status, size, ownership, metropolitan location, Magnet status, percent of Medicaid admissions, and geographic region.

4.1. Demonstrating the independent value‐added of research

Health care research often lacks the immediate, tangible societal impact associated with other hospital activities and may be viewed by some as a costly, “ivory‐tower” activity 22 with little direct impact on quality of care. By contrast, in industry and business, the value of Research and Development is readily affirmed by new products introduced, increased sales, and profitability. Research may improve absorptive capacity, the critical ability of a business to access, assimilate, and apply new knowledge, often from extrinsic sources. Although less frequently discussed in the health care context, this benefit could arguably also accrue to research‐oriented institutions. 8 , 9 , 13 , 14 , 15 , 16

Properly valuing the societal impact of hospital‐based health care research has important ramifications, especially for AMCs. As the scientific quantity and quality of research is not necessarily concordant with its societal benefit, various metrics have been proposed to specifically quantify the latter, 2 , 3 , 4 , 5 , 6 , 7 , 8 , 9 , 10 , 11 , 12 the most compelling of which would be an association with improved clinical outcomes. Through adjustment for multiple hospital characteristics, our study sought to isolate the association of hospital‐attributed publications and clinical quality from the well‐documented association of teaching intensity and quality. 17 , 18 , 19 , 20 , 21 , 22

4.2. Grant‐funding, clinical trial participation

Before focusing on publications, we considered other markers of hospital research productivity, including grant funding and clinical trial participation, both of which presented challenges. For example, some NIH grants are attributed directly to specific hospitals, while others are attributed to their affiliated medical schools, thus complicating linkages to Hospital Compare metrics. Further, grant funding is only the beginning of a research project's life cycle. Ultimately, the project may or may not generate actionable knowledge that improves patient outcomes; the translational delay may be substantial; and the amount of grant funding may not correlate with the project's clinical impact. Clinical trial participation is more easily linked to specific hospitals and has been associated with greater use of guideline‐concordant practices and better patient outcomes. An institution's patients may benefit whether or not they themselves are enrolled in a trial or have the specific diagnosis of interest. 28 , 29 , 30 , 31 , 32 , 33 , 34 , 35 However, like grant‐funded studies, opportunities for clinical trial participation at an institution may be limited.

4.3. Publications

We selected publication quantity and quality as the least problematic metrics of research productivity. These may report the end‐product of grants and clinical trials 23 , 36 but more often represent free‐standing projects. Publications have an unlimited range of topics and presentation formats (e.g., original clinical or basic science investigations, commentaries, reviews, meta‐analyses, editorials, letters); they may be produced by any author at any institution; and they have immediate and widespread electronic dissemination upon publication. Publications in credible journals have undergone a peer‐review process, which filters less worthy submissions, and revisions suggested by knowledgeable reviewers often result in significant improvements. As in our study, publication metrics may be compiled by skilled health care librarians, professionals who apply their specialized knowledge in information searching and retrieval to support and enhance clinical care and research. 37 Previous studies of the association of bibliometric indices and outcomes were smaller, were conducted at international sites, or were based on older data or less generalizable outcome measures. 16 , 38 , 39 , 40 , 41 Consistent with our contemporary US study, they have generally found positive associations between bibliometric research indicators and clinical performance.

4.4. Mechanisms linking research and clinical outcomes

Potential mechanisms for the association of research and clinical outcomes have been most extensively studied in the context of clinical trial participation 8 , 9 , 10 , 16 , 28 , 29 , 30 , 31 , 32 , 33 , 34 , 38 , 39 , 41 , 42 , 43 , 44 , 45 , 46 , 47 and include direct “bench to bedside” translational impact of clinical trials on the outcomes of enrolled patients, and a broad range of indirect benefits, including enhanced education and interaction of hospital staff with knowledgeable national experts; greater use of protocol‐driven, guideline‐concordant care; hospital infrastructure upgrades to satisfy trial requirements; more systematic, granular patient follow‐up; standardized quality and safety audits and monitoring; openness to new and innovative approaches; collaborative, team‐based care; and greater attention to detail.

Many of the hypothesized mechanisms relating clinical trial participation with outcomes are not directly relevant to the more generic process of conceiving, writing, and publishing a peer‐reviewed paper; many, perhaps most, publications are not the work product of formal clinical trials. In some instances, special expertise and experience of institutional authors with a particular disease, procedure, or health policy topic does create a natural milieu in which publications are more likely, and that expertise could presumably contribute to better clinical outcomes. A disproportionate concentration of knowledgeable clinician‐researchers at certain hospitals may occur because they actively recruit such individuals or because their cultures directly promote and reward expertise.

Importantly, we made no attempt, nor would it be practical, to identify and link the specific content of thousands of publications to corresponding clinical quality indicators, but neither does our hypothesis require such a linkage. We believe that research activity such as academic publishing creates an overall intellectual milieu of curiosity, innovation, critical thinking, and desire to excel that transcends the specific content of individual papers.

The process of writing and publishing papers also helps to create expertise and state‐of‐the‐art content knowledge among coauthors and study staff. Project inception and preparation require a comprehensive review of existing literature, including the results of previous studies and evidence‐based care recommendations derived from them. For multi‐author and multi‐institutional papers, each author also gains additional knowledge and perspectives from their collaborators. Finally, journal reviewers and editors often provide valuable insights.

All publications attributed to a hospital's staff physicians were identified in our analyses, which included a broad spectrum of basic science and clinical topics. We believe that a better understanding of the basic science foundations of disease contributes significantly to the ability of physicians to care for patients, and that it complements their knowledge of more clinical aspects.

Certain personal characteristics may also be common to both excellent clinical care and to writing and publishing scientific papers, and some hospitals either attract more staff with these characteristics or create an environment in which clinician‐scientists with these characteristics are developed. 42 Regardless of the specific topic, writing papers and navigating the peer‐review publication process requires initiative, intellectual curiosity, innovation, discipline, integrity, critical thinking, clarity of thought and expression, attention to detail, organizational skills, teamwork, effective time management, and persistence in the face of obstacles or failure, all qualities which should also contribute to being a good clinician.

These hypothesized mechanisms are all consistent with improved mortality and patient experience scores, as these endpoints reflect direct interactions of clinician‐authors with patients. They are also consistent with the lack of statistically significant association of publication metrics with other clinical performance indicators such as readmissions, cost‐efficiency, and hospital‐acquired conditions, which may reflect hospital systems‐related outcomes less under the direct influence of individual authors (e.g., readmissions are substantially impacted by patient socioeconomic factors and the availability of community resources).

5. LIMITATIONS

As in all observational studies, these findings reflect associations rather than causal relationships. Prolific clinician‐authors or hospitals with numerous publications do not inevitably render excellent clinical care, and many doctors and hospitals that do not publish in the peer‐reviewed literature may provide superb care.

The publication search strategy used in this study, while systematic and internally validated, still required personal initiative and subjective judgment. Further, there may be variations and misspellings in the published names of individual authors, hospital affiliations, and addresses, all of which complicate bibliometric searches. 48 Even standard bibliometric indices such as the impact factor and h‐index have known limitations. 23 , 24 , 48 , 49 , 50

Some clinician researchers may have listed only their medical school affiliation on their publications and, by design, would not be identified by our search strategy. Our intent was to link authors and publications to specific hospitals (see Appendix S1), the units of analysis for our study, as they were the locus of clinical activities which constituted our primary outcomes. If there are clinician‐scientists who listed only their medical school and not their hospital affiliation, our results would underestimate publications from those hospitals, almost all of which would be teaching hospitals given their medical school affiliations.

While Hospital Compare metrics are neither perfect nor completely comprehensive indicators of hospital quality, they are among the more widely used, transparent, and vetted measures in health care. We selected a broad, representative group of 19 common Hospital Compare metrics, but others could have been chosen instead.

A preoccupation with publications could lead to excessive self‐citation or a focus on publication quantity at the expense of quality, especially when the academic advancement of the primary author is at stake. 41 This might lead to a proliferation of marginal publications, including those from so‐called predatory publishers.

Notwithstanding publication checklists, the actual contributions of all but the first author of a publication are often unclear. Similarly, the clinical involvement of each author and their ability to influence patient outcomes is generally unavailable.

Finally, this study focused on more traditional associations of individual clinician‐authors and individual institutions. Although an author could list several hospitals, and all would be credited with a publication, our study does not account for health care systems where an individual physician‐hospital affiliation may not exist.

6. CONCLUSION

A minority of acute care hospitals have staff who publish in the peer‐reviewed literature. Like teaching intensity, publications are measurable hospital characteristics, and they are often associated with academic medical centers. Hospitals whose staff publish peer‐reviewed papers in the biomedical literature have, on average, superior mortality rates and patient experience of care scores, even after adjustment for teaching intensity, hospital size, metro location, ownership, geographic region, payer mix, and nursing Magnet recognition.

Publication‐related measures of research activity and impact are objective structural metrics of hospital quality. They could be incorporated into the mix of considerations used by patients in selecting a hospital provider, by funding agencies to assess the potential downstream value of research projects, and by organizations that develop measures of hospital quality.

CONFLICT OF INTEREST

All authors were employed by MGH, an academic medical center; Dr. David Cheng has also received support for unrelated research conducted at VA Boston Healthcare System.

Supporting information

Data S1. Supporting information.

ACKNOWLEDGMENTS

The authors wish to thank Jillian Carkin, MLIS for her invaluable assistance in the validation of our Web of Science search strategy.

Shahian DM, McCloskey D, Liu X, Schneider E, Cheng D, Mort EA. The association of hospital research publications and clinical quality. Health Serv Res. 2022;57(3):587-597. doi: 10.1111/1475-6773.13947

Funding information: None declared.

REFERENCES

- 1. Sutton JP, Washington RE, Fingar KR, Elixhauser A. Characteristics of Safety‐Net Hospitals, 2014: Statistical Brief #213. Healthcare Cost and Utilization Project (HCUP) Statistical Briefs. Rockville (MD): Agency for Healthcare Research and Quality (US). 2006.

- 2. Smith R. Measuring the social impact of research. BMJ. 2001;323(7312):528.

- 3. Patel VM, Ashrafian H, Ahmed K, et al. How has healthcare research performance been assessed?: a systematic review. J R Soc Med. 2011;104(6):251‐261.

- 4. Cruz Rivera S, Kyte DG, Aiyegbusi OL, Keeley TJ, Calvert MJ. Assessing the impact of healthcare research: a systematic review of methodological frameworks. PLoS Med. 2017;14(8):e1002370.

- 5. Adam P, Ovseiko PV, Grant J, et al. ISRIA statement: ten‐point guidelines for an effective process of research impact assessment. Health Res Pol Syst. 2018;16(1):8.

- 6. Panel on the Return on Investments in Health Research. Making an Impact: A Preferred Framework and Indicators to Measure Returns on Investment in Health Research. https://secureservercdn.net/50.62.88.87/cpp.178.myftpupload.com/wp-content/uploads/2011/09/ROI_FullReport.pdf. Ottawa, Ontario, Canada; 2009.

- 7. Banzi R, Moja L, Pistotti V, Facchini A, Liberati A. Conceptual frameworks and empirical approaches used to assess the impact of health research: an overview of reviews. Health Res Pol Syst. 2011;9:26.

- 8. Boaz A, Hanney S, Jones T, Soper B. Does the engagement of clinicians and organisations in research improve healthcare performance: a three‐stage review. BMJ Open. 2015;5(12):e009415.

- 9. Hanney S, Boaz A, Jones T, Soper B. Engagement in research: an innovative three‐stage review of the benefits for health‐care performance. Health Serv Del Res. 2013;1(8):1‐152.

- 10. Hanney SR, González‐Block MA. Health research improves healthcare: now we have the evidence and the chance to help the WHO spread such benefits globally. Health Res Pol Syst. 2015;13(1):12.

- 11. Selby P. The impact of the process of clinical research on health service outcomes. Ann Oncol. 2011;22(Suppl 7):vii2‐vii4.

- 12. Davies DS. Clinical research and healthcare outcomes. Ann Oncol. 2011;22(Suppl 7):vii1.

- 13. Zahra SA, George G. Absorptive capacity: a review, reconceptualization, and extension. Acad Manag Rev. 2002;27(2):185‐203.

- 14. Cohen WM, Levinthal DA. Absorptive capacity: a new perspective on learning and innovation. Adm Sci Q. 1990;35(1):128‐152.

- 15. Rosenberg N. Why do firms do basic research (with their own money)? Res Policy. 1990;19(2):165‐174.

- 16. García‐Romero A. Assessing the socio‐economic returns of biomedical research (I): how can we measure the relationship between research and health care? Scientometrics. 2006;66(2):249‐261.

- 17. Shahian DM, Liu X, Meyer GS, Normand SL. Comparing teaching versus nonteaching hospitals: the association of patient characteristics with teaching intensity for three common medical conditions. Acad Med. 2014;89(1):94‐106.

- 18. Shahian DM, Liu X, Meyer GS, Torchiana DF, Normand SL. Hospital teaching intensity and mortality for acute myocardial infarction, heart failure, and pneumonia. Med Care. 2014;52(1):38‐46.

- 19. Shahian DM, Liu X, Mort EA, Normand ST. The association of hospital teaching intensity with 30‐day postdischarge heart failure readmission and mortality rates. Health Serv Res. 2020;55(2):259‐272.

- 20. Shahian DM, Nordberg P, Meyer GS, et al. Contemporary performance of U.S. teaching and nonteaching hospitals. Acad Med. 2012;87(6):701‐708.

- 21. Burke LG, Frakt AB, Khullar D, Orav EJ, Jha AK. Association between teaching status and mortality in US hospitals. JAMA. 2017;317(20):2105‐2113.

- 22. Khullar D, Frakt AB, Burke LG. Advancing the Academic Medical Center value debate: are teaching hospitals worth it? JAMA. 2019;322(3):205‐206.

- 23. Williams G. Misleading, unscientific, and unjust: the United Kingdom's research assessment exercise. BMJ. 1998;316(7137):1079‐1082.

- 24. Hendrix D. An analysis of bibliometric indicators, National Institutes of Health funding, and faculty size at Association of American Medical Colleges medical schools, 1997‐2007. J Med Libr Assoc. 2008;96(4):324‐334.

- 25. Carpenter CR, Cone DC, Sarli CC. Using publication metrics to highlight academic productivity and research impact. Acad Emerg Med. 2014;21(10):1160‐1172.

- 26. Turaga KK, Gamblin TC. Measuring the surgical academic output of an institution: the “institutional” H‐index. J Surg Educ. 2012;69(4):499‐503.

- 27. Khan NR, Thompson CJ, Taylor DR, et al. An analysis of publication productivity for 1225 academic neurosurgeons and 99 departments in the United States. J Neurosurg. 2014;120(3):746‐755.

- 28. Ozdemir BA, Karthikesalingam A, Sinha S, et al. Research activity and the association with mortality. PLoS One. 2015;10(2):e0118253.

- 29. Downing A, Morris EJ, Corrigan N, et al. High hospital research participation and improved colorectal cancer survival outcomes: a population‐based study. Gut. 2017;66(1):89‐96.

- 30. Purvis T, Hill K, Kilkenny M, Andrew N, Cadilhac D. Improved in‐hospital outcomes and care for patients in stroke research: an observational study. Neurology. 2016;87(2):206‐213.

- 31. Du Bois A, Rochon J, Lamparter C, Pfisterer J. Pattern of care and impact of participation in clinical studies on the outcome in ovarian cancer. Int J Gynecol Cancer. 2005;15(2):183‐191.

- 32. Rochon J, du Bois A. Clinical research in epithelial ovarian cancer and patients' outcome. Ann Oncol. 2011;22(Suppl 7):vii16‐vii19.

- 33. Majumdar SR, Roe MT, Peterson ED, Chen AY, Gibler WB, Armstrong PW. Better outcomes for patients treated at hospitals that participate in clinical trials. Arch Intern Med. 2008;168(6):657‐662.

- 34. Laliberte L, Fennell ML, Papandonatos G. The relationship of membership in research networks to compliance with treatment guidelines for early‐stage breast cancer. Med Care. 2005;43(5):471‐479.

- 35. Carpenter WR, Reeder‐Hayes K, Bainbridge J, et al. The role of organizational affiliations and research networks in the diffusion of breast cancer treatment innovation. Med Care. 2011;49(2):172‐179.

- 36. Druss BG, Marcus SC. Tracking publication outcomes of National Institutes of Health grants. Am J Med. 2005;118(6):658‐663.

- 37. Sollenberger JF, Holloway RG Jr. The evolving role and value of libraries and librarians in health care. JAMA. 2013;310(12):1231‐1232.

- 38. Bennett WO, Bird JH, Burrows SA, Counter PR, Reddy VM. Does academic output correlate with better mortality rates in NHS trusts in England? Public Health. 2012;126(Suppl 1):S40‐S43.

- 39. Pons J, Sais C, Illa C, et al. Is there an association between the quality of hospitals' research and their quality of care? J Health Serv Res Policy. 2010;15(4):204‐209.

- 40. Alotaibi NM, Ibrahim GM, Wang J, et al. Neurosurgeon academic impact is associated with clinical outcomes after clipping of ruptured intracranial aneurysms. PLoS One. 2017;12(7):e0181521.

- 41. Tchetchik A, Grinstein A, Manes E, Shapira D, Durst R. From research to practice: which research strategy contributes more to clinical excellence? Comparing high‐volume versus high‐quality biomedical research. PLoS One. 2015;10(6):e0129259.

- 42. Krzyzanowska MK, Kaplan R, Sullivan R. How may clinical research improve healthcare outcomes? Ann Oncol. 2011;22(Suppl 7):vii10‐vii15.

- 43. Clarke M, Loudon K. Effects on patients of their healthcare practitioner's or institution's participation in clinical trials: a systematic review. Trials. 2011;12:16.

- 44. Christmas C, Durso SC, Kravet SJ, Wright SM. Advantages and challenges of working as a clinician in an academic department of medicine: academic clinicians' perspectives. J Grad Med Educ. 2010;2(3):478‐484.

- 45. Zerhouni EA. Translational and clinical science: time for a new vision. N Engl J Med. 2005;353(15):1621‐1623.

- 46. Lenfant C. Clinical research to clinical practice: lost in translation? N Engl J Med. 2003;349(9):868‐874.

- 47. Van De Ven AH, Johnson PE. Knowledge for theory and practice. Acad Manag Rev. 2006;31(4):802‐821.

- 48. Plana NM, Massie JP, Bekisz JM, Spore S, Diaz‐Siso JR, Flores RL. Variations in databases used to assess academic output and citation impact. N Engl J Med. 2017;376(25):2489‐2491.

- 49. Seglen PO. Why the impact factor of journals should not be used for evaluating research. BMJ. 1997;314(7079):498‐502.

- 50. Garfield E. The history and meaning of the journal impact factor. JAMA. 2006;295(1):90‐93.
