Highlights

- Admission CTA radiomics and machine-learning models can predict stroke outcomes.

- Models solely using radiomics may be useful in the absence of a reliable clinical exam.

- Automated models like ours can provide risk stratification for acute LVO stroke.

Keywords: Radiomics, Stroke, Large vessel occlusion, CTA, Quantitative imaging, Mechanical thrombectomy

Abstract

Background and Purpose

As “time is brain” in acute stroke triage, the need for automated prognostication tools continues to increase, particularly in rapidly expanding tele-stroke settings. We aimed to create an automated prognostication tool for anterior circulation large vessel occlusion (LVO) stroke based on admission CTA radiomics.

Methods

We automatically extracted 1116 radiomics features from the anterior circulation territory on admission CTAs of 829 acute LVO stroke patients who underwent mechanical thrombectomy in two academic centers. We trained, optimized, validated, and compared different machine-learning models to predict favorable outcome (modified Rankin Scale ≤ 2) at discharge and 3-month follow-up using four different input sets: “Radiomics”, “Radiomics + Treatment” (radiomics, post-thrombectomy reperfusion grade, and intravenous thrombolysis), “Clinical + Treatment” (baseline clinical variables and treatment), and “Combined” (radiomics, treatment, and baseline clinical variables).

Results

For discharge outcome prediction, models were optimized/trained on n = 494 and tested on an independent cohort of n = 100 patients from Yale. Receiver operating characteristic analysis of the independent cohort showed no significant difference between best-performing Combined input models (area under the curve, AUC = 0.77) versus Radiomics + Treatment (AUC = 0.78, p = 0.78), Radiomics (AUC = 0.78, p = 0.55), or Clinical + Treatment (AUC = 0.77, p = 0.87) models. For 3-month outcome prediction, models were optimized/trained on n = 373 and tested on an independent cohort from Yale (n = 72), and an external cohort from Geisinger Medical Center (n = 232). In the independent cohort, there was no significant difference between Combined input models (AUC = 0.76) versus Radiomics + Treatment (AUC = 0.72, p = 0.39), Radiomics (AUC = 0.72, p = 0.39), or Clinical + Treatment (AUC = 0.76, p = 0.90) models; however, in the external cohort, the Combined model (AUC = 0.74) outperformed Radiomics + Treatment (AUC = 0.66, p < 0.001) and Radiomics (AUC = 0.68, p = 0.005) models for 3-month prediction.

Conclusion

Machine-learning signatures of admission CTA radiomics can provide prognostic information in acute LVO stroke candidates for mechanical thrombectomy. Such objective and time-sensitive risk stratification can guide treatment decisions and facilitate tele-stroke assessment of patients. Particularly in the absence of reliable clinical information at the time of admission, models solely using radiomics features can provide a useful prognostication tool.

Nomenclature

Non-standard Abbreviations and Acronyms

- AUC: area under the curve
- ET: endovascular mechanical thrombectomy
- ElNet: elastic net
- HClust: hierarchical clustering
- ICA: internal carotid artery
- IQR: interquartile range
- LVO: large vessel occlusion
- RIDGE: logistic regression with RIDGE regularization
- MCA: middle cerebral artery
- MRMR: minimum redundancy maximum relevance filter
- mRS: modified Rankin Scale
- mTICI: modified Thrombolysis in Cerebral Infarction
- NIHSS: National Institutes of Health Stroke Scale
- NBayes: naive Bayes
- noFS: no feature selection
- pMIM: Pearson correlation-based redundancy reduction with mutual information filter
- PCA: principal component analysis
- RF: random forest
- ROC: receiver operating characteristic
- rt-PA: recombinant tissue plasminogen activator
- SVM: support vector machine
- XGB: XGBoost

1. Introduction

Stroke is the leading cause of severe disability and the fifth leading cause of death in the United States (Benjamin et al., 2017, Xu et al., 2020), with large vessel occlusion (LVO) strokes accounting for 30% of all ischemic strokes but 90% of stroke-related mortality and severe disability (Malhotra et al., 2017). Efforts to combat such devastating outcomes in LVO stroke have led to the rise of endovascular therapies, particularly mechanical thrombectomy in the last decade. Landmark trials have shown significant benefit of endovascular thrombectomy (ET) in LVO stroke outcome, even when performed up to 24 h after onset (Albers et al., 2018, Nogueira et al., 2018).

However, it remains difficult to determine which patients will benefit from ET. For patients presenting more than six hours after stroke onset, current eligibility criteria set by the American Heart Association (AHA) (Powers, 2019) rely on the mismatch between the volume of at-risk territory and the infarct core, as indicated by CT or MRI perfusion scans, which add cost and infrastructure requirements. Despite the overwhelming efficacy of ET, up to 50% of acute LVO patients have poor long-term outcomes after successful reperfusion, i.e., futile recanalization (Meinel et al., 2020). Conversely, of the 30% of acute ischemic stroke patients with intracranial LVO (Lakomkin et al., 2019), only 7–8% are eligible for ET according to current AHA guidelines (Desai et al., 2019), and, under those guidelines, the “number needed to treat” with ET is only 2–2.6 to achieve a 1-point improvement of 90-day modified Rankin Scale (mRS) score (Haussen et al., 2018, Weber et al., 2019). These statistics highlight a large proportion of patients who may benefit from ET but who do not fulfill current restrictive eligibility guidelines.

Non-contrast CT followed by CTA is the mainstay of the acute ischemic stroke imaging workflow and LVO treatment triage (Martinez et al., 2020). CTA source images have higher sensitivity in the detection of acute ischemic changes compared to CT (Camargo et al., 2007), and areas of CTA hypoattenuation better correlate with depressed cerebral blood flow (CBF) rather than volume (CBV) in arterial phase images obtained with multidetector scanners (Pulli et al., 2012, Sharma et al., 2011). Thus, quantitative features of admission CTAs may provide better prognostication and treatment triage for acute LVO stroke patients beyond estimation of infarct volume. This can be done by utilizing radiomics, which enables automated extraction of a large number of quantitative features from medical images representing shape, intensity, and texture characteristics. Machine-learning models using radiomics features have already demonstrated utility in predicting clinically relevant information (Haider et al., 2020a), including in LVO (Regenhardt et al., 2022).

With the goal of assisting treatment decisions and improving baseline risk stratification, we hypothesized that radiomics features extracted from admission CTAs could predict post-thrombectomy outcome. From a database of over 800 patients from two comprehensive stroke centers, we devised and automated a platform for extraction of radiomics features from the anterior circulation territory of admission CTAs. Then, using different combinations of feature selection and machine-learning classifiers, we trained, optimized, validated, and compared different models for prediction of outcome at discharge and 3-month follow-up.

2. Materials and methods

2.1. Clinical and imaging data acquisition

The subjects for training, validation, and independent testing of models were identified from the Yale New Haven Hospital stroke center registry between 1/1/2014 and 10/31/2020. In addition, a cohort of 232 subjects from Geisinger Medical Center who presented between 1/1/2016 and 12/31/2019 was used for external testing of models predicting long-term functional outcome at 3-month follow-up. Patients were included if they (1) suffered an anterior circulation LVO stroke (ICA, M1, or M2), (2) had CTA source images with slice thickness ≤ 1 mm, (3) underwent ET, and (4) had modified Rankin Scale (mRS) assessment of functional outcome recorded at discharge or long-term (at 3-month follow-up). Functional outcome was dichotomized as favorable (mRS ≤ 2) versus poor (mRS > 2), and patients were excluded from discharge or long-term analysis groups if they were missing mRS data for that timepoint. Post-thrombectomy reperfusion was assessed using the modified Thrombolysis in Cerebral Infarction (mTICI) system, reported by the treating neurointerventionalist. Patients were excluded if they had (a) any simultaneous posterior circulation LVO, (b) poor quality CTA not amenable to analysis (due to motion, metal artifact, or scanner-based artifacts), or (c) missing admission clinical information. In the external dataset, no patient was aged 90. Institutional review board approval was obtained for retrospective data collection with informed consent waived at respective institutes. All procedures followed were in accordance with institutional guidelines.

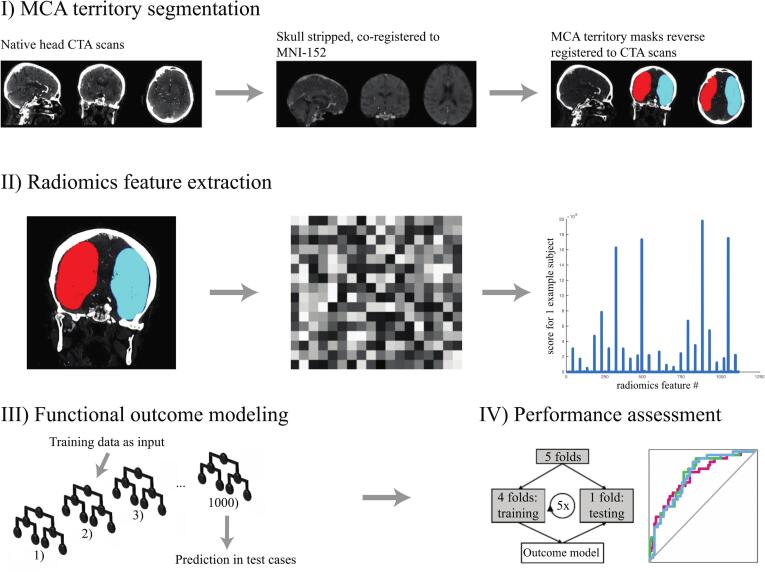

2.2. Image pre-processing and feature extraction

Using the FSL software FLIRT tool (https://www.fmrib.ox.ac.uk/), baseline CTA images were skull-stripped and co-registered to the Montreal Neurological Institute-152 brain space. Preset middle cerebral artery (MCA) territory masks, from the brain stroke atlas (Wang et al., 2019), were then reverse registered to native CTAs (Fig. 1). All CTA images and MCA masks were resampled to an isotropic 1 × 1 × 1 mm voxel spacing using trilinear interpolation to ensure rotational invariance of texture features (Haider et al., 2020b, Haider et al., 2020c, Haider et al., 2020d). To compensate for differences in intravenous bolus timing among different CTA scans, only voxels within a 1–500 Hounsfield unit (HU) range were included in analysis, and all images were normalized by centering voxel values at the image mean and scaling by the image standard deviation. After applying high- and low-pass filters in each spatial direction (“coif-1” wavelet transform (Pyradiomics-community, 2018)) and the “edge-enhancement” Laplacian of Gaussian (LoG) filter (with “sigma” settings of 2, 4, and 6 mm (Pyradiomics-community, 2018)), one set of 1116 “first-order” and “texture-matrix” radiomics features was extracted from the single VOI combining right and left MCA territories (Pyradiomics-community, 2018). These steps of preprocessing, derivative image generation, and feature extraction were executed using a custom Pyradiomics version 2.1.2 pipeline (Pyradiomics-community, 2018). The first-order and texture-based features are listed in Supplementary Table 1.
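The intensity handling described above (HU windowing followed by mean/standard-deviation normalization) can be illustrated with a minimal sketch. This is not the authors' Pyradiomics pipeline: the function name `window_and_normalize` is hypothetical, the voxels are passed as a flat list for simplicity, and computing the mean and standard deviation over the retained voxels only (rather than over the whole image) is an assumption.

```python
from statistics import fmean, pstdev


def window_and_normalize(hu_values, lo=1.0, hi=500.0):
    """Keep voxels with lo <= HU <= hi, then z-score the retained voxels.

    Assumption: the mean and standard deviation are taken over the
    retained voxels; the paper only states that they come from the image.
    """
    kept = [v for v in hu_values if lo <= v <= hi]
    if len(kept) < 2:
        raise ValueError("too few voxels inside the HU window")
    mean = fmean(kept)
    sd = pstdev(kept)
    if sd == 0:
        raise ValueError("constant intensities cannot be z-scored")
    return [(v - mean) / sd for v in kept]


# Toy example: air (-1000 HU), 0 HU, and dense bone (900 HU) are discarded.
voxels = [-1000.0, 0.0, 35.0, 42.0, 120.0, 480.0, 900.0]
z_scores = window_and_normalize(voxels)
```

After this step the retained voxels have zero mean and unit standard deviation, which makes intensity-based features less sensitive to differences in bolus timing between scans.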

Fig. 1.

Processing pipeline from admission CTAs to machine-learning prediction of functional outcome.

Table 1.

Model input variables and abbreviations for machine-learning classifiers and feature selection methods.

| A) Clinical Variables | B) Treatment Variable |
|---|---|
| Age | Thrombectomy reperfusion success: modified Thrombolysis in Cerebral Infarction (mTICI) score |
| Sex | |
| Admission NIH Stroke Scale (NIHSS) score | Intravenous thrombolytic therapy |
| C) Machine-Learning Classifiers | |
| Random forest | RF |
| XGBoost | XGB |
| Logistic regression with elastic net regularization | ElNet |
| Naive Bayes classifier | NBayes |
| Support vector machine with radial kernel | SVM_rad |
| Support vector machine with sigmoid kernel | SVM_sig |
| D) Feature Selection Methods | |
| Minimum redundancy maximum relevance filter | MRMR |
| Pearson correlation-based redundancy reduction combined with a mutual information maximization filter | pMIM |
| Logistic regression with RIDGE regularization adapted for feature selection | RIDGE |
| Hierarchical clustering | HClust |
| Principal component analysis-based feature selection | PCA |
| No feature selection implemented | noFS |

Three main prognostic clinical variables at the time of admission (A) were included in the Combined and Clinical + Treatment models. The treatment variables of post-thrombectomy reperfusion success (mTICI score) and intravenous thrombolytic treatment (B) were used in the Radiomics + Treatment, Clinical + Treatment, and Combined models. Six machine-learning classifiers (C) and 6 feature selection methods (D) were used in 36 combinations for the Radiomics, Radiomics + Treatment, and Combined models, while feature selection was omitted in Clinical + Treatment models. Machine-learning and feature selection abbreviations were previously described in detail (Haider et al., 2020b) and are summarized in the supplementary methods.

2.3. Data allocation

To develop predictive models for favorable outcome (mRS ≤ 2) at discharge and long-term follow-up, we randomly allocated separate datasets for training/cross-validation, independent testing, and external testing. The training cohort was used for hyperparameter optimization and selection of optimal models through a repeated 5-fold cross-validation scheme. Independent internal and external cohorts were used for testing the optimized models. For both discharge and long-term outcome prognostic models, four different input sets were used (Table 1): (1) “Radiomics” (MCA territory radiomics features only), (2) “Radiomics + Treatment” (radiomics, reperfusion mTICI score, and intravenous thrombolysis), (3) “Clinical + Treatment” (baseline clinical variables and treatment), and (4) “Combined” (radiomics, treatment, and baseline clinical variables). In our machine-learning pipeline, reperfusion mTICI score was a 0-to-4 ordinal variable, intravenous thrombolysis was a binary variable, and clinical variables included sex (binary), age (numeric), and admission NIHSS (numeric) (Table 1).
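As an illustration of how the four input sets relate to one another, the sketch below assembles them for a single patient. All names (`MTICI_TO_ORDINAL`, `build_input_sets`, the dictionary keys) are hypothetical, and the specific mapping of mTICI grades to the integers 0–4 is an assumption; the text only states that mTICI was encoded as a 0-to-4 ordinal variable.

```python
# Assumed grade-to-integer mapping for the 0-to-4 ordinal mTICI variable.
MTICI_TO_ORDINAL = {"0": 0, "1": 1, "2a": 2, "2b": 3, "3": 4}


def favorable_outcome(mrs):
    """Dichotomize the modified Rankin Scale: 1 if mRS <= 2, else 0."""
    return int(mrs <= 2)


def build_input_sets(radiomics, clinical, treatment):
    """Return the four feature vectors compared in the study for one patient."""
    treat = [
        MTICI_TO_ORDINAL[treatment["mtici"]],   # ordinal reperfusion grade
        int(treatment["iv_thrombolysis"]),      # binary
    ]
    clin = [
        int(clinical["male"]),                  # binary sex
        float(clinical["age"]),                 # numeric
        float(clinical["nihss"]),               # numeric admission NIHSS
    ]
    rad = list(radiomics)
    return {
        "Radiomics": rad,
        "Radiomics + Treatment": rad + treat,
        "Clinical + Treatment": clin + treat,
        "Combined": rad + treat + clin,
    }


# Toy patient with three radiomics features instead of 1116.
input_sets = build_input_sets(
    radiomics=[0.1, 0.2, 0.3],
    clinical={"male": True, "age": 71, "nihss": 15},
    treatment={"mtici": "2b", "iv_thrombolysis": False},
)
```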

2.4. Cross-validation and Bayesian hyperparameter optimization

Using a framework devised by Haider et al. (Haider et al., 2020b, Haider et al., 2020c, Haider et al., 2020d), we applied 20 rounds of 5-fold cross-validation for hyperparameter optimization and internal performance validation of candidate models, each of which combined one of 6 feature selection strategies and one of 6 machine-learning classifiers (36 pairs, Table 1). A brief description of feature selection methods and machine-learning models is provided in the supplementary methods and detailed previously (Haider et al., 2020b, Haider et al., 2020c, Haider et al., 2020d). Using the “rBayesianOptimization” package in R (Yan, 2016), we applied Bayesian Optimization for iterative optimization of model performance in the cross-validation framework. The machine-learning classifiers’ hyperparameters, their ranges, and tuning repetition counts are provided in Supplementary Table 2. We applied 20 rounds of 5-fold cross-validation with optimized hyperparameters to measure the cross-validation performance of each candidate model. In each round of cross-validation, feature selection and training of machine-learning classifiers were performed on the training folds and assessed in the held-out validation fold to avoid data leakage from training to validation samples and reduce overfitting. This approach enabled accurate estimation of final model performance based on averaged receiver operating characteristic (ROC) area under the curve (AUC) across all 100 validation folds (20 repeats × 5 folds).
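The key point of this scheme, that feature selection is refit inside every training split so that validation samples never influence it, can be sketched as follows. This is an illustrative outline rather than the authors' R framework: the function names are hypothetical, `select`, `fit`, and `score` are user-supplied placeholders for a feature selection method, a classifier, and an AUC computation, stratifying folds by outcome is an assumption, and Bayesian hyperparameter optimization is omitted.

```python
import random


def repeated_stratified_folds(labels, n_splits=5, n_repeats=20, seed=0):
    """Yield (training, validation) index lists for repeated k-fold CV.

    Assumption: folds are stratified by outcome label.
    """
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    for _ in range(n_repeats):
        folds = [[] for _ in range(n_splits)]
        for indices in by_class.values():
            shuffled = indices[:]
            rng.shuffle(shuffled)
            # Deal each class round-robin so every fold keeps the class ratio.
            for position, index in enumerate(shuffled):
                folds[position % n_splits].append(index)
        for k in range(n_splits):
            validation = sorted(folds[k])
            training = sorted(
                i for j, fold in enumerate(folds) if j != k for i in fold
            )
            yield training, validation


def cross_validate(X, y, select, fit, score, n_splits=5, n_repeats=20, seed=0):
    """Average a validation score over all splits without data leakage."""
    scores = []
    for training, validation in repeated_stratified_folds(
        y, n_splits, n_repeats, seed
    ):
        X_train = [X[i] for i in training]
        y_train = [y[i] for i in training]
        # Feature selection sees the training folds only.
        columns = select(X_train, y_train)
        model = fit([[row[c] for c in columns] for row in X_train], y_train)
        X_val = [[X[i][c] for c in columns] for i in validation]
        y_val = [y[i] for i in validation]
        scores.append(score(model, X_val, y_val))
    return sum(scores) / len(scores), scores
```

With the defaults, each candidate model is scored on 20 × 5 = 100 validation folds, and the mean of those scores is the cross-validation performance used for model selection.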

Table 2.

Demographic characteristics of patients in training and testing cohorts for prediction of (A) discharge and (B) long-term outcome.

| A. Discharge outcome prediction | Training/validation cohort (n = 494) | Independent cohort (n = 100) | P value | |
|---|---|---|---|---|
| Age (years) | 70.4 ± 15.4 | 69.2 ± 14.0 | 0.471 | |
| Male sex | 269 (54%) | 52 (52%) | 0.653 | |
| Admission NIHSS (median, IQR) | 15 (10–19) | 13 (7–19.25) | 0.126 | |
| Onset-to-catheterization time (hours) | 7.2 ± 5.2 | 6.8 ± 4.8 | 0.501 | |
| Onset-to-CTA scan (hours) | 5.3 ± 5.4 | 5.6 ± 5.3 | 0.603 | |
| Occlusion side (left) | 256 (52%) | 59 (59%) | 0.518 | |
| ICA occlusion | 120 (24%) | 19 (19%) | 0.254 | |
| MCA M1 occlusion | 314 (64%) | 48 (48%) | 0.004 | |
| MCA M2 occlusion | 152 (31%) | 41 (41%) | 0.046 | |
| Received intravenous rt-PA | 187 (38%) | 38 (38%) | 0.978 | |
| Successful reperfusion* | 368 (74%) | 87 (87%) | 0.007 | |
| Discharge mRS score (median, IQR) | 4 (3–5) | 4 (1–4) | 0.101 | |
| Favorable outcome at discharge† | 123 (25%) | 32 (32%) | 0.140 | |
| B. Long-term outcome prediction | Training/validation cohort (n = 373) | Independent cohort (n = 72) | External cohort (n = 232) | P value |
| Age (years) | 71.5 ± 15.3 | 68.7 ± 14.0 | 69.8 ± 14.8 | 0.207 |
| Male sex | 200 (54%) | 41 (57%) | 103 (44%) | 0.048 |
| Admission NIHSS (median, IQR) | 14 (10–19) | 13 (6.75–19) | 18 (12–23.25) | <0.001 |
| Onset-to-catheterization time (hours) | 7.4 ± 5.3 | 7.1 ± 4.9 | 6.8 ± 5.2 | 0.388 |
| Onset-to-CTA scan (hours) | 5.4 ± 5.4 | 5.2 ± 5.0 | 5.7 ± 5.5 | 0.718 |
| Occlusion side (left) | 193 (52%) | 43 (60%) | 119 (51%) | 0.421 |
| ICA occlusion | 93 (25%) | 13 (18%) | 54 (23%) | 0.447 |
| MCA M1 occlusion | 233 (62%) | 37 (51%) | 144 (62%) | 0.198 |
| MCA M2 occlusion | 115 (31%) | 29 (40%) | 32 (14%) | <0.001 |
| Received intravenous rt-PA | 137 (37%) | 24 (33%) | 93 (40%) | 0.525 |
| Successful reperfusion* | 283 (76%) | 60 (83%) | 215 (93%) | <0.001 |
| 3-month mRS score (median, IQR) | 4 (2–6) | 3 (1–6) | 3 (1–5) | 0.063 |
| Favorable outcome at 3 months† | 123 (33%) | 28 (39%) | 82 (35%) | 0.586 |

(A) Demographic characteristics differed significantly between the training and internal independent cohorts in proportion of MCA M1 segment occlusion, proportion of MCA M2 segment occlusion, and rate of successful reperfusion. (B) Demographic characteristics differed significantly between the training, independent, and external cohorts in admission NIHSS, gender ratio, rate of MCA M2 segment occlusion, and successful reperfusion.

ICA = internal carotid artery; MCA = middle cerebral artery; mRS = modified Rankin Scale; NIHSS = National Institutes of Health Stroke Scale; rt-PA = recombinant tissue plasminogen activator.

*Successful reperfusion was defined by achieving modified Thrombolysis in Cerebral Infarction (mTICI) of 2b or 3.

†Favorable outcome was defined by an mRS score ≤ 2.

2.5. Final model training and validation

For each input set, the selected model (feature selection and machine-learning algorithm combination) with the highest averaged AUC in cross-validation was trained on the whole training dataset applying optimized machine-learning hyperparameters. These final models were then tested on independent internal (Yale) and external (Geisinger Medical Center) cohorts. DeLong’s test was used to compare paired AUCs and to calculate p-values and AUC 95% confidence intervals (CI) (DeLong et al., 1988). The R pROC package was used for AUC metric computations (Robin et al., 2011).
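To make the paired AUC comparison concrete, the following is a minimal sketch of the AUC and of DeLong's test for two models scored on the same subjects. It is an independent illustration written from the published method, not the pROC implementation used in the study; the function names are hypothetical, and labels are assumed to be coded 1 for favorable and 0 for poor outcome.

```python
import math
from statistics import fmean, variance


def _psi(pos_score, neg_score):
    """Pairwise kernel: 1 if the positive scores higher, 0.5 on ties, else 0."""
    if pos_score > neg_score:
        return 1.0
    return 0.5 if pos_score == neg_score else 0.0


def _placements(scores, labels):
    """Per-subject structural components (V10 for positives, V01 for negatives)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    v10 = [fmean([_psi(p, q) for q in neg]) for p in pos]
    v01 = [fmean([_psi(p, q) for p in pos]) for q in neg]
    return v10, v01


def auc(scores, labels):
    """Area under the ROC curve as the mean of the positive-class placements."""
    v10, _ = _placements(scores, labels)
    return fmean(v10)


def delong_paired(scores_a, scores_b, labels):
    """Return (AUC_a - AUC_b, two-sided p-value) for paired ROC curves."""
    a10, a01 = _placements(scores_a, labels)
    b10, b01 = _placements(scores_b, labels)
    diff = fmean(a10) - fmean(b10)
    # Var(AUC_a - AUC_b) from the variances of the placement differences.
    d10 = [x - y for x, y in zip(a10, b10)]
    d01 = [x - y for x, y in zip(a01, b01)]
    var = variance(d10) / len(d10) + variance(d01) / len(d01)
    if var == 0:
        return diff, (1.0 if diff == 0 else 0.0)
    z = diff / math.sqrt(var)
    return diff, math.erfc(abs(z) / math.sqrt(2))


# Toy example with 3 favorable and 3 poor outcomes.
labels = [1, 1, 1, 0, 0, 0]
model_a = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2]
model_b = [0.6, 0.3, 0.7, 0.5, 0.4, 0.2]
difference, p_value = delong_paired(model_a, model_b, labels)
```

Because both models are evaluated on the same patients, the test uses the per-subject differences in placement values, which accounts for the correlation between the two ROC curves.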

2.6. Statistical analysis

For univariate comparison between two groups, we used Student’s t-test for continuous variables (age, onset-to-catheterization, onset-to-CTA), the Mann-Whitney U test for ordinal variables (NIHSS and mRS), and the Fisher exact test for categorical variables. For univariate comparison between three groups, we used ANOVA for continuous variables, the Kruskal-Wallis test for ordinal variables, and the Chi-square test for categorical variables.

3. Results

3.1. Patient demographics

Our training dataset consisted of 496 Yale LVO stroke patients, 494 of whom had discharge outcome scores, and 373 of whom had long-term outcome scores available. See Supplementary Fig. 1 for a flowchart of patients included in the final analysis. Our independent internal testing cohort consisted of 101 Yale LVO stroke patients, 100 of whom had discharge outcome scores and 72 of whom had long-term outcome scores available. Our external testing cohort from Geisinger Medical Center consisted of 232 LVO stroke patients with long-term outcome information available. Table 2 summarizes the demographic characteristics of patients included for discharge and long-term outcome prediction.

3.2. Cross-validation results

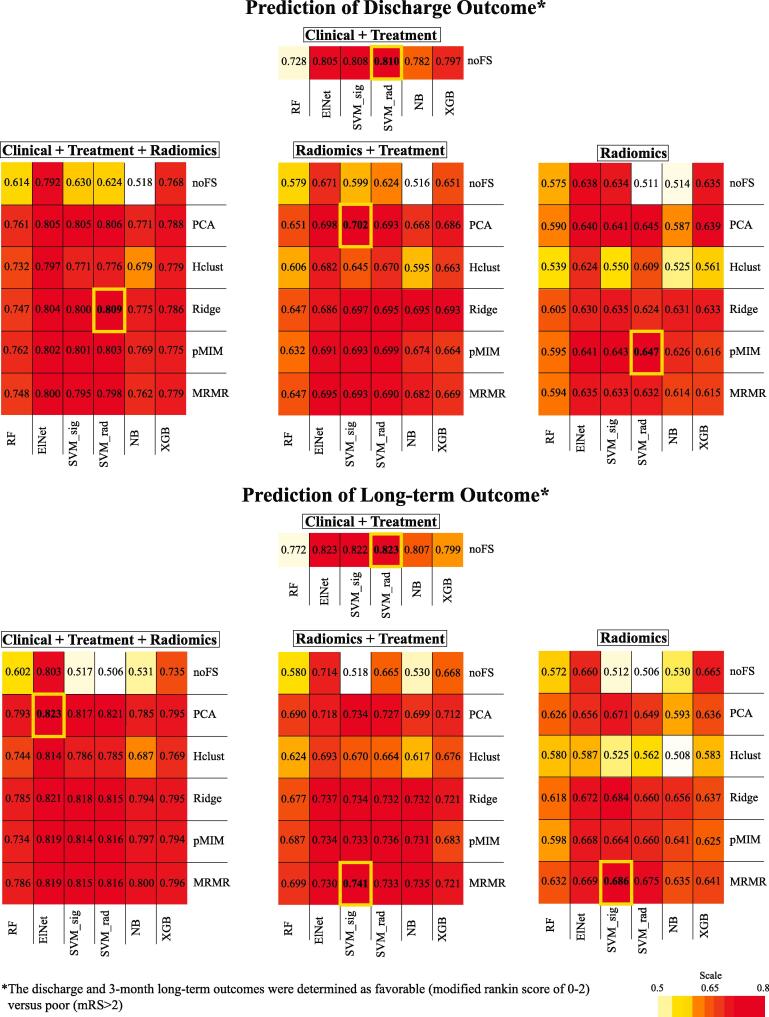

Heatmap summaries of the cross-validation performance for all candidate models are depicted in Fig. 2. The best-performing Radiomics-only models had an averaged AUC of 0.69 ± 0.06 for long-term outcome prediction and 0.65 ± 0.05 for discharge outcome prediction. The best-performing Radiomics + Treatment models had an averaged AUC of 0.74 ± 0.06 for long-term outcome prediction and 0.70 ± 0.05 for discharge outcome prediction. The best-performing Clinical + Treatment models had an averaged AUC of 0.81 ± 0.05 for long-term outcome prediction and 0.82 ± 0.05 for discharge outcome prediction. The best-performing Combined input models had an averaged AUC of 0.82 ± 0.05 for long-term outcome prediction and 0.81 ± 0.04 for discharge outcome prediction. Support vector machine (SVM) with either sigmoid or radial kernel was the machine-learning classifier utilized by the best-performing models of all input types except the Combined model for prediction of long-term outcome, which utilized logistic regression with elastic net regularization (ElNet). The feature selection methods most utilized by best-performing models were principal component analysis (PCA, used in 3 of the 6 best-performing models) and minimum redundancy maximum relevance filter (MRMR, used in 2 of 6 best-performing models). Notably, cross-validation results for models trained on radiomics features extracted from the whole brain had similar averaged AUC to those using MCA territory radiomics (Supplementary Fig. 2).

Fig. 2.

Heatmap summary of cross-validation performance for all candidate models. The feature selection/machine-learning combinations with the highest averaged area under the curve (AUC) across validation folds (from 20 repeats × 5-fold cross-validation) are highlighted with bold yellow cell border lines. These best-performing models were selected for internal independent and external cohort testing. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

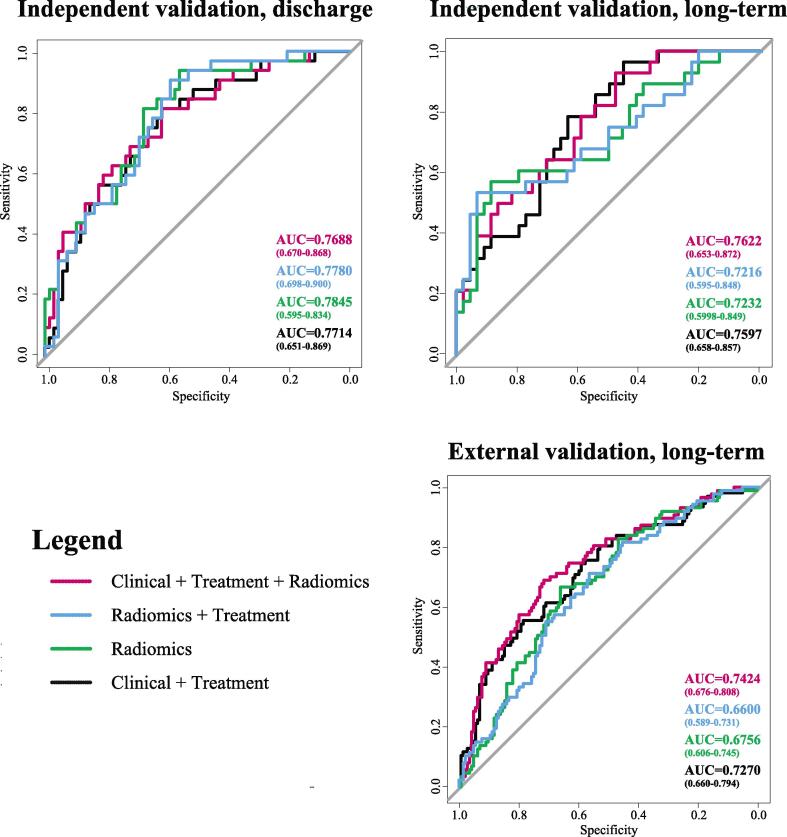

3.3. Independent and external testing

The internal independent and external testing performances of the best-performing models selected from cross-validation results are summarized in Fig. 3 and Table 3. In the internal (Yale) independent cohort, there was no significant difference in the prognostic performance of the Radiomics-only model (AUC = 0.78, 95% CI = 0.60–0.83, p = 0.55; AUC = 0.72, 95% CI = 0.60–0.85, p = 0.39), the Radiomics + Treatment model (AUC = 0.78, 95% CI = 0.70–0.90, p = 0.78; AUC = 0.72, 95% CI = 0.60–0.85, p = 0.39), or the Clinical + Treatment model (AUC = 0.77, 95% CI = 0.65–0.87, p = 0.87; AUC = 0.76, 95% CI = 0.66–0.86, p = 0.90) compared to the Combined model (AUC = 0.77, 95% CI = 0.67–0.87; AUC = 0.76, 95% CI = 0.65–0.87) in predicting functional outcome at discharge and long-term follow-up, respectively. However, in the external (Geisinger Medical Center) cohort, the Combined model (AUC = 0.74, 95% CI = 0.68–0.81) outperformed both the Radiomics model (AUC = 0.68, 95% CI = 0.61–0.75, p = 0.005) and the Radiomics + Treatment model (AUC = 0.66, 95% CI = 0.59–0.73, p < 0.001). There was no significant difference in the prognostic performance of the Clinical + Treatment model (AUC = 0.73, 95% CI = 0.66–0.79, p = 0.81) compared to the Combined model in the external cohort.

Fig. 3.

Area under the curve (AUC) of receiver operating characteristics (ROC) analysis for prediction of discharge outcome in the internal independent cohort, and prediction of long-term outcome in the independent and external cohorts (Table 3). ROC curves for Radiomics (green), Radiomics + Treatment (blue), Clinical + Treatment (black), and Combined (red) models are shown in each quadrant. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

Table 3.

Independent and external testing of prognostic models.

| A. Independent testing of prognostic models for outcome at discharge | | | | | | |
|---|---|---|---|---|---|---|
| Model type | Selected model | Averaged AUC cross-validation | Independent testing AUC (95% CI) | P value* (compared vs combined input) | | |
| Combined | RIDGE/SVM_rad | 0.8090 ± 0.043 | 0.7688 (0.670–0.868) | – | | |
| Radiomics + Treatment | PCA/SVM_sig | 0.7021 ± 0.052 | 0.7780 (0.698–0.900) | 0.7827 | | |
| Radiomics | PCA/SVM_rad | 0.6472 ± 0.050 | 0.7845 (0.595–0.834) | 0.5479 | | |
| Clinical + Treatment | SVM_rad | 0.8104 ± 0.049 | 0.7714 (0.651–0.869) | 0.8738 | | |
| B. Independent and external testing of prognostic models for outcome at 3 months | | | | | | |
| Model type | Selected model | Averaged AUC cross-validation | Independent testing AUC (95% CI) | Independent testing P value* | External testing AUC (95% CI) | External testing P value* |
| Combined | PCA/ElNet | 0.8232 | 0.7622 (0.653–0.872) | – | 0.7424 (0.676–0.808) | – |
| Radiomics + Treatment | PCA/SVM_sig | 0.7414 | 0.7216 (0.595–0.848) | 0.3862 | 0.6600 (0.589–0.731) | <0.001† |
| Radiomics | pMIM/SVM_rad | 0.6856 | 0.7232 (0.598–0.849) | 0.3941 | 0.6756 (0.606–0.745) | 0.0049† |
| Clinical + Treatment | SVM_rad | 0.8234 | 0.7597 (0.658–0.857) | 0.9006 | 0.7270 (0.660–0.794) | 0.8051 |

The selected models represent the best-performing models (Fig. 2 heatmap) from each set of input variables as identified by highest averaged cross-validation AUC. These models were applied to the internal independent and external datasets to predict (A) discharge and (B) long-term functional outcome. No statistically significant difference in independent testing AUC values was found when comparing the Radiomics, Radiomics + Treatment, or Clinical + Treatment models to the Combined model in prediction of either (A) discharge or (B) long-term functional outcome. However, the Combined model outperformed Radiomics and Radiomics + Treatment models in prediction of long-term functional outcome in the external cohort (B).

*P value represents difference from Combined input model. P values were calculated using DeLong’s test.

†Statistically significant at threshold p < 0.05.

3.4. Model bias analysis

To discern factors influencing incorrect radiomics model predictions in external testing, we compared the group of subjects whose outcomes were falsely predicted by the Radiomics, Radiomics + Treatment, and Combined model types (false negative (FN) and false positive (FP) predictions) to the group of subjects whose outcomes were correctly predicted by all 3 radiomics-containing model types (true positive (TP) and true negative (TN) predictions). Our models’ binary predictions for favorable versus poor outcomes were derived by rounding the probabilistic output (0.00–1.00) of machine-learning classifiers for each subject. The FP + FN subgroup differed significantly from the TP + TN subgroup with regard to proportion of good 3-month outcomes (FP + FN: median mRS = 1 (0–2), 80% mRS < 3; TP + TN: median mRS = 4 (3–6), 10% mRS < 3; p < 0.0001), admission NIHSS (FP + FN: median 17 (12–22); TP + TN: median 21 (16–26); p = 0.0025), and proportion of male subjects (FP + FN: 30%; TP + TN: 49%; p = 0.035).
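The grouping used in this analysis can be sketched in a few lines. The sketch rests on two assumptions that the text does not settle: a probability of exactly 0.5 is treated as a favorable prediction, and a subject is placed in the FP + FN group if at least one of the three models misclassifies them (the complement of being correctly predicted by all three). The function names are hypothetical.

```python
def confusion_label(prob_favorable, favorable):
    """Classify one prediction as TP, FP, FN, or TN using a 0.5 cut-off.

    Assumption: a probability of exactly 0.5 counts as a favorable prediction.
    """
    predicted = prob_favorable >= 0.5
    if predicted and favorable:
        return "TP"
    if predicted and not favorable:
        return "FP"
    if not predicted and favorable:
        return "FN"
    return "TN"


def split_by_correctness(probs_by_model, outcomes):
    """Return (correct, incorrect) subject indices across all models.

    Assumption: "incorrect" means misclassified by at least one model.
    """
    correct, incorrect = [], []
    for i, favorable in enumerate(outcomes):
        labels = [
            confusion_label(probs[i], favorable)
            for probs in probs_by_model.values()
        ]
        if all(label in ("TP", "TN") for label in labels):
            correct.append(i)
        else:
            incorrect.append(i)
    return correct, incorrect


# Toy example: three subjects scored by the three radiomics-containing models.
probabilities = {
    "Radiomics": [0.9, 0.2, 0.4],
    "Radiomics + Treatment": [0.8, 0.1, 0.6],
    "Combined": [0.7, 0.3, 0.7],
}
true_favorable = [True, False, True]
correct_ids, incorrect_ids = split_by_correctness(probabilities, true_favorable)
```

The two index lists can then be compared on outcome, NIHSS, and sex with the univariate tests described in the statistical analysis section.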

4. Discussion

Using radiomics features extracted from the admission CTAs of 829 acute LVO stroke patients, we devised, optimized, and validated machine-learning classifiers to predict functional outcomes at both discharge and 3-month follow-up. Four different input types were analyzed: Radiomics only, Radiomics + Treatment (radiomics, reperfusion mTICI score, and intravenous thrombolysis), Clinical + Treatment (clinical variables and treatment), and Combined input (radiomics, treatment, and clinical variables), all of which showed strong performance in cross-validation analyses (Fig. 2). In independent testing using a separate 101-subject dataset from the same institution, Radiomics, Radiomics + Treatment, and Clinical + Treatment models performed with similar accuracy to the Combined model in prediction of both discharge and long-term outcome (Tables 3a & 3b, Fig. 3). In external testing using a 232-subject dataset from another institution, the Combined model performed with similar accuracy to the Clinical + Treatment model, but significantly better than both the Radiomics and Radiomics + Treatment models (Table 3b, Fig. 3). In all, the general success of radiomics-based model types in predicting LVO stroke outcome indicates that there may be a role for radiomics models in guiding cost- and time-sensitive treatment decisions by automating risk stratification of acute LVO stroke patients based on admission CTA.

As clinical variables serve as the current standard for acute stroke triage, the fact that similar prognostic performance was observed in the radiomics and clinical models indicates that a radiomics-only model can offer useful prognostic information at the time of admission when there are ‘real-life scenario’ limitations in obtaining thorough clinical information. Such scenarios include: the inability to perform a thorough neurological exam, patient sedation, language barrier, fluctuating neurological exam, and prior motor deficits confounding baseline exam. Furthermore, image-guided risk stratification tools can potentially help expand treatment eligibility of acute LVO stroke patients to those with unknown time of onset (wake-up stroke) or with mild/indeterminate stroke severity.

As indicated by the strong performance of Radiomics and Radiomics + Treatment models in cross-validation and independent cohort analyses, radiomics-based model success was observed whether or not input variables included reperfusion success or even clinical data. However, the addition of clinical input variables allowed the Combined model to demonstrate more stable generalizability upon external testing. Of interest, the statistically inferior performance of Radiomics and Radiomics + Treatment models was driven by a tendency of these model types to overestimate the risk of poor outcome: incorrectly predicted cases had a significantly higher proportion of patients with 3-month favorable outcome compared to correctly predicted cases in external testing (80% vs 10%, p < 0.0001; median mRS 1(0–2) vs 4(3–6), p < 0.0001). Such a tendency towards overestimation of risk would in reality promote additional support for patients expected to do poorly, without imposing potential harm. The prominence of successful mTICI reperfusion scores may also contribute to the minimal improvement observed in the Radiomics + Treatment model as compared to Radiomics alone: mTICI scores of 2b or 3 were observed in 74% of subjects in the training set, 87% in the internal independent cohort, and 93% in the external cohort. These proportions of successful reperfusion are in line with other recently published studies such as the ASTER trial, in which 86% of subjects who underwent ET had mTICI 2b or greater (Dargazanli et al., 2018). However, it has been documented that operators tend to overestimate the degree of reperfusion compared to core lab scoring (Zhang et al., 2018). In general, a ceiling effect of mTICI scoring likely reduces this variable’s capability of enhancing model performance.

A fully automated prognostication tool based on admission CTA radiomics can facilitate objective assessment of stroke patients and effective treatment triage in the context of expanding telemedicine practice. While the use of telemedicine to guide stroke treatment is well established, the COVID-19 pandemic motivated rapid expansion of care via tele-stroke to provide consistent and timely care while minimizing patient and provider exposure and preserving personal protective equipment (Guzik et al., 2021). Prior studies have shown that tele-stroke programs improve the timeliness of thrombolysis treatments (Lee et al., 2017). In addition, tele-stroke services may reduce the racial, ethnic, and sex disparities in ischemic stroke care (Reddy et al., 2021). Furthermore, current human prediction accuracy of LVO patient outcome requires improvement, as evidenced by the current rate of poor prognosis after successful ET (futile reperfusion, observed in 50% of LVO patients undergoing ET) (Meinel et al., 2020) and the high percentage (92–93%) of LVO patients who do not currently meet criteria for ET (Desai et al., 2019). Models such as ours with AUC ranges up to 0.74 in external testing exceed these statistics and could improve patient outcomes and resource utilization in both tele-stroke and traditional settings.

More specifically, identification of patients with futile reperfusion can guide treatment triage by optimizing patient selection for inter-hospital transfer to comprehensive stroke centers (Stefanou et al., 2020). Patients with high likelihood of futile reperfusion may benefit from additional treatment intervention for improvement of long-term outcome and may be candidates for future clinical trials evaluating treatment options beyond current reperfusion interventions.

Our methodology may also guide future studies applying radiomics algorithms to stroke imaging data. Of interest, all but one of the best-performing models identified in cross-validation utilized the support vector machine classifier with either a sigmoid (SVM_sig) or radial (SVM_rad) kernel. While the most prominent classifiers in many recent machine-learning studies have been ensemble methods such as XGBoost and random forest (Belgiu and Dragut, 2016; Chen and Guestrin, 2016), SVM classifiers have also shown utility in multiple neuro-radiomics studies (Ortiz-Ramon et al., 2019; Tian et al., 2018). Future work at the intersection of machine learning, radiomics, and stroke clinical research may utilize deep learning, which allows for implicit learning of relevant features and has been shown to significantly enhance machine-learning performance (LeCun et al., 2015). However, a deep learning approach requires substantially larger training datasets than conventional machine-learning techniques, and such datasets will be needed before deep learning can be applied to the prediction of LVO stroke functional outcome. The machine-learning methods of the present work retain the advantage of being more transparent than deep learning methods, allowing for extraction and analysis of important features.
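For readers less familiar with the two SVM kernels named above, the following minimal sketch gives their standard textbook definitions. The parameter names `gamma` and `coef0` and the example vectors are assumptions chosen for illustration; the exact kernel parameterization and hyperparameter values used in this study are not restated here.

```python
import math

# Standard kernel definitions, shown for illustration only.
# gamma and coef0 are assumed parameter names, not values from the study.

def rbf_kernel(x, y, gamma):
    """Radial (Gaussian) kernel: exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def sigmoid_kernel(x, y, gamma, coef0=0.0):
    """Sigmoid kernel: tanh(gamma * <x, y> + coef0)."""
    dot = sum(a * b for a, b in zip(x, y))
    return math.tanh(gamma * dot + coef0)


# Two toy feature vectors (hypothetical, not radiomics features).
u = [1.0, 0.0]
v = [0.0, 1.0]

k_rbf_same = rbf_kernel(u, u, gamma=0.5)      # zero distance
k_rbf_diff = rbf_kernel(u, v, gamma=0.5)      # squared distance of 2
k_sig_orth = sigmoid_kernel(u, v, gamma=0.5)  # zero dot product
print(k_rbf_same, k_rbf_diff, k_sig_orth)
```

The radial kernel depends only on the distance between two feature vectors, whereas the sigmoid kernel depends on their dot product, so the two induce different notions of similarity between patients in feature space.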

Our study is limited by the nonrandomized nature of patient selection and the lack of an untreated control group. However, if the outcome after unsuccessful thrombectomy is taken to represent the natural course of untreated LVO, this subgroup may substitute for control subjects. Of note, practice-changing trials (Albers et al., 2018; Nogueira et al., 2018) were published during the period of patient presentation in our cohorts (2014–2020), which may have affected treatment variables in earlier versus more recently presenting patients. Our results also demonstrate inherent limitations in the generalizability of our Radiomics and Radiomics + Treatment models to external data, which could be due in part to differences in CTA acquisition methods. Additionally, we excluded patients with imaging artifacts such as those from metal instrumentation, head movement, and prior surgeries. While inclusion of these images could have potentially improved the robustness of the final models, introduction of such noise into the training dataset could have hindered model development. Lastly, we trained our models on radiomics features extracted from the bilateral MCA territories rather than the whole brain or the whole anterior circulation territory, which also includes the anterior cerebral artery territory. However, models trained on whole-brain radiomics are described in Supplementary Fig. 2 and demonstrate similar cross-validation performance to the models described in this manuscript.

5. Conclusion

In summary, our work demonstrates the feasibility of using automatically extracted radiomics features to create prognostic models for LVO patient outcomes from baseline CTA scans. With comparable performance to models based on patients’ neurological exams and clinical variables, models solely utilizing radiomics features can help with prognostication when acquisition of baseline clinical information is limited or confounded. Such models are a promising step towards the increasingly important goals of implementing automated cost- and time-sensitive decision-assistance tools, particularly in tele-stroke and community hospital settings, and increasing the number of patients who are eligible for life-saving ET.

6. Funding and competing interests

Emily Avery declares no competing interests.

Jonas Behland declares no competing interests.

Adrian Mak declares no competing interests.

Stefan Haider declares no competing interests.

Tal Zeevi declares no competing interests.

Dr. Pina Sanelli declares no competing interests.

Dr. Christopher G. Filippi receives consulting honoraria from Syntactx, Inc; is a minority stockholder in Avicenna.ai; and receives research funding from the National Multiple Sclerosis Society.

Dr. Ajay Malhotra declares no competing interests.

Dr. Charles Matouk declares no competing interests.

Dr. Christoph Griessenauer receives research funding from Medtronic and Penumbra and consulting honoraria from Stryker and MicroVention.

Dr. Ramin Zand declares no competing interests.

Dr. Philipp Hendrix declares no competing interests. Part of his salary was provided by Medtronic and used to support this work.

Dr. Vida Abedi declares no competing interests.

Dr. Guido Falcone is supported by the National Institutes of Health (K76AG059992, R03NS112859 and P30AG021342), the American Heart Association (18IDDG34280056), the Yale Pepper Scholar Award and the Neurocritical Care Society Research Fellowship.

Dr. Nils Petersen is supported by AHA 17MCPRP33460188 and National Institutes of Health KL2 TR001862.

Dr. Kevin Sheth is supported by the National Institutes of Health (U24NS107215, U24NS107136, U01NS106513, R01NR018335), the American Heart Association (17CSA33550004), and grants from Novartis, Biogen, Bard, Hyperfine and Astrocyte. He also reports equity interests in Alva Health.

Dr. Lauren Sansing is supported by the National Institutes of Health (R01NS095993, R01NS097728).

Dr. Seyedmehdi Payabvash received grant support from Doris Duke Charitable Foundation (2020097), Radiological Society of North America (A129581), American Society of Neuroradiology, and National Institutes of Health (K23NS118056).

CRediT authorship contribution statement

Emily W. Avery: Conceptualization, Investigation, Data curation, Methodology, Writing – original draft, Writing – review & editing, Visualization. Jonas Behland: Investigation, Data curation. Adrian Mak: Data curation, Investigation, Writing – review & editing. Stefan P. Haider: Methodology, Software, Writing – review & editing. Tal Zeevi: Methodology, Software, Writing – review & editing. Pina C. Sanelli: Supervision, Writing – review & editing. Christopher G. Filippi: Investigation, Data curation, Writing – review & editing. Ajay Malhotra: Conceptualization, Supervision, Writing – review & editing. Charles C. Matouk: Conceptualization, Investigation, Data curation. Christoph J. Griessenauer: Investigation, Data curation, Writing – review & editing. Ramin Zand: Investigation, Data curation, Writing – review & editing. Philipp Hendrix: Investigation, Data curation, Writing – review & editing. Vida Abedi: Investigation, Data curation, Writing – review & editing. Guido J. Falcone: Investigation, Writing – review & editing. Nils Petersen: Investigation, Writing – review & editing. Lauren H. Sansing: Investigation, Writing – review & editing. Kevin N. Sheth: Investigation, Writing – review & editing. Seyedmehdi Payabvash: Conceptualization, Supervision, Data curation, Writing – original draft, Writing – review & editing.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.nicl.2022.103034.

References

- Albers G.W., Marks M.P., Kemp S., Christensen S., Tsai J.P., Ortega-Gutierrez S., McTaggart R.A., Torbey M.T., Kim-Tenser M., Leslie-Mazwi T., Sarraj A., Kasner S.E., Ansari S.A., Yeatts S.D., Hamilton S., Mlynash M., Heit J.J., Zaharchuk G., Kim S., Carrozzella J., Palesch Y.Y., Demchuk A.M., Bammer R., Lavori P.W., Broderick J.P., Lansberg M.G., Investigators D. Thrombectomy for Stroke at 6 to 16 Hours with Selection by Perfusion Imaging. N. Engl. J. Med. 2018;378:708–718. doi: 10.1056/NEJMoa1713973.

- Belgiu M., Dragut L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016;114:24–31.

- Benjamin E.J., Blaha M.J., Chiuve S.E., Cushman M., Das S.R., Deo R., de Ferranti S.D., Floyd J., Fornage M., Gillespie C., Isasi C.R., Jimenez M.C., Jordan L.C., Judd S.E., Lackland D., Lichtman J.H., Lisabeth L., Liu S., Longenecker C.T., Mackey R.H., Matsushita K., Mozaffarian D., Mussolino M.E., Nasir K., Neumar R.W., Palaniappan L., Pandey D.K., Thiagarajan R.R., Reeves M.J., Ritchey M., Rodriguez C.J., Roth G.A., Rosamond W.D., Sasson C., Towfighi A., Tsao C.W., Turner M.B., Virani S.S., Voeks J.H., Willey J.Z., Wilkins J.T., Wu J.H., Alger H.M., Wong S.S., Muntner P., American Heart Association Statistics C., Stroke Statistics S. Heart Disease and Stroke Statistics-2017 Update: A Report From the American Heart Association. Circulation. 2017;135:e146–e603.

- Camargo E.C., Furie K.L., Singhal A.B., Roccatagliata L., Cunnane M.E., Halpern E.F., Harris G.J., Smith W.S., Gonzalez R.G., Koroshetz W.J., Lev M.H. Acute brain infarct: detection and delineation with CT angiographic source images versus nonenhanced CT scans. Radiology. 2007;244:541–548. doi: 10.1148/radiol.2442061028.

- Chen T.Q., Guestrin C. XGBoost: A Scalable Tree Boosting System. KDD '16: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2016:785–794.

- Dargazanli C., Fahed R., Blanc R., Gory B., Labreuche J., Duhamel A., Marnat G., Saleme S., Costalat V., Bracard S., Desal H., Mazighi M., Consoli A., Piotin M., Lapergue B., Investigators A.T. Modified Thrombolysis in Cerebral Infarction 2C/Thrombolysis in Cerebral Infarction 3 Reperfusion Should Be the Aim of Mechanical Thrombectomy: Insights From the ASTER Trial (Contact Aspiration Versus Stent Retriever for Successful Revascularization). Stroke. 2018;49:1189-+. doi: 10.1161/STROKEAHA.118.020700.

- DeLong E.R., DeLong D.M., Clarke-Pearson D.L. Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics. 1988;44:837–845.

- Desai S.M., Starr M., Molyneaux B.J., Rocha M., Jovin T.G., Jadhav A.P. Acute Ischemic Stroke with Vessel Occlusion-Prevalence and Thrombectomy Eligibility at a Comprehensive Stroke Center. J. Stroke Cerebrovasc. Dis. 2019;28 doi: 10.1016/j.jstrokecerebrovasdis.2019.104315.

- Guzik A.K., Martin-Schild S., Tadi P., Chapman S.N., Al Kasab S., Martini S.R., Meyer B.C., Demaerschalk B.M., Wozniak M.A., Southerland A.M. Telestroke Across the Continuum of Care: Lessons from the COVID-19 Pandemic. J. Stroke Cerebrovasc. Dis. 2021;30 doi: 10.1016/j.jstrokecerebrovasdis.2021.105802.

- Haider S.P., Burtness B., Yarbrough W.G., Payabvash S. Applications of radiomics in precision diagnosis, prognostication and treatment planning of head and neck squamous cell carcinomas. Cancers Head Neck. 2020;5:6. doi: 10.1186/s41199-020-00053-7.

- Haider S.P., Mahajan A., Zeevi T., Baumeister P., Reichel C., Sharaf K., Forghani R., Kucukkaya A.S., Kann B.H., Judson B.L., Prasad M.L., Burtness B., Payabvash S. PET/CT radiomics signature of human papilloma virus association in oropharyngeal squamous cell carcinoma. Eur. J. Nucl. Med. Mol. Imaging. 2020 doi: 10.1007/s00259-020-04839-2.

- Haider S.P., Sharaf K., Zeevi T., Baumeister P., Reichel C., Forghani R., Kann B.H., Petukhova A., Judson B.L., Prasad M.L., Liu C., Burtness B., Mahajan A., Payabvash S. Prediction of post-radiotherapy locoregional progression in HPV-associated oropharyngeal squamous cell carcinoma using machine-learning analysis of baseline PET/CT radiomics. Transl. Oncol. 2020;14 doi: 10.1016/j.tranon.2020.100906.

- Haider S.P., Zeevi T., Baumeister P., Reichel C., Sharaf K., Forghani R., Kann B.H., Judson B.L., Prasad M.L., Burtness B., Mahajan A., Payabvash S. Potential Added Value of PET/CT Radiomics for Survival Prognostication beyond AJCC 8th Edition Staging in Oropharyngeal Squamous Cell Carcinoma. Cancers (Basel). 2020;12.

- Haussen D.C., Lima F.O., Bouslama M., Grossberg J.A., Silva G.S., Lev M.H., Furie K., Koroshetz W., Frankel M.R., Nogueira R.G. Thrombectomy versus medical management for large vessel occlusion strokes with minimal symptoms: an analysis from STOPStroke and GESTOR cohorts. J. Neurointerv. Surg. 2018;10:325–329. doi: 10.1136/neurintsurg-2017-013243.

- Lakomkin N., Dhamoon M., Carroll K., Singh I.P., Tuhrim S., Lee J., Fifi J.T., Mocco J. Prevalence of large vessel occlusion in patients presenting with acute ischemic stroke: a 10-year systematic review of the literature. J. Neurointerv. Surg. 2019;11:241–245. doi: 10.1136/neurintsurg-2018-014239.

- LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- Lee V.H., Cutting S., Song S.Y., Cherian L., Diebolt E., Bock J., Conners J.J. Participation in a Tele-Stroke Program Improves Timeliness of Intravenous Thrombolysis Delivery. Telemed. J. E Health. 2017;23:60–62. doi: 10.1089/tmj.2016.0014.

- Malhotra K., Gornbein J., Saver J.L. Ischemic Strokes Due to Large-Vessel Occlusions Contribute Disproportionately to Stroke-Related Dependence and Death: A Review. Front. Neurol. 2017;8.

- Martinez G., Katz J.M., Pandya A., Wang J.J., Boltyenkov A., Malhotra A., Mushlin A.I., Sanelli P.C. Cost-Effectiveness Study of Initial Imaging Selection in Acute Ischemic Stroke Care. J. Am. Coll. Radiol. 2020 doi: 10.1016/j.jacr.2020.12.013.

- Meinel T.R., Kaesmacher J., Mosimann P.J., Seiffge D., Jung S., Mordasini P., Arnold M., Goeldlin M., Hajdu S.D., Olive-Gadea M., Maegerlein C., Costalat V., Pierot L., Schaafsma J.D., Fischer U., Gralla J. Association of initial imaging modality and futile recanalization after thrombectomy. Neurology. 2020;95:e2331–e2342. doi: 10.1212/WNL.0000000000010614.

- Nogueira R.G., Jadhav A.P., Haussen D.C., Bonafe A., Budzik R.F., Bhuva P., Yavagal D.R., Ribo M., Cognard C., Hanel R.A., Sila C.A., Hassan A.E., Millan M., Levy E.I., Mitchell P., Chen M., English J.D., Shah Q.A., Silver F.L., Pereira V.M., Mehta B.P., Baxter B.W., Abraham M.G., Cardona P., Veznedaroglu E., Hellinger F.R., Feng L., Kirmani J.F., Lopes D.K., Jankowitz B.T., Frankel M.R., Costalat V., Vora N.A., Yoo A.J., Malik A.M., Furlan A.J., Rubiera M., Aghaebrahim A., Olivot J.M., Tekle W.G., Shields R., Graves T., Lewis R.J., Smith W.S., Liebeskind D.S., Saver J.L., Jovin T.G., Investigators D.T. Thrombectomy 6 to 24 Hours after Stroke with a Mismatch between Deficit and Infarct. N. Engl. J. Med. 2018;378:11–21. doi: 10.1056/NEJMoa1706442.

- Ortiz-Ramon R., Hernandez M.D.V., Gonzalez-Castro V., Makin S., Armitage P.A., Aribisala B.S., Bastin M.E., Deary I.J., Wardlaw J.M., Moratal D. Identification of the presence of ischaemic stroke lesions by means of texture analysis on brain magnetic resonance images. Comput. Med. Imaging Graph. 2019;74:12–24. doi: 10.1016/j.compmedimag.2019.02.006.

- Powers, Guidelines for the Early Management of Patients With Acute Ischemic Stroke: 2019 Update to the 2018 Guidelines for the Early Management of Acute Ischemic Stroke: A Guideline for Healthcare Professionals From the American Heart Association/American Stroke Association (vol 50, pg e344, 2019) Stroke. 2019;50:E440–E441. doi: 10.1161/STR.0000000000000215.

- Pulli B., Schaefer P.W., Hakimelahi R., Chaudhry Z.A., Lev M.H., Hirsch J.A., Gonzalez R.G., Yoo A.J. Acute ischemic stroke: infarct core estimation on CT angiography source images depends on CT angiography protocol. Radiology. 2012;262:593–604. doi: 10.1148/radiol.11110896.

- Pyradiomics-community, 2018. Pyradiomics Documentation Release 2.1.2.

- Reddy S., Wu T.C., Zhang J., Rahbar M.H., Ankrom C., Zha A., Cossey T.C., Aertker B., Vahidy F., Parsha K., Jones E., Sharrief A., Savitz S.I., Jagolino-Cole A. Lack of Racial, Ethnic, and Sex Disparities in Ischemic Stroke Care Metrics within a Tele-Stroke Network. J. Stroke Cerebrovasc. Dis. 2021;30 doi: 10.1016/j.jstrokecerebrovasdis.2020.105418.

- Regenhardt R.W., Bretzner M., Zanon Zotin M.C., Bonkhoff A.K., Etherton M.R., Hong S., Das A.S., Alotaibi N.M., Vranic J.E., Dmytriw A.A., Stapleton C.J., Patel A.B., Kuchcinski G., Rost N.S., Leslie-Mazwi T.M. Radiomic signature of DWI-FLAIR mismatch in large vessel occlusion stroke. J. Neuroimaging. 2022;32:63–67. doi: 10.1111/jon.12928.

- Robin X., Turck N., Hainard A., Tiberti N., Lisacek F., Sanchez J.C., Müller M. pROC: an open-source package for R and S+ to analyze and compare ROC curves. BMC Bioinf. 2011;12:77. doi: 10.1186/1471-2105-12-77.

- Sharma M., Fox A.J., Symons S., Jairath A., Aviv R.I. CT angiographic source images: flow- or volume-weighted? AJNR Am. J. Neuroradiol. 2011;32:359–364. doi: 10.3174/ajnr.A2282.

- Stefanou M.I., Stadler V., Baku D., Hennersdorf F., Ernemann U., Ziemann U., Poli S., Mengel A. Optimizing Patient Selection for Interhospital Transfer and Endovascular Therapy in Acute Ischemic Stroke: Real-World Data From a Supraregional, Hub-and-Spoke Neurovascular Network in Germany. Front. Neurol. 2020;11 doi: 10.3389/fneur.2020.600917.

- Tian Q., Yan L.F., Zhang X., Zhang X., Hu Y.C., Han Y., Liu Z.C., Nan H.Y., Sun Q., Sun Y.Z., Yang Y., Yu Y., Zhang J., Hu B., Xiao G., Chen P., Tian S., Xu J., Wang W., Cui G.B. Radiomics strategy for glioma grading using texture features from multiparametric MRI. J. Magn. Reson. Imaging. 2018;48:1518–1528. doi: 10.1002/jmri.26010.

- Wang Y., Juliano J.M., Liew S.L., McKinney A.M., Payabvash S. Stroke atlas of the brain: Voxel-wise density-based clustering of infarct lesions topographic distribution. Neuroimage Clin. 2019;24 doi: 10.1016/j.nicl.2019.101981.

- Weber R., Minnerup J., Nordmeyer H., Eyding J., Krogias C., Hadisurya J., Berger K., Investigators R. Thrombectomy in posterior circulation stroke: differences in procedures and outcome compared to anterior circulation stroke in the prospective multicentre REVASK registry. Eur. J. Neurol. 2019;26:299–305. doi: 10.1111/ene.13809.

- Xu J., Murphy S.L., Kochanek K.D., Arias E. Mortality in the United States, 2018. NCHS Data Brief. 2020:1–8.

- Yan, Y., 2016. rBayesianOptimization: Bayesian Optimization of Hyperparameters.

- Zhang G., Treurniet K.M., Jansen I.G.H., Emmer B.J., van den Berg R., Marquering H.A., Uyttenboogaart M., Jenniskens S.F.M., Roos Y.B.W.E.M., van Doormaal P.J., van Es A.C.G.M., van der Lugt A., Vos J.A., Nijeholt G.J.L.A., van Zwam W.H., Shi H.Z., Yoo A.J., Dippel D.W.J., Majoie C.B.L.M., Investigators M.C.R. Operator Versus Core Lab Adjudication of Reperfusion After Endovascular Treatment of Acute Ischemic Stroke. Stroke. 2018;49:2376–2382. doi: 10.1161/STROKEAHA.118.022031.
