Abstract

Technology-delivered interventions have the potential to help address the treatment gap in mental health care but are plagued by high attrition. Adding coaching, or minimal contact with a nonspecialist provider, may encourage engagement and decrease dropout, while remaining scalable. Coaching has been studied in interventions for various mental health conditions but has not yet been tested with anxious samples. This study describes the development of and reactions to a low-intensity coaching protocol administered to N = 282 anxious adults identified as high risk to drop out of a web-based cognitive bias modification for interpretation intervention. Undergraduate research assistants were trained as coaches and communicated with participants via phone calls and synchronous text messaging. About half of the sample never responded to coaches’ attempts to schedule an initial phone call or did not answer the call, though about 30% completed the full intervention with their coach. Some anxious adults may choose technology-delivered interventions specifically for their lack of human contact and may fear talking to strangers on the phone; future recommendations include taking a more intensive user-centered design approach to creating and implementing a coaching protocol, allowing coaching support to be optional, and providing users with more information about how and why the intervention works.

Keywords: coaching, eHealth, technology-delivered intervention, cognitive bias modification

Although there are effective treatments for anxiety disorders, there are significant barriers to accessing evidence-based treatments. One model for increasing the reach of evidence-based interventions is through technology-delivered interventions (TDIs; Kazdin & Blase, 2011); however, dropout rates from TDIs are often very high (e.g., 70%; see Karyotaki et al., 2015). In the current study, we report participants’ reactions to coaching (i.e., minimal human contact added to a TDI to encourage engagement and reduce attrition) during an online intervention for adults with anxiety symptoms. The full intervention tested the effects of coaching on attrition and mental health outcomes, and those results are detailed elsewhere (Eberle et al., 2021). This paper aims to describe the development of the coaching protocol and to report the acceptability and feasibility of coaching for adults with symptoms of anxiety.

Leveraging Technology to Overcome Barriers to Accessing Treatment

Anxiety disorders are highly prevalent, with the 12-month worldwide prevalence ranging between 2.4 and 29.8% (Baxter et al., 2013), but most individuals do not receive care, in part due to the high cost of services, stigma, and lack of access to providers (Alonso et al., 2018). TDIs may help address some of these barriers to accessing care. Although some TDIs have shown mixed efficacy for anxiety disorders (e.g., Arnberg et al., 2014; MacLeod & Mathews, 2012), TDIs can have comparable efficacy to face-to-face interventions (Andersson et al., 2014). In a recent meta-analysis, TDIs were more effective for reducing anxiety symptoms than both active control and online peer support conditions (Domhardt et al., 2019).

Cognitive bias modification for interpretation training (CBM-I) is one promising TDI for treating symptoms of anxiety (Ji et al., 2021), but results across studies are mixed (see Fodor et al., 2020). CBM-I programs for anxiety aim to directly reduce rigid, negative, or threatening interpretations of ambiguous stimuli, which are considered to play a causal role in anxiety vulnerability and dysfunction (MacLeod & Mathews, 2012), and can be delivered completely through digital interfaces. Exposure therapy is one of the recommended treatments for anxiety disorders (Abramowitz et al., 2019) and requires individuals to systematically confront feared stimuli over the course of treatment. CBM-I may work in part because it encourages imaginal exposure to feared situations, though Beadel et al. (2014) found that subjective fear and arousal did not change as a function of CBM-I condition, suggesting that CBM-I’s effects are likely not operating similarly to exposure-based habituation. CBM-I may be useful as a standalone intervention or as an adjunct to other ongoing treatment to practice flexible thinking. This type of intervention also addresses some of the known barriers to seeking and sticking with help from a provider, such as wanting to handle the problem on one’s own, perceived ineffectiveness of talking with a provider, and negative experiences with a provider (Andrade et al., 2014). CBM-I may be especially appealing for users who feel less ready to explicitly report and introspect on their anxious thoughts, feelings, and behaviors (as occurs in cognitive-behavioral therapy [CBT]). Along these lines, CBM-I may be an initial treatment step for those who need a higher level of care but are not yet willing or able to commit (e.g., an individual who feels too anxious to seek in-person therapy may try CBM-I, which may then make him or her more open to future in-person therapy).

While CBM-I has the potential to address the unmet treatment need, attrition in online versions is a major problem. In a recent study of a web-based version of CBM-I, 86% of participants dropped out of the intervention before the sixth session of an eight-session intervention (Hohensee et al., 2020). Attrition is also a major threat to TDIs more broadly. For example, Mood-Gym, an online CBT site, has reported that participant dropout is common, with only 10% of more than 80,000 visitors completing a second module (Batterham et al., 2008). To combat this problem, researchers have tested whether different models of human support or guided self-help added to TDIs increase intervention engagement and reduce attrition. Because added human support does not require extensive mental health expertise to administer, it can be delivered by non-specialist providers using digital tools (i.e., text messaging, phone calls; Lattie et al., 2019). As such, added human support offers promise as a cost-effective and scalable delivery model with the potential to reach more individuals than is possible through one-to-one in-person service delivery. Results appear promising, with one meta-analysis finding that guided interventions were superior to unguided interventions in terms of symptom reduction, rates of module completion, and intervention completion (Baumeister et al., 2014). Although adding human support to CBM-I for anxious individuals may reduce rates of dropout and improve intervention outcomes, no previous research has tested these questions. In the current study, individuals with anxiety who were completing an Internet-delivered CBM-I intervention were randomly assigned to receive coaching or not, and we report here on their reactions to coaching.

Examples of Added Human Support

Different models of added human support have been developed. These differ from traditional clinical practice in that a coach’s goal is to support use of the intervention rather than to offer emotional support or serve as a therapist (Lattie et al., 2019). For example, the efficiency model highlights that the coach’s job is to identify reasons why the user may be failing to benefit from the intervention (e.g., due to failures with usability, engagement, fit, knowledge, or implementation; see Table 2) and to provide support resources to address each challenge (Schueller et al., 2017). This model may be particularly useful within the context of CBM-I, given that some previous CBM-I users have reported finding the program boring or not relevant to their lives and have described CBM-I as less helpful if they lack an understanding of the purpose of the trainings or their relevance (Beard, Rifkin, et al., 2019).

Table 2.

Examples of failure points of digital interventions.

| Failure point | Definition | MindTrails examples |
|---|---|---|
| Usability and knowledge | Design flaws that would serve as a barrier or not knowing how to use the intervention | Participant does not agree with correct answer to comprehension question or does not understand how CBM-I will help him or her feel less anxious. |
| Engagement | Lacking intention or motivation to use the intervention | Participant is too busy to complete training or finds training boring. |
| Implementation | Failing to incorporate lessons learned during the intervention into daily life | Participant does not know how to apply training to anxiety-provoking situations. |
| Technical issues | Problems with technology | Page reloads during session or page does not present options to click to advance on site. |

Note. CBM-I = cognitive bias modification for interpretation training. Failure points were taken from the efficiency model of support (Schueller et al., 2017).

The supportive accountability model argues that added human support increases intervention adherence through holding the intervention user accountable to a coach (i.e., via the added presence of another social being vs. an automated system, setting clear expectations and goals, and performance monitoring; Mohr et al., 2011). However, the effects of supportive accountability on attrition are mixed (Dennison et al., 2014; Kleiboer et al., 2015) and some studies have found no differences in treatment outcomes between individuals who did or did not receive added support from a coach (Boß et al., 2018; Mohr et al., 2013). Supportive accountability has been added to TDIs for treatment of depression (Mohr et al., 2013, 2019), symptoms of depression and anxiety (Kleiboer et al., 2015), alcohol use disorder (Boß et al., 2018), and weight management (Dennison et al., 2014). However, to our knowledge, it has not been incorporated into web-based CBM-I, or offered to highly anxious individuals.

Overview of Current Study and Hypotheses

In the current study, we report on findings from one condition of a clinical trial of the efficacy and effectiveness of a web-based CBM-I program for individuals struggling with symptoms of anxiety. The larger trial builds on preliminary evidence of the efficacy of this program for the treatment of symptoms of anxiety among adults (Ji et al., 2021). Specifically, in this paper we focus on the development of, and acceptability and feasibility for, a coaching protocol for highly anxious individuals, which was largely modeled after coaching protocols for individuals with symptoms of depression (Duffecy et al., 2010; Tomasino et al., 2017). In the broader trial, individuals who were identified as likely to drop out of the study were randomly assigned to receive minimal human contact (coaching) or not. The goal of adding coaching was to reduce attrition and increase adherence and engagement in the program. In an exploratory analysis, we examine whether individuals’ reported importance of reducing symptoms of anxiety differed by coaching completion. We examined differences in engagement with coaching (initial response and completion of the coaching protocol) as a function of participant gender, age, education, and baseline anxiety symptoms. Hypotheses for these analyses were preregistered (https://osf.io/fmucx/).

We hypothesize that older (vs. younger) and more (vs. less) highly educated intervention users will be more engaged with coaching. Research on alliance in face-to-face settings suggests that older (vs. younger) clients have stronger therapeutic alliances with their therapists (Arnow et al., 2013). With regard to education, among adult patients attending a partial hospital program, more (vs. less) highly educated individuals reported that they would be more willing to use a mental health app to treat their symptoms (Beard, Silverman, et al., 2019). It is possible that more (vs. less) highly educated people may feel comfortable using technology due to greater exposure to it (i.e., greater use of the Internet: Pew Research Center, 2019a; greater smartphone ownership: Pew Research Center, 2019b) and therefore may feel more willing to engage with technology-delivered services. The role of participant gender in engagement is exploratory in this anxiety intervention,¹ although previous work has found that men are more likely to drop out of online interventions for depression (Karyotaki et al., 2015). Finally, we hypothesize that participants with higher (vs. lower) baseline levels of anxiety symptom severity will demonstrate less coaching engagement. Given that social anxiety is associated with avoidance of social contexts, individuals’ willingness to engage with a coach may vary as a function of their level of trait anxiety. For example, highly anxious individuals might prefer texting over calling because it requires less direct interaction (Kashdan et al., 2014).

In this paper, we first provide a brief background on the larger trial to put the coaching protocol into context. Next, we provide information on the development of the coaching protocol and describe implementation with an international sample. We then provide quantitative results and excerpts of qualitative responses from individuals who were assigned to complete the coaching protocol. Finally, we offer lessons learned for future research.

Method

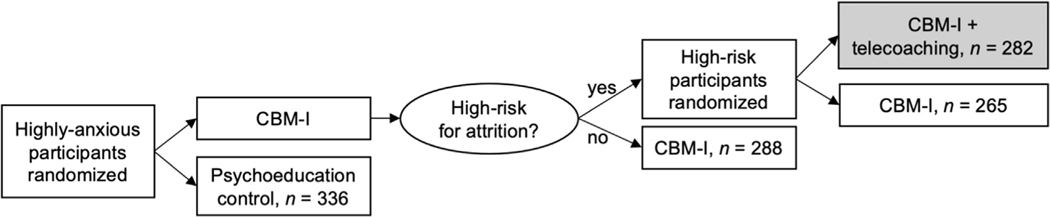

This study reports on a subset of data from a larger clinical trial (N = 1,824 participants) investigating the effects of a multisession CBM-I intervention for anxiety symptoms on the public website MindTrails (http://mindtrails.virginia.edu). The trial was open from January 20, 2019, to April 1, 2020. In the current study, we focus on one branch of the trial (see Figure 1)—namely, individuals randomly assigned to receive coaching support throughout the 5-week intervention. The preregistered hypotheses for the full trial (https://osf.io/af4n7) are being examined separately. In this paper, we focus on a subset of the data to address reactions to coaching. This study was approved by the IRB. All participants provided informed consent prior to participation. The consent form included information about the potential added coach.

FIGURE 1.

SMART design of larger clinical trial. Note. SMART = sequential, multiple assignment, randomized trial. In this paper, we focus on the CBM-I + telecoaching condition.

PARTICIPANTS

Individuals were recruited through in-person (e.g., posted flyers) and online (e.g., Facebook) advertisements, in addition to a service that connects potential research participants to clinical trials. The Depression, Anxiety, Stress Scales–21: Anxiety subscale (DASS-AS; Antony et al., 1998; Lovibond & Lovibond, 1995) was used as a screening measure to determine study eligibility. Individuals ages 18 and older and who reported a moderate level of anxiety or greater on the DASS-AS were invited to participate. Participants were compensated based on the number of assessments they completed (up to $25 for completing all assessments). Participants predicted to be at high risk for dropout based on the implemented attrition algorithm (Baee et al., 2021) were randomly assigned to receive coaching (vs. no coaching). Ages of the coaching participants ranged from 18 to 68 (M = 34.39 years, SD = 11.86). Two hundred sixty (92.2%) were from the United States; 3.5% from Australia (n = 10); 0.7% from Ireland (n = 2); and 0.4% (n = 1) each from Bahamas, Brazil, Canada, Ethiopia, Germany, India, Japan, Philippines, Romania, and Switzerland. See Table 1 for additional demographic characteristics.

Table 1.

Demographic characteristics of coaching sample.

| Characteristic | n | % |
|---|---|---|
| Gender | | |
| Female | 231 | 81.9% |
| Male | 44 | 15.6% |
| Other | 4 | 1.4% |
| Transgender | 2 | 0.7% |
| Not reported | 1 | 0.4% |
| Race | | |
| American Indian/Alaska Native | 1 | 0.4% |
| Asian | 17 | 6.0% |
| Black or African American | 19 | 6.7% |
| White | 213 | 75.5% |
| Multiracial | 23 | 8.2% |
| Other or unknown/not reported | 9 | 3.2% |
| Ethnicity | | |
| Hispanic or Latino | 38 | 13.5% |
| Not Hispanic or Latino | 237 | 84.0% |
| Unknown/not reported | 7 | 2.5% |
| Education | | |
| Some high school | 5 | 1.8% |
| High school graduate | 35 | 12.4% |
| Some college or associate’s degree | 108 | 38.3% |
| Bachelor’s degree | 64 | 22.7% |
| Some graduate school | 22 | 7.8% |
| Advanced degree | 47 | 16.7% |
| Not reported | 1 | 0.4% |

MEASURES

Anxiety Symptoms

Participants completed self-report measures of anxiety symptoms on the DASS-AS (Antony et al., 1998; Lovibond & Lovibond, 1995) at screening (baseline), after Sessions 3 and 5, and at 2-month follow-up. Scores were calculated by taking the average of items answered multiplied by 14. In the current study, the DASS-AS demonstrated acceptable internal consistency (Cronbach’s α = .70) at baseline. Participants were eligible if they scored 10 or greater on the DASS-AS, indicating moderate to extremely severe anxiety symptoms over the previous week.

Participants also completed the Overall Anxiety Severity and Impairment Scale (OASIS; Norman et al., 2006) before the first training, after each of the five training sessions, and at the 2-month follow-up. The OASIS assesses the frequency and severity of anxiety and avoidance and impairment due to anxiety over the past week. Scores were calculated by taking the average of items answered multiplied by 5. In the current study, the OASIS demonstrated good internal consistency (Cronbach’s α = .80) at baseline.
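The scoring rule described for both measures (the mean of the items answered, multiplied by a constant) can be illustrated with a minimal sketch. This is not the study's analysis code: the function name `prorated_score` and the example responses are hypothetical, and the item counts and rating ranges used in the example are assumptions based on the standard forms of the two instruments.

```python
from typing import Optional, Sequence


def prorated_score(responses: Sequence[Optional[int]], multiplier: float) -> Optional[float]:
    """Mean of the answered items multiplied by a constant.

    Unanswered items are passed as None and are left out of the mean.
    Returns None when no item was answered.
    """
    answered = [r for r in responses if r is not None]
    if not answered:
        return None
    return sum(answered) / len(answered) * multiplier


# Hypothetical responses to seven DASS-AS items (assumed 0-3 ratings), one item skipped.
dass_items = [2, 1, None, 3, 2, 1, 2]
dass_as = prorated_score(dass_items, multiplier=14)

# Hypothetical responses to five OASIS items (assumed 0-4 ratings), all answered.
oasis_items = [2, 3, 1, 2, 2]
oasis = prorated_score(oasis_items, multiplier=5)

# Eligibility rule from the text: DASS-AS score of 10 or greater.
eligible = dass_as is not None and dass_as >= 10
```

Averaging over the answered items before multiplying keeps scores on the same scale when a participant skips an item, which is presumably why the rule is stated in terms of "items answered."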

Importance of Reducing Anxiety

Participants were asked to answer, “How important is reducing your anxiety to you right now?” on a scale from 0 (not at all) to 4 (very) prior to completing the first CBM-I training session.

Nonresponse Survey

We created a brief measure to assess why participants may have decided not to respond to efforts to schedule a coaching phone call. This measure was created after the study started because we had not anticipated high levels of nonresponse to coaching efforts. The first survey was sent just over 1 month after the trial started. Participants who did not respond to our efforts to schedule an initial coaching call were sent an e-mail from the program administrator requesting feedback to improve the program via a two-item Qualtrics survey. The first item read, “Why did you choose not to schedule a coaching phone call? Please select all that apply.” The response options were “Did not receive invitation e-mail,” “Did not want to talk on the phone to a coach,” “Do not have time for conversations about the program each week,” “Do not need coaching for this program,” “Did not want to continue doing the MindTrails online program,” and “Other” (with the ability to write in a response). The second item read, “Is there anything we could do to make coaching more appealing?” with space to type a free response.

PARTICIPANT ENGAGEMENT IN COACHING

Initial Response

Coaches documented whether participants responded to the scheduling attempts: “Yes,” indicating that the participant responded to any of the three scheduling attempts; or “No,” indicating that the participant did not respond to scheduling attempts.

Coaching Completion

Coaches documented whether all coaching sessions were completed or not. Only participants who responded to the initial coaching scheduling e-mail (n = 147) were included in analyses for this metric of engagement to examine dropout among those who started coaching.

PROCEDURE

Study Design

Following screening for eligibility, eligible participants assigned to the CBM-I group completed the baseline assessment and the first training session. Note that the current study used a sequential, multiple assignment, randomized trial (SMART) design, an adaptive, multigated configuration that initially allocated participants to test the efficacy of the online CBM-I intervention, and then rerandomized participants in the CBM-I group after the first training session to examine whether the added coaching protocol would improve retention rates for participants determined as high risk for dropout.

During the assessment after the Session 1 training, an attrition score (a metric for dropout risk) was calculated for each participant using machine learning based on user behavior and responses to questionnaires in the baseline and post-Session 1 training assessments. Next, a threshold score was set that could be adjusted as needed to balance participant numbers for the predetermined ratio for those receiving coaching versus not. The goal was to have two thirds of the CBM-I sample designated as high risk for dropout, so that about one third of the sample would be randomly assigned to the coaching condition (see Figure 1). When an attrition score was calculated for a participant, he or she was reassigned to one of three conditions: low risk with no coaching (attrition score < threshold), high risk with no coaching (attrition score > threshold), or high risk with coaching (attrition score > threshold). Similar to the first allocation, randomization to coaching within the high-risk group was stratified based on anxiety severity and gender.
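The thresholded reassignment described above can be sketched as follows. This is an illustrative simplification under stated assumptions, not the trial's allocation code: the function name `assign_condition`, the threshold value, and the example scores are hypothetical; a simple 1:1 draw stands in for the stratified randomization; and the handling of a score exactly equal to the threshold is an assumption, because the text specifies only the strict cases.

```python
import random


def assign_condition(attrition_score: float, threshold: float, rng: random.Random) -> str:
    """Map an attrition score to one of the three second-stage conditions."""
    if attrition_score < threshold:
        return "low risk, no coaching"
    # Assumption: a score exactly at the threshold is treated as high risk;
    # the text only describes scores strictly below or above the threshold.
    # Assumption: an unstratified 1:1 draw replaces the stratified
    # randomization (by anxiety severity and gender) used in the trial.
    if rng.random() < 0.5:
        return "high risk, coaching"
    return "high risk, no coaching"


rng = random.Random(0)  # fixed seed so the example is reproducible
threshold = 0.5         # hypothetical value; the study adjusted it as needed
scores = [0.12, 0.48, 0.51, 0.77, 0.93, 0.64]  # hypothetical attrition scores
for score in scores:
    print(score, "->", assign_condition(score, threshold, rng))
```

With two thirds of the CBM-I sample above the threshold and a 1:1 split within that group, about one third of the sample ends up in the coaching condition, matching the target ratio described in the text.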

CBM-I Training

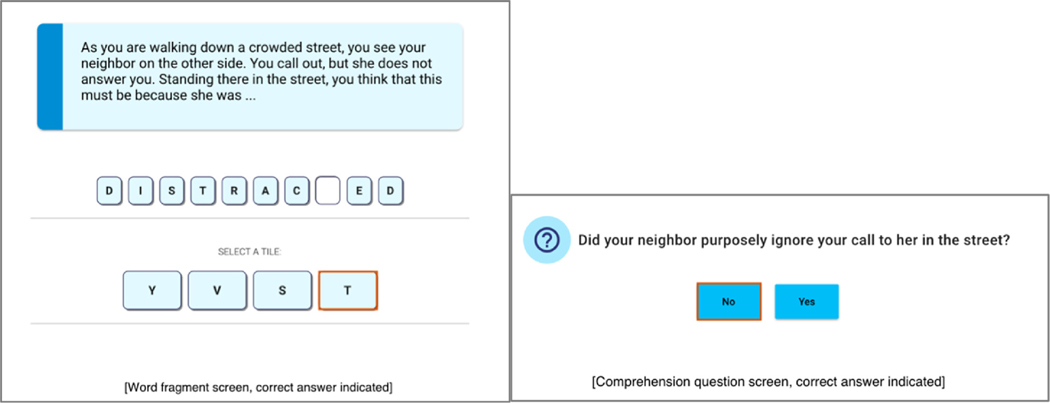

All participants in the CBM-I groups were administered the same training dose and schedule: five CBM-I sessions, each comprising four blocks of 10 scenarios (40 scenarios total) taking approximately 15 minutes to complete. Each scenario was introduced by a scenario-relevant image, followed by text depicting the reader in a potentially threatening situation (e.g., social evaluation, experiencing somatic symptoms of anxiety). Scenarios were emotionally ambiguous up until the final word, which was presented as a word fragment (see Figure 2). Participants were then instructed to select the correct letter among four letters to complete the word fragment, thus resolving the emotional ambiguity of the situation. To reduce a rigid negative interpretation bias, the situations were resolved with a nonthreatening positive/neutral ending in 90% of the training scenarios.

FIGURE 2.

Screenshots of sample CBM-I training scenario and comprehension question.

After the word fragment task, to ensure that participants actually read the scenario text and to reinforce the benign interpretation, a comprehension question was presented (see Figure 2). Participants then selected the correct answer among two possible response options. When a participant completed a session, an automatic 5-day wait period was initiated before a participant could access the next training session. Note that the training varied slightly across sessions. The number of missing letters in word fragments and the format of the comprehension questions changed, but the goal was always the same.

COACHING CONDITION

Coach Training

Coaches for the program were undergraduate research assistants in a clinical psychology research laboratory in the Eastern Standard time zone. They were supervised by clinical psychology graduate students. Coaches were informed of the rationale for coaching for this intervention and were instructed that their role was to support the use of the intervention (and not provide therapy to participants). They were provided with a link to a website that included coaching training instructions and coaching session checklists to ensure adherence to the protocol. Coaches were also taught how to use an online dashboard to view and manage intervention participants. This dashboard was part of the larger MindTrails infrastructure, and coaches had access only to the participant’s first name, phone number, e-mail address, and time zone, in addition to whether they had completed a given training session.

Training of coaches included reading articles about CBM (e.g., Beard, 2011), as well as articles about supportive accountability and coaching for TDIs (Mohr et al., 2011; Schueller et al., 2017). To reduce between-coach differences, each coach was instructed to create and use an e-mail address that followed a similar format (mindtrailscoach[firstname]@gmail.com) when contacting participants, and to create a Google Voice number for calling and texting with participants. Supervisors had access to coaches’ coaching e-mail accounts so they could check that coaches were following appropriate scripts. Coaches were provided with e-mail scripts for routine interactions (e.g., initial contact describing coaching) and voice message setup. Coaches were also expected to adhere to strict confidentiality and privacy rules to protect participant data.

Following the efficiency model, supervisors created scripts for coaches to check in with participants on each of the five possible failure points (Schueller et al., 2017); see Table 2 for failure points assessed in this study. Following the supportive accountability model, before contacting participants, coaches were instructed to check whether the participant had completed the prior CBM-I training session. A component of coaching included holding participants accountable by asking about barriers to completing training if not completed and problem solving or using motivational interviewing to address these barriers. Coaches engaged in training sessions with supervisors and one another in which they responded to hypothetical participant questions or difficult scenarios (e.g., a coaching participant reporting worsening emotional difficulties). These training calls were used to build coaches’ skills in rapport building, including providing emotional support and validation, collaborating with participants to problem solve, assuming a curious and nonjudgmental stance, and referring participants to additional therapeutic resources when appropriate (e.g., participants expressing suicidality). Finally, after completing readings, account setup, and in-person trainings with other coaches and graduate student supervisors, the coaches completed a mock initial call that was observed by supervisors before they were approved to start coaching real participants. Training took approximately 20 hours across 2 weeks. The coaching website is available for download (https://osf.io/674qc/?view_only=ee52cf5d9e0046fa9f56d2d64ba1c856).

Coaching Protocol

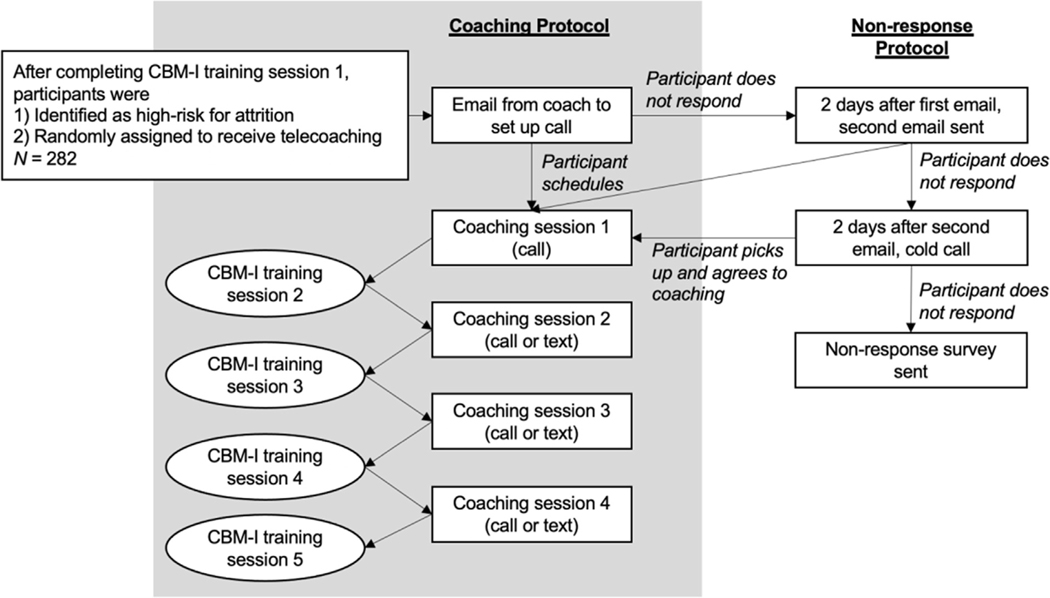

After a participant was assigned to the coaching condition, he or she was sent an automated text message from the MindTrails system that read “Thank you for completing your Calm Thinking training! You’ve been assigned a coach to support you in this program. Check your e-mail tomorrow for more info.” The supervisors then randomly assigned the participant to a coach and notified the coach, who then e-mailed the participant using the appropriate script to set up an initial coaching call. If the participant responded, the coach called the participant at the scheduled time. At the conclusion of the initial call, the coach and participant mutually decided when to meet next and the participant chose the platform (i.e., phone or synchronous text messaging). The coach and participant were expected to meet four times (initial call after Session 1 of training, and then again after Sessions 2, 3, and 4 of training). If the participant completed MindTrails as suggested (one session per week), each coach would ideally meet with the participant once weekly for 4 weeks (see Figure 3).

FIGURE 3.

Basic coaching protocol.

Initial Session Content

The initial coaching session was always over the telephone. At the beginning of the call, the coach noted that he or she was following a script but would be responsive to the participant’s specific questions. We opted to include a comment about reading from a script after receiving feedback from team members who expressed that coaches sounded inauthentic when following a script. Following an introduction, coaches discussed confidentiality, the purpose of MindTrails, and more detailed information about the coaching schedule, and they answered any questions that participants had listed on the website after completing their first CBM-I session. Coaches also asked whether the participant had any questions regarding the CBM-I training. If the participant indicated technical, usability or knowledge, implementation, or engagement issues, coaches followed the suggested strategies outlined in the coaching protocol to follow up on each question or issue. For example, if an individual experienced issues with engagement, the coach would use motivational interviewing techniques to increase motivation to complete the next training session. Next, coaches asked the participants how likely they thought they were to complete training the next week on a scale from 1 (not at all likely) to 5 (very likely). Depending on the response, the coach encouraged the participant to complete training over the following week. At the conclusion of the call, coaches explained the follow-up coaching sessions and scheduled the next session. Participants reported their preferred method of contact for the next session (texting or call).

Follow-Up Sessions Content

Coaches contacted participants at scheduled times for the two follow-up sessions. Coaches were instructed to respond to any outstanding questions that the participant may have asked over the course of the week via e-mail or text message, and then were instructed to ask whether the participant had any questions about training. Coaches followed up on questions about technical issues, usability/knowledge, implementation, and engagement as appropriate. Coaches closed the session by asking about the participants’ intention to complete the training over the next week. Coaches again followed a semistructured script for the session if it took place over the phone. If participants preferred to interact via synchronous text messaging, coaches could copy and paste from a script to keep content consistent across participants. Again, participants were notified that some information may be copied and pasted if appropriate. Content for the final coaching session was identical to the follow-up sessions, except at the end of the session the coach shared when the final 2-month follow-up assessment reminder would be sent to the participant.

Nonresponse Protocol

Scheduling E-Mail.

If the participant did not respond to the e-mail for scheduling the initial call, 2 days later the coach would send a second e-mail. If the participant still did not respond, after 2 days the coach would call the participant and discuss coaching. If the participant opted to continue with coaching, this call was treated as the initial call and the protocol was followed. If the participant did not pick up the phone, the coach sent a final e-mail to schedule a call.
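The escalation sequence for a participant who never responds can be laid out as a simple timeline. This is a minimal sketch with hypothetical names (`scheduling_attempts`) and an arbitrary example date; the timing of the final e-mail is an assumption, because the protocol does not state when it was sent relative to the unanswered call.

```python
from datetime import date, timedelta


def scheduling_attempts(first_email: date) -> list[tuple[date, str]]:
    """Planned contact attempts for a participant who never responds."""
    second_email = first_email + timedelta(days=2)
    phone_call = second_email + timedelta(days=2)
    return [
        (first_email, "e-mail to schedule the initial call"),
        (second_email, "second scheduling e-mail"),
        (phone_call, "phone call to discuss coaching"),
        # Assumption: the final e-mail goes out on the day of the unanswered
        # call; the protocol text does not specify its timing.
        (phone_call, "final e-mail to schedule a call"),
    ]


# Arbitrary example start date within the trial window.
for when, action in scheduling_attempts(date(2019, 3, 1)):
    print(when.isoformat(), action)
```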

Initial Call, Follow-Up Sessions, or Final Session by Phone.

If the participant did not pick up a scheduled call, the coach left a voice message and sent an e-mail following up.

Follow-Up Sessions or Final Session by Text Messaging.

If the participant did not answer the initial text within 5 minutes, the coach sent a text noting, “It seems like now might not be the best time for us to have our coaching conversation. I will e-mail you to reschedule. Thanks!” and then followed up with an e-mail.

International Participants

International calls were conducted via Google Hangouts without video if the participant had access to that platform.

Results

ANALYTIC APPROACH

Frequencies, descriptive statistics, and mean group-level differences in importance of reducing anxiety were calculated by A.W. Regressions examining demographic and clinical characteristics with engagement were conducted by S.K.P. All significance tests were two-tailed with alpha set at .05.

BASELINE ANXIETY

On the DASS-AS, 46 (16.3%) scored in the moderate (10–14) range, 50 (17.7%) scored in the severe (15–19) range, and 186 (66.0%) scored in the extremely severe (≥20) range (M = 23.04, SD = 7.34, range = 12–42)² at baseline. Using the OASIS, at baseline 258 (91.5%) reported a score of 8 or greater indicating the presence of an anxiety diagnosis (24 [8.5%] reported a score below 8; Norman et al., 2006).

BEHAVIORAL DATA

Responsiveness to Coaches

Eighty-four participants (29.8%) completed the full intervention with their coach; 51 (18.1%) dropped out of the intervention partway through. There were 108 (38.3%) who never responded to coaching attempts and never started coaching; 33 (11.7%) scheduled an initial call but did not pick up or respond; 5 (1.8%) explicitly declined coaching after scheduling attempts were made. Three (1.1%) participants explicitly told their coaches during coaching sessions that they wished to withdraw from the study (one each during the first, second, and fourth coaching sessions). There were 560 attempted interactions with participants throughout the study, and 417 (74.5%) resulted in completed coaching sessions. Sessions were considered complete if the participant picked up the scheduled call or if they responded to a scheduled text message. If a session was not completed, the coach e-mailed the participant to reschedule.

Preferred Modality of Coaching Contacts

After the first coaching session by phone, participants could choose to participate in coaching sessions via phone call or text messaging, and could switch across sessions. For the second coaching session, 56.8% of the sessions were completed via text, and 62.5% and 59.6% of the third and fourth sessions, respectively, were completed via text. Coaching calls (n = 240) were recorded as lasting from 1 min to 28 min, with the mean interaction lasting 6 min 13 sec (SD = 3 min 50 sec). Initial calls (n = 140) lasted an average of 7 min 54 sec (SD = 3 min 14 sec), second session calls (n = 43) lasted an average of 3 min 48 sec (SD = 4 min 24 sec), third session calls (n = 29) lasted an average of 3 min 59 sec (SD = 2 min 26 sec), and final session calls (n = 28) lasted an average of 3 min 55 sec (SD = 2 min 13 sec).
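The overall mean call length follows from the per-session means as a count-weighted average. The short check below uses only the figures reported above; the variable names are illustrative.

```python
# (session label, number of calls, mean duration in seconds)
calls = [
    ("initial", 140, 7 * 60 + 54),
    ("second", 43, 3 * 60 + 48),
    ("third", 29, 3 * 60 + 59),
    ("final", 28, 3 * 60 + 55),
]

total_calls = sum(n for _, n, _ in calls)

# Weight each session's mean by its number of calls.
pooled_seconds = sum(n * secs for _, n, secs in calls) / total_calls
minutes, seconds = divmod(int(pooled_seconds), 60)

print(f"{total_calls} calls, pooled mean = {minutes} min {seconds} sec")
```

The weighted mean reproduces the reported 6 min 13 sec across 240 calls, which confirms that the overall figure is dominated by the longer initial calls.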

Content of Coaching Interactions

Coaches also reported the content of coaching sessions based on the failure points outlined in the efficiency model (Schueller et al., 2017) at the completion of each interaction. Across all successful coaching sessions (n = 417): 38 (9.1%) of the interactions discussed engagement issues, 29 (7.0%) discussed implementation issues, 40 (9.6%) discussed technical issues, and 54 (12.9%) discussed usability or knowledge issues. Coaches also noted any other issues that participants reported. One participant reported it was difficult to find the time to complete sessions and another reported difficulty remembering to come back to training. One participant also indicated that they “hated” the negative training scenarios (presumably referring to the 10.0% of scenarios that had a negative rather than benign ending), and that a warning should be included with the training. Three participants indicated that they had difficulty with being told that they responded incorrectly to the comprehension questions after negative training scenarios. Taken together, these data suggest that participants did not raise questions or concerns with their coaches specific to the five failure points outlined in the efficiency model during the majority (>60.0%) of coaching sessions. If participants did not raise specific questions or issues, coaching sessions tended to be brief and involved coaches asking participants about their intention to complete the next training and (if applicable) scheduling the next coaching session. Coaches reinforced participation in the training sessions during the calls; for example, if a participant expressed ambivalence about completing training over the next week, coaches would discuss this with the participant and work to increase motivation to complete training.

NONRESPONSE SURVEY

There were 111 participants who were sent the nonresponse survey³ and 39 (35.1%) responded. The most common response (n = 23, 59.0%) was “Did not want to talk on the phone to the coach.” The next most common response was “Do not have time for conversations about the program each week” (n = 18, 46.2%), followed by “other” (n = 17, 43.6%), “Do not need coaching for this program” (n = 10, 25.6%), “Did not want to continue doing the MindTrails online program” (n = 3, 7.7%), and “Did not receive invitation e-mail” (n = 2, 5.1%). For those individuals who selected “other” as a response, four (10.3%) explicitly referenced anxiety or feeling too nervous to answer the phone. Two (5.1%) reported not wanting to discuss personal issues with strangers. Eight (20.5%) indicated that they were either too busy, forgot to respond, or could not find a mutually agreeable time with their coach. Three (7.7%) reported that they did schedule a call but were never called by their coaches (it is unclear if this occurred or a call was simply missed). One (2.6%) reported that they were called by their coach.

Nineteen individuals (48.7%) responded to the final question regarding how to make coaching more appealing, though not all write-in responses were suggestions for improving the program. Five (12.8%) reported being averse to speaking on the phone, with three of those individuals referencing anxiety related to speaking on the phone:

“A phone call with a stranger is massively anxiety producing, lol.”

“Don’t require someone with anxiety to speak on the phone.”

“I had hoped I wasn’t one chosen for the telephone portion, but unfortunately was. I have social anxiety and part of that is the difficulty I have with talking on the phone. It is really bad. I shouldn’t have signed up for the study, because I knew talking on the phone was a possibility, but at the time, I thought it was worth a shot. I am sorry that I wasted your time.”

In addition, three (7.7%) suggested making coaching optional. Suggestions also included showing profiles of coaches so the participants had a choice in who they wanted to work with (n = 1, 2.6%), and allowing for the option of texting without phone calls (n = 2, 5.1%). One reported being excited to participate with a coach and one reported that they really enjoyed working with their coach, suggesting either some confusion on the participant’s side or a researcher error, as this survey was intended to target coaching nonresponders.

EXAMINING GROUP-LEVEL DIFFERENCES IN IMPORTANCE OF REDUCING ANXIETY⁴

In an exploratory analysis, we examined whether participants differed in their self-reported importance of reducing anxiety symptoms, which was assessed at baseline prior to starting MindTrails training. Participants were grouped into one of three groups based on response to coaching, and these groups’ self-reported importance was compared. Notably, participants who completed MindTrails with coaching (n = 85, M = 3.62, SD = .58), participants who did not respond to coaching attempts (n = 108, M = 3.58, SD = .64), and those who responded initially but did not complete coaching (n = 89, M = 3.65, SD = .61) did not have significantly different ratings of the importance of reducing anxiety at baseline, F(2, 279) = .31, p = .733, η2 = .00. The majority of participants indicated that it was very important to reduce anxiety (n = 192, 68.1%), 73 (25.9%) indicated “a lot,” 16 (5.7%) indicated “somewhat,” and one (0.4%) responded “a little.” No participants used the lowest rating of “not at all.”
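For readers who wish to check the omnibus test, a one-way ANOVA can be approximately reconstructed from the group sizes, means, and standard deviations reported above. This is a sketch under the assumption that the reported values are rounded summary statistics, so the recovered F will only approximate the published value; the function name is illustrative.

```python
def anova_from_summary(groups):
    """One-way ANOVA from (n, mean, sd) tuples.

    Returns (F, df_between, df_within, eta_squared).
    """
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    # Between-groups sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    # Within-groups sum of squares: recovered from each group's SD.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_squared


# (n, M, SD) for completers, nonresponders, and partial responders.
groups = [(85, 3.62, 0.58), (108, 3.58, 0.64), (89, 3.65, 0.61)]
f_stat, df_b, df_w, eta_sq = anova_from_summary(groups)

print(f"F({df_b}, {df_w}) = {f_stat:.2f}, eta squared = {eta_sq:.3f}")
```

The degrees of freedom match the reported F(2, 279), and the recovered F and effect size are both very small, in line with the nonsignificant result.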

RELATIONS BETWEEN DEMOGRAPHIC CHARACTERISTICS AND ANXIETY SYMPTOMS WITH ENGAGEMENT

Age

Contrary to hypotheses, age was not found to be associated with initial response, β(SE) = 0.01 (0.01), p = .111, OR = 1.02, 95% CI [0.00, 0.04]; or coaching completion, β(SE) = 0.01 (0.01), p = .262, OR = 1.01, 95% CI [−0.01, 0.03].

Gender

Gender (binary variable of men and women) was not associated with initial response, β(SE) = 0.28 (0.36), p = .439, OR = 1.32, 95% CI [−0.41, 1.01]; or coaching completion, β(SE) = −0.15 (0.37), p = .675, OR = 0.86, 95% CI [−0.091, 0.54]. Our sample did not include enough individuals reporting gender other than male or female to allow us to examine whether other gender identities related to engagement, which is a limitation of this analysis.

Education

Binary logistic regressions were conducted using “high school diploma or less” as the reference group to compare all other levels of education. This decision was made to reduce the number of tests while still being able to test our hypothesis that more (vs. less) education would be associated with greater coaching engagement. There was a significant association between education level and likelihood of initially responding to the coach. In line with hypotheses, in comparison to individuals who had a high school diploma or less, participants with some college education, β(SE) = 0.81 (0.39), p = .043, OR = 2.24, 95% CI [0.03, 1.6]; a bachelor’s or associate’s degree, β(SE) = 0.77 (0.38), p = .049, OR = 2.15, 95% CI [0.01, 1.54]; or greater than a college degree, β(SE) = 1.18 (0.42), p < .01, OR = 3.24, 95% CI [0.36, 2.02], had a greater likelihood of initially responding. In line with hypotheses, participants with some college education, β(SE) = 1.07 (0.54), p = .047, OR = 2.91, 95% CI [0.08, 2.23], or greater than a college degree, β(SE) = 1.54 (0.54), p < .01, OR = 4.64, 95% CI [0.55, 2.67], were more likely to complete all coaching sessions than individuals who had a high school diploma or less education.
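In a binary logistic regression, the odds ratio is the exponentiated coefficient, OR = exp(β). The sketch below recomputes the odds ratios for initial response from the reported coefficients and shows a Wald interval for one of them. Two points are assumptions rather than statements from the text: the reported 95% CIs appear to be on the coefficient (log-odds) scale, because they bracket β rather than the OR, and the Wald formula (β ± 1.96 × SE) may differ from the interval method actually used.

```python
import math

# Reported (beta, SE, OR) for initial response, relative to
# "high school diploma or less".
initial_response = {
    "some college": (0.81, 0.39, 2.24),
    "bachelor's or associate's degree": (0.77, 0.38, 2.15),
    "greater than a college degree": (1.18, 0.42, 3.24),
}


def odds_ratio(beta: float) -> float:
    """Odds ratio implied by a logistic regression coefficient."""
    return math.exp(beta)


def wald_ci(beta: float, se: float, z: float = 1.96):
    """Approximate 95% Wald interval on the log-odds scale."""
    return beta - z * se, beta + z * se


for level, (beta, se, reported_or) in initial_response.items():
    print(f"{level}: exp(beta) = {odds_ratio(beta):.2f} (reported OR = {reported_or})")

# Exponentiating the interval endpoints moves it to the odds-ratio scale.
low, high = wald_ci(0.81, 0.39)
print(f"log-odds CI [{low:.2f}, {high:.2f}] -> OR CI [{math.exp(low):.2f}, {math.exp(high):.2f}]")
```

Small differences between exp(β) and the reported odds ratios are expected because the coefficients are rounded to two decimals.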

Anxiety Symptoms as Measured by the OASIS

Contrary to hypotheses, baseline anxiety symptom severity was not associated with initial response to coaching, β(SE) = −0.22 (0.19), p = .242, OR = 0.80, 95% CI [−0.60, 0.15]. As hypothesized, participants with more (vs. less) severe anxiety symptoms at baseline were less likely to complete coaching, β(SE) = −0.41 (0.19), p = .038, OR = 0.66, 95% CI [−0.81, −0.26].

Discussion

To reduce attrition in an online anxiety intervention, we paired participants who were at high risk of dropout with coaches. Coaches were intended to provide support for using a web-based CBM-I program, and interactions with coaches were not meant to serve as teletherapy. Fewer than 30% of participants assigned to the coaching condition completed the online anxiety intervention with their coach. In a follow-up survey for those who did not engage with coaches, over half reported not wanting to talk to a coach. However, for the majority of nonresponders, there are no data on reasons for not engaging. There were also a few comments suggesting that added human support reduced the likelihood that someone with anxiety would engage in the intervention. Although there is a growing body of evidence for the efficacy of coaching in the context of TDIs (and some recommendations to enhance coaching; Lattie et al., 2019), the field is lacking clear recommendations for implementing coaching with individuals with anxiety. Our goal is to offer lessons learned to improve future iterations of coaching for anxious individuals.

OFFERING COACHING FOR HIGHLY ANXIOUS INDIVIDUALS

MindTrails is likely an appealing option for individuals struggling with anxiety because it is a free, convenient, and anonymous intervention. Except for coaching, participants do not have to engage with anyone to enroll or participate, which could make it especially appealing to individuals with social anxiety who may not wish to interact with others. We speculate this could make MindTrails (along with other TDIs) more appealing than traditional one-on-one therapy for some people, and could even serve as a stepping-stone to higher-intensity services if needed in the future. In the coaching condition, we required participants to have an initial phone session because we hoped it would lead to increased engagement and alliance with coaches. This approach is similar to a successful TDI plus coaching intervention (Graham et al., 2020) for individuals with depression and/or anxiety. However, in the Graham et al. trial, participants were from a primary care clinic, and were often referred to the TDI by their clinician. Thus, that sample likely consisted of participants who expressed openness to that type of interaction. In our case, baseline anxiety symptoms did not predict whether the participant would respond to the coaching scheduling e-mail, suggesting that symptom severity was not necessarily influencing this result. Nonetheless, only approximately half of our sample completed the initial call with the coach, leading us to recommend that coaching be optional, as there is not a one-size-fits-all model.

In addition, participants indicated high importance of reducing anxiety symptoms when starting the program. However, self-reported motivation to reduce anxiety symptoms did not vary based on whether someone completed the intervention with their coach or responded to the initial e-mail. Because the sample was skewed toward reporting being highly motivated to reduce anxiety symptoms at the beginning of the intervention, it was not possible for us to test whether the addition of coaching was especially helpful or iatrogenic for varying levels of motivation. This is important for future work given that individuals vary in how motivated they are at any given time to reduce their symptoms of anxiety. For example, if someone is experiencing low motivation midway through treatment, a coach may be helpful for reminding the individual why they were initially motivated.

Experimental evidence suggests that when patients can choose a specific treatment, treatment efficacy increases (Mott et al., 2014). Yet, in the current study, participants randomly assigned to the coaching condition were not able to choose whether they would like to engage with a coach. In hindsight, randomizing participants to either no coaching or the option of coaching might have been a more effective test of whether human support reduces attrition. One participant felt strongly that a call should have been optional: “Give a nonphone option. I don’t talk on the phone unless my life depends on it.” One participant also suggested that we include profiles of coaches so that users could choose a coach. We agree that this may make coaching more appealing. Intolerance of uncertainty is a transdiagnostic vulnerability factor associated with anxiety (Jacoby, 2020); having information available about the coaches may reduce initial apprehension about who the coach will be and help build a stronger (early) personal connection and motivation to engage with the coach. This may be especially true if participants feel empowered to choose a coach who matches their preferences (e.g., age, gender of the coach).

TAKING A USER-CENTERED DESIGN APPROACH

A key aspect of developing an intervention is understanding how the user will respond. User-centered design is an approach that involves the active participation of and feedback from users in an iterative design process (Ondersma & Walters, 2020). User-centered design is popular in developing TDIs because it allows potential end users to provide feedback early in the process of product design. The version of MindTrails described in this paper evolved from our team’s earlier version of a web-based CBM-I intervention (Ji et al., 2021) that was developed through a two-step process: (a) interviews with highly anxious individuals, clinicians, and experts in CBM on the usability and acceptability of a web-based CBM-I platform; and (b) a randomized controlled trial of CBM-I for anxiety symptoms for individuals with moderate to severe levels of anxiety. Results suggested that positive CBM-I training (compared to no training and control training) was associated with a decrease in anxiety symptoms, but attrition was high in the program. To increase user engagement and reduce attrition, our team used the SMART design and coaching protocol for the version of MindTrails discussed here.

While we had implemented multiple components of a user-centered design approach when developing the main intervention, and we conducted some pilot testing of the coaching protocol, we were less intentional in following user-centered design when developing the coaching protocol. We believe greater adherence to these design principles could have led to stronger coaching outcomes. For instance, listening more carefully to users’ feedback on coaching during the development phase likely would have raised concerns about requiring an initial phone call with an anxious sample.

Our recommendation for those developing coaching and supportive accountability protocols for mental health TDIs is to gather data iteratively from target users to understand (a) users’ comfort with different types of support persons (e.g., a stranger vs. a loved one); (b) preferred frequency and duration of coaching interactions; (c) what barriers to engagement most need to be addressed during coaching; and (d) how coaches can be optimally responsive to users’ needs while retaining the key elements of coaching. Integrating components of persuasive systems design (that ethically tries to increase user engagement in noncoercive ways; e.g., Oinas-Kukkonen & Harjumaa, 2018) as part of the development work may also be helpful. For instance, the Fogg behavior model (Fogg et al., 2009) could be useful to guide coaching protocol refinements by using iterative user-centered design to solicit feedback on different types of prompts that would be helpful at varying levels of motivation and perceived abilities.

CONTENT OF COACHING SESSIONS

We used the efficiency model of support (Schueller et al., 2017) to assess possible failure points. In the current study, the most frequently addressed failure point was usability/knowledge, suggesting that it may be important for interventions like CBM-I to provide both information and examples at the beginning of the intervention that more clearly describe how the training should be used in daily life. It was surprising to us that engagement was not discussed more during the coaching sessions, especially because coaches would frequently discuss engagement issues raised by participants with the research team, and the literature points to engagement being a significant challenge in TDIs. We speculate that engagement issues were more widespread than reported and that people who were not engaged simply did not continue with their coach or did not share this concern. It may be helpful for coaches to make it clear that engagement issues are normative for TDIs and that this is a space designed to talk about those issues. More generally, strategies to make users comfortable raising engagement issues and other concerns will be important to maximize the utility of coaching.

The data suggest that over 60% of the coaching sessions did not address any failure points, and participants frequently noted that they did not have any problems. Of course, the sample that completed coaching sessions was biased in that these were the individuals who continued using MindTrails and continued to talk to their coaches (i.e., not the ones who dropped out, who may have experienced more challenges). A critical question for future research is whether the low rate of discussing failure points indicates that participants did not know how to best use coaching to support their TDI use, or that coaches did not adequately elicit problems with the program from participants, or that MindTrails (and potentially CBM-I generally) is clear and easy to use, and therefore may not require human support to address failure points. It is also possible that this evidence, in conjunction with high attrition, suggests that users may not like the intervention.

Results also suggested that participants with more education than a high school degree (compared to those with a high school degree or less education) were more likely to respond to the coaching e-mail and were more likely to complete the intervention with coaching, which is aligned with research indicating greater education is related to higher levels of mental health treatment overall (Steele et al., 2007). Although only a small proportion of the sample had a high school degree or less education, if these results were to be replicated, it would suggest that MindTrails and/or coaching may not have been appealing for those without postsecondary education. Potential reasons could be that our materials (including information presented on the website and the language used in introductory e-mails) were not inviting or easily understood by these participants.

Improving this and future coaching protocols will require understanding the key components of coaching protocols that increase their effectiveness. The current coaching protocol was developed by drawing from two models of support for TDIs: the supportive accountability and the efficiency models. In the present study, we did not wish to test these models against each other, and attempted to create our best possible version of a protocol for our single coaching condition by drawing from both models. Thus, it is impossible for us to disentangle whether specific elements from the different models were particularly effective (e.g., raising failure points during coaching conversations versus holding participants accountable for completing training sessions), though this would be an interesting question for future research.

Despite the concerns raised by some participants, many of the free responses recorded by coaches at the end of coaching sessions indicated that coaching was a very positive experience for some participants. Participants shared former therapy experiences, offered detailed suggestions for improving MindTrails, described which scenarios were helpful, and explained how training helped change their thinking in daily life.

SELECTING AND TRAINING COACHES

There are also lessons learned tied to implementing coaching within a university laboratory setting. All coaches were undergraduate research assistants with varying levels of research and clinical experience. Importantly, nonspecialist providers, mentors, and lay health workers have been harnessed as one potential solution to address the treatment gap (Barnett et al., 2018). In this case, we were curious whether adding minimal human support would encourage users to engage in MindTrails, which is one relatively low-cost option to increase engagement in digital interventions. Thus, with an eye on scaling MindTrails in the future, we wished to test whether undergraduate research assistants would be able to effectively deliver the coaching protocol. It is important to create a protocol and training procedure that allows coaches to feel effective in providing supportive accountability. Based on our adherence checks, the student coaches were able to follow the protocol.

LIMITATIONS AND CONCLUSION

Within the current study, coaching was offered to individuals who were predicted to drop out of MindTrails prematurely, so these users are not representative of individuals seeking TDIs more broadly. Moreover, only 39 of 111 participants who had already stopped responding to our e-mails responded to our survey requesting additional information about their attitudes about coaching. In addition, some participants were not able to receive coaching owing to scheduling difficulties, in part from the limitations of our coaches and in part from time zone differences for international participants. Flexibly tailoring evidence-based treatments based on clients’ preferences and challenges (Georgiadis et al., 2020) is important in traditional psychotherapy settings, and is also important for the development of TDIs and coaching protocols.

In the current paper, we describe the addition of coaching as just one way of potentially increasing engagement in a free, web-based program for individuals seeking to reduce their symptoms of anxiety. However, we acknowledge that there are many other ways that engagement in TDIs can be increased. Recent evidence suggests that credibility of MindTrails as an effective intervention for reducing anxiety symptoms predicts lower dropout (Hohensee et al., 2020). This suggests that perhaps coaches and others providing support for TDIs can be better trained in describing how specific TDIs work, and why they are likely effective. Taking a user-centered design approach, researchers can learn what makes CBM-I (and other TDIs) seem more or less credible, and can optimize how these interventions are marketed and described to increase engagement. Future work may also benefit from more closely testing the integration of theoretical approaches into coaching protocols. For example, the Fogg behavior model (Fogg et al., 2009) highlights how motivation, ability, and prompts interact to modify behavior. Simple additions to coaching protocols, including assessing users’ desire to participate in activities that may cause anxiety, may allow coaches to more adequately provide a level of supportive accountability that fits the individual user.

Nonetheless, the current study helps guide recommendations that may improve future coaching protocols for TDIs for anxiety symptoms. We recommend taking a user-centered design approach to creating and implementing coaching for TDIs. Listening to key stakeholders throughout the design process will allow for these protocols to best meet the needs of users. We also recommend a flexible coaching schedule. When possible, we recommend allowing users to make decisions regarding coaching, such as whether interactions occur via call or texting, and are synchronous or asynchronous. We also recommend that coaches normalize engagement issues with TDIs early in the relationship, in addition to coaches discussing how and why TDIs work (with the goal of increasing credibility).

Taken together, it is critical to consider the unique challenges of coaching individuals with anxiety, especially when social fears are driving their choice to seek TDIs. We do not believe coaching will be a panacea to address the engagement challenges that limit TDIs’ clinical utility but think that providing the option of coaches with maximal choice regarding coaching delivery format may help a substantial portion of users get the most out of their TDI and increase the reach and impact of care.

Acknowledgments

We would like to thank undergraduate research assistants in the Program for Anxiety, Cognition, and Treatment Laboratory, in particular, Casey Baker, Kirby Eule, Irena Kesselring, Amelia Wilt, Sophie Ali, Leila Sachner, Kelsey Ainslie, Noah French, and Claudia Calicho-Mamani. We would also like to thank Dan Funk for programming and developing MindTrails.

This work was supported by the National Institute of Mental Health (R01MH113752) awarded to Bethany A. Teachman, the Center for Evidence-Based Mentoring, and the University of Virginia COVID-19 Rapid Response Grant.

Footnotes

1. Preregistered hypotheses for gender focused on a user and coach gender interaction; however, those results are not presented in this paper owing to concerns about participants’ ability to interpret their coaches’ gender from their first name (including multiple coaches with names that do not necessarily correspond to traditional Western gender-naming traditions). Full results of the gender interaction analyses are available from A.W. We would like to thank an anonymous reviewer for raising this concern.

2. Nine individuals had two DASS-AS sets of responses. For those individuals, the scores were calculated based on means of each item (responses). One individual with two DASS-AS scores omitted an item while completing one DASS-AS, so instead of taking the mean, that item was omitted for the final version of that person’s data.

3. Although the nonresponse survey was meant to be sent to the 108 individuals who did not respond to scheduling efforts from coaches, four additional individuals received the e-mail (early on in the program time line): two who scheduled a phone call but did not pick up, one who explicitly declined coaching, and one who dropped out midway through the program. One individual was not sent the nonresponse survey after not responding to scheduling attempts because the e-mail address was not valid.

4. We would like to thank an anonymous reviewer for suggesting this additional analysis.

Conflict of Interest Statement

The authors declare that there are no conflicts of interest.

References

- Abramowitz JS, Deacon BJ, & Whiteside SPH (2019). Exposure therapy for anxiety: Principles and practice (2nd ed.). Guilford Press; https://books.google.com/books?id=YZ-BDwAAQBAJ. [Google Scholar]

- Alonso J, Liu Z, Evans-Lacko S, Sadikova E, Sampson N, Chatterji S, Abdulmalik J, Aguilar-Gaxiola S, Al-Hamzawi A, Andrade LH, Bruffaerts R, Cardoso G, Cia A, Florescu S, de Girolamo G, Gureje O, Haro JM, He Y, de Jonge P, ... WHO World Mental Health Survey Collaborators (2018). Treatment gap for anxiety disorders is global: Results of the World Mental Health Surveys in 21 countries. Depression and Anxiety, 35(3), 195–208. 10.1002/da.22711. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Andersson G, Cuijpers P, Carlbring P, Riper H, & Hedman E (2014). Guided Internet-based vs. face-to-face cognitive behavior therapy for psychiatric and somatic disorders: A systematic review and meta-analysis. World Psychiatry, 13(3), 288–295. 10.1002/wps.20151. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Andrade LH, Alonso J, Mneimneh Z, Wells JE, Al-Hamzawi A, Borges G, Bromet E, Bruffaerts R, de Girolamo G, de Graaf R, Florescu S, Gureje O, Hinkov HR, Hu C, Huang Y, Hwang I, Jin R, Karam EG, Kovess-Masfety V, ... Kessler RC (2014). Barriers to mental health treatment: Results from the WHO World Mental Health (WMH) Surveys. Psychological Medicine, 44(6), 1303–1317. 10.1017/S0033291713001943. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Antony MM, Bieling PJ, Cox BJ, Enns MW, & Swinson RP (1998). Psychometric properties of the 42-item and 21-item versions of the Depression Anxiety Stress Scales in clinical groups and a community sample. Psychological Assessment, 10(2), 176–181. 10.1037/1040-3590.10.2.176. [DOI] [Google Scholar]

- Arnberg FK, Linton SJ, Hultcrantz M, Heintz E, & Jonsson U (2014). Internet-delivered psychological treatments for mood and anxiety disorders: A systematic review of their efficacy, safety, and cost-effectiveness. PLoS One, 9 (5). 10.1371/journal.pone.0098118 e98118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arnow BA, Steidtmann D, Blasey C, Manber R, Constantino MJ, Klein DN, Markowitz JC, Rothbaum BO, Thase ME, Fisher AJ, & Kocsis JH (2013). The relationship between the therapeutic alliance and treatment outcome in two distinct psychotherapies for chronic depression. Journal of Consulting and Clinical Psychology, 81(4), 627–638. 10.1037/a0031530. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baee S, Baglione A, Spears T, Lewis E, Behan H, Funk D, Eberle JW, Wang H, Teachman BA, & Barnes L (2021). DIME: Digital mental health attrition prediction in early stage (in preparation). [Google Scholar]

- Barnett ML, Lau AS, & Miranda J (2018). Lay health worker involvement in evidence-based treatment delivery: A conceptual model to address disparities in care. Annual Review of Clinical Psychology, 14(1), 185–208. 10.1146/annurev-clinpsy-050817-084825. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Batterham PJ, Neil AL, Bennett K, Griffiths KM, & Christensen H (2008). Predictors of adherence among community users of a cognitive behavior therapy website. Patient Preference and Adherence, 2, 97–105 https://pubmed.ncbi.nlm.nih.gov/19920949. [PMC free article] [PubMed] [Google Scholar]

- Baumeister H, Reichler L, Munzinger M, & Lin J (2014). The impact of guidance on Internet-based mental health interventions—A systematic review. Internet Interventions, 1 (4), 205–215. 10.1016/j.invent.2014.08.003. [DOI] [Google Scholar]

- Baxter AJ, Scott KM, Vos T, & Whiteford HA (2013). Global prevalence of anxiety disorders: A systematic review and meta-regression. Psychological Medicine, 43(5), 897–910. 10.1017/s003329171200147x. [DOI] [PubMed] [Google Scholar]

- Beadel JR, Smyth FL, & Teachman BA (2014). Change processes during cognitive bias modification for obsessive compulsive beliefs. Cognitive Therapy and Research, 38(2), 103–119. 10.1007/s10608-013-9576-6.

- Beard C (2011). Cognitive bias modification for anxiety: Current evidence and future directions. Expert Review of Neurotherapeutics, 11(2), 299–311. 10.1586/ern.10.194.

- Beard C, Rifkin LS, Silverman AL, & Björgvinsson T (2019). Translating CBM-I into real-world settings: Augmenting a CBT-based psychiatric hospital program. Behavior Therapy, 50(3), 515–530. 10.1016/j.beth.2018.09.002.

- Beard C, Silverman AL, Forgeard M, Wilmer MT, Torous J, & Björgvinsson TS (2019). Smartphone, social media, and mental health app use in an acute transdiagnostic psychiatric sample. JMIR mHealth and uHealth, 7(6), e13364. 10.2196/13364.

- Boß L, Lehr D, Schaub MP, Paz Castro R, Riper H, Berking M, & Ebert DD (2018). Efficacy of a web-based intervention with and without guidance for employees with risky drinking: Results of a three-arm randomized controlled trial. Addiction, 113(4), 635–646. 10.1111/add.14085.

- Dennison L, Morrison L, Lloyd S, Phillips D, Stuart B, Williams S, Bradbury K, Roderick P, Murray E, Michie S, Little P, & Yardley L (2014). Does brief telephone support improve engagement with a web-based weight management intervention? Randomized controlled trial. Journal of Medical Internet Research, 16(3), e95. 10.2196/jmir.3199.

- Domhardt M, Geßlein H, Rezori RE, & Baumeister H (2019). Internet- and mobile-based interventions for anxiety disorders: A meta-analytic review of intervention components. Depression and Anxiety, 36(3), 213–224. 10.1002/da.22860.

- Duffecy J, Kinsinger S, Ludman E, & Mohr DC (2010). Telephone coaching to support adherence to Internet interventions (TeleCoach): Coach manual. Unpublished manual.

- Eberle JW, Daniel KE, Baee S, Behan HC, Silverman AL, Calicho-Mamani C, Baglione AN, Werntz A, French NJ, Ji JL, Hohensee N, Tong X, Boukhechba M, Funk DH, Barnes LE, & Teachman BA (2021). Web-based interpretation training to reduce anxiety: A sequential multiple-assignment randomized trial (in preparation). https://osf.io/af4n7.

- Fodor LA, Georgescu R, Cuijpers P, Szamoskozi S, David D, Furukawa TA, & Cristea IA (2020). Efficacy of cognitive bias modification interventions in anxiety and depressive disorders: A systematic review and network meta-analysis. Lancet Psychiatry, 7(6), 506–514. 10.1016/S2215-0366(20)30130-9.

- Fogg BJ, Cuellar G, & Danielson D (2009). Motivating, influencing, and persuading users. In Jacko JA (Ed.), The human–computer interaction handbook (pp. 358–370). Erlbaum.

- Georgiadis C, Peris TS, & Comer JS (2020). Implementing strategic flexibility in the delivery of youth mental health care: A tailoring framework for thoughtful clinical practice. Evidence-Based Practice in Child and Adolescent Mental Health, 5(3), 215–232. 10.1080/23794925.2020.1796550.

- Graham AK, Greene CJ, Kwasny MJ, Kaiser SM, Lieponis P, Powell T, & Mohr DC (2020). Coached mobile app platform for the treatment of depression and anxiety among primary care patients: A randomized clinical trial. JAMA Psychiatry, 77(9), 906–914. 10.1001/jamapsychiatry.2020.1011.

- Hohensee N, Meyer MJ, & Teachman BA (2020). The effect of confidence on dropout rate and outcomes in online cognitive bias modification. Journal of Technology in Behavioral Science, 5(3), 226–234. 10.1007/s41347-020-00129-8.

- Jacoby RJ (2020). Intolerance of uncertainty. In Abramowitz JS & Blakey SM (Eds.), Clinical handbook of fear and anxiety: Maintenance processes and treatment mechanisms (pp. 45–63). 10.1037/0000150-003.

- Ji JL, Baee S, Zhang D, Calicho-Mamani CP, Meyer MJ, Funk D, Portnow S, Barnes L, & Teachman BA (2021). Multi-session online interpretation bias training for anxiety in a community sample. Behaviour Research and Therapy, 142, 103864. 10.1016/j.brat.2021.103864.

- Karyotaki E, Kleiboer A, Smit F, Turner DT, Pastor AM, Andersson G, Berger T, Botella C, Breton JM, Carlbring P, Christensen H, de Graaf E, Griffiths K, Donker T, Farrer L, Huibers MJH, Lenndin J, Mackinnon A, Meyer B, ... Cuijpers P (2015). Predictors of treatment dropout in self-guided web-based interventions for depression: An “individual patient data” meta-analysis. Psychological Medicine, 45(13), 2717–2726. 10.1017/s0033291715000665.

- Kashdan TB, Goodman FR, Machell KA, Kleiman EM, Monfort SS, Ciarrochi J, & Nezlek JB (2014). A contextual approach to experiential avoidance and social anxiety: Evidence from an experimental interaction and daily interactions of people with social anxiety disorder. Emotion, 14(4), 769–781. 10.1037/a0035935.

- Kazdin AE, & Blase SL (2011). Rebooting psychotherapy research and practice to reduce the burden of mental illness. Perspectives on Psychological Science, 6(1), 21–37. 10.1177/1745691610393527.

- Kleiboer A, Donker T, Seekles W, van Straten A, Riper H, & Cuijpers P (2015). A randomized controlled trial on the role of support in Internet-based problem solving therapy for depression and anxiety. Behaviour Research and Therapy, 72, 63–71. 10.1016/j.brat.2015.06.013.

- Lattie EG, Graham AK, Hadjistavropoulos HD, Dear BF, Titov N, & Mohr DC (2019). Guidance on defining the scope and development of text-based coaching protocols for digital mental health interventions. Digital Health, 16(5). 10.1177/2055207619896145.

- Lovibond SH, & Lovibond PF (1995). Manual for the depression anxiety stress scales (2nd ed.). Psychology Foundation.

- MacLeod C, & Mathews A (2012). Cognitive bias modification approaches to anxiety. Annual Review of Clinical Psychology, 8, 189–217. 10.1146/annurev-clinpsy-032511-143052.

- Mohr DC, Cuijpers P, & Lehman K (2011). Supportive accountability: A model for providing human support to enhance adherence to eHealth interventions. Journal of Medical Internet Research, 13(1), e30. 10.2196/jmir.1602.

- Mohr DC, Duffecy J, Ho J, Kwasny M, Cai X, Burns MN, & Begale M (2013). A randomized controlled trial evaluating a manualized TeleCoaching protocol for improving adherence to a web-based intervention for the treatment of depression. PLoS One, 8(8), e70086. 10.1371/journal.pone.0070086.

- Mohr DC, Schueller SM, Tomasino KN, Kaiser SM, Alam N, Karr C, Vergara JL, Gray EL, Kwasny MJ, & Lattie EG (2019). Comparison of the effects of coaching and receipt of app recommendations on depression, anxiety, and engagement in the IntelliCare platform: Factorial randomized controlled trial. Journal of Medical Internet Research, 21(8), e13609. 10.2196/13609.

- Mott JM, Stanley MA, Street RL Jr., Grady RH, & Teng EJ (2014). Increasing engagement in evidence-based PTSD treatment through shared decision-making: A pilot study. Military Medicine, 179(2), 143–149. 10.7205/MILMED-D-13-00363.

- Norman SB, Hami Cissell S, Means-Christensen AJ, & Stein MB (2006). Development and validation of an Overall Anxiety Severity and Impairment Scale (OASIS). Depression and Anxiety, 23(4), 245–249. 10.1002/da.20182.

- Oinas-Kukkonen H, & Harjumaa M (2018). Persuasive systems design: Key issues, process model and system features. In Howlett M & Mukherjee I (Eds.), Routledge handbook of policy design (pp. 87–105). 10.4324/9781351252928-6.

- Ondersma SJ, & Walters ST (2020). Clinician’s guide to evaluating and developing eHealth interventions for mental health. Psychiatric Research and Clinical Practice, 2(1), 26–33. 10.1176/appi.prcp.2020.20190036.

- Pew Research Center (2019a). Internet/broadband fact sheet. https://www.pewresearch.org/internet/fact-sheet/internet-broadband/.

- Pew Research Center (2019b). Mobile fact sheet. https://www.pewresearch.org/internet/fact-sheet/mobile/.

- Schueller SM, Tomasino KN, & Mohr DC (2017). Integrating human support into behavioral intervention technologies: The efficiency model of support. Clinical Psychology: Science and Practice, 24(1), 27–45. 10.1111/cpsp.12173.

- Steele LS, Dewa CS, Lin E, & Lee KL (2007). Education level, income level and mental health services use in Canada: Associations and policy implications. Health Policy, 3(1), 96–106.

- Tomasino KN, Lattie EG, & Mohr DC (2017). TeleHealth Study coaching manual for iCBT: RCT version 2.