Abstract

A growing number of artificial intelligence (AI)-based clinical decision support systems are showing promising performance in preclinical, in silico, evaluation, but few have yet demonstrated real benefit to patient care. Early stage clinical evaluation is important to assess an AI system’s actual clinical performance at small scale, ensure its safety, evaluate the human factors surrounding its use, and pave the way to further large scale trials. However, the reporting of these early studies remains inadequate. The present statement provides a multistakeholder, consensus-based reporting guideline for the Developmental and Exploratory Clinical Investigations of DEcision support systems driven by Artificial Intelligence (DECIDE-AI). We conducted a two round, modified Delphi process to collect and analyse expert opinion on the reporting of early clinical evaluation of AI systems. Experts were recruited from 20 predefined stakeholder categories. The final composition and wording of the guideline was determined at a virtual consensus meeting. The checklist and the Explanation & Elaboration (E&E) sections were refined based on feedback from a qualitative evaluation process. 123 experts participated in the first round of Delphi, 138 in the second, 16 in the consensus meeting, and 16 in the qualitative evaluation. The DECIDE-AI reporting guideline comprises 17 AI specific reporting items (made of 28 subitems) and 10 generic reporting items, with an E&E paragraph provided for each. Through consultation and consensus with a range of stakeholders, we have developed a guideline comprising key items that should be reported in early stage clinical studies of AI-based decision support systems in healthcare. By providing an actionable checklist of minimal reporting items, the DECIDE-AI guideline will facilitate the appraisal of these studies and replicability of their findings.

The prospect of improved clinical outcomes and more efficient health systems has fuelled a rapid rise in the development and evaluation of artificial intelligence (AI) systems over the last decade. Because most AI systems within healthcare are complex interventions designed as clinical decision support systems, rather than autonomous agents, the interactions between the AI systems, their users and the implementation environments are defining components of the AI interventions’ overall potential effectiveness. Therefore, bringing AI systems from mathematical performance to clinical utility needs an adapted, stepwise implementation and evaluation pathway that addresses the complexity of this collaboration between two independent forms of intelligence, beyond measures of effectiveness alone.1 Despite indications that some AI-based algorithms now match the accuracy of human experts within preclinical in silico studies,2 there is little high quality evidence for improved clinician performance or patient outcomes in clinical studies.3 4 Reasons proposed for this so called AI-chasm5 are a lack of the expertise needed to translate a tool into practice, a lack of funding available for translation, a general underappreciation of clinical research as a translation mechanism,6 and, more specifically, a disregard for the potential value of the early stages of clinical evaluation and the analysis of human factors.7

Key messages.

DECIDE-AI is a stage specific reporting guideline for the early, small scale and live clinical evaluation of decision support systems based on artificial intelligence

The DECIDE-AI checklist presents 27 items considered as minimum reporting standards. It is the result of a consensus process involving 151 experts from 18 countries and 20 stakeholder groups

DECIDE-AI aims to improve the reporting around four key aspects of early stage live AI evaluation: proof of clinical utility at small scale, safety, human factors evaluation, and preparation for larger scale summative trials

The challenges of early stage clinical AI evaluation (see box 1) are similar to those of complex interventions, as reported by the Medical Research Council dedicated guidance,1 and surgical innovation, as described by the IDEAL Framework.8 9 For example, in all three cases, the evaluation needs to consider the potential for iterative modification of the interventions and the characteristics of the operators (or users) performing them. In this regard, the IDEAL framework offers readily implementable and stage specific recommendations for the evaluation of surgical innovations under development. IDEAL stages 2a/2b, for example, are described as development and exploratory stages, during which the intervention is refined, operators’ learning curves are analysed, and the influence of patient and operator variability on effectiveness is explored prospectively, prior to large scale efficacy testing.

Box 1. Methodological challenges of the artificial intelligence (AI)-based decision support system evaluation.

The clinical evaluation of AI-based decision support systems presents several methodological challenges, all of which will likely be encountered at an early stage. These are the needs to:

account for the complex intervention nature of these systems and evaluate their integration within existing ecosystems

account for user variability and the added biases occurring as a result

consider two collaborating forms of intelligence (human and AI system) and therefore integrate human factors considerations as a core component

consider both physical patients and their data representations

account for the changing nature of the intervention (either due to early prototyping, version updates, or continuous learning design) and to analyse related performance changes

minimise the potential of this technology to embed and reproduce existing health inequality and systemic biases

estimate the generalisability of findings across sites and populations

enable reproducibility of the findings in the context of a dynamic innovation field and intellectual property protection

Early stage clinical evaluation of AI systems should also place a strong emphasis on validation of performance and safety, in a similar manner to phase 1 and 2 pharmaceutical trials, before efficacy evaluation at scale in phase 3. For example, small changes in the distribution of the underlying data between the algorithm training and clinical evaluation populations (so called dataset shift) can lead to significant variation in clinical performance and expose patients to potential unexpected harm.10 11

Human factors (or ergonomics) evaluations are commonly conducted in safety critical fields such as aviation, the military and energy sectors.12 13 14 Their assessments evaluate the impact of a device or procedure on their users’ physical and cognitive performance, and vice versa. Human factors, such as usability evaluation, are an integral part of the regulatory process for new medical devices15 16 and their application to AI specific challenges is attracting growing attention in the medical literature.17 18 19 20 However, few clinical AI studies report on the evaluation of human factors,3 and usability evaluation of related digital health technology is often performed with inconsistent methodology and reporting.21

Other areas of suboptimal reporting of clinical AI studies have also recently been highlighted,3 22 such as implementation environment, user characteristics and selection process, training provided, underlying algorithm identification, and disclosure of funding sources. Transparent reporting is necessary for informed study appraisal and to facilitate reproducibility of study results. In a relatively new and dynamic field such as clinical AI, comprehensive reporting is also key to construct a common and comparable knowledge base to build upon.

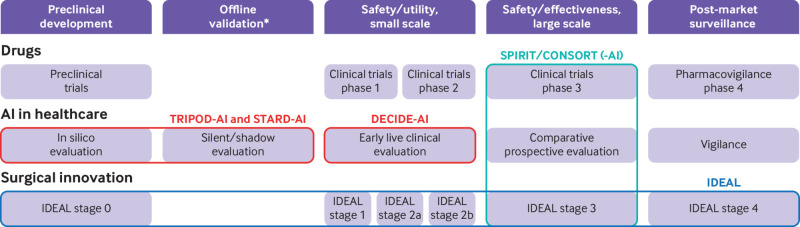

Guidelines already exist, or are under development, for the reporting of preclinical, in silico, studies of AI systems, their offline validation, and for their evaluation in large comparative studies23 24 25 26; but there is an important stage of research between these, namely studies focussing on the initial clinical use of AI systems, for which no such guidance currently exists (fig 1 and table 1). This early clinical evaluation provides a crucial scoping evaluation of clinical utility, safety, and human factors challenges in live clinical settings. By investigating the potential obstacles to clinical evaluation at scale and informing protocol design, these studies are also important stepping stones toward definitive comparative trials.

Fig 1.

Comparison of development pathways for drug therapies, artificial intelligence (AI) in healthcare, and surgical innovation. The coloured lines represent reporting guidelines, some of which are study design specific (TRIPOD-AI, STARD-AI, SPIRIT/CONSORT, SPIRIT/CONSORT-AI), others stage specific (DECIDE-AI, IDEAL). Depending on the context, more than one study design can be appropriate for each stage. *Only apply to AI in healthcare

Table 1.

Overview of existing and upcoming artificial intelligence (AI) reporting guidelines

| Name | Stage | Study design | Comment |
|---|---|---|---|
| TRIPOD-AI | Preclinical development | Prediction model evaluation* | Extension of TRIPOD. Used to report prediction models (diagnostic or prognostic) development, validation and updates. Focuses on model performance |
| STARD-AI | Preclinical development, offline validation | Diagnostic accuracy studies* | Extension of STARD. Used to report diagnostic accuracy studies, either at development stage or as an offline validation in clinical settings. Focuses on diagnostic accuracy |
| DECIDE-AI | Early live clinical evaluation* | Various (prospective cohort studies, non-randomised controlled trials, ...)† with additional features such as modification of intervention, analysis of prespecified subgroups, or learning curve analysis | Stand alone guideline. Used to report the early evaluation of AI systems as an intervention in live clinical settings (small scale, formative evaluation), independently of the study design and AI system modality (diagnostic, prognostic, therapeutic). Focuses on clinical utility, safety, and human factors |
| SPIRIT-AI | Comparative prospective evaluation | Randomised controlled trials (protocol)* | Extension of SPIRIT. Used to report the protocols of randomised controlled trials evaluating AI systems as interventions |
| CONSORT-AI | Comparative prospective evaluation | Randomised controlled trials* | Extension of CONSORT. Used to report randomised controlled trials evaluating AI systems as interventions (large scale, summative evaluation), independently of the AI system modality (diagnostic, prognostic, therapeutic). Focuses on effectiveness and safety |

*Primary target of the guidelines, either a specific stage or a specific study design.

†Although reporting guidelines exist for some of these study designs (eg, STROBE for cohort studies), none of them covers all the core aspects of AI system early stage evaluation and none would fit all possible study designs; DECIDE-AI was therefore developed as a new stand alone reporting guideline for these studies.

To address this gap, we convened an international, multistakeholder group of experts in a Delphi exercise to produce the DECIDE-AI reporting guideline. Focusing on AI systems supporting, rather than replacing, human intelligence, DECIDE-AI aims to improve the reporting of studies describing the evaluation of AI-based decision support systems during their early, small scale implementation in live clinical settings (ie, the supported decisions have an actual impact on patient care). Whereas TRIPOD-AI, STARD-AI, SPIRIT-AI, and CONSORT-AI are specific to particular study designs, DECIDE-AI is focused on the evaluation stage and does not prescribe a fixed study design.

Methods

The DECIDE-AI guideline was developed through an international expert consensus process and in accordance with the EQUATOR Network’s recommendations for guideline development.27 A Steering Group was convened to oversee the guideline development process. Its members were selected to cover a broad range of expertise and ensure a seamless integration with other existing guidelines. We conducted a modified Delphi process,28 with two rounds of feedback from participating experts and one virtual consensus meeting. The project was reviewed by the University of Oxford Central University Research Ethics Committee (approval number R73712/RE003) and registered with the EQUATOR Network. Informed consent was obtained from all participants in the Delphi process and consensus meeting.

Initial item list generation

An initial list of candidate items was developed based on expert opinion informed by a systematic literature review focusing on the evaluation of AI-based diagnostic decision support systems,3 an additional literature search about existing guidance for AI evaluation in clinical settings (search strategy available on the Open Science Framework29), literature recommended by Steering Group members,19 22 30 31 32 33 34 and institutional documents.35 36 37 38

Expert recruitment

Experts were recruited through five different channels: invitation to experts recommended by the Steering Group, invitation to authors of the publications identified through the initial literature searches, a call to contribute published in a commentary article in a medical journal,7 consideration of any expert contacting the Steering Group on their own initiative, and invitation to experts recommended by the Delphi participants (snowballing). Before starting the recruitment process, 20 target stakeholder groups were defined, namely: administrators/hospital management, allied health professionals, clinicians, engineers/computer scientists, entrepreneurs, epidemiologists, ethicists, funders, human factors specialists, implementation scientists, journal editors, methodologists, patient representatives, payers/commissioners, policy makers/official institution representatives, private sector representatives, psychologists, regulators, statisticians, and trialists.

One hundred and thirty eight experts agreed to participate in the first round of Delphi, of whom 123 (89%) completed the questionnaire (83 identified from Steering Group recommendation, 12 from their publications, 21 contacting the Steering Group on their own initiative, and seven through snowballing). One hundred and sixty two experts were invited to take part in the second round of Delphi, of whom 138 (85%) completed the questionnaire. Of these, 110 had also completed the first round (continuity rate of 89%)39 and 28 were new participants. The participating experts represented 18 countries and spanned all 20 of the defined stakeholder groups (see supplementary notes 1 and supplementary tables 1 and 2).
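The participation figures above can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers quoted in the text; the helper `pct` and the variable names are illustrative, and the continuity rate is assumed to be the share of first round completers who also completed the second round, which reproduces the reported 89%.

```python
def pct(part, whole):
    """Percentage rounded to the nearest integer, as reported in the text."""
    return round(100 * part / whole)

# Figures quoted in the text
round1_agreed, round1_completed = 138, 123
round2_invited, round2_completed = 162, 138
returning = 110                 # completed both rounds
channels = [83, 12, 21, 7]      # round 1 completers by recruitment channel

# The per-channel counts add up to the round 1 completers
assert sum(channels) == round1_completed

new_in_round2 = round2_completed - returning        # new participants in round 2
unique_experts = round1_completed + new_in_round2   # experts in at least one round

round1_rate = pct(round1_completed, round1_agreed)
round2_rate = pct(round2_completed, round2_invited)
continuity_rate = pct(returning, round1_completed)  # assumed definition
```

Under these assumptions the number of unique experts across both rounds comes to 151, which matches the figure given in the key messages.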

Delphi process

The Delphi surveys were designed and distributed via the REDCap web application.40 41 The first round consisted of four open ended questions on aspects viewed by the Delphi participants as necessary to be reported during early stage clinical evaluation. The participating experts were then asked to rate, on a 1 to 9 scale, the importance of items in the initial list proposed by the research team. Ratings of 1 to 3 on the scale were defined as “not important,” 4 to 6 as “important but not critical,” and 7 to 9 as “important and critical.” Participants were also invited to comment on existing items and to suggest new items. An inductive thematic analysis of the narrative answers was performed independently by two reviewers (BV and MN) and conflict was resolved by consensus.42 The themes identified were used to correct any omissions in the initial list and to complement the background information about proposed items. Summary statistics of the item scores were produced for each stakeholder group, by calculating the median score, interquartile range, and the percentage of participants scoring an item 7 or higher, as well as 3 or lower (the prespecified inclusion and exclusion cut-offs, respectively). A revised item list was developed based on the results of the first round.
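The per item summary statistics described above can be sketched in Python as follows. This is a minimal illustration, not the analysis code used for the guideline: the function name `summarise_item`, the example ratings, and the quartile method are assumptions (the text does not state how quartiles were computed).

```python
from statistics import median, quantiles

def summarise_item(scores):
    """Summarise 1 to 9 Delphi ratings for one item (illustrative helper)."""
    if len(scores) < 2 or any(s < 1 or s > 9 for s in scores):
        raise ValueError("need at least two scores on the 1 to 9 scale")
    # Quartile method is an assumption; the source does not specify one.
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    n = len(scores)
    return {
        "median": median(scores),
        "iqr": (q1, q3),
        # Share rating the item "important and critical" (7 to 9)
        "pct_7_or_higher": 100 * sum(s >= 7 for s in scores) / n,
        # Share rating the item "not important" (1 to 3)
        "pct_3_or_lower": 100 * sum(s <= 3 for s in scores) / n,
    }

# Hypothetical ratings from one stakeholder group
example = summarise_item([9, 8, 7, 7, 6, 5, 3, 8, 9, 7])
```

In this hypothetical group, seven of ten raters scored the item 7 or higher and one scored it 3 or lower. Running the same function once per stakeholder group would give the grouped summaries the text describes.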

In the second round, the participants were shown the results of the first round and invited to rate and comment on the items in the revised list. The detailed survey questions of the two rounds of Delphi can be found on the Open Science Framework (OSF).29 All analyses of item scores and comments were performed independently by two members of the research team (BV and MN), using NVivo (QSR International, v1.0) and Python (Python Software Foundation, v3.8.5). Conflicts were resolved by consensus.

The initial item list contained 54 items. 120 sets of responses were included in the analysis of the first round of Delphi (one set of responses was excluded due to a reasonable suspicion of scale inversion, two due to completion after the deadline). The first round yielded 43 986 words of free text answers to the four initial open ended questions, 6419 item scores, 228 comments, and 64 proposals for new items. The thematic analysis identified 109 themes. In the revised list, nine items remained unchanged, 22 were reworded/completed, 21 were reorganised (merged/split, becoming 13 items), two items were dropped, and nine new items were added, for a total of 53 items. The two items dropped were related to health economic assessment. They were the only two items with a median score below 7 (median 6, interquartile range 2-9 for both) and received numerous comments describing them as an entirely separate aspect of evaluation. The revised list was reorganised into items and subitems. 136 sets of answers were included in the analysis of the second round of Delphi (one set of answers was excluded due to lack of consideration for the questions, one due to completion after the deadline). The second round yielded 7101 item scores and 923 comments. The results of the thematic analysis, the initial and revised item lists, as well as per item narrative and graphical summaries of the feedback received in both rounds can be found on OSF.29
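The item and response counts in this paragraph can be reconciled with simple bookkeeping. The sketch below uses only the figures quoted in the text; the dictionary keys and variable names are illustrative.

```python
# How the 54 initial items were handled after round 1
initial = {"unchanged": 9, "reworded": 22, "reorganised": 21, "dropped": 2}
initial_total = sum(initial.values())

# Composition of the revised list: the 21 reorganised items became 13,
# the two dropped items disappear, and nine new items are added
revised = {"unchanged": 9, "reworded": 22, "reorganised_into": 13, "new": 9}
revised_total = sum(revised.values())

# Response sets analysed = questionnaires completed minus exclusions
round1_sets = 123 - (1 + 2)  # scale inversion, two late completions
round2_sets = 138 - (1 + 1)  # questions not considered, one late completion
```

The totals come to 54 initial items, 53 revised items, and 120 and 136 analysed response sets, in agreement with the text.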

Consensus meeting

A virtual consensus meeting was held on three occasions between 14 and 16 June 2021, to debate and agree the content and wording of the DECIDE-AI reporting guideline. The 16 members of the Consensus Group (see supplementary notes 1 and supplementary tables 2a and 2b) were selected to ensure a balanced representation of the key stakeholder groups, as well as geographic diversity. All items from the second round of Delphi were discussed and voted on during the consensus meeting. For each item, the results of the Delphi process were presented to the Consensus Group members and a vote was carried out anonymously using the Vevox online application (www.vevox.com). A prespecified cut-off of 80% agreement among the Consensus Group members (excluding blank votes and abstentions) was necessary for an item to be included. To highlight the new, AI specific reporting items, the Consensus Group divided the guideline into two item lists: an AI specific items list, which represents the main novelty of the DECIDE-AI guideline, and a second list of generic reporting items, which achieved high consensus but are not AI specific and could apply to most types of study. The Consensus Group selected 17 items (made of 28 subitems in total) for inclusion in the AI specific list and 10 items for inclusion in the generic reporting item list. Supplementary table 3 provides a summary of the Consensus Group meeting votes.
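The inclusion rule used at the consensus meeting can be illustrated with a short Python sketch. The vote labels, the function name `item_included`, and the example tallies are hypothetical and do not reflect the Vevox export format. Treating the cut-off as "at least 80%" and treating an item with no valid votes as not included are also assumptions.

```python
def item_included(votes, threshold_pct=80):
    """Return True if an item reaches the inclusion cut-off.

    `votes` is a list of "include", "exclude", "blank" or "abstain"
    (hypothetical labels). Blank votes and abstentions are left out
    of the denominator, as described in the text.
    """
    valid = [v for v in votes if v in ("include", "exclude")]
    if not valid:
        return False  # assumption: no valid votes means no inclusion
    # Integer comparison avoids floating point edge cases at exactly 80%
    return 100 * valid.count("include") >= threshold_pct * len(valid)

# Hypothetical tallies for a 16 member group
with_abstentions = ["include"] * 12 + ["exclude"] * 2 + ["abstain"] * 2
without_abstentions = ["include"] * 12 + ["exclude"] * 4

passes = item_included(with_abstentions)     # 12 of 14 valid votes
fails = item_included(without_abstentions)   # 12 of 16 valid votes
```

The two tallies have the same number of votes in favour, but only the first reaches the cut-off, because abstentions shrink the denominator.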

Qualitative evaluation

The drafts of the guideline and of the Explanation and Elaboration (E&E) sections were sent for qualitative evaluation to a group of 16 selected experts with experience in AI system implementation or in the peer reviewing of literature related to AI system evaluation (see supplementary notes 1), all of whom were independent of the Consensus Group. These 16 experts were asked to comment on the clarity and applicability of each AI specific item, using a custom form (available on OSF29). Item wording amendments and modifications to the E&E sections were made based on the feedback from the qualitative evaluation, which was independently analysed by two reviewers (BV and MN), with conflicts resolved by consensus. A glossary of terms (see box 2) was produced to clarify key concepts used in the guideline. The Consensus Group approved the final item lists including any changes made during the qualitative evaluation. Supplementary figures 1 and 2 provide graphical representations of the two item lists’ (AI specific and generic) evolution.

Box 2. Glossary of terms.

AI system

Decision support system incorporating AI and consisting of: (i) the artificial intelligence or machine learning algorithm; (ii) the supporting software platform; and (iii) the supporting hardware platform

AI system version

Unique reference for the form of the AI system and the state of its components at a single point in time. Allows for tracking changes to the AI system over time and comparing between different versions

Algorithm

Mathematical model responsible for learning from data and producing an output

Artificial intelligence (AI)

“Science of developing computer systems which can perform tasks normally requiring human intelligence”26

Bias

“Systematic difference in treatment of certain objects, people, or groups in comparison to others”43

Care pathway

Series of interactions, investigations, decision making and treatments experienced by patients in the course of their contact with a healthcare system for a defined reason

Clinical

Relating to the observation and treatment of actual patients rather than in silico or scenario-based simulations

Clinical evaluation

Set of ongoing activities, analysing clinical data and using scientific methods, to evaluate the clinical performance, effectiveness and/or safety of an AI system, when used as intended35

Clinical investigation

Study performed on one or more human subjects to evaluate the clinical performance, effectiveness and/or safety of an AI system.44 This can be performed in any setting (eg, community, primary care, hospital)

Clinical workflow

Series of tasks performed by healthcare professionals in the exercise of their clinical duties

Decision support system

System designed to support human decision making by providing person specific and situation specific information or recommendations, to improve care or enhance health

Exposure

State of being in contact with, and having used, an AI system or similar digital technology

Human-computer interaction

Bidirectional influence between human users and digital systems through a physical and conceptual interface

Human factors

Also called ergonomics. “The scientific discipline concerned with the understanding of interactions among humans and other elements of a system, and the profession that applies theory, principles, data and methods to design in order to optimise human well-being and overall system performance.” (International Ergonomics Association)

Indication for use

Situation and reason (medical condition, problem, and patient group) where the AI system should be used

In silico evaluation

Evaluation performed via computer simulation outside the clinical settings

Intended use

Use for which an AI system is intended, as stated by its developers, and which serves as the basis for its regulatory classification. The intended use includes aspects of: the targeted medical condition, patient population, user population, use environment, mode of action

Learning curves

Graphical plotting of user performance against experience.45 By extension, analysis of the evolution of user performance with a task as exposure to the task increases. The measure of performance often uses other context specific metrics as a proxy

Live evaluation

Evaluation under actual clinical conditions, in which the decisions made have a direct impact on patient care. As opposed to “offline” or “shadow mode” evaluation where the decisions do not have a direct impact on patient care

Machine learning

“Field of computer science concerned with the development of models/algorithms that can solve specific tasks by learning patterns from data, rather than by following explicit rules. It is seen as an approach within the field of AI”26

Participant

Subject of a research study, on which data will be collected and from whom consent is obtained (or waived). The DECIDE-AI guideline considers that both patients and users can be participants

Patient

Person (or the digital representation of this person) receiving healthcare attention or using health services, and who is the subject of the decision made with the support of the AI system. NB: DECIDE-AI uses the term “patient” pragmatically to simplify the reading of the guideline. Strictly speaking, a person with no health conditions who is the subject of a decision made about them by an AI-based decision support tool to improve their health and wellbeing or for a preventative purpose is not necessarily a “patient” per se

Patient involvement in research

Research carried out “with” or “by” patients or members of the public rather than “to”, “about” or “for” them. (Adapted from the INVOLVE definition of public involvement)

Standard practice

Usual care currently received by the intended patient population for the targeted medical condition and problem. This may not necessarily be synonymous with the state-of-the-art practice

Usability

“Extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use”46

User

Person interacting with the AI system to inform their decision making. This person could be a healthcare professional or a patient

The definitions given pertain to the specific context of DECIDE-AI and the use of the terms in the guideline. They are not necessarily generally accepted definitions and might not always be fully applicable to other areas of research

Recommendations

Reporting item checklist

The DECIDE-AI guideline should be used for the reporting of studies describing the early stage live clinical evaluation of AI-based decision support systems, independently of the study design chosen (fig 1 and table 1). Depending on the chosen study design and if available, authors may also wish to complete the reporting according to a study type specific guideline (eg, STROBE for cohort studies).47 Table 2 presents the DECIDE-AI checklist, comprising the 17 AI specific reporting items and 10 generic reporting items selected by the Consensus Group. Each item comes with an E&E to explain why and how reporting is recommended (see supplementary appendix 1). A downloadable version of the checklist, designed to help researchers and reviewers check compliance when preparing or reviewing a manuscript, is available as supplementary appendix 2. Reporting guidelines are a set of minimum reporting recommendations and are not intended to guide research conduct. Although familiarity with DECIDE-AI might be useful to inform some aspects of the design and conduct of studies within the guideline’s scope,48 adherence to the guideline alone should not be interpreted as an indication of methodological quality (which is the realm of methodological guidelines and risk of bias assessment tools). With increasingly complex AI interventions and evaluations, it might become challenging to report all the required information within a single primary manuscript, in which case references to the study protocol, open science repositories, related publications, and supplementary materials are encouraged.

Table 2.

DECIDE-AI checklist

| Item No | Theme | Recommendation | Reported on page |

|---|---|---|---|

| 1-17 | AI specific reporting items | ||

| I-X | Generic reporting items | ||

| Title and abstract | |||

| 1 | Title | Identify the study as early clinical evaluation of a decision support system based on AI or machine learning, specifying the problem addressed | |

| I | Abstract | Provide a structured summary of the study. Consider including: intended use of the AI system, type of underlying algorithm, study setting, number of patients and users included, primary and secondary outcomes, key safety endpoints, human factors evaluated, main results, conclusions | |

| Introduction | |||

| 2 | Intended use | a) Describe the targeted medical condition(s) and problem(s), including the current standard practice, and the intended patient population(s) | |

| b) Describe the intended users of the AI system, its planned integration in the care pathway, and the potential impact, including patient outcomes, it is intended to have | |||

| II | Objectives | State the study objectives | |

| Methods | |||

| III | Research governance | Provide a reference to any study protocol, study registration number, and ethics approval | |

| 3 | Participants | a) Describe how patients were recruited, stating the inclusion and exclusion criteria at both patient and data level, and how the number of recruited patients was decided | |

| b) Describe how users were recruited, stating the inclusion and exclusion criteria, and how the intended number of recruited users was decided | |||

| c) Describe steps taken to familiarise the users with the AI system, including any training received prior to the study | |||

| 4 | Al system | a) Briefly describe the AI system, specifying its version and type of underlying algorithm used. Describe, or provide a direct reference to, the characteristics of the patient population on which the algorithm was trained and its performance in preclinical development/validation studies | |

| b) Identify the data used as inputs. Describe how the data were acquired, the process needed to enter the input data, the pre-processing applied, and how missing/low-quality data were handled | |||

| c) Describe the AI system outputs and how they were presented to the users (an image may be useful) | |||

| 5 | Implementation | a) Describe the settings in which the AI system was evaluated | |

| b) Describe the clinical workflow/care pathway in which the AI system was evaluated, the timing of its use, and how the final supported decision was reached and by whom | |||

| IV | Outcomes | Specify the primary and secondary outcomes measured | |

| 6 | Safety and errors | a) Provide a description of how significant errors/malfunctions were defined and identified | |

| b) Describe how any risks to patient safety or instances of harm were identified, analysed, and minimised | |||

| 7 | Human factors | Describe the human factors tools, methods or frameworks used, the use cases considered, and the users involved | |

| V | Analysis | Describe the statistical methods by which the primary and secondary outcomes were analysed, as well as any prespecified additional analyses, including subgroup analyses and their rationale | |

| 8 | Ethics | Describe whether specific methodologies were utilised to fulfil an ethics-related goal (such as algorithmic fairness) and their rationale | |

| VI | Patient involvement | State how patients were involved in any aspect of: the development of the research question, the study design, and the conduct of the study | |

| Results | |||

| 9 | Participants | a) Describe the baseline characteristics of the patients included in the study, and report on input data missingness | |

| b) Describe the baseline characteristics of the users included in the study | |||

| 10 | Implementation | a) Report on the user exposure to the AI system, on the number of instances the AI system was used, and on the users’ adherence to the intended implementation | |

| b) Report any significant changes to the clinical workflow or care pathway caused by the AI system | |||

| VII | Main results | Report on the prespecified outcomes, including outcomes for any comparison group if applicable | |

| VIII | Subgroups analysis | Report on the differences in the main outcomes according to the prespecified subgroups | |

| 11 | Modifications | Report any changes made to the AI system or its hardware platform during the study. Report the timing of these modifications, the rationale for each, and any changes in outcomes observed after each of them | |

| 12 | Human-computer agreement | Report on the user agreement with the AI system. Describe any instances of and reasons for user variation from the AI system’s recommendations and, if applicable, users changing their mind based on the AI system’s recommendations | |

| 13 | Safety and errors | a) List any significant errors/malfunctions related to: AI system recommendations, supporting software/hardware, or users. Include details of: (i) rate of occurrence, (ii) apparent causes, (iii) whether they could be corrected, and (iv) any significant potential impacts on patient care | |

| | | b) Report on any risks to patient safety or observed instances of harm (including indirect harm) identified during the study | |

| 14 | Human factors | a) Report on the usability evaluation, according to recognised standards or frameworks | |

| | | b) Report on the user learning curves evaluation | |

| Discussion | |||

| 15 | Support for intended use | Discuss whether the results obtained support the intended use of the AI system in clinical settings | |

| 16 | Safety and errors | Discuss what the results indicate about the safety profile of the AI system. Discuss any observed errors/malfunctions and instances of harm, their implications for patient care, and whether/how they can be mitigated | |

| IX | Strengths and limitations | Discuss the strengths and limitations of the study | |

| Statements | |||

| 17 | Data availability | Disclose if and how data and relevant code are available | |

| X | Conflicts of interest | Disclose any relevant conflicts of interest, including the source of funding for the study, the role of funders, any other roles played by commercial companies, and personal conflicts of interest for each author | |

AI=artificial intelligence.

AI specific items are numbered in Arabic numerals, generic items in Roman numerals.
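As an illustration of how item 12 (human-computer agreement) might be summarised, the following is a minimal sketch and not part of the DECIDE-AI guideline. It assumes that each case has one categorical AI recommendation and one final user decision; the function name `agreement_summary` and the use of Cohen's kappa as the chance-corrected statistic are assumptions made for this example, and other measures may suit a given study better.

```python
from collections import Counter


def agreement_summary(ai_recommendations, user_decisions):
    """Summarise agreement between AI recommendations and final user decisions.

    Hypothetical helper for illustration: returns the number of cases, the raw
    proportion of agreement, and Cohen's kappa (chance-corrected agreement).
    """
    if len(ai_recommendations) != len(user_decisions) or not ai_recommendations:
        raise ValueError("Both inputs must be non-empty and of equal length")

    n = len(ai_recommendations)

    # Proportion of cases where the user's final decision matched the AI output
    observed = sum(a == u for a, u in zip(ai_recommendations, user_decisions)) / n

    # Agreement expected by chance, from the marginal frequency of each category
    ai_counts = Counter(ai_recommendations)
    user_counts = Counter(user_decisions)
    expected = sum(ai_counts[c] * user_counts[c] for c in ai_counts) / n**2

    # Kappa is undefined when chance agreement is already perfect
    kappa = (observed - expected) / (1 - expected) if expected < 1 else float("nan")

    return {"n": n, "observed_agreement": observed, "cohen_kappa": kappa}


if __name__ == "__main__":
    ai = ["treat", "treat", "wait", "wait"]
    user = ["treat", "wait", "wait", "wait"]
    print(agreement_summary(ai, user))
```

A numerical summary of this kind would complement, not replace, the description of the instances of and reasons for user variation that item 12 asks for.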

Discussion

The DECIDE-AI guideline is the result of an international consensus process involving a diverse group of experts spanning a wide range of professional backgrounds and experience. The level of interest across stakeholder groups and the high response rate among the invited experts speak to the perceived need for more guidance in the reporting of studies presenting the development and evaluation of clinical AI systems, and to the growing value placed on comprehensive clinical evaluation to guide implementation. The emphasis placed on the role of human-in-the-loop decision making was guided by the Steering Group’s belief that AI will, at least in the foreseeable future, augment rather than replace human intelligence in clinical settings. In this context, thorough evaluation of the human-computer interaction and the roles played by the human users will be key to realising the full potential of AI.

The DECIDE-AI guideline is the first stage specific AI reporting guideline to be developed. This stage specific approach echoes recognised development pathways for complex interventions,1 8 9 49 and aligns conceptually with proposed frameworks for clinical AI,6 50 51 52 although no commonly agreed nomenclature or definition has so far been published for the stages of evaluation in this field. Given the current state of clinical AI evaluation, and the apparent deficit in reporting guidance for the early clinical stage, the DECIDE-AI Steering Group considered it important to crystallise current expert opinion into a consensus, to help improve reporting of these studies. Besides this primary objective, the DECIDE-AI guideline will hopefully also support authors during study design, protocol drafting, and study registration, by providing them with clear criteria around which to plan their work. As with other reporting guidelines, the overall impact on the standard of reporting will need to be assessed in due course, once the wider community has had a chance to use the checklist and explanatory documents. This real world use is likely to prompt modification and fine tuning of the DECIDE-AI guideline. While the outcome of this process cannot be prejudged, there is evidence that the adoption of consensus-based reporting guidelines (such as CONSORT) does indeed improve the standard of reporting.53

The Steering Group paid special attention to the integration of DECIDE-AI within the broader scheme of AI guidelines (eg, TRIPOD-AI, STARD-AI, SPIRIT-AI, and CONSORT-AI). It also focussed on DECIDE-AI being applicable to all types of decision support modalities (ie, detection, diagnostic, prognostic, and therapeutic). The final checklist should be considered a minimum scientific reporting standard; it does not preclude the reporting of additional information, nor is it a substitute for other regulatory reporting or approval requirements. The overlap between scientific evaluation and regulatory processes was a core consideration during the development of the DECIDE-AI guideline. Early stage scientific studies can be used to inform regulatory decisions (eg, based on the stated intended use within the study), and are part of the clinical evidence generation process (eg, clinical investigations). The initial item list was aligned with information commonly required by regulatory agencies, and regulatory considerations are introduced in the E&E paragraphs. However, given the somewhat different focuses of scientific evaluation and regulatory assessment,54 as well as differences between regulatory jurisdictions, it was decided to make no reference to specific regulatory processes in the guideline, nor to define the scope of DECIDE-AI within any particular regulatory framework. The primary focus of DECIDE-AI is scientific evaluation and reporting, for which regulatory documents often provide little guidance.

Several topics led to more intense discussion than others, during both the Delphi process and the Consensus Group discussion. Regardless of whether the corresponding items were included or not, these represent important issues that the AI and healthcare communities should consider and continue to debate. Firstly, we discussed at length whether users (see glossary of terms) should be considered as study participants. The consensus reached was that users are a key study population, about whom data will be collected (eg, reasons for variation from the AI system recommendation, user satisfaction, etc), who might logically be asked to consent as study participants, and who should therefore be considered as such. Because user characteristics (eg, experience) can affect intervention efficacy, both patient and user variability should be considered when evaluating AI systems, and reported adequately.

Secondly, the relevance of comparator groups in early stage clinical evaluation was considered. Most studies retrieved in the literature search described a comparator group (commonly the same group of clinicians without AI support). Such comparators can provide useful information for the design of future large scale trials (eg, information on the potential effect size). However, comparator groups are often unnecessary at this early stage of clinical evaluation, when the focus is on issues other than comparative efficacy. Small scale clinical investigations are also usually underpowered to draw statistically sound conclusions about efficacy once both patient and user variability are accounted for. Moreover, the additional information gained from comparator groups in this context can often be inferred from other sources, such as previous data on unassisted standard of care when estimating the expected effect size. Comparison groups are therefore mentioned in item VII but considered optional.
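To illustrate why small early stage studies are usually underpowered for efficacy, the following is a minimal sketch, not part of the DECIDE-AI guideline, of a standard normal-approximation sample size calculation for a two-sided comparison of two proportions. The function name `n_per_group` and the example values (80% correct decisions without AI support, 85% with it) are hypothetical and chosen for illustration only.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_group(p_control, p_ai, alpha=0.05, power=0.80):
    """Approximate patients needed per group to compare two proportions.

    Uses the usual normal approximation for a two-sided test. It assumes
    independent observations, so it ignores clustering of patients within
    users, which would increase the required sample size further.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power

    p_bar = (p_control + p_ai) / 2                 # pooled proportion under the null
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control) + p_ai * (1 - p_ai))
    ) ** 2
    return ceil(numerator / (p_control - p_ai) ** 2)


if __name__ == "__main__":
    # Hypothetical scenario: 80% correct decisions unassisted, 85% with AI support
    print(n_per_group(0.80, 0.85))
```

Under these assumptions, detecting a five percentage point difference with 80% power would require roughly 900 patients per group, before any inflation for the clustering of patients within users. This is well beyond the scale of most early stage investigations.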

Thirdly, output interpretability is often described as important to increase user and patient trust in the AI system, to contextualise the system’s outputs within the broader clinical information environment,19 and potentially for regulatory purposes.55 However, some experts argued that an output’s clinical value may be independent of its interpretability, and that the practical relevance of evaluating interpretability is still debatable.56 57 Furthermore, there is currently no generally accepted way of quantifying or evaluating interpretability. For this reason, the Consensus Group decided not to include an item on interpretability at the current time.

Fourthly, the notion of users’ trust in the AI system, and its evolution with time, was discussed. As users accumulate experience with, and receive feedback from, the real world use of AI systems, they will adapt their level of trust in the systems’ recommendations. As recently demonstrated by McIntosh et al,58 this level of trust, whether appropriate or not, will influence how much impact the systems have on the final decision making and therefore the overall clinical performance of the AI system. Understanding how trust evolves is essential for planning user training and determining the optimal timepoints at which to start data collection in comparative trials. However, as for interpretability, there is currently no commonly accepted way to measure trust in the context of clinical AI. For this reason, the item about user trust in the AI system was not included in the final guideline. The fact that interpretability and trust were not included highlights the tendency of consensus-based guideline development towards conservatism, because only widely agreed upon concepts reach the level of consensus needed for inclusion. However, changes of focus in the field as well as new methodological developments can be integrated into subsequent guideline iterations. From this perspective, the issues of interpretability and trust are far from irrelevant to future AI evaluations, and their exclusion from the current guideline reflects less a lack of interest than a need for further research into how we can best operationalise these metrics for the purposes of evaluation in AI systems.

Fifthly, the notion of modifying the AI system (the intervention) during the evaluation received mixed opinions. During comparative trials, changes made to the intervention during data collection are questionable unless the changes are part of the study protocol; some authors even consider them impermissible, on the basis that they would make valid interpretation of study results difficult or impossible. However, the objectives of early clinical evaluation are often not to make definitive conclusions on effectiveness. Iterative design evaluation cycles, if performed safely and reported transparently, offer opportunities to tailor an intervention to its users and beneficiaries, and increase the chances of adoption of an optimised, fixed version during later summative evaluation.8 9 59 60

Sixthly, several experts noted the benefit of conducting human factors evaluation prior to clinical implementation and considered that human factors should therefore be reported separately. However, even robust preclinical human factors evaluation will not reliably characterise all the potential human factors issues that might arise during the use of an AI system in a live clinical environment, warranting continued human factors evaluation at the early stage of clinical implementation. The Consensus Group agreed that human factors play a fundamental role in AI system adoption in clinical settings at scale and that the full appraisal of an AI system’s clinical utility can only happen in the context of its clinical human factors evaluation.

Finally, several experts raised concerns that the DECIDE-AI guideline prescribes an evaluation too exhaustive to be reported within a single manuscript. The Consensus Group acknowledged the breadth of topics covered and the practical implications. However, reporting guidelines aim to promote transparent reporting of studies, rather than mandating that every aspect covered by an item must have been evaluated within the studies. For example, if a learning curves evaluation has not been performed, then fulfilment of item 14b would be to simply state that this was not done, with an accompanying rationale. The Consensus Group agreed that appropriate AI evaluation is a complex endeavour necessitating the interpretation of a wide range of data, which should be presented together as far as possible. It was also felt that thorough evaluation of AI systems should not be limited by a word count and that publications reporting on such systems might benefit from special formatting requirements in the future. The information required by several items might already be reported in previous studies or in the study protocol, which could be cited, rather than described in full again. The use of references, online supplementary materials, and open access repositories (eg, OSF) is recommended to allow the sharing and connecting of all required information within one main published evaluation report.

There are several limitations to our work which should be considered. Firstly, potential biases apply to any consensus process, including anchoring and participant selection biases.61 The research team tried to mitigate bias through the survey design, using open ended questions analysed through a thematic analysis, and by adapting the expert recruitment process, but it is unlikely that it was eliminated entirely. Despite an aim for geographical diversity and several actions taken to foster it, representation was skewed towards Europe and more specifically the United Kingdom. This could be explained in part by the following factors: a likely selection bias in the Steering Group’s expert recommendations, a higher interest in our open invitation to contribute coming from European/UK scientists (25 out of 30 experts approaching us, 83%), and a lack of control over the response rate and self-reported geographical location of participating experts. Considerable attention was also paid to diversity and balance between stakeholder groups, even though clinicians and engineers were the most represented, partly due to the profile of researchers who contacted us spontaneously after the public announcement of the project. Stakeholder group analyses were performed to identify any marked disagreements from underrepresented groups. Finally, as also noted by the authors of the SPIRIT-AI and CONSORT-AI guidelines,25 26 few examples of studies reporting on the early stage clinical evaluation of AI systems were available at the time we started developing the DECIDE-AI guideline. This might have impacted the exhaustiveness of the initial item list created from literature review. However, the wide range of stakeholders involved and the design of the first round of Delphi allowed identification of several additional candidate items, which were added in the second iteration of the item list.

The introduction of AI into healthcare needs to be supported by sound, robust, and comprehensive evidence generation and reporting. This is essential both to ensure the safety and efficacy of AI systems, and to gain the trust of patients, practitioners, and purchasers, so that this technology can realise its full potential to improve patient care. The DECIDE-AI guideline aims to improve the reporting of early stage live clinical evaluation of AI systems, which lays the foundations for both larger clinical studies and later widespread adoption.

Acknowledgments

This article is being simultaneously published in May 2022 by The BMJ and Nature Medicine.

Members of the DECIDE-AI expert group (also see supplementary file for a full list, including affiliations) are as follows: Aaron Y Lee, Alan G Fraser, Ali Connell, Alykhan Vira, Andre Esteva, Andrew D Althouse, Andrew L Beam, Anne de Hond, Anne-Laure Boulesteix, Anthony Bradlow, Ari Ercole, Arsenio Paez, Athanasios Tsanas, Barry Kirby, Ben Glocker, Carmelo Velardo, Chang Min Park, Charisma Hehakaya, Chris Baber, Chris Paton, Christian Johner, Christopher J Kelly, Christopher J Vincent, Christopher Yau, Clare McGenity, Constantine Gatsonis, Corinne Faivre-Finn, Crispin Simon, Danielle Sent, Danilo Bzdok, Darren Treanor, David C Wong, David F Steiner, David Higgins, Dawn Benson, Declan P O’Regan, Dinesh V Gunasekaran, Dominic Danks, Emanuele Neri, Evangelia Kyrimi, Falk Schwendicke, Farah Magrabi, Frances Ives, Frank E Rademakers, George E Fowler, Giuseppe Frau, H D Jeffry Hogg, Hani J Marcus, Heang-Ping Chan, Henry Xiang, Hugh F McIntyre, Hugh Harvey, Hyungjin Kim, Ibrahim Habli, James C Fackler, James Shaw, Janet Higham, Jared M Wohlgemut, Jaron Chong, Jean-Emmanuel Bibault, Jérémie F Cohen, Jesper Kers, Jessica Morley, Joachim Krois, Joao Monteiro, Joel Horovitz, John Fletcher, Jonathan Taylor, Jung Hyun Yoon, Karandeep Singh, Karel G M Moons, Kassandra Karpathakis, Ken Catchpole, Kerenza Hood, Konstantinos Balaskas, Konstantinos Kamnitsas, Laura Militello, Laure Wynants, Lauren Oakden-Rayner, Laurence B Lovat, Luc J M Smits, Ludwig C Hinske, M Khair ElZarrad, Maarten van Smeden, Mara Giavina-Bianchi, Mark Daley, Mark P Sendak, Mark Sujan, Maroeska Rovers, Matthew DeCamp, Matthew Woodward, Matthieu Komorowski, Max Marsden, Maxine Mackintosh, Michael D Abramoff, Miguel Ángel Armengol de la Hoz, Neale Hambidge, Neil Daly, Niels Peek, Oliver Redfern, Omer F Ahmad, Patrick M Bossuyt, Pearse A Keane, Pedro N P Ferreira, Petra Schnell-Inderst, Pietro Mascagni, Prokar Dasgupta, Pujun Guan, Rachel Barnett, Rawen Kader, Reena Chopra, Ritse M Mann, Rupa Sarkar, Saana M 
Mäenpää, Samuel G Finlayson, Sarah Vollam, Sebastian J Vollmer, Seong Ho Park, Shakir Laher, Shalmali Joshi, Siri L van der Meijden, Susan C Shelmerdine, Tien-En Tan, Tom JW Stocker, Valentina Giannini, Vince I Madai, Virginia Newcombe, Wei Yan Ng, Wendy A Rogers, William Ogallo, Yoonyoung Park, Zane B Perkins

We thank all Delphi participants and experts who participated in the guideline qualitative evaluation. BV would also like to thank Benjamin Beddoe (Sheffield Teaching Hospital), Nicole Bilbro (Maimonides Medical Center), Neale Marlow (Oxford University Hospitals), Elliott Taylor (Nuffield Department of Surgical Science, University of Oxford), and Stephan Ursprung (Department for Radiology, Tübingen University Hospital) for their support in the initial stage of the project. The views expressed in this guideline are those of the authors, Delphi participants, and experts who participated in the qualitative evaluation of the guidelines. These views do not necessarily reflect those of their institutions or funders.

Web extra.

Extra material supplied by authors

Supplementary appendix 1: Explanation and Elaboration

Supplementary appendix 2: DECIDE-AI reporting item checklist

Supplementary materials: Additional figures (1 and 2), tables (1-3), and notes

Supplementary information: Full list of DECIDE-AI expert group members and their affiliations

Contributors: BV, MN, and PMcC designed the study. BV and MI conducted the literature searches. Members of the DECIDE-AI Steering Group (BV, DC, GSC, AKD, LF, BG, XL, PMa, LM, SS, PWa, PMc) provided methodological input and oversaw the conduct of the study. BV and MN conducted the thematic analysis, Delphi rounds analysis, and produced the Delphi round summaries. Members of the DECIDE-AI Consensus Group (BV, GSC, SP, BG, XL, BAM, PM, MM, LM, JO, CR, SS, DSWT, WW, PWh, PMc) selected the final content and wording of the guideline. BC chaired the consensus meeting. BV, MN, and BC drafted the final manuscript and E&E sections. All authors reviewed and commented on the final manuscript and E&E sections. All members of the DECIDE-AI expert group collaborated in the development of the DECIDE-AI guidelines by participating in the Delphi process, the qualitative evaluation of the guidelines, or both. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted. PMcC is the guarantor of this work.

Funding: This work was supported by the IDEAL Collaboration. BV is funded by a Berrow Foundation Lord Florey scholarship. MN is supported by the UKRI CDT in AI for Healthcare (http://ai4health.io - grant No P/S023283/1). DC receives funding from Wellcome Trust, AstraZeneca, RCUK and GlaxoSmithKline. GSC is supported by the NIHR Biomedical Research Centre, Oxford, and Cancer Research UK (programme grant: C49297/A27294). MI is supported by a Maimonides Medical Center Research fellowship. XL receives funding from the Wellcome Trust, the National Institute for Health Research/NHSX/Health Foundation, the Alan Turing Institute, the MHRA, and NICE. BAM is a fellow of The Alan Turing Institute supported by EPSRC grant EP/N510129/, and holds a Wellcome Trust funded honorary post at University College London for the purposes of carrying out independent research. MM receives funding from the Dalla Lana School of Public Health and Leong Centre for Healthy Children. JO is employed by the Medicines and Healthcare products Regulatory Agency, the competent authority responsible for regulating medical devices and medicines within the UK. Elements of the work relating to the regulation of AI as a medical device are funded via grants from NHSX and the Regulators’ Pioneer Fund (Department for Business, Energy, and Industrial Strategy). SS receives grants from the National Science Foundation, the American Heart Association, the National Institutes of Health, and the Sloan Foundation. DSWT is supported by the National Medical Research Council, Singapore (NMRC/HSRG/0087/2018;MOH-000655-00), National Health Innovation Centre, Singapore (NHIC-COV19-2005017), SingHealth Fund Limited Foundation (SHF/HSR113/2017), Duke-NUS Medical School (Duke-NUS/RSF/2021/0018;05/FY2020/EX/15-A58), and the Agency for Science, Technology and Research (A20H4g2141; H20C6a0032). PWa is supported by the NIHR Biomedical Research Centre, Oxford and holds grants from the NIHR and Wellcome.
PMc receives grants from Medtronic (unrestricted educational grant to Oxford University for the IDEAL Collaboration) and Oxford Biomedical Research Centre. The funders had no role in considering the study design or in the collection, analysis, interpretation of data, writing of the report, or decision to submit the article for publication.

Competing interests: All authors have completed the ICMJE uniform disclosure form at www.icmje.org/coi_disclosure.pdf and declare: MN consults for Cera Care, a technology enabled homecare provider. BC was a Non-Executive Director of the UK Medicines and Healthcare products Regulatory Agency (MHRA) from September 2015 until 31 August 2021. DC receives consulting fees from Oxford University Innovation, Biobeats, and Sensyne Health, and has an advisory role with Bristol Myers Squibb. BG has received consultancy and research grants from Philips NV and Edwards Lifesciences, and is owner and board member of Healthplus.ai BV and its subsidiaries. XL has advisory roles with the National Screening Committee UK, the WHO/ITU focus group for AI in health and the AI in Health and Care Award Evaluation Advisory Group (NHSX, AAC). PMa is the cofounder of BrainX and BrainX Community. MM reports consulting fees from AMS Healthcare, and honorariums from the Osgoode Law School and Toronto Pain Institute. LM is director and owner of Morgan Human Systems. JO holds an honorary post as an Associate of Hughes Hall, University of Cambridge. CR is an employee of HeartFlow, including salary and equity. SS has received honorariums from several universities and pharmaceutical companies for talks on digital health and AI. SS has advisory roles in Child Health Imprints, Duality Tech, Halcyon Health, and Bayesian Health. SS is on the board of Bayesian Health. This arrangement has been reviewed and approved by Johns Hopkins in accordance with its conflict-of-interest policies. DSWT holds patents linked to AI driven technologies, and is a co-founder and equity holder of EyRIS. PWa declares grants, consulting fees and stocks from Sensyne Health and holds patents linked to AI driven technologies. PMc has an advisory role for WEISS International and the technology incubator PhD programme at University College London.
BV, GSC, AKD, LF, MI, BAM, SD, PWh, and WW declare no financial relationships with any organisations that might have an interest in the submitted work in the previous three years and no other relationships or activities that could appear to have influenced the submitted work.

Patient and public involvement: Patient representatives were invited to the Delphi process and participated in it. A patient representative (PWh) was a member of the Consensus Group, participated in the selection of the reporting items, edited their wording and reviewed the related E&E paragraphs.

Provenance and peer review: Not commissioned; externally peer reviewed.


Data availability statement

All data generated during this study (pseudonymised where necessary) are available upon justified request to the research team for a duration of three years after publication of this manuscript. All code produced for data analysis during this study is available upon justified request to the research team for the same duration. Translation of this guideline into different languages is welcomed and encouraged, as long as the authors of the original publication are included in the process and resulting publication.

References

- 1. Skivington K, Matthews L, Simpson SA, et al. A new framework for developing and evaluating complex interventions: update of Medical Research Council guidance. BMJ 2021;374:n2061. 10.1136/bmj.n2061 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Liu X, Faes L, Kale AU, et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis. Lancet Digit Health 2019;1:e271-97. 10.1016/S2589-7500(19)30123-2. [DOI] [PubMed] [Google Scholar]

- 3. Vasey B, Ursprung S, Beddoe B, et al. Association of Clinician Diagnostic Performance With Machine Learning-Based Decision Support Systems: A Systematic Review. JAMA Netw Open 2021;4:e211276. 10.1001/jamanetworkopen.2021.1276 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Freeman K, Geppert J, Stinton C, et al. Use of artificial intelligence for image analysis in breast cancer screening programmes: systematic review of test accuracy. BMJ 2021;374:n1872. 10.1136/bmj.n1872 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Keane PA, Topol EJ. With an eye to AI and autonomous diagnosis. NPJ Digit Med 2018;1:40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. McCradden MD, Stephenson EA, Anderson JA. Clinical research underlies ethical integration of healthcare artificial intelligence. Nat Med 2020;26:1325-6. 10.1038/s41591-020-1035-9. [DOI] [PubMed] [Google Scholar]

- 7. Vasey B, Clifton DA, Collins GS, et al. DECIDE-AI Steering Group . DECIDE-AI: new reporting guidelines to bridge the development-to-implementation gap in clinical artificial intelligence. Nat Med 2021;27:186-7. 10.1038/s41591-021-01229-5 [DOI] [PubMed] [Google Scholar]

- 8. McCulloch P, Altman DG, Campbell WB, et al. Balliol Collaboration . No surgical innovation without evaluation: the IDEAL recommendations. Lancet 2009;374:1105-12. 10.1016/S0140-6736(09)61116-8 [DOI] [PubMed] [Google Scholar]

- 9. Hirst A, Philippou Y, Blazeby J, et al. No Surgical Innovation Without Evaluation: Evolution and Further Development of the IDEAL Framework and Recommendations. Ann Surg 2019;269:211-20. 10.1097/SLA.0000000000002794 [DOI] [PubMed] [Google Scholar]

- 10. Finlayson SG, Subbaswamy A, Singh K, et al. The Clinician and Dataset Shift in Artificial Intelligence. N Engl J Med 2021;385:283-6. 10.1056/NEJMc2104626. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Subbaswamy A, Saria S. From development to deployment: dataset shift, causality, and shift-stable models in health AI. Biostatistics 2020;21:345-52. 10.1093/biostatistics/kxz041. [DOI] [PubMed] [Google Scholar]

- 12. Kapur N, Parand A, Soukup T, Reader T, Sevdalis N. Aviation and healthcare: a comparative review with implications for patient safety. JRSM Open 2015;7:2054270415616548. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Corbridge C, Anthony M, McNeish D, Shaw G. A New UK Defence Standard For Human Factors Integration (HFI). Proc Hum Factors Ergon Soc Annu Meet 2016;60:1736-40 10.1177/1541931213601398. [DOI] [Google Scholar]

- 14. Stanton NA, Salmon P, Jenkins D, Walker G. Human factors in the design and evaluation of central control room operations. CRC Press, 2009. 10.1201/9781439809921. [DOI] [Google Scholar]

- 15.US Food and Drug Administration. Applying Human Factors and Usability Engineering to Medical Devices - Guidance for Industry and Food and Drug Administration Staff. 2016.

- 16.Medicines & Healthcare products Regulatory Agency (MHRA). Guidance on applying human factors and usability engineering to medical devices including drug-device combination products in Great Britain. 2021.

- 17. Asan O, Choudhury A. Research Trends in Artificial Intelligence Applications in Human Factors Health Care: Mapping Review. JMIR Hum Factors 2021;8:e28236. 10.2196/28236 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Felmingham CM, Adler NR, Ge Z, Morton RL, Janda M, Mar VJ. The Importance of Incorporating Human Factors in the Design and Implementation of Artificial Intelligence for Skin Cancer Diagnosis in the Real World. Am J Clin Dermatol 2021;22:233-42. 10.1007/s40257-020-00574-4 [DOI] [PubMed] [Google Scholar]

- 19. Sujan M, Furniss D, Grundy K, et al. Human factors challenges for the safe use of artificial intelligence in patient care. BMJ Health Care Inform 2019;26:e100081. doi:10.1136/bmjhci-2019-100081

- 20. Sujan M, Baber C, Salmon P, Pool R, Chozos N. Human Factors and Ergonomics in Healthcare AI. Chartered Institute of Ergonomics & Human Factors, 2021. https://ergonomics.org.uk/resource/human-factors-in-healthcare-ai.html

- 21. Wronikowska MW, Malycha J, Morgan LJ, et al. Systematic review of applied usability metrics within usability evaluation methods for hospital electronic healthcare record systems: Metrics and Evaluation Methods for eHealth Systems. J Eval Clin Pract 2021;27:1403-16. doi:10.1111/jep.13582

- 22. Nagendran M, Chen Y, Lovejoy CA, et al. Artificial intelligence versus clinicians: systematic review of design, reporting standards, and claims of deep learning studies. BMJ 2020;368:m689. doi:10.1136/bmj.m689

- 23. Collins GS, Moons KGM. Reporting of artificial intelligence prediction models. Lancet 2019;393:1577-9. doi:10.1016/S0140-6736(19)30037-6

- 24. Sounderajah V, Ashrafian H, Aggarwal R, et al. Developing specific reporting guidelines for diagnostic accuracy studies assessing AI interventions: The STARD-AI Steering Group. Nat Med 2020;26:807-8. doi:10.1038/s41591-020-0941-1

- 25. Cruz Rivera S, Liu X, Chan AW, Denniston AK, Calvert MJ, SPIRIT-AI and CONSORT-AI Working Group, SPIRIT-AI and CONSORT-AI Steering Group, SPIRIT-AI and CONSORT-AI Consensus Group. Guidelines for clinical trial protocols for interventions involving artificial intelligence: the SPIRIT-AI extension. Nat Med 2020;26:1351-63. doi:10.1038/s41591-020-1037-7

- 26. Liu X, Cruz Rivera S, Moher D, Calvert MJ, Denniston AK, SPIRIT-AI and CONSORT-AI Working Group. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension. Nat Med 2020;26:1364-74. doi:10.1038/s41591-020-1034-x

- 27. Moher D, Schulz KF, Simera I, Altman DG. Guidance for developers of health research reporting guidelines. PLoS Med 2010;7:e1000217. doi:10.1371/journal.pmed.1000217

- 28. Dalkey N, Helmer O. An Experimental Application of the DELPHI Method to the Use of Experts. Manage Sci 1963;9:458-67. doi:10.1287/mnsc.9.3.458

- 29. Vasey B, Nagendran M, McCulloch P. DECIDE-AI 2022. Open Science Framework, 2022. doi:10.17605/OSF.IO/TP9QV

- 30. Vollmer S, Mateen BA, Bohner G, et al. Machine learning and artificial intelligence research for patient benefit: 20 critical questions on transparency, replicability, ethics, and effectiveness. BMJ 2020;368:l6927. doi:10.1136/bmj.l6927

- 31. Bilbro NA, Hirst A, Paez A, et al. IDEAL Collaboration Reporting Guidelines Working Group. The IDEAL reporting guidelines: A delphi consensus statement stage specific recommendations for reporting the evaluation of surgical innovation. Ann Surg 2021;273:82-5. doi:10.1097/SLA.0000000000004180

- 32. Morley J, Floridi L, Kinsey L, Elhalal A. From What to How: An Initial Review of Publicly Available AI Ethics Tools, Methods and Research to Translate Principles into Practices. Sci Eng Ethics 2020;26:2141-68.

- 33. Xie Y, Gunasekeran DV, Balaskas K, et al. Health Economic and Safety Considerations for Artificial Intelligence Applications in Diabetic Retinopathy Screening. Transl Vis Sci Technol 2020;9:22. doi:10.1167/tvst.9.2.22

- 34. Norgeot B, Quer G, Beaulieu-Jones BK, et al. Minimum information about clinical artificial intelligence modeling: the MI-CLAIM checklist. Nat Med 2020;26:1320-4. doi:10.1038/s41591-020-1041-y

- 35. IMDRF Medical Device Clinical Evaluation Working Group. Clinical Evaluation. 2019. Report No.: WG/N56FINAL:2019.

- 36. IMDRF Software as Medical Device (SaMD) Working Group. Software as a Medical Device. Possible Framework for Risk Categorization and Corresponding Considerations, 2014.

- 37. National Institute for Health and Care Excellence (NICE). Evidence standards framework for digital health technologies. 2019.

- 38. European Commission, Directorate-General for Communications Networks, Content and Technology. Ethics guidelines for trustworthy AI. Publications Office, 2019. https://data.europa.eu/doi/10.2759/346720

- 39. Boel A, Navarro-Compán V, Landewé R, van der Heijde D. Two different invitation approaches for consecutive rounds of a Delphi survey led to comparable final outcome. J Clin Epidemiol 2021;129:31-9. doi:10.1016/j.jclinepi.2020.09.034

- 40. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009;42:377-81. doi:10.1016/j.jbi.2008.08.010

- 41. Harris PA, Taylor R, Minor BL, et al. REDCap Consortium. The REDCap consortium: Building an international community of software platform partners. J Biomed Inform 2019;95:103208. doi:10.1016/j.jbi.2019.103208

- 42. Nowell LS, Norris JM, White DE, Moules NJ. Thematic Analysis: Striving to Meet the Trustworthiness Criteria. Int J Qual Methods 2017;16:1609406917733847. doi:10.1177/1609406917733847

- 43. International Organization for Standardization. Information technology - Artificial intelligence (AI) - Bias in AI systems and AI aided decision making (ISO/IEC TR 24027:2021). 2021.

- 44. IMDRF Medical Device Clinical Evaluation Working Group. Clinical Investigation. 2019. Report No.: WG/N57FINAL:2019.

- 45. Hopper AN, Jamison MH, Lewis WG. Learning curves in surgical practice [abstract]. Postgrad Med J 2007;83:777-9. https://pmj.bmj.com/content/83/986/777. doi:10.1136/pgmj.2007.057190

- 46. International Organization for Standardization. Ergonomics of human-system interaction - Part 11: Usability: Definitions and concepts (ISO 9241-11:2018). 2018. Report No.: ISO 9241-11:2018.

- 47. von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP, STROBE Initiative. Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. BMJ 2007;335:806-8. doi:10.1136/bmj.39335.541782.AD

- 48. Page MJ, McKenzie JE, Bossuyt PM, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 2021;372:n71. doi:10.1136/bmj.n71

- 49. Sedrakyan A, Campbell B, Merino JG, Kuntz R, Hirst A, McCulloch P. IDEAL-D: a rational framework for evaluating and regulating the use of medical devices. BMJ 2016;353:i2372. doi:10.1136/bmj.i2372

- 50. Park Y, Jackson GP, Foreman MA, Gruen D, Hu J, Das AK. Evaluating artificial intelligence in medicine: phases of clinical research. JAMIA Open 2020;3:326-31. doi:10.1093/jamiaopen/ooaa033

- 51. Higgins D, Madai VI. From Bit to Bedside: A Practical Framework for Artificial Intelligence Product Development in Healthcare. Adv Intell Syst 2020;2:2000052. doi:10.1002/aisy.202000052

- 52. Sendak MP, D’Arcy J, Kashyap S, Gao M, Nichols M, Corey K, et al. A path for translation of machine learning products into healthcare delivery. EMJ Innov 2020. doi:10.33590/emjinnov/19-00172

- 53. Moher D, Jones A, Lepage L, CONSORT Group (Consolidated Standards for Reporting of Trials). Use of the CONSORT statement and quality of reports of randomized trials: a comparative before-and-after evaluation. JAMA 2001;285:1992-5. doi:10.1001/jama.285.15.1992

- 54. Park SH. Regulatory Approval versus Clinical Validation of Artificial Intelligence Diagnostic Tools. Radiology 2018;288:910-1. doi:10.1148/radiol.2018181310

- 55. US Food and Drug Administration (FDA). Clinical Decision Support Software - Draft Guidance for Industry and Food and Drug Administration Staff. 2019. www.fda.gov/media/109618/download

- 56. Lipton ZC. The Mythos of Model Interpretability. Commun ACM 2018;61:36-43. doi:10.1145/3233231

- 57. Ghassemi M, Oakden-Rayner L, Beam AL. The false hope of current approaches to explainable artificial intelligence in health care. Lancet Digit Health 2021;3:e745-50. doi:10.1016/S2589-7500(21)00208-9

- 58. McIntosh C, Conroy L, Tjong MC, et al. Clinical integration of machine learning for curative-intent radiation treatment of patients with prostate cancer. Nat Med 2021;27:999-1005. doi:10.1038/s41591-021-01359-w

- 59. International Organization for Standardization. Ergonomics of human-system interaction - Part 210: Human-centred design for interactive systems (ISO 9241-210:2019). 2019.

- 60. Norman DA. User Centered System Design. 1st ed. CRC Press, 1986. doi:10.1201/b15703

- 61. Winkler J, Moser R. Biases in future-oriented Delphi studies: A cognitive perspective. Technol Forecast Soc Change 2016;105:63-76. doi:10.1016/j.techfore.2016.01.021

Associated Data

Supplementary Materials

Supplementary appendix 1: Explanation and Elaboration

Supplementary appendix 2: DECIDE-AI reporting item checklist

Supplementary materials: Additional figures (1 and 2), tables (1-3), and notes

Supplementary information: Full list of DECIDE-AI expert group members and their affiliations

Data Availability Statement

All data generated during this study (pseudonymised where necessary) and all code produced for data analysis are available upon justified request to the research team for three years after publication of this manuscript. Translation of this guideline into other languages is welcomed and encouraged, provided that the authors of the original publication are included in the process and in the resulting publication.