Abstract

Previous research has suggested that skills taught with a least-to-most prompting procedure to 80% and 90% accuracy were not always maintained at high levels. The present study replicates and extends previous research by evaluating the effects of various mastery criteria (i.e., 80%, 90%, and 100% accuracy across three consecutive sessions) on the maintenance of tacting skills taught with a most-to-least prompting procedure combined with a progressive time delay. Results of this study support previous research and further demonstrate that the highest levels of maintenance are achieved with 100% and 90% accuracy criteria for up to a month. For the 80% criterion, performance deteriorated during follow-up probes. Contrary to previous research suggesting a 90% criterion combined with least-to-most prompting procedures was not always sufficient for producing skill maintenance, the current study may provide preliminary support for the use of a 90% accuracy mastery criterion when combined with a most-to-least prompting procedure with a progressive time delay.

Keywords: Discrete-trial teaching, Maintenance, Mastery criteria, Most-to-least prompting, Skill acquisition

Baer et al. (1968) stipulated that applied behavior analysts seek to design interventions that target socially significant behaviors and result in meaningful and lasting behavior change. As such, researchers and practitioners often establish predetermined mastery criteria to serve as a guideline to determine when clients meet behavior change or skill acquisition goals. Mastery criteria are composed of two primary dimensions: (a) the level of performance and (b) the frequency of observations at that level of performance (Fuller & Fienup, 2018). Previous research suggests that these criteria are selected without an evidentiary basis, and selections vary across researchers and practitioners (McDougale et al., 2020; Richling et al., 2019). Additionally, although the adoption of performance criteria in the field of behavior analysis has increased over time, the literature still lacks an adequate number of experimental evaluations pinpointing the effects of varying mastery criteria on the maintenance of skills.

The use of performance criteria in behavior-analytic endeavors has a long-standing history. Sayrs and Ghezzi (1997) conducted an early literature review of empirical studies published in the Journal of Applied Behavior Analysis from 1968 to 1995 to accumulate data on researchers’ reports of steady-state criteria. Results indicated that fewer than 20% of studies reported steady-state criteria. However, of those that reported steady-state criteria, nearly all of the studies reported mastery criteria as opposed to statistical or visual criteria. Currently, the adoption of mastery criteria by both practitioners and researchers is more commonplace (McDougale et al., 2020; Richling et al., 2019). Love et al. (2009) reported that 62% of clinicians surveyed used mastery criteria requiring a certain percentage correct across multiple sessions. A subsequent survey conducted by Richling et al. (2019) sought to extend the findings by identifying more specific practices regarding the level of accuracy and the number of observations required to achieve mastery levels of performance. The results indicated that most certified behavior analysts require 80% accuracy across three sessions to meet mastery. Clinicians also reported their selections as a product of their training experiences and supervisor preferences rather than an artifact of empirical support (Richling et al., 2019). This highlights a potential issue whereby practitioners are selecting meaningful teaching components based on lore rather than based on research findings.

Further evidence of the gap between research and practice can be found by looking at the specific mastery criteria selected in practical and research endeavors. As mentioned, Richling et al. (2019) revealed that all surveyed practitioners report using mastery criteria, the most common being a criterion of 80% accuracy across three sessions. McDougale et al. (2020), however, found that within published behavior-analytic literature, researchers are more likely to employ a criterion of 90% accuracy across two sessions. This seeming difference between the behavior of researchers and practitioners again points to a problem in the research-to-practice process.

Compounding this issue further, McDougale et al. (2020) stated that less than half of the published research articles (41%) reported on the maintenance of skills following the termination of intervention, and 26% of articles did not report mastery criteria. As such, practitioners seeking empirical guidance on selecting appropriate mastery criteria would find limited information. The lack of studies reporting which mastery criteria were adopted makes it difficult to draw any valid inferences or build empirical support for the use of specific mastery criteria associated with adequate skill maintenance for any particular clinical intervention. In order to provide clinicians with an empirically based guideline for selecting mastery criteria, more research with direct evaluations of the effects of varying mastery criteria on the maintenance of skills is warranted.

There are a few less obvious direct evaluations of performance criteria from which practitioners may begin to draw inferences. Early research groups directly evaluated the effects of mastery criteria on the performance of skills in undergraduate students (Johnston & O’Neill, 1973; Semb, 1974). In a study conducted by Johnston and O’Neill (1973), participants engaged in higher response rates when conditions included a performance criterion. A later study conducted by Semb (1974) resulted in lower levels of accurate responding during conditions with lower assigned mastery criteria. Although this research provides valuable information, applied behavior analysis has become a hallmark intervention to promote skill acquisition for children with intellectual and developmental disabilities (National Autism Center, 2015). Additionally, as noted by Cooper et al. (2007), it is imperative that behavior analysts make special considerations to ensure that the gains or skills acquired during programming sustain after the termination of treatment. As such, it is essential to demonstrate the relationship between different variables in acquisition programs and their effects on maintenance, especially within commonly targeted populations for behavior-analytic interventions.

Recently, two research groups have begun directly evaluating the effects of varying mastery criteria on the maintenance of acquisition skills with neurodiverse populations. Richling et al. (2019) evaluated the effects of various mastery criteria (i.e., 60%, 80%, and 100% accuracy across three sessions) on performance at weekly follow-up maintenance probes. The results indicated that skill performance remained above 80% accuracy during follow-up probes for the target sets assigned to the 100% accuracy criterion condition. The data indicated that for some individuals, an 80% accuracy mastery criterion is not sufficient to promote long-term maintenance of the skill. Furthermore, in a subsequent experiment, Richling et al. demonstrated that a 90% accuracy mastery criterion did not promote the maintenance of skills for any participants. In contrast, Fuller and Fienup (2018) evaluated the effects of 50%, 80%, and 90% accuracy criteria across one 20-trial session on the maintenance of skills for three elementary-school-aged children diagnosed with autism. Results showed participants displayed higher levels of correct responding during 1-, 2-, 3-, and 4-week follow-up maintenance probes for target sets assigned to the highest accuracy mastery criterion (90%).

In addition to varying levels of accuracy requirements to reach mastery, skill acquisition programs embed many components of teaching strategies that may affect the maintenance of skills. Both Richling et al. (2019) and Fuller and Fienup (2018) employed a least-to-most (LTM) prompting procedure in order to facilitate acquisition. LTM prompting hierarchies provide participants with the opportunity to engage in independent responses before the presentation of a prompt. Often, these procedures result in more errors than most-to-least (MTL) prompting procedures (Libby et al., 2008). As such, there is some evidence to suggest MTL procedures may improve the maintenance of skills (Fentress & Lerman, 2012). Additionally, Love et al. (2009) reported 89% of clinicians most commonly use MTL prompting when teaching new skills. In order to more closely replicate common clinical practices and provide clinicians with a more comprehensive analysis of the effects of varying mastery criteria on maintenance, further research is warranted. The purpose of the current study was to replicate Richling et al. and evaluate the effects of varying levels of accuracy within mastery criteria (80%, 90%, and 100%) on the maintenance of skills that researchers taught using an MTL prompting procedure combined with a progressive time delay.

Method

Participants and Setting

Researchers recruited three participants from a local preschool and a university-based clinic. All participants were vocal and verbal. Walter was a 4-year-old boy diagnosed with autism spectrum disorder (ASD) who displayed imitative and spontaneous tacting, manding (three- to five-word utterances), and intraverbal skills. Adam was a 5-year-old boy diagnosed with ASD who displayed imitative and spontaneous tacting, manding (three- to five-word utterances), receptive identification, and intraverbal skills. Paul had a generalized imitative repertoire. He emitted spontaneous mands, tacts, and intraverbals in full sentences. Paul scored a Level 6 on the Kerr–Meyerson Assessment of Basic Learning Abilities–Revised (ABLA-R; DeWiele et al., 2011), demonstrating his ability to make auditory and visual discriminations. Researchers did not conduct the ABLA-R assessment with Walter and Adam; however, both would likely have passed Level 6.

Researchers conducted sessions in a 3.5 m × 4.6 m designated therapy room at the preschool or the university clinic. The room included a table and at least three chairs for the participant, experimenter, and undergraduate research assistant. There were minimal items present in the room to avoid distractions during instructional periods and increase attending to the therapist and stimuli.

Target Behavior and Response Measurement

The target behavior for each participant was correctly tacting stimuli by vocally stating the name of the item depicted in the photograph. Researchers assigned one set of three target stimuli to each mastery criterion examined (i.e., 80%, 90%, and 100%). Each set included three 2-dimensional picture targets. Set 1 included a grater, tongs, and a kettle. Set 2 included a grill, a whisk, and a tray. Set 3 included a colander, a ladle, and a sink.

Researchers selected common targets that participants were unlikely to receive formal outside training on for the duration of the experimental sessions (as indicated by teachers or parents). Teachers and parents helped identify targets that included sound blends and numbers of syllables that all participants had previously demonstrated the ability to pronounce and respond to differentially. No formal assessments were used to equate the difficulty of the target words; however, researchers counterbalanced all target sets across mastery criteria and participants.

Each session included a total of 10 trials. Researchers randomly presented the three target stimuli in a 3-3-4 format to counterbalance target presentations across conditions. Researchers scored each trial as correct or incorrect and as prompted or independent. Researchers converted those data into percentages by dividing the number of responses in each category by the total number of trials (10) and multiplying by 100%. If the participant did not respond following the presentation of the discriminative stimulus (e.g., “What’s this?”), the researcher re-presented the trial until the participant emitted a correct or incorrect response. This procedure ensured that nonresponses due to noncompliance were not included in the dependent measure. For Adam, researchers re-presented trials ending in no responses an average of one time per session across the study. Researchers did not have to re-present any trials for Walter or Paul.
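The percentage calculation described above can be illustrated with a minimal Python sketch. The function name `session_percentages`, the tuple representation of a trial, and the four combined category labels are assumptions made for illustration only; the study reports correct/incorrect and prompted/independent scoring but does not specify a data format.

```python
def session_percentages(trials):
    """Convert one session's trial scores into percentages per category.

    trials: list of (correct, prompted) boolean pairs, one per trial.
    The combined category labels below are hypothetical.
    """
    total = len(trials)  # 10 trials per session in the study
    counts = {
        "correct_independent": 0,
        "correct_prompted": 0,
        "incorrect_independent": 0,
        "incorrect_prompted": 0,
    }
    for correct, prompted in trials:
        outcome = "correct" if correct else "incorrect"
        mode = "prompted" if prompted else "independent"
        counts[outcome + "_" + mode] += 1
    # Divide each category count by the total number of trials, times 100.
    return {label: 100 * n / total for label, n in counts.items()}


# Hypothetical session: 7 independent correct, 2 prompted correct, 1 error.
example = [(True, False)] * 7 + [(True, True)] * 2 + [(False, False)]
print(session_percentages(example)["correct_independent"])  # 70.0
```

Because each session contained 10 trials, every category percentage is a multiple of 10.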

Design and Procedures

Researchers evaluated the effects of three varying mastery criteria on the maintenance of skills using a nonconcurrent multiple-baseline design with an adapted alternating-treatments design (Sindelar et al., 1985). Researchers compared the effects of 80%, 90%, and 100% accuracy criteria across three consecutive sessions on the levels of correct responding during four weekly follow-up maintenance probes. The current evaluation included three phases: baseline, teaching, and weekly follow-up maintenance probes. All sessions included 10 discrete trials. For each participant, researchers conducted experimental sessions one to four times per day across 1 to 5 days per week.
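A mastery criterion of this form (a level of accuracy held across three consecutive sessions) can be checked with a short sketch such as the following. It assumes that "meeting" a criterion means scoring at or above the assigned percentage and that session scores are percentages of independently correct responses; the function name and the example scores are hypothetical.

```python
def sessions_to_mastery(scores, criterion, consecutive=3):
    """Return the 1-based session number at which mastery is met, or None.

    scores: session percentages in chronological order.
    criterion: required accuracy (e.g., 80, 90, or 100).
    consecutive: number of consecutive sessions required at that level.
    """
    run = 0
    for session_number, score in enumerate(scores, start=1):
        # Any session below the criterion resets the consecutive count.
        run = run + 1 if score >= criterion else 0
        if run == consecutive:
            return session_number
    return None


# Hypothetical scores, not data from the study.
scores = [60, 80, 90, 80, 70, 80, 90, 100]
print(sessions_to_mastery(scores, criterion=80))  # 4
print(sessions_to_mastery(scores, criterion=90))  # None
```

The same sequence of scores meets the 80% criterion at the fourth session but never meets the 90% criterion, which illustrates why more stringent criteria tend to require more teaching sessions.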

Preference Assessment

Researchers conducted a multiple-stimulus without-replacement preference assessment prior to the onset of the experiment for each participant in order to identify highly preferred edibles and tangibles (DeLeon & Iwata, 1996). The preference assessment included up to seven edible or tangible items identified as preferred items based on caregiver or teacher reports. Before beginning each experimental session, researchers presented the three most highly preferred items and instructed the individual to select a preferred item to work for. The researchers used the selected item in the subsequent training session.

Baseline

During baseline, therapists presented an array of three target stimuli equally spaced on the table in front of the participant. The therapist provided the discriminative stimulus “What’s this?” while pointing to the target stimulus. Therapists did not deliver any consequences for correct or incorrect responses in baseline. In addition, the therapist did not deliver any prompts during this phase. Therapists provided an edible or tangible item (30-s access) paired with nonspecific praise (e.g., “Cool working with me!”) on a fixed-time 1-min schedule to reduce the likelihood of problem behavior during baseline sessions.

Teaching

During this phase, therapists presented trials as described previously with an embedded prompt sequence, differential reinforcement for correct responses, and an error correction procedure. Therapists employed an MTL prompting procedure combined with a progressive time delay with the following steps: (a) 0-s delay to a full vocal prompt, (b) 2-s delay to a partial vocal prompt, (c) 4-s delay to a partial vocal prompt, and (d) no prompt. Researchers advanced to the next, less intrusive prompt step after participants met the prescribed mastery percentage assigned to the target set for two consecutive sessions. For example, for the target set assigned to the 80% accuracy criterion, the therapist would decrease the intrusiveness of the prompt when the participant achieved two consecutive sessions at 80% correct (prompted or independent). If the participant engaged in three incorrect responses within a 10-trial session, the therapist completed the session at the current prompt step and increased the intrusiveness of the prompt for the following session.
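The prompt-fading rules described above can be sketched as a small state update applied after each session. This is an illustration under stated assumptions, not the researchers' protocol: the function and variable names are hypothetical, "three incorrect responses" is treated as three or more, and the consecutive-session count is assumed to reset after any step change.

```python
PROMPT_STEPS = [
    "0-s delay, full vocal prompt",
    "2-s delay, partial vocal prompt",
    "4-s delay, partial vocal prompt",
    "no prompt",
]


def update_prompt_step(step, streak, percent_correct, errors, criterion):
    """Return (next_step, next_streak) after one 10-trial session.

    step: index into PROMPT_STEPS used in the session just completed.
    streak: consecutive sessions at or above criterion at this step.
    percent_correct: prompted plus independent correct responses (%).
    errors: number of incorrect responses in the session.
    """
    if errors >= 3:
        # Assumed reading of the rule: move back to a more intrusive prompt.
        return max(step - 1, 0), 0
    if percent_correct >= criterion:
        streak += 1
        if streak >= 2 and step < len(PROMPT_STEPS) - 1:
            # Two consecutive sessions at criterion: fade the prompt.
            return step + 1, 0
        return step, streak
    return step, 0


# Hypothetical sessions as (percent_correct, errors) for a 90% target set.
step, streak = 0, 0
for percent_correct, errors in [(100, 0), (100, 0), (90, 1), (60, 4)]:
    step, streak = update_prompt_step(step, streak, percent_correct, errors, 90)
    print(PROMPT_STEPS[step])
```

In this hypothetical run, the prompt fades to the 2-s delay after two sessions at criterion and returns to the 0-s delay after the session with four errors.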

Following a correct prompted or independent response, therapists delivered behavior-specific praise on a fixed-ratio 1 schedule. In addition, researchers provided 30-s access to preferred items/activities or a quarter-size amount of an edible on a variable-ratio 3 schedule of reinforcement. If an error occurred, the therapist re-presented the trial with a full vocal prompt and stated, “Say [target].” The therapist terminated the trial regardless of whether the participant engaged in the correct response following the error correction procedure.

Follow-Up Probes

After the participant met the assigned mastery criterion for a target set, researchers began the maintenance phase for that set. Researchers conducted weekly follow-up probes under baseline conditions for 4 weeks following mastery for each individual target set. That is, target sets for each condition were mastered at different times. As such, follow-up probes for each condition did not always fall on the same date, but rather at weekly increments calculated from the time when the participant met the mastery criterion for each target set. Given the applied setting of the study, several weekly probes could not be conducted due to scheduling conflicts. Researchers also introduced additional learning sets as described by Richling et al. (2019) to ensure that participants were being exposed to the same number of stimuli in a teaching phase throughout the study.
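Because probes were scheduled relative to each target set's own mastery date, the nominal schedule can be sketched as below. The function name and the dates are hypothetical, and the sketch ignores the scheduling conflicts that prevented some probes in the study.

```python
from datetime import date, timedelta


def nominal_probe_dates(mastery_date, weeks=4):
    """Return the nominal weekly probe dates following a mastery date."""
    return [mastery_date + timedelta(weeks=w) for w in range(1, weeks + 1)]


# Hypothetical mastery dates for two target sets of one participant.
mastery_dates = {"90% set": date(2020, 1, 6), "80% set": date(2020, 1, 15)}
for label, mastered_on in mastery_dates.items():
    print(label, [d.isoformat() for d in nominal_probe_dates(mastered_on)])
```

Two sets mastered on different days therefore have probe dates that do not coincide, even though each set is probed at the same intervals after mastery.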

For Walter, researchers were unable to conduct maintenance probes 1 week after mastery due to an extended holiday break. However, researchers conducted maintenance probes 2 weeks following mastery for all conditions (80%, 90%, and 100%), 3 weeks following mastery for 90%, and 4 weeks following mastery for 80% and 90%. For Paul, researchers conducted maintenance probes for Weeks 1, 2, 3, and 4 for the targets assigned to the 80% and 90% accuracy criteria. For the targets assigned to the 100% accuracy criterion, researchers conducted probes at Weeks 1, 3, and 4 following mastery.

Interobserver Agreement and Treatment Integrity

Researchers collected data for the purpose of measuring interobserver agreement (IOA) either in situ or from a video recording for 43% of all sessions (range 19%–62%) throughout the study. Researchers calculated IOA for 19% of sessions for Walter, 42% of sessions for Adam, and 62% of sessions for Paul. Researchers calculated IOA using the trial-by-trial method by dividing the total number of agreements by the total number of agreements and disagreements and then multiplying by 100. Researchers rounded percentages to the nearest whole number. Average agreement was 93% (range 80%–100%) for Walter, 99% (range 80%–100%) for Adam, and 100% (range 90%–100%) for Paul.
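The trial-by-trial IOA calculation can be written as a brief sketch. The function name and the list-of-labels data format are assumptions for illustration; the formula itself (agreements divided by agreements plus disagreements, multiplied by 100, rounded to a whole number) follows the description above.

```python
def trial_by_trial_ioa(primary, secondary):
    """Percentage of trials on which two observers recorded the same score.

    primary, secondary: equal-length lists of per-trial scores
    (e.g., "correct" or "incorrect").
    """
    if not primary or len(primary) != len(secondary):
        raise ValueError("Both observers must score the same nonzero number of trials.")
    agreements = sum(a == b for a, b in zip(primary, secondary))
    # Every trial is either an agreement or a disagreement,
    # so agreements + disagreements equals the number of trials.
    return round(100 * agreements / len(primary))


# Hypothetical 10-trial session with one disagreement on the last trial.
observer_1 = ["correct"] * 8 + ["incorrect"] * 2
observer_2 = ["correct"] * 8 + ["incorrect", "correct"]
print(trial_by_trial_ioa(observer_1, observer_2))  # 90
```

Python's `round` resolves exact halves to the nearest even integer; with 10-trial sessions the percentages are multiples of 10, so that case does not arise at the session level.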

A second observer collected data for measuring treatment integrity. The observer used a checklist and scored each of the following components as correct or incorrect: (a) delivery of the discriminative stimulus; (b) recording of data for correct and incorrect responses; (c) delivery of the prescribed prompt, if applicable; and (d) delivery of the consequence according to the participant’s response. Researchers calculated treatment integrity by dividing the number of correct responses by the total number of opportunities for a behavior and multiplying by 100 to get a percentage. Data were collected for 30% of all sessions (range 15%–41%) throughout the study. Average treatment integrity scores were 98% (range 93%–100%) for Walter, 99% (range 90%–100%) for Adam, and 100% (range 97.5%–100%) for Paul.
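The treatment integrity score can be sketched in the same way. The checklist keys below are hypothetical labels for components (a) through (d), and scoring a component as `None` when it does not apply (for example, no prompt was prescribed) is an assumption about how "if applicable" was handled.

```python
def treatment_integrity(checklist):
    """Percentage of applicable checklist components implemented correctly.

    checklist: list of dicts, one per trial, mapping a component name to
    True (correct), False (incorrect), or None (not applicable).
    """
    scored = [
        value
        for trial in checklist
        for value in trial.values()
        if value is not None  # skip components with no opportunity
    ]
    if not scored:
        raise ValueError("No scorable opportunities in the checklist.")
    return 100 * sum(scored) / len(scored)


# Hypothetical two-trial excerpt: 6 of 7 applicable components correct.
session = [
    {"sd": True, "data": True, "prompt": None, "consequence": True},
    {"sd": True, "data": True, "prompt": True, "consequence": False},
]
print(round(treatment_integrity(session), 1))  # 85.7
```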

Results

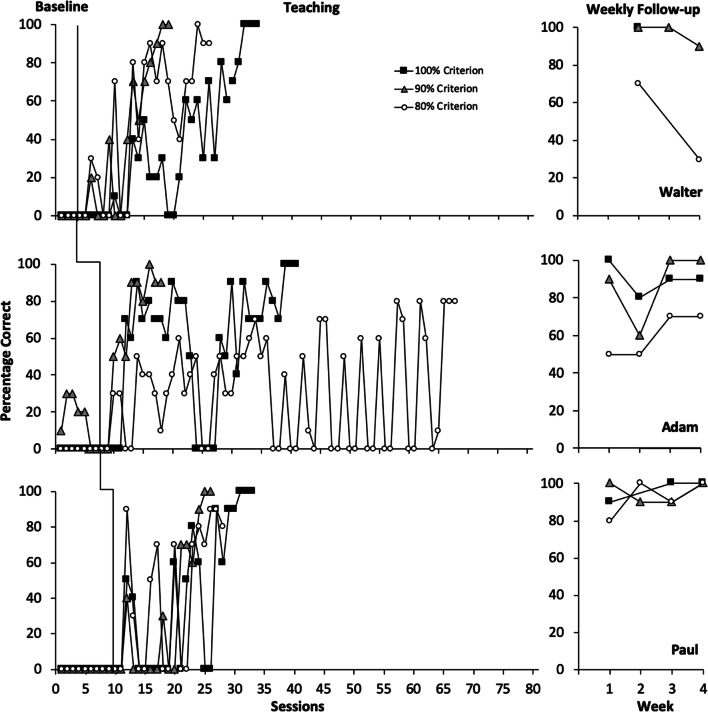

Figure 1 displays the results for Walter (top panel), Adam (middle panel), and Paul (bottom panel). During baseline, Walter emitted zero correct responses across all three target sets. With the introduction of teaching procedures, Walter demonstrated a steady increase in correct independent responding across all experimental conditions. Walter first met the mastery criterion for the target set assigned to the 90% accuracy criterion, followed by the target sets assigned to the 80% and 100% accuracy criteria, respectively. Walter achieved the mastery criteria for the 80%, 90%, and 100% target sets after 23, 13, and 31 teaching sessions, respectively. At Week 2, Walter demonstrated 100% correct responding for target sets assigned to the 90% and 100% accuracy mastery criteria. At Weeks 3 and 4, Walter maintained high levels of correct responding for the 90% accuracy mastery criterion target set, demonstrating 100% and 90% accuracy, respectively. However, Walter responded below mastery level on both maintenance probes conducted for the target set assigned to the 80% accuracy criterion, scoring 70% at Week 2 and 30% at Week 4.

Fig. 1.

Percentage of Responses Independently Correct. Note. The left panel depicts the percentage of responses independently correct for Walter, Adam, and Paul across sessions during baseline and teaching phases for 80% (white circles), 90% (gray circles), and 100% (black circles) criteria target sets. The right panel depicts the percentage of responses independently correct across weeks during weekly maintenance probes.

Adam (middle panel) responded with 0% correct independent responding for the 80% and 100% accuracy criteria target sets and low levels of responding for the 90% accuracy mastery criterion target set in baseline conditions. Adam immediately responded with a steady increase in correct independent responding with the introduction of teaching procedures. Adam first achieved mastery with the target set assigned to the 90% accuracy mastery criterion; then, he obtained mastery with the 100% and 80% accuracy mastery criteria. Adam achieved mastery for the 80%, 90%, and 100% target sets after 61, 11, and 34 teaching sessions, respectively. At Week 1, Adam performed at mastery levels of correct responding for the target sets assigned to the 90% and 100% accuracy mastery criteria conditions. Adam demonstrated 50% correct responding for the 80% accuracy mastery criterion at Week 1, which is well below the designated mastery criterion. At Week 2, correct responding slightly decreased to 60% and 80% for target sets assigned to the 90% and 100% accuracy mastery criteria, followed by an immediate increase to 100% and 90% during the Week 3 and Week 4 follow-up assessments. Correct responding remained steady at 50% correct responding for the 80% accuracy mastery criterion at the Week 2 probe and slightly increased to 70% correct responding during the Week 3 and Week 4 probes. However, levels of correct responding for the target set assigned to the 80% accuracy mastery criterion remained below mastery levels across all four maintenance probes.

Paul (bottom panel) responded with 0% correct independent responding across all target sets in baseline conditions. Paul immediately responded with a steady increase in correct independent responding with the introduction of teaching procedures. Paul first achieved mastery with the target set assigned to the 90% accuracy criterion; then, he obtained mastery in the 80% and 100% accuracy criteria. Paul achieved mastery for the 80%, 90%, and 100% target sets after 19, 17, and 24 teaching sessions, respectively. During weekly follow-up probes, Paul performed at or above the assigned mastery criterion level for each set. Paul performed with the highest levels of accuracy on the target set assigned to the 100% accuracy mastery criterion, scoring 90% or higher on each probe. Paul performed with the lowest levels of accuracy on the target set assigned to the 80% accuracy mastery criterion, scoring between 80% and 100% on each probe.

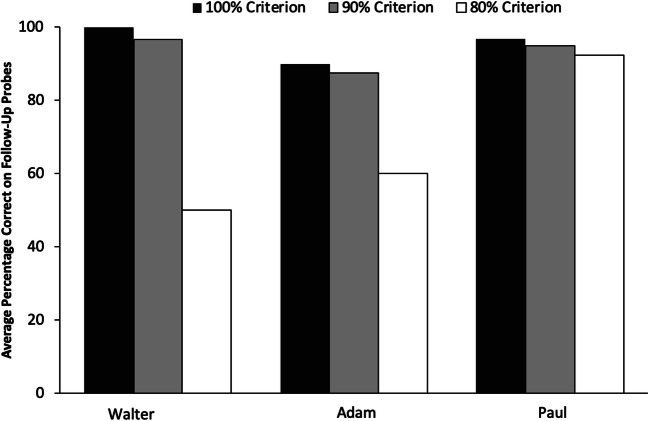

Figure 2 depicts the average levels of performance during weekly follow-up probes for each mastery criterion target set across participants. For all participants, performance during follow-up probes deteriorated as researchers lowered the mastery criteria. For Walter and Adam, the 100% and 90% accuracy criteria were sufficient for maintaining average levels of responding at greater than 80% accuracy during maintenance. For Paul, responding on target sets assigned to each mastery criterion maintained above 90% average accuracy during weekly follow-ups. For all participants, the 80% accuracy criterion resulted in the lowest levels of accurate responding during follow-ups.

Fig. 2.

Average Percentage Correct on Follow-Up Probes for Each Mastery Criterion Target Set Across Participants

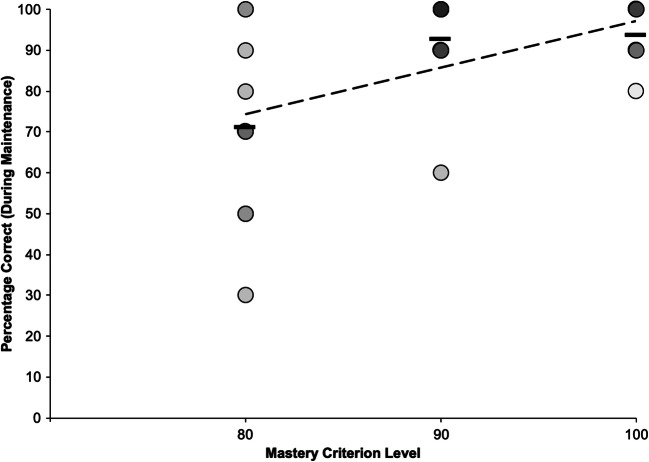

Figure 3 depicts the percentage of correct responses on the y-axis during all maintenance probes for each participant and the assigned mastery criterion on the x-axis. The darker data points indicate overlapping scores. The black bars depict the overall performance averages on maintenance probes for each mastery criterion. The average level of performance was 71% (range 30%–100%), 93% (range 60%–100%), and 94% (range 80%–100%) for the 80%, 90%, and 100% mastery criteria, respectively. Similar to Fuller and Fienup (2018), the data depict higher variability and lower levels of performance with lower mastery criteria. In contrast, higher levels of accuracy required for mastery criteria resulted in a narrower range and higher levels of performance during maintenance. The dashed line represents the linear trend for the average scores across each mastery criterion. The trend line displays a positive correlation between the prescribed accuracy level for mastery criteria and the average performance score during follow-up probes.

Fig. 3.

Percentage Correct During Maintenance for Each Participant Across Mastery Criteria Levels. Note. Each gray circle represents an individual’s performance, with the darker gray circles depicting overlapping data. The black horizontal hashes at each criterion level depict the average performance during maintenance for all participants at that criterion level. The dashed line represents the linear trend.
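As an illustration of the trend described for Figure 3, an ordinary least-squares line can be fit through the three reported averages (71%, 93%, and 94% for the 80%, 90%, and 100% criteria). This is a sketch under the assumption that the dashed line was fit to those three averages; the fitting method used for the figure is not reported, and the function name is hypothetical.

```python
def least_squares_line(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


criteria = [80, 90, 100]   # assigned mastery criteria (%)
averages = [71, 93, 94]    # average maintenance performance (%) from the text
slope, intercept = least_squares_line(criteria, averages)
print(round(slope, 2), round(intercept, 1))  # 1.15 -17.5
```

Under this assumption, the slope of 1.15 indicates that each additional percentage point in the mastery criterion corresponds to roughly 1.15 percentage points of average maintenance performance. With only three points, and with nearly all of the change occurring between the 80% and 90% criteria, the line is a coarse summary rather than evidence of a linear relationship.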

Discussion

The current study evaluated the effects of three mastery criteria (80%, 90%, and 100% accuracy across three sessions) on the maintenance of skills taught with a combined MTL and time-delay prompting hierarchy. Results show higher levels of performance for target sets assigned to higher accuracy criteria (90% and 100%) when compared to targets taught to a lower accuracy criterion (80%) for three participants during weekly follow-up probes. The outcomes of this study extend the previous literature by providing a direct evaluation of the relationship between mastery criteria and maintenance for skills taught with an MTL prompting hierarchy with a time delay. The findings have important implications for practitioners’ and researchers’ selection of mastery criteria for skill acquisition programs.

Our findings replicate the results of Richling et al. (2019) by demonstrating high levels of maintenance for skills assigned to the 100% accuracy mastery criterion condition. Previous literature indicates idiosyncratic results regarding the effects of a 90% accuracy criterion on skill maintenance (Fuller & Fienup, 2018; Richling et al., 2019). The varied results may be attributed to the differences in the number of observations required at the designated level of performance or accuracy. Additionally, in contrast to the current study, the previously mentioned studies employed an LTM prompting hierarchy during acquisition. The results of this study suggest that a lower accuracy requirement (90%) may produce acceptable levels of performance during maintenance if the skills are taught using an MTL hierarchy combined with a time delay procedure, and a more stringent accuracy criterion may be required to produce maintenance for skills taught with an LTM procedure.

The findings of this study help to provide a more comprehensive evidence base for guiding practitioners’ selection of mastery criteria. Whereas most practitioners are currently employing an 80% accuracy requirement across three consecutive sessions, the previous literature and our current findings suggest this criterion may not be sufficient for maintaining responding once teaching is terminated (Richling et al., 2019). Similar to Richling et al. (2019) and Fuller and Fienup (2018), the current findings further demonstrate that a more stringent mastery criterion or higher level of accuracy predicts higher levels of performance after training.

An additional contribution of this study to the current literature points to the potential influence of skill level on the maintenance of skills. Anecdotally, Paul had the most advanced language repertoire of the three participants. In turn, Paul performed at high levels of accuracy across all experimental conditions during follow-up probes. However, no formal assessments were conducted across the three participants that support this theory. Future research should directly evaluate the extent to which skill level may contribute to maintenance. A replication of this study that includes employing a single formal assessment across a larger sample of participants with varied skill sets would allow researchers to evaluate this relationship further. Alternatively, one might conduct a post hoc analysis of a large existing clinical data set within an organization that has systemically adopted the consistent use of (a) a single formal skill assessment, (b) set mastery criteria, and (c) reliably conducted follow-up maintenance probes. Research of this nature could support the theory that practitioners may be able to adopt a less stringent accuracy criterion for individuals with higher skill sets. By assigning a lower mastery criterion, individuals may master targets more quickly, which allows novel targets to be introduced into teaching. This could result in a higher density of mastered targets over time. However, more research needs to be conducted to compile empirical evidence for this clinical practice for several reasons. First, the number of remaining errors may compound and create additional errors for composite skills later on. Second, the rate of acquisition of targets may also contribute to the maintenance of skills. It is plausible that targets that are more difficult to acquire in teaching may be more difficult to retain. 
Adam reached the 80% mastery criterion after 61 teaching sessions, substantially more than the 11 and 34 teaching sessions he required to reach the 90% and 100% mastery criteria, respectively. Adam also performed with the lowest level of correct responding during the follow-up probes for the target set assigned to the 80% condition despite having a substantially higher number of teaching sessions in that condition. This may suggest that those targets were more difficult to acquire, which led to poorer maintenance. Future research should attempt a more formal assessment of target difficulty and equate target sets as much as is clinically possible.

Several additional limitations of the current study warrant further discussion. First, treatment integrity errors occurred in which one participant was exposed to sessions with more intrusive prompts than those designated by the prescribed prompting procedures. This occurred for one session with the 100% target set for Paul. It is possible that additional exposures to more intrusive prompts influenced the levels of maintenance for that target set.

Second, due to the applied setting of the study, researchers were unable to conduct weekly maintenance probes for all participants across all conditions. For Walter, researchers conducted maintenance probes at Weeks 2, 3, and 4 for the target set assigned to the 90% criterion. However, researchers were only able to conduct maintenance probes at Weeks 2 and 4 for the 80% criterion. It is possible that the gap in follow-up probes, which resulted in less exposure to the target stimuli during the maintenance phase, contributed to lower levels of maintenance for targets in the 80% mastery criterion set. However, prior to missing a scheduled weekly probe, Walter had already performed below mastery level, scoring 70% accuracy during his first follow-up probe. More complete data sets would allow researchers to draw stronger inferences about the relationship between the mastery criteria and maintenance. Additionally, researchers did not always conduct weekly maintenance probes exactly 7 days apart due to school breaks, illnesses, and other absences.

Third, the researchers did not conduct any within-subject replications during this study. Without within-subject replications, it is possible that the results are due to the targets themselves rather than the assigned mastery criteria. A within-subject replication would aid in the interpretation of Adam’s data, given that the rate of acquisition varied widely across target sets.

Future research should seek to address these limitations and provide further evidence to support the relationship between the varying levels of accuracy within mastery criteria and their effects on the maintenance of skills. Additionally, future research should evaluate the effects of other components of mastery criteria on the maintenance of skills. For example, researchers could evaluate the effects of the number of observations of performance required to meet mastery by comparing the effects of a 100% accuracy criterion across one, two, or three consecutive sessions. Researchers could evaluate the temporal component of mastery criteria by comparing the effects of requiring a prescribed level of performance across 1, 2, or 3 days.
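The two dimensions of a mastery criterion discussed above (the accuracy level and the number of consecutive sessions at that level) can be made concrete with a minimal sketch. The function name `sessions_to_mastery`, its parameters, and the session scores are hypothetical and invented for illustration; they are not data or procedures from the current study, and the sketch covers only the consecutive-sessions component, not the temporal (across-days) component.

```python
from typing import Optional, Sequence


def sessions_to_mastery(
    accuracies: Sequence[float],
    level: float,
    consecutive: int,
) -> Optional[int]:
    """Return the 1-based session number at which the criterion is first met.

    The criterion is met once `consecutive` sessions in a row each have
    accuracy at or above `level`. Returns None if it is never met.
    """
    if consecutive < 1:
        raise ValueError("consecutive must be at least 1")
    run = 0  # length of the current run of sessions at or above `level`
    for session_number, accuracy in enumerate(accuracies, start=1):
        run = run + 1 if accuracy >= level else 0
        if run >= consecutive:
            return session_number
    return None


# Invented percent-correct scores per session, for illustration only.
example_scores = [60, 80, 100, 90, 100, 100, 100]

# Compare a 100% criterion across one, two, or three consecutive sessions.
for n in (1, 2, 3):
    print(n, sessions_to_mastery(example_scores, level=100, consecutive=n))
```

With these invented scores, requiring more consecutive sessions delays the point at which mastery is declared, which is the kind of manipulation such a comparison would evaluate against later maintenance.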

Lastly, future research should examine variables within mastery criteria that may affect the generalization of skills to untrained environments. For example, a study could evaluate the effects of training skills to mastery with one, two, or three therapists on performance in generalization probes. In order to provide researchers and practitioners with a sufficient amount of empirical support to make evidence-based decisions in regard to the selection of mastery criteria, more research is warranted in this area. Extensions of this line of research are essential to providing practitioners with the most effective and efficient technology to produce long-lasting and socially significant changes.

The results of the current study are only preliminary and should be viewed in conjunction with previous research as an initial guide for the selection of evidence-based mastery criteria associated with both skill acquisition and response maintenance. Still, the current study suggests that a mastery criterion of 80% accuracy across three sessions may not be sufficient to produce response maintenance when combined with an MTL/time delay procedure. Alternatively, a mastery criterion of 90% or 100% accuracy across three sessions may be sufficient for producing response maintenance when combined with an MTL/time delay procedure. When the results of the current study are viewed in combination with previous research (Richling et al., 2019), a criterion of 90% accuracy across three sessions may be better suited to an MTL/time delay procedure than to an LTM prompting procedure. However, future research should evaluate this comparison directly.

Further, the current study highlights that the particular components selected for a learning context (e.g., the prompting strategy and the mastery criterion) can be viewed as independent variables that interact and collectively contribute to learning outcomes (e.g., response maintenance). This remains true across the empirical research on skill acquisition. In this light, any given teaching component may be studied in isolation for empirical purposes, but in applied settings that component is often adopted within a new configuration of the learning context. Although this practice is common, the new configuration may not produce the same learning outcomes. Therefore, clinicians should consider the appropriateness of employing only specific components of the methodology from a given research study, and closely monitor response maintenance when this practice is adopted.

Declarations

Conflict of Interest

Emily Longino, Sarah Richling, Cassidy McDougale, and Jessie Palmier declare that they have no conflicts of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. All procedures were in accordance with the ethical standards of the Behavior Analyst Certification Board.

Informed Consent

Informed consent was obtained from all individual participants included in this study.

Footnotes

This study was conducted by the first author, under the supervision of the second author, in partial fulfillment of the requirements for the master of science degree in applied behavior analysis at Auburn University.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Baer, D. M., Wolf, M. M., & Risley, T. R. (1968). Some current dimensions of applied behavior analysis. Journal of Applied Behavior Analysis, 1(1), 91–97. https://doi.org/10.1901/jaba.1968.1-91

- Cooper, J. O., Heron, T. E., & Heward, W. L. (2007). Applied behavior analysis (2nd ed.). Merrill.

- DeLeon, I. G., & Iwata, B. A. (1996). Evaluation of a multiple-stimulus presentation format for assessing reinforcer preferences. Journal of Applied Behavior Analysis, 29(4), 519–533. https://doi.org/10.1901/jaba.1996.29-519

- DeWiele, L., Martin, G., Martin, T. L., Yu, C. T., & Thomson, K. (2011). The Kerr-Meyerson assessment of basic learning abilities revised: A self-instructional manual (2nd ed.). St. Amant Research Centre, Winnipeg, MB, Canada. Retrieved from http://stamant.ca/research/abla

- Fentress, G. M., & Lerman, D. C. (2012). A comparison of two prompting procedures for teaching basic skills to children with autism. Research in Autism Spectrum Disorders, 6(3), 1083–1090. https://doi.org/10.1016/j.rasd.2012.02.006

- Fuller, J. L., & Fienup, D. M. (2018). A preliminary analysis of mastery criterion level: Effects on response maintenance. Behavior Analysis in Practice, 11(1), 1–8. https://doi.org/10.1007/s40617-017-0201-0

- Johnston, J. M., & O’Neill, G. (1973). The analysis of performance criteria defining course grades as a determinant of college student academic performance. Journal of Applied Behavior Analysis, 6(2), 261–268. https://doi.org/10.1901/jaba.1973.6-261

- Libby, M. E., Weiss, J. S., Bancroft, S., & Ahearn, W. A. (2008). A comparison of MTL and LTM prompting on the acquisition of solitary play skills. Behavior Analysis in Practice, 1(1), 37–43. https://doi.org/10.1007/BF03391719

- Love, J. R., Carr, J. E., Almason, S. M., & Petursdottir, A. I. (2009). Early and intensive behavioral intervention for autism: A survey of clinical practices. Research in Autism Spectrum Disorders, 3(2), 421–428. https://doi.org/10.1016/j.rasd.2008.08.008

- McDougale, C. B., Richling, S. M., Longino, E. B., & O’Rourke, S. A. (2020). Mastery criteria and maintenance: A descriptive analysis of applied research procedures. Behavior Analysis in Practice, 13(2), 402–410. https://doi.org/10.1007/s40617-019-00365-2

- National Autism Center. (2015). Findings and conclusions: National Standards Project, phase 2.

- Richling, S. M., Williams, W. L., & Carr, J. E. (2019). The effects of different mastery criteria on the skill maintenance of children with developmental disabilities. Journal of Applied Behavior Analysis, 52(3), 701–717. https://doi.org/10.1002/jaba.580

- Sayrs, D. M., & Ghezzi, P. M. (1997). The steady-state strategy in applied behavior analysis. The Experimental Analysis of Human Behavior Bulletin, 15(2), 29–30.

- Semb, G. (1974). The effects of mastery criteria and assignment length on college-student test performance. Journal of Applied Behavior Analysis, 7, 61–69. https://doi.org/10.1901/jaba.1974.7-61

- Sindelar, P. T., Rosenberg, M. S., & Wilson, R. J. (1985). An adapted alternating treatments design for instructional research. Education and Treatment of Children, 8(1), 67–76.