Abstract

Background

During the coronavirus disease (COVID-19) pandemic, low- and middle-income countries have rapidly scaled up intensive care unit (ICU) capacities. Doing this without monitoring the quality of care poses risks to patient safety and may negatively affect patient outcomes. While monitoring the quality of care is routine in high-income countries, it is not systematically implemented in most low- and middle-income countries. In this resource-scarce context, there is a paucity of feasibly implementable tools to monitor the quality of ICU care. Lebanon is an upper middle-income country that, during the autumn and winter of 2020–1, has had increasing demands for ICU beds for COVID-19. The World Health Organization has supported the Ministry of Public Health to increase ICU beds at public hospitals by 300%, but no readily available tool to monitor the quality of ICU care was available.

Objective

The objective with this study was to describe the process of rapidly developing and implementing a tool to monitor the quality of ICU care at public hospitals in Lebanon.

Methods

In the midst of the escalating pandemic, we applied a systematic approach to develop a realistically implementable quality assurance tool. We conducted a literature review, held expert meetings and did a pilot study to select among identified quality indicators for ICU care that were feasible to collect during a 1-hour ICU visit. In addition, a limited set of the identified indicators that were quantifiable were specifically selected for a scoring protocol to allow comparison over time as well as between ICUs.

Results

A total of 44 quality indicators, which, using different methods, could be collected by an external person, were selected for the quality of care tool. Out of these, 33 were included for scoring. When tested, the scores showed a large difference between hospitals with low versus high resources, indicating considerable variation in the quality of care.

Conclusions

The proposed tool is a promising way to systematically assess and monitor the quality of care in ICUs in the absence of more advanced and resource-demanding systems. It is currently in use in Lebanon. The proposed tool may help identifying quality gaps to be targeted and can monitor progress. More studies to validate the tool are needed.

Keywords: COVID-19, critical care, quality of health care, checklist

Introduction

The coronavirus disease (COVID-19) pandemic has resulted in significant pressure on health systems globally, particularly in low- and middle-income countries (LMICs) where resources are limited [1]. Critical care is hospital-based care for the most severely sick patients and has been a key part of the response to the pandemic [2]. Critically ill patients in high-income countries (HICs) are typically treated in intensive care units (ICUs) staffed with highly specialized healthcare workers and high-cost equipment and medication [3]. However, in most LMICs, ICU care is not available or is significantly constrained due to the lack of resources.

Mortality outcomes following ICU care varied significantly between LMICs and HICs prior to the COVID-19 pandemic [4]. It is likely that these differences have increased further during the pandemic due to rapid increase of patients as well as urgent scale-up of ICU beds without sufficiently added resources.

The quality of care is high on the global health agenda and is considered a crucial part of the work to reach universal health coverage (UHC) and Sustainable Development Goals 3 and 8 [5]. In HICs, assessment tools to measure and monitor the hospital’s quality of care are part of regular routines and are well studied [6–8]. By routinely collecting indicators of quality, gaps can be identified, and shortcomings addressed [9]. The use of quality assessment tools in hospital settings in LMICs is not well studied. However, it may be assumed that such tools could significantly contribute to improving mortality outcomes, with significantly greater effects compared to HICs [4].

Lebanon is an upper middle-income country where health care is mainly provided by a private, for-profit system. Before the compound crisis of the Lebanese liquidity crisis and the COVID-19 pandemic, 90% of hospital beds were in the private sector (unpublished data from World Health Organization Regional Office for Eastern Mediterranean). The public hospital system governed by the Ministry of Public Health (MOPH) is underfunded, and public hospitals lack staff, equipment and medications [10].

Lebanon was relatively spared from COVID-19 during the spring of 2020. However, following the explosion in the Beirut harbour in August, COVID-19 cases rapidly increased [10] triggering additional needs for COVID-19 hospital care and more ICU beds. At the end of August 2020, a total of 300 ICU beds, dedicated to COVID-19 care beds, were available in Lebanon. Significant efforts were invested in scaling up the number of ICU beds, and by 1 April 2021, a total of 1176 ICU beds were available, out of which 90% were occupied [11]. In public hospitals, ICU beds almost tripled in <8 months accounting for around 40% of total ICU beds available for COVID-19 care. However, there were significant concerns regarding quality care for critically ill COVID-19 patients, especially in public hospitals as they were severely understaffed and lacked systematic implementation of evidence-based protocols for ICU care (World Health Organization Lebanon, Public Hospitals Assessment 2020: Unpublished).

In this context, the World Health Organization (WHO) in Lebanon focused its support to public hospitals to improve the quality of care and upgrade their capacity through procurement of necessary equipment, coaching and hiring extra nursing staff. To ensure patient safety at the supported hospitals, a tool to monitor the quality of care in ICUs was urgently needed. However, a validated, easily implementable quality assessment tool for ICU care, which was applicable to the context, was neither readily available nor sufficiently described in the scientific literature. Despite this, it was essential to rapidly develop such a tool to be implemented while scaling up ICU beds in the midst of an escalating health emergency. The aim of this paper is to describe the ICU-care quality of care tool that was developed and the process of developing it. The tool is currently being used in 11 public COVID-19 ICUs in Lebanon.

Methods

In the rapidly evolving and urgent COVID-19 pandemic, we implemented a quality assurance project. Between September and November 2020, we created a tool to assess and document the quality of care in the ICUs, using a systematic approach. We wanted the tool to be able to capture quality at a baseline assessment and assess changes in quality over time.

Ideally, a tool to monitor the quality of care should be based on patient outcome data. However, in our setting such data were neither readily available as if so difficult to interpret due to significant case-mix variations. For the quality of care, we sought inspiration for the Donobedian model that provides a framework to examine and evaluate the quality of health care including ICU [28]. According to the model, information about the quality of care can be drawn from three domains: structure, process and outcomes. In our case, we focused on the first two.

Besides assessing the quality of care, we also wanted the tool to monitor changes following supportive interventions, such as the addition of extra nurses, the introduction of high-flow nasal cannula machines and the implementation of new protocols.

We defined an ICU according to the MOPH Lebanon accreditation standards classification and required the ICU to reach at least level II according to the international classification [3], which requires the ICU to have the possibility to provide invasive mechanical ventilation.

First, we conducted a literature review to identify potential indicators on structure and process for the checklist. We searched PubMed using the key words ‘assessment’, ‘ICU’, ‘quality of care’, ‘minimal standards’, ‘low resource setting’ and ‘quality indicators’. To assess the identified manuscripts, we followed the PRISMA checklist [12]. From the literature, we extracted indicators that met the following criteria: (i) indicators that covered the domains of infrastructure, equipment and drugs, staffing, training and development, protocols and clinical management [13]; (ii) indicators that were related to COVID-19 critical care and (iii) indicators that were considered feasible to collect. In this context, we assumed indicators were feasible to collect if they were possible to collect within 1 h or could be collected through observations, review of medical records or by interviewing a responsible physician and nurse at each ICU [14]. We then reviewed the ICU quality of care indicators within the accreditation standards of the Lebanese MOPH [15] and identified and added further possible indicators.

Secondly, we sought the expert opinion of two experienced ICU physicians familiar with working in LMIC settings and three public health physicians well orientated in the healthcare system in Lebanon. The experts were asked to assess each indicator based on our selection criteria and to focus on creating a checklist that would be able to discriminate between ICUs performing above or below minimum standards. Due to time limitations, the indicators were selected in one single face-to-face meeting with the five experts. Consensus among the five experts was defined a priori as a prerequisite for an indicator to be included in the tool.

Finally, a pilot test was performed in one public hospital ICU to test the feasibility of collecting the selected indicators. To ensure the standardized use of the checklist, we created instructions for how to collect the data, including how to assess and categorize the parameters of each indicator. We stipulated that data collectors should be an experienced ICU physician or ICU nurse. We also performed a test where two data collectors filled in the checklist independently of each other in order to evaluate the concordance of the results. We added an additional specification to the instructions after this test.

Finally, we selected a group of indicators from the checklist to create a scoring protocol. The protocol was developed to provide numerical estimates of selected key indicators. This would allow one to monitor quality progress over time as well as enable the comparison of results between ICUs. Indicators from the checklist were selected for the scoring protocol if they could be quantified and if they could be classified as either above or below minimum standards. The selection process was performed by the same expert opinion group. We decided on a simple protocol where each included indicator could generate zero or one point. If data were missing on any indicator, one point was subtracted from the total sum. Scoring results were displayed as both the sum of the score and the percentage of the maximum possible score.

To assess the checklist’s ability to capture significant differences in the quality of care between high versus low resourced ICUs, we conducted a test in one public hospital with low resources and two high-resourced university hospitals that had adopted internationally standardized protocols for ICU care. This was done under the assumptions that the quality of care would vary between the hospitals [16].

This study documents a quality assurance project that is part of improving health care in Lebanon. As such, there has been no formal ethical application submitted for writing up this study. No identifiable patient data were used, and no individual has been exposed to any harm in this study.

Results

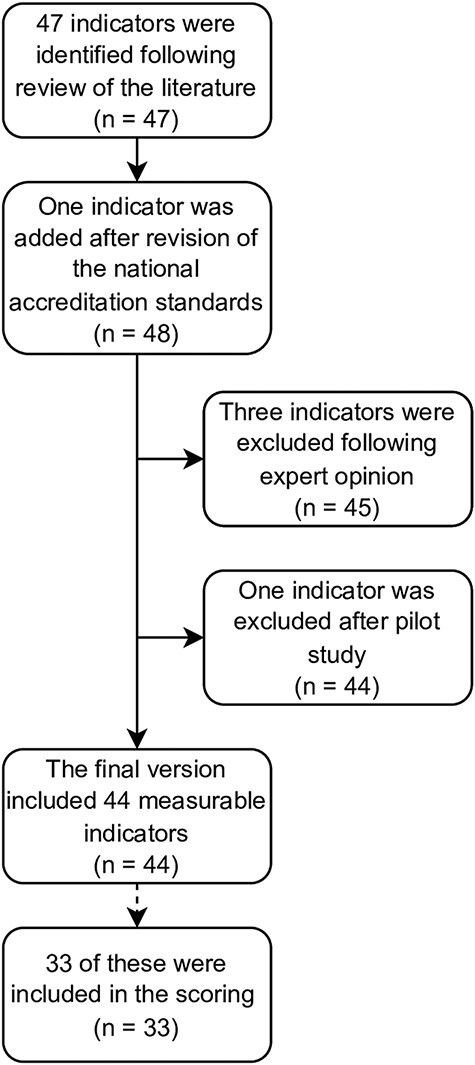

A total of 47 indicators were identified following the literature review, and after revision of the national accreditation standards, one further indicator was added, for a total of 48 potential indicators. Three indicators were excluded following expert opinion, and one indicator was excluded after the pilot, all due to doubts regarding the feasibility of collecting them (Figure 1). In the final checklist, 44 measurable indicators were included (see Table 1).

Figure 1.

Selection of indicators.

Table 1.

Forty-four indicators selected for the quality of care tool categorized by domains

| A Observations (at the moment of the visit) | Subgroup |

|---|---|

|

Nurse/patient ratio |

Staffing |

|

Person present at the central monitors |

Staffing |

|

ICU patients intubated |

Clinical management |

|

Patients on BiPAP or CPAP |

Clinical management |

|

Patients on high-flow nasal cannula |

Clinical management |

|

Patients that fill discharge criteria |

Clinical management |

|

No. of high-flow nasal cannula readily available in total |

Clinical management |

|

No. of ventilators readily available in total |

Clinical management |

|

All patients directly visible/or visible by camera |

Infrastructure |

|

Clearly separated cleaned/dirty area |

Infrastructure |

|

Complete intubation equipment readily available |

Equipment/drugs |

|

Complete intubation drugs readily available |

Equipment/drugs |

|

Readily available written protocols for intubation |

Protocols |

|

Readily available written protocols for prone positioning |

Protocols |

|

Any patient with asynchrony with ventilator |

Clinical management |

|

Any patient with no peak pressure alarm set |

Clinical management |

|

Intubated patients with head elevated 30° |

Clinical management |

|

No. of prone patients (intubated or awake) |

Clinical management |

|

No. of unattended alarms during 10 min |

Clinical management |

| B Assessment of patient files/observational charts | Subgroup |

|

No. of noted values of respiratory rate |

Clinical management |

|

No. of noted values of minute volume or tidal volume |

Clinical management |

|

No. of noted values of peak pressure |

Clinical management |

|

No. of noted values of blood pressure |

Clinical management |

|

Fluid balance last 24 h |

Clinical management |

|

Noted measures following the last severely deranged value |

Clinical management |

|

Arterial blood gas daily |

Clinical management |

|

Measures undertaken following the pathologic ABG results |

Clinical management |

|

Electrolytes daily |

Clinical management |

|

D-dimer at least two times per week |

Clinical management |

|

Anticoagulation provided based on national guidelines |

Clinical management |

|

Receiving proton pump inhibitors |

Clinical management |

| C Questions to the present doctor/nurse/supervisor | Subgroup |

|

Doctor rounds two times per day or more |

Staffing |

|

How long did it take for doctor to arrive for the last alarm |

Staffing |

|

Time specialist doctor present yesterday |

Staffing |

|

Nurse/patient ratio night shift (21.00-06.00) |

Staffing |

|

Nurse/patient ratio weekend (Sat–Sun) |

Staffing |

|

Emergency X-ray available for ICU patients |

Infrastructure |

|

Echocardiography available within 1 day in ICU |

Infrastructure |

|

Time from sampling to result of arterial blood gas |

Infrastructure |

|

System for recording complications |

Training and development |

|

(a) Thromboembolism diagnosed? (b) If yes, how? |

Clinical management |

|

(a) Pneumothorax diagnosed? (b) If yes, how? |

Clinical management |

|

Morbidity and mortality conference on a regular basis |

Training and development |

|

Responsible doctor specialist with critical care diploma |

Staffing |

Abbreviation: BiPAP: bilevel positive airway pressure; CPAP: continous positive airway pressure.

The comparison of results between two different collectors showed a high concordance; only one indicator differed in classification between the two collectors.

For scoring, 33 out of 44 indicators were selected by the expert opinion group. The indicators that were not selected were mainly descriptive. Although still valid for identifying possible support to an ICU, they were not able to classify as above or below any standard. The maximum score was 26, as 11 indicators were grouped (see Table 2).

Table 2.

Indicators selected for the scoring protocol, including points

| Staffing (max 3p) | |

|---|---|

| C14 Responsible doctor specialist with critical care diploma | 1p if yes, 0p if no |

| A01 Nurse/patient ratio ≥0.5 C04 Nurse/patient ratio night shift (21.00-06.00) ≥0.5 C05 Nurse/patient ratio weekend (Sat–Sun) ≥0.5 |

1p if all true, 0p if at least one false |

| C01 Doctor rounds two times per day or more | 1p if yes, 0p if no |

| Infrastructure (max 5p) | |

| A09 All patients directly visible/or visible by camera | 1p if yes, 0p if no |

| A10 Clearly separated cleaned/dirty area | 1p if yes, 0p if no |

| C06 Emergency X-ray available for ICU patients | 1p if yes, 0p if no |

| C07 Echocardiography available within 1 day in ICU | 1p if yes, 0p if no |

| C08 Time from sampling to result of arterial blood gas | 1p if ≤15 min, 0p if >15 min |

| Equipment/drugs (max 2p) | |

| A11 Complete intubation equipment readily available | 1p if yes, 0p if no |

| A12 Complete intubation drugs readily available | 1p if yes, 0p if no |

| Clinical management (12p) | |

| A17 Intubated patients with head elevated | 1p if ≥80%, 0p if <80% |

| A18 No. of prone patients (intubated or awake) | 1p if 1 or more patients, 0p if 0 patients |

| B01 No. of noted values of respiratory rate >12 B02 No. of noted values of minute volume or tidal volume >6 B03 No. of noted values of peak pressure >6 B04 No. of noted values of blood pressure >12 |

1p if all true, 0p if at least one false |

| B05 Fluid balance last 24 h | 1p if yes, 0p if no |

| B07 Arterial blood gas daily | 1p if yes, 0p if no |

| B09 Electrolytes daily B10 D-dimer at least two times per week |

1p if both true, 0p if at least one false |

| B11 Anticoagulation provided based on national guidelines B12 Receiving proton pump inhibitors |

1p if both true, 0p if at least one false |

| B06 Noted measures following the last severely deranged value | 1p if yes, 0p if no |

| B08 Measures undertaken following the pathologic ABG results | 1p if yes, 0p if no |

| A19 No. of unattended alarms during 10 min | 1p if no unattended alarm, 0p if unattended alarm exists |

| A15 Any patient with asynchrony with ventilator | 1p if no, 0p if yes |

| A16 Any patient with no peak pressure alarm | 1p if no, 0p if yes |

| Training and development (max 2p) | |

| C09 System for recording complications | 1p if yes, 0p if no |

| C13 Morbidity and mortality conference on a regular basis | 1p if yes, 0p if no |

| Protocols (max 2p) | |

| A13 Readily available written protocols for intubation | 1p if yes, 0p if no |

| A14 Readily available written protocols for prone positioning | 1p if yes, 0p if no |

| Total max 26p | |

| Missing data give zero points and one point is subtracted from the total | |

The scoring protocol was able to capture significant differences in the quality of care. The public hospital ICU received a score of 13 points or 52% of the maximum total score. The first university hospital ICU scored 21 points (84%), and the second university hospital ICU scored 23 points (90%).

Discussion

Statement of principal findings

The study has shown that it is possible to, in the midst of an escalating pandemic, during a short time and with limited resources, develop and implement a checklist to monitor the quality of care in ICUs in Lebanon. Furthermore, scoring the quality of care indicators seems to be a promising way to quantify the quality of care and compare between ICUs.

Strengths and limitations

The checklist was developed under significant time pressure. The main aim of the checklist was not research, but quality assurance. The strength of this manuscript is that it systematically documents the development and implementation of a quality assurance checklist in the midst of a pandemic with escalating ICU care needs. Without researched tools that assess the quality of care, ethical issues arise, since the right to health care also includes the right to quality of care [17] and ensuring high-quality care is a mandatory step to reaching UHC [5].

The rapid development of the checklist and the context has led to trade-offs and methodological considerations. The main one is linked to the selection of indicators and the checklist’s ability to capture the quality of care. The quality of care is a dynamic concept including a range of aspects, some of which are difficult to measure [8, 18]. The selected indicators are not in any way comprehensive, but they capture different domains, with the main focus on respiratory care, and include many indicators that have already been adopted internationally. By conducting both a literature review and seeking expert opinion in the selection process, the validity of the checklist was justified to a certain degree [8]. However, more studies are needed to validate the checklist and its feasibility to be implemented in other resource-limited settings.

Interpretation within the context of the wider literature

The quality of care was originally described as including three domains: structure, process and outcome [18]. We expanded these domains to five, according to previous research on developing assessment tools in LMIC [13]. All five domains can be categorized under structure or process, as we actively chose to exclude outcome measures from the checklist. Outcome measures require data collection over time which can be burdensome [19]. Mortality is the main outcome of quality of care, but due to significant variations in COVID-19 patient mix at the ICUs, we found it impossible to use. Furthermore, the literature advocates that quality indicators should primarily focus on process and less on outcome measures [20]. The checklist should be seen as a complement to existing outcome indicators that are routinely collected, as it has the advantage of being easier to collect and less influenced by case-mix variation [21]. To improve the quality of care, targeted interventions acting on the results of the assessment tool are needed. One such intervention that showed promising results was the introduction of a vital sign-directed therapy protocol in an ICU in Tanzania [22]. Other interventions could emphasize the importance of the essential care of all patients with critical illness, both within and outside ICUs [23]. However, this requires political willingness and economic resources, as well as motivated staff.

Medical records are frequently used to extract the quality of care indicators [24]. However, the quality and viability of collecting indicator data from records depends on reliable medical records and has been criticized as measuring what is documented rather than what is actually performed [25]. In this checklist, we complemented data from medical records with two more approaches: interviews [26] and direct observations. Using different approaches enables a more thorough picture of the reality in the ICU and increases the likelihood of generating robust results [27].

We tried to standardize data collection by including clear instructions with the checklist. Using explicit criteria to perform the assessment can increase the reliability of the process [28]. Our test showed a good concordance between two independent collectors, and we further modified the instructions for better clarity following the test. Still, questions can be asked differently and observations can be disparate among data collectors, which risks producing measurement bias [29]. We therefore advocate to keep the number of data collectors as limited as possible to guarantee the internal validity of the results. All data collectors should, however, have expertise in ICU care.

Assigning scores to the indicators enables a more pedagogical communication of the results and facilitates comparison between different ICUs, between the same ICU over time and before and after interventions in an ICU that are aimed at improving outcomes [30]. The indicators that were selected for scoring were those that could be dichotomized and could be defined as below or above minimum standards [31]. Unlike other assessment tools [26], we chose to only use dichotomized categorization in order to simplify the assessment process. This may have reduced the tool’s sensitivity to measure small differences between ICUs. However, it did facilitate the tool’s ability to identify ICUs that were performing below standard.

There was no gold standard to compare to our scoring protocol’s ability to capture differences in the quality of care. However, we assumed that comparing hospitals with low versus high resources and hospitals that had or did not have standardized protocols before the COVID-19-pandemic could be used as a surrogate for levels of quality of care. Provided that our assumption is valid, a difference of 40 percentage points between hospitals with high versus low resources indicates that our scoring protocol was able to capture a significant difference in the quality of care.

Implications for policy, practice and research

The policy implication of the proposed tool is of limited value unless it is included in larger efforts to improve the quality of care. It must be highlighted that our tool only provides quantitative and descriptive values and that documenting them will not automatically improve the quality of care. However, given the extreme situation with escalating number of severely sick COVID-19 patients and dramatic scale-up of ICU-bed, we found it necessary to document quality systematically. To what extent this effort will improve quality remains to be seen as it will require efforts outside the mandate of the WHO. Nevertheless, the quantifiable results document the situation and offer opportunities to numerically define. In upcoming papers, we will present the results of the scoring carried out. We hope that this paper will inspire colleagues in similar settings and hopefully serve as a basis for catalysing interventions for improvements. We also encourage colleagues in similar settings to write up their experiences of setting up systems to assess and monitor the quality of care in ICUs in LMIC during the COVID-19 pandemic.

Conclusion

Our study shows that it is possible to develop and implement a tool to assess and monitor the quality of care in ICUs in the midst of a pandemic, in a short time and with limited resources, but more studies are needed to validate the tool. The tool checklist and the scoring protocol are currently being used in 11 public ICUs in Lebanon.

Contributor Information

Märit Halmin, Department of Global Public Health, Karolinska Institutet, Solnavägen, Stockholm 171 77, Sweden.

Ghada Abou Mourad, The World Health Organization, Bloc left 4th floor, Glass building, Museum Square, Beirut 5391, Lebanon.

Adam Ghneim, Department of Global Public Health, Karolinska Institutet, Solnavägen, Stockholm 171 77, Sweden.

Alissar Rady, The World Health Organization, Bloc left 4th floor, Glass building, Museum Square, Beirut 5391, Lebanon.

Tim Baker, Department of Global Public Health, Karolinska Institutet, Solnavägen, Stockholm 171 77, Sweden.

Johan Von Schreeb, Department of Global Public Health, Karolinska Institutet, Solnavägen, Stockholm 171 77, Sweden.

Data sharing statement

No additional data exist.

Ethical approval statement

This quality assurance project did not include data on individual participants nor did it disclose or reveal any individual medical information. The development and usage of the checklist are approved by the ethical committee in Lebanon (USJ-2021-243).

References

- 1. Fantom N, Serajuddin U. The World Bank’s classification of countries by income. Policy Research Working Paper, 2016. [Google Scholar]

- 2. Murthy S, Gomersall CD, Fowler RA. Care for critically ill patients with COVID-19. JAMA 2020;323:1499–500. [DOI] [PubMed] [Google Scholar]

- 3. Marshall JC et al. What is an intensive care unit? A report of the task force of the world federation of societies of intensive and critical care medicine. J Crit Care 2017;37:270–6. [DOI] [PubMed] [Google Scholar]

- 4. Vincent JL, Marshall JC, Ñamendys-Silva SA et al. Assessment of the worldwide burden of critical illness: the intensive care over nations (ICON) audit. Lancet Respir Med 2014;2:380–6. [DOI] [PubMed] [Google Scholar]

- 5. The United Nations Department of Economic and Social Affairs . Sustainable development goals report 2020. United Nations, 2020. [Google Scholar]

- 6. Najjar-Pellet J, Jonquet O, Jambou P et al. Quality assessment in intensive care units: proposal for a scoring system in terms of structure and process. Intensive Care Med 2008;34:278–85. [DOI] [PubMed] [Google Scholar]

- 7. Rhodes A, Moreno RP, Azoulay E et al. Prospectively defined indicators to improve the safety and quality of care for critically ill patients: a report from the Task Force on Safety and Quality of the European Society of Intensive Care Medicine (ESICM). Intensive Care Med 2012;38:598–605. [DOI] [PubMed] [Google Scholar]

- 8. Braun JP et al. Quality indicators in intensive care medicine: why? Use or burden for the intensivist. Ger Med Sci 2010;8:Doc22. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Berwick DM, James B, Coye MJ. Connections between quality measurement and improvement. Med Care 2003;41:I30–8. [DOI] [PubMed] [Google Scholar]

- 10. Republic of Lebanon Ministry of Public Health . Lebanon Health Resilience Project, Social and Environmental Safeguards Framework. Beirut: Ministry of public Health, Republic of Lebanon, 2021. [Google Scholar]

- 11. World Health Organization . WHO Lebanon daily brief COVID-19. 19 August 2020–5 March 2021. 2021.

- 12. Moher D et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. BMJ 2009;339:b2535. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Baker T, Lugazia E, Eriksen J et al. Emergency and critical care services in Tanzania: a survey of ten hospitals. BMC Health Serv Res 2013;13:140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Kötter T, Blozik E, Scherer M. Methods for the guideline-based development of quality indicators—a systematic review. Implement Sci 2012;7:21. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Republic of Lebanon Ministry of Public Health . Revised hospital accreditation standards in Lebanon. 2019.

- 16. Ayanian JZ, Weissman JS. Teaching hospitals and quality of care: a review of the literature. Milbank Q 2002;80:569–93. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Akachi Y, Kruk ME. Quality of care: measuring a neglected driver of improved health. Bull World Health Organ 2017;95:465–72. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Donabedian A. The quality of care. How can it be assessed? JAMA 1988;260:1743–8. [DOI] [PubMed] [Google Scholar]

- 19. Pronovost PJ, Waters H, Dorman T. Impact of critical care physician workforce for intensive care unit physician staffing. Curr Opin Crit Care 2001;7:456–9. [DOI] [PubMed] [Google Scholar]

- 20. Levy MM. Finding out what we do in the ICU. Crit Care Med 2006;34:227–8. [DOI] [PubMed] [Google Scholar]

- 21. Davenport RJ, Dennis MS, Warlow CP. Effect of correcting outcome data for case mix: an example from stroke medicine. BMJ 1996;312:1503–5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Baker T, Schell CO, Lugazia E et al. Vital signs directed therapy: improving care in an intensive care unit in a low-income country. PLoS One 2015;10:e0144801. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Schell CO, Gerdin Wärnberg M, Hvarfner A et al. The global need for essential emergency and critical care. Crit Care 2018;22:284. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Corriol C, Daucourt V, Grenier C et al. How to limit the burden of data collection for quality indicators based on medical records? The COMPAQH experience. BMC Health Serv Res 2008;8:215. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Rios-Zertuche D, Zúñiga-Brenes P, Palmisano E et al. Methods to measure quality of care and quality indicators through health facility surveys in low- and middle-income countries. Int J Qual Health Care 2019;31:183–90. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. de Carvalho AGR, de Moraes APP, Tanaka LMS et al. Quality in intensive care units: proposal of an assessment instrument. BMC Res Notes 2017;10:222. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27. Catchpole K, Neyens DM, Abernathy J et al. Framework for direct observation of performance and safety in healthcare. BMJ Qual Saf 2017;26:1015–21. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28. Cancer Australia . EdCaN Module: Case-Based Learning Resources. 2018. [cited 2 March 2021]. https://www.edcan.org.au/edcan-learning-resources/case-based-learning-resources (12 June 2021, date last accessed).

- 29. Oort FJ, Visser MR, Sprangers MA. Formal definitions of measurement bias and explanation bias clarify measurement and conceptual perspectives on response shift. J Clin Epidemiol 2009;62:1126–37. [DOI] [PubMed] [Google Scholar]

- 30. Agency for Healthcare Research and Quality . Translate Health Care Quality Data Into Usable Information. 2015. [cited 2 March 2021]. https://www.ahrq.gov/talkingquality/translate/index.html (February 2016, date last accessed).

- 31. Rubin HR, Pronovost P, Diette GB. From a process of care to a measure: the development and testing of a quality indicator. Int J Qual Health Care 2001;13:489–96. [DOI] [PubMed] [Google Scholar]