Abstract

Cells respond heterogeneously to molecular and environmental perturbations. Phenotypic heterogeneity, wherein multiple phenotypes coexist in the same conditions, presents challenges when interpreting the observed heterogeneity. Advances in live cell microscopy allow researchers to acquire an unprecedented amount of live cell image data at high spatiotemporal resolutions. Phenotyping cellular dynamics, however, is a nontrivial task and requires machine learning (ML) approaches to discern phenotypic heterogeneity from live cell images. In recent years, ML has proven instrumental in biomedical research, allowing scientists to implement sophisticated computation in which computers learn and effectively perform specific analyses with minimal human instruction or intervention. In this review, we discuss how ML has been recently employed in the study of cell motility and morphodynamics to identify phenotypes from computer vision analysis. We focus on new approaches to extract and learn meaningful spatiotemporal features from complex live cell images for cellular and subcellular phenotyping.

Keywords: machine learning, live cell imaging, deep learning, phenotyping, cell motility, cell morphodynamics

1. Introduction

A primary goal of biology is to understand the phenotypic characteristics of organisms and their underlying mechanisms. Indeed, biology is a branch of natural science that has historically focused on the study of differences in organismal traits or phenotypes (appendix A). The careful observation of phenotypes has traditionally been important in both medicine and agriculture. Prior to the advent of modern medicine, medical diagnosis focused on carefully detecting abnormal phenotypes and treating the associated diseases. The recent advancement of high-throughput tools has accelerated phenotyping at the molecular, cellular, and tissue levels [1]. Advances have been made in many areas by employing state-of-the-art genomic and imaging technologies, including medical diagnosis [2–5], drug discovery [6–13], agriculture [14–16], and bioproduction [17, 18].

Conventionally, phenotypes are characterized by static information such as cell morphology, protein abundance, and localization. Living organisms, however, dynamically react to a wide range of ever-changing environments by adapting their biochemical, physical, and morphological characteristics. Cellular dynamic responses occur on various timescales: biochemical signaling within seconds, transcriptional changes from minutes to hours, and differentiation and division from hours to days [19]. For example, the protein level of p53, a tumor suppressing transcription factor that controls cell division and cell death, displays dynamic oscillations in response to DNA damage to mitigate the irreversible effects of perpetual activation of p53 target genes [20, 21]. Cells also dynamically change their morphology within seconds to hours in response to environmental cues [22]. It is increasingly clear that static phenotypes are limited when investigating such dynamic processes and should be complemented by temporal organismic behaviors called ‘dynamic phenotypes’ [23].

Since cell morphology and motility reflect the physiological and signaling states of a cell [24–26], efforts have long been underway to analyze the dynamics of cell morphology (morphodynamics) as well as motility and locomotion in a quantitative manner using live cell imaging [27–29]. However, phenotypic heterogeneity, where multiple phenotypes coexist at subcellular [30–32], cellular [33–35], and multicellular [36, 37] levels, hinders the task of phenotyping from a large and high-dimensional live cell dataset. Moreover, highly dynamic new phenotypes can emerge depending on environmental conditions and developmental age [38]. Therefore, the heterogeneity of cellular and subcellular dynamics has been a significant challenge for the quantitative identification of dynamic phenotypes.

Computer vision and machine learning (ML) have been employed to extract quantitative information from cell images and have become key tools for identifying cellular phenotypes [39]. There are existing tools that aid computational image analysis, including morphological profiling (CellProfiler [40] and PhenoRipper [41]), the supervised learning of cellular phenotypes (CellClassifier [42]), and the discovery of phenotypes in high-content imaging data (advanced cell classifier [43]). While these methods have been used to distinguish between normal and cancer cells [44, 45], these tools have been limited to static cell image datasets. Computer vision and ML enable us to identify previously unknown dynamic phenotypes that cannot be detected by the human eye. These new technologies are capable of unraveling phenotypic heterogeneity and opening a new avenue for defining phenotypes at unprecedented spatial and temporal resolutions. In this review, we focus on how ML can address the issues of phenotypic heterogeneity within live cell image datasets and identify spatiotemporal phenotypes of cell motility and morphodynamics.

2. Feature extraction in machine learning to recognize phenotypes

In recent years, ML has proven to be instrumental in biomedical research, allowing scientists and clinicians alike to implement sophisticated computation in which computers learn and effectively perform specific tasks from biologically relevant datasets with minimal human instruction or intervention. ML can be mainly categorized into supervised and unsupervised learning. Supervised learning requires labeled datasets and discovers the relationship between inputs and outputs of ML systems. Unsupervised learning utilizes data representations as input and discovers the internal structures of data. In terms of phenotyping, supervised learning can be used to classify data into known phenotypes, and unsupervised learning can be used to discover previously unknown phenotypes. Conceptually, both supervised and unsupervised learning consist of feature extraction and optimization, which can include classification, regression, or clustering. After features are extracted from the data, supervised learning can be performed for classification or regression, or unsupervised learning can be performed for data clustering. The goal of these procedures is to optimize the objective functions related to the criteria for given ML tasks.

Raw datasets from live cell imaging inherently have high dimensionality (a time-lapse image set with 100 × 100 pixels and 10 timeframes has 10^5 dimensions). This leads to the ‘curse of dimensionality’ [46, 47], wherein the volume of data space becomes exponentially large as the dimensionality increases. This makes the data distribution too sparse, ultimately hindering computational algorithms from reaching statistically significant results. Therefore, for the effective phenotyping of high-dimensional datasets, it is necessary to project raw data onto low-dimensional space while retaining intrinsic dimensions. The goal of feature extraction is to represent raw data effectively using relevant features with lower dimensions. The new representations derived from raw datasets have less noise, redundancy, and dimensionality than the original dataset, making them more informative and beneficial to subsequent computational processes. Therefore, one of the most important steps to determine the success of ML applications is feature extraction.

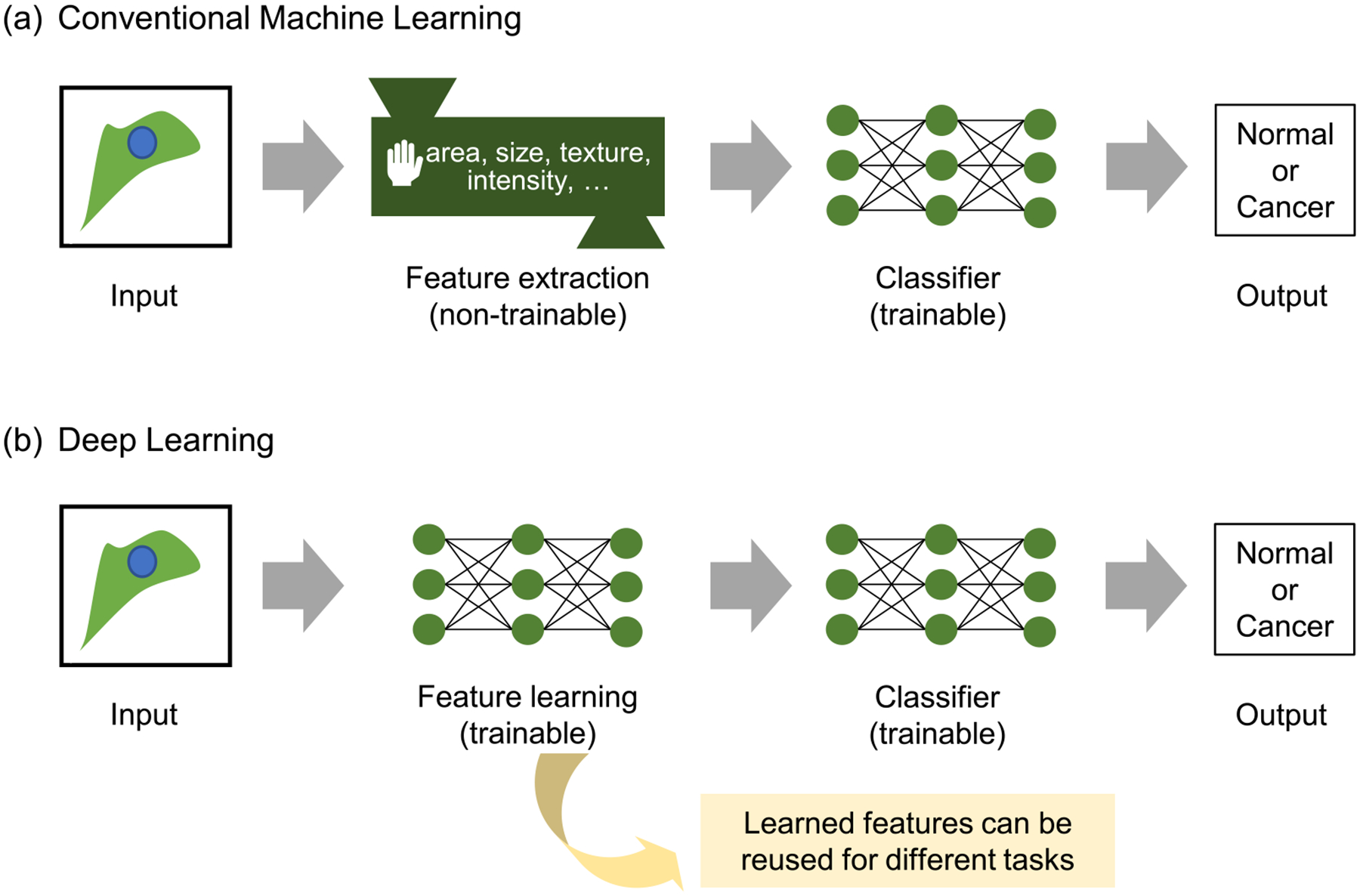

In traditional ML contexts (figure 1(a)), manually selected features are used for dimensionality reduction. This process, however, is very time consuming and requires considerable human effort. An alternative method for feature extraction is feature learning, in which computers learn features directly from the data with less human instruction (figure 1(b)). Deep learning (appendix B) offered a breakthrough in feature learning, wherein deep neural networks (DNNs) can learn the relevant features automatically and directly from raw data through multiple hidden layers [48]. Since the deep learning approach utilizes all the information from raw data, it provides more comprehensive features that human intuition cannot offer, while the manually selected features used in traditional ML are generally more interpretable and related to domain knowledge. Autoencoders (AEs), based on deep learning, have been widely used for feature learning [49] because they reproduce the input of the AE while limiting the number of hidden units. The values from these hidden units can serve as the learned features from the data. The learned features from one domain can be used in another domain by transfer learning, which extracts feature information from input data using networks pretrained on different but related domains [50, 51]. Convolutional neural network (CNN) models pretrained with numerous ordinary images available on ImageNet [52] have been shown to generate highly effective features in many image-related ML tasks [53–56].

Figure 1.

Comparison between conventional machine learning and deep learning. (a) In conventional machine learning, we need to extract handcrafted features from raw data. These features are used to train the classifier. (b) In deep learning, feature learning and classifier training are performed end-to-end. After the training, the trained feature extractor can produce meaningful features, which can be reused for different tasks, including unsupervised phenotyping.

3. Phenotypes of cellular motility and morphodynamics at various spatiotemporal scales

Cells and subcellular structures constantly undergo heterogeneous morphological changes over various spatiotemporal scales. Advances in fluorescence microscopy have allowed researchers to acquire an unprecedented amount of live cell image data at high spatiotemporal resolutions, which has revealed a massive amount of heterogeneity. Current image analysis tools, however, usually have limited capacity for phenotyping cellular and subcellular behaviors from heterogeneous live cell image datasets. ML and deep learning are being increasingly employed to extract spatiotemporal features from live-cell imaging data and identify their phenotypes to better understand the underlying biological mechanisms. In this section, we briefly review recent efforts to understand the diverse dynamic phenotypes of cell motility and cellular morphodynamics on various length and time scales.

Cell motility.

Cell motility is an essential process for various physiological and pathophysiological processes such as development, immune responses, wound healing, angiogenesis, and cancer metastasis. Cell motility occurs on time scales from hours to days and has long been a subject of study in the field of quantitative cell biology. Previously, cell migration trajectories were studied using simple random walk models [28, 29]. However, it is increasingly recognized that there exists significant cell-to-cell variability in motility speed [57], and cell motility has multiple phenotypes representing unique cellular states. Several ML frameworks for time-lapse live cell images have been developed to characterize numerous motility phenotypes using the unsupervised learning of single-cell motility [58–60] and collective cell migration [36, 61]. A recent study suggested that motility phenotypes in muscle stem cells (MuSCs) in mice represented the intermediate steps of MuSC differentiation [59]. The motility phenotyping of retinal progenitor cells (RPCs) before mitosis allowed for the prediction of the fate of RPCs (self-renewing vs terminal division and photoreceptor vs nonphotoreceptor progeny) [62]. The distance traveled by bone marrow mesenchymal stem cells was correlated with their adipogenic, chondrogenic, and osteogenic differentiation potentials [63]. The motility speed of human osteosarcoma cells can distinguish between a dormant nonangiogenic phenotype and an active angiogenic phenotype [64]. Knockout of a breast cancer oncogene, lipocalin 2, in human triple-negative breast cancer cells significantly reduced the motility speed and the migration distance [65].

Cellular morphodynamics.

Leading edges (protrusive plasma membranes at the front of the cell) of migrating cells display significant spatiotemporal heterogeneity [27, 30]. Although these morphodynamic events occur on time scales from minutes to hours, which is much faster than cell motility, the morphology of motile cells by itself tells us about the biochemistry and mechanics underlying cell motility [66]. Recent ML analyses of live cell images revealed the relationships between cell morphodynamics and motility as follows. The morphological changes over second timescales enabled the prediction of migratory behaviors over minute timescales in Dictyostelium [67]. The morphological coordination between protrusion and retraction determines metastatic potency [68] and governs switching between ‘continuous’ and ‘discontinuous’ mesenchymal migratory phenotypes [69]. Morphodynamic phenotypic biomarkers together with migratory information can be used to evaluate the metastatic potential of breast and prostate cancer cells [70]. The neuronal growth cone is an essential structure for guiding axons to their targets during neural development. It exhibits complex and rapidly changing morphology, and the morphology of neuronal growth cones is highly correlated with neurite outgrowth [71]. The significance of morphodynamic phenotypes is not limited to cell motility. For example, the time-series modeling of live cell shape dynamics can reveal differential drug responses in breast cancer cells [72]. The local protrusion patterns of leukocytes can inform immune responses [73]. Finally, the morphodynamics of hematopoietic stem and progenitor cells (HSPCs) can predict the lineage before three generations [74].

Cell motility and morphodynamics in 3D environments.

More recent work has emphasized the importance of understanding cell motility in 3D environments, owing to their physiological relevance and to the advent of 3D culture systems [75] and light sheet microscopy [76]. The persistent random walk model used for 2D motility is not suitable for modeling 3D motility since 3D motility is temporally coupled and anisotropic [77]. There are also significant technical challenges in assessing 3D cell morphology in a quantitative manner compared to 2D morphology [78]. The 3D morphological features of cancer cells derived from quantitative phase contrast microscopy can classify healthy, cancer, and metastatic cells [79]. A 3D image analysis of endothelial cell branching in 3D collagen gels revealed the role of myosin II in shape control [80]. Recently, an ML-based 3D morphological motif detector, u-shape 3D, was developed to identify lamellipodia, filopodia, pseudopodia and blebs in 3D live cell images, clearing a path for 3D subcellular morphodynamic phenotyping [81].

Subcellular cytoskeleton dynamics.

The cytoskeleton orchestrates cellular morphodynamics and motility. Therefore, characterizing the heterogeneity of cytoskeletal dynamics at subcellular levels can reveal the underlying mechanism for cell motility and morphodynamics. The actin cytoskeleton directly affects cellular morphology via actin remodeling. Lamellipodia are composed of actin network structures at the leading edge of a motile cell, which provide strong force generation for cell shape changes and motility. Quantitative fluorescence speckle microscopy [82] and local sampling strategies [83, 84] have been extensively used to probe lamellipodial dynamics in cell protrusions. Furthermore, the leading edge dynamics of lamellipodia were shown to have distinct subcellular protrusion phenotypes with differential recruitment of VASP and Arp2/3 [30]. Extended lamellipodia promoted by hyperactivation of Rac1 via the P29S mutation promotes the proliferation of melanoma cells [85]. Filopodia are finger-like protrusive structures containing thin actin bundles that play a role as sensory organelles for cell migration. Several computational platforms for the analysis of filopodial dynamics have been developed, including cellGeo [86], filopodyan [87], filoQuant [88], and GCA [71]. In neuronal growth cones, there are multiple filopodial phenotypes whereby Ena and VASP play differential roles in associated filopodial dynamics [87]. Additionally, the increased density of filopodia in breast cancer cells promotes higher invasiveness [88].

4. Strategies for spatiotemporal feature extraction

With the advancement of live cell imaging techniques, various computational strategies have been employed to extract both spatial and temporal features to characterize new phenotypes. As discussed before, feature extraction is a critical step for successful ML-based phenotyping because the nature of the extracted features from raw data determines the phenotyping. In this section, we discuss analytical strategies for feature extraction to identify dynamic cellular phenotypes in detail. We first focus on handcrafted feature extraction. Then, we discuss the application of emerging feature learning-based methods using deep learning.

4.1. Handcrafted feature extraction

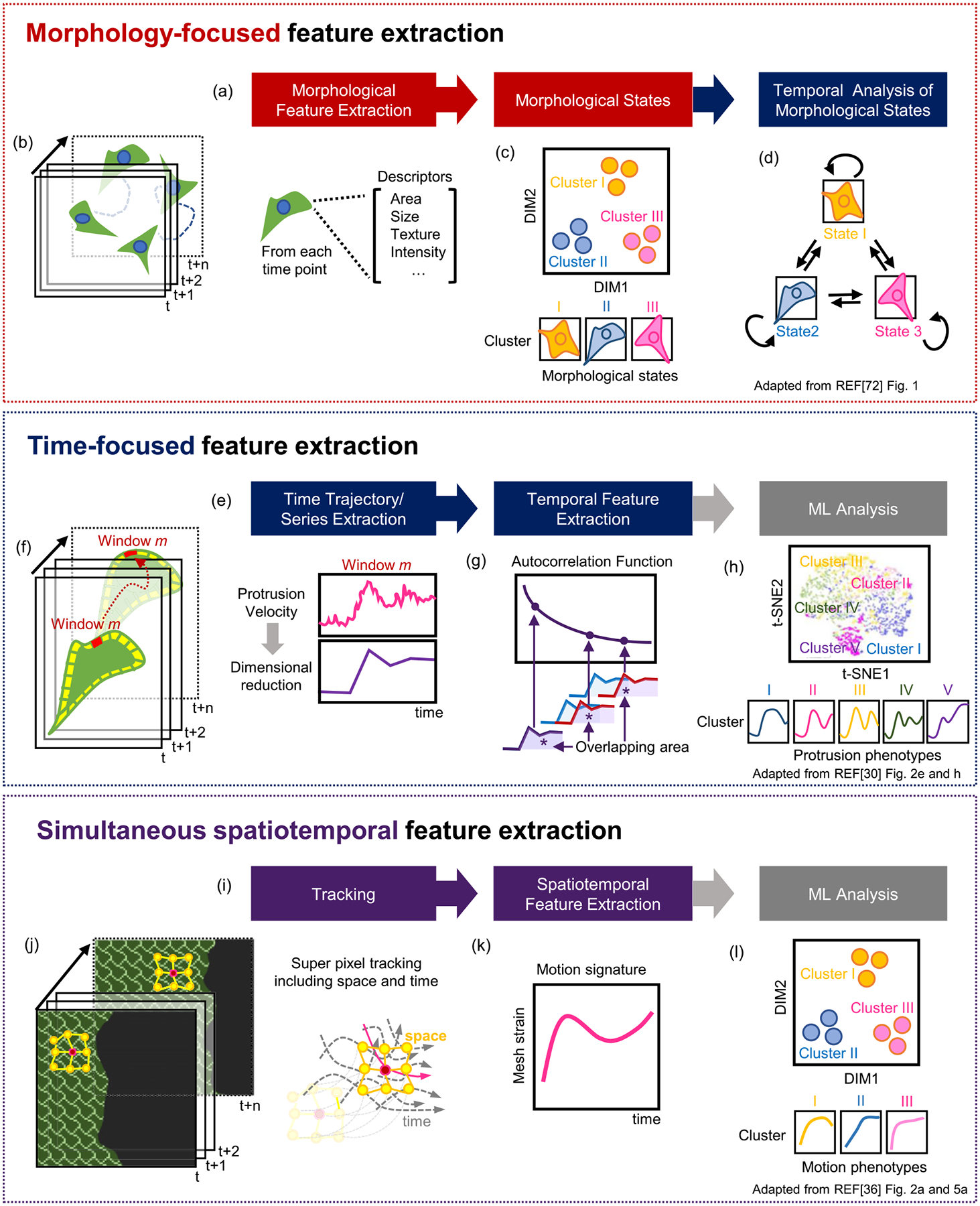

The overall strategy defined here can be largely grouped by which type of feature is the focus for identifying phenotypes as follows (figure 2): (i) morphology-focused extraction, followed by temporal analysis, (ii) time-focused feature extraction, and (iii) simultaneous spatiotemporal feature extraction. Below is a more detailed description of representative analytical processes in defining cellular phenotypes in each category.

Figure 2.

Feature extraction-based phenotyping of cell motility and morphodynamics. (a) Phenotyping based on morphology-focused feature extraction. (b) Cellular morphology at each timepoint. (c) Identification of morphological states by dimensional reduction of morphological features. (d) Temporal transition of morphological states. (e) Phenotyping based on time-focused feature extraction. (f) Examples of subcellular protrusion time series. (g) Extraction of autocorrelation function (ACF) temporal features. (h) Subcellular protrusion velocity phenotypes. (i) Phenotyping based on simultaneous spatiotemporal feature extraction. (j) An example of tracking cells in collective migration. (k) Methods for spatiotemporal feature extraction. (l) Phenotypes of the strain curves from collective cell migration. Panels (c) and (d) are adapted with permission from figure 1 in reference [72], Oxford University Press. Panel (h) is adapted from figures 2(e) and (h) in reference [30]. Panels (j)–(l) are adapted from figures 2(a) and 5(a) in reference [36]. Panels (h) and (j)–(l) are licensed under (CC-BY-4.0).

Morphology-focused feature extraction.

In this category (figure 2(a)), rich morphological features are first extracted at each time point (figure 2(b)); then, the feature dimensions are often reduced by principal component analysis [89] (PCA, reducing the dimensionality of data while preserving the information of data as much as possible), and morphological states are identified (figure 2(c)). The subsequent temporal analyses are performed by ML, such as time-series modeling, clustering, and classification. These types of analyses usually assume that cell morphology dictates distinct cellular states and then investigate how the morphological states evolve over time (figure 2(d)).
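The final step of this pipeline, following how morphological states evolve over time, can be illustrated with an empirical state-transition matrix. The sketch below is a minimal illustration rather than a method from any of the cited works: it assumes that each cell has already been assigned an integer morphological state at every frame (for example, by PCA followed by clustering), and the function name `transition_matrix` is hypothetical.

```python
def transition_matrix(state_sequences, n_states):
    """Estimate P(next state | current state) from per-cell state sequences.

    state_sequences: list of lists of integer state labels, one list per cell,
                     ordered in time (assumed to come from an upstream
                     clustering step that is not shown here).
    n_states:        total number of morphological states.
    """
    counts = [[0] * n_states for _ in range(n_states)]
    for seq in state_sequences:
        # Count every consecutive pair (state at t, state at t + 1).
        for current, following in zip(seq, seq[1:]):
            counts[current][following] += 1

    matrix = []
    for row in counts:
        total = sum(row)
        # Rows with no observed transitions are left as zeros.
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix


# Two hypothetical cells, each tracked over four frames.
print(transition_matrix([[0, 0, 1, 1], [0, 1, 1, 0]], n_states=2))
```

Each row of the resulting matrix sums to one whenever that state was observed at least once before the last frame, so rows can be compared across conditions as a simple temporal feature.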

Gerlich’s group pioneered the development of ML frameworks for cellular morphodynamics in mitosis. Their overall approach focused on accurate morphological phenotyping by taking advantage of temporal information. Held et al developed a supervised ML framework, termed CellCognition [90], to classify complex cellular dynamics through morphologically distinct cell states (interphase, six different mitotic stages, and apoptosis) combining support vector machine [91] (SVM, see appendix C) classification with a hidden Markov model [92] (HMM, see appendix C). They extracted 186 quantitative features of texture and shape from the confocal images of live HeLa cells stably expressing the chromatin marker H2B-mCherry, followed by SVM training for the classification of the cell states. Thereafter, they trained an HMM to take advantage of the temporal context of the cellular states, which corrected the misclassification occurring during the state transition. Zhong et al, in the same group, developed an unsupervised ML approach to identify the different cellular stages in mitosis without user annotation [93]. The same morphological features were reduced by PCA, and then temporally constrained combinatorial clustering was applied to make temporally linked objects cluster together, producing results consistent with the user annotation. This avoids expensive user annotation and facilitates high-throughput image-based screening.
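The idea of using temporal context to correct frame-wise misclassification can be sketched with Viterbi decoding of an HMM. The code below is not the CellCognition implementation; it is a simplified illustration that treats hypothetical per-frame classifier confidences as emission likelihoods, and the transition and initial probabilities are invented for the example.

```python
import math


def viterbi(emission_probs, transition, initial):
    """Return the most likely state path under a simple HMM.

    emission_probs: per-frame lists; emission_probs[t][s] is the (assumed)
                    classifier confidence for state s at frame t.
    transition:     transition[i][j] = P(state j at t + 1 | state i at t).
    initial:        initial[s] = P(state s at the first frame).
    """
    n_states = len(initial)

    def log(p):
        return math.log(p + 1e-12)  # small offset avoids log(0)

    score = [log(initial[s]) + log(emission_probs[0][s]) for s in range(n_states)]
    back_pointers = []
    for frame in emission_probs[1:]:
        new_score, pointers = [], []
        for j in range(n_states):
            # Best predecessor state for arriving in state j at this frame.
            best_i = max(range(n_states), key=lambda i: score[i] + log(transition[i][j]))
            pointers.append(best_i)
            new_score.append(score[best_i] + log(transition[best_i][j]) + log(frame[j]))
        score = new_score
        back_pointers.append(pointers)

    # Trace back from the best final state.
    state = max(range(n_states), key=lambda s: score[s])
    path = [state]
    for pointers in reversed(back_pointers):
        state = pointers[state]
        path.append(state)
    return path[::-1]


# Hypothetical two-state example: frame 2 is a weak, isolated call for state 1.
sticky = [[0.9, 0.1], [0.1, 0.9]]
frames = [[0.9, 0.1], [0.9, 0.1], [0.4, 0.6], [0.9, 0.1], [0.9, 0.1]]
print(viterbi(frames, sticky, [0.5, 0.5]))
```

Because the assumed transition matrix strongly favors staying in the same state, an isolated low-confidence call is overruled by its temporal neighbors, whereas a sustained change in classifier output is retained as a genuine state transition.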

To analyze morphodynamics in cell motility, Gordonov et al developed an unsupervised ML method, the SAPHIRE (stochastic annotation of phenotypic individual-cell responses) framework [72], wherein 18 morphological features, including area, perimeter, equivalent diameter, major/minor axis length, eccentricity, solidity, extent, convex area, axis ratio, circularity, waviness, geodesic diameter, and convex diameter, were extracted for each cell object at a specific temporal point. The high dimensionality of the extracted features was then reduced to low-dimensional space by PCA, and distinct shape states were determined by clustering. Thereafter, they applied an HMM to the time trajectories of these PCA-reduced features. As in the previously mentioned works, they assumed that cell morphology represents cellular states, and the HMM was applied to study the dynamics of the transition between morphological states. The temporal features extracted from the HMM can reveal more refined drug effects than the morphological features alone, which can be used to dissect the heterogeneity in cellular drug responses.

Morphodynamic phenotypes were also studied in the context of epithelial-to-mesenchymal transition (EMT) by Wang et al [94]. Using 150 points on cell outlines as cellular morphological features, they extracted Haralick features [95] (quantifying texture information from images) from fluorescently tagged endogenous vimentin, which is an EMT marker. After tracking these features over time during EMT, the acquired time series were projected onto a 2D space using a nonlinear dimensionality reduction technique, t-SNE [96] (t-distributed stochastic neighbor embedding, assigning pairs of similar data with high probabilities of being neighbors in low dimensional space), and they found two clusters using k-means clustering [97] (grouping unlabeled data into k clusters by assigning them to nearest cluster means). In one cluster, the changes in vimentin Haralick features preceded those of cell morphology. In the second cluster, the vimentin Haralick and morphological features changed concertedly. Since they could not find these results using pseudo-time analysis from the snapshot data, their live cell image analysis revealed the heterogeneity of EMT trajectories, which could not be obtained from static datasets.
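The k-means step described above, assigning each data point to the nearest cluster mean and then updating the means, can be sketched in a few lines. This is a minimal illustration and not the analysis code of the cited study: the points stand in for hypothetical 2D embedded coordinates, and the initialization from the first k points is an assumption made to keep the example deterministic.

```python
import math


def kmeans(points, k, n_iter=100):
    """Plain k-means on a list of coordinate tuples.

    Initial centers are the first k points (an illustrative choice;
    practical implementations usually use randomized initialization).
    """
    centers = [list(p) for p in points[:k]]
    labels = None
    for _ in range(n_iter):
        # Assignment step: nearest center by Euclidean distance.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points
        ]
        # Update step: each center becomes the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, new_labels) if label == c]
            if members:
                centers[c] = [sum(axis) / len(members) for axis in zip(*members)]
        if new_labels == labels:  # assignments stopped changing
            break
        labels = new_labels
    return labels, centers


# Hypothetical embedded coordinates forming two well-separated groups.
embedded = [(0, 0), (10, 10), (0, 1), (1, 0), (10, 11), (11, 10)]
labels, centers = kmeans(embedded, k=2)
print(labels, centers)
```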

The morphodynamic phenotyping of neuronal growth cones was studied using PCA in shape space, which revealed five to six basic shape modes of neuronal growth cone morphology [98]. For each growth cone mode, the autocorrelation function [99] (ACF, quantifying the similarity between a time series and its lagged one) and Fourier power spectrum were quantified to identify oscillatory phenotypes. It was found that the average growth rate was significantly correlated with the oscillation strength of specific growth cone modes.
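To make the two temporal descriptors concrete, the sketch below computes a normalized ACF and a Fourier power spectrum for a single time series. It is a generic illustration under simple assumptions (uniform sampling, a biased ACF estimator, a direct discrete Fourier transform), and the input stands in for a hypothetical shape-mode amplitude rather than data from the cited work.

```python
import cmath
import math


def autocorrelation(series, max_lag):
    """Normalized autocorrelation for lags 0..max_lag (biased estimator)."""
    n = len(series)
    mean = sum(series) / n
    centered = [x - mean for x in series]
    variance_sum = sum(c * c for c in centered)
    return [
        sum(centered[t] * centered[t + lag] for t in range(n - lag)) / variance_sum
        for lag in range(max_lag + 1)
    ]


def power_spectrum(series):
    """Power at frequency indices 0..n//2 via a direct DFT of the centered series."""
    n = len(series)
    mean = sum(series) / n
    centered = [x - mean for x in series]
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(
            c * cmath.exp(-2j * math.pi * k * t / n) for t, c in enumerate(centered)
        )
        spectrum.append(abs(coeff) ** 2 / n)
    return spectrum


# Hypothetical mode amplitude oscillating with a period of 8 frames.
signal = [math.sin(2 * math.pi * t / 8) for t in range(64)]
acf = autocorrelation(signal, max_lag=8)
spectrum = power_spectrum(signal)
dominant = max(range(len(spectrum)), key=spectrum.__getitem__)
print(acf[4], acf[8], dominant)
```

For an oscillatory series, the ACF is negative at half the period and positive again at the full period, and the power spectrum peaks at the frequency index equal to the series length divided by the period.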

Time-focused feature extraction.

Previous morphology-focused approaches have treated cell morphology as a readout of cell states. While this can be a faithful approximation, particularly in mitosis, it is also possible that temporal dynamics in cellular or subcellular processes can reflect their unique properties. Therefore, temporal phenotyping based on time-focused feature extraction could reveal new findings. To identify temporal phenotypes (figure 2(e)), we must extract time-series or trajectory data directly from live-cell time-lapse videos using image analysis (figure 2(f)), and then quantify specific temporal features from the time-series (figure 2(g)). If necessary, the dimensions of temporal features can be reduced by PCA. Thereafter, standard ML methods such as clustering or classification can be applied to characterize the temporal phenotypes (figure 2(h)). The identified temporal phenotypes can then be further studied in a spatial context. This approach has been applied in cell motility, protrusion, and endocytosis, as discussed below.

The initial efforts in this approach were focused on phenotyping cell motility based on cellular trajectories. Sebag et al [58] developed a generic unsupervised ML method termed MotIW (motility study integrated workflow) for high-throughput time-lapse image data. First, fifteen features were extracted from each cellular trajectory, including the diffusion coefficient and track entropy, other global features (such as convex hull area and effective path length), and average local features (including mean square displacement and mean signed turning angle). PCA was used for dimensionality reduction, and k-means clustering was applied. Using the Mitocheck dataset, they discovered eight motility phenotypes, but the biological meaning of each phenotype remains to be discerned. Kimmel et al [59] took a similar approach, termed heteromotility, that extracts motility features from time-lapse cell images, including distance traveled, turning, and speed metrics. Using heteromotility, they identified more detailed phenotypes because the approach included features with more complex motions, such as Levy flight-like motion features, fractal Brownian motion features, and autocorrelation functions for displacements. Subsequently, hierarchical clustering was performed with Ward’s linkage, which identified multiple motility phenotypes within the cell population. The application of heteromotility analysis to the MuSC system during activation revealed three distinct MuSC phenotypes, and the motility phenotypes of activated MuSCs sequentially led to the motility phenotypes of muscle progenitor myoblasts, suggesting that dynamic phenotypes of cell motility can represent the intermediate steps of MuSC differentiation.
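A few of the trajectory descriptors mentioned above can be computed directly from a list of cell centroid positions. The sketch below is illustrative only: the feature definitions are common textbook forms, not the exact definitions used in MotIW or heteromotility, and the function name and dictionary keys are hypothetical.

```python
import math


def motility_features(track, lag=1):
    """Simple motility descriptors for one trajectory.

    track: list of (x, y) centroid positions at uniformly spaced frames;
           it must contain more than `lag` positions.
    """
    steps = [(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(track, track[1:])]
    path_length = sum(math.hypot(dx, dy) for dx, dy in steps)
    net_distance = math.dist(track[0], track[-1])

    # Mean square displacement at the chosen lag.
    squared = [
        (bx - ax) ** 2 + (by - ay) ** 2
        for (ax, ay), (bx, by) in zip(track, track[lag:])
    ]
    msd = sum(squared) / len(squared)

    # Signed turning angle between consecutive steps (counterclockwise positive).
    turns = [
        math.atan2(ux * vy - uy * vx, ux * vx + uy * vy)
        for (ux, uy), (vx, vy) in zip(steps, steps[1:])
    ]
    mean_turn = sum(turns) / len(turns) if turns else 0.0

    return {
        "path_length": path_length,
        "net_distance": net_distance,
        "straightness": net_distance / path_length if path_length else 0.0,
        "msd": msd,
        "mean_turning_angle": mean_turn,
    }


# A hypothetical cell that turns left by 90 degrees at every step.
print(motility_features([(0, 0), (1, 0), (1, 1), (0, 1)]))
```

Feature vectors of this kind, one per trajectory, are what would subsequently be passed to dimensionality reduction and clustering.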

Multiple phenotypes exist not only at the single-cell level but also at the subcellular level. Therefore, dynamic phenotyping has been applied to subcellular leading-edge dynamics. Wang et al [30] developed an unsupervised ML framework, termed HACKS (deconvolution of heterogeneous activity in the coordination of cytoskeleton at the subcellular level) that deconvolves the heterogeneity of the subcellular protrusion at micron and minute scales. The images of the leading edge of PtK1 epithelial cells were segmented by multiple probing windows, and then the time series of protrusion velocities in each probing window were quantified (figure 2(f)). The ACF was calculated as a temporal feature of the protrusion velocity time series (figure 2(g)). Then, density peak clustering [100] (identifying cluster centers that are at local density maxima and away from other high-density regions) was applied to identify distinct subcellular protrusion phenotypes (figure 2(h)). HACKS identified distinct subcellular protrusion phenotypes hidden in highly heterogeneous protrusion activities, revealing the temporal coordination of Arp2/3 and VASP in accelerating protrusion phenotypes. This analysis also suggests that the unsupervised ML of cellular dynamics could dissect the underlying molecular mechanisms and drug responses obscured by heterogeneity. Li et al also analyzed the subcellular dynamics of leading-edge displacement using unsupervised ML and demonstrated that the subcellular phenotypes could be utilized for single-cell phenotyping [101]. They tracked and segmented target cells from the phase contrast microscopy images of lymphocytes. The local time series of edge displacement was extracted, and six-dimensional temporal features, such as temporal regularity, were quantified. Based on the local temporal features, the researchers further reduced the dimension of the vector to 2 with PCA, followed by applying k-means clustering. Three clusters of subcellular deformation patterns were identified, and the frequency of each cluster within the same cells was used for the feature of dynamic cellular morphology to perform supervised ML to classify ‘normal’ and ‘drastic’ cellular phenotypes.
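The step from subcellular clusters to a single-cell feature, using the frequency of each cluster within a cell, can be sketched as a normalized histogram. The code below is a minimal illustration that assumes the cluster label of every local time series is already known; the function name, cell identifiers, and labels are hypothetical and are not taken from the cited study.

```python
from collections import Counter, defaultdict


def cluster_frequency_features(cell_ids, cluster_labels, n_clusters):
    """Per-cell fraction of local time series falling in each cluster.

    cell_ids:       cell identifier for each local time series.
    cluster_labels: integer cluster label for each local time series
                    (assumed to come from an upstream clustering step).
    """
    counts = defaultdict(Counter)
    for cell, label in zip(cell_ids, cluster_labels):
        counts[cell][label] += 1

    features = {}
    for cell, counter in counts.items():
        total = sum(counter.values())
        features[cell] = [counter[k] / total for k in range(n_clusters)]
    return features


# Two hypothetical cells with four and two local edge segments, respectively.
cells = ["a", "a", "a", "a", "b", "b"]
labels = [0, 0, 1, 2, 2, 2]
print(cluster_frequency_features(cells, labels, n_clusters=3))
```

The resulting fixed-length vector per cell can then serve as input to a supervised classifier, regardless of how many local segments each cell contributed.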

Membrane trafficking, including endocytosis and exocytosis, is also a promising field where this ML application can make a significant contribution due to the multiple modes of endocytic and exocytic events [102, 103]. Wang et al [102] developed a ML method termed DASC (disassembly asymmetry score classification) applied to clathrin-mediated endocytosis (CME), that resolves aborted coats (ACs) from bona fide clathrin-coated pits (CCPs) based on single-channel fluorescent movies. They defined a clathrin disassembly risk function, which indicates the net risk for disassembly at every intensity-time state from the fluorescence time series. From this risk function, they quantified the features, including time-average, lifetime-normalized difference between the maximum and minimum value, and a modified skewness of the disassembly risk, which allowed accurate classification between ACs and CCPs. DASC can provide more accurate pictures of the progression of CME by deconvolving previously unresolvable ACs and CCPs.

Simultaneous spatiotemporal feature extraction.

In the previous ML approaches, spatial and temporal analyses were performed sequentially. However, when spatial and temporal processes are tightly interconnected with each other, it is desirable to consider spatiotemporal features simultaneously (figure 2(i)). This approach may preserve more information about spatiotemporal coordination than sequential procedures.

Spatiotemporal feature extraction is particularly relevant in collective cell migration, where neighboring cells constantly interact to migrate together. To study the propagation of directional cues from wound edges through a cellular monolayer undergoing collective migration, Zaritsky et al [61] quantified the mean directionality at different distances from the monolayer edge over time, followed by PCA. They were able to identify guanine nucleotide exchange factors (GEFs) that are involved in intercellular communication. Zhou et al [36] also developed a generalized computational framework, MOSES (motion sensing superpixels), to describe collective cell migration in terms of the individual trajectories of migrating cells and their spatiotemporal interactions (figure 2(j)). The long-term motion tracks from individual cells were constructed by capturing spatial motion dynamics with superpixels (formed with specific spatial points grouped with neighboring pixels at certain time points). The locations of superpixels in the next frame were calculated by averaging optical flow. Then, superpixels were linked to meshes to indicate the relationship between the movement of superpixels and that of their neighbors. Local and global spatiotemporal features can be derived from the strain curves, which measure the relative deformation between connected superpixels with respect to their initial mesh geometry over time (figure 2(k)). They applied PCA to the strain curves to visualize the phenotypic distributions of 2D collective migration (figure 2(l)). They demonstrated that the junctional motion dynamics of squamous-columnar cells with increasing epidermal growth factor (EGF) became similar to those of squamous-cancer cells. MOSES can provide a useful framework to investigate many biological collective phenomena in a quantitative and unbiased manner.
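The notion of a strain curve, the deformation of a mesh of tracked points relative to its initial geometry over time, can be illustrated with a simplified stand-in. The definition used below (mean relative change in edge length) is an assumption made for illustration and is not the MOSES formulation; the tracks and edges are hypothetical.

```python
import math


def strain_curve(tracks, edges):
    """Mean relative change in edge length at each frame.

    tracks: tracks[t][i] is the (x, y) position of tracked point i at frame t.
    edges:  list of (i, j) index pairs connecting neighboring points;
            edges are assumed to have nonzero length in the first frame.
    """
    def edge_lengths(frame):
        return [math.dist(frame[i], frame[j]) for i, j in edges]

    rest = edge_lengths(tracks[0])  # initial mesh geometry
    curve = []
    for frame in tracks:
        lengths = edge_lengths(frame)
        relative = [abs(length - r) / r for length, r in zip(lengths, rest)]
        curve.append(sum(relative) / len(edges))
    return curve


# Two hypothetical points joined by one edge that stretches and then shrinks.
tracks = [
    [(0.0, 0.0), (1.0, 0.0)],
    [(0.0, 0.0), (2.0, 0.0)],
    [(0.0, 0.0), (0.5, 0.0)],
]
print(strain_curve(tracks, [(0, 1)]))
```

A curve of this kind, computed per video, yields a fixed-length temporal signature to which PCA can be applied for comparing conditions.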

Different subcellular regions of leading edges are interconnected via cytoskeleton and membrane structures. Therefore, spatiotemporal feature-based phenotyping of leading-edge dynamics could provide additional insights in comparison to time-focused feature extraction. Ma et al [32] combined local shape descriptors with temporal features of time series for spatiotemporal feature extraction and studied the phenotypes of COS-7 cell edge dynamics. The cell edges were segmented and divided into sampling windows, and the time series of protrusion velocity was calculated for each window. They applied empirical mode decomposition, which reduced the data to six intrinsic mode functions (IMFs). The frequency spectra of each IMF in both the spatial and time domains were acquired by applying the Hilbert–Huang transform. Thereafter, they compiled the instantaneous temporal and spatial frequency spectra into one feature vector for each time point and each sampling window. These features were used to merge similar neighboring spatial and temporal points by statistical region merging clustering and to identify distinct motion regimes. Using this approach, they were able to locate subcellular microdomains with distinct Rac1 signaling activities.
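The Hilbert step of such an analysis can be sketched as follows. This is an illustrative example under stated assumptions, not the pipeline of reference [32]: empirical mode decomposition is not implemented, a synthetic 5 Hz sinusoid stands in for a single IMF, and the helper names `analytic_signal` and `instantaneous_frequency` are hypothetical.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal of a real 1D series, computed with the FFT."""
    n = len(x)
    spectrum = np.fft.fft(x)
    # Keep DC (and Nyquist for even n), double the positive
    # frequencies, and zero the negative frequencies.
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * h)

def instantaneous_frequency(x, dt):
    """Instantaneous frequency (cycles per unit time) from the unwrapped phase."""
    phase = np.unwrap(np.angle(analytic_signal(x)))
    return np.diff(phase) / (2.0 * np.pi * dt)

dt = 0.01                                 # sampling interval (assumed units: s)
t = np.arange(200) * dt                   # 2 s of samples
imf_like = np.sin(2 * np.pi * 5.0 * t)    # stand-in for one IMF
freq = instantaneous_frequency(imf_like, dt)
median_freq = float(np.median(freq))      # close to 5 for this input
```

Applying the same computation along the time axis and along the cell edge would give temporal and spatial instantaneous frequencies, which could then be concatenated into one feature vector per window and time point.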

4.2. Feature learning

Deep learning offers an entirely new approach for feature extraction. Instead of determining in advance which features are useful for a specific problem, DNNs are trained for certain tasks. Successful training means that the features learned by the neural network can represent the input data well in a low-dimensional space (figure 1(b)). This feature learning is an attractive method for feature extraction since it does not require prior assumptions about which features are necessary for the problem. Although the application of feature learning to morphodynamic phenotyping is still in its infancy, it is likely to accelerate morphodynamic phenotyping because it is very challenging to acquire sufficient prior information about heterogeneous morphodynamic phenotypes. Although end-to-end training is known to be one of the advantages of deep learning, most feature learning for morphodynamic phenotyping involves multistage training. Usually, the morphological features are learned during the first stage of training. Then, using these features, different types of DL or conventional ML algorithms are used for temporal analyses.

Buggenthin et al [74] pioneered the application of DNNs to live cell images and demonstrated strong feature learning capability for stem cell differentiation. They trained a CNN to classify primary murine HSPCs into granulocytic/monocytic (GM) or megakaryocytic/erythroid (MegE) lineages, thereby learning static features from brightfield microscopy images of differentiating primary hematopoietic progenitors. These CNN features, along with cell movement, were used to train a second neural network, a recurrent neural network [104] (RNN, a type of artificial neural network where previous output is used for current input), to forecast lineage choice. They found that lineage choice can be detected from label-free live cell video up to three generations before conventional fluorescence molecular markers become observable. This suggests that DNNs are highly capable of capturing useful information in live cell video that cannot be detected by the human eye.
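The recurrence that lets an RNN carry information across frames can be shown with a scalar toy model. This is only a sketch of the general principle, not the network of reference [74]: the weights are fixed by hand rather than trained, and the function name `rnn_forward` and its parameters are hypothetical.

```python
import math

def rnn_forward(inputs, w_in, w_rec, bias, h0=0.0):
    """Scalar Elman-style recurrence: h_t = tanh(w_in*x_t + w_rec*h_(t-1) + bias)."""
    h, states = h0, []
    for x in inputs:
        # The previous state h feeds back into the current update.
        h = math.tanh(w_in * x + w_rec * h + bias)
        states.append(h)
    return states

# Identical per-frame inputs (standing in for one CNN feature over three frames).
features = [1.0, 1.0, 1.0]
with_memory = rnn_forward(features, w_in=0.5, w_rec=0.8, bias=0.0)
without_memory = rnn_forward(features, w_in=0.5, w_rec=0.0, bias=0.0)
```

With the recurrent weight set to zero, every frame yields the same state; with a nonzero recurrent weight, the state changes from frame to frame even though the input does not, which is what allows temporal context to accumulate.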

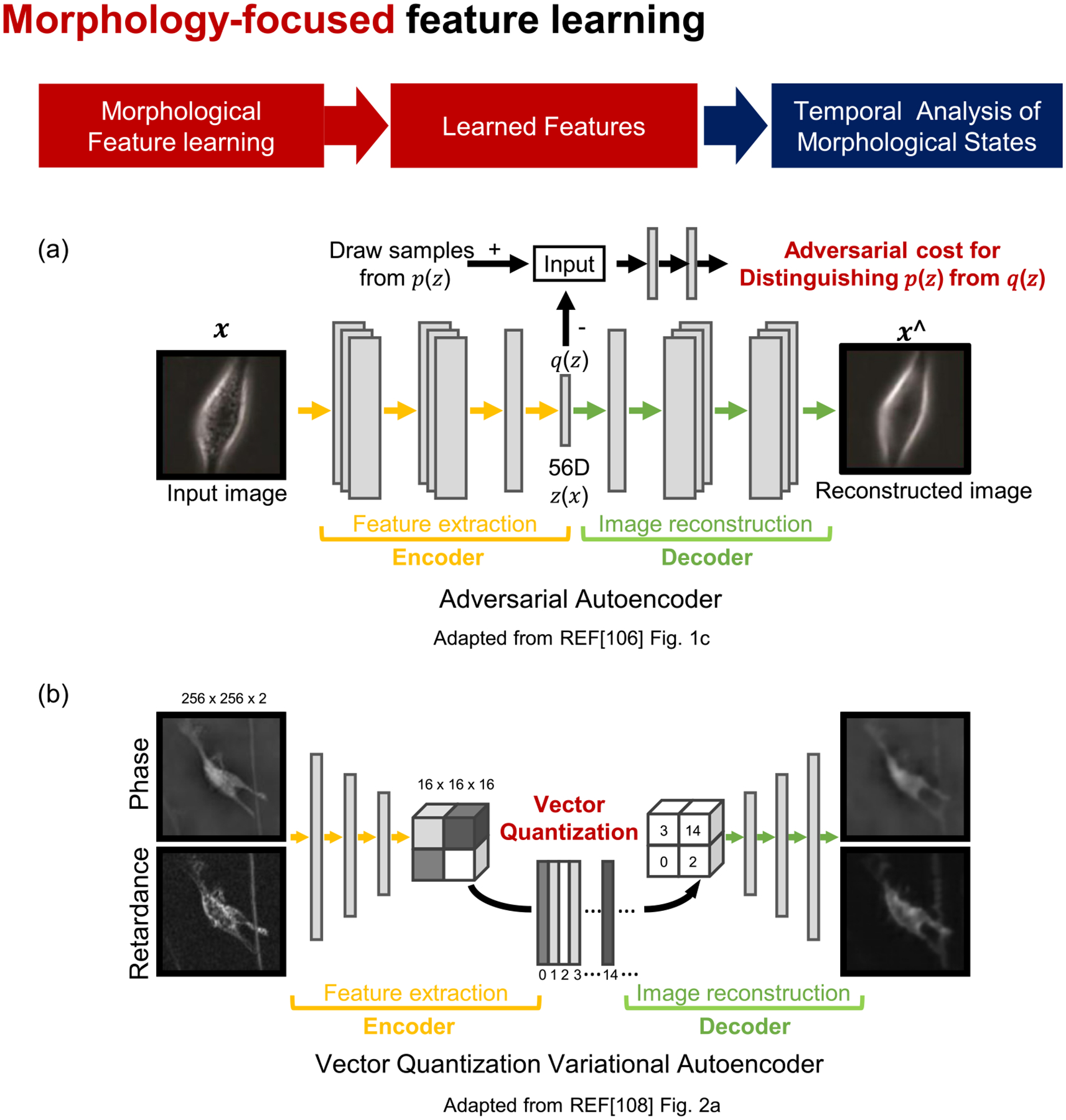

Generative adversarial networks (GANs, a generative modeling strategy where two artificial neural networks are trained to compete with each other) can automatically discover the patterns in input data and generate new data that resemble the original dataset [105]. Zaritsky et al [106] used a GAN variant, an adversarial autoencoder (figure 3(a)), to learn the morphological features of melanoma cells for cancer diagnosis. Then, the time-averaged morphological features were classified using linear discriminant analysis [107] (LDA, see appendix C) to predict the metastatic efficiency of patient-derived xenograft melanoma stage III cells. While deep learning features are usually not interpretable due to the inherent black-box nature of deep learning, they manipulated the autoencoder features to identify the interpretable cellular information that determines the aggressiveness of metastatic cells. This work demonstrated that deep learning analyses of label-free live cell images can be applied for cancer diagnosis.
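The second stage of such a pipeline, time-averaging per-cell features and separating two classes with a linear discriminant, can be sketched with synthetic data. Everything below is an assumption made for illustration: random numbers replace the autoencoder features, the two classes are artificial, and `fisher_lda_direction` is a hypothetical helper implementing the two-class Fisher discriminant rather than the analysis of reference [106].

```python
import numpy as np

def fisher_lda_direction(X0, X1):
    """Two-class Fisher discriminant: direction w and midpoint threshold."""
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter matrix pooled over both classes.
    Sw = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)
    w = np.linalg.solve(Sw, mu1 - mu0)
    threshold = w @ (mu0 + mu1) / 2.0
    return w, threshold

rng = np.random.default_rng(0)
n_cells, n_frames, n_features = 30, 20, 4

# Synthetic per-frame features with shape (cells, frames, features).
low = rng.normal(0.0, 1.0, size=(n_cells, n_frames, n_features))
high = rng.normal(0.0, 1.0, size=(n_cells, n_frames, n_features))
high[:, :, 0] += 3.0  # the classes differ only in the first feature

# Time-average each cell's features, then fit the discriminant.
X0, X1 = low.mean(axis=1), high.mean(axis=1)
w, threshold = fisher_lda_direction(X0, X1)

# Accuracy on the training data only (no held-out set in this sketch).
correct = np.sum(X0 @ w <= threshold) + np.sum(X1 @ w > threshold)
accuracy = correct / (2 * n_cells)
```

In practice, performance would be assessed on held-out cells or patients rather than on the data used to fit the discriminant.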

Figure 3.

Phenotyping of cell morphodynamics based on morphology-focused feature learning. (a) and (b) Phenotyping procedure by morphology-focused feature learning. Autoencoders learn cellular morphological features. (a) Adversarial training and (b) vector quantization variational autoencoder. Panel (a) is adapted from figure 1(c) from reference [106]. Panel (b) is adapted from figure 2(a) from reference [108]. All panels are licensed under (CC-BY-4.0).

Wu et al [108] developed a computational framework, DynaMorph, that employed deep learning for the automated discovery of morphodynamic states. The features of cell morphology were learned by training a CNN model using a vector quantized variational autoencoder (VQ-VAE, figure 3(b)). To understand the interplay between morphology and dynamic behavior, morphodynamic feature vectors were generated by combining the VQ-VAE morphological features, the trajectory-averaged principal components, and averaged displacements between frames. Unsupervised clustering was then performed to reveal novel morphodynamic states. This approach identified two distinct phenotypes of microglial cells that exhibit morphodynamically distinct responses upon immunological challenges. The authors further compared the morphodynamic states and gene expression patterns, demonstrating that the different morphodynamic phenotypes are correlated with differential gene expression programs.

In contrast to previous feature learning, Li et al [73] applied a CNN to simultaneously extract spatiotemporal features for cell dynamic morphology classification. The cell dynamic morphology in video data was converted into 2D image data by quantifying local edge displacement over time, and then the CNN was used to extract features to discover edge deformation patterns. They pursued a transfer learning approach, where the pretrained models (VGG16 or VGG19) were used for feature extraction, and SVM was used as a classifier. They demonstrated that their morphodynamic features were useful for classifying the immune activation of mouse lymphocytes.
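One way to picture the conversion of a video into a single 2D image is to stack local edge displacements into a position-by-time map. The sketch below is a hypothetical simplification, not the procedure of reference [73]: it assumes the cell edge has already been summarized as a radial distance from the centroid in a fixed set of angular bins, and the function name `edge_displacement_map` is invented for this example.

```python
def edge_displacement_map(radii):
    """Build a 2D map of edge displacement (rows: angle bins, columns: time).

    radii[t][k] is the distance from the centroid to the cell edge
    at frame t in angular bin k (assumed input format).
    """
    n_frames, n_bins = len(radii), len(radii[0])
    return [[radii[t + 1][k] - radii[t][k] for t in range(n_frames - 1)]
            for k in range(n_bins)]

# Three frames, four angular bins: bin 0 protrudes, bin 2 retracts once.
radii = [
    [10, 10, 10, 10],
    [11, 10, 9, 10],
    [13, 10, 9, 10],
]
disp_map = edge_displacement_map(radii)
# disp_map == [[1, 2], [0, 0], [-1, 0], [0, 0]]
```

A map of this kind can be rendered as an image and passed to a pretrained CNN, whose intermediate activations then serve as features for a separate classifier.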

5. Discussion

Cell populations have been widely observed to respond heterogeneously to molecular and environmental perturbations [33, 109, 110]. Poorly characterized cellular phenotypes make it challenging to interpret the observed heterogeneity. Furthermore, as advances in imaging and genomic technologies increase the volume of the resulting datasets, dissecting heterogeneity under specific experimental conditions becomes more demanding, particularly when identifying subtle but significant phenotypic variations in cellular dynamics.

The task of assessing cellular dynamic states requires a high-throughput and fully automated approach that can analyze a massive amount of data and discriminate, with statistical significance, rare but biologically meaningful dynamic cellular phenotypes. However, the longitudinal monitoring of dynamic cellular responses with live-cell imaging remains a low-throughput endeavor. High-throughput studies with long-term and large-scale examinations of cell populations, including neuronal differentiation [111] and cell lineages of S. pombe [112], are usually limited to low-resolution settings because conventional microscopes cannot acquire high-resolution and large field-of-view images at the same time. Resolution enhancement of live cell imaging seeks to address this challenge and thereby advance the identification of detailed motility and morphodynamic phenotypes. Fourier ptychographic microscopy [113] can stitch together the Fourier components of low-resolution, large field-of-view images acquired with different directions of illumination, generating high-resolution, large field-of-view images. DL can also improve image resolution by training neural networks to translate low-resolution microscopy images into high-resolution ones [114]. These computational imaging technologies will likely make significant contributions to the development of high-throughput live cell imaging and the integration of cellular motility and morphodynamics across various spatial and temporal scales.

Recently implemented multiomics measurements of genomes, transcriptomes, epigenomes, proteomes, and chromatin organization have opened up new avenues to disentangle the causal relationship between multiomics layers and cellular phenotypes. Integrating these multiple datasets will provide more comprehensive phenotypes [115]. While spatial transcriptomics integrating single-cell RNA-seq and static cellular image data is emerging [116, 117], significant technical hurdles remain for integrating live cell imaging with multiomic technologies. It is expected that MERFISH [118], revealing the spatial distribution of hundreds to thousands of RNA species in individual cells, could be employed for this purpose in the future.

The comprehensive identification of biologically meaningful phenotypes hidden in phenotypic heterogeneity remains a major goal. Many fine-grained dynamic phenotypes can arise from diverse live cell images and multiomic datasets in conjunction with new ML analysis techniques. Given that cellular motility and morphodynamics reflect the states of cellular physiology and pathophysiology, this effort will allow us to deconvolve their heterogeneity and uncover molecular and cellular mechanisms of disease progression in unprecedented detail. This will ultimately open up fresh opportunities for live cell-based drug discovery and diagnosis.

Acknowledgement

This work was supported by NIH grant R35GM133725.

Appendix A. Phenotyping

‘Phenotype’ originates from the Greek words phainein (meaning ‘shining’ or ‘to show’) and typos (meaning ‘type’). A phenotype is defined as the observable properties of an organism, including but not limited to behavior, biochemical properties, color, shape, and size, that result from both its genotype (total genetic inheritance) and the external environment. Biological phenotypes differ from the characteristics of nonliving or inanimate objects in two main ways: (1) they are innately inherited, and (2) they continuously evolve over generations [119, 120] such that they exhibit plasticity during development [121] or as a way of adapting to their environment [122]. As the broad definition of the terminology suggests, the meaning of the word continues to evolve given the perpetual technological evolution of today’s observational tools. In addition, our understanding of biological systems has evolved in an effort to elucidate the mechanisms that underlie the generation of highly complex naturally occurring biological phenotypes. In contrast to a phenotype, a genotype is defined as the genetic composition of an individual organism. Elucidating the mechanism by which genotypes generate phenotypes and how the environment affects the relationship between genotypes and phenotypes is still a major challenge in biology, both for basic research and for practical applications in medicine and agriculture.

Appendix B. Deep learning

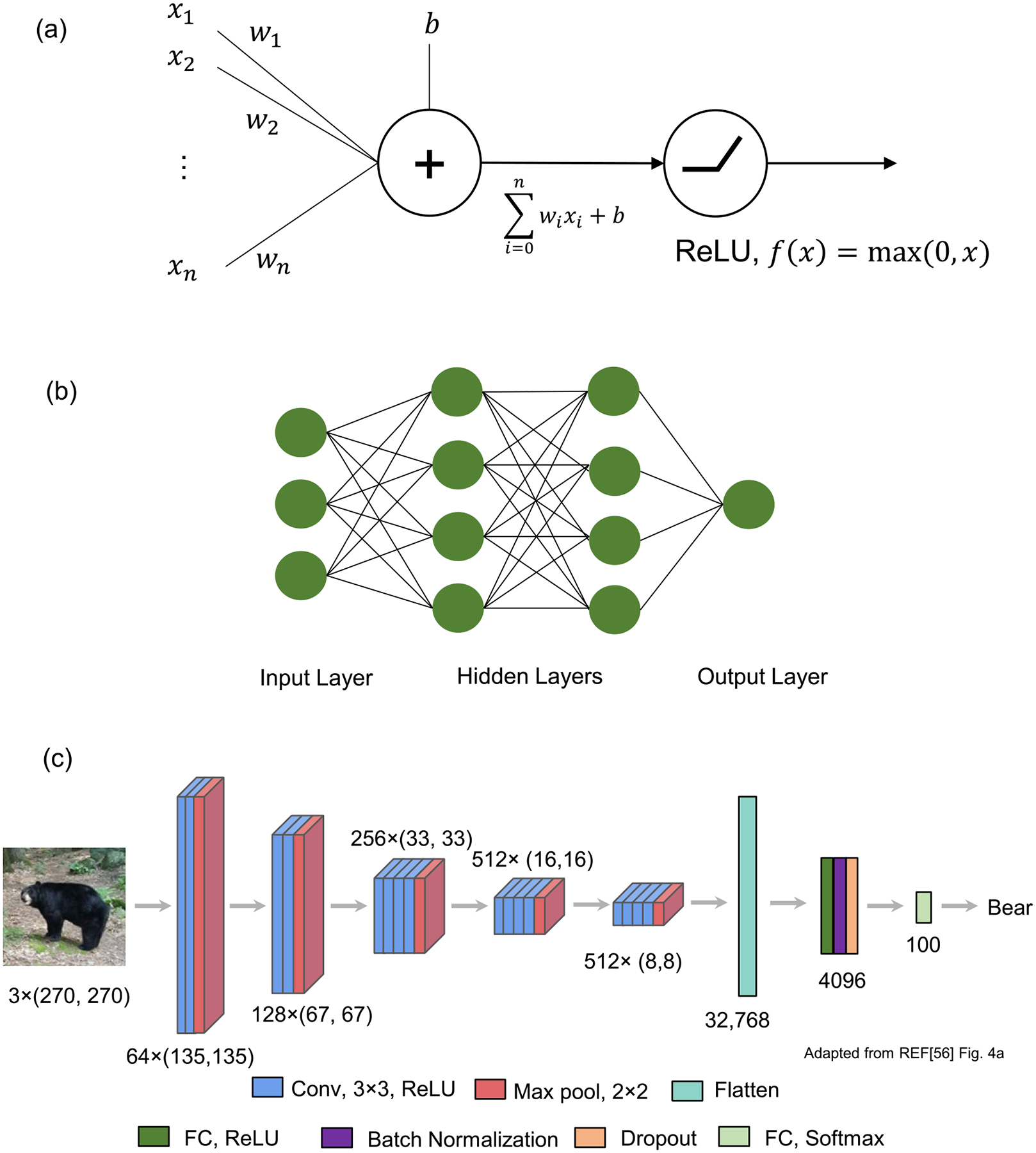

Deep learning is a technique that uses a network of neuron-like components to learn the input and output relationships from the data [104, 123–125]. Since 1943, it has evolved gradually from a mathematical model (perceptron) that mimics a neuron in the brain [126]. Each neuron can take multiple inputs from other neurons and output a value to another neuron with nonlinearity (figure 4(a)). Every connection between neurons has a weight or parameter that is updated during the training process. Together, they form an artificial neural network, minimally resembling neural networks in brains (figure 4(b)) [127]. With the advent of powerful GPUs (graphics processing units) and large-scale image databases, artificial neural networks with more than three layers, called DNNs, have opened a new era of artificial intelligence [124].
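The neuron model described above can be written in a few lines. This is a minimal sketch with hand-picked weights; the function names `relu`, `neuron`, and `layer` are illustrative and do not come from any particular library.

```python
def relu(z):
    """Rectified linear unit: pass positive values, clip negatives to zero."""
    return max(0.0, z)

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, followed by a nonlinearity."""
    return relu(sum(w * x for w, x in zip(weights, inputs)) + bias)

def layer(inputs, weight_rows, biases):
    """A fully connected layer: one neuron per row of weights."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Two inputs feeding two neurons with hand-picked parameters.
outputs = layer([1.0, 2.0], [[0.5, -1.0], [0.5, 1.0]], [0.25, 0.25])
# First neuron:  0.5*1 - 1.0*2 + 0.25 = -1.25 -> ReLU gives 0.0
# Second neuron: 0.5*1 + 1.0*2 + 0.25 =  2.75 -> ReLU gives 2.75
```

Training consists of adjusting the weights and biases so that the outputs of the final layer match the desired targets; stacking several such layers yields a DNN.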

Figure 4.

Deep learning models. (a) A mathematical model of a neuron. The weighted sum of input values is transformed by a nonlinear activation function. A ReLU (rectified linear unit) is a widely used activation function. (b) Fully connected artificial neural network. (c) An example of a CNN for image classification. Conv, 3 × 3, ReLU: convolutional layer with 3 × 3 filters and ReLU activation. Max pool, 2 × 2: max pooling layer with a 2 × 2 window. FC: fully connected layer. a × b × c: output format of a convolutional layer (a: the number of filters; b × c: the size of the filter image). Panel (c) is adapted from figure 4(a) in reference [56] and licensed under (CC-BY-4.0).

The strength of deep learning is that a sufficiently large DNN can, in principle, approximate a very broad class of functions. In other words, it can learn a mapping between the input and output of many kinds of data [128]. For instance, a DNN can take an image as input and output the name of the object in the image [124]. As a result, deep learning methods are applied in various ways, such as image recognition [124], speech recognition [129], protein folding [130], drawing art [131], and playing Go [132]. The large number of parameters in a DNN allows it to excel at learning patterns or features from the high dimensional data of the real world [133]. However, this property also makes a DNN prone to overfitting, where it learns patterns specific to the training data that do not exist in unseen real-world testing data. The most direct way to prevent overfitting is to use a large amount of training data [123]. Training datasets, however, are not always large because acquiring them is costly and time-consuming. Therefore, several regularization techniques, such as data augmentation [124], dropout [134], and batch normalization [135], have been employed to mitigate overfitting.

CNNs are the most popular type of deep neural network for computer vision tasks, which extract information from an image and interpret its content (figure 4(c)) [104, 123]. The CNN architecture used for image classification comprises a sequence of convolutional and pooling layers with fully connected layers at the end [124]. Each convolutional layer contains blocks of neurons, called filters, that find local spatial patterns in the two-dimensional (2D) input and output 2D feature maps. Then, the pooling layer summarizes neighboring values and reduces the feature map to a lower resolution so that the next convolutional layers can detect features over a larger receptive field. The feature map is then flattened into a 1D vector, from which the fully connected layers learn to output numbers representing the classes of the input images. This hierarchical structure of the CNN, which processes low- to higher-level features, resembles how our brains process visual information [127, 136].
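A single convolution, ReLU, and max pooling stage can be sketched directly in NumPy. The example is illustrative only: the filter is a hand-written vertical edge detector rather than a learned one, the image is synthetic, and the helper names `conv2d_valid` and `max_pool_2x2` are hypothetical.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide a filter over a 2D image without padding.

    As in most deep learning libraries, this is a cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool_2x2(fmap):
    """Keep the maximum of each non-overlapping 2 x 2 block."""
    h, w = fmap.shape[0] // 2 * 2, fmap.shape[1] // 2 * 2
    return fmap[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# 6 x 6 image with a dark left half and a bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written 3 x 3 filter that responds to dark-to-bright vertical edges.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

fmap = np.maximum(conv2d_valid(image, kernel), 0.0)  # convolution + ReLU, 4 x 4
pooled = max_pool_2x2(fmap)                          # 2 x 2 after pooling
```

The feature map is nonzero only where the filter overlaps the edge, and pooling halves its resolution while retaining the strongest responses.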

Appendix C. Machine learning glossary

Autocorrelation function (ACF) [99]: a common time series feature that quantifies the similarity between a time series and a lagged copy of itself.

Autoencoder (AE) [49]: a type of artificial neural network widely used for feature learning. It reproduces the input of the AE with a reduced number of hidden units, whose values can serve as the learned features from the data.

CNNs [104]: the most popular type of artificial neural network used for image data. They automatically learn multilevel image features for image classification.

Density-peak clustering [100]: a clustering algorithm that identifies cluster centers that are at local density maxima and away from other high-density regions.

Generative adversarial network (GAN) [105]: a generative modeling strategy where two artificial neural networks (generator and discriminator) are trained to compete with each other.

Haralick feature [95]: a feature that quantifies texture information from images.

Hidden Markov model (HMM) [92]: a statistical technique that models observed data by the probabilistic transitions of hidden states by assuming a Markov process.

K-means [97]: a clustering algorithm that groups unlabeled data into k clusters by assigning each data point to the nearest cluster mean (centroid).

Linear discriminant analysis (LDA) [107]: an algorithm that finds a linear combination of features that separates given classes of data.

Principal component analysis (PCA) [89]: an algorithm that reduces the dimensionality of data while preserving the variance of data as much as possible.

Recurrent neural network (RNN) [104]: a type of artificial neural network where previous output is used for current input. It is widely used for time series modeling and natural language processing.

Support vector machine (SVM) [91]: a classifier that finds the decision boundary maximizing the margin between two classes.

t-distributed stochastic neighbor embedding (t-SNE) [96]: a dimensionality reduction algorithm that embeds data in a low-dimensional space such that pairs of similar data points have a high probability of being neighbors.
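As a concrete illustration of one glossary entry, the autocorrelation function listed above can be computed as follows. This is a minimal sketch using a common sample estimator (normalized by the lag-0 sum of squares); the function name `autocorrelation` and the test series are chosen only for this example.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation of a series at a given non-negative lag."""
    n = len(series)
    mean = sum(series) / n
    # Lag-0 sum of squared deviations, used for normalization.
    denom = sum((x - mean) ** 2 for x in series)
    # Sum of products between the series and its lagged copy.
    num = sum((series[t] - mean) * (series[t + lag] - mean)
              for t in range(n - lag))
    return num / denom

# A series that alternates every frame, such as idealized
# protrusion/retraction cycles with a period of two frames.
x = [1.0, -1.0] * 4
acf = [autocorrelation(x, k) for k in range(3)]
# acf == [1.0, -0.875, 0.75]
```

The negative value at lag 1 and the positive value at lag 2 reflect the two-frame periodicity of the series; the values of the ACF at several lags can be used as time series features.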

Data availability statement

No new data were created or analysed in this study.

References

- [1].Cobb JN, DeClerck G, Greenberg A, Clark R and McCouch S 2013. Next-generation phenotyping: requirements and strategies for enhancing our understanding of genotype-phenotype relationships and its relevance to crop improvement Theor. Appl. Genet 126 867–87

- [2].Gershon ES et al. 2018. Genetic analysis of deep phenotyping projects in common disorders Schizophr. Res 195 51–7

- [3].Yu H and Zhang VW 2015. Precision medicine for continuing phenotype expansion of human genetic diseases BioMed Res. Int 2015 745043

- [4].Baynam G et al. 2015. Phenotyping: targeting genotype’s rich cousin for diagnosis J. Paediatr. Child Health 51 381–6

- [5].Girdea M et al. 2013. PhenoTips: patient phenotyping software for clinical and research use Hum. Mutat 34 1057–65

- [6].Heilker R, Lessel U and Bischoff D 2019. The power of combining phenotypic and target-focused drug discovery Drug Discovery Today 24 526–32

- [7].Haasen D, Schopfer U, Antczak C, Guy C, Fuchs F and Selzer P 2017. How phenotypic screening influenced drug discovery: lessons from five years of practice Assay Drug Dev. Technol 15 239–46

- [8].Schirle M and Jenkins JL 2016. Identifying compound efficacy targets in phenotypic drug discovery Drug Discovery Today 21 82–9

- [9].Fang Y 2015. Combining label-free cell phenotypic profiling with computational approaches for novel drug discovery Expert Opin. Drug Discovery 10 331–43

- [10].Moffat JG, Rudolph J and Bailey D 2014. Phenotypic screening in cancer drug discovery—past, present and future Nat. Rev. Drug Discovery 13 588–602

- [11].Hart C 2005. Finding the target after screening the phenotype Drug Discovery Today 10 513–9

- [12].Gebre AA, Okada H, Kim C, Kubo K, Ohnuki S and Ohya Y 2015. Profiling of the effects of antifungal agents on yeast cells based on morphometric analysis FEMS Yeast Res 15 fov040

- [13].Wawer MJ et al. 2014. Toward performance-diverse small-molecule libraries for cell-based phenotypic screening using multiplexed high-dimensional profiling Proc. Natl Acad. Sci 111 10911–6

- [14].Mochida K, Koda S, Inoue K, Hirayama T, Tanaka S, Nishii R and Melgani F 2019. Computer vision-based phenotyping for improvement of plant productivity: a machine learning perspective Gigascience 8 giy153

- [15].Tsaftaris SA, Minervini M and Scharr H 2016. Machine learning for plant phenotyping needs image processing Trends Plant Sci 21 989–91

- [16].Singh A, Ganapathysubramanian B, Singh AK and Sarkar S 2016. Machine learning for high-throughput stress phenotyping in plants Trends Plant Sci 21 110–24

- [17].Bandiera L, Furini S and Giordano E 2016. Phenotypic variability in synthetic biology applications: dealing with noise in microbial gene expression Front. Microbiol 7 479

- [18].Schmitz J, Noll T and Grünberger A 2019. Heterogeneity studies of mammalian cells for bioproduction: from tools to application Trends Biotechnol 37 645–60

- [19].Spiller DG, Wood CD, Rand DA and White MRH 2010. Measurement of single-cell dynamics Nature 465 736–45

- [20].Lev Bar-Or R, Maya R, Segel LA, Alon U, Levine AJ and Oren M 2000. Generation of oscillations by the p53-Mdm2 feedback loop: a theoretical and experimental study Proc. Natl Acad. Sci 97 11250–5

- [21].Lahav G, Rosenfeld N, Sigal A, Geva-Zatorsky N, Levine AJ, Elowitz MB and Alon U 2004. Dynamics of the p53-Mdm2 feedback loop in individual cells Nat. Genet 36 147–50

- [22].Akanuma T, Chen C, Sato T, Merks RM and Sato TN 2016. Memory of cell shape biases stochastic fate decision-making despite mitotic rounding Nat. Commun 7 11963

- [23].Ruderman D 2017. The emergence of dynamic phenotyping Cell Biol. Toxicol 33 507–9

- [24].Prasad A and Alizadeh E 2018. Cell form and function: interpreting and controlling the shape of adherent cells Trends Biotechnol 37 347–57

- [25].Keren K, Pincus Z, Allen GM, Barnhart EL, Marriott G, Mogilner A and Theriot JA 2008. Mechanism of shape determination in motile cells Nature 453 475–80

- [26].Lacayo CI, Pincus Z, VanDuijn MM, Wilson CA, Fletcher DA, Gertler FB, Mogilner A and Theriot JA 2007. Emergence of large-scale cell morphology and movement from local actin filament growth dynamics PLoS Biol 5 e233

- [27].Machacek M and Danuser G 2006. Morphodynamic profiling of protrusion phenotypes Biophys. J 90 1439–52

- [28].Tranquillo RT and Lauffenburger DA 1987. Stochastic model of leukocyte chemosensory movement J. Math. Biol 25 229–62

- [29].Tranquillo R, Lauffenburger D and Zigmond S 1988. A stochastic model for leukocyte random motility and chemotaxis based on receptor binding fluctuations J. Cell Biol 106 303–9

- [30].Wang C, Choi HJ, Kim S-J, Desai A, Lee N, Kim D, Bae Y and Lee K 2018. Deconvolution of subcellular protrusion heterogeneity and the underlying actin regulator dynamics from live cell imaging Nat. Commun 9 1688

- [31].da Rocha-Azevedo B, Lee S, Dasgupta A, Vega AR, de Oliveira LR, Kim T, Kittisopikul M, Malik ZA and Jaqaman K 2020. Heterogeneity in VEGF receptor-2 mobility and organization on the endothelial cell surface leads to diverse models of activation by VEGF Cell Rep. 32 108187

- [32].Ma X, Dagliyan O, Hahn KM and Danuser G 2018. Profiling cellular morphodynamics by spatiotemporal spectrum decomposition PLoS Comput. Biol 14 e1006321

- [33].Slack MD, Martinez ED, Wu LF and Altschuler SJ 2008. Characterizing heterogeneous cellular responses to perturbations Proc. Natl Acad. Sci 105 19306–11

- [34].Patsch K, Chiu C-L, Engeln M, Agus DB, Mallick P, Mumenthaler SM and Ruderman D 2016. Single cell dynamic phenotyping Sci. Rep 6 34785

- [35].Goglia AG, Wilson MZ, Jena SG, Silbert J, Basta LP, Devenport D and Toettcher JE 2020. A live-cell screen for altered Erk dynamics reveals principles of proliferative control Cell Syst 10 240–53

- [36].Zhou FY, Ruiz-Puig C, Owen RP, White MJ, Rittscher J and Lu X 2019. Motion sensing superpixels (MOSES) is a systematic computational framework to quantify and discover cellular motion phenotypes eLife 8 e40162

- [37].Zamir A, Li G, Chase K, Moskovitch R, Sun B and Zaritsky A 2020. Emergence of synchronized multicellular mechanosensing from spatiotemporal integration of heterogeneous single-cell information transfer bioRxiv Preprint 10.1101/2020.09.28.316240

- [38].Cruz JA, Savage LJ, Zegarac R, Hall CC, Satoh-Cruz M, Davis GA, Kovac WK, Chen J and Kramer DM 2016. Dynamic environmental photosynthetic imaging reveals emergent phenotypes Cell Syst 2 365–77

- [39].Danuser G 2011. Computer vision in cell biology Cell 147 973–8

- [40].McQuin C et al. 2018. CellProfiler 3.0: next-generation image processing for biology PLoS Biol 16 e2005970 (doi: 10.1371/journal.pbio.2005970)

- [41].Rajaram S, Pavie B, Wu LF and Altschuler SJ 2012. PhenoRipper: software for rapidly profiling microscopy images Nat. Methods 9 635–7

- [42].Rämö P, Sacher R, Snijder B, Begemann B and Pelkmans L 2009. CellClassifier: supervised learning of cellular phenotypes Bioinformatics 25 3028–30

- [43].Piccinini F et al. 2017. Advanced cell classifier: user-friendly machine-learning-based software for discovering phenotypes in high-content imaging data Cell Syst 4 651–5

- [44].Partin AW, Schoeniger JS, Mohler JL and Coffey DS 1989. Fourier analysis of cell motility: correlation of motility with metastatic potential Proc. Natl Acad. Sci 86 1254–8

- [45].Giuliano KA 1996. Dissecting the individuality of cancer cells: the morphological and molecular dynamics of single human glioma cells Cell Motil. Cytoskeleton 35 237–53

- [46].Bellman R 1966. Dynamic programming Science 153 34–7

- [47].Aggarwal CC, Hinneburg A and Keim DA 2001. On the surprising behavior of distance metrics in high dimensional space Int. Conf. on Database Theory pp 420–34

- [48].Bengio Y, Courville A and Vincent P 2013. Representation learning: a review and new perspectives IEEE Trans. Pattern Anal. Mach. Intell 35 1798–828

- [49].Kramer MA 1991. Nonlinear principal component analysis using autoassociative neural networks AIChE J 37 233–43

- [50].Yosinski J, Clune J, Bengio Y and Lipson H 2014. How transferable are features in deep neural networks? Proc. of the 27th Int. Conf. on Neural Information Processing Systems vol 2 (Cambridge, MA: MIT Press) pp 3320–8

- [51].Pratt LY 1992. Discriminability-based transfer between neural networks Advances in Neural Information Processing Systems [NIPS Conf.] vol 5 (San Mateo, CA: Morgan Kaufmann Publishers) pp 204–11

- [52].Deng J, Dong W, Socher R, Li L, Kai L and Li F-F 2009. ImageNet: a large-scale hierarchical image database 2009 IEEE Conf. on Computer Vision and Pattern Recognition pp 248–55

- [53].Razavian AS, Azizpour H, Sullivan J and Carlsson S 2014. CNN features off-the-shelf: an astounding baseline for recognition 2014 IEEE Conf. on Computer Vision and Pattern Recognition Workshops pp 512–9

- [54].Donahue J, Jia Y, Vinyals O, Hoffman J, Zhang N, Tzeng E and Darrell T 2014. DeCAF: a deep convolutional activation feature for generic visual recognition Proc. of the 31st Int. Conf. on Machine Learning (Proceedings of Machine Learning Research, PMLR) ed Eric PX and Tony J pp 647–55

- [55].Oquab M, Bottou L, Laptev I and Sivic J 2014. Learning and transferring mid-level image representations using convolutional neural networks 2014 IEEE Conf. on Computer Vision and Pattern Recognition pp 1717–24

- [56].Kim S-J et al. 2018. Deep transfer learning-based hologram classification for molecular diagnostics Sci. Rep 8 17003 (doi: 10.1038/s41598-018-35274-x)

- [57].Jerison ER and Quake SR 2020. Heterogeneous T cell motility behaviors emerge from a coupling between speed and turning in vivo eLife 9 e53933

- [58].Schoenauer Sebag A, Plancade S, Raulet-Tomkiewicz C, Barouki R, Vert J-P and Walter T 2015. A generic methodological framework for studying single cell motility in high-throughput time-lapse data Bioinformatics 31 i320–8

- [59].Kimmel JC, Chang AY, Brack AS and Marshall WF 2018. Inferring cell state by quantitative motility analysis reveals a dynamic state system and broken detailed balance PLoS Comput. Biol 14 e1005927

- [60].Bray M-A and Carpenter AE 2015. CellProfiler tracer: exploring and validating high-throughput, time-lapse microscopy image data BMC Bioinf 16 369

- [61].Zaritsky A, Tseng Y-Y, Rabadán MA, Krishna S, Overholtzer M, Danuser G and Hall A 2017. Diverse roles of guanine nucleotide exchange factors in regulating collective cell migration J. Cell Biol 216 1543–56

- [62].Cohen AR, Gomes FLAF, Roysam B and Cayouette M 2010. Computational prediction of neural progenitor cell fates Nat. Methods 7 213–8

- [63].Bertolo A, Gemperli A, Gruber M, Gantenbein B, Baur M, Pötzel T and Stoyanov J 2015. In vitro cell motility as a potential mesenchymal stem cell marker for multipotency Stem Cells Transl. Med 4 84–90

- [64].Guo P, Huang J and Moses MA 2017. Characterization of dormant and active human cancer cells by quantitative phase imaging Cytometry 91 424–32

- [65].Guo P, Yang J, Huang J, Auguste DT and Moses MA 2019. Therapeutic genome editing of triple-negative breast tumors using a noncationic and deformable nanolipogel Proc. Natl Acad. Sci. USA 116 18295–303

- [66].Mogilner A and Keren K 2009. The shape of motile cells Curr. Biol 19 R762–71

- [67].Tweedy L, Witzel P, Heinrich D, Insall RH and Endres RG 2019. Screening by changes in stereotypical behavior during cell motility Sci. Rep 9 8784

- [68].Hermans TM, Pilans D, Huda S, Fuller P, Kandere-Grzybowska K and Grzybowski BA 2013. Motility efficiency and spatiotemporal synchronization in non-metastatic vs metastatic breast cancer cells Integr. Biol 5 1464–73

- [69].Shafqat-Abbasi H, Kowalewski JM, Kiss A, Gong X, Hernandez-Varas P, Berge U, Jafari-Mamaghani M, Lock JG and Strömblad S 2016. An analysis toolbox to explore mesenchymal migration heterogeneity reveals adaptive switching between distinct modes eLife 5 e11384

- [70].Manak MS et al. 2018. Live-cell phenotypic-biomarker microfluidic assay for the risk stratification of cancer patients via machine learning Nat. Biomed. Eng 2 761–72

- [71].Bagonis MM, Fusco L, Pertz O and Danuser G 2019. Automated profiling of growth cone heterogeneity defines relations between morphology and motility J. Cell Biol 218 350–79 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [72].Gordonov S, Hwang MK, Wells A, Gertler FB, Lauffenburger DA and Bathe M 2016. Time series modeling of live-cell shape dynamics for image-based phenotypic profiling Integr. Biol 8 73–90 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [73].Li H, Pang F, Shi Y and Liu Z 2018. Cell dynamic morphology classification using deep convolutional neural networks Cytometry 93 628–38 [DOI] [PubMed] [Google Scholar]

- [74].Buggenthin F et al. 2017. Prospective identification of hematopoietic lineage choice by deep learning Nat. Methods 14 403–6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [75].Lee GY, Kenny PA, Lee EH and Bissell MJ 2007. Three-dimensional culture models of normal and malignant breast epithelial cells Nat. Methods 4 359–65 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [76].Chen B-C et al. 2014. Lattice light-sheet microscopy: imaging molecules to embryos at high spatiotemporal resolution Science 346 1257998 (doi: 10.1126/science.1257998)

- [77].Wu P-H, Giri A, Sun SX and Wirtz D 2014. Three-dimensional cell migration does not follow a random walk Proc. Natl Acad. Sci. USA 111 3949–54

- [78].Dufour AC, Liu T-Y, Ducroz C, Tournemenne R, Cummings B, Thibeaux R, Guillen N, Hero AO and Olivo-Marin J-C 2014. Signal processing challenges in quantitative 3D cell morphology: more than meets the eye IEEE Signal Process. Mag 32 30–40

- [79].Roitshtain D, Wolbromsky L, Bal E, Greenspan H, Satterwhite LL and Shaked NT 2017. Quantitative phase microscopy spatial signatures of cancer cells Cytometry 91 482–93

- [80].Elliott H, Fischer RS, Myers KA, Desai RA, Gao L, Chen CS, Adelstein RS, Waterman CM and Danuser G 2015. Myosin II controls cellular branching morphogenesis and migration in three dimensions by minimizing cell-surface curvature Nat. Cell Biol 17 137–47

- [81].Driscoll MK, Welf ES, Jamieson AR, Dean KM, Isogai T, Fiolka R and Danuser G 2019. Robust and automated detection of subcellular morphological motifs in 3D microscopy images Nat. Methods 16 1037–44

- [82].Ponti A, Machacek M, Gupton S, Waterman-Storer C and Danuser G 2004. Two distinct actin networks drive the protrusion of migrating cells Science 305 1782–6

- [83].Machacek M et al. 2009. Coordination of Rho GTPase activities during cell protrusion Nature 461 99–103

- [84].Lee K, Elliott HL, Oak Y, Zee C-T, Groisman A, Tytell JD and Danuser G 2015. Functional hierarchy of redundant actin assembly factors revealed by fine-grained registration of intrinsic image fluctuations Cell Syst 1 37–50

- [85].Mohan AS et al. 2019. Enhanced dendritic actin network formation in extended lamellipodia drives proliferation in growth-challenged Rac1P29S melanoma cells Dev. Cell 49 444–60

- [86].Tsygankov D, Bilancia CG, Vitriol EA, Hahn KM, Peifer M and Elston TC 2014. CellGeo: a computational platform for the analysis of shape changes in cells with complex geometries J. Cell Biol 204 443–60

- [87].Urbaňcič V, Butler R, Richier B, Peter M, Mason J, Livesey FJ, Holt CE and Gallop JL 2017. Filopodyan: an open-source pipeline for the analysis of filopodia J. Cell Biol 216 3405–22

- [88].Jacquemet G, Paatero I, Carisey AF, Padzik A, Orange JS, Hamidi H and Ivaska J 2017. FiloQuant reveals increased filopodia density during breast cancer progression J. Cell Biol 216 3387–403

- [89].Jolliffe IT and Cadima J 2016. Principal component analysis: a review and recent developments Phil. Trans. R. Soc. A 374 20150202

- [90].Held M, Schmitz MHA, Fischer B, Walter T, Neumann B, Olma MH, Peter M, Ellenberg J and Gerlich DW 2010. CellCognition: time-resolved phenotype annotation in high-throughput live cell imaging Nat. Methods 7 747–54

- [91].Cortes C and Vapnik V 1995. Support-vector networks Mach. Learn 20 273–97

- [92].Durbin R, Eddy SR, Krogh A and Mitchison G 1998. Biological Sequence Analysis: Probabilistic Models of Proteins and Nucleic Acids (Cambridge: Cambridge University Press)

- [93].Zhong Q, Busetto AG, Fededa JP, Buhmann JM and Gerlich DW 2012. Unsupervised modeling of cell morphology dynamics for time-lapse microscopy Nat. Methods 9 711–3

- [94].Wang W et al. 2020. Live-cell imaging and analysis reveal cell phenotypic transition dynamics inherently missing in snapshot data Sci. Adv 6 eaba9319 (doi: 10.1126/sciadv.aba9319)

- [95].Haralick RM 1979. Statistical and structural approaches to texture Proc. IEEE 67 786–804

- [96].van der Maaten L and Hinton G 2008. Visualizing data using t-SNE J. Mach. Learn. Res 9 2579–605

- [97].MacQueen J 1967. Some methods for classification and analysis of multivariate observations Proc. of the 5th Berkeley Symp. on Mathematical Statistics and Probability (Oakland, CA, USA) pp 281–97

- [98].Goodhill GJ, Faville RA, Sutherland DJ, Bicknell BA, Thompson AW, Pujic Z, Sun B, Kita EM and Scott EK 2015. The dynamics of growth cone morphology BMC Biol 13 10

- [99].Montero P and Vilar JA 2014. TSclust: an R package for time series clustering J. Stat. Softw 62 1–43

- [100].Rodriguez A and Laio A 2014. Clustering by fast search and find of density peaks Science 344 1492–6

- [101].Li H, Pang F and Liu Z 2019. A modeling strategy for cell dynamic morphology classification based on local deformation patterns Biomed. Signal Process. Control 54 101587

- [102].Wang X, Chen Z, Mettlen M, Noh J, Schmid SL and Danuser G 2020. DASC, a sensitive classifier for measuring discrete early stages in clathrin-mediated endocytosis eLife 9 e53686

- [103].Urbina FL, Menon S, Edwards RJ, Goldfarb D, Major MB, Brennwald PJ and Gupton SL 2020. TRIM67 regulates exocytic mode and neuronal morphogenesis via SNAP47 bioRxiv

- [104].LeCun Y, Bengio Y and Hinton G 2015. Deep learning Nature 521 436–44

- [105].Goodfellow IJ, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A and Bengio Y 2014. Generative adversarial networks Proc. of the Int. Conf. on Neural Information Processing Systems pp 2672–80

- [106].Zaritsky A, Jamieson AR, Welf ES, Nevarez A, Cillay J, Eskiocak U, Cantarel BL and Danuser G 2020. Interpretable deep learning of label-free live cell images uncovers functional hallmarks of highly-metastatic melanoma bioRxiv

- [107].McLachlan GJ 2004. Discriminant Analysis and Statistical Pattern Recognition (New York: Wiley)

- [108].Wu Z, Chhun BB, Schmunk G, Kim CN, Yeh L-H, Nowakowski T, Zou J and Mehta SB 2020. DynaMorph: learning morphodynamic states of human cells with live imaging and sc-RNAseq bioRxiv Preprint 10.1101/2020.07.20.213074

- [109].Kim C, Seedorf GJ, Abman SH and Shepherd DP 2019. Heterogeneous response of endothelial cells to insulin-like growth factor 1 treatment is explained by spatially clustered sub-populations Biol. Open 8 bio045906

- [110].Snijder B, Sacher R, Rämö P, Damm E-M, Liberali P and Pelkmans L 2009. Population context determines cell-to-cell variability in endocytosis and virus infection Nature 461 520–3

- [111].Weber S, Fernández-Cachón ML, Nascimento JM, Knauer S, Offermann B, Murphy RF, Boerries M and Busch H 2013. Label-free detection of neuronal differentiation in cell populations using high-throughput live-cell imaging of PC12 cells PLoS One 8 e56690

- [112].Nobs J-B and Maerkl SJ 2014. Long-term single cell analysis of S. pombe on a microfluidic microchemostat array PLoS One 9 e93466

- [113].Zheng G, Horstmeyer R and Yang C 2013. Wide-field, high-resolution Fourier ptychographic microscopy Nat. Photon 7 739–45

- [114].Qiao C, Li D, Guo Y, Liu C, Jiang T, Dai Q and Li D 2021. Evaluation and development of deep neural networks for image super-resolution in optical microscopy Nat. Methods 18 194

- [115].Li Y, Wu F-X and Ngom A 2016. A review on machine learning principles for multi-view biological data integration Brief Bioinf 19 bbw113

- [116].Ståhl PL et al. 2016. Visualization and analysis of gene expression in tissue sections by spatial transcriptomics Science 353 78–82

- [117].Gerbin KA et al. 2020. Cell states beyond transcriptomics: integrating structural organization and gene expression in hiPSC-derived cardiomyocytes bioRxiv Preprint 10.1101/2020.05.26.081083

- [118].Chen KH, Boettiger AN, Moffitt JR, Wang S and Zhuang X 2015. Spatially resolved, highly multiplexed RNA profiling in single cells Science 348 aaa6090

- [119].Auge GA, Leverett LD, Edwards BR and Donohue K 2017. Adjusting phenotypes via within- and across-generational plasticity New Phytol 216 343–9

- [120].Norouzitallab P, Baruah K, Vanrompay D and Bossier P 2019. Can epigenetics translate environmental cues into phenotypes? Sci. Total Environ 647 1281–93

- [121].Corona M, Libbrecht R and Wheeler DE 2016. Molecular mechanisms of phenotypic plasticity in social insects Curr. Opin. Insect Sci 13 55–60

- [122].Otte T, Hilker M and Geiselhardt S 2018. Phenotypic plasticity of cuticular hydrocarbon profiles in insects J. Chem. Ecol 44 235–47

- [123].Chartrand G, Cheng PM, Vorontsov E, Drozdzal M, Turcotte S, Pal CJ, Kadoury S and Tang A 2017. Deep learning: a primer for radiologists RadioGraphics 37 2113–31

- [124].Krizhevsky A, Sutskever I and Hinton GE 2012. ImageNet classification with deep convolutional neural networks Advances in Neural Information Processing Systems pp 1097–105

- [125].LeCun Y, Bottou L, Bengio Y and Haffner P 1998. Gradient-based learning applied to document recognition Proc. IEEE 86 2278–324

- [126].Wang H and Raj B 2017. On the origin of deep learning (arXiv:1702.07800)

- [127].Savage N 2019. How AI and neuroscience drive each other forwards Nature 571 S15–7

- [128].Cybenko G 1989. Approximation by superpositions of a sigmoidal function Math. Control Signal Syst 2 303–14

- [129].Chiu C-C et al. 2018. State-of-the-art speech recognition with sequence-to-sequence models IEEE Int. Conf. on Acoustics, Speech and Signal Processing (ICASSP) (Piscataway, NJ: IEEE)

- [130].Senior AW et al. 2020. Improved protein structure prediction using potentials from deep learning Nature 577 706–10

- [131].Zhu J-Y, Park T, Isola P and Efros AA 2020. Unpaired image-to-image translation using cycle-consistent adversarial networks (arXiv:1703.10593)

- [132].Silver D et al. 2016. Mastering the game of Go with deep neural networks and tree search Nature 529 484–9

- [133].Sejnowski TJ 2020. The unreasonable effectiveness of deep learning in artificial intelligence Proc. Natl Acad. Sci. USA 117 30033–8

- [134].Srivastava N, Hinton G, Krizhevsky A, Sutskever I and Salakhutdinov R 2014. Dropout: a simple way to prevent neural networks from overfitting J. Mach. Learn. Res 15 1929–58

- [135].Ioffe S and Szegedy C 2015. Batch normalization: accelerating deep network training by reducing internal covariate shift Int. Conf. on Machine Learning (PMLR) pp 448–56

- [136].Zeiler MD and Fergus R 2014. Visualizing and understanding convolutional networks Computer Vision – ECCV 2014 (Berlin: Springer) pp 818–33

Data Availability Statement

No new data were created or analysed in this study.