Abstract

Modern auscultation, using digital stethoscopes, provides a better solution than conventional methods in sound recording and visualization. However, current digital stethoscopes are too bulky and nonconformal to the skin for continuous auscultation. Moreover, motion artifacts from the rigidity cause friction noise, leading to inaccurate diagnoses. Here, we report a class of technologies that offers real-time, wireless, continuous auscultation using a soft wearable system as a quantitative disease diagnosis tool for various diseases. The soft device can detect continuous cardiopulmonary sounds with minimal noise and classify real-time signal abnormalities. A clinical study with multiple patients and control subjects captures the unique advantage of the wearable auscultation method with embedded machine learning for automated diagnoses of four types of lung diseases: crackle, wheeze, stridor, and rhonchi, with a 95% accuracy. The soft system also demonstrates the potential for a sleep study by detecting disordered breathing for home sleep and apnea detection.

A soft wearable stethoscope offers wireless continuous real-time auscultation and automated disease diagnosis.

INTRODUCTION

Chronic obstructive pulmonary disease (COPD) and cardiovascular disease (CVD) (1) are the leading causes of death worldwide, taking almost a million lives each year (2). COPD and CVD are umbrella terms for a group of diseases that cause heart and lungs to malfunction, restricting blood flow to cause difficulty in breathing and severe discomfort (3). An alarming 80% of COPD deaths occur in low- and middle-income countries (LMICs), where the lack of accessibility to health care treatment and affordability for current medical devices limits the feasibility of tracking the development of these progressive diseases over extended periods. For the diagnosis and care of patients with COPD and CVD, accurate auscultation is helpful for the diagnosis at an early stage and evaluation of the treatment response (4, 5). Wheezing is important in the diagnosis and monitoring of those diseases (6). Crackles have a crucial significance in diagnosing pneumonia, idiopathic pulmonary fibrosis, and pulmonary edema (5). Stridor suggests severe obstruction of the upper airway and is helpful for the patient’s emergency treatment. Heart sound also provides substantial information for diagnosing and identifying various valvular heart diseases and heart failures. If there is sound splitting in S1 and S2, some CVDs such as valve diseases, arrhythmias, and pulmonary arterial hypertension can be suspected (7, 8). If the pathological S3 or S4 sound is heard, it may suggest valve diseases, heart failure, and acute coronary diseases (9).

Auscultation has been the most basic and vital diagnostic method in the medical field because it is noninvasive, fast, informative, and inexpensive. Although many imaging and diagnostic technologies such as chest computed tomography and echocardiogram have been widely applied in clinical practice, auscultation is used as a primary diagnostic tool, especially in the LMICs (10). However, auscultation with conventional stethoscopes has fundamental limitations. Most stethoscopes cannot record the detected sounds, making it difficult to share the auscultatory sounds with other medical staff. In addition, analysis of auscultation sounds is quite different depending on the knowledge and experience of the clinician. As a result, some critical respiratory or heart diseases are underdiagnosed or misdiagnosed (5). Recently, the incidence and socioeconomic burden of COPD and CVD continue to increase because of the increasingly aging population, worsening air pollution, and various infectious diseases. Early diagnosis and accurate monitoring using exact auscultation are becoming more crucial and urgently required to improve auscultation technology.

Digital stethoscopes assist auscultation in real time and telemedicine diagnosis by recording and converting acoustic sound to electrical signals, amplifying subtle sounds that are inaudible using acoustic stethoscopes (11). They can be used instead of binaural stethoscopes in everyday patient care. In addition, these devices can be supplemented with computer software to improve diagnostic capabilities, although effective diagnosis using signal processing is still unavailable (12). Although signal graphs define quantitative measurements and reduce the subjectiveness of diagnosis from different physicians, varying positions and pressure of stethoscope placement on the chest and the back still cause unwanted friction noise and human errors during data collection. This is especially a concern for patients self-operating a digital stethoscope at home, who lack experience compared with trained medical professionals. The rigidity and bulkiness of current digital stethoscopes create an unmet need for a more patient-friendly digital stethoscope. Furthermore, digital stethoscopes cannot detect pathology or aberrant sounds for diagnostic purposes. They just serve as sound recording devices, with the ability to graphically show the sound in the form of a phonocardiogram in some circumstances. Computer algorithms must be developed and accompanied for automated diagnosis to be possible. The approach in the vast majority of studies describes a single chest recording. However, for the sake of sophisticated diagnosis, this is frequently insufficient. Multiple auscultation sites are required, which might lead to more errors (12). Developing a more inexpensive, accurate, and wearable digital stethoscope device makes telehealth and other forms of remote medical diagnosis more accessible, allowing for early cardiovascular and respiratory disease detection through continuous monitoring.

Here, this paper introduces a soft wearable stethoscope (SWS) system for ambulatory cardiopulmonary auscultation using a class of technologies with advanced electronics, flexible mechanics, and soft packaging, which serves as a novel self-operable wearable for continuous cardiovascular and respiratory monitoring. The area of focus will be the device’s design for accurate cardiorespiratory data collection (13) during daily activities to diagnose various pulmonary abnormalities. Improving the signal-to-noise ratio (SNR) from the wavelet-denoised sound collection, minimizing circuitry to make the device model more compact, and training a machine learning model to correctly identify stridor (14), rhonchi (15), wheezing (16), and crackling (17) lung sounds from the device are all demonstrated in this article. A user-friendly mobile device application is also developed that can record heart and lung sounds, track and display real-time signals, automatically diagnose various abnormal lung sounds, and upload information to a synchronized local memory remotely and securely.

RESULTS

Design, architecture, and mechanical properties of an SWS

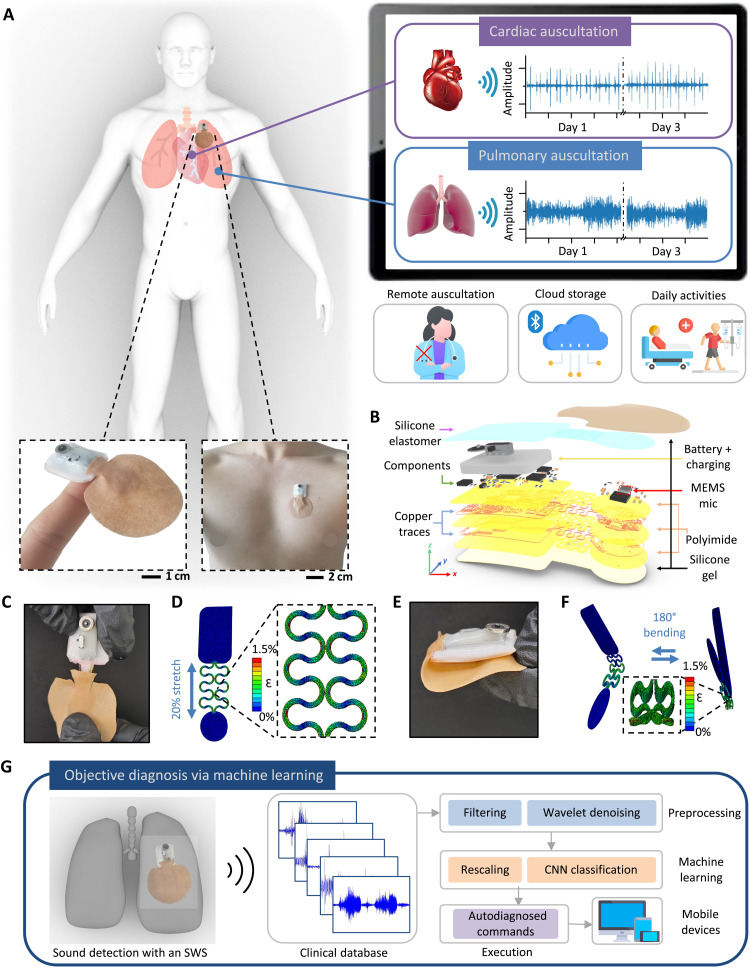

The design overview and key functions of an SWS are summarized in Fig. 1. The combination of nanomaterial printing, system integration, and soft material packaging yields a miniaturized, soft wearable system for remote cardiopulmonary auscultation of patients. The SWS in Fig. 1A has an exceptionally small form factor and mechanically soft and flexible properties, allowing for intimate skin integration and self-operable auscultation for remote and continuous monitoring without physical interactions between patients and physicians. The soft mechanical characteristics include an elastomeric enclosure with an inner silicone gel (300 μm in thickness and 4 kPa in Young’s modulus; details in movie S1) (18). This arrangement allows gentle placement of the device on the curved skin of the chest and the back via a thin, conductive hydrogel coupling layer to auscultate cardiac and respiratory activities. The silicone-gel backing allows the device to be attached and reused multiple times while maintaining sound detection quality over a couple of days. The schematic illustration in Fig. 1B shows the details of the densely packed multiple layers of soft materials and electronic components, including a microphone sensor, a flexible thin-film circuit, a rechargeable battery, and a Bluetooth-low-energy (BLE) unit for wireless data transmission (details of the circuit design are in figs. S1 and S2). This system uses a microelectromechanical system (MEMS) microphone because of its small diaphragm for sound recording. Collected sounds from the microphone are then converted to digital signals through the analog-to-digital converter and streamed in real time via the BLE chip for data processing. After sound collection, signal processing and a denoising algorithm are used to filter out extraneous noise and label signals with various classes (19).
The critical design point of the device is to isolate the microphone from the core circuit area, which provides an enhanced and more stable contact with the skin for noise-reduced continuous auscultation. The step-by-step fabrication processes are summarized in fig. S3, and the sequential photos in fig. S4 show how the chip components are assembled and packaged for mounting on the skin. The integrated SWS can measure heart and lung sounds for more than 10 hours with continuous wireless data transmission (fig. S5); this device is powered by a miniaturized, rechargeable, lithium-ion polymer battery (40 mAh capacity). The battery’s two terminals and the circuit’s power pads are soldered with small neodymium magnets for a guided battery connection and repeated use. Computational finite element analysis and corresponding experiments capture the stretching and bending mechanics of the device on the human skin (20), mimicking the user’s respiration cycles (Fig. 1, C to F). Additional details of the device’s mechanical reliability are shown in fig. S6, where cyclic mechanical tests of the device are conducted with measured electrical resistance. When evaluated by a digital force gauge (EMS303, Mark-10) and a multimeter (DMM7510, Tektronix), the soft and flexible system, enclosed by low-modulus elastomers, displays mechanical stability without fractures. Resistance fluctuation was negligible over the 100 cycles, with variations of less than 30 milliohms. The total resistance change was about 0.41 milliohms for cyclic stretching and 0.71 milliohms for cyclic bending tests. The schematic illustration in Fig. 1G shows the overall flow for automated, objective diagnosis of different diseases via machine learning in the SWS. For real-time, continuous data recording, a customized Android-based app is used (fig. S7). Sounds collected through the app go through preprocessing, machine learning, and classification using convolutional neural networks (CNNs).
For instance, coarse breathing crackles during inhaling is a symptom of COPD, while the appearance of S3 and S4 heart signals indicates cardiac dysfunction (21). Collectively, the fully portable SWS can offer a unique opportunity for remote, digital health monitoring of patients without frequent visits to hospitals (Table 1).

Fig. 1. Design, architecture, and mechanical properties of an SWS.

(A) Schematic illustration of remote monitoring using the SWS, with the zoomed-in photo of the device on the finger and the chest (bottom). Mobile device showing real-time graphs of cardiac and pulmonary auscultation data over 3 days (right) while doing daily activities without any contact (bottom right). (B) Exploded view of the SWS with multiple layers of deposited materials. (C) Image of the 20% stretched interconnects in the SWS. (D) Finite element analysis (FEA) results in (C). (E) Photo of the SWS with 180° bending. (F) FEA results showing cyclic bending from (E). (G) Schematic illustration of the flow for automated, objective diagnosis of diseases via machine learning in the SWS. Various real-time collected abnormal sounds go through preprocessing, machine learning, and classified results stream through the application installed in any mobile device.

Table 1. Performance comparison of wearable digital stethoscopes using microphones.

MIO, mechanical intestinal obstruction.

| Reference | Continuous cardiopulmonary monitoring | Controlled motion artifact | SNR of cardiac signals (dB) | Activity levels | Clinical study | Number of patients |
| --- | --- | --- | --- | --- | --- | --- |
| This work | Yes (more than 10 hours up to 3 days with a 150-mAh battery) | Yes | 14.8 | Daily activities with jogging | Patients with lung disease | 20 (12 crackle, 1 rhonchi, 4 wheeze, and 3 stridor) |
| (33) | No | No | 1.76 | Stationary | – | – |
| (34) | No | No | 10.0 | Stationary | – | – |
| (35) | No | No | 6.02 | Stationary | – | – |
| (36) | No | No | 10.0 | Stationary | – | – |
| (37) | No | No | 6.02 | Stationary | – | – |
| (38) | No | No | 7.00 | Stationary | Patients with MIO and paralytic ileus | 2 (1 MIO and 1 paralytic ileus) |
| (39) | No | No | 7.36 | Stationary | – | – |
| (19) | No | No | 6.19 | Stationary | – | – |
| (40) | No | No | 10.0 | Stationary | Patients with pneumonia | 5 (pneumonia) |
| (41) | No | No | 3.98 | Stationary | Patients with wheeze | 40 (wheeze) |
| (42) | No | No | 10.0 | Stationary | – | – |
| (13) | No | No | 6.99 | Stationary | – | – |
| (43) | No | No | 9.03 | Stationary | – | – |

Mechanics, optimization, and control of motion artifacts with an SWS

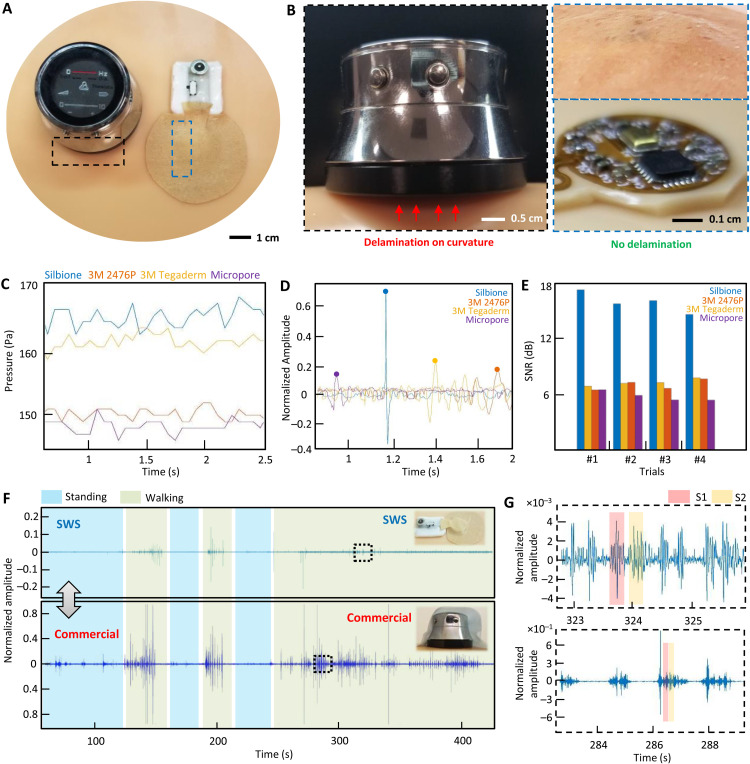

For high-quality, low-noise auscultation, it is essential to maintain the intimate contact of the wearable microphone system to the skin, even with movements in daily life. A comprehensive set of experimental studies in Fig. 2 captures the novelty of an SWS in ensuring skin-conformable contact and controlling motion artifacts. A photo in Fig. 2A shows the side-by-side comparison between a miniaturized SWS and a commercial digital stethoscope [ThinkLabs One (TLO)]. The commercial device’s biggest limitation is the rigidity of the materials and electronic packaging, causing notable air gaps and delamination on the curved human skin (Fig. 2B). As expected, the conventional classical stethoscope (fig. S8) has a similar form factor and rigidity to the digital stethoscope. On the other hand, the thin and flexible SWS makes conformable contact with the skin. Furthermore, when the top enclosure is removed, the microphone island unit shows great skin contact with unnoticeable air gaps, providing high-quality sound recording. An experimental study in Fig. 2C, using four types of thin-film membranes, finds the optimal backing substrate to provide high pressure for enhanced device-skin contact. By using a miniaturized pressure sensor (SingleTact), we measured pressure values from membranes, including silbione substrate, 3M 2476P tape, 3M Tegaderm tape, and 3M Micropore tape. Details of the experimental setup are shown in fig. S9. When four types of devices measure heart sounds, the silbione-based device delivers the best S1 sound quality with a normalized amplitude over 0.6 (Fig. 2D). Clinically, S1 corresponds to the pulse; S1 usually is a single sound because mitral and tricuspid valve closure occurs almost simultaneously. A graph in Fig. 2E summarizes SNR values of those devices with four trials, which clearly captures the highest contact quality of the silbione case with an average value of 16 dB. An additional experimental study in Fig. 2F compares the sound recording performance between the SWS and the commercial TLO. With both devices mounted on the chest, a healthy subject performs different activities, including standing and walking, while the sounds are recorded for more than 5 min. As represented in the zoomed-in view (Fig. 2G), the SWS with skin-conformable contact (top graph) clearly shows S1 and S2 peaks, while the commercial one (bottom graph) shows substantial motion artifacts because of the device’s rigidity and weight. In addition, the SWS has waterproof capability, as summarized in fig. S10, showing the possibility of using the device in various daily actions, including showers. Even with water-flowing conditions, the SWS could clearly detect S1 and S2 peaks. The SWS also has great breathability, allowing enhanced air permeation through the substrate. Materials with high water vapor transmission allow the skin to breathe better. The summarized data in fig. S11 demonstrate that the SWS has the best gas permeability among different membranes, offering long-term use without causing skin breakdown. The skin-friendly SWS shows how well the device can be used for multiple days with the subject’s different actions in daily life, including desk work, business meetings with talking, sleeping, and exercising (fig. S12). This subject used the same device for 3 days with a multihour sound recording (fig. S13) with adhesion values changing over time, without any adverse effects observed during the test.
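The SNR comparisons above can be illustrated with a short calculation. The text does not state the exact formula used, so the following is a minimal sketch assuming the common amplitude-based definition, 20·log10(RMS of signal / RMS of noise). The function names and the synthetic waveforms are hypothetical and are not data from the study.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def snr_db(signal_segment, noise_segment):
    """SNR in decibels, assuming the definition 20 * log10(RMS_signal / RMS_noise)."""
    return 20.0 * math.log10(rms(signal_segment) / rms(noise_segment))

# Synthetic stand-ins (assumptions): a burst in place of an S1 peak and a
# baseline segment with one-tenth of its amplitude in place of background noise.
fs = 1000  # hypothetical sampling rate in Hz
s1_like = [math.sin(2 * math.pi * 50 * n / fs) for n in range(200)]
baseline = [0.1 * s for s in s1_like]

example_snr = snr_db(s1_like, baseline)  # amplitude ratio of 10 corresponds to 20 dB
```

Under this definition, each 6 dB of SNR corresponds to roughly a doubling of the signal-to-noise amplitude ratio, which gives a sense of scale for the differences between backing materials reported in Fig. 2E.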

Fig. 2. Mechanics, optimization, and control of motion artifacts with an SWS.

(A) Photo comparing an SWS with a commercial device (TLO digital stethoscope) on the skin model. (B) Comparison of skin contact quality between the commercial rigid stethoscope (left) showing delamination from the skin due to the 45° curvature and the SWS showing intimate contact. (C) Difference of pressure applied on the microphone island using various biocompatible adhesives, including silbione, 3M 2476P, 3M Tegaderm, and micropore. (D) Time-series graph versus normalized amplitude for the S1 peak from the heart sounds using different adhesives. (E) Calculated SNR from S1 peaks from (D); there are four trials. (F) Time-series graph of the SWS versus the commercial device (TLO) when both are mounted on the chest; this subject conducts different activities, including standing and walking while recording the sounds. (G) Zoomed-in graphs for part of the noise peaks caused by walking; the SWS with skin-conformable contact (top graph) clearly shows S1 and S2 peaks, while the commercial one (bottom graph) shows step-noise amplified compared to the heart sounds.

Fully portable, continuous monitoring of cardiac sounds in daily life

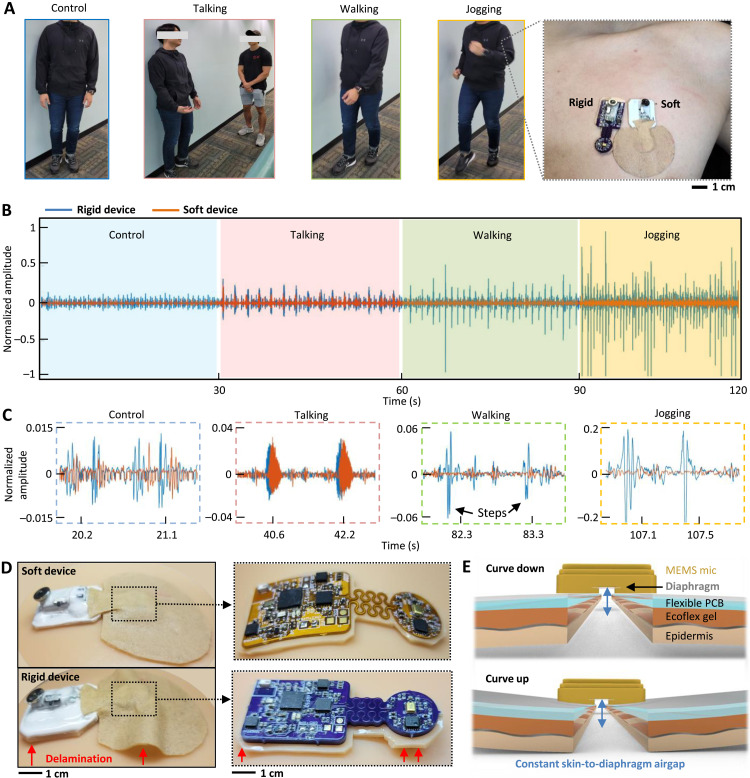

Various types of daily activities have different sources of noise that can negatively affect the recording of sounds with an SWS. The existing manual acoustic stethoscopes and commercial digital ones suffer from notable noise and motion artifacts. That is why auscultations are performed with patients during the resting state only. Unlike those devices, an SWS can successfully handle and control motion artifacts with the device form factor and maintained skin-contact quality. A set of experiments in Fig. 3A shows a subject mimicking various real-life situations, including standing still (control example), talking with another person, walking, and jogging. This study aimed to demonstrate how a rigid electronic circuit affects the measured sound quality. Because no commercial wearable stethoscope is available, we fabricated our own rigid-version device, which was compared to the soft device side by side (Fig. 3A, right photo). A graph in Fig. 3B summarizes cardiac sounds measured by both devices in different activities (control, talking, walking, and jogging). As a result, the soft device shows motion artifact–controlled data with negligible noise, while the rigid one has corrupted data because of device delamination from motions. Detailed signals in Fig. 3C capture clear cardiac signals, showing that the movements from walking and jogging cause noise issues for the rigid one, while the soft device shows negligible effects. Photos in Fig. 3D compare two devices (top: soft one and bottom: rigid one) mounted on a soft skin model where the soft device shows conformable contact while the rigid device shows delamination (bottom-left and bottom-right photos; magnified view of device structures without the top encapsulation).

Fig. 3. Fully portable, continuous monitoring of cardiac sounds in daily life.

(A) Series of photos showing different daily activities, including standing-control, talking, walking, and jogging. To demonstrate the device’s performance, the SWS is compared with a rigid device (right photo). (B) Graph showing cardiac sounds measured by both devices during different activities (control, talking, walking, and jogging). The SWS shows motion-artifact controlled data with negligible noise, while the rigid device has corrupted data because of device delamination from motions. (C) Zoomed-in data of measured cardiac sounds; motions from walking and jogging cause noise issues for the rigid one, while the SWS shows negligible effects. (D) Photos comparing two devices (top: SWS and bottom: rigid one) mounted on a soft skin model where the SWS shows conformable contact while the rigid device shows delamination (left photos), and magnified view of device structures without the top encapsulation (right photos). (E) Detailed design of the SWS showing skin-conformal contact for minimizing changes in skin-to-diaphragm air gaps.

Another crucial part of motion-artifact control is to minimize changes in the air gap between the skin and the diaphragm inside the microphone (Fig. 3E) because the gap acts as an acoustic capacitance converting pressure waves to electrical signals (22). As demonstrated earlier, the soft device offers skin-conformal lamination to withstand any air gap changes during different activities, aided by soft gel layers. On the other hand, the conventional rigid device still suffers from motion noise when the subject walks and runs downstairs (details of the device comparison in movie S1). Continuous delamination of the rigid device exaggerates changes of skin-to-diaphragm air-gap capacitance, hence decreasing signal quality because of absorption layers being impractically thick. These factors maximize the motion artifact noise (sample sounds in movie S2), causing low-quality recording of heart and lung sounds. In contrast, the soft device, having excellent skin contact, could minimize the air acoustic impedance between the epidermis and the diaphragm inside the microphone. Additional filtering of the first-level cutoff frequencies was used to remove the unwanted high-frequency noise (22) caused by motions, speech sounds, and beeping sounds in clinical settings.
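The text does not specify the type or order of the filter used to remove high-frequency noise. As a hedged illustration of the idea only, the sketch below applies a single-pole low-pass filter, which is an assumption and not the filter used in the study. The cutoff frequency, sampling rate, and test tones are all hypothetical values chosen for the example.

```python
import math

def low_pass(samples, cutoff_hz, fs_hz):
    """Single-pole IIR low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    dt = 1.0 / fs_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # time constant of the equivalent RC filter
    alpha = dt / (rc + dt)
    out = []
    y = samples[0]  # start from the first sample to limit the start-up transient
    for x in samples:
        y = y + alpha * (x - y)
        out.append(y)
    return out

def peak(samples):
    """Largest absolute sample value."""
    return max(abs(s) for s in samples)

fs = 8000  # hypothetical sampling rate in Hz
tone_low = [math.sin(2 * math.pi * 100 * n / fs) for n in range(fs)]    # in-band tone
tone_high = [math.sin(2 * math.pi * 3000 * n / fs) for n in range(fs)]  # out-of-band tone

low_out = low_pass(tone_low, cutoff_hz=500, fs_hz=fs)
high_out = low_pass(tone_high, cutoff_hz=500, fs_hz=fs)

# Compare steady-state amplitudes over the second half of each output.
low_gain = peak(low_out[fs // 2:])
high_gain = peak(high_out[fs // 2:])
```

With these example values, the 100-Hz tone passes almost unchanged while the 3-kHz tone is strongly attenuated. A higher-order design would give a sharper transition between the pass band and the stop band.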

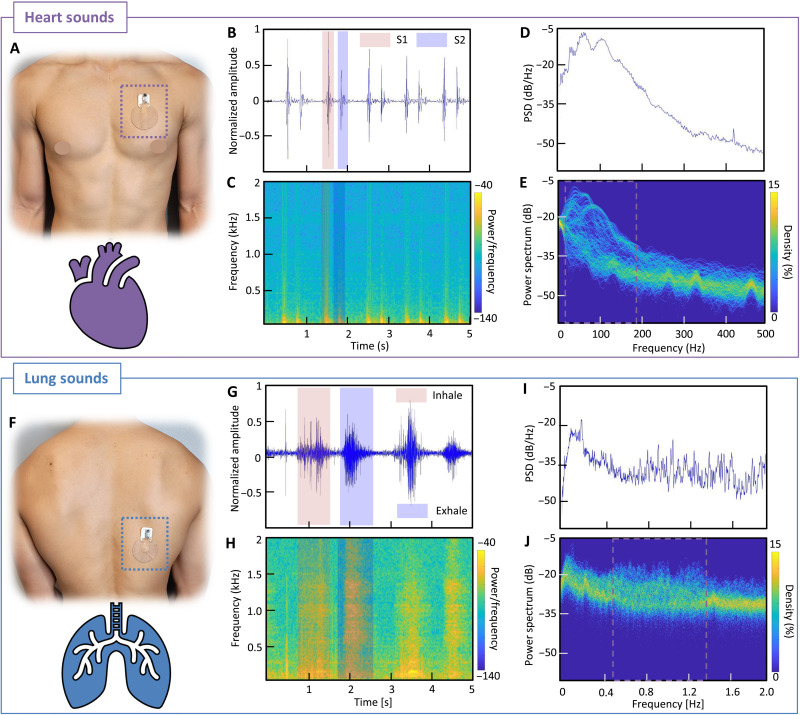

Device performance in the monitoring of heart and lung sounds

Heart sounds are produced by the opening and closing of the valves in the heart. The closing of the mitral and tricuspid valves creates the S1 sound, which marks the start of systole. The closing of the pulmonic and aortic valves, which marks the start of diastole, creates the S2 sound, which is louder (23). These heart sounds typically range from 20 to 220 Hz, which a microphone can detect with high sensitivity (movie S3). The summarized set of data in Fig. 4 captures the performance of an SWS for high-quality recording of heart sounds as well as lung sounds. When the device is mounted on the chest (Botkin-Erb point; Fig. 4A), S1 and S2 sounds are clearly captured and differentiated, as summarized in Fig. 4B. The gathered information, including magnitude, shape, S1-S2 distance, and size relativity, is used to develop a machine learning algorithm for generating an individual profile. A spectrogram in Fig. 4C displays the power of the frequencies based on color. The yellow-highlighted portions in the graph show S1 and S2 waves, and their frequency ranges are around 110 Hz, as expected. Overall, the soft wearable device shows excellent performance in high-quality detection of sounds and could detect slight sound irregularities that might be indistinguishable in manual listening or invisible to the eye in the raw data. A graph showing Welch’s power spectrum density in Fig. 4D displays the peak magnitudes in the 90-to-150 Hz range. Last, the measured signal is analyzed with a persistence spectrum, a time-frequency view showing the percentage of time that a specific frequency is present in the signal (Fig. 4E). The greater the time percentage, the brighter the color and the more persistently that frequency appears in the signal. Compared with the spectrogram, brighter colors here represent unwanted noise from the surroundings, while the wanted frequencies appear as the lower cluster of curves in the 20-to-180 Hz range for heart sounds.

Fig. 4. Device performance in the monitoring of heart and lung sounds.

(A) Photo of the SWS mounted on the chest (Botkin-Erb point) for heart sound detection. (B) Time-series plot of the 5-s window heart sounds, measured from a healthy subject. (C) Spectrogram of the time-series plot showing the frequency range of the measured signals. (D) Welch-plotted power spectrum density (PSD) over the heart frequency range from 0 to 500 Hz. (E) Persistence spectrum of the heart sounds showing the percentage of time that heart frequency is present in the signals around 20 to 180 Hz. Compared with the spectrogram, brighter colors represent unwanted noises in the surrounding. (F) Photo of the SWS mounted on the back (right lower lobe point) for lung sound detection. (G) Time-series plot of the 5-s window lung sounds from a healthy subject with normal breathing. (H) Spectrogram of the time-series plot showing the frequency range of the lung signals. (I) Welch-plotted PSD over the lung frequency range from 0 to 2 kHz. (J) Persistence spectrum of the lung sounds showing the percentage of time that lung frequency is present in the signals around 450 to 1350 Hz.

In addition, the same device was used to measure lung sounds on the back (right lower lobe; Fig. 4F). Lung sounds are produced by air running in and out of the bronchial tree inside one’s lungs. The shape of the chest cavity, the number of bones, the thickness of skin and layers of fat, and the density of the muscles all can affect the sound and pitch of lung sounds (24). The frequency of lung sounds ranges from 100 to 2500 Hz, and tracheal sounds can reach up to 4000 Hz (movie S4) (24). Despite all these variabilities, the sensitivity of the SWS is excellent, which allows for clear recording and detection of various types of breathing issues (24). Similar to the heart sound analysis, the measured lung sounds by the SWS go through multiple analysis processes as described in Fig. 4 (G to J). We also used the fast Fourier transform for the heart and lung sounds, as summarized in fig. S14, showing discrete transform values in the frequency domain to detect the power from 0 to 2 kHz. For reliability validation, we compared the recorded data from the SWS with the commercial digital stethoscope (TLO; fig. S15). For simultaneous sound detection, both devices were mounted on a biomimetic skin model with an embedded speaker, playing sounds from 20 to 750 Hz. Overall, the SWS shows better performance in collecting lower-frequency range (from 36 Hz), compared to 48 Hz for TLO.
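The frequency-domain analyses described for Fig. 4 (Welch power spectrum density and the Fourier transform) can be sketched as follows. This is a minimal illustration of Welch's method under stated assumptions: a Hann window, 50% overlap, a segment length of 128 samples, relative rather than calibrated scaling, and a synthetic 110-Hz tone standing in for a heart sound. None of these parameters come from the study.

```python
import cmath
import math

def welch_psd(samples, fs_hz, seg_len=128, overlap=0.5):
    """Average Hann-windowed periodograms over overlapping segments.

    Returns (frequencies in Hz, power estimates for bins 0..seg_len/2).
    The scaling is relative; one-sided doubling is not applied.
    """
    if len(samples) < seg_len:
        raise ValueError("signal is shorter than one segment")
    step = max(1, int(seg_len * (1.0 - overlap)))
    window = [0.5 - 0.5 * math.cos(2.0 * math.pi * n / seg_len) for n in range(seg_len)]
    win_power = sum(w * w for w in window)
    n_bins = seg_len // 2 + 1
    psd = [0.0] * n_bins
    n_segments = 0
    for start in range(0, len(samples) - seg_len + 1, step):
        seg = [samples[start + n] * window[n] for n in range(seg_len)]
        for k in range(n_bins):
            # Direct DFT of bin k; slow but adequate for a short sketch.
            acc = sum(seg[n] * cmath.exp(-2j * math.pi * k * n / seg_len)
                      for n in range(seg_len))
            psd[k] += abs(acc) ** 2 / (fs_hz * win_power)
        n_segments += 1
    freqs = [k * fs_hz / seg_len for k in range(n_bins)]
    return freqs, [p / n_segments for p in psd]

# Synthetic example (assumption): a 110-Hz tone sampled at 1 kHz.
fs = 1000
tone = [math.sin(2.0 * math.pi * 110.0 * n / fs) for n in range(1024)]
freqs, psd = welch_psd(tone, fs)
peak_freq = freqs[psd.index(max(psd))]  # lands within one bin width of 110 Hz
```

Averaging over overlapping segments trades frequency resolution (here fs/128, about 7.8 Hz per bin) for a smoother estimate. In practice, a library routine with a fast Fourier transform would replace the direct sum.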

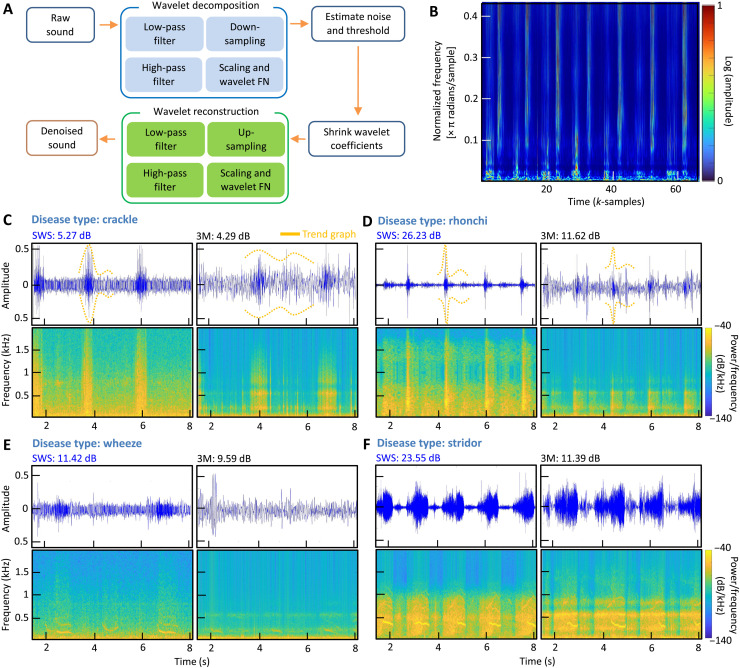

Wavelet denoising algorithm to analyze heart and lung sounds

Wavelet transformation of heart and lung sound signals and noise filtering are crucial in this study because the microphone captures all sounds from the body and the surroundings. Wavelet denoising using a threshold algorithm is one of the most powerful methods for suppressing noise in digital signals. In auscultation, determining threshold values for heart and lung sounds is critical in the wavelet threshold denoising method. A small threshold value may not eliminate all the noisy coefficients, while a large threshold sets more coefficients to zero, removing features from the decomposed data (23). A flowchart in Fig. 5A shows how the wavelet denoising algorithm works through decomposition and reconstruction of collected sounds. This study used two filter banks to remove surrounding noise from auscultated data: the analysis filter bank and the synthesis filter bank (fig. S16A). The analysis filters decompose the input heart and lung sounds into down-sampled subbands, and the synthesis filter bank reconstructs the original heart and lung sound data after upsampling. After an audio signal is read, the algorithm adds Gaussian noise to the raw signal to form a noisy signal. After the SNR and the root mean square error (RMSE) are calculated for the noisy signal, the threshold value for wavelet thresholding is calculated as the SNR over the RMSE value, also depending on the noise intensity and the decomposition stage. This thresholding is applied to the decomposed wavelet coefficients, and a soft threshold is used for lung auscultation (24). The soft thresholding provides a consistent difference between the reconstructed and the original signals, causing sharp sounds to be smoothed. The last step is to reconstruct the lung sound signals by feeding the soft-thresholded wavelet coefficients into the synthesis filter bank. The wavelet-denoised breathing data are displayed in Fig. 5B, capturing various abnormalities from measured lung sounds and displaying normalized frequency for each sample in π radians per sample. Each wavelet of the denoised lung sounds is summarized in fig. S16B. Similarly, heart sounds follow the same process in the analysis, as summarized in fig. S16C. We applied the wavelet denoising algorithm to multiple patient datasets, measured and compared by an SWS and a commercial stethoscope (3M Littmann). A set of summarized graphs in Fig. 5 (C to F) shows calculated SNR values for patients with lung disease, including crackle, rhonchi, wheeze, and stridor. In this comparison, the left column of each dataset shows collected sounds from an SWS, while the right column shows the measured data from the commercial 3M device. In addition, filter frequency spectrograms, shown in fig. S17, compare the denoised signal quality between the new device and the commercial one. To sum up, the experimental study using two devices demonstrates the superior performance of the soft wearable system by offering enhanced detection of lung sounds and abnormalities from various diseases. Table S1 summarizes the details of the measured SNR from two devices with patient information and their symptoms (movies S5 to S8 playing various symptomatic sounds).
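The decomposition, soft thresholding, and reconstruction steps described above can be sketched in a few lines. This is a minimal illustration under stated assumptions and is not the study's implementation: it uses the Haar wavelet as the analysis and synthesis filter pair, a fixed threshold passed in by the caller (the SNR-over-RMSE rule is not reproduced because the text does not give it in full), and a synthetic sine wave with added Gaussian noise. All function names are hypothetical.

```python
import math
import random

def haar_analysis(x):
    """One analysis step: split x into down-sampled approximation and detail subbands."""
    s = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / s for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / s for i in range(0, len(x), 2)]
    return approx, detail

def haar_synthesis(approx, detail):
    """One synthesis step: upsample and recombine the two subbands."""
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.append((a + d) / s)
        out.append((a - d) / s)
    return out

def soft_threshold(c, t):
    """Shrink a coefficient toward zero by t; coefficients with |c| <= t become zero."""
    return math.copysign(max(abs(c) - t, 0.0), c)

def wavelet_denoise(x, levels, threshold):
    """Decompose, soft-threshold the detail coefficients, and reconstruct."""
    if len(x) % (2 ** levels) != 0:
        raise ValueError("length must be divisible by 2**levels")
    approx, details = list(x), []
    for _ in range(levels):
        approx, d = haar_analysis(approx)
        details.append([soft_threshold(c, threshold) for c in d])
    for d in reversed(details):
        approx = haar_synthesis(approx, d)
    return approx

def rmse(a, b):
    """Root mean square error between two equal-length sequences."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)) / len(a))

# Synthetic example (assumption): a slow sine wave plus Gaussian noise.
random.seed(0)
clean = [math.sin(2.0 * math.pi * n / 256.0) for n in range(1024)]
noisy = [c + random.gauss(0.0, 0.2) for c in clean]
denoised = wavelet_denoise(noisy, levels=3, threshold=0.5)
```

With a threshold of zero the filter bank reconstructs the input exactly, which is a useful sanity check before tuning the threshold. Raising the threshold removes more noise but also smooths sharp features, which is the trade-off described in the text.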

Fig. 5. Overview of a wavelet denoising algorithm to analyze heart and lung sounds.

(A) Schematic illustration of the flow showing how the wavelet denoising algorithm works through decomposition and recomposition of collected sounds. (B) Scalograms of various abnormalities from measured lung sounds, displaying normalized frequency for each sample in π radians per sample. All sample data are fed into the wavelet decomposition of the denoising algorithm. (C) Crackle patient data with trend-graphing showing denoised SWS data (left column) compared with measured sounds from a commercial stethoscope (3M Littmann; right column) and the corresponding SNR values. (D) Rhonchi patient data with trend-graphing and SNR values. (E) Wheeze patient data with trend-graphing and SNR values. (F) Stridor patient data with trend-graphing and SNR values.

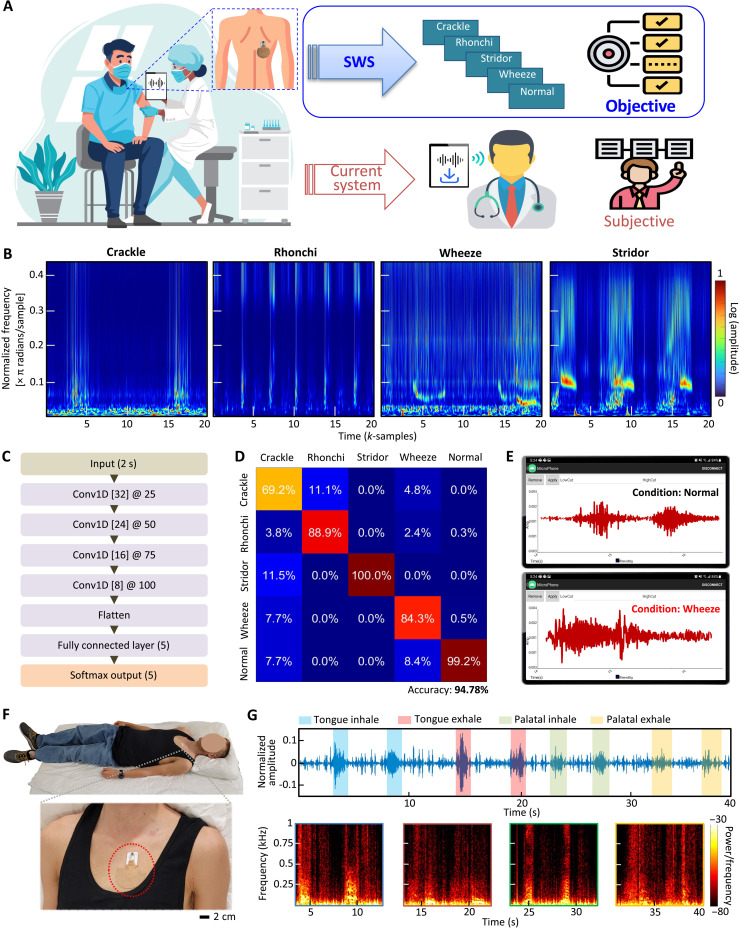

Automatic disease diagnosis via CNN-based machine learning, embedded in the SWS

When necessary, physicians or clinicians use conventional digital stethoscopes to collect sound signals from patients. However, as described in Fig. 6A, this approach has an inherent problem: the auscultation analysis of the collected sounds is subjective. For example, pulmonologists who diagnose and treat respiratory diseases may provide different diagnostic opinions, depending on the quality or duration of recorded sounds. Here, the recently developed SWS has a crucial advantage with the capabilities of noise-controlled, continuous, real-time recording of high-quality sounds, quantitative data analysis, and automated objective classification of diseases based on machine learning (e.g., lung abnormalities like crackle, rhonchi, wheeze, and stridor). Scalograms of various lung abnormalities in Fig. 6B show sample-series graphs displaying normalized frequency for each sample in π radians per sample. The data are divided into training and test sets using a 75 to 25% split, ensuring that the training and test sets do not overlap. This test is repeated four times as part of fivefold cross-validation. Each sample is clustered into 2-s packets and fed into CNN-based machine learning. To additionally show wavelet-transformed heart sounds, 20-s heart sound data were also analyzed with derived heart rate for further application (fig. S18). The flowchart in Fig. 6C shows a spatial CNN model featuring four layers of one-dimensional convolutions with filters of decreasing size, followed by flattening, a fully connected layer, and Softmax output. The training set was split into a fivefold cross-validation scheme. Data were divided into 8000 8-s windows, sampled at 250 samples/s. During training, the validation accuracy was evaluated at each epoch, and training was stopped if the validation accuracy did not improve over 10 successive epochs. Data were gathered from 20 patients with various lung abnormalities. The classification results (confusion matrix) in Fig. 6D capture the machine learning’s performance with an accuracy of 94.78% with five classes; the four abnormal lung sound classes are compared with the normal case. When we compared the CNN method with the support vector machine approach in signal classification, the CNN classification results showed higher accuracies for both 2- and 4-s windows (details in fig. S19). The automated detection of sound abnormality can be readily used in a mobile app platform for patients during daily activities (Fig. 6E); the smartphone app with the embedded machine learning shows real-time lung sound waves while detecting an abnormal condition like a wheeze. Movie S9 demonstrates real-time classification in the mobile app for various simulated abnormal lung sounds.
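The stopping rule described above (training ends once the validation accuracy has not improved for 10 successive epochs) can be illustrated with a short sketch. The function name and the list-of-accuracies interface are hypothetical; the study's training code is not given in the text, so this only shows the rule itself.

```python
def early_stopping_epoch(val_accuracies, patience=10):
    """Return the 1-based epoch at which training would stop.

    Training stops once `patience` successive epochs pass without the
    validation accuracy exceeding its best value so far. Returns None if
    the rule never triggers. (Hypothetical helper, not the study's code.)
    """
    best = float("-inf")
    epochs_without_improvement = 0
    for epoch, acc in enumerate(val_accuracies, start=1):
        if acc > best:
            # New best validation accuracy: reset the patience counter.
            best = acc
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None


# Illustrative history: accuracy peaks at epoch 3, then plateaus below the peak.
history = [0.50, 0.60, 0.70] + [0.65] * 12
stop_epoch = early_stopping_epoch(history, patience=10)
```

With this illustrative history, the best value occurs at epoch 3, epochs 4 to 13 bring no improvement, and the rule stops training at epoch 13.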

Fig. 6. Automatic disease diagnosis via CNN-based machine learning embedded in the SWS.

(A) Schematic illustration showing the overall flow of clinical data collection with the SWS compared with the conventional digital stethoscope or manual listening with subjective diagnosis. As highlighted, the SWS offers portable, continuous auscultation that can be used to automatically diagnose multiple lung diseases, with the results delivered to clinicians in real time. (B) Scalogram of crackle, rhonchi, wheeze, and stridor data in sample series versus normalized frequency with density for each sample. Each sample is clustered into 2-s packets and fed into machine learning. (C) Flowchart showing a spatial CNN model featuring four layers of one-dimensional convolutions with filters of decreasing size, followed by flattening, a fully connected layer, and Softmax output. (D) A confusion matrix representing results from multiple patients, with four classified types of lung diseases compared to the normal case; the overall accuracy with five classes is 94.78%. (E) Mobile application showing real-time auscultation data and automatic detection of abnormal signals and corresponding classification based on machine learning. (F) Photos showing a subject who is sleeping with the SWS mounted on the skin. (G) Spectrograms of mocked snoring sounds, 10 s for each snoring type, decomposing the sound signal into its frequency content and indicating different patterns, including tongue and palatal snoring.

The unique advantages of the SWS in terms of the form factor, portability, and high-quality sound recording offer the potential for applications in a sleep study, as demonstrated in Fig. 6, F and G. The soft device, mounted on the chest, successfully measures and collects snoring sounds in frequency ranges separated from those of heart sounds (movie S10). Sleep-disordered breathing, such as snoring, is linked to cardiovascular illness, including heart failure, hypertension, and increased arrhythmias (25). The timing of snoring relative to the inspiration period can reveal the anatomical origin of snoring: the tongue or the soft palate, during inhale or exhale (26). Compared to soft palate snoring, tongue snoring shows uneven timing relative to the breathing cycle and inconsistent frequency ranges in the spectrograms, indicating obstructive sleep apnea that needs to be screened for treatment. Furthermore, snoring has been linked to respiratory symptoms, including wheezing and chronic bronchitis. Those with asthma and sleep-disordered breathing have poorer sleep quality and decreased nocturnal oxygen saturation (27). The snoring sounds collected in Fig. 6G are mocked data, 10 s for each snoring type, indicating different patterns, including tongue and palatal snoring. Tongue snoring during inhale has a distinct range of power in frequency from 0 to 500 Hz as well as distinct peaks ranging from 500 Hz to 1 kHz, followed by decreasing signal power during exhale. On the other hand, tongue snoring during exhale shows a gradual increase of power from the inhale, ranging up to 250 Hz, capturing distinct signal peaks. This measurement also presents palatal snoring during inhale. Compared to tongue snoring, it shows similar signal power throughout the frequency range during inhale, except in the range of 350 to 400 Hz. Overall, this pilot study demonstrates the soft device’s potential for more accurate at-home sleep monitoring by simultaneously monitoring cardiopulmonary sounds and electrophysiological signals.

DISCUSSION

This paper reports a comprehensive study, including soft material engineering, noise reduction mechanisms, flexible mechanics, signal processing, and algorithm development, to realize fully portable, continuous, real-time auscultation with a wearable stethoscope. The soft auscultation system demonstrates continuous cardiopulmonary monitoring with multiple human subjects in various daily activities. The computational mechanics study offers a key design guide for developing a soft wearable system that maintains mechanical reliability over multiple uses with bending and stretching. Optimizing the system packaging using biocompatible elastomers and soft adhesives allows for skin-friendly, robust adhesion to the body while minimizing motion artifacts through stress distribution and conformable lamination. The soft device demonstrates precise detection of high-quality cardiopulmonary sounds even during the subject’s different activities. Compared with commercial digital stethoscopes, the SWS using a wavelet denoising algorithm shows superior performance, as validated by the enhanced SNR for detecting four lung diseases. Deep learning integration with the SWS demonstrates a successful application in a clinical study where the soft stethoscope is used for continuous, wireless auscultation with multiple patients. The results show automatic detection and diagnosis of four different types of lung diseases (crackle, wheeze, stridor, and rhonchi) with about 95% accuracy for five classes. The proposed design and system integration technology align well with micromachining and nanomanufacturing processes, offering a high technology readiness level. Future studies will focus on a large-group clinical trial with the SWS to automatically diagnose cardiopulmonary diseases while providing continuous, digital, real-time auscultation for advancing digital and smart healthcare. In addition, integrating the SWS, which uses a highly sensitive microphone, with other sensing modalities would expand its applications toward a next-generation biometric security system using personalized physiological signals.

MATERIALS AND METHODS

Device encapsulation

A soft elastomer gel (Ecoflex, Smooth-On) was used as a base adhesion layer for the SWS. A mixture of the gel was spin coated to form a thin layer, and the integrated circuit was placed on top of the gel layer. A silicone gel (High-Tack Silicone Gel, Factor II) was used on a fabric layer (3M 9907 T). The fabric was cut into a circle on top of the encapsulated microphone island to apply better pressure on the microphone.

Study of mechanical reliability

Finite element analysis was performed using the commercial software ABAQUS to identify an ideal design of the device. For validation, we conducted an experimental study with a motorized force tester (ESM303, Mark-10) while applying cyclic stretching and bending.

Study of device performance

The frequency range of the SWS was tested simultaneously with the commercial device (TLO) on a speaker playing from 20 Hz to 2 kHz. While the SWS could collect sounds from 36 Hz upward, the commercial one was limited to 48 Hz and above; overall, the SWS shows a wider range of frequency collection.

Collection of sound data

The SWS was mounted on Botkin-Erb’s point (28), located in the third intercostal space, for cardiac sounds and on the right lower lobe point for pulmonary sounds. Each recording session lasted from 30 s up to 2 min. Testing subjects were asked to do different activities, including standing (control activity), talking, walking, and jogging.

Data analysis

We used MATLAB to analyze measured signals. The signal processing involved a second-order bandpass filter; then, a wavelet denoising algorithm was applied. Waveforms and spectrograms were plotted using a Hamming window passed to the spectrogram function.
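As a rough illustration of the band-pass and Hamming-window spectrogram steps, the following Python sketch uses SciPy in place of MATLAB. Several details are assumptions rather than values from the study: the cutoff frequencies (20 Hz and 1 kHz), the zero-phase filtering, the spectrogram segment length, and the mapping of "second-order" onto the design order passed to `butter`. The wavelet denoising step is omitted, and the input is a synthetic signal.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, spectrogram


def bandpass(x, fs, low_hz, high_hz, order=2):
    """Butterworth band-pass applied forward and backward (zero phase).

    Note: for a band-pass design, SciPy's butter(N, ...) yields 2N poles;
    which convention the original MATLAB code used is not stated.
    """
    sos = butter(order, [low_hz, high_hz], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x)


def hamming_spectrogram(x, fs, nperseg=256, noverlap=128):
    """Spectrogram with a Hamming window; returns (freqs, times, power)."""
    return spectrogram(x, fs=fs, window="hamming", nperseg=nperseg, noverlap=noverlap)


# Synthetic test signal (not study data): 200-Hz tone plus slow 5-Hz drift.
fs = 4000                                  # samples/s, as in the classification section
t = np.arange(2 * fs) / fs
x = np.sin(2 * np.pi * 200 * t) + 3.0 * np.sin(2 * np.pi * 5 * t)

y = bandpass(x, fs, low_hz=20.0, high_hz=1000.0)   # hypothetical cutoffs
f, seg_t, sxx = hamming_spectrogram(y, fs)
peak_hz = f[np.argmax(sxx.mean(axis=1))]           # dominant frequency bin
```

In this sketch, the slow drift is suppressed by the filter, and the dominant spectrogram bin falls near the 200-Hz tone.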

Classification of abnormal lung sounds

Digitized sound data were recorded at 4000 samples/s, and the corresponding data were manually segmented and labeled by clinicians. These labeled sections (normal, crackle, rhonchi, stridor, and wheeze) were segmented into equal-sized 2-s segments (8000 data points) with a 75% overlap between consecutive segments. These data were filtered using a third-order Butterworth high-pass filter with a corner frequency of 4.0 Hz to remove offset and low-frequency drift. For training, the dataset size was modified to have a similar number of samples for each class. These data were entered into a CNN featuring four layers of one-dimensional convolutions with filters of decreasing size (32, 24, 16, and 8, respectively) and an increasing number of filters (25, 50, 75, and 100, respectively). Each convolutional layer is followed by batch normalization and a max-pooling step with a filter size of 2. Last, the data were flattened and followed by a fully connected layer and Softmax output. The network variables were optimized using the Adam optimization algorithm (29, 30), and error was calculated using the cross-entropy loss function. The training was performed in a fivefold cross-validation scheme to validate performance.
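The segmentation and high-pass filtering steps can be sketched in Python as follows. The sampling rate, segment length, overlap, filter order, and corner frequency are taken from the text; the function names, the use of zero-phase filtering, and the synthetic input are assumptions made only for illustration.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 4000  # samples/s, as stated in the text


def segment(x, fs=FS, win_s=2.0, overlap=0.75):
    """Cut a labeled section into equal-sized, overlapping segments."""
    win = int(round(win_s * fs))               # 8000 data points
    hop = int(round(win * (1.0 - overlap)))    # 2000 points for 75% overlap
    if len(x) < win:
        return np.empty((0, win))
    starts = range(0, len(x) - win + 1, hop)
    return np.stack([x[s:s + win] for s in starts])


def highpass(segments, fs=FS, cutoff_hz=4.0, order=3):
    """Third-order Butterworth high-pass along the last axis.

    Zero-phase (forward-backward) filtering is an assumption.
    """
    sos = butter(order, cutoff_hz, btype="highpass", fs=fs, output="sos")
    return sosfiltfilt(sos, segments, axis=-1)


# Synthetic 10-s section (not study data): 100-Hz tone on a constant offset.
t = np.arange(10 * FS) / FS
section = 5.0 + np.sin(2 * np.pi * 100 * t)

segs = segment(section)      # 17 segments of 8000 points each
filtered = highpass(segs)    # offset removed, tone preserved
```

A 10-s section yields 17 segments because consecutive segments start 0.5 s apart; the high-pass step then removes the constant offset from each segment.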

SNR calculation

For the SNR comparison (31), data were analyzed using power spectral density estimation (Welch method) with a Hamming window for bias reduction. The entire region of inhaling and exhaling was extracted using MATLAB, and the noise was taken to be the resting interval between the inhale and exhale regions. The SNR was then calculated from the power of these signal and noise regions.
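A minimal Python sketch of such an SNR estimate is given below. It assumes the conventional decibel definition, 10 log10 of the ratio of breathing-region power to resting-region power; the exact expression used in the study is not reproduced in this text. The function names, the Welch segment length, and the synthetic signals are likewise hypothetical.

```python
import numpy as np
from scipy.signal import welch


def band_power(x, fs, nperseg=1024):
    """Total power from a Welch PSD estimate computed with a Hamming window."""
    f, pxx = welch(x, fs=fs, window="hamming", nperseg=min(nperseg, len(x)))
    return np.sum(pxx) * (f[1] - f[0])   # integrate the one-sided PSD


def snr_db(breath_region, rest_region, fs):
    """SNR in dB, assuming the conventional 10*log10 power-ratio definition."""
    return 10.0 * np.log10(band_power(breath_region, fs) / band_power(rest_region, fs))


# Synthetic example (not study data): a tone in noise vs. noise alone.
fs = 4000
rng = np.random.default_rng(0)
t = np.arange(2 * fs) / fs
rest = 0.1 * rng.standard_normal(t.size)                       # resting interval
breath = np.sin(2 * np.pi * 200 * t) + 0.1 * rng.standard_normal(t.size)

snr = snr_db(breath, rest, fs)
```

Note that the breathing region in this sketch contains signal plus noise, so the estimate is slightly higher than a pure signal-to-noise ratio.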

Derivation of heart rates

Heart rates were derived using the findpeaks function from MATLAB (32). First, we used Pan-Tompkins filtering and periodicity over a small window to ignore noisy regions. Then, we calculated the threshold to eliminate double counting of S1 and S2 peaks and to smooth the heart-rate curve.
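The peak-picking step can be sketched in Python with SciPy's `find_peaks`, used here as a stand-in for MATLAB's findpeaks. The sketch covers only the thresholding and minimum-spacing logic that prevents S1 and S2 from being counted as separate beats; the Pan-Tompkins filtering is not reproduced. The threshold fraction, the 0.4-s minimum spacing, and the synthetic envelope are assumptions.

```python
import numpy as np
from scipy.signal import find_peaks


def heart_rate_bpm(envelope, fs, min_beat_s=0.4, rel_height=0.3):
    """Estimate heart rate from a heart-sound envelope.

    `min_beat_s` enforces a minimum spacing between accepted peaks so that
    the weaker S2 following each S1 is not counted as a separate beat.
    Both parameter values are illustrative assumptions.
    """
    peaks, _ = find_peaks(
        envelope,
        height=rel_height * np.max(envelope),
        distance=int(min_beat_s * fs),
    )
    if len(peaks) < 2:
        return None
    return 60.0 / np.mean(np.diff(peaks) / fs)


# Synthetic envelope (not study data): S1 every 0.8 s, S2 0.3 s after each S1.
fs = 1000
t = np.arange(10 * fs) / fs
envelope = np.zeros_like(t)
for k in range(12):
    s1_time = 0.4 + 0.8 * k
    envelope += 1.0 * np.exp(-0.5 * ((t - s1_time) / 0.02) ** 2)          # S1
    envelope += 0.6 * np.exp(-0.5 * ((t - s1_time - 0.3) / 0.02) ** 2)    # S2

hr = heart_rate_bpm(envelope, fs)
```

Because each S2 lies within 0.4 s of a stronger S1, only the S1 peaks are kept, and the 0.8-s beat interval corresponds to 75 beats/min.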

Study with human subjects

The study involved multiple human subjects: healthy and symptomatic cases. The study involved healthy volunteers aged between 18 and 40 and was conducted by following the approved Institutional Review Board (IRB) protocol (#H21038) at Georgia Tech. In addition, for patients, the study was conducted at the Chungnam National University Hospital (CNUH) following the IRB protocol (#2020-10-092); device components were delivered to the CNUH study team, such that they finalized the investigational device to use in the human study. Before the study, all subjects agreed with the study procedures and provided signed consent forms.

Acknowledgments

Funding: We acknowledge the support from the IEN Center for Human-Centric Interfaces and Engineering at Georgia Tech, Chungnam National University Hospital Research Fund 2019, and Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health & Welfare, Republic of Korea (grant HR20C0025). Electronic devices in this work were fabricated at the Institute for Electronics and Nanotechnology, a member of the National Nanotechnology Coordinated Infrastructure, which is supported by the National Science Foundation (grant ECCS-2025462).

Author contributions: S.H.L., Y.S.K., M.-K.Y., S.-S.J., and W.-H.Y. designed the experiments. S.H.L. performed computational modeling. S.H.L. and M.M. designed the circuits and sensors. S.H.L., Y.S.K., and N.Z. performed experiments and data analysis. M.-K.Y., C.C., J.Y.H., Y.K., and S.S.J. conducted the human subject study and data analysis. S.H.L., Y.S.K., M.-K.Y., and W.-H.Y. wrote the paper.

Competing interests: S.H.L., M.-K.Y., and W.-H.Y. are inventors on a pending patent application related to this work, which was filed on 8 November 2021 under U.S. serial no. 63/276,830. The authors declare that they have no other competing interests.

Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials.

Supplementary Materials

This PDF file includes:

Figs. S1 to S19

Table S1

Other Supplementary Material for this manuscript includes the following:

Movies S1 to S10

REFERENCES AND NOTES

- 1. Liu Y., Norton J. J. S., Qazi R., Zou Z., Ammann K. R., Liu H., Yan L., Tran P. L., Jang K.-I., Lee J. W., Zhang D., Kilian K. A., Jung S. H., Bretl T., Xiao J., Slepian M. J., Huang Y., Jeong J.-W., Rogers J. A., Epidermal mechano-acoustic sensing electronics for cardiovascular diagnostics and human-machine interfaces. Sci. Adv. 2, e1601185 (2016).

- 2. Mannino D. M., Kiri V. A., Changing the burden of COPD mortality. Int. J. COPD 1, 219–233 (2006).

- 3. Arena R., Sietsema K. E., Cardiopulmonary exercise testing in the clinical evaluation of patients with heart and lung disease. Circulation 123, 668–680 (2011).

- 4. Arts L., Lim E. H. T., Van De Ven P. M., Heunks L., Tuinman P. R., The diagnostic accuracy of lung auscultation in adult patients with acute pulmonary pathologies: A meta-analysis. Sci. Rep. 10, 7347 (2020).

- 5. Kim Y., Hyon Y., Jung S. S., Lee S., Yoo G., Chung C., Ha T., Respiratory sound classification for crackles, wheezes, and rhonchi in the clinical field using deep learning. Sci. Rep. 11, 17186 (2021).

- 6. Athanazio R., Airway disease: Similarities and differences between asthma, COPD and bronchiectasis. Clin. (Sao Paulo) 67, 1335–1343 (2012).

- 7. Nath M., Srivastava S., Kulshrestha N., Singh D., Detection and localization of S1 and S2 heart sounds by 3rd order normalized average Shannon energy envelope algorithm. Proc. Inst. Mech. Eng. H 235, 615–624 (2021).

- 8. Maganti K., Rigolin V. H., Sarano M. E., Bonow R. O., Valvular heart disease: Diagnosis and management. Mayo Clin. Proc. 85, 483–500 (2010).

- 9. Tseng Y.-L., Ko P.-Y., Jaw F.-S., Detection of the third and fourth heart sounds using Hilbert-Huang transform. Biomed. Eng. Online 11, 8 (2012).

- 10. Verghese A., Charlton B., Cotter B., Kugler J., A history of physical examination texts and the conception of bedside diagnosis. Trans. Am. Clin. Climatol. Assoc. 122, 290–311 (2011).

- 11. Lee S. H., Kim Y.-S., Yeo W.-H., Advances in microsensors and wearable bioelectronics for digital stethoscopes in health monitoring and disease diagnosis. Adv. Healthc. Mater. 10, e2101400 (2021).

- 12. Ramanathan A., Zhou L., Marzbanrad F., Roseby R., Tan K., Kevat A., Malhotra A., Digital stethoscopes in paediatric medicine. Acta Paediatr. 108, 814–822 (2019).

- 13. C. Aguilera-Astudillo, M. Chavez-Campos, A. Gonzalez-Suarez, J. L. Garcia-Cordero, in 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (IEEE, 2016).

- 14. X. Liu, W. Ser, J. Zhang, D. Y. T. Goh, in 2015 10th International Conference on Information, Communications and Signal Processing (ICICS) (IEEE, 2015).

- 15. Melbye H., Garcia-Marcos L., Brand P., Everard M., Priftis K., Pasterkamp H., Wheezes, crackles and rhonchi: Simplifying description of lung sounds increases the agreement on their classification: A study of 12 physicians’ classification of lung sounds from video recordings. BMJ Open Respir. Res. 3, e000136 (2016).

- 16. Aviles-Solis J. C., Storvoll I., Vanbelle S., Melbye H., The use of spectrograms improves the classification of wheezes and crackles in an educational setting. Sci. Rep. 10, 8461 (2020).

- 17. M. Grønnesby, J. C. A. Solis, E. Holsbø, H. Melbye, L. A. Bongo, Feature extraction for machine learning based crackle detection in lung sounds from a health survey. arXiv:1706.00005v2 (2017).

- 18. Chung H. U., Rwei A. Y., Hourlier-Fargette A., Xu S., Lee K., Dunne E. C., Xie Z., Liu C., Carlini A., Kim D. H., Ryu D., Kulikova E., Cao J., Odland I. C., Fields K. B., Hopkins B., Banks A., Ogle C., Grande D., Park J. B., Kim J., Irie M., Jang H., Lee J., Park Y., Kim J., Jo H. H., Hahm H., Avila R., Xu Y., Namkoong M., Kwak J. W., Suen E., Paulus M. A., Kim R. J., Parsons B. V., Human K. A., Kim S. S., Patel M., Reuther W., Kim H. S., Lee S. H., Leedle J. D., Yun Y., Rigali S., Son T., Jung I., Arafa H., Soundararajan V. R., Ollech A., Shukla A., Bradley A., Schau M., Rand C. M., Marsillio L. E., Harris Z. L., Huang Y., Hamvas A., Paller A. S., Weese-Mayer D. E., Lee J. Y., Rogers J. A., Skin-interfaced biosensors for advanced wireless physiological monitoring in neonatal and pediatric intensive-care units. Nat. Med. 26, 418–429 (2020).

- 19. Zhang S., Zhang R., Chang S., Liu C., Sha X., A low-noise-level heart sound system based on novel thorax-integration head design and wavelet denoising algorithm. Micromachines 10, 885 (2019).

- 20. Herbert R., Lim H.-R., Yeo W.-H., Printed, soft, nanostructured strain sensors for monitoring of structural health and human physiology. ACS Appl. Mater. Interfaces 12, 25020–25030 (2020).

- 21. Gupta P., Moghimi M. J., Jeong Y., Gupta D., Inan O. T., Ayazi F., Precision wearable accelerometer contact microphones for longitudinal monitoring of mechano-acoustic cardiopulmonary signals. npj Digit. Med. 3, 19 (2020).

- 22. Feiertag G., Winter M., Bakardjiev P., Schröder E., Siegel C., Yusufi M., Iskra P., Leidl A., Determining the acoustic resistance of small sound holes for MEMS microphones. Procedia Eng. 25, 1509–1512 (2011).

- 23. M. A. Ali, P. M. Shemi, in 2015 International Conference on Power, Instrumentation, Control and Computing (PICC) (IEEE, 2015).

- 24. B. Ergen, “Signal and Image Denoising Using Wavelet Transform,” in Advances in Wavelet Theory and Their Applications in Engineering, Physics and Technology (InTech, 2012), pp. 503–504.

- 25. Budhiraja R., Budhiraja P., Quan S. F., Sleep-disordered breathing and cardiovascular disorders. Respir. Care 55, 1322–1332 (2010).

- 26. Lee K., Ni X., Lee J. Y., Arafa H., Pe D. J., Xu S., Avila R., Irie M., Lee J. H., Easterlin R. L., Kim D. H., Chung H. U., Olabisi O. O., Getaneh S., Chung E., Hill M., Bell J., Jang H., Liu C., Park J. B., Kim J., Kim S. B., Mehta S., Pharr M., Tzavelis A., Reeder J. T., Huang I., Deng Y., Xie Z., Davies C. R., Huang Y., Rogers J. A., Mechano-acoustic sensing of physiological processes and body motions via a soft wireless device placed at the suprasternal notch. Nat. Biomed. Eng. 4, 148–158 (2020).

- 27. Ezzie M. E., Parsons J. P., Mastronarde J. G., Sleep and obstructive lung diseases. Sleep Med. Clin. 3, 505–515 (2008).

- 28. Kolb F., Spanke J., Winkelmann A., Auf den Spuren des Erb’schen Auskultationspunkts: Rätsel gelöst. Dtsch. Med. Wochenschr. 143, 1852–1857 (2018).

- 29. D. P. Kingma, J. Ba, Adam: A method for stochastic optimization. arXiv:1412.6980 (2014).

- 30. Lee S. H., Kim Y.-S., Yeo W.-H., Soft wearable patch for continuous cardiac biometric security. Eng. Proc. 10, 73 (2021).

- 31. Kim Y. S., Mahmood M., Lee Y., Kim N. K., Kwon S., Herbert R., Kim D., Cho H. C., Yeo W. H., Stretchable hybrid electronics: All-in-one, wireless, stretchable hybrid electronics for smart, connected, and ambulatory physiological monitoring (Adv. Sci. 17/2019). Adv. Sci. 6, 1970104 (2019).

- 32. J. Hernandez, D. J. Mcduff, R. W. Picard, in 2015 IEEE 12th International Conference on Wearable and Implantable Body Sensor Networks (BSN) (IEEE, 2015).

- 33. Yilmaz G., Rapin M., Pessoa D., Rocha B. M., De Sousa A. M., Rusconi R., Carvalho P., Wacker J., Paiva R. P., Chételat O., A wearable stethoscope for long-term ambulatory respiratory health monitoring. Sensors 20, 5124 (2020).

- 34. Chowdhury M. E. H., Khandakar A., Alzoubi K., Mansoor S., Tahir A. M., Reaz M. B. I., Al-Emadi N., Real-time smart-digital stethoscope system for heart diseases monitoring. Sensors 19, 2781 (2019).

- 35. H. Huang, D. Yang, X. Yang, Y. Lei, Y. Chen, in 2019 IEEE 3rd Information Technology, Networking, Electronic and Automation Control Conference (ITNEC) (IEEE, 2019).

- 36. M. Klum, F. Leib, C. Oberschelp, D. Martens, A.-G. Pielmus, T. Tigges, T. Penzel, R. Orglmeister, in 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (IEEE, 2019).

- 37. Y.-J. Lin, C.-W. Chuang, C.-Y. Yen, S.-H. Huang, P.-W. Huang, J.-Y. Chen, S.-Y. Lee, in 2019 IEEE International Symposium on Circuits and Systems (ISCAS) (IEEE, 2019).

- 38. Wang F., Wu D., Jin P., Zhang Y., Yang Y., Ma Y., Yang A., Fu J., Feng X., A flexible skin-mounted wireless acoustic device for bowel sounds monitoring and evaluation. Sci. China Inf. Sci. 62, (2019).

- 39. M. Waqar, S. Inam, M. A. Ur Rehman, M. Ishaq, M. Afzal, N. Tariq, F. Amin, Qurat-Ul-Ain, in 2019 15th International Conference on Emerging Technologies (ICET) (IEEE, 2019).

- 40. Rao A., Ruiz J., Bao C., Roy S., Tabla: A proof-of-concept auscultatory percussion device for low-cost pneumonia detection. Sensors 18, 2689 (2018).

- 41. Li S.-H., Lin B.-S., Tsai C.-H., Yang C.-T., Lin B.-S., Design of wearable breathing sound monitoring system for real-time wheeze detection. Sensors 17, 171 (2017).

- 42. B. Malik, N. Eya, H. Migdadi, M. J. Ngala, R. A. Abd-Alhameed, J. M. Noras, in 2017 Internet Technologies and Applications (ITA) (IEEE, 2017).

- 43. D. Ou, L. Ouyang, Z. Tan, H. Mo, X. Tian, X. Xu, in 2016 IEEE 14th International Conference on Industrial Informatics (INDIN) (IEEE, 2016).
