Abstract

Recent advancements in socially assistive robotics (SAR) have shown the significant potential of social robots to improve cognitive and affective outcomes in education. However, the deployment of SAR technologies also brings ethical challenges to the fore, especially in under-resourced contexts. While previous research has highlighted various ethical challenges that arise when SAR is deployed in real-world settings, most of this research has been centered in resource-rich contexts, mainly in developed countries in the ‘Global North,’ and work specifically in educational settings is limited. This research aims to evaluate and reflect upon the potential ethical and pedagogical challenges of deploying a social robot in an under-resourced context. We base our findings on a 5-week in-the-wild user study conducted with 12 kindergarten students at an under-resourced community school in New Delhi, India. We analyzed video recordings of the user study using interaction analysis, drawing on the contexts of learning, education, and ethics. Our findings highlight four primary ethical considerations that should be taken into account when deploying social robotics technologies in educational settings: (1) language and accent as barriers in pedagogy, (2) the effect of malfunctioning and (un)intended harms, (3) trust and deception, and (4) the ecological viability of innovation. Overall, our paper argues for assessing the ethical and pedagogical constraints and for addressing the near absence of literature from such contexts, in order to better evaluate the potential use of such technologies in under-resourced contexts.

Keywords: Child-robot interaction, Under-resourced communities, Philosophy, Ethics, User study, Global South

Introduction

Over the past two decades, Human-Robot and Child-Robot Interaction (HRI/CRI) have become prominent research areas worldwide. Researchers [11, 13, 21, 22, 25, 75] have investigated the roles social robots can play in accomplishing different tasks for society, including healthcare [21], elderly care [22], therapy [25, 75], and industry [11]. One area that has been particularly widely studied is education. Social robots have been shown to improve cognitive and affective outcomes in education, principally owing to their physical embodiment [13]. Examples of such use cases include second-language learning [14] and applications for improving handwriting [68]. However, evidence on how a robot’s personality and social behavior affect learners’ motivation and learning outcomes is mixed across studies [15].

One of the most notable aspects of the published work in child-robot interaction is that the vast majority of it comes from the world’s developed, wealthy, industrially and technologically advanced, resource-rich countries, often referred to as the ‘Global North.’ Most of these works explore how interactions between children and robots unfold over time in a given context, and how these interactions impact the child [70]. These studies aim to highlight the possible benefits of robotic technology for children and society while also studying and highlighting drawbacks, fall-outs, and potential harms [84]. However, deploying the same emerging technologies (such as social robots) in a resource-deprived context with a completely different societal structure raises unique and challenging ethical concerns. The consequences of introducing social robots into under-resourced contexts remain scarcely researched and poorly understood.

This work sheds light on a currently understudied perspective in the global landscape of CRI research: the ethical and pedagogical challenges that arise from deploying a social robot in an educational setting at an under-resourced community school. Our findings stem from a user study conducted at an under-resourced community school in New Delhi, India [48]. That study explored how kindergarten students interacted with a social robot in an ‘English as a second language’ learning task and analyzed these child-robot interaction sessions in detail; it was presented as a Late-Breaking Report (LBR) at the Human-Robot Interaction (HRI) Conference in 2020. In the present interdisciplinary study, we build on the same participant sample and scrutinize the findings from an ethical perspective using an integrative approach. We present these new findings and the related discussion in this paper.

We explore the challenges that arise when a robot designed in a resource-rich setting in the developed world, for a different social and linguistic context, by designers who may have been unaware of the contexts of their designs’ future deployments, is placed in an under-resourced setting. We also address the near absence of formal protocols, ethical frameworks, and literature on deploying such technologies in under-resourced contexts in the Global South (in our case, India). We highlight four ethical considerations from our in-the-wild user study: (1) language and accent as barriers in pedagogy, (2) the role of trust, (3) the effect of malfunctioning, (un)intended harms, and psychological safety, and (4) the ecological viability of innovation. We argue that there is a pressing need to critically and ethically examine the needs and requirements of deploying such technologies in under-resourced contexts. Researchers, designers, practitioners, policymakers, and civil society must engage collectively as stakeholders in drawing up a blueprint that maps out the course of such deployments in a contextualized manner. Our research highlights that under-resourced communities face an accessibility gap due to structural inequities in society. We therefore advocate a balance between innovation and socially aware education, with a focus on basic social needs such as primary infrastructure development. Overall, this work synthesizes and puts forth an integrative ethical approach that makes the case for understanding ethical questions as embedded in the contexts of their deployment. As a way forward, we propose collaboration to develop a substantial body of work that studies these questions in the less-studied Global South and thereby facilitates an in-depth and nuanced understanding.

The rest of the paper is organized as follows. Sect. 2 reviews related work. Sect. 3 details the user study with children at a community school. Sect. 4 presents the findings from our analysis, followed by Sect. 5, which discusses in detail the four major ethical questions raised and presents arguments under each. Finally, Sect. 6 concludes the paper with our thoughts on this study and future research directions.

Literature Review

Child-Robot Interaction and Ethics

Technology ethics is not a new research domain. It has been studied in different contexts, for example, online communities [30], ethics education [34], and gender and technology [24, 36]. Similarly, in HRI/CRI, researchers have reviewed various settings in which ethical issues arise and proposed specific principles that HRI/CRI practitioners should consider [61]. In an in-the-wild user study with 105 Polish kindergarten children, researchers explored which factors determine children’s trust in a social robot [90]. They highlighted that it is not only the functional design of the robot that stimulates trust in children, but also a carefully thought-out conversation scenario. Design is just one aspect of ethics; more importantly, ethics concerns the interaction between the agent or technology and the human, because interactions are pluralistic and no single design fits all.

In [65], the authors conducted a user study with two experimental conditions, (a) Correct Mode and (b) Faulty Mode, to investigate how mistakes made by a robot affect its trustworthiness and acceptance in human-robot collaboration. Their study revealed that, while the condition significantly affected participants’ subjective perceptions of the robot and their assessments of its reliability and trustworthiness, the robot’s performance did not seem to substantially influence participants’ decisions to (not) comply with its requests. Similarly, researchers have proposed a ten-stage framework of guidelines for design and ethical considerations in robotic adjunct therapy protocols for children with autism [71].

In a recent study, researchers emphasized that while some ethical frameworks exist in HRI, literature on the ethics of CRI in the kindergarten classroom context per se is still lacking [84]. In CRI, multiple stakeholders, including parents [78], teachers [32, 69], and policymakers [77], have highlighted their concerns about, and the opportunities offered by, the use of social robots in education. In [77], the authors conducted a focus group discussion with Dutch policymakers to explore moral considerations and highlighted key concerns within CRI, such as trust, safety, and accountability for data collected via social robots. Their study highlighted the need for more rigorous and inclusive discussion among various stakeholders to design better moral guidelines for implementing social robots in education. Similarly, in [69], the authors conducted focus group discussions with teachers and scholars pursuing education degrees in Sweden, Portugal, and the UK, exploring the ethical implications of using social robots in classrooms. Their study highlighted three ethical considerations: (a) privacy, (b) the robot’s role, and (c) its effect on children. They argue for discussing the collective responsibility of stakeholders and researchers when using such robots in the classroom. In our project, we integrated these considerations and engaged with a multidisciplinary team to understand the ethical, legal, and societal implications of deploying a social robot in an under-resourced context. Our arguments are presented in detail in the discussion section of this paper.

The debate on the ethics of technologies deployed in under-resourced communities is slowly beginning to take root, albeit belatedly. Governmental planning bodies and think tanks have acknowledged the importance of ethical issues such as data misuse, privacy, and accountability while developing regulatory and legal frameworks and benchmarks (National AI Strategy, NITI Aayog, 2018 [4]). Owing to significant public awareness and debate around the rollout of Unique Identification Numbers (Aadhaar), data privacy forms a considerable part of India’s AI ethics discourse (Srikrishna Committee on Personal Data Protection, 2018 [3]). ‘Data cascades’ arising from faulty methodology or low standards of data practice, and their downstream impacts, have also been explored [67]. Liabilities, new provisions, accountability, regular audits, new industrial frameworks, and the moral and legal status of autonomous agents have also been touched upon (Report on Task Force of Artificial Intelligence, 2018 [5]). Most other research and reports come from the country’s corporate sector; these generally express optimism about AI, its use, and its future through surveys but do not address its ethics (PwC, 2018 [1]). Others emphasize the tremendous potential of AI technology to transform national growth and development (Accenture, 2018 [2]). Notably, some researchers question Western frameworks of AI ethics, whose principles, such as AI fairness, are being rapidly adopted, arguing that ‘fairness’ can mean and represent different sets of values and practices in two distinct societies [66]. This work, too, comes from a collaboration of scholars in industry and academia who are primarily based in India and focus on the Indian context [66]. The near absence of a focused discourse dedicated to either technology ethics or AI ethics is unsettling and needs to be addressed urgently.

Technology in India

Technology for Children in India

After 1991, when India opened its economy to the world, the country witnessed tremendous growth in many sectors, one of which was technology. Mitra [53], through the ‘Hole in the Wall’ experiment, found that children can learn to use computers and the Internet on their own, irrespective of who they are and where they come from. Even though children can learn and adapt to new technologies themselves, socio-cultural constraints on access to technology are still prevalent in India [73]. Within ICT for Development (ICT4D), education and teenagers’ mobile Internet use have been studied extensively in India [42, 47, 52, 58, 59, 74, 85, 87]. In [59], the authors conducted an anthropological study of everyday mobile Internet adoption among teenagers in a low-income urban setting in Hyderabad, India. They noticed that teenagers from the community engaged with the mobile Internet more for entertainment than for education, and concluded that these entertainment practices could offer space to experiment with technology and create an informal technology hub. In [42], after a 2-week participatory design session in which rural student participants in Uttar Pradesh, India, prototyped low-tech and hi-tech English language learning games, the authors found that researchers should consider developing a substantially different relationship between teachers and students and enlist local adults and children as facilitators.

Sharma [73] investigated the socio-cultural factors that affect technology-mediated cross-cultural collaboration with underprivileged school children in India. The work highlighted two significant barriers to such collaboration in this context: (a) social, namely face-saving and power distance, and (b) technical, namely lack of infrastructure. To overcome these barriers, the work proposed using dramatized scenarios inspired by contexts familiar to the students before introducing the technology, to help them better learn and explore it. In [87], the authors co-designed a low-cost, Virtual Reality (VR)-augmented learning experience over 7 weeks with students and staff members at an after-school learning center in Mumbai, India. They reported that, after VR was introduced, students tended to ask questions reflecting a deeper level of engagement with the targeted topics.

Human/Child-Robot Interaction in Under-Resourced Communities

Studies in ICT4D and HCI4D in India are emerging and are not limited to education; they span sectors such as healthcare [40] as well as education [42, 73]. However, studies on social robots or HRI/CRI remain limited [10, 16, 17, 28, 29]. Healthcare is one of the domains in India that saw an early use of robots, for surgery, in 2006 [18]. Shukla et al. [76] conducted interviews and focus group discussions with pediatricians, Autism Spectrum Disorder (ASD) therapists, and educators to understand clinical practices for teaching prerequisite learning (PRL) skills to children with ASD. Beyond healthcare, HRI researchers are now exploring social robots as assistive technology in India.

For example, in [28], the authors conducted a feasibility study with 11 participants in a village in Tamil Nadu, India, exploring the technological acceptance and social perception of a robot helper designed to assist villagers in carrying water. They found that socio-cultural factors such as gender played a significant role in the acceptance of the robot, and highlighted the need for a localized approach when deploying such robots in India. In another study [29], the authors deployed a self-designed robot named ‘Pepe’ in a rural government school in Kerala, India, to promote handwashing among students, targeting the moments before a meal and immediately after toilet use. The robot-based intervention was effective, resulting in a 40% rise in handwashing.

Our previous work [48] was a first-of-its-kind study that deployed social robots in a learning environment in India. Its findings showed the different ways in which kindergarten students from an under-resourced urban community in Delhi, India, perceived a social robot. We also argued that most of the work on social robots for education comes from Global North countries. Our experiments suggest that such technology needs to be assessed through a Global South socio-cultural lens to provide a better, more holistic view. In this vein, a recent study by Tolksdorf and colleagues [84] emphasized that while some ethical frameworks exist in HRI, literature on CRI remains lacking, especially on applying CRI in the kindergarten classroom. An important question therefore arises about the ethics of deploying such social robots in an under-resourced context. No previous HRI research in India has explored the ethical considerations that potentially arise in such interactions, and policy-level frameworks are also absent. This work attempts to address these gaps concerning ethics in HRI in an under-resourced context. We draw insights on pedagogy and ethics from our experience of deploying a social robot in an urban under-resourced community school in New Delhi, India.

Methodology

We conducted a two-phase exploratory user study over five weeks in August 2019 to understand young children’s interactions and behaviors with a social robot in a classroom environment at a community school in New Delhi [48]. We were particularly interested in exploring these interactions in an under-resourced setting in order to understand and foreground the ethical questions that arise in such settings.

Material: The Cozmo Robot

The study employed the Cozmo robot (see Fig. 1), as it was the only option the research group could afford given its limited resources. Cozmo was developed by a team of robotics engineers in the US. With Cozmo, children of different ages can play games, interact socially, and learn different skills. Because Cozmo is fully programmable and accessible, its interactions and skills were determined and controlled by the researchers involved in the project using a Wizard-of-Oz (WoZ) approach. Cozmo is programmed with various functions, such as lifting its arms up and down, displaying expressions (happy, sad, or excited) coupled with voice, and rotating on its axis. Cozmo has a built-in VGA camera and supports object and face recognition.

Fig. 1.

Cozmo robot

Participants and Set-up

Twelve children (see Table 1) from a kindergarten class, with a mean age of 5.78 years (SD = 0.93), participated in the study. Parents of participants were duly informed about the research procedure and the type of data that would be collected during the study, and the researchers obtained written consent from parents for their children to take part. Finally, verbal assent was obtained from each child individually before every interaction, after explaining the activity and what they would do. The children were asked to pair up with another child of their choice, creating six pairs. Each pair participated in two sessions every week over a period of three weeks. The study had two phases: an informal interaction with a demo of the Cozmo robot, and child-robot interactions using the ‘learning by teaching’ approach. In ‘learning by teaching,’ students take on the role of a teacher, an approach that builds students’ confidence [41] and improves learning outcomes [13]. Figure 2 shows the setup for phase 2 in the community school. This research received approval from our Institutional Research Board (IRB) at IIIT Delhi, taking into account the community school’s recommendations. During both phases of the study, data were collected with due clearance from the ethics board.

Table 1.

Details of participants (students)

| Participant | Gender | Age (in years) |
|---|---|---|
| C1 | Female | 4 |
| C2 | Female | 4 |
| C3 | Female | 5 |
| C4 | Female | 8 |
| C5 | Female | 6 |
| C6 | Male | 5 |
| C7 | Male | 5 |
| C8 | Male | 5 |
| C9 | Male | 5 |
| C10 | Male | 5 |
| C11 | Male | 6 |
| C12 | Male | 6 |

Fig. 2.

The community school library

Procedure

First, we conducted a two-day in-class observation to (a) familiarize students and staff with the researchers, (b) understand the teacher’s pedagogical approach to teaching students English, and (c) brainstorm with the teacher to design appropriate language learning exercises. Then, in phase 1, we gave the students a demo of Cozmo, and in phase 2, we conducted six 2-hour sessions each week, during which the children carried out activities similar to those they would do in a classroom setting, with the Cozmo robot as a learning partner.

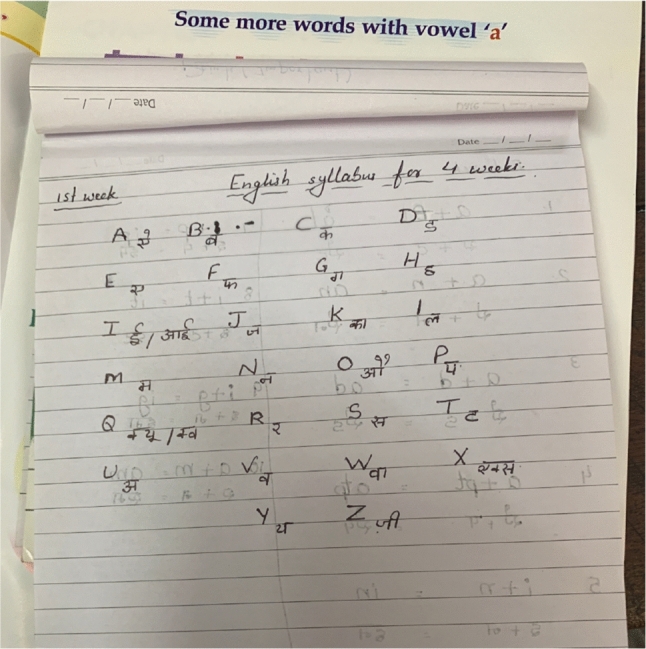

2-Day Field Visit

We conducted a 2-day field visit at the community school to interact with teachers and the school principal. We briefed them about our study goals and gave them a demo of the Cozmo robot to understand their views on using robots in the classroom. After the demo, we held a brainstorming session with the class teacher to understand students’ current spoken and written language abilities so that we could design appropriate activities. The teacher provided us with some ideas and plans in which she emphasized the verbal identification and recognition of letters with corresponding images (see Fig. 3). Later, with the school authorities’ permission, we attended some English language classes along with the students (see Fig. 4). While observing the teacher in the classroom, we found that she structured lessons on the English alphabet around four modalities: (a) speaking each letter aloud with the children while simultaneously looking at images in an alphabet textbook (e.g., ‘a’ - apple, ‘b’ - ball); (b) writing the letter in notebooks; (c) matching a letter with an image for word identification (e.g., h [picture of a hat] or e [picture of an elephant]); and (d) presentations in which students volunteered to stand in front of the class and speak the alphabet. Not all of these practices could be addressed in this study (for example, singing letters in unison with the teacher), but we were able to incorporate others, such as letter recognition tasks, into our interaction protocol. The field visit served two goals: (a) understanding various pedagogical methods, such as rhyming letters and recognizing letters with images/flashcards, and (b) familiarizing the researchers with the students and vice versa.

Fig. 3.

2-day field visits: notes by teacher during the brainstorming session to design activities

Fig. 4.

2-day field visits: teacher teaching students letter recognition in the classroom

Phase-I Discovery

Before we interviewed the children, the teacher introduced us to them and asked them to address us as didi (sister) or bhaiya (brother) rather than ‘sir’ or ‘madam,’ the more common practice in schools in India. This was done to make the children feel more comfortable around the research team. First, we wanted to understand how familiar the children already were with robots. We asked them if they knew what a robot was and asked them to draw one. We also asked them whether they thought they could talk to robots, whether robots showed emotions, and whether robots could help them with their school work. Afterwards, we gave a demo of the Cozmo robot to the children. During the demo, Cozmo introduced itself as Raju, a common Hindi name chosen to make Cozmo more familiar to the students. The students were asked if they thought a robot had feelings. The robot then displayed pre-programmed emotions meant to represent feelings such as happy, sad, angry, and surprised. Each of these emotions was demonstrated, and the students were asked how they thought the robot was feeling.

After the demo, the children were asked if they knew the English alphabet and sang it together with the researchers and the teacher. We told the children that Raju had not learned the English alphabet and asked if they would like to teach the robot, to which they agreed.

Phase-II Child-Robot Interaction

In the first session of each week, the students were shown a demo of how to teach that week’s activity to Raju, after which they were asked to teach the robot. Since each session aimed to have the children both lead the activity and learn the English alphabet, the session’s moderator often needed to explain which letter the children had been given. The robot was controlled using the Wizard-of-Oz approach. Before each interaction, the research assistant controlling the robot was briefed about the activity and provided with a list of actions (rules) to keep the interaction uniform across all participants. The list of actions contained two sections: (a) verbal responses, restricted to letters and short words (“A,” “B,” “Hello,” “Bye”), and (b) gestures and movements (such as lifting its arms up and down, moving back and forth, etc.). Cozmo has pre-programmed gestures and emotions, such as happy faces, excited faces, or lifting its arms up and down; hence, only those cues were allowed. Each pair was given ten random letters to teach Raju and engaged in the activity for 10–15 min.
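The wizard’s two-section rule list can be pictured as a simple allow-list check applied before any response is sent. The sketch below is a hypothetical illustration, not the tooling used in the study: the gesture labels (`lift_arms_up`, `happy_face`, and so on), the helper names, and the choice to draw ten *distinct* letters are all assumptions, and nothing here calls the real Cozmo SDK.

```python
from __future__ import annotations

import random
import string

# Hypothetical allow-lists mirroring the two sections of the wizard's rule list.
# These labels are illustrative only; they are NOT Cozmo SDK identifiers.
ALLOWED_WORDS = {"Hello", "Bye"}
ALLOWED_GESTURES = {
    "lift_arms_up", "lift_arms_down",   # arm movements
    "move_forward", "move_back",        # back-and-forth movement
    "happy_face", "excited_face",       # pre-programmed expressions
}


def is_allowed_utterance(text: str) -> bool:
    """Section (a): a single letter or one of the listed short words."""
    text = text.strip()
    return (len(text) == 1 and text.isalpha()) or text in ALLOWED_WORDS


def is_allowed_gesture(name: str) -> bool:
    """Section (b): only the pre-programmed gestures on the list."""
    return name in ALLOWED_GESTURES


def draw_letters(rng: random.Random, k: int = 10) -> list[str]:
    """Draw k random uppercase letters for one pair's session (assumed distinct)."""
    return rng.sample(string.ascii_uppercase, k)


if __name__ == "__main__":
    rng = random.Random(2019)  # fixed seed only so that a run is reproducible
    print("Letters for this pair:", draw_letters(rng))
    print(is_allowed_utterance("T"), is_allowed_utterance("Good job"))   # True False
    print(is_allowed_gesture("happy_face"), is_allowed_gesture("dance"))  # True False
```

Keeping the check separate from robot control is one possible way to catch a deviation from the protocol before a command reaches the robot, which would help keep sessions uniform across pairs.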

After completing all the interaction sessions, we conducted short semi-structured interviews with the students and their class teachers to understand their experience of interacting with the robot. The students were interviewed in groups of 2–3 about their experience of interacting with the robot, what they liked and disliked, whether they would be willing to interact with the robot in the future and, if so, how, and so on. Similarly, the teacher was asked about their experience with the robot and the children after the sessions, how such technology could be used in the classroom, and what the technology should focus on more.

Data Collection and Analysis

Demographic data were collected, including the participants’ names and dates of birth. All six robot-interaction sessions conducted at the community school were video recorded for the researchers’ video analysis. All interviews were audio-recorded, and the average interview lasted 5 min. At the beginning of each interview, teachers gave their consent and students their assent to have the interview audio-recorded. The data have been processed according to the procedures established by Organic Law 15/1999 of December 13 on the Protection of Personal Data and stored securely on university servers.

We analyzed the interaction videos, audio recordings, and field notes to understand the interactions, the problems faced, and potential ethical issues. We conducted iterative, inductive analyses of the video recordings using open coding and thematic analysis [20], as in prior HRI studies [69, 70]. Four members of the research team were involved in this iterative process. To begin with, the first and fourth authors individually coded three videos to develop individual code lists (first-level codes), for example, “robot malfunction during the session” and “student confused with letters.” Each code in these lists was a label with a qualitative description of the annotation. The first and fourth authors then discussed their code lists to agree on labels for each code, and conducted a second iteration of coding using this initial code list. After each iteration, they met with the second and fifth authors to discuss the coding process and video segments, refine the codes, and conceptualize themes such as “Malfunction and safety” or “Robot’s Accents as Barrier.” These meetings allowed us to conduct timely member checking and resolve conflicts and differences of opinion. The process continued until saturation was reached and all the authors agreed on all themes. The interviews were first transcribed by the first author and then analyzed using thematic analysis [20], following the same iterative approach.
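The stopping rule in this iterative coding process can be made concrete with a small bookkeeping sketch. This is a hypothetical aid, not the authors’ procedure: it assumes ‘saturation’ can be approximated as the first coding iteration that introduces no code absent from earlier iterations, and it splits two coders’ lists into shared codes and codes to discuss. In the study itself, saturation and agreement on themes were reached through discussion among the authors, which no set comparison can replace.

```python
from __future__ import annotations


def split_code_lists(coder_a: set[str], coder_b: set[str]) -> tuple[set[str], set[str]]:
    """Return (shared, to_discuss): codes both coders used, and codes only one used."""
    return coder_a & coder_b, coder_a ^ coder_b


def saturation_point(iterations: list[set[str]]) -> int | None:
    """Index of the first iteration (after the first) that adds no new code, else None.

    Treating saturation this way is an assumption made for illustration.
    """
    seen: set[str] = set()
    for i, codes in enumerate(iterations):
        if i > 0 and not (codes - seen):
            return i
        seen |= codes
    return None


if __name__ == "__main__":
    # Example codes quoted in the text; how they split between coders is hypothetical.
    first = {"robot malfunction during the session", "student confused with letters"}
    fourth = {"robot malfunction during the session"}
    shared, to_discuss = split_code_lists(first, fourth)
    print(shared)      # {'robot malfunction during the session'}
    print(to_discuss)  # {'student confused with letters'}
    # Toy iteration history with placeholder labels, not study data.
    print(saturation_point([{"a", "b"}, {"a", "c"}, {"a", "b", "c"}]))  # 2
```

Symmetric difference (`^`) is used for `to_discuss` because any code that only one coder applied is exactly what the coders would need to reconcile in their meetings.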

Findings

The main aim of this research was to investigate the ethical issues involved in children’s interactions and behaviors with a social robot in a classroom environment at a community school in an under-resourced setting. Before presenting the findings, we briefly outline our approach to interpreting them. Ethical issues arise at different intersections of this research: in the interaction between the robot and the child, in the design and deployment of the research, and in the context in which the research is carried out. The research is integrative in that it attends not only to the immediate interactions between the child and the robot but also to the conditions that structure those interactions. Problems and challenges emerge from the situatedness of the research location and its intersection with the socio-economic and cultural conditions of the children, the physical, pedagogical, and curricular infrastructure of the school, the human resources available at the school, and finally the policy environment in which the deployment is made. These conditions affect the efficacy and effectiveness of deploying robots. They result from the digital divide, which is both a product of socio-economic circumstances and a driver of further marginalization. We discuss the ethical issues emerging from this context in detail in the discussion section. Below, we present our findings in detail:

“What did he say?”: Roles and Challenges of Language (Accent) in Robot Assisted Learning

In the context of the researched school, we see that the primary language of engagement is Hindi. English is the second language for most students and is neither their mother tongue nor something they encounter in their social environment.

As explained in Sect. 3, the social robot engaged with the children during an English language learning session. Across almost all the videos, we noticed that students struggled to understand the robot’s pronunciation of the alphabet. This was because Cozmo’s speech API was built around Western English and a pronunciation that was ‘foreign’ to the students. This made it difficult for the students to understand the robot and to keep interacting with it smoothly, as they were confused by its accent. A scenario (see Fig. 5) exemplifying the interaction follows:

Fig. 5.

Student getting confused because of robot’s accent. (Figure taken from [48])

C10: Raju, what is this? (Showing alphabet flash card ‘t’ to student)

Cozmo: ‘t’

C11: What did he say?

C10: ‘p’?

C10: Raju, this is ‘t’ not ‘p’

Cozmo: ‘t’

(Both students were confused and looked to the moderator for confirmation; the moderator confirmed that Raju was saying ‘t’.)

In this particular scenario, when the children displayed and pronounced flashcards with the letter “P,” the robot (wizard) replied with the letter “P,” but it sounded more like “T” to the students. It is important to note that many primary schools teach the English alphabet by mapping its letters to the Hindi alphabet. We noted the same method being followed by the teacher during our field visit: the teacher’s notes in Fig. 3 show each English letter written alongside its corresponding Hindi sound. Cozmo’s emphasis in pronouncing “P” made it sound like the Hindi varna (letter) pronounced “ta.” Relying on their prior training, the students went by ear and guessed it to be the English letter “T,” which rhymes with “P.” This exemplifies the ‘accent gap’ between the robot and the students. Throughout the study, we noted many other letters that the students confused with each other, for example, ‘P’ and ‘B,’ ‘S’ and ‘H,’ and ‘M’ and ‘N.’

Malfunctioning and (Un)Intended Harms

Across the interactions over three weeks, Cozmo malfunctioned several times. The most prominent issues were as follows:

Cozmo’s battery drained and its Bluetooth connection dropped in the middle of interactions: in such cases, to keep the students engaged, the moderator asked them what they thought had happened. The students drew inferences from Cozmo’s blinking and yawning gestures, and their prompt answer was: “Cozmo is tired, and he went to sleep!”

Because Cozmo was being controlled remotely, it was sometimes unable to process commands. In such cases, it performed erratic, mixed actions, such as rapidly rotating in place and moving its arms up and down. In two instances, Cozmo lost control entirely and dashed across the table. In these cases, the moderator had to reach out swiftly and catch it to prevent it from hitting the students and/or falling off the table (see Fig. 6).

Fig. 6.

Sequence of events in which the moderator jumped in to catch the robot: (left) students interacting with the robot; (center) the robot malfunctions and runs towards a student; (right) the moderator jumps in to catch the robot

Whenever Cozmo’s Bluetooth connection with the computer dropped or its battery drained, its built-in behaviors generated sleeping gestures, such as yawning and snoring sounds, with a dizzy eye expression on its screen. This led the students to believe that the robot had fallen asleep rather than malfunctioned. As one of the students (C2, Female) told us: “I think he (robot) has slept.”

At times, the students would also gently shake the robot to try to wake it up. When the Bluetooth connection was lost, the controller quickly reconnected it; when the battery drained, however, we had to halt the interaction for 3–5 min and connect the robot to the charger. Overall, these interruptions did not cause a fracture in the form of a loss of trust or interest. However, in the incident mentioned above (i.e., when the robot severely malfunctioned and dashed towards a student), we did notice a wave of shock among all the participants present. All of them made hand gestures to try to stop it, and the moderator had to leap at it before it could hit anyone (Fig. 6). Given the robot’s small size and toy-like appearance, the children, though initially shocked, neither complained nor displayed any signs of being traumatized. Furthermore, they participated with their usual fervor once the activity resumed and enjoyed the interaction.

“You are controlling it!”: Deception & Trust

During our experiment, the moderator and the robot controller were in the same room, and there was no blind or screen to hide the robot controller (see Fig. 2). This was mainly because (a) the internet connection was too poor to control the robot remotely using software such as TeamViewer, and (b) the process could not be automated, because some API calls required internet access, and the lighting in the room, combined with Cozmo’s VGA camera, was insufficient for reliable image analysis. The Wizard-of-Oz approach was used to ensure the robot’s task performance and reliability. Prior studies have highlighted ethical concerns with the use of the Wizard-of-Oz methodology in HRI [61, 62, 89]. In our research, participants were not told that another person was controlling the robot in the loop (Wizard of Oz) during the study. During the first week, the students engaged and responded naturally to the robot. However, by the second week, one of the students had somehow figured out that the robot was being controlled by another individual, the controller, sitting beside them (see Fig. 7). Two more participants echoed this doubt. Below is one such scenario, in which the children suspected that the robot was being controlled by the controller and questioned the researchers about it.

Fig. 7.

Children asking who is controlling the robot

The exchange between the moderator and the participant that followed is recorded below:

C6: “You (controller) are controlling it (robot).”

Moderator: “No, see, our hands are up in the air; we are not controlling it.”

C6: “I think you are.”

Moderator: “See, it is moving on its own.”

C6: “Isme Cell Daale Honge Issiliye Chal Raha hai,” which translates as: “This (robot) might have an electric cell in it; that is why it is working (autonomously).”

As this scenario shows, the moderator had to conceal the facts to maintain the integrity of the experimental protocol. After the first student raised the doubt, two others voiced similar doubts. One student argued with the moderator that another researcher sitting in the same room was controlling the robot. The moderator and the controller tried to demonstrate that they were not interfering with the robot by holding their hands up in the air. Nevertheless, even after the moderator’s persuasion, the students remained adamant for some time. Notably, we observed a brief withdrawal phase in one of the students, who suddenly seemed less interested in the ongoing exercise.

Ecological Viability of Innovation

As the interactions progressed, the children became friendlier with the robot and the moderator. This was evident when they started playing with the robot [48]. In a couple of instances, one student (C6, Male) asked the moderator in an insistent but polite tone:

“Bhaiya, Mere liye bhi bana do na, please,” which translates to: “Brother, please make one (a robot) for me too.”

Participants in other groups made the same request. The moderator would put off their requests, but the participants would then ask a follow-up question: “How much does it cost?” This happened a couple of times. After the interaction sessions, another researcher (who was also familiar with the students) conducted interviews, in which the participants complained:

“I asked brother (referring to the moderator) to make a robot for me, but he did not listen to me”

Although the children showed growing interest in and curiosity about the robot, we noticed reduced student attendance in the class. This struck us as ironic and surprising, so we asked the class teacher about it. The teacher replied:

“Students try to avoid school at the end of every month, because it is the time when they/their parents have to pay the fees.”

These vignettes bring to our notice the stark realities of this research context, realities that make ethical reflection imperative. We notice a contradiction here. Although our preliminary findings show noticeable challenges in deploying Cozmo in an under-resourced setting because of the context gap, we also see significant interest among the children. This highlights the potential of social robots in educational contexts: if used judiciously and with proper training (to overcome accent concerns), the robot could positively impact the learning process. If properly deployed, it has the potential to attract students to the classroom and make learning joyful.

Discussion

These findings raise a more profound question: can social robots designed and conceived in the Global North be deployed successfully in an under-resourced context? This simple but striking question led us to consider whether the Western companies that dominate the global social robot market have accounted for the specific demands of less privileged contexts. In this section, we reflect on this question and elaborate on the following points: (1) language and context; (2) trust; (3) (un)intended harm and regulation; and (4) balancing innovation with the right to education.

Language (Accent) and Context

Children’s motivation to learn a second language depends on integrative and non-instrumental identification with the linguistic and non-linguistic features of the target language [37]. Language, as a tool of meaning-making, understanding, and expression, is thus intimately and inseparably grounded in the social context2,3 in which it is situated. Children acquire language by watching, hearing, and interacting with other participants in their respective contexts, such as homes, schools, playgrounds, and social occasions. We observed this in our study: the students learn English letters through their phonetic correspondence with the letters of their first language, Hindi.

In the context of the experiment, we notice the crucial role that accent plays in learning a second language. The children are confused by the robot’s pronunciation and look to the researcher for validation. This confusion can be read as a sign of dissociation: the seamless learning environment, in which the robot and the child share the same context, is broken. This is bound to happen because the robot is trained on a different accent. The effect of accent on intelligibility, especially across linguistic and cultural boundaries, has been the subject of numerous scholarly works [79–82]. Derwing et al. [27] note that accent characteristics primarily affect intelligibility and comprehensibility. In our research, we too find that the children were unable to grasp and comprehend the letters being pronounced. This creates a ‘context gap’ that confuses the students, and it is at this point that they seek validation from the researcher. This has serious implications for the learning process that require detailed investigation. The first implication of a ‘context gap’ is an erosion of children’s confidence in their learning ability. Because of their young age, learners could take their own accent to be ‘wrong’ and the robot’s accent, which sounds more precise and confident, to be correct, as was the case in this study. Our intuition is that this can gradually erode self-confidence and the quality of interaction, and may even result in a loss of interest on the users’ part. It is difficult for learners to make meaning of, interpret, and creatively use learning materials, tools, and assistive technologies (like robots) if they cannot understand what is being conveyed. Kanda et al. [43] suggest that good uptake of a social robot in second language learning depends on establishing common ground, which in turn depends on ‘some initial proficiency or interest in English.’

The context gap creates an additional disadvantage for the students that their peers in resource-rich contexts may not face.4 Evidence suggests that children from low socio-economic strata already face considerable difficulty in second language learning when that language is English [39, 46]. From where the child as a learner is situated, the leap involved in learning from a robot that was designed in an alien setting and speaks with a foreign accent is huge. This makes learning cumbersome for children who lack the cultural capital, pedagogical resources, and exposure required to make sense of learning through interaction with a social robot.

One consequence of the context gap is that participants across different groups turned to the moderator. We therefore infer that timely interventions from the moderator(s) may mitigate unwanted outcomes such as low self-belief, withdrawal, and loss of learning, and that the presence of a trusted ‘More Knowledgeable Other’ (hereafter MKO) [88] is important for providing scaffolding and, if required, mitigation. The concept of the MKO derives from Vygotsky’s Socio-Cultural Theory [88] and refers to an individual with a higher level of knowledge vis-à-vis the learner, who can provide guidance and instruction throughout the learning process. However, it would not be wrong to claim that the teacher (who may not be as well trained as the researcher to operate such social robots) may fail to mediate as an MKO because of the same problems the learner faces, such as accent and a lack of cultural capital. This is particularly true for resource-constrained schools where teachers are poorly trained.5

A second consequence is that social robots like Cozmo that perform language functions and interact with children in their local contexts must be carefully grounded in the intended contexts at the design, production, and deployment levels so as not to fracture the interactive process through a ‘context gap.’ For social robots to function as a pedagogical tool, their speech (accent) needs to be easily understood by and accessible to students. Children should be able to relate to the robot, engage with it seamlessly, and, if required, be confident enough to question it. In this sense, there is no apparent instrumental reason why a Western accent should be preferred in a local Indian community. On the contrary, our research in one particular community suggests that the native accent matters to the context, to the child’s identity, and to the process of learning a foreign language. However, further research comparing both accents is needed before drawing firm conclusions on this topic.

Trust

Deception, at some level, is arguably integral to research practices in social robotics. Quite often, researchers rely on the Wizard of Oz (WoZ) technique, a manipulation technique for controlling “the robot like a puppet to uncover specific social human behaviors when confronted with a machine” [12]. As in our project, an experimenter operates the robot and controls various input variables according to carefully and meticulously laid down protocols. On many occasions, these simulations are necessary to learn about human-machine interaction [62]. The community acknowledges that there is nothing inherently suspicious or unacceptable about this research paradigm. At the same time, by using WoZ, designers may inadvertently create conditions for overattributing the robot’s capabilities, which may result in participants placing too much trust in the robot, or feeling deceived once they realize that the interaction was a carefully crafted montage.

The incident in which the children discovered that the robot was being controlled also reflects the crucial role of trust in pedagogical contexts that use robot technologies. In the automation and robotics literature, there are two standard definitions of trust: trust “as the attitude that an agent will help achieve an individual’s goals in a situation characterized by uncertainty and vulnerability” [49], and trust as “the reliance by an agent that actions prejudicial to their well-being will not be undertaken by influential others” [38]. However, trust is a highly context-dependent value that varies across cultures [64]. To use the robot as a pedagogical device, the learner must appreciate the robot’s appearance and responses and have a fair degree of trust in the system. Being unaware that a human is controlling the robot may affect the terms of engagement. The students initially respond under the assumption that the robot is “alive” and responds independently. The moment they suspect or become aware that the researcher is mediating the robot’s responses, a distance is created, because learners may feel deceived at having believed that the robot acted autonomously when, in reality, it never did. It has been observed and argued that children learn better when they are treated not merely as passive recipients of knowledge but as active agents in the knowledge-making process (National Curriculum Framework 2005 [55]). Thus, to participate in the knowledge-making process, the participants would need to engage with the robot as equals, maneuver it, work around it, and understand how it works and why it works the way it does. This fracture of trust between the learner and the moderator made us reflect on our responsibility as researchers.
We asked ourselves how we could do better: by keeping learners/users on board throughout the process, helping them understand their exact role in and contribution to the project’s goals, and maintaining the transparency and intelligibility of the robot’s behavior so that users can anticipate, react to, and act upon its actions [33]. This would ensure that users take part in the study as empowered agents whose agency is buttressed by their awareness of the process and its goals. It would require us to look at methods like WoZ from a fresh perspective and scrutinize them critically. It might even require us, as a research community, to shift from simply adhering to given methods and protocols to modifying and adapting them in the face of new demands, requirements, and needs.

Such interactions can be sporadic or geared towards supporting longer-term engagement. The latter often rely on emotion and memory adaptations that designers manipulate to counter the decline of children’s interest [8]. In this respect, the literature warns that, given our human tendency to form bonds with the entities with which we interact and the human-like capabilities of these devices, children will form strong emotional connections when immersed in connected play [50]. Teachers, in particular, have raised concerns about the emotional entanglement of social robots with children [32]. This may leave children in a vulnerable position, with young minds prone to manipulation [7]. In HRI, this calls for rigorous WoZ guidelines and protocols that document the role played by the wizards in these interactions, including variables such as their demographics, training level, error rate, production and recognition variables, and familiarity with the experimental hypothesis [62].

(Un)Intended Harms, Psychosocial Safety and Regulatory Framework

A robot may malfunction for several reasons, including the following: (a) the designer failed to consider certain variables or unstable connections; (b) the evaluation method used was incorrect; (c) the roboticist(s) did not want or need to be more cost-efficient when training the robot; (d) wrong extrapolations were made from limited samples; and (e) insufficient training data were used. All of these factors could negatively affect the robot’s learning model [9, 60]. Autonomous systems that interact physically with humans in real time have the potential to harm users not only physically [9] but also psychosocially [63]. Such systems therefore challenge regulatory frameworks in ways not previously encountered with standalone robots [35]. Moreover, prior work in CRI has highlighted (un)intentional behavioral and social harms that could develop during child-robot interaction scenarios [69]. This highlights the need for stricter and more inclusive regulatory frameworks that ensure not only physical but also psychosocial safety during such interactions.

Regulatory frameworks around the globe typically focus on ensuring physical safety by separating the robot from humans, because safety has traditionally been interpreted as applying exclusively to risks with a physical impact on people, such as collision risks [6]. However, the increasing use of service robots that share spaces and interact socially with users (also known as social robots) challenges the way safety has been addressed [86]. On the physical side, robot and AI deployments must be secure to avoid negatively affecting users’ safety, health, and well-being. However, the psychosocial elements of HRI are often neglected and largely underestimated, even though the literature warns that, given our human tendency to form bonds with the entities with which we interact and the human-like capabilities of these devices, users may form strong connections with robots, which may include dependency, deception, and overtrust [19, 63]. Moreover, the integration of AI into cyber-physical systems such as robots, the increasing interconnectivity with other devices and cloud services, and the growth of human-machine interaction challenge the narrow concept of safety. Since HRI research worldwide increasingly investigates robots that interact directly with children in educational settings, the HRI community must adopt a much broader concept of safety that also encompasses the aspects mentioned above [26, 51].

Although we did not observe the children being affected by the malfunctions in our study, it is vital to acknowledge that such incidents could potentially affect children at the cognitive level. This is all the more important with young children, especially those in under-resourced environments where acquiring technology for the household is neither easy nor commonplace. There could be unintended harms such as unfulfilled aspirations, withdrawal from learning in the absence of the robot, emotional setbacks, and feelings of unfulfillment once children realize they cannot own the robot or access it at will. These harms, though unintended, may well lead to further marginalization through possible negative cognitive or emotional impacts. Moreover, in the event of complications after the interaction, it would be almost impossible for the children to access cognitive or psychological consultation, because accessing even primary healthcare in this context is an uphill task. Hence, we assert that accountability for user safety and the designer’s familiarity with the context are crucial for such deployments. According to the European Parliament (2017) [31], designers should “design and evaluate protocols and join with potential users and stakeholders when evaluating the benefits and risks of robotics, including cognitive, psychological, and environmental ones.” However, it is essential to note that there are no tangible guidelines for modeling, evaluating, assessing, and implementing psychological safeguards to ensure safe human-robot interaction [35].
With these facts in mind, we implore HRI researchers, policymakers, legal experts, ethicists, and all other stakeholders to look deeper into the ramifications of social robots for individual users and society, and to arrive at a definition of safety that acknowledges and addresses the complex ways in which these technologies could cause harm (intended and unintended).

Balancing Innovation with the Right to Education

Our paper highlights the ethical considerations that arise from deploying a social robot in an under-resourced classroom setting. It also indicates how technology deployments and interactions may take shape in similar contexts in the future. In Sect. 4.4, we reflected on vignettes that sit uneasily within the research. They capture moments that fall outside the specific research questions we pursued but point in directions that researchers cannot ignore.

When children learn to maneuver the robot, interact with it on their own terms, and creatively apply it to different tasks, technology acts as an enabler: a suitable learning device with enormous potential to excite children, interest them in learning, and bring them to classrooms. Prior work in CRI has also noted significant opportunities that social robots can offer in education [69, 72, 78].

However, a CRI researcher faces several ethical conundrums in an under-resourced context. The first challenge arises at the level of the context in which the research is situated. In an under-resourced setting, financing problems are inevitable. This poses a dilemma: we are aware of the potential of the social robot but also of the constraints of the context. It is one thing to deploy a robot in an experimental set-up, but quite another to introduce it as a learning aid into a relatively under-resourced school where children can barely afford the monthly school fee of 150 INR (approx. $2). Smakman et al. [77] highlighted the usability of social robots and teacher trainability as issues: introducing technology would place additional burdens on teachers and may require them to adapt their teaching methodology to new demands. In an under-resourced school such as ours, however, the issues are not only of usability (see Sect. 5.1) but also of accessibility of social robots. One could argue that the state should finance the deployment of these robots. However, we are talking of a resource-deprived setting where the government invests less than 6% of its budget in education despite multiple policy recommendations.6 How the necessary funds would be raised is an open question. Governments have been reluctant to increase education budgets, and demands for infrastructure, regular teacher hiring, and a solution to the problem of contract teaching, among others, remain unfulfilled; in this situation, the deployment of social robots is likely to be the last thing on any government’s mind.

This issue is symptomatic of the problems faced when introducing technology in resource-deprived or under-resourced regions. When researchers, as outsiders, try to enter such a setting and introduce technology, the glaring gaps between the context in which the technology is designed and the context into which it is introduced become clear. Here, the under-resourced context itself becomes a site of ethical inquiry. The context raises questions about the policy relevance and practical effectiveness of such research. Although our observations were restricted to classroom interactions, the research cannot avoid engaging with the institutional and structural conditions that affect both the deployment of the robot and its effectiveness in the learning process.

These ethical issues are a product of the digital divide and risk reinforcing it. In general, this divide is both spatial and temporal. Spatially, it manifests as the division between resource-rich and resource-deprived regions, which may lie across national boundaries or within the same boundary. Temporally, it exists as differing degrees of technological introduction in educational settings: regions lag behind one another in the uptake of technology, so what is considered outmoded in one context may be overvalued in another. More specifically, this divide manifests in various forms. The first is technological deployment in classroom settings: while social robots have seen good uptake in resource-rich settings, this is not so in resource-deprived regions. The second follows from the first: deploying robots requires technological literacy and training that most teachers may lack. The third appears in the interaction between the child and the robot: the context gap, which we have already discussed in Sect. 5.1. Suffice it to say that insensitivity to context ends up doing more harm than good.

A critic could point out that the social robot itself does not address the context’s needs but is supplementary to what is of immediate priority. Where the priority is intervention in basic educational infrastructure, social robots may at best be seen as an addition to those needs. Responsible innovation requires catering to the context’s actual needs and addressing the needs that arise from it. This question, though, would require detailed investigation beyond the scope of this study, balancing fiscal considerations against the advantages technology brings to education. Our study does highlight that social robots are a vital pull that can draw out-of-school children to schools. This is a significant need in the Indian context, where dropout rates are high. But in the absence of physical infrastructure, creative strategies, trained teachers, a well-defined and well-delivered curriculum, and sound pedagogy, these efforts will only be partially successful.

Study Limitations

We acknowledge that the sample size of our study was small and the interaction sessions were limited in number. Moreover, the child-robot interaction sessions were mediated by a moderator who was an outsider to the students’ environment. These factors may have affected the analysis and implications of this study. In addition, we were able to recruit only one teacher for the study owing to administrative and logistical constraints. Recruiting more teachers and students and engaging them in the interaction sessions could be the next step in future work. Lastly, because of the global COVID-19 pandemic, we could not proceed with our initial plan to conduct a longitudinal study with the children, which could have yielded richer, more contextual insights.

Conclusion and Future Work

Deploying social robots for primary education in an under-resourced context has limitations with respect to (1) the technology itself (because technology is not easily accessible and there are few choices); (2) the environment (the school setting is not prepared to accommodate such new technologies); and (3) the broader context (under-resourced communities face other challenges that should take priority, e.g., access to education is limited and economy-dependent). We base our findings on an in-the-wild experiment conducted with kindergarten students at a community school in New Delhi, India.

With respect to the technology, we identified four ethical considerations that arise and should be taken into account when deploying such technology in under-resourced environments: (1) language and accent as barriers in pedagogy, (2) (un)intended harms and psychosocial safety, (3) trust and deception, and (4) ecological viability of innovation. Our study shows that Cozmo’s context gap, which is clearly reflected in the accent clash, could fracture the learning process and confuse learners, defeating the primary aim of its deployment by shifting the burden of understanding onto learners and thus making learning more difficult. Despite the initial stumble, it was heartening to witness the students’ zest and engagement. However, by the second week, some participants realized that Cozmo/Raju was not autonomous, even though they had been led to believe it was. This was followed by subtle expressions of confusion, disbelief, and dissatisfaction, which raised questions about the extent to which deception is acceptable. It led us to argue that it may be necessary, and in the learners’ best interest, to treat them as proactive participants and agents in their own knowledge-making.

The contextual grounding of this study compelled us to reckon with the socio-economic realities of the context, which highlighted an additional need to address the psychosocial safety of the participants. Any loopholes in the interaction protocols or design could lead not only to physical harms but also to the often under-addressed psychosocial harms to learners. In addition, access barriers impede their access to healthcare at all levels, leaving them doubly vulnerable to harm. Hence, we conclude that there is an urgent need for an aptly designed regulatory framework with fixed accountability, especially in under-resourced contexts.

The arguments presented in this article pose difficult questions about the role that social robots could play in under-resourced settings that struggle for basic amenities and support for teachers and children. Our findings and reflections suggest that technology will have to be imagined within the social world in which it operates in order to respond to the context’s demands. Social robots cannot be conceived as an overarching solution to social ills, but only as an enabler that can add value when conditions are favorable. However, there cannot be a one-size-fits-all approach, and context needs to play a role from the design stage of these technologies onward.

Overall, we argue that researchers, designers, practitioners, and policymakers should critically and ethically examine the needs and requirements of under-resourced contexts before deploying such technologies there. India is currently a developing nation, and we have seen how widely the internet has penetrated the country. Similarly, in the coming years, we expect more assistive technologies to be employed in India’s education ecosystem, which differs vastly from those of Western societies.

We call upon fellow researchers to conduct sustained and extensive interaction studies that build a corpus of work unpacking the more nuanced details of such interactions across different contexts, resources, cultures, and languages before an ethical framework for future deployments is formulated. While we acknowledge that research on robot ethics is growing, most of this work comes out of, and is situated in, the Global North, notably Europe and the USA. This leads us to highlight the pressing need for more work exploring emerging themes and the formulation and implementation of robust policy frameworks, especially in diverse Global South countries like India, where even basic safeguards such as a data protection law have yet to be afforded to citizens.

Acknowledgements

This article is based on fieldwork from a previous collaboration among Jainendra Shukla, Divyanshu Kumar Singh, Grace Eden, and Sumita Sharma, who contributed to the research design and data collection. The authors acknowledge Grace Eden’s and Sumita Sharma’s contributions during data collection and analysis, and thank them for granting permission to reuse the collected video data and the related analysis for this article. The authors also thank Sumita Sharma for connecting them to Deepalaya, the NGO where the study was conducted, and Tanya Budhiraja for assistance during the fieldwork and contributions to the video sessions.

Biographies

Divyanshu Kumar Singh

is an incoming doctoral student at the Department of Information Science at the University of Colorado Boulder. He completed his B.Tech in Computer Science and Engineering at Indraprastha Institute of Information Technology Delhi, where he was part of the Human-Machine Interaction research group. His research interests lie at the intersection of Critical Computing, Social Justice, Human-Computer Interaction and Child-Robot Interaction.

Manohar Kumar

is an Assistant Professor at the Department of Social Sciences and Humanities and a member of the Infosys Centre for Artificial Intelligence, Indraprastha Institute of Information Technology (IIIT), Delhi. His work lies at the intersection of moral and political philosophy, applied ethics, and ethics of AI. He is the co-author of ‘Speaking Truth to Power. A Theory of Whistleblowing’ (Springer) and is the PI of ‘Ethics of Cognitive Computing and Social Sensing’, supported by the Technology Innovation Hub Anubhuti at IIIT Delhi. He has a PhD from LUISS University, Rome, and has held Postdoctoral Fellowships at the Indian Institute of Technology Delhi and the Aix-Marseille School of Economics, Aix-Marseille University.

Eduard Fosch-Villaronga

PhD MA LLM is Assistant Professor at the eLaw Center for Law and Digital Technologies at Leiden University. Eduard investigates legal and regulatory aspects of robots and AI, focusing on healthcare, diversity, governance, and transparency. Eduard has published the books ‘AI for Healthcare Robotics’ (CRC Press) and ‘Robots, Healthcare, and the Law. Regulating Automation in Personal Care’ (Routledge) and is the PI of PROPELLING, an FSTP from the H2020 Eurobench project using testbeds for evidence-based policymaking. He is also the co-leader of the project Gendering Algorithms, an interdisciplinary interfaculty pilot project at Leiden University that explores the functioning, effects, and governance policies of AI-based gender classification systems. In 2020, Eduard served the European Commission in the Sub-Group on AI, connected products, and other new challenges in product safety to the Consumer Safety Network (CSN) to revise the General Product Safety Directive. Eduard holds an Erasmus Mundus Joint Doctorate in Law, Science, & Technology, coordinated by the University of Bologna (2017), an LL.M. from the University of Toulouse (2012), an M.A. from the Autonomous University of Madrid (2013), and an LL.B. from the Autonomous University of Barcelona (2011).

Deepa Singh

is a doctoral researcher in Artificial Intelligence Ethics at the Department of Philosophy, University of Delhi, where she is an Indian Council of Philosophical Research Fellow. Her doctoral research focuses on contextualizing and re-imagining Fairness, Accountability and Transparency (FAccT) in AI/ML. Her work lies at the intersection of Ethics, Artificial Intelligence, and Human-Computer Interaction. She was recognised as one of the “100 Brilliant Women in AI Ethics” in 2021. In her research, she actively draws on her past experiences as a mental health advocate and a social entrepreneur working at the grassroots in Uttar Pradesh.

Jainendra Shukla

is the founder and director of the Human-Machine Interaction research group and an Assistant Professor at the Department of Computer Science and Engineering, jointly affiliated with the Department of Human-Centered Design at IIIT-Delhi. He investigates how empowering social robots and machines with adaptive interaction abilities can improve the quality of life in health and social care. He has been awarded the startup research grant by SERB, DST, and the research excellence award at IIIT-Delhi. He received the prestigious Industrial Doctorate research grant from the Government of Spain in 2014. He received a B.E. degree in Information Technology (2009) from the University of Mumbai in First Class with Distinction and pursued his M.Tech. degree in Information Technology with a specialization in Robotics (2012) at the Indian Institute of Information Technology, Allahabad (IIIT-A). He received his Ph.D. with excellent grades, Industrial Doctorate Distinction, and International Doctorate Distinction from Universitat Rovira i Virgili (URV), Spain, in 2018.

Funding

This research work is partially supported by the Infosys Centre for Artificial Intelligence, IIIT-Delhi (Ref. ID.: 2020/CAI/P7/SRP-164). In addition, Jainendra Shukla is partially supported by the Start-up Research Grant (Ref. ID.: RG/2020/002454) of the Science and Engineering Research Board, Government of India, and by the Center for Design and New Media (sponsored by Tata Consultancy Services, A TCS Foundation Initiative). Deepa Singh is supported by the Indian Council of Philosophical Research Fellowship (F. No. 1-54/2020/P&R/ICPR).

Data Availability

The data used in this study cannot be made available, as per the guidelines of the Institutional Review Board (IRB) of Indraprastha Institute of Information Technology Delhi.

Declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical Approval

The user study was approved by the Institutional Review Board (IRB) of the Indraprastha Institute of Information Technology Delhi (IIITD/IRB/9/2/2019-1).

Footnotes

https://uidai.gov.in/ (Accessed on 03/01/2021).

The Socio-Cultural Theory developed by Lev Vygotsky [88] is a prominent theory highlighting the importance of social contexts and societies that shape what children think and how they think. Through various interactions in their particular contexts, children acquire cultural values, beliefs, and problem-solving skills through collaborative dialogue. Children are proactively involved in their learning and development from an early stage and make meaning of the world around them mediated by the community.

Bruner’s ‘Social Interactionist Theory’ [23] built on Vygotsky’s foundational work and took it forward, emphasizing a child’s social surroundings. Bruner asserted that adults could better facilitate this process through ‘scaffolding’ [23], i.e., helping children learn through well-organized interactions.

In the Indian context, various studies have noted that proficiency in the second language (in this case, English) is ‘explained not only by motivational and attitudinal variables but also by a variety of social, cultural, and demographic variables, including claimed control over different languages, patterns of language use, exposure to English, use of English in the family, the type of school, size of the community, anxiety levels, etc.’ (National Focus Group Position Paper on Teaching of Indian Language [54], pp. 4–5; see also Khanna and Agnihotri 1982 [44], 1984 [45]).

Teacher training is a major concern for under-resourced schools. Most government school teachers in India are poorly trained, an issue that has been repeatedly raised in policy documents such as Learning Without Burden 2003 [57], the National Curriculum Framework 2005 [55], and the National Curriculum Framework for Teacher Education 2009 [56].

The figure of 6% of GDP for education has been recommended since the DS Kothari Commission of 1966. For a discussion of allocating 6% of GDP to education, see Tilak [83].

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history

3/23/2023

A Correction to this paper has been published: 10.1007/s12369-023-00995-1

Contributor Information

Divyanshu Kumar Singh, Email: divyanshu17048@iiitd.ac.in.

Manohar Kumar, Email: manohar.kumar@iiitd.ac.in.

Eduard Fosch-Villaronga, Email: e.fosch.villaronga@law.leidenuniv.nl.

Deepa Singh, Email: deepa.asingh@gmail.com.

Jainendra Shukla, Email: jainendra@iiitd.ac.in.

References

- 1. (2018) Artificial Intelligence in India: Hype or Reality. https://www.pwc.in/research-insights/2018/advance-artificial-intelligence-for-growth-leveraging-ai-and-robotics-for-india-s-economic-transformation.html, (Accessed on 03/01/2021)

- 2. (2018) Citizen AI: artificial intelligence for good - Accenture Tech Vision 2018. https://www.accenture.com/us-en/insight-explainable-citizen-ai, (Accessed on 03/01/2021)

- 3. (2018) Data protection committee - report | Ministry of Electronics and Information Technology, Government of India. https://www.meity.gov.in/content/data-protection-committee-report, (Accessed on 03/01/2021)

- 4. (2018) National strategy on artificial intelligence | NITI Aayog. https://niti.gov.in/national-strategy-artificial-intelligence, (Accessed on 03/01/2021)

- 5. (2018) Report of task force on artificial intelligence | Department for Promotion of Industry and Internal Trade | MoCI | Govt. of India. https://dipp.gov.in/whats-new/report-task-force-artificial-intelligence, (Accessed on 03/01/2021)

- 6. (2020) Consumer Safety Network opinion of the sub-group on artificial intelligence (AI), connected products and other new challenges in product safety to the Consumer Safety Network. (Accessed on 02/26/2021)

- 7. (2020) Growing up with AI - a call for further research and a precautionary approach. Response to the policy guidance on AI for children (draft 1.0, September 2020) presented by UNICEF and the Ministry for Foreign Affairs of Finland. Leiden Law Blog, October 20. https://leidenlawblog.nl/articles/growing-up-with-ai, (Accessed on 02/26/2021)

- 8. Ahmad M, Mubin O, Orlando J (2017) A systematic review of adaptivity in human-robot interaction. Multimodal Technol Inter 1(3):14. doi: 10.3390/mti1030014

- 9. Amodei D, Olah C, Steinhardt J, Christiano P, Schulman J, Mané D (2016) Concrete problems in AI safety. arXiv preprint arXiv:1606.06565

- 10. Ashwini B, Narayan V, Bhatia A, Shukla J (2021) Responsiveness towards robot-assisted interactions among pre-primary children of Indian ethnicity. In: 2021 30th IEEE international conference on robot & human interactive communication (RO-MAN), IEEE, pp 619–625

- 11. Baillie L, Breazeal C, Denman P, Foster ME, Fischer K, Cauchard JR (2019) The challenges of working on social robots that collaborate with people. In: Extended abstracts of the 2019 CHI conference on human factors in computing systems, pp 1–7

- 12. Baxter P, Kennedy J, Senft E, Lemaignan S, Belpaeme T (2016) From characterising three years of HRI to methodology and reporting recommendations. In: 2016 11th ACM/IEEE international conference on human-robot interaction (HRI), IEEE, pp 391–398

- 13. Belpaeme T, Kennedy J, Ramachandran A, Scassellati B, Tanaka F (2018) Social robots for education: a review. Science Robotics 3(21)

- 14. Belpaeme T, Vogt P, Van den Berghe R, Bergmann K, Göksun T, De Haas M, Kanero J, Kennedy J, Küntay AC, Oudgenoeg-Paz O, et al (2018) Guidelines for designing social robots as second language tutors. Int J Social Robot 10(3):325–341. doi: 10.1007/s12369-018-0467-6

- 15. van den Berghe R, Verhagen J, Oudgenoeg-Paz O, Van der Ven S, Leseman P (2019) Social robots for language learning: a review. Rev Edu Res 89(2):259–295. doi: 10.3102/0034654318821286

- 16. Bharatharaj J, Huang L, Mohan RE, Al-Jumaily A, Krägeloh C (2017) Robot-assisted therapy for learning and social interaction of children with autism spectrum disorder. Robotics 6(1):4. doi: 10.3390/robotics6010004

- 17. Bharatharaj J, Huang L, Krägeloh C, Elara MR, Al-Jumaily A (2018) Social engagement of children with autism spectrum disorder in interaction with a parrot-inspired therapeutic robot. Proc Comput Sci 133:368–376. doi: 10.1016/j.procs.2018.07.045

- 18. Bora GS, Narain TA, Sharma AP, Mavuduru RS, Devana SK, Singh SK, Mandal AK (2020) Robot-assisted surgery in India: a SWOT analysis. Indian J Urol IJU J Urol Soc India 36(1):1. doi: 10.4103/iju.IJU_220_19

- 19. Borenstein J, Wagner AR, Howard A (2018) Overtrust of pediatric health-care robots: a preliminary survey of parent perspectives. IEEE Robot Autom Mag 25(1):46–54. doi: 10.1109/MRA.2017.2778743

- 20. Braun V, Clarke V (2006) Using thematic analysis in psychology. Qualitative Res Psychol 3(2):77–101. doi: 10.1191/1478088706qp063oa

- 21. Breazeal C (2011) Social robots for health applications. In: 2011 Annual international conference of the IEEE engineering in medicine and biology society, IEEE, pp 5368–5371