Abstract

Objective:

Involuntary subject motion is the main source of artifacts in weight-bearing cone-beam CT of the knee. To achieve image quality sufficient for clinical diagnosis, this motion needs to be compensated. We propose to use inertial measurement units (IMUs) attached to the leg for motion estimation.

Methods:

We perform a simulation study using real motion recorded with an optical tracking system. Three IMU-based correction approaches are evaluated, namely rigid motion correction, non-rigid 2D projection deformation, and non-rigid 3D dynamic reconstruction. We present an initialization process for the IMU pose and velocity based on the system geometry. Using an IMU noise simulation, we investigate the applicability of the proposed methods in real applications.

Results:

All proposed IMU-based approaches correct motion at least as well as a state-of-the-art marker-based approach. The structural similarity index and the root mean squared error between motion-free and motion corrected volumes are improved by 24-35% and 78-85%, respectively, compared with the uncorrected case. The noise analysis shows that the noise levels of commercially available IMUs need to be improved by a factor of 10⁵, which is currently only achieved by specialized hardware that is not robust enough for the application.

Conclusion:

Our simulation study confirms the feasibility of this novel approach and defines improvements necessary for a real application.

Significance:

The presented work lays the foundation for IMU-based motion compensation in cone-beam CT of the knee and provides valuable insights for future developments.

Keywords: CT reconstruction, Inertial measurements, Motion compensation, Noise, Non-rigid motion

I. Introduction

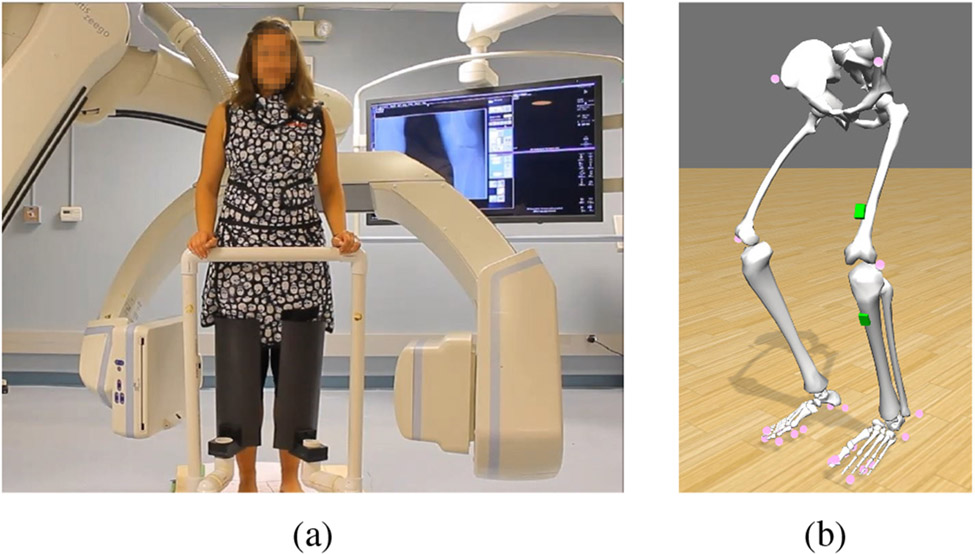

In clinical practice, the most commonly used imaging technique for analyzing the knee joints of osteoarthritis patients is the standing 2D radiograph. The advantage of 3D computed tomography (CT) images over 2D radiographs is that the anatomical structures of the joints can be visualized individually instead of as a superimposition of all components. Furthermore, flexible C-arm cone-beam CT (CBCT) systems and dedicated extremity scanners allow imaging under natural loading conditions similar to standing radiographs [1], [2]. For example, systems by Curvebeam (PedCAT, Curvebeam LLC) and Planmed (Verity, Planmed Oy) are already used in everyday clinical practice to image the foot and ankle in a standing position, thus enabling a mechanical analysis of the joint [3], [4]. Patient motion is only a minor problem in weight-bearing imaging of the ankle because the foot is in direct contact with the ground, so little motion is expected. In contrast, when imaging the knee joints with weight-bearing CBCT as visualized in Fig. 1a, postural sway of the subjects while standing poses a greater challenge [5]. The swaying motion leads to blurring and streaking artifacts in the 3D reconstructions, sometimes to such an extent that the images cannot be used for further analysis [6], [7]. Since restraining the subject would defeat the purpose of imaging in a natural stance, the motion during the scan has to be estimated and corrected in order to obtain artifact-free images.

Fig. 1:

(a) Setup of a weight-bearing C-arm cone-beam CT scan of the knees, (b) Biomechanical model with virtual reflective markers on the legs (pink spheres) and IMUs on thigh and shin (green boxes).

Previous approaches are either image-based or use an external signal or marker in order to correct for motion. 2D/3D registration showed very good motion compensation capabilities, but requires prior bone segmentations and is computationally expensive [8]. The same limitations hold for an approach based on a penalized image sharpness criterion [9]. By leveraging the epipolar consistency of CT scans, the translation, but not the rotation, of the knees during a CT scan was estimated [10]. Bier et al. [11] proposed to estimate motion by tracking anatomical landmarks in the projection images using a neural network. So far, their approach has not been applied for motion compensation and is only reliable if no other objects are present in the images. An investigation on the practicality of using range cameras for motion compensated reconstruction showed promising results on simulated data [12]. An established and effective method for motion compensation in weight-bearing imaging of the knee is based on small metallic markers attached to the leg and tracked in the projection images to iteratively estimate 3D motion [13], [14]. However, placing the markers is tedious, and they produce metal artifacts in areas of interest in the resulting images.

In C-arm CBCT, inertial measurement units (IMUs) containing an accelerometer and a gyroscope have until now been applied for navigation [15] and calibration [16] purposes. Our recent work was the first to propose the use of IMUs for motion compensation in weight-bearing CBCT of the knee [5]. We evaluated the feasibility of using the measurements of one IMU placed on the shin of the subject for rigid motion compensation in a simulation study. However, since the actual movement during the scan is non-rigid, not all artifacts could be resolved with the rigid correction approach. For this reason, we now investigate non-rigid motion compensation based on 2D or 3D deformation using signals recorded by two IMUs placed on the shin and the thigh. Furthermore, a method to estimate the initial pose and velocity of the sensors is presented. These two parameters are needed for motion estimation and were assumed to be known in the aforementioned publication [5]. Another drawback of our previous publication is that we only simulated optimal IMU signals and neglected possible measurement errors. In order to assess the applicability of our proposed methods in a real setting, and as a third contribution, we now analyze how sensor noise added to the optimal IMU signals influences the motion compensation capabilities.

In this article, we present a simulation study similar to the one in our previous publication, so some content of Section II-A is closely related to Maier et al. [5]. Furthermore, the previously published rigid motion estimation approach is repeated for better comprehensibility.

II. Materials and methods

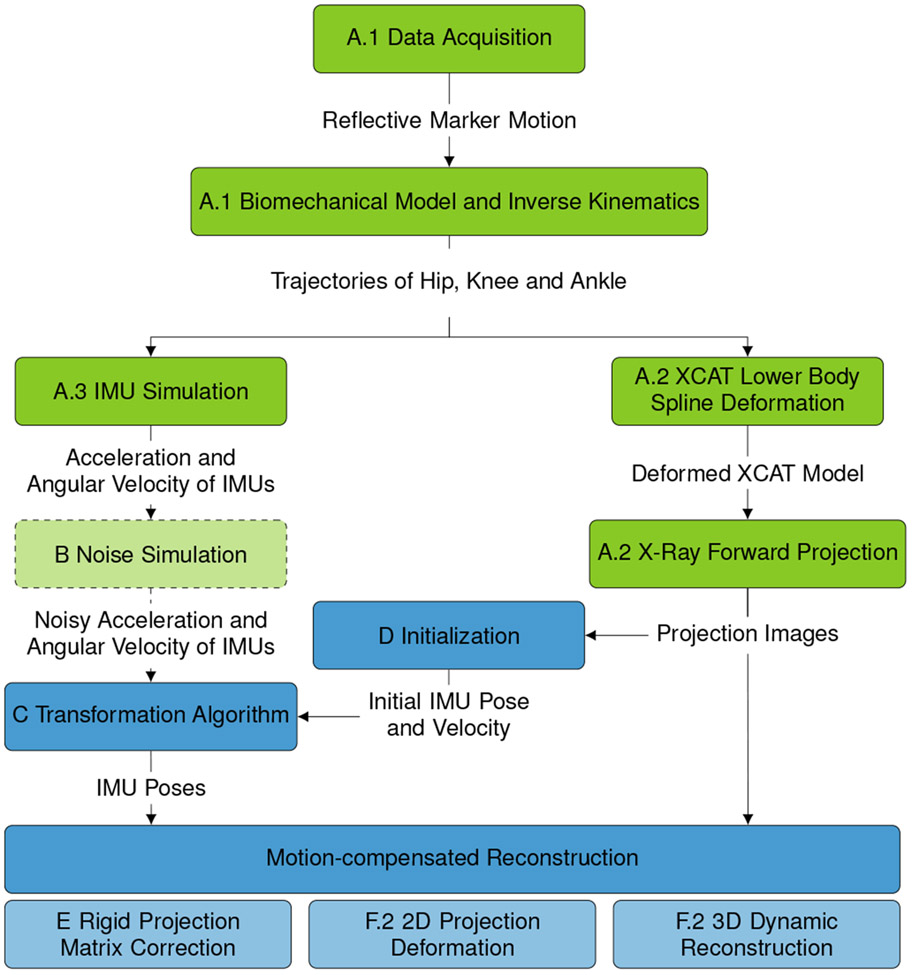

The whole processing pipeline of the presented simulation study is shown in Fig. 2, where black font describes each processing step and green font the respective output. All steps shaded in gray relate to the simulation and are described in Section II-A, while all steps shaded in green refer to the proposed data processing presented in Sections II-B to II-F.

Fig. 2:

Processing Pipeline presented in section II. Green boxes describe all parts of the simulation study presented in section II-A. Blue boxes refer to the proposed data processing, which is detailed in Sections II-B to II-F.

The simulation contains the following steps: The motion of standing subjects is recorded with an optical motion capture system and used to animate a biomechanical model to obtain the trajectories of hip, knees and ankles (II-A.1). These positions are then used in two ways: First, the lower body of a numerical phantom is deformed to mimic the subject motion and a motion-corrupted C-arm CBCT scan is simulated (II-A.2). Second, the signals of IMUs placed on the model's leg are computed (II-A.3).

In Section II-B, measurement noise is added to the optimal sensor signals. These noisy signals are later used to analyze the influence of measurement errors on the motion correction with IMUs.

Then, the proposed IMU-based approaches for motion compensated reconstruction of the motion-corrupted CT scan are described: From the IMU measurements, the position and orientation, i.e. the pose, of the IMUs over time are computed (II-C). For this step, the initial sensor pose and velocity need to be known and are estimated from the first two projection images (II-D). The computed poses are then used for three different motion correction approaches compared in this article. First, rigid motion matrices are computed from the IMU poses and used to adapt the projection matrices for reconstruction (II-E). Second, the projection images are non-rigidly deformed before 3D reconstruction (II-F.2). Third, the sensor poses are incorporated in the reconstruction algorithm for a 3D non-rigid deformation (II-F.3).

A. Simulation

1). Data acquisition and biomechanical model:

In order to create realistic simulations, real motion of standing persons is acquired. Seven healthy subjects are recorded in three settings of 20 seconds duration: holding a squat of 30 degrees and 60 degrees knee flexion, and actively performing squats. Seven reflective markers are attached to each subject’s sacrum, to the right and left anterior superior iliac spine, to the right and left lateral epicondyle of the knee, and to the right and left malleolus lateralis. The marker positions are tracked with a 3D optical motion tracking system (Vicon, Oxford, UK) at a sampling rate of 120 Hz.

Subsequently, in the software OpenSim [17], the marker positions of the active squatting scan of each subject are used to scale a biomechanical model of the human lower body [18] to the subject’s anthropometry. The model with attached virtual markers shown in pink is displayed in Fig. 1b.

The scaled model is then animated twice per subject by computing the inverse kinematics using the marker positions of the 30 degrees and 60 degrees squatting scans [19]. The inverse kinematics computation results in the generalized coordinates that best represent the measured motion. These generalized coordinates describe the complete model motion as the global position and orientation of the pelvis and the angles of all leg joints. Before further processing, jumps in the data that occur due to noise are removed, and the signals are filtered with a second order Butterworth filter with a cutoff frequency of 6 Hz in order to remove system noise. Since the model is scaled to the subject's anthropometry, the generalized coordinates can be used to compute the trajectories of hip, knee and ankle joints. These joint trajectories are the input to all further steps of the data simulation.
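A minimal NumPy sketch of the smoothing step follows. The text only specifies a second order Butterworth low-pass with a 6 Hz cutoff at the 120 Hz capture rate; the zero-phase forward-backward application (similar in spirit to `scipy.signal.filtfilt`, but without its edge padding) and the helper names `butter2_lowpass_coeffs`, `iir_filter` and `zero_phase_lowpass` are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def butter2_lowpass_coeffs(fc, fs):
    """Second order Butterworth low-pass via the bilinear transform with pre-warping."""
    k = np.tan(np.pi * fc / fs)
    norm = 1.0 / (1.0 + np.sqrt(2.0) * k + k * k)
    b = np.array([k * k, 2.0 * k * k, k * k]) * norm
    a = np.array([1.0, 2.0 * (k * k - 1.0) * norm, (1.0 - np.sqrt(2.0) * k + k * k) * norm])
    return b, a

def iir_filter(b, a, x):
    """Direct-form difference equation with zero initial conditions."""
    y = np.zeros(len(x))
    for n in range(len(x)):
        acc = b[0] * x[n]
        if n >= 1:
            acc += b[1] * x[n - 1] - a[1] * y[n - 1]
        if n >= 2:
            acc += b[2] * x[n - 2] - a[2] * y[n - 2]
        y[n] = acc
    return y

def zero_phase_lowpass(x, fc=6.0, fs=120.0):
    """Filter forward, then backward: no phase shift, squared magnitude response."""
    b, a = butter2_lowpass_coeffs(fc, fs)
    forward = iir_filter(b, a, np.asarray(x, float))
    return iir_filter(b, a, forward[::-1])[::-1]
```

Because the signal passes through the filter twice, the magnitude response is squared, so the effective −3 dB point lies somewhat below 6 Hz; a single causal pass would keep the nominal cutoff but delay the joint trajectories. The first and last few hundred milliseconds contain start-up transients in this simplified version.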

2). XCAT deformation and CT projection generation:

We generate a virtual motion-corrupted CT scan using the moving 4D extended cardiac-torso (XCAT) phantom [20]. The legs of the numerical phantom consist of the tibia, fibula, femur and patella, including bone marrow, surrounded by body soft tissue. All structures contained in the phantom have material-specific properties. Their shapes are defined by 3D control points spanning non-uniform rational B-splines. By changing the positions of these control points, the structures of the XCAT phantom can be non-rigidly deformed.

In the default XCAT phantom the legs are extended. To simulate a standing C-arm CT scan, the phantom needs to take on the squatting pose of the recorded subjects, which varies over time. For this purpose, the positions of the XCAT spline control points are changed based on the hip, knee, and ankle positions of the biomechanical model. The deformation process is described in detail by Choi et al. [13].

Then, a horizontal circular CT scan of the knees is simulated. As in a real setting, 248 projections are generated with an angular increment of 0.8 degrees between projections, corresponding to a sampling rate of 31 Hz. The virtual C-arm rotates on a trajectory with 1198 mm source detector distance and 780 mm source isocenter distance. The detector has a size of 620 × 480 pixels with an isotropic pixel resolution of 0.616 mm. In a natural standing position, the knees are too far apart to both fit on the detector, therefore the rotation center is placed in the center of the left leg of the deformed XCAT phantom. Then, forward projections are created as described in Maier et al. [21]. Since the subject of this study is to analyze the motion compensation capability of IMUs, CBCT artifacts other than motion are not included in the simulation.
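To make the stated geometry concrete, the following sketch builds ideal 3×4 projection matrices for the circular short scan. Assumptions not given in the text: the rotation axis is the vertical y axis (matching the gravity vector g = (0, −9.80665, 0)⊤ used later), the principal point lies at the detector center, and the function names are hypothetical; a real system would use its calibrated matrices instead. Note that 248 projections at 0.8 degree increments span 247 × 0.8 = 197.6 degrees, acquired in roughly 8 s at 31 Hz.

```python
import numpy as np

N_PROJ, ANG_STEP_DEG, FRAME_RATE = 248, 0.8, 31.0
SDD, SID = 1198.0, 780.0            # source-detector / source-isocenter distance [mm]
DET_U, DET_V, PIXEL = 620, 480, 0.616  # detector columns, rows, pixel size [mm]

def projection_matrix(theta):
    """Ideal 3x4 pinhole matrix for a source rotating about the vertical (y) axis."""
    d = -np.array([np.sin(theta), 0.0, np.cos(theta)])   # viewing direction
    source = -SID * d                                     # source position
    y_c = np.array([0.0, -1.0, 0.0])                      # detector rows point down
    x_c = np.cross(y_c, d)                                # detector columns
    R = np.vstack([x_c, y_c, d])
    f = SDD / PIXEL                                       # focal length in pixels
    K = np.array([[f, 0.0, DET_U / 2], [0.0, f, DET_V / 2], [0.0, 0.0, 1.0]])
    return K @ np.hstack([R, (-R @ source)[:, None]])

def project(P, X):
    """Project (N, 3) points in mm to (N, 2) detector pixel coordinates."""
    xh = np.hstack([X, np.ones((len(X), 1))]) @ P.T
    return xh[:, :2] / xh[:, 2:3]

angles = np.deg2rad(ANG_STEP_DEG) * np.arange(N_PROJ)
times = np.arange(N_PROJ) / FRAME_RATE     # acquisition time of each projection [s]
P_all = [projection_matrix(th) for th in angles]
```

A point at the isocenter always projects to the detector center, and a vertical offset at the isocenter is magnified by SDD/SID ≈ 1.54 on the detector.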

3). Simulation of IMU measurements:

The trajectories of hip, knees and ankles computed using the biomechanical model are used to simulate the measurements of IMUs placed on the leg of the model. IMUs are commonly used for motion analysis in sports and movement disorders [22]. They are low-cost, small and lightweight devices that measure their acceleration and angular velocity on three perpendicular axes. Besides the motion signal, the accelerometer measures the earth's gravitational field, distributed over its three axes depending on its orientation. We virtually place two such sensors, one on the shin 14 cm below the left knee joint and one on the thigh 25 cm below the hip joint, each aligned with the respective body segment (Fig. 1b). In a future real application, sensors in these positions would be visible in the projections, as needed for the initialization (II-D). At the same time, they are situated far enough from the knee joint along the CBCT rotation axis that their metal components do not cause artifacts in the region of interest.

The simulated acceleration a(t) and angular velocity ω(t) at time point t are computed as described by Bogert et al. and Desapio et al. [23], [24]:

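$$\mathbf{a}(t) = \mathbf{R}(t)^\top\left(\ddot{\mathbf{r}}_{Seg}(t) + \ddot{\mathbf{R}}(t)\,\mathbf{p}_{Sen} - \mathbf{g}\right) \tag{1}$$

$$\tilde{\boldsymbol{\omega}}(t) = \mathbf{R}(t)^\top\,\dot{\mathbf{R}}(t) \tag{2}$$

$$\boldsymbol{\omega}(t) = \left(\tilde{\omega}_{32}(t),\ \tilde{\omega}_{13}(t),\ \tilde{\omega}_{21}(t)\right)^\top \tag{3}$$

where ω̃(t) is the skew-symmetric angular velocity matrix and ω̃_kl(t) its entry in row k and column l.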

All parameters required in these equations are obtained by performing forward kinematics of the biomechanical model. The 3×3 rotation matrix R(t) describes the orientation of the sensor at time point t in the global coordinate system, and Ṙ(t) and R̈(t) are its first and second order derivatives with respect to time. The position of the segment the sensor is mounted on in the global coordinate system at time point t is described by rSeg(t), with r̈Seg(t) being its second order derivative. pSen is the constant position of the sensor in the local coordinate system of that segment. Parameter g = (0, −9.80665, 0)⊤ is the global gravity vector in m/s².
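Equations (1)-(3) can be evaluated numerically from sampled kinematics. This sketch assumes the sensor axes are aligned with the segment, so the same R(t) serves as segment and sensor orientation, and it approximates the time derivatives with central finite differences (`np.gradient`); the model's forward kinematics could supply them analytically instead. The function name `simulate_imu` is hypothetical.

```python
import numpy as np

G_VEC = np.array([0.0, -9.80665, 0.0])   # global gravity vector g [m/s^2]

def simulate_imu(R, r_seg, p_sen, fs):
    """Ideal accelerometer [m/s^2] and gyroscope [rad/s] signals from kinematics.

    R: (T, 3, 3) sensor orientation, r_seg: (T, 3) segment position,
    p_sen: (3,) sensor offset in the segment frame, fs: sampling rate [Hz].
    """
    dt = 1.0 / fs
    R_dot = np.gradient(R, dt, axis=0)
    R_ddot = np.gradient(R_dot, dt, axis=0)
    r_ddot = np.gradient(np.gradient(r_seg, dt, axis=0), dt, axis=0)
    f_global = r_ddot + R_ddot @ p_sen - G_VEC           # specific force, global frame
    acc = np.einsum("tji,tj->ti", R, f_global)            # eq. (1): R^T (...)
    omega_skew = np.einsum("tji,tjk->tik", R, R_dot)      # eq. (2): R^T dR/dt
    gyro = np.stack([omega_skew[:, 2, 1],                 # eq. (3)
                     omega_skew[:, 0, 2],
                     omega_skew[:, 1, 0]], axis=1)
    return acc, gyro
```

For a sensor at rest, (1) yields +9.80665 m/s² on the axis pointing up, because the accelerometer measures the specific force, not the gravity vector itself.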

B. IMU noise simulation

The IMU signal computation assumes a perfect IMU that measures without error. In a real application, however, measurement errors can be large enough to prevent effective motion compensation. For example, Kok et al. [25] showed that integrating the signals of a stationary IMU over ten seconds leads to orientation and position errors of multiple degrees and meters, respectively.

The most prominent error sources in IMUs leading to these large deviations are random measurement noise and an almost constant bias [26]. In this study, we focus on the analysis of the unpredictable sensor noise. Commercially available consumer IMUs have noise densities that are acceptable for analyzing larger motions. For example, the commercially available BMI160 sensor (Bosch Sensortec GmbH, Reutlingen, Germany) has an output noise density of 180 μg/√Hz and 0.007 °/s/√Hz and a root mean square (RMS) noise of 1.8 mg and 0.07 °/s at 200 Hz [27] for the accelerometer and gyroscope, respectively. However, our data show that the signals produced by a standing swaying motion have amplitudes in the range of 0.3 mg and 0.02 °/s, respectively. This means that when measuring with an off-the-shelf sensor, the signal would be completely masked by noise. For this reason, we investigate the noise level improvement necessary to use IMUs for motion compensation in weight-bearing CT imaging.

We simulate white Gaussian noise signals of different RMS levels and add them to the simulated acceleration a(t) and angular velocity ω(t). Starting with the RMS values of the aforementioned Bosch sensor, the noise level is divided by successive factors of ten, down to a factor of 10⁵. The accelerometer and gyroscope noise levels are decreased independently. In the following, we use the notation $f_a$ and $f_g$ for the exponents, i.e., the accelerometer and gyroscope RMS values are divided by $10^{f_a}$ and $10^{f_g}$, respectively. The noisy IMU signals are then used to compute rigid motion matrices for motion compensation as explained in Sections II-C and II-E.
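A minimal sketch of the noise injection, assuming zero-mean white Gaussian noise whose standard deviation equals the target RMS value, signals in SI units (1 mg = 9.80665 × 10⁻³ m/s², angular velocity in rad/s), and the BMI160 RMS figures quoted above as the starting level. The exhaustive grid over both exponents and the name `add_imu_noise` are illustrative assumptions; the text only states that the two noise levels are reduced independently.

```python
import numpy as np

G0 = 9.80665                        # 1 g in m/s^2
ACC_RMS = 1.8e-3 * G0               # BMI160 accelerometer RMS noise: 1.8 mg in m/s^2
GYRO_RMS = np.deg2rad(0.07)         # BMI160 gyroscope RMS noise: 0.07 deg/s in rad/s

def add_imu_noise(acc, gyro, f_a, f_g, rng):
    """Add zero-mean white Gaussian noise with RMS divided by 10**f_a and 10**f_g."""
    noisy_acc = acc + rng.normal(0.0, ACC_RMS / 10.0**f_a, size=np.shape(acc))
    noisy_gyro = gyro + rng.normal(0.0, GYRO_RMS / 10.0**f_g, size=np.shape(gyro))
    return noisy_acc, noisy_gyro

# All combinations of the exponents, from 0 (BMI160 level) to 5 (10^5 times lower)
levels = [(f_a, f_g) for f_a in range(6) for f_g in range(6)]
```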

Note that the noise influence is evaluated independently of the IMU-based motion compensation methods. All motion compensation methods presented in the following sections are first evaluated on the noise-free signals. Afterwards, we perform rigid motion compensation with noisy IMU signals to investigate the influence of noise on the applicability of IMUs for motion compensation.

C. Transformation algorithm

The following descriptions are based on Maier et al. [5] and are required for all IMU-based motion compensation approaches presented in this article.

The IMU measures motion in its local coordinate system; however, motion compensation requires the motion in the global coordinate system of the CBCT scan. The pose of the IMU S(t), i.e., its orientation and position in the global coordinate system at each frame t, is described by the affine matrix

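$$\mathbf{S}(t) = \begin{pmatrix} \mathbf{R}(t) & \mathbf{r}(t) \\ \mathbf{0}^\top & 1 \end{pmatrix} \tag{4}$$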

where R(t) is a 3×3 rotation matrix, r(t) is a 3×1 translation vector, and 0 is the 3×1 zero-vector. The IMU pose can be updated for each subsequent frame using the affine global pose change matrix Δg(t):

$$\mathbf{S}(t+1) = \boldsymbol{\Delta}_g(t)\,\mathbf{S}(t) \tag{5}$$

This global change Δg(t) can be computed by transforming the local change in the IMU coordinate system Δl(t) to the global coordinate system using the current IMU pose:

$$\boldsymbol{\Delta}_g(t) = \mathbf{S}(t)\,\boldsymbol{\Delta}_l(t)\,\mathbf{S}(t)^{-1} \tag{6}$$

Thus, if the initial pose S(t = 0) is known, the problem is reduced to estimating the local pose change Δl(t) in the IMU coordinate system, which is described in the following paragraphs.

The gyroscope measures the orientation change over time ω(t) on the three axes of the IMU’s local coordinate system which can be directly used to rotate the IMU from frame to frame. The measured acceleration a(t), however, needs to be processed to obtain the position change over time. First, the gravity measured on the IMU’s three axes is removed based on its global orientation. For this purpose, the angular velocity ω(t) is rewritten to 3×3 rotation matrices G(t) and used to update the global orientation of the sensor R(t). This orientation can then be used to obtain the gravity vector g(t) in the local coordinate system for each frame t:

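$$\mathbf{R}(t+1) = \mathbf{R}(t)\,\mathbf{G}(t) \tag{7}$$

$$\mathbf{g}(t) = \mathbf{R}(t)^\top\,\mathbf{g} \tag{8}$$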

The sensor’s local velocity v(t), i.e. its position change over time, is then computed as the integral of the gravity-free acceleration considering the sensor’s orientation changes:

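$$\mathbf{v}(t+1) = \mathbf{G}(t)^\top\left(\mathbf{v}(t) + \left(\mathbf{a}(t) + \mathbf{g}(t)\right)\Delta t\right) \tag{9}$$

where Δt denotes the IMU sampling interval. With these computations, the desired local pose change of the IMU Δl(t) for each frame t is expressed as an affine matrix containing the local rotation change and position change:

$$\boldsymbol{\Delta}_l(t) = \begin{pmatrix} \mathbf{G}(t) & \mathbf{v}(t)\,\Delta t \\ \mathbf{0}^\top & 1 \end{pmatrix} \tag{10}$$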

Note that the initial pose S(t = 0) and velocity v(t = 0) need to be known or estimated in order to apply this transformation process.
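The transformation algorithm can be sketched as a simple forward-Euler loop. Assumptions: G(t) is formed from ω(t)Δt with the Rodrigues formula, acceleration is given in m/s² and angular velocity in rad/s, and the helper names are hypothetical. The sketch follows (7)-(10) together with the recursion S(t + 1) = S(t)Δl(t), which results from inserting the global change into the pose update.

```python
import numpy as np

G_VEC = np.array([0.0, -9.80665, 0.0])   # global gravity vector g [m/s^2]

def rotation_from_gyro(w, dt):
    """G(t): rotation by angle |w| * dt about axis w / |w| (Rodrigues formula)."""
    theta = np.linalg.norm(w) * dt
    if theta < 1e-12:
        return np.eye(3)
    k = w / np.linalg.norm(w)
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1 - np.cos(theta)) * K @ K

def integrate_poses(acc, gyro, S0, v0, dt):
    """Return the poses S(0..T) of an IMU from local acc [m/s^2] and gyro [rad/s]."""
    poses = [np.array(S0, float)]
    v = np.asarray(v0, float)                          # local velocity v(t)
    for a_t, w_t in zip(acc, gyro):
        R = poses[-1][:3, :3]
        G = rotation_from_gyro(w_t, dt)                # rotation increment, eq. (7)
        g_local = R.T @ G_VEC                          # gravity in the IMU frame, eq. (8)
        delta_l = np.eye(4)                            # local pose change, eq. (10)
        delta_l[:3, :3], delta_l[:3, 3] = G, v * dt
        poses.append(poses[-1] @ delta_l)              # S(t+1) = S(t) Δl(t), eqs. (5)-(6)
        v = G.T @ (v + (a_t + g_local) * dt)           # gravity-free update, eq. (9)
    return np.array(poses)
```

A stationary sensor (measuring only −R(t)⊤g and no rotation) keeps its initial pose, which is a quick sanity check for the sign convention of the gravity removal.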

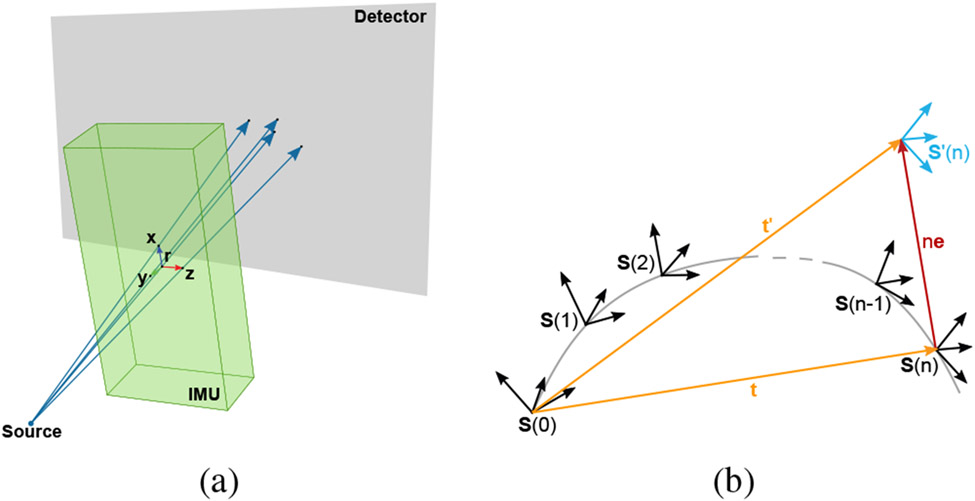

D. IMU pose and velocity initialization

In our previously published work, the initial pose and velocity of the IMU necessary for pose estimation in (5) and (9) were assumed to be known, which is not the case in a real setting [5]. Thies et al. [28] proposed to estimate the initial pose as an average sensor pose computed from the complete set of projection images. However, using the average position over a multi-second scan that includes subject motion leads to inaccurate motion compensation results. For this reason, we present an initial pose estimation based only on the first projection image. By additionally incorporating the second projection image, the initial velocity can be estimated.

1). Initial IMU pose:

The pose of the IMU S(t) at frame t contains the 3D position of the origin r(t) and the three perpendicular axes of measurement ux(t), uy(t) and uz(t) in the rotation matrix R(t). This coordinate system can be computed from any four non-coplanar points within the IMU if their geometrical relation to the sensor’s measurement coordinate system is known. For simplicity, in this simulation study we assume that these four points are the IMU’s origin r and the points x, y and z at the tips of the three axes’ unit vectors ux, uy, and uz. We also assume that the sensor has small, highly attenuating metal components at these four points making their projected 2D positions easy to track on an X-ray projection image. Since the C-arm system geometry is calibrated prior to performing CT acquisitions, the 3D position of each 2D detector pixel, and the 3D source position for each projection are also known. Then the searched points r(t), x(t), y(t), and z(t) are positioned on the straight line between the source and the respective projected point (Fig. 3a).

Fig. 3:

(a) Disproportionate visualization of the initialization concept. The green box represents the sensor with its coordinate system plotted inside. The X-rays (blue) pass through the metal components and hit the detector (gray). (b) Visualization of the velocity initialization approach. Computing the pose S′(t = n) with incorrect initial velocity v(t = 0) leads to a wrong translation t′ which is used for velocity initialization.

In the considered case, the four points need to fulfill the following properties:

The vectors ux(t), uy(t) and uz(t) spanned by r(t), x(t), y(t) and z(t) must have unit length.

The Euclidean distance between two of the points x(t), y(t) and z(t) must be √2.

The inner product of two of the vectors ux(t), uy(t) and uz(t) must be zero.

The right-handed cross product of two of the vectors ux(t), uy(t) and uz(t) must result in the third vector.

Solving the resulting non-linear system of equations defined by these constraints for the first projection at time point t = 0 yields the 3D positions of r(t = 0), x(t = 0), y(t = 0), and z(t = 0) and thereby the initial sensor pose S(t = 0).
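The non-linear system can be solved numerically, for example with Gauss-Newton iterations over the four unknown depths along the source-to-point rays. In this sketch, which is an illustration and not necessarily the solver used in the study, the √2 distance constraint is omitted because it follows from unit length and orthogonality, and handedness is checked after convergence. Because the sensor is tiny compared with the source distance, a mirrored (left-handed) configuration almost satisfies the same constraints, so the hypothetical `solve_initial_pose` needs an initial depth guess close to the solution.

```python
import numpy as np

def solve_initial_pose(source, rays, depth_guess, axis_len=1.0, iters=50):
    """Recover S(t = 0) from the rays through the projected points r, x, y, z.

    source: (3,) X-ray source position; rays: (4, 3) directions from the source
    towards the detector positions of r, x, y, z (in that order);
    depth_guess: (4,) initial distances along the rays.
    """
    s = np.asarray(source, float)
    d = np.asarray(rays, float)
    d = d / np.linalg.norm(d, axis=1, keepdims=True)
    lam = np.asarray(depth_guess, float).copy()
    for _ in range(iters):
        pts = s + lam[:, None] * d                 # candidate r, x, y, z
        u = pts[1:] - pts[0]                       # candidate axes ux, uy, uz
        res, jac = [], []
        for k in range(3):                         # unit length: |u_k|^2 = L^2
            row = np.zeros(4)
            row[k + 1], row[0] = 2 * u[k] @ d[k + 1], -2 * u[k] @ d[0]
            res.append(u[k] @ u[k] - axis_len**2)
            jac.append(row)
        for a, b in [(1, 2), (1, 3), (2, 3)]:      # orthogonality: u_a . u_b = 0
            ua, ub = u[a - 1], u[b - 1]
            row = np.zeros(4)
            row[a], row[b], row[0] = d[a] @ ub, d[b] @ ua, -d[0] @ (ua + ub)
            res.append(ua @ ub)
            jac.append(row)
        step = np.linalg.lstsq(np.array(jac), -np.array(res), rcond=None)[0]
        lam += step                                # Gauss-Newton update
    pts = s + lam[:, None] * d
    R = (pts[1:] - pts[0]).T / axis_len            # columns ux, uy, uz
    if np.linalg.det(R) < 0:                       # right-handedness constraint
        raise ValueError("left-handed solution; try another initial guess")
    S0 = np.eye(4)
    S0[:3, :3], S0[:3, 3] = R, pts[0]
    return S0
```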

2). Initial IMU velocity:

The initial IMU velocity v(t = 0) is needed to compute the velocity update in (9). In the following paragraphs, we describe a process to estimate the initial velocity, which is illustrated in Fig. 3b.

The IMU acquires data at a higher sampling rate than the C-arm acquires projection images (120 Hz and 31 Hz, respectively). If the two systems are synchronized, the correspondence between the sampling time points t of the IMU and the projection image acquisition points i is known. The first projection image corresponds to the first IMU sample at time point t = i = 0 and is used to estimate the initial pose S(t = 0). The second projection image at i = 1 corresponds to the IMU sampling point t = n with n > 1 and is used to estimate the pose S(t = n).
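With synchronized clocks, the IMU sample belonging to projection i can be found by rounding. A minimal sketch, assuming both acquisitions start at the same instant and nearest-sample matching is acceptable (the timing error is then at most half an IMU period, about 4 ms); the function name is hypothetical.

```python
F_IMU, F_CT = 120.0, 31.0   # IMU and C-arm sampling rates [Hz]

def imu_index_for_projection(i, f_imu=F_IMU, f_ct=F_CT):
    """IMU sample index closest in time to projection i."""
    return int(round(i * f_imu / f_ct))
```

For the second projection (i = 1) this gives n = 4, since 120/31 ≈ 3.87.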

Since each IMU pose can be computed from the previous one by applying the pose change between frames with (5) and (6), the IMU pose at frame t = n can also be expressed as

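$$\mathbf{S}(t=n) = \mathbf{S}(t=0)\,\boldsymbol{\Delta}_l(0)\,\boldsymbol{\Delta}_l(1)\cdots\boldsymbol{\Delta}_l(n-1) \tag{11}$$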

which can be rearranged to

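$$\mathbf{S}(t=0)^{-1}\,\mathbf{S}(t=n) = \boldsymbol{\Delta}_l(0)\,\boldsymbol{\Delta}_l(1)\cdots\boldsymbol{\Delta}_l(n-1) \tag{12}$$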

However, since v(t = 0) is not known, neither Δl(t = 0) nor any of the subsequent local change matrices are known. Therefore, instead of the actual v(t = 0), we use the zero-vector as initial velocity, which introduces an error vector e:

$$\mathbf{v}'(t=0) = \mathbf{v}(t=0) + \mathbf{e} = \mathbf{0} \tag{13}$$

This error is propagated and accumulated in the frame-by-frame velocity computation in (9), and for t ≥ 1 the resulting error-prone velocity is

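$$\mathbf{v}'(t) = \mathbf{v}(t) + \left(\mathbf{G}(0)\,\mathbf{G}(1)\cdots\mathbf{G}(t-1)\right)^\top\mathbf{e} \tag{14}$$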

These error-prone velocities v′(t) lead to incorrect pose change matrices and thereby to an incorrect computation of S′(t = n):

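$$\boldsymbol{\Delta}_l'(t) = \begin{pmatrix} \mathbf{G}(t) & \mathbf{v}'(t)\,\Delta t \\ \mathbf{0}^\top & 1 \end{pmatrix} \tag{15}$$

$$\mathbf{S}'(t=n) = \mathbf{S}(t=0)\,\boldsymbol{\Delta}_l'(0)\,\boldsymbol{\Delta}_l'(1)\cdots\boldsymbol{\Delta}_l'(n-1) \tag{16}$$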

Inserting (14) and expanding (16) shows that the incorrect initial velocity only has an effect on the translation of the resulting affine matrix:

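$$\mathbf{S}(t=0)^{-1}\,\mathbf{S}'(t=n) = \mathbf{S}(t=0)^{-1}\,\mathbf{S}(t=n) + \begin{pmatrix} \mathbf{0}_{3,3} & n\,\Delta t\,\mathbf{e} \\ \mathbf{0}^\top & 0 \end{pmatrix} \tag{17}$$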

In this equation, $\mathbf{0}_{3,3}$ denotes a 3×3 matrix filled with zeros. If the translation of S(t = 0)⁻¹S′(t = n) is denoted as $\mathbf{t}'$ and the translation of S(t = 0)⁻¹S(t = n) is denoted as $\mathbf{t}$, this leads to:

$$\mathbf{t}' = \mathbf{t} + n\,\Delta t\,\mathbf{e} \tag{18}$$

The correct initial velocity v(t = 0) is computed as

$$\mathbf{v}(t=0) = -\mathbf{e} = \frac{\mathbf{t} - \mathbf{t}'}{n\,\Delta t} \tag{19}$$
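The velocity initialization can be checked numerically: integrate once with the true initial velocity to obtain S(t = n), then recover v(t = 0) from a second integration started at zero velocity. The sketch re-implements the update equations as a forward-Euler scheme with Rodrigues rotations (an assumption); names are hypothetical, and in the real setting S(t = 0) and S(t = n) would come from the first two projection images.

```python
import numpy as np

G_VEC = np.array([0.0, -9.80665, 0.0])   # global gravity vector g

def rodrigues(w, dt):
    """G(t): rotation by |w| * dt about the axis w / |w|."""
    theta = np.linalg.norm(w) * dt
    if theta < 1e-12:
        return np.eye(3)
    k = w / np.linalg.norm(w)
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1 - np.cos(theta)) * K @ K

def pose_after(acc, gyro, S0, v0, dt):
    """Integrate eqs. (7)-(10) over all given samples, starting at S0 with velocity v0."""
    S, v = np.array(S0, float), np.asarray(v0, float)
    for a_t, w_t in zip(acc, gyro):
        G = rodrigues(w_t, dt)
        g_local = S[:3, :3].T @ G_VEC                 # eq. (8)
        step = np.eye(4)
        step[:3, :3], step[:3, 3] = G, v * dt          # eq. (10)
        S = S @ step                                   # S(t+1) = S(t) Δl(t)
        v = G.T @ (v + (a_t + g_local) * dt)           # eq. (9)
    return S

def initial_velocity(S0, Sn, acc, gyro, dt):
    """Eq. (19): v(t=0) = (t - t') / (n dt) from the poses at projections 0 and 1."""
    n = len(acc)
    S_prime = pose_after(acc, gyro, S0, np.zeros(3), dt)    # zero initial velocity
    t_true = (np.linalg.inv(S0) @ Sn)[:3, 3]
    t_prime = (np.linalg.inv(S0) @ S_prime)[:3, 3]
    return (t_true - t_prime) / (n * dt)
```

The recovery is exact up to floating-point error for this integration scheme, because the velocity error enters the translation linearly, as stated in (17).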

E. Rigid projection matrix correction

Under the assumption that the legs move rigidly during the CT scan, it is sufficient to use the measurements of only one sensor placed e.g. on the shin for motion estimation. The pose change matrices estimated in (6) and (10) can then be directly applied for motion correction. Note that the angular velocity and velocity are resampled to the CT acquisition frequency before pose change computation using the synchronized correspondences between C-arm and IMU.
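The resampling step could look as follows. Linear interpolation with `np.interp` is an assumption, as the text does not specify the interpolation method; after resampling, the pose updates would use the projection interval Δt = 1/31 s instead of the IMU period. The function name and defaults are hypothetical.

```python
import numpy as np

def resample_to_projections(signal, f_imu=120.0, f_ct=31.0, n_proj=248):
    """Linearly interpolate an (N, 3) IMU-rate signal at the projection times."""
    signal = np.asarray(signal, float)
    t_imu = np.arange(len(signal)) / f_imu
    t_ct = np.arange(n_proj) / f_ct
    return np.column_stack([np.interp(t_ct, t_imu, signal[:, k])
                            for k in range(signal.shape[1])])
```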

An affine motion matrix M(i) containing the rotation and translation is computed for each projection i. The motion matrix for the first projection i = 0 is defined as the identity matrix M(i = 0) = I, i.e. the pose at the first projection is used as the reference pose. Each subsequent matrix is then obtained using the global pose change matrix computed from the sensor measurements:

$$\mathbf{M}(i) = \boldsymbol{\Delta}_g(i-1)\,\mathbf{M}(i-1), \quad i \geq 1 \tag{20}$$

In order to correct for the motion during the CBCT scan, we then modify the projection matrices P(i) of the calibrated CT scan with the motion matrices M(i), resulting in the motion corrected projection matrices P′(i):

$$\mathbf{P}'(i) = \mathbf{P}(i)\,\mathbf{M}(i) \tag{21}$$

The corrected projection matrices are then used for the volume reconstruction as described in Section III-A.
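The rigid correction reduces to a few matrix products. This sketch assumes the IMU poses S(i) have already been computed at the projection times; it builds M(i) recursively with Δg(i − 1) = S(i)S(i − 1)⁻¹, the global pose change between consecutive projections, and multiplies each 3×4 projection matrix from the right. Function names are hypothetical.

```python
import numpy as np

def motion_matrices(S):
    """M(0) = I and M(i) = Δg(i-1) M(i-1) with Δg(i-1) = S(i) S(i-1)^-1."""
    M = [np.eye(4)]
    for i in range(1, len(S)):
        delta_g = S[i] @ np.linalg.inv(S[i - 1])    # global pose change
        M.append(delta_g @ M[-1])
    return M

def corrected_projection_matrices(P, M):
    """P'(i) = P(i) M(i) for 3x4 projection matrices P(i)."""
    return [P_i @ M_i for P_i, M_i in zip(P, M)]
```

The recursion collapses to M(i) = S(i)S(0)⁻¹: a point that moves rigidly with the sensor is mapped from its reference position at i = 0 to its position at projection i, so P′(i) projects the reference-pose volume onto where it actually appeared.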

F. Non-rigid motion correction

Contrary to the assumption in Section II-E, the leg motion during the scan is non-rigid since the subjects are not able to hold exactly the same squatting angle for the duration of the scan. As a consequence, the motion cannot be described entirely by a rigid transformation. To address this issue, we propose a non-rigid motion correction using both IMUs placed on the model. Using the formulas presented in Section II-C, we can compute the poses tS(t) and fS(t) of the IMUs on tibia and femur, respectively. Since the placement of the IMUs on the segments relative to the joints is known, the IMU poses can be used to describe the positions of ankle, knee and hip joint, a(t), k(t) and h(t), at each time point t.

These estimated joint positions are used to non-rigidly correct for motion during the scans. We propose two approaches that make use of Moving Least Squares (MLS) deformations in order to correct for motion [29], [30]. The first approach applies a 2D deformation to each projection image, and the second approach performs a 3D dynamic reconstruction where the deformation is integrated into the volume reconstruction.

1). Moving least squares deformation:

The idea of MLS deformation is that the deformation of a scene is defined by a set of m control points. The original positions of the control points are denoted as pj, and their deformed positions as qj, with j = 1, …, m. For each pixel or voxel ν in the image or volume, the goal is to find its position in the deformed image or volume depending on these control points. For this purpose, we seek the affine transformation f(ν) that minimizes the weighted squared distance between the transformed control points f(pj) and their known deformed positions qj:

$$\min_{f}\ \sum_{j=1}^{m}\omega_j\,\left\|f(\mathbf{p}_j) - \mathbf{q}_j\right\|^2 \tag{22}$$

This optimization is performed for each pixel individually, since the weights ωj depend on the distance of the pixel ν to the control points pj:

$$\omega_j = \frac{1}{\left\|\mathbf{p}_j - \boldsymbol{\nu}\right\|^{2}} \tag{23}$$

The weighted centroids p∗ and q∗ and the shifted control points p̂j and q̂j are used in order to find the optimal solution of (22) in both the 2D and the 3D case:

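$$\mathbf{p}_* = \frac{\sum_{j}\omega_j\,\mathbf{p}_j}{\sum_{j}\omega_j}, \qquad \mathbf{q}_* = \frac{\sum_{j}\omega_j\,\mathbf{q}_j}{\sum_{j}\omega_j} \tag{24}$$

$$\hat{\mathbf{p}}_j = \mathbf{p}_j - \mathbf{p}_*, \qquad \hat{\mathbf{q}}_j = \mathbf{q}_j - \mathbf{q}_* \tag{25}$$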

According to Schäfer et al. [29], in the 2D image deformation case, the transformation minimizing (22) is described by:

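$$f(\boldsymbol{\nu}) = \mathbf{A}\left(\boldsymbol{\nu} - \mathbf{p}_*\right) + \mathbf{q}_* \tag{26}$$

where

$$\mathbf{A} = \left(\sum_{j}\omega_j\,\hat{\mathbf{q}}_j\,\hat{\mathbf{p}}_j^\top\right)\left(\sum_{j}\omega_j\,\hat{\mathbf{p}}_j\,\hat{\mathbf{p}}_j^\top\right)^{-1} \tag{27}$$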
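A direct NumPy transcription of the 2D affine case for a single point follows. It assumes the squared-distance weights of (23), returns qj directly when ν coincides with a control point (where the weight is undefined), and uses the hypothetical name `mls_affine_2d`; a full image warp would evaluate it for every pixel.

```python
import numpy as np

def mls_affine_2d(v, p, q, eps=1e-12):
    """Affine MLS of eqs. (22)-(27) for one 2D point v; p, q are (m, 2) control points."""
    v, p, q = (np.asarray(x, float) for x in (v, p, q))
    d2 = np.sum((p - v) ** 2, axis=1)
    if np.any(d2 < eps):                       # weight undefined on a control point
        return q[np.argmin(d2)].copy()
    w = 1.0 / d2                                           # eq. (23)
    p_star, q_star = w @ p / w.sum(), w @ q / w.sum()     # eq. (24)
    p_hat, q_hat = p - p_star, q - q_star                 # eq. (25)
    A = (q_hat.T * w) @ p_hat @ np.linalg.inv((p_hat.T * w) @ p_hat)   # eq. (27)
    return A @ (v - p_star) + q_star                      # eq. (26)
```

With exactly three non-collinear control points, as in Section II-F.2, the weighted fit in (22) has zero residual, so the result is the unique affine map through the three point pairs and does not depend on the weights; the weights only take effect when more control points are used.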

Finding the transformation that minimizes (22) in the 3D case requires the computation of a singular value decomposition, as explained by Zhu et al. [30]:

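$$\sum_{j}\omega_j\,\hat{\mathbf{p}}_j\,\hat{\mathbf{q}}_j^\top = \mathbf{U}\,\mathbf{D}\,\mathbf{V}^\top \tag{28}$$

where D is the diagonal matrix of singular values. The optimal rigid transformation is then described by:

$$f(\boldsymbol{\nu}) = \mathbf{V}\,\mathbf{U}^\top\left(\boldsymbol{\nu} - \mathbf{p}_*\right) + \mathbf{q}_* \tag{29}$$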
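The 3D rigid case for a single point can be sketched in the same way. One addition that is not part of the equations: a determinant check that flips the last singular direction if V U⊤ would be a reflection. With only three control points the weighted covariance matrix has rank at most two, so the sign of the third singular vector pair is otherwise arbitrary. The name `mls_rigid_3d` and the squared-distance weights are assumptions.

```python
import numpy as np

def mls_rigid_3d(v, p, q, eps=1e-12):
    """Rigid MLS of eqs. (22)-(25), (28)-(29) for one 3D point v; p, q are (m, 3)."""
    v, p, q = (np.asarray(x, float) for x in (v, p, q))
    d2 = np.sum((p - v) ** 2, axis=1)
    if np.any(d2 < eps):                       # weight undefined on a control point
        return q[np.argmin(d2)].copy()
    w = 1.0 / d2                                           # eq. (23)
    p_star, q_star = w @ p / w.sum(), w @ q / w.sum()     # eq. (24)
    p_hat, q_hat = p - p_star, q - q_star                 # eq. (25)
    U, _, Vt = np.linalg.svd((p_hat.T * w) @ q_hat)       # eq. (28): U D V^T
    V = Vt.T
    # Added safeguard (not in eq. (29)): force a proper rotation, det(R) = +1.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(V @ U.T))])
    R = V @ D @ U.T                                        # equals V U^T when det = +1
    return R @ (v - p_star) + q_star
```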

2). 2D projection deformation:

In our first proposed non-rigid approach, we deform the content of the 2D projection images in order to correct for motion. The initial pose of the subject is used as the reference pose, so the first projection image i = 0 is left unaltered. Each following projection image acquired at time point i is transformed by MLS deformation, using the estimated hip, knee and ankle joint positions h(i), k(i) and a(i) as control points as described in the following paragraphs.

To obtain the 2D points needed for a 2D projection image deformation, the 3D positions h(i), k(i) and a(i) are forward projected onto the detector using the system geometry. However, since the detector is too small to cover the whole leg of a subject, the projected positions of the hip and ankle would be outside of the detector area. For this reason, 3D points situated closer to the knee on the straight line between hip and knee, and on the straight line between ankle and knee are computed with α = 0.8:

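$$\mathbf{h}'(i) = \alpha\,\mathbf{k}(i) + (1-\alpha)\,\mathbf{h}(i) \tag{30}$$

$$\mathbf{a}'(i) = \alpha\,\mathbf{k}(i) + (1-\alpha)\,\mathbf{a}(i) \tag{31}$$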

Then, for each projection i, the initial 3D reference positions a′(i = 0), k(i = 0) and h′(i = 0) are forward projected onto the detector resulting in the 2D control points pj(i) with j = 1, 2, 3. The 3D positions h′(i), k(i) and a′(i) at time of projection acquisition i are forward projected to obtain qj(i) with j = 1, 2, 3. Each projection image is then deformed by computing the transformation f(ν) according to (26) for each image pixel using these control points. Finally, the motion corrected 3D volume is reconstructed from the resulting deformed projection images as described in Section III-A.
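The construction of the 2D control points for one projection can be sketched as below. The point ordering (ankle, knee, hip), the 3×4 projection matrix convention, and all names are assumptions for illustration; the resulting (3, 2) arrays would serve as pj(i) and qj(i) for the 2D MLS warp.

```python
import numpy as np

ALPHA = 0.8   # weight of the knee in eqs. (30)-(31)

def project(P, X):
    """Project (N, 3) points with a 3x4 matrix P to (N, 2) detector coordinates."""
    xh = np.hstack([X, np.ones((len(X), 1))]) @ P.T
    return xh[:, :2] / xh[:, 2:3]

def control_points_2d(P_i, joints_ref, joints_i, alpha=ALPHA):
    """Return p_j(i) (reference pose) and q_j(i) (pose at projection i), each (3, 2).

    joints_ref / joints_i: tuples (h, k, a) of 3D hip, knee and ankle positions.
    """
    def to_2d(h, k, a):
        h_p = alpha * k + (1 - alpha) * h      # eq. (30): point close to the knee
        a_p = alpha * k + (1 - alpha) * a      # eq. (31)
        return project(P_i, np.vstack([a_p, k, h_p]))
    return to_2d(*joints_ref), to_2d(*joints_i)
```

With α = 0.8, the shifted points lie at 20% of the thigh and shank lengths from the knee, which keeps them inside the detector area.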

3). 3D dynamic reconstruction:

The second proposed non-rigid approach applies 3D deformations during volume reconstruction. In the typical back-projection process of CT reconstruction, the 3D position of each voxel of the output volume is forward projected onto the detector for each projection image i, and the value at the projected position is added to the 3D voxel value. For the proposed 3D dynamic reconstruction, this process is altered: before forward projecting the 3D voxel position onto the detector for readout, it is transformed using 3D MLS deformation. However, the readout value is added at the original voxel position.

For MLS deformation during reconstruction, the estimated positions of hip, knee and ankle joint h(i), k(i) and a(i) are used. The reference pose is again the first pose at i = 0 and the 3D positions of hip, knee and ankle h(i = 0), k(i = 0) and a(i = 0) are used as control points pj with j = 1, 2, 3. The 3D positions h(i), k(i) and a(i) are used as qj(i) with j = 1, 2, 3. Note that the 3D positions pj are the same for each projection, contrary to the 2D approach where they depend on forward projection using the system geometry. Using these control points, during reconstruction the transformation f(ν) is computed for each voxel of the output volume and each projection according to (29) and applied for deformation resulting in a motion-compensated output volume.
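The modified back-projection step for a single projection might look like this toy voxel-driven sketch. It is deliberately simplified (nearest-neighbour detector readout, no distance weighting of the filtered back-projection, and a Python loop over voxels), so it only illustrates where the deformation enters. `deform` would be the 3D MLS map built from the control points of projection i; all names are hypothetical.

```python
import numpy as np

def backproject_deformed(volume, voxel_xyz, image, P_i, deform):
    """Accumulate one filtered projection, reading it out at deformed voxel positions.

    volume: (N,) running sum, voxel_xyz: (N, 3) voxel centres in the reference pose,
    image: (rows, cols) projection i, P_i: 3x4 projection matrix,
    deform: callable mapping a reference-pose point to its position at projection i.
    """
    moved = np.array([deform(x) for x in voxel_xyz])          # f(nu) for every voxel
    xh = np.hstack([moved, np.ones((len(moved), 1))]) @ P_i.T
    u = np.rint(xh[:, 0] / xh[:, 2]).astype(int)              # nearest detector column
    v = np.rint(xh[:, 1] / xh[:, 2]).astype(int)              # nearest detector row
    inside = (u >= 0) & (u < image.shape[1]) & (v >= 0) & (v < image.shape[0])
    volume[inside] += image[v[inside], u[inside]]             # added at the ORIGINAL voxel
    return volume
```

Summing this over all projections, each with its own control points qj(i), yields the motion-compensated volume in the reference pose.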

III. Evaluation and Results

A. IMU-based motion compensation

All volumes are reconstructed by GPU-accelerated filtered back-projection in the software framework CONRAD [31]. The filtered back-projection pipeline includes cosine weighting, Parker weighting, truncation correction and Shepp-Logan ramp filtering. The reconstructed volumes have a size of 512³ voxels with an isotropic spacing of 0.5 mm. In the case of rigid motion compensation, the motion compensated projection matrices P′ are used for reconstruction. In the case of 2D non-rigid motion compensation, the deformed projection images are reconstructed using the original projection matrices. In the case of 3D non-rigid motion compensation, the original projection matrices and projection images are used, but the back-projection process is adapted as described in Section II-F.3.

For comparison, an uncorrected motion-corrupted volume is reconstructed. Furthermore, a motion-free reference is obtained by simulating a CT scan in which the initial pose of the model is kept constant throughout the scan. The IMU-based motion compensation approaches are compared with a marker-based gold standard approach [14]. For this purpose, small, highly attenuating metal markers placed on the knee joint are added to the projections and tracked for motion compensation as proposed by Choi et al. [14]. All volumes are normalized to the range [0, 1] and registered to the motion-free reference reconstruction.

The image quality is compared against the motion-free reference by the structural similarity index measure (SSIM) and the root mean squared error (RMSE). The SSIM index ranges from 0 (no similarity) to 1 (identical images) and considers differences in luminance, contrast and structure [32]. The metrics are computed on the whole reconstructed leg and on the lower leg and upper leg separately.
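For reference, the two metrics can be computed as follows. `global_ssim` evaluates the SSIM formula of [32] over the whole volume as a single window, which is a simplification; windowed implementations such as `skimage.metrics.structural_similarity` are closer to common practice. How the percentage improvements reported below were derived is not stated, so `relative_improvement` (change relative to the uncorrected value) is an assumption.

```python
import numpy as np

def rmse(x, ref):
    """Root mean squared error between a volume and the motion-free reference."""
    return float(np.sqrt(np.mean((np.asarray(x, float) - ref) ** 2)))

def global_ssim(x, ref, data_range=1.0):
    """SSIM formula evaluated once over the whole volume (no sliding window)."""
    x, ref = np.asarray(x, float), np.asarray(ref, float)
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), ref.mean()
    cov = np.mean((x - mx) * (ref - my))
    return float((2 * mx * my + c1) * (2 * cov + c2)
                 / ((mx**2 + my**2 + c1) * (x.var() + ref.var() + c2)))

def relative_improvement(corrected, uncorrected, higher_is_better):
    """Change of a metric relative to the uncorrected reconstruction."""
    gain = corrected - uncorrected if higher_is_better else uncorrected - corrected
    return gain / uncorrected
```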

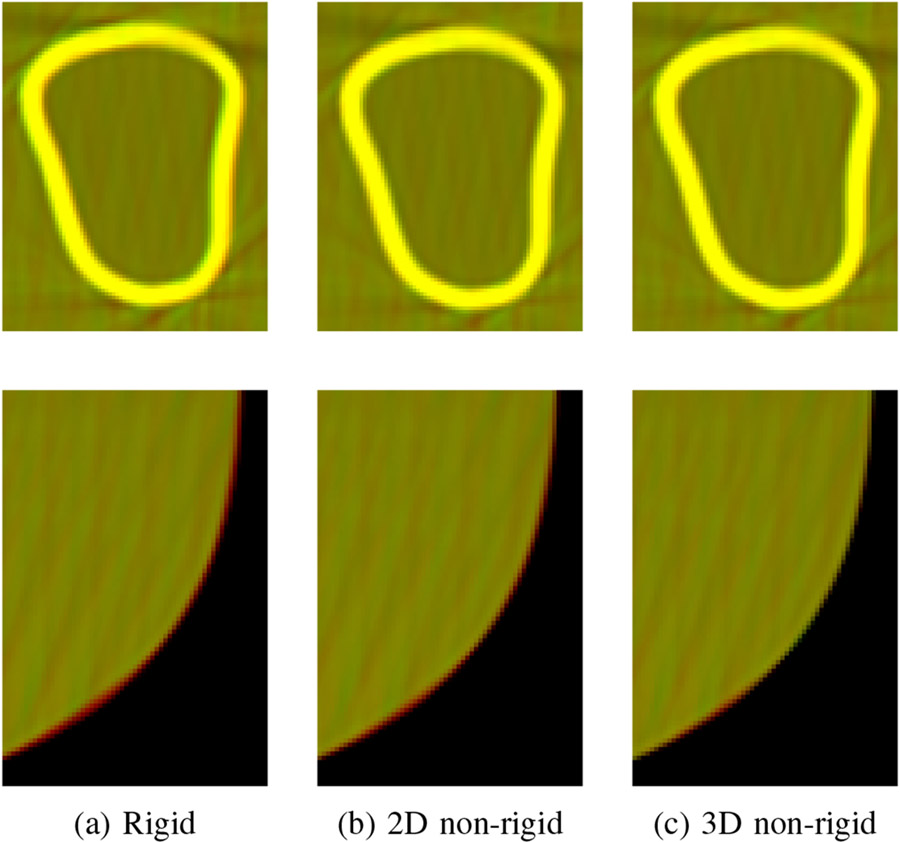

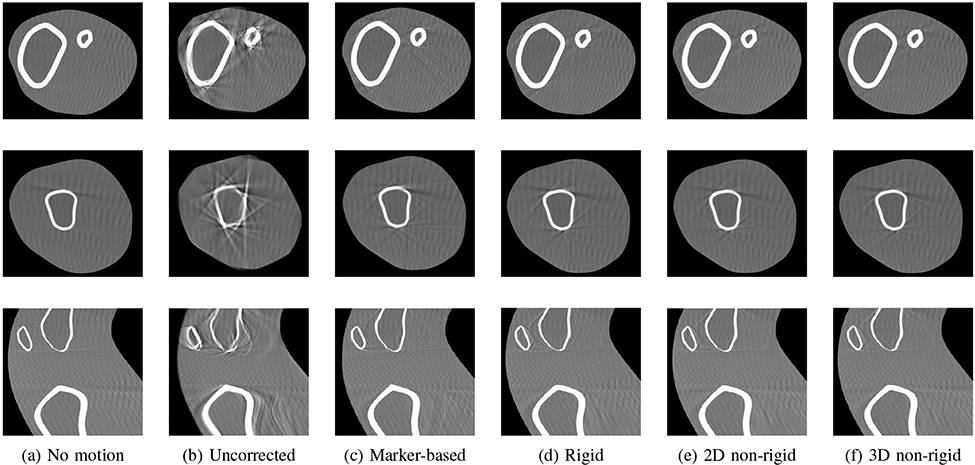

The proposed initialization method yields the correct initial pose and velocity for all scans, and all further computations are based on these estimates. Figure 4 shows axial slices through the tibia and the femur, and a sagittal slice of one example reconstruction. All proposed methods compensate for motion as well as the marker-based reference approach, or even slightly better. Differences between the methods can only be seen in a detailed overlay of the motion-compensated reconstruction with the motion-free reconstruction. In Fig. 5, details of the axial slice through the thigh are depicted at the femoral bone and at the skin-air border. The motion-free reconstruction is shown in red, and the motion-compensated reconstructions of the rigid, non-rigid 2D and non-rigid 3D IMU methods are shown in green in the three columns. All pixels that occur in both overlaid images are depicted in yellow. The rigid correction method fails to estimate the exact thigh motion, which leads to an observable shift in the form of a red or green halo at the bone interface and at the skin border. This halo is reduced for the non-rigid 2D correction and almost imperceptible for the non-rigid 3D correction.

Fig. 4:

Exemplary slices of a reconstructed volume. Rows: axial slice through shin, axial slice through thigh, sagittal slice. (a) Scan without motion, (b) uncorrected case, (c) marker-based reference method, (d) rigid IMU method, (e) non-rigid IMU 2D projection deformation, (f) non-rigid IMU 3D dynamic reconstruction. All proposed methods reduce motion artifacts to a similar extent as the marker-based method.

Fig. 5:

Details of an axial slice through the thigh. Rows: femoral bone, skin border. Overlay of motion-free reference (red) and the result of the method (a) rigid IMU, (b) non-rigid 2D IMU, (c) non-rigid 3D IMU (green), overlapping pixels shown in yellow.
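The red/green overlay in Fig. 5 can be reproduced in spirit with a few lines of NumPy. This sketch assumes both slices are already scaled to [0, 1] and simply places the reference in the red channel and the corrected slice in the green channel, so that structures present in both mix to yellow; the exact rendering used for the figure is not described in the text, and `red_green_overlay` is a hypothetical name.

```python
import numpy as np


def red_green_overlay(reference, corrected):
    """RGB overlay: reference slice in red, corrected slice in green, blue unused."""
    rgb = np.zeros(reference.shape + (3,))
    rgb[..., 0] = np.clip(reference, 0.0, 1.0)  # red: motion-free reference
    rgb[..., 1] = np.clip(corrected, 0.0, 1.0)  # green: motion-compensated result
    return rgb
```

A residual shift between the two slices shows up as a red fringe on one side of an edge and a green fringe on the other, which is the halo described for the rigid correction.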

This visual impression is confirmed by the SSIM and RMSE values in Table I. All proposed methods achieve SSIM and RMSE values that are similar to or better than those of the marker-based reference method. Compared with the uncorrected case, this corresponds to an improvement of 24-35% in the SSIM and 78-85% in the RMSE values, respectively. Higher SSIM scores and lower RMSE values are achieved for the 30-degree squat scans than for the 60-degree squat scans. Among the three proposed IMU methods, the non-rigid 3D approach shows a slight advantage over the other two.
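The relative improvements can be checked against Table I with a short calculation. The sketch below uses the whole-leg values of the "All scans" block only (an illustrative choice; the reported 24-35% and 78-85% ranges span all regions and squat angles), with the SSIM gain computed as a relative increase and the RMSE improvement as a relative reduction.

```python
def ssim_gain(uncorrected, corrected):
    """Relative SSIM increase with respect to the uncorrected volume."""
    return (corrected - uncorrected) / uncorrected


def rmse_reduction(uncorrected, corrected):
    """Relative RMSE decrease with respect to the uncorrected volume."""
    return (uncorrected - corrected) / uncorrected


# Whole-leg values from the "All scans" block of Table I.
UNCORRECTED = {"ssim": 0.774, "rmse": 0.081}
CORRECTED = {
    "Marker-based": {"ssim": 0.981, "rmse": 0.024},
    "Rigid IMU": {"ssim": 0.991, "rmse": 0.017},
    "Non-rigid IMU 2D": {"ssim": 0.990, "rmse": 0.017},
    "Non-rigid IMU 3D": {"ssim": 0.993, "rmse": 0.015},
}

for method, m in CORRECTED.items():
    g = ssim_gain(UNCORRECTED["ssim"], m["ssim"])
    r = rmse_reduction(UNCORRECTED["rmse"], m["rmse"])
    print(f"{method:17s} SSIM +{100 * g:.1f}%  RMSE -{100 * r:.1f}%")
```

For the three IMU methods this gives SSIM gains of about 28% and RMSE reductions of about 79-81%, inside the stated ranges, while the marker-based method reaches about 27% and 70%.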

TABLE I:

Average SSIM and RMSE values (with standard deviation) of the motion-compensated reconstructions compared with the motion-free reconstruction, where all volumes were scaled to the range [0, 1]. The 30-degree and 60-degree squats are evaluated separately in the top two blocks, while the last block shows the average results over all scans. All measures were computed on the whole reconstructed part of the leg and on the shank and thigh separately.

| Method | SSIM, whole leg | SSIM, shank | SSIM, thigh | RMSE, whole leg | RMSE, shank | RMSE, thigh |
|---|---|---|---|---|---|---|
| **30-degree squat** | | | | | | |
| Uncorrected | 0.777 ± 0.089 | 0.799 ± 0.093 | 0.742 ± 0.084 | 0.084 ± 0.029 | 0.081 ± 0.030 | 0.088 ± 0.029 |
| Marker-based | 0.986 ± 0.002 | 0.986 ± 0.002 | 0.984 ± 0.003 | 0.021 ± 0.006 | 0.021 ± 0.006 | 0.022 ± 0.005 |
| Rigid IMU | 0.993 ± 0.003 | 0.993 ± 0.004 | 0.992 ± 0.004 | 0.015 ± 0.005 | 0.015 ± 0.005 | 0.015 ± 0.005 |
| Non-rigid IMU 2D | 0.991 ± 0.003 | 0.991 ± 0.003 | 0.992 ± 0.002 | 0.017 ± 0.005 | 0.018 ± 0.005 | 0.016 ± 0.005 |
| Non-rigid IMU 3D | 0.994 ± 0.002 | 0.994 ± 0.003 | 0.994 ± 0.002 | 0.014 ± 0.004 | 0.014 ± 0.004 | 0.013 ± 0.004 |
| **60-degree squat** | | | | | | |
| Uncorrected | 0.777 ± 0.111 | 0.790 ± 0.090 | 0.736 ± 0.118 | 0.079 ± 0.022 | 0.076 ± 0.020 | 0.084 ± 0.025 |
| Marker-based | 0.982 ± 0.009 | 0.979 ± 0.012 | 0.974 ± 0.013 | 0.026 ± 0.009 | 0.025 ± 0.009 | 0.027 ± 0.009 |
| Rigid IMU | 0.991 ± 0.006 | 0.990 ± 0.004 | 0.987 ± 0.006 | 0.018 ± 0.006 | 0.018 ± 0.007 | 0.019 ± 0.006 |
| Non-rigid IMU 2D | 0.989 ± 0.005 | 0.989 ± 0.005 | 0.989 ± 0.005 | 0.018 ± 0.006 | 0.018 ± 0.006 | 0.018 ± 0.007 |
| Non-rigid IMU 3D | 0.994 ± 0.004 | 0.991 ± 0.004 | 0.991 ± 0.004 | 0.016 ± 0.006 | 0.017 ± 0.006 | 0.016 ± 0.006 |
| **All scans** | | | | | | |
| Uncorrected | 0.774 ± 0.092 | 0.794 ± 0.090 | 0.739 ± 0.098 | 0.081 ± 0.025 | 0.078 ± 0.025 | 0.086 ± 0.026 |
| Marker-based | 0.981 ± 0.009 | 0.982 ± 0.009 | 0.979 ± 0.011 | 0.024 ± 0.007 | 0.023 ± 0.007 | 0.024 ± 0.008 |
| Rigid IMU | 0.991 ± 0.004 | 0.991 ± 0.004 | 0.990 ± 0.006 | 0.017 ± 0.006 | 0.016 ± 0.006 | 0.017 ± 0.006 |
| Non-rigid IMU 2D | 0.990 ± 0.004 | 0.990 ± 0.004 | 0.990 ± 0.004 | 0.017 ± 0.006 | 0.018 ± 0.006 | 0.017 ± 0.006 |
| Non-rigid IMU 3D | 0.993 ± 0.004 | 0.992 ± 0.004 | 0.993 ± 0.004 | 0.015 ± 0.005 | 0.015 ± 0.005 | 0.014 ± 0.005 |

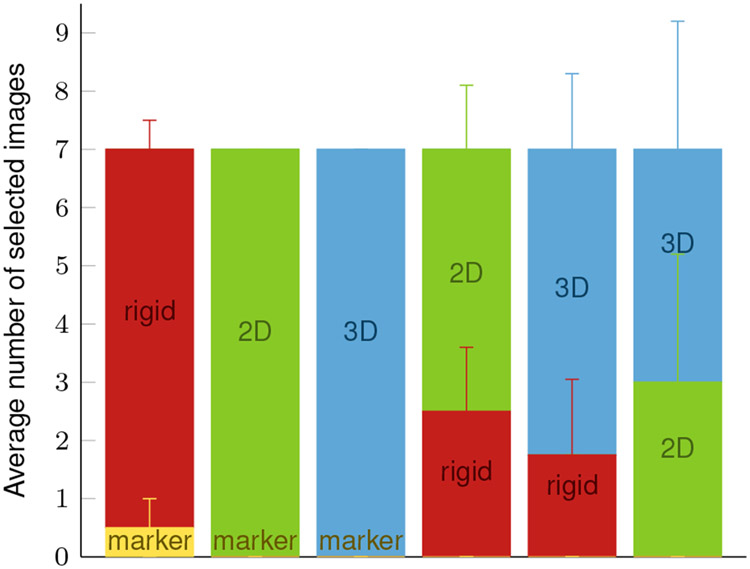

To evaluate whether the proposed motion correction approaches lead to a perceivably better image quality than the marker-based method, a user study is conducted. Four medical imaging experts with several years of experience in CT image processing are asked to evaluate the image quality of the motion-corrected images. Three of them have previously worked intensively on improving the reconstruction quality of CT images of the knee joint. For rating the image quality, two slice images in the sagittal or axial plane from volumes corrected with different motion compensation methods are displayed side by side. The experts then decide which image is of better quality. The compared correction methods are marker-based, rigid IMU, 2D non-rigid IMU, and 3D non-rigid IMU. Each expert is shown seven image pairs for each comparison of two methods; with four methods, i.e., six method pairs, this results in a total of 42 comparisons per rater, presented in random order. The raters are not informed which motion compensation methods have been applied to obtain the two compared images. If raters have difficulty making a decision, they can request an overlay with the motion-free slice image (similar to the overlays displayed in Fig. 5) to highlight differences in more detail.
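The trial count follows from pairing every method with every other one. The sketch below builds such a randomized trial list; the left/right randomization and the names `build_trials`, `METHODS` and `PAIRS_PER_COMPARISON` are assumptions for illustration, since the text only states that the order is random and the methods are hidden from the raters.

```python
import itertools
import random

METHODS = ["marker-based", "rigid IMU", "2D non-rigid IMU", "3D non-rigid IMU"]
PAIRS_PER_COMPARISON = 7


def build_trials(seed=None):
    """Shuffled list of (left_method, right_method, image_pair_index) trials."""
    rng = random.Random(seed)
    trials = []
    for a, b in itertools.combinations(METHODS, 2):  # 4 choose 2 = 6 method pairs
        for k in range(PAIRS_PER_COMPARISON):
            # Randomize which side each method appears on (hypothetical design choice).
            left, right = (a, b) if rng.random() < 0.5 else (b, a)
            trials.append((left, right, k))
    rng.shuffle(trials)  # present all 6 * 7 = 42 trials in random order
    return trials
```

Seeding the generator per rater would make each rater's sequence reproducible while still differing between raters.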

The results of the user study are shown in Fig. 6. The experts prefer the results of all IMU-based methods over the marker-based corrected images. The marker-based image is chosen over the rigid IMU correction only twice, whereas the 2D and 3D non-rigid approaches are always preferred over the marker-based approach. In direct comparison with the rigid IMU-based approach, both the 2D and the 3D non-rigid IMU-based approaches are chosen about twice as often. There is no clear preference in the direct comparison between the 2D and 3D non-rigid approaches.

Fig. 6:

Results of the user study averaged over all four raters with standard deviation. The six bar plots refer to the comparison between two motion compensation methods each (yellow: marker-based, red: rigid IMU-based, green: 2D non-rigid IMU-based, blue: 3D non-rigid IMU-based). Each rater was shown seven image pairs per method comparison. The bar height shows how many times the raters on average chose a method over the other.

Only one rater requested the overlay when deciding between the marker-based and an IMU-based method, and did so for only two comparisons. In contrast, for almost 80% of the comparisons between two of the three IMU-based methods, the raters required the overlay with the motion-free image.

B. Noise analysis

The influence of noise on the motion correction is evaluated in two ways:

First, the estimated motion is compared by decomposing the motion matrices obtained from the noise-free signal and from the signals at the different noise levels into translations along and rotations about the three axes. Each noisy result is then compared with the noise-free estimate: we compute the RMSE between the noise-free and the noisy translations and rotations for each axis and then average over the three axes. This analysis is performed on only one scan of one subject, but the results are averaged over five independent repetitions of adding random white noise and computing the motion matrices and RMSE.
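The decomposition and the axis-averaged RMSE can be sketched as follows. The text does not state which Euler convention is used, so this sketch assumes $R = R_z(\gamma)\,R_y(\beta)\,R_x(\alpha)$ and no gimbal lock ($|\beta| < 90°$); the function names are hypothetical. Motion matrices are given as an array of shape (number of projections, 4, 4).

```python
import numpy as np


def decompose(T):
    """Split (..., 4, 4) rigid motion matrices into translations and ZYX Euler angles [deg].

    Assumes R = Rz(gamma) @ Ry(beta) @ Rx(alpha) with |beta| < 90 deg.
    """
    T = np.asarray(T, dtype=float)
    R, t = T[..., :3, :3], T[..., :3, 3]
    alpha = np.arctan2(R[..., 2, 1], R[..., 2, 2])              # about x
    beta = -np.arcsin(np.clip(R[..., 2, 0], -1.0, 1.0))        # about y
    gamma = np.arctan2(R[..., 1, 0], R[..., 0, 0])              # about z
    return t, np.degrees(np.stack([alpha, beta, gamma], axis=-1))


def axis_averaged_rmse(ref, est):
    """RMSE over projections for each axis (last dimension), averaged over the 3 axes."""
    return float(np.mean(np.sqrt(np.mean((ref - est) ** 2, axis=0))))
```

Applied to the noise-free and the noisy motion sequences, `axis_averaged_rmse` on the translations gives a value in mm and on the angles a value in degrees, matching the two numbers reported per cell of Table II; averaging over five noise repetitions would wrap this in a loop.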

Secondly, volumes reconstructed from noisy signal motion estimates are analyzed. Based on the RMSE results from the first part of the analysis, certain noise levels are chosen for rigid motion compensated reconstruction. Rigid motion matrices are computed from the noisy signals as described in Sections II-C and II-E and used for volume reconstruction as described above. For image quality comparison, the SSIM and RMSE are again computed.

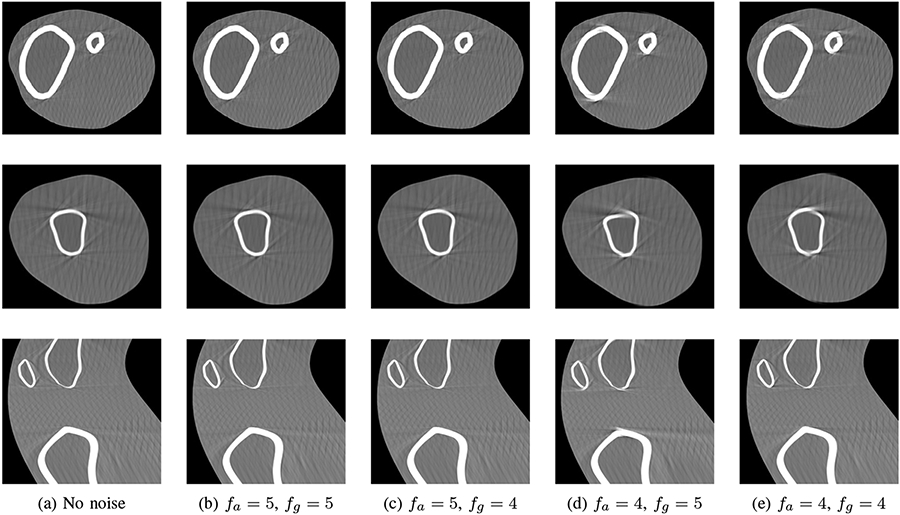

The analysis with decreasing noise levels shows that the RMS noise of currently available commercial consumer-grade IMUs would prevent successful IMU-based motion compensation (Table II, top left). While the resulting rotation estimate has an average RMSE of 1.45° relative to the noise-free estimate, the RMSE of the estimated translation is considerably larger (9461 mm). Deviations above 1 mm in translation and 1° in rotation are expected to decrease the reconstruction quality considerably. With noise on both acceleration and angular velocity, average RMSE values below these thresholds were only achieved when the RMS noise was decreased by a factor of $10^4$ or $10^5$. The motion matrices estimated at these noise levels are therefore used in the further analysis to perform motion-compensated reconstructions, which are shown in Fig. 7. While the image quality for $f_a = 5$ is similar to that of the motion-free case, streaking artifacts are visible for $f_a = 4$, regardless of whether $f_g = 4$ or $f_g = 5$. The quantitative analysis of the noisy results in Table III confirms this finding: compared with the noise-free estimation, the average SSIM decreases and the average RMSE increases only slightly for $f_a = 5$, but both deteriorate markedly for $f_a = 4$.
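The noise levels of the simulation can be sketched as zero-mean white noise whose RMS is the baseline value divided by $10^{f_a}$ or $10^{f_g}$. Several details here are assumptions: the noise is drawn from a Gaussian distribution (the text only says "random white noise"), the accelerometer baseline of 1.8 mg is read as milli-g and converted to m/s² with $g = 9.81$ m/s², and the names `ACC_RMS`, `GYR_RMS` and `add_scaled_noise` are hypothetical.

```python
import numpy as np

G = 9.81                 # m/s^2 per g (assumed conversion)
ACC_RMS = 1.8e-3 * G     # baseline accelerometer RMS noise: 1.8 mg, in m/s^2
GYR_RMS = 0.07           # baseline gyroscope RMS noise, in deg/s


def add_scaled_noise(acc, gyr, f_a, f_g, rng):
    """Add white Gaussian noise with RMS reduced by factors 10**-f_a and 10**-f_g."""
    acc_noisy = acc + rng.normal(0.0, ACC_RMS / 10.0 ** f_a, size=acc.shape)
    gyr_noisy = gyr + rng.normal(0.0, GYR_RMS / 10.0 ** f_g, size=gyr.shape)
    return acc_noisy, gyr_noisy
```

Looping over $f_a, f_g \in \{0, \dots, 5\}$ plus a noise-free setting, with five independent generators per setting, would produce the grid of Table II once the noisy signals are passed through the motion estimation.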

TABLE II:

Mean RMSE between the noise-free and noisy estimation of translation [mm] / rotation [°]. All values are averaged over five independent noise simulations of one scan. Rows and columns give the exponents $f_a$ and $f_g$ of the noise factors $10^{-f_a}$ and $10^{-f_g}$ for the accelerometer and gyroscope, respectively. Cells in which, with noise on both acceleration and angular velocity, the RMSE is below 1 for both translation and rotation are shown in bold.

| $f_a \backslash f_g$ | 0 | 1 | 2 | 3 | 4 | 5 | no noise |
|---|---|---|---|---|---|---|---|
| 0 | 9461 / 1.453 | 8866 / 0.146 | 6329 / 0.016 | 6762 / $10^{-3}$ | 8518 / $10^{-4}$ | 10389 / $2 \cdot 10^{-5}$ | 9390 / $10^{-5}$ |
| 1 | 1899 / 1.404 | 883 / 0.128 | 663 / 0.012 | 754 / $10^{-3}$ | 883 / $10^{-4}$ | 666 / $2 \cdot 10^{-5}$ | 940 / $10^{-5}$ |
| 2 | 1104 / 1.357 | 158 / 0.128 | 133 / 0.016 | 73 / $10^{-3}$ | 61 / $10^{-4}$ | 77 / $2 \cdot 10^{-5}$ | 81 / $10^{-5}$ |
| 3 | 1173 / 1.270 | 125 / 0.122 | 20 / 0.015 | 7 / $10^{-3}$ | 9 / $10^{-4}$ | 9 / $2 \cdot 10^{-5}$ | 9 / $10^{-5}$ |
| 4 | 1622 / 1.645 | 190 / 0.190 | 10 / 0.014 | 1 / $10^{-3}$ | **0.943 / $10^{-4}$** | **0.985 / $2 \cdot 10^{-5}$** | 0.672 / $10^{-5}$ |
| 5 | 108 / 1.193 | 146 / 0.178 | 10 / 0.013 | 1 / $10^{-3}$ | **0.167 / $10^{-4}$** | **0.078 / $2 \cdot 10^{-5}$** | 0.077 / $10^{-5}$ |
| no noise | 148 / 1.285 | 80 / 0.129 | 12 / 0.014 | 1 / $10^{-3}$ | 0.077 / $10^{-4}$ | 0.021 / $2 \cdot 10^{-5}$ | 0 / 0 |

Fig. 7:

Comparison of noise-free and noisy rigid IMU compensation. Rows: axial slice through shin, axial slice through thigh, sagittal slice. (a) Noise-free IMU signal; in (b)-(e), noise is added to the simulated acceleration and angular velocity. The RMS noise values of 1.8 mg (accelerometer) and 0.07 °/s (gyroscope) are divided by $10^{f_a}$ and $10^{f_g}$, respectively.

TABLE III:

Average SSIM and RMSE values (with standard deviation) of the rigid motion-compensated reconstructions from noisy signals compared with the motion-free reconstruction over all scans. The noise factor is $10^{-f_a}$ for the simulated accelerometer and $10^{-f_g}$ for the gyroscope. All measures were computed on the whole reconstructed part of the leg and on the shank and thigh separately.

| Noise level | SSIM, whole leg | SSIM, shank | SSIM, thigh | RMSE, whole leg | RMSE, shank | RMSE, thigh |
|---|---|---|---|---|---|---|
| No noise | 0.991 ± 0.004 | 0.991 ± 0.004 | 0.990 ± 0.006 | 0.017 ± 0.006 | 0.016 ± 0.006 | 0.017 ± 0.006 |
| $f_a = 5$, $f_g = 5$ | 0.989 ± 0.005 | 0.990 ± 0.005 | 0.987 ± 0.004 | 0.018 ± 0.006 | 0.018 ± 0.006 | 0.019 ± 0.006 |
| $f_a = 5$, $f_g = 4$ | 0.987 ± 0.004 | 0.988 ± 0.004 | 0.985 ± 0.004 | 0.020 ± 0.006 | 0.020 ± 0.006 | 0.021 ± 0.007 |
| $f_a = 4$, $f_g = 5$ | 0.926 ± 0.049 | 0.929 ± 0.046 | 0.919 ± 0.054 | 0.047 ± 0.022 | 0.046 ± 0.022 | 0.048 ± 0.023 |
| $f_a = 4$, $f_g = 4$ | 0.927 ± 0.043 | 0.929 ± 0.040 | 0.923 ± 0.052 | 0.048 ± 0.021 | 0.048 ± 0.021 | 0.047 ± 0.021 |

IV. Discussion

The presented initialization approach, based on the system geometry and the first two projection images, works well under the optimal conditions of a simulation. In a real setting, however, it is unlikely that the IMU will contain clearly distinguishable metal components marking the IMU coordinate system, and such components are unlikely to be resolved by current flat-panel detectors. Nevertheless, the presented approach can be applied with any four IMU points, provided that their relation to the origin and axes of the IMU coordinate system is known. The IMU should then be positioned such that the projections of these points are well distinguishable in the two projection images required for initialization.

The results of all proposed IMU-based motion compensation methods are qualitatively and quantitatively equivalent or superior to those of the gold-standard marker-based approach, which estimates a rigid motion. For the marker-based approach, multiple tiny markers have to be placed individually and attached directly to the skin in order to limit soft tissue artifacts. For effective marker tracking, it should also be ensured that the markers do not overlap in the projections. In addition, the metal produces artifacts in the knee region. An advantage of our proposed methods is that only one or two IMUs are needed on the leg. Here, the only requirement is that the components used for initialization are visible in the first projection images. Since the shank and thigh are modeled as rigid segments, the sensors can be placed sufficiently far away from the knee joint so as not to cause metal artifacts that could hinder subsequent image analyses.

All methods performed slightly better on the scans in which subjects were asked to hold a 30-degree squat than on those with a 60-degree squat. This is likely because holding the same pose is more challenging in a deeper squat, so that the motion in these scans has a larger range, which leads to increased error.

With the non-rigid 3D IMU approach, better results are achieved than with the rigid IMU approach, especially in the thigh region. Although the improvement is small, it may have a significant impact on further image analyses, as the expected cartilage change under load lies in the range of 0.3-0.5 mm [33]. Considering that the pixel spacing of the reconstructed volumes is 0.2 mm, this corresponds to a change of 1-3 pixels. Thus, it is of utmost importance to be as close as possible to the motion-free case, even if the improvement by the non-rigid approaches seems subtle.

The results of the user study confirm the superior quality of images resulting from the IMU-based methods compared with the marker-based correction. The subtle differences between the images corrected by the IMU-based approaches seem to be hard to recognize, as the raters very often needed the overlay to decide between the images. However, with this support, in the majority of cases the non-rigidly corrected images are rated to have higher quality compared with the rigid IMU-based correction. The study also confirms that both non-rigid approaches produce results of similar quality, as there is no clear trend of raters in favor of or against one of the methods.

The simple model of three moving joint positions and an affine deformation is considerably less complex than the XCAT spline deformation used during projection generation, which suggests that further improvements can be achieved by using a more realistic model.

The non-rigid 2D IMU approach provides small improvements in the visual results compared with the rigid approach (Fig. 5), but the quantitative evaluation shows similar SSIM and RMSE values. Although the non-rigid motion estimate might be more accurate, the image deformation introduces small errors at the same time, since X-rays measured at a deformed detector position would have passed through, and been attenuated by, different materials.

It is notable that the noise has a larger effect on the processing of the accelerometer signal compared with that of the gyroscope signal (Table II). On the one hand, the double integration performed on the acceleration leads to a quadratic error propagation. On the other hand, the noisy gyroscope signals used for gravity removal and velocity integration introduce additional errors that are accumulated during acceleration processing.
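The quadratic growth is easiest to see for a constant accelerometer bias $b$: integrating twice gives a position error of $b t^2 / 2$. (For zero-mean white noise, the standard deviation of the position error instead grows roughly with $t^{3/2}$ [26].) The sketch below integrates a constant bias with the trapezoidal rule, which reproduces $b t^2/2$ exactly in this case; `double_integrate` and the chosen bias, sampling interval and duration are hypothetical values for illustration only.

```python
import numpy as np


def double_integrate(acc, dt):
    """Integrate acceleration twice with the trapezoidal rule (zero initial velocity/position)."""
    vel = np.concatenate(([0.0], np.cumsum(0.5 * (acc[1:] + acc[:-1]) * dt)))
    pos = np.concatenate(([0.0], np.cumsum(0.5 * (vel[1:] + vel[:-1]) * dt)))
    return vel, pos


dt = 0.01                      # hypothetical 100 Hz sampling
bias = np.full(1001, 0.01)     # hypothetical constant bias of 0.01 m/s^2 over 10 s
vel, pos = double_integrate(bias, dt)
print(pos[500], pos[1000])     # position error after 5 s and after 10 s
```

Doubling the integration time from 5 s to 10 s quadruples the position error (0.125 m versus 0.5 m), even though the bias itself is tiny, which is why accelerometer errors dominate Table II.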

The noise level improvements required for a real application are in the range of $10^5$ for the accelerometer and $10^4$ for the gyroscope. Although recently developed accelerometers and gyroscopes achieve these low noise levels, they are designed to measure signals in the milli-g range and are far too delicate for the application at hand [34]-[36].

In addition to reducing the noise level of the applied IMUs, future work needs to consider alternative approaches to handling the erroneous data. Kalman filters and sensor fusion are well suited to reduce the large deviations that are currently obtained. In many motion analysis applications, these approaches have been applied successfully to improve motion tracking with noisy data [25], [37]. Further information about the sensor pose at certain points in time could, for example, be obtained in the same way as in the presented initialization approach. By tracking the projected positions of the IMU elements in a few selected projection images, an integration update could be performed to reduce errors. Moreover, including prior knowledge about the motion amplitudes could help to restrict the signal integration to reasonable values.
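To illustrate the idea of an integration update, the sketch below is a generic one-dimensional linear Kalman filter, not a method from this work: the measured acceleration drives the prediction step, and sparse position fixes (standing in for positions recovered from a few selected projection images) correct the state. The 1D simplification, the absence of a bias state, and the names `kf_track`, `acc_std` and `pos_std` are assumptions; a real system would track full 6-DoF poses and would likely add a bias state.

```python
import numpy as np


def kf_track(acc_meas, dt, pos_fix, acc_std, pos_std):
    """1D tracking: acceleration as control input, sparse position fixes (NaN if absent)."""
    F = np.array([[1.0, dt], [0.0, 1.0]])   # constant-velocity state transition
    B = np.array([0.5 * dt ** 2, dt])       # effect of acceleration on (position, velocity)
    Q = np.outer(B, B) * acc_std ** 2       # accelerometer noise mapped into the state
    H = np.array([[1.0, 0.0]])              # a fix observes position only
    R = np.array([[pos_std ** 2]])
    x = np.zeros(2)                         # state: [position, velocity]
    P = np.eye(2) * 1e-6
    est = np.empty(len(acc_meas))
    for k, a in enumerate(acc_meas):
        # Predict: dead-reckoning step with the measured acceleration.
        x = F @ x + B * a
        P = F @ P @ F.T + Q
        if not np.isnan(pos_fix[k]):
            # Update: pull the state toward the position fix via the Kalman gain.
            S = H @ P @ H.T + R
            K = P @ H.T @ np.linalg.inv(S)
            x = x + K @ (pos_fix[k] - H @ x)
            P = (np.eye(2) - K @ H) @ P
        est[k] = x[0]
    return est
```

With no fixes (all NaN), the filter reduces to plain double integration and drifts; a fix every few hundred samples bounds the drift, because the velocity error also becomes observable through the position residuals.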

With regard to a real application, it must also be considered that, unlike during real recordings, the subjects in this study did not have a support bar available to hold on to (see Fig. 1a). Holding on to the support bar restricts the possible movement, which leads to smaller motion and further complicates motion compensation with IMUs.

In our study, we focus only on signal noise as one of the most severe IMU measurement errors. In the future, similar simulations might be performed in order to determine further necessary specifications.

V. Conclusion

With the presented simulation study, we have shown the feasibility and limitations of using IMUs for motion-compensated CT reconstruction. While all proposed methods are capable of reducing motion artifacts in the noise-free case, our noise analysis shows that an application in real settings is not yet feasible with currently available sensors. Our simulation analysis nevertheless provides important insights that will be of considerable value in future research. If developments continue to progress rapidly and a robust sensor with a low noise level and a high measurement range is developed, our method could be applied in a real setting.

Acknowledgments

This work was supported by the NIH under grant 5R01AR065248-03 and Shared Instrument Grant No. S10 RR026714 supporting the zeego@StanfordLab, by the DFG under grant RTG 1773, and by the BMBF under grant AIT4Surgery. B. Eskofier acknowledges the support of the DFG within the framework of the Heisenberg professorship programme (ES 434/8-1).

Contributor Information

Jennifer Maier, Pattern Recognition Lab, Machine Learning and Data Analytics Lab, Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU), Erlangen, Germany.

Marlies Nitschke, Machine Learning and Data Analytics Lab, Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU), Erlangen, Germany.

Jang-Hwan Choi, Division of Mechanical and Biomedical Engineering, Graduate Program in System Health Science and Engineering, Ewha Womans University, Seoul, South Korea.

Garry Gold, Department of Radiology, Stanford University School of Medicine, CA, USA.

Rebecca Fahrig, Siemens Healthcare GmbH, Forchheim, Germany.

Bjoern M. Eskofier, Machine Learning and Data Analytics Lab, Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU), Erlangen, Germany.

Andreas Maier, Pattern Recognition Lab, Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU), Erlangen, Germany.

References

- [1] Maier A et al., “Analysis of Vertical and Horizontal Circular C-Arm Trajectories,” in Proc SPIE, vol. 7961, 2011, pp. 796123-1–8.

- [2] Segal NA et al., “Correlations of medial joint space width on fixed-flexed standing computed tomography and radiographs with cartilage and meniscal morphology on magnetic resonance imaging,” Arthritis Care & Research, vol. 68, no. 10, pp. 1410–1416, 2016.

- [3] Broos M et al., “Geometric 3d analyses of the foot and ankle using weight-bearing and non weight-bearing cone-beam ct images: The new standard?” European Journal of Radiology, vol. 138, p. 109674, 2021.

- [4] Richter M et al., “Results of more than 11,000 scans with weightbearing ct – impact on costs, radiation exposure, and procedure time,” Foot and Ankle Surgery, vol. 26, no. 5, pp. 518–522, 2020.

- [5] Maier J et al., “Inertial measurements for motion compensation in weight-bearing cone-beam ct of the knee,” in MICCAI, 2020, pp. 14–23.

- [6] Segal NA et al., “Comparison of tibiofemoral joint space width measurements from standing ct and fixed flexion radiography,” Journal of Orthopaedic Research, vol. 35, no. 7, pp. 1388–1395, 2017.

- [7] Kothari MD et al., “The relationship of three-dimensional joint space width on weight-bearing ct with pain and physical function,” Journal of Orthopaedic Research, vol. 38, no. 6, pp. 1333–1339, 2020.

- [8] Berger M et al., “Marker-free motion correction in weight-bearing cone-beam CT of the knee joint,” Med Phys, vol. 43, no. 3, pp. 1235–1248, 2016.

- [9] Sisniega A et al., “Motion compensation in extremity cone-beam CT using a penalized image sharpness criterion,” Phys Med Biol, vol. 62, no. 9, pp. 3712–3734, 2017.

- [10] Bier B et al., “Epipolar Consistency Conditions for Motion Correction in Weight-Bearing Imaging,” in BVM 2017, 2017, pp. 209–214.

- [11] —, “Detecting anatomical landmarks for motion estimation in weight-bearing imaging of knees,” in Machine Learning for Medical Imaging Reconstruction, 2018, pp. 83–90.

- [12] —, “Range Imaging for Motion Compensation in C-Arm Cone-Beam CT of Knees under Weight-Bearing Conditions,” J Imaging, vol. 4, no. 1, pp. 1–16, 2018.

- [13] Choi J-H et al., “Fiducial marker-based correction for involuntary motion in weight-bearing C-arm CT scanning of knees. Part I. Numerical model-based optimization,” Med Phys, vol. 40, no. 9, pp. 091905-1–091905-12, 2013.

- [14] —, “Fiducial marker-based correction for involuntary motion in weight-bearing C-arm CT scanning of knees. II. Experiment,” Med Phys, vol. 41, no. 6, pp. 061902-1–061902-16, 2014.

- [15] Jost G et al., “A novel approach to navigated implantation of s-2 alar iliac screws using inertial measurement units,” J Neurosurg: Spine, vol. 24, no. 3, pp. 447–453, 2016.

- [16] Lemammer I et al., “Online mobile c-arm calibration using inertial sensors: a preliminary study in order to achieve cbct,” Int J Comput Assist Radiol Surg, vol. 15, pp. 213–224, 2019.

- [17] Delp SL et al., “Opensim: Open-source software to create and analyze dynamic simulations of movement,” IEEE Trans Biomed Eng, vol. 54, no. 11, pp. 1940–1950, 2007.

- [18] Hamner SR et al., “Muscle contributions to propulsion and support during running,” J Biomech, vol. 43, no. 14, pp. 2709–2716, 2010.

- [19] Seth A et al., “Opensim: Simulating musculoskeletal dynamics and neuromuscular control to study human and animal movement,” PLOS Comput Biol, vol. 14, no. 7, pp. 1–20, 2018.

- [20] Segars WP et al., “4d xcat phantom for multimodality imaging research,” Med Phys, vol. 37, no. 9, pp. 4902–4915, 2010.

- [21] Maier A et al., “Fast Simulation of X-ray Projections of Spline-based Surfaces using an Append Buffer,” Phys Med Biol, vol. 57, no. 19, pp. 6193–6210, 2012.

- [22] Kautz T et al., “Activity recognition in beach volleyball using a deep convolutional neural network,” Data Min Knowl Discov, vol. 31, no. 6, pp. 1678–1705, 2017.

- [23] van den Bogert AJ et al., “A method for inverse dynamic analysis using accelerometry,” J Biomech, vol. 29, no. 7, pp. 949–954, 1996.

- [24] De Sapio V, Advanced Analytical Dynamics: Theory and Applications. Cambridge University Press, 2017.

- [25] Kok M et al., “Using Inertial Sensors for Position and Orientation Estimation,” Foundations and Trends® in Signal Processing, vol. 11, no. 1-2, pp. 1–153, 2017.

- [26] Woodman OJ, “An introduction to inertial navigation,” University of Cambridge, Computer Laboratory, Tech. Rep. UCAM-CL-TR-696, 2007.

- [27] Bosch Sensortec, BMI160 - Data sheet, 11 2020, accessed: 2021-01-18. [Online]. Available: https://www.bosch-sensortec.com/products/motion-sensors/imus/bmi160.html

- [28] Thies M et al., “Automatic orientation estimation of inertial sensors in c-arm ct projections,” Curr Dir Biomed Eng, vol. 5, no. 1, pp. 195–198, 2019.

- [29] Schaefer S et al., “Image deformation using moving least squares,” ACM Trans Graph, vol. 25, no. 3, pp. 533–540, 2006.

- [30] Zhu Y and Gortler SJ, “3d deformation using moving least squares,” Harvard Computer Science, Technical report: TR-10-07, 2007.

- [31] Maier A et al., “CONRAD - A software framework for cone-beam imaging in radiology,” Med Phys, vol. 40, no. 11, p. 111914, 2013.

- [32] Wang Z et al., “Image quality assessment: from error visibility to structural similarity,” IEEE Trans Image Process, vol. 13, no. 4, pp. 600–612, 2004.

- [33] Glaser C and Putz R, “Functional anatomy of articular cartilage under compressive loading quantitative aspects of global, local and zonal reactions of the collagenous network with respect to the surface integrity,” Osteoarthritis Cartilage, vol. 10, no. 2, pp. 83–99, 2002.

- [34] Darvishia A and Najafi K, “Analysis and design of super-sensitive stacked (s3) resonators for low-noise pitch/roll gyroscopes,” in 2019 IEEE INERTIAL, 2019, pp. 1–4.

- [35] Masu K et al., “Cmos-mems based microgravity sensor and its application,” ECS Trans, vol. 97, no. 5, p. 91, 2020.

- [36] Yamane D et al., “A mems accelerometer for sub-mg sensing,” Sensor Mater, vol. 31, no. 9, pp. 2883–2894, 2019.

- [37] Ligorio G and Sabatini AM, “Extended kalman filter-based methods for pose estimation using visual, inertial and magnetic sensors: Comparative analysis and performance evaluation,” Sensors, vol. 13, no. 2, pp. 1919–1941, 2013.