Abstract

Contemporary bioinformatic and chemoinformatic capabilities hold promise to reshape knowledge management, analysis and interpretation of data in natural products research. Currently, reliance on a disparate set of non-standardized, insular, and specialized databases presents a series of challenges for data access, both within the discipline and for integration and interoperability between related fields. The fundamental elements of exchange are referenced structure-organism pairs that establish relationships between distinct molecular structures and the living organisms from which they were identified. Consolidating and sharing such information via an open platform has strong transformative potential for natural products research and beyond. This is the ultimate goal of the newly established LOTUS initiative, which has now completed the first steps toward the harmonization, curation, validation and open dissemination of 750,000+ referenced structure-organism pairs. LOTUS data is hosted on Wikidata and regularly mirrored on https://lotus.naturalproducts.net. Data sharing within the Wikidata framework broadens data access and interoperability, opening new possibilities for community curation and evolving publication models. Furthermore, embedding LOTUS data into the vast Wikidata knowledge graph will facilitate new biological and chemical insights. The LOTUS initiative represents an important advancement in the design and deployment of a comprehensive and collaborative natural products knowledge base.

Research organism: Other

Introduction

Evolution of electronic natural products resources

Natural Products (NP) research is a transdisciplinary field with wide-ranging interests: from fundamental structural aspects of naturally occurring molecular entities to their effects on living organisms and extending to the study of chemically mediated interactions within entire ecosystems. Defining the ‘natural’ qualifier is a complex task (Ducarme and Couvet, 2020; All natural, 2007). We thus adopt here a broad definition of a NP as any chemical entity found in a living organism; the association of the two is hereafter referred to as a structure-organism pair. An additional and fundamental element of a structure-organism pair is a reference to the experimental evidence that establishes the linkages between the chemical structure and the biological organism. A future-oriented electronic NP resource should contain fully-referenced structure-organism pairs.

Reliance on data from the NP literature presents many challenges. The assembly and integration of NP occurrences into an inter-operative platform relies primarily on access to a heterogeneous set of databases (DB) whose content and maintenance status are critical factors in this dependency (Tsugawa, 2018). A tertiary inter-operative NP platform is thus dependent on a secondary set of data that has been selectively annotated into a DB from primary literature sources. The experimental data itself reflects a complex process involving collection or sourcing of natural material (and establishment of its identity), a series of material transformation and separation steps and ultimately the chemical or spectral elucidation of isolates. The specter of human error and the potential for the introduction of biases are present at every phase of this journey. These include publication biases (Lee et al., 2013), such as emphasis on novel and/or bioactive structures in the review process, or, in DB assembly stages, with selective focus on a specific compound class or a given taxonomic range, or disregard for annotation of other relevant evidence that may have been presented in primary sources. Temporal biases also exist: a technological ‘state-of-the-art’ when published can eventually be recast as anachronistic.

The advancement of NP research has always relied on the development of new technologies. In the past century alone, the time required for the unambiguous identification of new NP entities from biological matrices has been reduced from years to days, and in the past few decades, the scale at which new NP discoveries are being reported has increased exponentially. Without a means to access and process these disparate NP data points, information is fragmented and scientific progress is impaired (Balietti et al., 2015). To this end, contemporary bioinformatic tools enable the (re-)interpretation and (re-)annotation of (existing) datasets documenting molecular aspects of biodiversity (Mongia and Mohimani, 2021; Jarmusch et al., 2020).

While large, well-structured and freely accessible DB exist, they are often concerned primarily with chemical structures (e.g. PubChem (Kim et al., 2019), with over 100 M entries) or biological organisms (e.g. GBIF (GBIF, 2020), with over 1900 M entries), but scarce interlinkages limit their application for documentation of NP occurrence(s). Currently, no open, cross-kingdom, comprehensive and computer-interpretable electronic NP resource links NP and their containing organisms, along with referral to the underlying experimental work. This shortcoming breaks the crucial evidentiary link required for tracing information back to the original data and assessing its quality. Even valuable commercially available efforts for compiling NP data, such as the Dictionary of Natural Products (DNP), can lack proper documentation of these critical links.

Pioneering efforts to address such challenges led to the establishment of KNApSAck (Shinbo et al., 2006), which is likely the first public, curated electronic NP resource of referenced structure-organism pairs. KNApSAck (Afendi et al., 2012) currently contains 50,000+ structures and 100,000+ structure-organism pairs. However, the organism field is not standardized and access to the data is not straightforward. Another early-established electronic NP resource is the NAPRALERT dataset (Graham and Farnsworth, 2010), which was compiled over five decades from the NP literature, gathering and annotating data derived from over 200,000 primary literature sources. This dataset contains 200,000+ distinct compound names and structural elements, along with 500,000+ records of distinct, fully-cited structure-organism pairs. In total, NAPRALERT contains over 900,000 such records, due to equivalent structure-organism pairs reported in different citations. However, NAPRALERT is not an open platform and employs an access model that provides only limited free searches of the dataset. Finally, the NPAtlas (van Santen et al., 2019; van Santen et al., 2022) is a more recent project complying with the FAIR (Findability, Accessibility, Interoperability, and Reuse) guidelines for digital assets (Wilkinson et al., 2016) and offering convenient web access. While the NPAtlas allows retrieval and encourages submission of compounds with their biological source, it focuses on microbial NP and ignores a wide range of biosynthetically active organisms found in the Plantae kingdom.

The LOTUS initiative seeks to address the aforementioned shortcomings. Building on the experience gained through the establishment of the recently published COlleCtion of Open NatUral producTs (COCONUT) (Sorokina et al., 2021a) regarding the aggregation and curation of NP structural databases, this savoir-faire was expanded to accommodate biological organisms and scientific references. After extensive data curation and harmonization of over 40 electronic resources, pairs characterizing a NP occurrence were standardized at the chemical, biological and reference levels. At its current stage of development, LOTUS disseminates 750,000+ referenced structure-organism pairs. These efforts and experiences represent an intensive preliminary curatorial phase and the first major step towards providing a high-quality, computer-interpretable knowledge base capable of transforming NP research data management from a classical (siloed) database approach to an optimally shared resource.

Accommodating principles of FAIRness and TRUSTworthiness for natural products knowledge management

Aware of the multi-faceted pitfalls associated with implementing, using and maintaining classical scientific DBs (Helmy et al., 2016), and to enhance current and future sharing options, the LOTUS initiative selected the Wikidata platform for disseminating its resources. The idea of using wikis to disseminate databases is not new and has multiple underlying advantages (Finn et al., 2012). Since its creation, Wikidata has focused on cross-disciplinary and multilingual support. Wikidata is curated and governed collaboratively by a global community of volunteers, about 20,000 of whom contribute monthly. Wikidata currently contains more than 1 billion statements in the form of subject-predicate-object triples. Triples are machine-interpretable and can be enriched with qualifiers and references. Within Wikidata, data triples correspond to approximately 100 million entries, which can be grouped into classes as diverse as countries, songs, disasters, or chemical compounds. The statements are closely integrated with Wikipedia and serve as the source for many of its infoboxes. Various workflows have been established for reporting such classes, particularly those of interest to life sciences, such as genes, proteins, diseases, drugs, or biological taxa (Waagmeester et al., 2020).

Building on the principles and experiences described above, the present report introduces the development and implementation of the LOTUS workflow for NP occurrence curation and dissemination, which applies both FAIR and TRUST (Transparency, Responsibility, User focus, Sustainability and Technology) principles (Lin et al., 2020). LOTUS data upload and retrieval procedures ensure optimal accessibility by the research community, allowing any researcher to contribute, edit and reuse the data with a clear and open CC0 license (Creative Commons 0).

Despite many advantages, Wikidata hosting has some notable, yet manageable drawbacks. While its SPARQL query language offers a powerful way to query available data, it can also appear intimidating to the less experienced user. Furthermore, some typical queries of molecular electronic NP resources such as structural or spectral searches are not yet available in Wikidata. To address these shortcomings, LOTUS is hosted in parallel at https://lotus.naturalproducts.net (LNPN) within the naturalproducts.net ecosystem. The Natural Products Online website is a portal for open-source and open-data resources for NP research. In addition to the generalistic COCONUT and LNPN databases, the portal will enable hosting of arbitrary and skinned collections, themed in particular by species or taxonomic clade, by geographic location or by institution, together with a range of cheminformatics tools for NP research. LNPN is periodically updated with the latest LOTUS data. This dual hosting provides an integrated, community-curated and vast knowledge base (via Wikidata), as well as a NP community-oriented product with tailored search modes (via LNPN). The multiple data interaction options should establish the basis for the transparent and sustainable access, sharing and creation of knowledge on NP occurrence.

The LOTUS initiative was initially launched to answer our need to access the most comprehensive compilation of biological occurrences of NP. Indeed, we recently highlighted the value of considering the taxonomic dimension when annotating metabolites (Rutz et al., 2019). This being said, many other concrete applications can result from the scientific community's access to the LOTUS initiative data. For example, such a resource will facilitate the exploration of eco-evolutionary mechanisms at the molecular level (Defossez et al., 2021). In terms of drug discovery, this resource is extremely valuable to orient and guide researchers investigating a given structure. On the same theme, LOTUS is expected to be the perfect place to encounter ‘molecular arguments’ for biodiversity conservation (Campbell, 2003). Researchers interested in the history of science will be able, through this kind of resource, to gain a preliminary view of the temporal evolution of disciplines such as pharmacognosy. More generally, the objective of the LOTUS initiative is to prepare the ground for an electronic and globally accessible resource that would be the counterpart, at the metabolite level, of established databases linking proteins to biological organisms (e.g. UniProt) and genes to biological organisms (e.g. GenBank). Once such an objective is reached, it will be possible to interconnect the three central objects of the living, that is, metabolites, proteins and genes, through the common entity of these resources, the biological organism. Such an interconnection, fostering cross-fertilization of the fields of chemistry, biology and associated disciplines, is desirable and necessary to advance towards a better understanding of Life.

Results and discussion

This section is structured as follows: first, we present an overview of the LOTUS initiative at its current stage of development. The central curation and dissemination elements of the LOTUS initiative are then explained in detail. The third section addresses the interaction modes between end-users and LOTUS, including data retrieval, addition, and editing. Some examples of how LOTUS data can be used to answer research questions or develop hypotheses are given. The final section is dedicated to the interpretation of LOTUS data and illustrates the dimensions and qualities of the current LOTUS dataset from chemical and biological perspectives.

Blueprint of the LOTUS initiative

Building on the standards established by the related WikiProjects on Wikidata (Chemistry, Taxonomy and Source Metadata), a NP chemistry-oriented subproject was created (Chemistry/Natural products). Its central data consists of three minimal sufficient objects:

A chemical structure object, with associated Simplified Molecular Input Line Entry System (SMILES) (Weininger, 1988), International Chemical Identifier (InChI) (Heller et al., 2013) and InChIKey (a hashed version of the InChI).

A biological organism object, with associated taxon name, the taxonomic DB where it was described and the taxon ID in the respective DB.

A reference object describing the structure-organism pair, with the associated article title and a Digital Object Identifier (DOI), a PubMed (PMID), or PubMed Central (PMCID) ID.
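
As a minimal sketch, the three objects listed above can be modelled as plain records. The class and field names below are hypothetical and chosen only for illustration; they do not reproduce the data model of the LOTUS code base. The only check shown is the general layout of a standard InChIKey (14 letters, 10 letters and 1 letter, separated by hyphens).

```python
import re
from dataclasses import dataclass
from typing import Optional

# Layout of a standard InChIKey: 14 letters, hyphen, 10 letters, hyphen, 1 letter.
INCHIKEY_PATTERN = re.compile(r"[A-Z]{14}-[A-Z]{10}-[A-Z]")


@dataclass(frozen=True)
class Structure:
    """Chemical structure object (hypothetical field names)."""
    smiles: str
    inchi: str
    inchikey: str

    def __post_init__(self) -> None:
        # Reject identifiers that do not have the InChIKey layout.
        if not INCHIKEY_PATTERN.fullmatch(self.inchikey):
            raise ValueError(f"malformed InChIKey: {self.inchikey!r}")


@dataclass(frozen=True)
class Organism:
    """Biological organism object (hypothetical field names)."""
    taxon_name: str
    taxon_db: str  # taxonomic DB in which the taxon was described
    taxon_id: str  # identifier of the taxon in that DB


@dataclass(frozen=True)
class Reference:
    """Reference object; at least one persistent identifier is required."""
    title: str
    doi: Optional[str] = None
    pmid: Optional[str] = None
    pmcid: Optional[str] = None

    def __post_init__(self) -> None:
        if not (self.doi or self.pmid or self.pmcid):
            raise ValueError("a reference needs a DOI, PMID, or PMCID")


@dataclass(frozen=True)
class ReferencedPair:
    """A referenced structure-organism pair built from the three objects."""
    structure: Structure
    organism: Organism
    reference: Reference
```

Making the records immutable (`frozen=True`) reflects the idea that a pair is identified by its content, so two records with the same structure, organism and reference compare equal.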

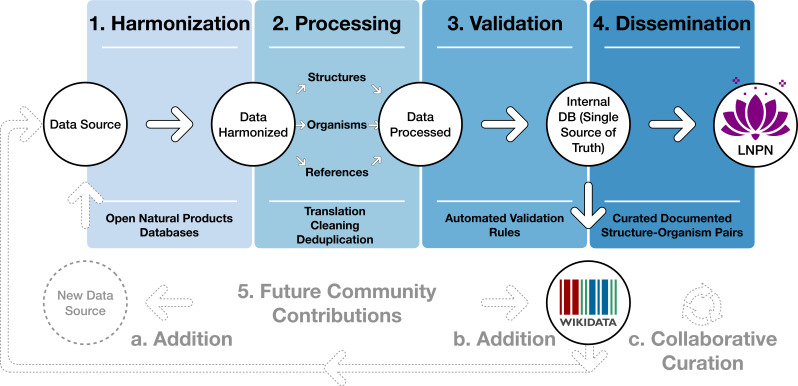

As data formats are largely inhomogeneous among existing electronic NP resources, fields related to chemical structure, biological organism and references are variable and essentially not standardized. Therefore, LOTUS implements multiple stages of harmonization, processing, and validation (Figure 1, stages 1–3). LOTUS employs a Single Source of Truth (SSOT) to ensure data reliability and continuous availability of the latest curated version of LOTUS data in both Wikidata and LNPN (Figure 1, stage 4). The SSOT approach consists of a PostgreSQL DB that structures links and data schemes such that every data element has a single place. The LOTUS processing pipeline is tailored to efficiently include and diffuse novel or curated data directly from new sources or at the Wikidata level. This iterative workflow relies both on data addition and retrieval actions as described in the Data Interaction section. The overall process leading to referenced and curated structure-organism pairs is illustrated in Figure 1 and detailed hereafter.

Figure 1. Blueprint of the LOTUS initiative.

Data undergo a four-stage process: (1) Harmonization, (2) Processing, (3) Validation, and (4) Dissemination. The process was designed to incorporate future contributions (5), either by the addition of new data from within Wikidata (a) or new sources (b) or via curation of existing data (c). The figure is available under the CC0 license at https://commons.wikimedia.org/wiki/File:Lotus_initiative_1_blueprint.svg.

By design, this iterative process fosters community participation, essential to efficiently document NP occurrences. All stages of the workflow are described on the git sites of the LOTUS initiative at https://github.com/lotusnprod and in the methods. At the time of writing, 750,000+ LOTUS entries contained a curated chemical structure, biological organism and reference and were available on both Wikidata and LNPN. As the LOTUS data volume is expected to increase over time, a frozen (as of 2021-12-20), tabular version of this dataset with its associated metadata is made available at https://doi.org/10.5281/zenodo.5794106 (Rutz et al., 2021a).

Data harmonization

Multiple data sources were processed as described hereafter. All publicly accessible electronic NP resources included in COCONUT that contain referenced structure-organism pairs were considered as initial input. The data were complemented with COCONUT’s own referenced structure-organism pairs (Sorokina and Steinbeck, 2020a), as well as the following additional electronic NP resources: Dr. Duke (U.S. Department of Agriculture, 1992), Cyanometdb (Jones et al., 2021), Datawarrior (Sander et al., 2015), a subset of NAPRALERT, Wakankensaku (Wakankenaku, 2020) and DiaNat-DB (Madariaga-Mazón et al., 2021).

The contacts for the electronic NP resources not explicitly licensed as open were individually approached for permission to access and reuse data. A detailed list of data sources and related information is available as Appendix 1. All necessary scripts for data gathering and harmonization can be found in the lotus-processor repository in the src/1_gathering directory, and the process is detailed in the gathering subsection of the corresponding methods section. All subsequent iterations including new data sources, either updated information from the same data sources or new data, will involve a comparison with the previously gathered data at the SSOT level to ensure that the data is only curated once.
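
The "curated only once" comparison can be illustrated with a small sketch. It uses Python's built-in `sqlite3` module as a stand-in for the PostgreSQL SSOT, and the table name, column names and functions are hypothetical; the actual LOTUS schema and comparison logic are not described here and may differ.

```python
import sqlite3
from typing import Iterable, List, Tuple

RawEntry = Tuple[str, str, str]  # (structure, organism, reference) as gathered


def open_ssot(path: str = ":memory:") -> sqlite3.Connection:
    """Open a toy SSOT; each raw triple can be stored only once."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS gathered (
            source        TEXT NOT NULL,
            structure_raw TEXT NOT NULL,
            organism_raw  TEXT NOT NULL,
            reference_raw TEXT NOT NULL,
            UNIQUE (structure_raw, organism_raw, reference_raw)
        )
        """
    )
    return conn


def add_new_entries(
    conn: sqlite3.Connection, source: str, entries: Iterable[RawEntry]
) -> List[RawEntry]:
    """Store entries not seen before and return them for curation."""
    new_entries: List[RawEntry] = []
    for structure, organism, reference in entries:
        seen = conn.execute(
            "SELECT 1 FROM gathered WHERE structure_raw = ? "
            "AND organism_raw = ? AND reference_raw = ?",
            (structure, organism, reference),
        ).fetchone()
        if seen is None:
            conn.execute(
                "INSERT INTO gathered VALUES (?, ?, ?, ?)",
                (source, structure, organism, reference),
            )
            new_entries.append((structure, organism, reference))
    conn.commit()
    return new_entries
```

Only the entries returned by `add_new_entries` would be passed on to the processing stage; triples already present from an earlier iteration are skipped.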

Data processing and validation

As shown in Figure 1, data curation consisted of three stages: harmonization, processing, and validation. After the harmonization stage, each of the three central objects (chemical compounds, biological organisms, and references) was processed, as described in the related methods section. Given the data size (2.5M+ initial entries), manual validation was unfeasible. Curating the references was a particularly challenging part of the process. Whereas organisms are typically reported by at least their vernacular or scientific denomination and chemical structures via their SMILES, InChI, InChIKey or image (not covered in this work), references suffer from largely insufficient reporting standards. Despite poor standardization of the initial reference field, proper referencing remains an indispensable way to establish the validity of structure-organism pairs. Better reporting practices, supported by tools such as Scholia (Blomqvist et al., 2017; Rasberry et al., 2019) and relying on Wikidata, Fatcat, or Semantic Scholar should improve reference-related information retrieval in the future.

In addition to curating the entries during data processing, 420 referenced structure-organism pairs were selected for manual validation. An entry was considered valid if: (i) the structure (in the form of any structural descriptor that could be linked to the final sanitized InChIKey) was described in the reference; (ii) the containing organism (as any organism descriptor that could be linked to the accepted canonical name) was described in the reference; and (iii) the reference described the occurrence of the chemical structure in the biological organism. More details are available in the related methods section. This process allowed us to establish rules for automatic filtering and validation of the entries. The parameters of the automatic filtering are available as a function (filter_dirty.R) and are further described in the related methods section. The automatic filtering was then applied to all entries. To confirm the efficacy of the filtering process, a new subset of 100 diverse, automatically curated and automatically validated entries was manually checked, yielding a rate of 97% of true positives. The detailed results of the two manual validation steps are reported in Appendix 2. The resulting data is also available in the dataset shared at https://doi.org/10.5281/zenodo.5794106 (Rutz et al., 2021a). Table 1 shows an example of a referenced structure-organism pair before and after curation. This process resolved the structure to an InChIKey, the organism to a valid taxonomic name and the reference to a DOI, thereby completing the establishment of the essential referenced structure-organism pair.
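
The three manual validation criteria and the reported true positive rate can be expressed compactly. The sketch below is an illustration only: the names are hypothetical, and it is not the filter_dirty.R function, whose parameters are described in the methods.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ManualCheck:
    """Outcome of manually checking one entry (hypothetical field names)."""
    structure_in_reference: bool  # criterion (i)
    organism_in_reference: bool   # criterion (ii)
    occurrence_documented: bool   # criterion (iii)

    @property
    def is_valid(self) -> bool:
        # An entry is valid only if all three criteria hold.
        return (
            self.structure_in_reference
            and self.organism_in_reference
            and self.occurrence_documented
        )


def true_positive_rate(checks: Iterable[ManualCheck]) -> float:
    """Fraction of automatically validated entries confirmed manually."""
    checked = list(checks)
    if not checked:
        raise ValueError("no checked entries")
    return sum(check.is_valid for check in checked) / len(checked)
```

With 97 confirmed entries out of the 100 checked, `true_positive_rate` returns 0.97, matching the rate reported above.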

Table 1. Example of a referenced structure-organism pair before and after curation.

| | Structure | Organism | Reference |
|---|---|---|---|
| Before curation | Cyathocaline | Stem bark of Cyathocalyx zeylanica CHAMP. ex HOOK. f. & THOMS. (Annonaceae) | Wijeratne E. M. K., de Silva L. B., Kikuchi T., Tezuka Y., Gunatilaka A. A. L., Kingston D. G. I., J. Nat. Prod., 58, 459–462 (1995). |
| After curation | VFIIVOHWCNHINZ-UHFFFAOYSA-N | Cyathocalyx zeylanicus | 10.1021/NP50117A020 |

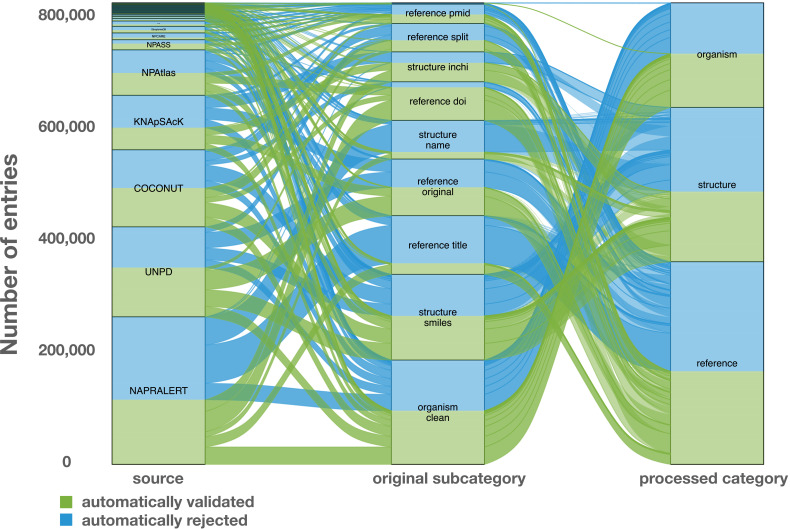

Challenging examples encountered during the development of the curation process were compiled in an edge cases table (tests/tests.tsv) to allow for automated unit testing. These tests allow a continuous revalidation of any change made to the code, ensuring that corrected errors will not reappear. The alluvial plot in Figure 2 illustrates the individual contribution of each source and original subcategory that led to the processed categories: structure, organism, and reference.

Figure 2. Alluvial plot of the data transformation flow within LOTUS during the automated curation and validation processes.

The figure also reflects the relative proportions of the data stream in terms of the contributions from the various sources (‘source’ block, left), the composition of the harmonized subcategories (‘original subcategory’ block, middle) and the validated data after curation (‘processed category’ block, right). Automatically validated entries are represented in green, rejected entries in blue. The figure is available under the CC0 license at https://commons.wikimedia.org/wiki/File:Lotus_initiative_1_alluvial_plot.svg.

The figure highlights, for example, the essential contribution of the reference DOI category to the final validated entries. A similar pattern can be seen concerning structures, where the validation rate of structural identifiers is higher than chemical names. The combination of the results of the automated curation pipeline and the manually curated entries led to the establishment of four categories (manually validated, manually rejected, automatically validated and automatically rejected) of the referenced structure-organism pairs that formed the processed part of the SSOT. Out of a total of 2.5M+ initial pairs, the manual and automatic validation retained 750,000+ pairs (approximately 30%), which were then selected for dissemination on Wikidata. Among validated entries, multiple ones were redundant among the source databases, thus also explaining the decrease of entries between the initial pairs and validated ones. Moreover, because data quality was favored over quantity, the number of rejected entries is high. Among them, multiple correct entries were certainly falsely rejected, but still not disseminated. All rejected entries were kept aside for later manual inspection and validation. These are publicly available at https://doi.org/10.5281/zenodo.5794597 (Rutz et al., 2021b). In the end, the disseminated data contained 290,000+ unique chemical structures, 40,000+ distinct organisms and 75,000+ references.

Data dissemination

Research worldwide can benefit the most when all results of published scientific studies are fully accessible immediately upon publication (Agosti and Johnson, 2002). This concept is considered the foundation of scientific investigation and a prerequisite for the effective direction of new research efforts based on prior information. To achieve this, research results have to be made publicly available and reusable. As computers are now the main investigation tool for a growing number of scientists, all research data including those in publications should be disseminated in computer-readable format, following the FAIR principles. LOTUS uses Wikidata as a repository for referenced structure-organism pairs, as this allows documented research data to be integrated with a large, pre-existing and extensible body of chemical and biological knowledge. The dynamic nature of Wikidata encourages the continuous curation of deposited data through the user community. Independence from individual and institutional funding represents another major advantage of Wikidata. The Wikidata knowledge base and the option to use elaborate SPARQL queries allow the exploration of the dataset from a virtually unlimited number of angles. The openness of Wikidata also offers unprecedented opportunities for community curation, which will support, if not guarantee, a dynamic and evolving data repository. At the same time, certain limitations of this approach can be anticipated. Despite (or possibly due to) their power, SPARQL queries can be complex and potentially require an in-depth understanding of the models and data structure. This involves a steep learning curve which can discourage some end-users. Furthermore, traditional ways to query electronic NP resources such as structural or spectral searches are currently not within the scope of Wikidata and are thus addressed in LNPN.

Using the pre-existing COCONUT template, LNPN hosting allows the user to perform structural searches by directly drawing a molecule, thereby addressing the current lack of such structural search possibilities in Wikidata. Since metabolite profiling by Liquid Chromatography (LC) - Mass Spectrometry (MS) is now routinely used for the chemical composition assessment of natural extracts, future versions of LOTUS and COCONUT are envisioned to be augmented by predicted MS spectra and hosted at https://naturalproducts.net to allow mass and spectral-based queries. Note that such a spectral database is already available at https://doi.org/10.5281/zenodo.5607264 (Allard et al., 2021). To facilitate queries focused on specific taxa (e.g. ‘return all molecules found in the Asteraceae family’), a unified taxonomy is paramount. As the taxonomy of living organisms is a complex and constantly evolving field, all the taxon identifiers from all accepted taxonomic DB for a given taxon name were kept. Initiatives such as the Open Tree of Life (OTL) (Rees and Cranston, 2017) will help to gradually reduce these discrepancies, and the Wikidata platform can and does support such developments. OTL also benefits from regular expert curation and new data. As the taxonomic identifier property for this resource did not exist in Wikidata, its creation was requested and obtained. The property is now available as ‘Open Tree of Life ID’ (P9157).

Following the previously described curation process, all validated entries have been made available through Wikidata and LNPN. LNPN will be regularly mirroring Wikidata LOTUS through the SSOT as described in Figure 1.

User interaction with LOTUS data

The possibilities to interact with the LOTUS data are numerous. The following gives examples of how to retrieve, add and edit LOTUS data.

Data retrieval

LOTUS data can be queried and retrieved either directly in Wikidata or on LNPN, both of which have distinct advantages. While Wikidata offers flexible and powerful query capacities at the cost of potential complexity, LNPN has a graphical user interface with capabilities of drawing chemical structures, simplified structural or biological filtering and advanced chemical descriptors, albeit with a more rigid structure. For bulk download, a frozen version of LOTUS data (timestamp of 2021-12-20) is also available at https://doi.org/10.5281/zenodo.5794106 (Rutz et al., 2021a). More refined approaches to the direct interrogation of the up-to-date LOTUS data both in Wikidata and LNPN are detailed hereafter.

Wikidata

The easiest way to search for NP occurrence information in Wikidata is by typing the name of a chemical structure directly into the ‘Search Wikidata’ field, which (for left-to-right languages) can be found in the upper right corner of the Wikidata homepage or any other Wikidata page. For example, by typing ‘erysodine’, the user will land on the page of this compound (Q27265641). Scrolling down to the ‘found in taxon’ statement will allow the user to view the biological organisms reported to contain this NP (Figure 3). Clicking the reference link under each taxon name links to the publication(s) documenting the occurrence.

Figure 3. Illustration of the ‘found in taxon’ statement section on the Wikidata page of erysodine Q27265641 showing a selection of erysodine-containing taxa and the references documenting these occurrences.

The typical approach to more elaborate querying involves writing SPARQL queries using the Wikidata Query Service or another direct connection to a SPARQL endpoint. Table 2 contains some examples, from simple to more elaborate queries, demonstrating what can be done using this approach. The full-text queries with explanations are included in Supplementary file 1.

Table 2. Potential questions about structure-organism relationships and corresponding Wikidata queries.

| Question | Wikidata SPARQL query |
|---|---|
| What are the compounds present in Mouse-ear cress (Arabidopsis thaliana) or its child taxa? | https://w.wiki/4Vcv |
| Which organisms are known to contain β-sitosterol? | https://w.wiki/4VFn |
| Which organisms are known to contain stereoisomers of β-sitosterol? | https://w.wiki/4VFq |
| Which pigments are found in which taxa, according to which reference? | https://w.wiki/4VFx |
| What are examples of organisms where compounds were found in an organism sharing the same parent taxon, but not in the organism itself? | https://w.wiki/4Wt3 |
| Which Zephyranthes species lack compounds known from at least two species in the genus? | https://w.wiki/4VG3 |
| How many compounds are structurally similar to compounds labeled as antibiotics? (grouped by the parent taxon of the containing organism) | https://w.wiki/4VG4 |
| Which organisms contain indolic scaffolds? Count occurrences, group and order the results by the parent taxon. | https://w.wiki/4VG9 |
| Which compounds with known bioactivities were isolated from Actinobacteria, between 2014 and 2019, with related organisms and references? | https://w.wiki/4VGC |
| Which compounds labeled as terpenoids were found in Aspergillus species, between 2010 and 2020, with related references? | https://w.wiki/4VGD |
| Which are the available referenced structure-organism pairs on Wikidata? (example limited to 1000 results) | https://w.wiki/4VFh |

The queries presented in Table 2 are only selected examples, and many other ways of interrogating LOTUS can be formulated. Generic queries can be used, for example, for hypothesis generation when starting a research project. For instance, a generic SPARQL query - listed in Table 2 as “Which are the available referenced structure-organism pairs on Wikidata?” - retrieves all structures, identified by their InChIKey (P235), which contain ‘found in taxon’ (P703) statements that are stated in (P248) a bibliographic reference: https://w.wiki/4VFh. Data can then be exported in various formats, such as classical tabular formats, json, or html tables (see Download tab on the lower right of the query frame). At the time of writing (2021-12-20), this query (without the LIMIT 1000) returned 951,800 entries; a frozen query result is available at https://doi.org/10.5281/zenodo.5668854 (Rutz et al., 2021d).
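
For readers who prefer programmatic access, the generic query described above can be sketched in Python using only the standard library. This is an illustration built from the three properties named in the text (P235, P703, P248); it is not a copy of the query behind the short link, and the function names and selected variable names are assumptions made for this example. The `wdt:`, `p:`, `ps:`, `pr:` and `prov:` prefixes are predefined by the Wikidata Query Service.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

WDQS_ENDPOINT = "https://query.wikidata.org/sparql"

PAIRS_QUERY_BODY = """\
SELECT DISTINCT ?structure ?inchikey ?taxon ?reference WHERE {
  ?structure wdt:P235 ?inchikey ;           # InChIKey
             p:P703 ?statement .            # 'found in taxon' statement
  ?statement ps:P703 ?taxon ;               # taxon given in the statement
             prov:wasDerivedFrom ?refnode . # reference block of the statement
  ?refnode pr:P248 ?reference .             # 'stated in'
}
"""


def build_pairs_query(limit: Optional[int] = 1000) -> str:
    """Return the generic pairs query, optionally capped with LIMIT."""
    if limit is None:
        return PAIRS_QUERY_BODY
    if limit <= 0:
        raise ValueError("limit must be a positive integer or None")
    return f"{PAIRS_QUERY_BODY}LIMIT {limit}\n"


def run_query(query: str, user_agent: str) -> list:
    """Send a query to the Wikidata Query Service and return its bindings."""
    url = WDQS_ENDPOINT + "?" + urllib.parse.urlencode(
        {"query": query, "format": "json"}
    )
    request = urllib.request.Request(
        url,
        headers={
            "User-Agent": user_agent,
            "Accept": "application/sparql-results+json",
        },
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    return payload["results"]["bindings"]
```

Calling `run_query(build_pairs_query(10), user_agent="my-tool/0.1 (contact address)")` would retrieve ten pairs; the user agent string is a placeholder to be replaced by the caller. Removing the limit yields a large result set that may exceed the public endpoint's time limit, in which case the frozen query result cited above is the safer source.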

Targeted queries that interrogate LOTUS data from the perspective of one of the three objects forming the referenced structure-organism pairs can also be built. Users can, for example, retrieve a list of all structures reported from a given organism, such as all structures reported from Arabidopsis thaliana (Q158695) or its child taxa (https://w.wiki/4Vcv). Alternatively, all organisms containing a given chemical can be queried via its structure, such as in the search for all organisms in which β-sitosterol (Q121802) was found (https://w.wiki/4VFn). For programmatic access, the lotus-wikidata-exporter repository also allows data retrieval in RDF format and as TSV tables.

To further showcase the possibilities, two additional queries were established (https://w.wiki/4VGC and https://w.wiki/4VGD). Both queries were inspired by recent literature review works (Jose et al., 2021; Zhao et al., 2022). The first work describes compounds found in Actinobacteria, with a biological focus on compounds with reported bioactivity. The second one describes compounds found in Aspergillus spp., with a chemical focus on terpenoids. In both cases, the queries retrieve, within seconds, a table similar to the ones in the mentioned literature reviews. While these queries are not a direct substitute for manual literature review, they do allow researchers to quickly begin such a review process with a very strong body of relevant references.

For a convenient expansion or limitation of the results, certain types of queries such as structure or similarity searches usually exist in molecular electronic resources. As these queries are not natively integrated by SPARQL, they are not readily available for Wikidata exploration. To address this limitation, Galgonek et al. developed an in-house SPARQL engine that allows utilization of Sachem, a high-performance chemical DB cartridge for PostgreSQL for fingerprint-guided substructure and similarity search (Kratochvíl et al., 2018). The engine is used by the Integrated Database of Small Molecules (IDSM) that operates, among other things, several dedicated endpoints allowing structural search in selected small-molecule datasets via SPARQL (Kratochvíl et al., 2019). To allow substructure and similarity searches via SPARQL also on compounds from Wikidata, a dedicated IDSM/Sachem endpoint was created for the LOTUS project. The endpoint indexes the isomeric (P2017) and canonical (P233) SMILES codes available in Wikidata. To ensure that data is kept up-to-date, SMILES codes are automatically downloaded from Wikidata daily. The endpoint allows users to run federated queries and, thereby, to perform structure-oriented searches on the LOTUS data hosted at Wikidata. For example, the SPARQL query https://w.wiki/4VG9 returns a list of all organisms that produce NP with an indolic scaffold. The output is aggregated at the parent taxa level of the containing organisms and ranked by the number of scaffold occurrences.

Regarding the versioning aspects, some challenges are implied by the dynamic nature of the Wikidata environment. However, tracking of the data evolution can be achieved in multiple ways and at different levels: at the full Wikidata level, dumps are regularly created (https://dumps.wikimedia.org/wikidatawiki/entities), while at the individual entry level the full history of modifications can be consulted (see, for example, the full edit history of erythromycin: https://www.wikidata.org/w/index.php?title=Q213511&action=history).

We propose a simple approach for users to document, version and share the output of queries on the LOTUS data at a defined time point. In addition to sharing the short URL of the SPARQL query (which will return results evolving over time), the returned table can simply be archived on Zenodo or a similar platform. In order to gather results of SPARQL queries, we established the LOTUS Initiative Community repository. Contributions to the community repository can be made directly via https://zenodo.org/deposit/new?c=the-lotus-initiative. For example, the output of the Wikidata SPARQL query https://w.wiki/4N8G, run on 2021-11-10T16:56, can be easily archived and shared in a publication via its DOI 10.5281/zenodo.5668380.
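The archiving step can be prepared locally before any upload. The sketch below serializes query results to a TSV string and records the query URL, the retrieval time, the row count and a checksum. The function name `snapshot_rows` and the metadata fields are hypothetical choices for this example, not part of any LOTUS tooling, and the upload to Zenodo itself is not shown.

```python
import csv
import hashlib
import io
from datetime import datetime, timezone


def snapshot_rows(rows, query_url, retrieved_at=None):
    """Serialize rows (a list of dicts) to TSV text and describe the snapshot."""
    retrieved_at = retrieved_at or datetime.now(timezone.utc)
    # Use the sorted union of all keys so the column order is reproducible.
    columns = sorted({key for row in rows for key in row})
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=columns, delimiter="\t", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    text = buffer.getvalue()
    metadata = {
        "query_url": query_url,
        "retrieved_at": retrieved_at.isoformat(timespec="minutes"),
        "row_count": len(rows),
        # The checksum lets readers verify that an archived file is unchanged.
        "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
    }
    return text, metadata
```

Storing the metadata next to the table keeps the link between a frozen result and the evolving query it came from.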

Lotus.naturalproducts.net (LNPN)

In the search field of the LNPN interface (https://lotus.naturalproducts.net), simple queries can be achieved by typing the molecule name (e.g. ibogaine) or pasting a SMILES, InChI, InChIKey string, or a Wikidata identifier. All compounds reported from a given organism can be found by entering the organism name at the species or any higher taxa level (e.g. Tabernanthe iboga). Compound search by chemical class is also possible.

Alternatively, a structure can be directly drawn in the structure search interface (https://lotus.naturalproducts.net/search/structure), where the user can also decide on the nature of the structure search (exact, similarity, substructure search). Refined search mode combining multiple search criteria, in particular physicochemical properties, is available in the advanced search interface (https://lotus.naturalproducts.net/search/advanced).

Within LNPN, LOTUS bulk data can be retrieved as SDF or SMILES files, or as a complete MongoDB dump via https://lotus.naturalproducts.net/download. Extensive documentation describing the search possibilities and data entries is available at https://lotus.naturalproducts.net/documentation. LNPN can also be queried via the application programming interface (API) as described in the documentation.

Data addition and evolution

One major advantage of the LOTUS architecture is that every user has the option to contribute to the NP occurrences documentation effort by adding new or editing existing data. As all LOTUS data applies the SSOT mechanism, reprocessing of previously treated elements is avoided. However, at the moment, the SSOT channels are not open to the public for direct write access to maintain data coherence and evolution of the SSOT scheme. For now, the users can employ the following approaches to add or modify data in LOTUS.

Sources

LOTUS data management involves regular re-importing of both current and new data sources. New and edited information from these electronic NP resources will be checked against the SSOT. If absent or different, data will be passed through the curation pipeline and subsequently stored in the SSOT. Accordingly, by contributing to external electronic NP resources, any researcher has a means of providing new data for LOTUS, keeping in mind the inevitable delay between data addition and subsequent inclusion into LOTUS.

Wikidata

The currently favored approach to add new data to LOTUS is to create or edit Wikidata entries directly. Newly created or edited data will then be imported into the SSOT. There are several ways to interact with Wikidata which depend on the technical skills of the user and the volume of data to be uploaded/modified.

Pre-requisites

While direct Wikidata upload is possible, contributors are encouraged to use the LOTUS curation pipeline as a preliminary step to strengthen the initial data quality. For this, a specific mode of the LOTUS processor can be called (see Custom mode). The added data will therefore benefit from the curation and validation stages implemented in the LOTUS processing pipeline.

Manual upload

Any researcher interested in reporting NP occurrences can manually add the data directly in Wikidata, without any particular technical knowledge. Creating a Wikidata account and following the general object editing guidelines is advised. Regarding the addition of NP-centered objects (i.e. referenced structure-organism pairs), users should refer to the WikiProject Chemistry/Natural products group page.

A tutorial for the manual creation and upload of a referenced structure-organism pair to Wikidata is available in Supplementary file 2.

Batch and automated upload

Through the initial curation process described previously, 750,000+ referenced structure-organism pairs were validated for Wikidata upload. To automate this process, a set of programs was written to automatically process the curated outputs, group references, organisms and compounds, check if they are already present in Wikidata (using SPARQL and direct Wikidata querying) and insert or update the entities as needed (i.e. upserting). These scripts can be used for future batch upload of properly curated and referenced structure-organism pairs to Wikidata. Programs for data addition to Wikidata can be found in the repository lotus-wikidata-interact. The following Xtools page offers an overview of the latest activity performed by our NPimporterBot, using those programs.
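The upsert logic described above can be sketched in a few lines. This is a simplified illustration and not the code of lotus-wikidata-interact: a plain in-memory dictionary stands in for Wikidata, and the function name `upsert_pairs` and the data layout are assumptions made for the example.

```python
def upsert_pairs(store, curated_pairs):
    """Insert new pairs or add missing references to existing ones.

    store maps (structure, organism) to a set of references and stands in
    for the remote knowledge base; curated_pairs yields
    (structure, organism, reference) triples from the curation output.
    Returns the number of created and updated entries.
    """
    created, updated = 0, 0
    for structure, organism, reference in curated_pairs:
        key = (structure, organism)
        if key not in store:
            # The pair is unknown: create it with its first reference.
            store[key] = {reference}
            created += 1
        elif reference not in store[key]:
            # The pair exists but this reference is new: update it.
            store[key].add(reference)
            updated += 1
        # Otherwise the pair and reference already exist: nothing to do.
    return created, updated
```

Running the same batch twice leaves the store unchanged the second time, which is what makes repeated imports safe.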

Data editing

Scientific advances can invalidate or update previously uploaded data, even if it was correct at a given time point. Thus, the possibility to continuously edit the data is desirable and guarantees data quality and sustainability. Community-maintained knowledge bases such as Wikidata encourage such a process. Wikidata presents the advantage of allowing both manual and automated correction. Field-specific robots such as SuccuBot, KrBot, Pi_bot and ProteinBoxBot or our NPimporterBot went through an approval process. The robots are capable of performing thousands of edits without the need for human input. This automation helps reduce the amount of incorrect data that would otherwise require manual editing. However, manual curation by human experts remains irreplaceable as a standard. Users who value this approach and are interested in contributing are invited to follow the manual curation tutorial in Supplementary file 2.

The Scholia platform provides a visual interface to display the links among Wikidata objects such as researchers, topics, species or chemicals. It now provides an interesting way to view the chemical compounds found in a given biological organism (see here for the metabolome view of Eurycoma longifolia). Although Scholia currently does not offer a direct editing interface for scientific references, it still allows users to perform convenient batch editing via QuickStatements. The adaptation of such a framework to edit the referenced structure-organism pairs in the LOTUS initiative could thus facilitate the capture of future expert curation, especially manual efforts that cannot be replaced by automated scripts.
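For batch editing, a referenced structure-organism pair can be expressed as one tab-separated QuickStatements (version 1) command: item, property, value, then a source given as S248 (the 'stated in' property, with the source prefix S) and the reference item. The following sketch builds such a line. The helper name `quickstatement` is hypothetical, and the reference identifier in the usage note is a placeholder that must be replaced by the real Wikidata item of the publication before anything is submitted.

```python
def quickstatement(structure_qid, taxon_qid, reference_qid):
    """Build one QuickStatements V1 line: structure 'found in taxon' taxon,
    sourced with 'stated in' reference."""
    for qid in (structure_qid, taxon_qid, reference_qid):
        # Wikidata item identifiers are 'Q' followed by digits.
        if not (qid.startswith("Q") and qid[1:].isdigit()):
            raise ValueError(f"not a Wikidata item identifier: {qid!r}")
    # P703 = found in taxon; S248 = source 'stated in' (P248).
    return "\t".join([structure_qid, "P703", taxon_qid, "S248", reference_qid])
```

For instance, `quickstatement("Q121802", "Q158695", "Q00000000")` yields a line linking β-sitosterol to Arabidopsis thaliana, where `Q00000000` is only a placeholder reference.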

Data interpretation

To illustrate the nature and dimensions of the LOTUS dataset, some selected examples of data interpretation are shown. First, the distribution of chemical structures among four important NP reservoirs is presented: plants, fungi, animals, and bacteria (Table 3). Then, the distribution of biological organisms according to the number of related chemical structures and likewise the distribution of chemical structures across biological organisms are illustrated (Figure 4). Furthermore, the contribution of the individual electronic NP resources to LOTUS data is summarized using the UpSet plot depiction, which allows the visualization of intersections in data sets (Figure 5). Across these figures we return to the two previous examples, i.e., β-sitosterol as chemical structure and Arabidopsis thaliana as biological organism, because of their well-documented statuses. Finally, a biologically interpreted chemical tree and a chemically-interpreted biological tree are presented (Figures 6 and 7). The examples illustrate the overall chemical and biological coverage of LOTUS by linking family-specific classes of chemical structures to their taxonomic position. Table 3, Figures 4, 6 and 7 were generated using the frozen data (2021-12-20 timestamp), which is available for download at https://doi.org/10.5281/zenodo.5794106 (Rutz et al., 2021a). Figure 5 required a dataset containing information from closed resources and the complete data used for its generation is therefore not available for public distribution. All scripts used for the generation of the figures are available in the lotus-processor repository in the src/4_visualizing directory for reproducibility.

Distribution of chemical structures across reported biological organisms in LOTUS

Table 3 summarizes the distribution of chemical structures and their chemical classes (according to NPClassifier; Kim et al., 2021) across the biological organisms reported in LOTUS. For this, biological organisms were grouped into four artificial taxonomic levels (plants, fungi, animals, and bacteria). These were built by combining the two highest taxonomic levels in the OTL taxonomy, namely Domain and Kingdom levels. “Plants” corresponded to “Eukaryota_Archaeplastida”, “Fungi” to “Eukaryota_Fungi”, “Animals” to “Eukaryota_Metazoa” and “Bacteria” to “Bacteria_NA”. The category corresponding to “Eukaryota_NA” mainly contained Algae, but also other organisms such as Amoebozoa, and was therefore excluded. This represented less than 1% of all entries. The details of this process are available under src/3_analyzing/structure_taxon_distribution.R. When a chemical structure/class was reported in only one taxonomic grouping, it was counted as ‘specific’.
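The counting rule for ‘specific’ structures can be illustrated as follows. This Python sketch is not a translation of structure_taxon_distribution.R; the function name `count_specific` and the input layout (an iterable of structure and group labels) are assumptions made for the example.

```python
from collections import defaultdict


def count_specific(pairs):
    """Count total and group-specific structures per taxonomic group.

    pairs is an iterable of (structure, group) tuples; duplicates are allowed.
    Returns {group: (total_structures, specific_structures)}.
    """
    groups_by_structure = defaultdict(set)
    for structure, group in pairs:
        groups_by_structure[structure].add(group)

    totals = defaultdict(int)
    specific = defaultdict(int)
    for groups in groups_by_structure.values():
        for group in groups:
            totals[group] += 1
        if len(groups) == 1:
            # Reported in a single group only: counted as 'specific'.
            specific[next(iter(groups))] += 1
    return {group: (totals[group], specific[group]) for group in totals}
```

The same function applies to chemical classes by passing class labels instead of structure identifiers.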

Table 3. Distribution and specificity of chemical structures across four important NP reservoirs: plants, fungi, animals, and bacteria.

When a chemical structure/class appeared in only one group and not in the three others, it was counted as ‘specific’. Chemical classes were attributed with NPClassifier.

| Group | Organisms | 2D Structure-Organism pairs | 2D chemical structures | Specific 2D chemical structures | Chemical classes | Specific chemical classes |
|---|---|---|---|---|---|---|
| Plantae | 28,439 | 342,891 | 95,191 | 90,672 (95%) | 545 | 59 (11%) |
| Fungi | 4,003 | 36,950 | 22,594 | 20,194 (89%) | 417 | 19 (5%) |
| Animalia | 2,716 | 24,114 | 15,242 | 11,822 (78%) | 455 | 14 (3%) |
| Bacteria | 1,555 | 23,198 | 15,895 | 14,130 (89%) | 385 | 43 (11%) |

Distributions of organisms per structure and structures per organism

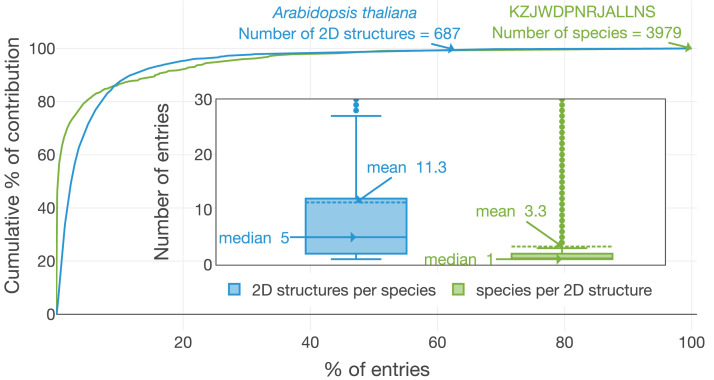

Readily achievable outcomes from LOTUS show that the depth of exploration of the world of NP is rather limited: as depicted in Figure 4, on average, three organisms are reported per chemical structure and eleven structures per organism. Notably, half of all structures have been reported from a single organism and half of all studied organisms are reported to contain five or fewer structures. Metabolomics studies suggest that these numbers are heavily underestimated (Noteborn et al., 2000; Wang et al., 2020) and indicate that a better reporting of the metabolites detected in the course of NP chemistry investigations should greatly improve coverage.
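The averages and medians quoted above can be derived from a plain list of structure-organism pairs. The sketch below shows one way to do so; the function name `degree_summary` and the returned layout are hypothetical, and the example makes no claim about how Figure 4 was actually computed.

```python
from collections import defaultdict
from statistics import mean, median


def degree_summary(pairs):
    """Summarize structures per organism and organisms per structure.

    pairs is an iterable of (structure, organism) tuples.
    Returns a dict of (mean, median) tuples for both directions.
    """
    structures_by_organism = defaultdict(set)
    organisms_by_structure = defaultdict(set)
    for structure, organism in pairs:
        structures_by_organism[organism].add(structure)
        organisms_by_structure[structure].add(organism)

    per_organism = [len(s) for s in structures_by_organism.values()]
    per_structure = [len(o) for o in organisms_by_structure.values()]
    return {
        "structures_per_organism": (mean(per_organism), median(per_organism)),
        "organisms_per_structure": (mean(per_structure), median(per_structure)),
    }
```

A mean well above the median, as reported in the text, is the signature of a skewed distribution in which a few organisms or structures account for most of the pairs.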

This incomplete coverage may be partially explained by the habit in classical NP journals to accept only new and/or bioactive chemical structures for publication. Another possible explanation is the fact that specific chemical classes have been under heavier scrutiny by the natural products community than others. For example, alkaloids have three specific characteristics which favor their reporting in the literature. First, they are often endowed with potent biological activities, making them a target in the frame of pharmacognosy research. Second, their chemical nature makes them readily accessible from complex biological matrices through acid-base extraction. Third, they ionize very efficiently in positive MS mode, which makes their detection possible even at very low concentrations, where other compounds present in much higher concentrations are not detected. It is thus a complex task to answer the following question: “Is the currently observed distribution of alkaloids across the tree of life a reflection of their true biological occurrence or is this distribution biased by the aforementioned characteristics of this chemical class?” While the LOTUS initiative does not yet allow the bias to be disentangled from the true occurrence, it should offer sound and strong foundations for addressing such challenging research questions.

Another obvious explanation for the limited coverage (see Figure 4) is the fact that most of the chemical structures in LOTUS have been physically isolated and described. This is an extremely time-consuming effort that obviously cannot be carried out on all metabolites of all biological organisms. Here, the sensitivity of mass spectrometry and the ever-increasing efficiency of computational metabolite annotation solutions could be of great help. The documentation of metabolite annotation results obtained on large collections of biological matrices and the associated metadata within knowledge graphs offers exciting perspectives regarding the possibilities to expand both the chemical and biological coverage of the LOTUS data in a feasible manner.

Figure 4. Distribution of ‘structures per organism’ and ‘organisms per structure’.

The number of organisms linked to the planar structure of β-sitosterol (KZJWDPNRJALLNS) and the number of chemical structures in Arabidopsis thaliana are two exemplary highlights. A. thaliana contains 687 different short InChIKeys (i.e. 2D structures) and KZJWDPNRJALLNS is reported in 3979 distinct organisms. Less than 10% of the species contain more than 80% of the structural diversity present within LOTUS. In parallel, 80% of the species present in LOTUS are linked to less than 10% of the structures. The figure is available under the CC0 license at https://commons.wikimedia.org/wiki/File:Lotus_initiative_1_structure_organism_distribution.svg.

Contribution of individual electronic NP resources to LOTUS

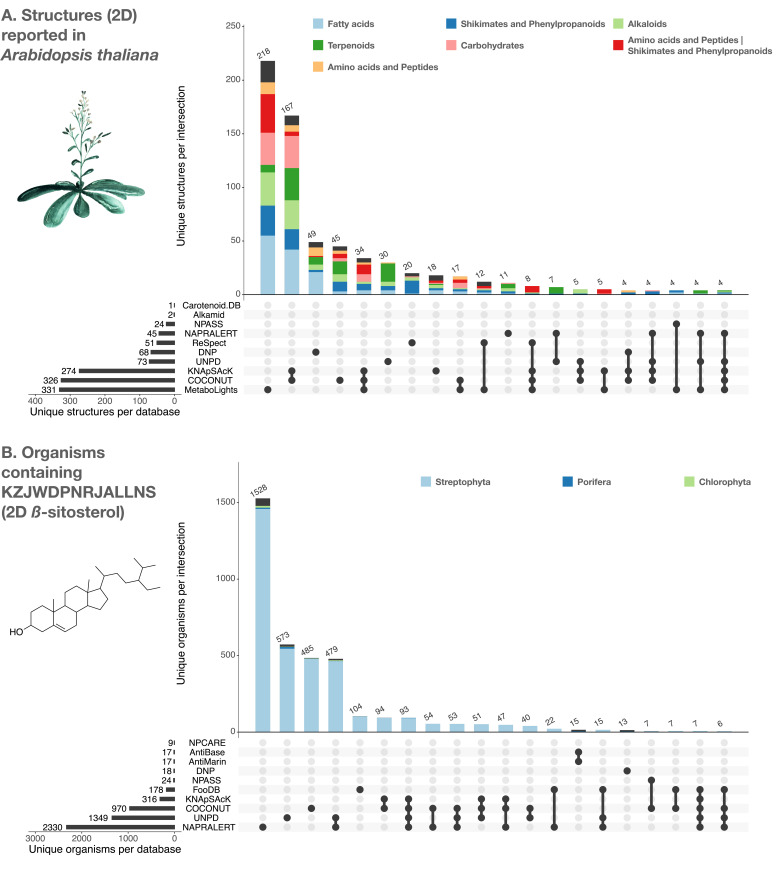

The added value of the LOTUS initiative to assemble multiple electronic NP resources is illustrated in Figure 5: Panel A shows the contributions of the individual electronic NP resources to the ensemble of chemical structures found in one of the most studied vascular plants, Arabidopsis thaliana (“Mouse-ear cress”; Q158695). Panel B shows the ensemble of taxa reported to contain the planar structure of the widely occurring triterpenoid β-sitosterol (Q121802).

Figure 5. UpSet plots of the individual contribution of electronic NP resources to the planar structures found in Arabidopsis thaliana (A) and to organisms reported to contain the planar structure of β-sitosterol (KZJWDPNRJALLNS) (B).

UpSet plots are evolved Venn diagrams that represent intersections between multiple sets. The horizontal bars on the lower left represent the number of corresponding entries per electronic NP resource. The dots and their connecting line represent the intersection between source and consolidated sets. The vertical bars indicate the number of entries at the intersection. For example, 479 organisms containing the planar structure of β-sitosterol are present in both UNPD and NAPRALERT, whereas each of them respectively reports 1349 and 2330 organisms containing the planar structure of β-sitosterol. The figure is available under the CC0 license at https://commons.wikimedia.org/wiki/File:Lotus_initiative_1_upset_plot.svg.
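The quantities behind the vertical bars of an UpSet plot can be computed from the per-resource sets. The sketch below assumes the common ‘exclusive’ convention, in which each entry is counted once, under the exact combination of resources that report it; whether Figure 5 follows this convention is an assumption. The resource names and entries in the usage note are invented toy data.

```python
from collections import Counter


def exclusive_intersections(sources):
    """Count entries per exact combination of sources.

    sources maps a resource name to the set of entries it reports.
    Returns a Counter keyed by frozenset of resource names.
    """
    membership = {}
    for name, entries in sources.items():
        for entry in entries:
            # Record every resource that reports this entry.
            membership.setdefault(entry, set()).add(name)
    return Counter(frozenset(names) for names in membership.values())
```

With `{"resource_a": {"x", "y"}, "resource_b": {"y", "z"}}`, the entry `y` is counted under the combination of both resources, while `x` and `z` are counted under their single resource.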

Figure 5A also shows that according to NPClassifier, the ‘chemical pathway’ category distribution across electronic NP resources is not conserved. Note that NPClassifier and ClassyFire (Djoumbou Feunang et al., 2016) chemical classification results are both available as metadata in the frozen LOTUS export and LNPN. Both classification tools return a chemical taxonomy for individual structures, thus allowing their grouping at higher hierarchical levels, in the same way as it is done for biological taxonomies. The UpSet plot in Figure 5 indicates the poor overlap of preexisting electronic NP resources and the added value of an aggregated dataset. This is particularly well illustrated in Figure 5B, where the number of organisms for which the planar structure of β-sitosterol (KZJWDPNRJALLNS) has been reported is shown for each intersection. NAPRALERT has by far the highest number of entries (2330 in total), while other electronic NP resources complement this well: e.g. UNPD has 573 reported organisms with β-sitosterol that do not overlap with any other resource. Of note, β-sitosterol is documented in only 13 organisms in the DNP, highlighting the importance of a better systematic reporting of ubiquitous metabolites and the value of aggregating multiple data sources.

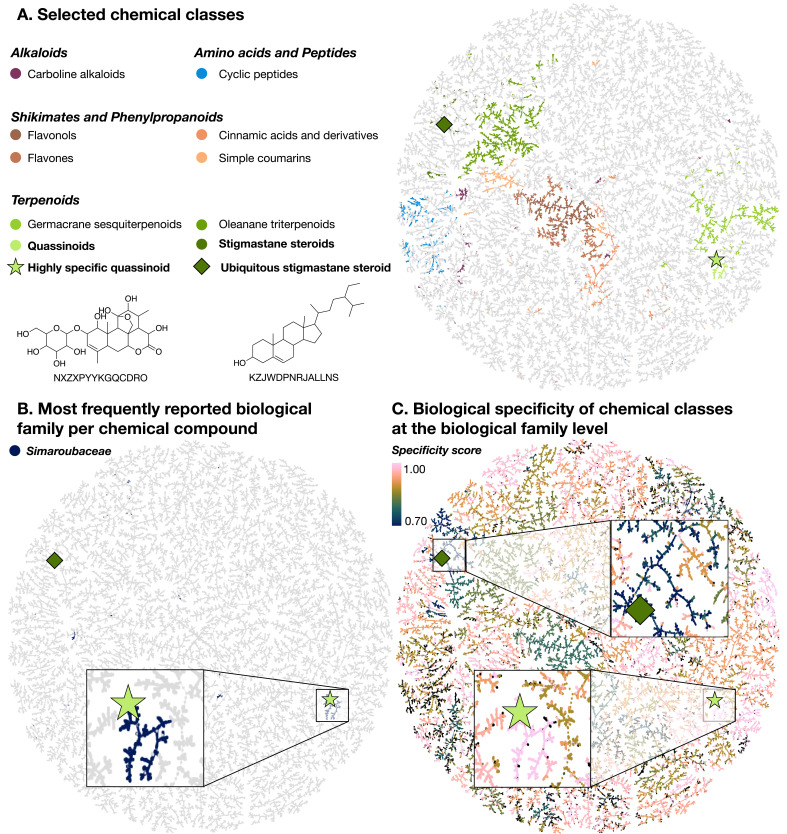

A biologically interpreted chemical tree

The chemical diversity captured in LOTUS is here displayed using TMAP (Figure 6), a visualization library allowing the structural organization of large chemical datasets as a minimum spanning tree (Probst and Reymond, 2020). Using Faerun, an interactive HTML file is generated to display metadata and molecule structures by embedding the SmilesDrawer library (Probst and Reymond, 2018a; Probst and Reymond, 2018b). Planar structures were used for all compounds to generate the TMAP (chemical space tree-map) using MAP4 encoding (Capecchi et al., 2020). As the tree organizes structures according to their molecular fingerprint, an anticipated coherence between the clustering of compounds and the mapped NPClassifier chemical class is observed (Figure 6A.). For clarity, some of the most represented chemical classes of LOTUS plus quassinoids and stigmastane steroids are mapped, with examples of a quassinoid (NXZXPYYKGQCDRO) (light green star) and a stigmastane steroid (KZJWDPNRJALLNS) (dark green diamond) and their corresponding location in the TMAP.

Figure 6. TMAP visualizations of the chemical diversity present in LOTUS.

Each dot corresponds to a chemical structure. A highly specific quassinoid (NXZXPYYKGQCDRO) (light green star) and a ubiquitous stigmastane steroid (KZJWDPNRJALLNS) (dark green diamond) are mapped as examples in all visualizations. In panel A., compounds (dots) are colored according to the NPClassifier chemical class they belong to. In panel B., compounds that are mostly reported in the Simaroubaceae family are highlighted in blue. Finally, in panel C., the compounds are colored according to the specificity score of chemical classes found in biological organisms. This biological specificity score at a given taxonomic level for a given chemical class is calculated as a Jensen-Shannon divergence. A score of 1 suggests that compounds are highly specific, 0 that they are ubiquitous. Zooms on a group of compounds of high biological specificity score (in pink) and on compounds of low specificity (blue) are depicted. An interactive HTML visualization of the LOTUS TMAP is available at https://lotus.nprod.net/post/lotus-tmap/ and archived at https://doi.org/10.5281/zenodo.5801807 (Rutz and Gaudry, 2021). The figure is available under the CC0 license at https://commons.wikimedia.org/wiki/File:Lotus_initiative_1_biologically_interpreted_chemical_tree.svg.

To explore relationships between chemistry and biology, it is possible to map taxonomical information such as the most reported biological family per chemical compound (Figure 6B.) or the biological specificity of chemical classes (Figure 6C.) on the TMAP. The biological specificity score at a given taxonomic level for a given chemical class is calculated as a Jensen-Shannon divergence. A score of 1 suggests that compounds are highly specific, 0 that they are ubiquitous. For more details, see 3_analyzing/jensen_shannon_divergence.R. This visualization highlights chemical classes specific to a given taxon, such as the quassinoids in the Simaroubaceae family. In this case, it is striking to see how well the compounds of a given chemical class (quassinoids) (Figure 6A.) and the most reported plant family per compound (Simaroubaceae) (Figure 6B.) overlap. This is also evidenced in Figure 6C. by a Jensen-Shannon divergence of 0.99 at the biological family level for quassinoids. In this plot, it is also possible to identify chemical classes that are widely spread among living organisms, such as the stigmastane steroids, which exhibit a Jensen-Shannon divergence of 0.73 at the biological family level. This means that the distribution of stigmastane steroids among families is not specific. Figure 7—figure supplement 1 further supports this statement.
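For readers unfamiliar with the measure, a Jensen-Shannon divergence between two discrete distributions can be computed as in the sketch below. With base-2 logarithms the value lies between 0 (identical distributions) and 1 (non-overlapping distributions). Which two distributions are compared in jensen_shannon_divergence.R is not restated here, so the inputs of this example (for instance, the counts of a chemical class per family versus a background distribution) are an assumption, as is the function name.

```python
from math import log2


def jensen_shannon_divergence(p, q):
    """Jensen-Shannon divergence (base 2) between two count or probability vectors."""
    if len(p) != len(q):
        raise ValueError("both vectors must have the same length")
    total_p, total_q = sum(p), sum(q)
    if total_p <= 0 or total_q <= 0:
        raise ValueError("both vectors must have a positive sum")
    # Normalize counts to probabilities.
    p = [x / total_p for x in p]
    q = [x / total_q for x in q]
    # Mixture distribution M = (P + Q) / 2.
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(a, b):
        # Kullback-Leibler divergence; terms with a zero probability contribute 0.
        return sum(x * log2(x / y) for x, y in zip(a, b) if x > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)
```

In this sketch, a class found in a single family compared with a background concentrated elsewhere gives a value of 1, whereas a class distributed exactly like the background gives 0.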

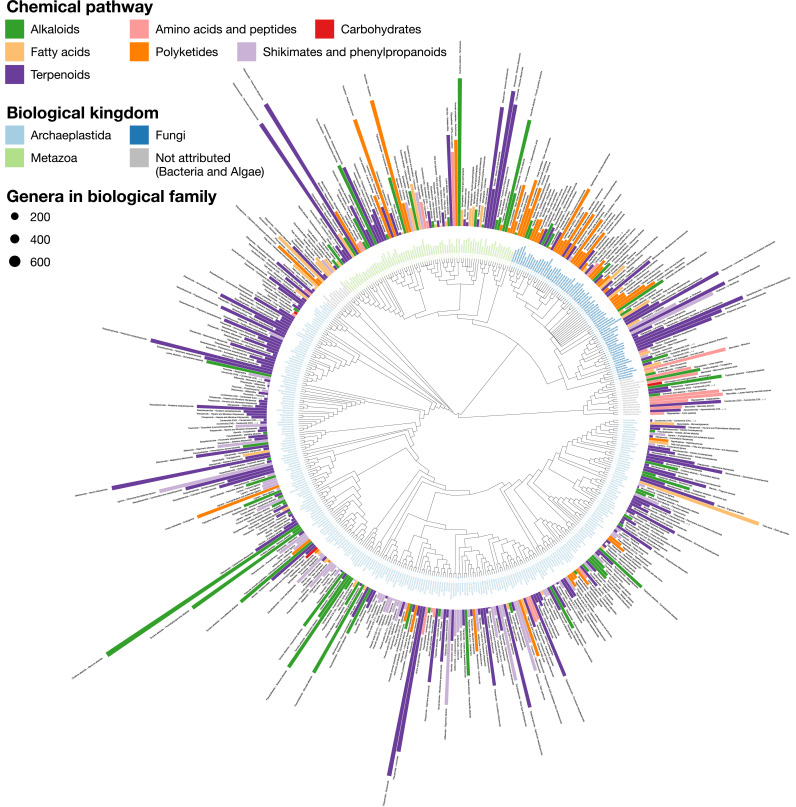

A chemically interpreted biological tree

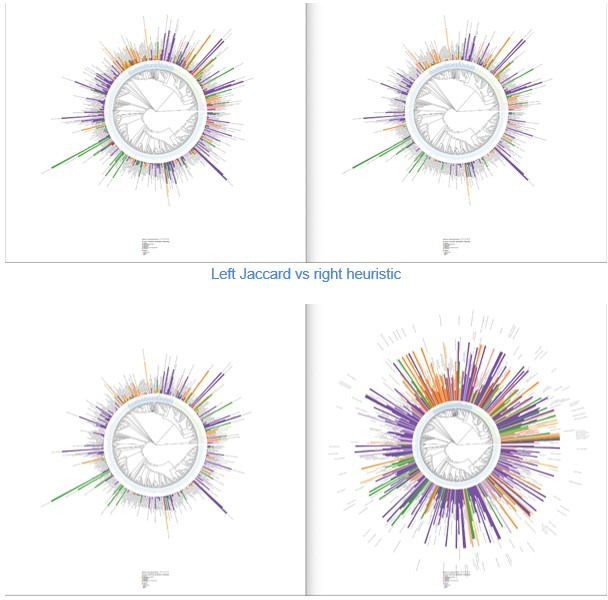

An alternative view of the biological and chemical diversity covered by LOTUS is illustrated in Figure 7. Here, it is not the chemical compounds that are organized; instead, biological organisms are placed according to their taxonomy. To limit bias due to under-reporting in the literature and keep a reasonable display size, only families with at least 50 reported compounds were included. Organisms were classified according to the OTL taxonomy and structures according to NPClassifier. The tips were labeled according to the biological family and colored according to their biological kingdom. The bars represent structure specificity of the most characteristic chemical class of the given biological family (the higher the more specific). This specificity score was a Jaccard index between the chemical class and the biological family. For more details, see 4_visualizing/plot_magicTree.R.
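A Jaccard index between a chemical class and a biological family can be sketched as the overlap between two sets of structures. The choice of sets used here (structures reported in the family versus structures belonging to the class) is an assumption made for illustration and may differ from what plot_magicTree.R does; the function names and the toy identifiers in the usage note are hypothetical.

```python
def jaccard_index(a, b):
    """Size of the intersection divided by the size of the union of two sets."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def most_specific_class(family_structures, class_members):
    """Return the (class_name, score) pair with the highest Jaccard index.

    family_structures is the set of structures reported in one family;
    class_members maps each chemical class to the set of its structures.
    """
    scores = {
        name: jaccard_index(family_structures, members)
        for name, members in class_members.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]
```

For a family reporting `{"q1", "q2", "s1"}`, a class containing only `q1` and `q2` scores 2/3, whereas a class of four structures sharing only `s1` with the family scores 1/6.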

Figure 7. LOTUS provides new means of exploring and representing chemical and biological diversity.

The tree generated from current LOTUS data builds on biological taxonomy and employs the kingdom as tips label color (only families containing 50+ chemical structures were considered). The outer bars correspond to the most specific chemical class found in the biological family. The height of the bar is proportional to a specificity score corresponding to a Jaccard index between the chemical class and the biological family. The bar color corresponds to the chemical pathway of the most specific chemical class in the NPClassifier classification system. The size of the leaf nodes corresponds to the number of genera reported in the family. The figure is vectorized and zoomable for detailed inspection and is available under the CC0 license at https://commons.wikimedia.org/wiki/File:Lotus_initiative_1_chemically_interpreted_biological_tree.svg.

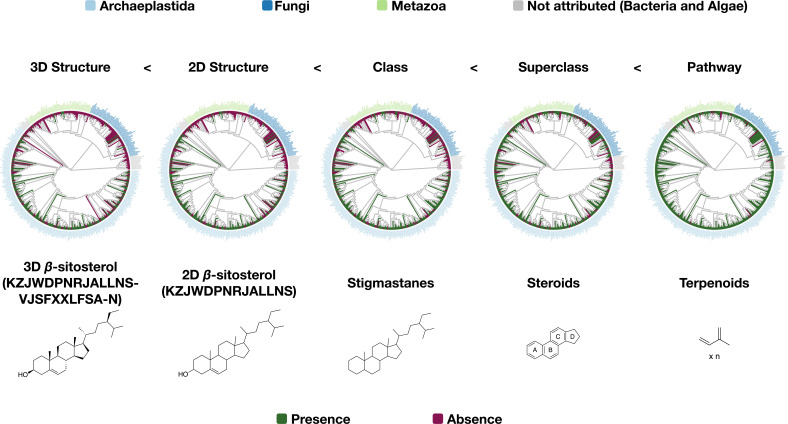

Figure 7—figure supplement 1. Distribution of β-sitosterol and related chemical parents among families with at least 50 reported compounds present in LOTUS.

Figure 7 makes it possible to spot highly specific compound classes such as trinervitane terpenoids in the Termitidae, the rhizoxin macrolides in the Rhizopodaceae, or the quassinoids and limonoids typical, respectively, of Simaroubaceae and Meliaceae. Similarly, tendencies of more generic occurrence of NP can be observed. For example, within the fungal kingdom, Basidiomycotina appear to have a higher biosynthetic specificity toward terpenoids than other fungi, which mostly focus on polyketide production. As explained previously, Figure 7 is highly dependent on the data reported in the literature. As also illustrated in Figure 4, some compounds can be over-studied among several organisms, and many organisms are studied for specific compounds only. This is a direct consequence of the way the NP community currently reports its data. With this in mind, when observed at a finer scale, down to the structure level, such chemotaxonomic representation can give valuable insights. For example, among all chemical structures, only two were found in all biological kingdoms, namely heptadecanoic acid (KEMQGTRYUADPNZ-UHFFFAOYSA-N) and β-carotene (OENHQHLEOONYIE-JLTXGRSLSA-N). Looking at the distribution of β-sitosterol (KZJWDPNRJALLNS-VJSFXXLFSA-N) within the overall biological tree, Figure 7—figure supplement 1 plots its presence/absence versus those of its superior chemical classifications, namely the stigmastane, steroid and terpenoid derivatives, over the same tree used in Figure 7. The comparison of these five chemically interpreted biological trees clearly highlights the increasing speciation of the β-sitosterol biosynthetic pathway in the Archaeplastida kingdom, while the superior classes are distributed across all kingdoms. Figure 7 is zoomable and vectorized for detailed inspection.

As illustrated, the possibility of data interrogation at multiple precision levels, from fully defined chemical structures to broader chemical classes, is of great interest, for example, for taxonomic and evolution studies. This makes LOTUS a unique resource for the advancement of chemotaxonomy, a discipline pioneered by Augustin Pyramus de Candolle and pursued by other notable researchers (Robert Hegnauer, Otto R. Gottlieb) (Gottlieb, 1982; Hegnauer, 1986a; Candolle, 1816). Six decades after Hegnauer’s publication of ‘Die Chemotaxonomie der Pflanzen’ (Hegnauer, 1986b) much remains to be done for the advancement of this field of study and the LOTUS initiative aims to provide a solid basis for researchers willing to pursue these exciting explorations at the interface of chemistry, biology and evolution.

As shown recently in the context of spectral annotation (Dührkop et al., 2021), lowering the precision level of the annotation allows a broader coverage along with greater confidence. Genetic studies investigating the pathways involved and the organisms carrying the responsible biosynthetic genes would be of interest to confirm the previous observations. These forms of data interpretation exemplify the importance of reporting not only new structures, but also novel occurrences of known structures in organisms as comprehensive chemotaxonomic studies are pivotal for a better understanding of the metabolomes of living organisms.

The integration of multiple knowledge sources, for example genetics for NP-producing gene clusters (Kautsar et al., 2020) combined with taxonomies and occurrence databases, also opens new opportunities to understand whether an organism is responsible for the biosynthesis of a NP or merely contains it. This understanding is of utmost importance for the chemotaxonomic field and will help to understand to what extent microorganisms (endosymbionts) play a role in host development and its NP expression potential (Saikkonen et al., 2004).

Conclusion and Perspectives

Advancing natural products knowledge

At its current development stage, data harmonized and curated throughout the LOTUS initiative remains imperfect and, by the very nature of research, at least partially biased (see Introduction). In the context of bioactive NP research, and due to global editorial practices, it should not be ignored that many publications tend to emphasize new compounds and/or those for which interesting bioactivity has been measured. Near-ubiquitous (primarily plant-based) compounds, if broadly bioactive, tend to be overrepresented in the NP literature, yet the implication of their wide distribution in nature and associated patterns of non-specific activity are often underappreciated (Bisson et al., 2016b). Ideally, all characterized compounds independent of structural novelty and/or bioactivity profile should be documented, and the sharing of verified structure-organism pairs is fundamental to the advancement of NP research.

The LOTUS initiative provides a framework for rigorous review and incorporation of new records and already presents a valuable overview of the distribution of NP occurrences studied to date. While current data presents a reasonable approximation of the chemistries of a few well-studied organisms such as Arabidopsis thaliana, it remains patchy for many other organisms represented in the dataset. Community participation is the most efficient means of achieving a better documentation of NP occurrences, and the comprehensive editing opportunities provided within LOTUS and through the associated Wikidata distribution platform open new opportunities for such collaborative engagement. In addition to facilitating the introduction of new data, it also provides a forum for critical review of existing data (see an example of a Wikidata Talk page here), as well as harmonization and verification of existing NP datasets as they come online.

Fostering FAIRness and TRUSTworthiness

The harmonization of LOTUS data and the dissemination of referenced structure-organism pairs through Wikidata enable novel forms of queries and transformational perspectives in NP research. As LOTUS follows the guidelines of FAIRness and TRUSTworthiness, all researchers across disciplines can benefit from this opportunity, whether the interest is in ecology and evolution, chemical ecology, drug discovery, biosynthesis pathway elucidation, chemotaxonomy, or other research fields connected with NP.

Researchers worldwide uniformly acknowledge the limitations caused by the intrinsic unavailability of essential (raw) data (Bisson et al., 2016a). In addition to being FAIR, LOTUS data is also open with a clear license, while closed data is still a major impediment to the advancement of science (Murray-Rust, 2008). The lack of progress in this direction is partly due to elements in the dissemination channels of the classical print and static PDF publication formats that complicate or sometimes even discourage data sharing, for example, due to page limitations and economically motivated mechanisms, including those involved in the focus on and calculation of journal impact factors. In particular, raw data such as experimental readings, spectroscopic data, instrumental measurements, statistical, and other calculations are valued by all, but disseminated by only very few. The immense value of raw data and the desire to advance its public dissemination have recently been documented in detail for nuclear magnetic resonance (NMR) spectroscopic data by a large consortium of NP researchers (McAlpine et al., 2019). However, to generate the vital flow of contributed data, the effort associated with preparing and submitting content to open repositories as well as data reuse should be better acknowledged in academia, government, regulatory, and industrial environments (Cousijn et al., 2019; Cousijn et al., 2018; Pierce et al., 2019). The introduction of LOTUS provides here a new opportunity to advance the FAIR guiding principles for scientific data management and stewardship (Wilkinson et al., 2016).

Opening new perspectives for spectral data

The possibilities for expansion and future applications of the Wikidata-based LOTUS initiative are significant. For example, properly formatted spectral data (e.g. obtained by MS or NMR) can be linked to the Wikidata entries of the originating chemical compounds. MassBank (Horai et al., 2010) and SPLASH (Wohlgemuth et al., 2010) identifiers are already reported in Wikidata, and this existing information can be used to report MassBank or SPLASH records, for example, for Arabidopsis thaliana compounds (https://w.wiki/3PJD). Such possibilities will help to bridge experimental results obtained during the early stages of NP research with data that has been reported and formatted in different contexts. This opens exciting perspectives for structural dereplication, NP annotation, and metabolomic analysis. The authors have previously demonstrated that taxonomically informed metabolite annotation is critical for the improvement of the NP annotation process (Rutz et al., 2019). Alternative approaches linking structural annotation to biological organisms have also shown substantial improvements (Hoffmann et al., 2021). In this context, the LOTUS initiative offers new opportunities for linking chemical objects to both their biological occurrences and spectral information and should significantly facilitate such applications.
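To illustrate the kind of query mentioned above, the following is a minimal sketch that builds a SPARQL query listing compounds found in a given taxon together with their MassBank or SPLASH identifiers. It is not the query behind the short link in the text. The Wikidata identifiers used (P703 for 'found in taxon', P6689 for the MassBank accession, P4964 for SPLASH, and Q158695 for Arabidopsis thaliana) are assumptions that should be checked on Wikidata before use, and the helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Public Wikidata Query Service endpoint.
WDQS_ENDPOINT = "https://query.wikidata.org/sparql"

# Assumed Wikidata identifiers; verify them on Wikidata before relying on them.
FOUND_IN_TAXON = "P703"           # assumed: "found in taxon"
MASSBANK_ID = "P6689"             # assumed: MassBank accession ID
SPLASH = "P4964"                  # assumed: SPLASH identifier
ARABIDOPSIS_THALIANA = "Q158695"  # assumed: item for Arabidopsis thaliana


def build_spectral_query(taxon_qid, limit=50):
    """Return a SPARQL query for compounds of a taxon that carry a
    MassBank or SPLASH identifier (hypothetical helper)."""
    return f"""
SELECT ?compound ?compoundLabel ?massbank ?splash WHERE {{
  ?compound wdt:{FOUND_IN_TAXON} wd:{taxon_qid} .
  OPTIONAL {{ ?compound wdt:{MASSBANK_ID} ?massbank . }}
  OPTIONAL {{ ?compound wdt:{SPLASH} ?splash . }}
  FILTER(BOUND(?massbank) || BOUND(?splash))
  SERVICE wikibase:label {{ bd:serviceParam wikibase:language "en". }}
}}
LIMIT {int(limit)}
""".strip()


def run_query(query):
    """Send the query to the Wikidata Query Service and return the result rows."""
    url = WDQS_ENDPOINT + "?" + urllib.parse.urlencode(
        {"query": query, "format": "json"}
    )
    request = urllib.request.Request(
        url, headers={"User-Agent": "lotus-example-sketch/0.1"}
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)["results"]["bindings"]


if __name__ == "__main__":
    # Only prints the query; call run_query(...) to execute it over the network.
    print(build_spectral_query(ARABIDOPSIS_THALIANA))
```

Swapping the taxon identifier is enough to ask the same question for any other organism documented in Wikidata.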

Integrating chemodiversity, biodiversity, and human health

As shown in Figure 7—figure supplement 1, observing the chemical and biological diversity at various granularities offers new insights. Regarding the chemical objects involved, it will be important to document the taxonomies of chemical annotations for the Wikidata entries. However, this is a rather complex task, for which stability and coverage issues will have to be addressed first. Existing chemical taxonomies such as ChEBI, ClassyFire, or NPClassifier are evolving steadily, and it will be important to constantly update the tools used to make further annotations. Promising efforts have been undertaken to automate the inclusion of Wikidata structures into a chemical ontology. Such an approach exploits the SMILES and SMARTS associated properties to infer a chemical classification for the structure. See, for example, the entry related to emericellolide B. Repositioning NP within their greater biosynthetic context is another major challenge and an active field of research. The fact that the LOTUS initiative disseminates its data through Wikidata will help facilitate further integration with biological pathway knowledge bases such as WikiPathways and contribute to this complex task (Martens et al., 2021; Slenter et al., 2018).

In the field of ecology, molecular traits are gaining increased attention (Kessler and Kalske, 2018; Sedio, 2017; Taylor and Dunn, 2018). The LOTUS architecture can help to associate classical plant traits (e.g. leaf surface area, photosynthetic capacities, etc.) with Wikidata biological organism entries and thus allow their integration and comparison with the chemicals associated with those organisms. Likewise, the association of biogeography data documented in repositories such as GBIF could be further exploited in Wikidata to pursue the exciting but understudied topic of ‘chemodiversity hotspots’ (Defossez et al., 2021).

Other NP-related information of great interest remains poorly formatted. One example of such a shortcoming relates to traditional medicine (and the fields studying it: ethnomedicine and ethnobotany), which is the historical and empirical approach of mankind to discover and use bioactive products from Nature, primarily plants. The knowledge generated throughout human history on the use of medicinal substances represents a fascinating yet underutilized body of information. Notably, the literature on the pharmacology and toxicology of NP is compound-centric, steadily growing, and relatively scattered, but still highly relevant for exploring the role and potential utility of NP for human health. To this end, the LOTUS initiative represents a potential framework for new concepts by which such information could be valued and conserved in the digital era (Allard et al., 2018; Cordell, 2017a; Cordell, 2017b). This underscores the transformative value of the LOTUS initiative for the advancement of traditional medicine and its interest for drug discovery in health systems worldwide.

Shortcomings and challenges

Despite these strong advantages, the establishment and functioning of the LOTUS curation pipeline is not devoid of flaws, and we list hereafter some of the observed shortcomings and associated challenges.

First, the LOTUS processing pipeline is heavy. It includes many dependencies and is convoluted. We tried to simplify the process and associated programs as much as possible, but they remain substantial. This is a consequence of the heterogeneous nature of the source information and the number of successive operations required to process the data.

Second, while the overall objective of the LOTUS processing pipeline is to increase data quality, the pipeline also transforms data during the process, and, in some cases, data quality can be degraded or errors can be propagated. For example, regarding the chemical objects, the processing pipeline performs a systematic sanitization step that includes salt removal, uncharging of molecules, and dimer resolution. We decided to apply this step systematically after observing a high ratio of artifacts within salts, charged or dimeric molecules. This implies that correct salts, charged or dimeric molecules in the input data will undergo an unwanted ‘sanitization’ step. Also, the LOTUS processing step uses external libraries and tools for the automated ‘name to structure’ and ‘structure to name’ translations. These remain challenging as they rely on sets of predefined rules which do not cover all cases and can commonly lead to incorrect translations.
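The trade-off described above can be illustrated with a deliberately naive sketch. It is not the LOTUS sanitization code and uses no cheminformatics library: it simply splits a SMILES string on the fragment separator and keeps the fragment with the most atoms, which mimics salt removal and dimer resolution. Uncharging is not attempted. The function names and the atom-counting heuristic are hypothetical and far cruder than a real toolkit.

```python
import re

# Rough atom matcher: a bracket atom, a two-letter halogen, or a
# single-letter organic-subset atom (aliphatic or aromatic).
_ATOM = re.compile(r"\[[^\]]+\]|Cl|Br|[BCNOPSFI]|[bcnops]")


def atom_count(fragment):
    """Approximate number of atoms written explicitly in a SMILES fragment."""
    return len(_ATOM.findall(fragment))


def naive_sanitize(smiles):
    """Keep only the largest dot-separated fragment of a SMILES string.

    Counter-ions and duplicated units are dropped whether or not they
    were correct in the input, which is the loss discussed in the text.
    """
    fragments = smiles.split(".")
    return max(fragments, key=atom_count)


if __name__ == "__main__":
    # Sodium acetate: the sodium counter-ion is removed.
    print(naive_sanitize("CC(=O)[O-].[Na+]"))
    # Two identical units: only one is kept.
    print(naive_sanitize("CCO.CCO"))
```

Applied systematically, such a rule removes spurious counter-ions but also strips a genuine salt down to its largest component, and the charge left on that component would still have to be handled by a separate uncharging step.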

On the biological organism curation side, we are aware of shortcomings, whether inherent to specific inputs or related to limitations of the general process. Regarding inputs, some cases are clearly not resolvable except through human curation. For example, the word Lotus can refer either to a genus of plants in the Fabaceae family (https://www.wikidata.org/wiki/Q3645698) or to the vernacular name of Nelumbo nucifera (https://www.wikidata.org/wiki/Q16528). In fact, the name of the LOTUS Initiative comes, in part, from this taxonomic curiosity and the challenge it poses for automated curation. To give another striking illustration, Ficus variegata corresponds both to a plant (https://www.wikidata.org/wiki/Q5446649) and to a mollusc (https://www.wikidata.org/wiki/Q502030). For specific names coming from traditional Chinese medicine or other sources using vernacular names, translation depended on hand-curated dictionaries, which are clearly not exhaustive. Additionally, it is worth noting that the validation of the processed entries relies in part on imperfect rules, which leads to erroneous entries in the output data. However, we deliberately kept those rules restrictive in order to favor quality over quantity (see Figure 2).
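The ambiguity problem can be made concrete with a small sketch. It assumes a hypothetical lookup table that maps a name to its candidate Wikidata items, using only the identifiers quoted above, and flags any name without exactly one candidate for human curation. This is an illustration of the decision rule, not the LOTUS name-resolution code.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical lookup table; the Wikidata identifiers are those cited in the text.
CANDIDATES = {
    "Lotus": ["Q3645698", "Q16528"],            # Fabaceae genus vs. vernacular name
    "Ficus variegata": ["Q5446649", "Q502030"],  # plant vs. mollusc
    "Nelumbo nucifera": ["Q16528"],
}


@dataclass(frozen=True)
class Resolution:
    name: str
    qid: Optional[str]      # set only when the name maps to a single item
    needs_curation: bool    # True when a human has to decide


def resolve(name, candidates=CANDIDATES):
    """Resolve a name automatically only if it has exactly one candidate."""
    hits = candidates.get(name.strip(), [])
    if len(hits) == 1:
        return Resolution(name, hits[0], False)
    # Zero candidates (unknown name) or several (homonyms): defer to a curator.
    return Resolution(name, None, True)


if __name__ == "__main__":
    for query in ("Lotus", "Ficus variegata", "Nelumbo nucifera"):
        print(resolve(query))
```

A restrictive rule of this kind loses some correct entries but avoids silently assigning a compound to the wrong organism, in line with the choice to favor quality over quantity.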

Thus, despite our efforts, there is no doubt that incorrect structure-organism pairs have been uploaded to Wikidata (and some correct ones have not). We expect, however, that the editing facilities offered by this platform and community efforts will, over time, improve data quality.

Summary and outlook

Despite these challenges, the various facets discussed above connect with ongoing and future developments that the tandem of the LOTUS initiative and its Wikidata integration can accommodate through a broader knowledge base. The information of the LOTUS initiative is already readily accessible to third-party projects built on top of Wikidata such as the SLING project (https://github.com/ringgaard/sling, see entry for gliotoxin) or the Plant Humanities Lab project (https://lab.plant-humanities.org, see entry for Ilex guayusa in the ‘From Related Items’ section). LOTUS data has also been integrated into PubChem (https://pubchem.ncbi.nlm.nih.gov/source/25132) to complement the natural products-related metadata of this major chemical DB. For an example, see Gentiana lutea.

Behind the scenes, all underlying resources represent data in a multidimensional space and can be extracted as individual graphs, which can then be interconnected. The craft of appropriate federated queries allows users to navigate these graphs and fully exploit their potential (Waagmeester et al., 2020; Kratochvíl et al., 2018). The development of interfaces such as RDFFrames (Mohamed et al., 2020) will also facilitate the use of the wide arsenal of existing machine learning approaches to automate reasoning on these knowledge graphs.

Overall, the LOTUS initiative aims to make more and better data available. While we did our best to ensure high data quality, the current processing pipeline still removes many correct entries and misses or introduces some incorrect ones. Aware of those imperfections, we hope that our project paves the way for the establishment of an open, durable and expandable electronic NP resource. The design and efforts of the LOTUS initiative reflect our conviction that the integration of NP research results is long-needed and requires a truly open and FAIR approach to information dissemination, with high quality data directly flowing from its source to public knowledge bases. We believe that the LOTUS initiative has the potential to fuel a virtuous cycle of research habits and, as a result, contribute to a better understanding of Life and its chemistry.

Materials and methods

Key resources table.

| Reagent type (species) or resource | Designation | Source or reference | Identifiers | Additional information |
|---|---|---|---|---|
| Software, algorithm | Lotus-processor code | This work (https://github.com/lotusnprod/lotus-processor, Rutz, 2022a) | Archived at https://doi.org/10.5281/zenodo.5802107 | |
| Software, algorithm | Lotus-web code | This work (https://github.com/lotusnprod/lotus-web, Rutz, 2022b) | Archived at https://doi.org/10.5281/zenodo.5802119 | |
| Software, algorithm | Lotus-wikidata-interact code | This work (https://github.com/lotusnprod/lotus-wikidata-interact, Rutz, 2022c) | Archived at https://doi.org/10.5281/zenodo.5802113 | |
| Software, algorithm | Global Names Architecture | https://globalnames.org | QID:Q65691453 | See Additional executable files |
| Software, algorithm | Java | https://www.java.com | QID:Q251 | |
| Software, algorithm | Kotlin | https://kotlinlang.org | QID:Q3816639 | See Kotlin packages |
| Software, algorithm | Manubot | https://manubot.org | QID:Q96473455 RRID:SCR_018553 | Repository available at https://github.com/lotusnprod/lotus-manuscript |
| Software, algorithm | NPClassifier | https://npclassifier.ucsd.edu | See https://doi.org/10.1021/acs.jnatprod.1c00399 | |
| Software, algorithm | OPSIN | https://github.com/dan2097/opsin | QID:Q26481302 | See Additional executable files |
| Software, algorithm | Python Programming Language | https://www.python.org | QID:Q28865 RRID:SCR_008394 | See Python packages |
| Software, algorithm | R Project for Statistical Computing | https://www.r-project.org | QID:Q206904 RRID:SCR_001905 | See R packages |
| Software, algorithm | Molconvert | https://docs.chemaxon.com/display/docs/molconvert.md | QID:Q55377678 | See Chemical structures |
| Software, algorithm | Wikidata | https://www.wikidata.org | QID:Q2013 RRID:SCR_018492 | Project page https://www.wikidata.org/wiki/Wikidata:WikiProject_Chemistry/Natural_products |
| Other | Lotus custom dictionaries | This work | Archived at https://doi.org/10.5281/zenodo.5801798 | |
| Other | Chemical identifier resolver | https://cactus.nci.nih.gov/chemical/structure | See Chemical structures | |
| Other | CrossRef | https://www.crossref.org | QID:Q5188229 RRID:SCR_003217 | See References |
| Other | PubChem | https://pubchem.ncbi.nlm.nih.gov | QID:Q278487 RRID:SCR_004284 | LOTUS data https://pubchem.ncbi.nlm.nih.gov/source/25132 |
| Other | PubMed | https://pubmed.ncbi.nlm.nih.gov | QID:Q180686 RRID:SCR_004846 | See References |
| Other | Taxonomic data sources | https://resolver.globalnames.org/data_sources | See Translation | |
| Other | Natural Products data sources | See Appendix 1 | | |

Data gathering

Before their inclusion, the overall quality of each source was manually assessed to estimate both the quality of referenced structure-organism pairs and the lack of ambiguities in the links between data and references. This led to the identification of thirty-six electronic NP resources as valuable LOTUS input. Data from the proprietary Dictionary of Natural Products (DNP v 29.2) was also used, for comparison purposes only, and is not publicly disseminated. FooDB was also curated but not publicly disseminated since its license proscribed sharing in Wikidata. Appendix 1 gives all necessary details regarding electronic NP resources access and characteristics.